VISUALIZATION TECHNIQUES UTILIZING THE SENSITIVITY ANALYSIS OF MODELS

- Slides: 34

Ivo Kondapaneni, Pavel Kordík, Pavel Slavík

Department of Computer Science and Engineering, Faculty of Electrical Engineering, Czech Technical University in Prague, Czech Republic

Presenting author: Pavel Kordík (kordikp@fel.cvut.cz)

Overview

- Motivation
- Data mining models
- Visualization based on sensitivity analysis
- Regression problems
- Classification problems
- Definition of interesting plots
- Genetic search for 2D and 3D plots

Motivation

- Data mining: extracting new, potentially useful information from data
- DM models are generated automatically
- Are models always credible?
- Are models comprehensible?
- How to extract information from models? Visualization

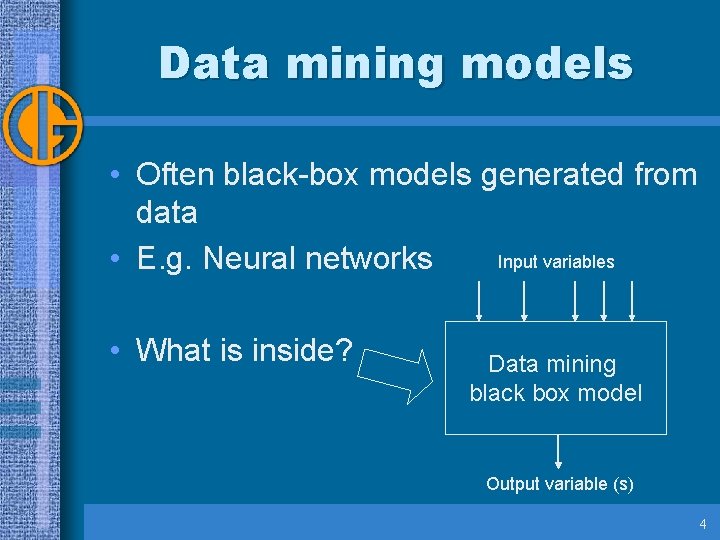

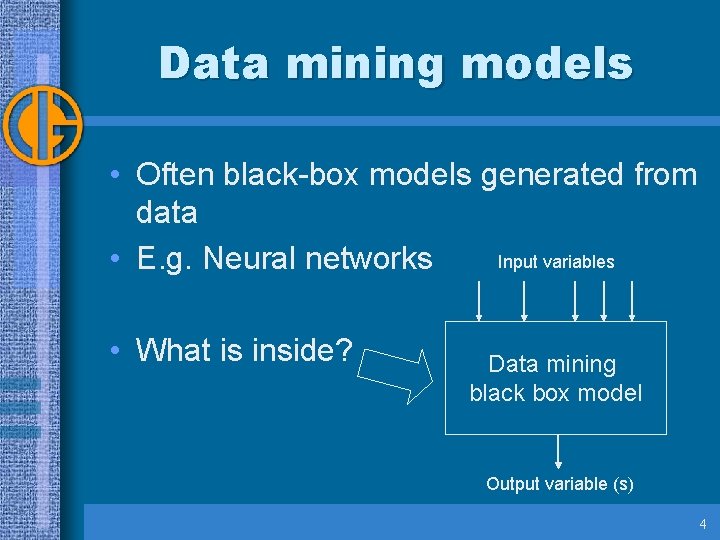

Data mining models

- Often black-box models generated from data
- E.g. neural networks
- What is inside?

Diagram: input variables → data mining black-box model → output variable(s)

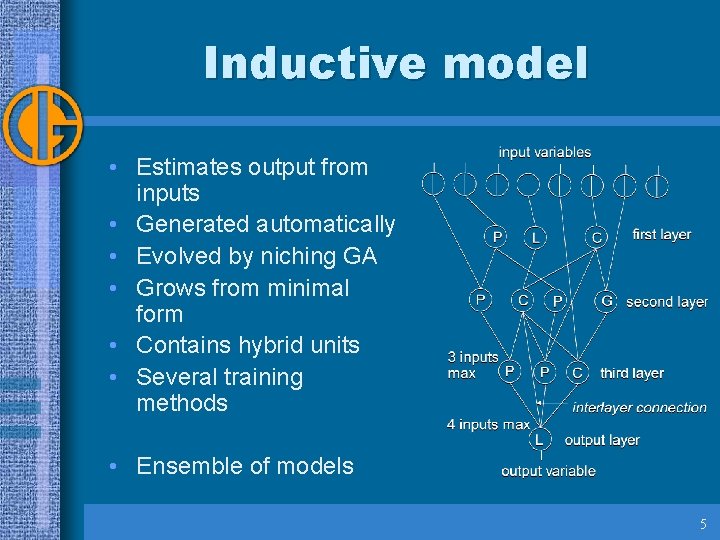

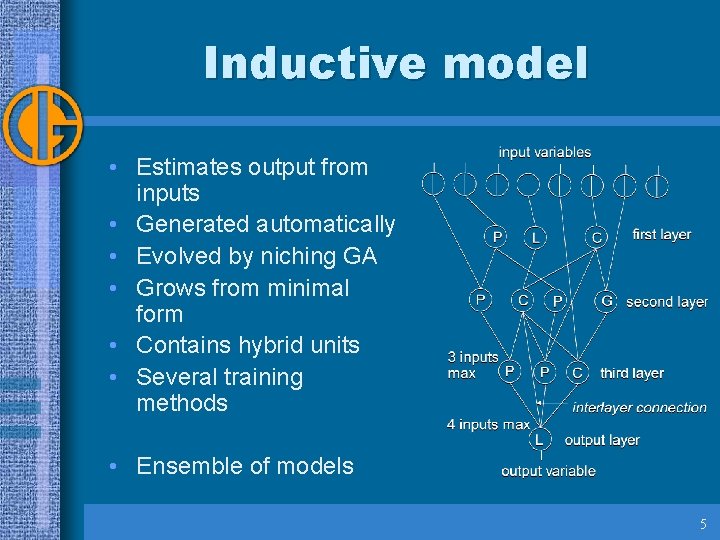

Inductive model

- Estimates output from inputs
- Generated automatically
- Evolved by niching GA
- Grows from minimal form
- Contains hybrid units
- Several training methods
- Ensemble of models

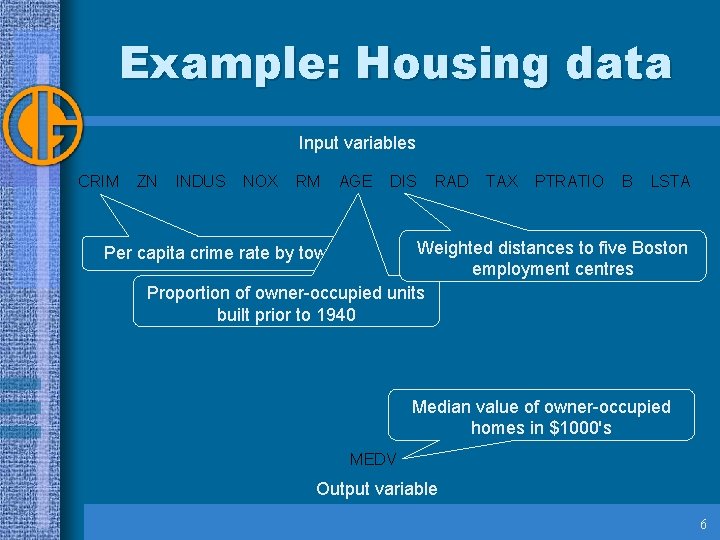

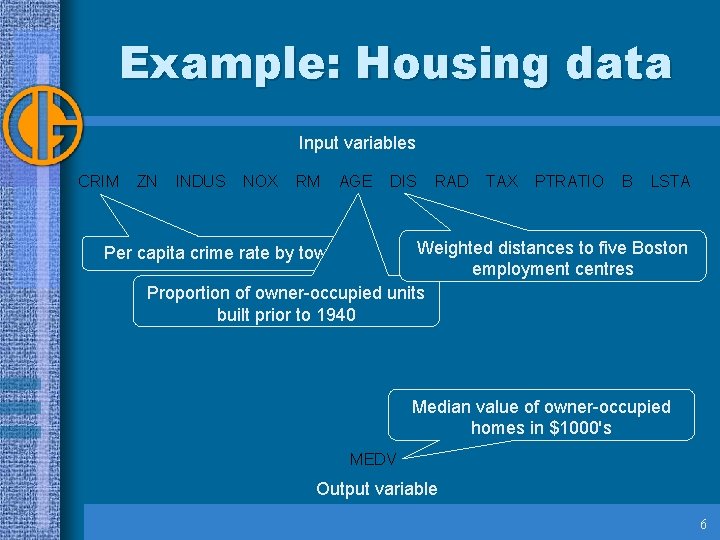

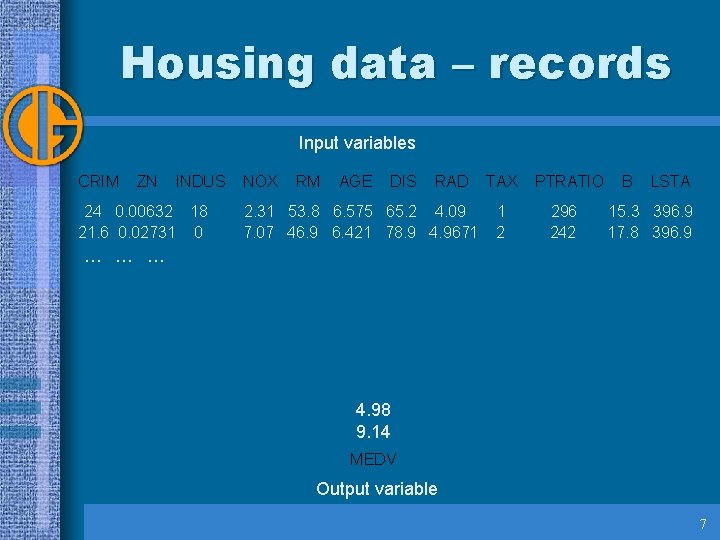

Example: Housing data

Input variables: CRIM, ZN, INDUS, NOX, RM, AGE, DIS, RAD, TAX, PTRATIO, B, LSTA

- CRIM: per capita crime rate by town
- AGE: proportion of owner-occupied units built prior to 1940
- DIS: weighted distances to five Boston employment centres

Output variable: MEDV, median value of owner-occupied homes in $1000's

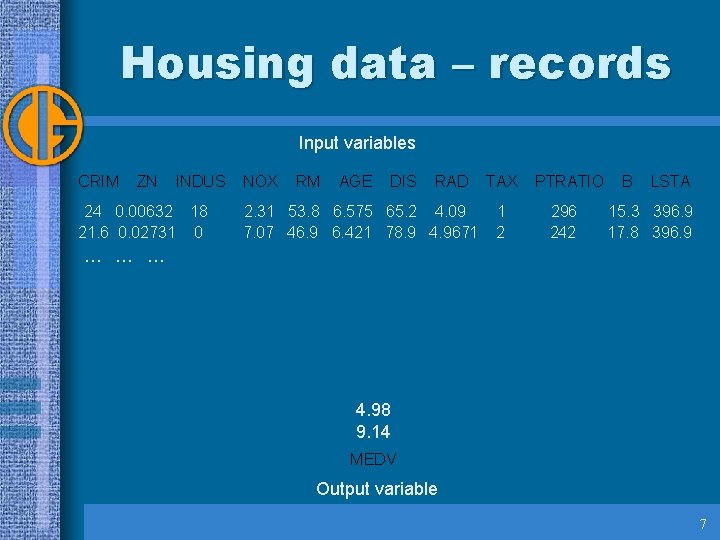

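Housing data – records

Input variables are CRIM through LSTA; the output variable is MEDV.

| CRIM | ZN | INDUS | NOX | RM | AGE | DIS | RAD | TAX | PTRATIO | B | LSTA | MEDV |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.00632 | 18 | 2.31 | 53.8 | 6.575 | 65.2 | 4.09 | 1 | 296 | 15.3 | 396.9 | 4.98 | 24 |
| 0.02731 | 0 | 7.07 | 46.9 | 6.421 | 78.9 | 4.9671 | 2 | 242 | 17.8 | 396.9 | 9.14 | 21.6 |
| … | … | … | … | … | … | … | … | … | … | … | … | … |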

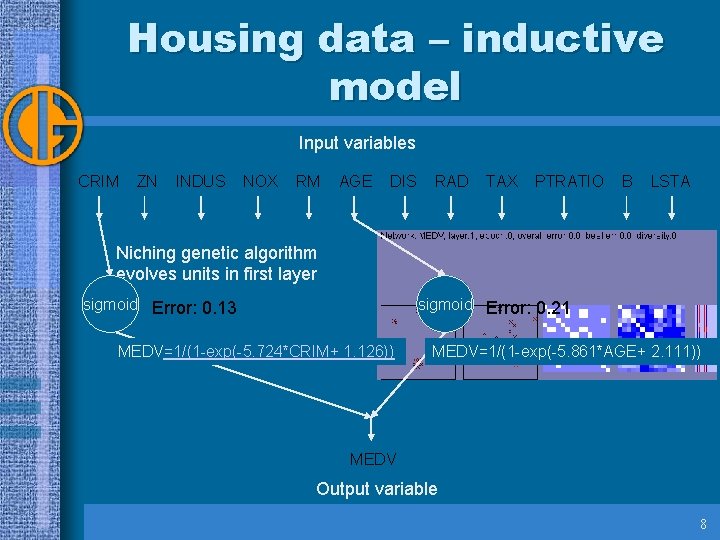

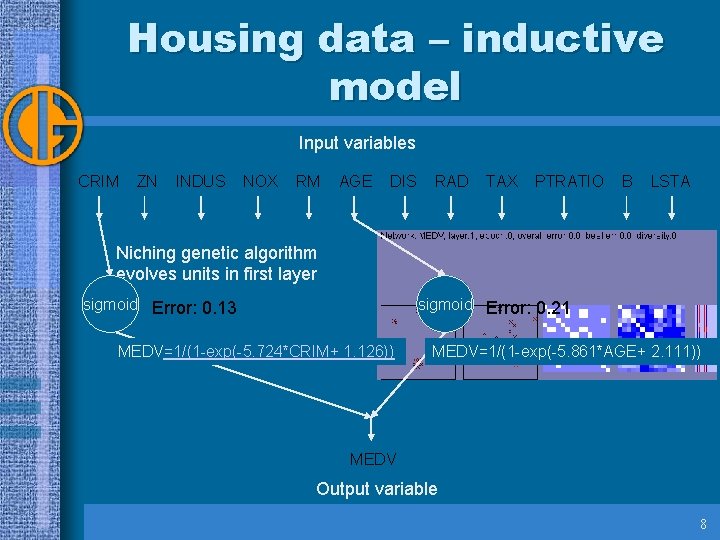

Housing data – inductive model

Input variables: CRIM, ZN, INDUS, NOX, RM, AGE, DIS, RAD, TAX, PTRATIO, B, LSTA. Output variable: MEDV.

The niching genetic algorithm evolves units in the first layer. Two sigmoid units are shown, with errors 0.13 and 0.21:

$$\mathrm{MEDV} = \frac{1}{1 - \exp(-5.724\,\mathrm{CRIM} + 1.126)}$$

$$\mathrm{MEDV} = \frac{1}{1 - \exp(-5.861\,\mathrm{AGE} + 2.111)}$$

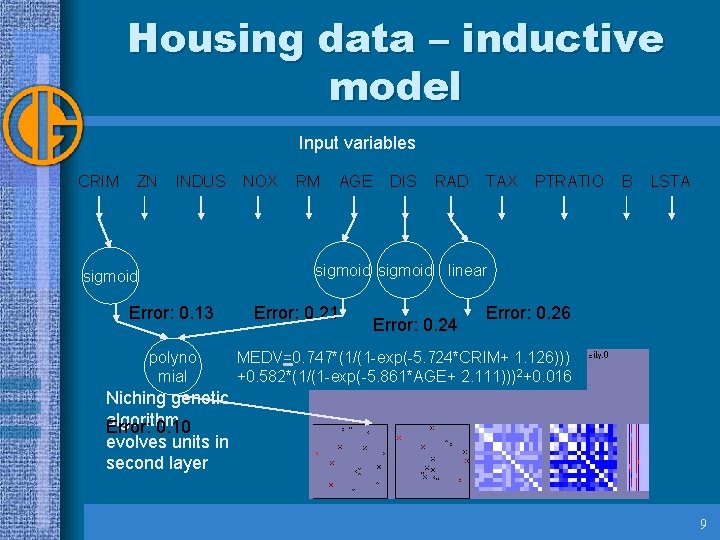

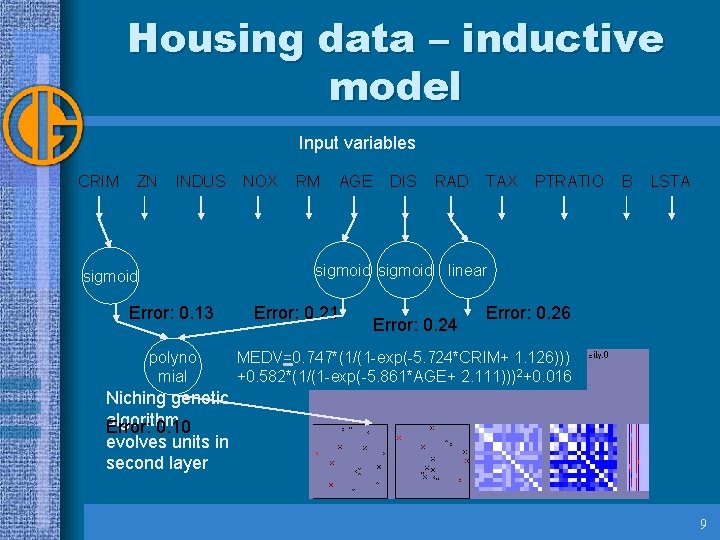

Housing data – inductive model

Input variables: CRIM, ZN, INDUS, NOX, RM, AGE, DIS, RAD, TAX, PTRATIO, B, LSTA. Output variable: MEDV.

The niching genetic algorithm evolves units in the second layer.

Units shown: sigmoid, linear, sigmoid, polynomial. Errors shown: 0.13, 0.10, 0.21, 0.24, 0.26.

$$\mathrm{MEDV} = 0.747 \cdot \frac{1}{1 - \exp(-5.724\,\mathrm{CRIM} + 1.126)} + 0.582 \cdot \left(\frac{1}{1 - \exp(-5.861\,\mathrm{AGE} + 2.111)}\right)^{2} + 0.016$$

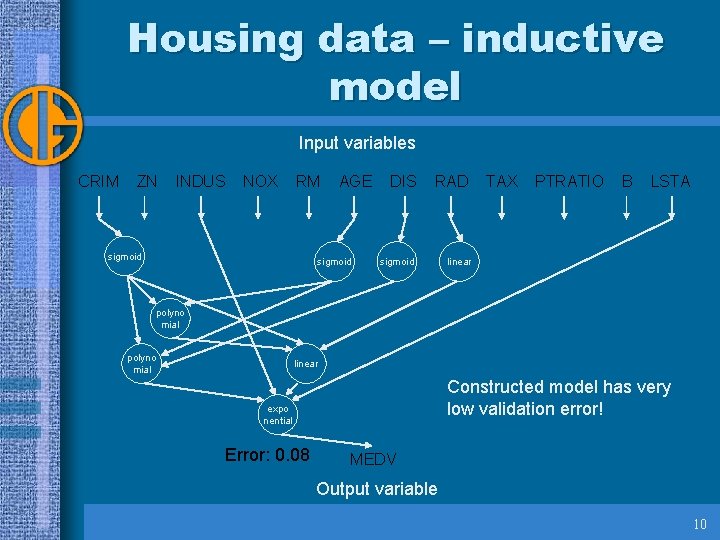

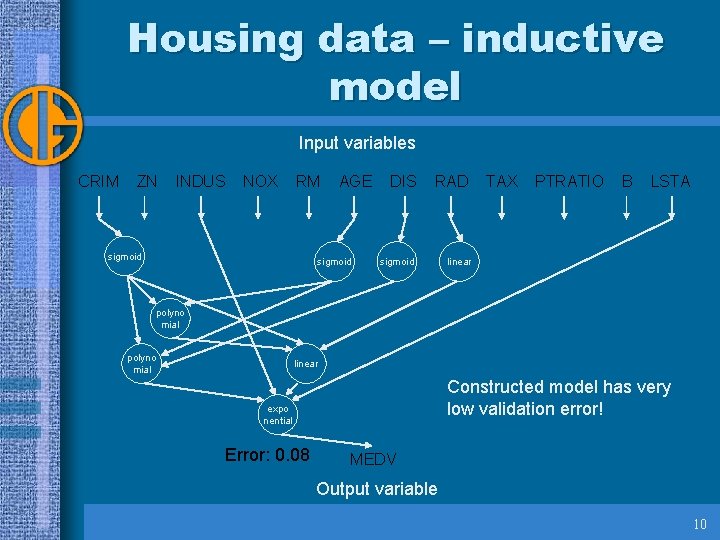

Housing data – inductive model

Input variables: CRIM, ZN, INDUS, NOX, RM, AGE, DIS, RAD, TAX, PTRATIO, B, LSTA. Output variable: MEDV.

Units shown: sigmoid, sigmoid, sigmoid, linear, polynomial, linear, exponential.

The constructed model has a very low validation error! Error: 0.08

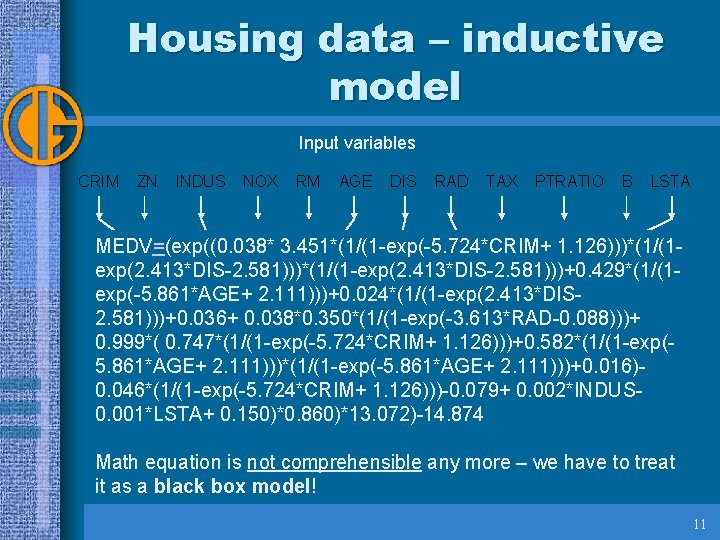

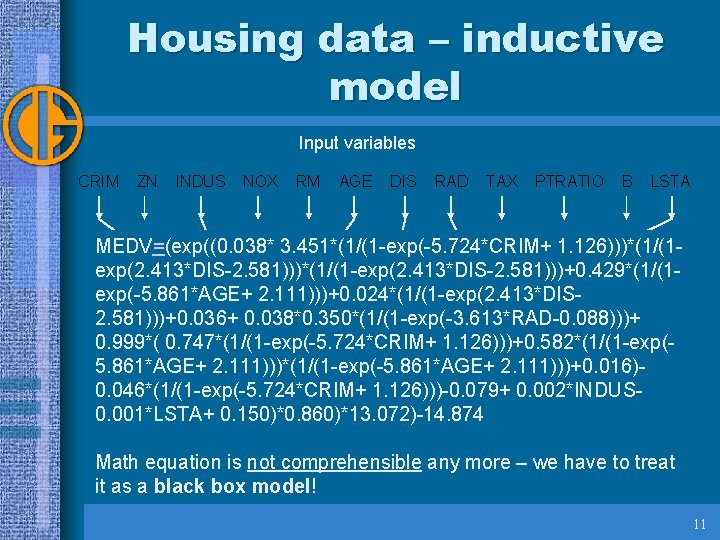

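Housing data – inductive model

Input variables: CRIM, ZN, INDUS, NOX, RM, AGE, DIS, RAD, TAX, PTRATIO, B, LSTA. Output variable: MEDV.

Units shown: S, S, S, L, P, L, E (sigmoid, linear, polynomial, exponential). Error: 0.08

$$
\begin{aligned}
\mathrm{MEDV} = \Big(\exp\Big(\Big(\;& 0.038 \cdot 3.451 \cdot \frac{1}{1-\exp(-5.724\,\mathrm{CRIM}+1.126)} \cdot \frac{1}{1-\exp(2.413\,\mathrm{DIS}-2.581)} \cdot \frac{1}{1-\exp(2.413\,\mathrm{DIS}-2.581)} \\
&+ 0.429 \cdot \frac{1}{1-\exp(-5.861\,\mathrm{AGE}+2.111)} + 0.024 \cdot \frac{1}{1-\exp(2.413\,\mathrm{DIS}-2.581)} + 0.036 \\
&+ 0.038 \cdot 0.350 \cdot \frac{1}{1-\exp(-3.613\,\mathrm{RAD}-0.088)} \\
&+ 0.999 \cdot \Big(0.747 \cdot \frac{1}{1-\exp(-5.724\,\mathrm{CRIM}+1.126)} + 0.582 \cdot \frac{1}{1-\exp(-5.861\,\mathrm{AGE}+2.111)} \cdot \frac{1}{1-\exp(-5.861\,\mathrm{AGE}+2.111)} + 0.016\Big) \\
&- 0.046 \cdot \frac{1}{1-\exp(-5.724\,\mathrm{CRIM}+1.126)} - 0.079 + 0.002\,\mathrm{INDUS} - 0.001\,\mathrm{LSTA} + 0.150\Big) \cdot 0.860\Big) \cdot 13.072\Big) - 14.874
\end{aligned}
$$

The math equation is not comprehensible any more – we have to treat it as a black-box model!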

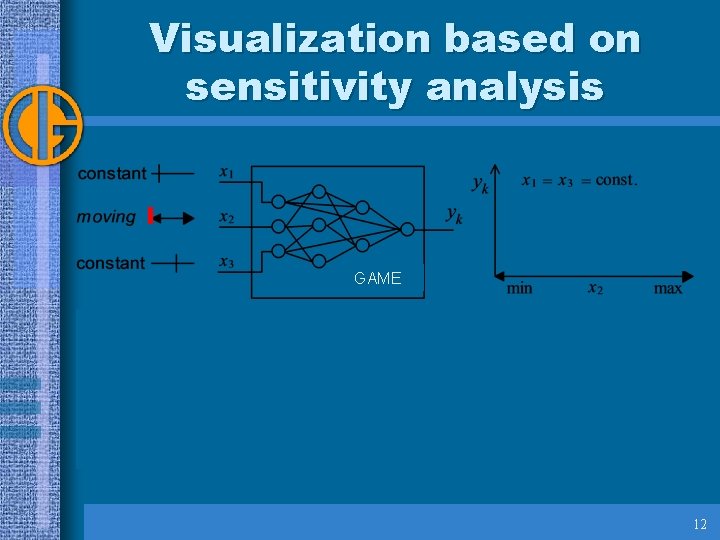

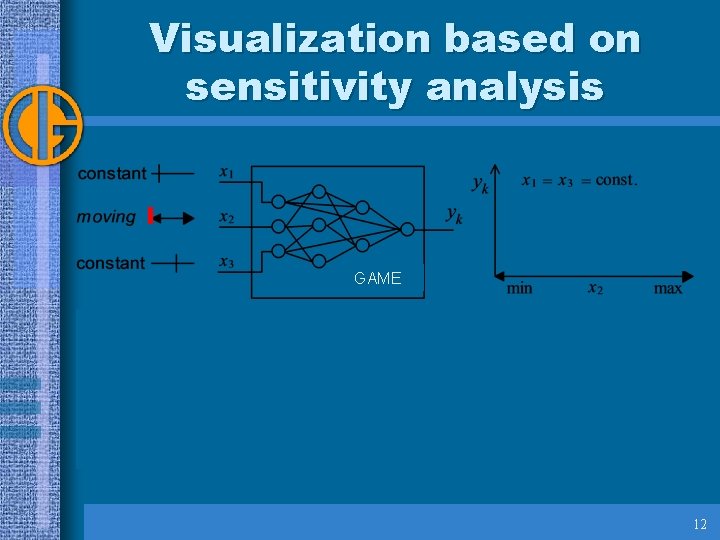

Visualization based on sensitivity analysis

Figure label: GAME

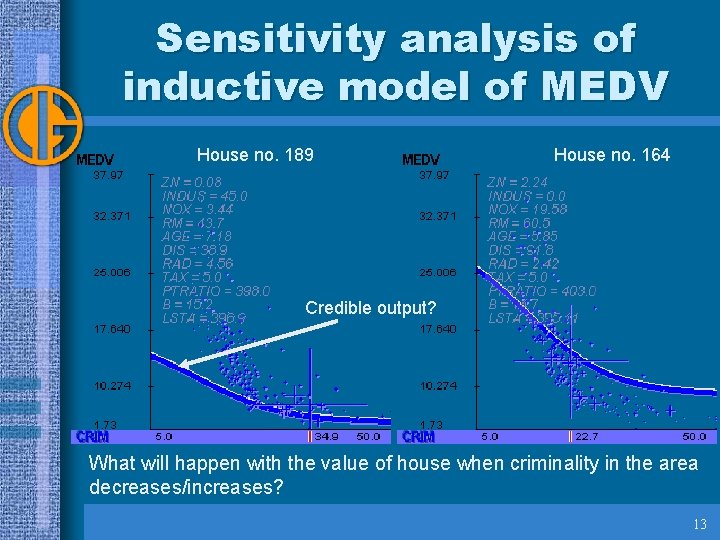

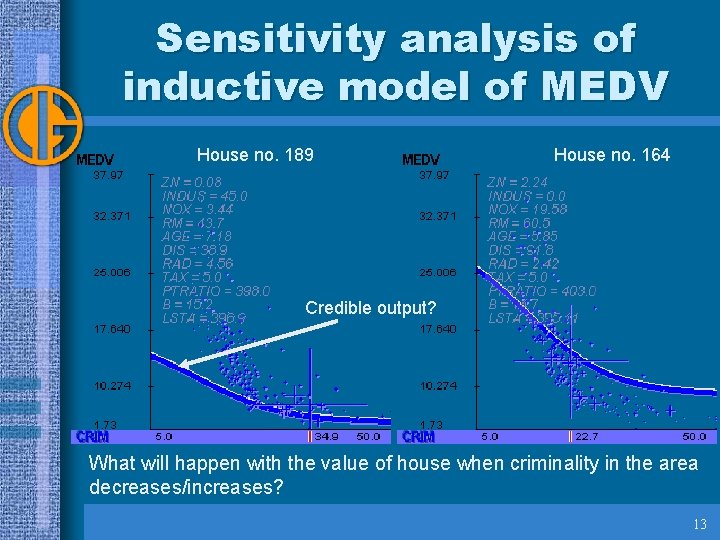

Sensitivity analysis of inductive model of MEDV

What happens to the value of a house when crime in the area decreases or increases?

Plots: house no. 189 and house no. 164. Is the output credible?
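The question on this slide can be sketched as a one-at-a-time sweep: one input of a chosen record is varied while all other inputs stay fixed, and the model response is recorded. This is a minimal sketch, not the GAME implementation; `sensitivity_sweep`, `toy_model`, and the values in `house` are hypothetical stand-ins for illustration.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, float]
Model = Callable[[Record], float]


def sensitivity_sweep(model: Model, record: Record, variable: str,
                      lo: float, hi: float, steps: int = 50) -> List[Tuple[float, float]]:
    """Vary one input over [lo, hi]; keep all other inputs at the record's values.

    Requires steps >= 2. Returns a list of (input value, model output) pairs.
    """
    curve = []
    for k in range(steps):
        x = lo + (hi - lo) * k / (steps - 1)
        probe = dict(record)      # copy, so the original record is not modified
        probe[variable] = x
        curve.append((x, model(probe)))
    return curve


def toy_model(r: Record) -> float:
    # Hypothetical stand-in for a trained black-box model of MEDV.
    return 30.0 - 0.5 * r["CRIM"] + 0.01 * r["AGE"]


# Hypothetical record; the values are illustrative only.
house = {"CRIM": 2.0, "AGE": 60.0}
curve = sensitivity_sweep(toy_model, house, "CRIM", 0.0, 10.0, steps=11)
```

Plotting the pairs in `curve` gives the kind of response curve shown for a single house; the record itself is left untouched.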

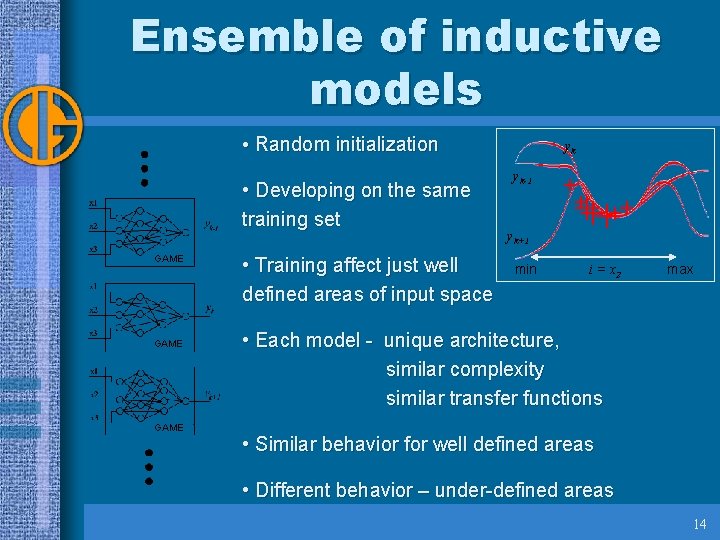

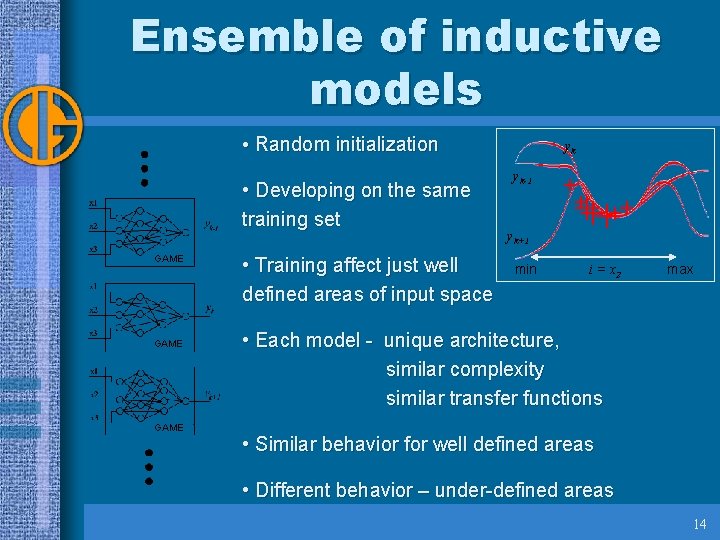

Ensemble of inductive models

- Random initialization
- Developed on the same training set
- Training affects only well-defined areas of the input space
- Each model: unique architecture, similar complexity, similar transfer functions
- Similar behavior in well-defined areas
- Different behavior in under-defined areas

Figure labels: GAME; responses $y_{k-1}$, $y_k$, $y_{k+1}$; input $x_2$ ranging from min to max

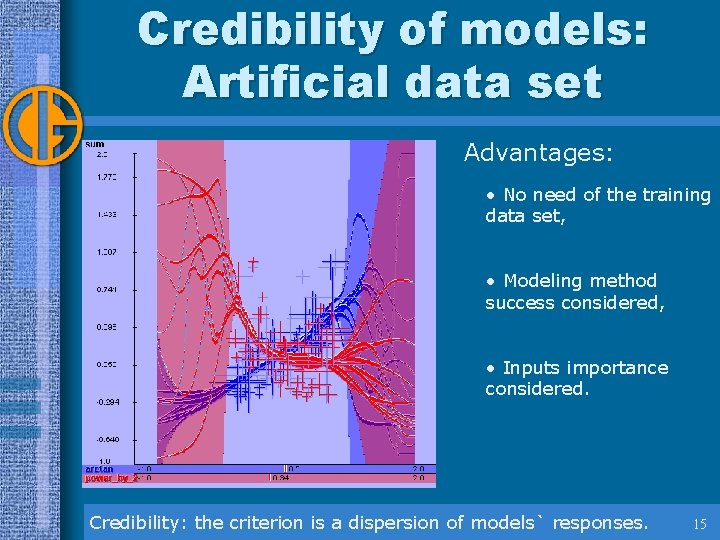

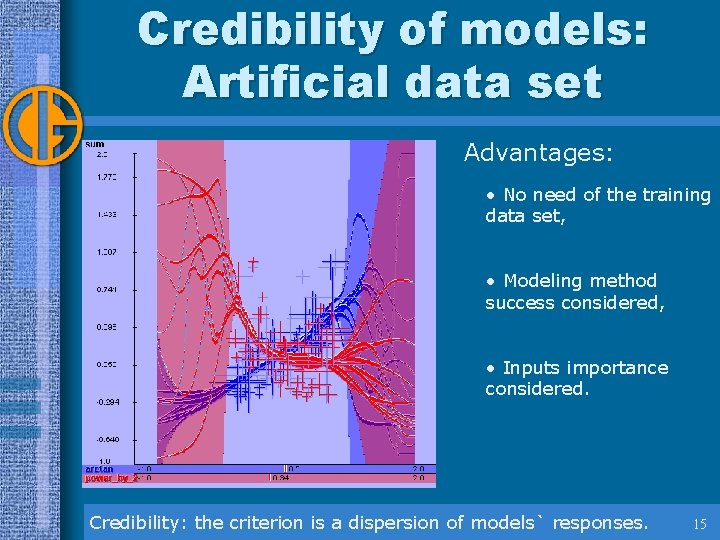

Credibility of models: Artificial data set

Advantages:

- No need for the training data set
- Success of the modeling method is taken into account
- Importance of inputs is taken into account

Credibility: the criterion is the dispersion of the models' responses.
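The credibility criterion can be illustrated with a small sketch that queries every model of an ensemble at the same point and reports the mean response together with its dispersion. The population standard deviation is used here as one possible dispersion measure; that choice, the function name `ensemble_response`, and the toy models are assumptions, not taken from the slides.

```python
import statistics
from typing import Callable, Dict, Sequence, Tuple

Record = Dict[str, float]
Model = Callable[[Record], float]


def ensemble_response(models: Sequence[Model], record: Record) -> Tuple[float, float]:
    """Return (mean response, dispersion) of the ensemble at one input point."""
    outputs = [m(record) for m in models]
    return statistics.mean(outputs), statistics.pstdev(outputs)


def _excess(x: float) -> float:
    # Grows only outside the region x <= 1, which plays the role of the
    # area covered by training data in this toy example.
    return max(0.0, x - 1.0) ** 2


# Hypothetical ensemble: the models agree for x <= 1 and diverge beyond it.
toy_ensemble = [
    lambda r: r["x"],
    lambda r: r["x"] + _excess(r["x"]),
    lambda r: r["x"] - _excess(r["x"]),
]

inside = ensemble_response(toy_ensemble, {"x": 0.5})   # well-defined area
outside = ensemble_response(toy_ensemble, {"x": 3.0})  # under-defined area
```

A small dispersion (as for `inside`) suggests a credible response; a large one (as for `outside`) marks an area where the models disagree.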

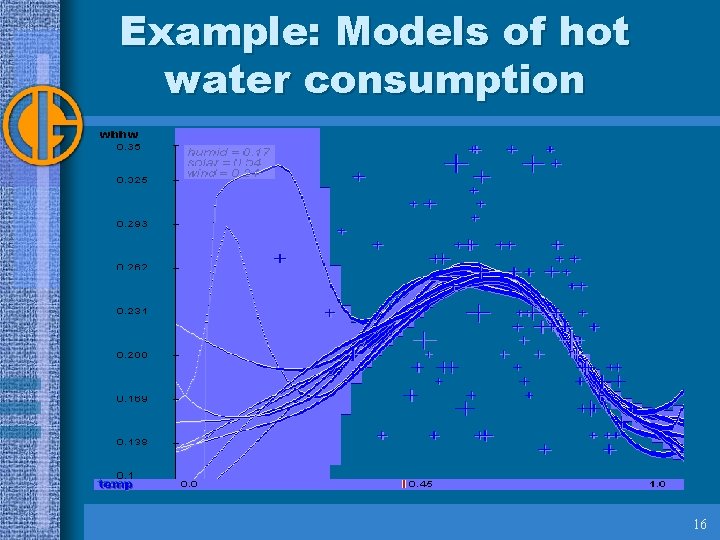

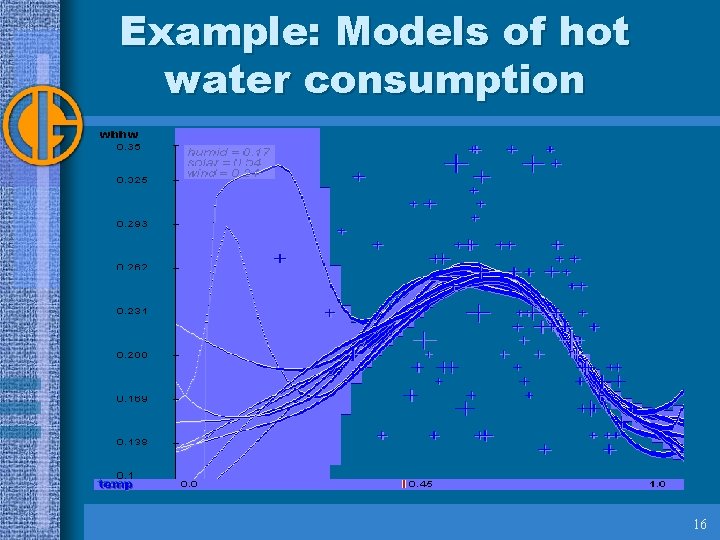

Example: Models of hot water consumption

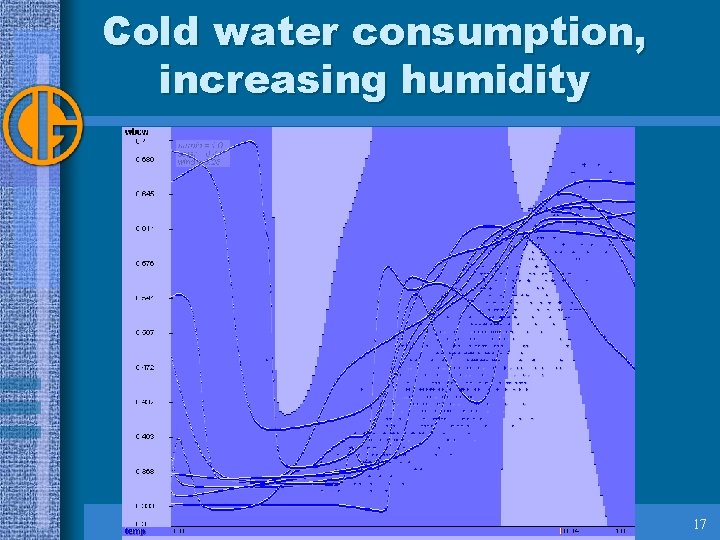

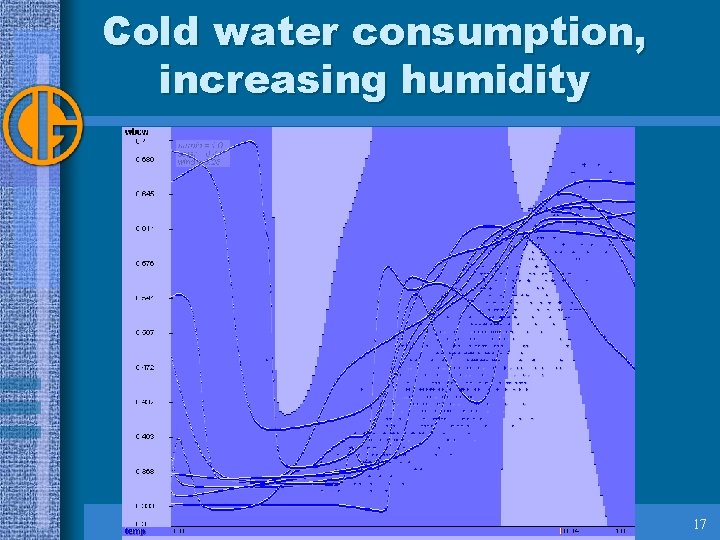

Cold water consumption, increasing humidity

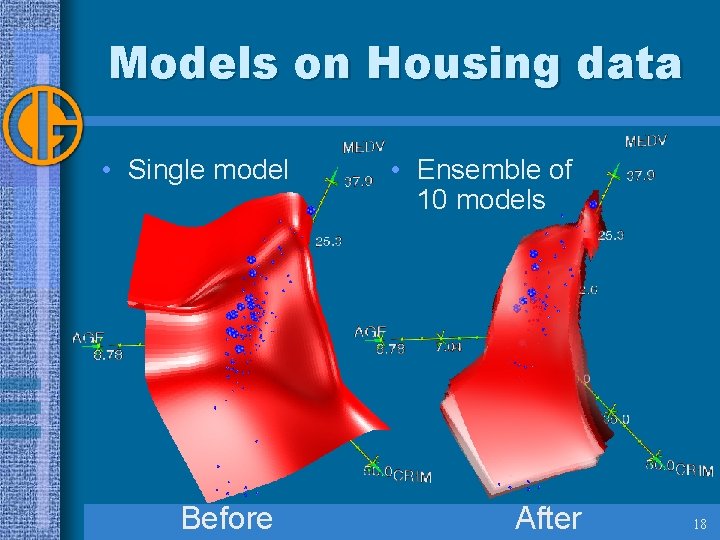

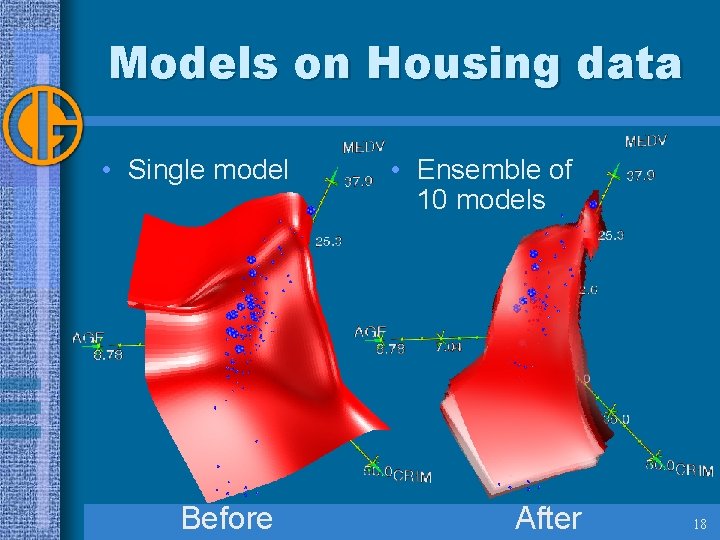

Models on Housing data

- Before: single model
- After: ensemble of 10 models

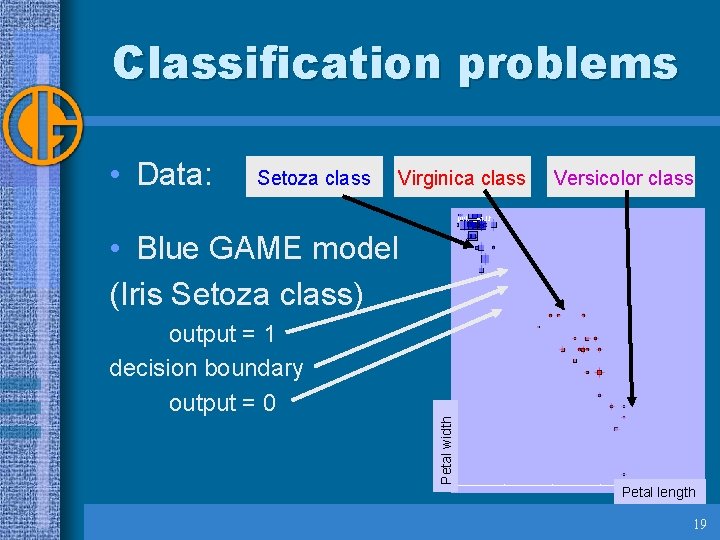

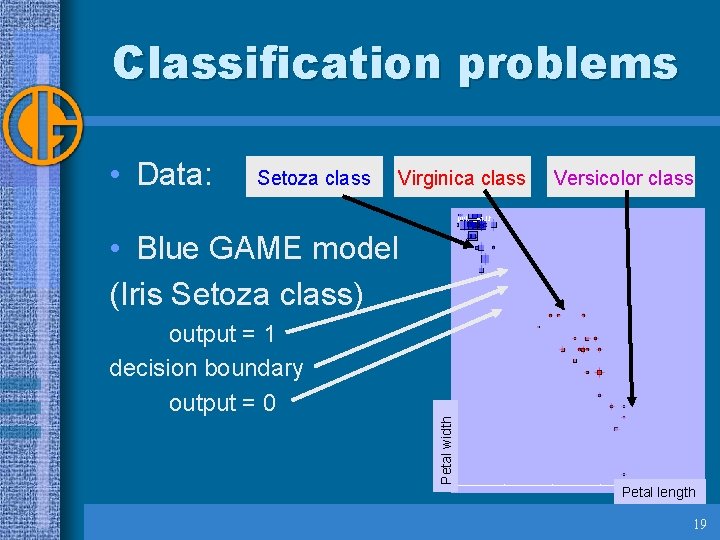

Classification problems

- Data: Setosa class, Virginica class, Versicolor class
- Blue GAME model (Iris Setosa class)

Plot: axes are petal length and petal width; the decision boundary separates the region with output = 1 from the region with output = 0

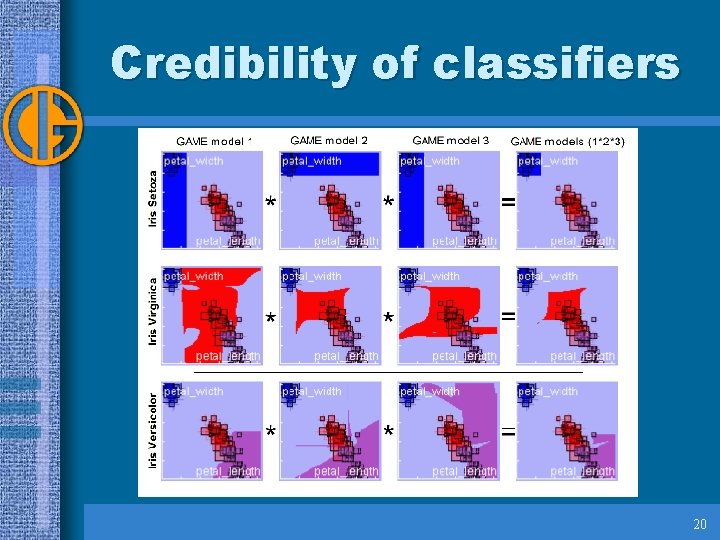

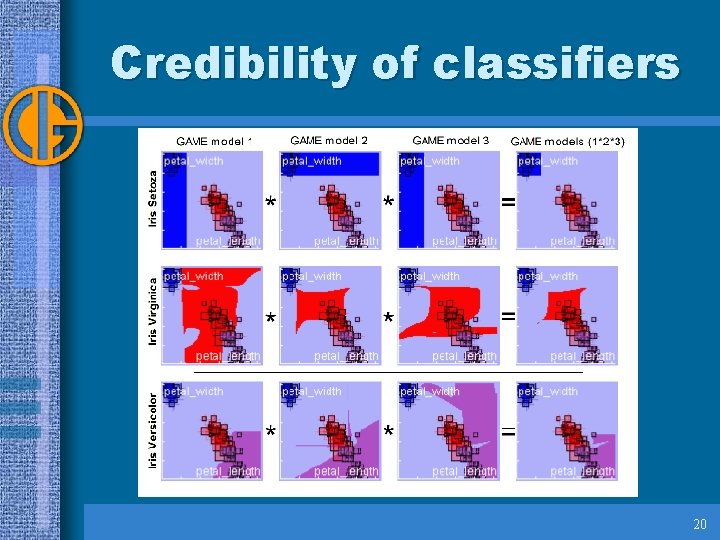

Credibility of classifiers

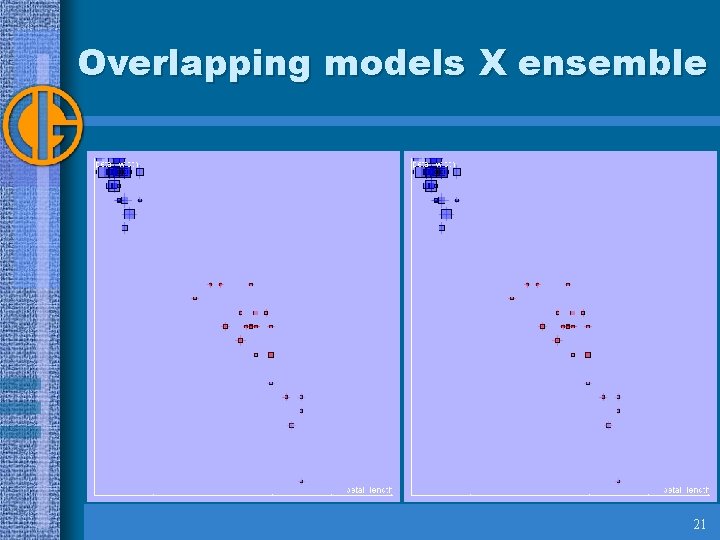

Overlapping models vs. ensemble

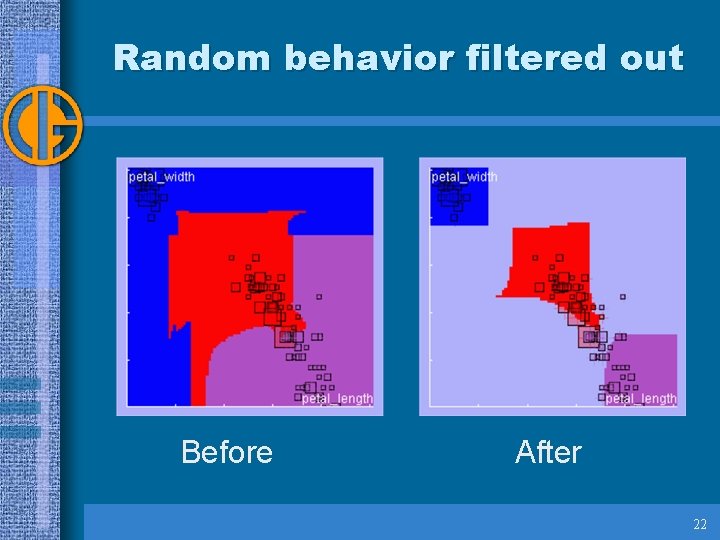

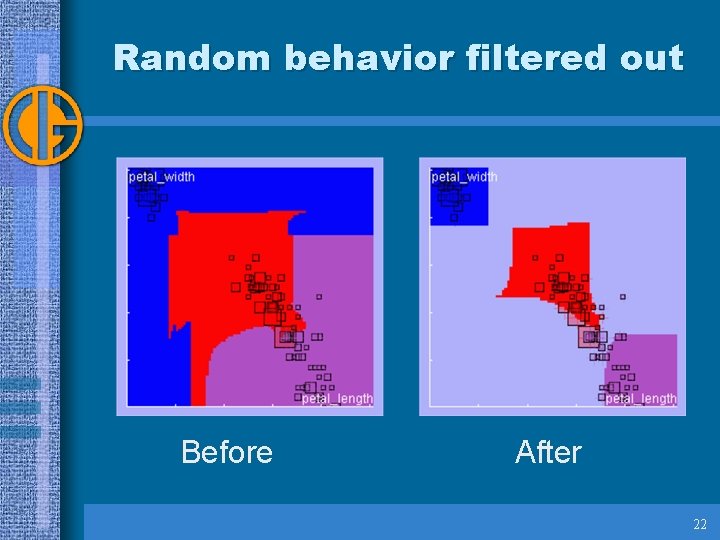

Random behavior filtered out

Panels: before, after

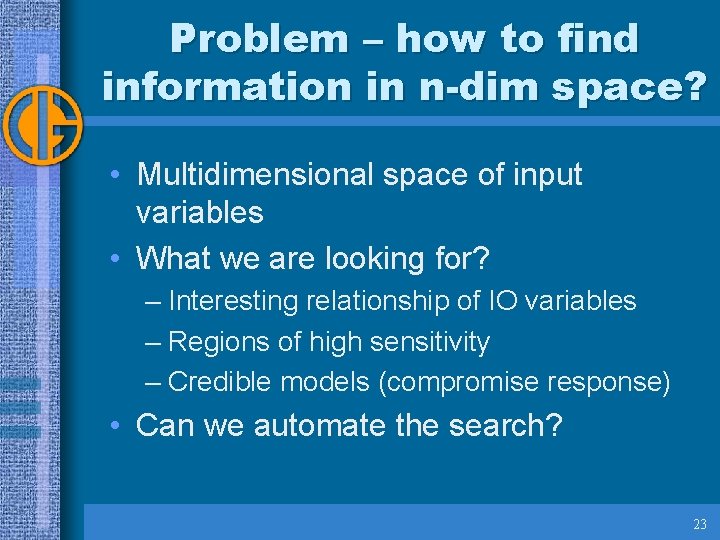

Problem – how to find information in n-dimensional space?

- Multidimensional space of input variables
- What are we looking for?
  - Interesting relationships between input and output variables
  - Regions of high sensitivity
  - Credible models (compromise response)
- Can we automate the search?

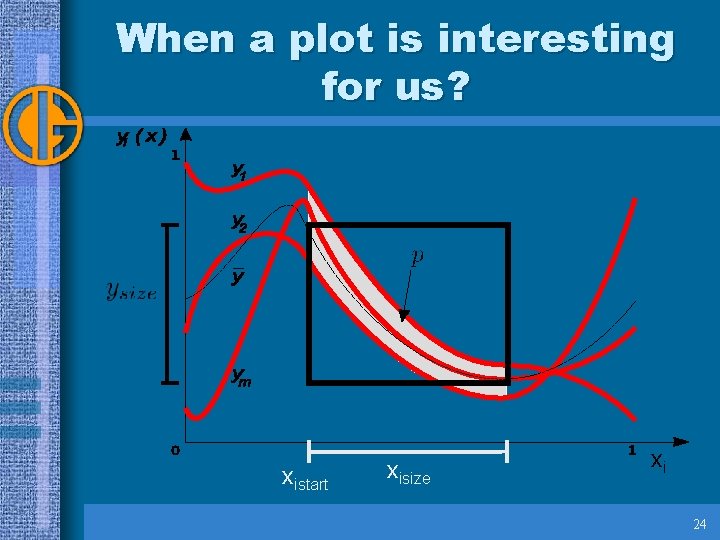

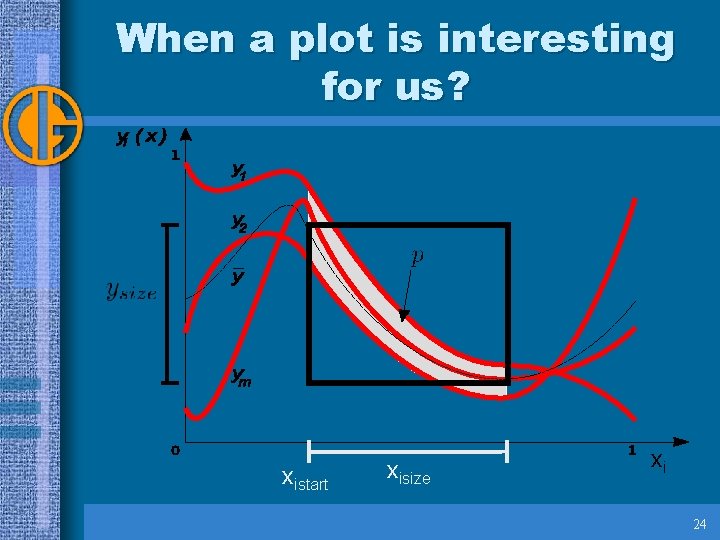

When is a plot interesting for us?

Figure labels: $x_i$, $x_{i\,\mathrm{start}}$, $x_{i\,\mathrm{size}}$

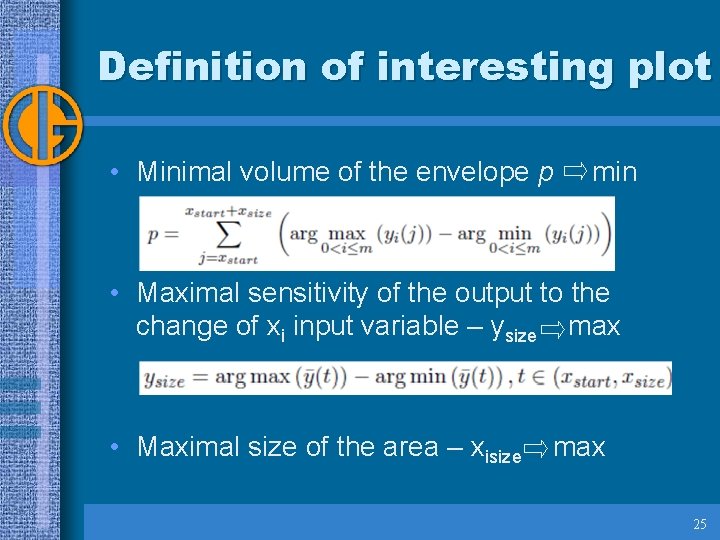

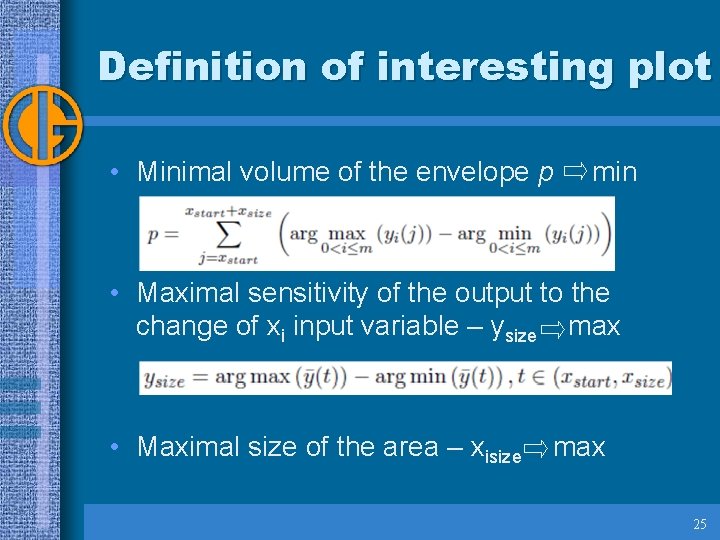

Definition of interesting plot

- Minimal volume of the envelope: $p \to \min$
- Maximal sensitivity of the output to changes of the input variable $x_i$: $y_{\mathrm{size}} \to \max$
- Maximal size of the area: $x_{i\,\mathrm{size}} \to \max$

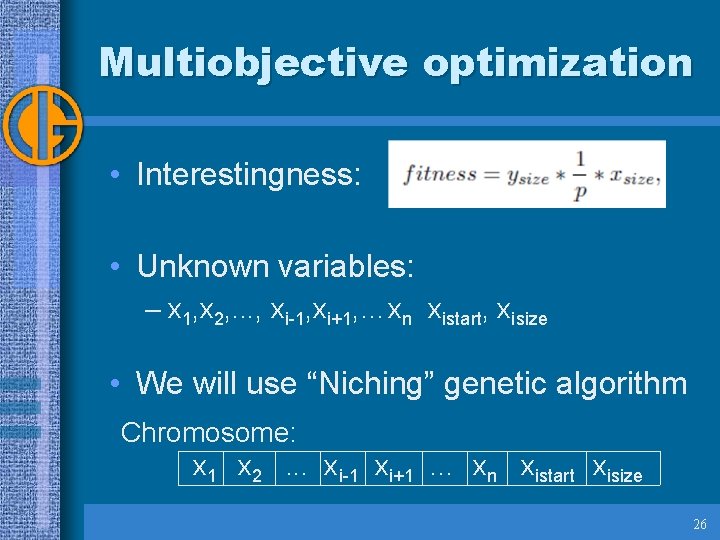

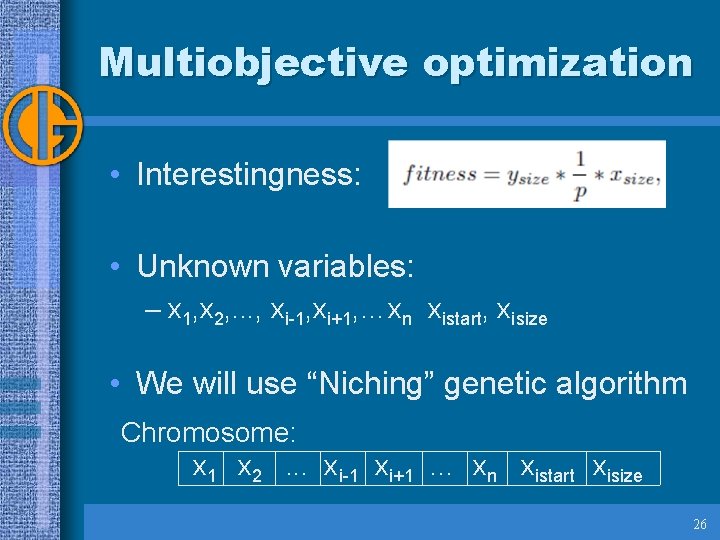

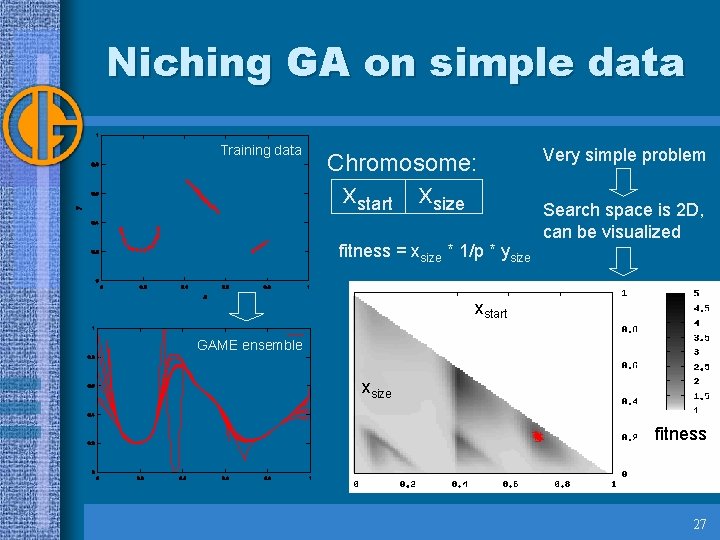

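Multiobjective optimization

- Interestingness: defined by the formula in the slide image
- Unknown variables: $x_1, x_2, \ldots, x_{i-1}, x_{i+1}, \ldots, x_n$, $x_{i\,\mathrm{start}}$, $x_{i\,\mathrm{size}}$
- We will use a "niching" genetic algorithm

Chromosome: $x_1 \mid x_2 \mid \ldots \mid x_{i-1} \mid x_{i+1} \mid \ldots \mid x_n \mid x_{i\,\mathrm{start}} \mid x_{i\,\mathrm{size}}$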
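The chromosome layout above can be made concrete with a short decoding sketch: the first n - 1 genes fix all inputs except the swept one, and the last two genes give the start and size of the interval on the swept input. The function name `decode` and the variable names in the usage lines are hypothetical.

```python
from typing import Dict, Sequence, Tuple


def decode(chromosome: Sequence[float], names: Sequence[str],
           swept: str) -> Tuple[Dict[str, float], float, float]:
    """Split a chromosome into (fixed inputs, interval start, interval size).

    `names` lists all n input variables; `swept` is the one shown on the
    plot axis, so the chromosome holds n - 1 fixed values plus two genes.
    """
    others = [n for n in names if n != swept]
    if len(chromosome) != len(others) + 2:
        raise ValueError("expected one gene per fixed input plus start and size")
    fixed = dict(zip(others, chromosome))  # zip stops after the fixed inputs
    xstart, xsize = chromosome[-2], chromosome[-1]
    return fixed, xstart, xsize


# Hypothetical three-input example with "b" as the swept variable.
fixed, xstart, xsize = decode([0.1, 0.2, 0.3, 0.4], ["a", "b", "c"], "b")
```

Keeping the interval genes at the end means the same encoding works for any choice of swept variable.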

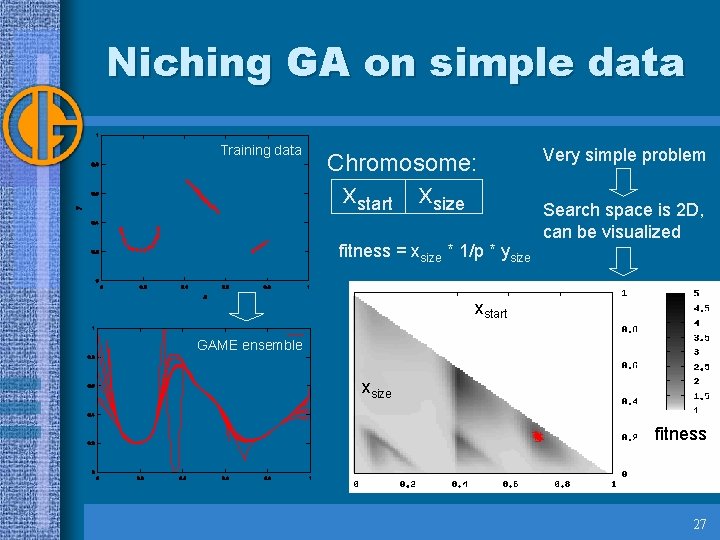

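Niching GA on simple data

- Chromosome: $x_{\mathrm{start}} \mid x_{\mathrm{size}}$
- $\text{fitness} = x_{\mathrm{size}} \cdot \frac{1}{p} \cdot y_{\mathrm{size}}$
- Very simple problem: the search space is 2D and can be visualized

Figure labels: training data; GAME ensemble; axes $x_{\mathrm{start}}$, $x_{\mathrm{size}}$, fitness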
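The fitness on this slide can be sketched for a one-input ensemble as follows. How p and ysize are estimated is an assumption here: p is approximated as the interval size times the mean gap between the highest and lowest model response, and ysize as the range of the mean ensemble response. The small `eps` guard against division by zero and the toy models are also assumptions.

```python
from typing import Callable, Sequence

Model1D = Callable[[float], float]


def interestingness(models: Sequence[Model1D], xstart: float, xsize: float,
                    samples: int = 20, eps: float = 1e-9) -> float:
    """fitness = xsize * (1 / p) * ysize on the interval [xstart, xstart + xsize]."""
    xs = [xstart + xsize * k / (samples - 1) for k in range(samples)]
    responses = [[m(x) for m in models] for x in xs]
    # Envelope volume p: interval size times the mean gap between models.
    widths = [max(r) - min(r) for r in responses]
    p = xsize * sum(widths) / samples
    # Output sensitivity ysize: range of the mean ensemble response.
    means = [sum(r) / len(r) for r in responses]
    ysize = max(means) - min(means)
    return xsize * ysize / (p + eps)


def _excess(x: float) -> float:
    return max(0.0, x - 1.0) ** 2


# Hypothetical ensemble: a narrow envelope for x <= 1, a widening one beyond.
toy_models = [
    lambda x: x,
    lambda x: x + 0.05 + _excess(x),
    lambda x: x - 0.05 - _excess(x),
]

fit_agree = interestingness(toy_models, xstart=0.0, xsize=1.0)
fit_diverge = interestingness(toy_models, xstart=2.0, xsize=1.0)
```

With these toy models, the interval where the ensemble agrees scores much higher than the interval where it diverges, which is the behavior the fitness is meant to reward.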

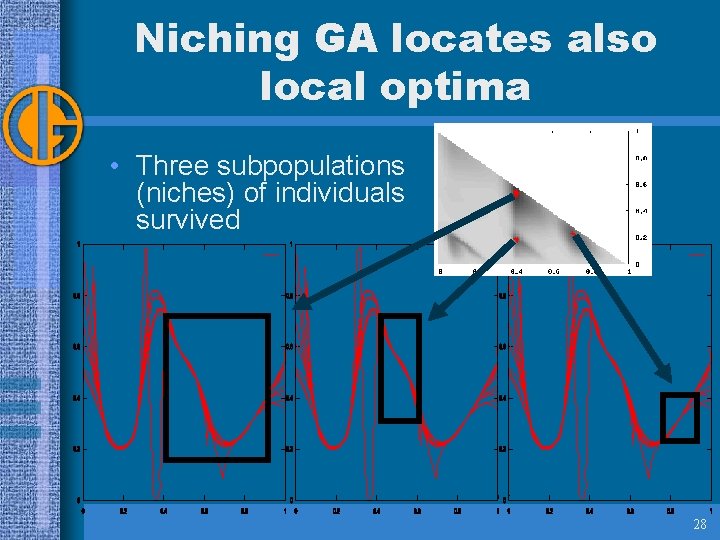

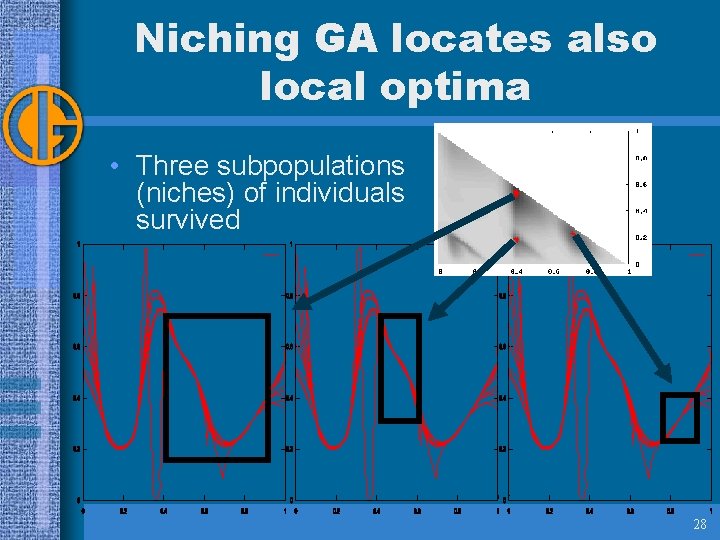

Niching GA also locates local optima

- Three subpopulations (niches) of individuals survived
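The slides do not say which niching scheme is used. As an illustration only, the sketch below uses deterministic crowding, one common niching method, in which each child competes against the parent it most resembles, so that individuals near different peaks do not displace each other. The 1-D fitness landscape, the operators, and all parameter values are hypothetical.

```python
import math
import random
from typing import List


def fitness(x: float) -> float:
    # Hypothetical 1-D landscape on [0, 1] with several peaks of decreasing height.
    return math.sin(5 * math.pi * x) ** 2 * math.exp(-2 * x)


def _clip(x: float) -> float:
    return min(1.0, max(0.0, x))


def deterministic_crowding(pop: List[float], generations: int,
                           rng: random.Random, sigma: float = 0.02) -> List[float]:
    """Evolve a population of scalars; a child replaces only its closer parent."""
    pop = list(pop)  # work on a copy
    for _ in range(generations):
        rng.shuffle(pop)  # random pairing of parents
        for i in range(0, len(pop) - 1, 2):
            p1, p2 = pop[i], pop[i + 1]
            # Arithmetic crossover plus Gaussian mutation, clipped to [0, 1].
            a = rng.random()
            c1 = _clip(a * p1 + (1 - a) * p2 + rng.gauss(0, sigma))
            c2 = _clip((1 - a) * p1 + a * p2 + rng.gauss(0, sigma))
            # Match each child with the parent it is closer to.
            if abs(p1 - c1) + abs(p2 - c2) > abs(p1 - c2) + abs(p2 - c1):
                c1, c2 = c2, c1
            # A child survives only if it is at least as fit as that parent.
            if fitness(c1) >= fitness(p1):
                pop[i] = c1
            if fitness(c2) >= fitness(p2):
                pop[i + 1] = c2
    return pop


rng = random.Random(0)
initial = [rng.random() for _ in range(40)]
final = deterministic_crowding(initial, generations=100, rng=rng)
```

Because a replacement never lowers the fitness held in a slot, total fitness cannot decrease, and clusters of individuals tend to persist around several peaks rather than collapsing onto the single best one.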

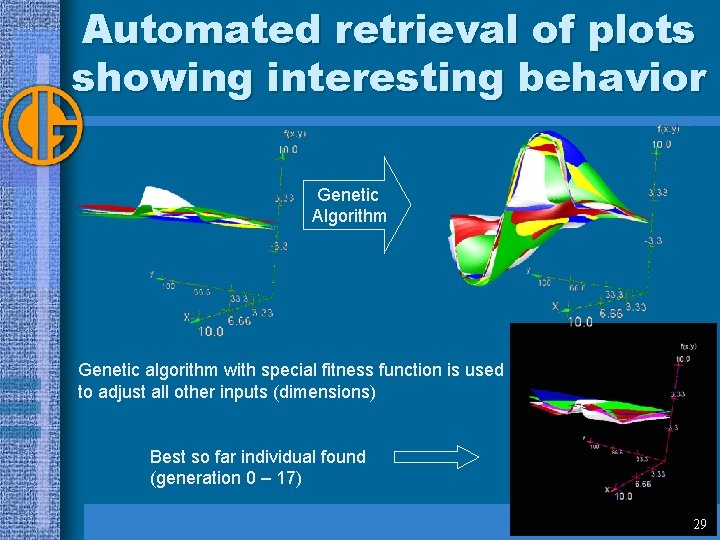

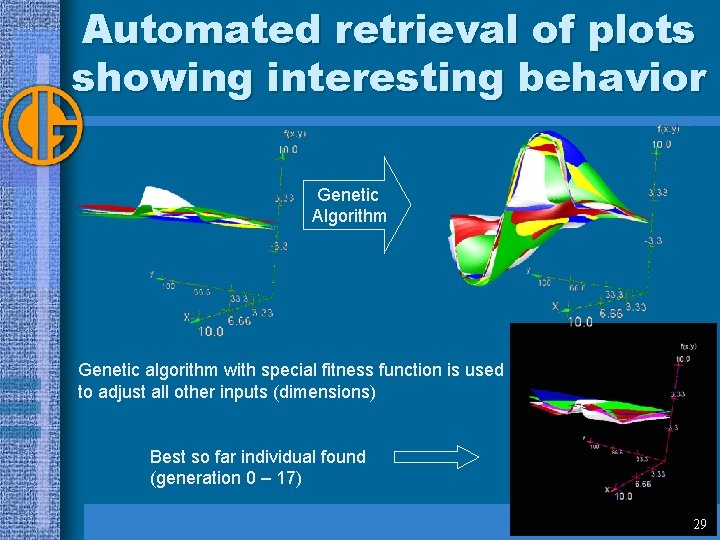

Automated retrieval of plots showing interesting behavior

A genetic algorithm with a special fitness function is used to adjust all other inputs (dimensions).

Figure: best individual found so far (generations 0–17)

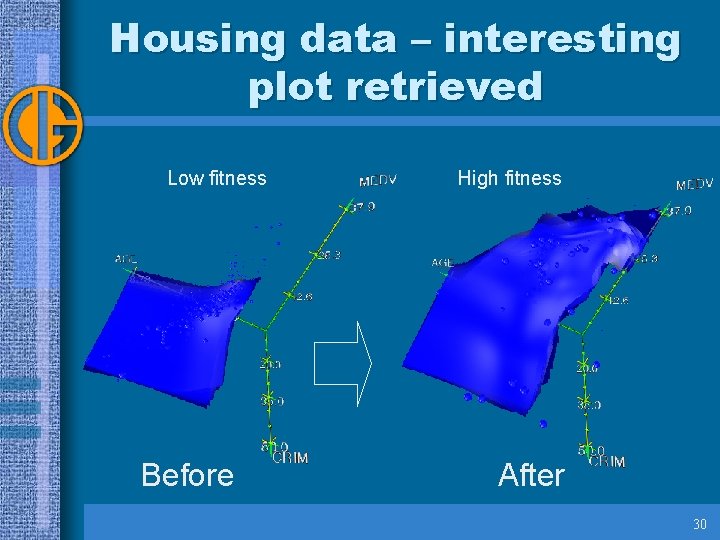

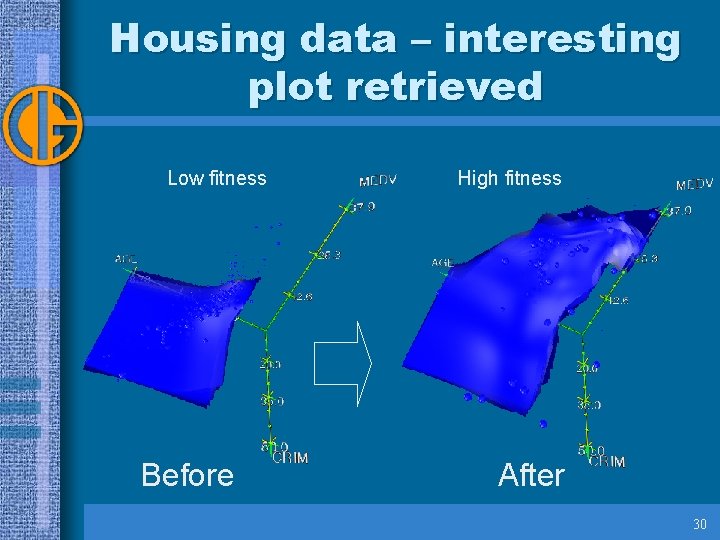

Housing data – interesting plot retrieved

- Before: low fitness
- After: high fitness

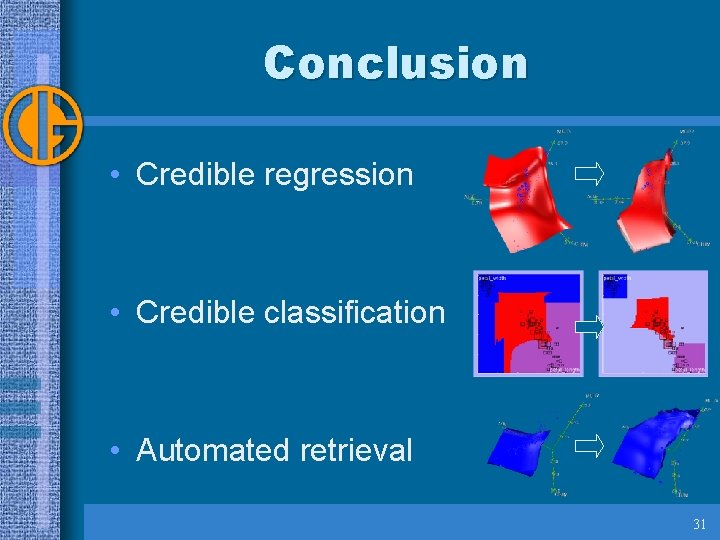

Conclusion

- Credible regression
- Credible classification
- Automated retrieval

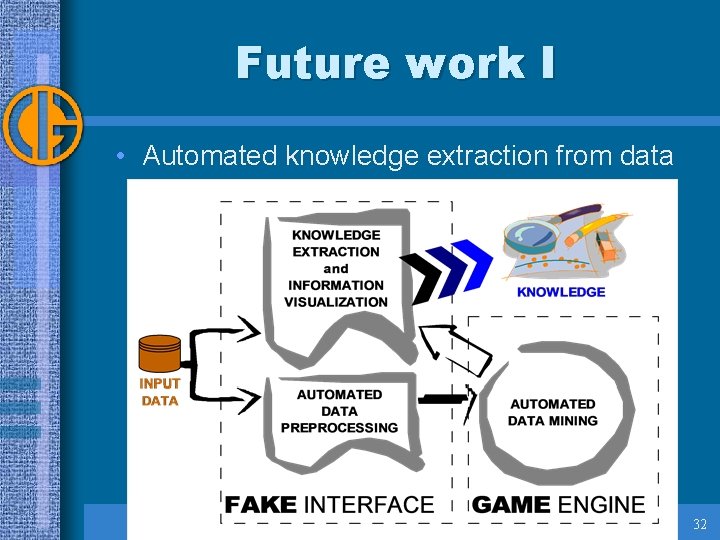

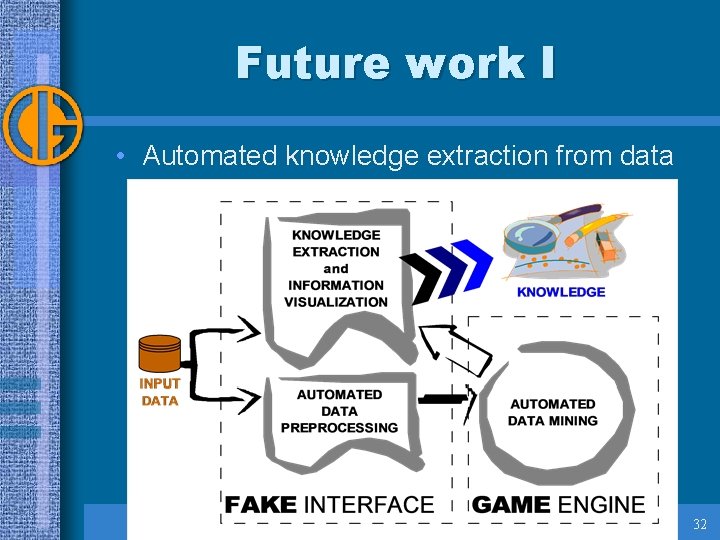

Future work I

- Automated knowledge extraction from data

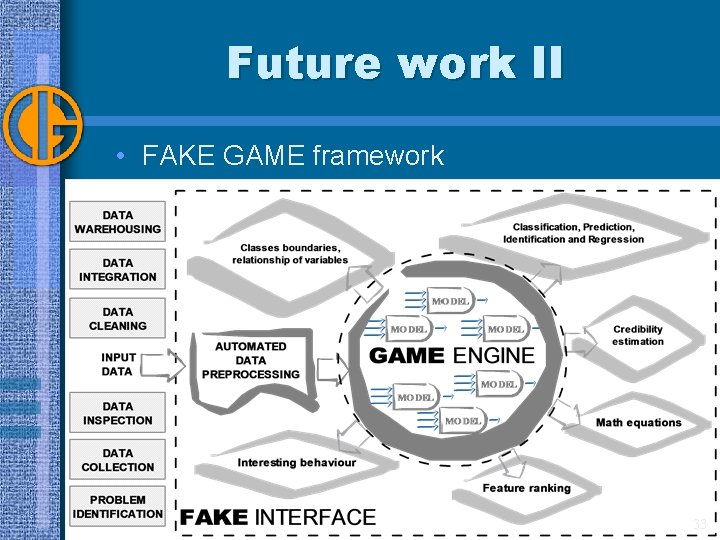

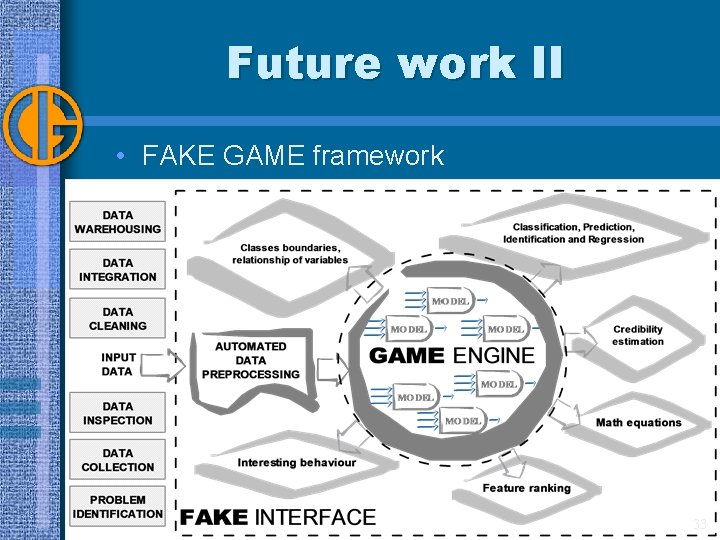

Future work II

- FAKE GAME framework

Future work III

- Just released as an open source project
  - Automated data preprocessing
  - Automated model building, validation
  - Optimization methods
  - Visualization

See and join us: http://www.sourceforge.net/projects/fakegame