Visual Recognition With Humans in the Loop ECCV

- Slides: 50

Visual Recognition With Humans in the Loop. ECCV 2010, Crete, Greece. Steve Branson, Catherine Wah, Florian Schroff, Boris Babenko, Serge Belongie, Peter Welinder, Pietro Perona 1

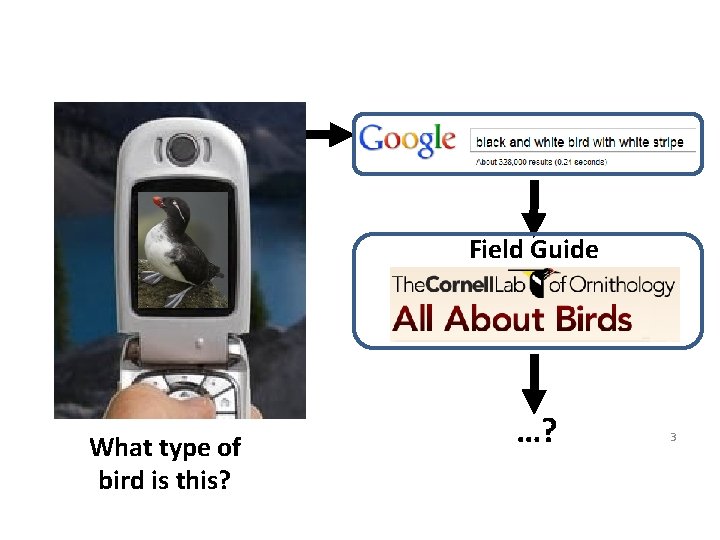

What type of bird is this? 2

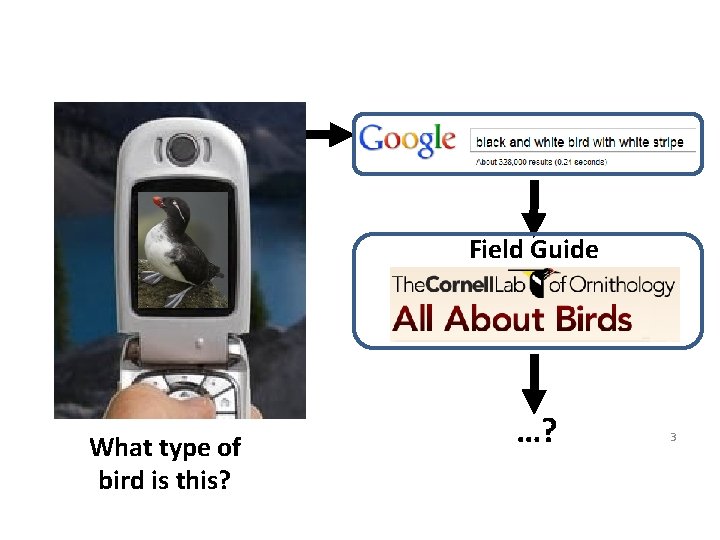

Field Guide: What type of bird is this? …? 3

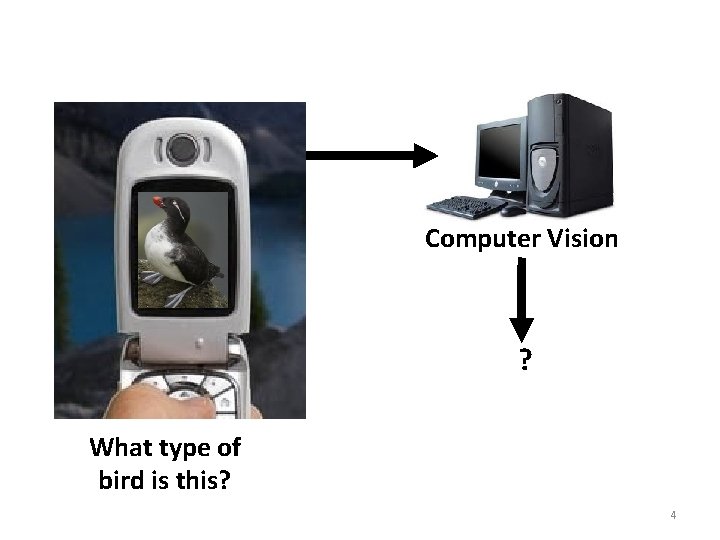

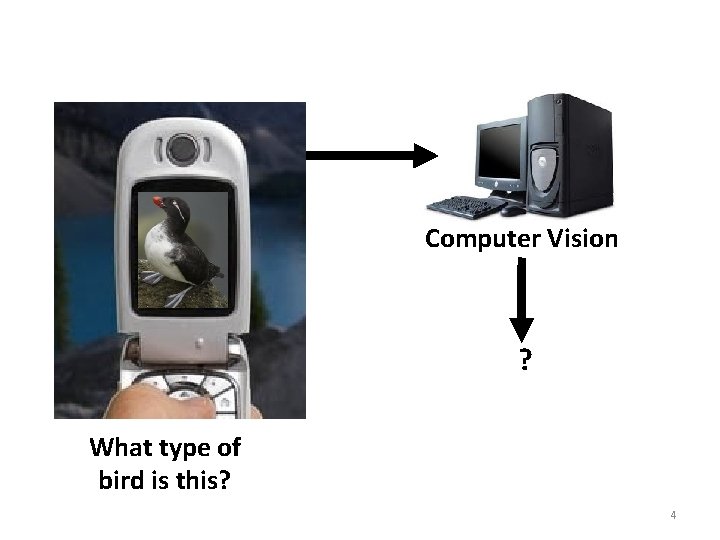

What type of bird is this? Computer Vision: ? 4

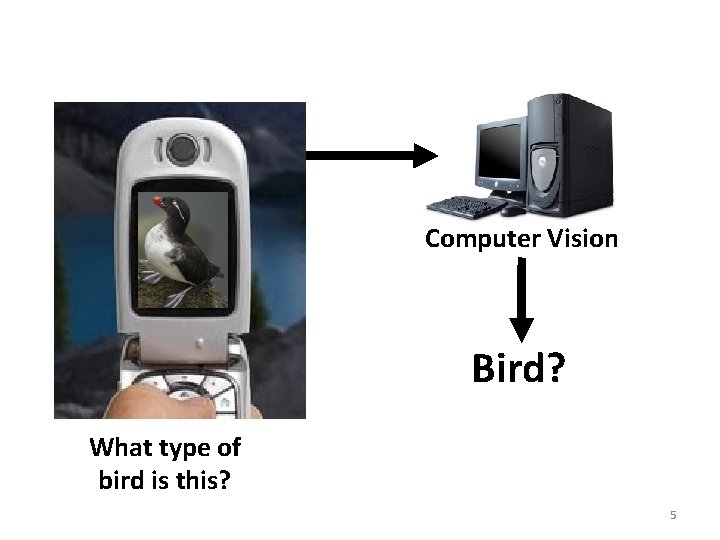

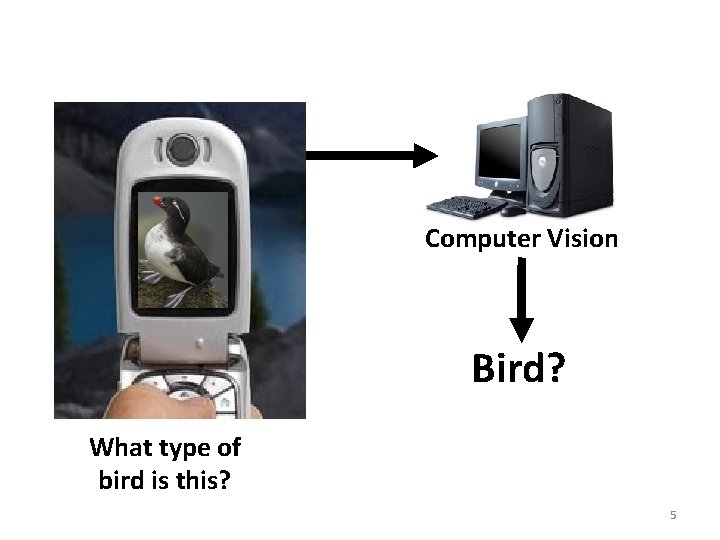

What type of bird is this? Computer Vision: Bird? 5

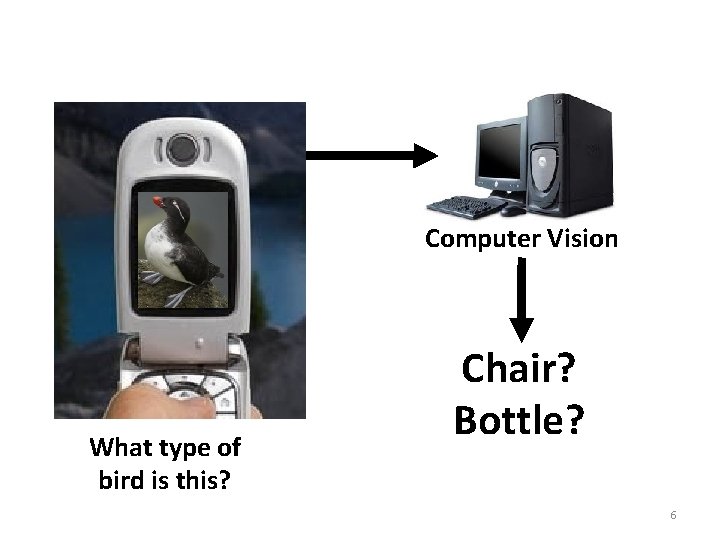

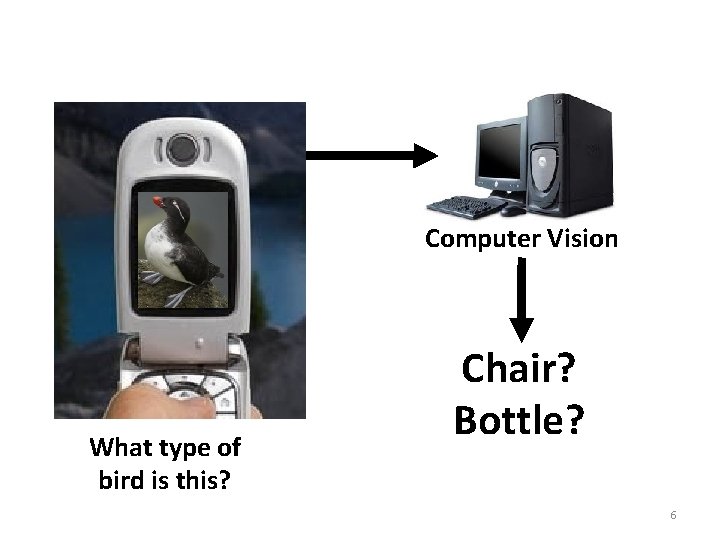

What type of bird is this? Computer Vision: Chair? Bottle? 6

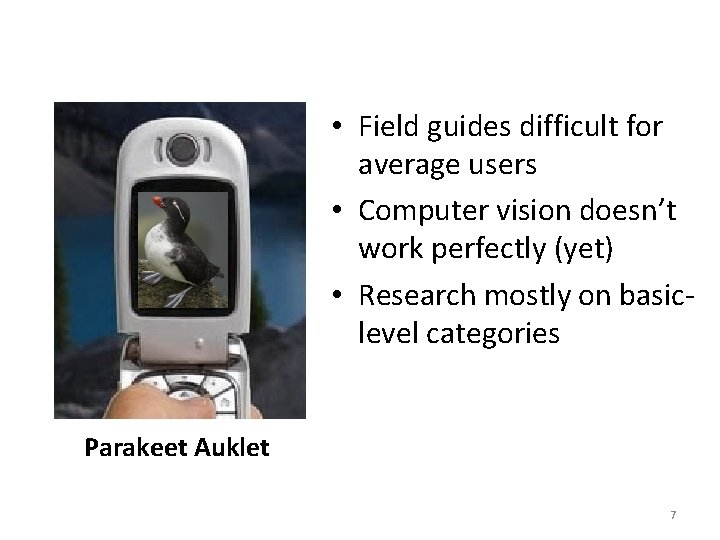

- Field guides are difficult for average users
- Computer vision doesn’t work perfectly (yet)
- Research focuses mostly on basic-level categories

Parakeet Auklet 7

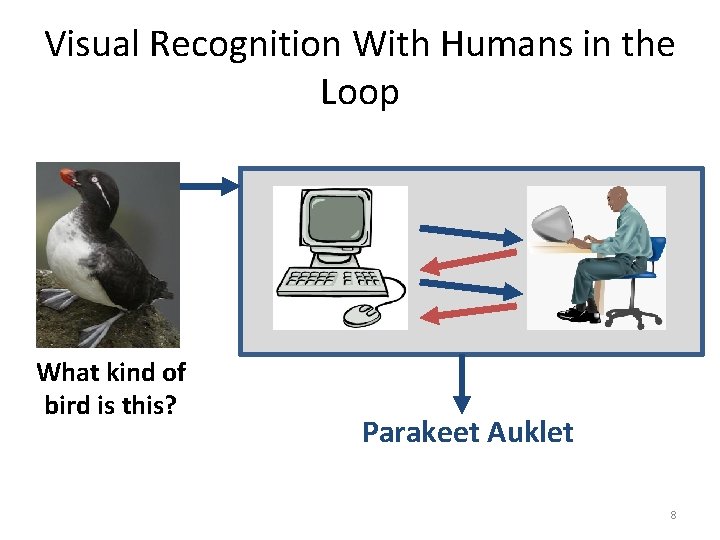

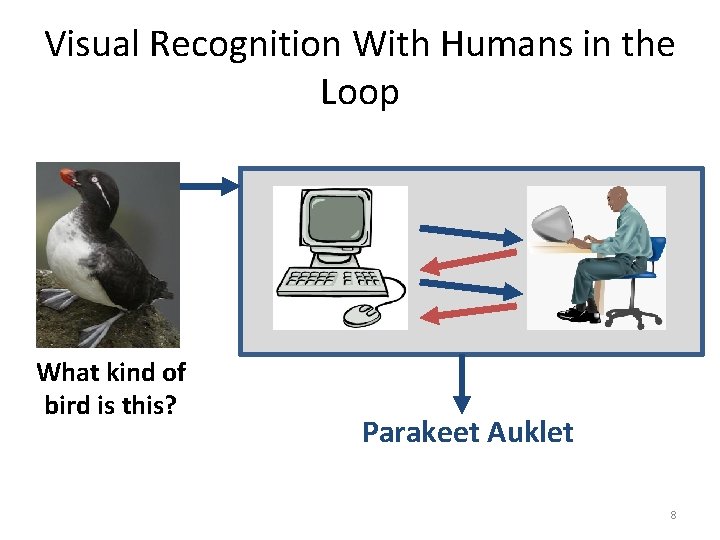

Visual Recognition With Humans in the Loop: What kind of bird is this? Parakeet Auklet 8

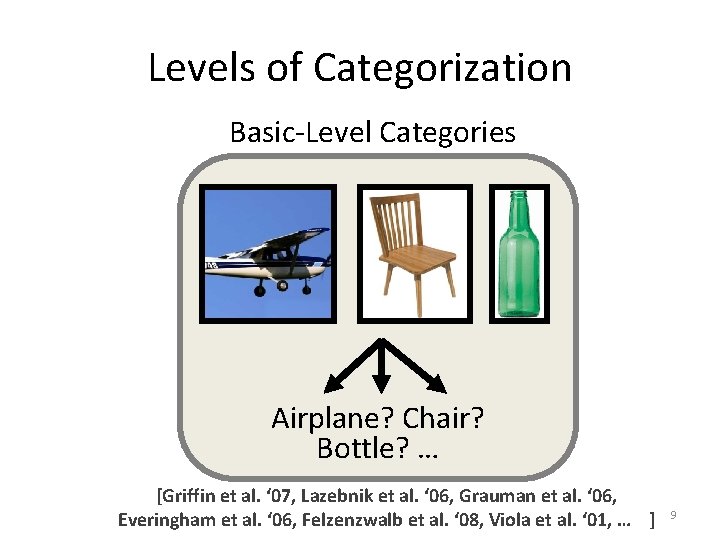

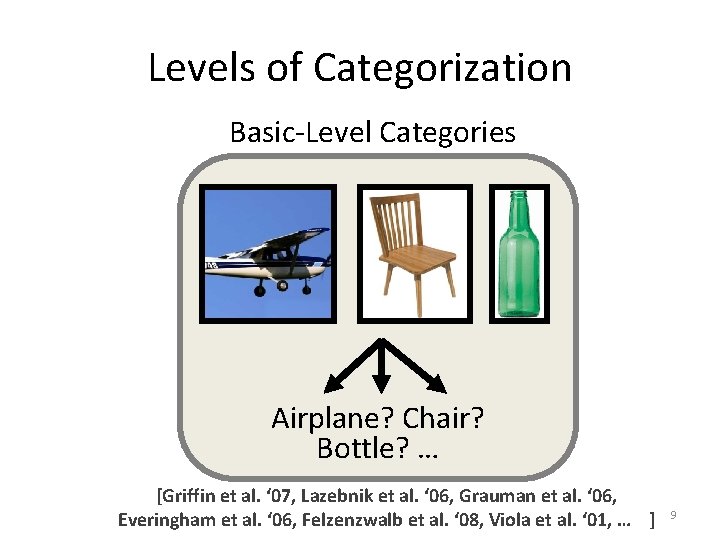

Levels of Categorization: Basic-Level Categories. Airplane? Chair? Bottle? … [Griffin et al. ’07, Lazebnik et al. ’06, Grauman et al. ’06, Everingham et al. ’06, Felzenszwalb et al. ’08, Viola et al. ’01, …] 9

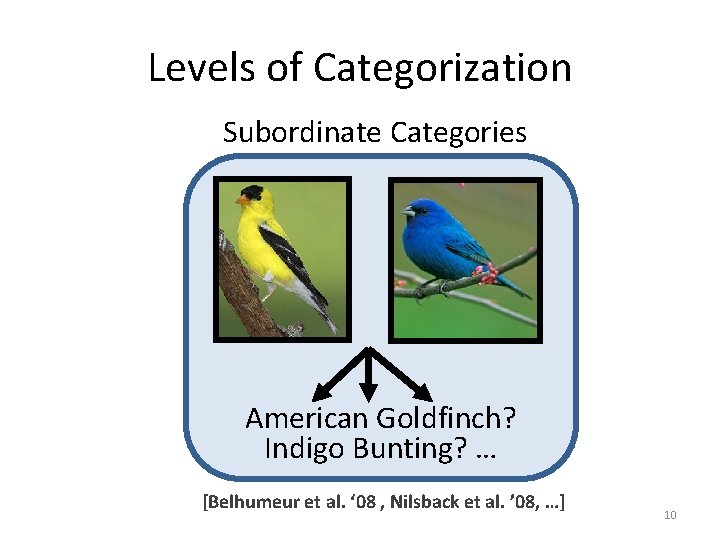

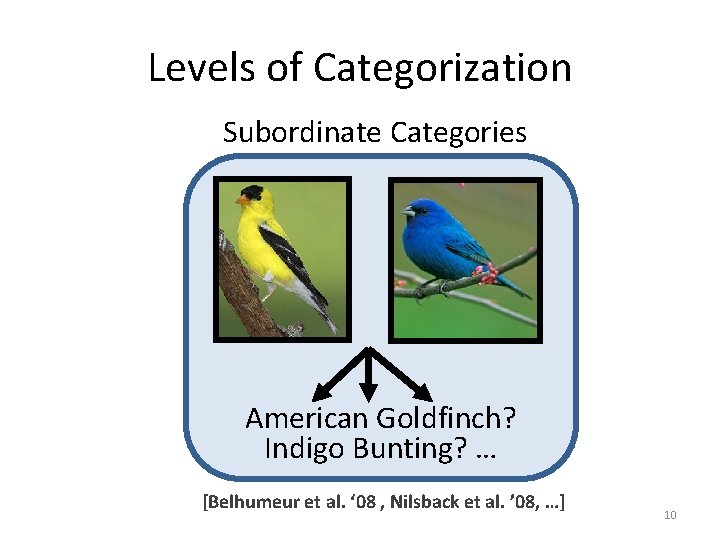

Levels of Categorization: Subordinate Categories. American Goldfinch? Indigo Bunting? … [Belhumeur et al. ’08, Nilsback et al. ’08, …] 10

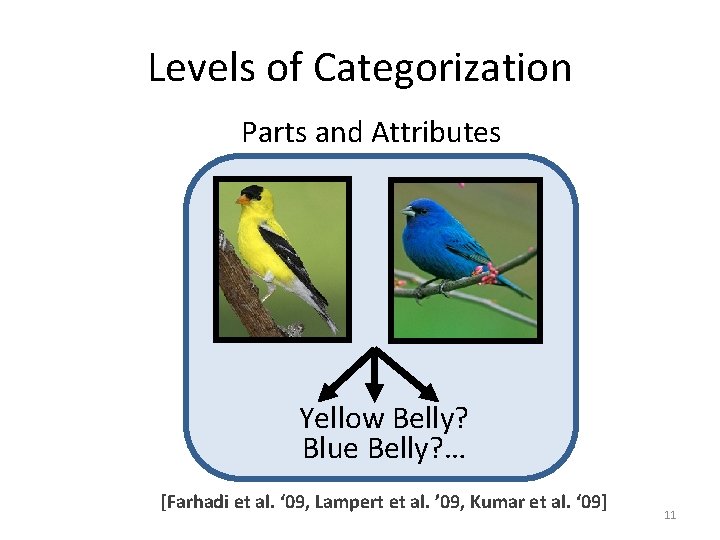

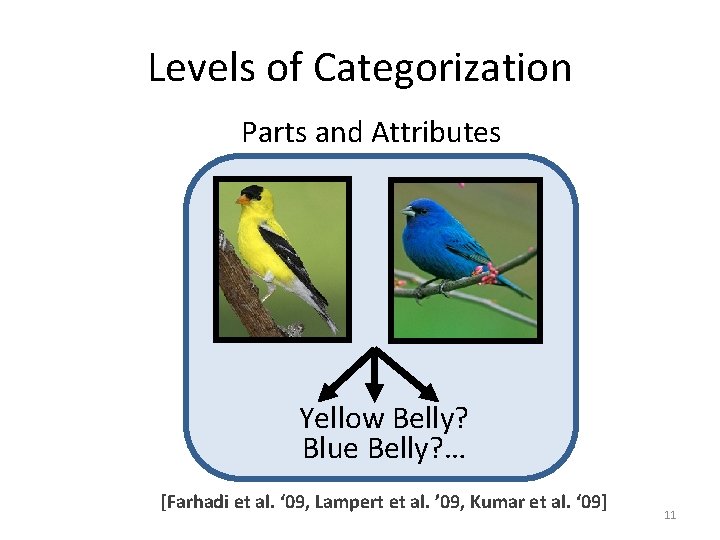

Levels of Categorization: Parts and Attributes. Yellow Belly? Blue Belly? … [Farhadi et al. ’09, Lampert et al. ’09, Kumar et al. ’09] 11

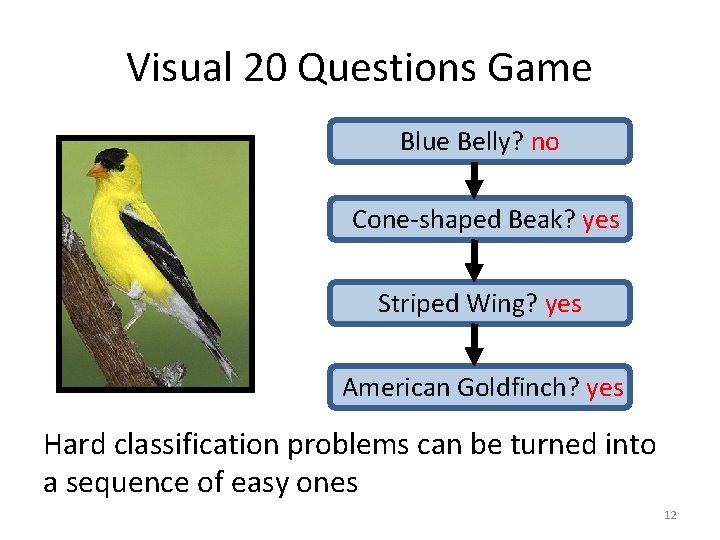

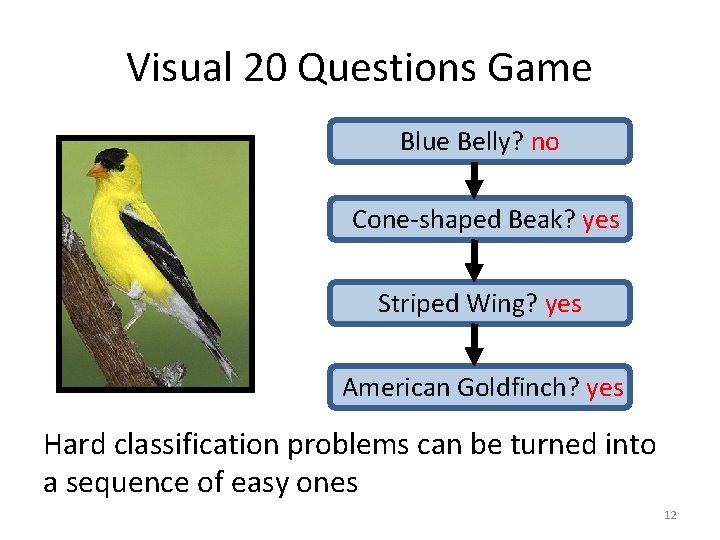

Visual 20 Questions Game: Blue Belly? no. Cone-shaped Beak? yes. Striped Wing? yes. American Goldfinch? yes. Hard classification problems can be turned into a sequence of easy ones. 12

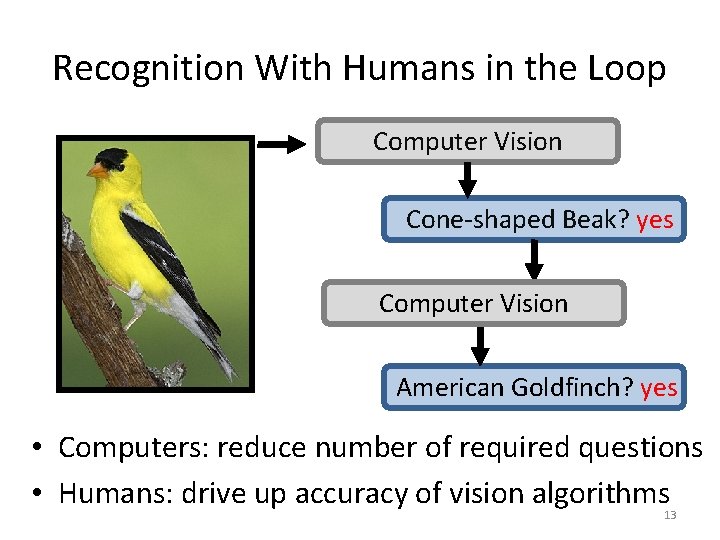

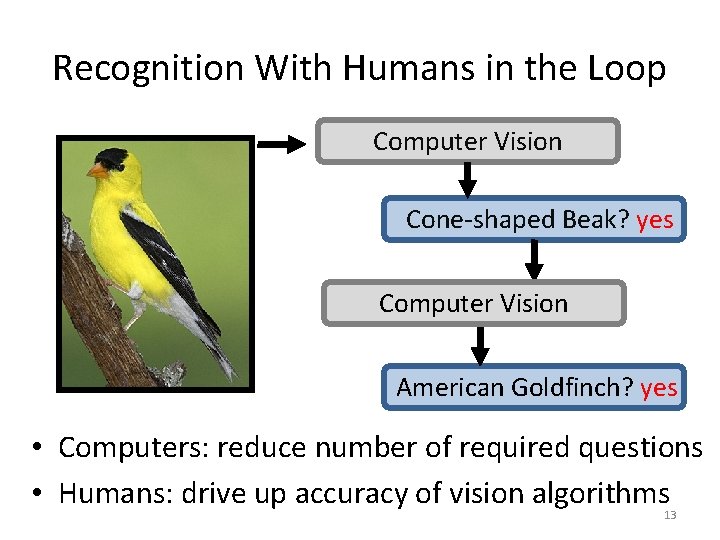

Recognition With Humans in the Loop
Computer Vision → Cone-shaped Beak? yes → Computer Vision → American Goldfinch? yes
- Computers: reduce number of required questions
- Humans: drive up accuracy of vision algorithms 13

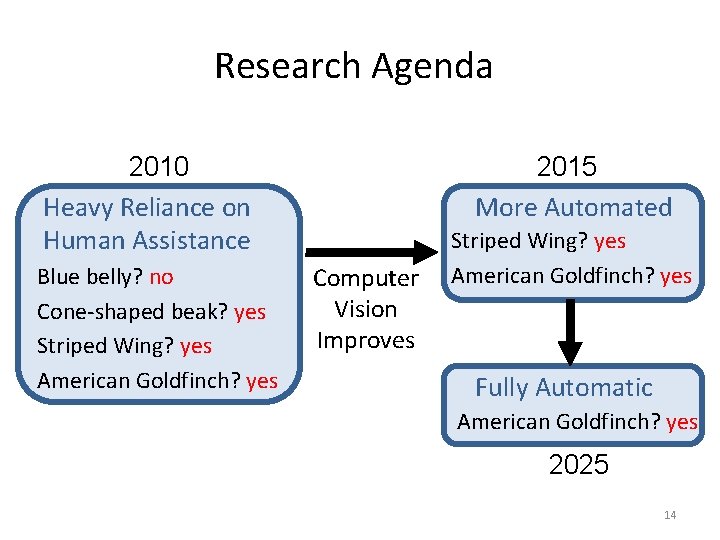

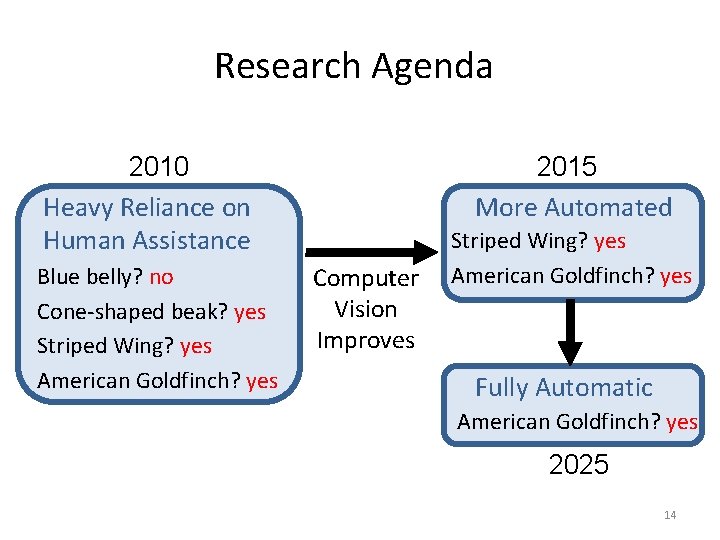

Research Agenda (computer vision improves over time)
- 2010, Heavy Reliance on Human Assistance: Blue belly? no. Cone-shaped beak? yes. Striped wing? yes. American Goldfinch? yes.
- 2015, More Automated: Striped wing? yes. American Goldfinch? yes.
- 2025, Fully Automatic: American Goldfinch? yes. 14

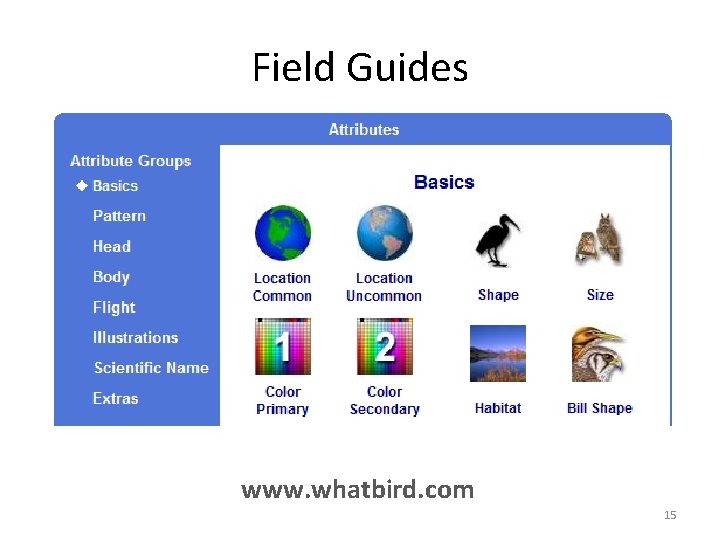

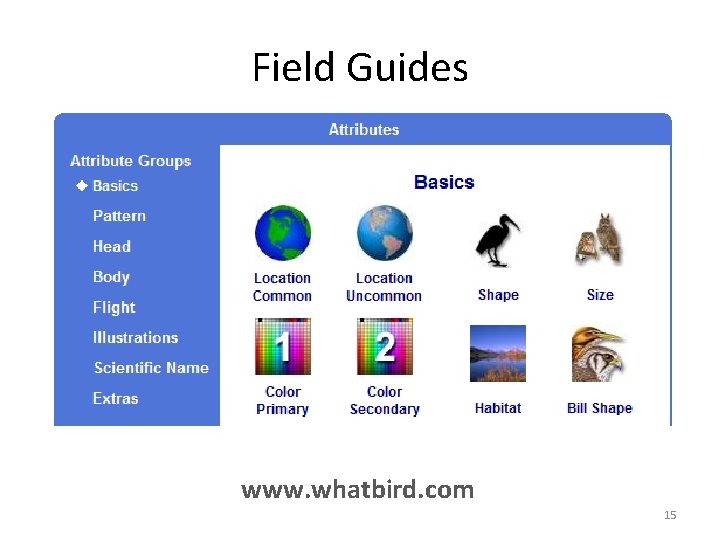

Field Guides: www.whatbird.com 15

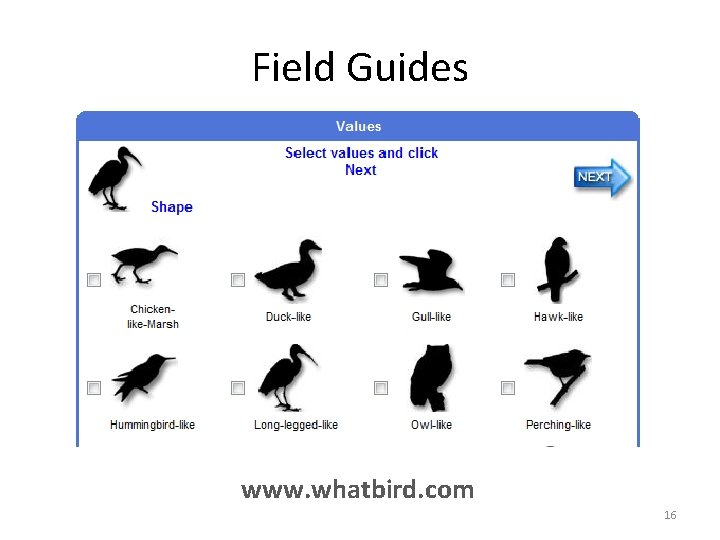

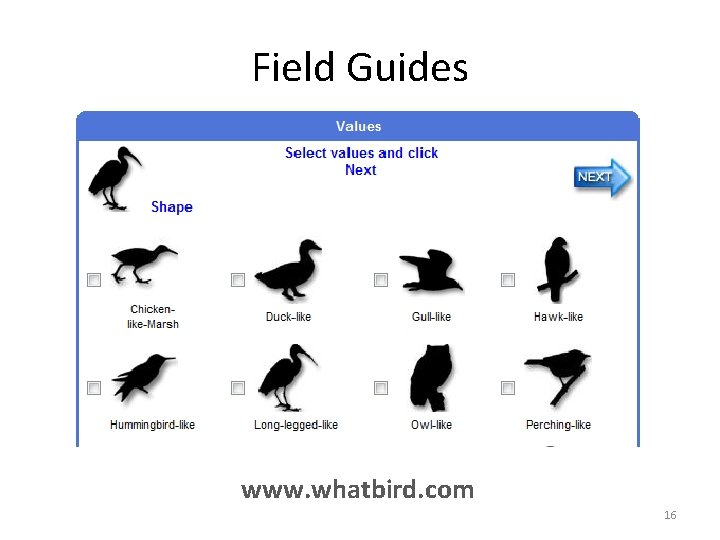

Field Guides: www.whatbird.com 16

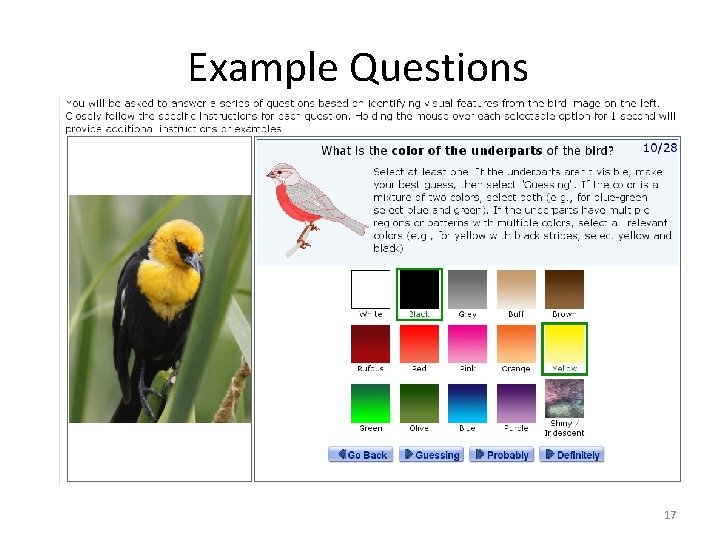

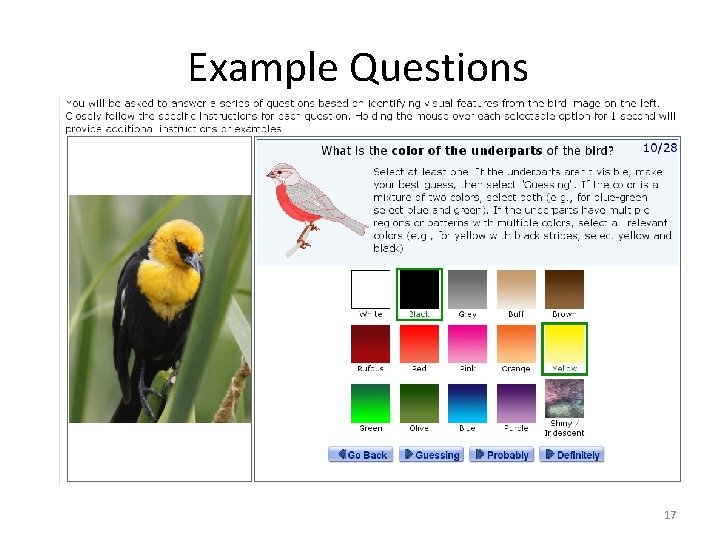

Example Questions 17

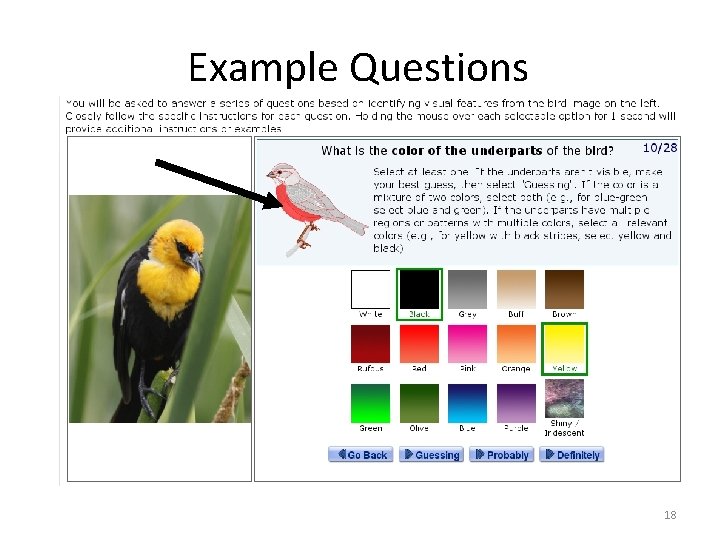

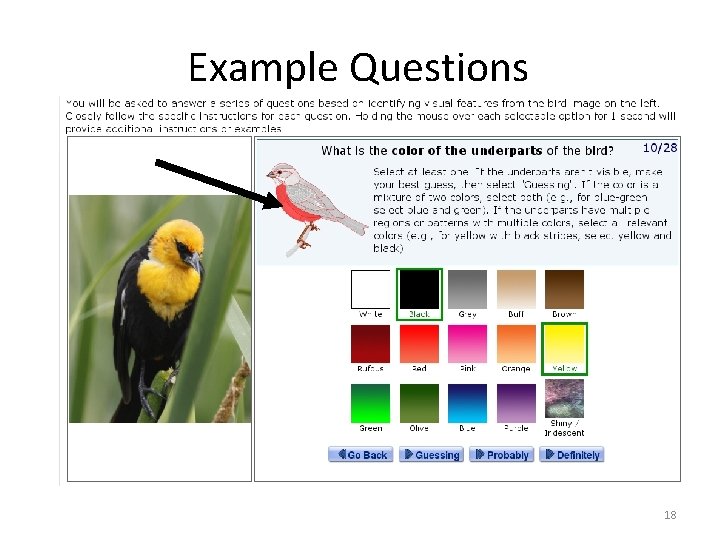

Example Questions 18

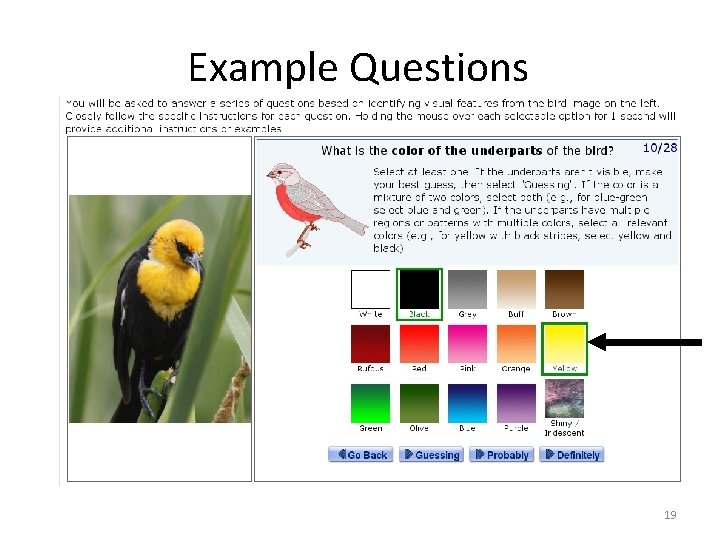

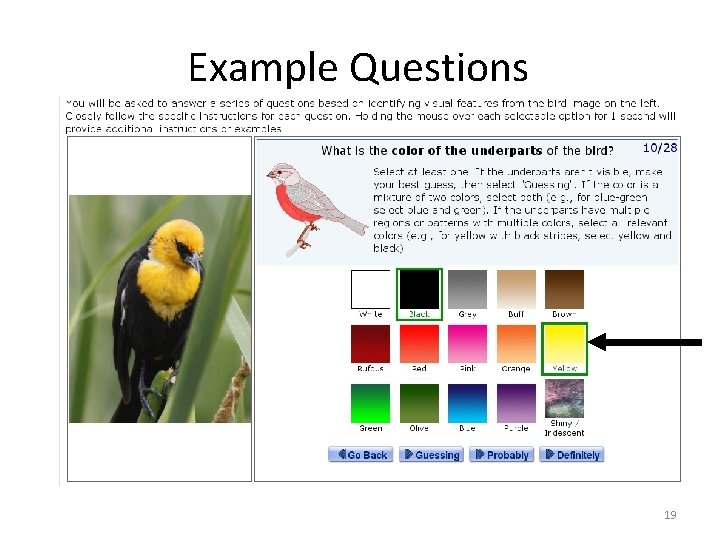

Example Questions 19

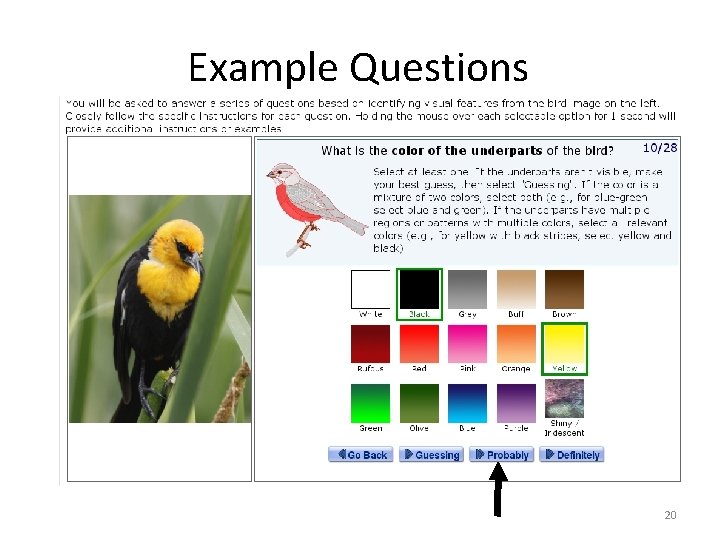

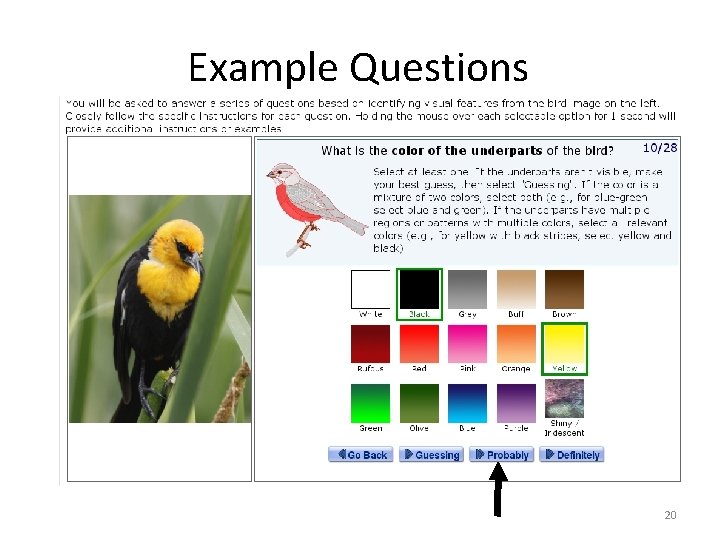

Example Questions 20

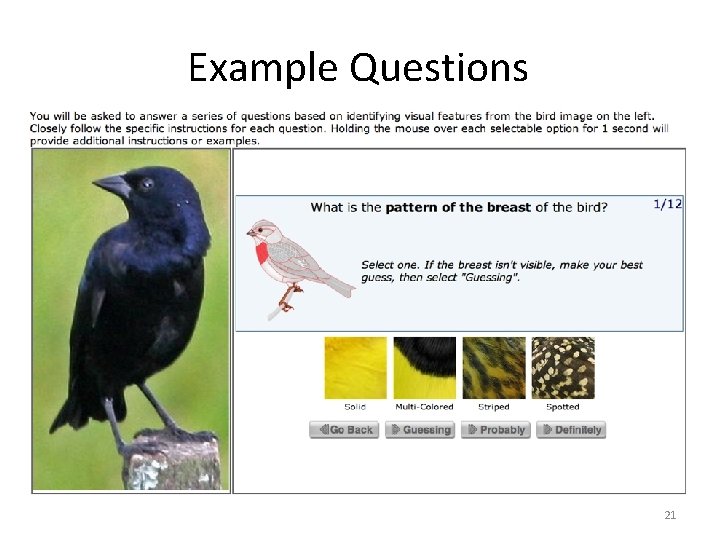

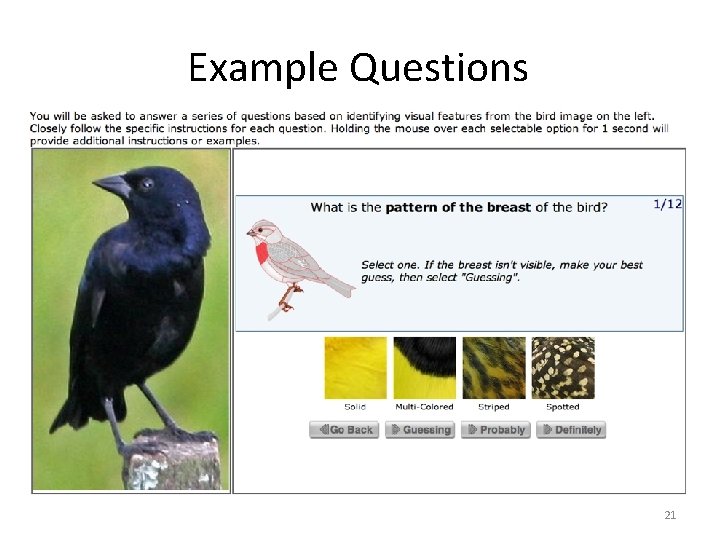

Example Questions 21

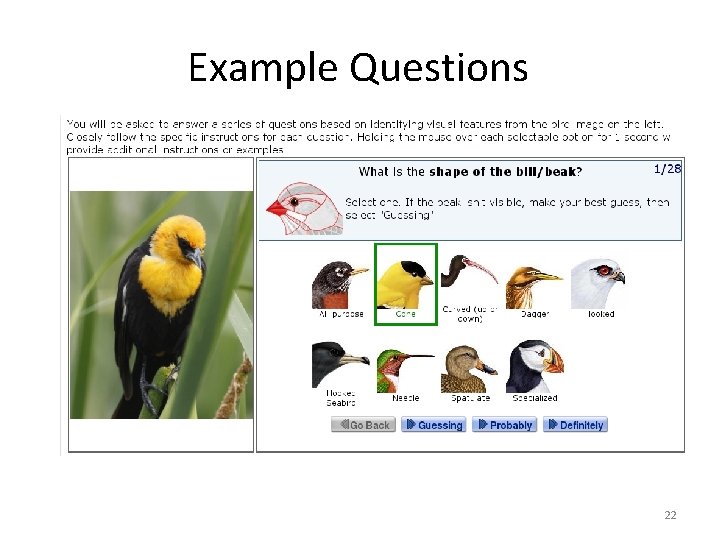

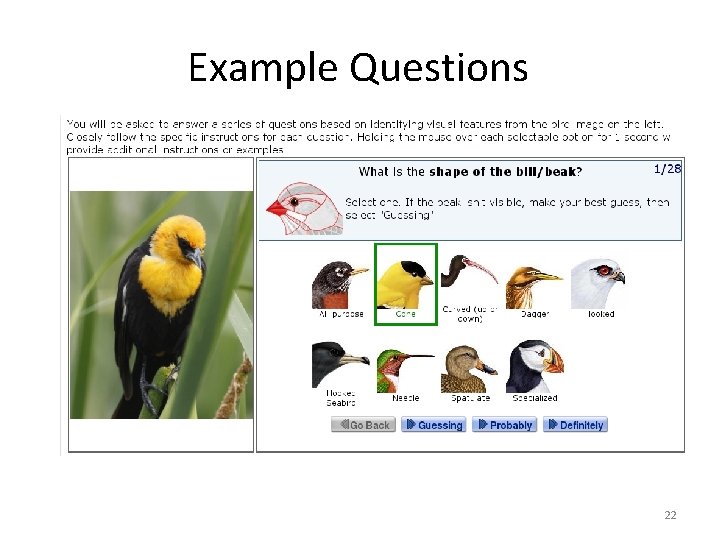

Example Questions 22

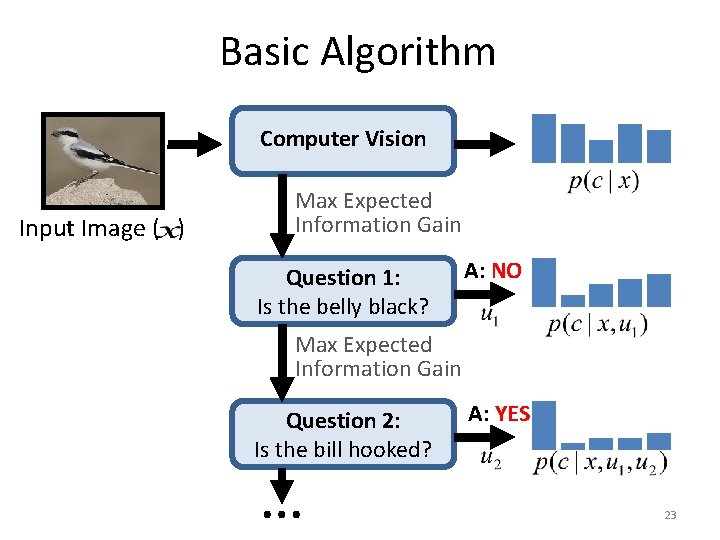

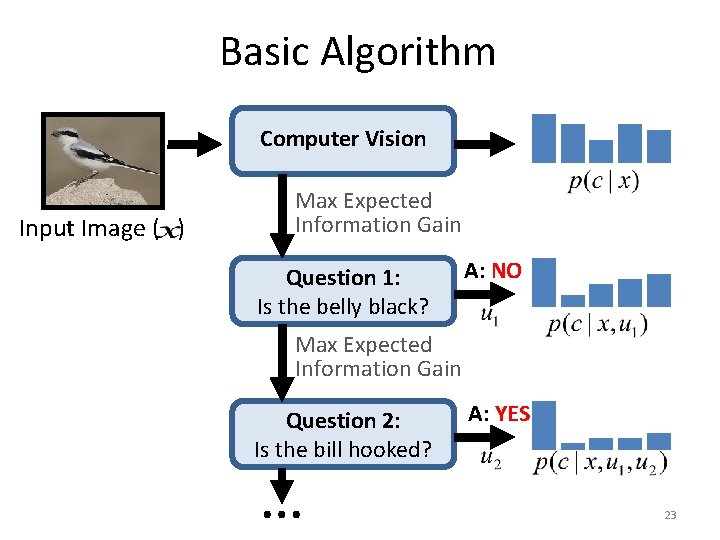

Basic Algorithm: Input Image (x) → Computer Vision → Max Expected Information Gain → Question 1: Is the belly black? A: NO → Max Expected Information Gain → Question 2: Is the bill hooked? A: YES → … 23

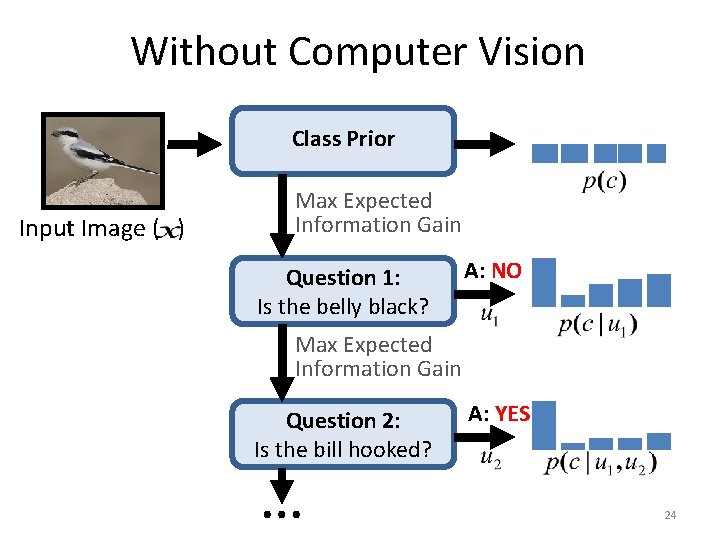

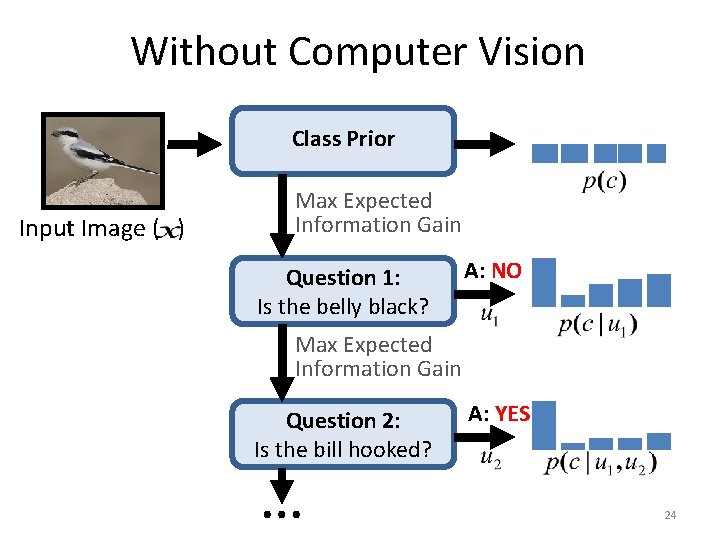

Without Computer Vision: Input Image (x), Class Prior → Max Expected Information Gain → Question 1: Is the belly black? A: NO → Max Expected Information Gain → Question 2: Is the bill hooked? A: YES → … 24

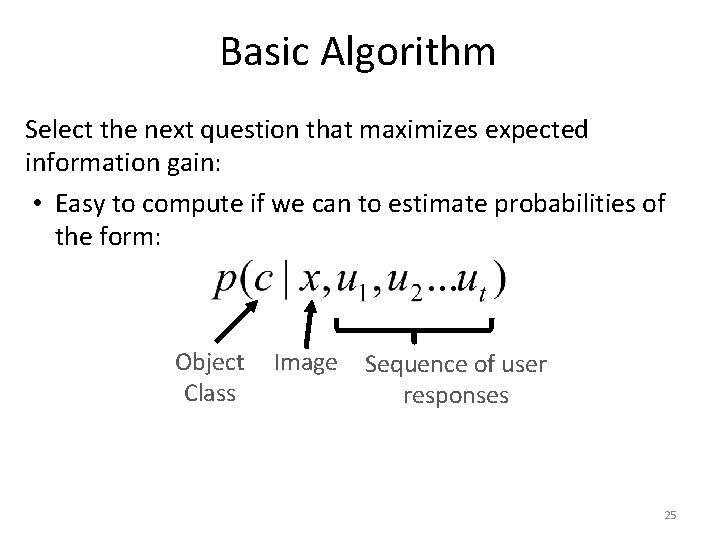

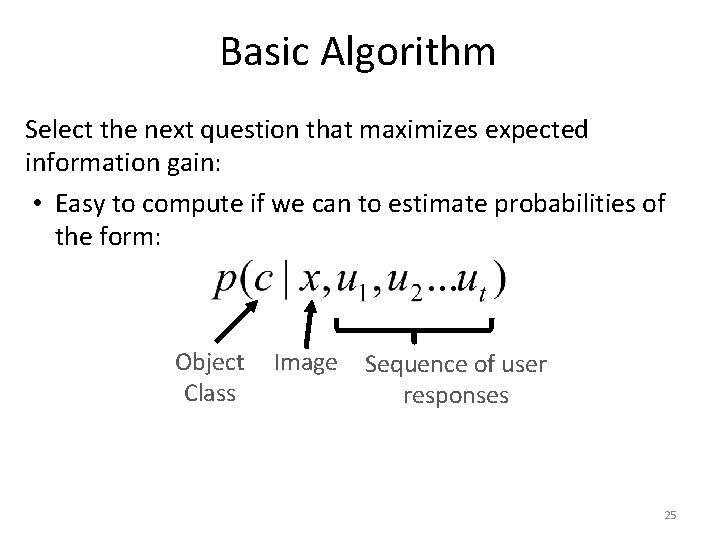

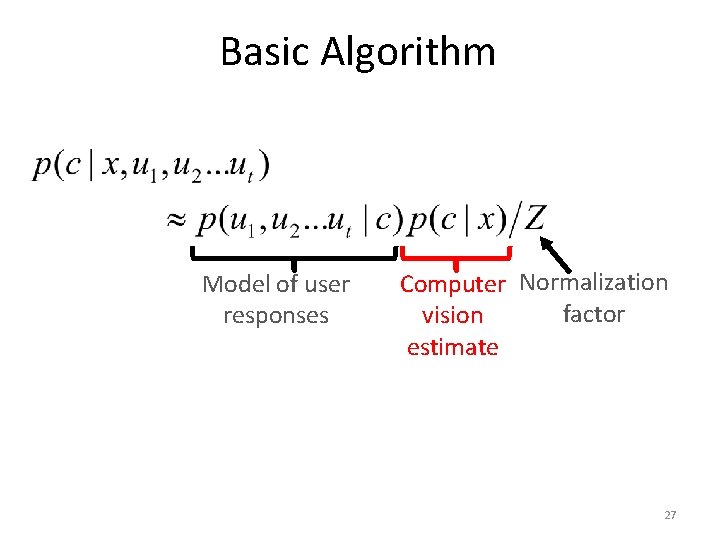

Basic Algorithm: Select the next question that maximizes expected information gain. Easy to compute if we can estimate probabilities of the form p(c|x, U), where c is the object class, x is the image, and U is the sequence of user responses. 25

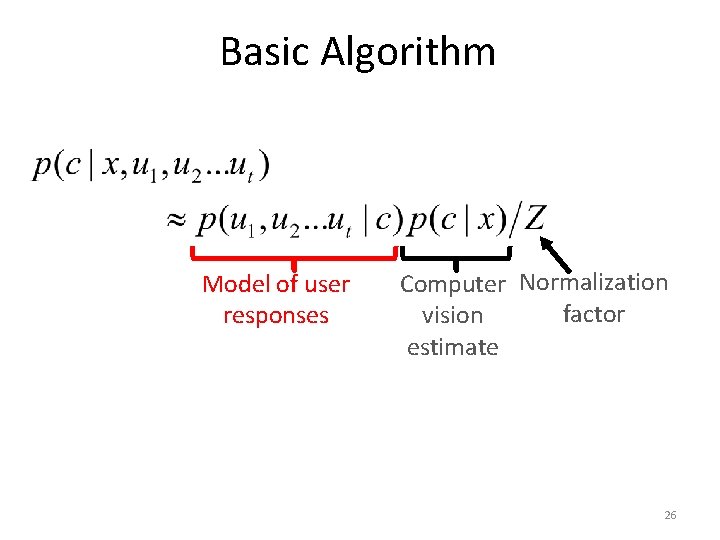

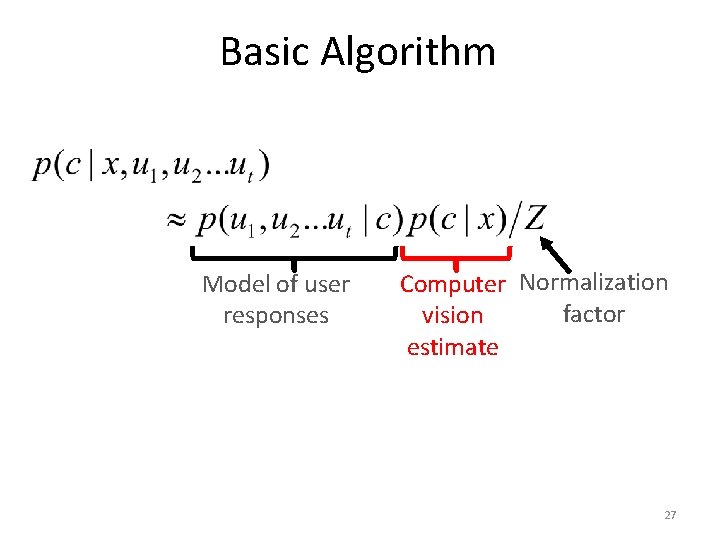

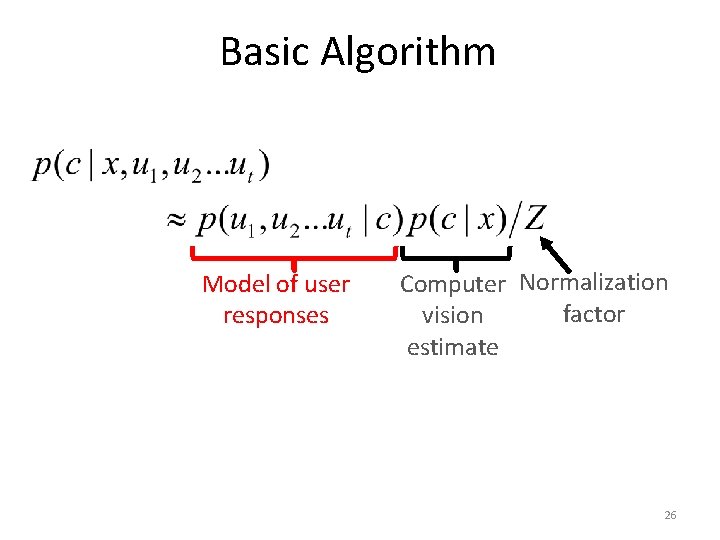
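The question-selection step can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the toy class posterior, and the per-question answer tables `p(u|c)` are all hypothetical, and answers are assumed to depend only on the class.

```python
import numpy as np

def entropy(p):
    """Shannon entropy (in bits) of a discrete distribution."""
    p = np.asarray(p, dtype=float)
    nz = p[p > 0]
    return float(-(nz * np.log2(nz)).sum())

def expected_information_gain(class_post, answer_given_class):
    """Expected reduction in class entropy from asking one question.

    class_post: shape (C,), current class posterior p(c | x, U).
    answer_given_class: shape (A, C), assumed response model p(u = a | c).
    """
    class_post = np.asarray(class_post, dtype=float)
    answer_given_class = np.asarray(answer_given_class, dtype=float)
    joint = answer_given_class * class_post   # p(a, c), shape (A, C)
    p_answer = joint.sum(axis=1)              # p(a), shape (A,)
    gain = entropy(class_post)
    for a, pa in enumerate(p_answer):
        if pa > 0:
            # Subtract the entropy of the posterior after observing answer a.
            gain -= pa * entropy(joint[a] / pa)
    return gain

def select_question(class_post, question_models):
    """Return the index of the question with maximal expected gain."""
    gains = [expected_information_gain(class_post, m) for m in question_models]
    return int(np.argmax(gains)), gains

# Toy setup (hypothetical): 4 classes, uniform posterior, 3 yes/no questions.
prior = np.full(4, 0.25)
question_models = [
    [[1, 1, 0, 0], [0, 0, 1, 1]],                          # splits classes 2/2
    [[1, 0, 0, 0], [0, 1, 1, 1]],                          # isolates one class
    [[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]],          # uninformative
]
best, gains = select_question(prior, question_models)
print(best, np.round(gains, 3))
```

In this toy case the even split is selected, which matches the intuition that a question halving the candidate set is the most informative one.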

Basic Algorithm: p(c|x, U) = p(U|c) p(c|x) / Z, where p(U|c) is the model of user responses, p(c|x) is the computer vision estimate, and Z is the normalization factor. 26

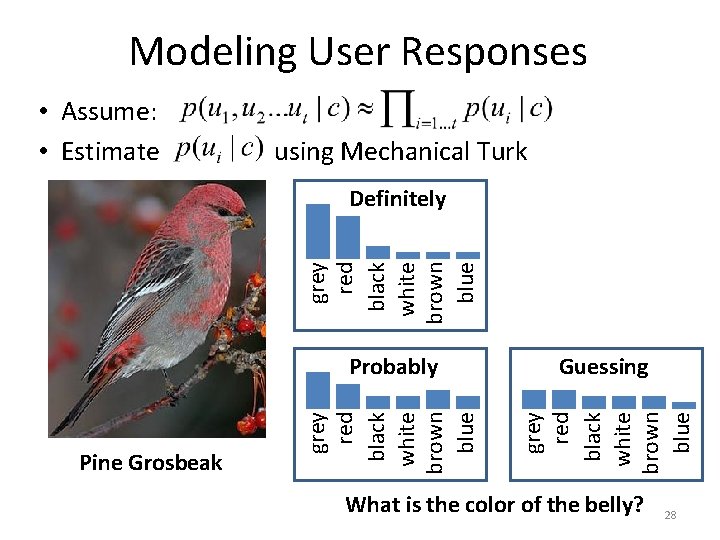

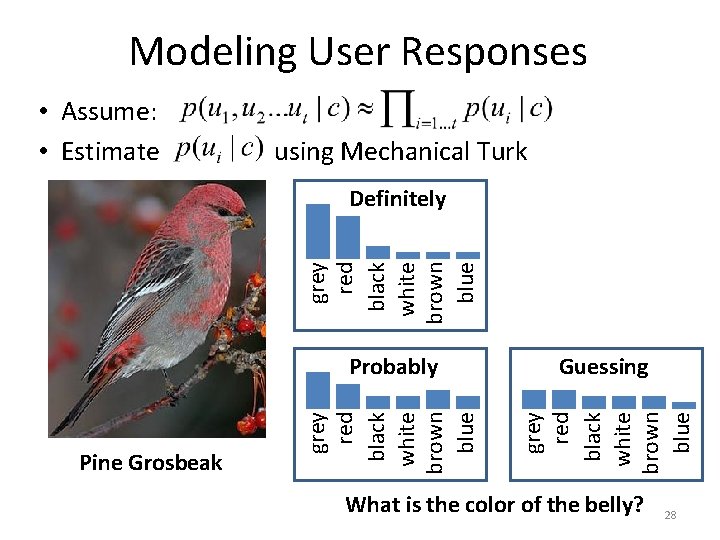

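Modeling User Responses
- Assume: user responses are independent given the class, p(U|c) = ∏ᵢ p(uᵢ|c)
- Estimate p(uᵢ|c) using Mechanical Turk

Example (Pine Grosbeak), “What is the color of the belly?”: response histograms over grey, red, black, white, brown, blue at each confidence level (Guessing, Probably, Definitely) 28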

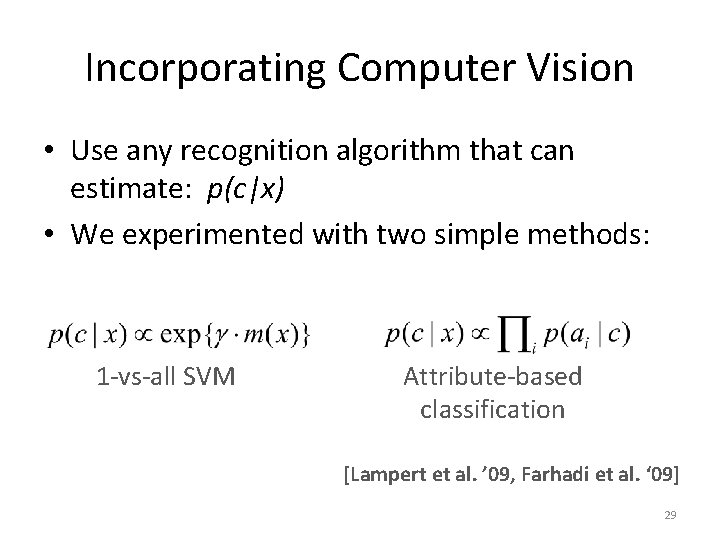

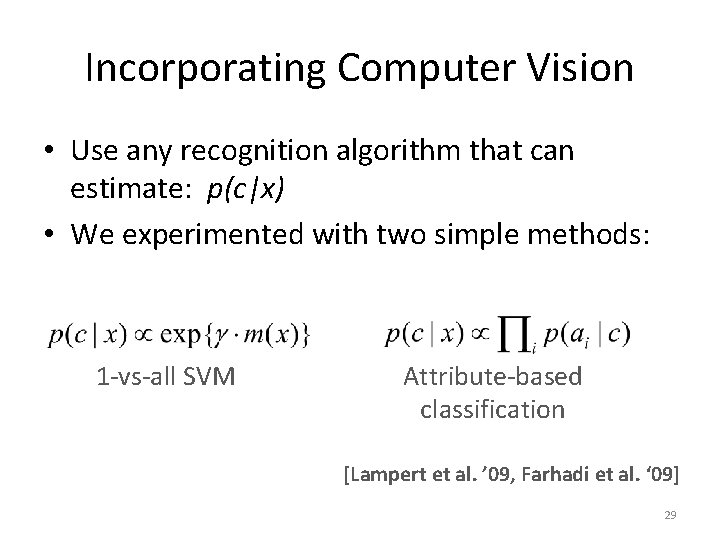
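A rough sketch of how a response model estimated from crowd answers could be combined with a vision estimate. Everything here is illustrative: the function names, the answer counts, and the additive smoothing constant `alpha` are assumptions, and answers are assumed to be conditionally independent given the class.

```python
import numpy as np

def estimate_response_model(answer_counts, alpha=1.0):
    """Estimate p(u = a | c) from answer counts of shape (A, C).

    alpha is a hypothetical additive-smoothing constant so that no
    answer ever receives zero probability.
    """
    counts = np.asarray(answer_counts, dtype=float) + alpha
    return counts / counts.sum(axis=0, keepdims=True)

def update_posterior(class_probs, response_model, answer):
    """Bayes update: multiply by p(answer | c), then renormalize."""
    post = np.asarray(class_probs, dtype=float) * response_model[answer]
    return post / post.sum()

# Hypothetical counts for one yes/no question over 3 classes.
# Rows: answer (0 = no, 1 = yes); columns: class.
counts = [[18, 2, 10],
          [2, 18, 10]]
model = estimate_response_model(counts)

vision = np.array([0.5, 0.3, 0.2])   # stand-in for p(c | x)
post = update_posterior(vision, model, answer=1)
print(np.round(post, 3))
```

Calling `update_posterior` repeatedly, once per answered question, accumulates the product of per-answer likelihoods under the independence assumption.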

Incorporating Computer Vision
- Use any recognition algorithm that can estimate p(c|x)
- We experimented with two simple methods: 1-vs-all SVM and attribute-based classification [Lampert et al. ’09, Farhadi et al. ’09] 29

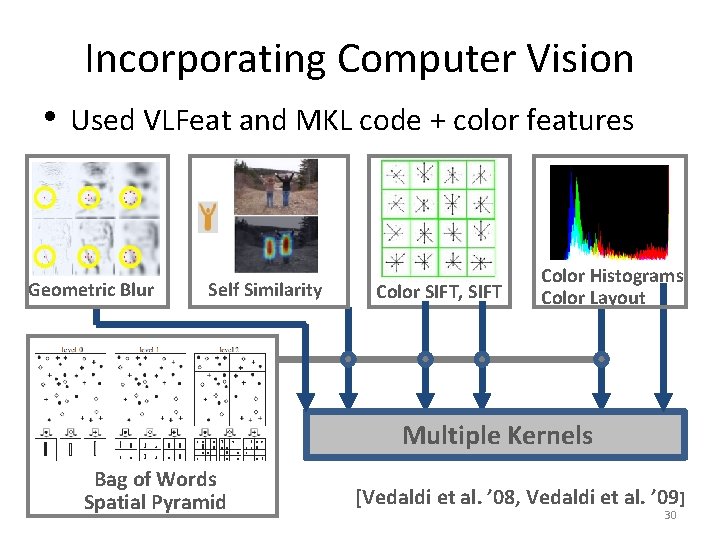

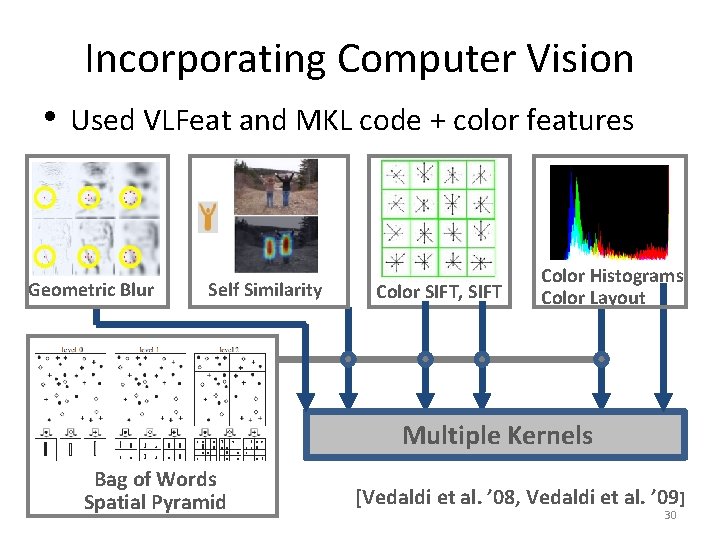
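One simple way to obtain something usable as p(c|x) from 1-vs-all classifier scores is a softmax over the per-class scores. The slides do not say how scores were converted to probabilities, so this is only an assumed stand-in; the function name and the `temperature` parameter are hypothetical.

```python
import numpy as np

def scores_to_probs(scores, temperature=1.0):
    """Map per-class decision scores to a distribution via softmax.

    This is an illustrative choice, not the method from the talk.
    """
    s = np.asarray(scores, dtype=float) / temperature
    s = s - s.max()          # shift for numerical stability
    e = np.exp(s)
    return e / e.sum()

# Hypothetical 1-vs-all scores for three classes.
probs = scores_to_probs([2.0, -1.0, 0.5])
print(np.round(probs, 3))
```

Any other calibration that yields a normalized distribution over classes could be swapped in without changing the rest of the pipeline.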

Incorporating Computer Vision
- Used VLFeat and MKL code + color features
- Features: Geometric Blur, Self Similarity, Color SIFT, SIFT, Color Histograms, Color Layout
- Multiple Kernels, Bag of Words, Spatial Pyramid [Vedaldi et al. ’08, Vedaldi et al. ’09] 30

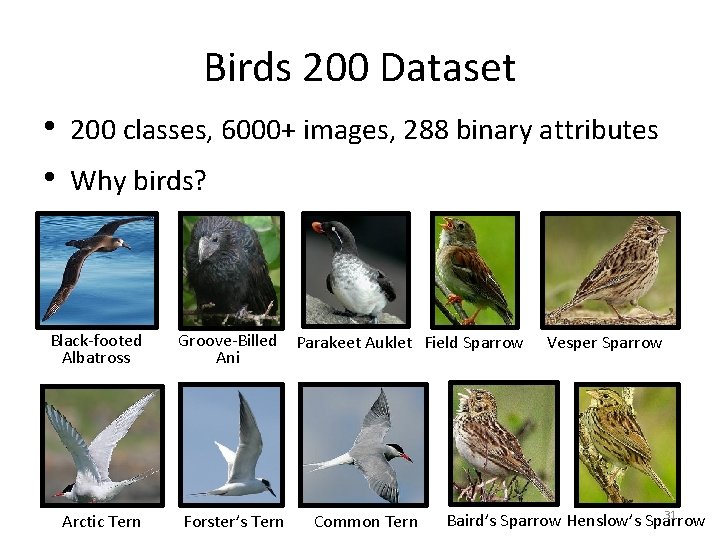

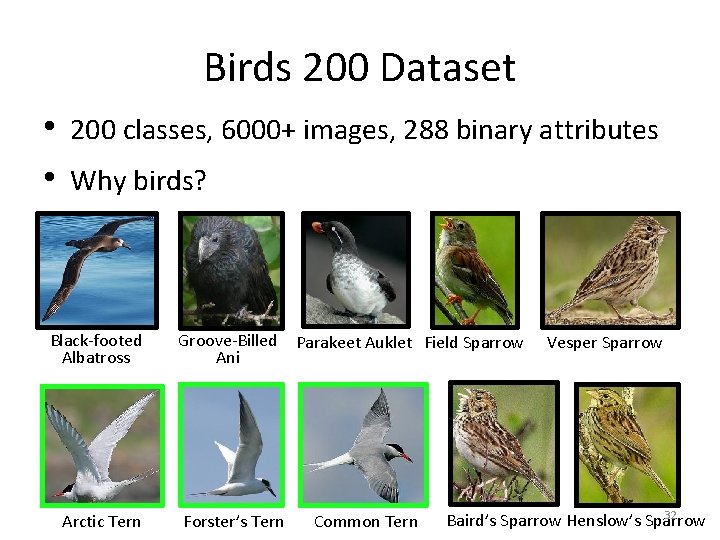

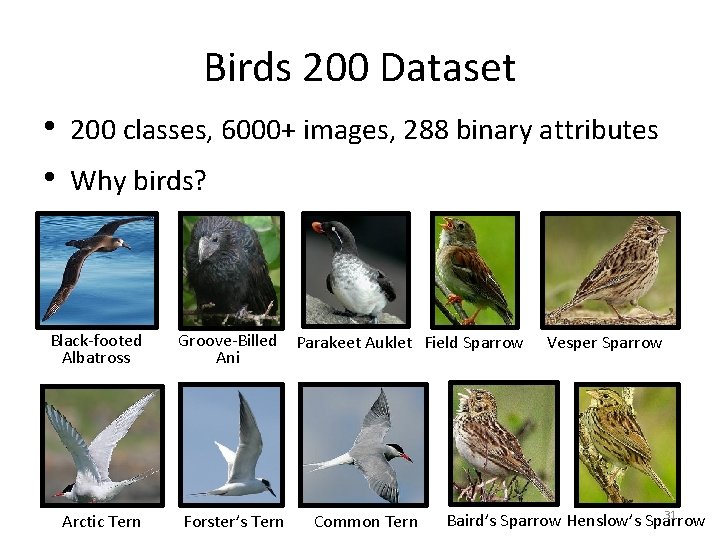

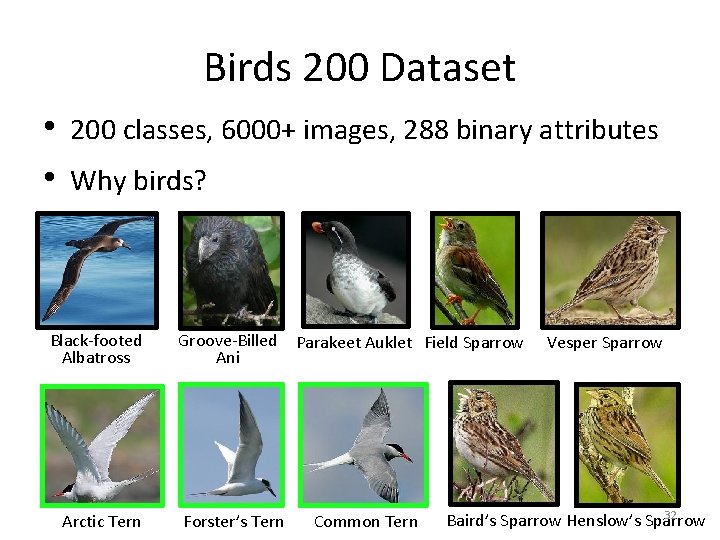

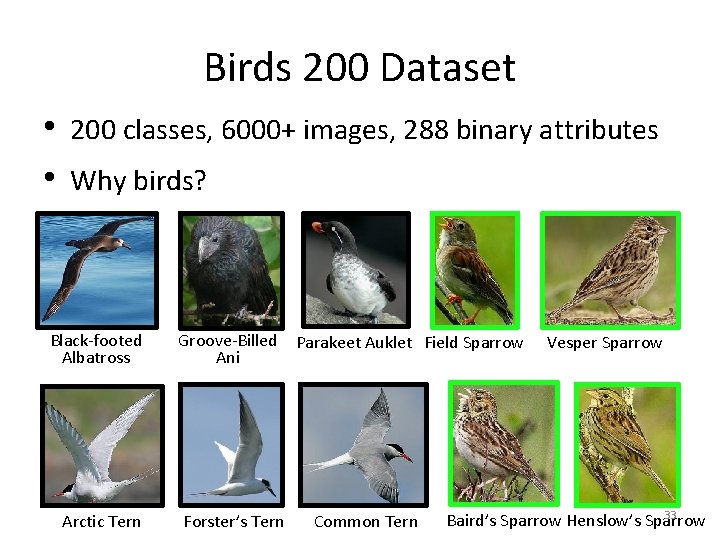

Birds 200 Dataset
- 200 classes, 6000+ images, 288 binary attributes
- Why birds?

Example classes: Black-footed Albatross, Arctic Tern, Groove-Billed Ani, Forster’s Tern, Parakeet Auklet, Field Sparrow, Common Tern, Vesper Sparrow, Baird’s Sparrow, Henslow’s Sparrow 31

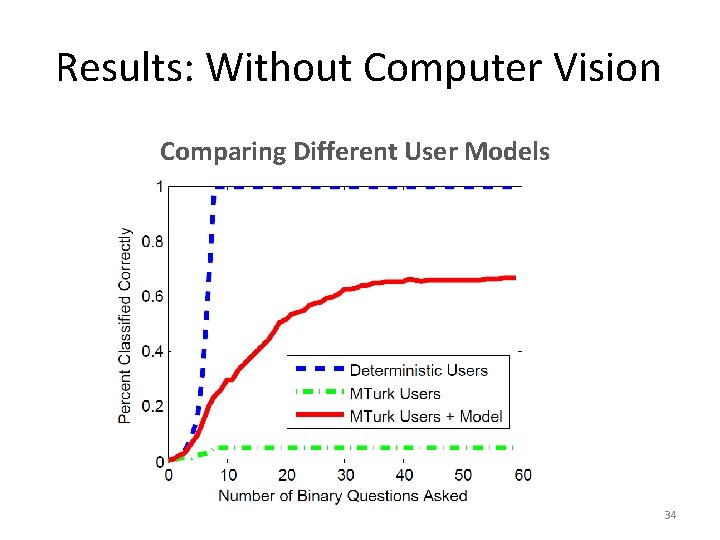

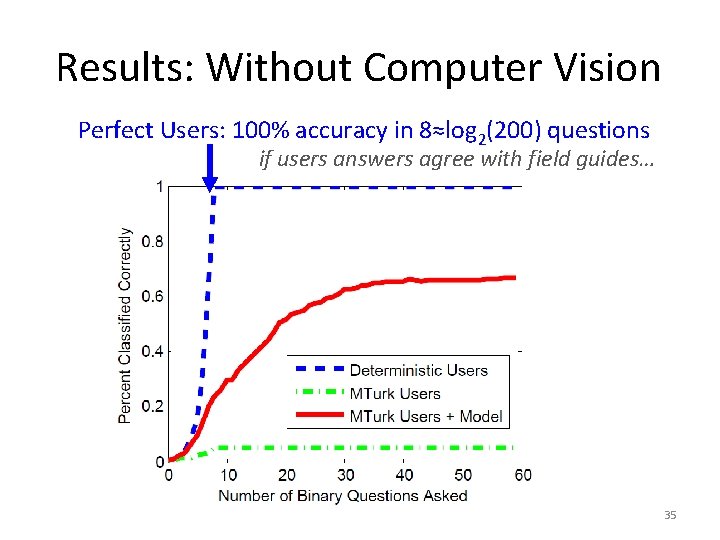

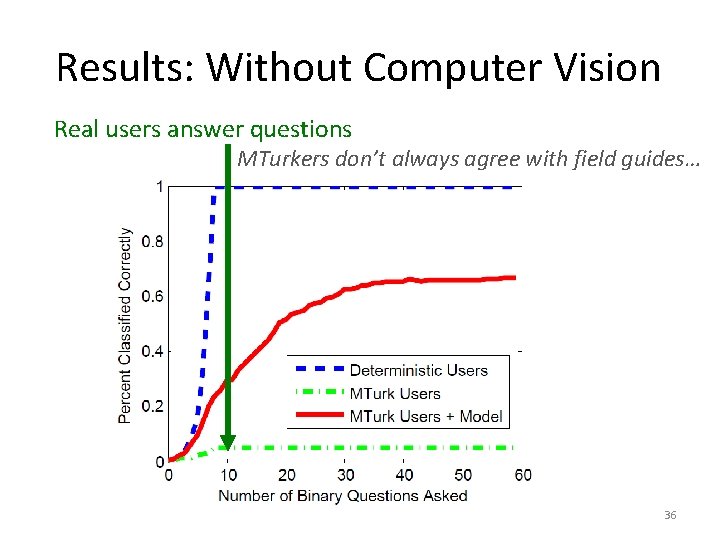

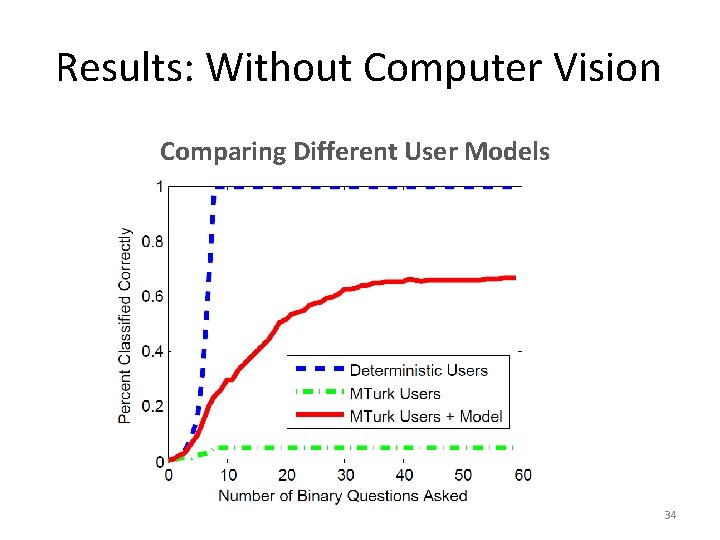

Results: Without Computer Vision. Comparing Different User Models 34

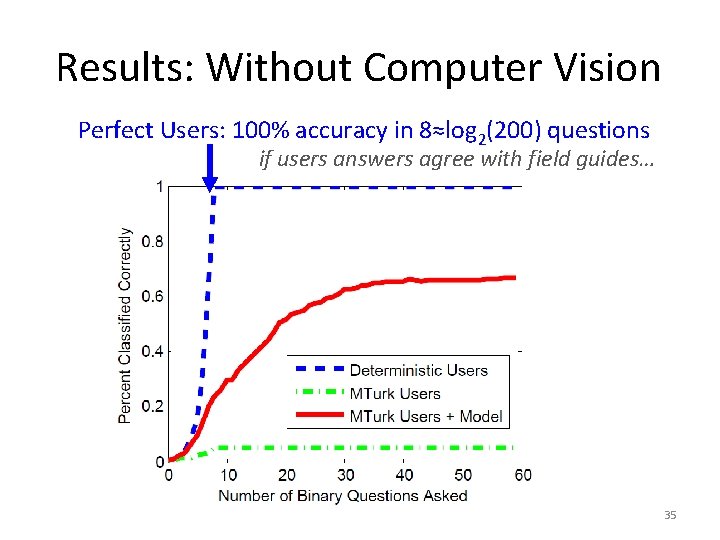

Results: Without Computer Vision. Perfect Users: 100% accuracy in 8 ≈ log₂(200) questions if users’ answers agree with field guides… 35

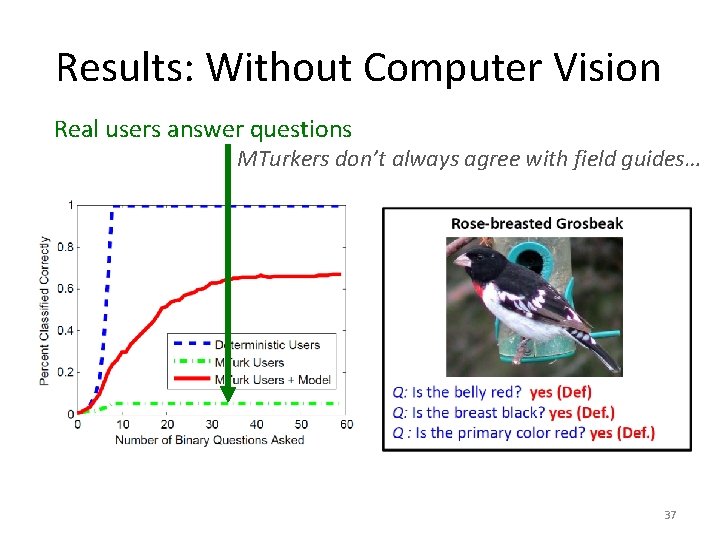

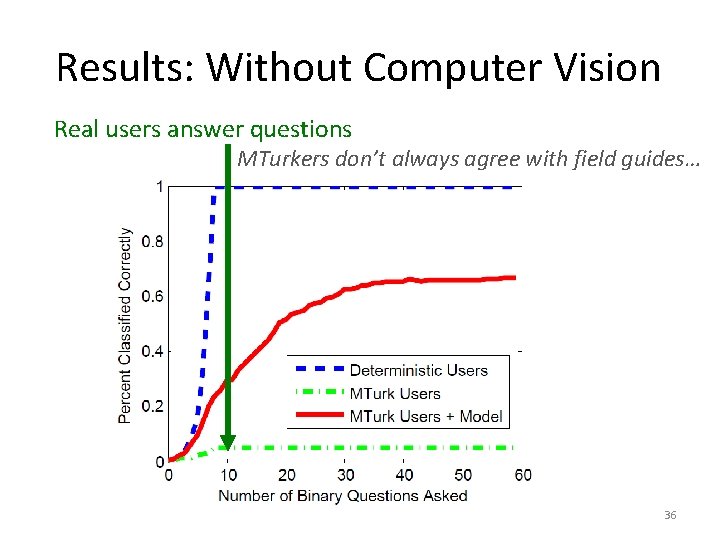
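The figure of 8 questions for 200 classes follows from each ideal yes/no question halving the candidate set. A small check, under the assumption of perfect answers and perfectly balanced splits (the helper name is hypothetical):

```python
import math

def questions_needed(num_classes):
    """Count ideal halving questions until one candidate remains."""
    questions = 0
    remaining = num_classes
    while remaining > 1:
        remaining = math.ceil(remaining / 2)  # worst-case half after a split
        questions += 1
    return questions

num_classes = 200
print(math.log2(num_classes))          # about 7.64
print(questions_needed(num_classes))   # 8
```

Real attribute questions rarely split the classes evenly, so this is a lower bound on what a field-guide-style question set can achieve.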

Results: Without Computer Vision. Real users answer questions: MTurkers don’t always agree with field guides… 36

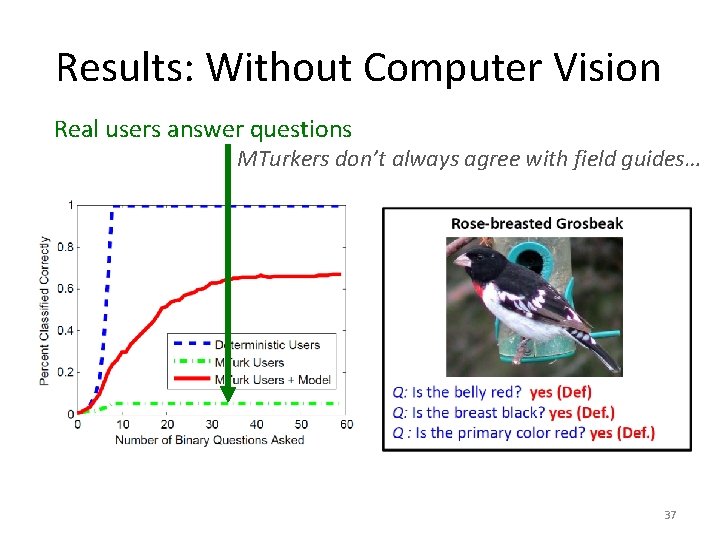

Results: Without Computer Vision. Real users answer questions: MTurkers don’t always agree with field guides… 37

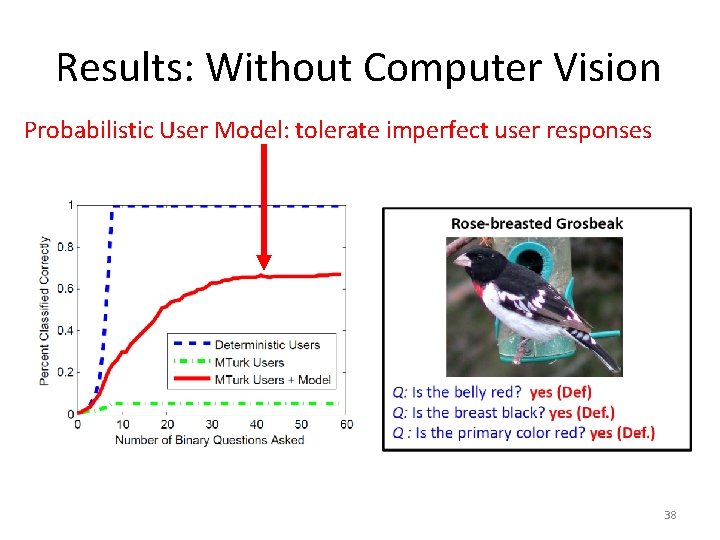

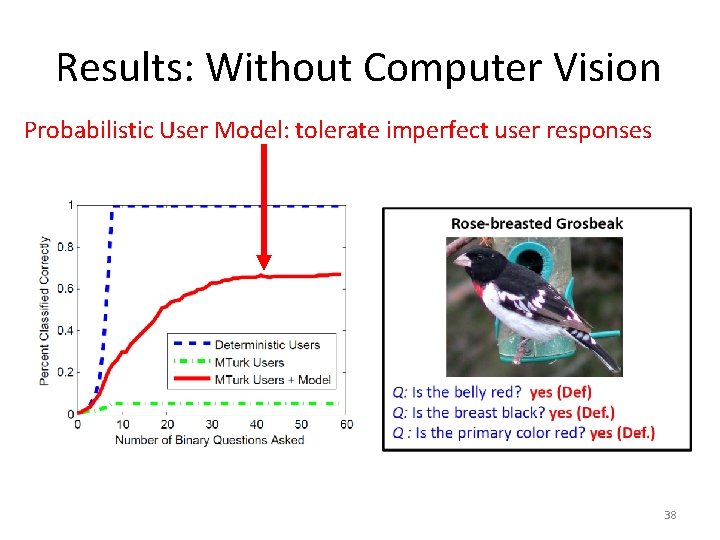

Results: Without Computer Vision. Probabilistic User Model: tolerate imperfect user responses 38

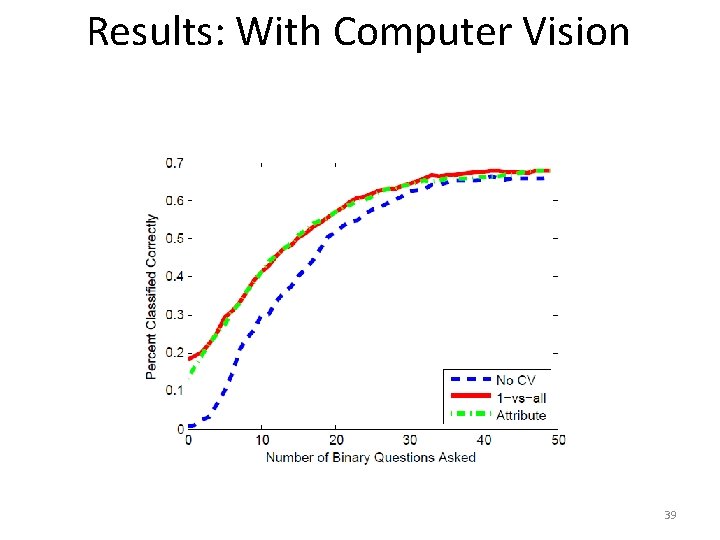

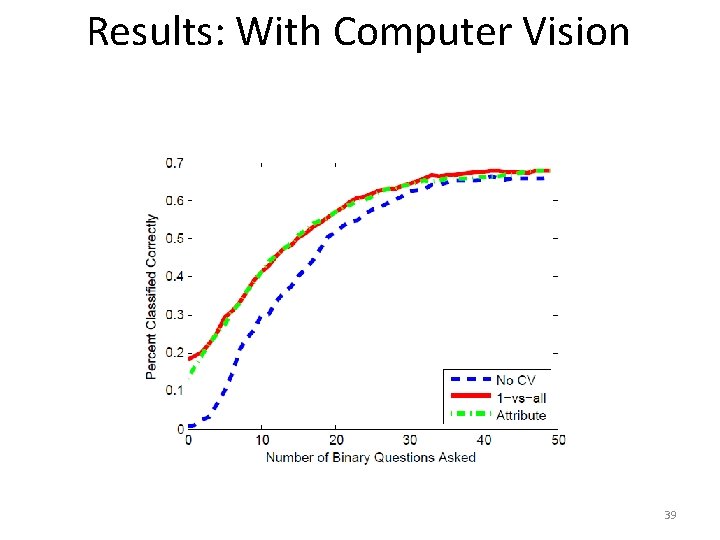

Results: With Computer Vision 39

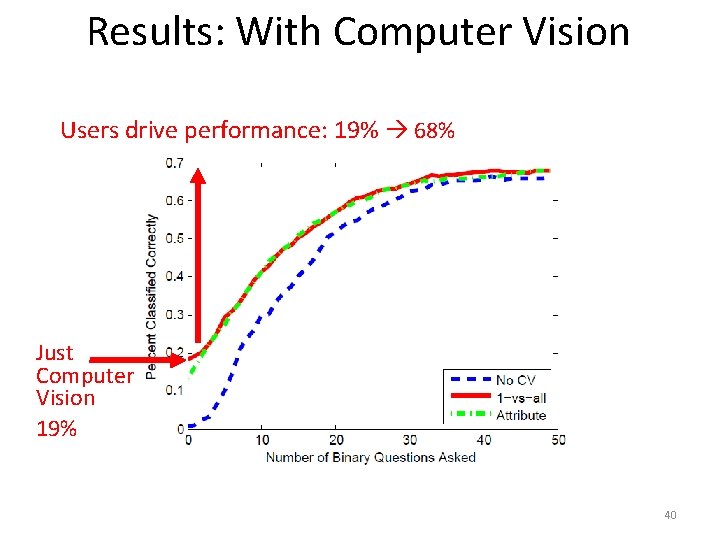

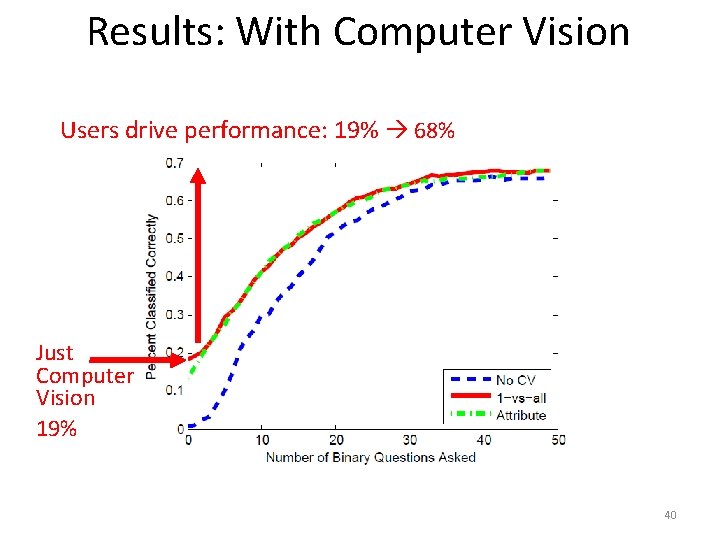

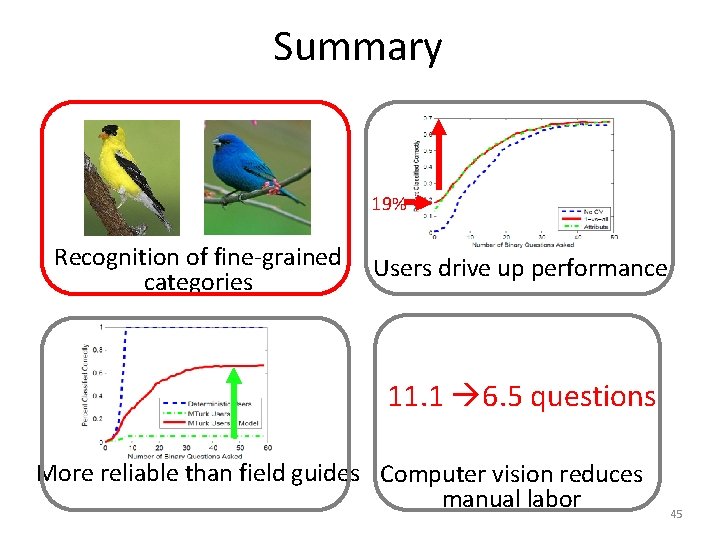

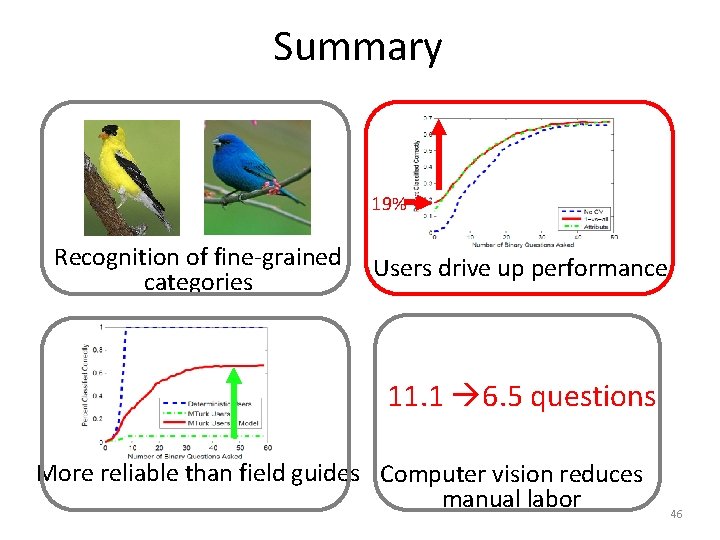

Results: With Computer Vision. Users drive performance: 19% → 68%. Just Computer Vision: 19% 40

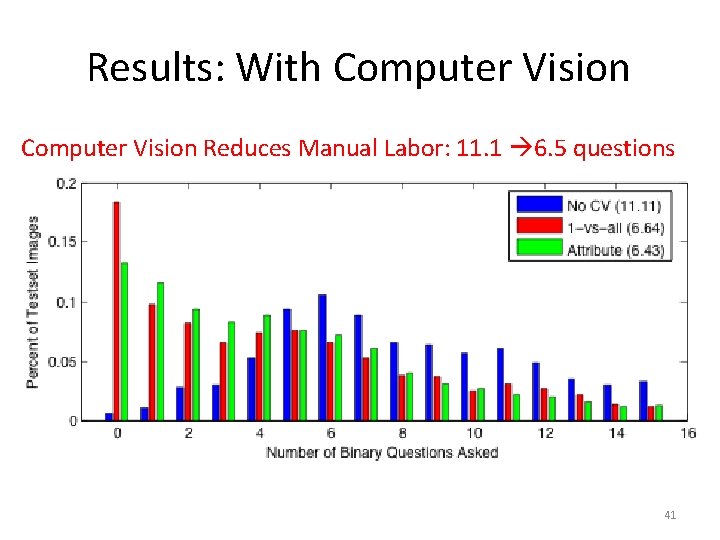

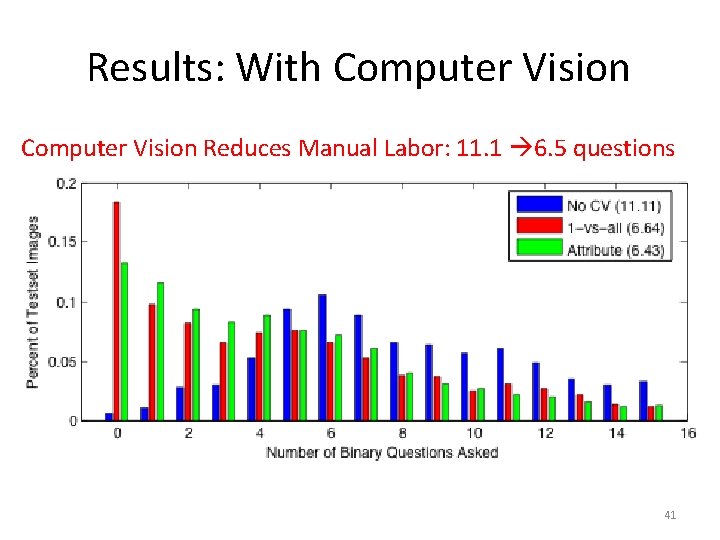

Results: With Computer Vision. Reduces Manual Labor: 11.1 → 6.5 questions 41

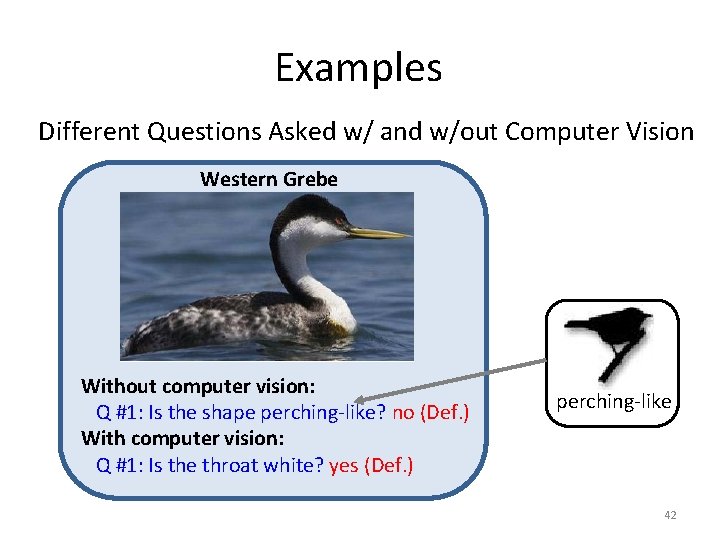

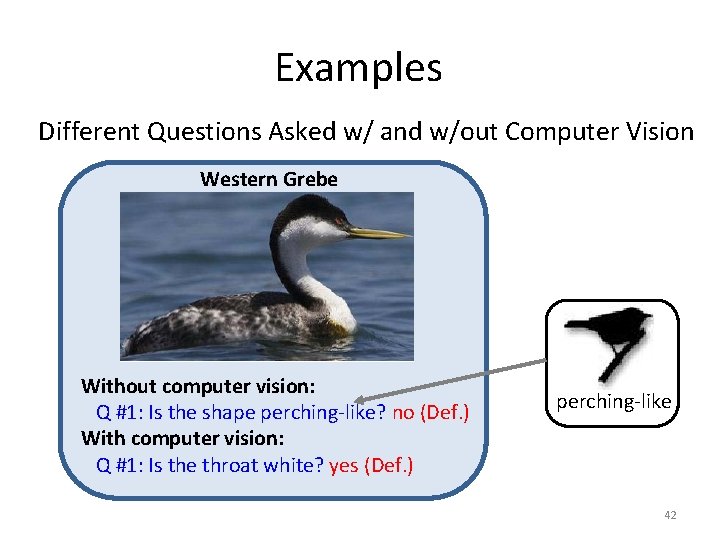

Examples: Different Questions Asked w/ and w/out Computer Vision (Western Grebe)
- Without computer vision: Q #1: Is the shape perching-like? no (Def.)
- With computer vision: Q #1: Is the throat white? yes (Def.)

Illustration: perching-like shape 42

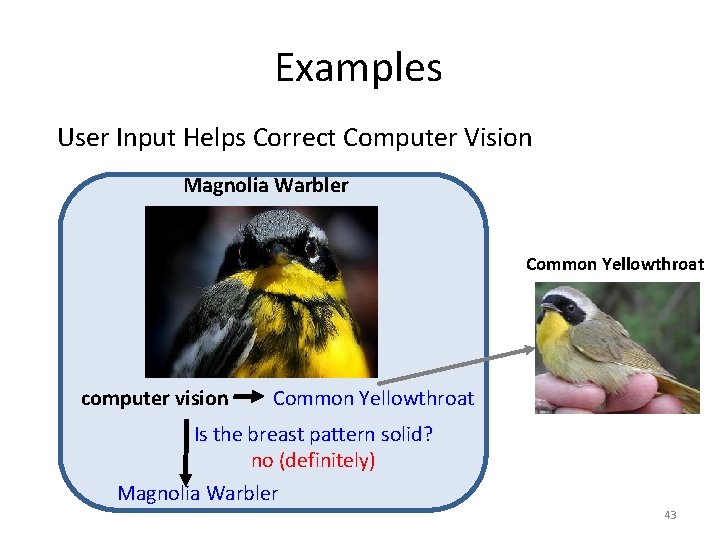

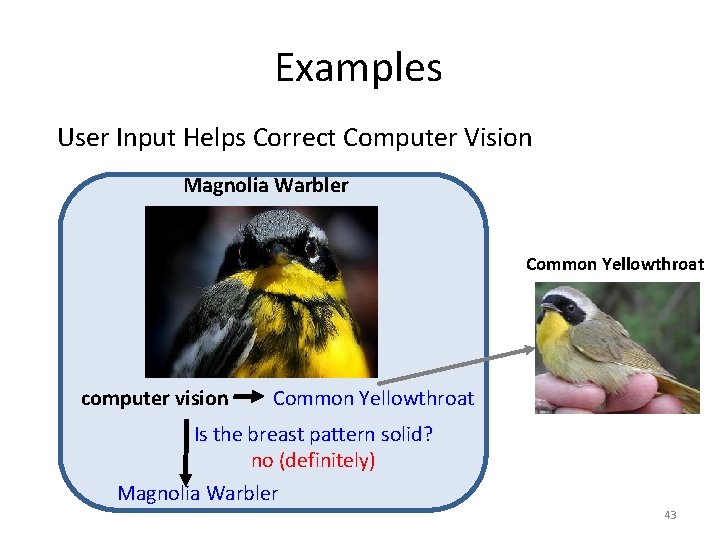

Examples: User Input Helps Correct Computer Vision. Magnolia Warbler image → computer vision: Common Yellowthroat → Is the breast pattern solid? no (definitely) → Magnolia Warbler 43

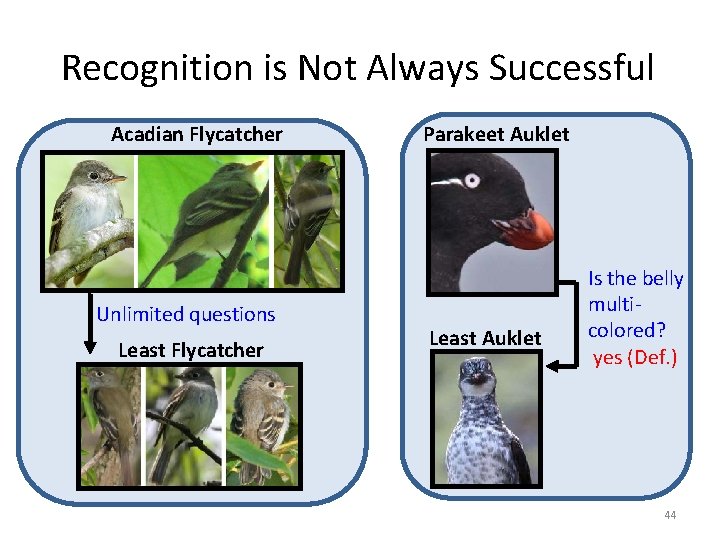

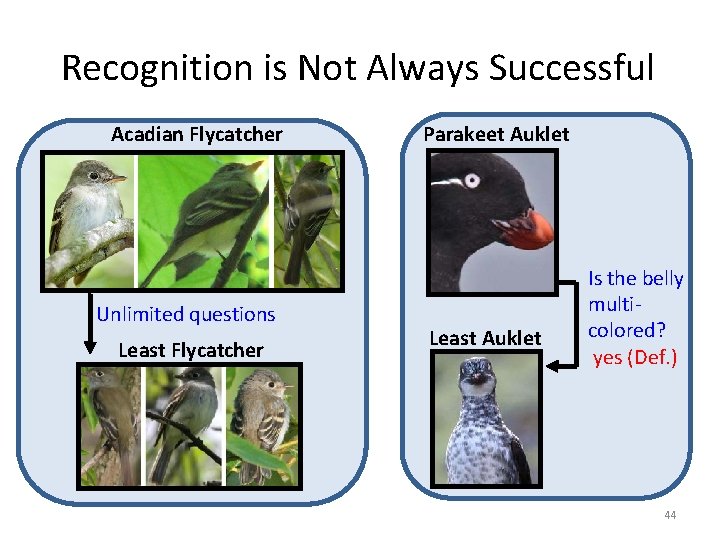

Recognition is Not Always Successful. Acadian Flycatcher / Least Flycatcher (unlimited questions). Parakeet Auklet / Least Auklet: Is the belly multicolored? yes (Def.) 44

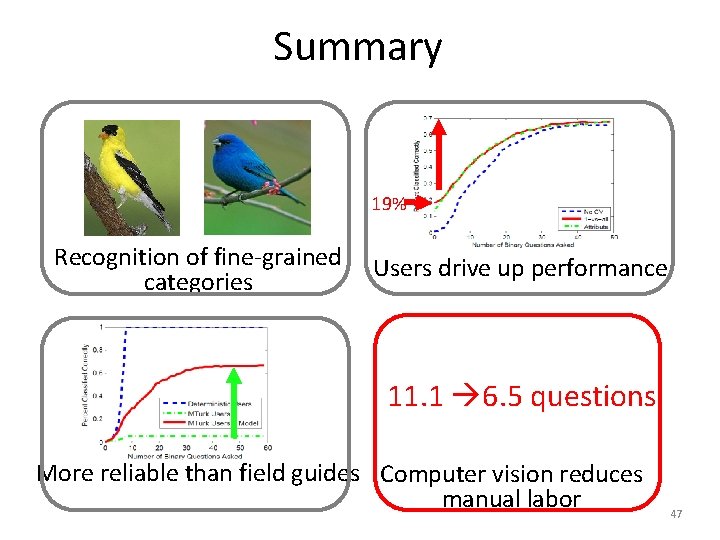

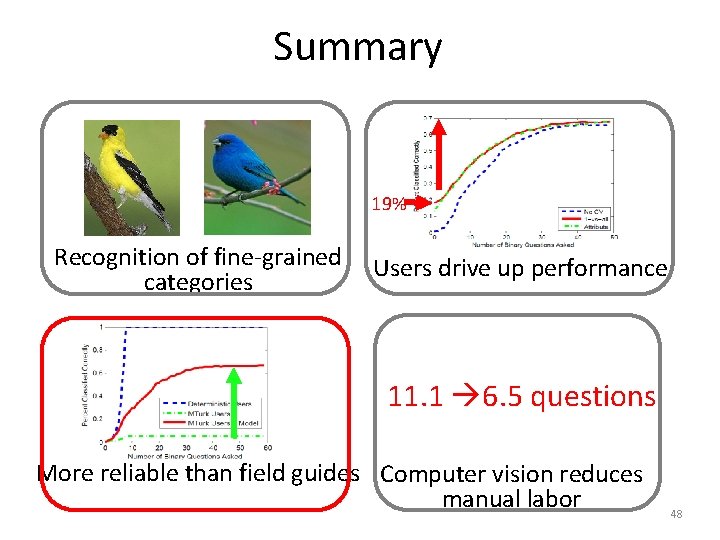

Summary
- Recognition of fine-grained categories
- More reliable than field guides
- Users drive up performance: 19% → 68%
- Computer vision reduces manual labor: 11.1 → 6.5 questions 45

Future Work
- Extend to domains other than birds
- Methodologies for generating questions
- Improve computer vision 49

Questions? Project page and datasets available at: http://vision.caltech.edu/visipedia/ and http://vision.ucsd.edu/project/visipedia/ 50