Vision Transformer (ViT)

- Slides: 20

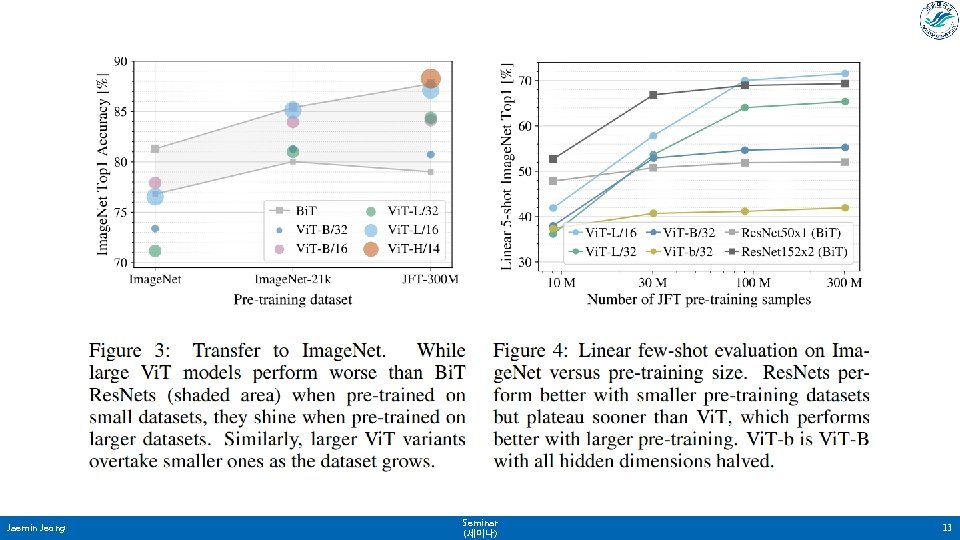

Vision Transformer (ViT)

- When trained on ImageNet, ViT is less accurate than ResNet.
- This is because Transformers lack some of the inductive biases inherent to CNNs, such as translation equivariance and locality, and therefore do not generalize well when trained on insufficient amounts of data. => Pretrain on large-scale datasets (14M-300M images).
- Vision Transformer attains excellent results when pretrained at sufficient scale and transferred to tasks with fewer datapoints.

Jaemin Jeong, Seminar

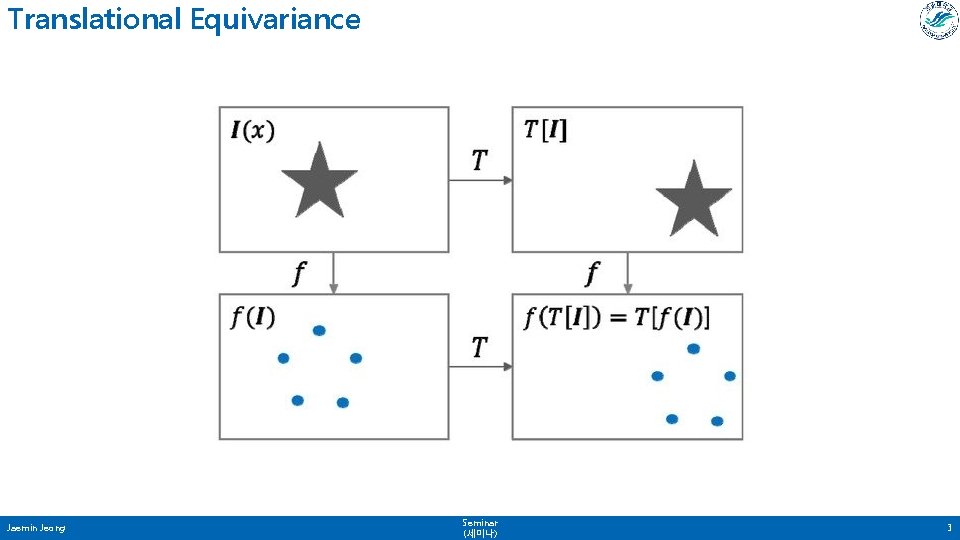

Translational Equivariance
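A minimal sketch of what translation equivariance means for a convolution: shifting the input and then convolving gives the same result as convolving and then shifting. The 1D signal, the kernel values, and the circular (wrap-around) boundary handling are assumptions chosen to keep the example exact; they are not taken from the slides.

```python
def circular_conv1d(signal, kernel):
    """Cross-correlate `signal` with `kernel`, wrapping around at the edges."""
    n = len(signal)
    return [
        sum(kernel[j] * signal[(i + j) % n] for j in range(len(kernel)))
        for i in range(n)
    ]


def shift(signal, k):
    """Circularly shift `signal` to the right by k positions."""
    n = len(signal)
    return [signal[(i - k) % n] for i in range(n)]


signal = [0, 1, 3, 2, 0, 0, 5, 1]  # toy input (assumed values)
kernel = [1, -2, 1]                # toy filter (assumed values)

conv_then_shift = shift(circular_conv1d(signal, kernel), 2)
shift_then_conv = circular_conv1d(shift(signal, 2), kernel)

print(conv_then_shift == shift_then_conv)  # True
```

A Transformer has no such built-in guarantee for its patch sequence; it has to learn spatial relations from data, which is one reason it needs more pretraining data.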

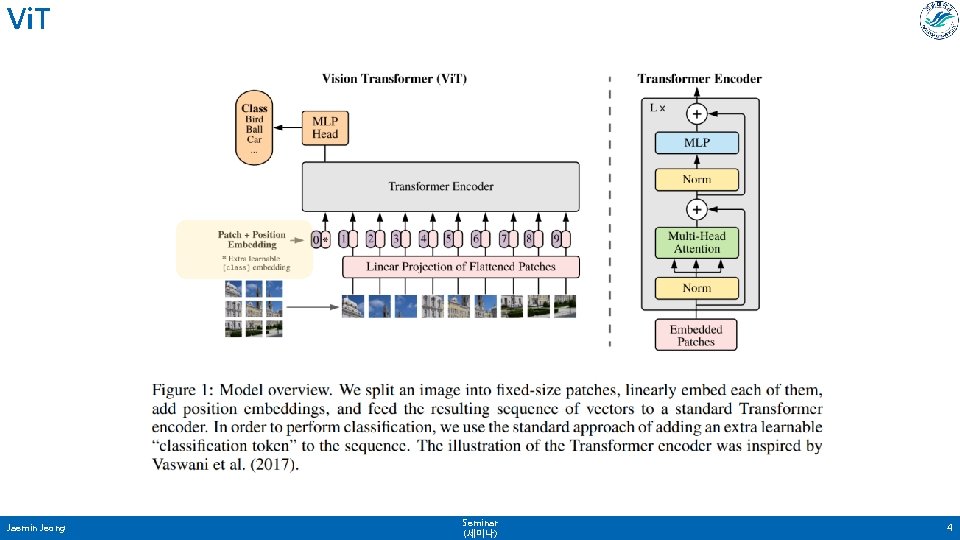

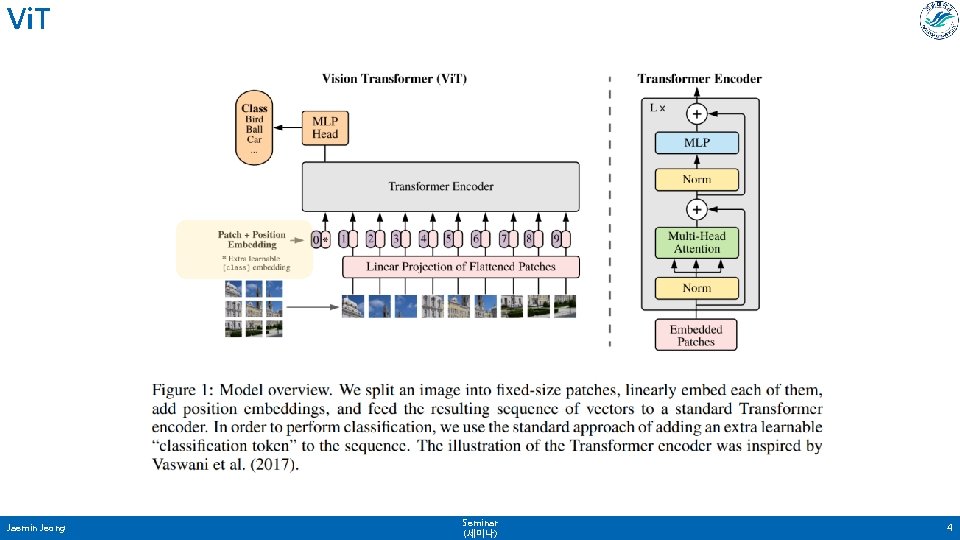

ViT

ViT

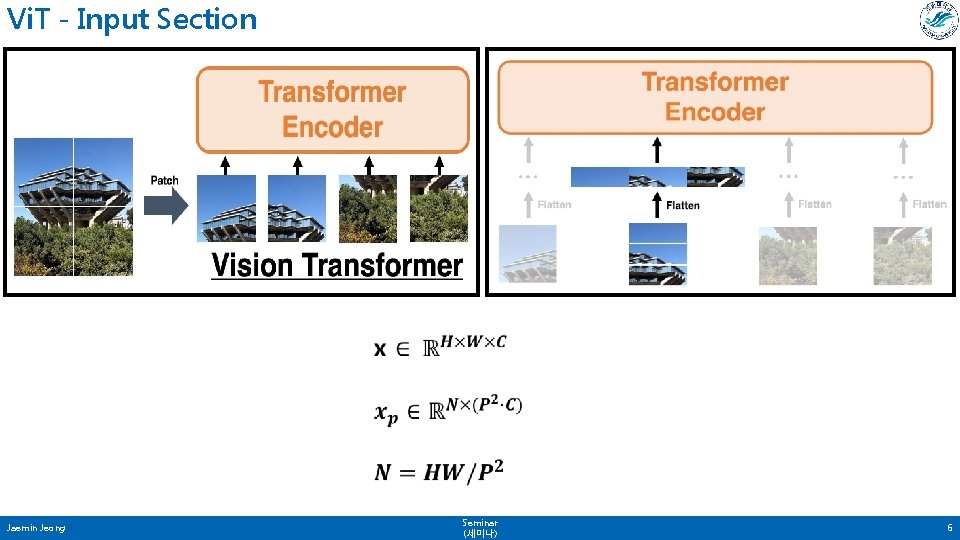

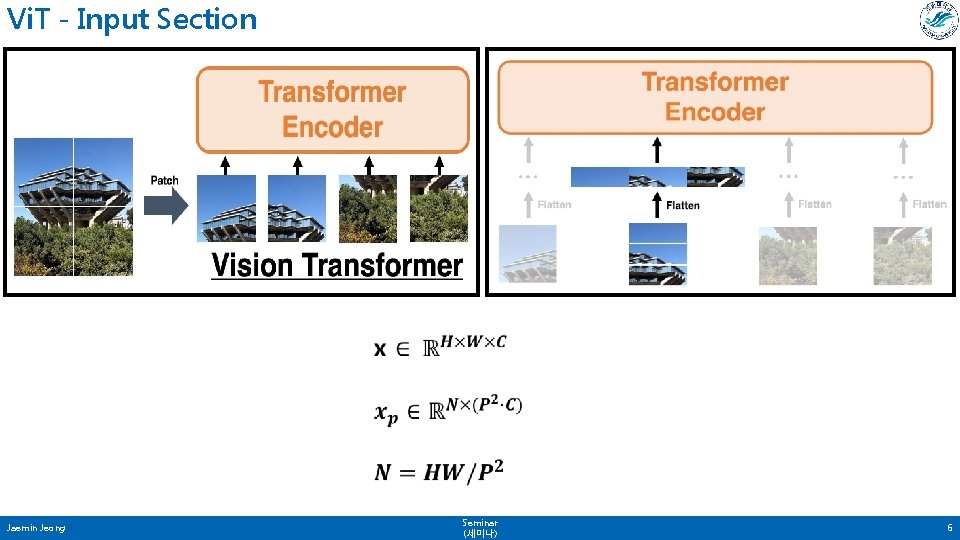

ViT - Input Section
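The input stage of ViT splits the image into fixed-size patches and flattens each one into a vector before a linear projection. The sketch below shows only the splitting and flattening step in plain Python; the function name `image_to_patches` and the toy 4 x 4 image are illustrative assumptions, and the learned projection, class token, and position embeddings are omitted.

```python
def image_to_patches(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened patches.

    Returns N = (H // P) * (W // P) vectors, each of length P * P * C,
    ordered row-major over the patch grid.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                channel
                for row in image[top:top + patch_size]
                for pixel in row[left:left + patch_size]
                for channel in pixel
            ]
            patches.append(patch)
    return patches


# Toy 4 x 4 single-channel image; each pixel value encodes its position.
image = [[[4 * r + c] for c in range(4)] for r in range(4)]
patches = image_to_patches(image, patch_size=2)

print(len(patches), len(patches[0]))  # 4 4
print(patches[0])                     # [0, 1, 4, 5]
```

With a 224 x 224 image and 16 x 16 patches, the same rule gives (224 / 16)^2 = 196 patches.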

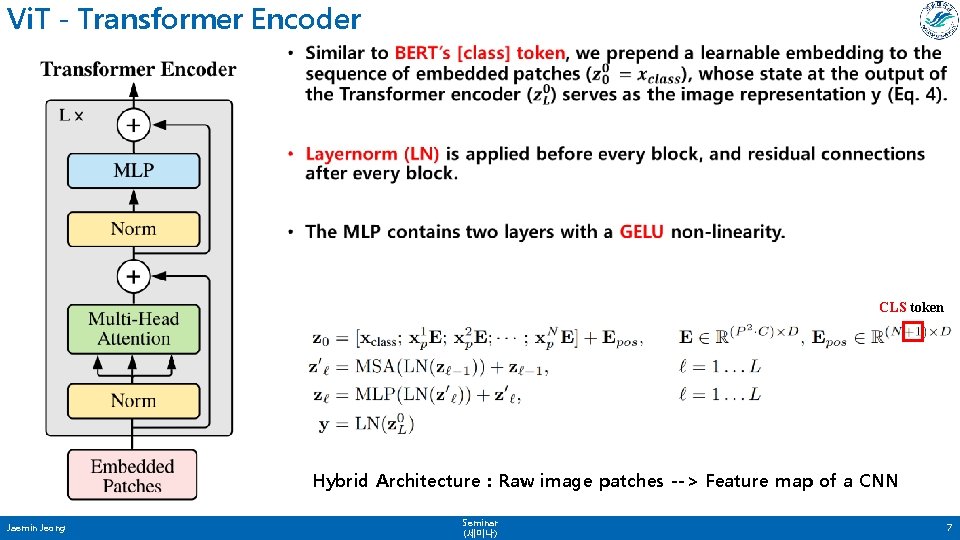

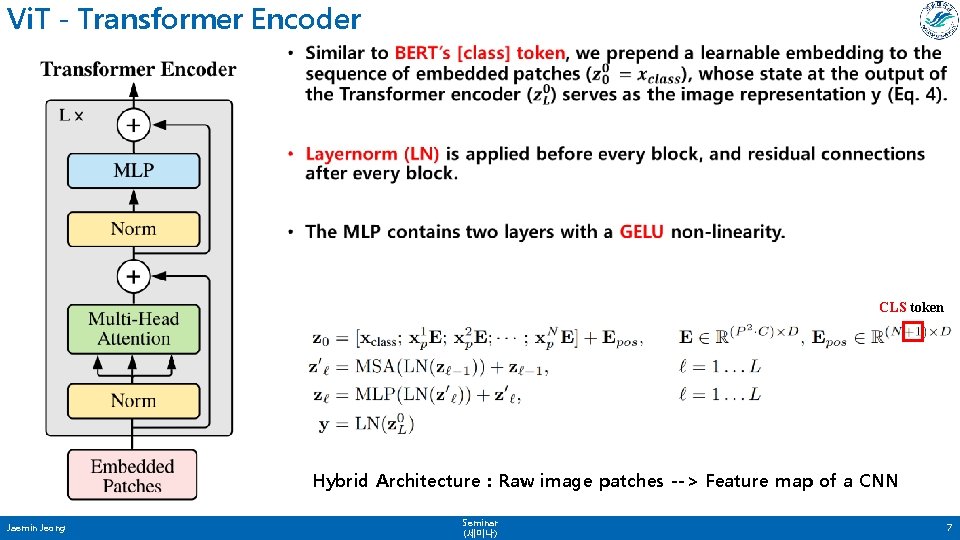

ViT - Transformer Encoder

- CLS token
- Hybrid architecture: the input sequence is formed from the feature map of a CNN instead of raw image patches.

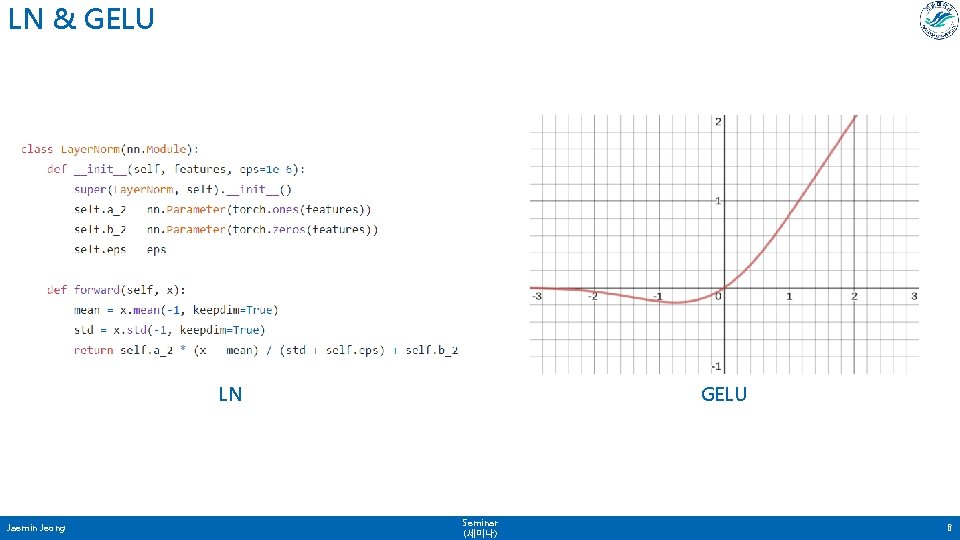

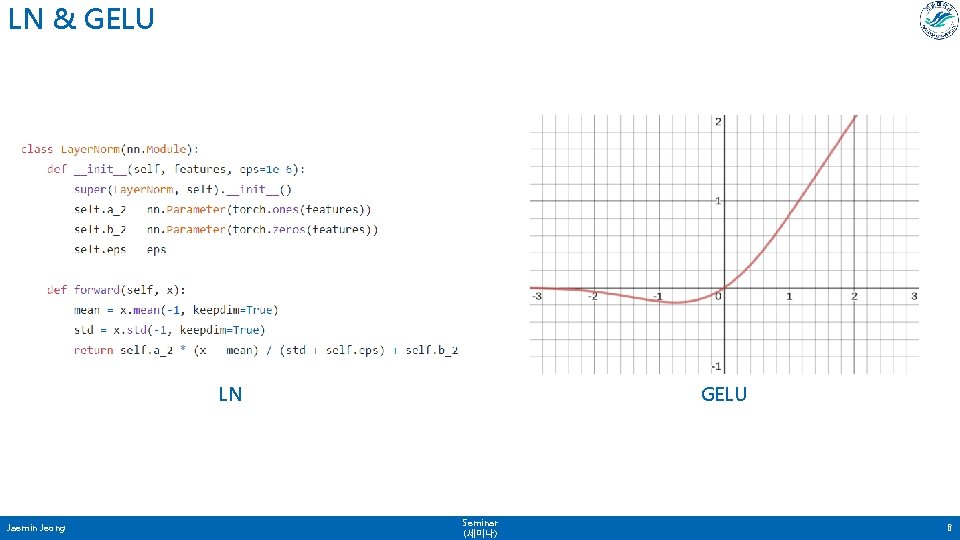

LN & GELU (figure panels: LN, GELU)
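For reference, a minimal sketch of the two operations named on this slide, written in plain Python. Layer normalization is applied to a single token vector, and GELU uses the exact form x * Phi(x). The scalar `gamma` and `beta` are a simplification assumed here; in practice they are learned per-feature vectors.

```python
import math


def layer_norm(x, gamma=1.0, beta=0.0, eps=1e-6):
    """Normalize one token vector to zero mean and unit variance over its features."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in x]


def gelu(x):
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


token = [1.0, 2.0, 3.0, 4.0]  # toy feature vector (assumed values)
normed = layer_norm(token)

print([round(v, 3) for v in normed])
print([round(gelu(v), 4) for v in (-1.0, 0.0, 1.0)])
```

Unlike ReLU, GELU is smooth and lets small negative inputs pass through with a small negative output.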

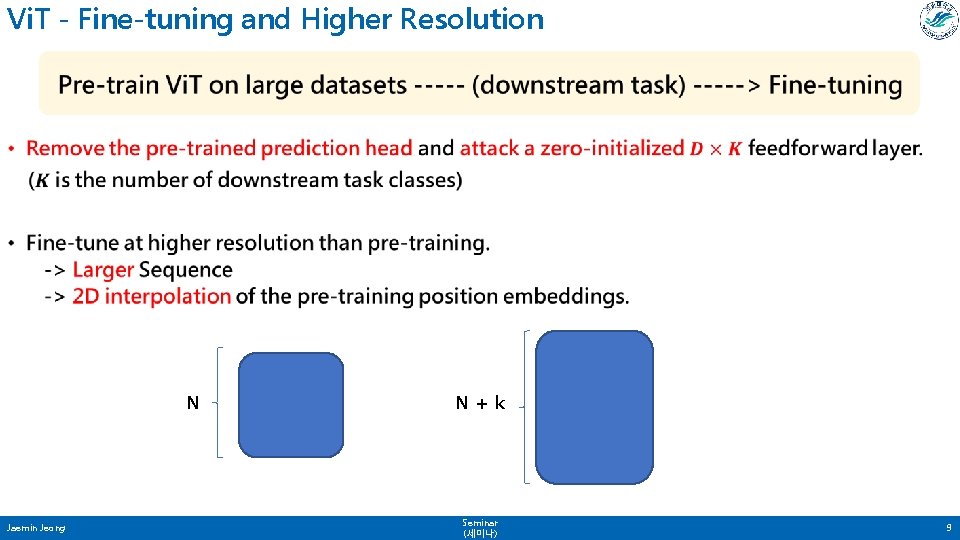

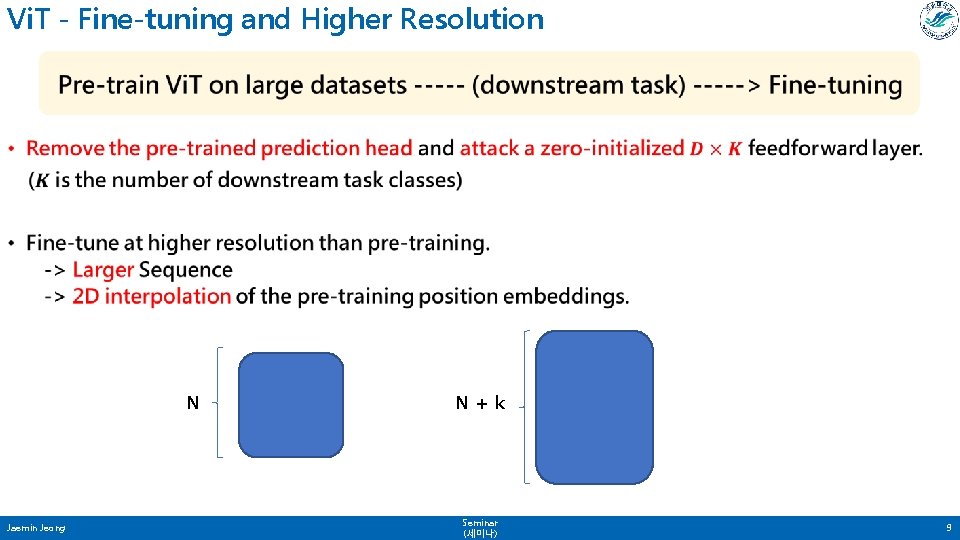

ViT - Fine-tuning and Higher Resolution (figure labels: N, N+k)
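When fine-tuning at a higher resolution with the same patch size, the number of patches grows, so the pretrained position embeddings no longer match the sequence length and are interpolated in 2D according to their location in the image. The sketch below illustrates this for a single embedding channel laid out on a square grid. The helper names `num_patches` and `resize_grid_bilinear`, and the choice of corner-aligned bilinear sampling, are assumptions for illustration; the class-token embedding is left out.

```python
def num_patches(resolution, patch_size):
    """Sequence length (without the class token) for a square image."""
    return (resolution // patch_size) ** 2


def resize_grid_bilinear(grid, new_size):
    """Bilinearly resize a square 2D grid (list of rows) to new_size x new_size.

    Corner-aligned: the corners of the old grid map to the corners of the new one.
    Requires both the old and the new size to be at least 2.
    """
    old_size = len(grid)
    scale = (old_size - 1) / (new_size - 1)
    out = []
    for i in range(new_size):
        y = i * scale
        y0 = min(int(y), old_size - 2)  # top row of the enclosing cell
        ty = y - y0
        row = []
        for j in range(new_size):
            x = j * scale
            x0 = min(int(x), old_size - 2)  # left column of the enclosing cell
            tx = x - x0
            top = (1 - tx) * grid[y0][x0] + tx * grid[y0][x0 + 1]
            bottom = (1 - tx) * grid[y0 + 1][x0] + tx * grid[y0 + 1][x0 + 1]
            row.append((1 - ty) * top + ty * bottom)
        out.append(row)
    return out


print(num_patches(224, 16), num_patches(384, 16))  # 196 576

pretrained = [[0.0, 1.0], [2.0, 3.0]]  # toy 2 x 2 grid, one embedding channel
print(resize_grid_bilinear(pretrained, 3))
# [[0.0, 0.5, 1.0], [1.0, 1.5, 2.0], [2.0, 2.5, 3.0]]
```

In a real model the same resize would be applied to every embedding channel independently.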

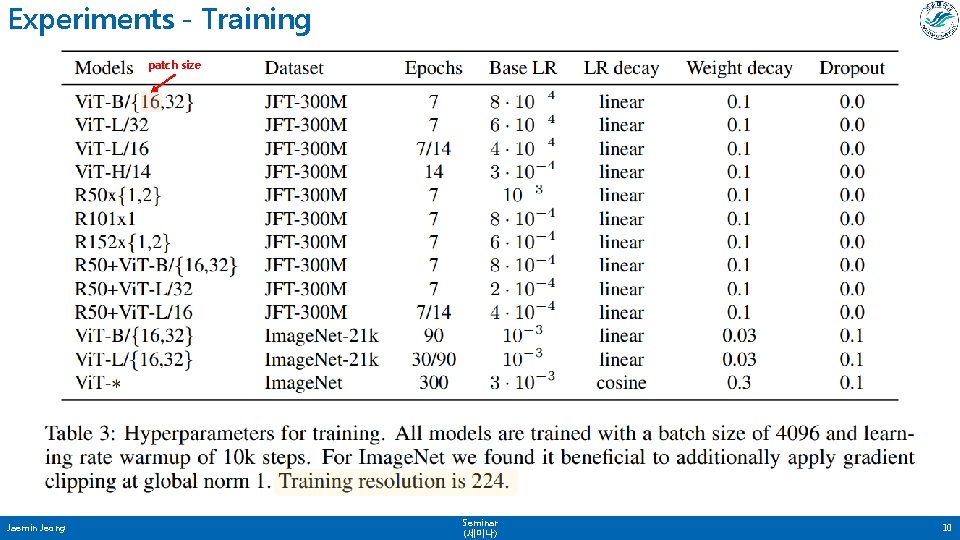

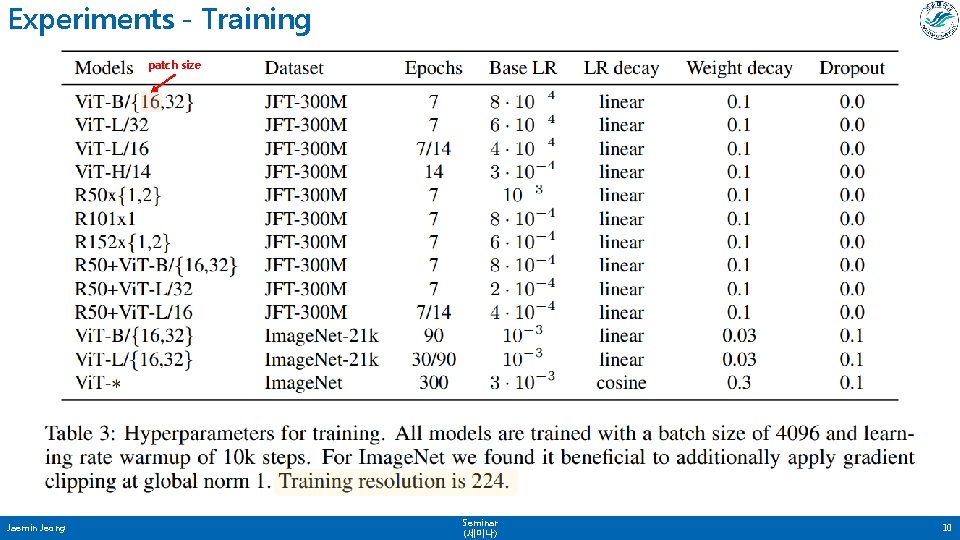

Experiments - Training patch size

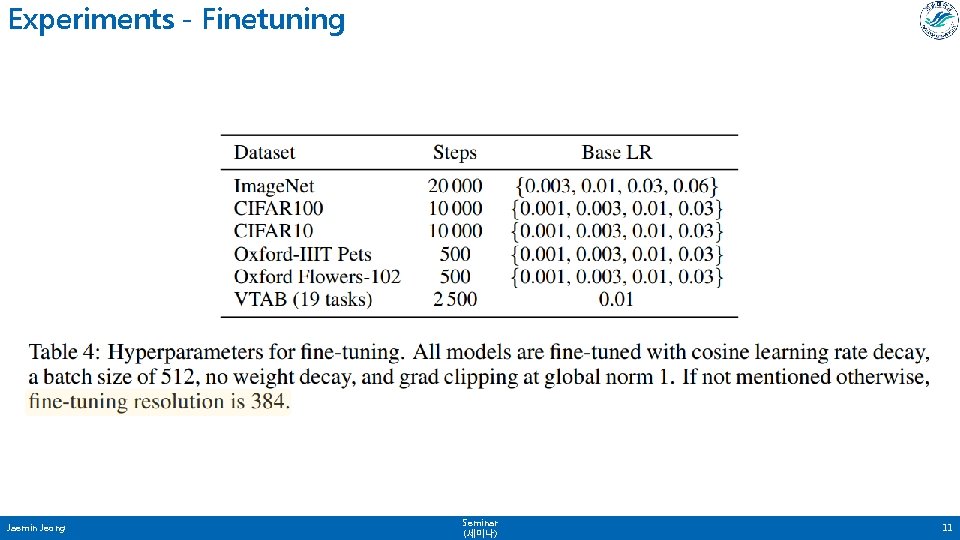

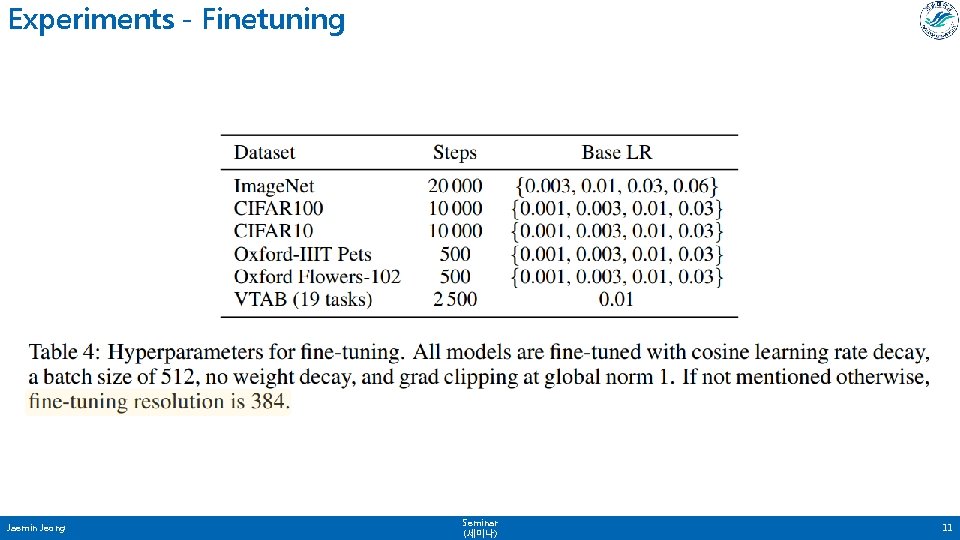

Experiments - Finetuning

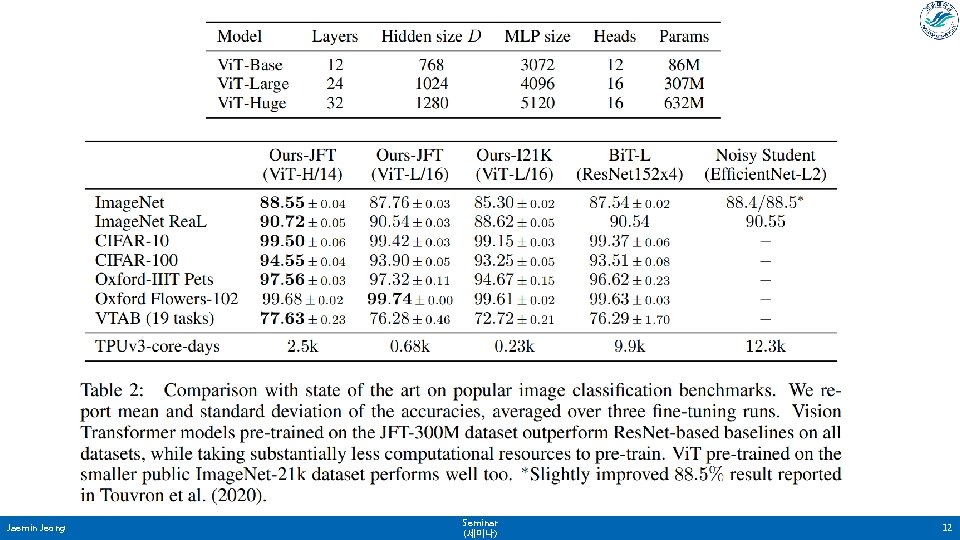

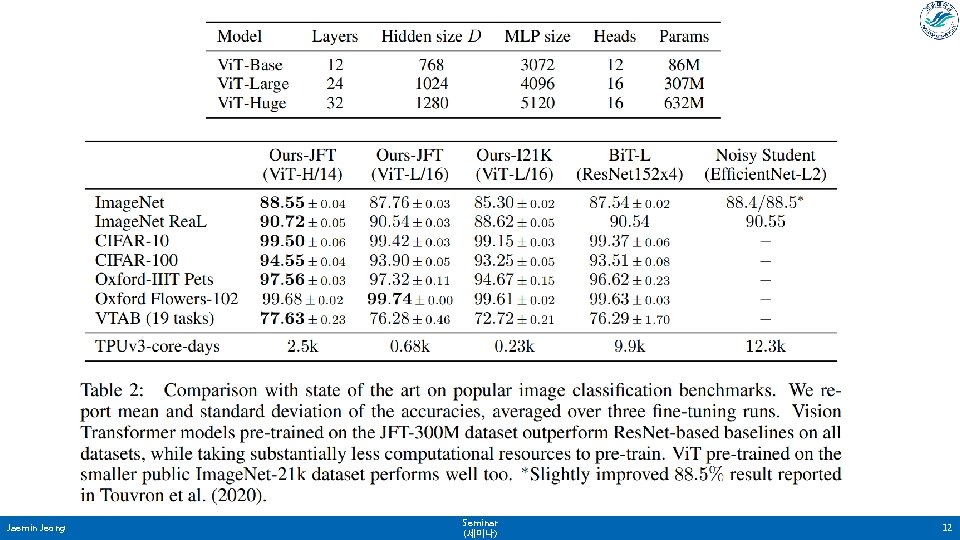


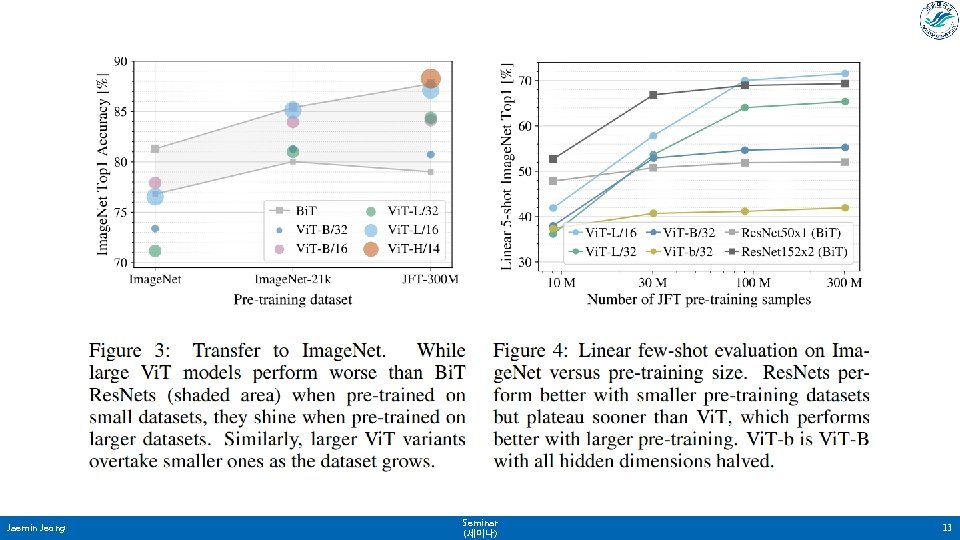


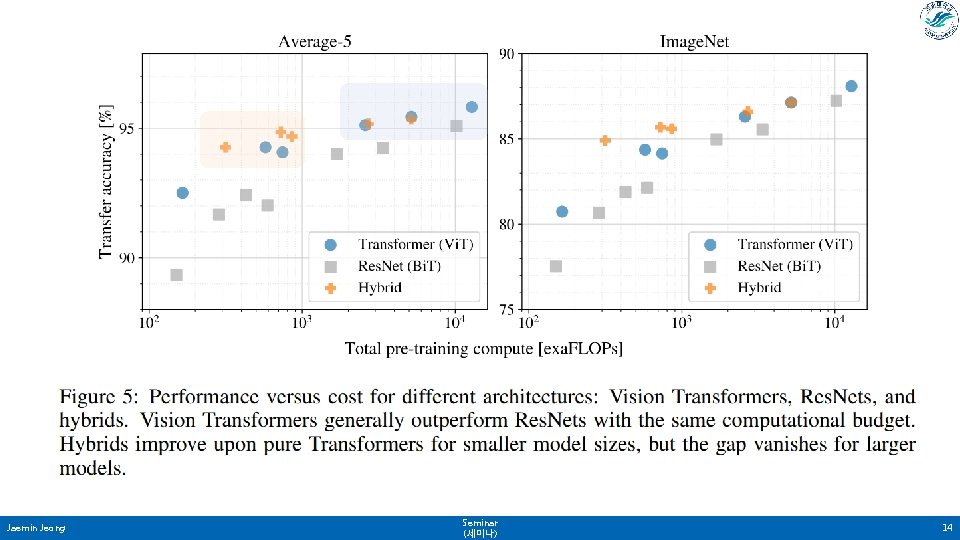

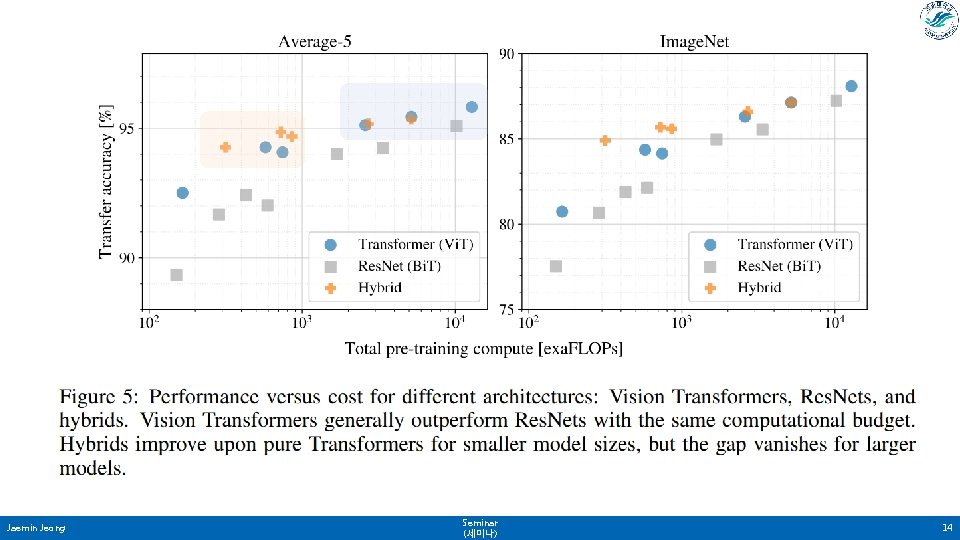


Inspecting Vision Transformer

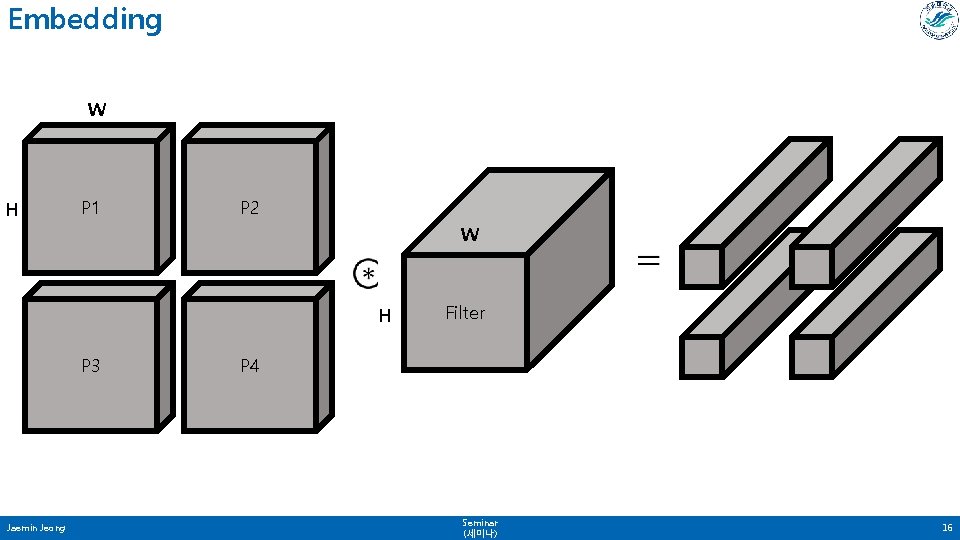

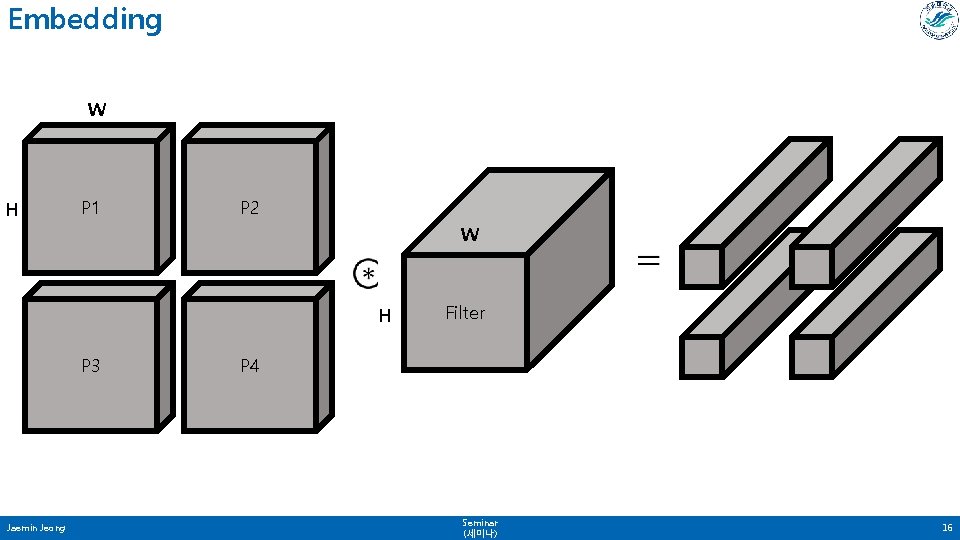

Embedding (figure labels: W, H, P1, P2, P3, P4, Filter)

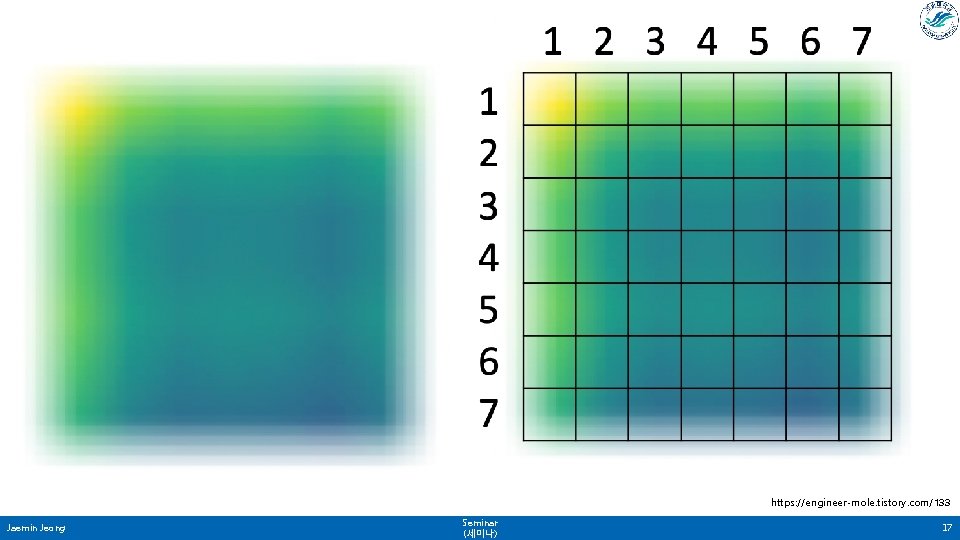

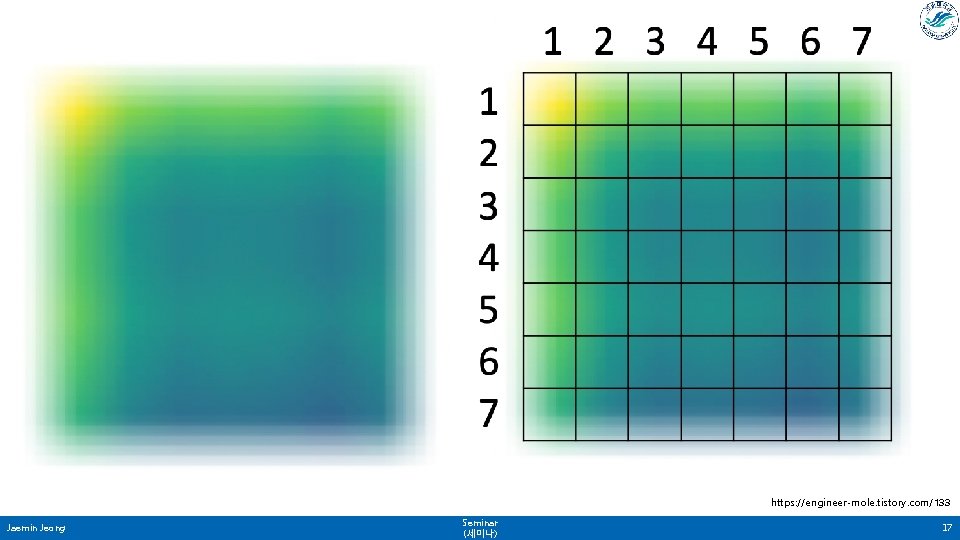

https://engineer-mole.tistory.com/133

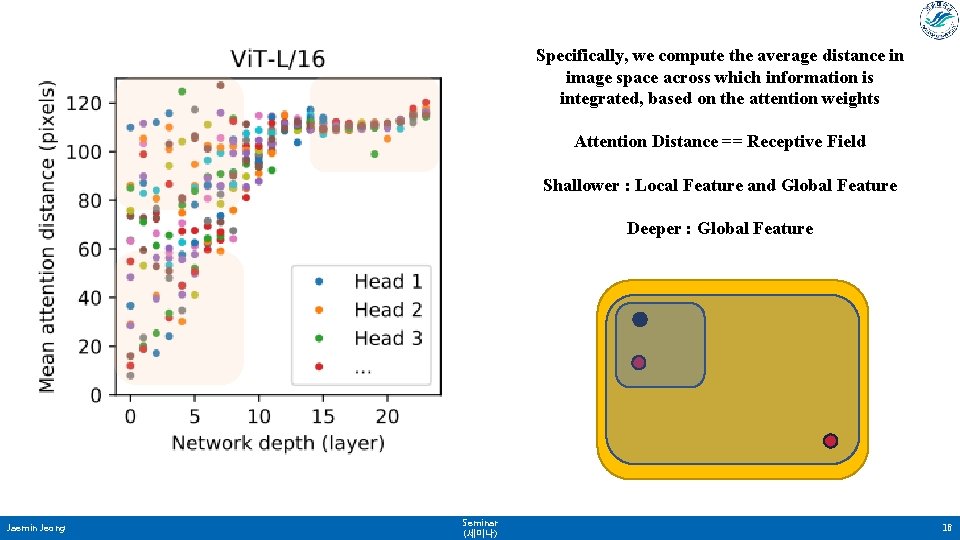

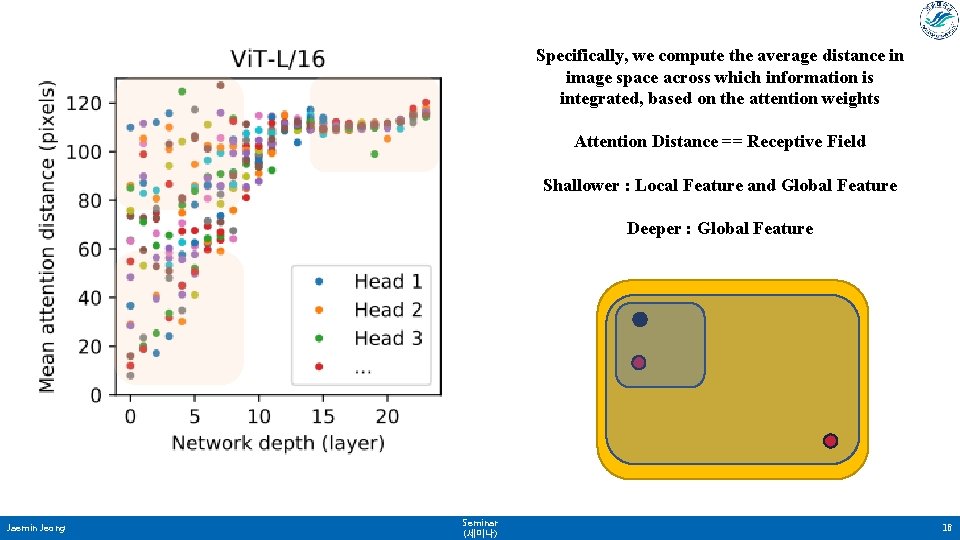

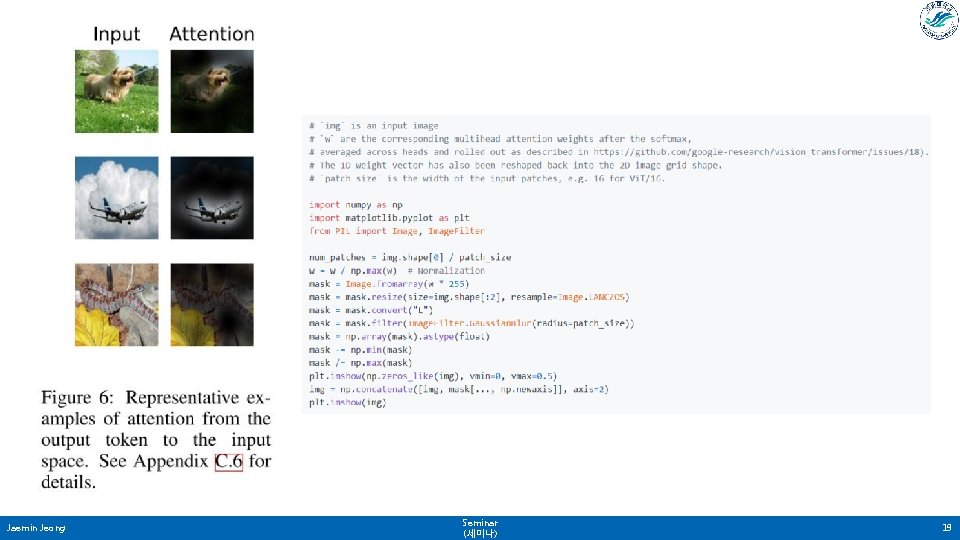

Specifically, we compute the average distance in image space across which information is integrated, based on the attention weights.

- Attention distance is analogous to the receptive field size in CNNs.
- Shallower layers: both local and global features.
- Deeper layers: global features.
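A minimal sketch of how such an attention distance could be computed for a single head: each query patch's distances to all key patches are weighted by the attention it assigns them, then averaged over queries. The function name, the square row-major patch grid, and the exclusion of the class token are assumptions for illustration.

```python
import math


def mean_attention_distance(attn, grid_size, patch_size):
    """Attention-weighted average pixel distance between query and key patches.

    attn[q][k] is the attention weight from query patch q to key patch k
    (each row sums to 1). Patches are indexed row-major on a square grid.
    """
    def position(index):
        row, col = divmod(index, grid_size)
        return row * patch_size, col * patch_size

    num = len(attn)
    total = 0.0
    for q in range(num):
        qy, qx = position(q)
        for k in range(num):
            ky, kx = position(k)
            total += attn[q][k] * math.hypot(qy - ky, qx - kx)
    return total / num


# Toy 2 x 2 patch grid with 16-pixel patches (assumed values).
local = [[1.0 if q == k else 0.0 for k in range(4)] for q in range(4)]
uniform = [[0.25] * 4 for _ in range(4)]

print(mean_attention_distance(local, grid_size=2, patch_size=16))  # 0.0
print(round(mean_attention_distance(uniform, grid_size=2, patch_size=16), 3))  # 13.657
```

A head that attends only to its own patch has distance 0 (purely local), while a head that attends uniformly integrates information across the whole image.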

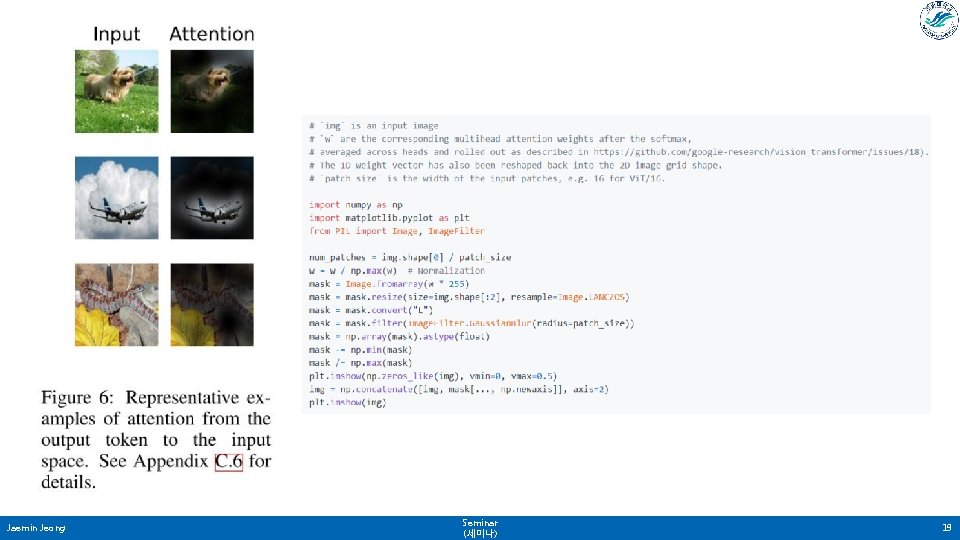


Challenges

- One is to apply ViT to other computer vision tasks, such as detection and segmentation.
- Another challenge is to continue exploring self-supervised pretraining methods.
- Finally, further scaling of ViT would likely lead to improved performance.