Virtuoso: Distributed Computing Using Virtual Machines
Peter A. Dinda
Prescience Lab, Department of Computer Science, Northwestern University
http://plab.cs.northwestern.edu

People and Acknowledgements • Students – Ashish Gupta, Ananth Sundararaj, Bin Lin, Alex Shoykhet, Jack Lange, Dong Lu, Jason Skicewicz, Brian Cornell • Collaborators – In-Vigo project at University of Florida • Renato Figueiredo, Jose Fortes • Funders/Gifts – NSF through several awards, VMWare

Outline • Motivation and context • Virtuoso model • Virtual networking – Its central importance • Application traffic load measurement and topology inference • Understanding user comfort with resource borrowing – User-centric resource control • Related work • Conclusions

How do we deliver arbitrary amounts of computational power to ordinary people?

Distributed and Parallel Computing How do we deliver arbitrary amounts of computational power to ordinary people? Interactive Applications

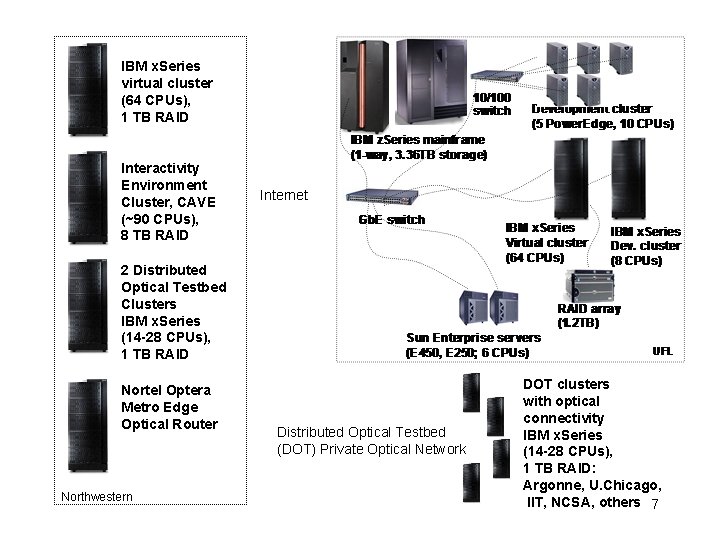

Northwestern Distributed Optical Testbed (DOT) • IBM xSeries virtual cluster (64 CPUs), 1 TB RAID • Interactivity Environment Cluster, CAVE (~90 CPUs), 8 TB RAID • Internet2 • Nortel Optera Metro Edge Optical Router • Private Optical Network • Distributed Optical Testbed clusters: IBM xSeries (14-28 CPUs), 1 TB RAID • DOT clusters with optical connectivity, IBM xSeries (14-28 CPUs), 1 TB RAID: Argonne, U. Chicago, IIT, NCSA, others

Grid Computing • “Flexible, secure, coordinated resource sharing among dynamic collections of individuals, institutions, and resources” • I. Foster, C. Kesselman, S. Tuecke, The Anatomy of the Grid: Enabling Scalable Virtual Organizations, International J. Supercomputer Applications, 15(3), 2001 • Globus, Condor/G, Avaki, EU DataGrid SW, …

Complexity from User’s Perspective • Process or job model – Lots of complex state: connections, special shared libraries, licenses, file descriptors • Operating system specificity – Perhaps even version-specific – Symbolic supercomputer example • Need to buy into some Grid API • Install and learn potentially complex Grid software

Users already know how to deal with this complexity at another level

Complexity from Resource Owner’s Perspective • Install and learn potentially complex Grid software • Deal with local accounts and privileges – Associated with global accounts or certificates • Protection/Isolation • Support users with different OS, library, license, etc., needs.

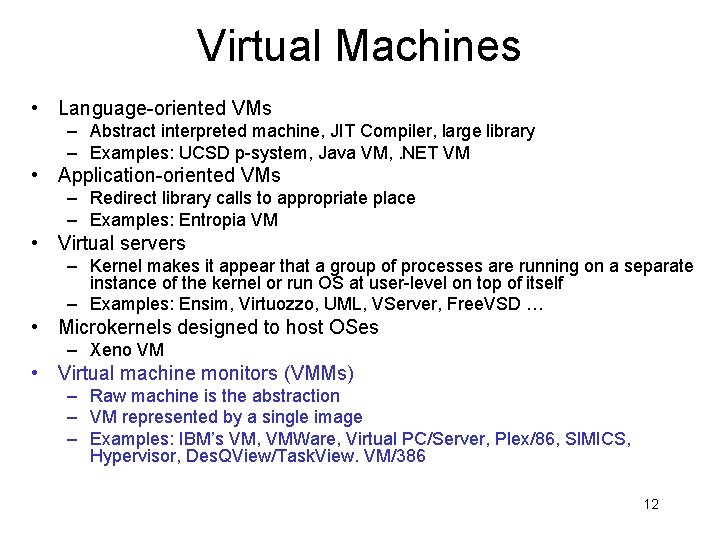

Virtual Machines
• Language-oriented VMs
  – Abstract interpreted machine, JIT Compiler, large library
  – Examples: UCSD p-system, Java VM, .NET VM
• Application-oriented VMs
  – Redirect library calls to appropriate place
  – Examples: Entropia VM
• Virtual servers
  – Kernel makes it appear that a group of processes are running on a separate instance of the kernel or run OS at user-level on top of itself
  – Examples: Ensim, Virtuozzo, UML, VServer, FreeVSD, …
• Microkernels designed to host OSes
  – Xeno VM
• Virtual machine monitors (VMMs)
  – Raw machine is the abstraction
  – VM represented by a single image
  – Examples: IBM’s VM, VMWare, Virtual PC/Server, Plex/86, SIMICS, Hypervisor, DesQView/TaskView, VM/386

VMWare GSX VM

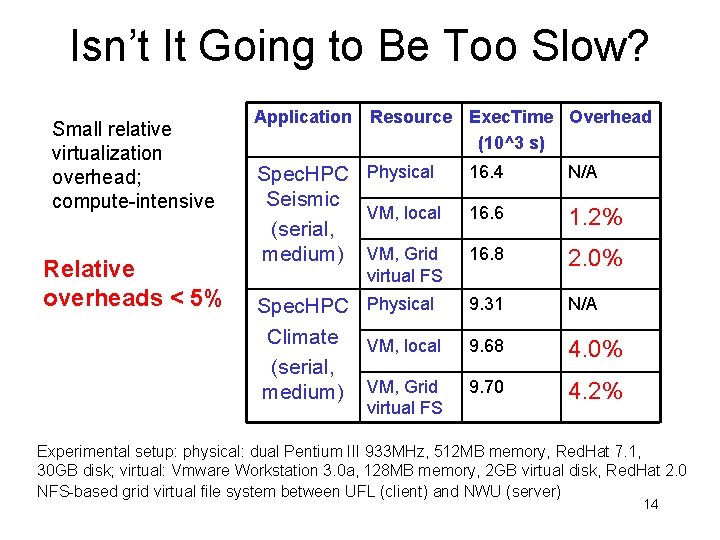

Isn’t It Going to Be Too Slow?
Small relative virtualization overhead for compute-intensive workloads: relative overheads < 5%

| Application | Resource | Exec. Time (10^3 s) | Overhead |
|---|---|---|---|
| SpecHPC Seismic (serial, medium) | Physical | 16.4 | N/A |
| | VM, local | 16.6 | 1.2% |
| | VM, Grid virtual FS | 16.8 | 2.0% |
| SpecHPC Climate (serial, medium) | Physical | 9.31 | N/A |
| | VM, local | 9.68 | 4.0% |
| | VM, Grid virtual FS | 9.70 | 4.2% |

Experimental setup: physical: dual Pentium III 933 MHz, 512 MB memory, RedHat 7.1, 30 GB disk; virtual: VMware Workstation 3.0a, 128 MB memory, 2 GB virtual disk, RedHat 2.0. NFS-based grid virtual file system between UFL (client) and NWU (server).

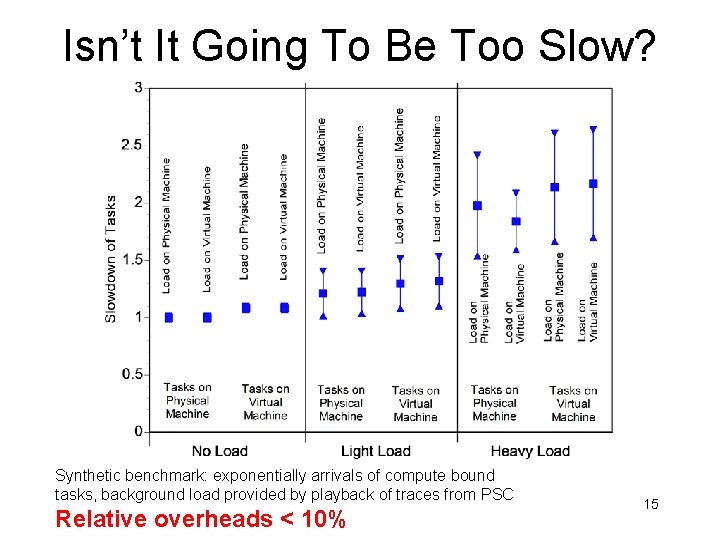

Isn’t It Going To Be Too Slow? Synthetic benchmark: exponential arrivals of compute-bound tasks, background load provided by playback of traces from PSC. Relative overheads < 10%

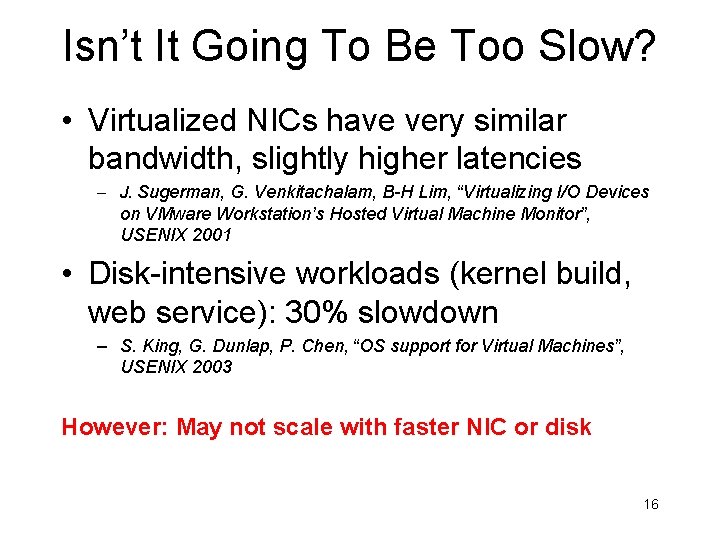

Isn’t It Going To Be Too Slow? • Virtualized NICs have very similar bandwidth, slightly higher latencies – J. Sugerman, G. Venkitachalam, B-H Lim, “Virtualizing I/O Devices on VMware Workstation’s Hosted Virtual Machine Monitor”, USENIX 2001 • Disk-intensive workloads (kernel build, web service): 30% slowdown – S. King, G. Dunlap, P. Chen, “OS support for Virtual Machines”, USENIX 2003 • However: May not scale with faster NIC or disk

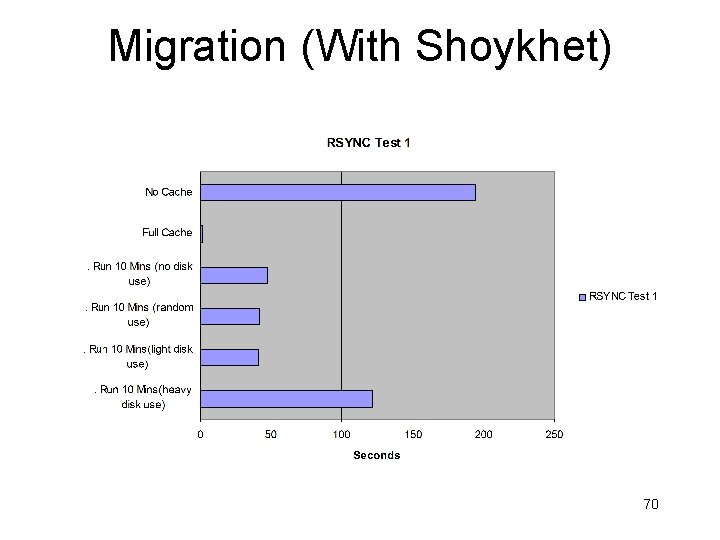

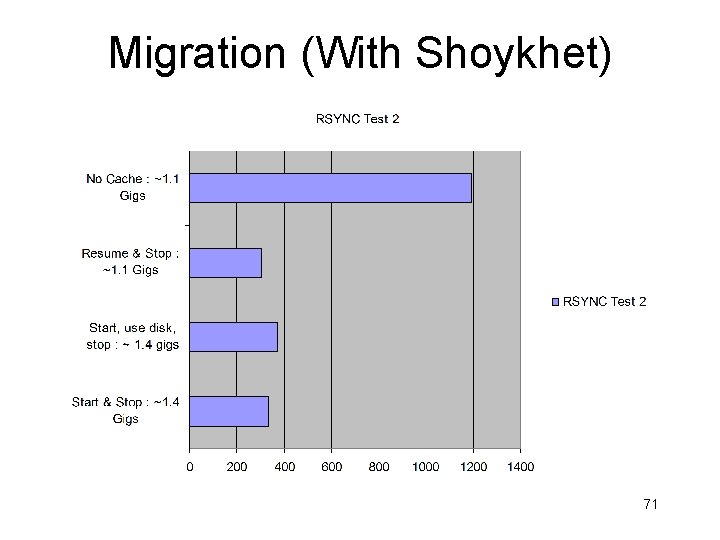

Won’t Migration Be Too Slow? • Appears daunting • Memory + disk! • Nonetheless – Stanford Collective: 20 minutes at DSL speeds! • Sapuntzakis, et al., OSDI 2002, very deep work • Wide variety of techniques – Intel/CMU ISR: 2.5-30 seconds from distributed file system at LAN speeds – Our work: 2-400 seconds with rsync on LAN (Shoykhet) – Current project: versioning file system (Cornell, Patel)

Virtuoso
• Approach: Lower level of abstraction
  – Raw machines connected to user’s network
• Mechanism: Virtual machine monitors
• Our Focus: Middleware support to hide complexity
  – Ordering, instantiation, migration of machines
  – Virtual networking and remote devices
  – Connectivity to remote files, machines
  – Information services
  – Monitoring and prediction
  – Resource control

The Virtuoso Model
1. User orders raw machine(s)
   • Specifies hardware and performance
   • Basic software installation available
     – OS, libraries, licenses, etc.
2. Virtuoso creates raw image and returns reference
   • Image contains disk, memory, configuration, etc.
3. User “powers up” machine
4. Virtuoso chooses provider
   • Information service
5. Virtuoso migrates image to provider
   • Efficient network transfer
     – rsync, demand paging, versioned filesystems

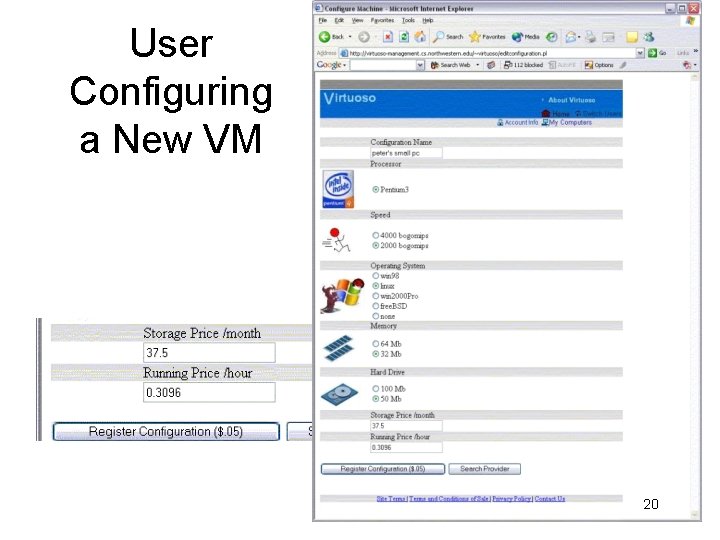

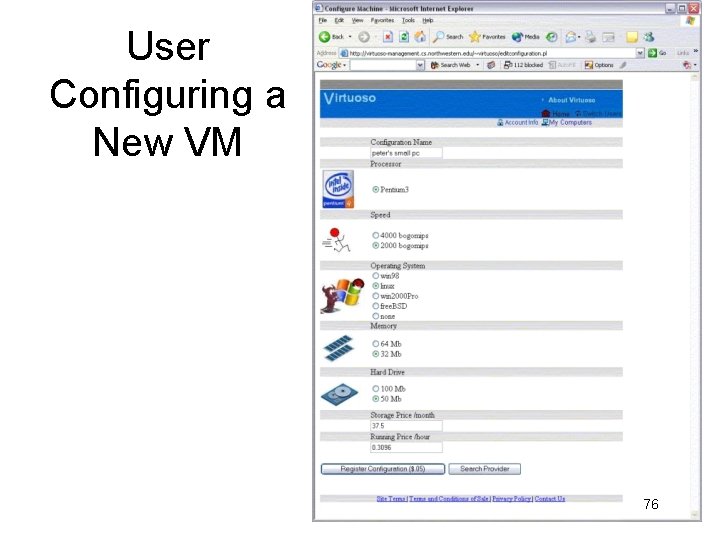

User Configuring a New VM

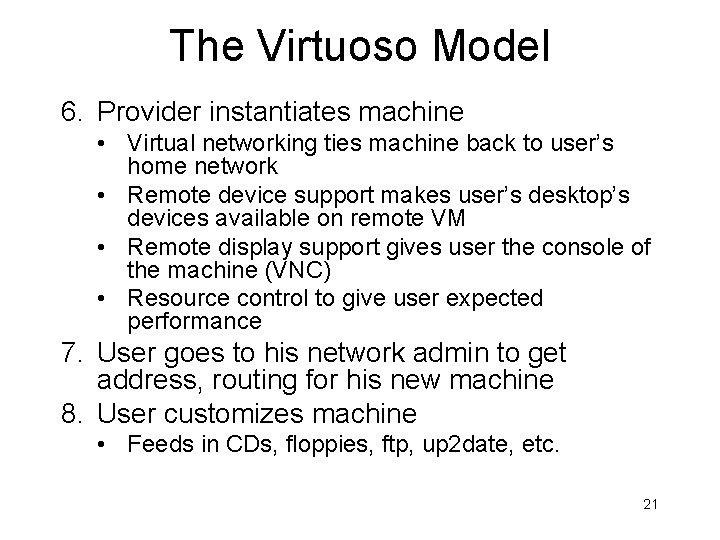

The Virtuoso Model
6. Provider instantiates machine
   • Virtual networking ties machine back to user’s home network
   • Remote device support makes user’s desktop’s devices available on remote VM
   • Remote display support gives user the console of the machine (VNC)
   • Resource control to give user expected performance
7. User goes to his network admin to get address, routing for his new machine
8. User customizes machine
   • Feeds in CDs, floppies, ftp, up2date, etc.

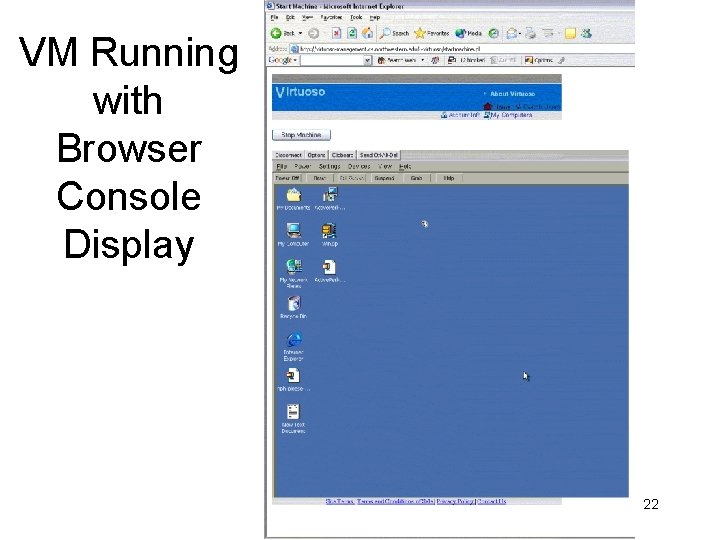

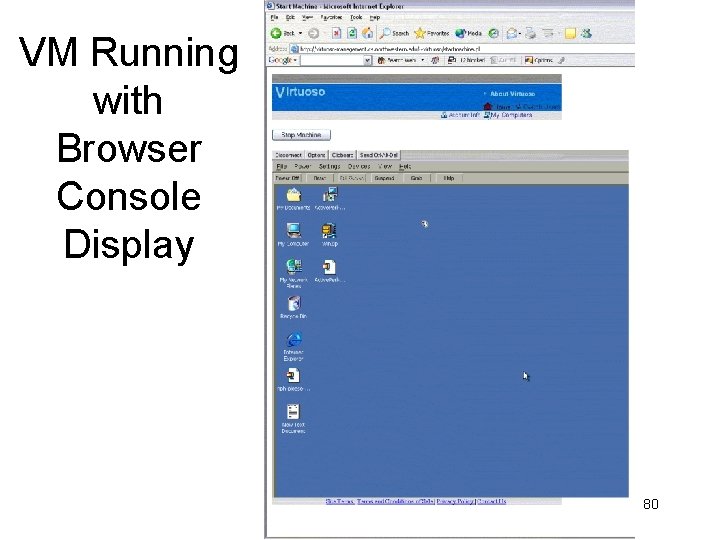

VM Running with Browser Console Display

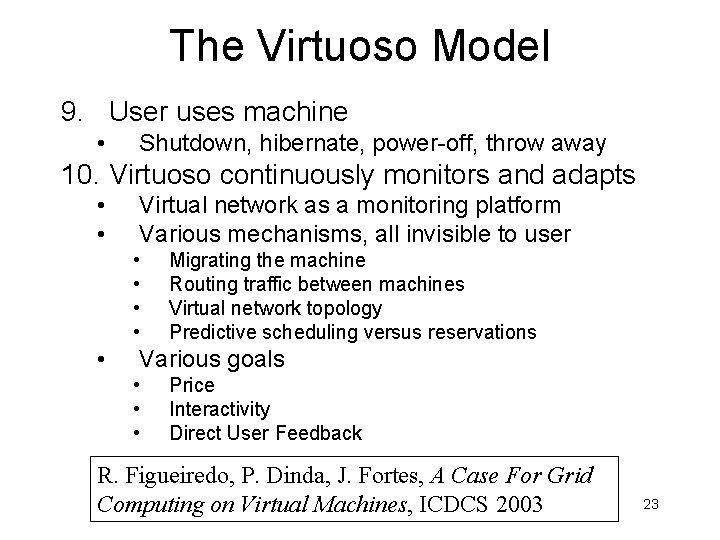

The Virtuoso Model
9. User uses machine
   • Shutdown, hibernate, power-off, throw away
10. Virtuoso continuously monitors and adapts
   • Virtual network as a monitoring platform
   • Various mechanisms, all invisible to user
     – Migrating the machine
     – Routing traffic between machines
     – Virtual network topology
     – Predictive scheduling versus reservations
   • Various goals
     – Price
     – Interactivity
     – Direct User Feedback

R. Figueiredo, P. Dinda, J. Fortes, A Case For Grid Computing on Virtual Machines, ICDCS 2003

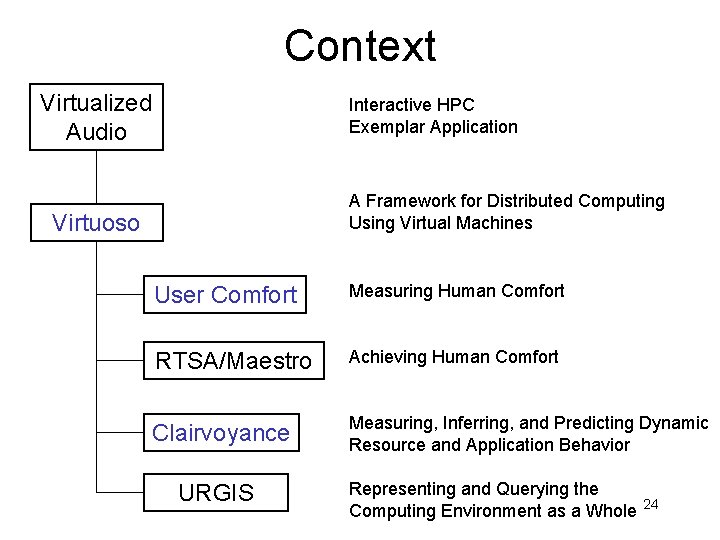

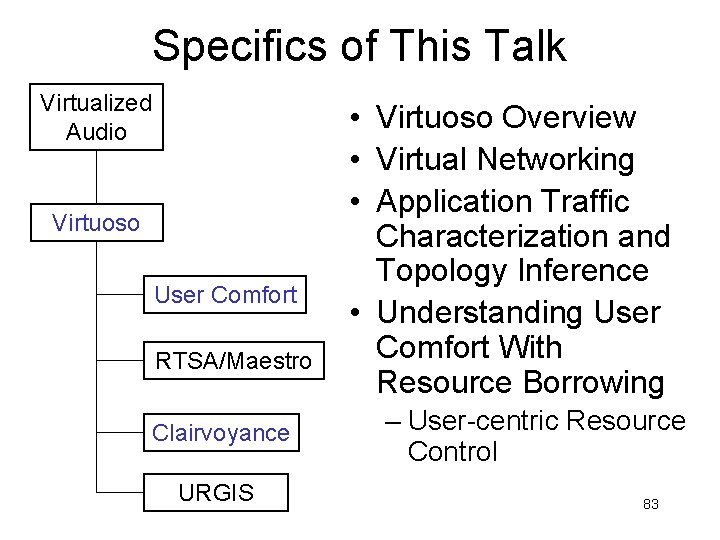

Context
• Virtualized Audio: Interactive HPC Exemplar Application
• Virtuoso: A Framework for Distributed Computing Using Virtual Machines
• User Comfort: Measuring Human Comfort
• RTSA/Maestro: Achieving Human Comfort
• Clairvoyance: Measuring, Inferring, and Predicting Dynamic Resource and Application Behavior
• URGIS: Representing and Querying the Computing Environment as a Whole

Why Virtual Networking? (with Sundararaj) • A machine is suddenly plugged into your network. What happens? – Does it get an IP address? – Is it a routable address? – Does the firewall let its traffic through? – To any port? • How do we make environments that are hostile to virtual machines as friendly as the user’s LAN?

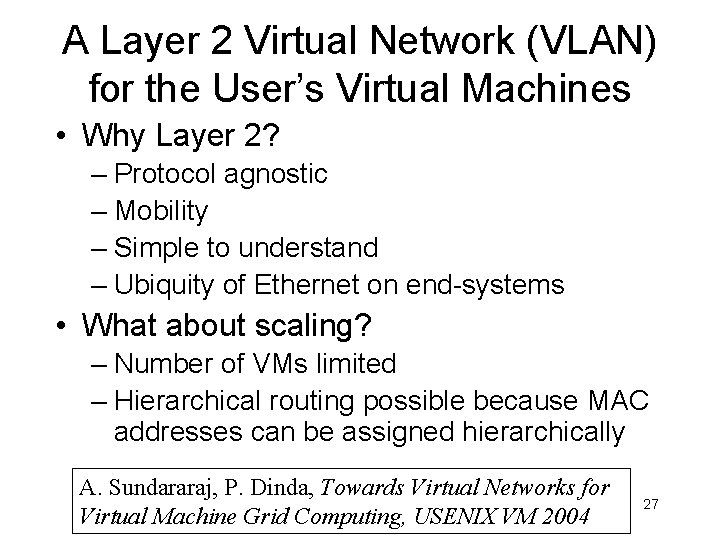

A Layer 2 Virtual Network (VLAN) for the User’s Virtual Machines • Why Layer 2? – Protocol agnostic – Mobility – Simple to understand – Ubiquity of Ethernet on end-systems • What about scaling? – Number of VMs limited – Hierarchical routing possible because MAC addresses can be assigned hierarchically • A. Sundararaj, P. Dinda, Towards Virtual Networks for Virtual Machine Grid Computing, USENIX VM 2004

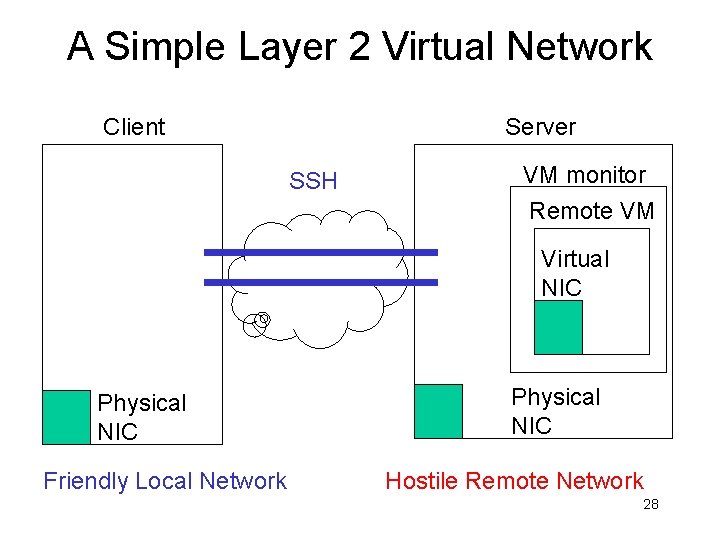

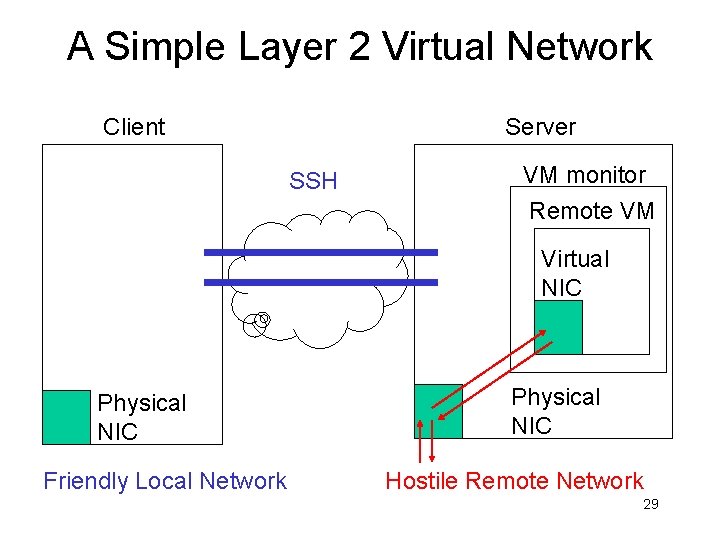

A Simple Layer 2 Virtual Network
Diagram labels: Client, Server, SSH, VM monitor, Remote VM, Virtual NIC, Physical NIC (Friendly Local Network), Physical NIC (Hostile Remote Network)

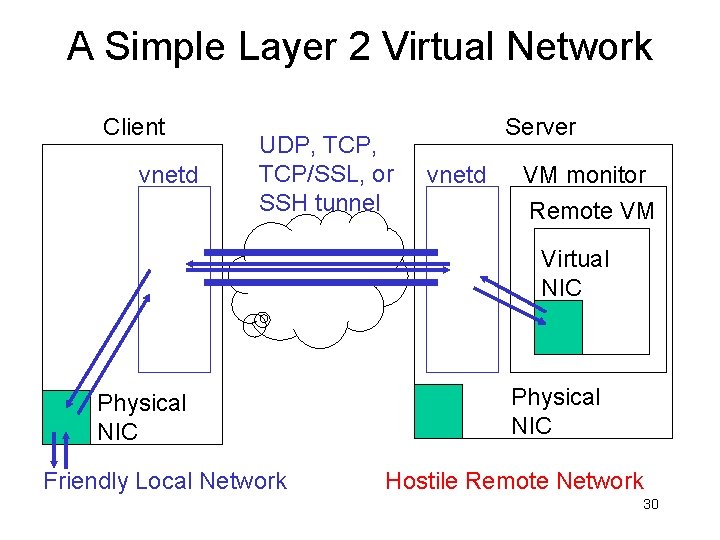

A Simple Layer 2 Virtual Network
Diagram labels: Client, vnetd, UDP, TCP/SSL, or SSH tunnel, Server, vnetd, VM monitor, Remote VM, Virtual NIC, Physical NIC (Friendly Local Network), Physical NIC (Hostile Remote Network)

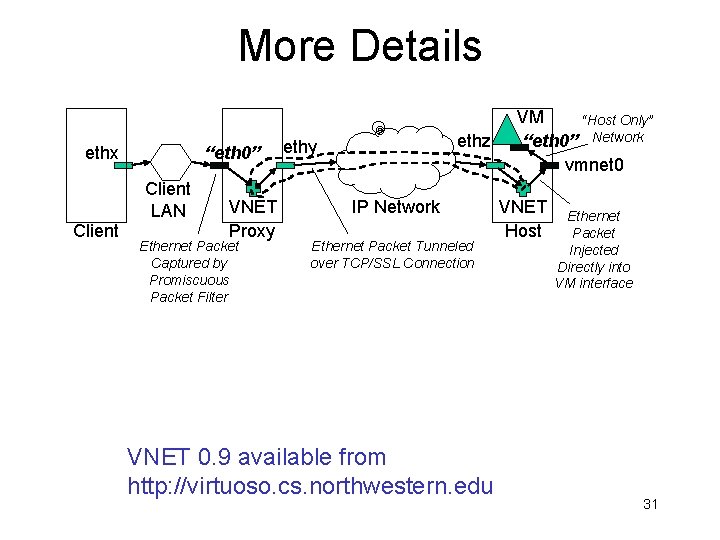

More Details
• Diagram labels: Client (“eth0”), Client LAN, Proxy, VNET, ethx, ethy, IP Network, Host, VNET, ethz, vmnet0, “Host Only” Network, VM (“eth0”)
• Ethernet packet captured by promiscuous packet filter
• Ethernet packet tunneled over TCP/SSL connection
• Ethernet packet injected directly into VM interface
• VNET 0.9 available from http://virtuoso.cs.northwestern.edu

Initial Performance Results (LAN) • Faster than NAT approach • Lots of room for improvement • This version you can download and use right now

An Overlay Network • Vnetds and connections form an overlay network for routing traffic among virtual machines and the user’s home network • Links can be added or removed on demand • Forwarding rules can be added or removed on demand
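A minimal sketch of the idea of links and forwarding rules that change on demand. The class name `Vnetd`, its methods, and the fallback to a default link are hypothetical illustrations, not the VNET implementation or its interface.

```python
class Vnetd:
    """Toy model of one overlay daemon's forwarding state (hypothetical API)."""

    def __init__(self, default_link):
        # Assumption: traffic with no matching rule goes to one default link,
        # for example the link back to the user's home network.
        self.default_link = default_link
        self.links = {default_link}
        self.rules = {}  # destination MAC address -> link name

    def add_link(self, link):
        self.links.add(link)

    def remove_link(self, link):
        if link == self.default_link:
            raise ValueError("the default link must stay up")
        self.links.discard(link)
        # Drop every rule that pointed at the removed link.
        self.rules = {mac: l for mac, l in self.rules.items() if l != link}

    def add_rule(self, dest_mac, link):
        if link not in self.links:
            raise ValueError(f"unknown link: {link}")
        self.rules[dest_mac] = link

    def remove_rule(self, dest_mac):
        self.rules.pop(dest_mac, None)

    def next_link(self, dest_mac):
        """Pick the link on which to forward a frame for dest_mac."""
        return self.rules.get(dest_mac, self.default_link)
```

In this sketch, removing a link silently reverts affected destinations to the default link, which mirrors the point that a star topology is always available as a fallback.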

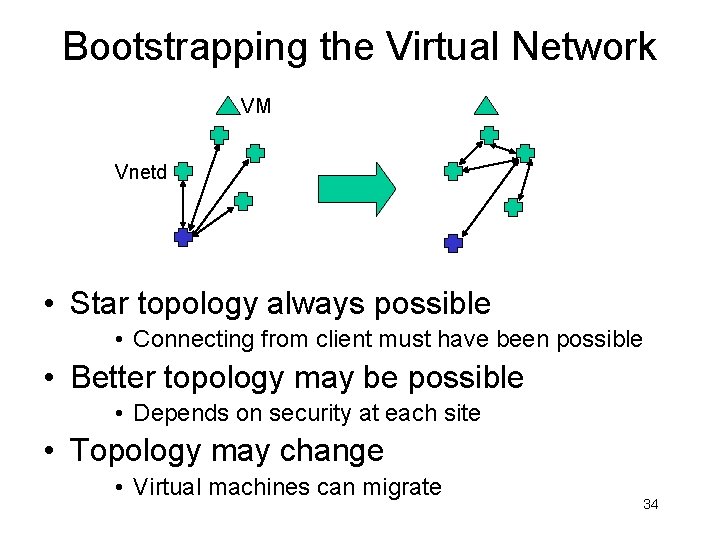

Bootstrapping the Virtual Network (diagram labels: VM, Vnetd) • Star topology always possible – Connecting from client must have been possible • Better topology may be possible – Depends on security at each site • Topology may change – Virtual machines can migrate

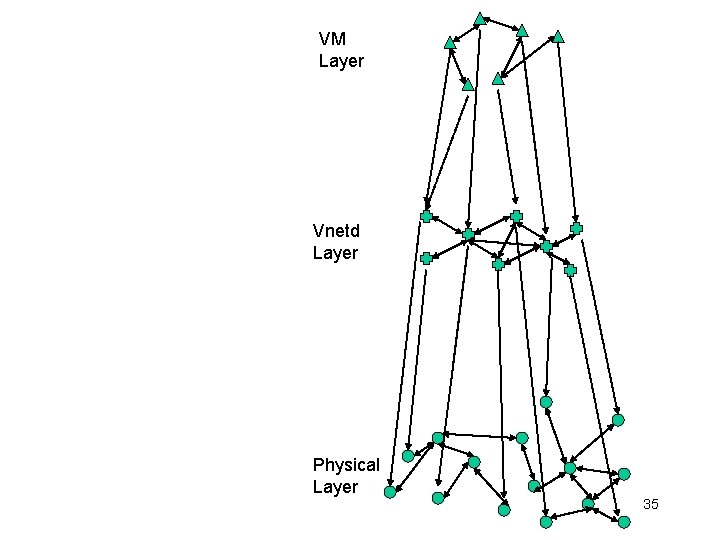

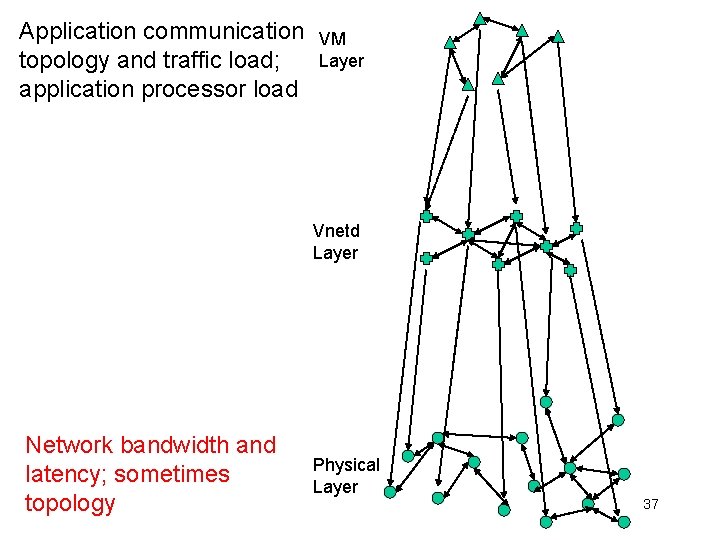

VM Layer Vnetd Layer Physical Layer

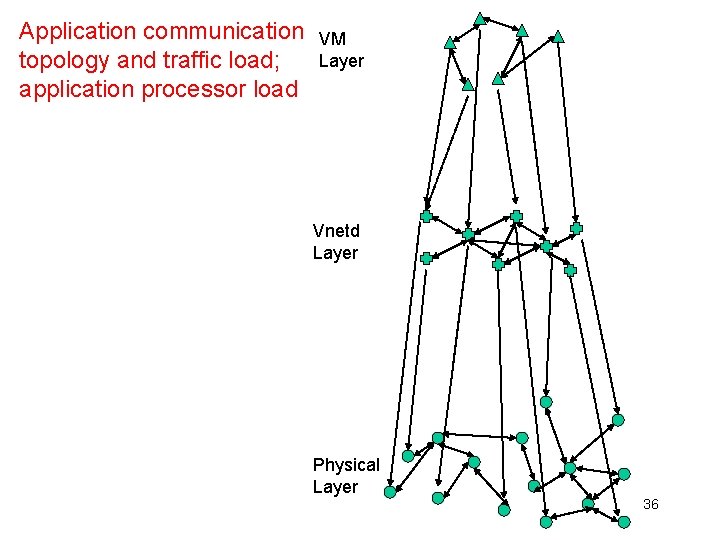

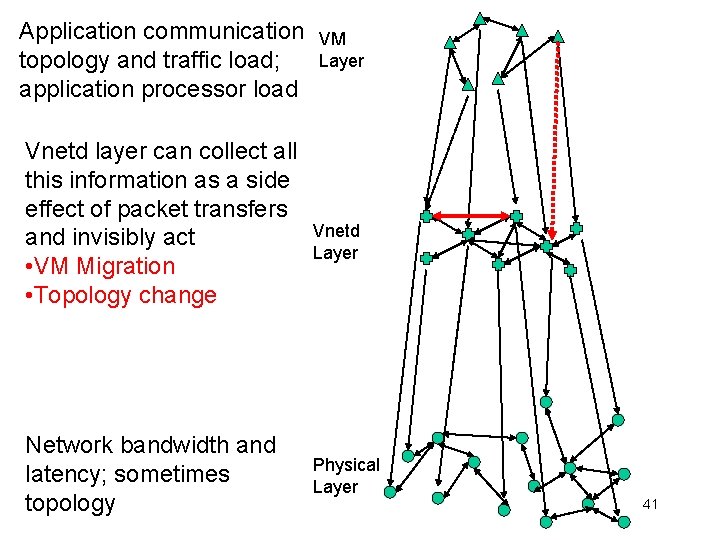

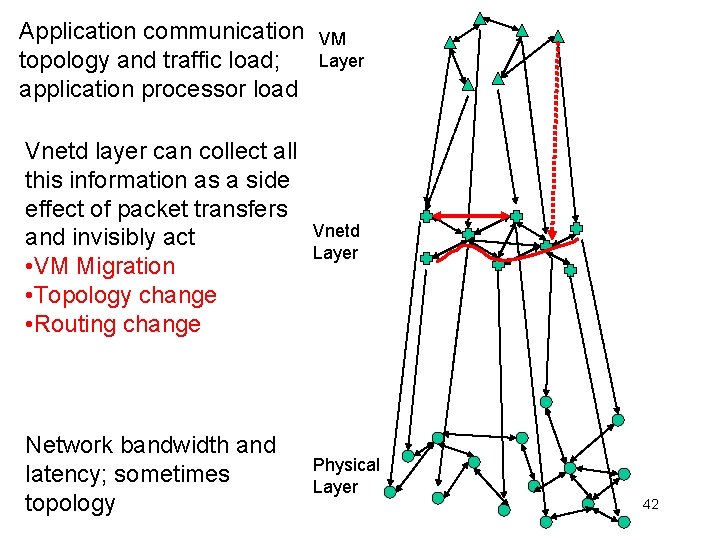

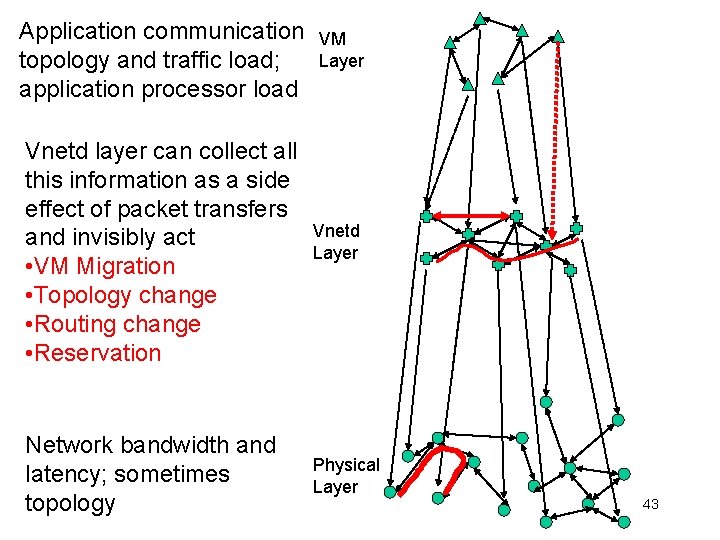


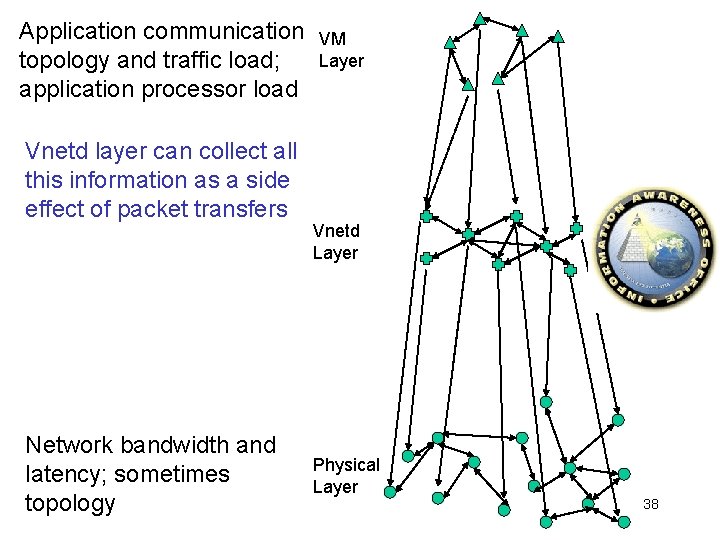


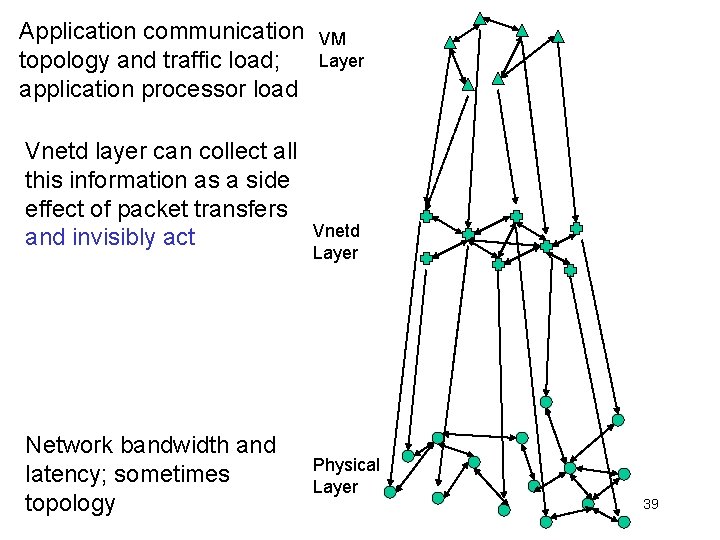


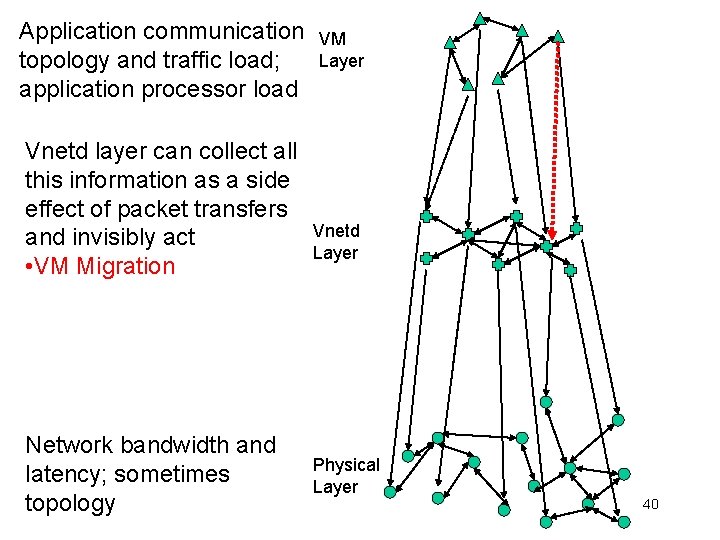


• VM Layer: application communication topology and traffic load; application processor load
• Vnetd Layer: can collect all this information as a side effect of packet transfers and invisibly act
  – VM Migration
  – Topology change
  – Routing change
  – Reservation
• Physical Layer: network bandwidth and latency; sometimes topology

Application Traffic Load Measurement and Topology Inference (With Gupta) • Parallel and distributed applications display particular communication patterns on particular topologies – Intensity of communication can also vary from node to node or time to time. – Combined representation: Traffic Load Matrix • VNET already sees every packet sent or received by a VM • Can we use this information to compute a global traffic load matrix? • Can we eliminate irrelevant communication from matrix to get at application topology?

Overall Steps • Low level inter-VM traffic monitoring within VNET • Compute rows and columns of traffic matrix for local VMs • Reduction to a global inter-VM traffic load matrix • Matrix denoising to determine application topology • Offline to online

![Traffic Monitoring and Reduction](http://slidetodoc.com/presentation_image_h2/08b039215cc1ec4c1f6c77d763268c0e/image-47.jpg)

Traffic Monitoring and Reduction
• Ethernet packet format: SRC|DEST|TYPE|DATA (size)
• VMTrafficMatrix[SRC][DEST] += size
• Each VM on the host contributes a row and column to the VM traffic matrix
• Packets observed here (diagram labels: VM, “eth0”, “Host Only” Network, vmnet0, VNET, Host, ethz)
• Global reduction to find overall matrix, broadcast back to VNETs
• Each VNET daemon has a view of the global network load
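The accumulation and reduction steps above can be sketched as follows. This is only an illustration under stated assumptions: the function names are hypothetical, the matrix is a dictionary keyed by (source, destination), and each daemon is assumed to count only frames sent by its local VMs so that the global sum does not count a frame twice.

```python
from collections import defaultdict


def observe(matrix, src, dest, size):
    """Account for one Ethernet frame: add its size to the (src, dest) entry."""
    matrix[(src, dest)] += size


def reduce_global(local_matrices):
    """Sum the partial matrices of all daemons into one global matrix."""
    total = defaultdict(int)
    for local in local_matrices:
        for pair, size in local.items():
            total[pair] += size
    return total


# Two hypothetical daemons, each recording frames sent by its own VMs.
daemon_a = defaultdict(int)
daemon_b = defaultdict(int)
observe(daemon_a, "vm1", "vm2", 1500)
observe(daemon_a, "vm1", "vm2", 500)
observe(daemon_b, "vm2", "vm1", 64)

global_matrix = reduce_global([daemon_a, daemon_b])
```

After the reduction, the result would be broadcast back so that every daemon holds the same global view; the broadcast itself is not modeled here.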

Denoising The Matrix • Throw away irrelevant communication – ARPs, DNS, ssh, etc. • Find maximum entry, a • Eliminate all entries below alpha*a • Very simple, but seems to work very well for BSP parallel applications • Remains to be seen how general it is
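A minimal sketch of the thresholding step, assuming the matrix is a dictionary keyed by (source, destination) and that protocol-level filtering (ARP, DNS, ssh) has already happened. The function name and the example value of alpha are illustrative choices, not taken from the talk.

```python
def denoise(matrix, alpha):
    """Keep only entries that are at least alpha times the maximum entry."""
    if not matrix:
        return {}
    a = max(matrix.values())  # the maximum entry, called a in the slide
    return {pair: size for pair, size in matrix.items() if size >= alpha * a}


# Hypothetical traffic in MB: two heavy application flows and one stray flow.
traffic = {("h1", "h2"): 100.0, ("h2", "h1"): 90.0, ("h1", "h3"): 3.0}
topology = denoise(traffic, alpha=0.1)
```

With alpha = 0.1 the cutoff is 10 MB, so the 3 MB entry is dropped and the two heavy flows remain as the inferred application topology.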

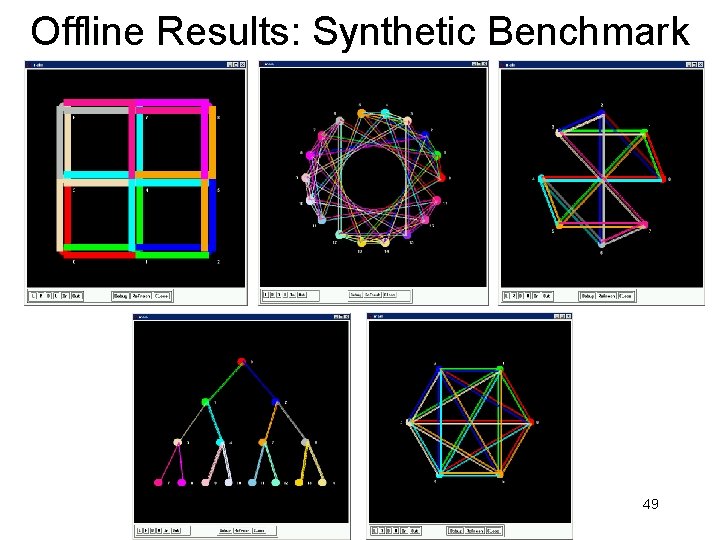

Offline Results: Synthetic Benchmark

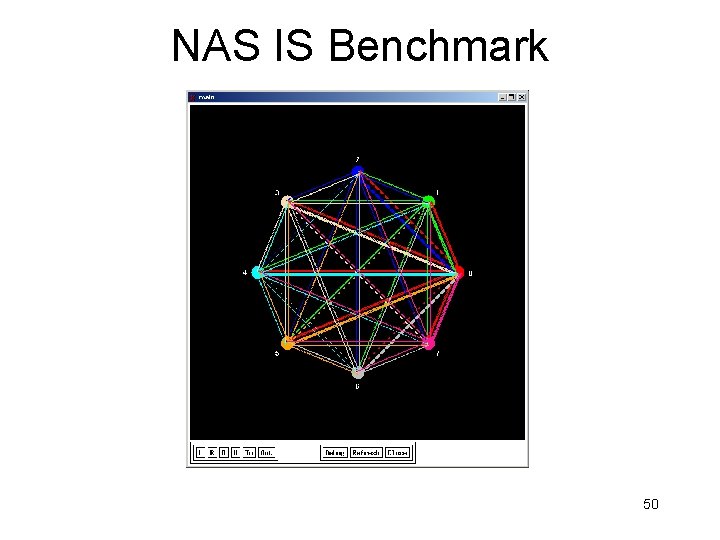

NAS IS Benchmark

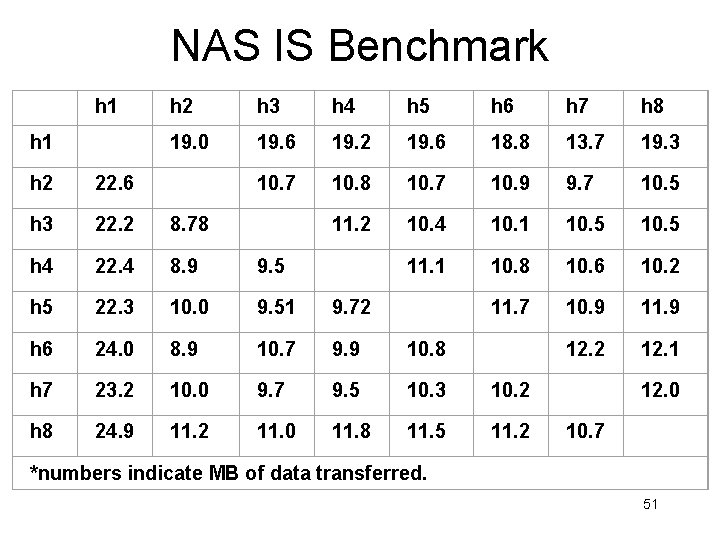

NAS IS Benchmark (numbers indicate MB of data transferred)
h1 h2 h3 h4 h5 h6 h7 h8
19.0 19.6 19.2 19.6 18.8 13.7 19.3 10.7 10.8 10.7 10.9 9.7 10.5 11.2 10.4 10.1 10.5 11.1 10.8 10.6 10.2 11.7 10.9 11.9 12.2 12.1
h2 22.6
h3 22.2 8.78
h4 22.4 8.9 9.5
h5 22.3 10.0 9.51 9.72
h6 24.0 8.9 10.7 9.9 10.8
h7 23.2 10.0 9.7 9.5 10.3 10.2
h8 24.9 11.2 11.0 11.8 11.5 11.2 12.0 10.7

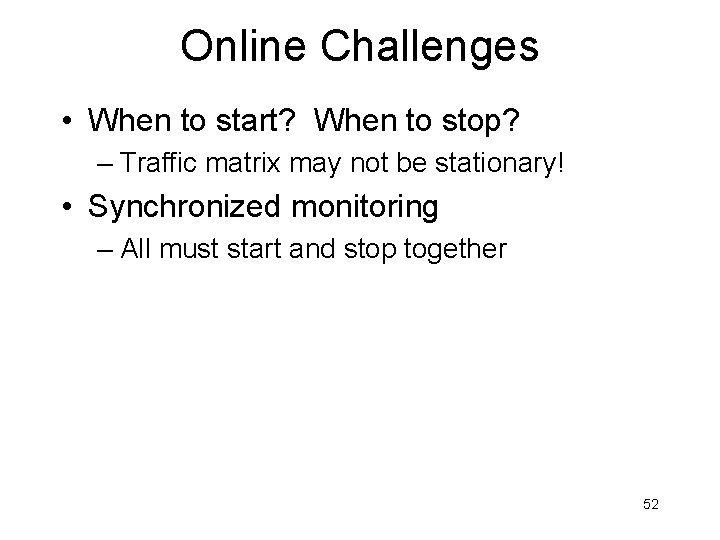

Online Challenges • When to start? When to stop? – Traffic matrix may not be stationary! • Synchronized monitoring – All must start and stop together

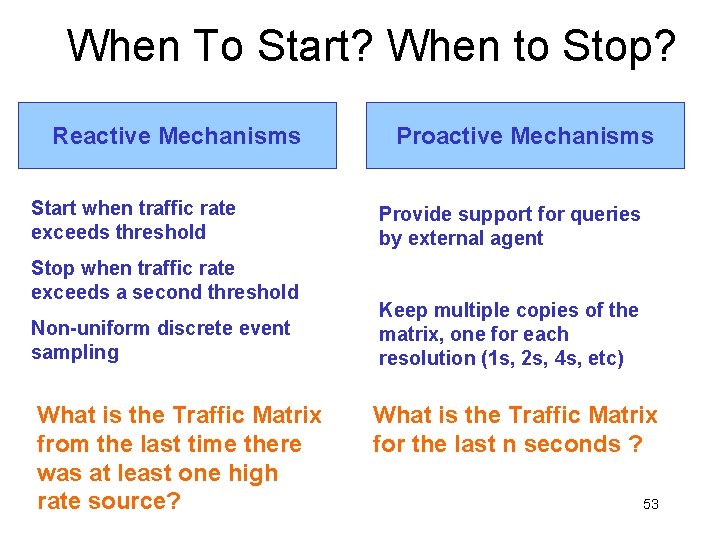

When To Start? When to Stop?
Reactive Mechanisms
• Start when traffic rate exceeds threshold
• Stop when traffic rate falls below a second threshold
• Non-uniform discrete event sampling
• What is the Traffic Matrix from the last time there was at least one high rate source?
Proactive Mechanisms
• Provide support for queries by external agent
• Keep multiple copies of the matrix, one for each resolution (1 s, 2 s, 4 s, etc.)
• What is the Traffic Matrix for the last n seconds?
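One way to picture the proactive mechanism is the sketch below. It is a simplification under stated assumptions: instead of literally storing one matrix copy per resolution, it keeps one small matrix per elapsed second and sums the most recent slots on demand, and it only answers queries for the configured resolutions. The class and method names are hypothetical.

```python
from collections import Counter, deque


class MultiResolutionMatrix:
    """Answers 'traffic matrix for the last n seconds' for fixed resolutions."""

    def __init__(self, resolutions=(1, 2, 4, 8)):
        self.resolutions = resolutions
        # One Counter per closed one-second slot; old slots fall off the left.
        self.slots = deque(maxlen=max(resolutions))
        self.current = Counter()

    def observe(self, src, dest, size):
        self.current[(src, dest)] += size

    def tick(self):
        """Close the current one-second slot and start a new one."""
        self.slots.append(self.current)
        self.current = Counter()

    def query(self, seconds):
        """Sum the last `seconds` closed slots into one matrix."""
        if seconds not in self.resolutions:
            raise ValueError(f"unsupported resolution: {seconds}")
        total = Counter()
        for slot in list(self.slots)[-seconds:]:
            total.update(slot)  # Counter.update adds counts
        return total
```

An external agent would call `query(4)` to ask for the last four seconds; a production version would more likely maintain the per-resolution sums incrementally rather than recompute them.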

Overheads (100 Mbit LAN) • Essentially zero latency impact • 4.2% throughput reduction versus VNET • A. Gupta, P. Dinda, Inferring the Topology and Traffic Load of Parallel Programs Running In a Virtual Machine Environment, In Submission.

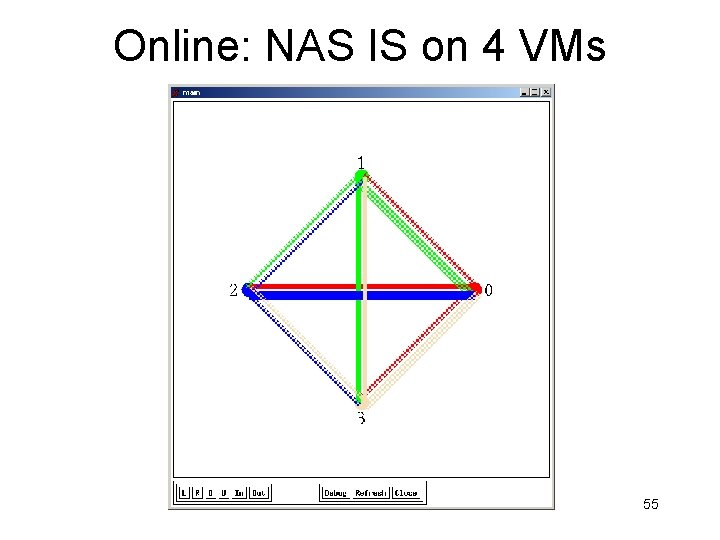

Online: NAS IS on 4 VMs

Why Understand User Comfort With Resource Borrowing? (With Gupta, Lin) • Provider supports both interactive and batch VMs • Provider controls resources – WFQ (Ensim) – Priority (our nascent work) – Periodic real-time schedule (our plans) • How to use control to provide good interactive performance cheaply?

Why Understand User Comfort With Resource Borrowing? • Interactive user specifies peak resource demand for his VM • What level of resource borrowing is he willing to tolerate? • Similar question in SETI@Home style distributed parallel computing

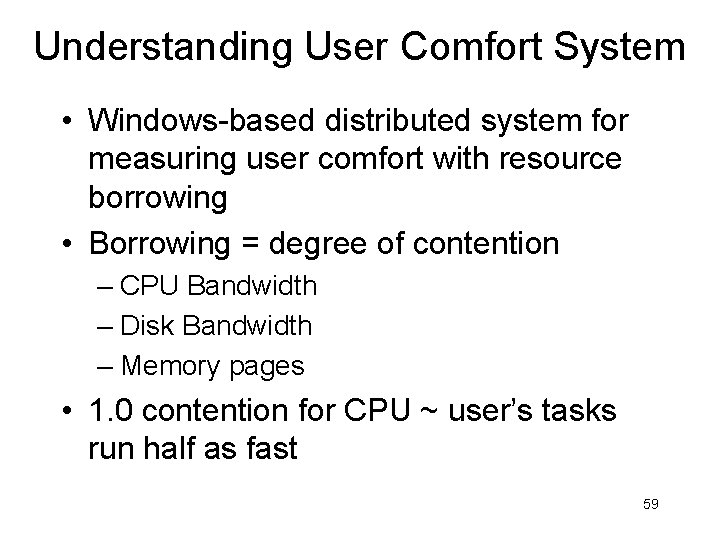

Understanding User Comfort System • Windows-based distributed system for measuring user comfort with resource borrowing • Borrowing = degree of contention – CPU Bandwidth – Disk Bandwidth – Memory pages • 1.0 contention for CPU ~ user’s tasks run half as fast
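The CPU example can be read as a simple fair-share model. The sketch below assumes, as an interpretation of the single data point on the slide, that contention c behaves like c additional equal-priority compute-bound tasks, so the user's task gets a 1/(1+c) share. The function name is hypothetical.

```python
def relative_speed(contention):
    """Fraction of full speed left to the user's task under CPU contention.

    Assumes contention c acts like c extra equal-priority busy tasks,
    giving the user's task a 1 / (1 + c) share of the CPU.
    """
    if contention < 0:
        raise ValueError("contention must be non-negative")
    return 1.0 / (1.0 + contention)
```

Under this model a contention of 1.0 gives a relative speed of 0.5, matching the slide, and a contention of 0.0 leaves the task at full speed.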

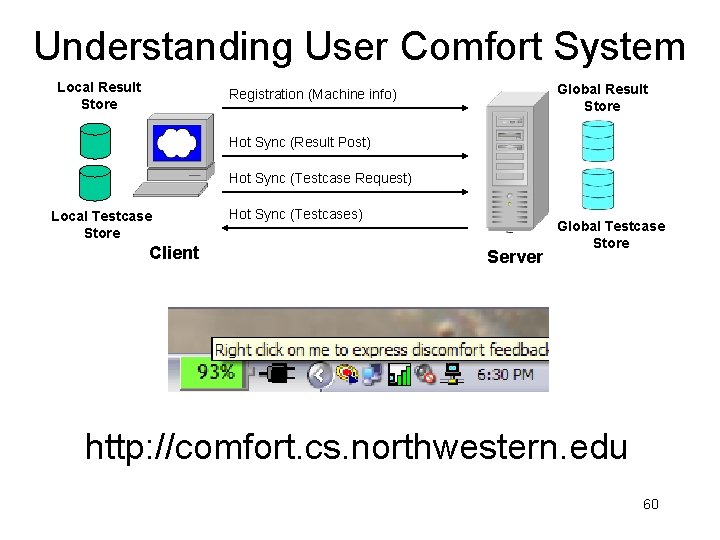

Understanding User Comfort System
• Client: Local Result Store, Local Testcase Store
• Server: Global Result Store, Global Testcase Store
• Messages: Registration (Machine info), Hot Sync (Result Post), Hot Sync (Testcase Request), Hot Sync (Testcases)
• http://comfort.cs.northwestern.edu

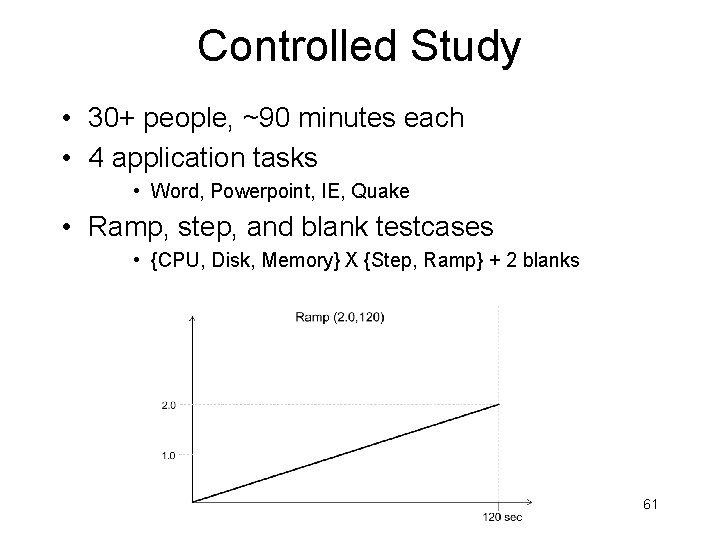

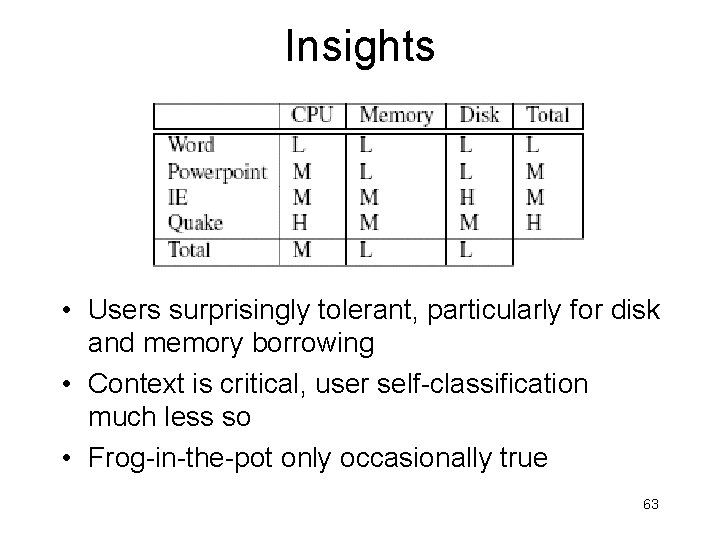

Controlled Study • 30+ people, ~90 minutes each • 4 application tasks – Word, PowerPoint, IE, Quake • Ramp, step, and blank testcases – {CPU, Disk, Memory} × {Step, Ramp} + 2 blanks
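The testcase set described above works out to eight cases: three resources times two shapes, plus two blanks. A small sketch that enumerates them; the naming and ordering are illustrative assumptions, since the slide does not say how cases were labeled or scheduled.

```python
from itertools import product

RESOURCES = ("CPU", "Disk", "Memory")
SHAPES = ("Step", "Ramp")


def testcases():
    """Enumerate {CPU, Disk, Memory} x {Step, Ramp} plus two blank cases."""
    cases = [f"{resource} {shape}" for resource, shape in product(RESOURCES, SHAPES)]
    cases += ["Blank", "Blank"]
    return cases
```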

A. Gupta, B. Lin, P. Dinda, Measuring and Understanding User Comfort with Resource Borrowing, HPDC 2004.

Insights • Users surprisingly tolerant, particularly for disk and memory borrowing • Context is critical, user self-classification much less so • Frog-in-the-pot only occasionally true

Using User Feedback Directly • Discomfort feedback as congestion indication a la TCP Reno • Rate => VM Priority • Adaptive gain control for congestion avoidance phase – Target: maintain stable time between feedback events • Somewhat promising, but very initial results • B. Lin, P. Dinda, D. Lu, User-driven Scheduling Of Interactive Virtual Machines, In Submission.
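The TCP Reno analogy can be illustrated with an additive-increase, multiplicative-decrease loop. This is a sketch of the analogy only, not the authors' scheduler: the class name, the parameter values, and the choice to halve on feedback are assumptions, and neither the mapping from rate to VM priority nor the adaptive gain control is modeled.

```python
class FeedbackController:
    """AIMD-style control of a borrowing rate driven by discomfort feedback."""

    def __init__(self, rate=1.0, step=1.0, backoff=0.5, floor=0.0):
        self.rate = rate        # current borrowing rate (arbitrary units)
        self.step = step        # additive increase per quiet interval
        self.backoff = backoff  # multiplicative decrease on discomfort
        self.floor = floor      # rate never drops below this value

    def tick(self):
        """No feedback this interval: probe for more by adding a fixed step."""
        self.rate += self.step
        return self.rate

    def discomfort(self):
        """User signaled discomfort: back off multiplicatively, like a loss."""
        self.rate = max(self.floor, self.rate * self.backoff)
        return self.rate
```

An adaptive gain in the spirit of the slide would adjust `step` so that the time between discomfort events stays roughly stable; that part is left out here.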

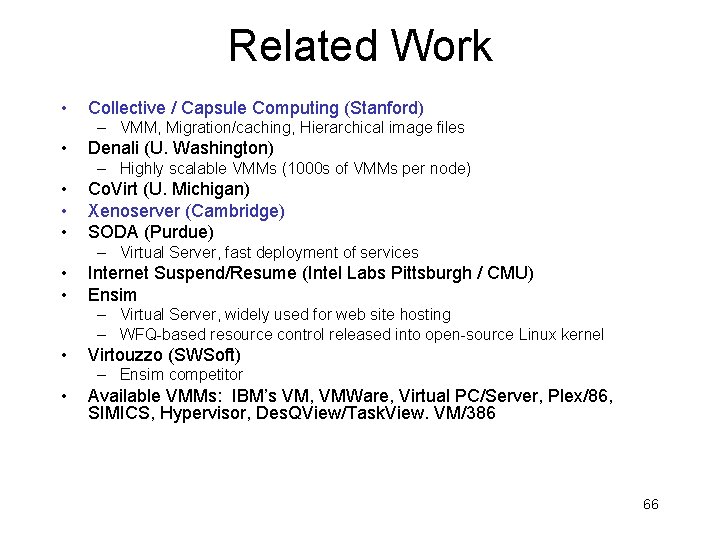

Related Work
• Collective / Capsule Computing (Stanford)
  – VMM, Migration/caching, Hierarchical image files
• Denali (U. Washington)
  – Highly scalable VMMs (1000s of VMMs per node)
• CoVirt (U. Michigan)
• Xenoserver (Cambridge)
• SODA (Purdue)
  – Virtual Server, fast deployment of services
• Internet Suspend/Resume (Intel Labs Pittsburgh / CMU)
• Ensim
  – Virtual Server, widely used for web site hosting
  – WFQ-based resource control released into open-source Linux kernel
• Virtuozzo (SWSoft)
  – Ensim competitor
• Available VMMs: IBM’s VM, VMWare, Virtual PC/Server, Plex/86, SIMICS, Hypervisor, DesQView/TaskView, VM/386

Conclusions and Status • Virtual machines on virtual networks as the abstraction for distributed computing • Virtual network as a fundamental layer for measurement and adaptation • Virtuoso prototype running on our cluster • 1st generation VNET released. 2nd generation in progress, versioning file system released

For More Information • Prescience Lab – http://plab.cs.northwestern.edu • Virtuoso – http://virtuoso.cs.northwestern.edu • Join our user comfort study! – http://comfort.cs.northwestern.edu

Papers
• R. Figueiredo, P. Dinda, J. Fortes, A Case For Grid Computing on Virtual Machines, ICDCS 2003.
• A. Gupta, B. Lin, P. Dinda, Measuring and Understanding User Comfort with Resource Borrowing, HPDC 2004.
• A. Sundararaj, P. Dinda, Towards Virtual Networks for Virtual Machine Grid Computing, USENIX VM 2004.
• B. Cornell, P. Dinda, F. Bustamante, Wayback: A User-level Versioning File System For Linux, USENIX 2004.
• A. Sundararaj, P. Dinda, Exploring Inference-based Monitoring of Virtual Machine Resources, In Submission.
• A. Gupta, P. Dinda, Inferring the Topology and Traffic Load of Parallel Programs Running In a Virtual Machine Environment, In Submission.
• B. Lin, P. Dinda, D. Lu, User-driven Scheduling of Interactive Virtual Machines, In Submission.

Migration (With Shoykhet)

Resource Control • Owner has an interest in controlling how much and when compute time is given to a virtual machine • Our approach: A language for expressing these constraints, and compilation to real-time schedules, proportional share, etc. • Very early stages. Trying to avoid kernel modifications.

Front Page

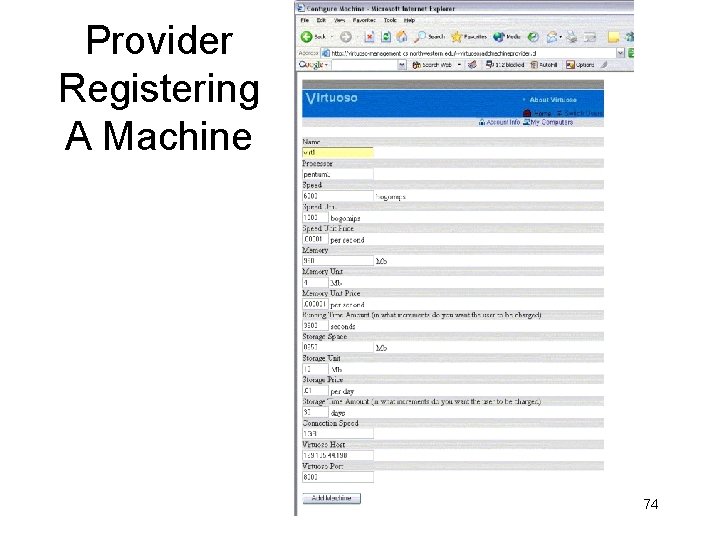

Provider Registering A Machine

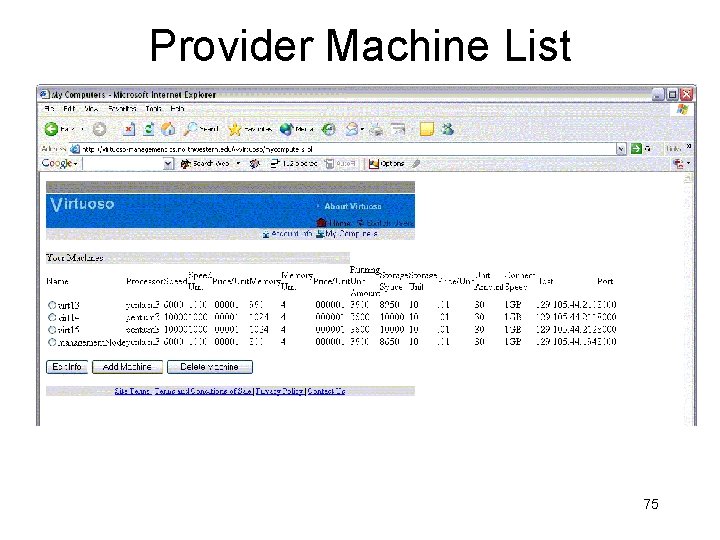

Provider Machine List

User Configuring a New VM

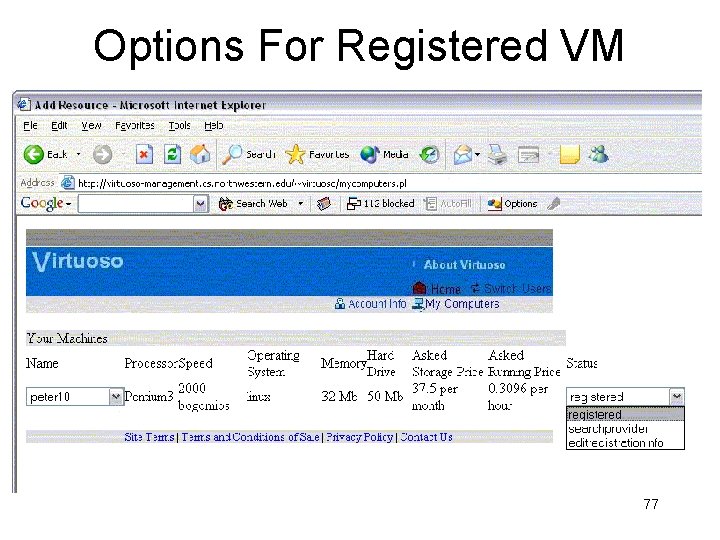

Options For Registered VM

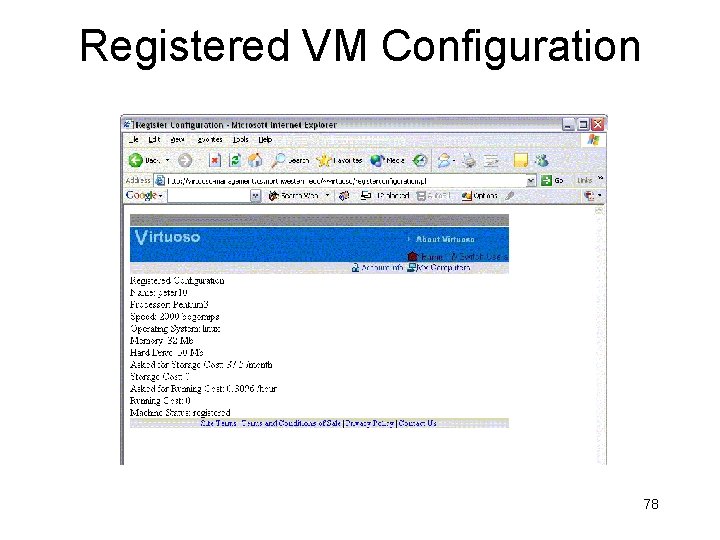

Registered VM Configuration

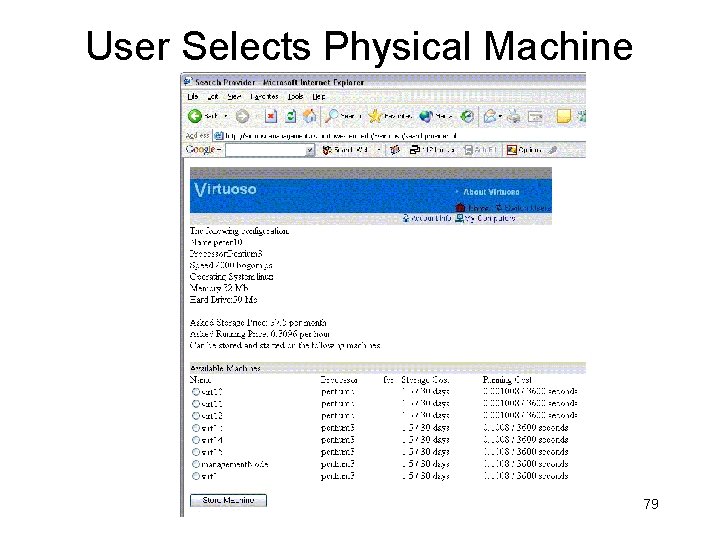

User Selects Physical Machine

VM Running with Browser Console Display

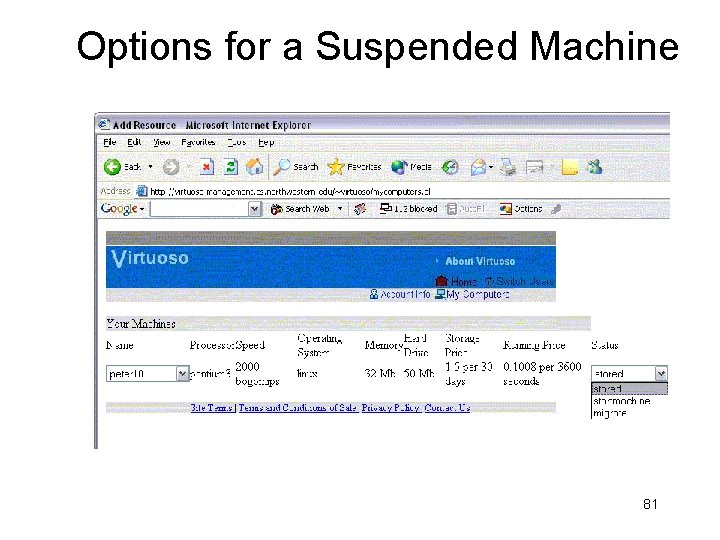

Options for a Suspended Machine

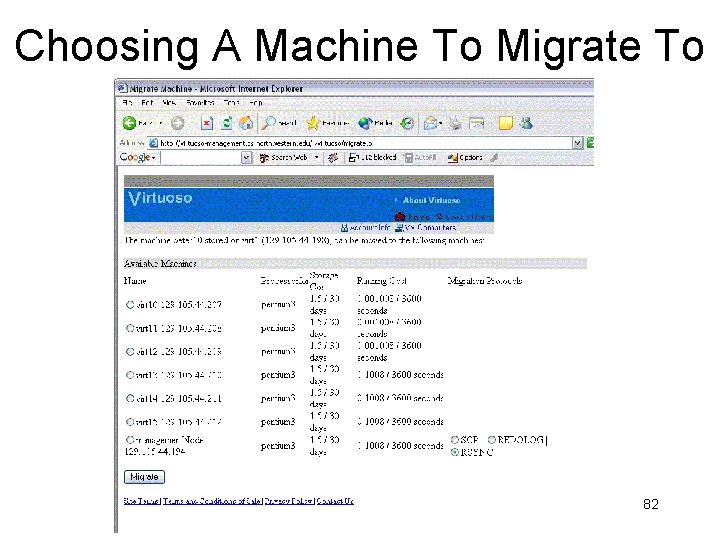

Choosing A Machine To Migrate To

Specifics of This Talk (projects: Virtualized Audio, Virtuoso, User Comfort, RTSA/Maestro, Clairvoyance, URGIS) • Virtuoso Overview • Virtual Networking • Application Traffic Characterization and Topology Inference • Understanding User Comfort With Resource Borrowing – User-centric Resource Control
