Virtual Memory Management CS3013 Operating Systems Aterm 2009

- Slides: 60

Virtual Memory Management CS-3013, Operating Systems A-term 2009 (Slides include materials from Modern Operating Systems, 3 rd ed. , by Andrew Tanenbaum and from Operating System Concepts, 7 th ed. , by Silbershatz, Galvin, & Gagne) CS-3013 A-term 2009 Virtual Memory Management 1

Useful terms • Thrashing • Too many page faults per unit time • Results from insufficient physical memory to support the working set • System spends most of its time swapping pages in and out, rather than executing process • Working set • The set of pages needed to keep a process from thrashing • Caching • The art and science of keeping the most active elements in fast storage for better execution speed • Depends upon locality of references CS-3013 A-term 2009 Virtual Memory Management 5

Outline for • • the f g o n i y h d c u this topic ca st f e o v i t e ta pl i i t c n n a i A qu ental pr am d n u f Performance metrics Swap-in strategies Page replacement strategies Miscellaneous topics – More on segmentation – Kernel memory allocation – VM summary: Linux & Windows CS-3013 A-term 2009 Virtual Memory Management 6

Demand Paging Performance • Page Fault Rate (p) 0 < p < 1. 0 (measured in average number of faults / reference) • Page Fault Overhead = fault service time + read page time + restart process time • Fault service time ~ 0. 1– 10 sec • Restart process time ~ 0. 1– 100 sec • Read page time ~ 8 -20 milliseconds! • Dominated by time to read page in from disk! CS-3013 A-term 2009 Virtual Memory Management 7

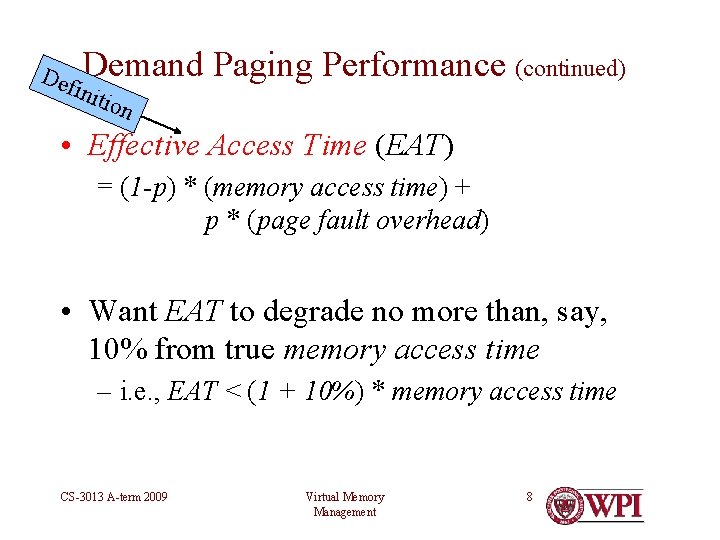

Def Demand Paging Performance (continued) init i on • Effective Access Time (EAT) = (1 -p) * (memory access time) + p * (page fault overhead) • Want EAT to degrade no more than, say, 10% from true memory access time – i. e. , EAT < (1 + 10%) * memory access time CS-3013 A-term 2009 Virtual Memory Management 8

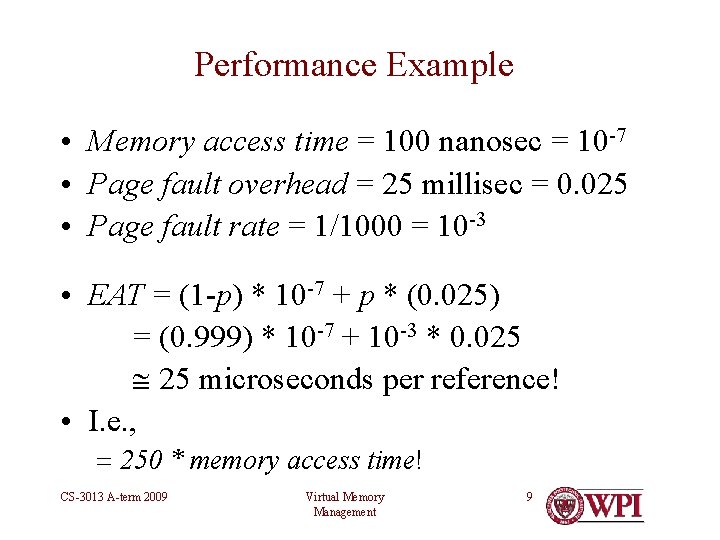

Performance Example • Memory access time = 100 nanosec = 10 -7 • Page fault overhead = 25 millisec = 0. 025 • Page fault rate = 1/1000 = 10 -3 • EAT = (1 -p) * 10 -7 + p * (0. 025) = (0. 999) * 10 -7 + 10 -3 * 0. 025 25 microseconds per reference! • I. e. , = 250 * memory access time! CS-3013 A-term 2009 Virtual Memory Management 9

Performance Example (continued) • Goal: achieve less than 10% degradation (1 -p) * 10 -7 + p * (0. 025) < 1. 1 * 10 -7 i. e. , p < (0. 1 * 10 -7) / (0. 025 - 10 -7) 0. 0000004 • I. e. , 1 fault in 2, 500, 000 accesses! CS-3013 A-term 2009 Virtual Memory Management 10

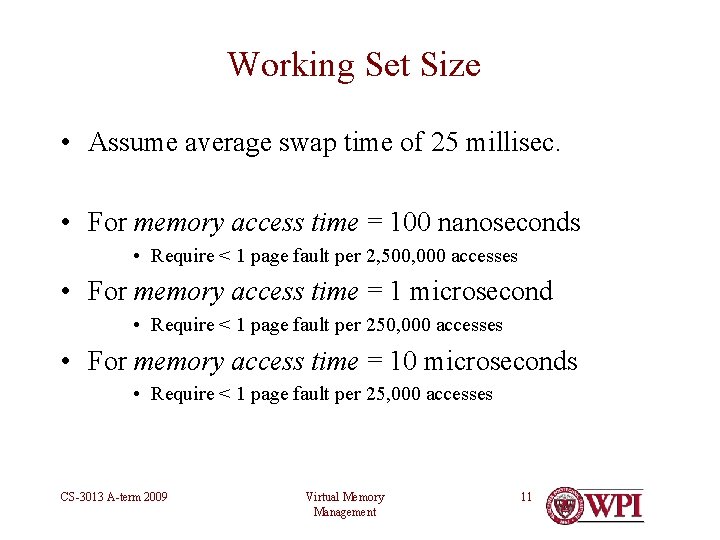

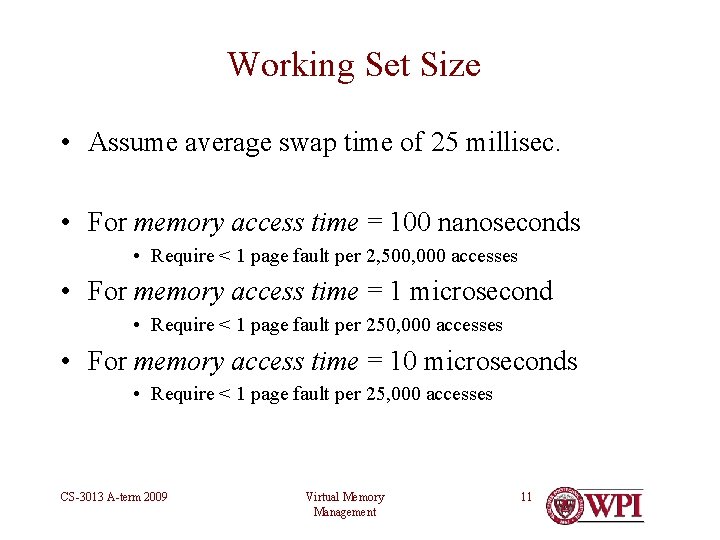

Working Set Size • Assume average swap time of 25 millisec. • For memory access time = 100 nanoseconds • Require < 1 page fault per 2, 500, 000 accesses • For memory access time = 1 microsecond • Require < 1 page fault per 250, 000 accesses • For memory access time = 10 microseconds • Require < 1 page fault per 25, 000 accesses CS-3013 A-term 2009 Virtual Memory Management 11

Object Lesson • Working sets must get larger in proportion to memory speed! • Disk speed ~ constant (nearly) • I. e. , faster computers need larger physical memories to exploit the speed! CS-3013 A-term 2009 Virtual Memory Management 12

Class Discussion 1. What is first thing to do when the PC you bought last year becomes too slow? 2. What else might help? 3. Can we do the same analysis on TLB performance? CS-3013 A-term 2009 Virtual Memory Management 13

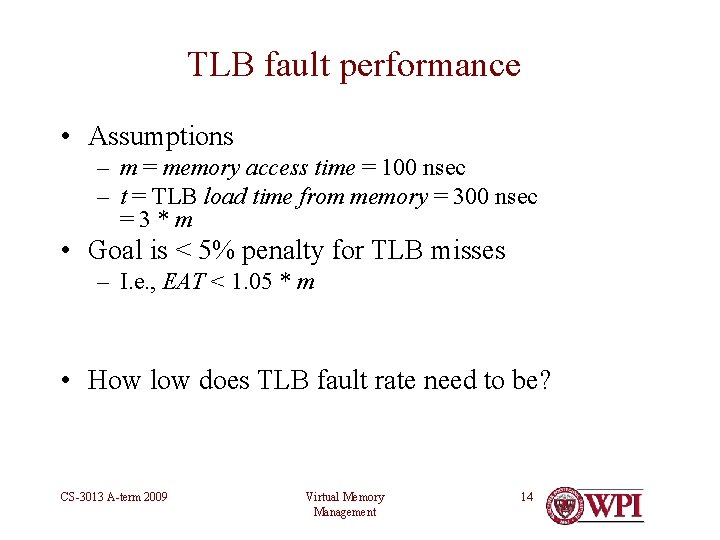

TLB fault performance • Assumptions – m = memory access time = 100 nsec – t = TLB load time from memory = 300 nsec =3*m • Goal is < 5% penalty for TLB misses – I. e. , EAT < 1. 05 * m • How low does TLB fault rate need to be? CS-3013 A-term 2009 Virtual Memory Management 14

TLB fault performance • Assumptions – m = memory access time = 100 nsec – t = TLB load time from memory = 300 nsec =3*m • Goal is < 5% penalty for TLB misses – I. e. , EAT < 1. 05 * m • EAT = (1 -p) * m + p * t < 1. 05 *m < (0. 05 * m) / (t – m) = 0. 05 * m / 2 * m = 0. 025 • I. e. , TLB fault rate should be < 1 per 40 accesses! CS-3013 A-term 2009 Virtual Memory Management 15

TLB fault performance (continued) • Q: How large should TLB be so that TLB faults are not onerous, in these circumstances? • A: Somewhat less than 40 entries • Assuming a reasonable degree of locality! CS-3013 A-term 2009 Virtual Memory Management 16

Summary of this Topic • A quantitative way of estimating how large the cache needs to be to avoid excessive thrashing, where – Cache = Working set in physical memory – Cache = TLB size in hardware • Applicable to all forms of caching CS-3013 A-term 2009 Virtual Memory Management 17

General Observation on Caching • We create caches because • There is not enough fast memory to hold everything we need • Memory that is large enough is too slow • Performance metric for all caches is EAT • Effective Access Time • Goal is to make overall performance close to cache memory performance • By taking advantage of locality — temporal and spatial • By burying a small number of accesses to slow memory under many, many accesses to fast memory CS-3013 A-term 2009 Virtual Memory Management 18

Next topic CS-3013 A-term 2009 Virtual Memory Management 19

s u io i p to c ev r p Caching issues F m • When to put something in the cache o r • What to throw out to create cache space for new items • How to keep cached item and stored item in sync after one or the other is updated • How to keep multiple caches in sync across processors or machines • Size of cache needed to be effective • Size of cache items for efficiency • … CS-3013 A-term 2009 Virtual Memory Management 20

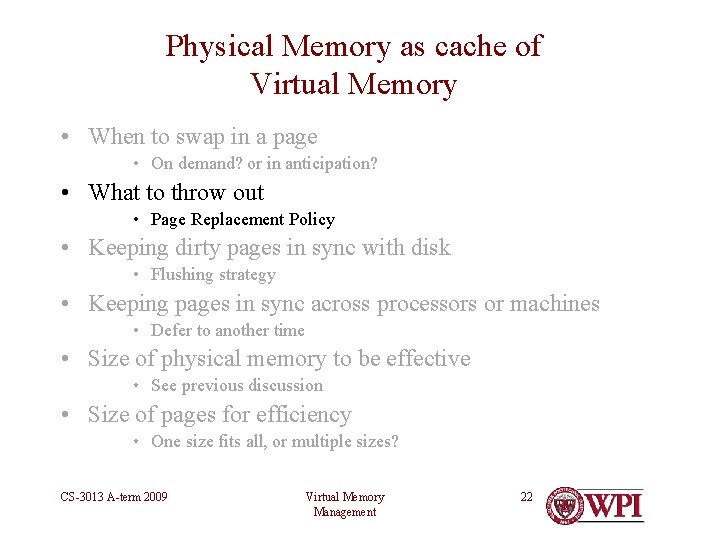

Physical Memory is a Cache of Virtual Memory, so … • When to swap in a page • On demand? or in anticipation? • What to throw out • Page Replacement Policy • Keeping dirty pages in sync with disk • Flushing strategy • Keeping pages in sync across processors or machines • Defer to another time • Size of physical memory to be effective • See previous discussion • Size of pages for efficiency • One size fits all, or multiple sizes? CS-3013 A-term 2009 Virtual Memory Management 21

Physical Memory as cache of Virtual Memory • When to swap in a page • On demand? or in anticipation? • What to throw out • Page Replacement Policy • Keeping dirty pages in sync with disk • Flushing strategy • Keeping pages in sync across processors or machines • Defer to another time • Size of physical memory to be effective • See previous discussion • Size of pages for efficiency • One size fits all, or multiple sizes? CS-3013 A-term 2009 Virtual Memory Management 22

VM Page Replacement • If there is an unused frame, use it. • If there are no unused frames available, select a victim (according to policy) and – If it contains a dirty page (M == 1) • write it to disk – – Invalidate its PTE and TLB entry Load in new page from disk (or create new page) Update the PTE and TLB entry! Restart the faulting instruction • What is cost of replacing a page? • How does the OS select the page to be evicted? CS-3013 A-term 2009 Virtual Memory Management 23

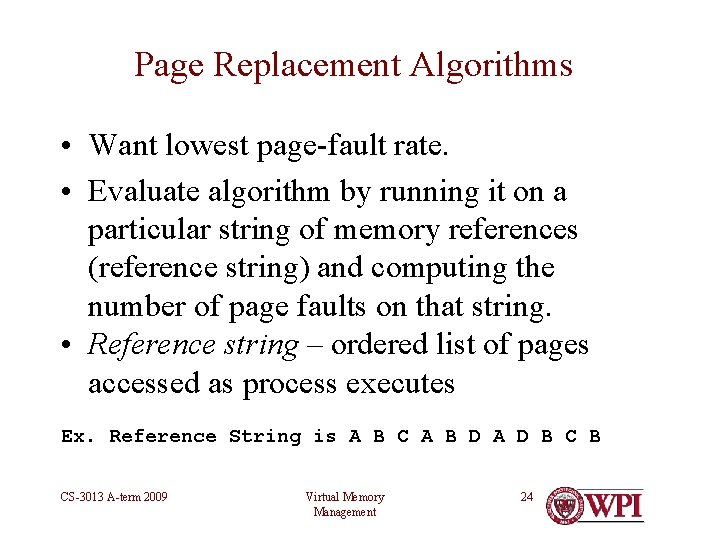

Page Replacement Algorithms • Want lowest page-fault rate. • Evaluate algorithm by running it on a particular string of memory references (reference string) and computing the number of page faults on that string. • Reference string – ordered list of pages accessed as process executes Ex. Reference String is A B C A B D A D B CS-3013 A-term 2009 Virtual Memory Management 24

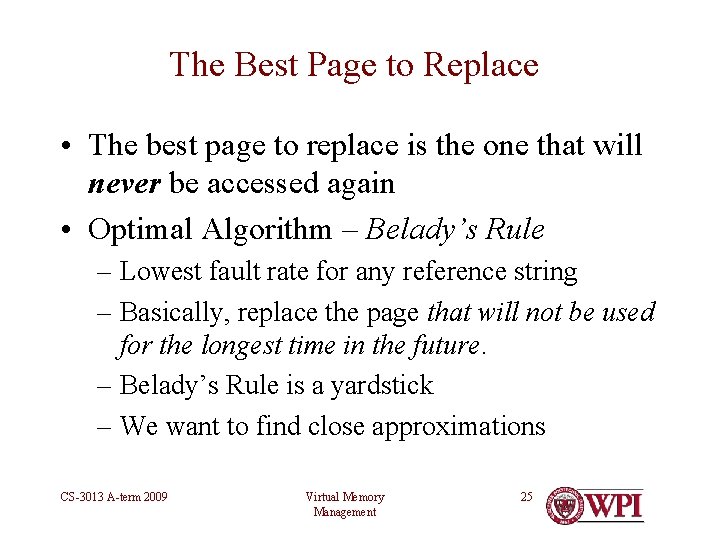

The Best Page to Replace • The best page to replace is the one that will never be accessed again • Optimal Algorithm – Belady’s Rule – Lowest fault rate for any reference string – Basically, replace the page that will not be used for the longest time in the future. – Belady’s Rule is a yardstick – We want to find close approximations CS-3013 A-term 2009 Virtual Memory Management 25

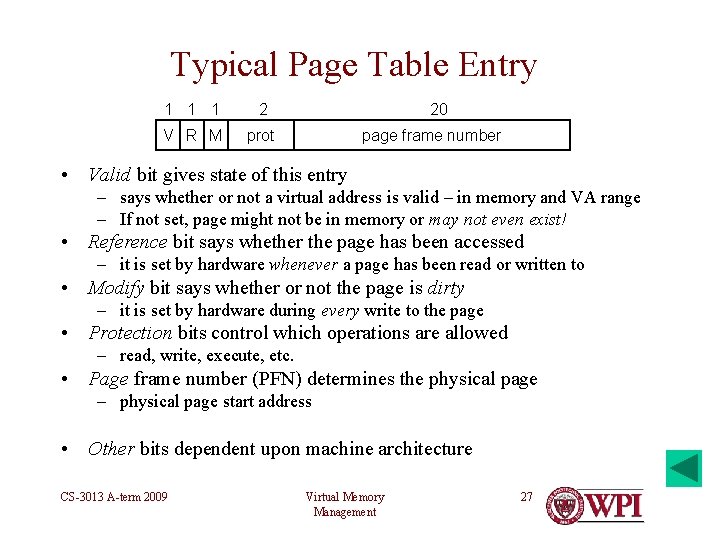

Page Replacement – NRU (Not Recently Used) • Periodically (e. g. , on a clock interrupt) • • When needed, rank order pages as follows 1. 2. 3. 4. • R = 0, M = 0 R = 0, M = 1 R = 1, M = 0 R = 1, M = 1 Evict a page at random from lowest non-empty class • • Clear R bit from all PTE’s Write out if M = 1; clear M when written Characteristics • • CS-3013 A-term 2009 Easy to understand implement Not optimal, but adequate in some cases Virtual Memory Management 26

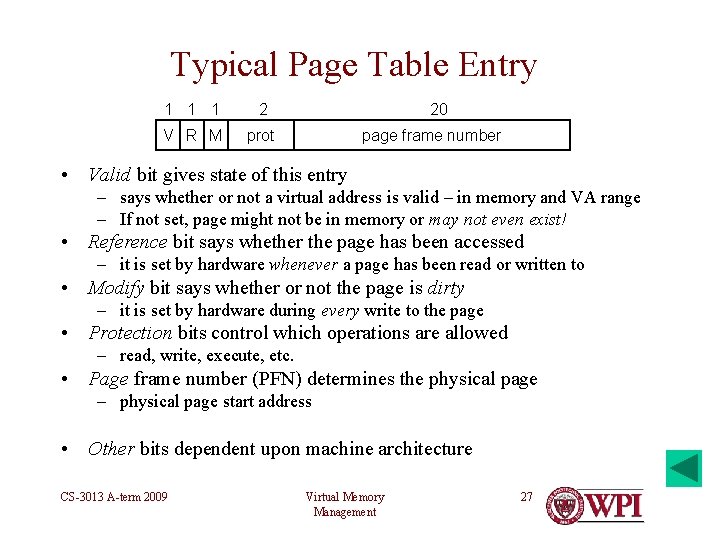

Typical Page Table Entry 1 1 1 2 V R M prot 20 page frame number • Valid bit gives state of this entry – says whether or not a virtual address is valid – in memory and VA range – If not set, page might not be in memory or may not even exist! • Reference bit says whether the page has been accessed – it is set by hardware whenever a page has been read or written to • Modify bit says whether or not the page is dirty – it is set by hardware during every write to the page • Protection bits control which operations are allowed – read, write, execute, etc. • Page frame number (PFN) determines the physical page – physical page start address • Other bits dependent upon machine architecture CS-3013 A-term 2009 Virtual Memory Management 27

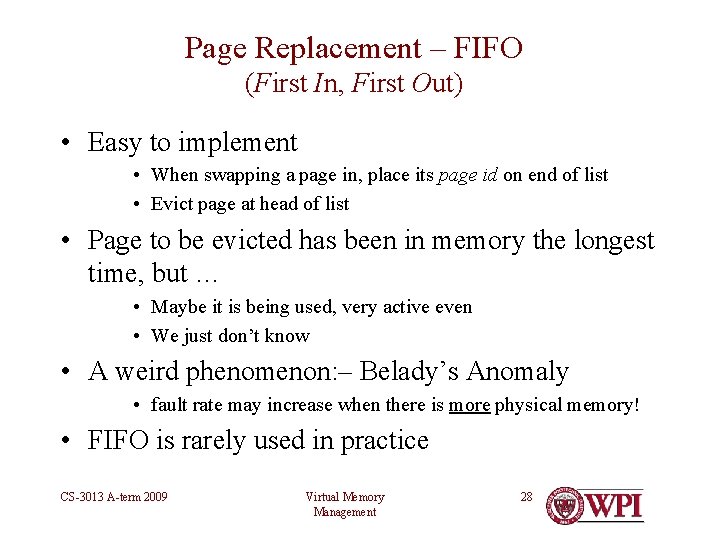

Page Replacement – FIFO (First In, First Out) • Easy to implement • When swapping a page in, place its page id on end of list • Evict page at head of list • Page to be evicted has been in memory the longest time, but … • Maybe it is being used, very active even • We just don’t know • A weird phenomenon: – Belady’s Anomaly • fault rate may increase when there is more physical memory! • FIFO is rarely used in practice CS-3013 A-term 2009 Virtual Memory Management 28

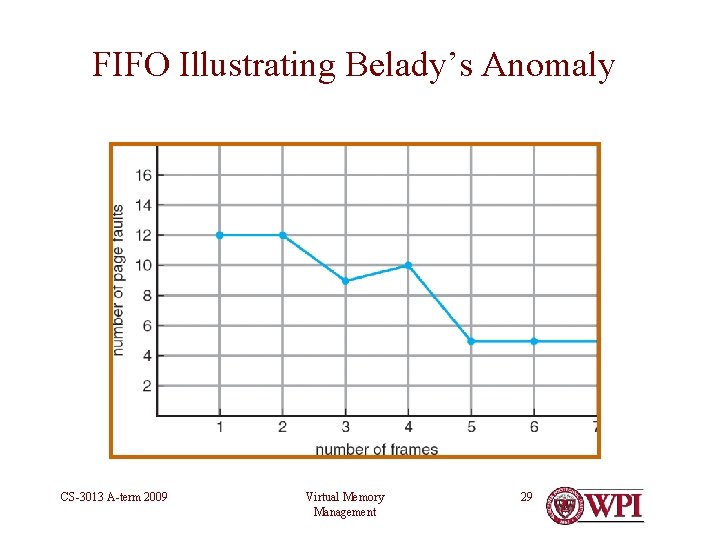

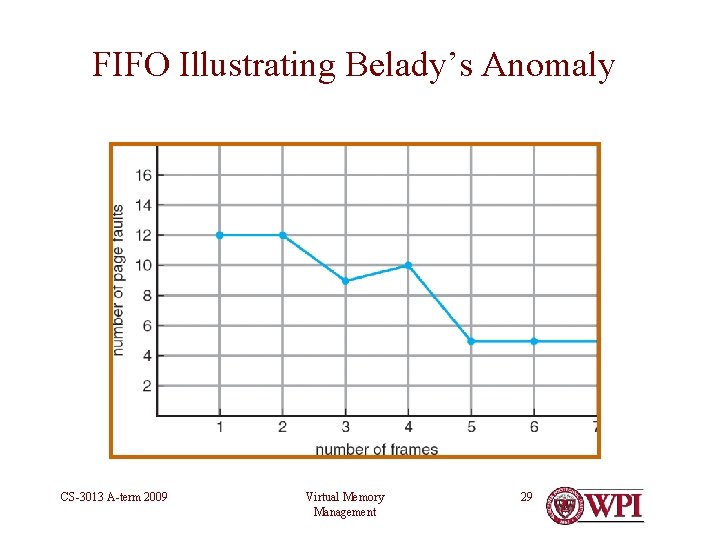

FIFO Illustrating Belady’s Anomaly CS-3013 A-term 2009 Virtual Memory Management 29

Second Chance • Maintain FIFO page list • When a page frame is needed, check reference bit of top page in list • If R == 1 then move page to end of list and clear R, repeat • If R == 0 then evict page • I. e. , a page has to move to top of list at least twice • I. e. , once after the last time R-bit was cleared • Disadvantage • Moves pages around on list a lot (bookkeeping overhead) CS-3013 A-term 2009 Virtual Memory Management 30

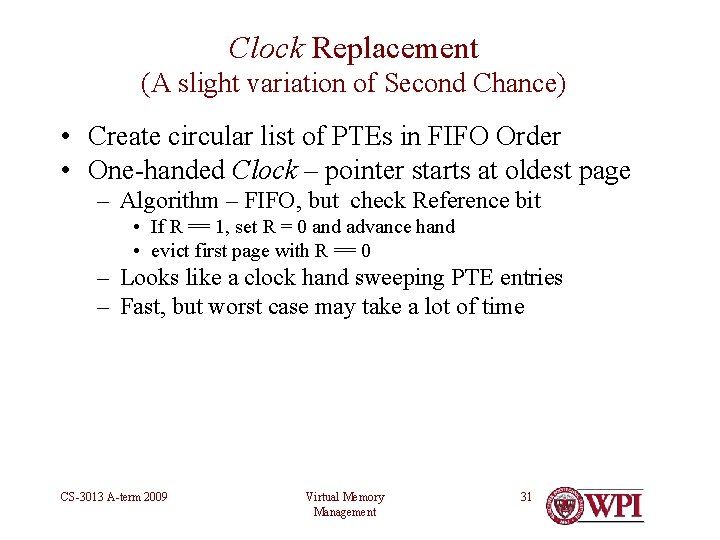

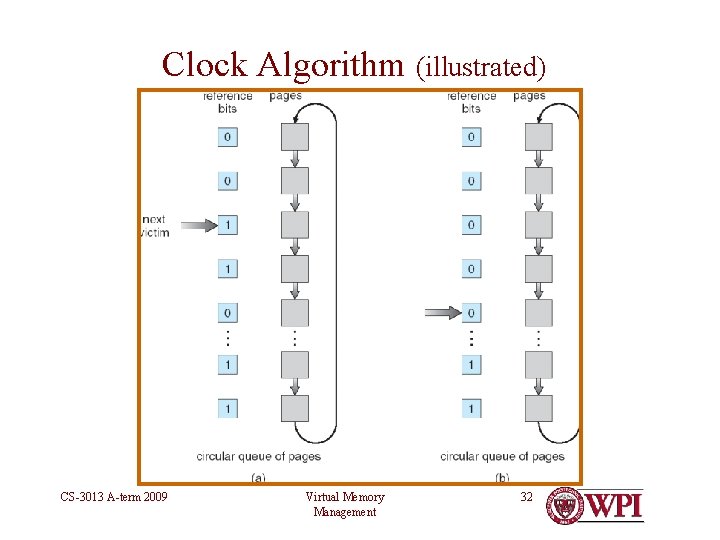

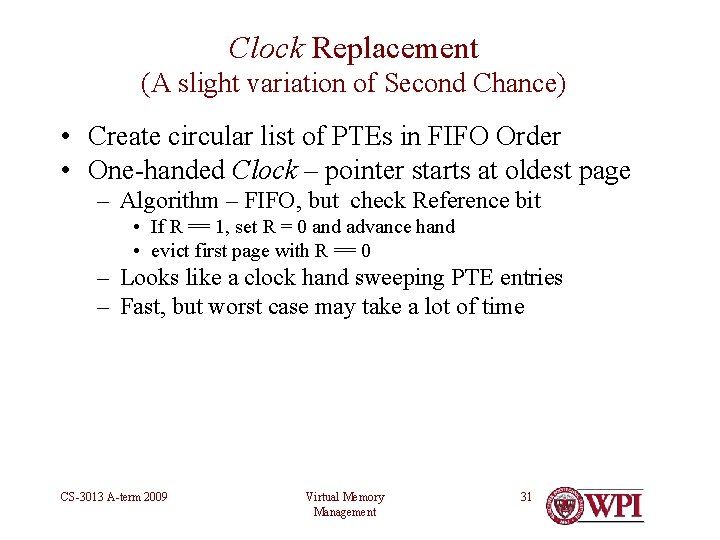

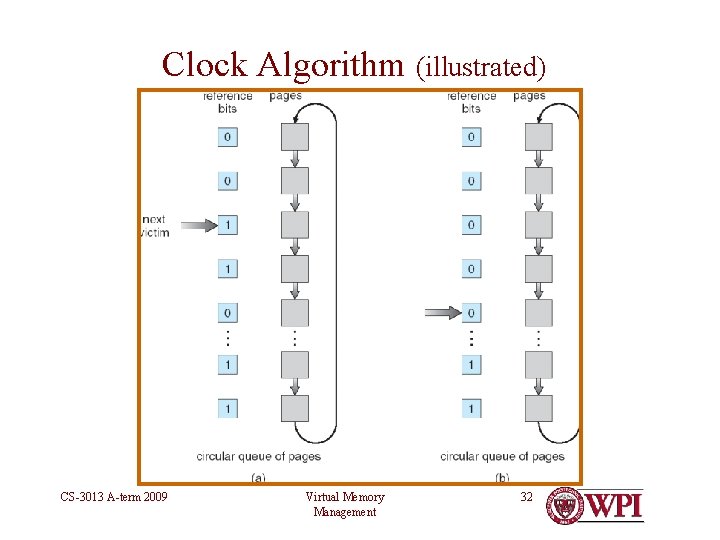

Clock Replacement (A slight variation of Second Chance) • Create circular list of PTEs in FIFO Order • One-handed Clock – pointer starts at oldest page – Algorithm – FIFO, but check Reference bit • If R == 1, set R = 0 and advance hand • evict first page with R == 0 – Looks like a clock hand sweeping PTE entries – Fast, but worst case may take a lot of time CS-3013 A-term 2009 Virtual Memory Management 31

Clock Algorithm (illustrated) CS-3013 A-term 2009 Virtual Memory Management 32

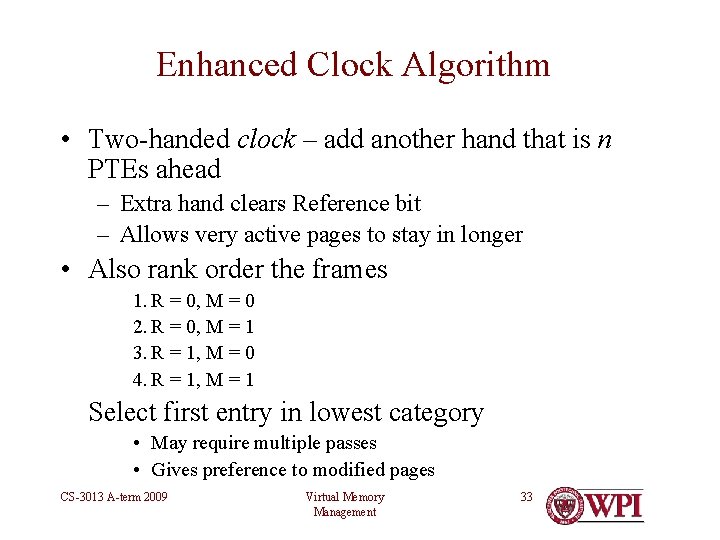

Enhanced Clock Algorithm • Two-handed clock – add another hand that is n PTEs ahead – Extra hand clears Reference bit – Allows very active pages to stay in longer • Also rank order the frames 1. R = 0, M = 0 2. R = 0, M = 1 3. R = 1, M = 0 4. R = 1, M = 1 Select first entry in lowest category • May require multiple passes • Gives preference to modified pages CS-3013 A-term 2009 Virtual Memory Management 33

Least Recently Used (LRU) • Replace the page that has not been used for the longest time 3 Page Frames CS-3013 A-term 2009 On like the a ssu ly Reference String t. A o b Bm. Cp A B D A D B C e n tion eed ed that i aga t is LRU – 5 faults in soo least n A B C A B D A D B C Virtual Memory Management 34

LRU • Past experience may indicate future behavior • Perfect LRU requires some form of timestamp to be associated with a PTE on every memory reference !!! • Counter implementation – Every page entry has a counter; each time page is referenced through this entry, copy the clock into the counter. – When a page needs to be changed, look at the counters to determine which to select • Stack implementation – keep a stack of page numbers in a double link form: – Page referenced: move it to the top – No search for replacement CS-3013 A-term 2009 Virtual Memory Management 35

LRU Approximations • Aging – Keep a counter for each PTE – Periodically (clock interrupt) – check R-bit • If R = 0 increment counter (page has not been used) • If R = 1 clear the counter (page has been used) • Clear R = 0 – Counter contains # of intervals since last access – Replace page having largest counter value • Alternatives – §§ 3. 4. 6 -3. 4. 7 in Tanenbaum CS-3013 A-term 2009 Virtual Memory Management 36

When to Evict Pages (Cleaning Policy) , I. e. a el n r ke • An OS process called the paging daemon – wakes periodically to inspect pool of frames – if insufficient # of free frames • Mark pages for eviction according to policy, set valid bit to zero • Schedule disk to write dirty pages – on page fault • If desired page is marked but still in memory, use it • Otherwise, replace first clean marked page in pool • Advantage • Writing out dirty pages is not in critical path to swapping in CS-3013 A-term 2009 Virtual Memory Management 37 d a e thr

Physical Memory as cache of Virtual Memory • When to swap in a page • On demand? or in anticipation? • What to throw out • Page Replacement Policy • Keeping dirty pages in sync with disk • Flushing strategy • Keeping pages in sync across processors or machines • Defer to another time • Size of physical memory to be effective • See previous discussion • Size of pages for efficiency • One size fits all, or multiple sizes? CS-3013 A-term 2009 Virtual Memory Management 38

What to Page in • Demand paging brings in the faulting page – To bring in more pages, we need to know the future • Users don’t really know the future, but a few OSs have user-controlled pre-fetching • In real systems, – load the initial page – Start running – Some systems (e. g. Win. NT & Win. XP) will bring in additional neighboring pages (clustering) • Alternatively – Figure out working set from previous activity – Page in entire working set of a swapped out process CS-3013 A-term 2009 Virtual Memory Management 39

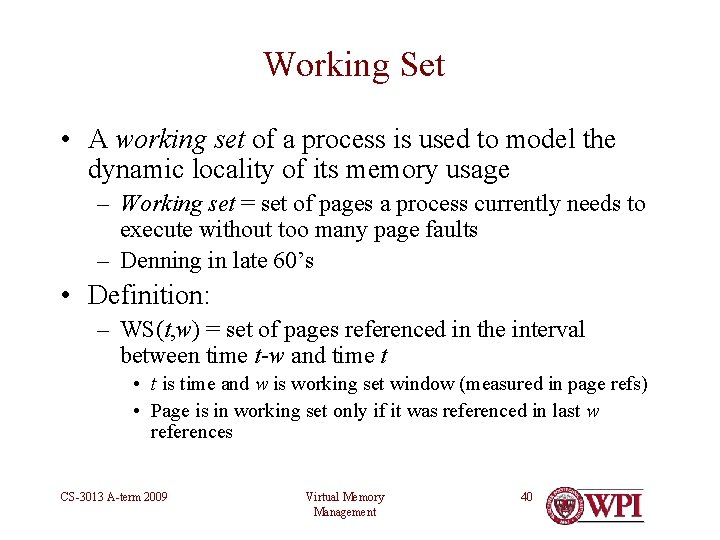

Working Set • A working set of a process is used to model the dynamic locality of its memory usage – Working set = set of pages a process currently needs to execute without too many page faults – Denning in late 60’s • Definition: – WS(t, w) = set of pages referenced in the interval between time t-w and time t • t is time and w is working set window (measured in page refs) • Page is in working set only if it was referenced in last w references CS-3013 A-term 2009 Virtual Memory Management 40

Working Set • w working-set window a fixed number of page references Example: 10, 000 – 2, 000 instructions • WSi (working set of Process Pi) = set of pages referenced in the most recent w (varies in time) – if w too small will not encompass entire locality. – if w too large will encompass several localities. – as w , encompasses entire program. CS-3013 A-term 2009 Virtual Memory Management 41

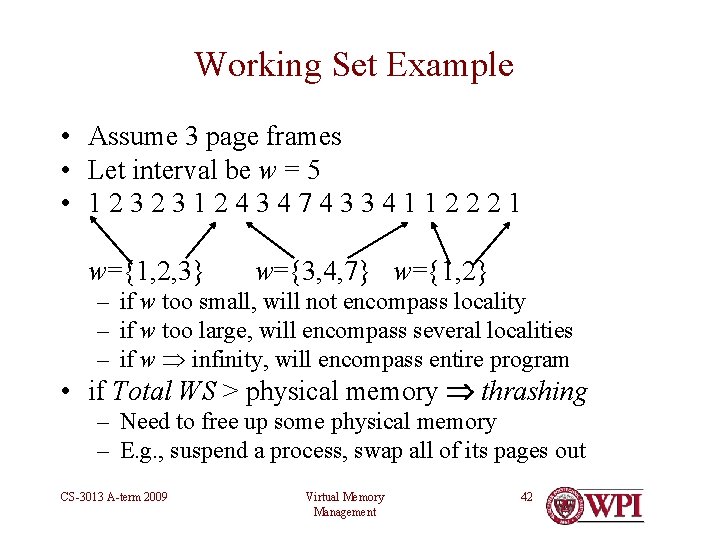

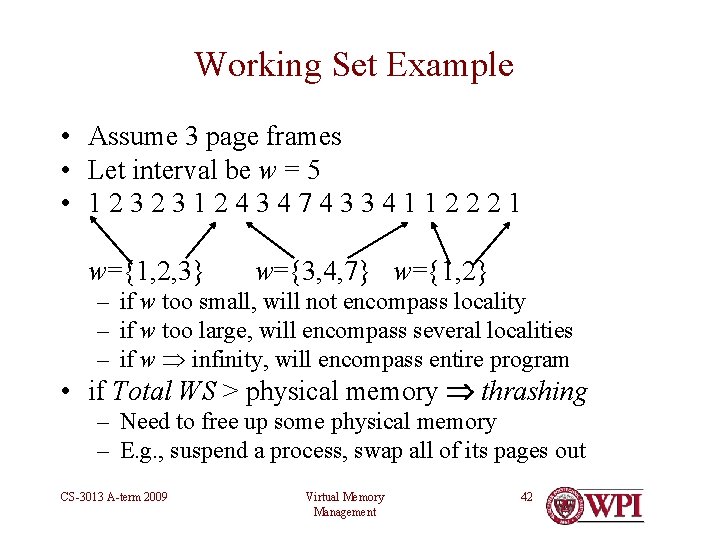

Working Set Example • Assume 3 page frames • Let interval be w = 5 • 123231243474334112221 w={1, 2, 3} w={3, 4, 7} w={1, 2} – if w too small, will not encompass locality – if w too large, will encompass several localities – if w infinity, will encompass entire program • if Total WS > physical memory thrashing – Need to free up some physical memory – E. g. , suspend a process, swap all of its pages out CS-3013 A-term 2009 Virtual Memory Management 42

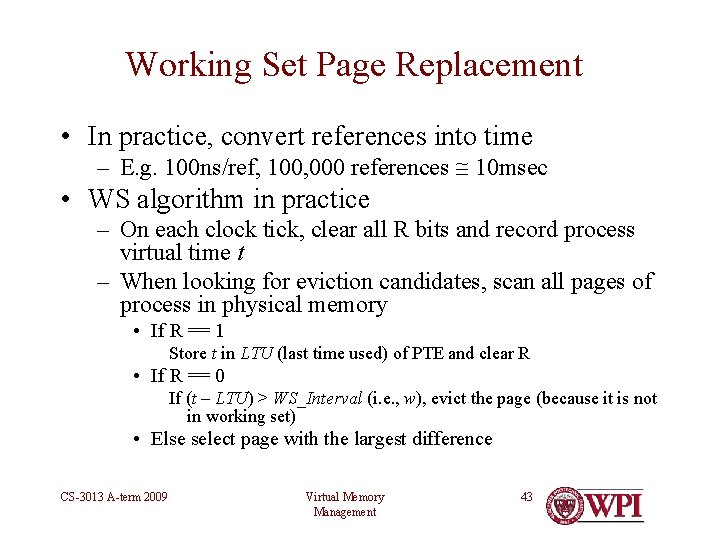

Working Set Page Replacement • In practice, convert references into time – E. g. 100 ns/ref, 100, 000 references 10 msec • WS algorithm in practice – On each clock tick, clear all R bits and record process virtual time t – When looking for eviction candidates, scan all pages of process in physical memory • If R == 1 Store t in LTU (last time used) of PTE and clear R • If R == 0 If (t – LTU) > WS_Interval (i. e. , w), evict the page (because it is not in working set) • Else select page with the largest difference CS-3013 A-term 2009 Virtual Memory Management 43

Working Set Page Replacement , m bau n e n a T • In practice, convert references into time See § 3. 4. 8 – E. g. 100 ns/ref, 100, 000 references 10 msec • WS algorithm in practice – On each clock tick, clear all R bits and record process virtual time t – When looking for eviction candidates, scan all pages of process in physical memory • If R == 1 Store t in LTU (last time used) of PTE and clear R • If R == 0 If (t – LTU) > WS_Interval (i. e. , w), evict the page (because it is not in working set) • Else select page with the largest difference CS-3013 A-term 2009 Virtual Memory Management 44

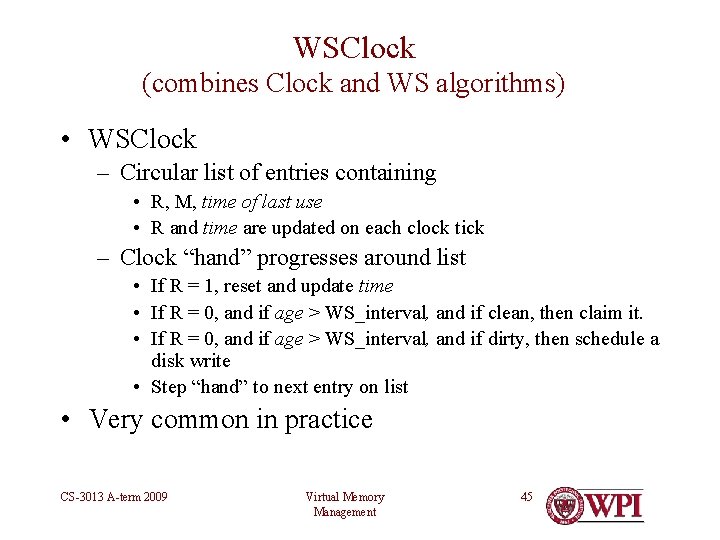

WSClock (combines Clock and WS algorithms) • WSClock – Circular list of entries containing • R, M, time of last use • R and time are updated on each clock tick – Clock “hand” progresses around list • If R = 1, reset and update time • If R = 0, and if age > WS_interval, and if clean, then claim it. • If R = 0, and if age > WS_interval, and if dirty, then schedule a disk write • Step “hand” to next entry on list • Very common in practice CS-3013 A-term 2009 Virtual Memory Management 45

WSClock (combines Clock and WS algorithms) , m bau n e n a. 9 T 4. e § 3 Se • WSClock – Circular list of entries containing • R, M, time of last use • R and time are updated on each clock tick – Clock “hand” progresses around list • If R = 1, reset and update time • If R = 0, and if age > WS_interval, and if clean, then claim it. • If R = 0, and if age > WS_interval, and if dirty, then schedule a disk write • Step “hand” to next entry on list • Very common in practice CS-3013 A-term 2009 Virtual Memory Management 46

Review of Page Replacement Algorithms Tanenbaum, Fig 3 -22 CS-3013 A-term 2009 Virtual Memory Management 47

Virtual Memory Subsystem • All about managing the page cache in RAM of virtual memory … • … which lives primarily on disk • See also Chapter 15 of Linux Kernel Development, by Robert Love CS-3013 A-term 2009 Virtual Memory Management 48

More on Segmentation • Paging is (mostly) invisible to programmer, but segmentation is not • Even paging with two-level page tables is invisible • Segment: an open-ended piece of VM • Multics (H 6000): 218 segments of 64 K words each • Pentium: 16 K segments of 230 bytes each – 8 K global segments, plus 8 K local segments per process – Each segment may be paged or not – Each segment assigned to one of four protection levels • Program consciously loads segment descriptors when accessing a new segment • Only OS/2 used full power of Pentium segments • Linux concatenates 3 segments to simulate contiguous VM CS-3013 A-term 2009 Virtual Memory Management 49

OS Design Issue — Where does Kernel execute? • In physical memory • Old systems (e. g. , IBM 360/67) • Extra effort needed to look inside of VM of any process • In virtual memory • Most modern systems • Shared segment among all processes • Advantages of kernel in virtual memory • Easy to access, transfer to/from VM of any process • No context switch needed for traps, page faults • No context switch needed for purely kernel interrupts CS-3013 A-term 2009 Virtual Memory Management 50

Kernel Memory Requirements • Interrupt handlers • Must be pinned into physical memory • At locations known to hardware De • Critical kernel code to finiti bei on • Pinned, never swapped out ng : Pi sw nne • I/O buffers (user and kernel) app d ed – no • Must be pinned and in contiguous physical memory ou t! t sub jec s file objects, semaphores, etc. ) • Kernel data (e. g. , ic. PCB’s, e t v e • Pinned einr dphysical g!memory n i h g ot e paallocated d • a. Dynamically & freed n niz g /O • Not I o multiples of page size; fragmentation is an issue c – e : r n so on’t a e d R CS-3013 A-term 2009 Virtual Memory Management 51

Definition • Pinned: not subject to being swapped or paged out. – i. e. , one or more contiguous pages of virtual memory that are stored in specific, identifiable, contiguous page frames in physical memory CS-3013 A-term 2009 Virtual Memory Management 52

Kernel Memory Allocation • E. g. , Linux PCB (struct task_struct ) • > 1. 7 Kbytes each • Created on every fork and every thread create – clone() • deleted on every exit • Kernel memory allocators • Buddy system • Slab allocation CS-3013 A-term 2009 Virtual Memory Management 53

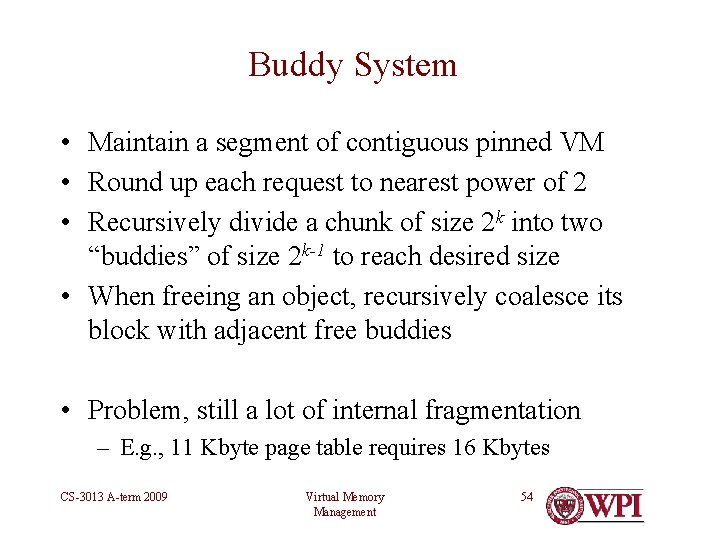

Buddy System • Maintain a segment of contiguous pinned VM • Round up each request to nearest power of 2 • Recursively divide a chunk of size 2 k into two “buddies” of size 2 k-1 to reach desired size • When freeing an object, recursively coalesce its block with adjacent free buddies • Problem, still a lot of internal fragmentation – E. g. , 11 Kbyte page table requires 16 Kbytes CS-3013 A-term 2009 Virtual Memory Management 54

Buddy System (illustrated) CS-3013 A-term 2009 Virtual Memory Management 55

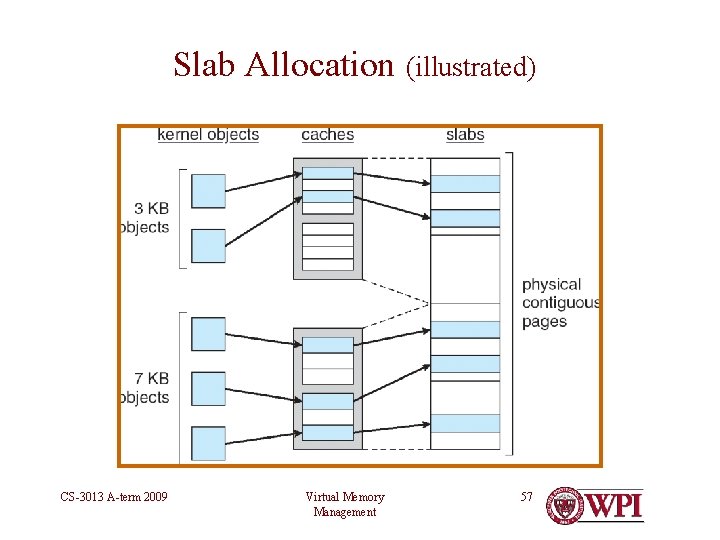

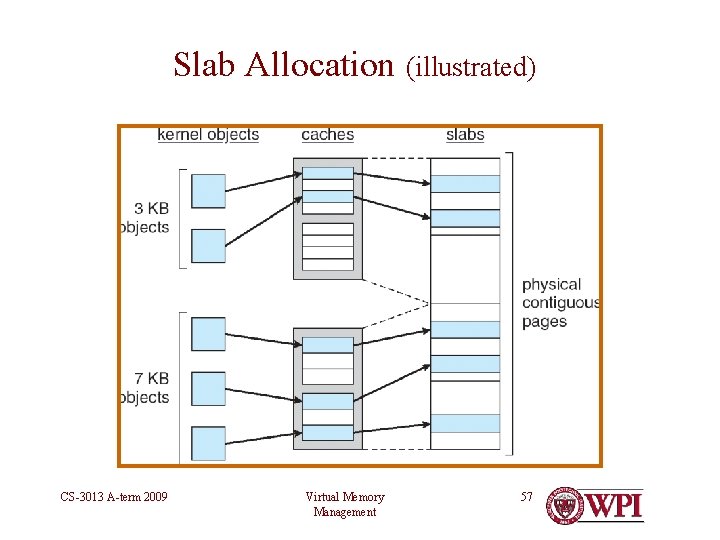

f o e he s u c s Slab Allocation Mi rd ca wo • Maintain a separate “cache” for each major data type • E. g. , task_struct, inode in Linux • Slab: fixed number of contiguous physical pages assigned to one particular “cache” • Upon kernel memory allocation request • Recycle an existing object if possible • Allocate a new one within a slab if possible • Else, create an additional slab for that cache • When finished with an object • Return it to “cache” for recycling • Benefits • Minimize fragmentation of kernel memory • Most kernel memory requests can be satisfied quickly CS-3013 A-term 2009 Virtual Memory Management 56

Slab Allocation (illustrated) CS-3013 A-term 2009 Virtual Memory Management 57

Unix VM • Physical Memory – Core map (pinned) – page frame info – Kernel (pinned) – rest of kernel – Frames – remainder of memory • Page replacement – Page daemon • runs periodically to free up page frames • Global replacement – multiple parameters • Current BSD system uses 2 -handed clock – Swapper – helps page daemon • Look for processes idle 20 sec. or more and swap out longest idle • Next, swap out one of 4 largest – one in memory the longest • Check for processes to swap in CS-3013 A-term 2009 Virtual Memory Management 58

Linux VM • Kernel is pinned • Rest of frames used – Processes – Buffer cache – Page Cache • Multilevel paging – 3 levels – Contiguous slab memory allocation using Buddy Algorithm • Replacement – goal keep a certain number of pages free – Daemon (kswapd) runs once per second • Clock algorithm on page and buffer caches • Clock on unused shared pages • Modified clock (by VA order) on user processes (by # of frames) CS-3013 A-term 2009 Virtual Memory Management 59

Windows NT • Uses demand paging with clustering. Clustering brings in pages surrounding the faulting page. • Processes are assigned working set minimum (20 -50) and working set maximum (45 -345) • Working set minimum is the minimum number of pages the process is guaranteed to have in memory. • A process may be assigned as many pages up to its working set maximum. • When the amount of free memory in the system falls below a threshold, automatic working set trimming is performed to restore the amount of free memory. (Balance set manager) • Working set trimming removes pages from processes that have pages in excess of their working set minimum CS-3013 A-term 2009 Virtual Memory Management 60

VM Summary • Memory Management – from simple multiprogramming support to efficient use of multiple system resources • Models and measurement exist to determine the goodness of an implementation • In real systems, must tradeoff – Implementation complexity – Management overhead – Access time overhead CS-3013 A-term 2009 Virtual Memory Management 61

Reading Assignment • Tanenbaum – §§ 3. 1– 3. 3 (previous topics) • Memory Management • Paging – §§ 3. 4– 3. 6 (this topic) • Page Replacement Algorithms • Design Issues for Paging Systems • Implementation Issues for Paging Systems – § 3. 7 • More on Segmentation CS-3013 A-term 2009 Virtual Memory Management 62

Questions? CS-3013 A-term 2009 Virtual Memory Management 63