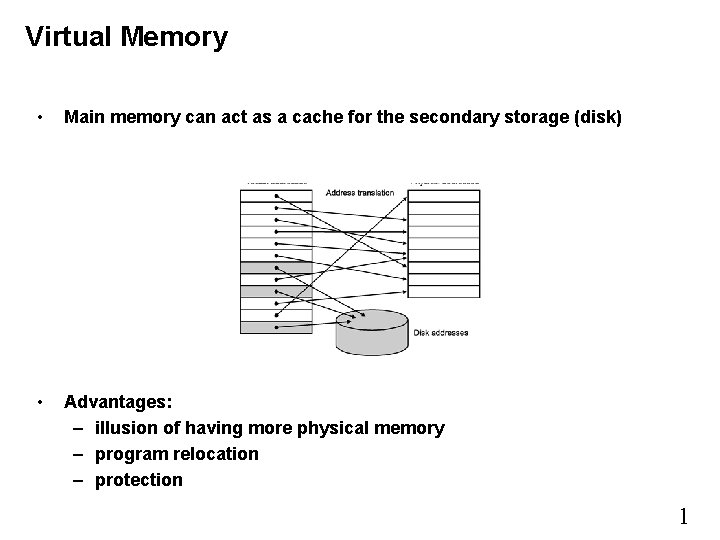

- Slides: 15

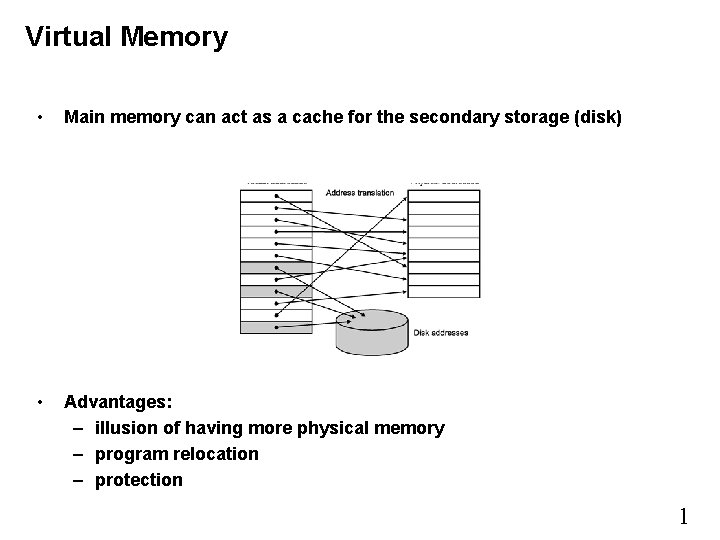

Virtual Memory

- Main memory can act as a cache for the secondary storage (disk).
- Advantages:
  - illusion of having more physical memory
  - program relocation
  - protection

Q1: Where can a page be placed in main memory?
A1: Anywhere, with minor restrictions. This means that the placement is fully associative. Recall that a fully associative cache is the most desirable; however, it is also the most difficult to implement. The ratio of miss time to hit time for a cache is less than 10. For virtual memory it is probably greater than 10,000. Hence, the placement scheme can be implemented by the operating system and can be more complex than a fast hardware placement scheme.

Q2: How is data found?
A2: The virtual address is translated to a physical address.

Q3: Which page should be replaced?
A3: The least recently used (LRU) page.

Q4: What happens on a write?
A4: Write back. Write through is too expensive in time.
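The LRU answer to Q3 can be illustrated with a small simulation. This is a minimal sketch, not how an operating system actually tracks recency (real systems usually approximate LRU). The function name `count_page_faults` and the reference-string format are assumptions made for illustration.

```python
from collections import OrderedDict


def count_page_faults(references, num_frames):
    """Count page faults for a sequence of page numbers under exact LRU."""
    frames = OrderedDict()  # resident pages, least recently used first
    faults = 0
    for page in references:
        if page in frames:
            # Hit: the page becomes the most recently used one.
            frames.move_to_end(page)
        else:
            # Miss: a page fault; evict the LRU page if memory is full.
            faults += 1
            if len(frames) >= num_frames:
                frames.popitem(last=False)
            frames[page] = True
    return faults
```

Calling `count_page_faults([1, 2, 3, 1, 4, 2], 3)` walks a six-reference string through three page frames; giving the function more frames never increases the fault count under LRU.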

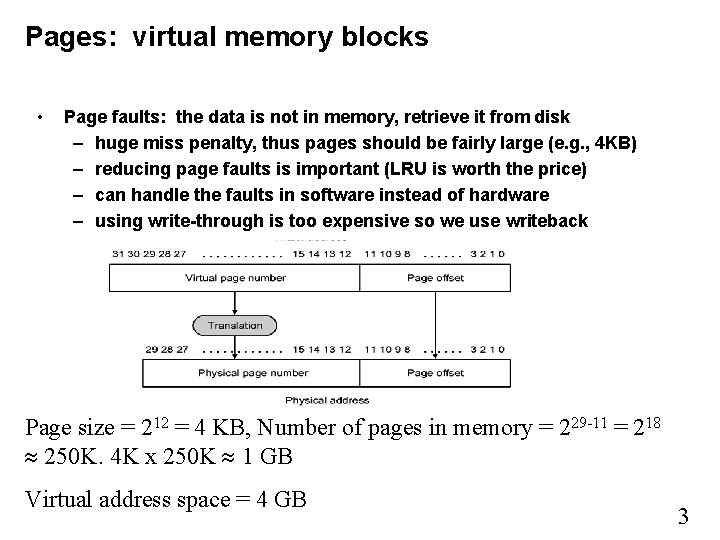

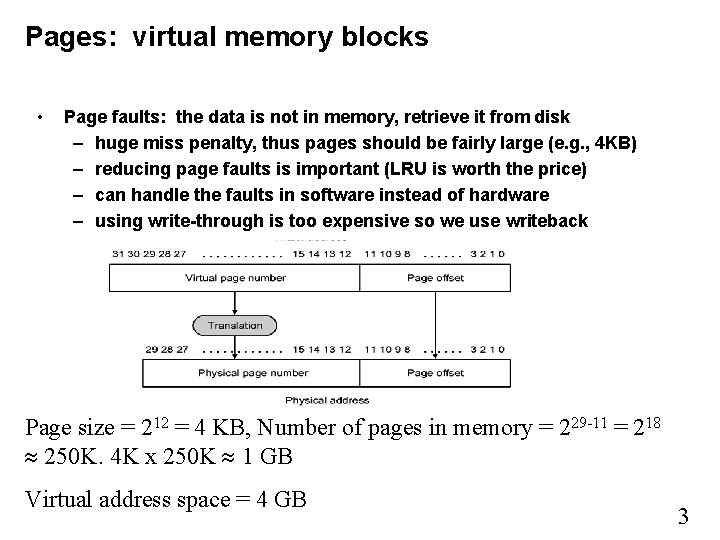

Pages: virtual memory blocks

- Page faults: the data is not in memory, retrieve it from disk
  - huge miss penalty, thus pages should be fairly large (e.g., 4 KB)
  - reducing page faults is important (LRU is worth the price)
  - can handle the faults in software instead of hardware
  - using write-through is too expensive, so we use write-back

Page size = $2^{12}$ bytes = 4 KB. Number of pages in memory = $2^{29-11} = 2^{18} \approx 250$ K. 4 KB × 250 K ≈ 1 GB. Virtual address space = 4 GB.
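The arithmetic on this slide can be checked with a few shifts. The sketch below assumes the bit widths stated on the slide (12 offset bits, 18 physical page number bits, 32-bit virtual addresses). The constant names and `split_virtual_address` are illustrative only.

```python
PAGE_OFFSET_BITS = 12      # 4 KB pages
PHYSICAL_PAGE_BITS = 18    # number of physical page number bits
VIRTUAL_ADDRESS_BITS = 32  # 4 GB virtual address space

page_size = 1 << PAGE_OFFSET_BITS              # bytes per page
physical_pages = 1 << PHYSICAL_PAGE_BITS       # pages that fit in main memory
physical_memory = page_size * physical_pages   # bytes of main memory
virtual_pages = 1 << (VIRTUAL_ADDRESS_BITS - PAGE_OFFSET_BITS)


def split_virtual_address(vaddr):
    """Return (virtual page number, page offset) for a 32-bit address."""
    return vaddr >> PAGE_OFFSET_BITS, vaddr & (page_size - 1)
```

Here `physical_pages` is exactly 262,144, which the slide rounds to 250 K, and `physical_memory` is exactly $2^{30}$ bytes, i.e. 1 GB.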

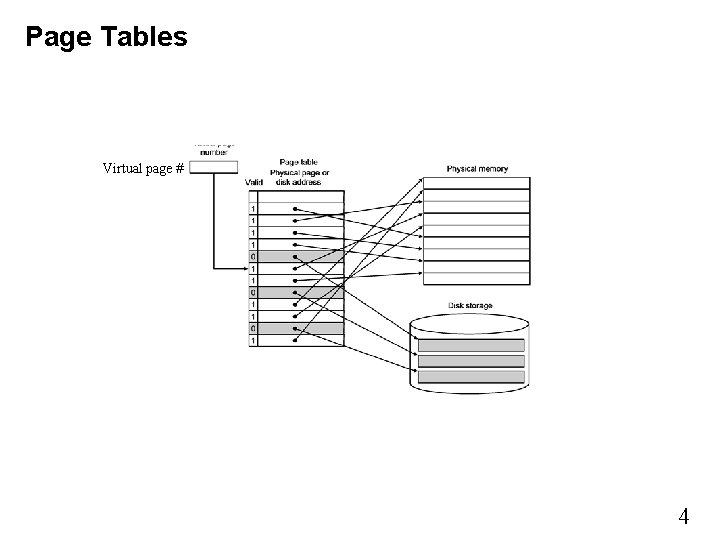

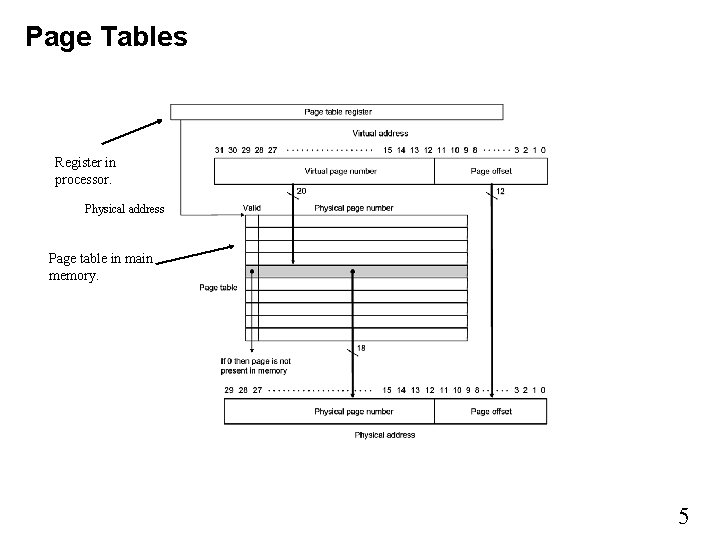

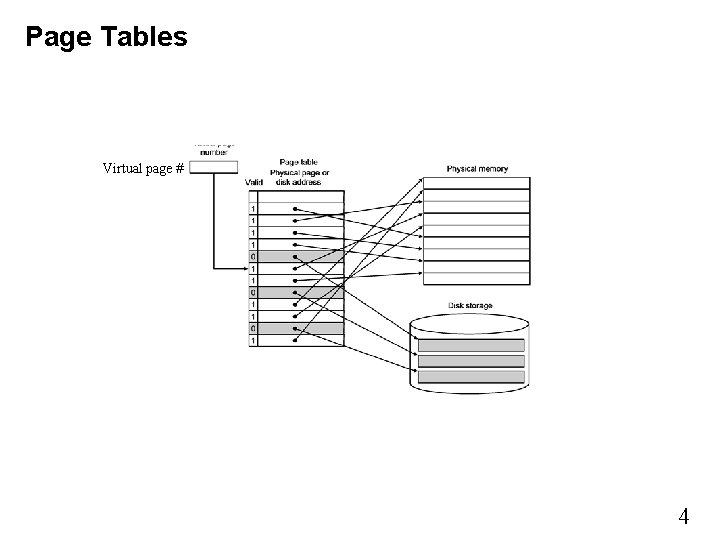

Page Tables

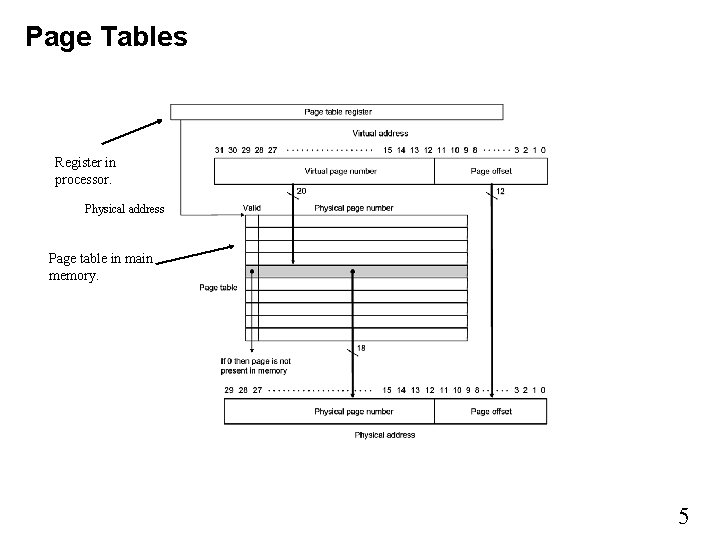

Page Tables

- Page table register: a register in the processor.
- Page table: in main memory.
- Result of the lookup: the physical address.
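A single-level page table lookup might be sketched as follows. This is an illustrative model, not a description of any particular hardware: the page table is a Python dictionary, and the entry fields `valid` and `ppn` as well as the `PageFault` exception are names assumed for this example. A 12-bit page offset (4 KB pages) is assumed.

```python
PAGE_OFFSET_BITS = 12  # assumed 4 KB pages


class PageFault(Exception):
    """Raised when the referenced page is not in main memory."""


def translate(page_table, vaddr):
    """Translate a virtual address using a single-level page table."""
    vpn = vaddr >> PAGE_OFFSET_BITS                  # virtual page number
    offset = vaddr & ((1 << PAGE_OFFSET_BITS) - 1)   # unchanged by translation
    entry = page_table.get(vpn)
    if entry is None or not entry["valid"]:
        # The operating system would now fetch the page from disk.
        raise PageFault(f"virtual page {vpn:#x} is not resident")
    return (entry["ppn"] << PAGE_OFFSET_BITS) | offset
```

Only the page number is translated; the offset within the page is copied straight into the physical address.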

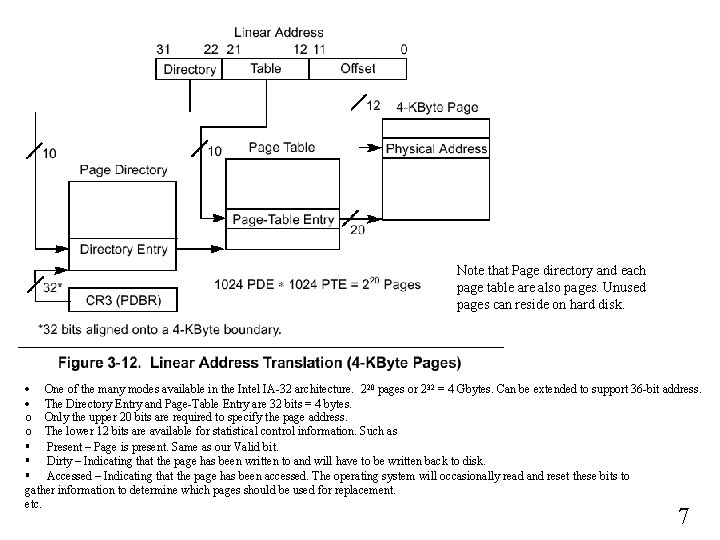

Example: Let the page size be 4 KB, i.e. 12 bits of address. Let the virtual and physical addresses be 32 bits. Then there are $2^{32}/2^{12} = 2^{20}$ pages. 32 bits, or 4 bytes, would be used in each page table entry (but not all bits are needed). Therefore, $2^{22}$ bytes (4 MB) of main memory would be required for the page table! Let's look at one of the ways Intel addressed this problem.
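The size estimate in this example follows directly from the bit widths. As a quick check, the hypothetical helper below (its name and parameters are chosen for illustration) computes the size of a flat, single-level page table.

```python
def flat_page_table_bytes(virtual_address_bits, page_offset_bits, entry_bytes):
    """Size in bytes of a single-level page table with one entry per virtual page."""
    entries = 1 << (virtual_address_bits - page_offset_bits)
    return entries * entry_bytes


# The example above: 32-bit addresses, 4 KB pages, 4-byte entries.
table_bytes = flat_page_table_bytes(32, 12, 4)
```

With these parameters `table_bytes` is $2^{22}$ bytes, and that cost is paid whether or not most of the virtual pages are ever used.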

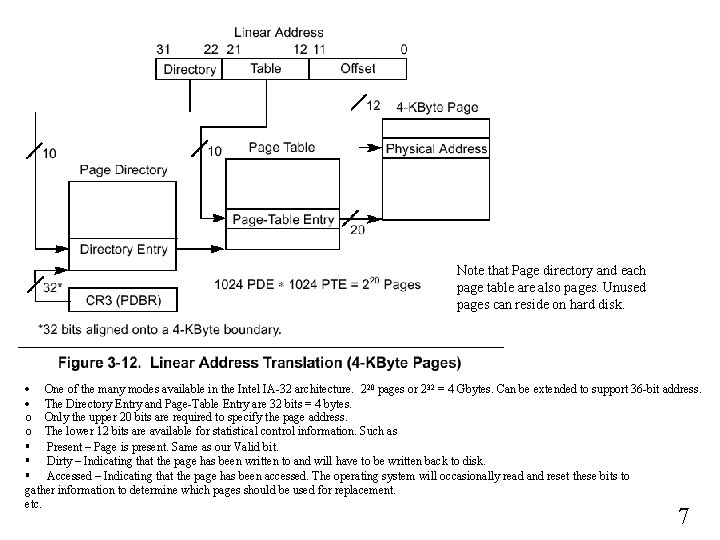

Note that the page directory and each page table are also pages. Unused pages can reside on hard disk.

- One of the many modes available in the Intel IA-32 architecture. $2^{20}$ pages or $2^{32}$ bytes = 4 Gbytes. Can be extended to support 36-bit addresses.
- The Directory Entry and Page-Table Entry are 32 bits = 4 bytes.
  - Only the upper 20 bits are required to specify the page address.
  - The lower 12 bits are available for statistical and control information, such as:
    - Present: the page is present. Same as our Valid bit.
    - Dirty: indicates that the page has been written to and will have to be written back to disk.
    - Accessed: indicates that the page has been accessed. The operating system will occasionally read and reset these bits to gather information to determine which pages should be used for replacement.
    - etc.
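The two-level scheme can be made concrete by splitting an address and decoding an entry. The sketch below assumes the 32-bit IA-32 paging mode with 4 KB pages, where a 4 KB directory or table holds 1024 four-byte entries, so the 20-bit page number divides into two 10-bit indices. The flag positions (Present in bit 0, Accessed in bit 5, Dirty in bit 6) follow that mode's entry layout. The function names are illustrative.

```python
PRESENT = 1 << 0   # page is in main memory
ACCESSED = 1 << 5  # page has been read or written
DIRTY = 1 << 6     # page has been written and must go back to disk


def split_linear_address(addr):
    """Split a 32-bit address into (directory index, table index, offset)."""
    directory_index = (addr >> 22) & 0x3FF  # upper 10 bits
    table_index = (addr >> 12) & 0x3FF      # middle 10 bits
    offset = addr & 0xFFF                   # lower 12 bits
    return directory_index, table_index, offset


def decode_entry(entry):
    """Decode the page address and three status bits of a 32-bit entry."""
    return {
        "page_address": entry & 0xFFFFF000,  # upper 20 bits
        "present": bool(entry & PRESENT),
        "accessed": bool(entry & ACCESSED),
        "dirty": bool(entry & DIRTY),
    }
```

Because each page table is itself a page, a table whose directory entry is not present need not occupy main memory at all, which is how this scheme avoids the full 4 MB flat table.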

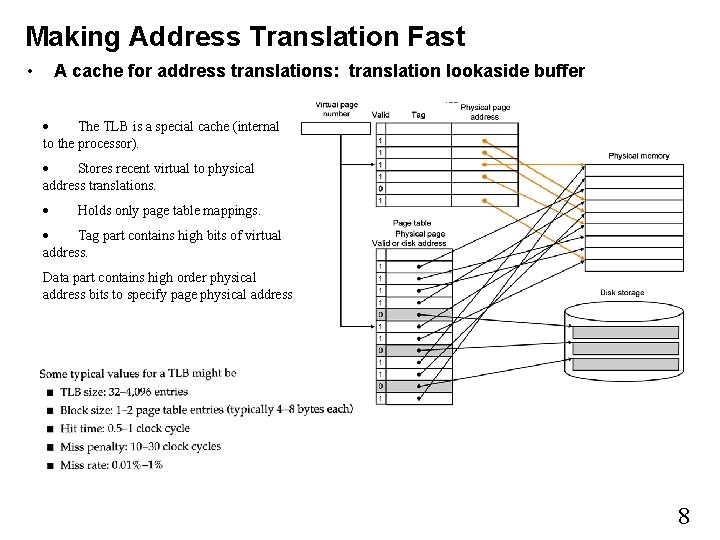

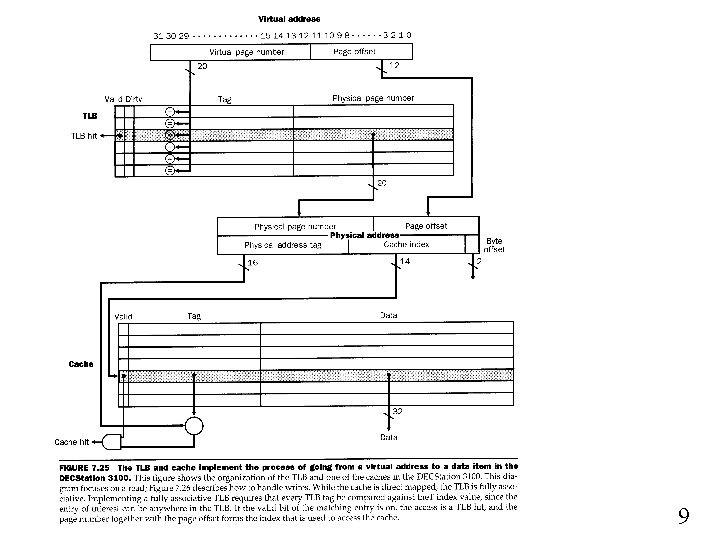

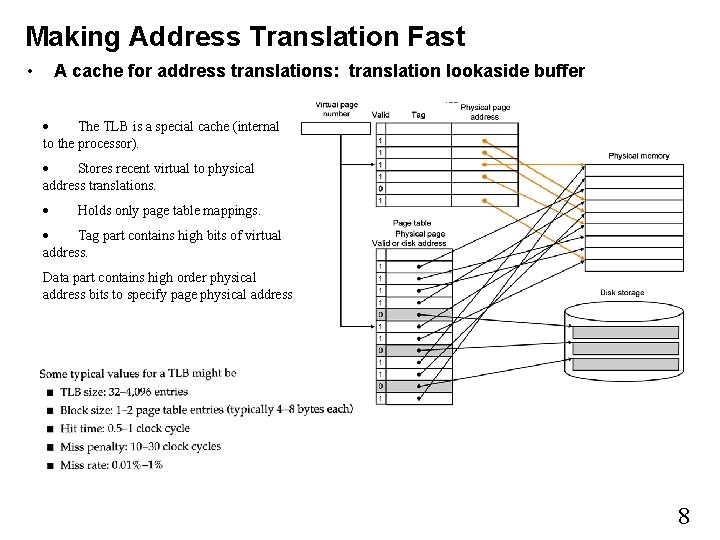

Making Address Translation Fast

- A cache for address translations: the translation lookaside buffer (TLB)
  - The TLB is a special cache (internal to the processor).
  - Stores recent virtual-to-physical address translations.
  - Holds only page table mappings.
  - The tag part contains the high bits of the virtual address. The data part contains the high-order physical address bits that specify the physical page address.
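The behavior of a TLB can be modeled in a few lines. The sketch below makes several assumptions that the slide does not state: the TLB is fully associative, it uses LRU replacement, pages are 4 KB, and the page table is a plain dictionary from virtual page number to physical page number. The class and attribute names are illustrative.

```python
from collections import OrderedDict

PAGE_OFFSET_BITS = 12  # assumed 4 KB pages


class TLB:
    """Toy fully associative TLB with LRU replacement (both are assumptions)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()  # tag (virtual page no.) -> physical page no.
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr, page_table):
        vpn = vaddr >> PAGE_OFFSET_BITS
        offset = vaddr & ((1 << PAGE_OFFSET_BITS) - 1)
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)  # mark as most recently used
        else:
            self.misses += 1
            ppn = page_table[vpn]  # slow path: read the page table in memory
            if len(self.entries) >= self.capacity:
                self.entries.popitem(last=False)  # evict the LRU translation
            self.entries[vpn] = ppn
        return (self.entries[vpn] << PAGE_OFFSET_BITS) | offset
```

Repeated references to the same page hit in `entries` and never touch `page_table`, which is the point of keeping recent translations inside the processor.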

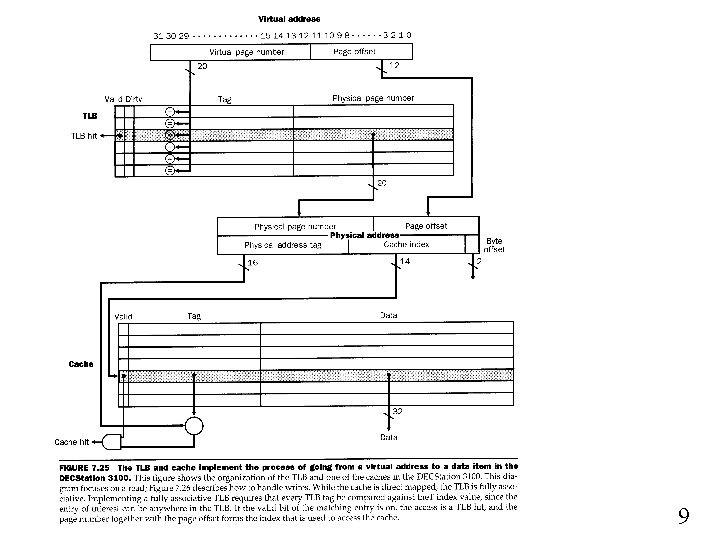

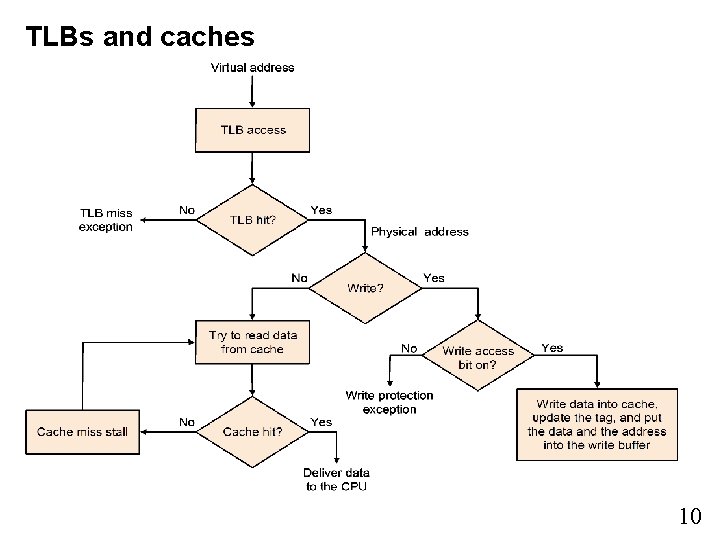

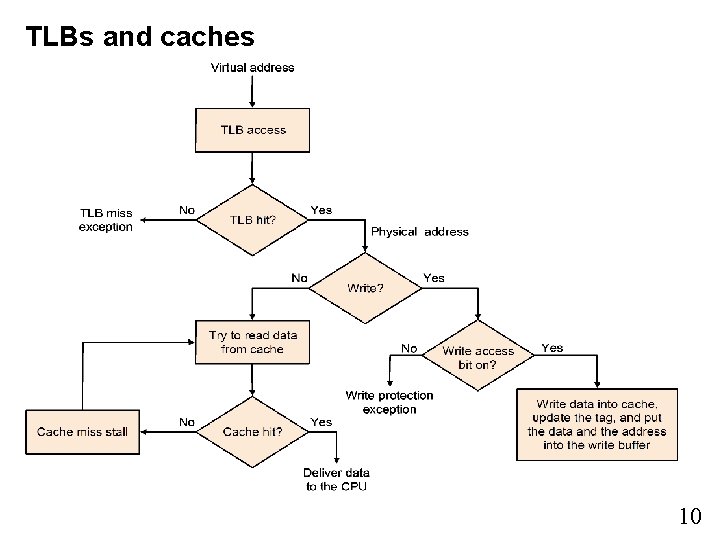

TLBs and caches

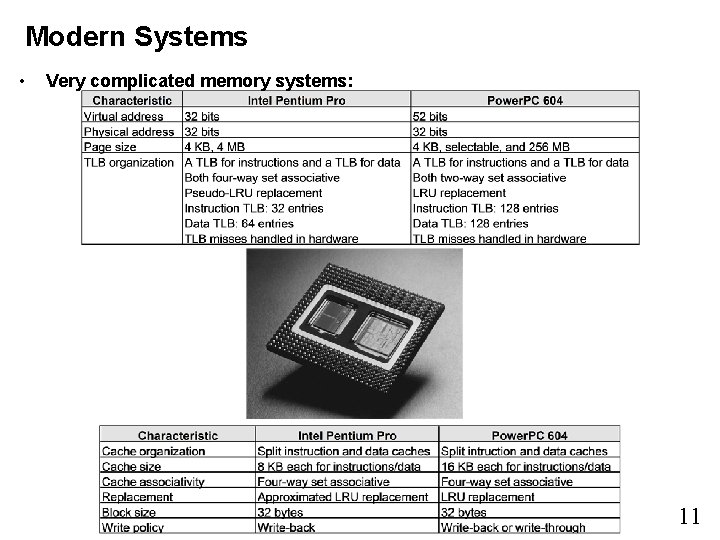

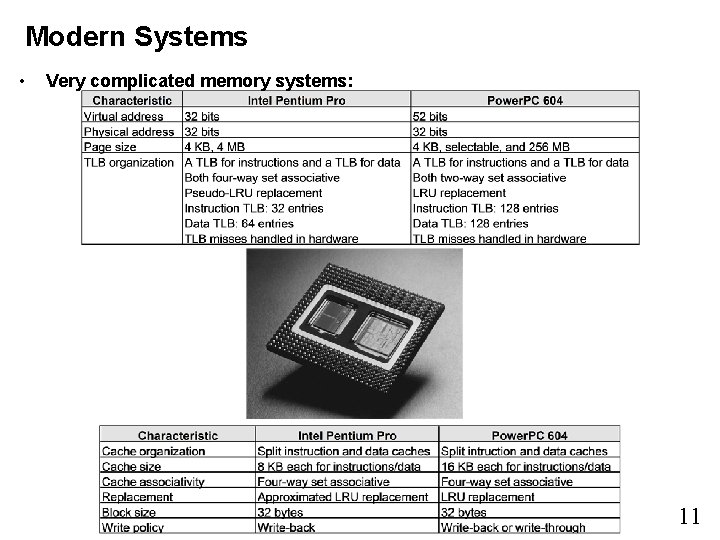

Modern Systems

- Very complicated memory systems:

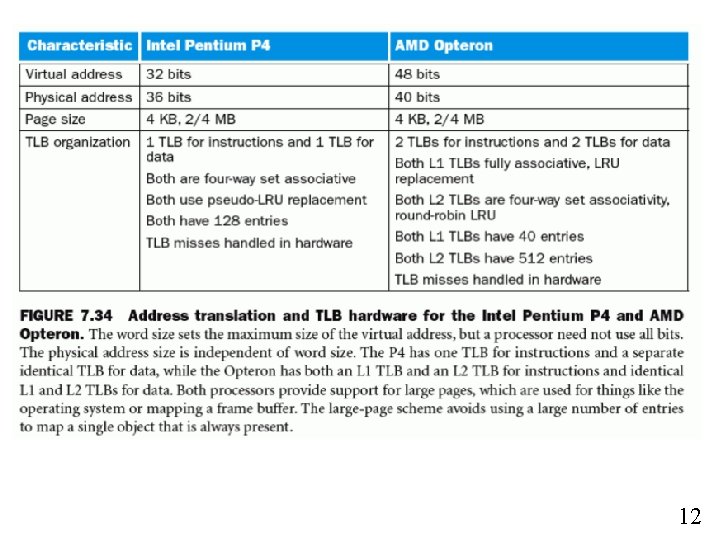

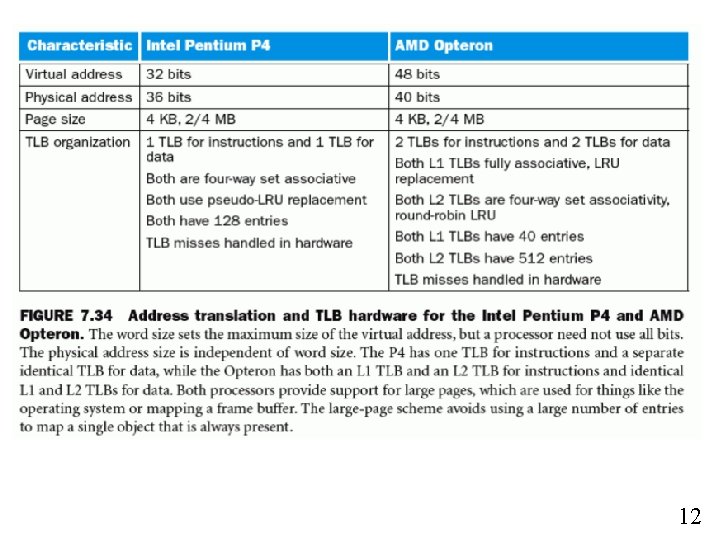

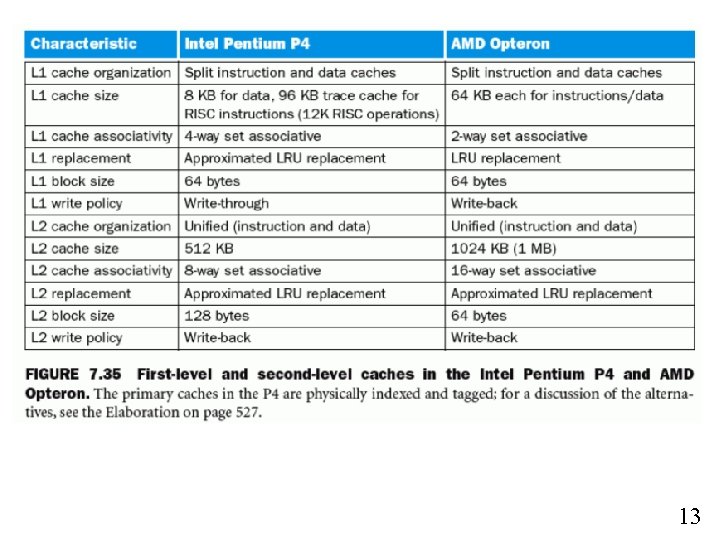

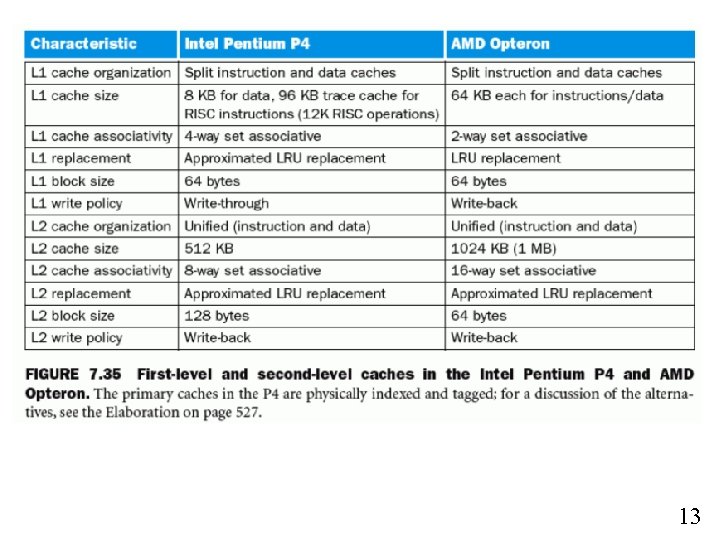

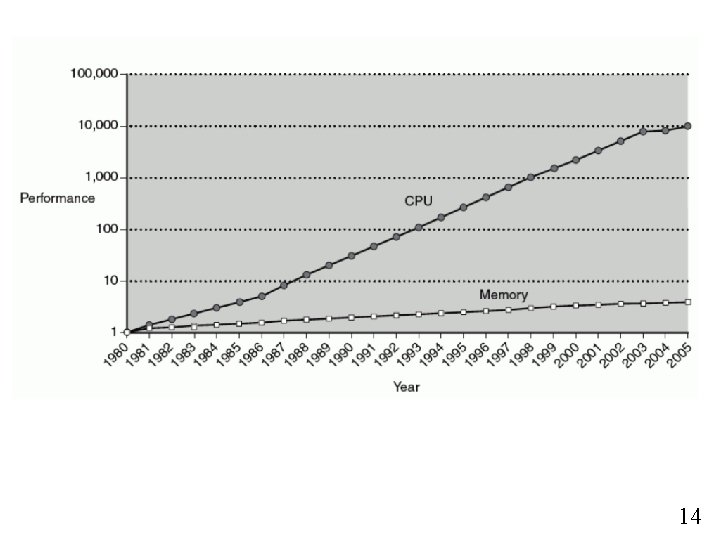

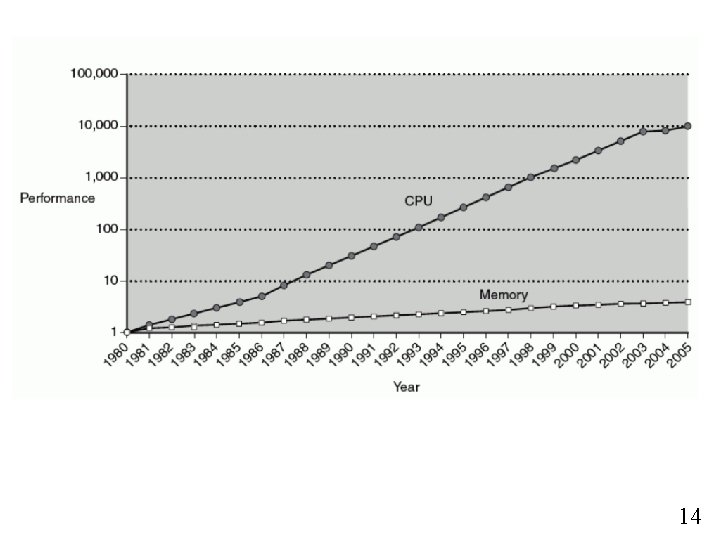

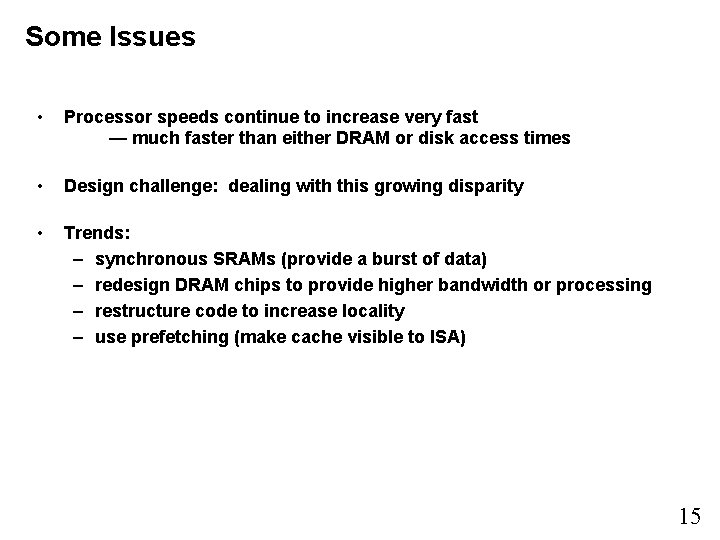

Some Issues

- Processor speeds continue to increase very fast, much faster than either DRAM or disk access times
- Design challenge: dealing with this growing disparity
- Trends:
  - synchronous SRAMs (provide a burst of data)
  - redesign DRAM chips to provide higher bandwidth or processing
  - restructure code to increase locality
  - use prefetching (make cache visible to ISA)