Virtual Memory 1

- Slides: 85


Outline
• Virtual space
• Address translation
• Accelerating translation
  – with a TLB
  – Multilevel page tables
• Different points of view
• Suggested reading: 10.1~10.6

TLB: translation lookaside buffer

10.1 Physical and Virtual Addressing

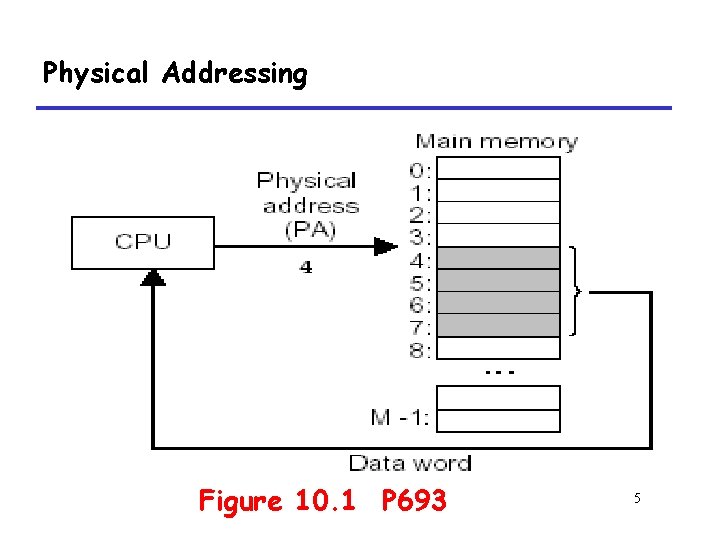

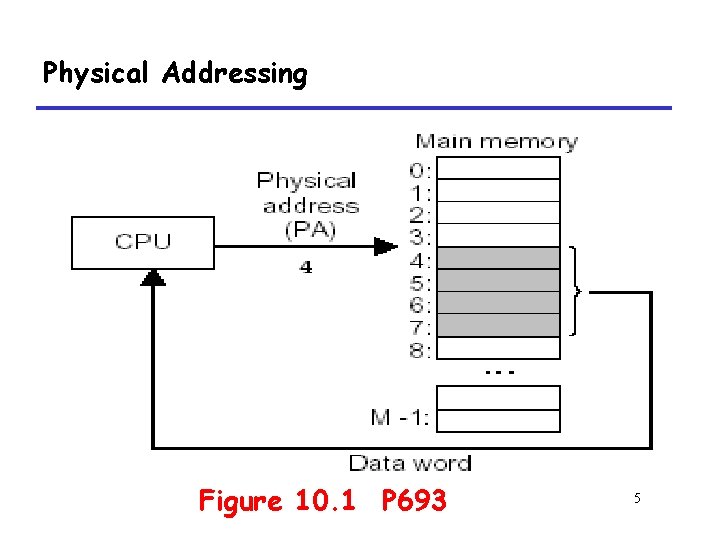

Physical Addressing
• Attributes of the main memory
  – Organized as an array of M contiguous byte-sized cells
  – Each byte has a unique physical address (PA), starting from 0
• Physical addressing
  – The CPU uses physical addresses to access memory
• Examples
  – Early PCs, DSPs, embedded microcontrollers, and Cray supercomputers

Vocabulary: contiguous = adjacent, next to one another

Physical Addressing: Figure 10.1 (p. 693)

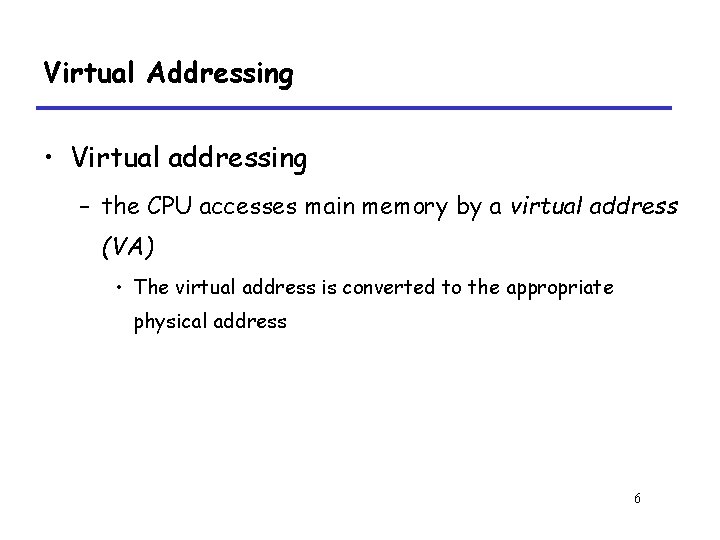

Virtual Addressing
• Virtual addressing
  – The CPU accesses main memory by generating a virtual address (VA)
• The virtual address is converted to the appropriate physical address before it is sent to memory

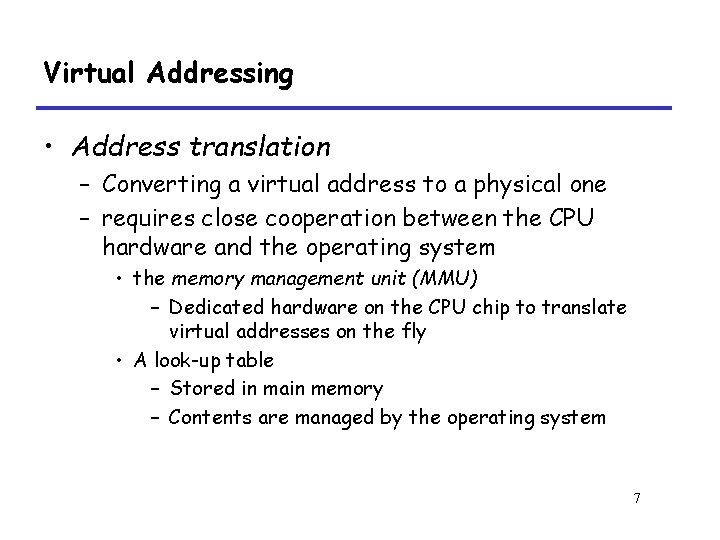

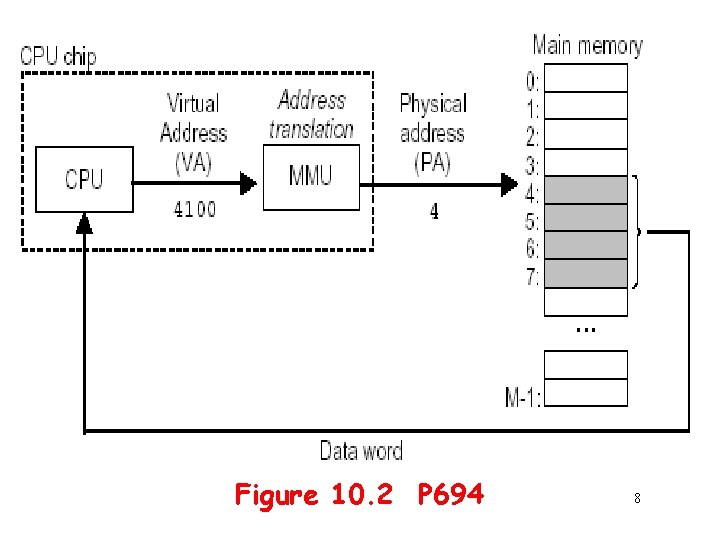

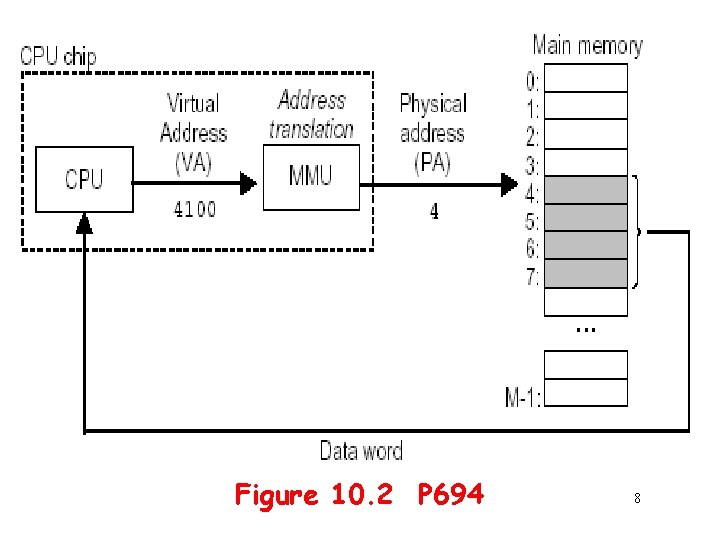

Virtual Addressing
• Address translation
  – Converting a virtual address to a physical one
  – Requires close cooperation between the CPU hardware and the operating system
• The memory management unit (MMU)
  – Dedicated hardware on the CPU chip that translates virtual addresses on the fly
• A look-up table
  – Stored in main memory
  – Its contents are managed by the operating system

Figure 10.2 (p. 694)

10.2 Address Space

Address Space
• Address space
  – An ordered set of nonnegative integer addresses {0, 1, 2, …}
• Linear address space
  – The integers in the address space are consecutive
• n-bit address space
  – A virtual address space of $N = 2^n$ addresses: {0, 1, 2, …, N - 1}

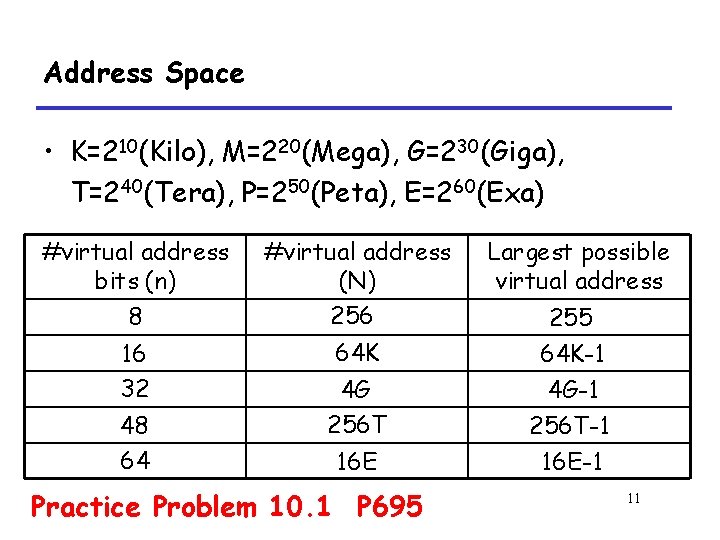

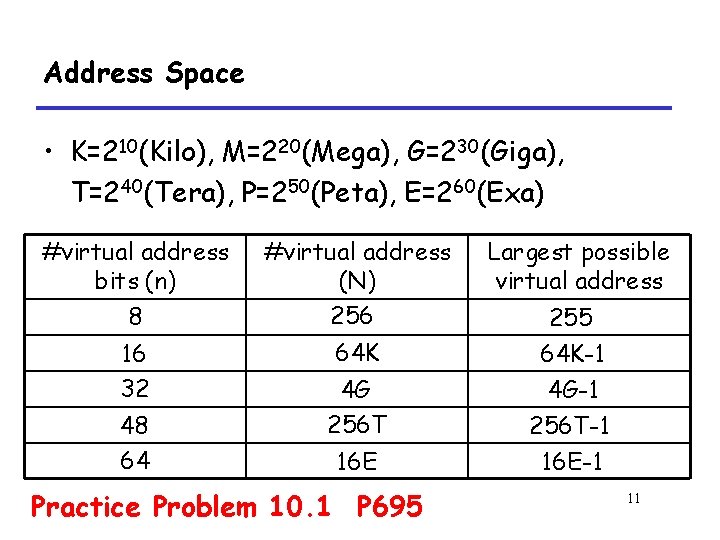

Address Space
• $K = 2^{10}$ (kilo), $M = 2^{20}$ (mega), $G = 2^{30}$ (giga), $T = 2^{40}$ (tera), $P = 2^{50}$ (peta), $E = 2^{60}$ (exa)

Practice Problem 10.1 (p. 695)

| Virtual address bits (n) | Virtual addresses (N) | Largest possible virtual address |
|---|---|---|
| 8 | 256 | 255 |
| 16 | 64 K | 64 K - 1 |
| 32 | 4 G | 4 G - 1 |
| 48 | 256 T | 256 T - 1 |
| 64 | 16 E | 16 E - 1 |
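As a quick check on the table, the sketch below recomputes $N = 2^n$ and the largest address $N - 1$ for each row. It is a minimal Python illustration, assuming the binary prefixes defined on the slide; the function names are hypothetical.

```python
# Binary prefixes from the slide: K = 2**10, M = 2**20, ..., E = 2**60.
PREFIXES = ["", "K", "M", "G", "T", "P", "E"]


def address_space_size(n: int) -> str:
    """Return N = 2**n written with the slide's prefixes, e.g. 32 -> '4 G' (valid for n < 70)."""
    prefix_index, rest = divmod(n, 10)  # 2**n == 2**rest * (2**10)**prefix_index
    return f"{2 ** rest} {PREFIXES[prefix_index]}".rstrip()


def largest_address(n: int) -> int:
    """Largest address in an n-bit linear address space: N - 1 = 2**n - 1."""
    return (1 << n) - 1


for n in (8, 16, 32, 48, 64):
    print(f"n={n:2d}  N={address_space_size(n):>6}  largest={largest_address(n):#x}")
```

Splitting the exponent with `divmod(n, 10)` mirrors how the table is written: every 10 bits of address add one prefix step, and the leftover bits give the leading multiplier.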

Address Space
• Data objects and their attributes
  – Bytes vs. addresses
• Each data object (byte) can have multiple independent addresses, each chosen from a different address space (e.g., one virtual and one physical)

10.3 VM as a Tool for Caching

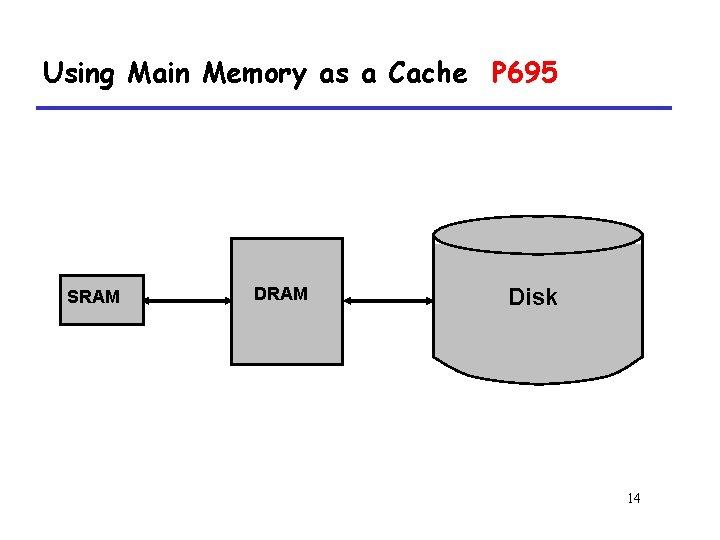

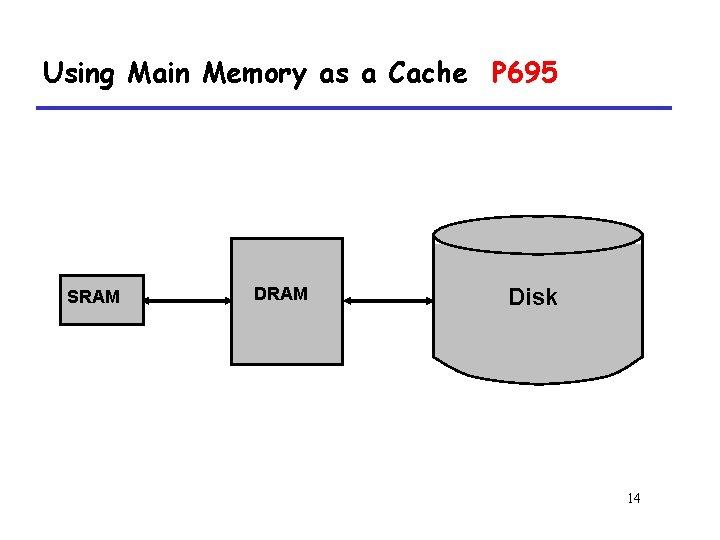

Using Main Memory as a Cache (p. 695)
(Figure: the SRAM, DRAM, and disk levels of the memory hierarchy)

10.3.1 DRAM Cache Organization

Using Main Memory as a Cache
• The DRAM vs. disk gap is more extreme than the SRAM vs. DRAM gap
  – Access latencies:
    • DRAM is ~10× slower than SRAM
    • Disk is ~100,000× slower than DRAM
  – Bottom line:
    • Design decisions for DRAM caches are driven by the enormous cost of misses

Design Considerations
• Line (page) size?
  – Large, since disks are better at transferring large blocks
• Associativity?
  – High (DRAM caches are fully associative), to minimize the miss rate
• Write-through or write-back?
  – Write-back, since we can't afford to perform small writes to disk

Write-back: defers the memory update as long as possible, writing an updated block to the next lower level only when the replacement algorithm evicts it from the cache.

10.3.2 Page Tables

Page
• Virtual memory
  – Conceptually organized as an array of contiguous byte-sized cells stored on disk
  – Each byte has a unique virtual address that serves as an index into the array
  – The contents of the array on disk are cached in main memory

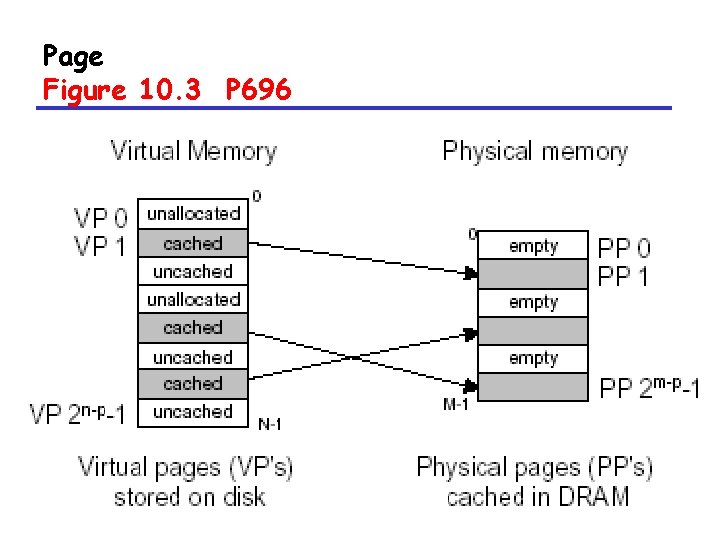

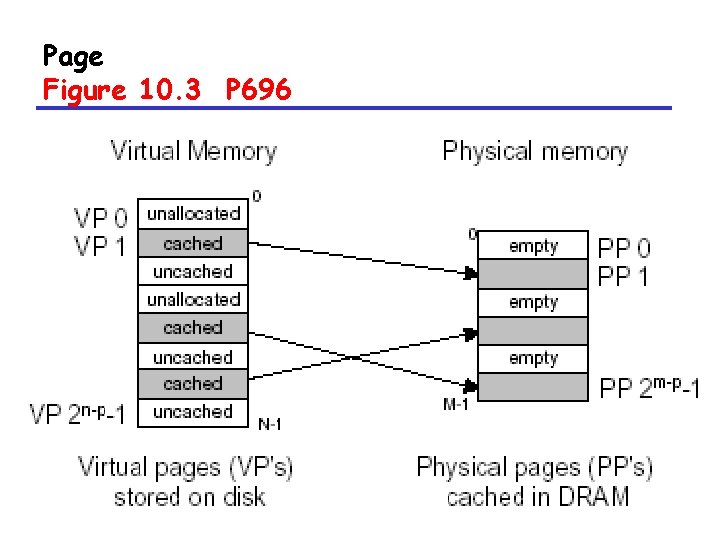

Page (p. 695)
• The data on disk is partitioned into blocks
  – These blocks serve as the transfer units between the disk and main memory
  – Virtual pages (VPs)
  – Physical pages (PPs), also referred to as page frames

Page Attributes (p. 695)
• 1) Unallocated:
  – Pages that have not yet been allocated (or created) by the VM system
  – Do not have any data associated with them
  – Do not occupy any space on disk

Page Attributes
• 2) Cached:
  – Allocated pages that are currently cached in physical memory
• 3) Uncached:
  – Allocated pages that are not cached in physical memory

Page: Figure 10.3 (p. 696)

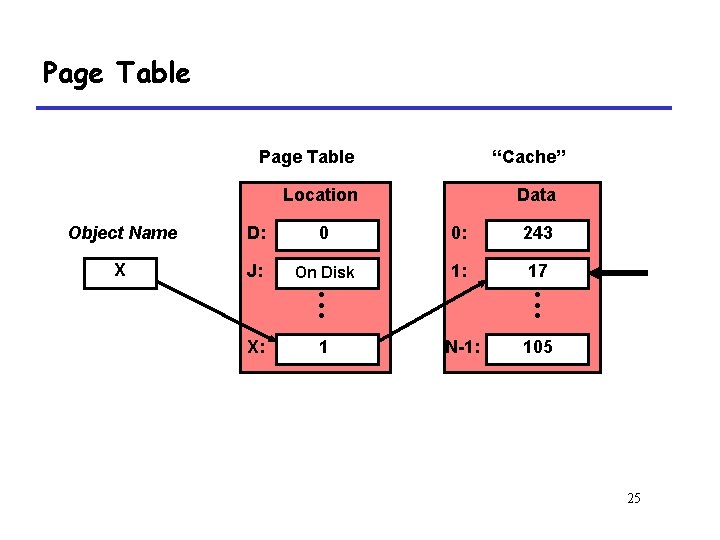

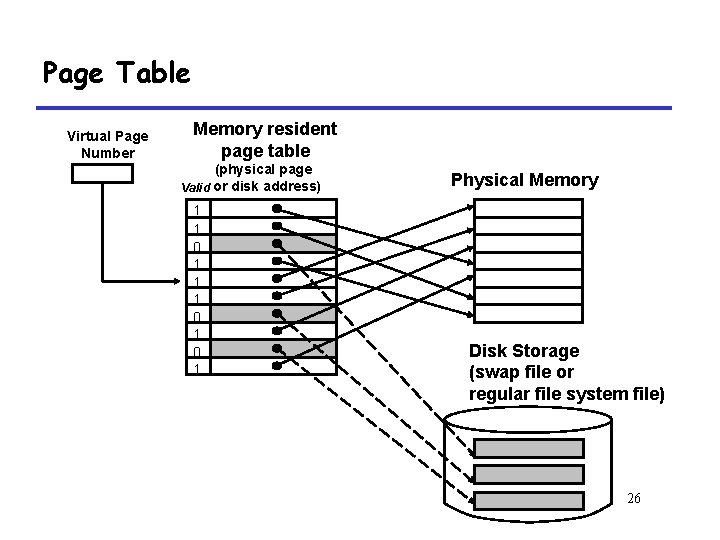

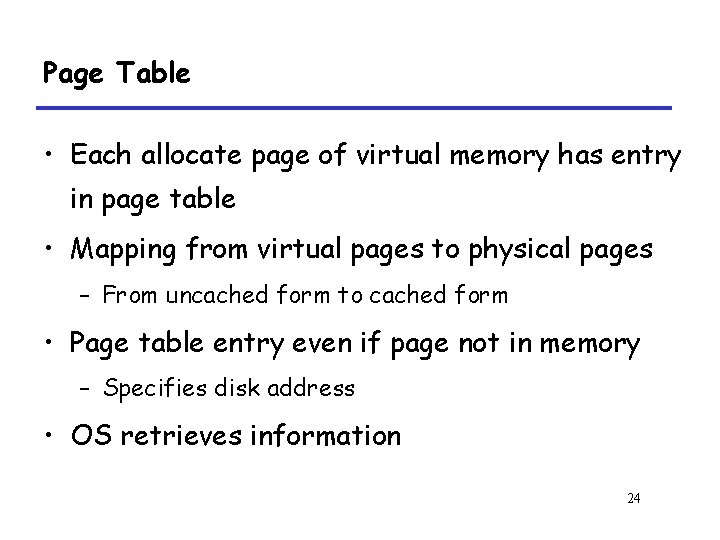

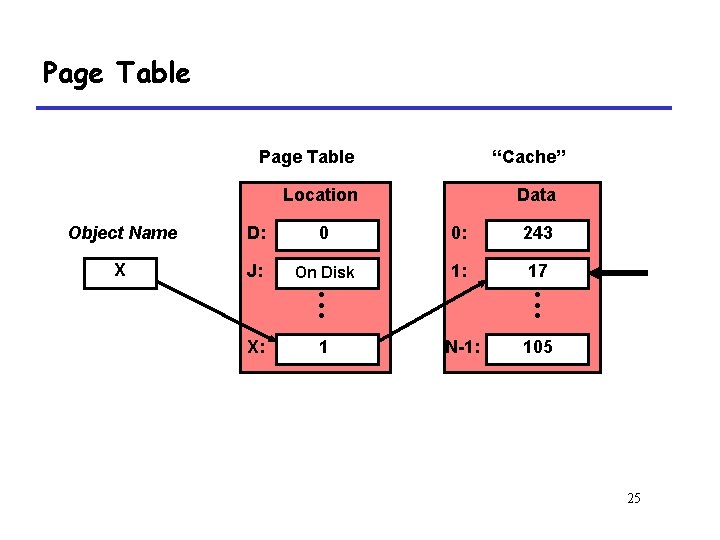

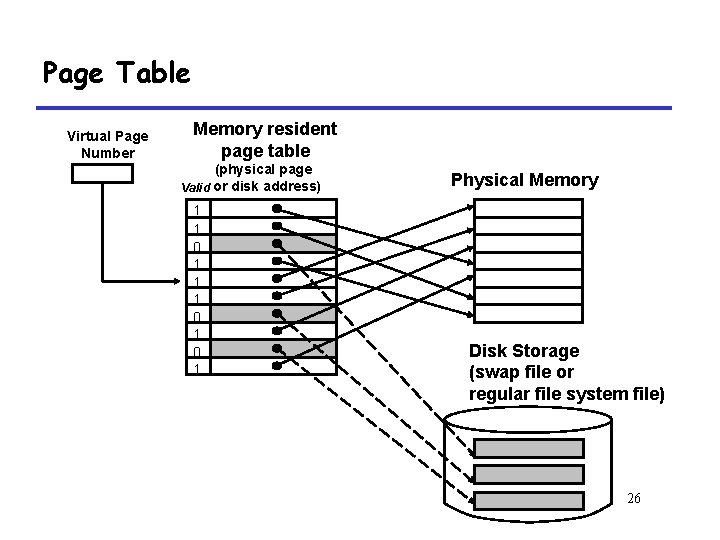

Page Table
• Each allocated page of virtual memory has an entry in the page table
• The page table maps virtual pages to physical pages
  – i.e., from the uncached form to the cached form
• A page table entry exists even if the page is not in memory
  – It then specifies the page's disk address
• The OS uses this information to locate the page when it must be brought into memory

Page Table as a “Cache” (figure)
• A lookup table maps each data object name to its location: D → 0, J → on disk, X → 1, …
• Locations 0 … N-1 hold the cached data (0: 243, 1: 17, …, N-1: 105)

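Page Table (figure)
• A memory-resident page table is indexed by virtual page number
• Each entry holds a valid bit and either a physical page number (valid = 1) or a disk address (valid = 0)
• Valid entries point into physical memory; the others point to disk storage (a swap file or a regular file system file)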

10.3.3 Page Hits

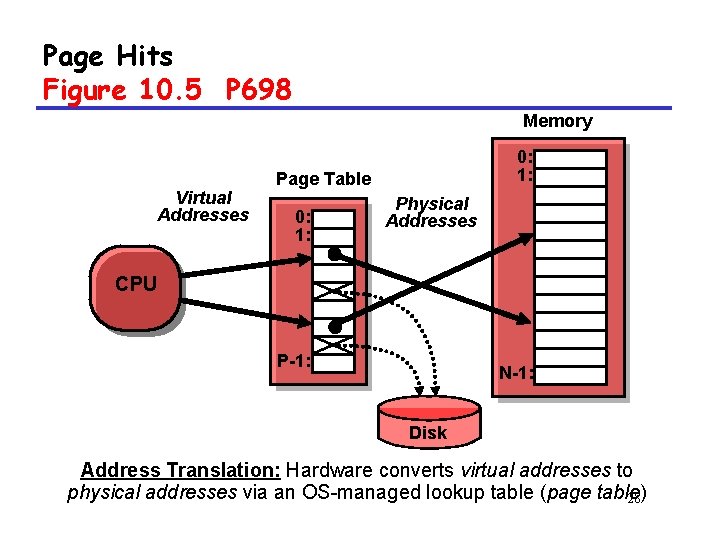

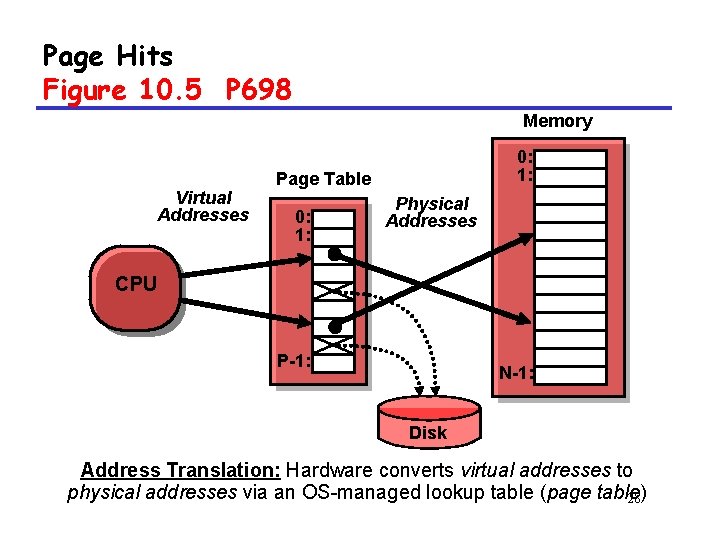

Page Hits: Figure 10.5 (p. 698)
• Address translation: hardware converts virtual addresses to physical addresses via an OS-managed lookup table, the page table
(Figure: CPU, page table, memory, and disk)

10.3.4 Page Faults

Page Faults
• A page fault occurs when the page table entry indicates that the virtual address is not in memory
• The OS exception handler is invoked to move the data from disk into memory
  – The current process suspends; others can resume
  – The OS has full control over placement, etc.

Vocabulary: suspend = to hang, to pause

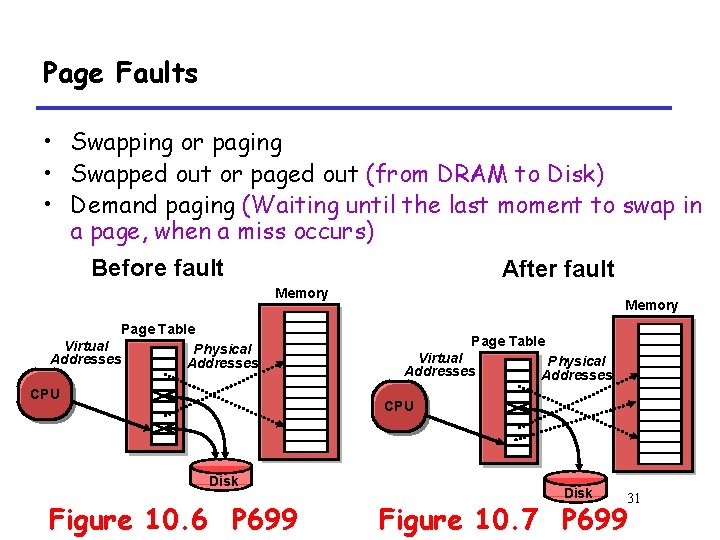

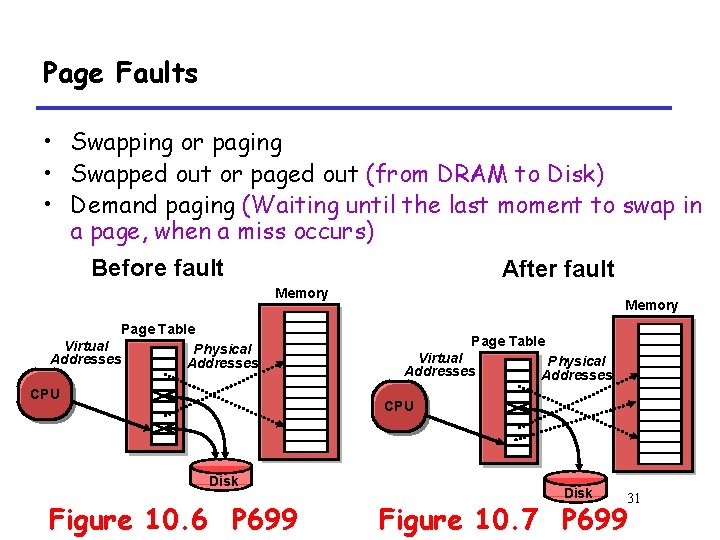

Page Faults
• Swapping (paging): transferring pages between disk and memory
  – Swapped in / paged in: from disk to DRAM
  – Swapped out / paged out: from DRAM to disk
• Demand paging: waiting until the last moment, when a miss occurs, to swap in a page
(Figures: CPU, page table, memory, and disk before the fault, Figure 10.6 (p. 699), and after the fault, Figure 10.7 (p. 699))
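Demand paging can be modeled with a toy pager. This is a minimal sketch under stated assumptions: the `DISK` dictionary, the `DemandPager` class, and the FIFO choice of victim are all hypothetical, and writing dirty victims back to disk is omitted. A page is brought into DRAM only when an access misses, which is the "wait until the last moment" policy.

```python
# Hypothetical toy model: "disk" holds every allocated virtual page (assume 8),
# while "DRAM" has room for only a few physical pages.
DISK = {vp: f"contents of VP{vp}" for vp in range(8)}


class DemandPager:
    def __init__(self, num_physical_pages: int):
        self.frames = [None] * num_physical_pages  # PP -> cached page contents
        self.page_table = {}                       # VP -> PP, for cached pages only
        self.next_victim = 0                       # FIFO pointer (an assumption)
        self.faults = 0

    def access(self, vp: int) -> str:
        if vp in self.page_table:                  # page hit: PTE is valid
            return self.frames[self.page_table[vp]]
        # Page fault: choose a victim frame, swap it out, then swap vp in.
        self.faults += 1
        pp = self.next_victim
        self.next_victim = (self.next_victim + 1) % len(self.frames)
        for old_vp, old_pp in list(self.page_table.items()):
            if old_pp == pp:
                del self.page_table[old_vp]        # victim is "paged out"
        self.frames[pp] = DISK[vp]                 # "paged in" from disk
        self.page_table[vp] = pp
        return self.frames[pp]
```

For example, with two physical pages, accessing VP 0, VP 1, VP 0, VP 2 causes three faults, and the third fault evicts VP 0.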

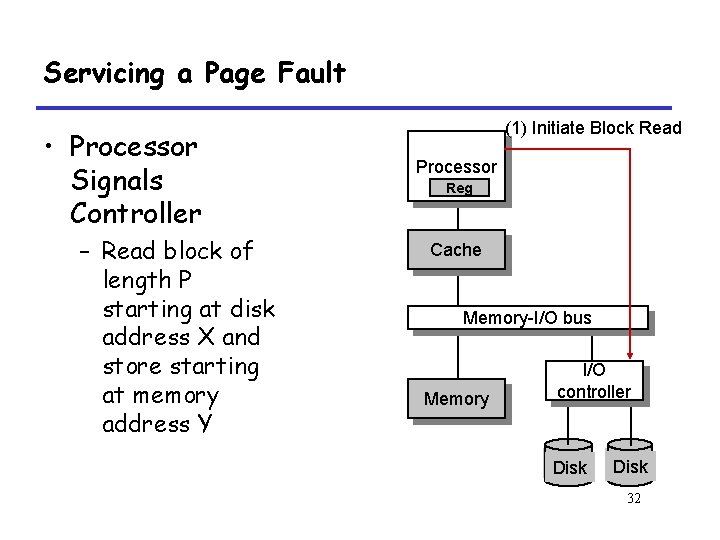

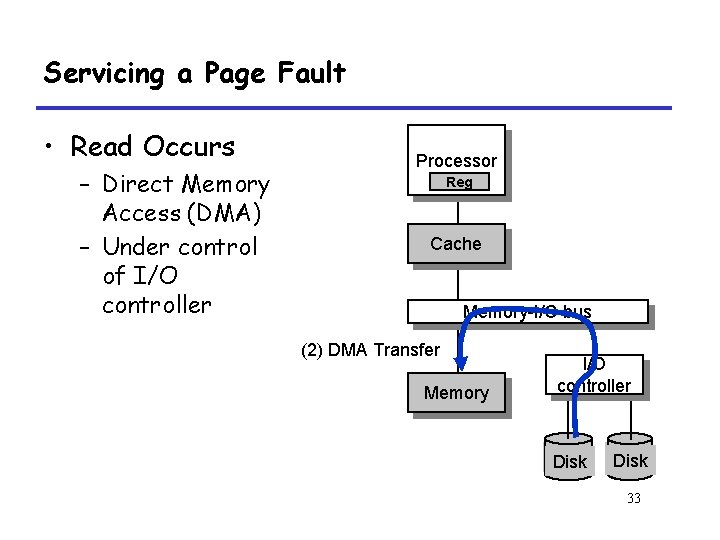

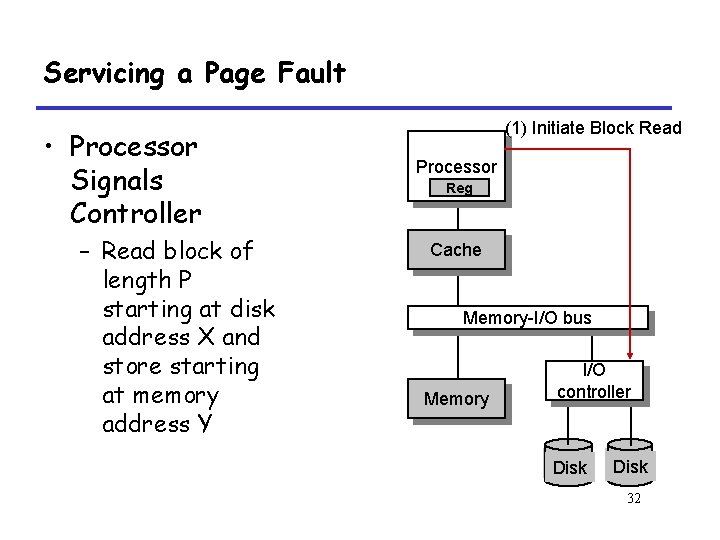

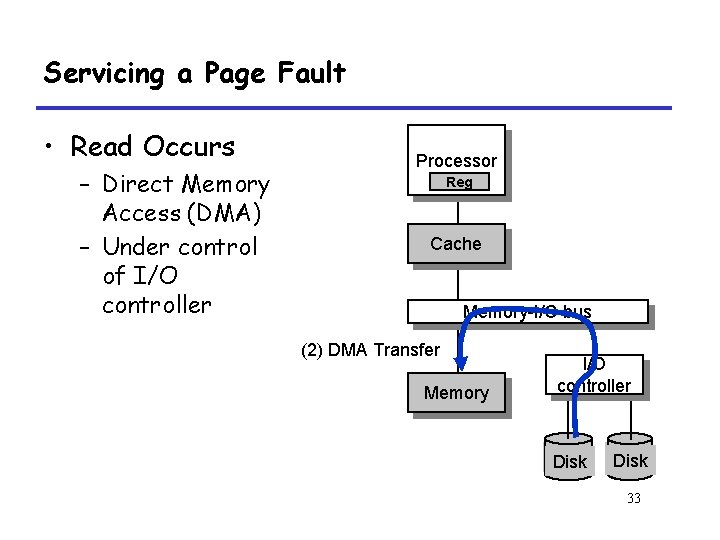

Servicing a Page Fault
• (1) Initiate block read: the processor signals the I/O controller
  – Read a block of length P starting at disk address X and store it starting at memory address Y
(Figure: processor with registers and cache, memory-I/O bus, memory, I/O controller, disk)

Servicing a Page Fault
• (2) DMA transfer: the read occurs
  – By direct memory access (DMA)
  – Under control of the I/O controller
(Figure: processor with registers and cache, memory-I/O bus, memory, I/O controller, disk)

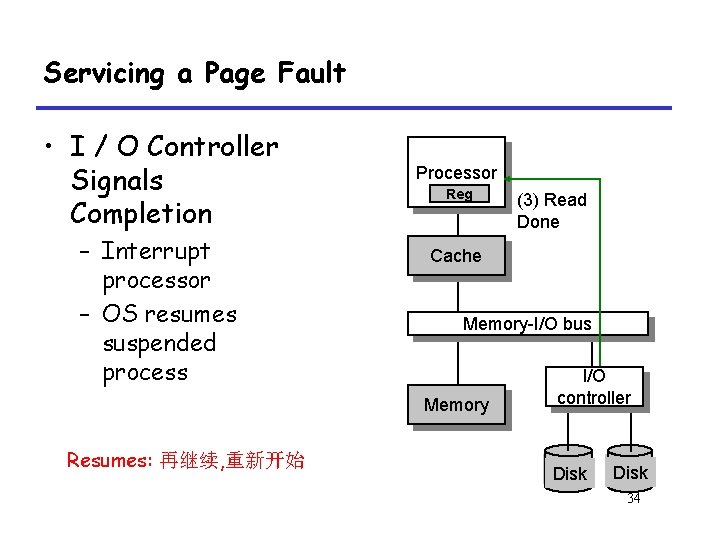

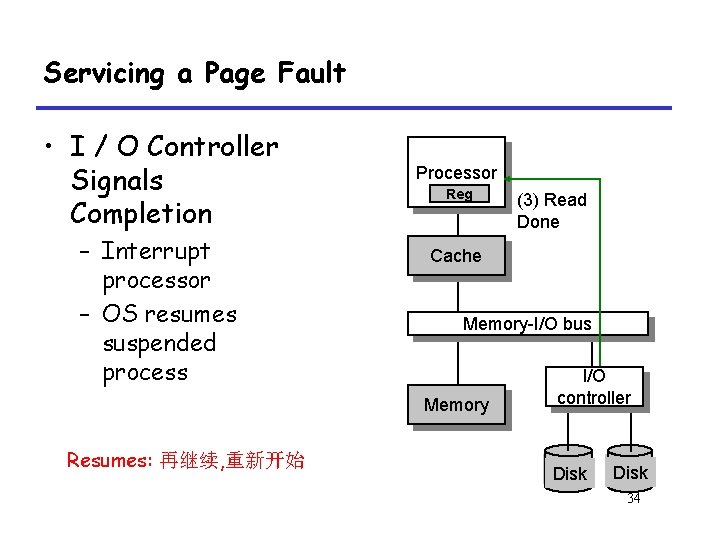

Servicing a Page Fault
• (3) Read done: the I/O controller signals completion
  – It interrupts the processor
  – The OS resumes the suspended process
(Figure: processor with registers and cache, memory-I/O bus, memory, I/O controller, disk)

Vocabulary: resume = to continue again, to restart

10.3.5 Allocating Pages

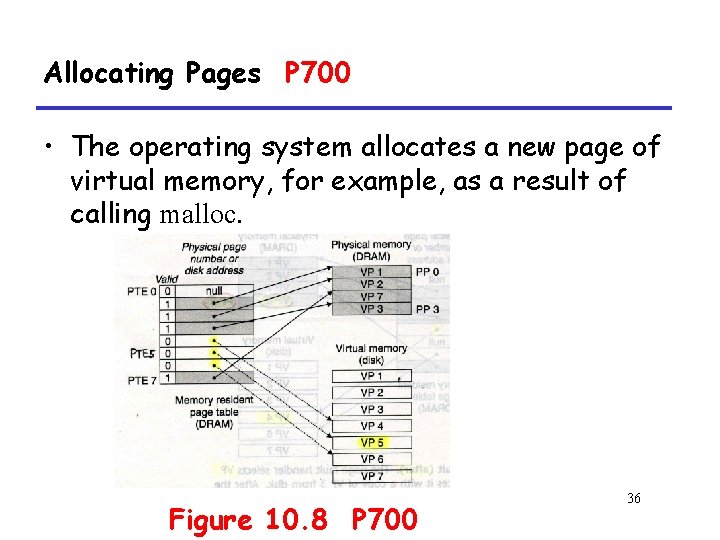

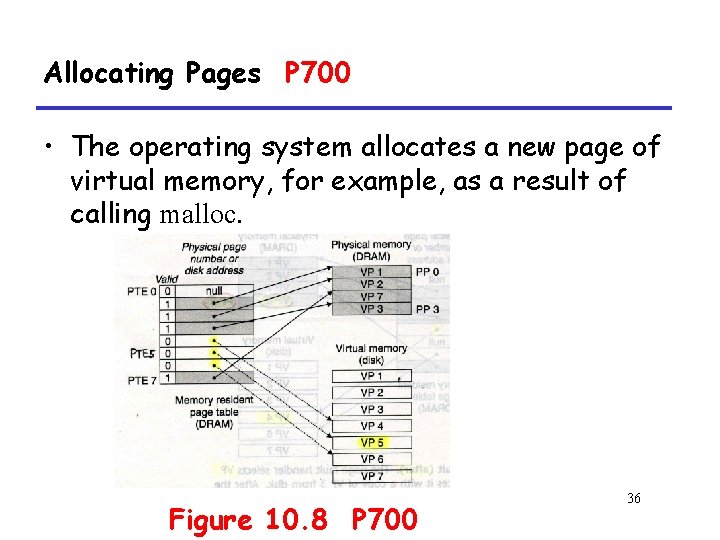

Allocating Pages (p. 700)
• The operating system allocates a new page of virtual memory, for example, as a result of calling malloc
Figure 10.8 (p. 700)

10.3.6 Locality to the Rescue Again

Vocabulary: rescue = to save

Locality (p. 700)
• The principle of locality promises that, at any point in time, a program will tend to work on a smaller set of active pages, known as the working set or resident set
• After an initial overhead in which the working set is paged into memory, subsequent references to the working set result in hits, with no additional disk traffic

Locality-2 (p. 700)
• If the working set size exceeds the size of physical memory, the program can produce an unfortunate situation known as thrashing, in which pages are swapped in and out continuously

Vocabulary: thrash = to whip, to beat
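Thrashing is easy to reproduce in a small simulation. The sketch below assumes LRU replacement (a common policy, not one the slide specifies) and a hypothetical program that loops 100 times over a working set of 4 pages; `count_page_faults` is an illustrative name.

```python
from collections import OrderedDict


def count_page_faults(references, num_frames):
    """Count page faults for a reference string, assuming LRU replacement."""
    resident = OrderedDict()  # VP -> None, ordered least to most recently used
    faults = 0
    for vp in references:
        if vp in resident:
            resident.move_to_end(vp)          # hit: mark as most recently used
        else:
            faults += 1                       # miss: page must come in from disk
            if len(resident) == num_frames:
                resident.popitem(last=False)  # evict the least recently used page
            resident[vp] = None
    return faults


refs = list(range(4)) * 100                   # working set of 4 pages, 400 references
print(count_page_faults(refs, num_frames=4))  # -> 4   (working set fits)
print(count_page_faults(refs, num_frames=3))  # -> 400 (working set too large)
```

With 4 frames, only the 4 compulsory faults occur. With 3 frames, LRU always evicts the page that this cyclic pattern needs next, so every reference faults.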

10.4 VM as a Tool for Memory Management

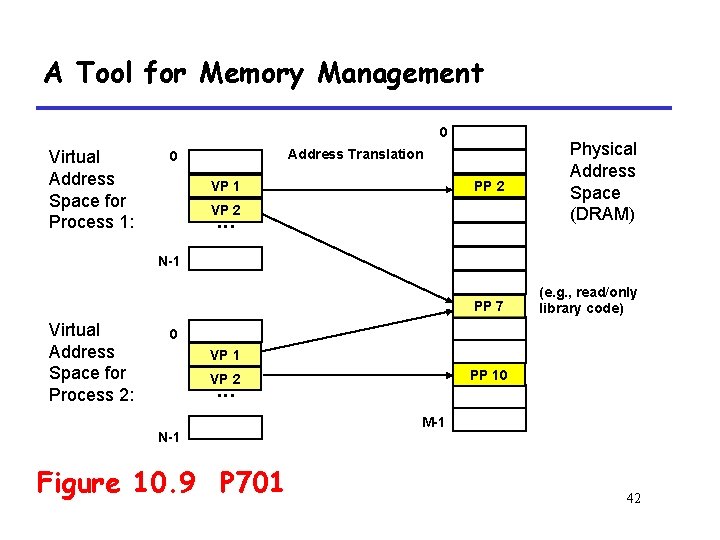

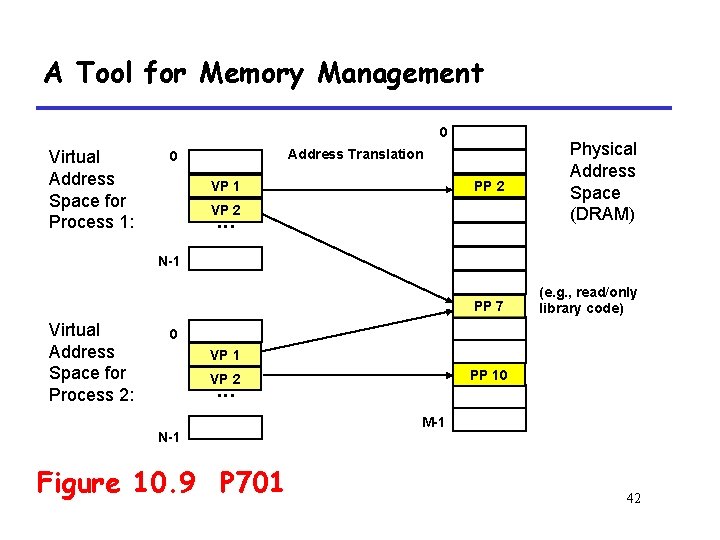

A Tool for Memory Management
• Separate virtual address spaces
  – Each process has its own virtual address space
• This simplifies linking, sharing, loading, and memory allocation

A Tool for Memory Management: Figure 10.9 (p. 701)
• Address translation maps the virtual pages (VP 1, VP 2, …) of each process's address space (addresses 0 … N-1) into the physical address space in DRAM (addresses 0 … M-1)
• Process 1's pages map to PP 2 and PP 7; process 2's pages map to PP 7 and PP 10
• PP 7 (e.g., read-only library code) is shared by both processes

10.4.1 Simplifying Linking

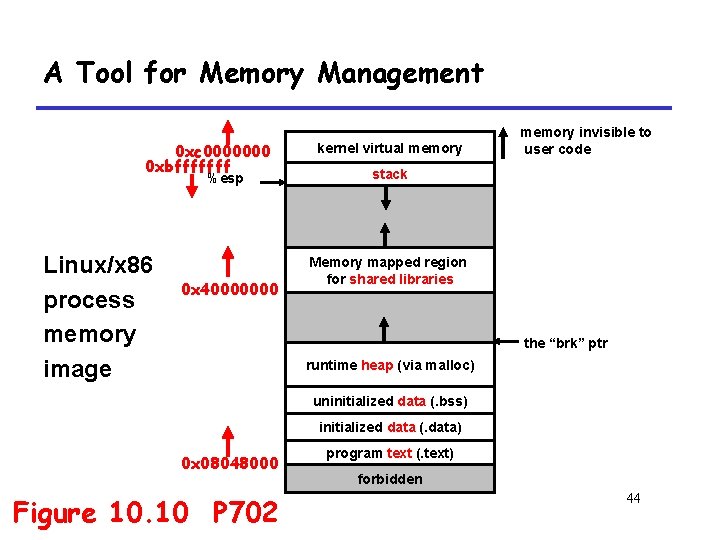

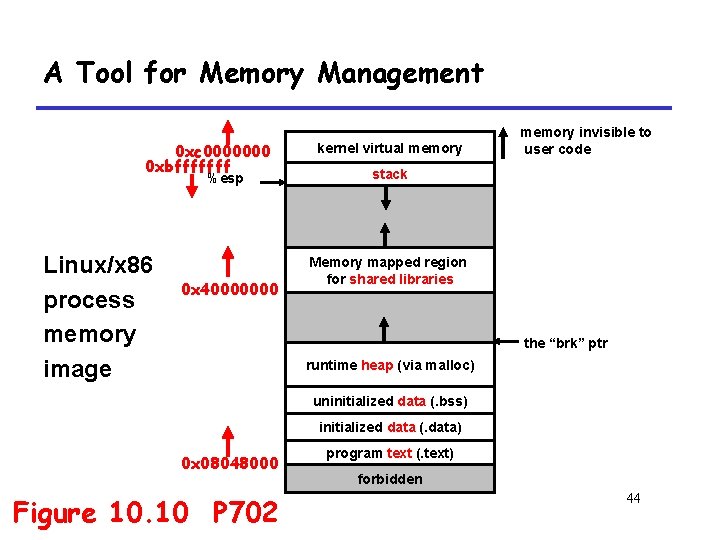

A Tool for Memory Management: Linux/x86 process memory image (Figure 10.10, p. 702), from high to low addresses
• From 0xc0000000 up: kernel virtual memory, invisible to user code
• Starting at 0xbfffffff and growing down: user stack, with %esp pointing to its top
• At 0x40000000: memory-mapped region for shared libraries
• Runtime heap (via malloc), growing up to the "brk" pointer
• Uninitialized data (.bss)
• Initialized data (.data)
• At 0x08048000: program text (.text)
• Below 0x08048000: forbidden

10.4.2 Simplifying Sharing

Simplifying Sharing
• In some instances, it is desirable for processes to share code and data, for example:
  – The same operating system kernel code
  – Routines in the standard C library that every process calls
• The operating system can arrange for multiple processes to share a single copy of such code by mapping the appropriate virtual pages in different processes to the same physical pages

10.4.3 Simplifying Memory Allocation

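Simplifying Memory Allocation
• VM provides a simple mechanism for allocating additional memory to user processes
• The page table does the work: the OS allocates k contiguous virtual pages and maps them to k arbitrary physical pages, which need not be contiguous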

10.4.4 Simplifying Loading

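Simplifying Loading
• VM makes it easy to load executable and shared object files into memory
• Memory mapping: mapping a set of contiguous virtual pages to an arbitrary location in a file (the Unix mmap system call)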
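Python's standard `mmap` module wraps the operating system's memory-mapping facility (mmap on Unix), so it offers a small illustration of the idea: the file's contents become addressable through the process's virtual memory rather than through explicit read calls. The temporary file and its contents are assumptions made only for the demo.

```python
import mmap
import tempfile

# Write a small file, then map it into this process's virtual address space.
with tempfile.TemporaryFile() as f:
    f.write(b"hello, virtual memory")
    f.flush()
    # Length 0 maps the whole file; the OS typically pages it in on first touch.
    with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        first_word = mm[:5]  # slicing reads through the mapping and copies the bytes

print(first_word)  # -> b'hello'
```

Reading `mm[:5]` involves no explicit `read()` call on the file; the bytes are reached through the mapped virtual pages.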

10.5 VM as a Tool for Memory Protection

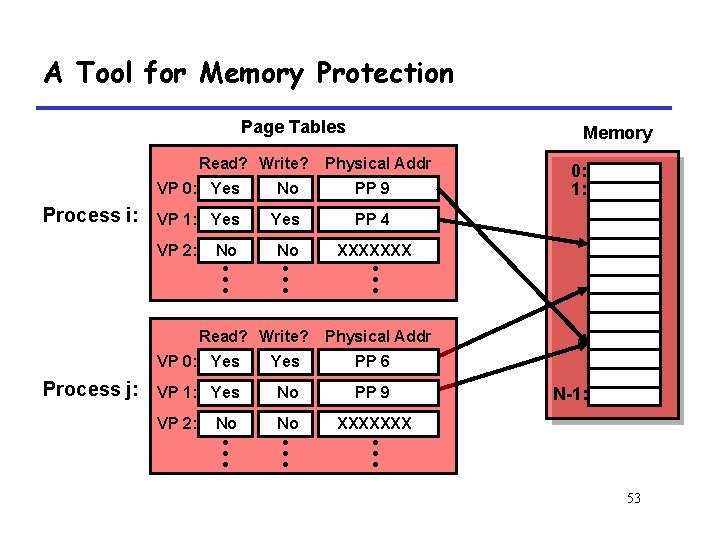

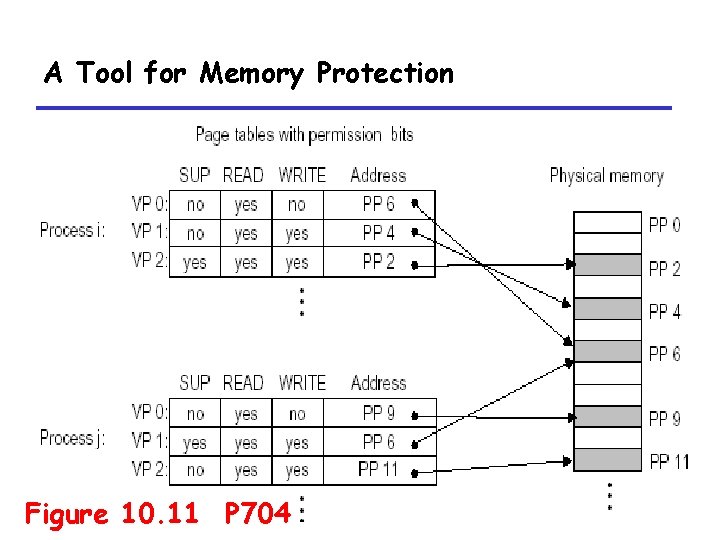

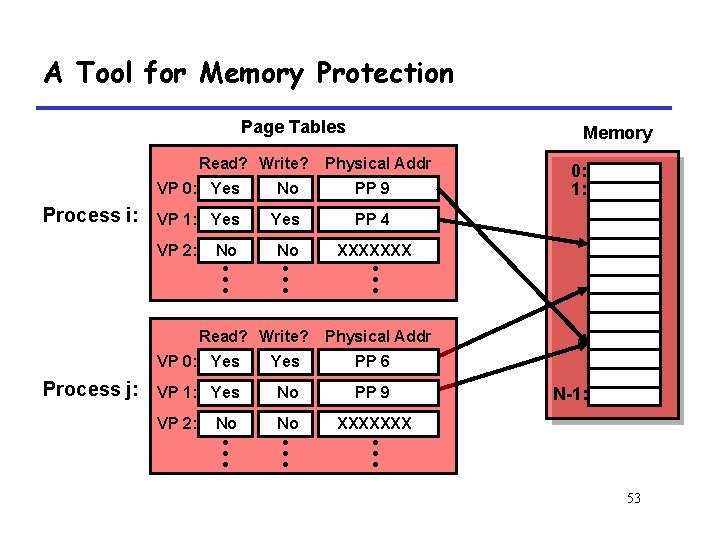

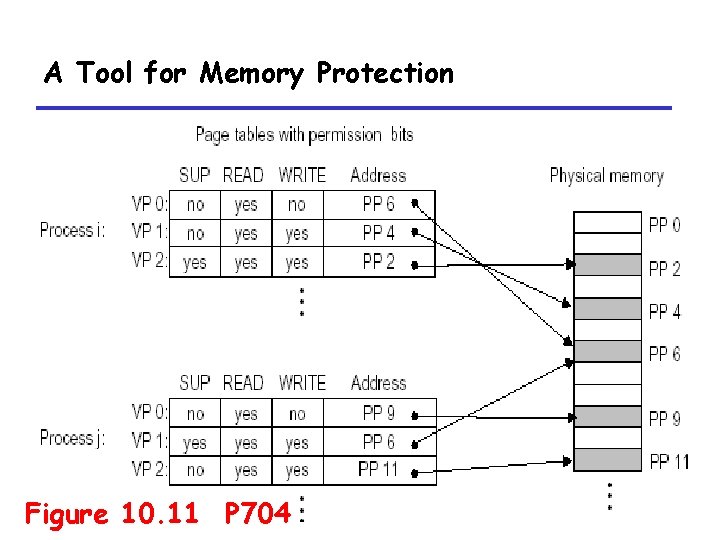

A Tool for Memory Protection
• Page table entries contain access-rights information
  – Hardware enforces this protection, trapping into the OS if a violation occurs

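A Tool for Memory Protection

Page tables:

| Process i | Read? | Write? | Physical Addr |
|---|---|---|---|
| VP 0 | Yes | No | PP 9 |
| VP 1 | Yes | Yes | PP 4 |
| VP 2 | No | No | XXXXXXX |
| … | | | |

| Process j | Read? | Write? | Physical Addr |
|---|---|---|---|
| VP 0 | Yes | Yes | PP 6 |
| VP 1 | Yes | No | PP 9 |
| VP 2 | No | No | XXXXXXX |
| … | | | |

(Figure: both page tables point into physical memory, addresses 0 … N-1)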
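The check the hardware performs on each access can be sketched as follows. This is a hypothetical Python model, assuming the permission bits shown on the slide for processes i and j; `PTE`, `ProtectionFault`, and `check_access` are illustrative names, and raising an exception stands in for the trap into the OS.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PTE:
    read: bool
    write: bool
    ppn: Optional[int]  # None stands for the slide's "XXXXXXX" (no mapping)


class ProtectionFault(Exception):
    """Stands in for the trap into the OS when an access violates the PTE bits."""


# Page tables from the slide; PP 9 is reachable from both processes.
PAGE_TABLES = {
    "i": {0: PTE(True, False, 9), 1: PTE(True, True, 4), 2: PTE(False, False, None)},
    "j": {0: PTE(True, True, 6), 1: PTE(True, False, 9), 2: PTE(False, False, None)},
}


def check_access(process: str, vpn: int, is_write: bool) -> int:
    """Return the PPN if the access is permitted, else raise ProtectionFault."""
    pte = PAGE_TABLES[process][vpn]
    allowed = pte.write if is_write else pte.read
    if not allowed or pte.ppn is None:
        raise ProtectionFault(f"process {process}: illegal access to VP {vpn}")
    return pte.ppn
```

PP 9 also illustrates sharing: process i reaches it as VP 0 and process j as VP 1, and neither may write to it.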

A Tool for Memory Protection: Figure 10.11 (p. 704)

10.6 Address Translation

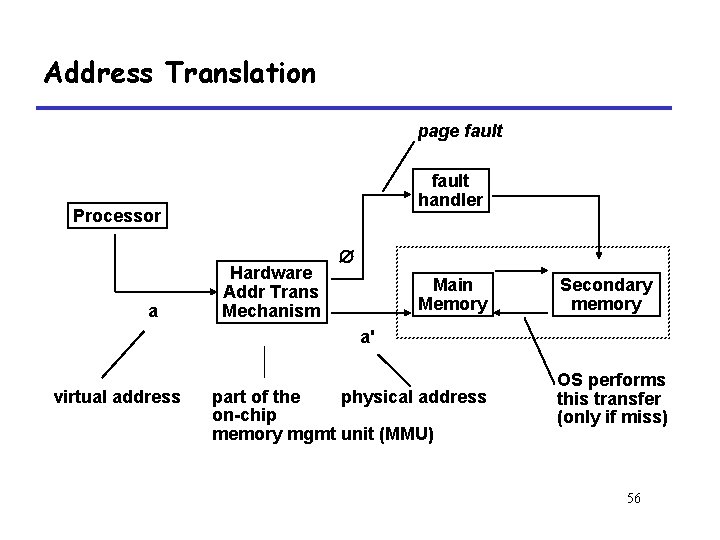

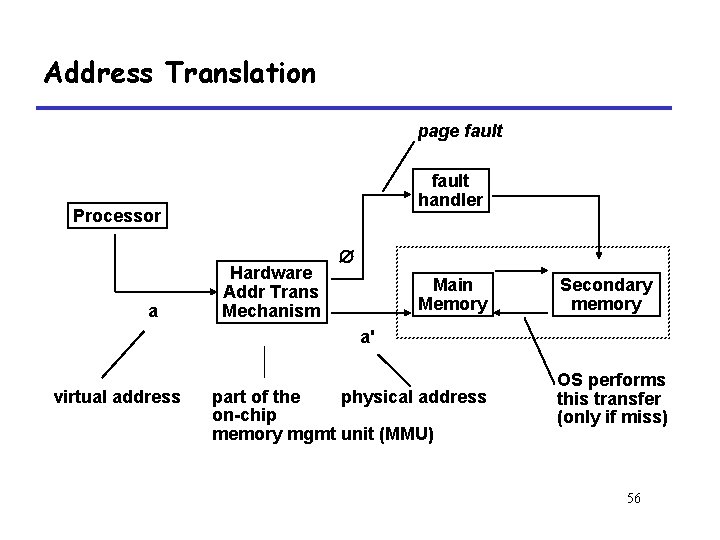

Address Translation (figure)
• The processor issues virtual address a; the hardware address-translation mechanism in the on-chip memory management unit (MMU) converts it to physical address a' in main memory
• On a miss, the page fault handler runs: the OS transfers the page from secondary memory into main memory (only if a miss occurs)

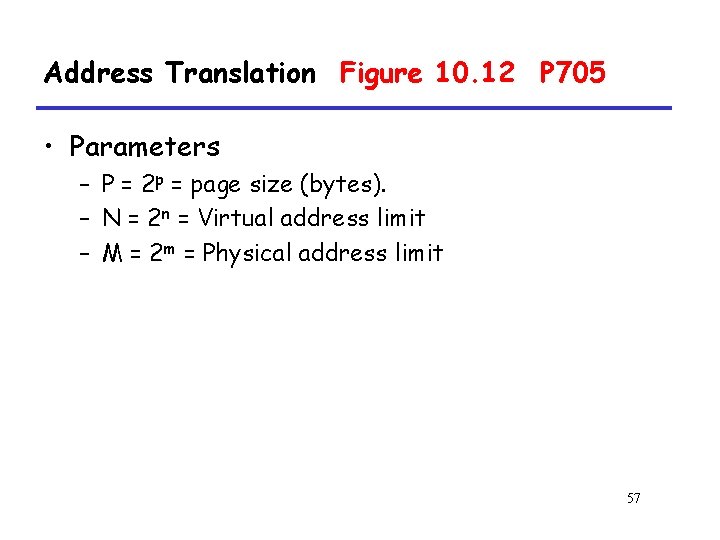

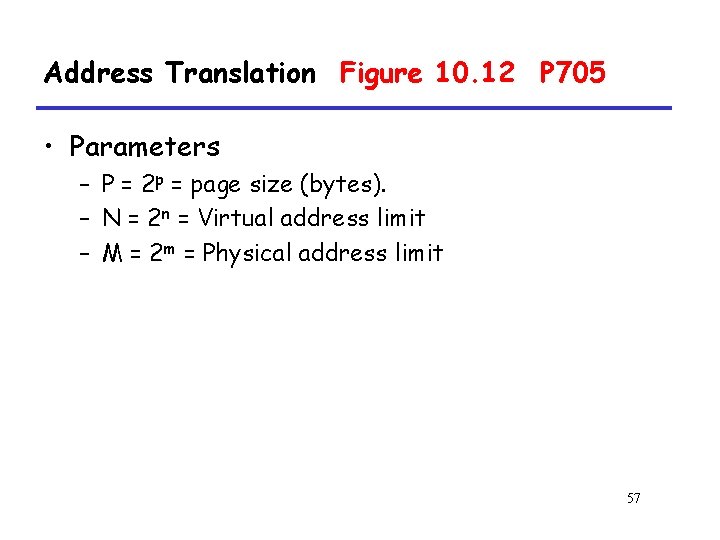

Address Translation: Figure 10.12 (p. 705)
• Parameters
  – $P = 2^p$ = page size (bytes)
  – $N = 2^n$ = virtual address limit
  – $M = 2^m$ = physical address limit

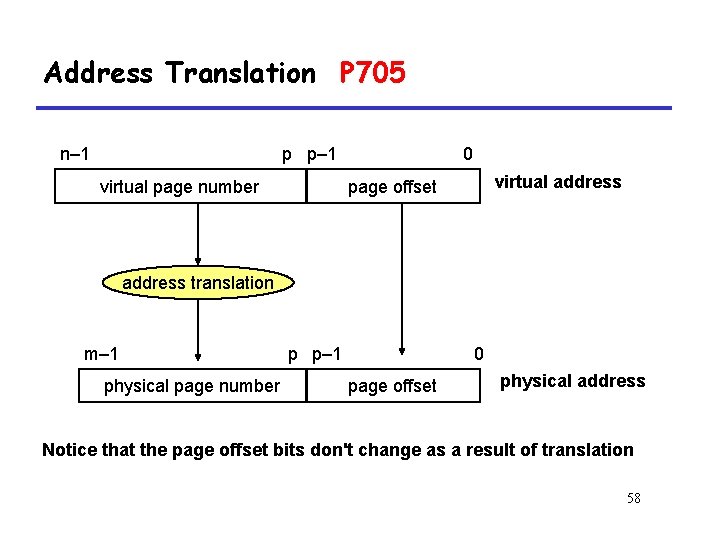

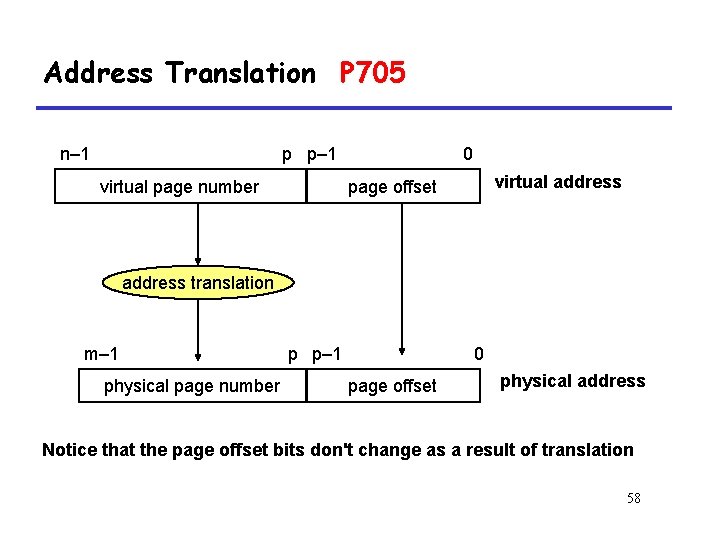

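Address Translation (p. 705)
• Virtual address: bits n-1 … p hold the virtual page number; bits p-1 … 0 hold the page offset
• Address translation replaces the virtual page number with a physical page number
• Physical address: bits m-1 … p hold the physical page number; bits p-1 … 0 hold the page offset
• Notice that the page offset bits don't change as a result of translation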

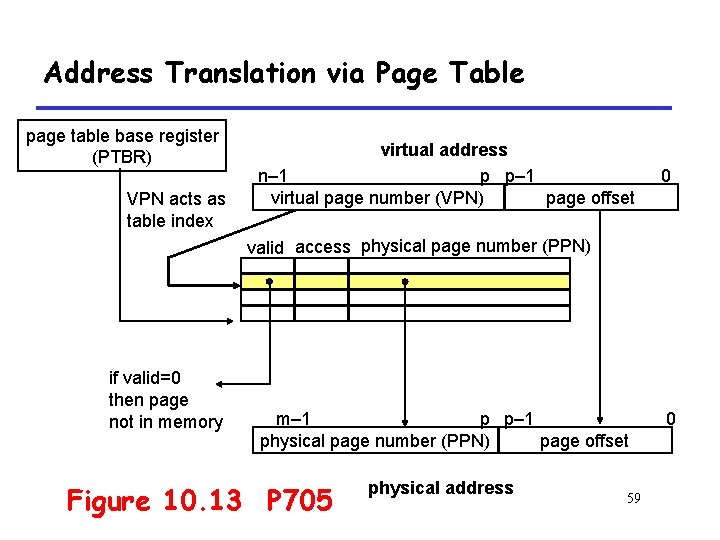

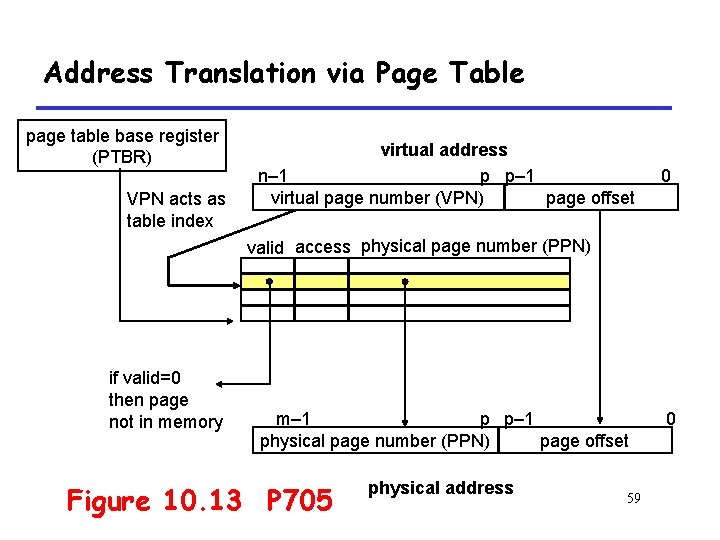

Address Translation via Page Table: Figure 10.13 (p. 705)
• The page table base register (PTBR) points to the current page table
• The virtual page number (VPN, bits n-1 … p of the virtual address) acts as the table index
• Each page table entry holds a valid bit, access bits, and a physical page number (PPN)
  – If valid = 0, the page is not in memory
• The physical address is the PPN (bits m-1 … p) followed by the unchanged page offset (bits p-1 … 0)
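The lookup in Figure 10.13 reduces to a shift, a mask, and a table index. Below is a minimal Python sketch, assuming $p = 12$ (4 KB pages) by default and modeling the in-memory page table as a hypothetical dictionary from VPN to `(valid, ppn)`; `translate` and `PageFault` are illustrative names.

```python
class PageFault(Exception):
    """Raised when the PTE's valid bit is 0 (the page is not in memory)."""


def translate(va: int, page_table: dict, p: int = 12) -> int:
    """Translate a virtual address with a single-level page table.

    page_table maps VPN -> (valid, ppn); it stands in for the array in
    memory that the PTBR points to (a modeling assumption).
    """
    vpn = va >> p                    # upper n - p bits index the page table
    vpo = va & ((1 << p) - 1)        # lower p bits are the page offset
    valid, ppn = page_table.get(vpn, (0, None))
    if not valid:
        raise PageFault(f"VPN {vpn:#x} is not in memory")
    return (ppn << p) | vpo          # PPO == VPO: the offset is copied unchanged


page_table = {0x12: (1, 0x7), 0x13: (0, None)}
print(hex(translate(0x12345, page_table)))  # -> 0x7345
```

Only the page number changes; the low 12 bits (0x345) pass straight through, which is why the offset field never needs a table lookup.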

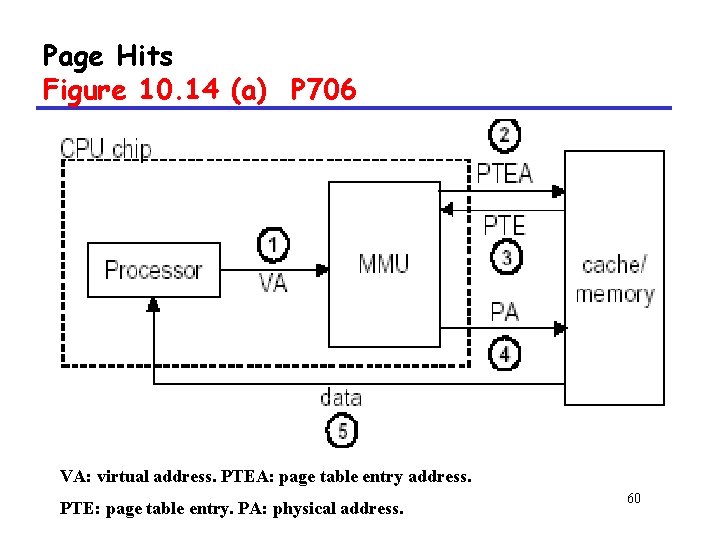

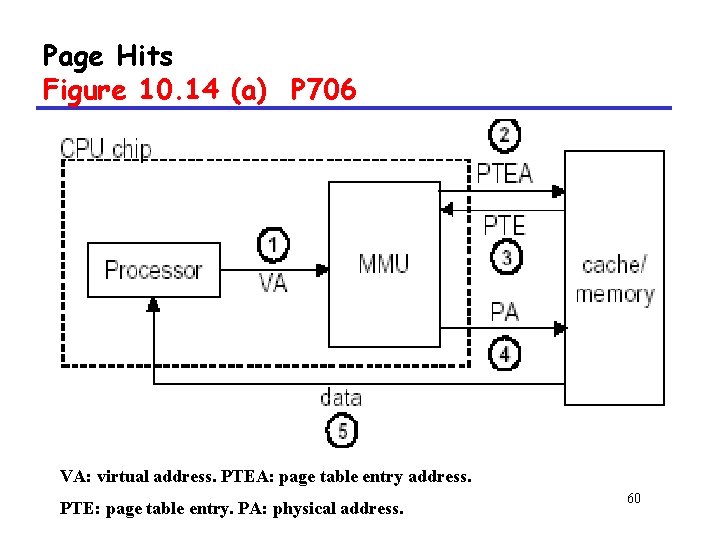

Page Hits: Figure 10.14(a) (p. 706)
VA: virtual address. PTEA: page table entry address. PTE: page table entry. PA: physical address.

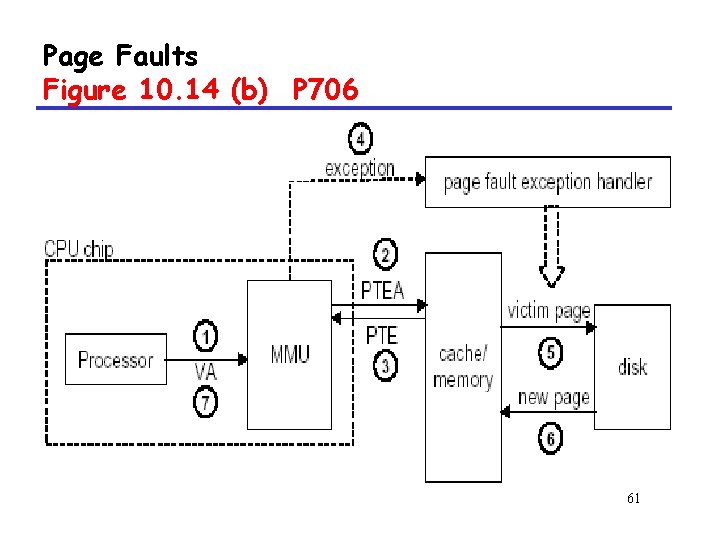

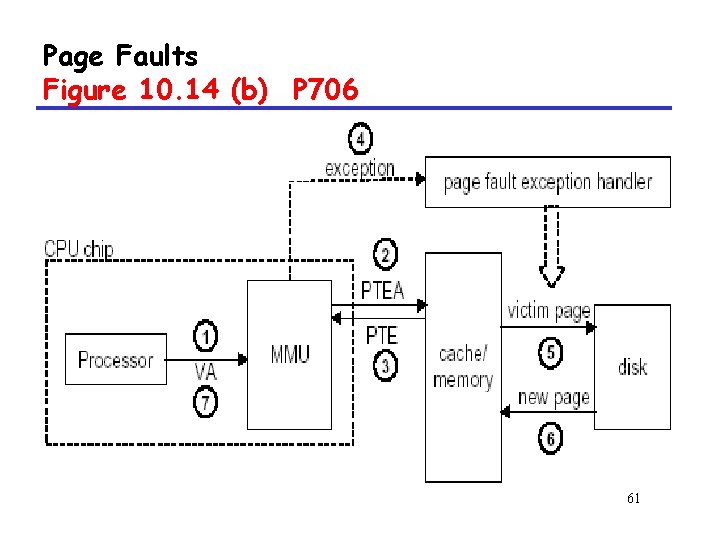

Page Faults: Figure 10.14(b) (p. 706)

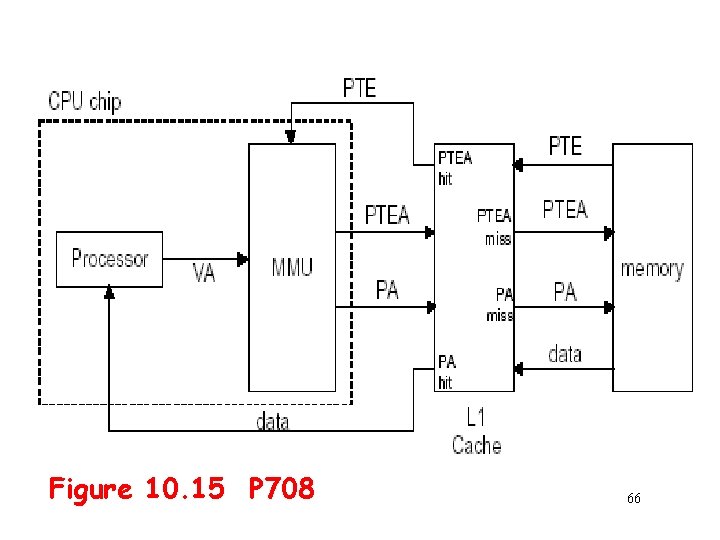

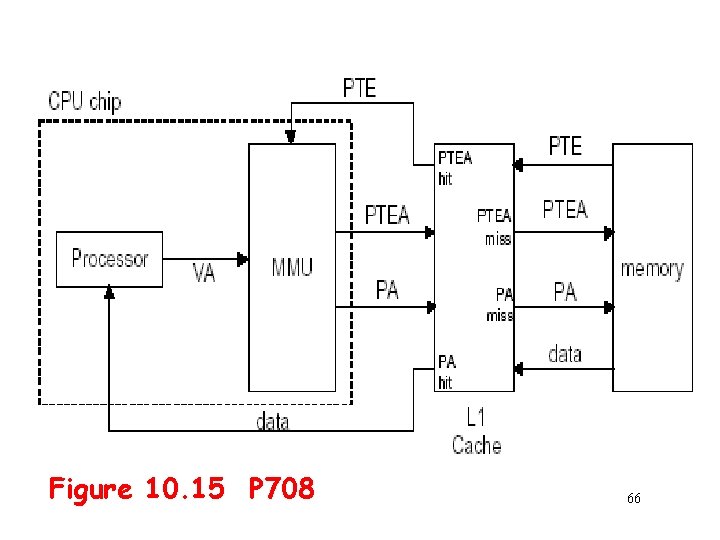

10.6.1 Integrating Caches and VM

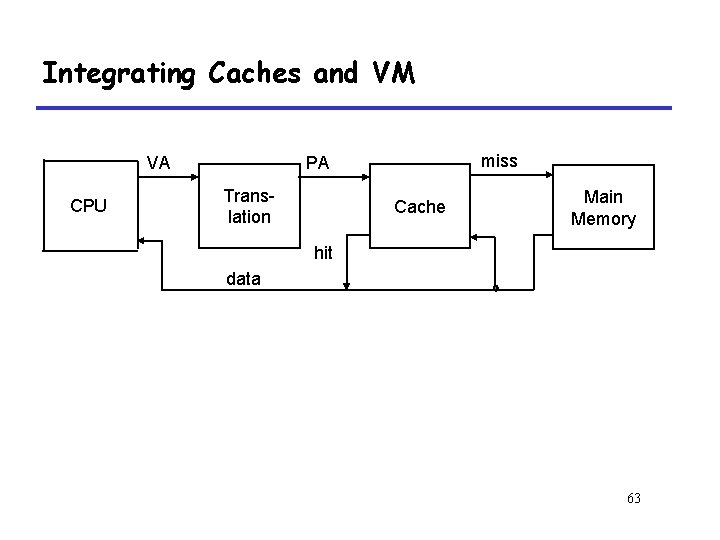

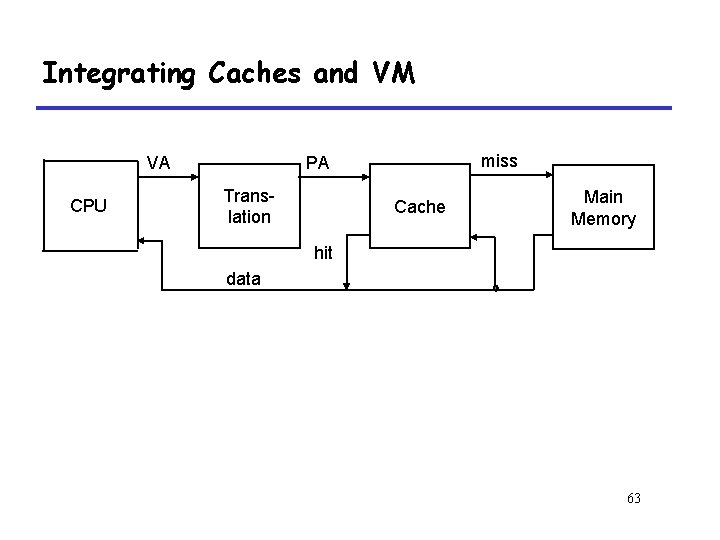

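Integrating Caches and VM (figure)
• The CPU issues a VA; translation produces a PA, which is sent to the cache
• On a cache hit, the cache returns the data; on a miss, the data comes from main memory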

Integrating Caches and VM
• Most caches are "physically addressed"
  – Accessed by physical addresses
  – Allows multiple processes to have blocks in the cache at the same time
  – Allows multiple processes to share pages
  – The cache doesn't need to be concerned with protection issues
    • Access rights are checked as part of address translation

Integrating Caches and VM
• Perform address translation before the cache lookup
  – But this could itself involve a memory access (to fetch the PTE)
  – Of course, page table entries can also be cached

Figure 10.15 (p. 708)

10.6.2 Speeding up Address Translation with a TLB

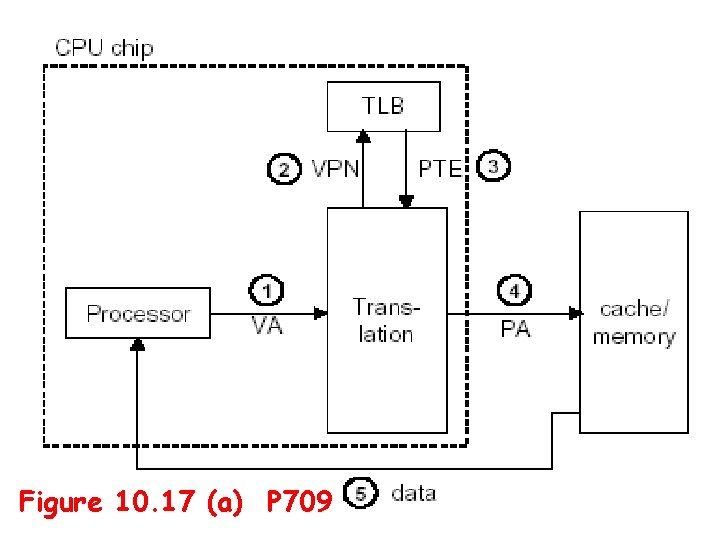

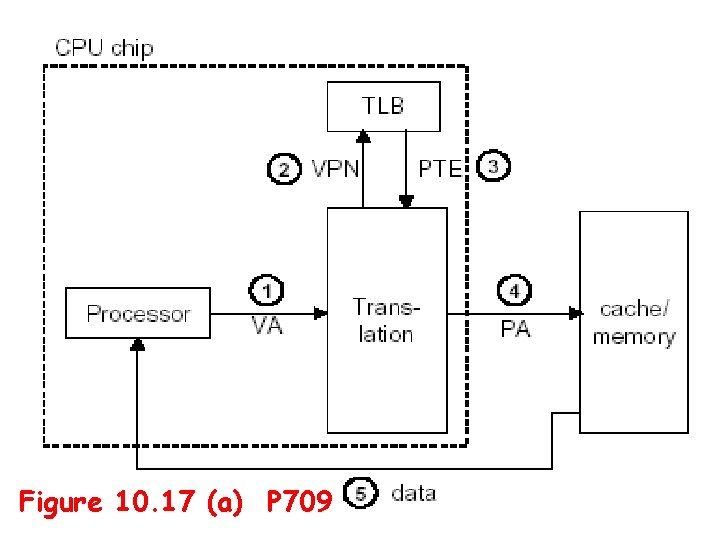

Speeding up Translation with a TLB
• Translation lookaside buffer (TLB)
  – A small hardware cache in the MMU
  – Maps virtual page numbers to physical page numbers

Figure 10.17(a) (p. 709)

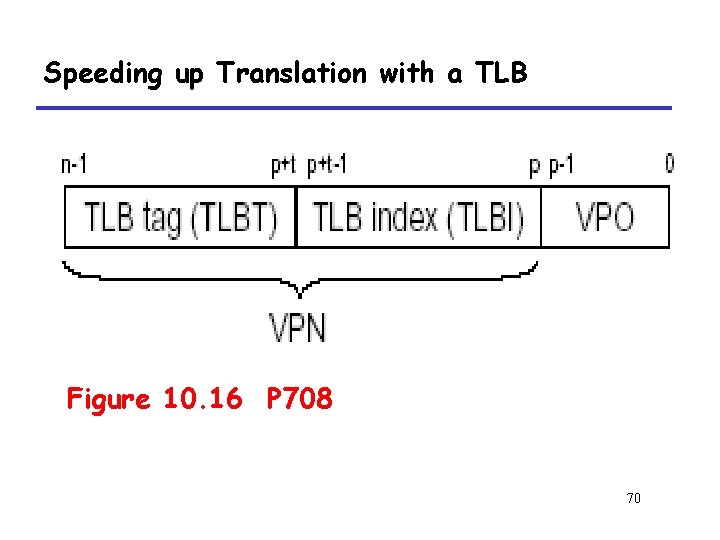

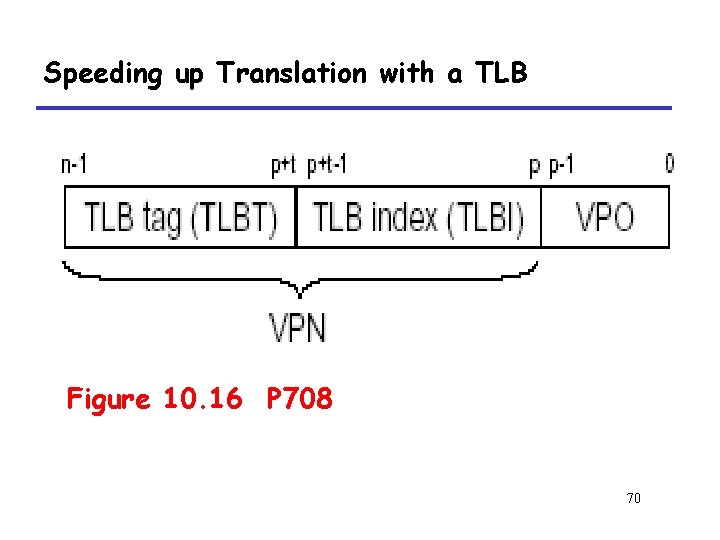

Speeding up Translation with a TLB: Figure 10.16 (p. 708)

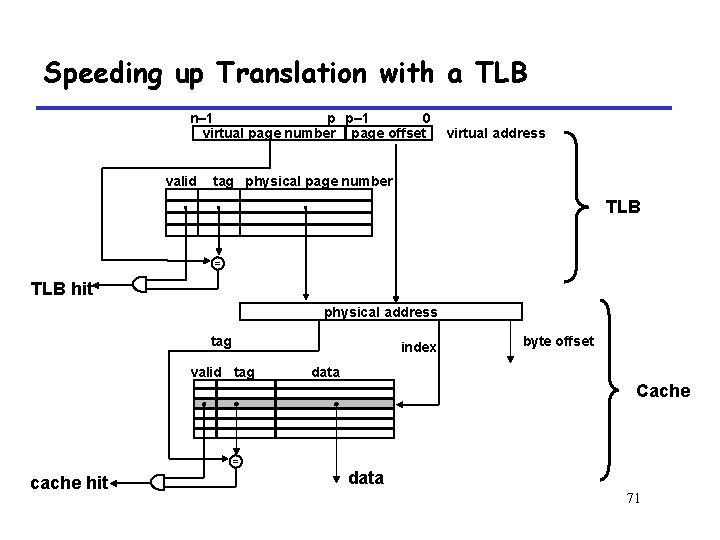

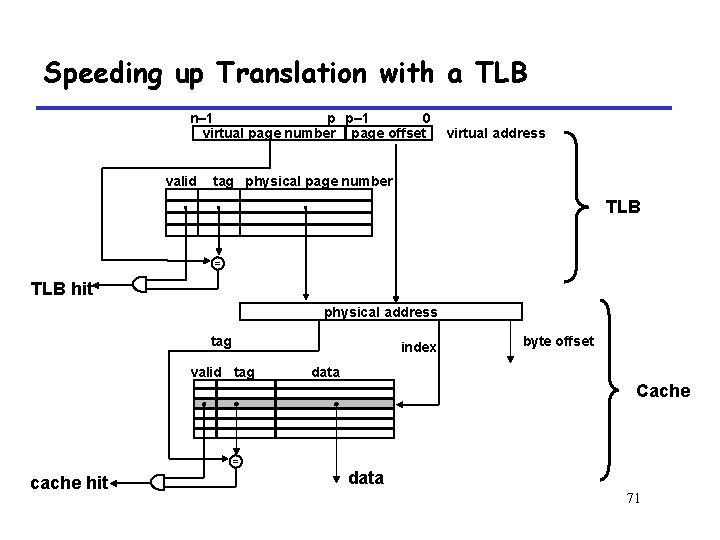

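Speeding up Translation with a TLB (figure)
• The virtual address is split into a virtual page number (bits n-1 … p) and a page offset (bits p-1 … 0)
• The virtual page number is compared against the tags of valid TLB entries; on a TLB hit, the matching entry supplies the physical page number
• The resulting physical address is split into tag, index, and byte offset for the cache lookup; on a cache hit, the cache returns the data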

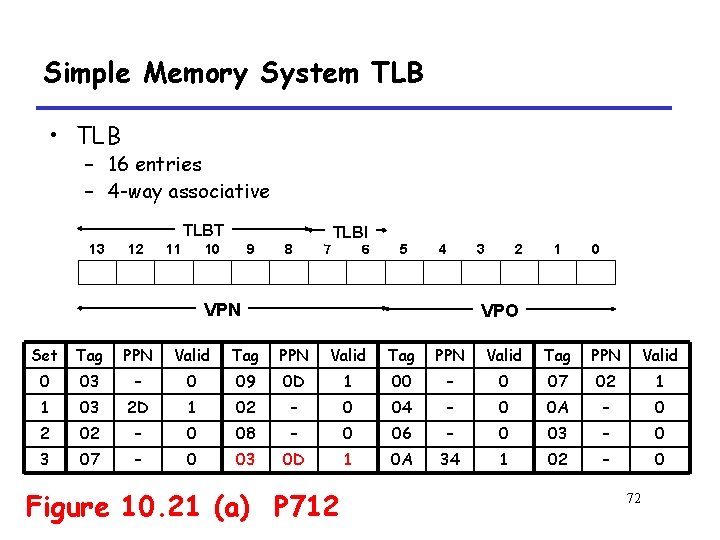

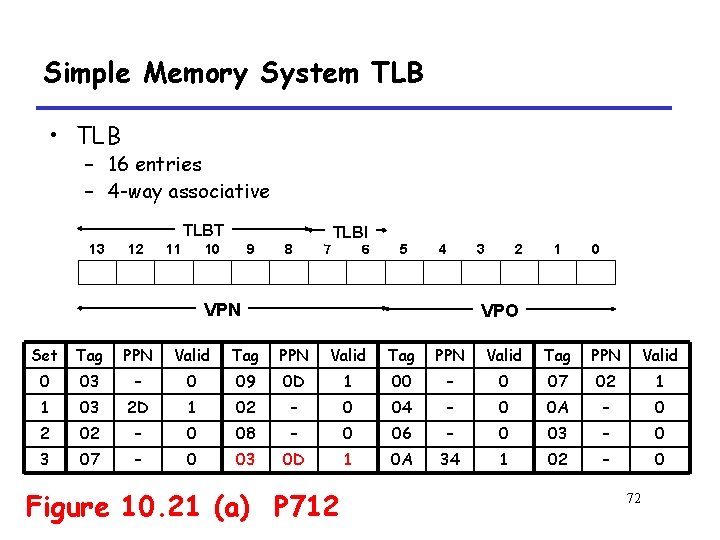

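Simple Memory System TLB
• TLB
  – 16 entries
  – 4-way set associative (4 sets)
• Virtual address fields: TLBT = bits 13-8 and TLBI = bits 7-6 (together the VPN, bits 13-6); VPO = bits 5-0

| Set | Tag | PPN | Valid | Tag | PPN | Valid | Tag | PPN | Valid | Tag | PPN | Valid |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 03 | – | 0 | 09 | 0D | 1 | 00 | – | 0 | 07 | 02 | 1 |
| 1 | 03 | 2D | 1 | 02 | – | 0 | 04 | – | 0 | 0A | – | 0 |
| 2 | 02 | – | 0 | 08 | – | 0 | 06 | – | 0 | 03 | – | 0 |
| 3 | 07 | – | 0 | 03 | 0D | 1 | 0A | 34 | 1 | 02 | – | 0 |

Figure 10.21(a) (p. 712)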

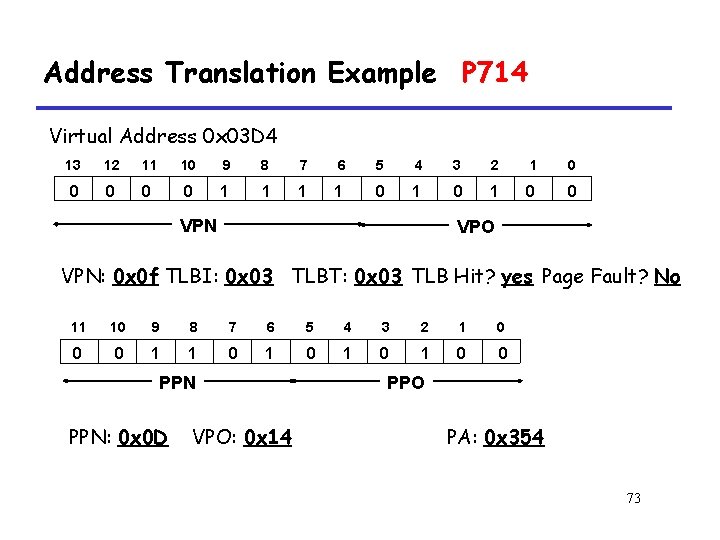

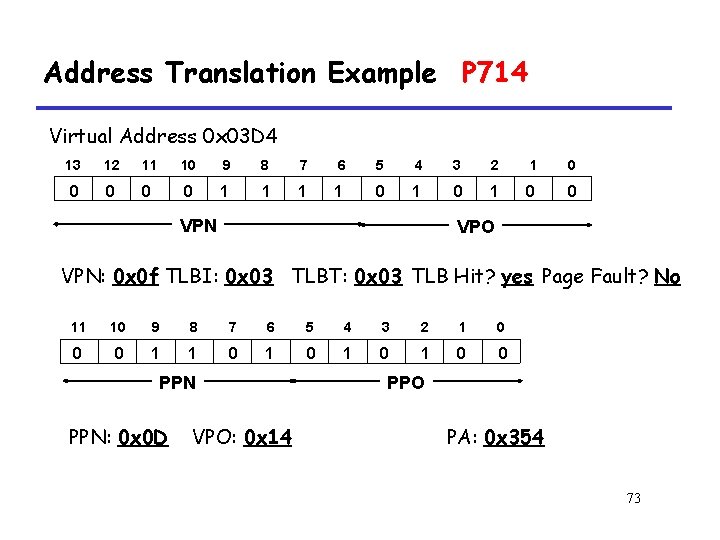

Address Translation Example (p. 714)
• Virtual address 0x03D4 = 00 0011 1101 0100 (bits 13-0)
  – VPN (bits 13-6) = 0x0F; VPO (bits 5-0) = 0x14
  – TLBI = 0x03; TLBT = 0x03
  – TLB hit? Yes (set 3 holds a valid entry with tag 0x03 and PPN 0x0D). Page fault? No
• Physical address: PPN 0x0D (bits 11-6) followed by PPO = VPO = 0x14 (bits 5-0)
  – PA = 0011 0101 0100 = 0x354
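The same TLB lookup can be sketched in a few lines of Python. This is an illustrative model of the simple memory system, assuming the TLB contents of Figure 10.21(a) transcribed as a dictionary; `tlb_translate` is a hypothetical helper that returns `None` for the physical address on a TLB miss.

```python
VPO_BITS = 6    # 64-byte pages
TLBI_BITS = 2   # 16 entries / 4 ways = 4 sets

# TLB contents from Figure 10.21(a): set index -> list of (tag, ppn, valid).
TLB = {
    0: [(0x03, None, 0), (0x09, 0x0D, 1), (0x00, None, 0), (0x07, 0x02, 1)],
    1: [(0x03, 0x2D, 1), (0x02, None, 0), (0x04, None, 0), (0x0A, None, 0)],
    2: [(0x02, None, 0), (0x08, None, 0), (0x06, None, 0), (0x03, None, 0)],
    3: [(0x07, None, 0), (0x03, 0x0D, 1), (0x0A, 0x34, 1), (0x02, None, 0)],
}


def tlb_translate(va: int):
    """Return (vpn, tlbi, tlbt, pa); pa is None on a TLB miss."""
    vpo = va & ((1 << VPO_BITS) - 1)
    vpn = va >> VPO_BITS
    tlbi = vpn & ((1 << TLBI_BITS) - 1)  # low VPN bits select the set
    tlbt = vpn >> TLBI_BITS              # remaining VPN bits form the tag
    for tag, ppn, valid in TLB[tlbi]:
        if valid and tag == tlbt:
            return vpn, tlbi, tlbt, (ppn << VPO_BITS) | vpo
    return vpn, tlbi, tlbt, None         # TLB miss: fall back to the page table


print([hex(x) for x in tlb_translate(0x03D4)])  # -> ['0xf', '0x3', '0x3', '0x354']
```

Because the TLB is set associative, only the four entries in set TLBI are compared, just as the hardware does in parallel.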

Simple Memory System Cache
• Cache
  – 16 lines
  – 4-byte line size
  – Direct mapped

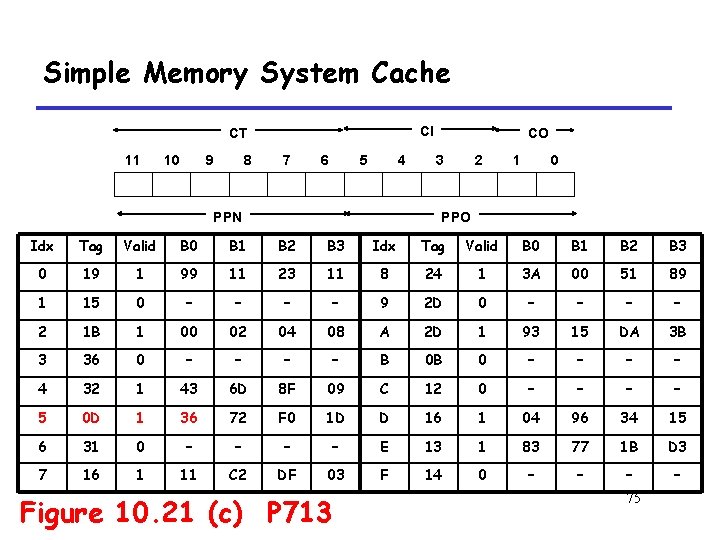

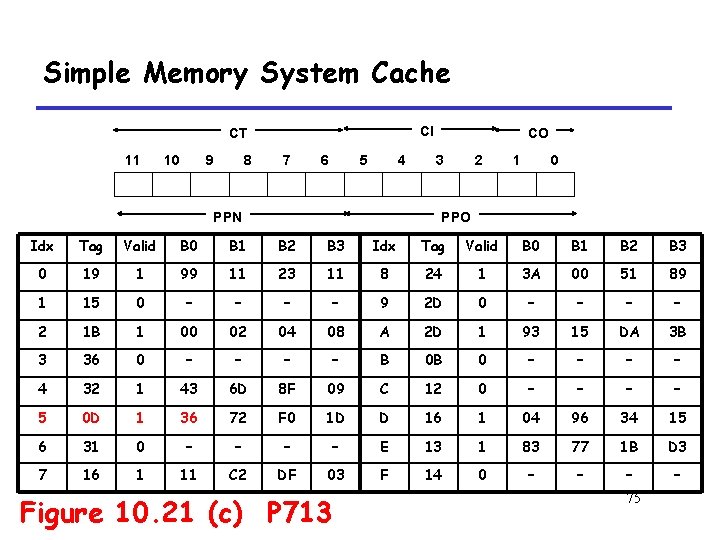

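Simple Memory System Cache
• Physical address fields: CT (cache tag) = bits 11-6, which coincide with the PPN; CI (cache index) = bits 5-2; CO (cache offset) = bits 1-0; CI and CO together make up the PPO

| Idx | Tag | Valid | B0 | B1 | B2 | B3 |
|---|---|---|---|---|---|---|
| 0 | 19 | 1 | 99 | 11 | 23 | 11 |
| 1 | 15 | 0 | – | – | – | – |
| 2 | 1B | 1 | 00 | 02 | 04 | 08 |
| 3 | 36 | 0 | – | – | – | – |
| 4 | 32 | 1 | 43 | 6D | 8F | 09 |
| 5 | 0D | 1 | 36 | 72 | F0 | 1D |
| 6 | 31 | 0 | – | – | – | – |
| 7 | 16 | 1 | 11 | C2 | DF | 03 |
| 8 | 24 | 1 | 3A | 00 | 51 | 89 |
| 9 | 2D | 0 | – | – | – | – |
| A | 2D | 1 | 93 | 15 | DA | 3B |
| B | 0B | 0 | – | – | – | – |
| C | 12 | 0 | – | – | – | – |
| D | 16 | 1 | 04 | 96 | 34 | 15 |
| E | 13 | 1 | 83 | 77 | 1B | D3 |
| F | 14 | 0 | – | – | – | – |

Figure 10.21(c) (p. 713)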

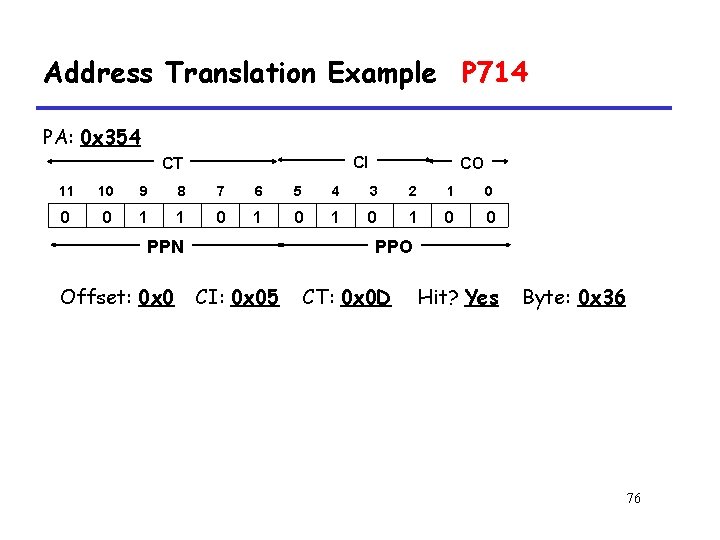

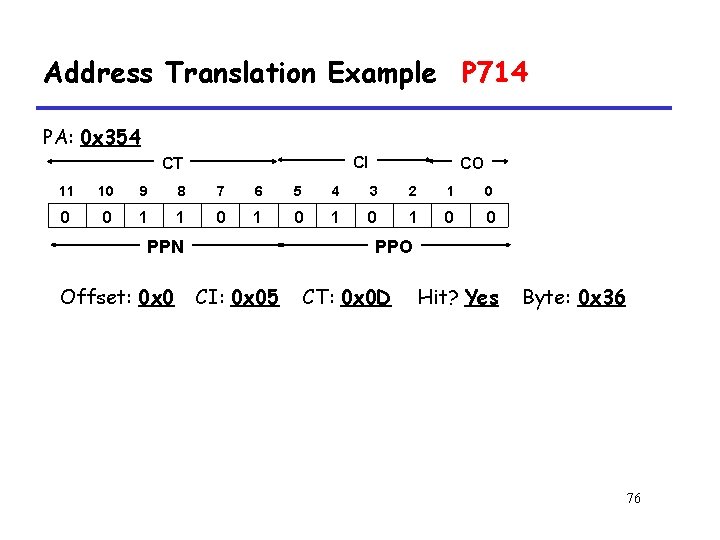

Address Translation Example (p. 714)
• Physical address 0x354 = 0011 0101 0100 (bits 11-0)
  – CO (bits 1-0) = 0x0; CI (bits 5-2) = 0x05; CT (bits 11-6) = 0x0D
  – Hit? Yes (line 5 is valid with tag 0x0D). Byte returned: 0x36
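The cache step can be sketched the same way. This hypothetical Python model transcribes Figure 10.21(c) into a dictionary (invalid lines carry no data) and splits the 12-bit physical address into CO (2 bits), CI (4 bits), and CT (6 bits); `cache_read` is an illustrative name.

```python
CO_BITS, CI_BITS = 2, 4  # 4-byte blocks, 16 lines, direct mapped

# Cache contents from Figure 10.21(c): index -> (tag, valid, [B0, B1, B2, B3]).
CACHE = {
    0x0: (0x19, 1, [0x99, 0x11, 0x23, 0x11]),
    0x1: (0x15, 0, None),
    0x2: (0x1B, 1, [0x00, 0x02, 0x04, 0x08]),
    0x3: (0x36, 0, None),
    0x4: (0x32, 1, [0x43, 0x6D, 0x8F, 0x09]),
    0x5: (0x0D, 1, [0x36, 0x72, 0xF0, 0x1D]),
    0x6: (0x31, 0, None),
    0x7: (0x16, 1, [0x11, 0xC2, 0xDF, 0x03]),
    0x8: (0x24, 1, [0x3A, 0x00, 0x51, 0x89]),
    0x9: (0x2D, 0, None),
    0xA: (0x2D, 1, [0x93, 0x15, 0xDA, 0x3B]),
    0xB: (0x0B, 0, None),
    0xC: (0x12, 0, None),
    0xD: (0x16, 1, [0x04, 0x96, 0x34, 0x15]),
    0xE: (0x13, 1, [0x83, 0x77, 0x1B, 0xD3]),
    0xF: (0x14, 0, None),
}


def cache_read(pa: int):
    """Return (co, ci, ct, byte); byte is None on a cache miss."""
    co = pa & ((1 << CO_BITS) - 1)                # byte within the block
    ci = (pa >> CO_BITS) & ((1 << CI_BITS) - 1)   # selects exactly one line
    ct = pa >> (CO_BITS + CI_BITS)                # compared with the line's tag
    tag, valid, block = CACHE[ci]
    if valid and tag == ct:
        return co, ci, ct, block[co]
    return co, ci, ct, None


print([hex(x) for x in cache_read(0x354)])  # -> ['0x0', '0x5', '0xd', '0x36']
```

In a direct-mapped cache the index picks a single line, so one tag comparison decides hit or miss.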

10.6.3 Multi-Level Page Tables

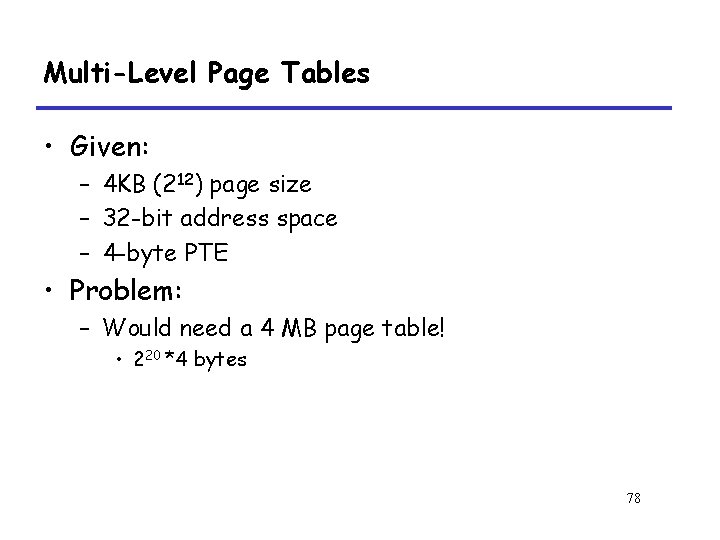

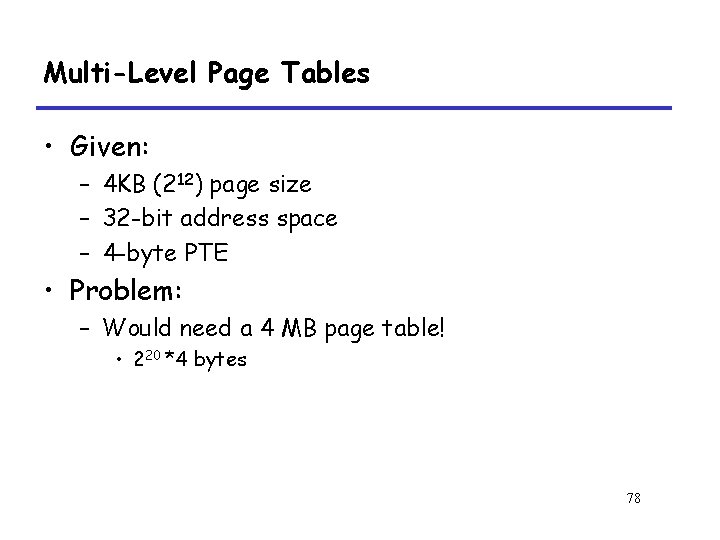

Multi-Level Page Tables
• Given:
  – 4 KB ($2^{12}$-byte) page size
  – 32-bit address space
  – 4-byte PTEs
• Problem:
  – Would need a 4 MB page table!
    • $2^{20} \times 4$ bytes
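The 4 MB figure follows from counting PTEs: a 32-bit address space with 4 KB pages has one PTE per virtual page.

$$
\text{number of PTEs} = \frac{2^{32}}{2^{12}} = 2^{20},
\qquad
2^{20} \times 4\ \text{bytes} = 2^{22}\ \text{bytes} = 4\ \text{MB}
$$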

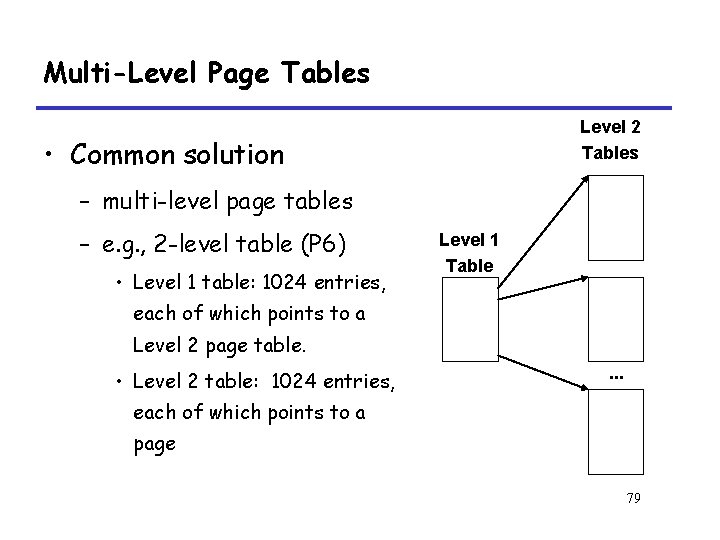

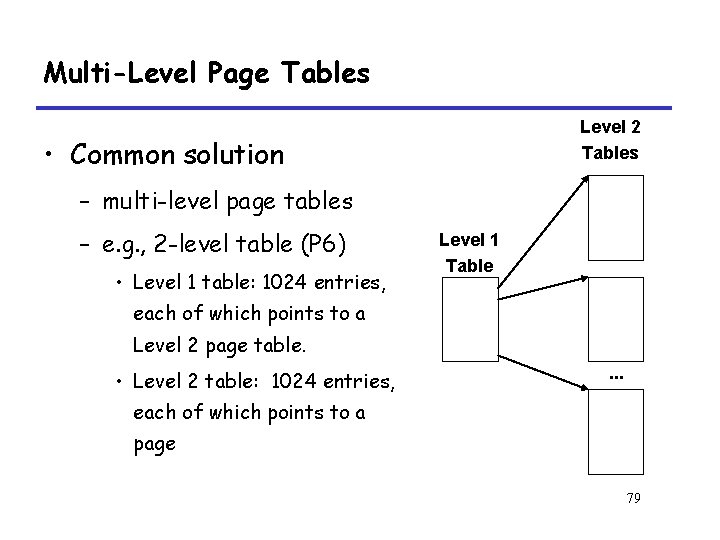

Multi-Level Page Tables
• Common solution: multi-level page tables
  – e.g., a 2-level table (P6)
• Level 1 table: 1024 entries, each of which points to a level 2 page table
• Level 2 table: 1024 entries, each of which points to a page
(Figure: a level 1 table pointing to level 2 tables)
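The 2-level scheme splits a 32-bit virtual address into a 10-bit level 1 index, a 10-bit level 2 index, and a 12-bit page offset. Below is a minimal Python sketch, assuming dictionaries stand in for the in-memory tables; `split_va`, `translate_2level`, and the sample mapping are hypothetical.

```python
class PageFault(Exception):
    """Raised when a level 1 or level 2 entry is missing (not mapped)."""


def split_va(va: int):
    """Split a 32-bit VA into (VPN1, VPN2, VPO) = 10 + 10 + 12 bits."""
    vpo = va & 0xFFF             # bits 11-0: offset within a 4 KB page
    vpn2 = (va >> 12) & 0x3FF    # bits 21-12: index into a level 2 table
    vpn1 = (va >> 22) & 0x3FF    # bits 31-22: index into the level 1 table
    return vpn1, vpn2, vpo


def translate_2level(va: int, level1: dict) -> int:
    vpn1, vpn2, vpo = split_va(va)
    level2 = level1.get(vpn1)    # None: no level 2 table exists for this 4 MB chunk
    if level2 is None or vpn2 not in level2:
        raise PageFault(f"VA {va:#010x} is not mapped")
    return (level2[vpn2] << 12) | vpo


# Hypothetical mapping: a single level 2 table holding a single page.
level1 = {0x001: {0x002: 0x5}}
print(hex(translate_2level(0x00402ABC, level1)))  # -> 0x5abc
```

The saving comes from absent entries: in this example only the level 1 table and one level 2 table would exist (about 8 KB of PTEs), instead of a flat 4 MB table.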

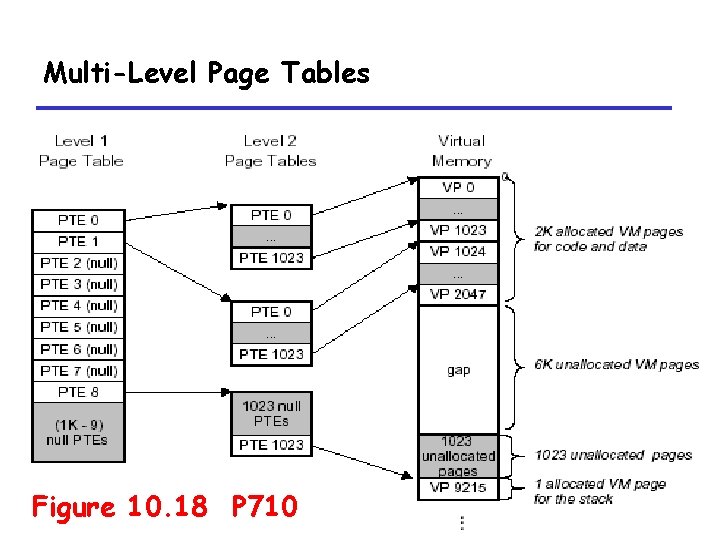

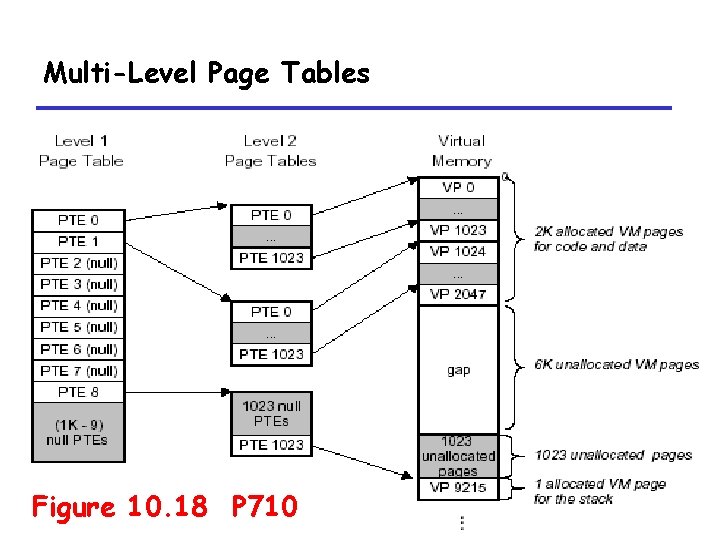

Multi-Level Page Tables: Figure 10.18 (p. 710)

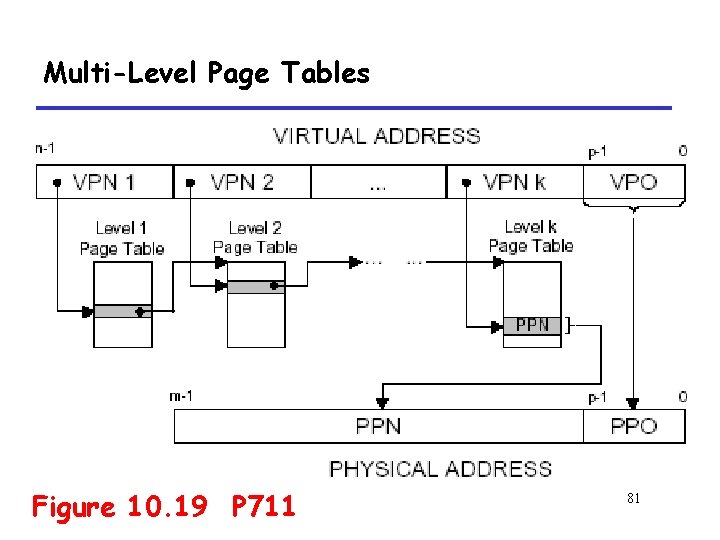

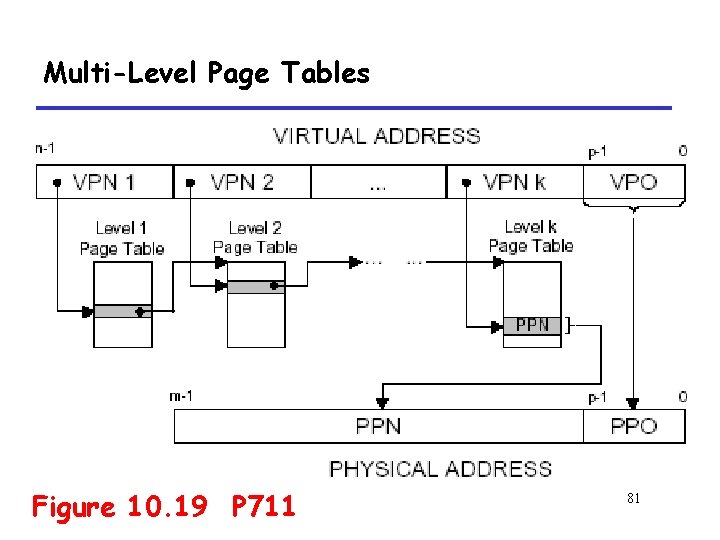

Multi-Level Page Tables: Figure 10.19 (p. 711)

10.6.4 Putting It Together: End-to-End Address Translation

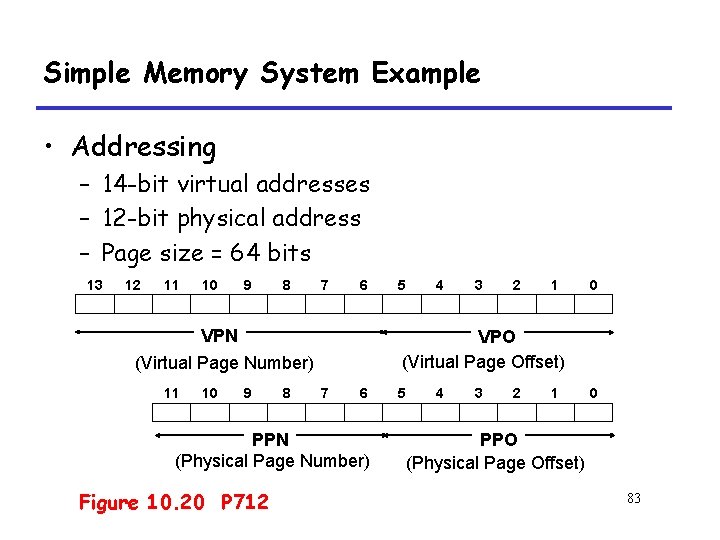

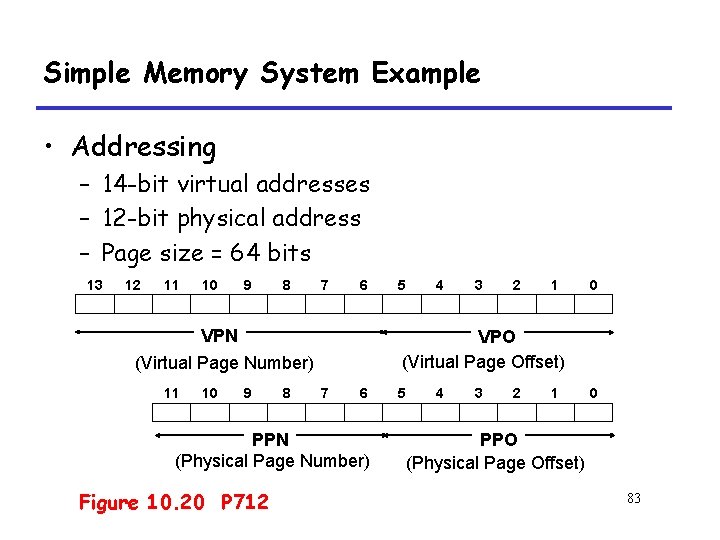

Simple Memory System Example: Figure 10.20 (p. 712)
• Addressing
  – 14-bit virtual addresses
  – 12-bit physical addresses
  – Page size = 64 bytes
• Virtual address: VPN (virtual page number) = bits 13-6; VPO (virtual page offset) = bits 5-0
• Physical address: PPN (physical page number) = bits 11-6; PPO (physical page offset) = bits 5-0

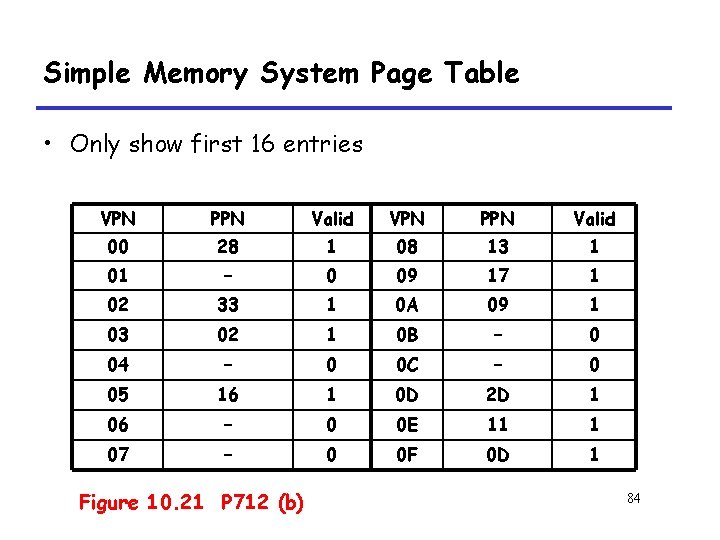

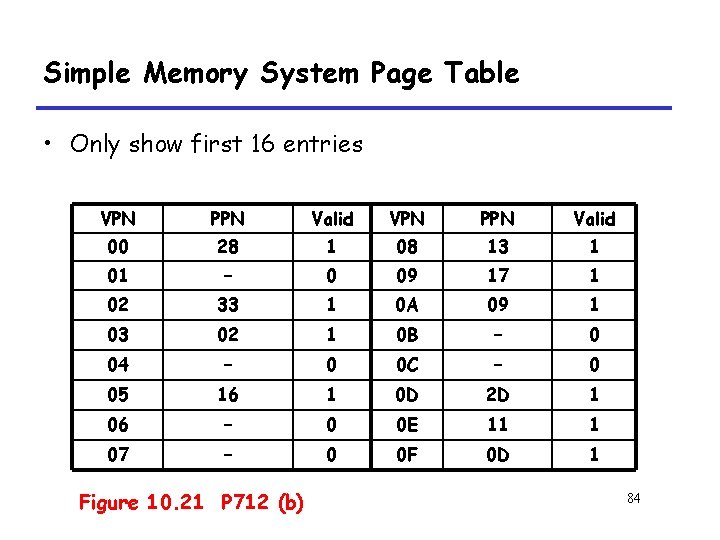

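Simple Memory System Page Table
• Only the first 16 entries are shown

| VPN | PPN | Valid | VPN | PPN | Valid |
|---|---|---|---|---|---|
| 00 | 28 | 1 | 08 | 13 | 1 |
| 01 | – | 0 | 09 | 17 | 1 |
| 02 | 33 | 1 | 0A | 09 | 1 |
| 03 | 02 | 1 | 0B | – | 0 |
| 04 | – | 0 | 0C | – | 0 |
| 05 | 16 | 1 | 0D | 2D | 1 |
| 06 | – | 0 | 0E | 11 | 1 |
| 07 | – | 0 | 0F | 0D | 1 |

Figure 10.21(b) (p. 712)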

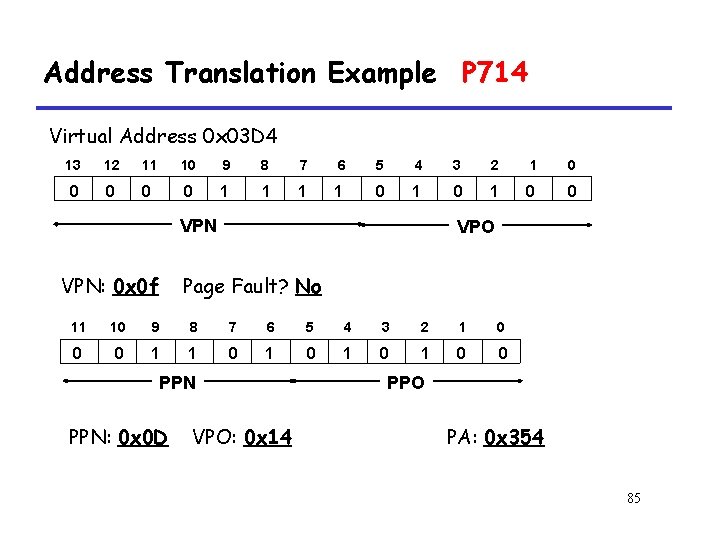

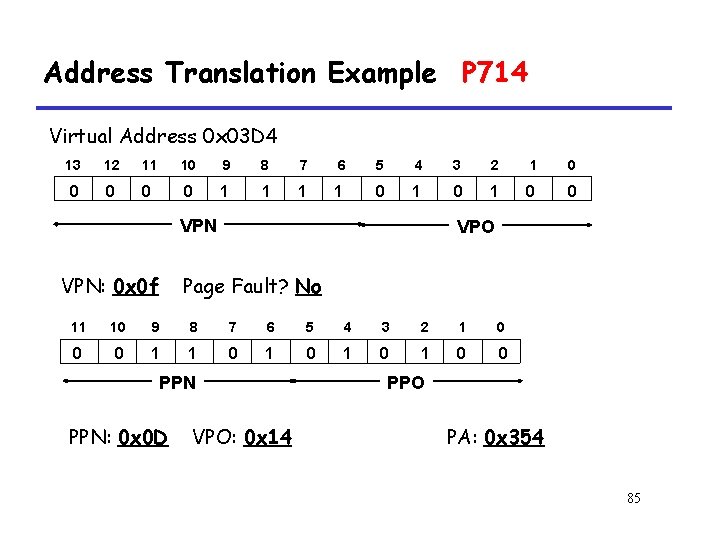

Address Translation Example (p. 714)
• Virtual address 0x03D4 = 00 0011 1101 0100 (bits 13-0)
  – VPN = 0x0F; VPO = 0x14
  – Page fault? No (PTE 0x0F is valid with PPN 0x0D)
• Physical address: PPN 0x0D followed by PPO = VPO = 0x14, giving PA = 0011 0101 0100 = 0x354
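Without the TLB, the MMU reads the PTE directly. Below is a hypothetical Python sketch using the 16 page table entries of Figure 10.21(b); only VPNs 0x00 through 0x0F appear on the slide, so other VPNs are outside this model, and `page_table_translate` is an illustrative name.

```python
VPO_BITS = 6  # 64-byte pages

# First 16 page table entries from Figure 10.21(b): VPN -> (PPN, valid).
PAGE_TABLE = {
    0x00: (0x28, 1), 0x01: (None, 0), 0x02: (0x33, 1), 0x03: (0x02, 1),
    0x04: (None, 0), 0x05: (0x16, 1), 0x06: (None, 0), 0x07: (None, 0),
    0x08: (0x13, 1), 0x09: (0x17, 1), 0x0A: (0x09, 1), 0x0B: (None, 0),
    0x0C: (None, 0), 0x0D: (0x2D, 1), 0x0E: (0x11, 1), 0x0F: (0x0D, 1),
}


def page_table_translate(va: int):
    """Return the PA for va, or None if the PTE is invalid (a page fault)."""
    vpn, vpo = va >> VPO_BITS, va & ((1 << VPO_BITS) - 1)
    ppn, valid = PAGE_TABLE[vpn]  # KeyError for VPNs not shown on the slide
    return (ppn << VPO_BITS) | vpo if valid else None


print(hex(page_table_translate(0x03D4)))  # -> 0x354
print(page_table_translate(0x04 << 6))    # -> None (VPN 0x04 is not valid)
```

The result for 0x03D4 matches the TLB path, as it must: the TLB only caches PTEs, so a hit and a page-table walk yield the same PPN.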