Video Human Action Classification

Group No. 14: Linjun Li, Yifan Cao, and Zheng Zhou

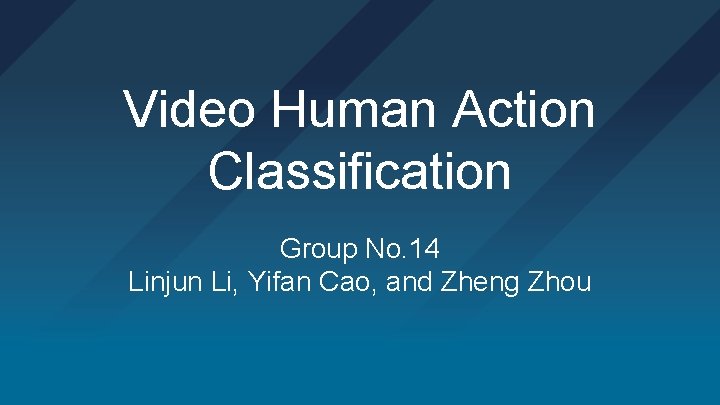

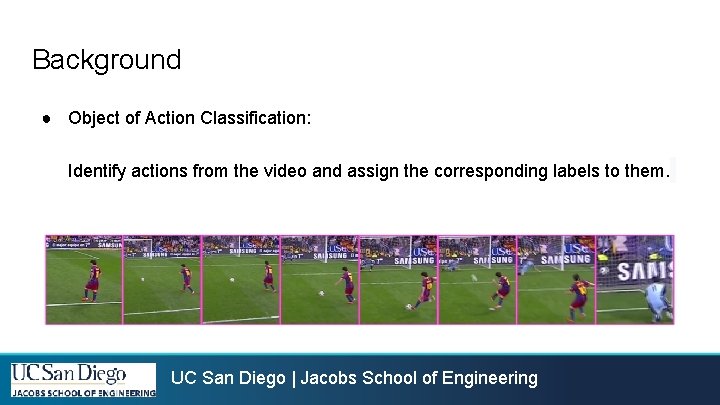

Background

● Objective of action classification: identify the actions in a video and assign the corresponding label to each.

UC San Diego | Jacobs School of Engineering

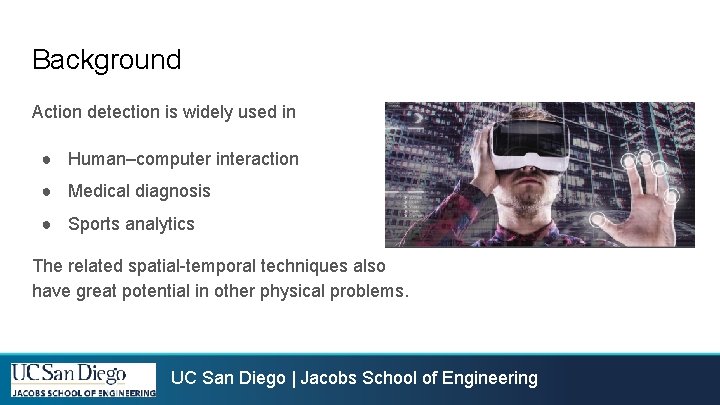

Background

Action detection is widely used in:

● Human–computer interaction
● Medical diagnosis
● Sports analytics

The related spatio-temporal techniques also have great potential for other physical problems.

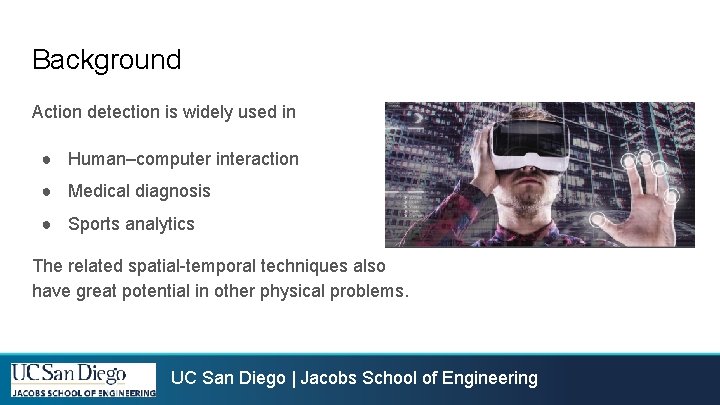

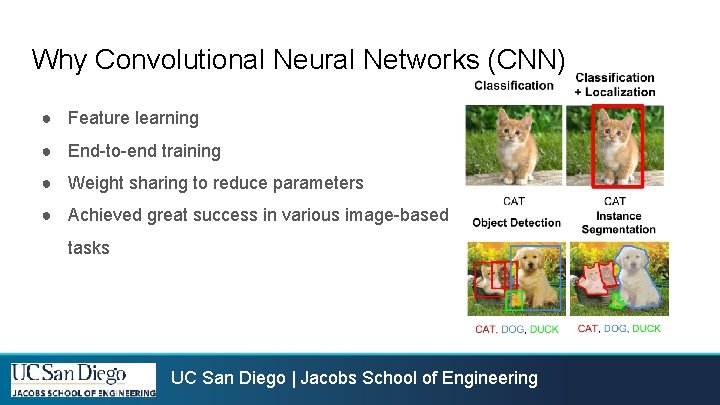

Why Convolutional Neural Networks (CNNs)

● Feature learning
● End-to-end training
● Weight sharing to reduce the number of parameters
● Great success in various image-based tasks
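The effect of weight sharing on parameter count can be illustrated with a little arithmetic. The sketch below is not from the slides: the 224×224 RGB input and the 64-filter 3×3 layer are assumed values chosen only for illustration, and the helper names are hypothetical.

```python
def conv2d_params(in_channels, out_channels, kernel_size):
    """Parameters of a square 2D convolution: shared weights plus one bias per filter."""
    return out_channels * (in_channels * kernel_size * kernel_size + 1)


def dense_params(in_features, out_features):
    """Parameters of a fully connected layer: one weight per input-output pair plus biases."""
    return out_features * (in_features + 1)


# Assumed example sizes (not taken from the slides).
height, width, in_channels, out_channels = 224, 224, 3, 64

conv = conv2d_params(in_channels, out_channels, kernel_size=3)
dense = dense_params(height * width * in_channels, height * width * out_channels)

print(f"3x3 convolution: {conv} parameters")
print(f"dense layer with the same input and output size: {dense} parameters")
```

The convolution reuses the same 3×3 filters at every spatial position, so its parameter count does not depend on the image size, whereas the dense layer grows with the product of input and output sizes.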

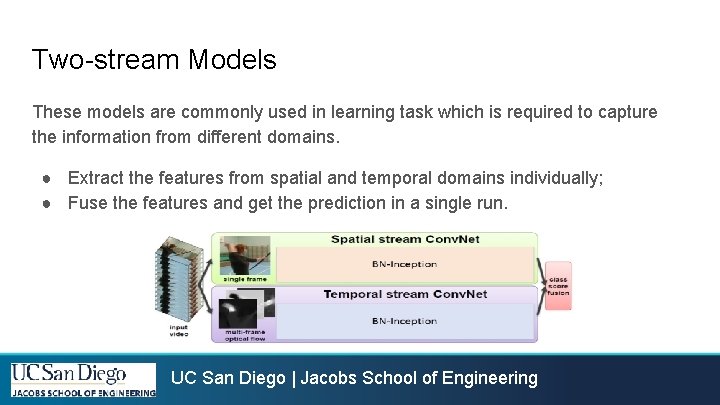

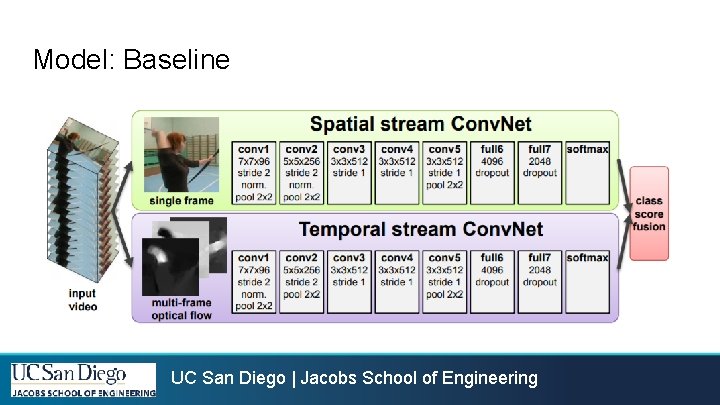

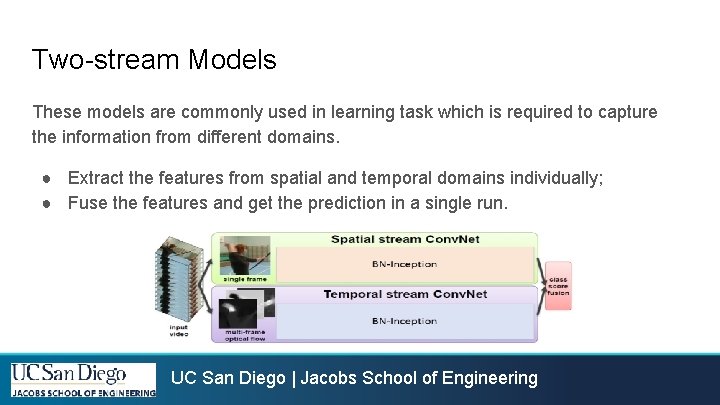

Two-stream Models

These models are commonly used for learning tasks that must capture information from different domains.

● Extract features from the spatial and temporal domains separately.
● Fuse the features and produce the prediction in a single run.
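One simple way to fuse two streams is to average their class probabilities, which is one of the fusion options used in the original two-stream paper [1]. The sketch below assumes that form of late fusion; it is not necessarily the fusion used by the models in this deck, and the function names and the `temporal_weight` parameter are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_scores(spatial_logits, temporal_logits, temporal_weight=0.5):
    """Weighted average of the per-class probabilities of the two streams."""
    p_spatial = softmax(spatial_logits)
    p_temporal = softmax(temporal_logits)
    w = temporal_weight
    return [(1 - w) * s + w * t for s, t in zip(p_spatial, p_temporal)]


def predict(fused):
    """Index of the class with the highest fused probability."""
    return max(range(len(fused)), key=fused.__getitem__)


# Toy logits for three classes: the spatial stream mildly prefers class 0,
# the temporal stream strongly prefers class 1.
fused = fuse_scores([2.0, 1.0, 0.0], [0.0, 3.0, 0.0])
print(predict(fused))
```

Averaging probabilities rather than raw logits keeps both streams on the same scale, so neither dominates merely because its scores have a larger magnitude.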

![Literature Survey](https://slidetodoc.com/presentation_image_h2/51dcd6b90c19f5c6459caff81eedb611/image-6.jpg)

Literature Survey

● Simonyan et al. [1] first proposed the two-stream network, which combines an appearance stream and a motion stream.
● Wang et al. [2] proposed the temporal segment network, which first divides the video sequence into several segments and then feeds them to the two-stream network.
● Gammulle et al. [3] integrated recurrent neural networks (RNNs) into the two-stream network to improve accuracy.

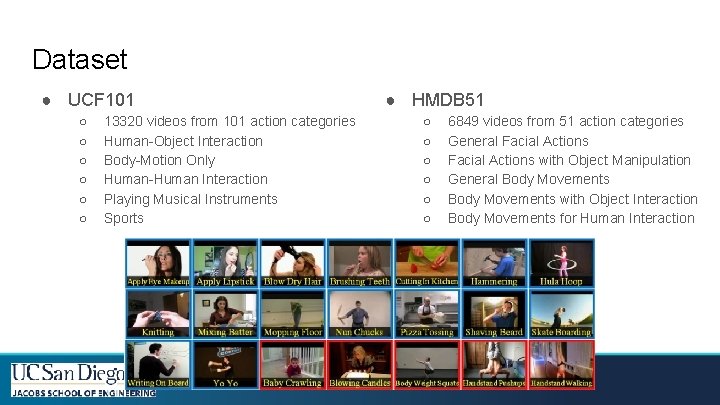

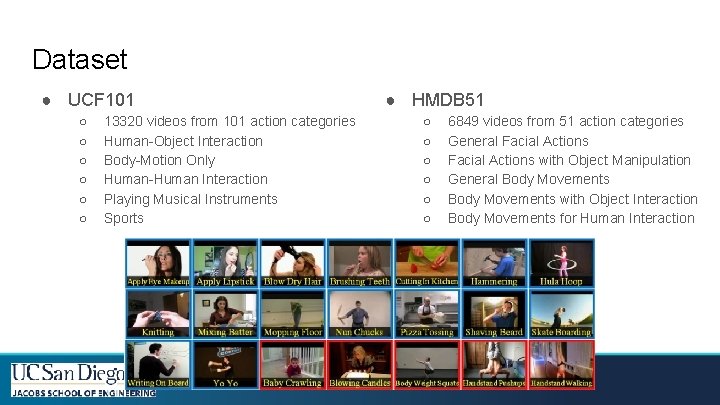

Dataset

● UCF101
○ 13320 videos from 101 action categories
○ Action types: Human-Object Interaction, Body-Motion Only, Human-Human Interaction, Playing Musical Instruments, Sports

● HMDB51
○ 6849 videos from 51 action categories
○ Action types: General Facial Actions, Facial Actions with Object Manipulation, General Body Movements, Body Movements with Object Interaction, Body Movements for Human Interaction

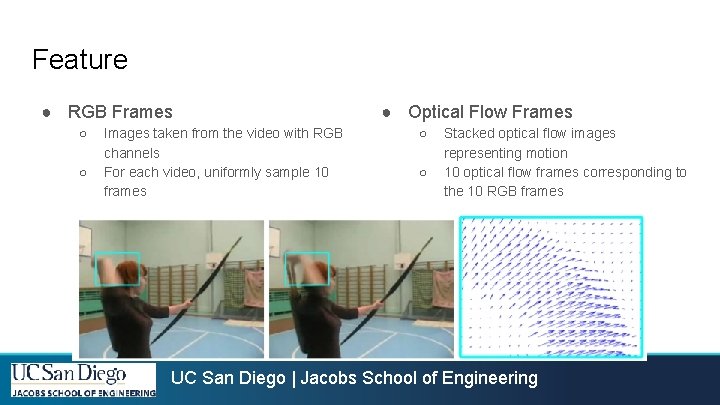

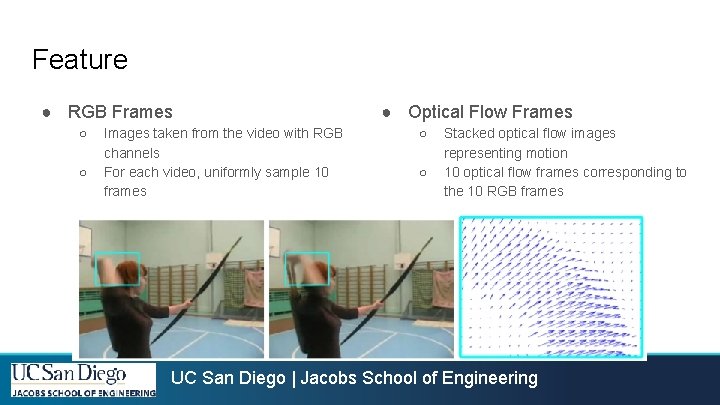

Feature

● RGB Frames
○ Images taken from the video with RGB channels
○ For each video, uniformly sample 10 frames

● Optical Flow Frames
○ Stacked optical flow images representing motion
○ 10 optical flow frames corresponding to the 10 RGB frames
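Uniform sampling of 10 frames per video can be sketched as follows. The slides do not state the exact sampling rule, so taking the center frame of each of 10 equal-length segments is an assumption, and `uniform_frame_indices` is a hypothetical helper name.

```python
def uniform_frame_indices(num_frames, num_samples=10):
    """Return frame indices spread evenly over a video of `num_frames` frames.

    Assumption: the video is split into `num_samples` equal segments and the
    center frame of each segment is taken.
    """
    if num_frames <= 0 or num_samples <= 0:
        raise ValueError("num_frames and num_samples must be positive")
    segment = num_frames / num_samples
    return [min(num_frames - 1, int(segment * (i + 0.5))) for i in range(num_samples)]


print(uniform_frame_indices(100))
```

For videos shorter than the number of samples, this rule repeats some indices instead of failing, which keeps the input shape fixed at 10 frames.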

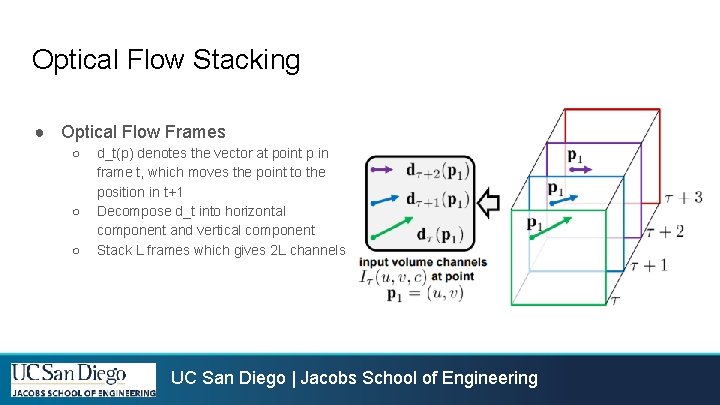

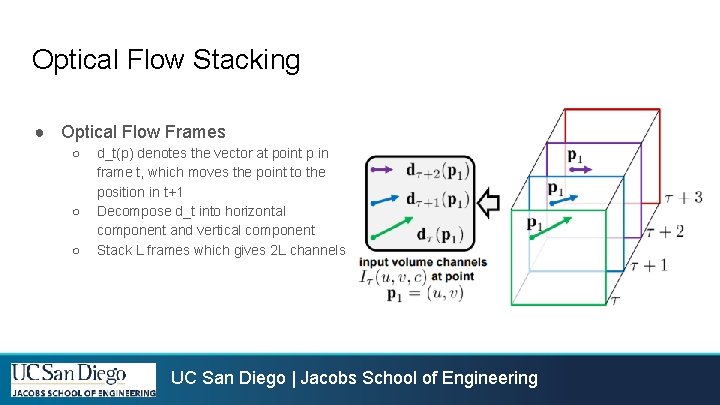

Optical Flow Stacking

● Optical Flow Frames
○ d_t(p) denotes the displacement vector at point p in frame t, which moves the point to its corresponding position in frame t+1
○ Decompose d_t into a horizontal component and a vertical component
○ Stack the flow fields of L frames, which gives 2L channels
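The stacking step can be sketched with NumPy. This assumes each flow field is already available as an array of shape (H, W, 2) holding the horizontal and vertical components; the interleaved channel order (x, y, x, y, ...) and the name `stack_optical_flow` are choices made for this illustration, not details given in the slides.

```python
import numpy as np


def stack_optical_flow(flows):
    """Stack L flow fields of shape (H, W, 2) into one (H, W, 2L) input volume.

    Channel 2k holds the horizontal component of flow field k and
    channel 2k + 1 holds its vertical component.
    """
    flows = np.asarray(flows, dtype=np.float32)  # shape (L, H, W, 2)
    if flows.ndim != 4 or flows.shape[-1] != 2:
        raise ValueError("expected L flow fields of shape (H, W, 2)")
    num_flows, height, width, _ = flows.shape
    # Move the frame axis next to the component axis, then merge the two.
    return flows.transpose(1, 2, 0, 3).reshape(height, width, 2 * num_flows)


# Toy input: L = 10 random flow fields on a 4 x 5 grid.
rng = np.random.default_rng(0)
toy_flows = rng.standard_normal((10, 4, 5, 2)).astype(np.float32)
stacked = stack_optical_flow(toy_flows)
print(stacked.shape)  # (4, 5, 20)
```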

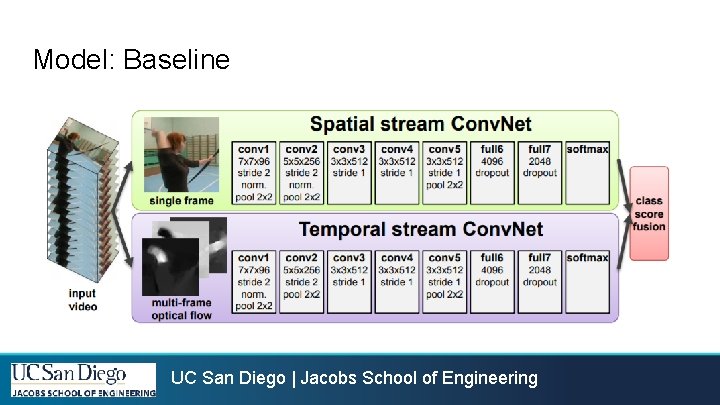

Model: Baseline

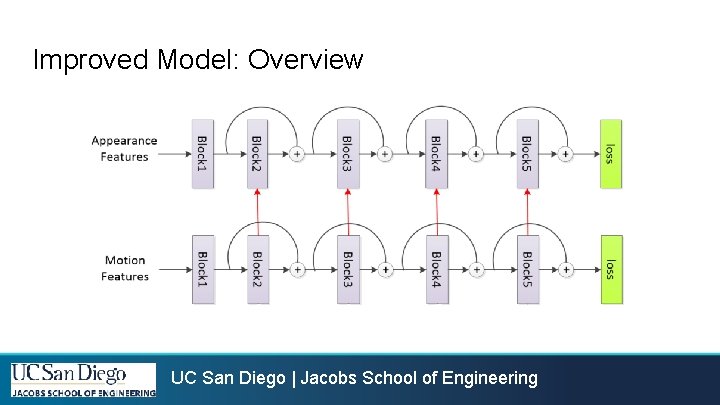

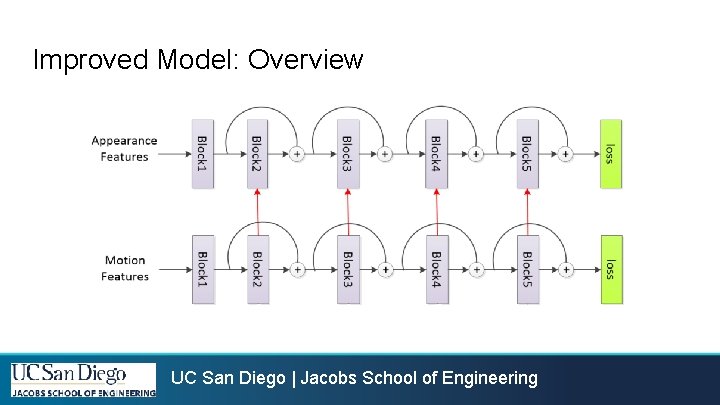

Improved Model: Overview

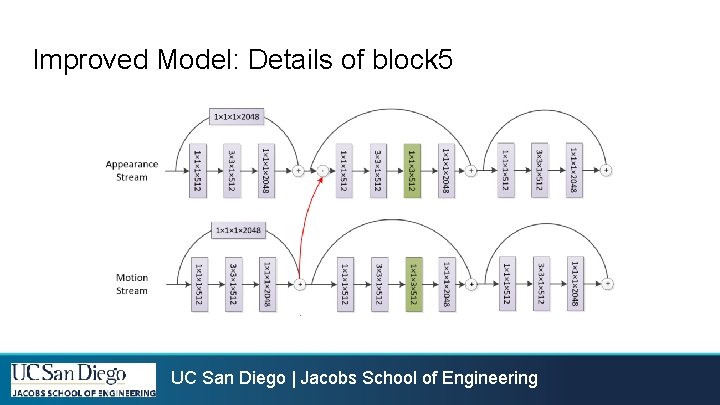

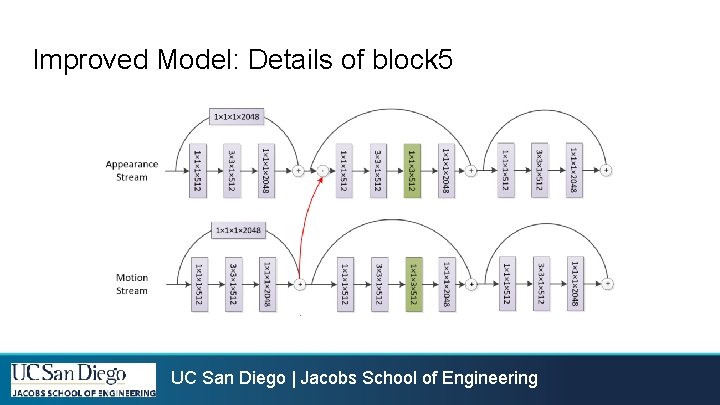

Improved Model: Details of block 5

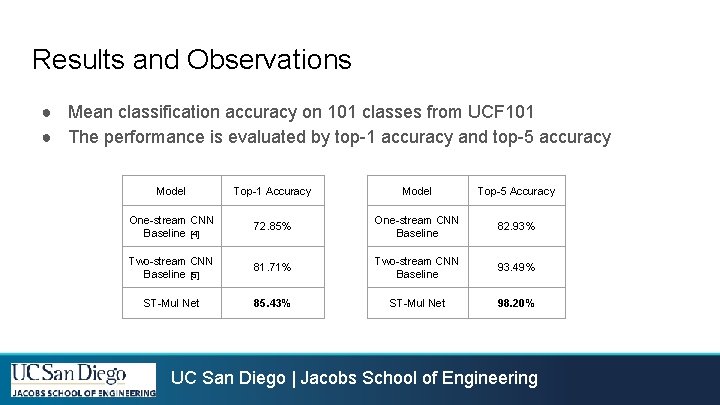

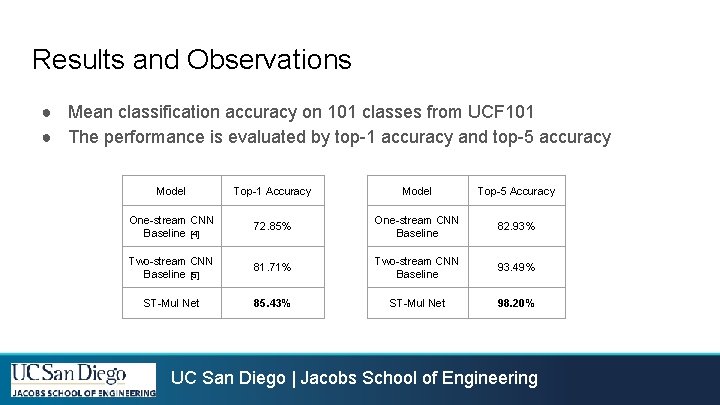

Results and Observations

● Mean classification accuracy over the 101 classes of UCF101
● Performance is evaluated by top-1 accuracy and top-5 accuracy

| Model | Top-1 Accuracy | Top-5 Accuracy |
| --- | --- | --- |
| One-stream CNN Baseline [4] | 72.85% | 82.93% |
| Two-stream CNN Baseline [5] | 81.71% | 93.49% |
| ST-Mul Net | 85.43% | 98.20% |
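Top-1 and top-5 accuracy count a prediction as correct when the true label is among the 1 or 5 highest-scoring classes. A minimal sketch, using made-up scores for three classes rather than the reported results, and a hypothetical helper name:

```python
def top_k_accuracy(scores, labels, k=1):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and the same length")
    hits = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)


# Toy per-class scores for three samples (illustrative values only).
toy_scores = [
    [0.1, 0.7, 0.2],
    [0.5, 0.3, 0.2],
    [0.2, 0.3, 0.5],
]
toy_labels = [1, 1, 0]

print(top_k_accuracy(toy_scores, toy_labels, k=1))
print(top_k_accuracy(toy_scores, toy_labels, k=2))
```

Top-k accuracy can never be lower than top-1 accuracy on the same predictions, which matches the pattern in the table above.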

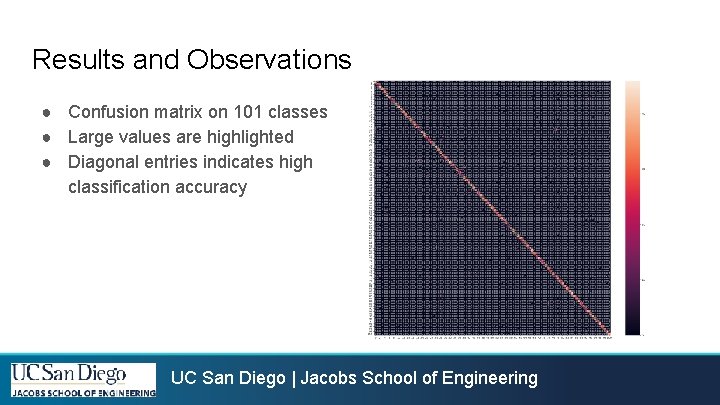

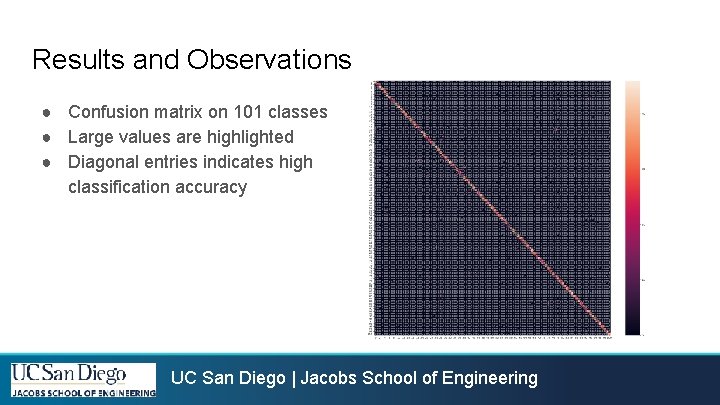

Results and Observations

● Confusion matrix over the 101 classes
● Large values are highlighted
● Strong diagonal entries indicate high classification accuracy
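A confusion matrix of this kind can be built by counting (true class, predicted class) pairs; the diagonal of each row, divided by the row total, gives the per-class accuracy. The sketch below uses a toy three-class example, not the UCF101 results, and the helper names are hypothetical.

```python
def confusion_matrix(true_labels, predicted_labels, num_classes):
    """matrix[t][p] counts samples of true class t that were predicted as class p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(true_labels, predicted_labels):
        matrix[t][p] += 1
    return matrix


def per_class_accuracy(matrix):
    """Diagonal entry of each row divided by the row total (0.0 for empty rows)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


# Toy labels for five samples over three classes (illustrative values only).
toy_true = [0, 0, 1, 1, 2]
toy_pred = [0, 1, 1, 1, 0]

toy_matrix = confusion_matrix(toy_true, toy_pred, num_classes=3)
print(toy_matrix)
print(per_class_accuracy(toy_matrix))
```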

Future Work

● Investigate different fusion types and structures
● Reproduce the experiments on the HMDB51 dataset
● Extend 2D convolutional kernels to 3D kernels
● Combine the CNN-based features with long short-term memory (LSTM) to enhance temporal support
● Extract the skeleton of the human body to capture tiny movements

![References](https://slidetodoc.com/presentation_image_h2/51dcd6b90c19f5c6459caff81eedb611/image-16.jpg)

References

[1] Simonyan, K. and Zisserman, A., 2014. Two-stream convolutional networks for action recognition in videos. In Advances in Neural Information Processing Systems (pp. 568-576).

[2] Wang, L., Xiong, Y., Wang, Z., Qiao, Y., Lin, D., Tang, X. and Van Gool, L., 2016, October. Temporal segment networks: Towards good practices for deep action recognition. In European Conference on Computer Vision (pp. 20-36). Springer, Cham.

[3] Gammulle, H., Denman, S., Sridharan, S. and Fookes, C., 2017, March. Two stream LSTM: A deep fusion framework for human action recognition. In 2017 IEEE Winter Conference on Applications of Computer Vision (WACV) (pp. 177-186). IEEE.

[4] Yao, L., Torabi, A., Cho, K., Ballas, N., Pal, C., Larochelle, H. and Courville, A., 2015. Describing videos by exploiting temporal structure. In Proceedings of the IEEE International Conference on Computer Vision (pp. 4507-4515).

[5] Feichtenhofer, C., Pinz, A. and Zisserman, A., 2016. Convolutional two-stream network fusion for video action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 1933-1941).