Very Large-Scale Incremental Clustering
Berker Mumin Cebe Ismet Zeki Yalniz
27 March 2007

Table of Contents
- Why Clustering?
- Why Incremental Clustering?
- Related Work
- Incremental C³M (C²ICM)
- A Former Implementation of C²ICM for Very Large Datasets
- Conclusion

Why Clustering?
- It is an effective tool for managing information overload
- To browse large document collections quickly
- To easily grasp the distinct topics and subtopics (concept hierarchies)
- To allow search engines to query large document collections efficiently

Types of Clustering
- Hierarchical vs. non-hierarchical
- Partitional vs. agglomerative
- Deterministic vs. probabilistic algorithms
- Incremental vs. batch algorithms

Why Incremental Clustering?
- The current information explosion
- Popular sources of informational text documents, such as newswire and blogs
- Delay would be unacceptable in several important areas

Related Work
- The cluster-splitting approach
- Adaptive clustering based on user queries
- The Cobweb algorithm
- Incremental hierarchical clustering

C²ICM Algorithm
- C³M is known as an efficient, effective, and robust algorithm for clustering documents
- C³M is well developed for initial clustering, but clusters must also be maintained as the collection changes

C²ICM Algorithm
- Like C³M, the C²ICM algorithm is based on the cover coefficient concept
- C²ICM is suitable for dynamic environments where documents are added and deleted
- With C²ICM, reclustering the whole collection for each update is avoided

C²ICM Algorithm Details
- First, we compute the number of clusters and the cluster seed powers in the updated database
- Then we determine the newly added documents and the documents of falsified clusters
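The slides do not give the formulas behind the number of clusters and the seed powers. The sketch below assumes the cover coefficient definitions of Can and Ozkarahan (1990) for a binary document-term matrix: c_ij = α_i Σ_k d_ik β_k d_jk (α_i and β_k are reciprocal row and column sums), decoupling coefficient δ_i = c_ii, coupling coefficient ψ_i = 1 - δ_i, number of clusters n_c = Σ_i δ_i, and seed power p_i = δ_i ψ_i Σ_k d_ik. The function names and the toy matrix `D` are illustrative only, and every row and column of `D` is assumed to be non-empty.

```python
def cover_coefficients(D):
    """Return the m x m cover coefficient matrix C for document-term matrix D.

    C[i][j] is the extent to which document i is covered by document j.
    Assumes every document (row) and every term (column) has a nonzero sum.
    """
    m, n = len(D), len(D[0])
    row_sums = [sum(row) for row in D]
    col_sums = [sum(D[i][k] for i in range(m)) for k in range(n)]
    C = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in range(m):
            shared = sum(D[i][k] * D[j][k] / col_sums[k] for k in range(n))
            C[i][j] = shared / row_sums[i]
    return C


def seed_statistics(D):
    """Return (n_c, seed_powers) under the assumed binary-matrix definitions."""
    C = cover_coefficients(D)
    delta = [C[i][i] for i in range(len(D))]   # decoupling coefficients
    psi = [1.0 - d for d in delta]             # coupling coefficients
    n_c = sum(delta)                           # estimated number of clusters
    powers = [delta[i] * psi[i] * sum(D[i]) for i in range(len(D))]
    return n_c, powers


# Toy collection: 4 documents (rows) over 4 terms (columns), hypothetical data.
D = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 0],
]
n_c, powers = seed_statistics(D)
```

In an incremental setting these quantities would be recomputed on the updated database before deciding which documents become seeds.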

C²ICM Algorithm Details
- How do clusters become false?
- When a seed document becomes a non-seed or is deleted
- When one or more non-seed documents of the cluster become seeds
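The two falsification conditions can be sketched as a small check over the old clusters. This is a minimal illustration, not the authors' implementation: the function name, the data layout, and the choice to recluster ragbag documents together with new arrivals are assumptions (the last one is suggested by the Case 1 example, where d14 appears in the set to be clustered). The cluster memberships in the example are hypothetical, because the flattened slide text does not preserve them.

```python
def documents_to_recluster(clusters, ragbag, new_seeds, new_docs, deleted=()):
    """Find falsified clusters and the documents that must be clustered again.

    clusters: dict mapping each old seed to the set of its cluster members
              (the seed itself included).
    Returns (falsified_seeds, pending_documents).
    """
    new_seeds = set(new_seeds)
    deleted = set(deleted)
    falsified = set()
    for seed, members in clusters.items():
        # Condition 1: the seed became a non-seed or was deleted.
        seed_lost = seed not in new_seeds or seed in deleted
        # Condition 2: some non-seed member of the cluster became a seed.
        member_promoted = any(d in new_seeds for d in members if d != seed)
        if seed_lost or member_promoted:
            falsified.add(seed)
    # Assumption: new arrivals and ragbag documents are always (re)clustered.
    pending = set(new_docs) | set(ragbag)
    for seed in falsified:
        pending |= clusters[seed]
    return falsified, pending - deleted


# Hypothetical memberships loosely modeled on the Case 1 example.
clusters = {
    "d1": {"d1", "d2", "d3", "d4", "d5", "d7"},
    "d6": {"d6", "d8", "d9", "d10", "d11", "d15"},
    "d12": {"d12", "d13", "d16", "d17", "d18", "d19"},
}
falsified, pending = documents_to_recluster(
    clusters,
    ragbag={"d14"},
    new_seeds={"d1", "d6", "d13", "d19"},
    new_docs={"d20", "d21", "d22"},
)
```

Under these assumed memberships only the cluster of d12 is falsified, and the pending set contains its members, the ragbag document, and the new arrivals.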

C²ICM Algorithm Details
- We cluster these documents by assigning each one to the cluster of the seed that covers it most
- Documents that do not belong to any cluster are grouped into a ragbag cluster
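The assignment step can be sketched as follows. It assumes that "covers it most" means the seed j with the largest cover coefficient c_ij for document i, and that a document covered by no seed goes to the ragbag. The function name, the dictionary layout, and the cover values are hypothetical.

```python
def assign_to_seeds(pending, seeds, cover):
    """Assign each pending document to the seed that covers it most.

    cover: dict mapping (document, seed) to the cover coefficient c_ij;
           missing pairs are treated as 0 (the seed does not cover the document).
    Returns (clusters, ragbag).
    """
    clusters = {s: {s} for s in seeds}   # every seed starts its own cluster
    ragbag = set()
    for doc in sorted(pending):
        if doc in clusters:              # seeds are already placed
            continue
        best = max(seeds, key=lambda s: cover.get((doc, s), 0.0))
        if cover.get((doc, best), 0.0) > 0.0:
            clusters[best].add(doc)
        else:
            ragbag.add(doc)              # no seed covers this document
    return clusters, ragbag


# Hypothetical cover coefficients for three documents and two seeds.
seeds = ["s1", "s2"]
cover = {("a", "s1"): 0.3, ("a", "s2"): 0.1, ("b", "s2"): 0.4}
clusters, ragbag = assign_to_seeds({"a", "b", "c", "s1"}, seeds, cover)
```

Ties are broken here by the order of `seeds`; the slides do not say how ties are resolved.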

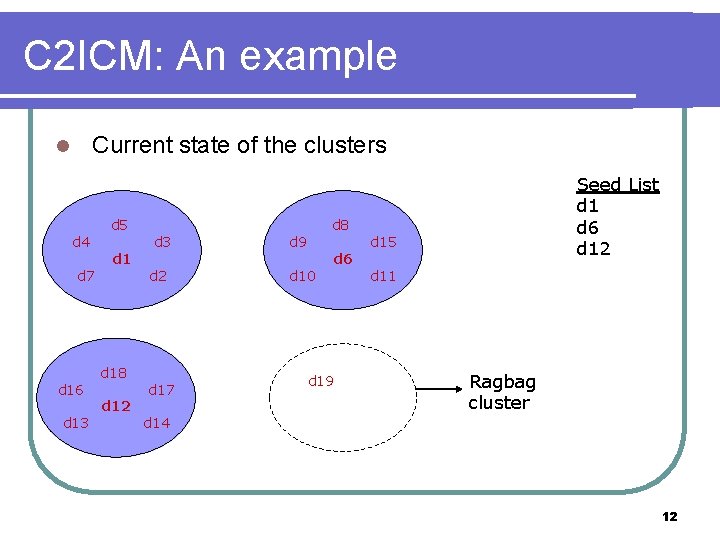

C²ICM: An Example
- Current state of the clusters (cluster diagram over documents d1 to d19)
- Seed list: d1, d6, d12
- Ragbag cluster: d14

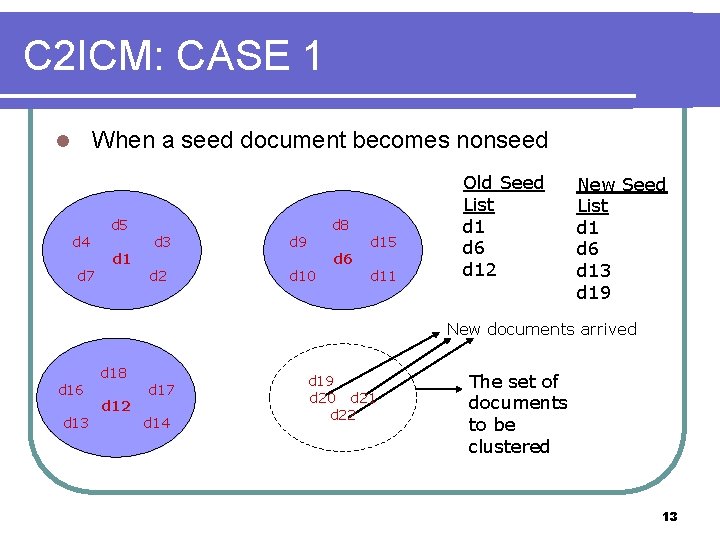

C²ICM: Case 1
- When a seed document becomes a non-seed
- Old seed list: d1, d6, d12
- New seed list: d1, d6, d13, d19
- Documents shown in the remaining clusters: d1 to d11, d15
- New documents arrived
- The set of documents to be clustered: d12, d13, d14, d16, d17, d18, d19, d20, d21, d22

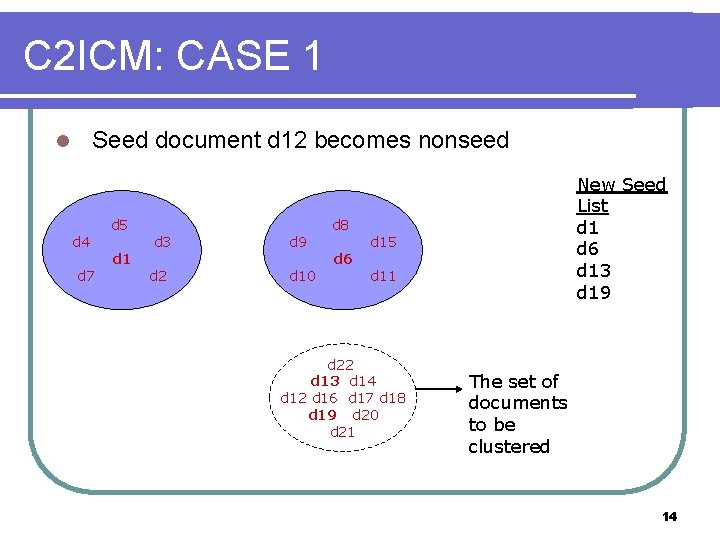

C²ICM: Case 1
- Seed document d12 becomes a non-seed
- New seed list: d1, d6, d13, d19
- Documents shown in the remaining clusters: d1 to d11, d15
- The set of documents to be clustered: d12, d13, d14, d16, d17, d18, d19, d20, d21, d22

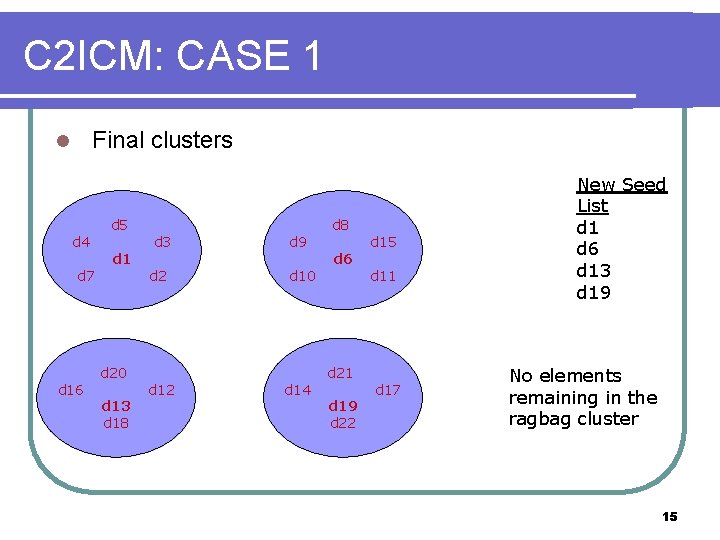

C²ICM: Case 1
- Final clusters (cluster diagram over documents d1 to d22)
- New seed list: d1, d6, d13, d19
- No elements remain in the ragbag cluster

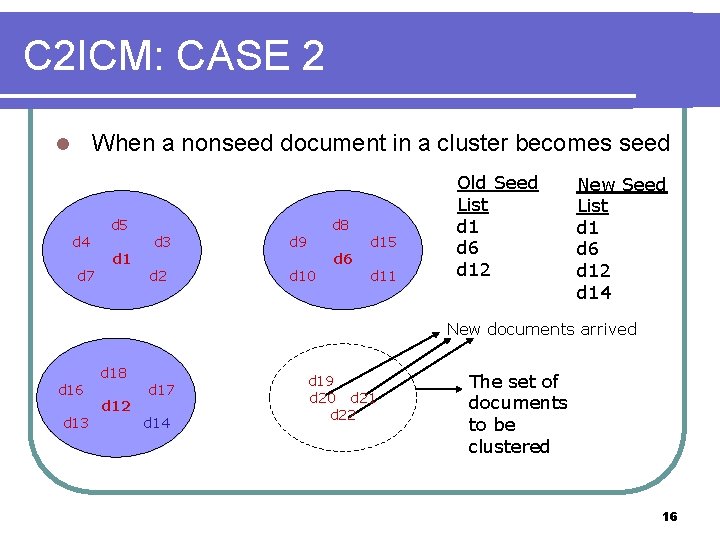

C²ICM: Case 2
- When a non-seed document in a cluster becomes a seed
- Old seed list: d1, d6, d12
- New seed list: d1, d6, d12, d14
- Documents shown in the remaining clusters: d1 to d11, d15
- New documents arrived
- The set of documents to be clustered: d12, d13, d14, d16, d17, d18, d19, d20, d21, d22

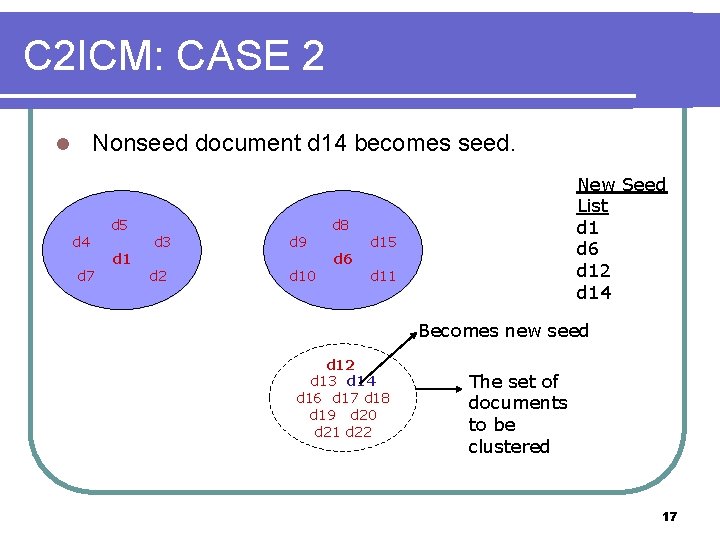

C²ICM: Case 2
- Non-seed document d14 becomes a seed
- New seed list: d1, d6, d12, d14
- Documents shown in the remaining clusters: d1 to d11, d15
- The set of documents to be clustered: d12, d13, d14, d16, d17, d18, d19, d20, d21, d22

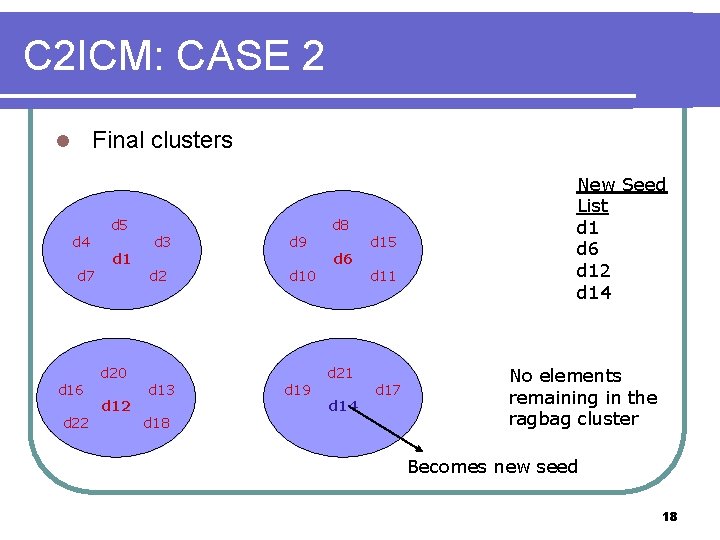

C²ICM: Case 2
- Final clusters (cluster diagram over documents d1 to d22)
- New seed list: d1, d6, d12, d14 (d14 becomes a new seed)
- No elements remain in the ragbag cluster

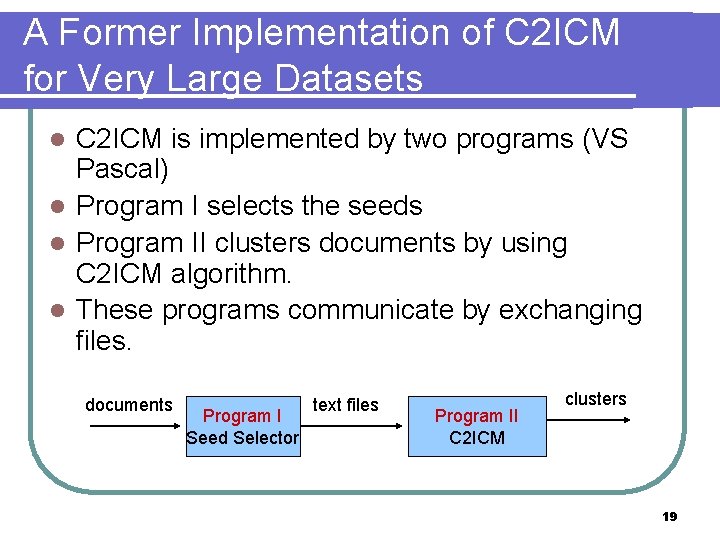

A Former Implementation of C²ICM for Very Large Datasets
- C²ICM is implemented as two programs (VS Pascal)
- Program I selects the seeds
- Program II clusters documents using the C²ICM algorithm
- The programs communicate by exchanging files
- Data flow: documents -> Program I (Seed Selector) -> text files -> Program II (C²ICM) -> clusters
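The two-program design, with a text file as the only interface between seed selection and clustering, can be illustrated with a minimal sketch. Everything here is assumed for illustration: the original programs were written in Pascal, and the slides do not describe the file format. The sketch writes one seed id per line and picks the documents with the highest seed power as seeds, which is a simplification of the selection rule.

```python
import os
import tempfile


def select_seeds(seed_powers, n_clusters, seed_path):
    """Stage I (hypothetical): write the ids of the top-power documents to a file."""
    # Sort by descending seed power; break ties by document id for determinism.
    ranked = sorted(seed_powers, key=lambda d: (-seed_powers[d], d))
    with open(seed_path, "w") as f:
        for doc in ranked[:n_clusters]:
            f.write(doc + "\n")


def read_seeds(seed_path):
    """Stage II input (hypothetical): read the seed list written by stage I."""
    with open(seed_path) as f:
        return [line.strip() for line in f if line.strip()]


def run_pipeline(seed_powers, n_clusters):
    """Run both stages, exchanging the seed list through a temporary text file."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "seeds.txt")
        select_seeds(seed_powers, n_clusters, path)
        return read_seeds(path)


seeds = run_pipeline({"d1": 0.5, "d2": 0.1, "d3": 0.4}, n_clusters=2)
```

Keeping the stages decoupled through files means either program can be rerun or replaced without touching the other, at the cost of extra disk I/O.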

Former Experiments
- C²ICM was tested in 1995 with a subset of the MARIAN database (~43K documents)
- Six experiments were run. Each incremental update added ~6K documents to an initially clustered collection, and the size of that initial collection varied across experiments

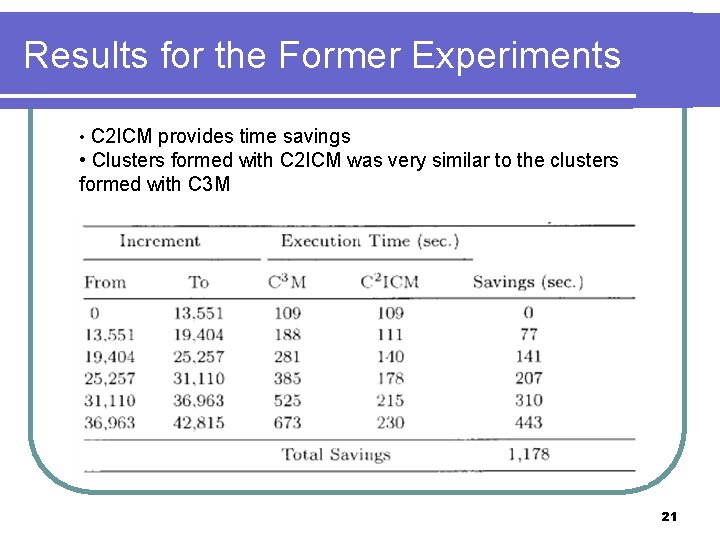

Results for the Former Experiments
- C²ICM provides time savings
- Clusters formed with C²ICM were very similar to the clusters formed with C³M

Conclusion
- The cluster maintenance problem is challenging
- Our aim is to conduct experiments for C²ICM with a very large number of documents (i.e., millions of documents)
- The HARD dataset will be used for evaluation, and information retrieval performance will be measured
- The implementation of C²ICM must be time and memory efficient

References
- Can, F., Ozkarahan, E. A. "Concepts and effectiveness of the cover coefficient-based clustering methodology for text databases." ACM Transactions on Database Systems. Vol. 15, No. 4 (December 1990), pp. 483-517.
- Can, F. "Incremental clustering for dynamic information processing." ACM Transactions on Information Systems. Vol. 11, No. 2 (April 1993), pp. 143-164.
- Can, F., Fox, E. A., Snavely, C. D., France, R. K. "Incremental clustering for very large document databases: initial MARIAN experience." Information Sciences. Vol. 84 (1995), pp. 101-114.
- Jain, A. K., Murty, M. N., Flynn, P. J. "Data clustering: a review." ACM Computing Surveys. Vol. 31, No. 3 (September 1999), pp. 264-323.

Questions?