Verification Scores in CPT

Simon J. Mason (simon@iri.columbia.edu)

International Research Institute for Climate and Society, The Earth Institute of Columbia University

SAWS Training Workshop on the Climate Predictability Tool (CPT), Pretoria, South Africa, 07 – 16 May, 2011

Forecast verification: if the forecasts are correct 60% of the time, is that good?

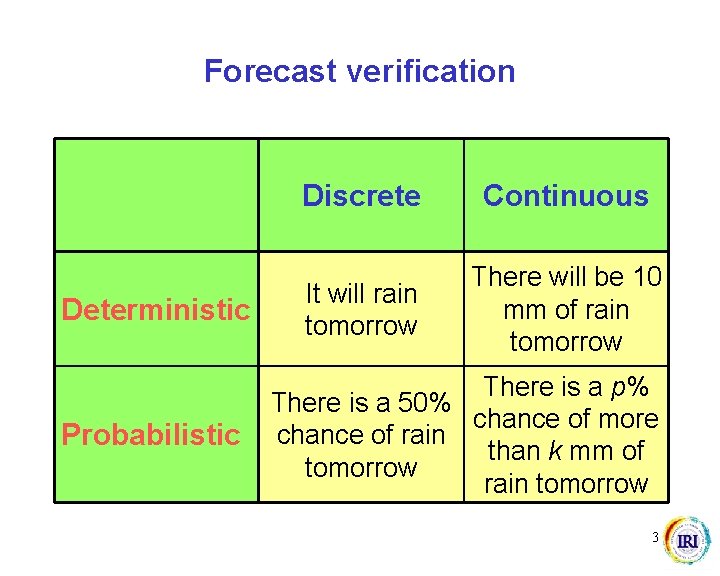

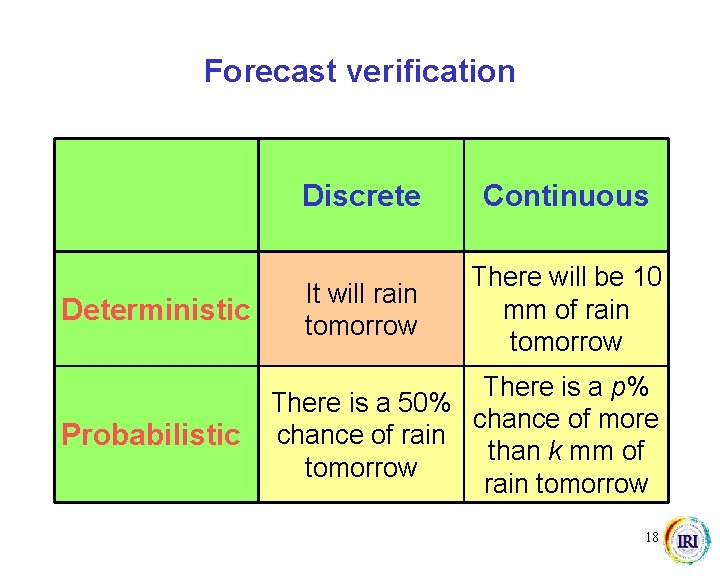

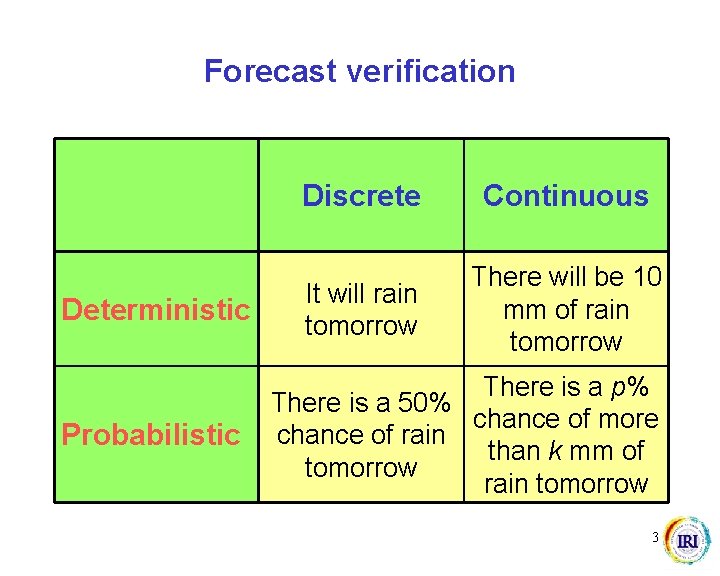

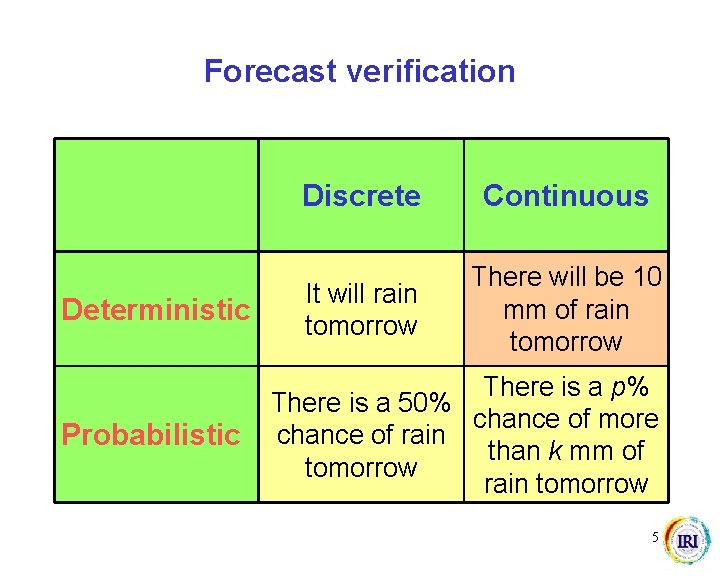

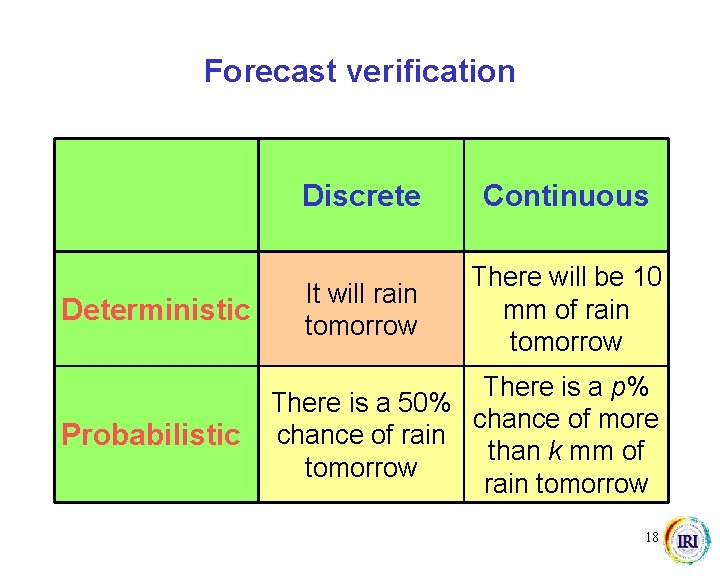

Forecast verification

| | Discrete | Continuous |
|---|---|---|
| Deterministic | It will rain tomorrow | There will be 10 mm of rain tomorrow |
| Probabilistic | There is a p% chance of rain tomorrow | There is a 50% chance of more than k mm of rain tomorrow |

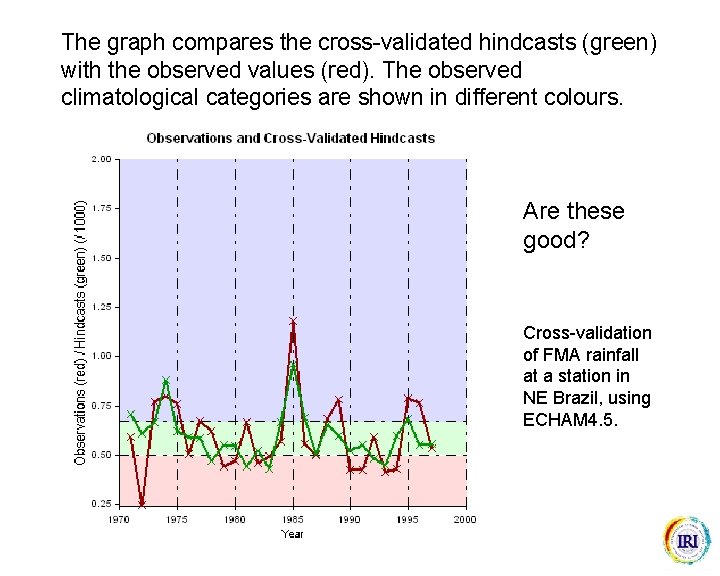

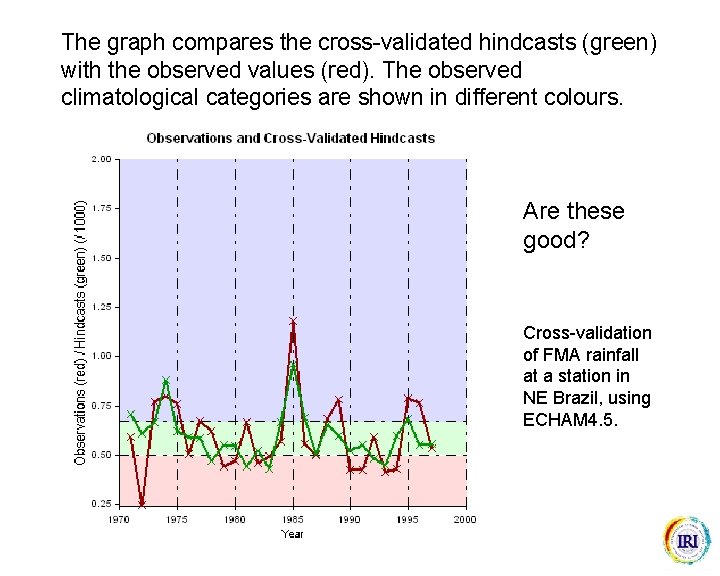

The graph compares the cross-validated hindcasts (green) with the observed values (red). The observed climatological categories are shown in different colours. Are these hindcasts good? Cross-validation of February to April (FMA) rainfall at a station in NE Brazil, using ECHAM 4.5.

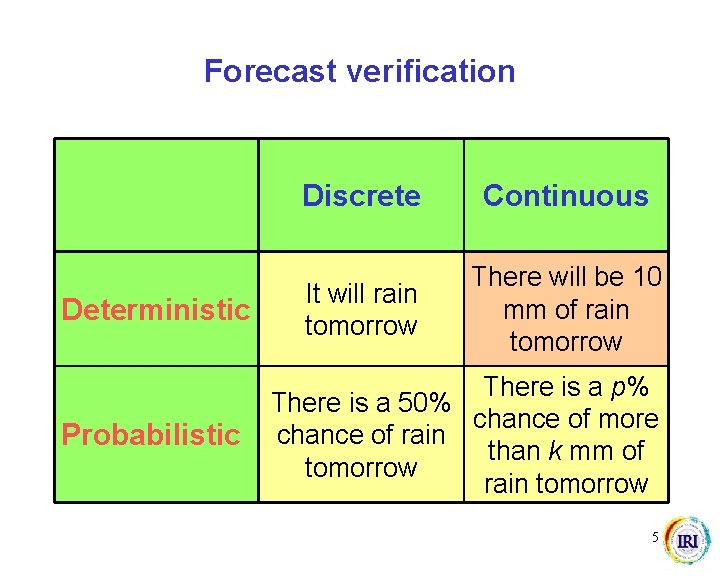


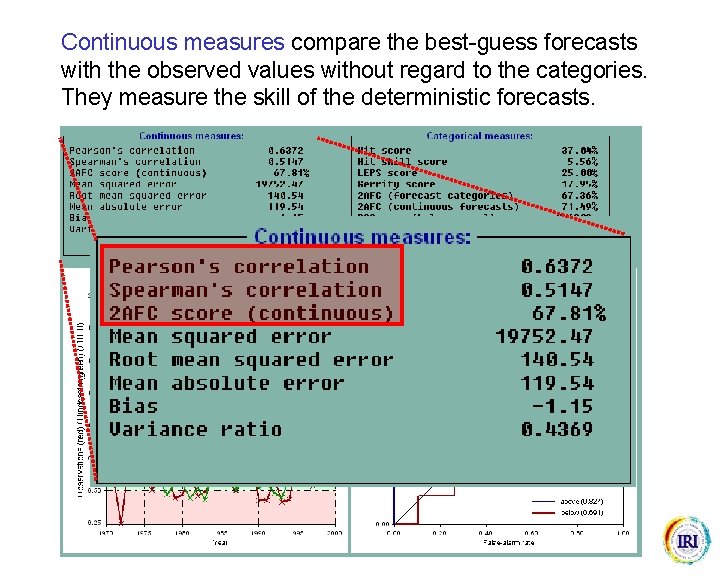

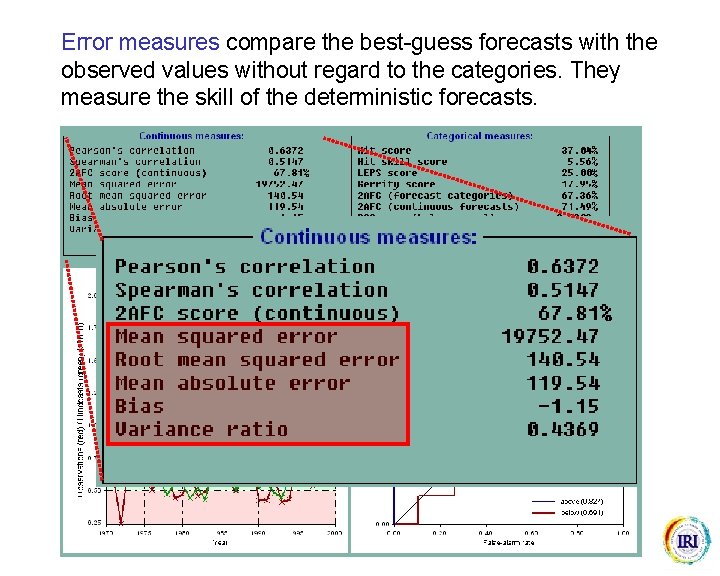

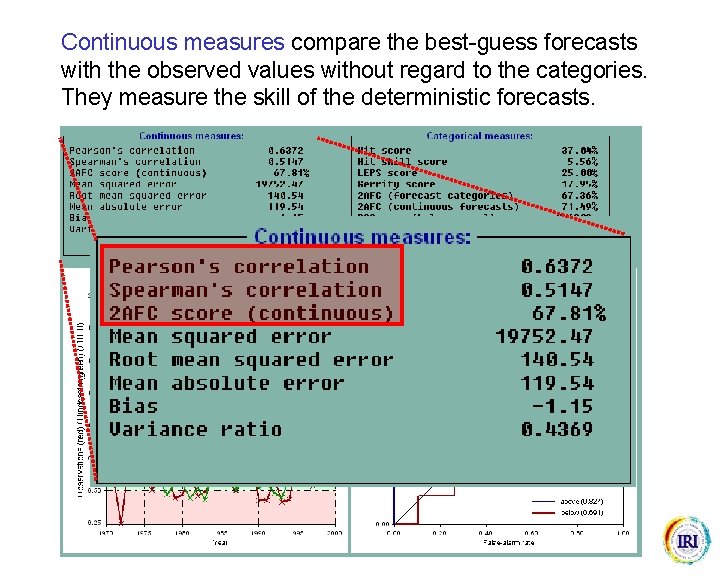

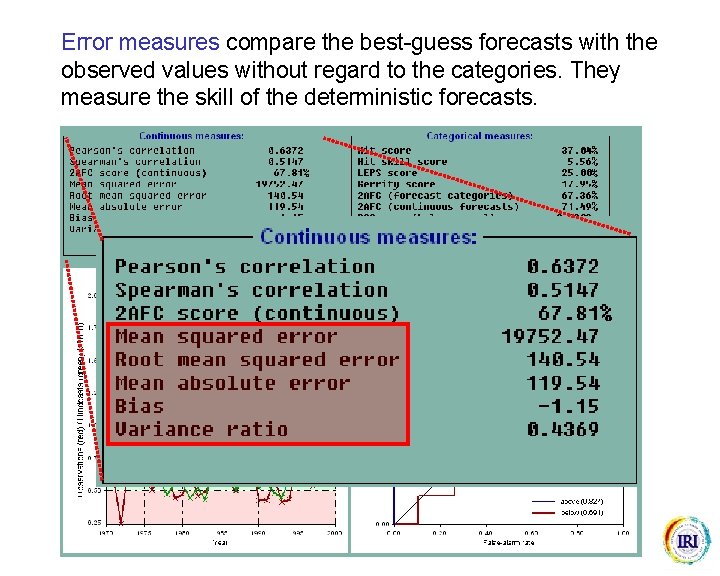

Continuous measures compare the best-guess forecasts with the observed values without regard to the categories. They measure the skill of the deterministic forecasts.

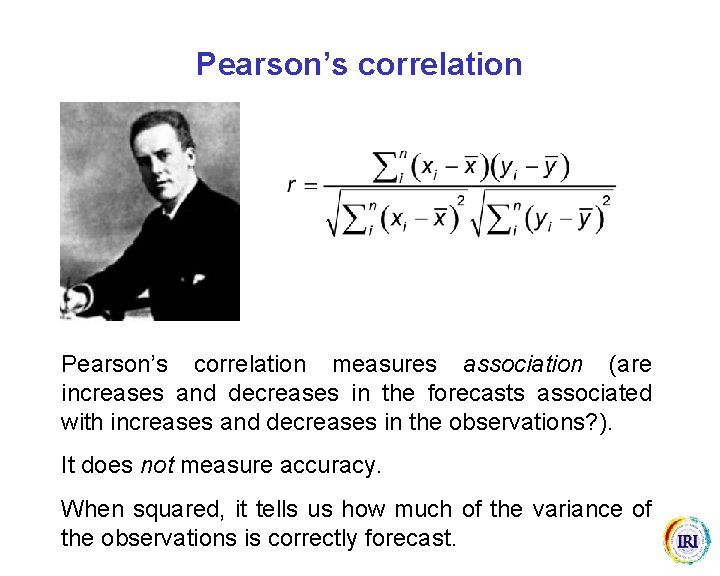

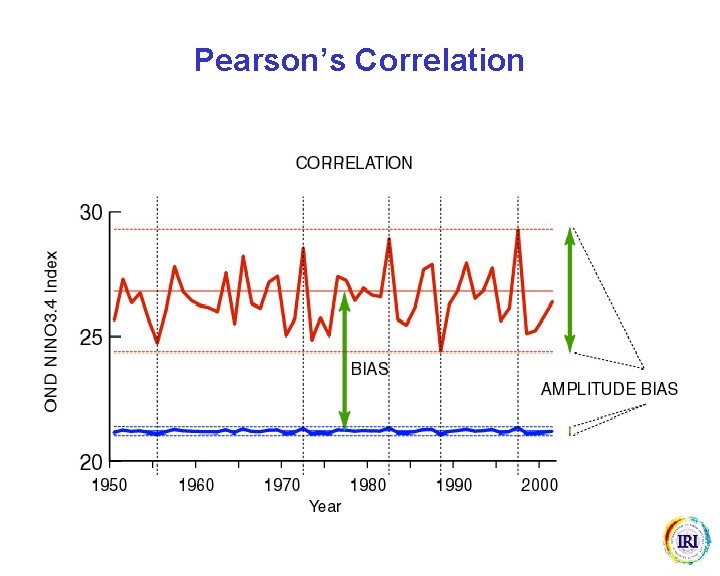

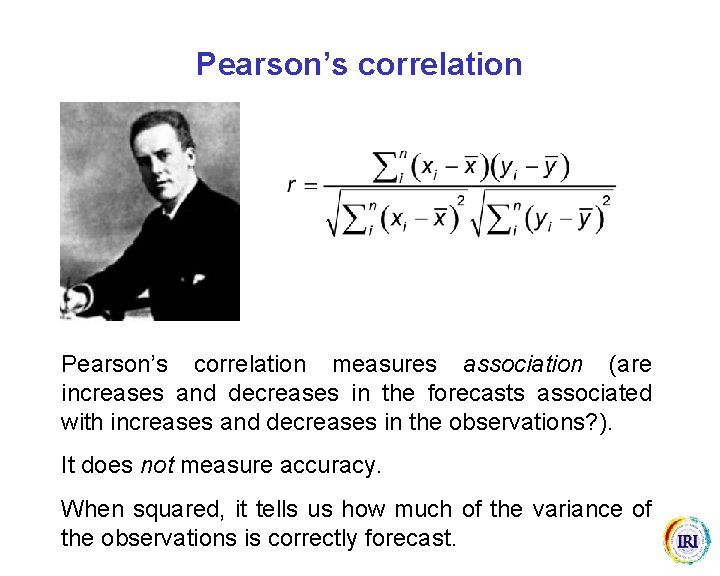

Pearson’s correlation measures association: are increases and decreases in the forecasts associated with increases and decreases in the observations? It does not measure accuracy. When squared, it tells us how much of the variance of the observations is correctly forecast.

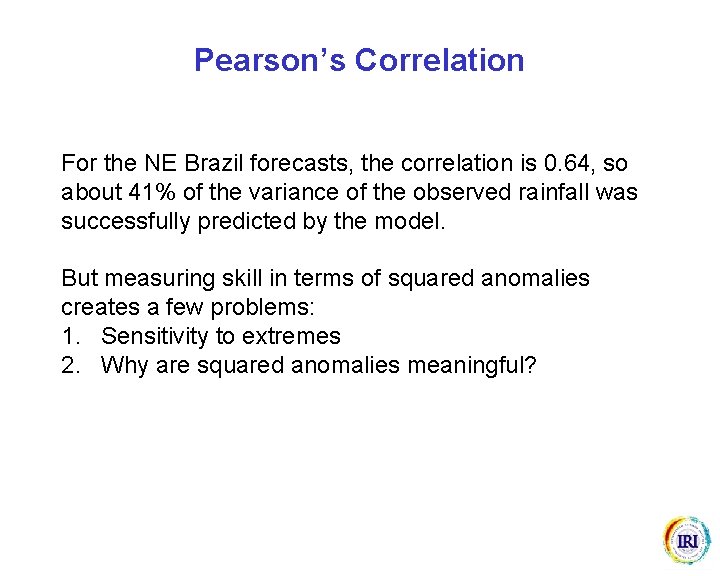

Pearson’s Correlation: For the NE Brazil forecasts, the correlation is 0.64, so about 41% of the variance of the observed rainfall was successfully predicted by the model. But measuring skill in terms of squared anomalies creates a few problems:

1. Sensitivity to extremes
2. Why are squared anomalies meaningful?
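As a rough illustration of the calculation, the sketch below computes Pearson’s correlation and the squared correlation for two short series. The numbers are made up for illustration and are not the NE Brazil data; the function name `pearson` is also just a label chosen here.

```python
import math

def pearson(forecasts, observations):
    """Pearson's product-moment correlation between two equal-length series."""
    n = len(forecasts)
    mean_f = sum(forecasts) / n
    mean_o = sum(observations) / n
    # Sums of products of anomalies (the 1/n factors cancel in the ratio).
    cov = sum((f - mean_f) * (o - mean_o) for f, o in zip(forecasts, observations))
    var_f = sum((f - mean_f) ** 2 for f in forecasts)
    var_o = sum((o - mean_o) ** 2 for o in observations)
    return cov / math.sqrt(var_f * var_o)

# Hypothetical hindcasts and observations in mm (assumed values).
hindcasts = [310.0, 420.0, 280.0, 500.0, 390.0, 350.0]
observed = [290.0, 480.0, 330.0, 610.0, 340.0, 300.0]

r = pearson(hindcasts, observed)
print(f"r = {r:.2f}, variance explained = {100 * r * r:.0f}%")

# The figure quoted on the slide follows the same arithmetic:
# 0.64 squared is 0.4096, i.e. about 41%.
print(f"{100 * 0.64 ** 2:.0f}%")
```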

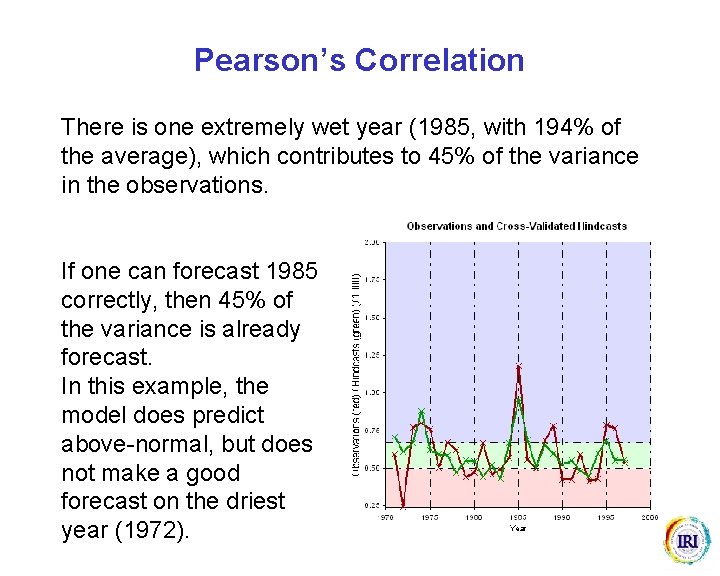

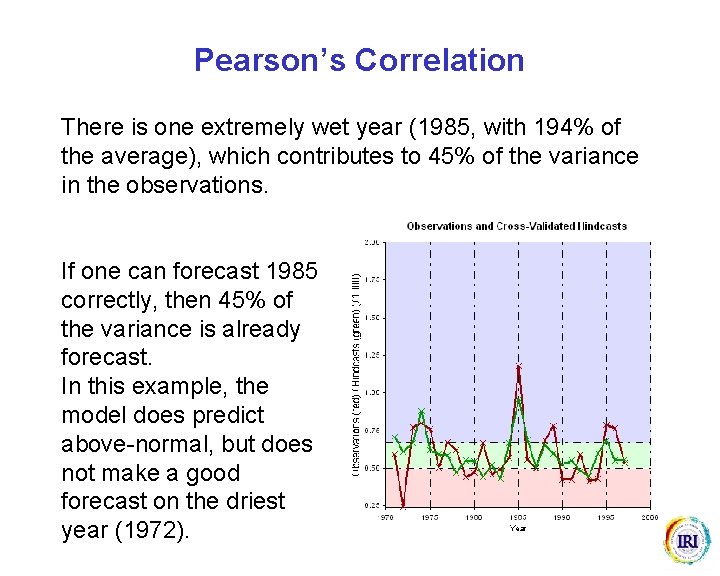

Pearson’s Correlation: There is one extremely wet year (1985, with 194% of the average), which contributes 45% of the variance in the observations. If one can forecast 1985 correctly, then 45% of the variance is already forecast. In this example, the model does predict above-normal rainfall for 1985, but it does not make a good forecast for the driest year (1972).
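One way to see how a single year can dominate is to compute each year’s share of the total squared anomalies. The sketch below does this for a small made-up series (assumed values, not the NE Brazil record); `variance_shares` is a hypothetical helper name.

```python
def variance_shares(values):
    """Fraction of the total sum of squared anomalies contributed by each value."""
    mean = sum(values) / len(values)
    squared_anomalies = [(v - mean) ** 2 for v in values]
    total = sum(squared_anomalies)
    return [s / total for s in squared_anomalies]

# Hypothetical seasonal totals as a percentage of average; one very wet year.
percent_of_average = [80.0, 95.0, 110.0, 70.0, 194.0, 90.0, 105.0, 85.0]

shares = variance_shares(percent_of_average)
wettest = max(range(len(shares)), key=lambda i: percent_of_average[i])
print(f"Wettest year contributes {100 * shares[wettest]:.0f}% of the variance")
```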

Pearson’s Correlation

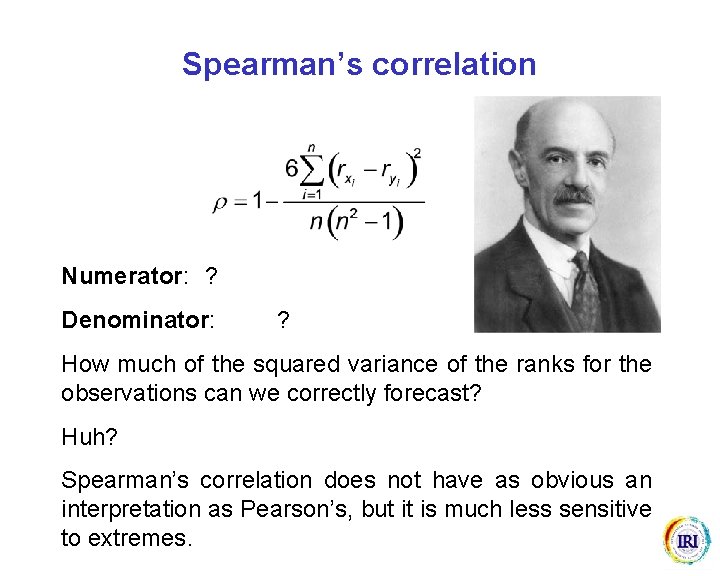

Spearman’s correlation: Numerator: ? Denominator: ? How much of the squared variance of the ranks for the observations can we correctly forecast? Huh? Spearman’s correlation does not have as obvious an interpretation as Pearson’s, but it is much less sensitive to extremes.
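Spearman’s correlation can be computed as Pearson’s correlation applied to the ranks of the data, which is why a single extreme value has limited influence. The following is a minimal sketch with made-up numbers; tied values receive their average rank, and all function names are chosen here for illustration.

```python
import math

def ranks(values):
    """Rank values from 1 (smallest); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result

def pearson(x, y):
    """Pearson's correlation between two equal-length series."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def spearman(x, y):
    """Spearman's correlation: Pearson's correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

# Hypothetical data: the ordering matches perfectly, but one value is extreme.
forecasts = [1.0, 2.0, 3.0, 4.0]
observations = [1.0, 4.0, 9.0, 100.0]
print(f"Pearson:  {pearson(forecasts, observations):.2f}")
print(f"Spearman: {spearman(forecasts, observations):.2f}")
```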

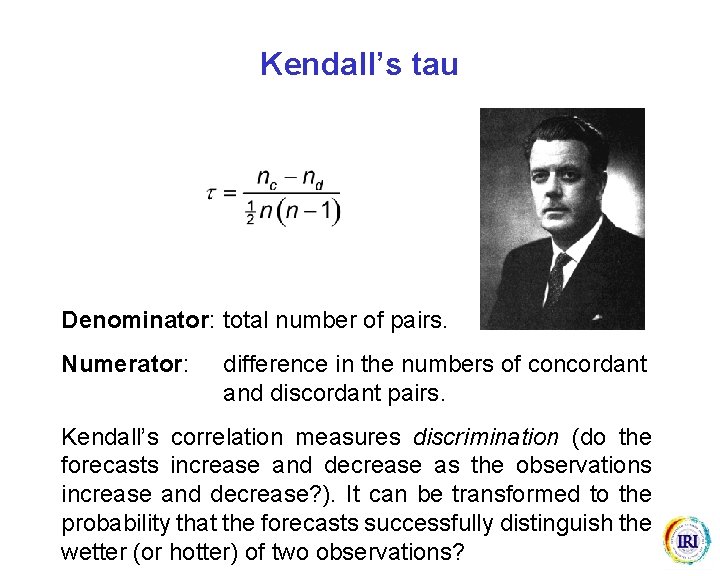

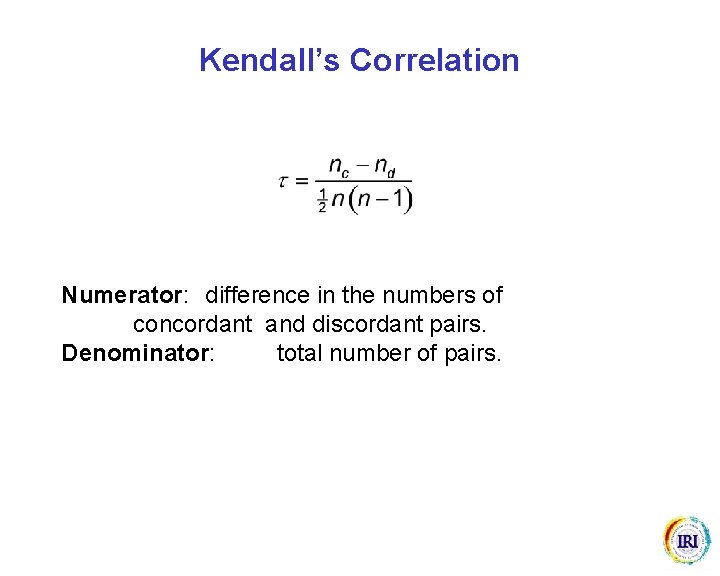

Kendall’s tau: Denominator: total number of pairs. Numerator: difference in the numbers of concordant and discordant pairs. Kendall’s correlation measures discrimination: do the forecasts increase and decrease as the observations increase and decrease? It can be transformed to the probability that the forecasts successfully distinguish the wetter (or hotter) of two observations.

Kendall’s Correlation Numerator: difference in the numbers of concordant and discordant pairs. Denominator: total number of pairs.

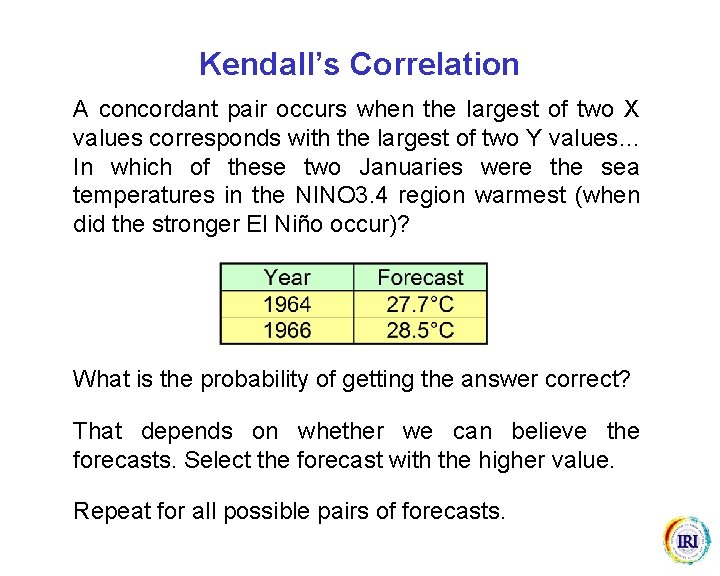

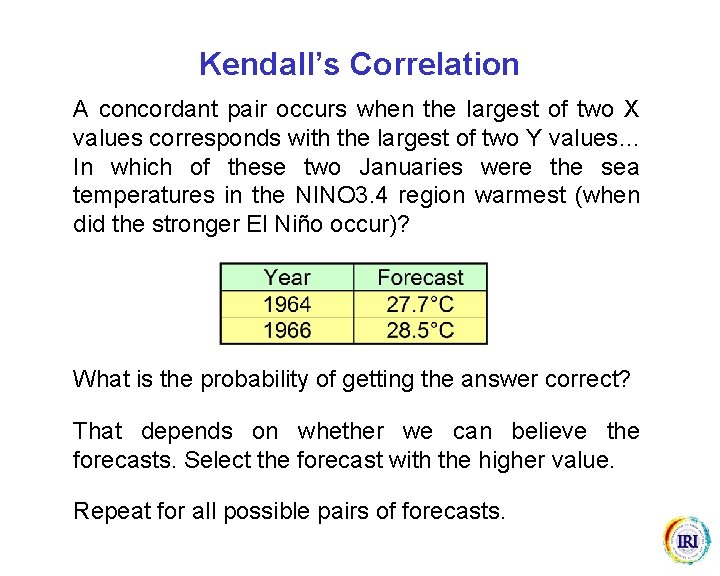

Kendall’s Correlation: A concordant pair occurs when the larger of two X values corresponds with the larger of two Y values. In which of these two Januaries were the sea temperatures in the NINO 3.4 region warmer (when did the stronger El Niño occur)? What is the probability of getting the answer correct? That depends on whether we can believe the forecasts. Select the forecast with the higher value. Repeat for all possible pairs of forecasts.
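The pair-counting procedure can be sketched as follows. The code counts concordant and discordant pairs, divides their difference by the total number of pairs, and converts tau to a probability with p = (tau + 1) / 2, a conversion that holds exactly only when there are no ties (an assumption made here). The data and function names are hypothetical.

```python
from itertools import combinations

def kendall_tau(forecasts, observations):
    """(concordant - discordant) / total number of pairs."""
    concordant = 0
    discordant = 0
    pairs = list(combinations(range(len(forecasts)), 2))
    for i, j in pairs:
        product = (forecasts[i] - forecasts[j]) * (observations[i] - observations[j])
        if product > 0:
            concordant += 1  # forecasts and observations ordered the same way
        elif product < 0:
            discordant += 1  # forecasts and observations ordered oppositely
    return (concordant - discordant) / len(pairs)

def tau_to_probability(tau):
    """Probability of picking the larger observation of a pair (assumes no ties)."""
    return (tau + 1) / 2

# Hypothetical forecasts and observations: one pair is in the wrong order.
forecasts = [1.0, 2.0, 3.0, 4.0]
observations = [1.0, 3.0, 2.0, 4.0]

tau = kendall_tau(forecasts, observations)
print(f"tau = {tau:.3f}, probability = {tau_to_probability(tau):.3f}")
```

With six pairs, five concordant and one discordant, the probability equals 5/6: the fraction of pairs for which choosing the higher forecast identifies the higher observation.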

Error measures compare the best-guess forecasts with the observed values without regard to the categories. They measure the skill of the deterministic forecasts.

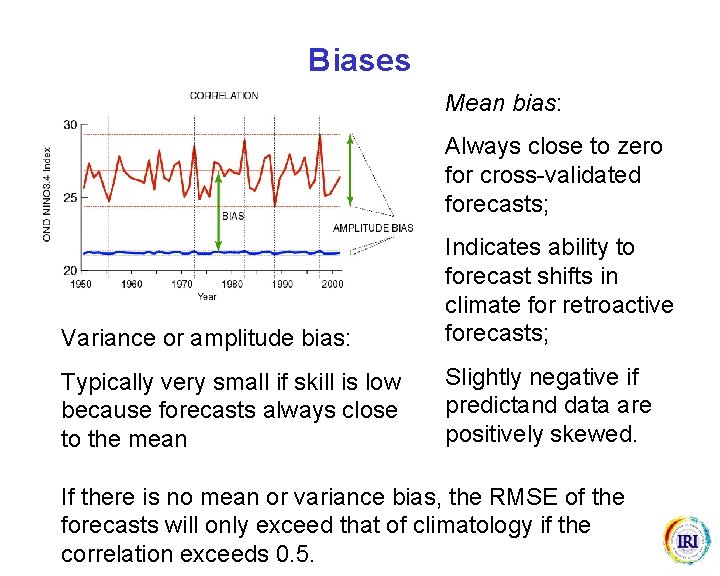

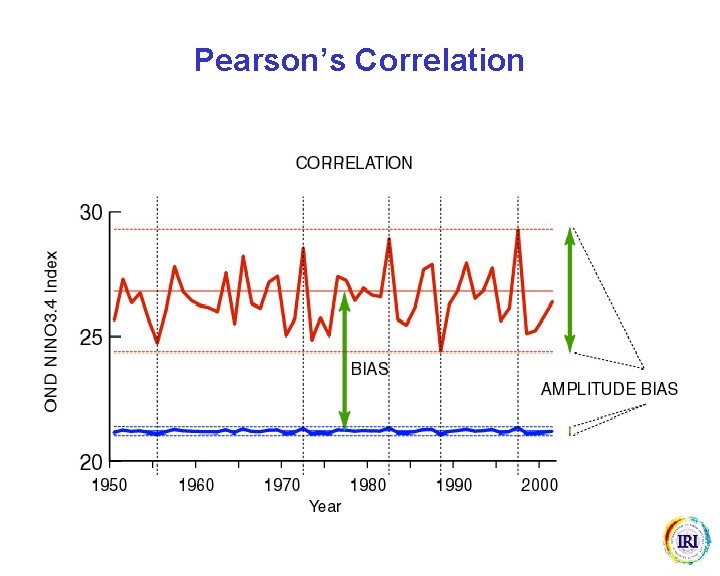

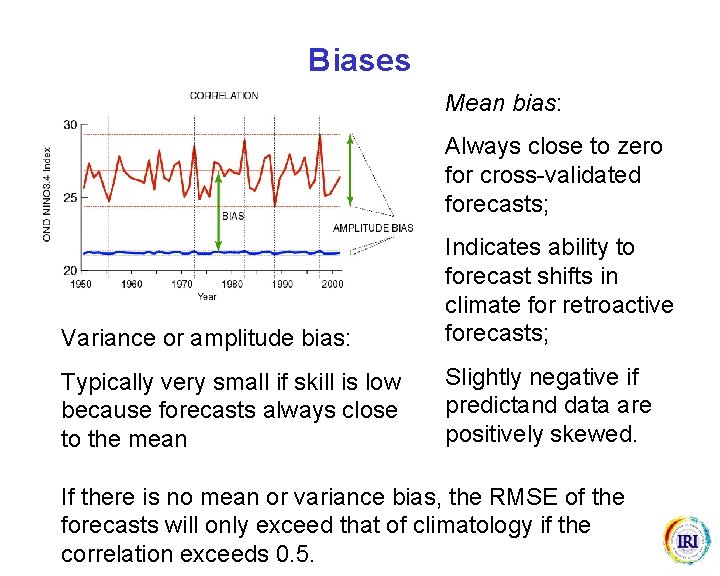

Biases

Mean bias:

- Always close to zero for cross-validated forecasts;
- Indicates ability to forecast shifts in climate for retroactive forecasts;
- Slightly negative if predictand data are positively skewed.

Variance or amplitude bias:

- Typically very small if skill is low, because the forecasts are always close to the mean.

If there is no mean or variance bias, the RMSE of the forecasts will only be lower than that of climatology if the correlation exceeds 0.5.
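The 0.5 threshold can be checked with a short derivation. It assumes that the forecasts $f$ and observations $o$ have the same mean and the same variance $\sigma^2$ (no mean or variance bias), that $r$ is their Pearson correlation, and that the climatological forecast is always the observed mean; the symbols are notation introduced here.

```latex
\begin{align*}
\mathrm{MSE}_{\text{forecast}}
  &= \sigma_f^2 + \sigma_o^2 - 2 r \sigma_f \sigma_o + (\bar{f} - \bar{o})^2 \\
  &= 2\sigma^2 (1 - r)
     \quad \text{when } \bar{f} = \bar{o},\ \sigma_f = \sigma_o = \sigma, \\
\mathrm{MSE}_{\text{climatology}}
  &= \sigma^2, \\
\mathrm{MSE}_{\text{forecast}} < \mathrm{MSE}_{\text{climatology}}
  &\iff 2(1 - r) < 1 \iff r > 0.5 .
\end{align*}
```

So under these assumptions the forecasts have a smaller squared error than climatology only when the correlation is above 0.5, and the same holds for the RMSE because the square root preserves the ordering.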

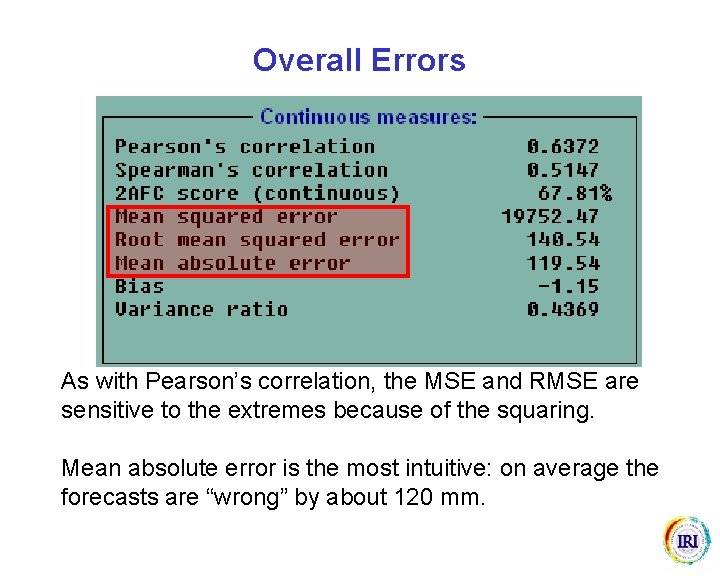

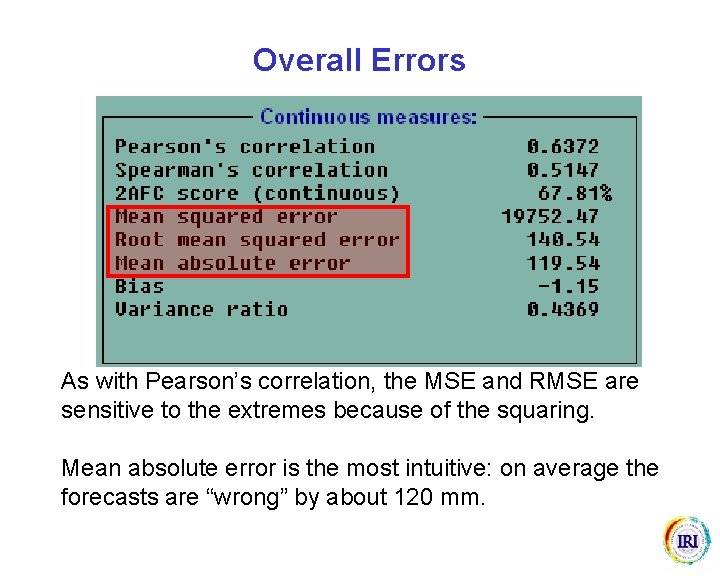

Overall Errors: As with Pearson’s correlation, the mean squared error (MSE) and root mean squared error (RMSE) are sensitive to the extremes because of the squaring. Mean absolute error (MAE) is the most intuitive: on average the forecasts are “wrong” by about 120 mm.
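For reference, the error measures mentioned here can be sketched in a few lines. The values below are invented for illustration and do not reproduce the 120 mm figure; the function name is a placeholder.

```python
import math

def error_measures(forecasts, observations):
    """Return mean bias, MSE, RMSE and MAE of forecasts against observations."""
    errors = [f - o for f, o in zip(forecasts, observations)]
    n = len(errors)
    mean_bias = sum(errors) / n                 # average signed error
    mse = sum(e ** 2 for e in errors) / n       # squaring emphasises large errors
    rmse = math.sqrt(mse)                       # same units as the data
    mae = sum(abs(e) for e in errors) / n       # average size of the error
    return mean_bias, mse, rmse, mae

# Hypothetical forecasts and observations (mm).
forecasts = [300.0, 450.0, 380.0, 520.0]
observations = [250.0, 600.0, 400.0, 500.0]

mean_bias, mse, rmse, mae = error_measures(forecasts, observations)
print(f"bias = {mean_bias:.1f} mm, RMSE = {rmse:.1f} mm, MAE = {mae:.1f} mm")
```

Because one forecast in this made-up set misses by 150 mm, the RMSE comes out larger than the MAE, which is the sensitivity to extremes described above.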


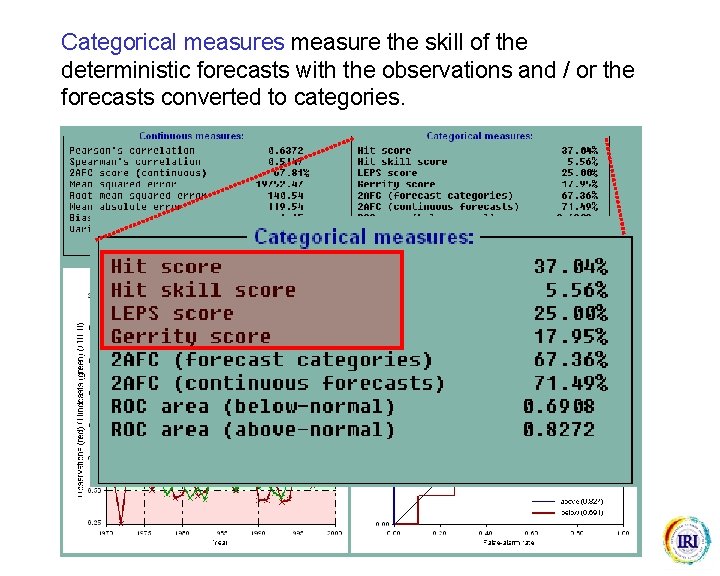

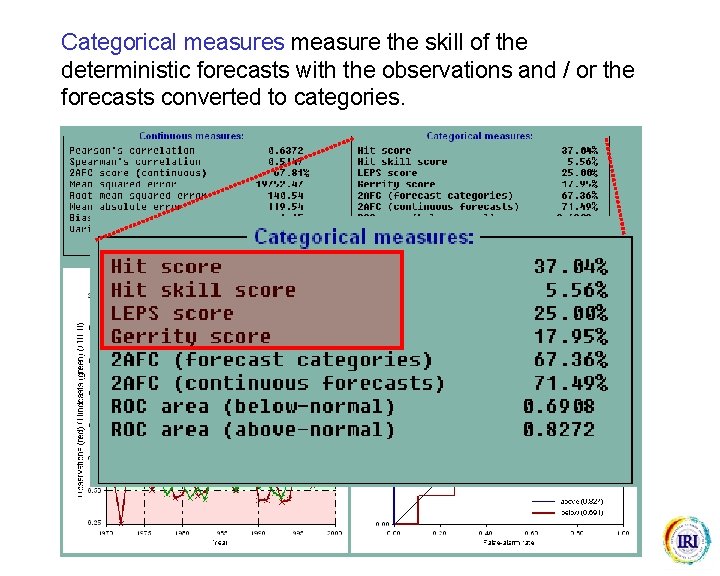

Categorical measures assess the skill of the deterministic forecasts after the observations and/or the forecasts have been converted to categories.

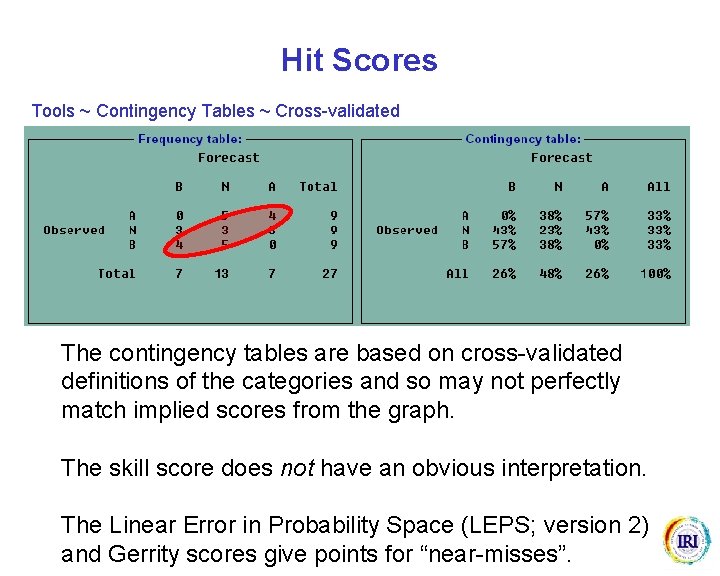

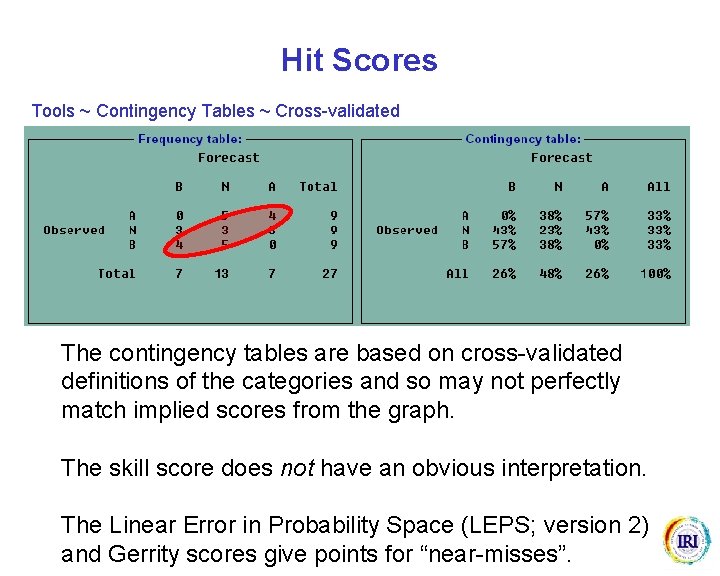

Hit Scores (Tools ~ Contingency Tables ~ Cross-validated): The contingency tables are based on cross-validated definitions of the categories and so may not perfectly match the scores implied by the graph. The skill score does not have an obvious interpretation. The Linear Error in Probability Space (LEPS; version 2) and Gerrity scores give points for “near-misses”.
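A hit score and a chance-adjusted skill score can be sketched from paired category labels as below. The adjustment used here takes the number of hits expected by chance from the marginal totals of the contingency table (the Heidke form); the source does not state which chance reference CPT uses, so treat that choice, the labels, and the data as assumptions.

```python
from collections import Counter

def hit_score(forecast_cats, observed_cats):
    """Percentage of forecasts that fall in the observed category."""
    hits = sum(f == o for f, o in zip(forecast_cats, observed_cats))
    return 100.0 * hits / len(forecast_cats)

def hit_skill_score(forecast_cats, observed_cats):
    """Hit score adjusted for hits expected by chance (assumed Heidke form)."""
    n = len(forecast_cats)
    hits = sum(f == o for f, o in zip(forecast_cats, observed_cats))
    forecast_counts = Counter(forecast_cats)
    observed_counts = Counter(observed_cats)
    # Expected hits if forecasts and observations were independent.
    expected = sum(forecast_counts[c] * observed_counts[c] / n for c in forecast_counts)
    return 100.0 * (hits - expected) / (n - expected)

# Hypothetical categories: B = below-normal, N = normal, A = above-normal.
forecast_cats = ["B", "N", "A", "A", "B", "N"]
observed_cats = ["B", "A", "A", "N", "B", "N"]

print(f"Hit score: {hit_score(forecast_cats, observed_cats):.1f}%")
print(f"Hit skill score: {hit_skill_score(forecast_cats, observed_cats):.1f}%")
```

In this example four of six forecasts are hits and two hits would be expected by chance, so the skill score is (4 - 2) / (6 - 2), or 50%.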

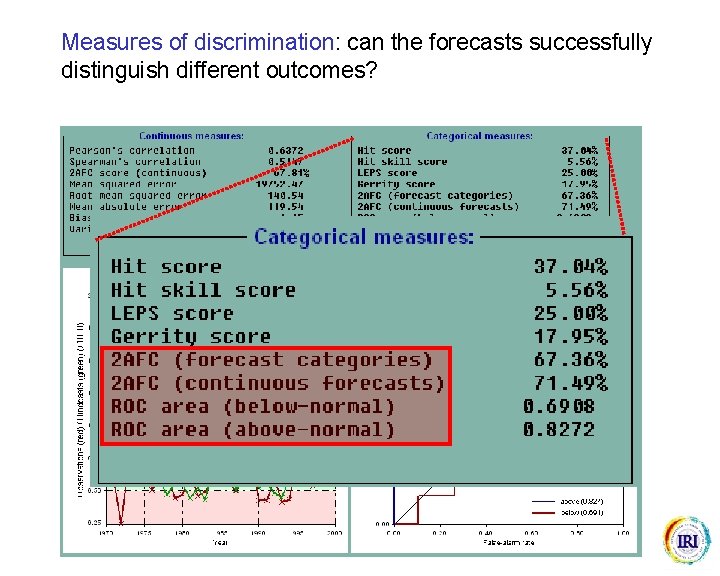

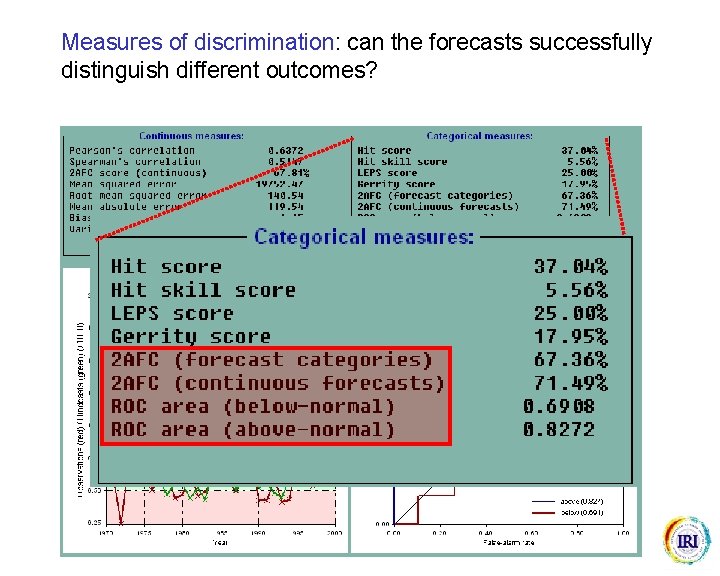

Measures of discrimination: can the forecasts successfully distinguish different outcomes?

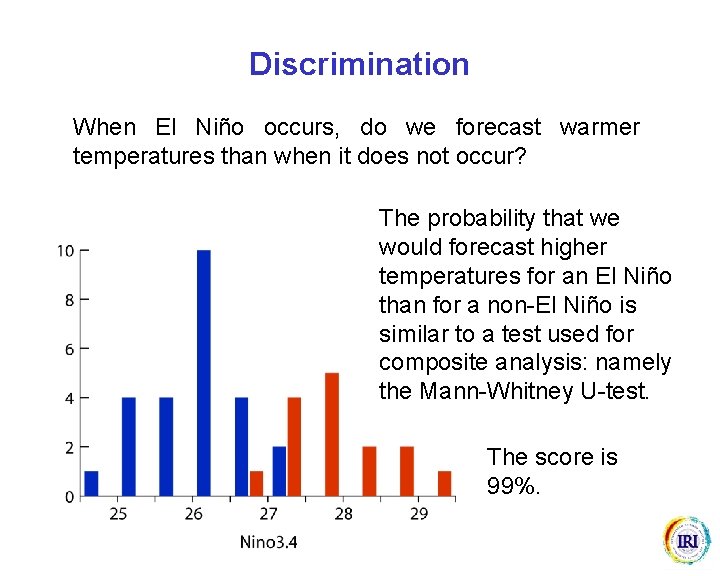

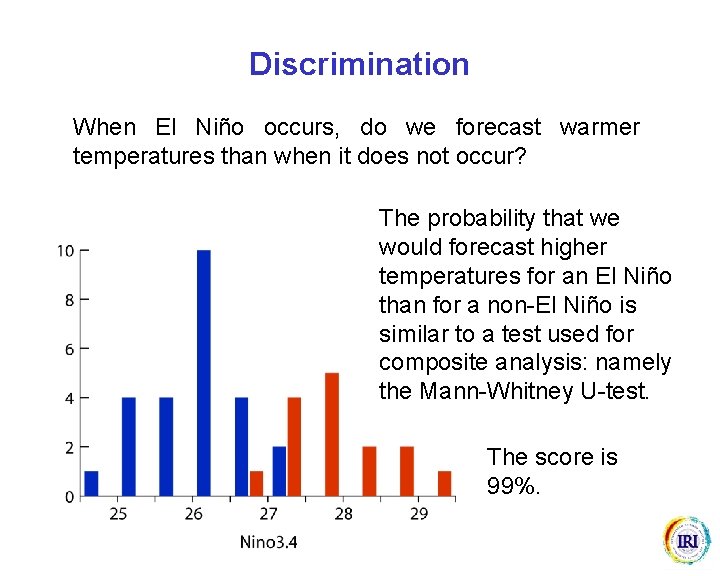

Discrimination: When El Niño occurs, do we forecast warmer temperatures than when it does not occur? The probability that we would forecast higher temperatures for an El Niño than for a non-El Niño is closely related to a test used for composite analysis, namely the Mann-Whitney U-test. The score is 99%.
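This probability can be estimated by comparing every event year with every non-event year and counting how often the forecast is higher for the event; the count is the Mann-Whitney U statistic, and dividing by the number of comparisons gives the score. The sketch below uses invented forecasts, counts ties as half a success (an assumption), and uses a hypothetical function name.

```python
def two_afc(event_forecasts, non_event_forecasts):
    """Fraction of event/non-event pairs in which the event has the higher forecast."""
    successes = 0.0
    for event in event_forecasts:
        for non_event in non_event_forecasts:
            if event > non_event:
                successes += 1.0
            elif event == non_event:
                successes += 0.5  # a tie is a coin toss
    return successes / (len(event_forecasts) * len(non_event_forecasts))

# Hypothetical forecast temperature anomalies (degrees C).
el_nino_years = [0.9, 1.4, 0.3]
other_years = [-0.5, 0.1, 0.4, -0.8]

print(f"2AFC score: {100 * two_afc(el_nino_years, other_years):.0f}%")
```

A score of 50% would mean the forecasts do no better than guessing, and 100% would mean every event year received a higher forecast than every non-event year.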

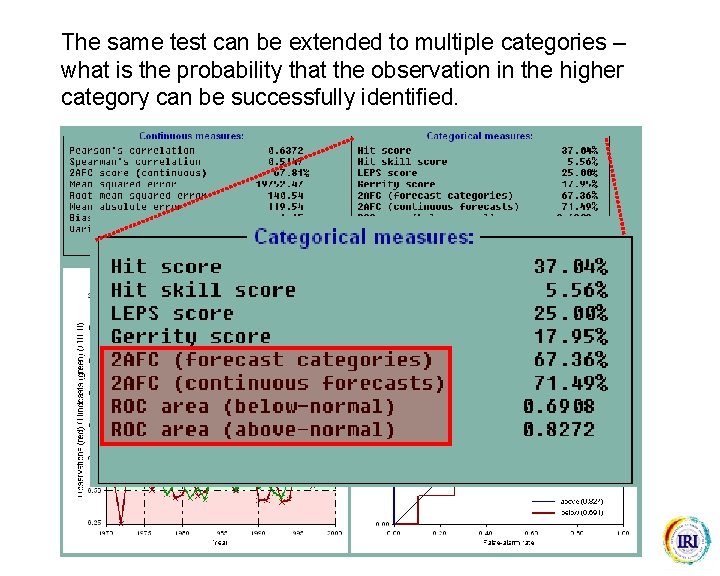

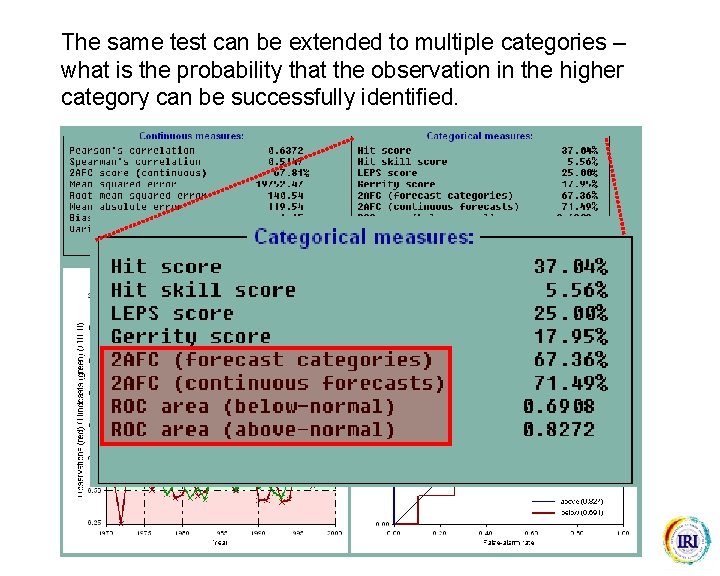

The same test can be extended to multiple categories: what is the probability that the observation in the higher category can be successfully identified?
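One simple way to sketch the multi-category extension is to compare all pairs of years whose observed categories differ and check whether the year in the higher category received the higher forecast. This is an illustrative version under that assumption, not necessarily the exact calculation CPT performs; categories are coded as integers and the data are made up.

```python
from itertools import combinations

def generalized_2afc(forecasts, observed_categories):
    """Probability that the forecast is higher for the year in the higher category."""
    successes = 0.0
    compared = 0
    for i, j in combinations(range(len(forecasts)), 2):
        if observed_categories[i] == observed_categories[j]:
            continue  # pairs in the same category carry no information
        compared += 1
        if observed_categories[i] > observed_categories[j]:
            higher, lower = i, j
        else:
            higher, lower = j, i
        if forecasts[higher] > forecasts[lower]:
            successes += 1.0
        elif forecasts[higher] == forecasts[lower]:
            successes += 0.5  # tied forecasts count as a coin toss
    return successes / compared

# Hypothetical data: 0 = below-normal, 1 = normal, 2 = above-normal.
observed_categories = [0, 1, 2, 1]
forecasts = [1.0, 3.0, 2.5, 2.0]

print(f"Generalized 2AFC: {100 * generalized_2afc(forecasts, observed_categories):.0f}%")
```

Here five pairs have different observed categories and the forecasts order four of them correctly, giving 80%.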

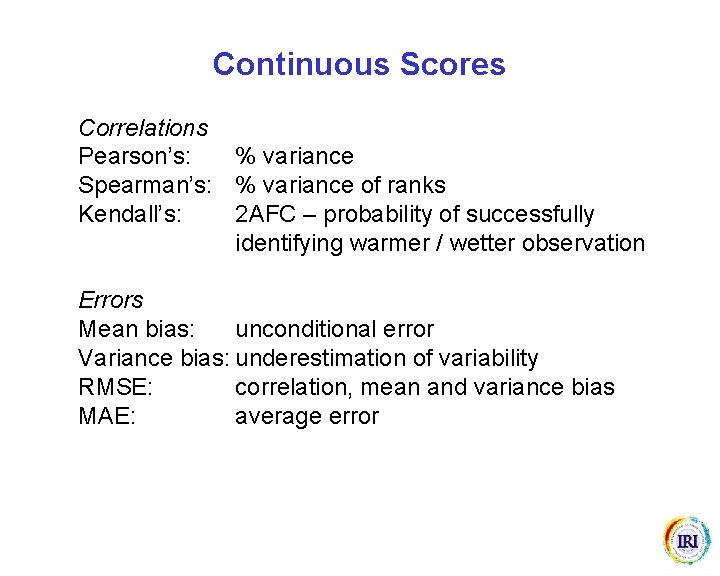

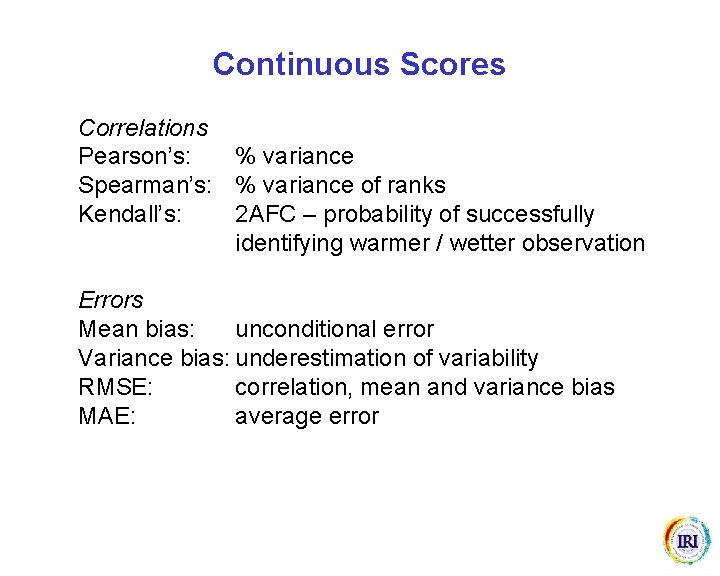

Continuous Scores

Correlations:

- Pearson’s: % variance
- Spearman’s: % variance of ranks
- Kendall’s: 2AFC, the probability of successfully identifying the warmer / wetter observation

Errors:

- Mean bias: unconditional error
- Variance bias: underestimation of variability
- RMSE: correlation, mean and variance bias
- MAE: average error

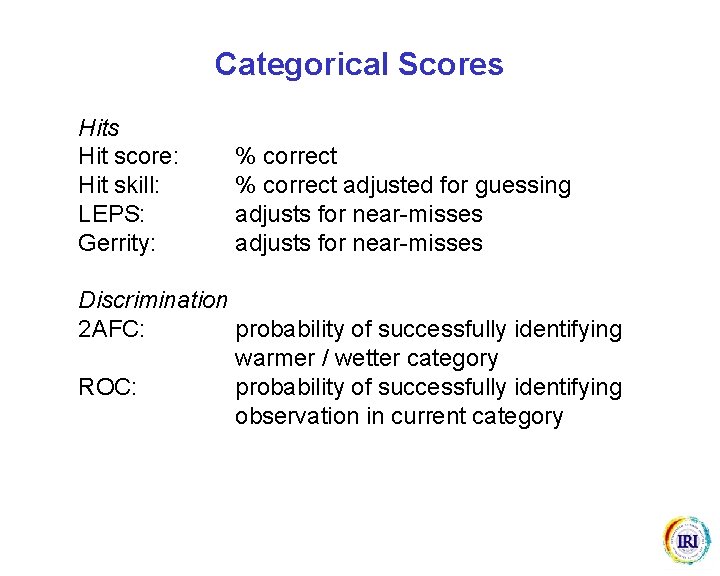

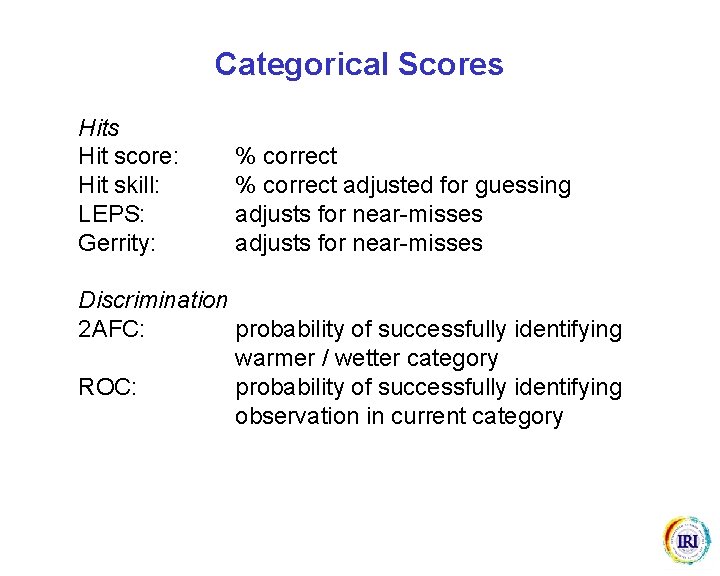

Categorical Scores

- Hit score: % correct
- Hit skill: adjusted for guessing
- LEPS, Gerrity: adjust for near-misses

Discrimination:

- 2AFC: probability of successfully identifying the warmer / wetter category
- ROC: probability of successfully identifying an observation in the current category
