Verification methods: towards a user-oriented verification

- Slides: 27

Verification methods: towards a user-oriented verification

The verification group

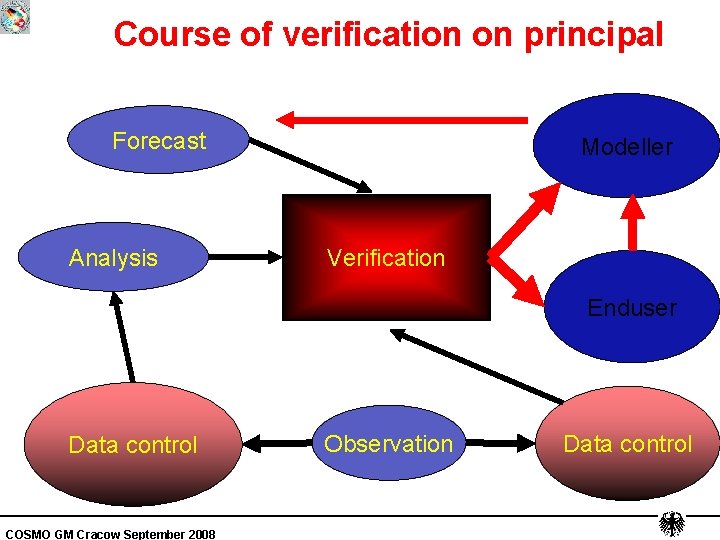

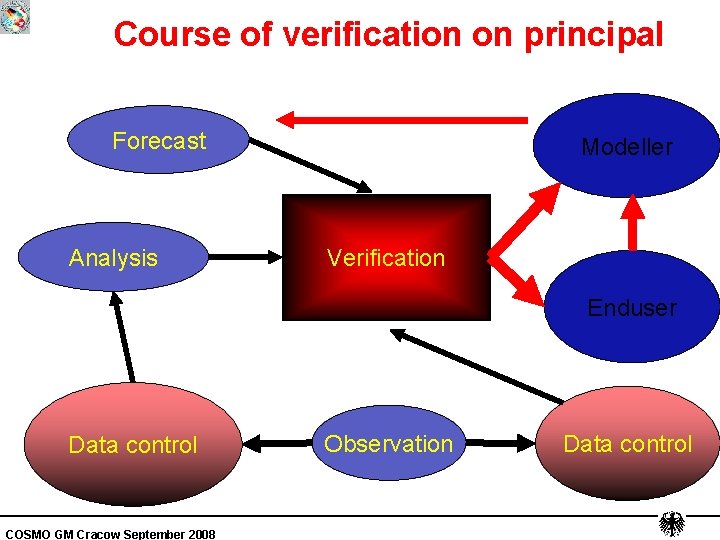

Course of verification in principle

Diagram elements: Forecast, Analysis, Observation, Data control, Verification, Modeller, End user

COSMO GM Cracow September 2008

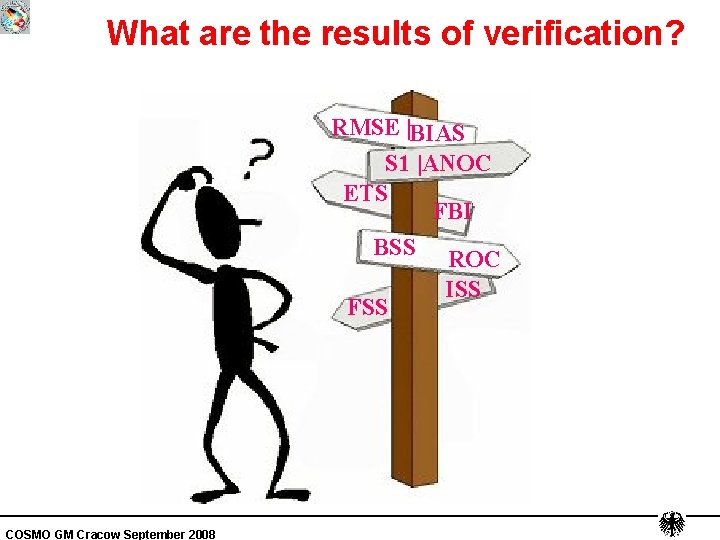

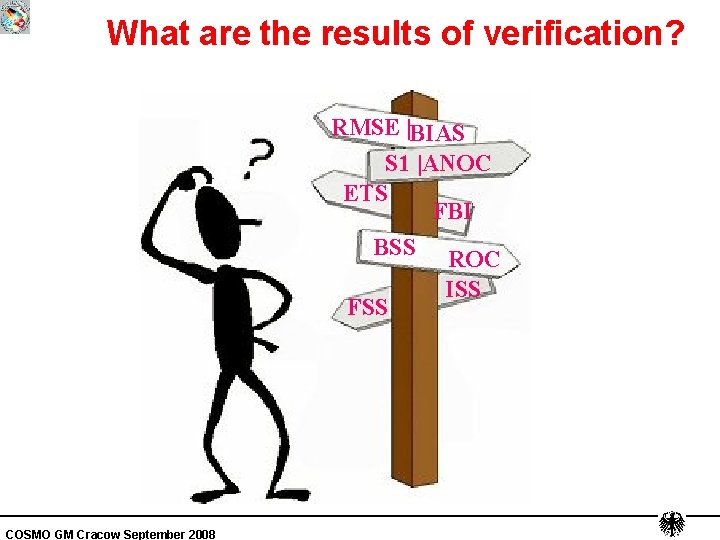

What are the results of verification?

Scores shown: RMSE, BIAS, S1, ANOC, ETS, FBI, BSS, ROC, FSS, ISS

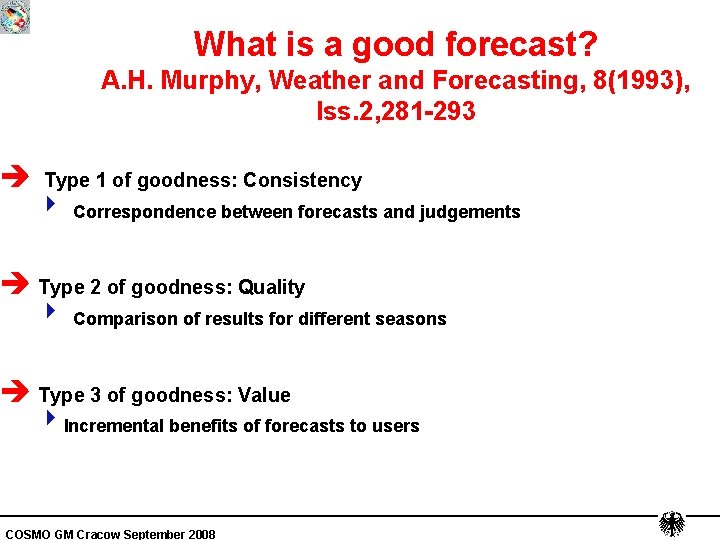

What is a good forecast?

A. H. Murphy, Weather and Forecasting, 8 (1993), Iss. 2, 281-293

- Type 1 of goodness: Consistency
  - Correspondence between forecasts and judgements
- Type 2 of goodness: Quality
  - Comparison of results for different seasons
- Type 3 of goodness: Value
  - Incremental benefits of forecasts to users

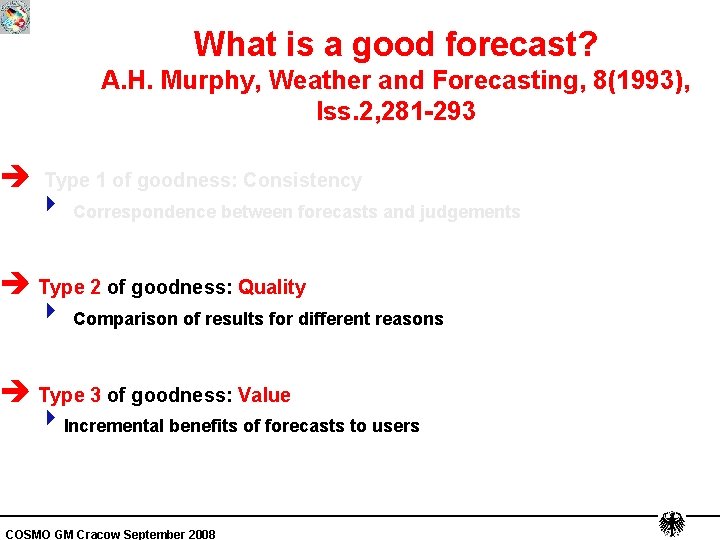

What is a good forecast?

A. H. Murphy, Weather and Forecasting, 8 (1993), Iss. 2, 281-293

- Type 1 of goodness: Consistency
  - Correspondence between forecasts and judgements
- Type 2 of goodness: Quality
  - Comparison of results for different reasons
- Type 3 of goodness: Value
  - Incremental benefits of forecasts to users

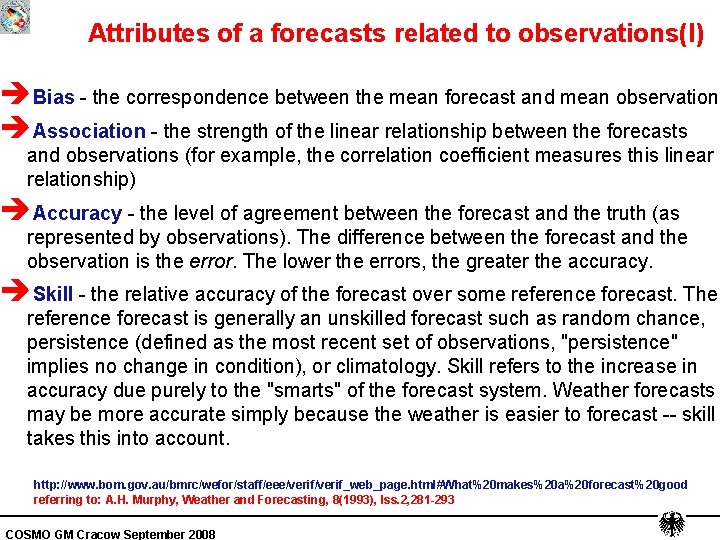

Attributes of a forecast related to observations (I)

- Bias: the correspondence between the mean forecast and the mean observation.
- Association: the strength of the linear relationship between the forecasts and observations (for example, the correlation coefficient measures this linear relationship).
- Accuracy: the level of agreement between the forecast and the truth (as represented by observations). The difference between the forecast and the observation is the error. The lower the errors, the greater the accuracy.
- Skill: the relative accuracy of the forecast over some reference forecast. The reference forecast is generally an unskilled forecast such as random chance, persistence (defined as the most recent set of observations; "persistence" implies no change in condition), or climatology. Skill refers to the increase in accuracy due purely to the "smarts" of the forecast system. Weather forecasts may be more accurate simply because the weather is easier to forecast; skill takes this into account.

Source: http://www.bom.gov.au/bmrc/wefor/staff/eee/verif_web_page.html#What%20makes%20a%20forecast%20good referring to: A. H. Murphy, Weather and Forecasting, 8 (1993), Iss. 2, 281-293
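The four attributes above can be illustrated with a minimal Python sketch. The sample values, the function names, and the choice of an MSE-based skill score against a persistence-like reference are assumptions made for illustration only; the slides do not prescribe a particular formula for skill.

```python
import math

def bias(forecasts, observations):
    """Bias: mean forecast minus mean observation."""
    n = len(forecasts)
    return sum(forecasts) / n - sum(observations) / n

def rmse(forecasts, observations):
    """Accuracy: root mean square error (lower is more accurate)."""
    n = len(forecasts)
    return math.sqrt(sum((f - o) ** 2 for f, o in zip(forecasts, observations)) / n)

def correlation(forecasts, observations):
    """Association: Pearson correlation coefficient."""
    n = len(forecasts)
    mean_f = sum(forecasts) / n
    mean_o = sum(observations) / n
    cov = sum((f - mean_f) * (o - mean_o) for f, o in zip(forecasts, observations))
    var_f = sum((f - mean_f) ** 2 for f in forecasts)
    var_o = sum((o - mean_o) ** 2 for o in observations)
    return cov / math.sqrt(var_f * var_o)

def mse_skill_score(forecasts, reference, observations):
    """Skill: 1 - MSE(forecast) / MSE(reference); 1 is perfect, 0 is no better than the reference."""
    mse_forecast = rmse(forecasts, observations) ** 2
    mse_reference = rmse(reference, observations) ** 2
    return 1.0 - mse_forecast / mse_reference

# Hypothetical data, not taken from the slides.
observed = [2.0, 4.0, 6.0, 8.0]
forecast = [3.0, 5.0, 7.0, 9.0]
persistence = [0.0, 2.0, 4.0, 6.0]  # assumed "previous observation" reference

print("bias:", bias(forecast, observed))
print("rmse:", rmse(forecast, observed))
print("correlation:", correlation(forecast, observed))
print("skill vs. persistence:", mse_skill_score(forecast, persistence, observed))
```

In this toy case the forecast has perfect association but a constant offset, which shows that a high correlation alone does not imply an unbiased forecast.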

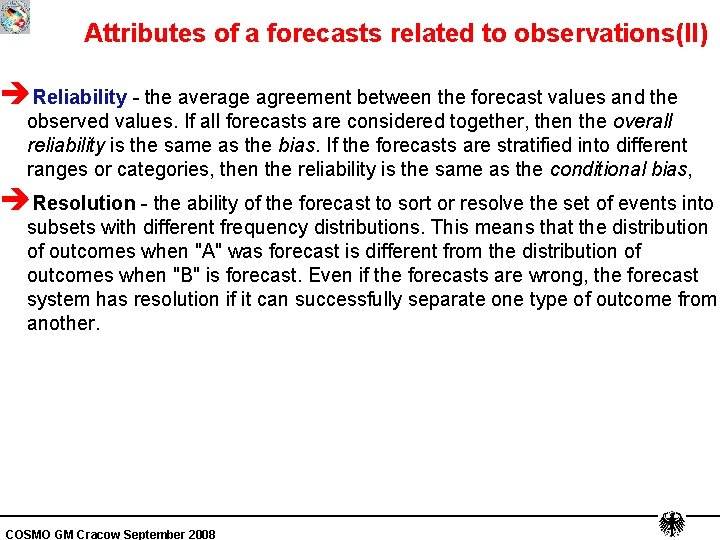

Attributes of a forecast related to observations (II)

- Reliability: the average agreement between the forecast values and the observed values. If all forecasts are considered together, then the overall reliability is the same as the bias. If the forecasts are stratified into different ranges or categories, then the reliability is the same as the conditional bias.
- Resolution: the ability of the forecast to sort or resolve the set of events into subsets with different frequency distributions. This means that the distribution of outcomes when "A" was forecast is different from the distribution of outcomes when "B" is forecast. Even if the forecasts are wrong, the forecast system has resolution if it can successfully separate one type of outcome from another.

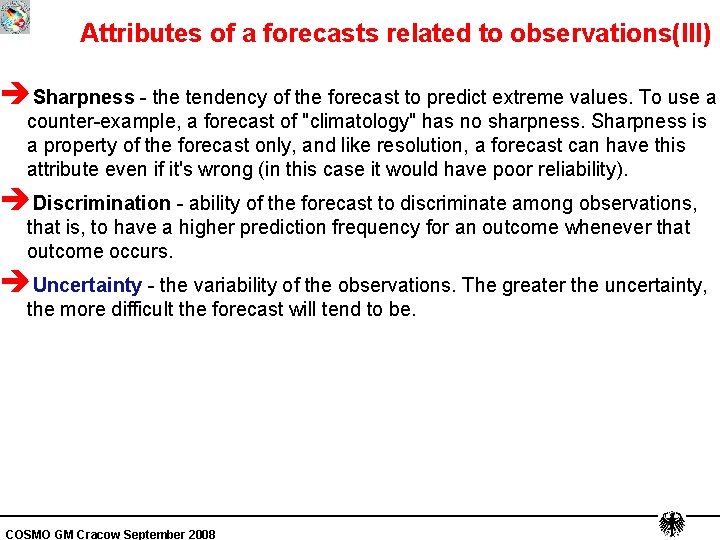

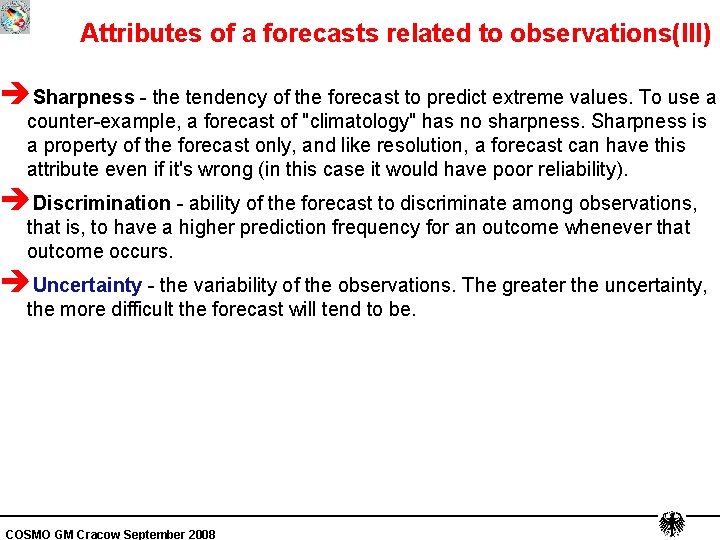

Attributes of a forecast related to observations (III)

- Sharpness: the tendency of the forecast to predict extreme values. To use a counter-example, a forecast of "climatology" has no sharpness. Sharpness is a property of the forecast only, and like resolution, a forecast can have this attribute even if it's wrong (in this case it would have poor reliability).
- Discrimination: the ability of the forecast to discriminate among observations, that is, to have a higher prediction frequency for an outcome whenever that outcome occurs.
- Uncertainty: the variability of the observations. The greater the uncertainty, the more difficult the forecast will tend to be.
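For probability forecasts of a binary event, reliability, resolution and uncertainty are commonly tied together by the decomposition of the Brier score, BS = reliability - resolution + uncertainty. The sketch below is one way to compute it, assuming the forecasts take a small set of discrete probability values so that grouping by forecast value makes the decomposition exact. The data and function names are hypothetical.

```python
from collections import defaultdict

def brier_score(probabilities, outcomes):
    """Mean squared difference between forecast probability and outcome (0 or 1)."""
    n = len(probabilities)
    return sum((p - o) ** 2 for p, o in zip(probabilities, outcomes)) / n

def brier_decomposition(probabilities, outcomes):
    """Return (reliability, resolution, uncertainty), grouping by forecast value."""
    n = len(probabilities)
    base_rate = sum(outcomes) / n
    groups = defaultdict(list)
    for p, o in zip(probabilities, outcomes):
        groups[p].append(o)
    # Reliability: weighted squared gap between forecast probability and
    # the observed frequency in that forecast category (conditional bias).
    reliability = sum(
        len(obs) * (p - sum(obs) / len(obs)) ** 2 for p, obs in groups.items()
    ) / n
    # Resolution: how far the conditional observed frequencies depart from the base rate.
    resolution = sum(
        len(obs) * (sum(obs) / len(obs) - base_rate) ** 2 for obs in groups.values()
    ) / n
    # Uncertainty: variance of the observations, independent of the forecast.
    uncertainty = base_rate * (1.0 - base_rate)
    return reliability, resolution, uncertainty

# Hypothetical forecasts and outcomes, not taken from the slides.
probs = [0.1, 0.1, 0.1, 0.1, 0.9, 0.9, 0.9, 0.9, 0.5, 0.5]
events = [0, 0, 0, 1, 1, 1, 1, 0, 1, 0]

rel, res, unc = brier_decomposition(probs, events)
bs = brier_score(probs, events)
print("reliability:", rel, "resolution:", res, "uncertainty:", unc)
print("Brier score:", bs, "reconstructed:", rel - res + unc)
```

A small reliability term and a large resolution term both lower the Brier score, while the uncertainty term depends only on the observations.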

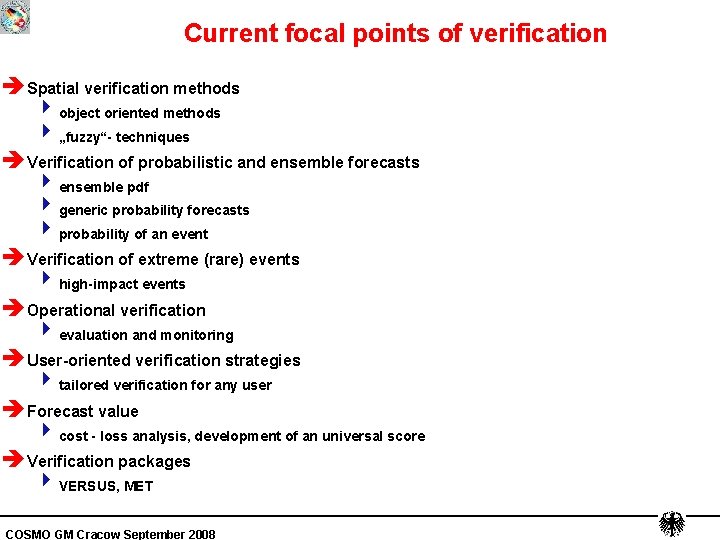

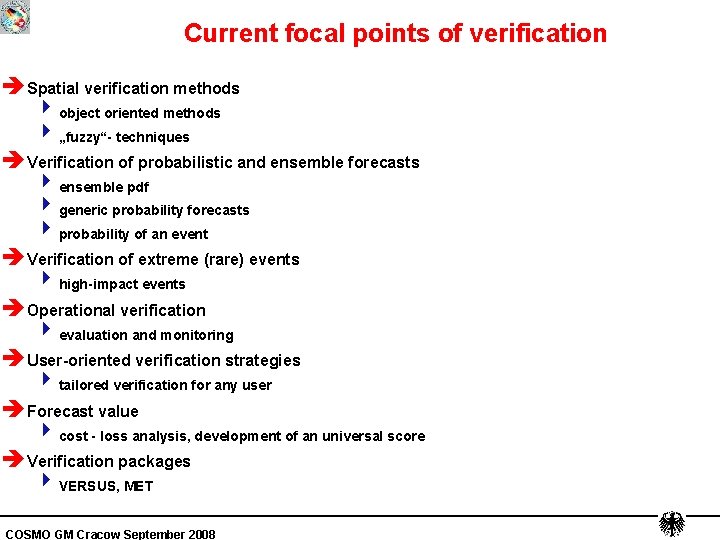

Current focal points of verification

- Spatial verification methods
  - object-oriented methods
  - "fuzzy" techniques
- Verification of probabilistic and ensemble forecasts
  - ensemble pdf
  - generic probability forecasts
  - probability of an event
- Verification of extreme (rare) events
  - high-impact events
- Operational verification
  - evaluation and monitoring
- User-oriented verification strategies
  - tailored verification for any user
- Forecast value
  - cost-loss analysis, development of a universal score
- Verification packages
  - VERSUS, MET
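The cost-loss analysis mentioned under forecast value can be sketched with the simple static cost-loss model. A user pays a cost C to protect whenever the event is forecast and suffers a loss L when the event occurs without protection. The relative value compares the forecast with climatology and with a perfect forecast. The contingency counts below are hypothetical, and the function names are chosen for illustration.

```python
def expected_expense(hits, false_alarms, misses, correct_negatives, cost_loss_ratio):
    """Mean expense per forecast in units of the loss L, acting on the forecast."""
    n = hits + false_alarms + misses + correct_negatives
    # Protection cost is paid on every "yes" forecast; the full loss on every miss.
    return (cost_loss_ratio * (hits + false_alarms) + misses) / n

def relative_value(hits, false_alarms, misses, correct_negatives, cost_loss_ratio):
    """Relative value: 1 for a perfect forecast, 0 for climatology.

    Assumes 0 < cost_loss_ratio < 1 and an event base rate strictly between 0 and 1.
    """
    n = hits + false_alarms + misses + correct_negatives
    base_rate = (hits + misses) / n
    e_forecast = expected_expense(hits, false_alarms, misses, correct_negatives,
                                  cost_loss_ratio)
    # Climatology: always protect or never protect, whichever is cheaper.
    e_climate = min(cost_loss_ratio, base_rate)
    # Perfect forecast: protect only when the event occurs.
    e_perfect = cost_loss_ratio * base_rate
    return (e_climate - e_forecast) / (e_climate - e_perfect)

# Hypothetical contingency table: 30 hits, 20 false alarms, 10 misses, 140 correct negatives.
value = relative_value(30, 20, 10, 140, cost_loss_ratio=0.2)
print("relative value at C/L = 0.2:", value)
```

Because the value depends on the user's own C/L ratio, the same forecast system can be valuable for one user and worthless for another, which is one motivation for tailored, user-oriented verification.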

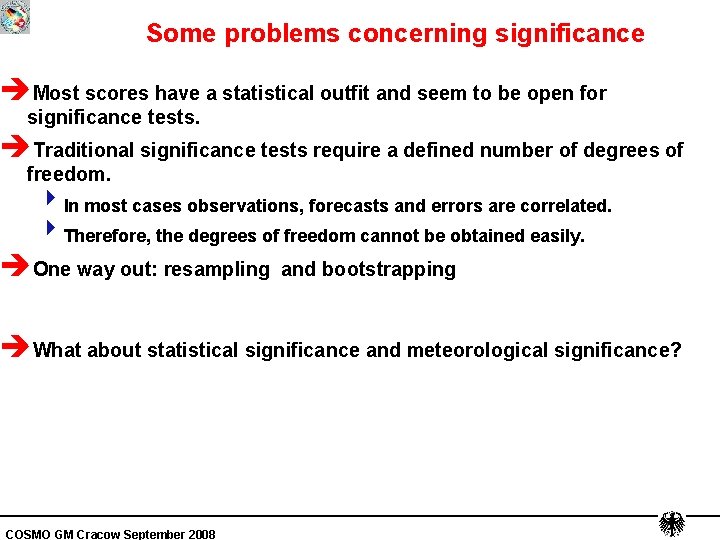

Some problems concerning significance

- Most scores are statistical in form and appear open to significance tests.
- Traditional significance tests require a defined number of degrees of freedom.
  - In most cases observations, forecasts and errors are correlated.
  - Therefore, the degrees of freedom cannot be obtained easily.
- One way out: resampling and bootstrapping
- What about statistical significance versus meteorological significance?
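One possible form of the resampling approach is a paired moving-block bootstrap, which resamples blocks of consecutive cases so that serial correlation in the errors is roughly preserved. The sketch below estimates a percentile confidence interval for the RMSE difference between two model versions. The block length, the number of resamples, the synthetic error series and all names are assumptions for illustration, not a method taken from the slides.

```python
import math
import random

def rmse(errors):
    """Root mean square of a list of forecast errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))

def block_bootstrap_rmse_diff(errors_a, errors_b, block_len=5, n_boot=1000, seed=0):
    """95% percentile interval for RMSE(a) - RMSE(b) from a paired block bootstrap."""
    rng = random.Random(seed)
    n = len(errors_a)
    starts = range(n - block_len + 1)
    n_blocks = math.ceil(n / block_len)
    diffs = []
    for _ in range(n_boot):
        indices = []
        for _ in range(n_blocks):
            start = rng.choice(starts)
            indices.extend(range(start, start + block_len))
        indices = indices[:n]
        # The same indices are used for both series, so the comparison stays paired.
        sample_a = [errors_a[i] for i in indices]
        sample_b = [errors_b[i] for i in indices]
        diffs.append(rmse(sample_a) - rmse(sample_b))
    diffs.sort()
    low = diffs[(n_boot * 25) // 1000]
    high = diffs[(n_boot * 975) // 1000 - 1]
    return low, high

def ar1_errors(n, phi, scale, rng):
    """Synthetic autocorrelated errors (AR(1)), used only as demo data."""
    value, series = 0.0, []
    for _ in range(n):
        value = phi * value + rng.gauss(0.0, scale)
        series.append(value)
    return series

demo_rng = random.Random(42)
errors_old = ar1_errors(200, 0.7, 1.5, demo_rng)  # hypothetical reference run
errors_new = ar1_errors(200, 0.7, 1.0, demo_rng)  # hypothetical experiment
ci_low, ci_high = block_bootstrap_rmse_diff(errors_old, errors_new)
print("95% interval for the RMSE difference:", ci_low, ci_high)
```

If the interval excludes zero, the difference is statistically significant at roughly the 5% level under these assumptions. Whether it is meteorologically significant remains a separate question.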

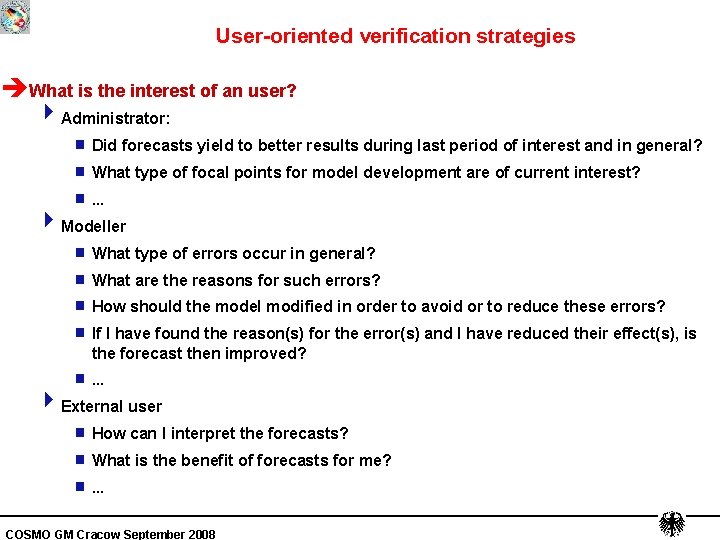

User-oriented verification strategies

- What is the interest of a user?
  - Administrator:
    - Did forecasts yield better results during the last period of interest and in general?
    - What type of focal points for model development are of current interest?
    - ...
  - Modeller:
    - What type of errors occur in general?
    - What are the reasons for such errors?
    - How should the model be modified in order to avoid or to reduce these errors?
    - If I have found the reason(s) for the error(s) and I have reduced their effect(s), is the forecast then improved?
    - ...
  - External user:
    - How can I interpret the forecasts?
    - What is the benefit of forecasts for me?
    - ...

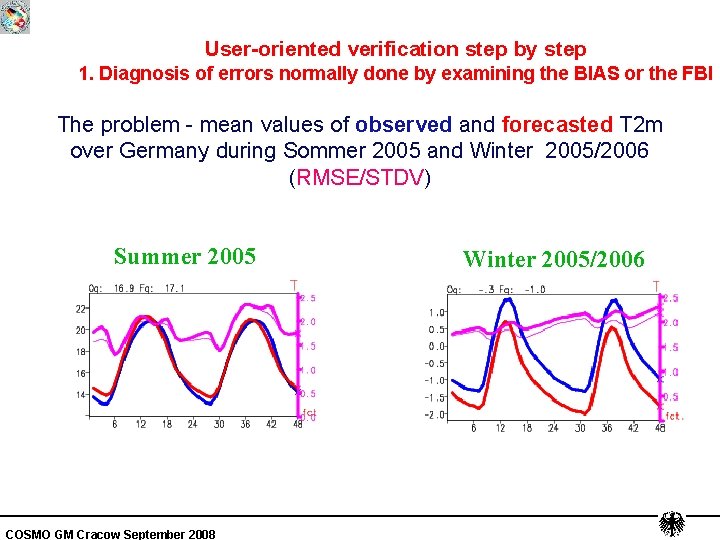

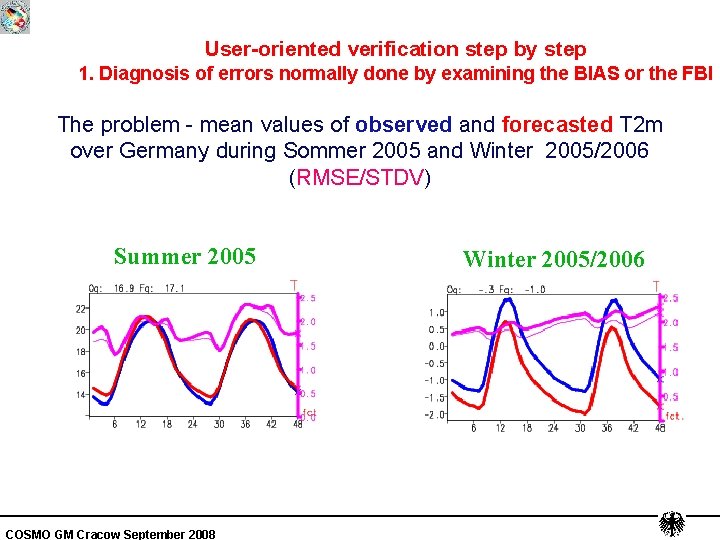

User-oriented verification step by step

1. Diagnosis of errors, normally done by examining the BIAS or the FBI

The problem: mean values of observed and forecast T2m over Germany during summer 2005 and winter 2005/2006 (RMSE/STDV)

Panels: Summer 2005, Winter 2005/2006

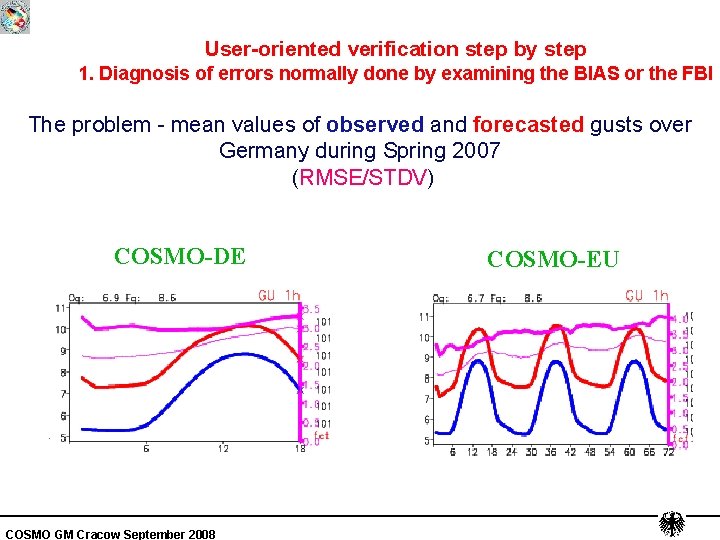

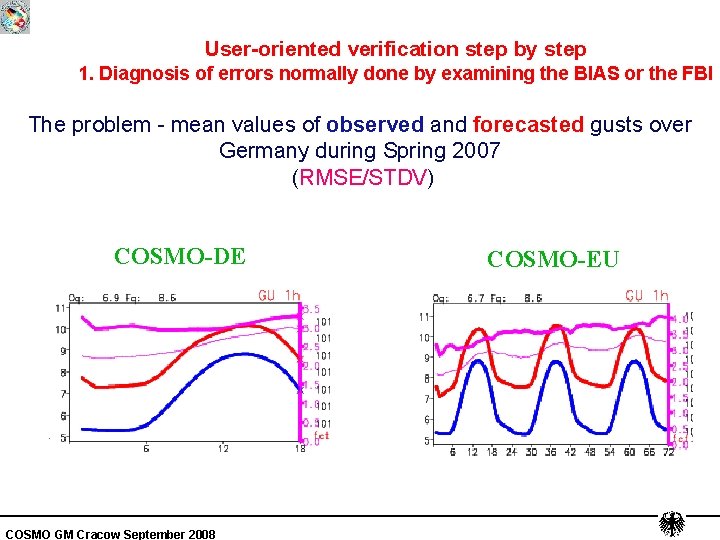

User-oriented verification step by step

1. Diagnosis of errors, normally done by examining the BIAS or the FBI

The problem: mean values of observed and forecast gusts over Germany during spring 2007 (RMSE/STDV)

Panels: COSMO-DE, COSMO-EU

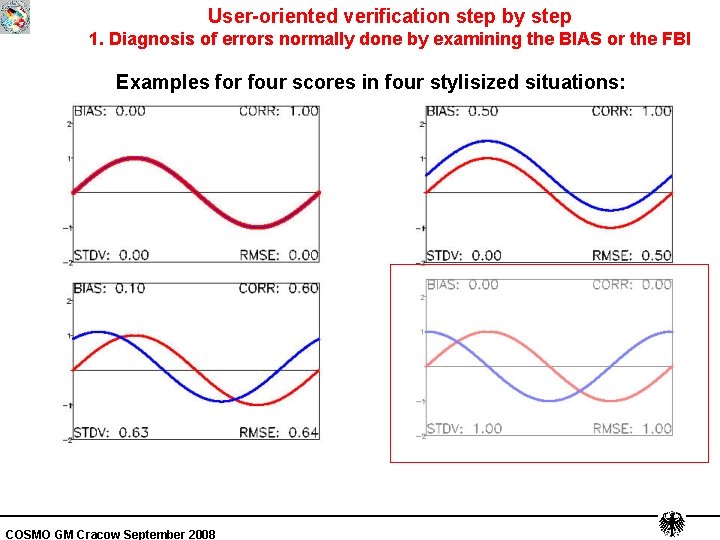

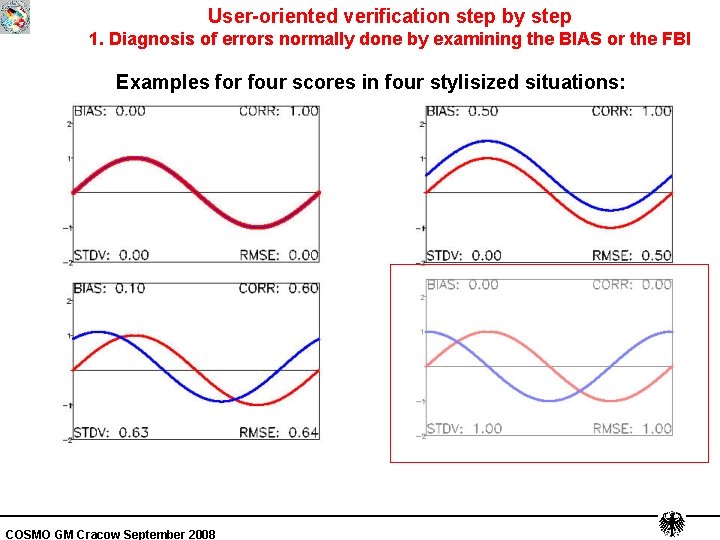

User-oriented verification step by step

1. Diagnosis of errors, normally done by examining the BIAS or the FBI

Examples for four scores in four stylized situations:
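For categorical diagnostics such as the FBI and the ETS, forecasts and observations are first reduced to a 2x2 contingency table for a chosen threshold. The sketch below uses hypothetical gust values and a threshold of 12 m/s. The function names are illustrative.

```python
def contingency_table(forecasts, observations, threshold):
    """Count hits, false alarms, misses and correct negatives for values > threshold."""
    hits = false_alarms = misses = correct_negatives = 0
    for f, o in zip(forecasts, observations):
        forecast_yes = f > threshold
        observed_yes = o > threshold
        if forecast_yes and observed_yes:
            hits += 1
        elif forecast_yes:
            false_alarms += 1
        elif observed_yes:
            misses += 1
        else:
            correct_negatives += 1
    return hits, false_alarms, misses, correct_negatives

def frequency_bias_index(hits, false_alarms, misses):
    """FBI: forecast event frequency over observed event frequency (1 is unbiased)."""
    return (hits + false_alarms) / (hits + misses)

def equitable_threat_score(hits, false_alarms, misses, correct_negatives):
    """ETS: threat score corrected for the hits expected by chance."""
    n = hits + false_alarms + misses + correct_negatives
    random_hits = (hits + false_alarms) * (hits + misses) / n
    return (hits - random_hits) / (hits + false_alarms + misses - random_hits)

# Hypothetical gust values in m/s, not taken from the slides.
observed_gusts = [14.0, 9.0, 13.0, 8.0, 20.0, 11.0, 7.0, 16.0]
forecast_gusts = [15.0, 13.0, 10.0, 7.0, 22.0, 14.0, 6.0, 18.0]

a, b, c, d = contingency_table(forecast_gusts, observed_gusts, threshold=12.0)
fbi = frequency_bias_index(a, b, c)
ets = equitable_threat_score(a, b, c, d)
print("table (hits, false alarms, misses, correct negatives):", (a, b, c, d))
print("FBI:", fbi, "ETS:", ets)
```

An FBI above 1 indicates that the event is forecast more often than it is observed, as with the gust overestimation discussed in these slides. The ETS additionally rewards placing the events correctly.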

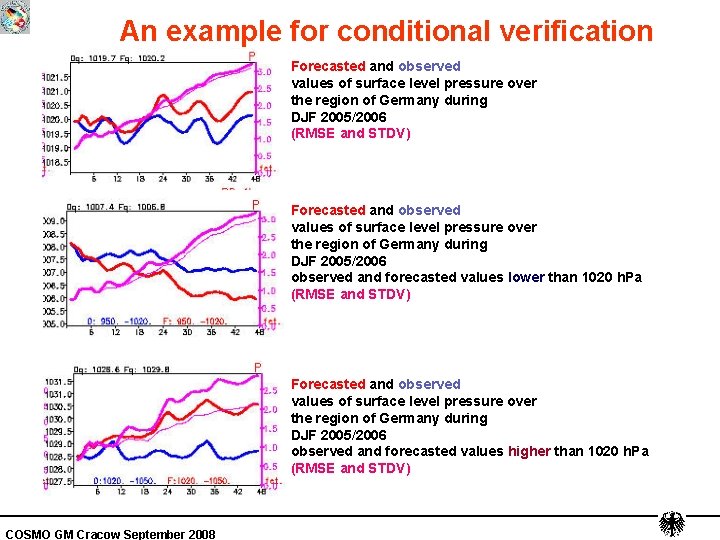

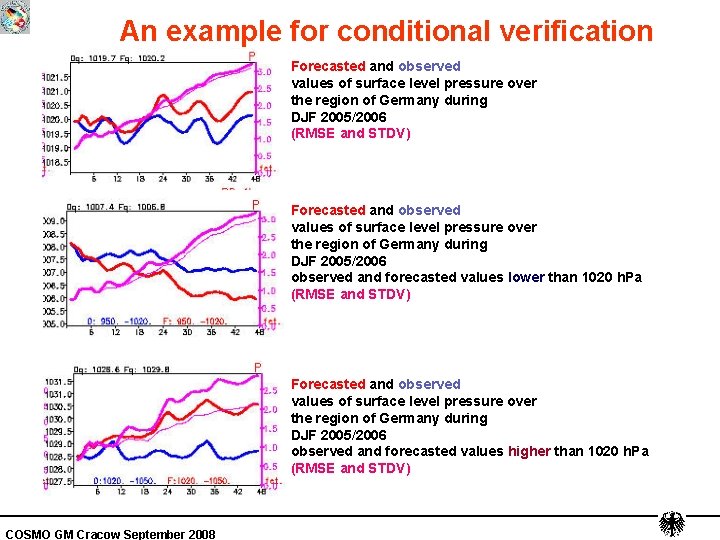

An example for conditional verification

- Forecast and observed values of surface level pressure over the region of Germany during DJF 2005/2006 (RMSE and STDV)
- Forecast and observed values of surface level pressure over the region of Germany during DJF 2005/2006, observed and forecast values lower than 1020 hPa (RMSE and STDV)
- Forecast and observed values of surface level pressure over the region of Germany during DJF 2005/2006, observed and forecast values higher than 1020 hPa (RMSE and STDV)
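Conditional verification of this kind amounts to stratifying the forecast-observation pairs before computing the scores. The sketch below splits hypothetical pressure pairs at 1020 hPa and reports RMSE and STDV for each subset. It assumes that STDV denotes the standard deviation of the forecast error, which the slides do not state explicitly.

```python
import math
import statistics

def error_scores(pairs):
    """Return bias, RMSE and standard deviation of the error for (forecast, observation) pairs."""
    errors = [f - o for f, o in pairs]
    bias = sum(errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    stdv = statistics.pstdev(errors)  # population form, so rmse**2 == bias**2 + stdv**2
    return bias, rmse, stdv

def stratify(pairs, threshold):
    """Split pairs into cases where both values are below or both are above the threshold."""
    low = [(f, o) for f, o in pairs if f < threshold and o < threshold]
    high = [(f, o) for f, o in pairs if f > threshold and o > threshold]
    return low, high

# Hypothetical surface pressure pairs (forecast, observation) in hPa.
pressure_pairs = [
    (1012.0, 1010.0), (1016.0, 1015.0), (1018.0, 1019.0),
    (1024.0, 1023.0), (1026.0, 1028.0), (1030.0, 1029.0),
    (1019.0, 1021.0),  # mixed case: falls into neither subset
]

low_pairs, high_pairs = stratify(pressure_pairs, threshold=1020.0)
for label, subset in (("all", pressure_pairs), ("< 1020 hPa", low_pairs), ("> 1020 hPa", high_pairs)):
    bias, rmse, stdv = error_scores(subset)
    print(f"{label}: n={len(subset)} bias={bias:.2f} rmse={rmse:.2f} stdv={stdv:.2f}")
```

Pairs in which forecast and observation fall on different sides of the threshold are dropped here. Whether to condition on the forecast, the observation, or both is a design choice that changes the interpretation of the result.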

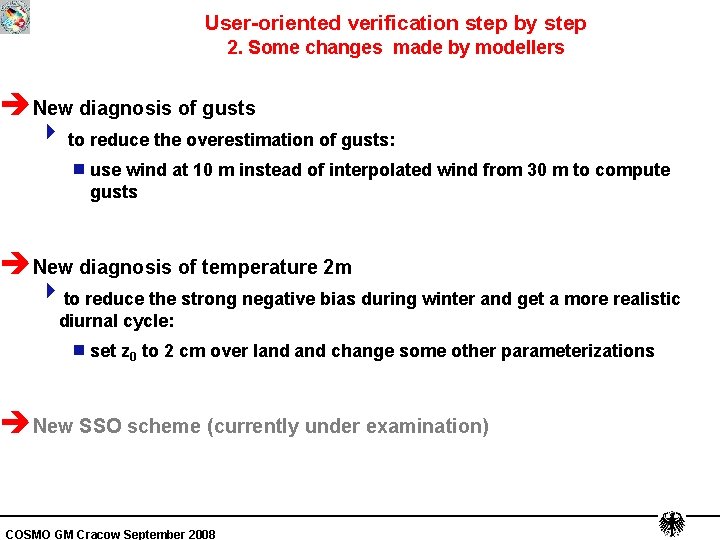

User-oriented verification step by step

2. Some changes made by modellers

- New diagnosis of gusts
  - to reduce the overestimation of gusts:
    - use wind at 10 m instead of interpolated wind from 30 m to compute gusts
- New diagnosis of 2 m temperature
  - to reduce the strong negative bias during winter and get a more realistic diurnal cycle:
    - set z0 to 2 cm over land
    - change some other parameterizations
- New SSO scheme (currently under examination)

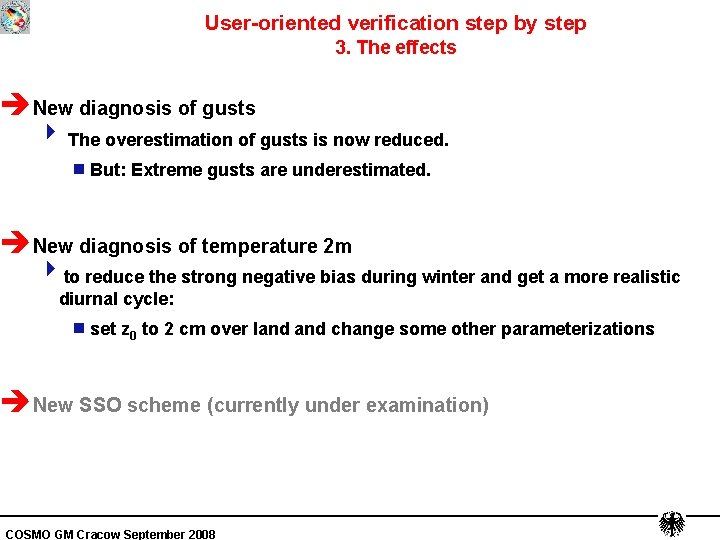

User-oriented verification step by step

3. The effects

- New diagnosis of gusts
  - The overestimation of gusts is now reduced.
    - But: extreme gusts are underestimated.
- New diagnosis of 2 m temperature
  - to reduce the strong negative bias during winter and get a more realistic diurnal cycle:
    - set z0 to 2 cm over land
    - change some other parameterizations
- New SSO scheme (currently under examination)

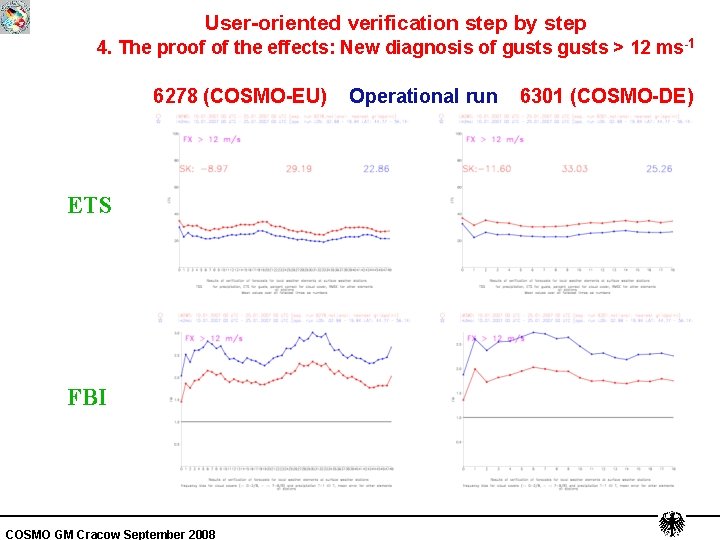

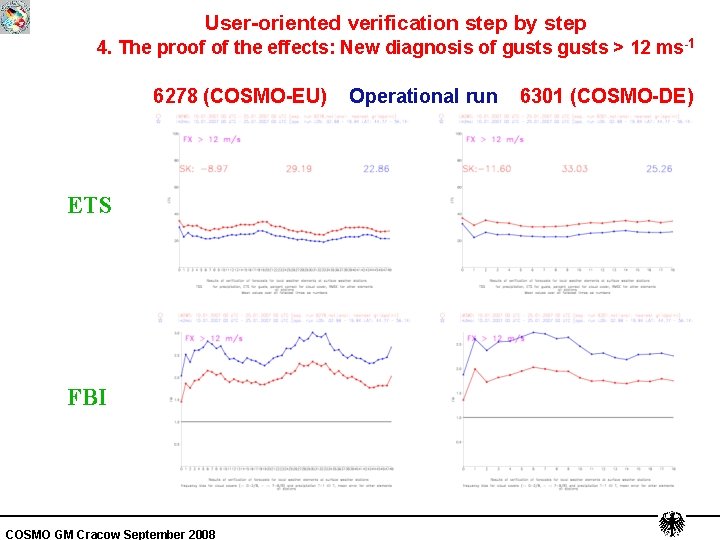

User-oriented verification step by step

4. The proof of the effects: new diagnosis of gusts > 12 m/s

Plot labels: Gust verification of the experiments; 6278 (COSMO-EU); Operational run; 6301 (COSMO-DE); ETS; FBI

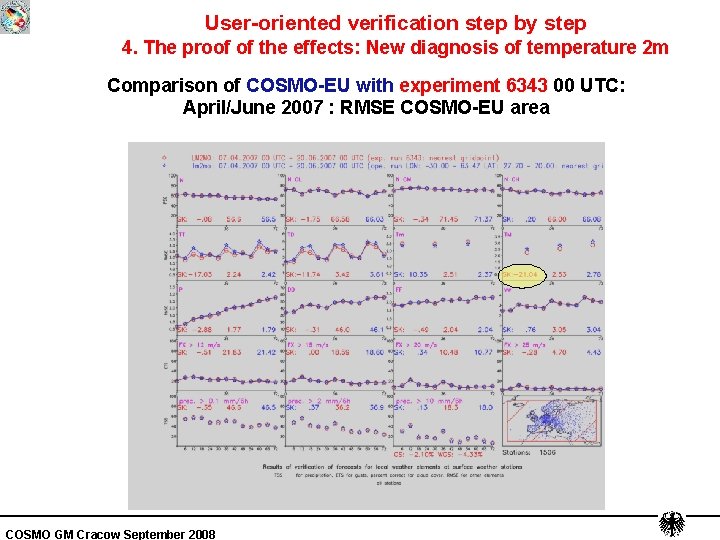

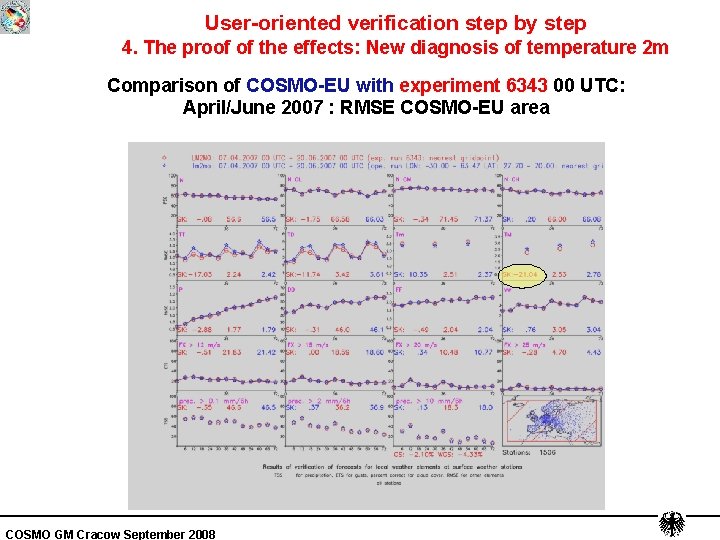

User-oriented verification step by step

4. The proof of the effects: new diagnosis of 2 m temperature

Comparison of COSMO-EU with experiment 6343, 00 UTC, April/June 2007: RMSE, COSMO-EU area

A basic law during model development: there are no gains without losses (maybe with some exceptions). Therefore, one has to look at both benefits and risks.

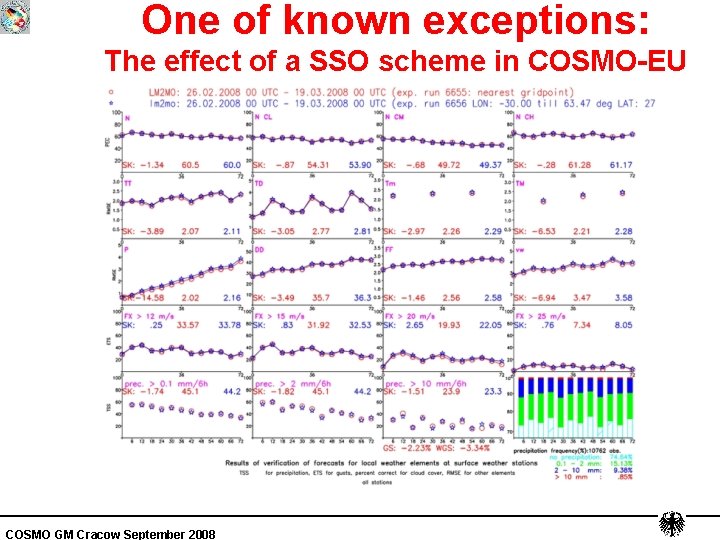

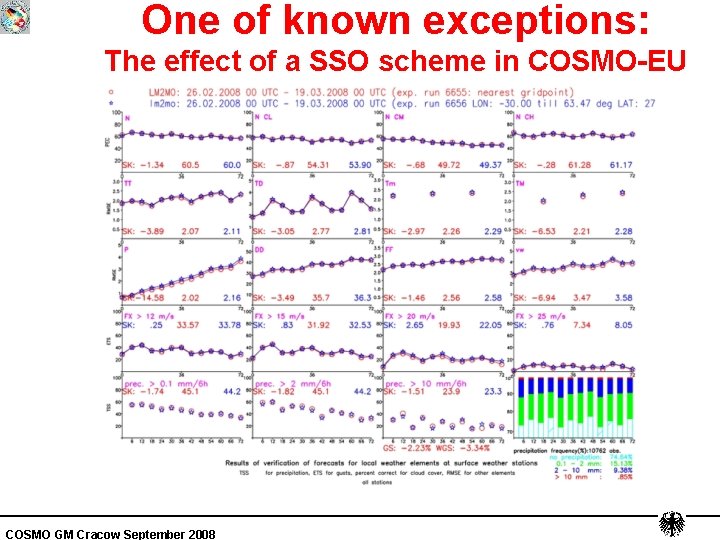

One of the known exceptions: the effect of an SSO scheme in COSMO-EU

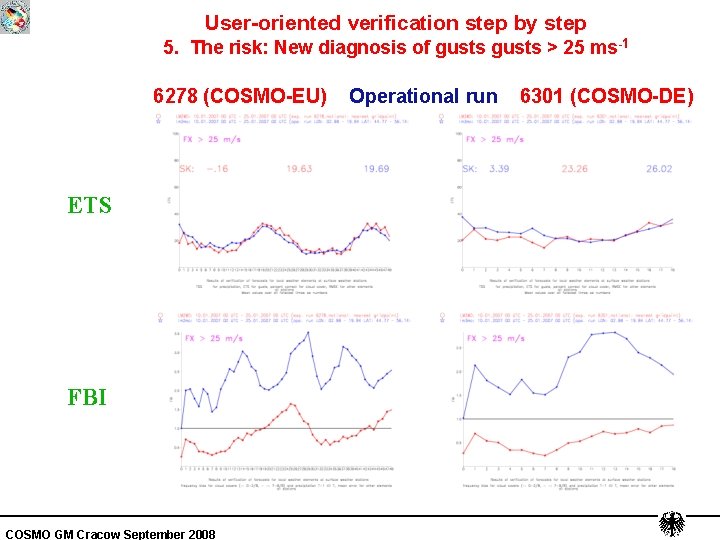

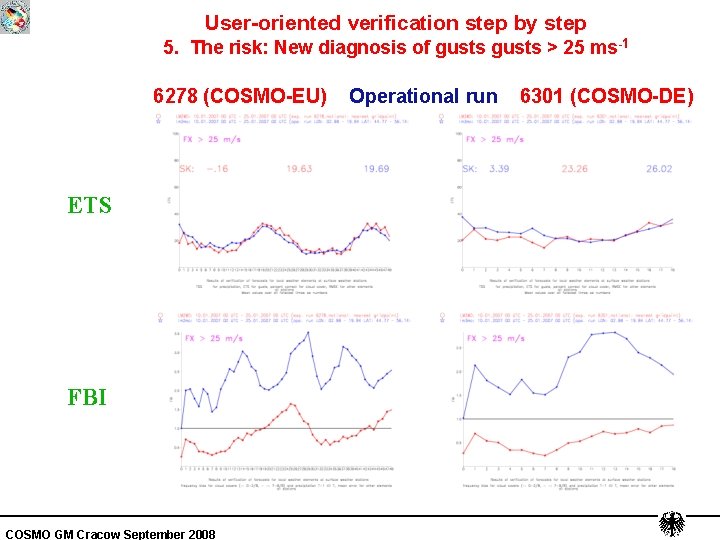

User-oriented verification step by step

5. The risk: new diagnosis of gusts > 25 m/s

Plot labels: Gust verification of the experiments; 6278 (COSMO-EU); Operational run; 6301 (COSMO-DE); ETS; FBI

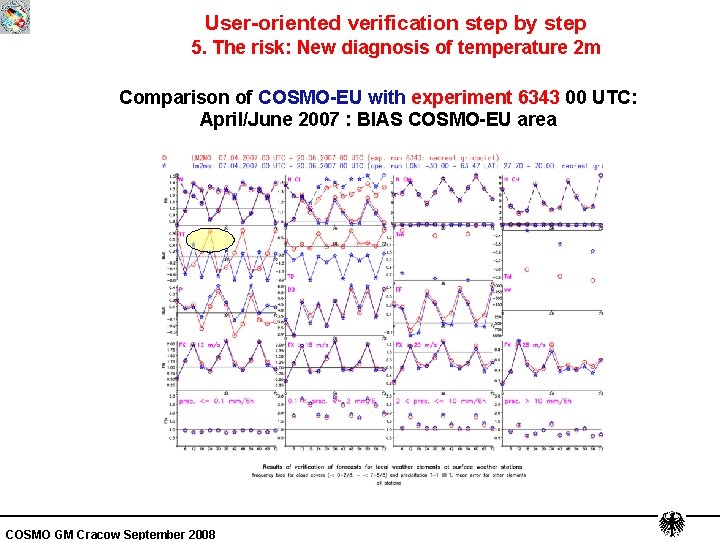

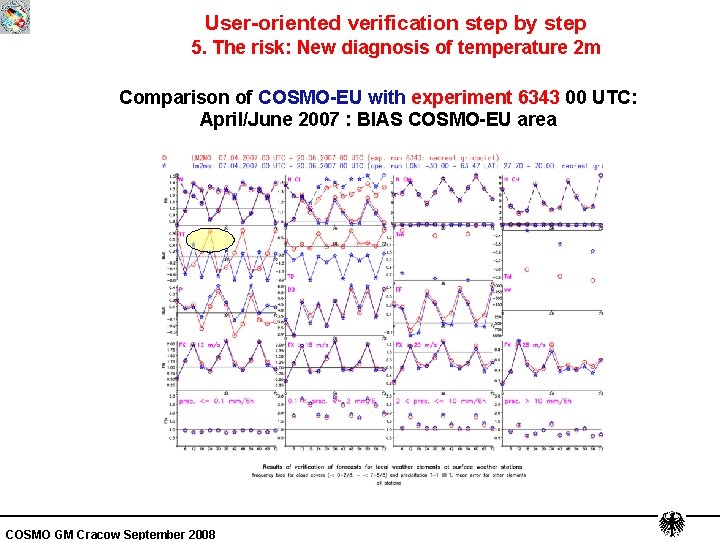

User-oriented verification step by step

5. The risk: new diagnosis of 2 m temperature

Comparison of COSMO-EU with experiment 6343, 00 UTC, April/June 2007: BIAS, COSMO-EU area

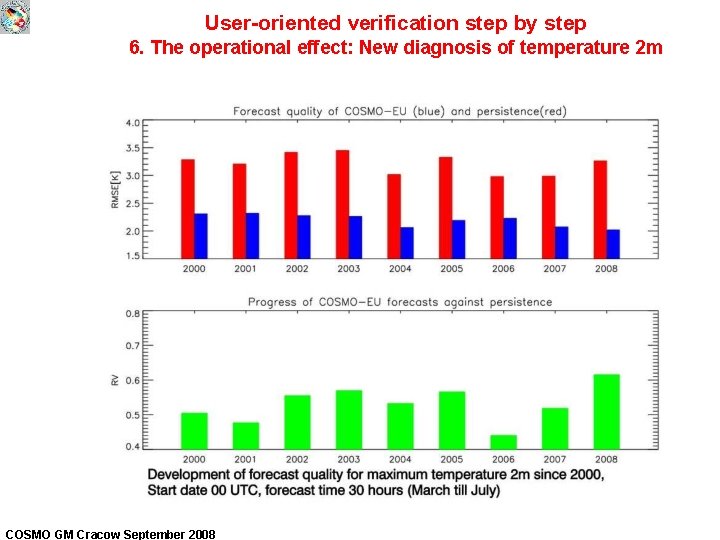

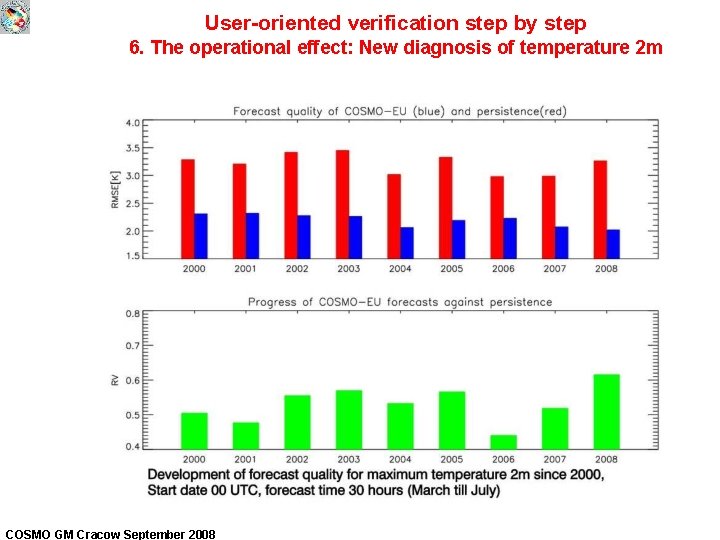

User-oriented verification step by step

6. The operational effect: new diagnosis of 2 m temperature

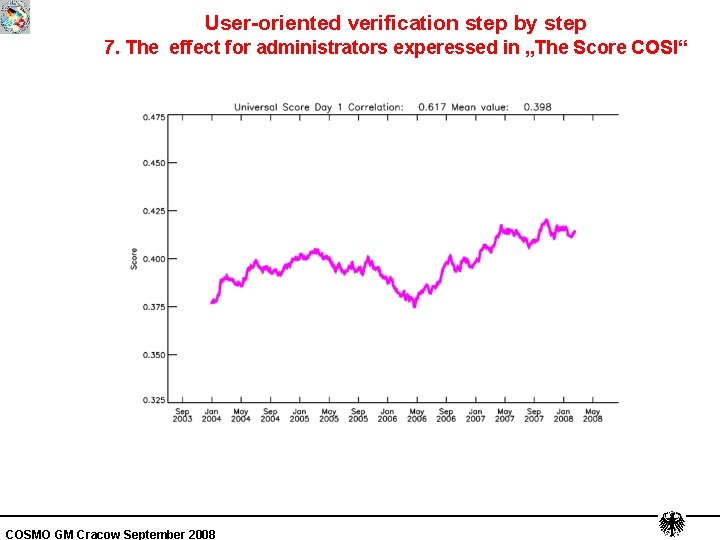

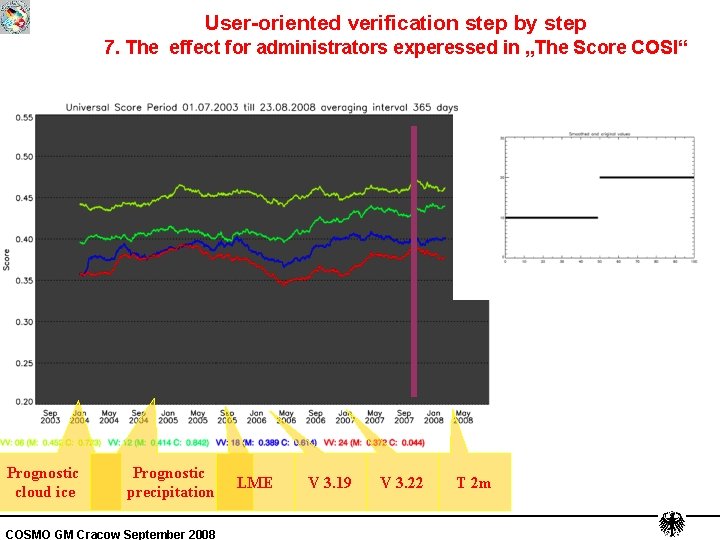

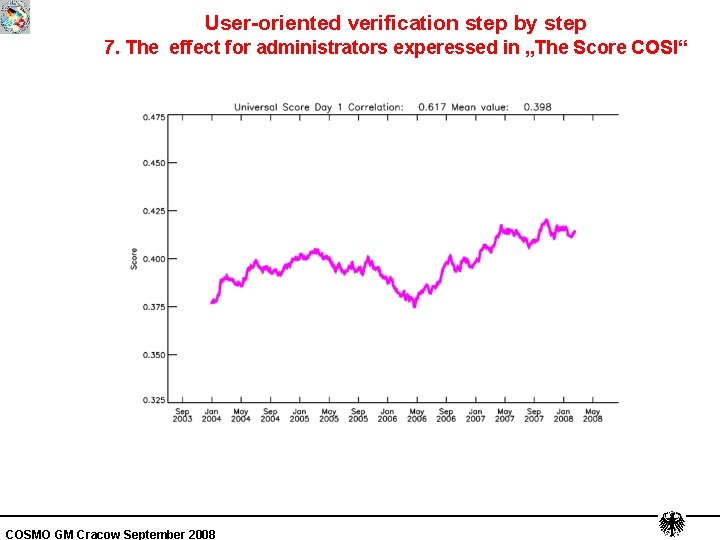

User-oriented verification step by step

7. The effect for administrators expressed in "The Score COSI"

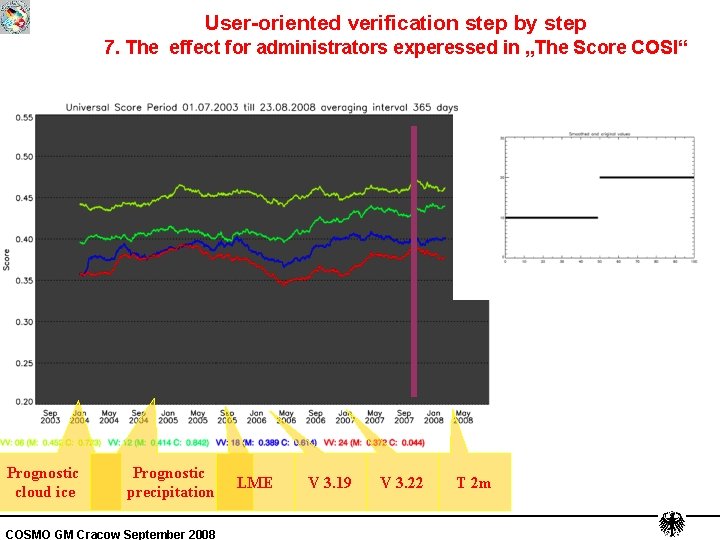

User-oriented verification step by step

7. The effect for administrators expressed in "The Score COSI"

Plot labels: asr, Prognostic cloud ice, Prognostic precipitation, LME, V3.19, V3.22, T2m

User-oriented verification step by step

8. Questions

Are there any questions for me?

Question from the verification group: What do users require of the verification process in order to make model development as effective as possible?