Various EPICS / LabVIEW Interfaces

Alexander Zhukov
Beam Instrumentation Group, SNS

Managed by UT-Battelle for the Department of Energy

Why would one want LV and EPICS?

· Need to add EPICS support (client or server side) to an existing instrument controlled by LabVIEW
· Develop an IOC using National Instruments hardware (cRIO draws more and more attention)
  – SNS
  – LANL
· Use LabVIEW as a multi-platform GUI client
  – Windows: NSTX/PPPL (P. Sichta)
  – Linux: JLAB Hall D (E. Wolin)

SNS Diagnostics using LabVIEW

Total number of LV controlled instruments >300

· One instrument = One IOC = One PC
· Beam instruments running LV on Windows
  – BPMs
  – BCMs
  – Wire scanners
  – LaserWire stations
  – Video monitors
  – Faraday Cups
  – Emittance scanners
  – …
· Typical data acquisition rate 1 Hz (special DIAG_SLOW event)

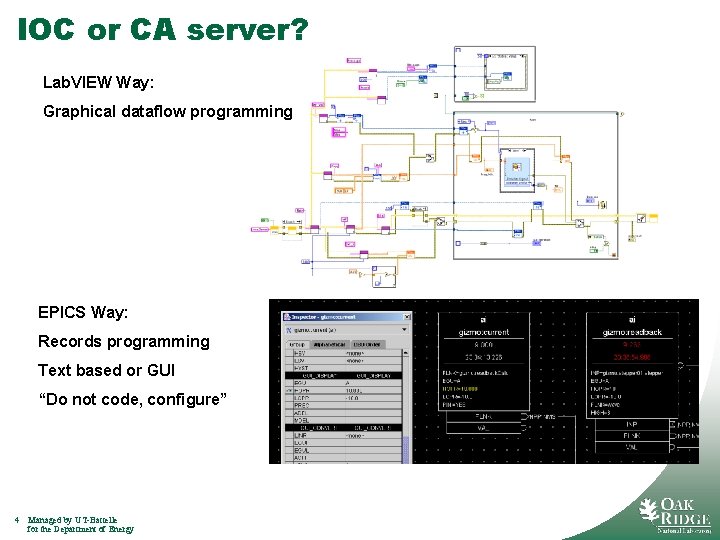

IOC or CA server?

LabVIEW way:
· Graphical dataflow programming

EPICS way:
· Records programming
· Text based or GUI
· “Do not code, configure”

What options do we have?

· Windows shared memory – SNS
· National Instruments (with help of Cosylab) cRIO implementation of shared memory (full IOC runs on VxWorks) – LANL
· National Instruments CA server: shared variable engine extension
· Simple Channel Access (SCA): OS specific (exists for Windows and Linux?)
· CaLab Windows DLL – BESSY
· LabVIEW Native Channel Access for EPICS (LANCE) – Observatory Sciences
· Pure LabVIEW CA (in development) – SNS

EPICS shared memory interface*

[Diagram: LabVIEW Application (Wire Scanner, BPM, etc) ↔ Shared Memory DLL ↔ IOC (database, CA) ↔ Channel Access. The IOC is configured from DB and DBD files.]

Shared Memory DLL calls shown in the diagram:
· CreateDBEntry()
· GetIndexByName()
· ReadData()
· WriteData()
· SetInterrupt()
· WaitForInterrupt()

*ICALEPCS talk by W. Blokland, D. Thompson
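As a rough illustration of the call pattern on this slide, the sketch below mimics the shared-memory exchange in plain Python. Only the six function names come from the slide; their signatures, the record name `WS01:Position`, and the use of a thread event to stand in for the interrupt are assumptions made for illustration. The real interface is a Windows shared-memory DLL, not a Python class.

```python
import threading


class SharedMemoryMock:
    """In-process stand-in for the shared-memory DLL (hypothetical signatures)."""

    def __init__(self):
        self._names = {}       # record name -> slot index
        self._values = []      # slot index -> current value
        self._interrupts = []  # slot index -> event signalling new data
        self._lock = threading.Lock()

    def create_db_entry(self, name, initial=0.0):    # CreateDBEntry()
        with self._lock:
            if name not in self._names:
                self._names[name] = len(self._values)
                self._values.append(initial)
                self._interrupts.append(threading.Event())
            return self._names[name]

    def get_index_by_name(self, name):               # GetIndexByName()
        return self._names[name]

    def write_data(self, index, value):              # WriteData()
        with self._lock:
            self._values[index] = value

    def read_data(self, index):                      # ReadData()
        with self._lock:
            return self._values[index]

    def set_interrupt(self, index):                  # SetInterrupt()
        self._interrupts[index].set()

    def wait_for_interrupt(self, index, timeout=None):  # WaitForInterrupt()
        fired = self._interrupts[index].wait(timeout)
        if fired:
            self._interrupts[index].clear()
        return fired


def demo():
    """One side writes a value and raises the interrupt; the other waits and reads."""
    shm = SharedMemoryMock()
    shm.create_db_entry("WS01:Position")  # hypothetical record name
    idx = shm.get_index_by_name("WS01:Position")
    received = []

    def ioc_side():
        if shm.wait_for_interrupt(idx, timeout=2.0):
            received.append(shm.read_data(idx))

    reader = threading.Thread(target=ioc_side)
    reader.start()
    shm.write_data(idx, 12.5)
    shm.set_interrupt(idx)
    reader.join()
    return received
```

Looking a record up by name once and using the index afterwards keeps the per-update path cheap, which is presumably why the interface exposes both calls.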

What is good and not so good?

Good
· You have a full IOC and everything that comes with it (including record processing)
· All EPICS tools will work by default
· Tested and relatively reliable solution used for many years at SNS

Bad
· Need to support two infrastructures: one for the EPICS IOC and one for the LabVIEW program (we can work around it, but the bundle is still a little bit ugly)
· Deployment is complicated: one has to make sure that two different parts (and processes) are behaving
· Platform specific (Windows)

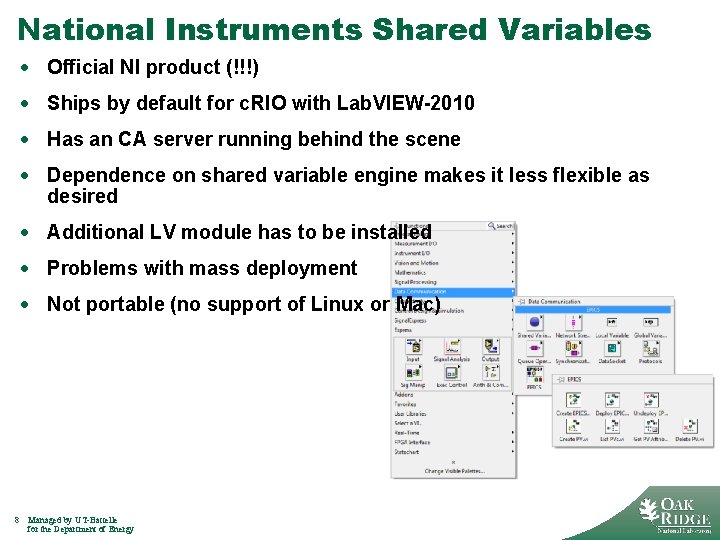

National Instruments Shared Variables

· Official NI product (!!!)
· Ships by default for cRIO with LabVIEW 2010
· Has a CA server running behind the scenes
· Dependence on the shared variable engine makes it less flexible than desired
· Additional LV module has to be installed
· Problems with mass deployment
· Not portable (no support for Linux or Mac)

SNS Pure LabVIEW (8.5+) CA server

· Pure LabVIEW implementation using UDP/TCP VIs
· Everything within LV: no priority balancing with an “alien” process
· Supports
  – ENUM
  – INT
  – CHAR
  – FLOAT
  – DOUBLE

Since last year
· Switched to Object Oriented design
· No more PV IDs; instead, a PV object with methods appropriate for the given PV type
· Naive implementation of a standard Map container in LV (no templates/generics in LabVIEW, no standard container support like List, Map etc)
· Code became more modular
· Local/Remote PVs are handled similarly by the user API
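A server built directly on UDP/TCP has to produce Channel Access messages itself. The sketch below packs the standard 16-byte CA header (big-endian: command, payload size, data type, element count, two 32-bit parameters) followed by a payload padded to a multiple of 8 bytes. It is a Python illustration, not the SNS LabVIEW code: the helper names are hypothetical, mapping the slide's "INT" to the 16-bit `DBR_INT` code is an assumption, and the extended header used for large payloads is left out.

```python
import struct

# Standard CA message header: four uint16 fields and two uint32 parameters.
CA_HEADER = struct.Struct(">HHHHII")

# DBR type codes for the five types listed on the slide
# (assumption: the slide's "INT" is the 16-bit DBR_INT / DBR_SHORT).
DBR_CODES = {"INT": 1, "FLOAT": 2, "ENUM": 3, "CHAR": 4, "DOUBLE": 6}
DBR_FORMATS = {"INT": "h", "FLOAT": "f", "ENUM": "H", "CHAR": "B", "DOUBLE": "d"}

CA_PROTO_WRITE = 4  # command code for a write request


def pad8(payload):
    """Pad a payload with zero bytes up to the next multiple of 8."""
    return payload + b"\x00" * (-len(payload) % 8)


def pack_message(command, type_name, values, param1=0, param2=0):
    """Build header + padded payload for a list of values of one DBR type."""
    body = struct.pack(">%d%s" % (len(values), DBR_FORMATS[type_name]), *values)
    payload = pad8(body)
    if len(payload) > 0xFFFF:
        raise ValueError("payload needs the extended header, not covered here")
    header = CA_HEADER.pack(command, len(payload), DBR_CODES[type_name],
                            len(values), param1, param2)
    return header + payload


def unpack_header(message):
    """Return (command, payload_size, data_type, data_count, param1, param2)."""
    return CA_HEADER.unpack_from(message)
```

For example, `pack_message(CA_PROTO_WRITE, "DOUBLE", [1.0, 2.0, 3.0])` yields a 16-byte header followed by 24 bytes of payload.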

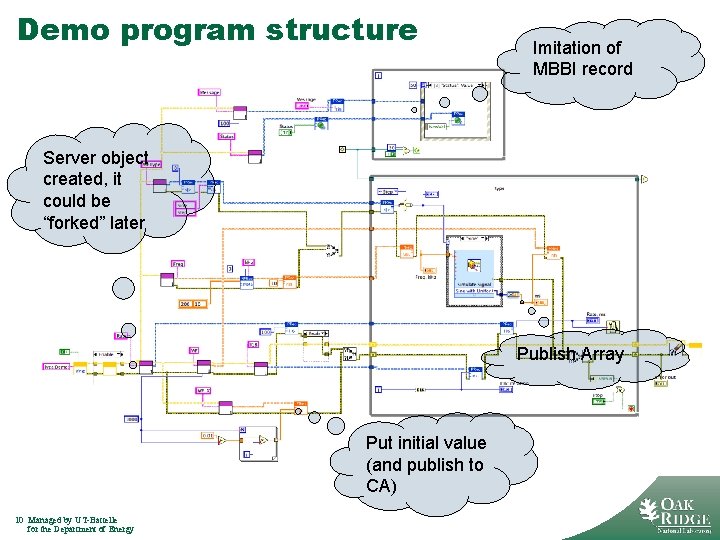

Demo program structure

Annotations on the block diagram:
· Imitation of MBBI record
· Server object created; it could be “forked” later
· Publish Array
· Put initial value (and publish to CA)
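The same structure can be written out in text form. The following is a minimal in-memory sketch in Python, assuming hypothetical class and PV names; it does no networking and only mirrors the steps annotated on the slide: create a server, publish an enum PV that imitates an MBBI record, publish an array PV, and put values that are forwarded to subscribers. A plain `dict` plays the role of the Map container.

```python
class PV:
    """Base PV object: holds a value and notifies subscribers on put()."""

    def __init__(self, name, value):
        self.name = name
        self.value = value
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def put(self, value):
        self.value = value
        for callback in self._subscribers:
            callback(self.name, value)


class EnumPV(PV):
    """Imitation of an MBBI record: an index into at most 16 state strings."""

    def __init__(self, name, states, index=0):
        if not 0 < len(states) <= 16:
            raise ValueError("an MBBI-like PV needs 1 to 16 states")
        self.states = list(states)
        self._check(index)
        super().__init__(name, index)

    def _check(self, index):
        if not 0 <= index < len(self.states):
            raise ValueError("state index out of range")

    def put(self, index):
        self._check(index)
        super().put(index)

    def state(self):
        return self.states[self.value]


class ArrayPV(PV):
    """Waveform-like PV; stores its own copy of the data on every put."""

    def put(self, values):
        super().put(list(values))


class Server:
    """Registry of published PVs keyed by name."""

    def __init__(self):
        self._pvs = {}

    def publish(self, pv):
        self._pvs[pv.name] = pv
        return pv

    def lookup(self, name):
        return self._pvs[name]


# Demo flow (PV names are hypothetical).
server = Server()
updates = []
mode = server.publish(EnumPV("DEMO:Mode", ["Idle", "Scanning", "Fault"]))
waveform = server.publish(ArrayPV("DEMO:Waveform", [0.0] * 8))
mode.subscribe(lambda name, value: updates.append((name, value)))
mode.put(1)
waveform.put([float(i) for i in range(8)])
```

Putting type checks inside each PV class is what replaces the earlier PV-ID approach: the caller holds an object whose methods already match the PV type.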

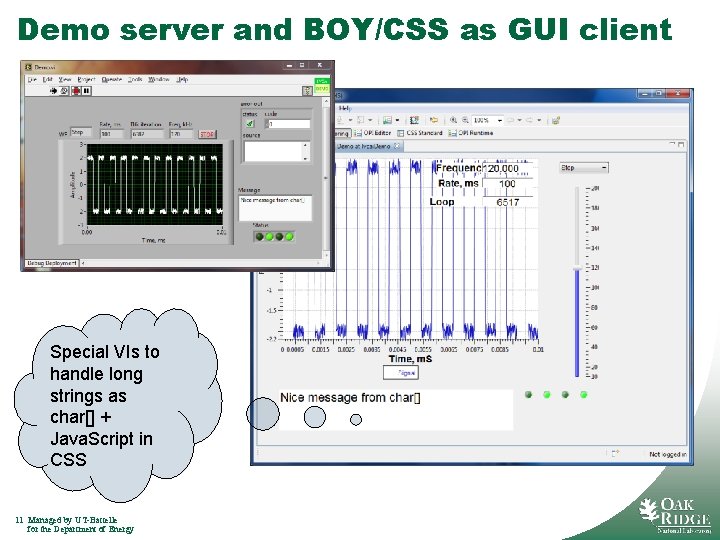

Demo server and BOY/CSS as GUI client

· Special VIs to handle long strings as char[], plus JavaScript in CSS
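A CA string value is limited to 40 bytes, so longer text is commonly carried as an array of chars and converted back on the display side. The helpers below sketch both directions in Python. They stand in for the LabVIEW VIs and the CSS script mentioned on the slide and are not that code; the function names and the 256-element array length are assumptions.

```python
def string_to_char_array(text, nelm=256):
    """Encode text as a fixed-length, NUL-padded list of byte values."""
    data = text.encode("utf-8")
    if len(data) >= nelm:
        raise ValueError("text does not fit, leave room for the terminating NUL")
    return list(data) + [0] * (nelm - len(data))


def char_array_to_string(chars):
    """Decode a char array back to text, stopping at the first NUL."""
    raw = bytes(chars)
    end = raw.find(0)
    if end != -1:
        raw = raw[:end]
    return raw.decode("utf-8")
```

Usage: `char_array_to_string(string_to_char_array(message))` returns `message` unchanged for any text that fits in the array.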

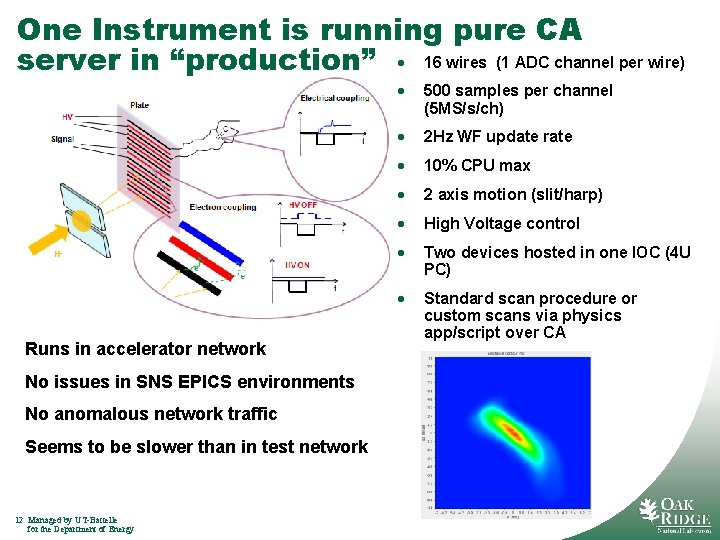

One instrument is running the pure CA server in “production”

· 16 wires (1 ADC channel per wire)
· 500 samples per channel (5 MS/s/ch)
· 2 Hz WF update rate
· 10% CPU max
· 2 axis motion (slit/harp)
· High Voltage control
· Two devices hosted in one IOC (4U PC)
· Standard scan procedure or custom scans via physics app/script over CA

Observations:
· Runs in the accelerator network
· No issues in SNS EPICS environments
· No anomalous network traffic
· Seems to be slower than in the test network
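The figures on this slide imply a fairly light data load, which a few lines of arithmetic make explicit. The sketch below uses the slide's numbers; the 4 bytes per sample (a 32-bit float) is an assumption, and protocol overhead is ignored.

```python
def waveform_load(wires=16, samples=500, sample_rate_hz=5e6,
                  update_hz=2, bytes_per_sample=4):
    """Derive acquisition window and per-client payload rate from slide figures."""
    window_s = samples / sample_rate_hz               # time span of one waveform
    samples_per_s = wires * samples * update_hz       # samples published per second
    payload_bytes_per_s = samples_per_s * bytes_per_sample
    return {
        "window_us": window_s * 1e6,
        "samples_per_s": samples_per_s,
        "payload_mbit_per_s": payload_bytes_per_s * 8 / 1e6,
    }


load = waveform_load()
```

Each waveform covers 100 µs of signal, and all 16 waveforms together amount to 16,000 samples per second, about 0.5 Mbit/s of payload per subscribed client under the 4-byte assumption.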

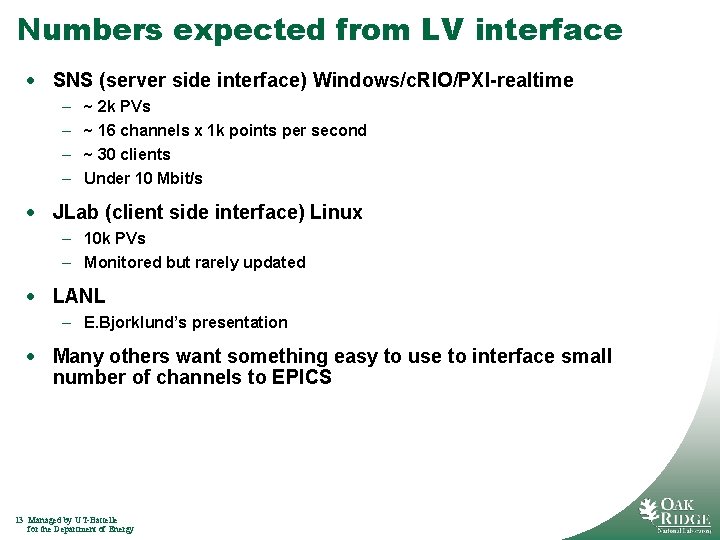

Numbers expected from LV interface

· SNS (server side interface), Windows/cRIO/PXI-realtime
  – ~2k PVs
  – ~16 channels x 1k points per second
  – ~30 clients
  – Under 10 Mbit/s
· JLab (client side interface), Linux
  – 10k PVs
  – Monitored but rarely updated
· LANL: see E. Bjorklund’s presentation
· Many others want something easy to use to interface a small number of channels to EPICS

Performance benchmarking

· The biggest problem is setting up a test environment and test case
· Number of PVs, clients connected, PV update rate, PV (array) size, and CPU power form a configuration space with at least 5 dimensions
· Typical numbers:
  – SNS (pure LV version): one 1k WF at 300 Hz with 5 clients results in ~20% CPU usage on an iMac with an i7
  – NI cRIO-9014 (400 MHz, 128 MB), CA server from NI: 1000 PVs/sec, fastest update rate 2 ms
· Generally, performance doesn’t seem to be an issue in any of the implementations when applied to realistic situations
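One way to make the five-dimensional configuration space concrete is to enumerate it as a grid of test cases and estimate the payload rate of each. The sketch below is an illustration in Python, not the benchmark used for the numbers above: the axis values and CPU labels are hypothetical, "1k WF" is read as a 1000-element waveform, 8 bytes per element (doubles) is an assumption, and protocol overhead is ignored.

```python
import itertools


def parameter_grid(axes):
    """Yield one dict per combination of the values given for each axis."""
    names = list(axes)
    for combo in itertools.product(*(axes[name] for name in names)):
        yield dict(zip(names, combo))


def payload_mbit_per_s(cfg, bytes_per_element=8):
    """Payload rate if every client monitors every PV (no protocol overhead)."""
    elements_per_s = (cfg["n_pvs"] * cfg["array_size"]
                      * cfg["update_hz"] * cfg["n_clients"])
    return elements_per_s * bytes_per_element * 8 / 1e6


# Hypothetical axis values; each axis is one dimension of the test space.
axes = {
    "n_pvs": [1, 1000],
    "n_clients": [1, 5],
    "update_hz": [1, 300],
    "array_size": [1, 1000],
    "cpu": ["400 MHz embedded", "desktop i7"],
}
cases = list(parameter_grid(axes))

# The SNS case quoted above: one 1k waveform at 300 Hz with 5 clients.
sns_case = {"n_pvs": 1, "n_clients": 5, "update_hz": 300,
            "array_size": 1000, "cpu": "desktop i7"}
sns_rate = payload_mbit_per_s(sns_case)
```

Even two values per axis already give 32 test cases, which is the practical difficulty the slide points at. Under the stated assumptions the SNS case corresponds to about 96 Mbit/s of payload.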

Summary

· Many LV EPICS interfaces exist
· There is no ideal one
· The need for a full-featured IOC is the key criterion in the selection process
· Windows shared memory is still the default way at SNS
· The NI version is getting better
· The SNS pure LV version is cross-platform and seems to satisfy all needs, but is not finalized yet
  – One instrument (Windows PC) deployed
  – Three (cRIO) will go into production in Feb 2011 (NI implementation as plan B)
  – Client side to be ready for testing by December 2010
  – If anyone is interested, we can accommodate requests, since the API is not frozen yet
  – CA Client is the biggest TODO for the near future
