Value-Added Teacher Evaluation: Explanations and Implications for Michigan Music Educators


Value-Added Teacher Evaluation: Explanations and Implications for Michigan Music Educators
- Colleen Conway, University of Michigan (Session Presider)
- Abby Butler, Wayne State University
- Phillip Hash, Calvin College
- Cynthia Taggart, Michigan State University

Overview of Michigan Legislation (Signed July 19, 2011)
- “…with the involvement of teachers and school administrators, the board of a school district…shall adopt and implement for all teachers and school administrators a rigorous, transparent, and fair performance evaluation system that does all of the following:”
  - Measures student growth
  - Provides relevant data on student growth
  - Evaluates a teacher's job performance “using multiple rating categories that take into account data on student growth as a significant factor” (PA 102, p. 2).

Overview of Michigan Legislation (cont.)
- Percentage of the evaluation based on student growth:
  - 2013-14: 25%; 2014-15: 40%; 2015-16: 50%
- All teachers evaluated annually
  - Review of lesson plans with standards
  - Rated as highly effective, effective, minimally effective, or ineffective
- Evaluations vs. seniority in personnel decisions
- National, state, and local assessments allowed

MDE Will Provide
- Measures for every educator, regardless of subject taught, based on 2009-10 and 2010-11 data:
  - Student growth levels in reading and math
  - Student proficiency levels in math, reading, writing, science, and social studies
  - A foundational measure of student proficiency and improvement (the same for every teacher in a school)
- Source: Understanding Michigan's Educator Evaluations, MDE (December 2010)
- How will these data be used for arts educators?
  - Currently up to school districts
  - Might be specified by the state after this year

Governor’s Council on Educator Effectiveness
- By April 30, 2012, submit a report that recommends:
  - A student growth and assessment tool
  - State evaluation tools for teachers and administrators
  - Parameters for effectiveness rating categories
  - All subject to legislative approval

Recommendations for Music Teachers

Recommendations for Music Educators

Be active within your educational communities:
- State level: Be involved in developing and implementing curricula that clearly state what students should know and be able to do.
- District level: Work with administrators to identify and develop objective, valid measures of curricular goals before the evaluation cycle begins.

Learn as much as you can about assessment:
- Use a variety of assessments that are valid for measuring growth and achievement, so that together they paint a rich picture of each student's musicianship.
- Consult colleagues and experts for assistance as needed.

Adjust your teaching practice to embrace assessment:
- Make assessment a naturalistic, regular part of nearly every class period.
- Include opportunities for individual responses.

Assessment: Abby Butler, Wayne State University

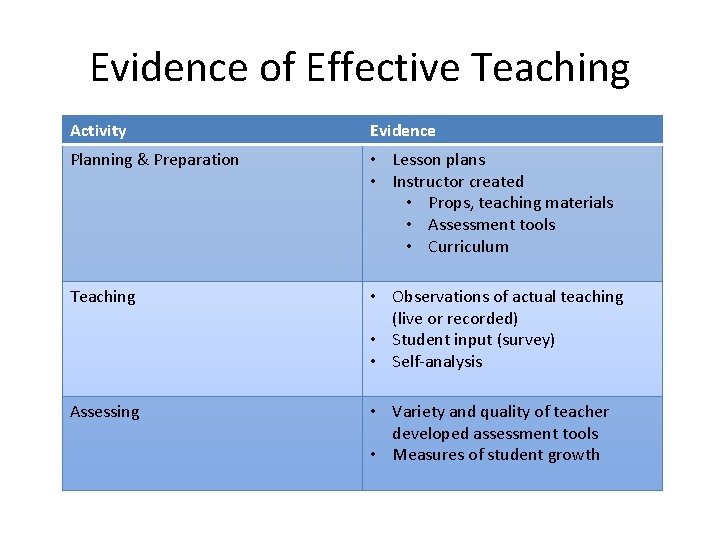

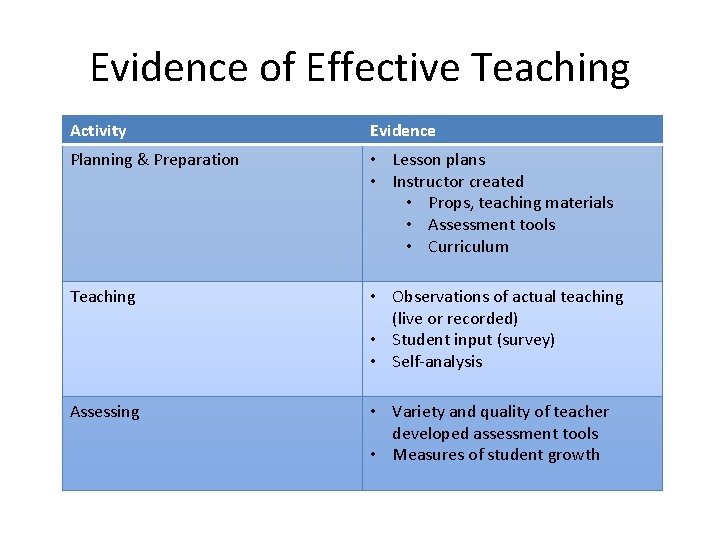

Evidence of Effective Teaching

| Activity | Evidence |
|---|---|
| Planning & Preparation | Lesson plans; instructor-created props, teaching materials, and assessment tools; curriculum |
| Teaching | Observations of actual teaching (live or recorded); student input (survey); self-analysis |
| Assessing | Variety and quality of teacher-developed assessment tools; measures of student growth |

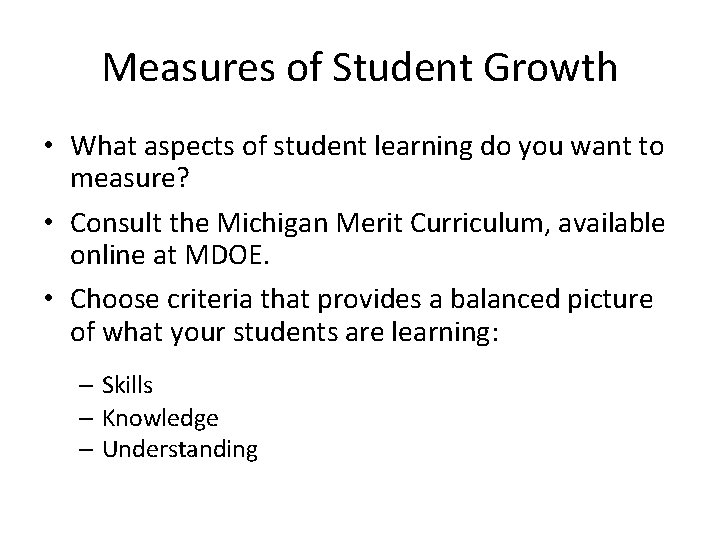

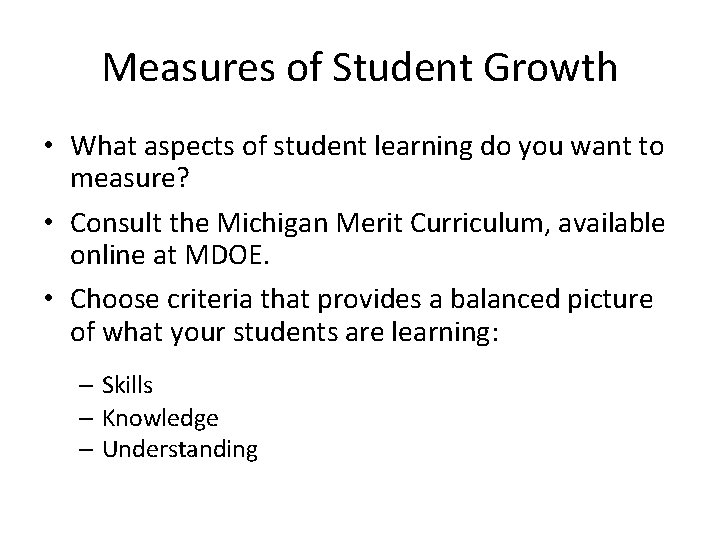

Measures of Student Growth
- What aspects of student learning do you want to measure?
- Consult the Michigan Merit Curriculum, available online from the MDE.
- Choose criteria that provide a balanced picture of what your students are learning:
  - Skills
  - Knowledge
  - Understanding

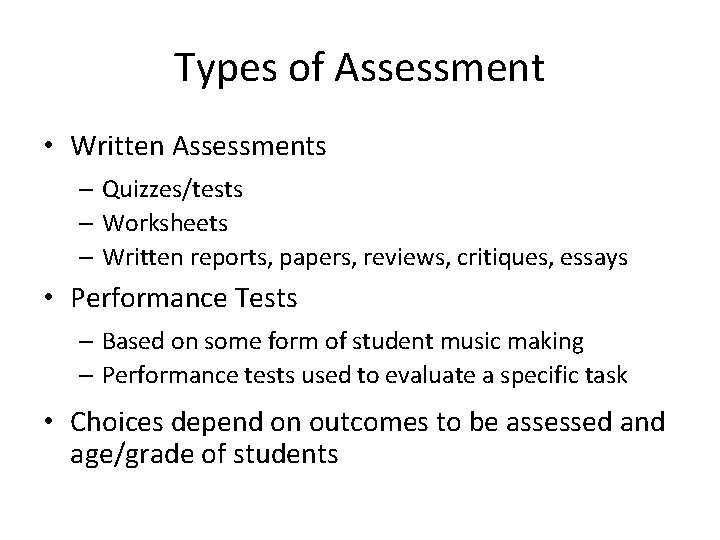

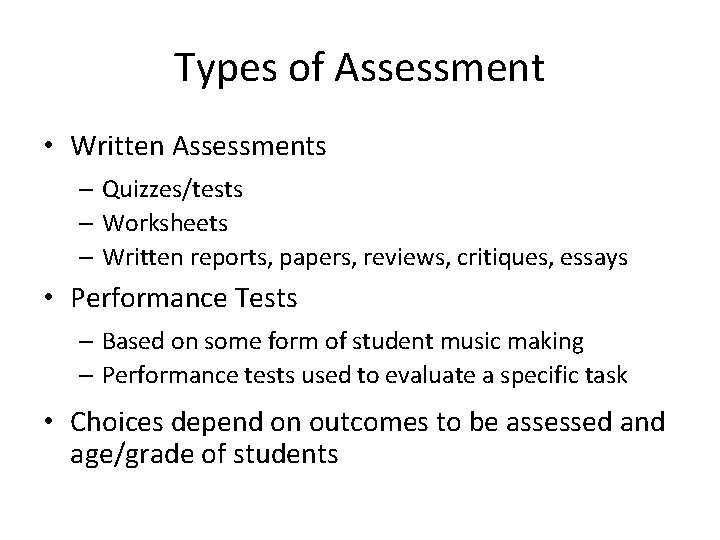

Types of Assessment
- Written assessments
  - Quizzes/tests
  - Worksheets
  - Written reports, papers, reviews, critiques, essays
- Performance tests
  - Based on some form of student music making
  - Used to evaluate a specific task
- Choices depend on the outcomes to be assessed and the age/grade of students

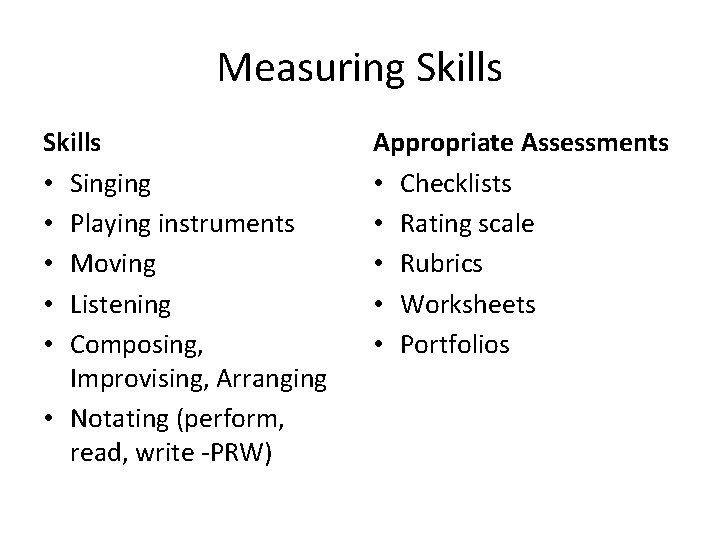

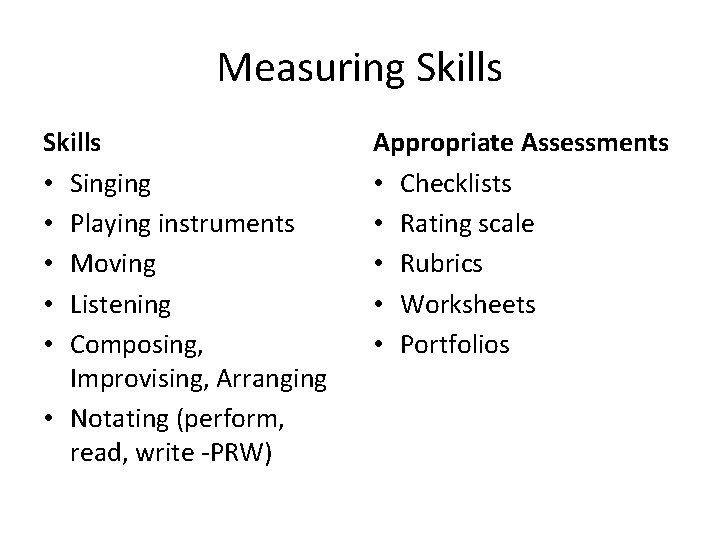

Measuring Skills
- Singing
- Playing instruments
- Moving
- Listening
- Composing, improvising, arranging
- Notating (perform, read, write: PRW)

Appropriate Assessments
- Checklists
- Rating scales
- Rubrics
- Worksheets
- Portfolios

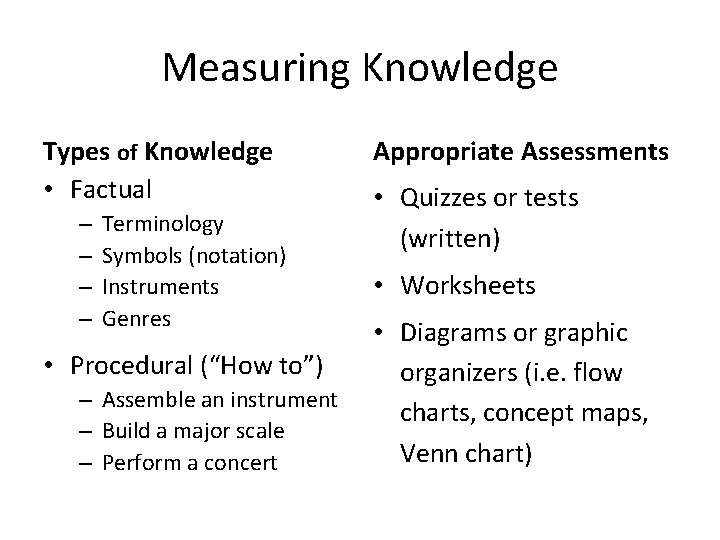

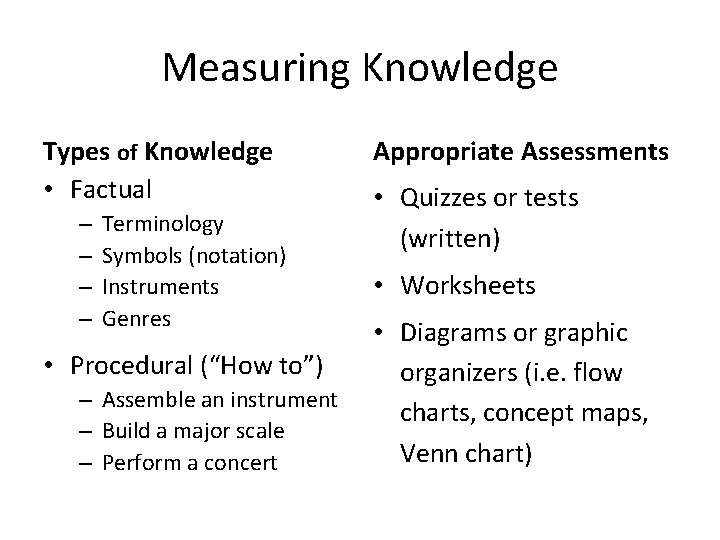

Measuring Knowledge

Types of Knowledge
- Factual
  - Terminology
  - Symbols (notation)
  - Instruments
  - Genres
- Procedural (“how to”)
  - Assemble an instrument
  - Build a major scale
  - Perform a concert

Appropriate Assessments
- Quizzes or tests (written)
- Worksheets
- Diagrams or graphic organizers (e.g., flow charts, concept maps, Venn diagrams)

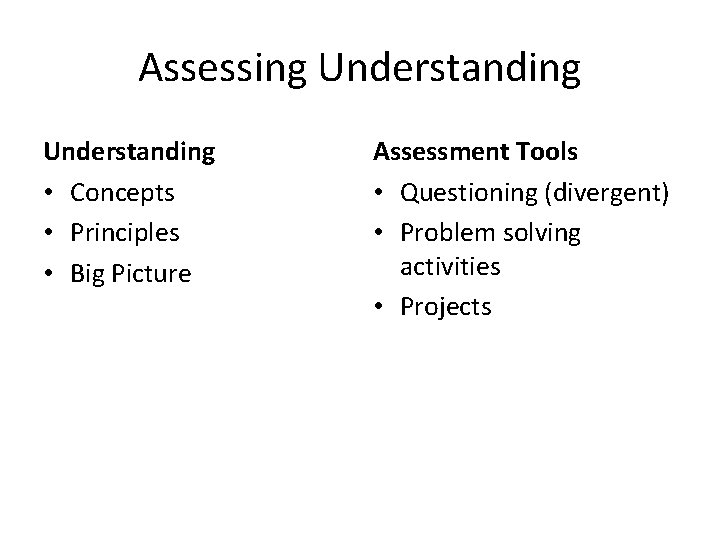

Assessing Understanding
- Concepts
- Principles
- The big picture

Assessment Tools
- Questioning (divergent)
- Problem-solving activities
- Projects

Evidence of Growth
- Baseline data for comparison
- Possible sources:
  - Teacher-developed pre-tests
  - Teacher-developed grade-level assessments
  - Assessments included in textbook series
  - Purchased tests (e.g., Gordon’s PMMA, Iowa Tests of Music Literacy)
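A baseline becomes evidence of growth only after it is compared with a later result. The Python sketch below shows one hypothetical way to summarize pre-test and post-test data for a class. It is not a method the presenters prescribe. It assumes both tests are teacher-developed, scored on the same scale, and have a known maximum score. The sketch reports two measures for each student:
- the raw gain (post minus pre);
- a normalized gain, which is the share of the student's available headroom that was actually gained.

It also flags students who began at the maximum score, because the test cannot show their growth. All names, scores, and function names are made up for illustration.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class StudentScores:
    """Hypothetical record for one student's baseline and follow-up scores."""
    name: str
    pre: float   # baseline (pre-test) score
    post: float  # end-of-cycle (post-test) score on the same test


def raw_gain(s: StudentScores) -> float:
    """Points gained from pre-test to post-test (negative if scores dropped)."""
    return s.post - s.pre


def normalized_gain(s: StudentScores, max_score: float) -> Optional[float]:
    """Fraction of the available headroom (max_score - pre) that was gained.

    Returns None when the student started at the maximum score, because this
    test cannot show any further growth for that student (a ceiling effect).
    """
    headroom = max_score - s.pre
    if headroom <= 0:
        return None
    return (s.post - s.pre) / headroom


def class_summary(scores: List[StudentScores], max_score: float) -> dict:
    """Summarize class growth; students at the ceiling are left out of the normalized mean."""
    normalized = [normalized_gain(s, max_score) for s in scores]
    measurable = [g for g in normalized if g is not None]
    return {
        "mean_raw_gain": mean(raw_gain(s) for s in scores),
        "mean_normalized_gain": mean(measurable) if measurable else None,
        "at_ceiling": [s.name for s, g in zip(scores, normalized) if g is None],
    }


# Example: a hypothetical 20-point rhythm-reading test given in fall and spring.
scores = [
    StudentScores("Student A", pre=12, post=18),
    StudentScores("Student B", pre=15, post=17),
    StudentScores("Student C", pre=20, post=20),
]
print(class_summary(scores, max_score=20))
```

Raw gains tend to understate growth for students who start near the top. The normalized gain is one common adjustment, though other growth measures exist. The list of students at the ceiling is the kind of mediating factor worth noting when interpreting results.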

Scheduling Assessments
- Consult with your principal to determine a schedule for assessments.
- Consider the following:
  - Number, length, and type of assessments
  - School calendar and performance events
  - Who will administer assessments
  - Which grades, classes, or students will be assessed

Documenting & Recording the Evidence
- How will you document the evidence?
  - Will results be quantitative or qualitative?
  - If you use rubrics, checklists, or rating scales, how will they be scored?
- Where will this information be recorded?
  - School computer, iPad, SMART Board?
  - A software program (e.g., Excel)?
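The Python sketch below shows one possible answer to the scoring and recording questions above. It uses an analytic rubric in which each criterion is rated on a 1-4 scale. For each student, the ratings are totaled, converted to a percentage, and written out as CSV text that Excel or another spreadsheet can open. The criteria (tone, intonation, rhythm), the 4-point scale, and the function names are hypothetical and only for illustration. A real rubric would come from your own curricular goals.

```python
import csv
import io
from typing import Dict, Tuple

# Hypothetical analytic rubric: each criterion maps to its highest level
# (every criterion here is rated from 1 to 4).
RUBRIC = {"tone": 4, "intonation": 4, "rhythm": 4}


def score_rubric(ratings: Dict[str, int]) -> Tuple[int, float]:
    """Return (total points, percent of possible points) for one student."""
    for criterion, level in ratings.items():
        if criterion not in RUBRIC:
            raise ValueError(f"unknown criterion: {criterion}")
        if not 1 <= level <= RUBRIC[criterion]:
            raise ValueError(f"{criterion} rating out of range: {level}")
    missing = RUBRIC.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total = sum(ratings.values())
    possible = sum(RUBRIC.values())
    return total, round(100 * total / possible, 1)


def rubric_csv(records: Dict[str, Dict[str, int]]) -> str:
    """Build CSV text with one row per student: criterion ratings, total, percent."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["student", *RUBRIC, "total", "percent"])
    for student, ratings in records.items():
        total, percent = score_rubric(ratings)
        writer.writerow([student, *(ratings[c] for c in RUBRIC), total, percent])
    return buf.getvalue()


# Example with made-up ratings; save the returned text as a .csv file to open it in Excel.
records = {
    "Student A": {"tone": 3, "intonation": 2, "rhythm": 4},
    "Student B": {"tone": 4, "intonation": 4, "rhythm": 3},
}
print(rubric_csv(records))
```

The sketch keeps each criterion's rating next to the total. That way you can see later which criteria drove a change in a student's score, and a summary percentage alone would hide this.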

Interpreting Results
- Adequate and consistent data
- Baseline for comparison
- Consideration of mediating factors
- Consult experts as needed

Festival Ratings

Festival Ratings: Possibilities
- Provide quantitative, third-party assessment
- Can show growth over time in some circumstances:
  - Individual judges’ ratings
  - Repertoire difficulty
  - Three-year period
- Valid to the extent that they measure the quality of an ensemble’s performance of three selected pieces and sight-reading at one point in time
- Probably adaptable to a statewide evaluation tool
- Assess a few performance standards

Festival Ratings: Concerns
- Group assessment only
  - Ratings alone are not sufficient
  - Limited assessment of growth
- Reliability not established
  - Is adjudication consistent between years, districts, sites, and judges?
- Numerous factors influence reliability [next slide]
- What is the role of festival and music organizations?

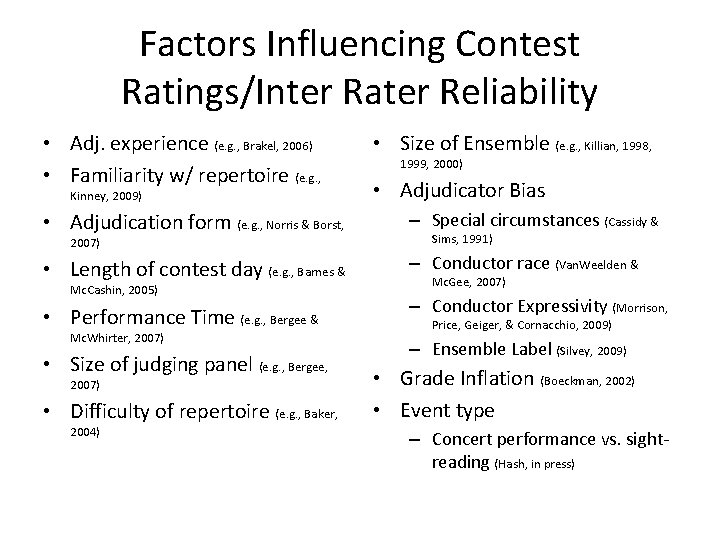

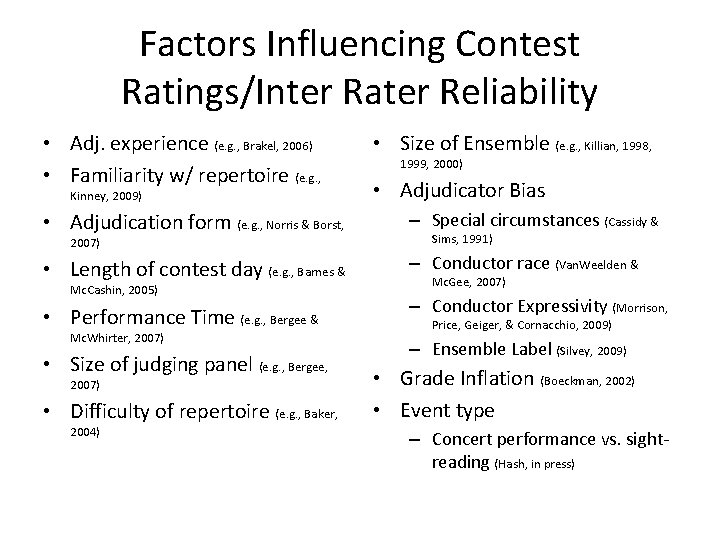

Factors Influencing Contest Ratings/Inter-Rater Reliability
- Adjudicator experience (e.g., Brakel, 2006)
- Familiarity with repertoire (e.g., Kinney, 2009)
- Size of ensemble (e.g., Killian, 1998, 1999, 2000)
- Adjudication form (e.g., Norris & Borst, 2007)
- Length of contest day (e.g., Barnes & McCashin, 2005)
- Performance time (e.g., Bergee & McWhirter, 2007)
- Size of judging panel (e.g., Bergee, 2007)
- Difficulty of repertoire (e.g., Baker, 2004)
- Adjudicator bias
  - Special circumstances (Cassidy & Sims, 1991)
  - Conductor race (VanWeelden & McGee, 2007)
  - Conductor expressivity (Morrison, Price, Geiger, & Cornacchio, 2009)
  - Ensemble label (Silvey, 2009)
- Grade inflation (Boeckman, 2002)
- Event type
  - Concert performance vs. sight-reading (Hash, in press)

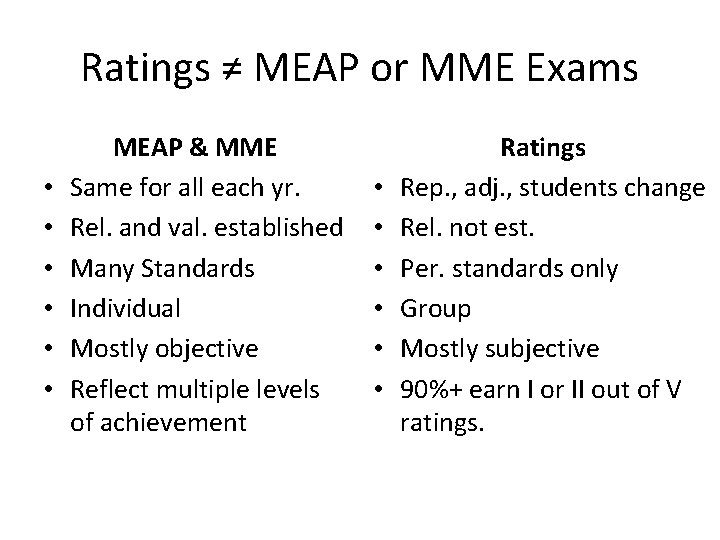

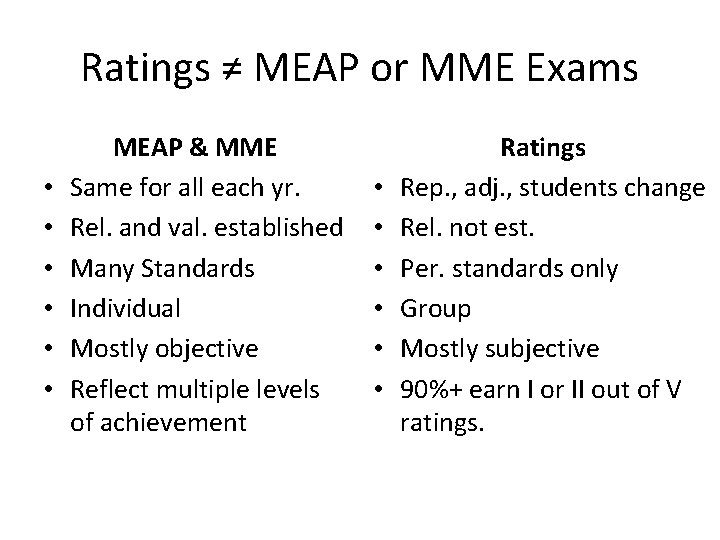

Ratings ≠ MEAP or MME Exams

| MEAP & MME | Festival Ratings |
|---|---|
| Same for all students each year | Repertoire, adjudicators, and students change |
| Reliability and validity established | Reliability not established |
| Many standards | Performance standards only |
| Individual | Group |
| Mostly objective | Mostly subjective |
| Reflect multiple levels of achievement | 90%+ earn a I or II on a five-level (I to V) scale |

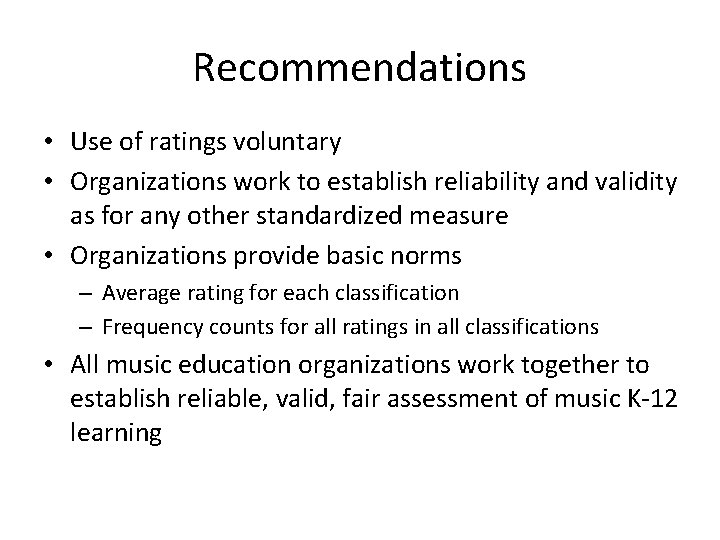

Recommendations
- Use of ratings should be voluntary.
- Organizations should work to establish reliability and validity, as for any other standardized measure.
- Organizations should provide basic norms:
  - Average rating for each classification
  - Frequency counts for all ratings in all classifications
- All music education organizations should work together to establish reliable, valid, and fair assessment of K-12 music learning.
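The "basic norms" recommended above are simple descriptive statistics. The Python sketch below shows one way an organization might compute them. It assumes each festival result is recorded as a (classification, final rating) pair, with ratings I through V coded as 1 through 5. Because I is the highest rating, a lower mean indicates a better average. The classification labels and sample data are hypothetical.

The sketch also reports the percentage of I and II ratings in each classification, which bears on the concern that most ensembles earn one of the top two ratings. Descriptive norms like these say nothing about reliability or validity. The recommendations treat those as separate work.

```python
from collections import Counter, defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

# Ratings I through V coded as 1 through 5 (1 = highest rating).
RATINGS = range(1, 6)


def festival_norms(results: Iterable[Tuple[str, int]]) -> Dict[str, dict]:
    """Compute basic norms for each classification from (classification, rating) pairs."""
    by_class = defaultdict(list)
    for classification, rating in results:
        if rating not in RATINGS:
            raise ValueError(f"rating must be 1-5 (I-V), got {rating!r}")
        by_class[classification].append(rating)

    norms = {}
    for classification, ratings in by_class.items():
        counts = Counter(ratings)
        norms[classification] = {
            "n": len(ratings),
            "mean_rating": round(mean(ratings), 2),
            # Report all five ratings, including those nobody received.
            "frequencies": {r: counts[r] for r in RATINGS},
            "pct_I_or_II": round(100 * (counts[1] + counts[2]) / len(ratings), 1),
        }
    return norms


# Hypothetical results for two classifications.
results = [
    ("Class A", 1), ("Class A", 2), ("Class A", 1),
    ("Class B", 2), ("Class B", 3),
]
for classification, stats in festival_norms(results).items():
    print(classification, stats)
```

Listing zero counts for ratings that no ensemble received keeps every classification's frequency table the same shape. This makes classifications easier to compare side by side.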

Questions/Comments?