Value Function Approximation

Many slides adapted from Emma Brunskill’s CS 234 course at Stanford

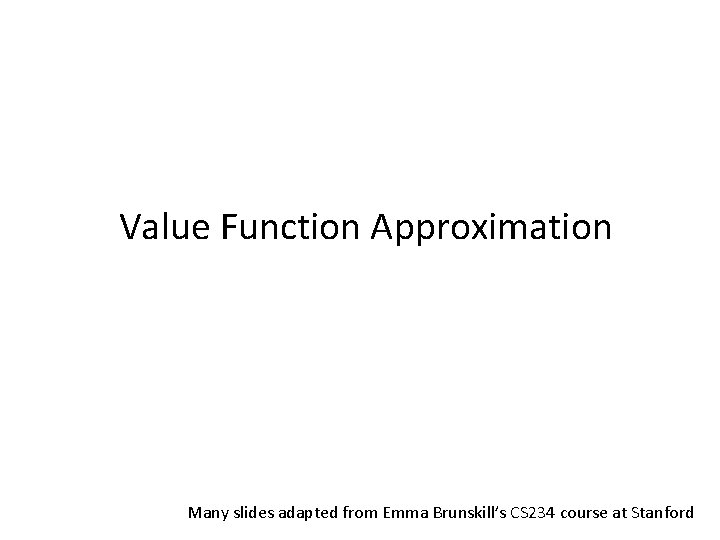

Recall from Week 1: Generalization
• In many RL problems, it is impossible to explicitly store or learn a separate entry for every single state:
  – Dynamics or reward model
  – Value
  – State-action value
  – Policy
• Example input: an image. How many possible images are there?

Recall from Week 1: Generalization
• Want a more compact representation that generalizes across states, or across states and actions
  – Reduce the memory needed to store (P, R)/V/Q/π
  – Reduce the computation needed to compute (P, R)/V/Q/π
  – Reduce the experience needed to find a good (P, R)/V/Q/π

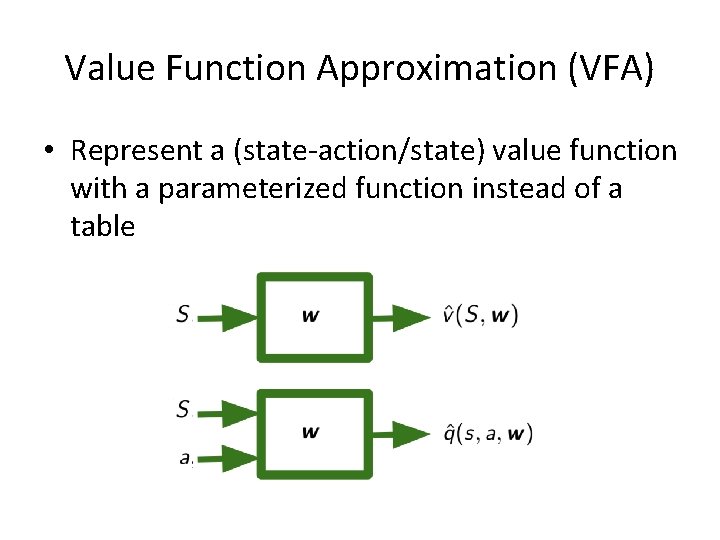

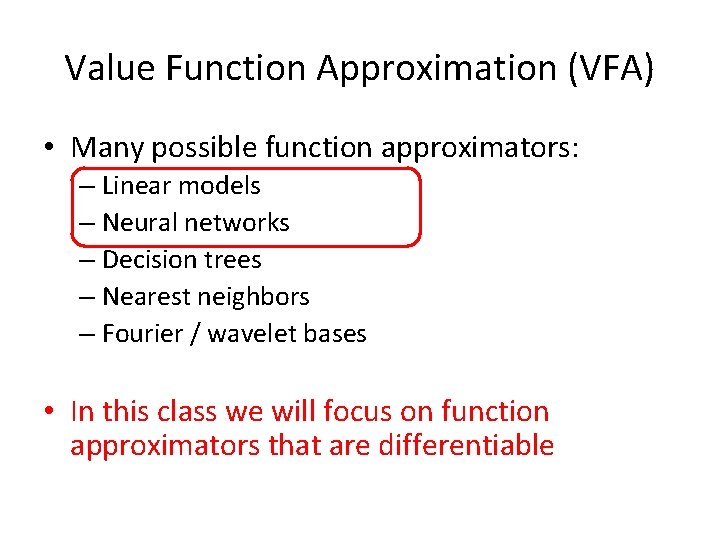

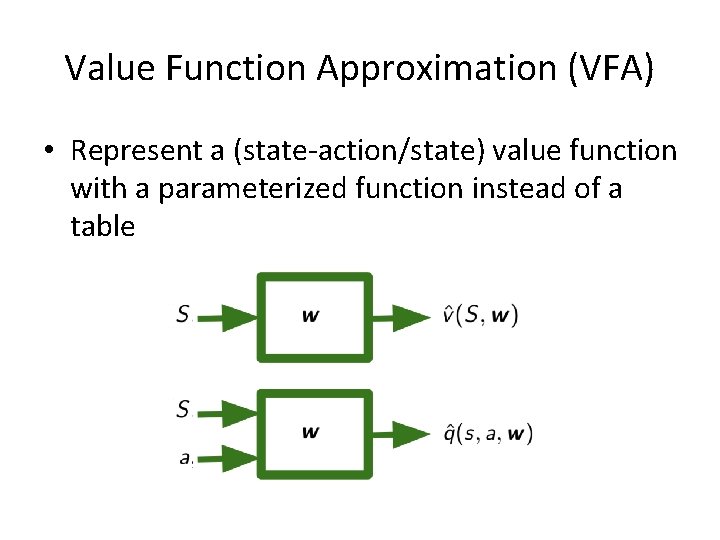

Value Function Approximation (VFA)
• Represent a (state or state-action) value function with a parameterized function instead of a table

Value Function Approximation (VFA)
• Many possible function approximators:
  – Linear models
  – Neural networks
  – Decision trees
  – Nearest neighbors
  – Fourier / wavelet bases
• In this class we will focus on function approximators that are differentiable

Outline
1. VFA for policy evaluation
2. VFA for control

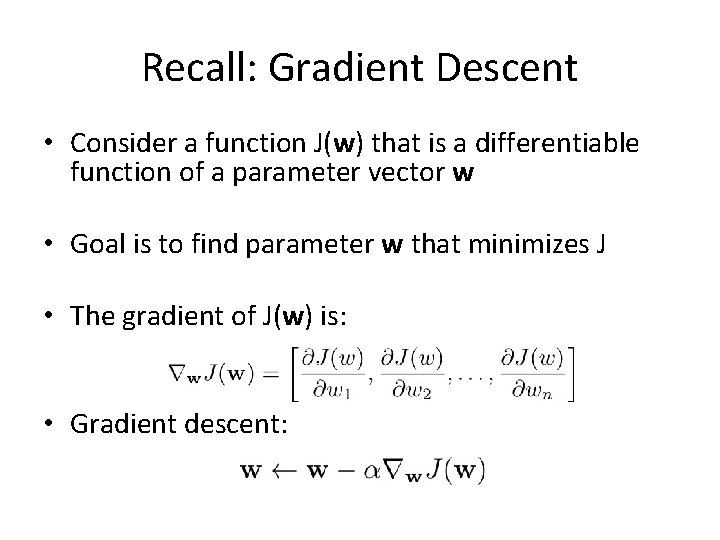

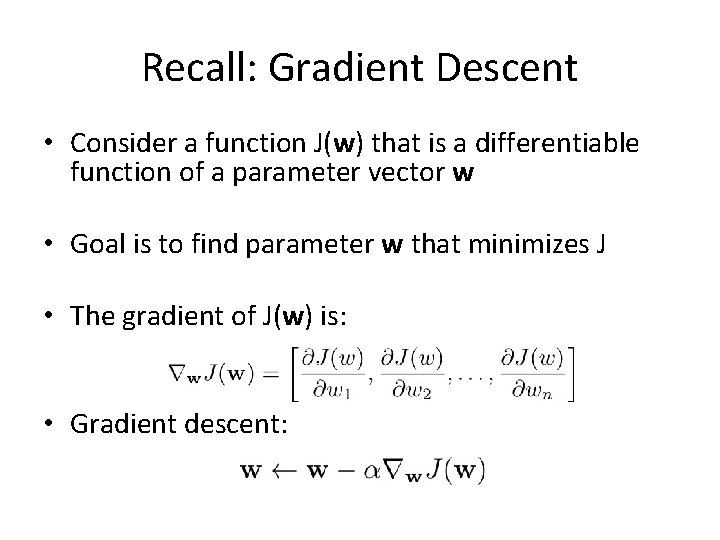

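Recall: Gradient Descent
• Consider a function J(w) that is differentiable in a parameter vector w
• Goal: find the parameter vector w that minimizes J
• The gradient of J(w) is:
$$\nabla_w J(w) = \left( \frac{\partial J(w)}{\partial w_1}, \ldots, \frac{\partial J(w)}{\partial w_n} \right)^\top$$
• Gradient descent moves w in the direction of the negative gradient, with step size α (the factor 1/2 is a convention that cancels the 2 produced by differentiating a squared error):
$$\Delta w = -\tfrac{1}{2} \alpha \nabla_w J(w)$$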
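The update rule can be sketched in a few lines of plain Python. This is only an illustration: the quadratic objective, the function names `gradient_descent` and `grad_J`, and the step size are assumptions made for the example, and the code uses the plain form w ← w − α∇J(w), with any constant factor folded into α.

```python
def gradient_descent(grad, w, alpha=0.1, steps=100):
    """Repeatedly step against the gradient: w <- w - alpha * grad(w)."""
    for _ in range(steps):
        g = grad(w)
        w = [wi - alpha * gi for wi, gi in zip(w, g)]
    return w


# Hypothetical objective: J(w) = (w0 - 3)^2 + (w1 + 1)^2, minimized at (3, -1).
def grad_J(w):
    return [2.0 * (w[0] - 3.0), 2.0 * (w[1] + 1.0)]


w_star = gradient_descent(grad_J, [0.0, 0.0])
print(w_star)  # close to [3.0, -1.0]
```

With this step size each iteration shrinks the distance to the minimizer by a constant factor; a step size that is too large would make the iterates diverge instead.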

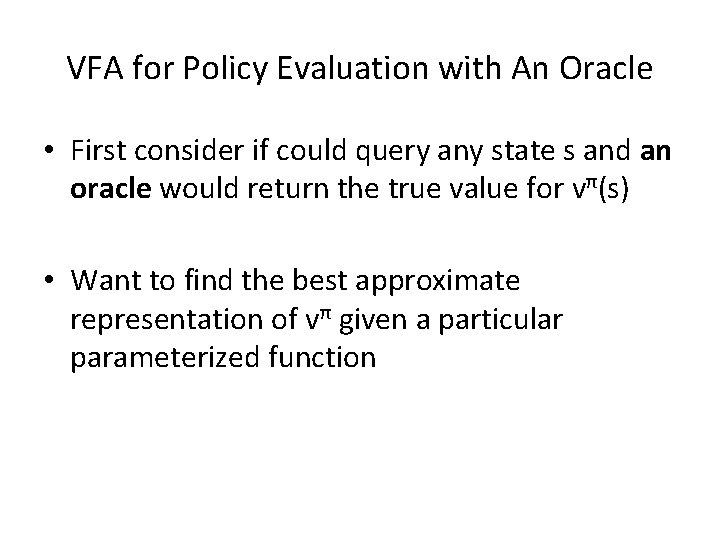

VFA for Policy Evaluation with an Oracle
• First, suppose we could query any state s and an oracle would return the true value vπ(s)
• We want to find the best approximate representation of vπ given a particular parameterized function

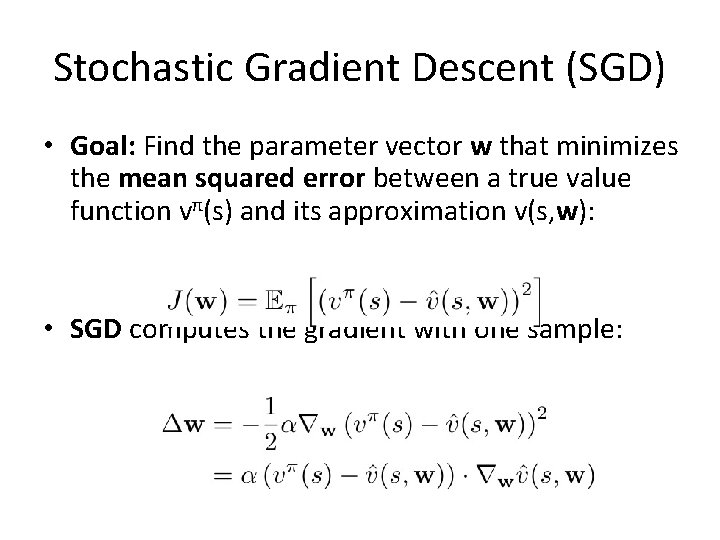

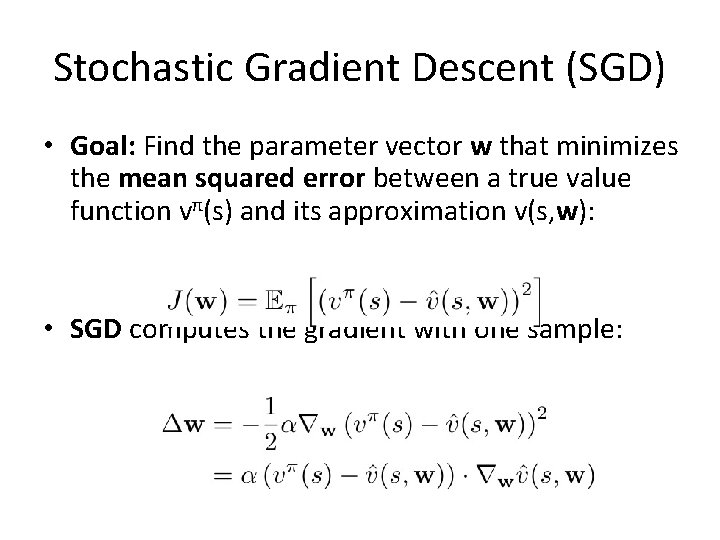

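Stochastic Gradient Descent (SGD)
• Goal: find the parameter vector w that minimizes the mean squared error between the true value function vπ(s) and its approximation v(s, w):
$$J(w) = \mathbb{E}_\pi\left[ \left( v_\pi(s) - v(s, w) \right)^2 \right]$$
• SGD computes the gradient with one sample (taking Δw = −½ α ∇J(w), so the factor 2 cancels):
$$\Delta w = \alpha \left( v_\pi(s) - v(s, w) \right) \nabla_w v(s, w)$$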

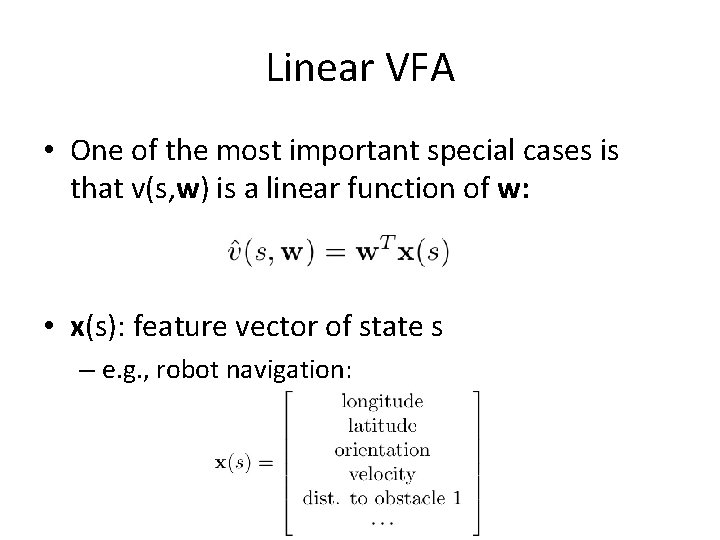

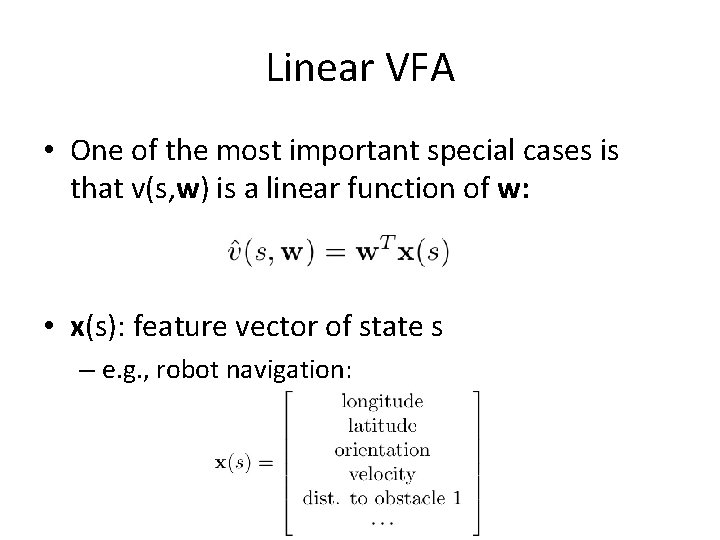

Linear VFA
• One of the most important special cases is when v(s, w) is a linear function of w:
$$v(s, w) = x(s)^\top w = \sum_{j=1}^{n} x_j(s) \, w_j$$
• x(s): feature vector of state s
  – e.g., robot navigation:

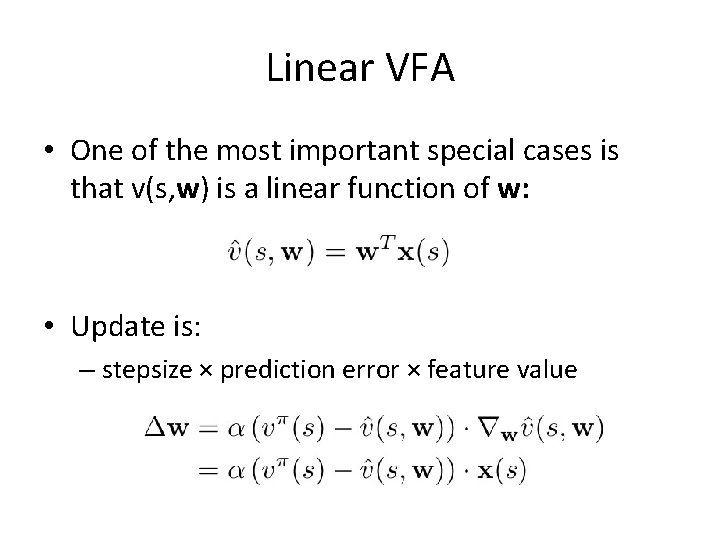

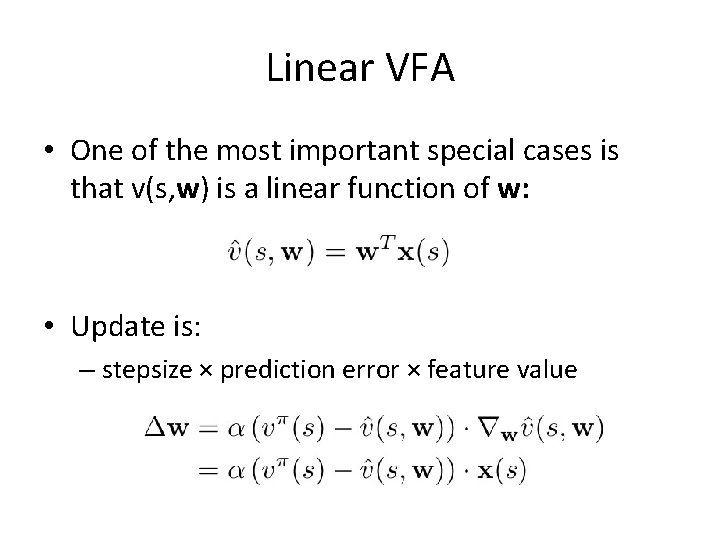

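Linear VFA
• One of the most important special cases is when v(s, w) is a linear function of w, so that the gradient of v(s, w) with respect to w is simply x(s)
• Update is:
$$\Delta w = \alpha \left( v_\pi(s) - x(s)^\top w \right) x(s)$$
  – step size × prediction error × feature value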
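A minimal sketch of the "step size × prediction error × feature value" update, assuming an oracle that returns the true value of any state. The three-state problem, the feature vectors, the uniform state sampling, and the names `sgd_linear_vfa`, `features`, and `oracle` are all hypothetical choices made for the illustration.

```python
import random


def dot(x, w):
    return sum(xi * wi for xi, wi in zip(x, w))


def sgd_linear_vfa(features, oracle, alpha=0.1, steps=5000, seed=0):
    """SGD on squared error between oracle values and x(s)^T w."""
    rng = random.Random(seed)
    states = list(features)
    w = [0.0] * len(features[states[0]])
    for _ in range(steps):
        s = rng.choice(states)         # sample a state uniformly (assumption)
        x = features[s]
        error = oracle[s] - dot(x, w)  # prediction error
        # step size * prediction error * feature value
        w = [wi + alpha * error * xi for wi, xi in zip(w, x)]
    return w


# Hypothetical problem whose true values are exactly linear in the features
# (v = 2 * x0 - 1 * x1), so the best weights are [2, -1].
features = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [1.0, 1.0]}
oracle = {"A": 2.0, "B": -1.0, "C": 1.0}
w = sgd_linear_vfa(features, oracle)
print(w)  # close to [2.0, -1.0]
```

Because the true values here lie inside the linear function class, the prediction error can be driven to zero; in general SGD only reaches the best fit the features allow.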

Exercise
• Show that tabular representations are a special case of linear function approximation. What would the feature vectors be?

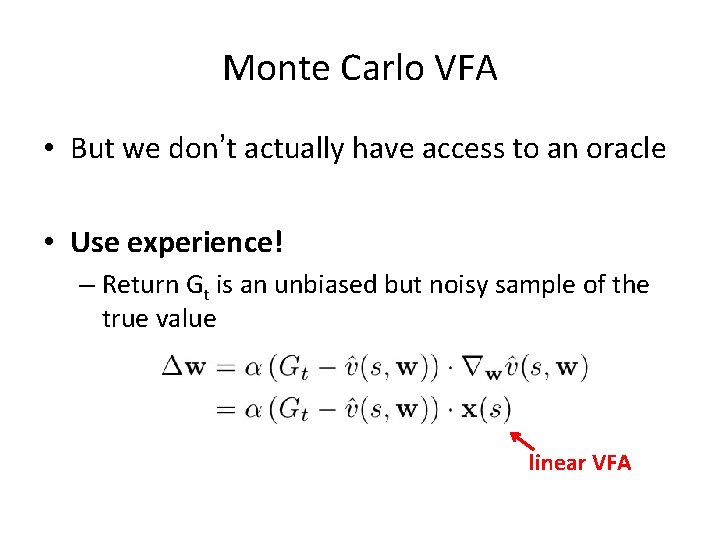

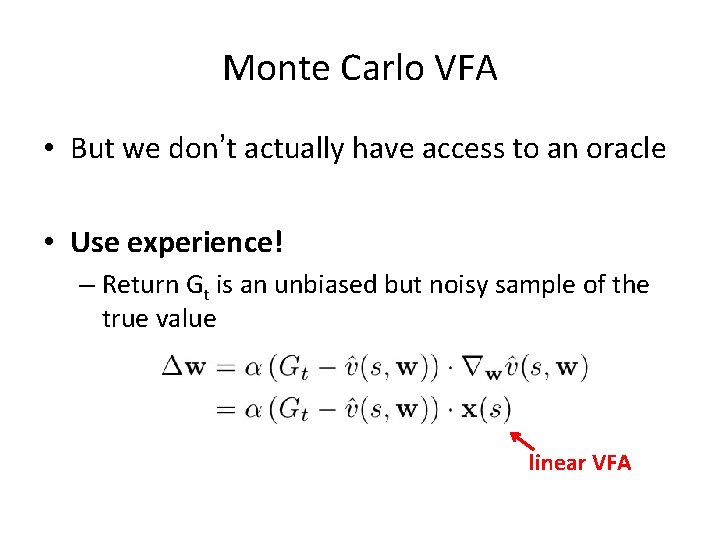

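Monte Carlo VFA
• But we don’t actually have access to an oracle
• Use experience!
  – The return G_t is an unbiased but noisy sample of the true value vπ(s_t), so use it in place of the oracle:
$$\Delta w = \alpha \left( G_t - v(s_t, w) \right) \nabla_w v(s_t, w)$$
  – With linear VFA:
$$\Delta w = \alpha \left( G_t - x(s_t)^\top w \right) x(s_t)$$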

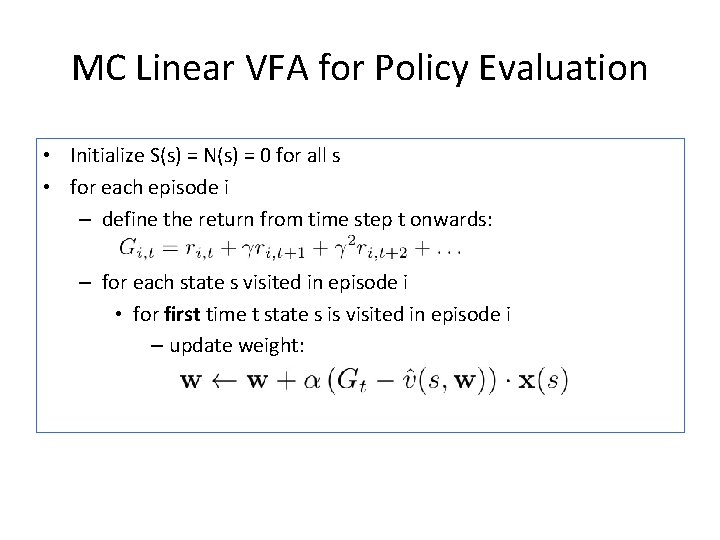

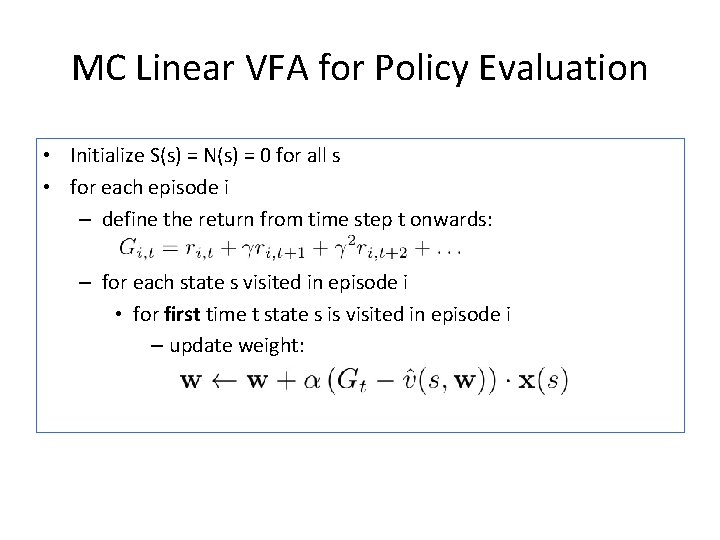

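MC Linear VFA for Policy Evaluation
• Initialize S(s) = N(s) = 0 for all s, and initialize the weight vector w
• For each episode i:
  – Define the return from time step t onwards (summing until the episode ends):
$$G_t = r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \cdots$$
  – For each state s visited in episode i, at the first time t that s is visited in episode i, update the weights:
$$w \leftarrow w + \alpha \left( G_t - x(s)^\top w \right) x(s)$$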
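The loop above can be sketched as follows. This is an illustrative first-visit implementation under stated assumptions: episodes are given as lists of (state, reward) pairs, where the reward is the one received on leaving that state, and the two-state chain, one-hot features, and the name `mc_linear_vfa` are hypothetical.

```python
def dot(x, w):
    return sum(xi * wi for xi, wi in zip(x, w))


def mc_linear_vfa(episodes, features, n_weights, alpha=0.05, gamma=1.0):
    """First-visit Monte Carlo policy evaluation with a linear value function."""
    w = [0.0] * n_weights
    for episode in episodes:
        # Compute returns backwards: G_t = r_t + gamma * G_{t+1}.
        returns = [0.0] * len(episode)
        g = 0.0
        for t in range(len(episode) - 1, -1, -1):
            g = episode[t][1] + gamma * g
            returns[t] = g
        seen = set()
        for t, (s, _) in enumerate(episode):
            if s in seen:          # only the first visit to s is used
                continue
            seen.add(s)
            x = features[s]
            error = returns[t] - dot(x, w)
            w = [wi + alpha * error * xi for wi, xi in zip(w, x)]
    return w


# Hypothetical chain A -> B -> terminal with rewards 1 and 2 (gamma = 1),
# so the true values are v(A) = 3 and v(B) = 2. One-hot features.
features = {"A": [1.0, 0.0], "B": [0.0, 1.0]}
episodes = [[("A", 1.0), ("B", 2.0)]] * 500
w = mc_linear_vfa(episodes, features, n_weights=2)
print(w)  # close to [3.0, 2.0]
```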

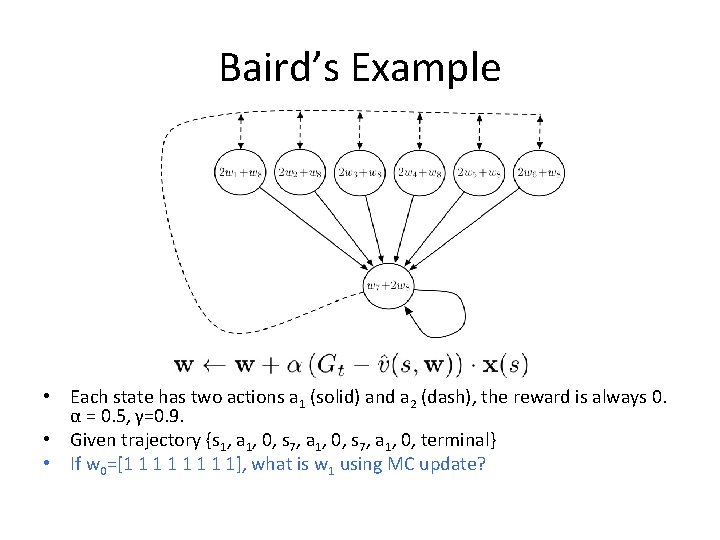

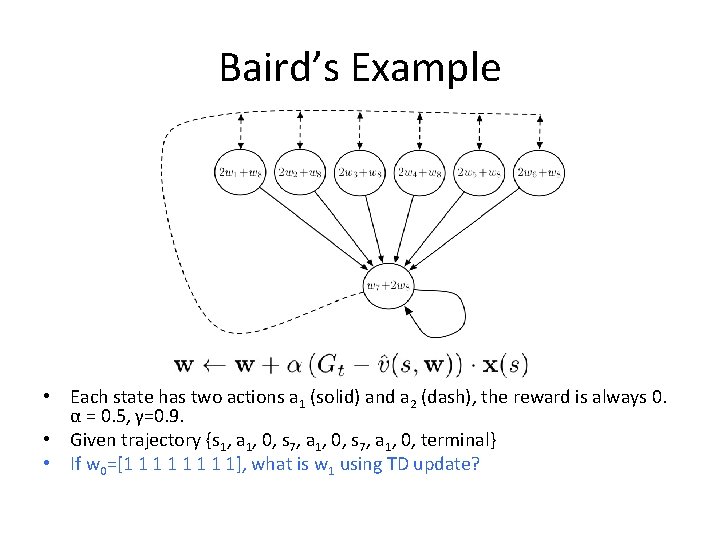

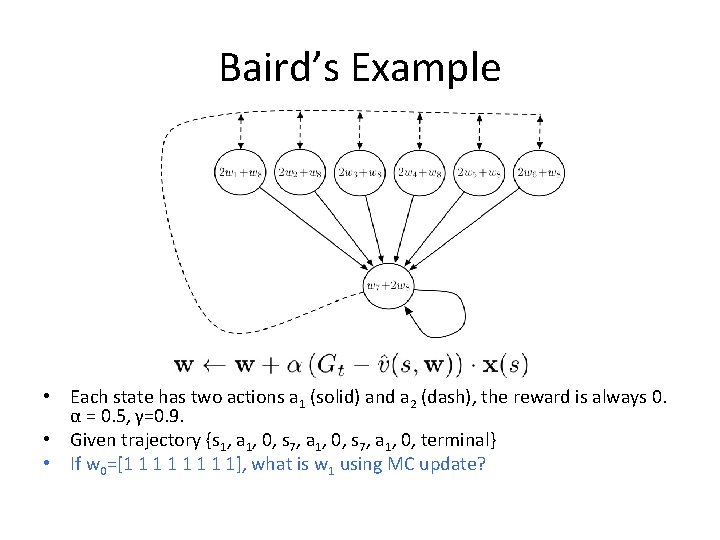

Baird’s Example
• Each state has two actions, $a_1$ (solid) and $a_2$ (dashed); the reward is always 0. α = 0.5, γ = 0.9.
• Given the trajectory {$s_1$, $a_1$, 0, $s_7$, $a_1$, 0, terminal}
• If $w_0$ = [1 1 1 1 1], what is $w_1$ using the MC update?

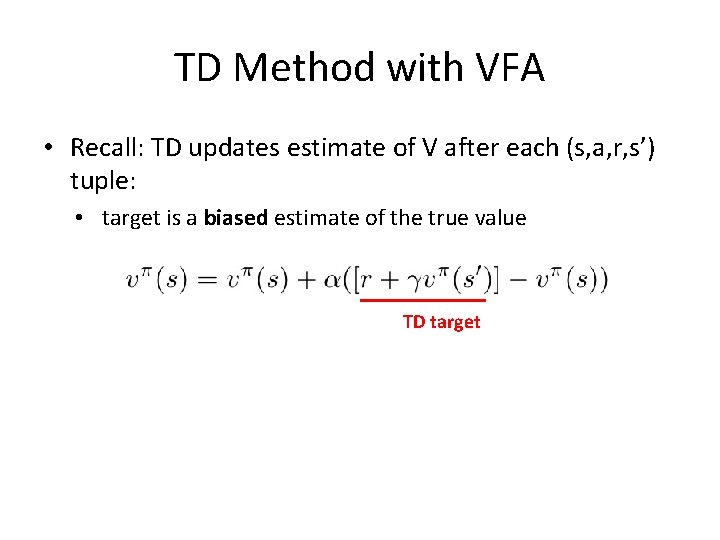

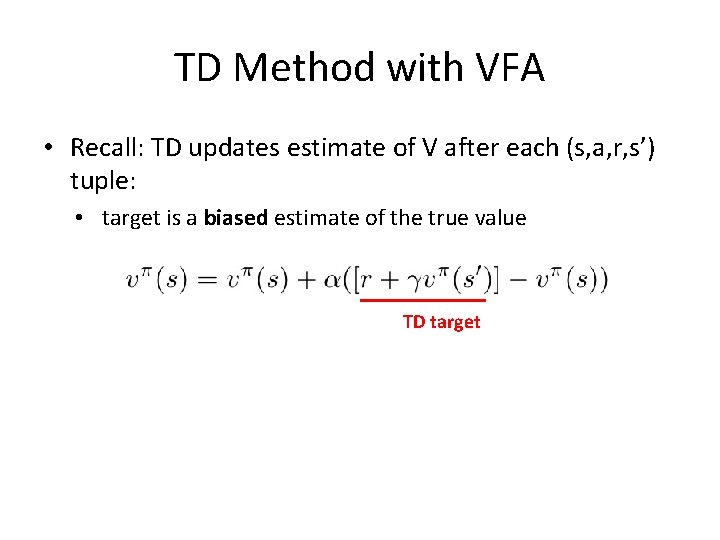

TD Method with VFA
• Recall: TD updates the estimate of V after each (s, a, r, s’) tuple:
$$V(s) \leftarrow V(s) + \alpha \left( r + \gamma V(s') - V(s) \right)$$
• The TD target r + γV(s’) is a biased estimate of the true value vπ(s)

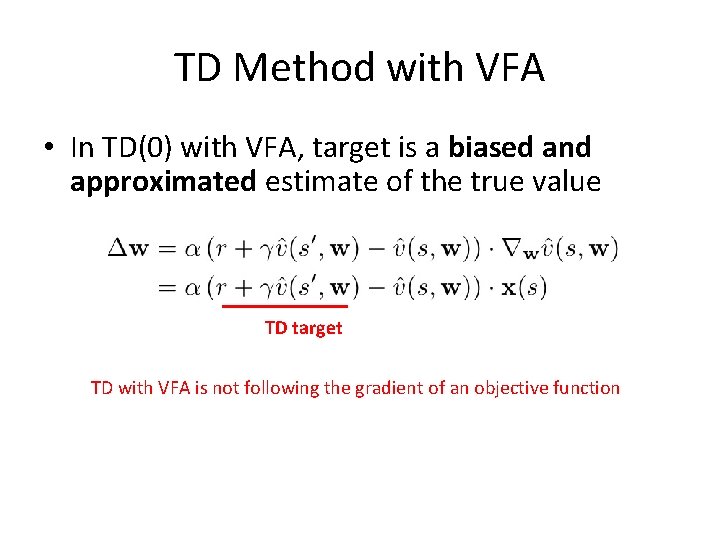

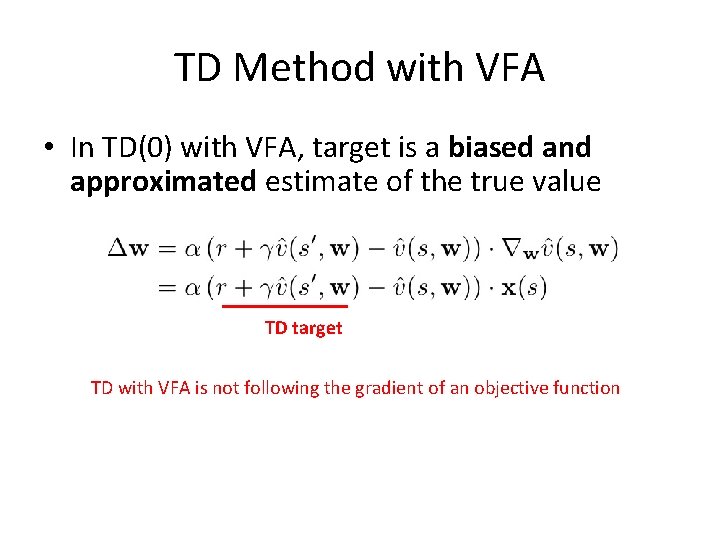

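TD Method with VFA
• In TD(0) with VFA, the TD target r + γ v(s’, w) is a biased and approximated estimate of the true value vπ(s):
$$\Delta w = \alpha \left( r + \gamma v(s', w) - v(s, w) \right) \nabla_w v(s, w)$$
• With linear VFA:
$$\Delta w = \alpha \left( r + \gamma x(s')^\top w - x(s)^\top w \right) x(s)$$
• TD with VFA is not following the gradient of an objective function: the target itself depends on w, but the update ignores that dependence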
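A minimal sketch of this update for the linear case, often called semi-gradient TD(0) because the target is treated as a constant. The transition format (state, reward, next state, with `None` marking termination), the two-state chain, the one-hot features, and the name `td0_linear_vfa` are assumptions made for the example.

```python
def dot(x, w):
    return sum(xi * wi for xi, wi in zip(x, w))


def td0_linear_vfa(transitions, features, n_weights, alpha=0.05, gamma=0.9):
    """TD(0) policy evaluation with a linear value function."""
    w = [0.0] * n_weights
    for s, r, s_next in transitions:
        x = features[s]
        # The value of a terminal state is defined to be 0.
        v_next = 0.0 if s_next is None else dot(features[s_next], w)
        td_error = r + gamma * v_next - dot(x, w)
        # The target is treated as a constant: only x(s) appears as gradient.
        w = [wi + alpha * td_error * xi for wi, xi in zip(w, x)]
    return w


# Hypothetical chain A -(r=1)-> B -(r=2)-> terminal with gamma = 0.9,
# so v(B) = 2 and v(A) = 1 + 0.9 * 2 = 2.8. One-hot features.
features = {"A": [1.0, 0.0], "B": [0.0, 1.0]}
transitions = [("A", 1.0, "B"), ("B", 2.0, None)] * 2000
w = td0_linear_vfa(transitions, features, n_weights=2)
print(w)  # close to [2.8, 2.0]
```

Unlike the Monte Carlo version, each weight update here happens after a single transition, without waiting for the episode to finish.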

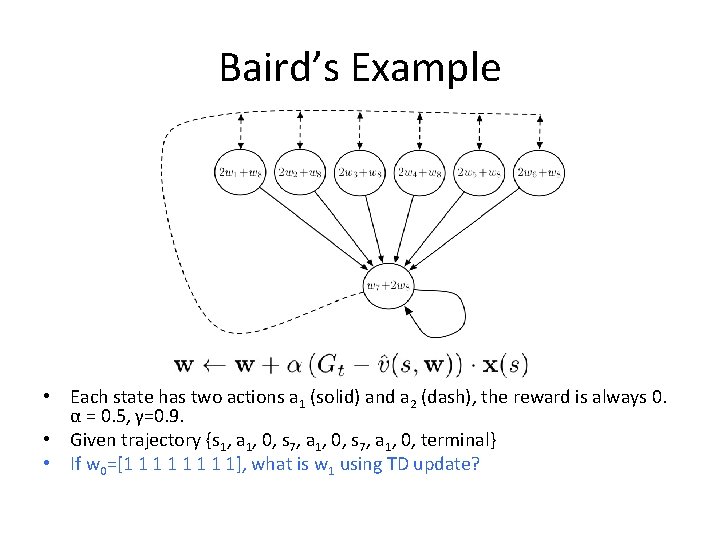

Baird’s Example
• Each state has two actions, $a_1$ (solid) and $a_2$ (dashed); the reward is always 0. α = 0.5, γ = 0.9.
• Given the trajectory {$s_1$, $a_1$, 0, $s_7$, $a_1$, 0, terminal}
• If $w_0$ = [1 1 1 1 1], what is $w_1$ using the TD update?

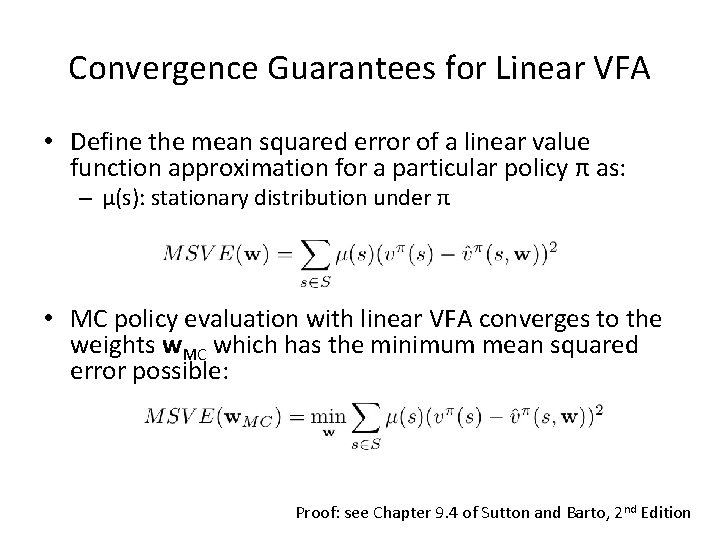

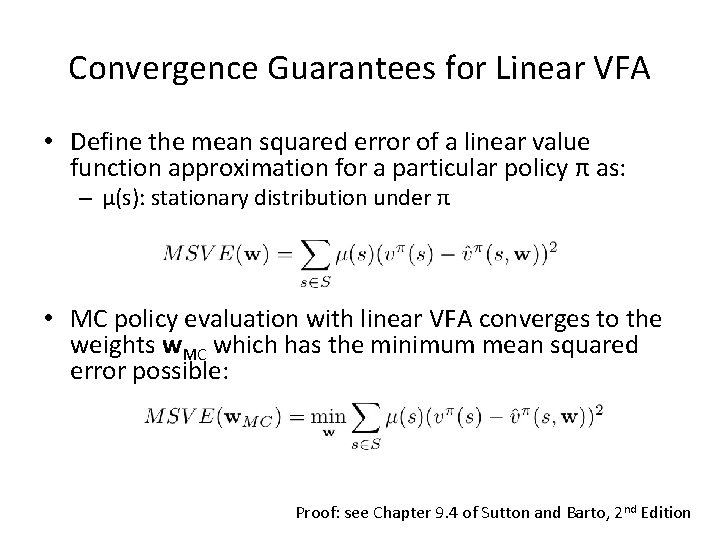

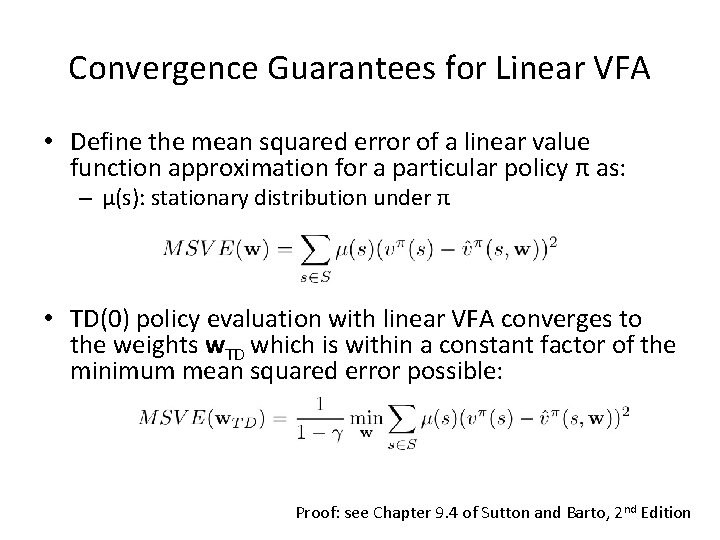

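Convergence Guarantees for Linear VFA
• Define the mean squared error of a linear value function approximation for a particular policy π as:
$$\mathrm{MSVE}(w) = \sum_{s} \mu(s) \left( v_\pi(s) - x(s)^\top w \right)^2$$
  – μ(s): stationary distribution of states under π
• MC policy evaluation with linear VFA converges to the weights $w_{MC}$, which have the minimum mean squared error possible:
$$\mathrm{MSVE}(w_{MC}) = \min_{w} \sum_{s} \mu(s) \left( v_\pi(s) - x(s)^\top w \right)^2$$
Proof: see Chapter 9.4 of Sutton and Barto, 2nd Edition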

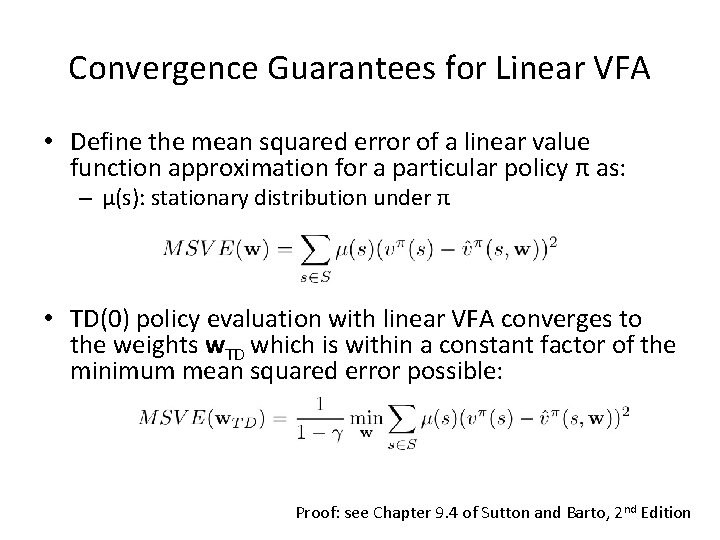

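Convergence Guarantees for Linear VFA
• Define the mean squared error of a linear value function approximation for a particular policy π as:
$$\mathrm{MSVE}(w) = \sum_{s} \mu(s) \left( v_\pi(s) - x(s)^\top w \right)^2$$
  – μ(s): stationary distribution of states under π
• TD(0) policy evaluation with linear VFA converges to the weights $w_{TD}$, whose error is within a constant factor of the minimum mean squared error possible:
$$\mathrm{MSVE}(w_{TD}) \le \frac{1}{1 - \gamma} \min_{w} \sum_{s} \mu(s) \left( v_\pi(s) - x(s)^\top w \right)^2$$
Proof: see Chapter 9.4 of Sutton and Barto, 2nd Edition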

Outline
1. VFA for policy evaluation
2. VFA for control

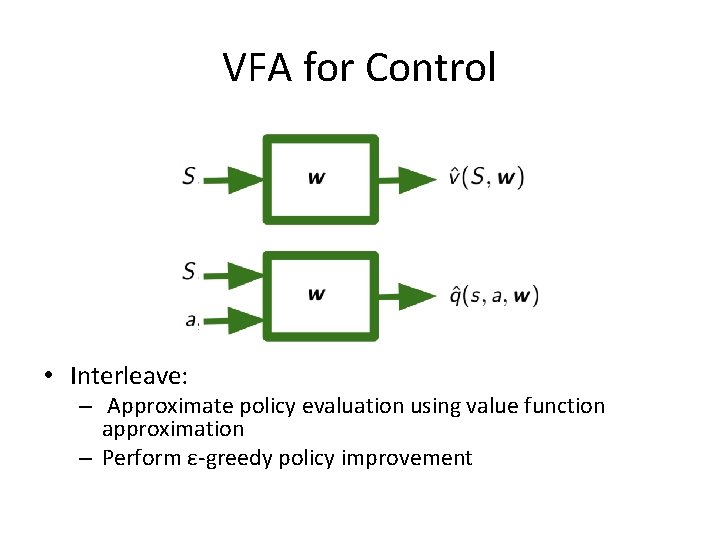

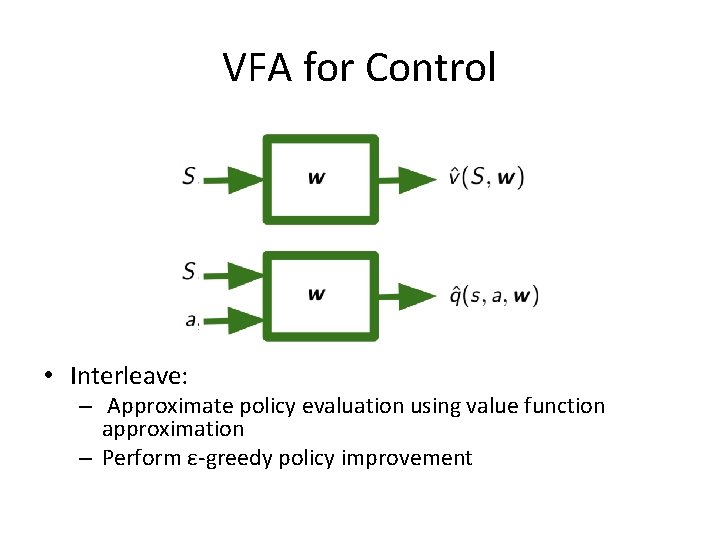

VFA for Control
• Interleave:
  – Approximate policy evaluation using value function approximation
  – ε-greedy policy improvement
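The ε-greedy improvement step can be sketched as an action-selection function over the current approximate action values. The function name `epsilon_greedy`, the list-of-values interface, and the tie-breaking rule are assumptions made for illustration.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a uniformly random action with probability epsilon, else a greedy one.

    q_values: list of approximate action values for the current state,
              one entry per action.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    best = max(q_values)
    return q_values.index(best)  # ties are broken toward the lowest index


# With epsilon = 0 the choice is purely greedy.
greedy_action = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0)
print(greedy_action)  # 1
```

In a control loop this selection would be called with values computed from the current weights, so the policy changes implicitly every time the weights are updated.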

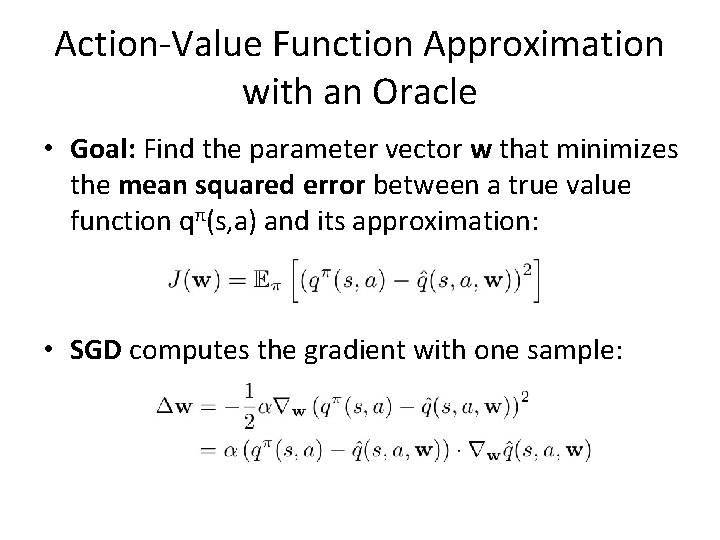

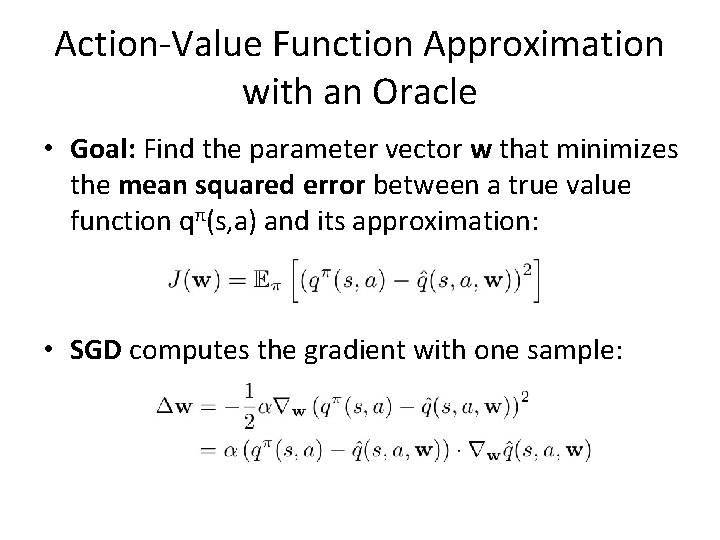

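Action-Value Function Approximation with an Oracle
• Goal: find the parameter vector w that minimizes the mean squared error between the true action-value function qπ(s, a) and its approximation q(s, a, w):
$$J(w) = \mathbb{E}_\pi\left[ \left( q_\pi(s, a) - q(s, a, w) \right)^2 \right]$$
• SGD computes the gradient with one sample:
$$\Delta w = \alpha \left( q_\pi(s, a) - q(s, a, w) \right) \nabla_w q(s, a, w)$$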

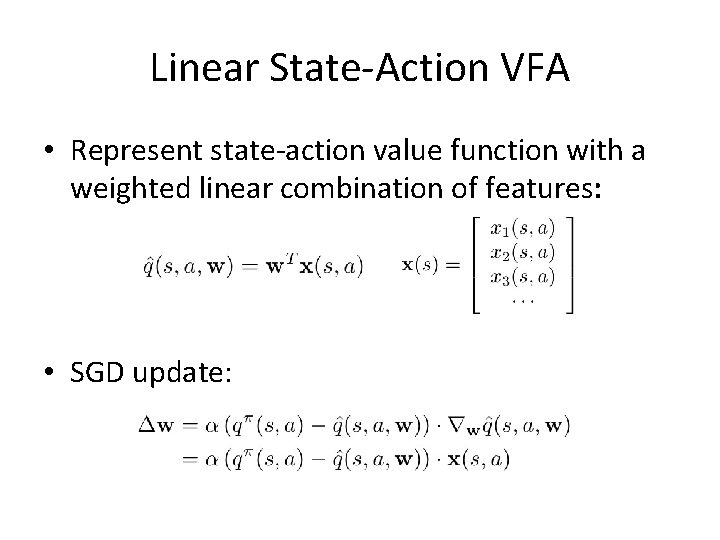

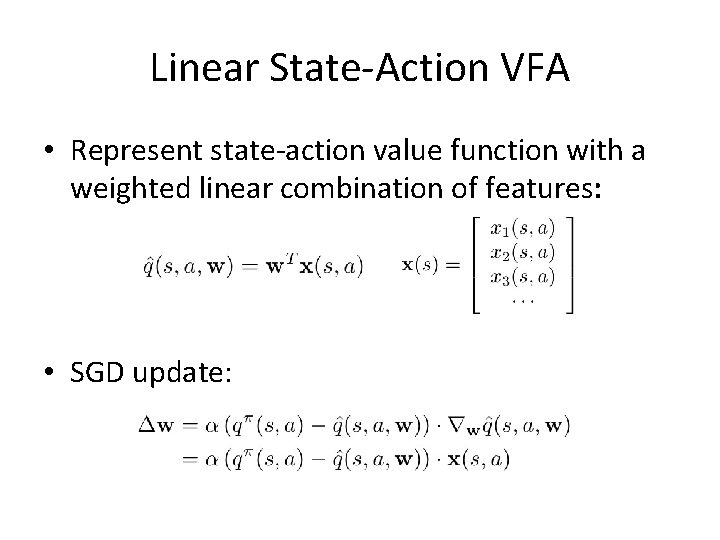

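Linear State-Action VFA
• Represent the state-action value function with a weighted linear combination of features x(s, a):
$$q(s, a, w) = x(s, a)^\top w = \sum_{j=1}^{n} x_j(s, a) \, w_j$$
• SGD update:
$$\Delta w = \alpha \left( q_\pi(s, a) - x(s, a)^\top w \right) x(s, a)$$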

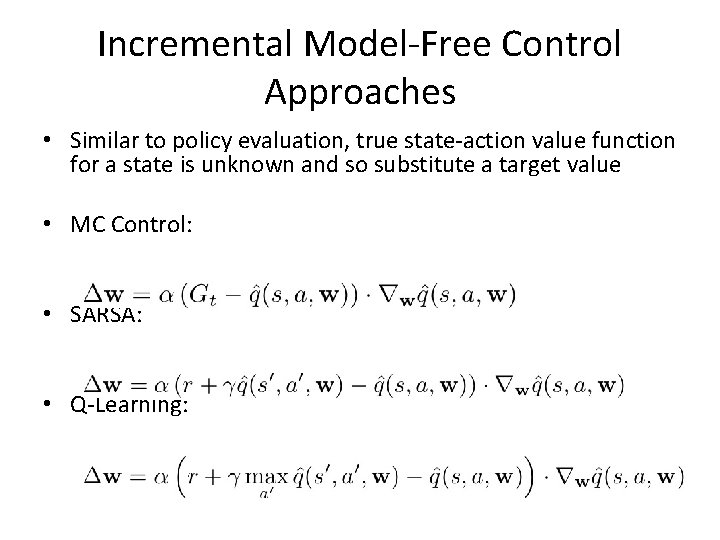

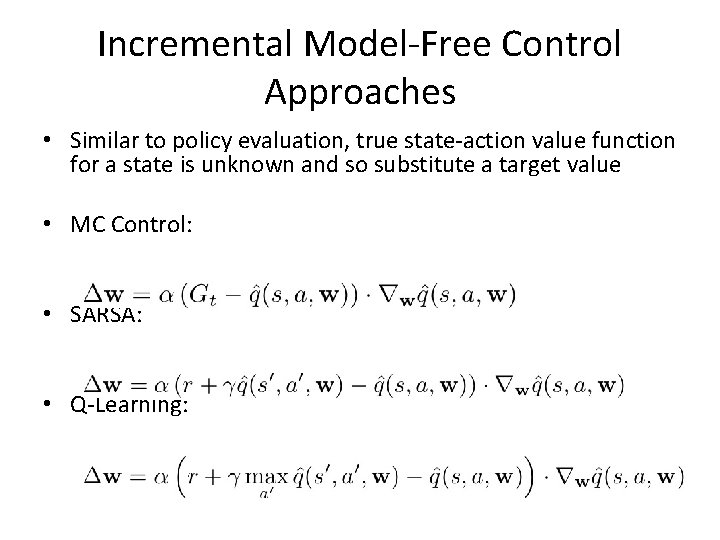

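Incremental Model-Free Control Approaches
• As in policy evaluation, the true state-action value qπ(s, a) is unknown, so substitute a target value
• MC Control: the target is the return G_t
$$\Delta w = \alpha \left( G_t - q(s_t, a_t, w) \right) \nabla_w q(s_t, a_t, w)$$
• SARSA: the target is r + γ q(s’, a’, w), using the action a’ actually taken in s’
$$\Delta w = \alpha \left( r + \gamma q(s', a', w) - q(s, a, w) \right) \nabla_w q(s, a, w)$$
• Q-Learning: the target is r + γ max over a’ of q(s’, a’, w)
$$\Delta w = \alpha \left( r + \gamma \max_{a'} q(s', a', w) - q(s, a, w) \right) \nabla_w q(s, a, w)$$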
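The three methods share one weight update and differ only in the target they substitute for the unknown true value. A minimal sketch for a linear q, with hypothetical helper names (`sarsa_target`, `q_learning_target`, `linear_q_update`) and made-up numbers:

```python
def dot(x, w):
    return sum(xi * wi for xi, wi in zip(x, w))


def sarsa_target(r, gamma, q_next_taken):
    """Bootstrap from the value of the action actually taken next."""
    return r + gamma * q_next_taken


def q_learning_target(r, gamma, q_next_all):
    """Bootstrap from the best next action value, whatever is taken next."""
    return r + gamma * max(q_next_all)


def linear_q_update(w, x_sa, target, alpha):
    """w <- w + alpha * (target - x(s,a)^T w) * x(s,a)."""
    error = target - dot(x_sa, w)
    return [wi + alpha * error * xi for wi, xi in zip(w, x_sa)]


# Made-up transition: features of (s, a), reward, and next-state action values.
w0 = [0.0, 0.0]
x_sa = [1.0, 0.0]
r, gamma, alpha = 1.0, 0.9, 0.5
q_next = [0.5, 2.0]  # approximate values of the two actions in s'
a_next = 0           # action the behavior policy takes in s'

w_mc = linear_q_update(w0, x_sa, 3.0, alpha)  # MC: target is a sampled return, here 3.0
w_sarsa = linear_q_update(w0, x_sa, sarsa_target(r, gamma, q_next[a_next]), alpha)
w_q = linear_q_update(w0, x_sa, q_learning_target(r, gamma, q_next), alpha)
print(w_mc, w_sarsa, w_q)
```

Here SARSA and Q-learning move the same weight by different amounts because the action taken in s’ is not the greedy one, which is exactly where on-policy and off-policy targets diverge.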

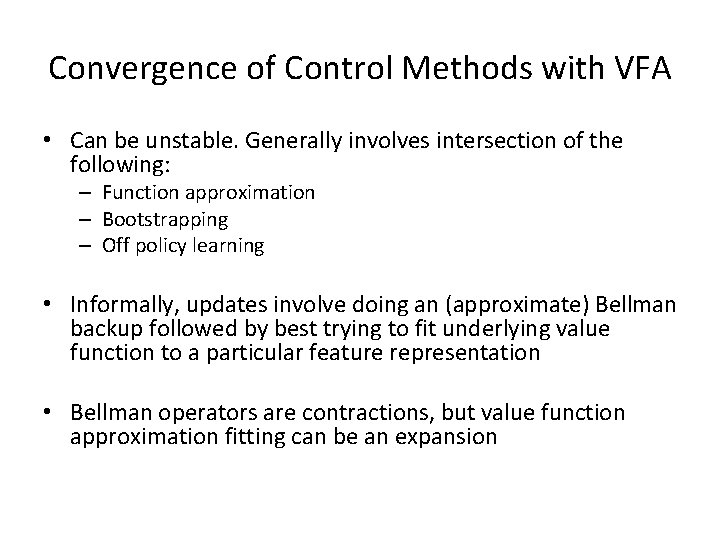

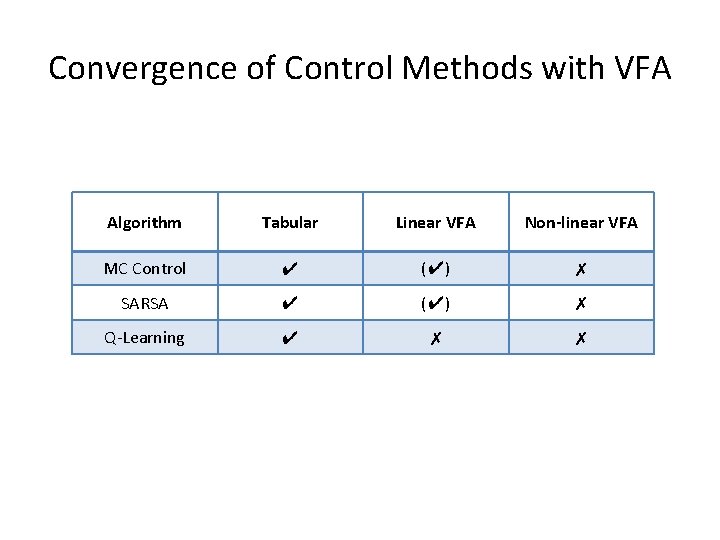

Convergence of Control Methods with VFA
• Can be unstable. Instability generally arises from the combination of the following:
  – Function approximation
  – Bootstrapping
  – Off-policy learning
• Informally, each update performs an (approximate) Bellman backup and then fits the result as well as possible within a particular feature representation
• Bellman operators are contractions, but the value function approximation fitting step can be an expansion

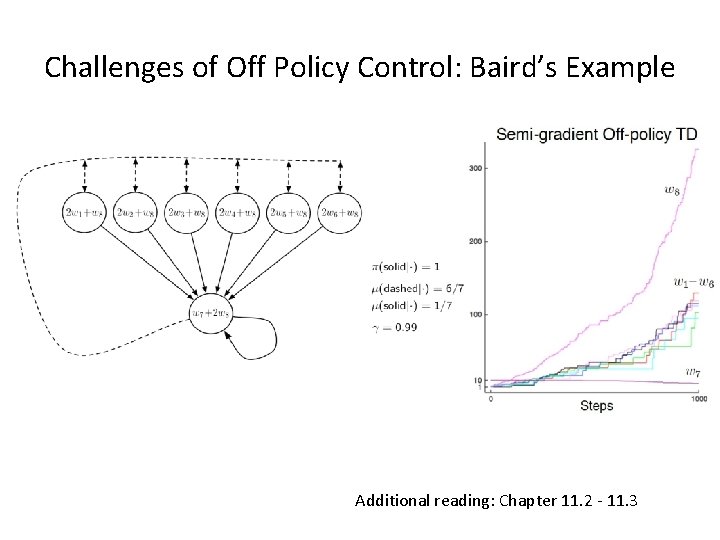

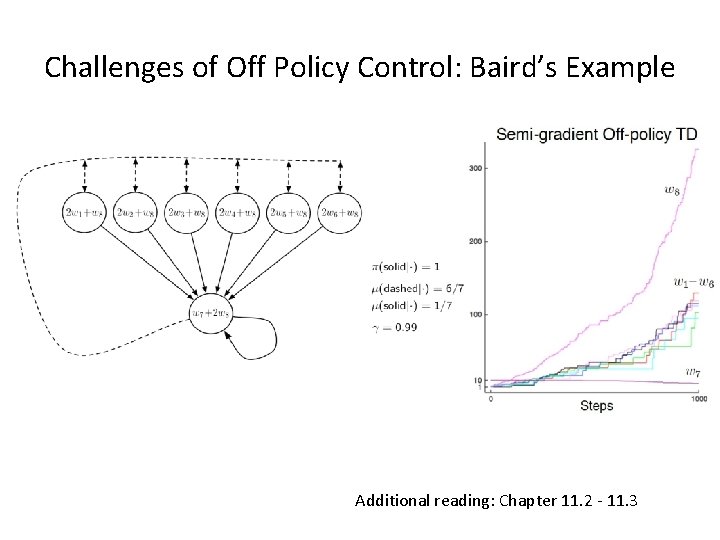

Challenges of Off-Policy Control: Baird’s Example
Additional reading: Chapter 11.2 - 11.3

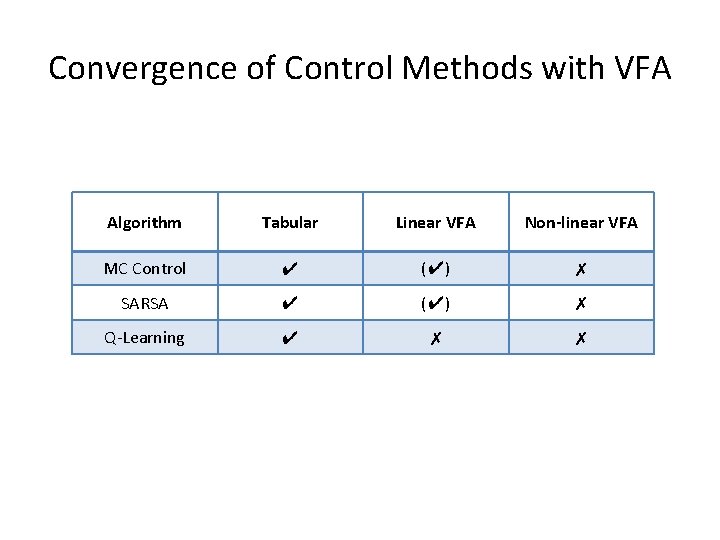

Convergence of Control Methods with VFA

| Algorithm | Tabular | Linear VFA | Non-linear VFA |
|---|---|---|---|
| MC Control | ✔ | (✔) | ✗ |
| SARSA | ✔ | (✔) | ✗ |
| Q-Learning | ✔ | ✗ | ✗ |

Other Function Approximators
• Linear value function approximators often work well given the right set of features
• But they can require carefully hand-designing that feature set
• An alternative is to use a much richer function approximation class that can map directly from states to values without requiring an explicit specification of features (e.g., deep neural nets)