
- Slides: 35

Validity Chong Ho Yu

Caution (Red alert! This is not a drill) • Validity in the context of measurement is different from validity in the context of research design (e.g., internal validity, external validity). • Even graduate students are confused!

Caution (Red alert! This is not a drill) • Validity in measurement: whether the test can measure what it intends to measure. • Validity in research design – Internal validity: given the research design, can the data support the conclusion? – External validity: given the research design, can the conclusion be generalized to a wider population?

Common approaches to validity • Content validity • Criterion – Predictive: how well the scores on one test can predict performance on another test or in another situation. – Concurrent: whether one measure can substitute for another (e.g., allowing MAT 130 to exempt students from Psyc 299). Both are about the relationship between X and Y, so we will treat them together as criterion validity.

Common approaches to validity • Construct: – Convergent: whether two tests measure related skills or knowledge. – Discriminant: whether tests that should measure different things actually do measure different things. Convergent validity and discriminant validity are two sides of the same coin, so we will treat them together as construct validity.

Face validity • Face validity simply means that the validity is taken at face value. As a check on face validity, test/survey items are sent to teachers or other subject matter experts to obtain suggestions for modification. Because of its vagueness and subjectivity, psychometricians abandoned this concept long ago.

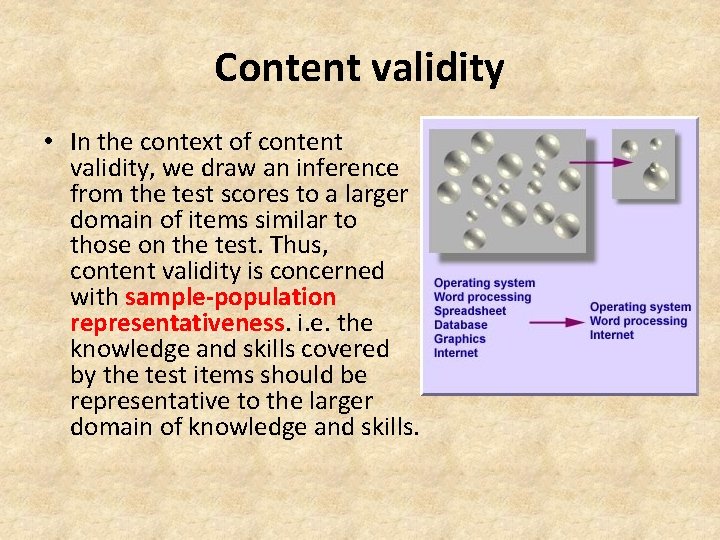

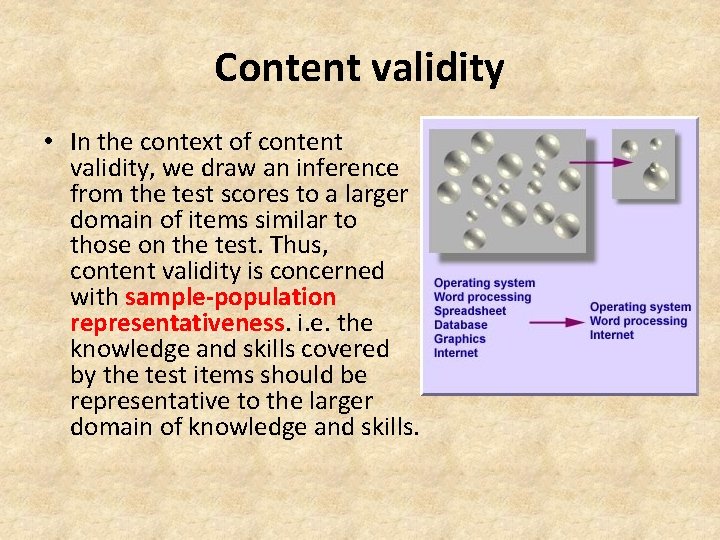

Content validity • In the context of content validity, we draw an inference from the test scores to a larger domain of items similar to those on the test. Thus, content validity is concerned with sample-population representativeness; i.e., the knowledge and skills covered by the test items should be representative of the larger domain of knowledge and skills.

Content validity • Computer literacy includes skills in operating systems, word processing, spreadsheets, databases, graphics, the internet, and many others. • It is difficult to administer a test covering all aspects of computing. Therefore, only a few tasks are sampled from the universe of computer skills.
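Sampling a few tasks so that every area of the domain is still represented can be sketched as a stratified random draw. This is a minimal illustration, not a prescribed procedure: the item bank `ITEM_BANK`, the task names, and the choice of an equal number of tasks per area are all hypothetical assumptions.

```python
import random

# Hypothetical item bank: the "universe" of computer-literacy tasks, grouped by area
ITEM_BANK = {
    "operating systems": [f"os_{i}" for i in range(1, 21)],
    "word processing": [f"wp_{i}" for i in range(1, 21)],
    "spreadsheets": [f"ss_{i}" for i in range(1, 21)],
    "databases": [f"db_{i}" for i in range(1, 21)],
    "graphics": [f"gr_{i}" for i in range(1, 21)],
    "internet": [f"net_{i}" for i in range(1, 21)],
}

def build_test(bank, items_per_area, seed=None):
    """Draw the same number of tasks from every area (a stratified random
    sample) so that a short test still covers the whole domain."""
    rng = random.Random(seed)
    return {area: rng.sample(tasks, items_per_area) for area, tasks in bank.items()}

short_test = build_test(ITEM_BANK, items_per_area=3, seed=42)
for area, tasks in short_test.items():
    print(area, tasks)
```

An equal allocation treats every area as equally important; if content experts judge some areas to matter more, drawing items in proportion to those weights would be the natural alternative.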

Content validity • Content validity is usually established by content experts. • Take computer literacy as an example again. A test of computer literacy should be written or reviewed by computer science professors or senior programmers in the IT industry, because it is assumed that computer scientists know what is important in their own discipline.

Content validity • At first glance, this approach looks similar to the validation process for face validity, but there is a subtle difference. • In content validity, evidence is obtained by looking for agreement among the judges. In short, face validity can be established by one person, but content validity should be checked by a panel; thus it usually goes hand in hand with inter-rater reliability (Kappa!).
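The slide only says "Kappa". For a panel of more than two judges, one common choice is Fleiss' kappa (Cohen's kappa covers exactly two raters); the sketch below assumes that choice. It compares the observed share of agreeing judge pairs with the agreement expected by chance. The ratings are hypothetical.

```python
from typing import Sequence

def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for a panel of judges.

    counts[i][j] = number of judges who put item i into category j.
    Every item must be rated by the same number of judges.
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2:
        raise ValueError("need at least two judges per item")
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every item must be rated by the same number of judges")
    n_categories = len(counts[0])
    total = n_items * n_raters
    # Share of all ratings that fell into each category
    p_j = [sum(row[j] for row in counts) / total for j in range(n_categories)]
    # Observed agreement per item: share of judge pairs that agree
    p_i = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
           for row in counts]
    p_bar = sum(p_i) / n_items
    p_e = sum(p * p for p in p_j)  # agreement expected by chance
    if p_e == 1:
        raise ValueError("kappa is undefined when every rating is in one category")
    return (p_bar - p_e) / (1 - p_e)

# Hypothetical panel: 3 judges rate 4 items as "essential" or "not essential".
# Each row: [judges saying essential, judges saying not essential]
ratings = [
    [3, 0],
    [3, 0],
    [0, 3],
    [2, 1],
]
print(f"Fleiss' kappa = {fleiss_kappa(ratings):.3f}")  # prints 0.625
```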

Content validity • This approach has some drawbacks. First, experts tend to take their knowledge for granted and forget how little other people know. It is not uncommon for tests written by content experts to be extremely difficult. • Sometimes we cannot rely entirely on experts. For example, we need the patient's perspective to develop a measurement scale for fatigue.

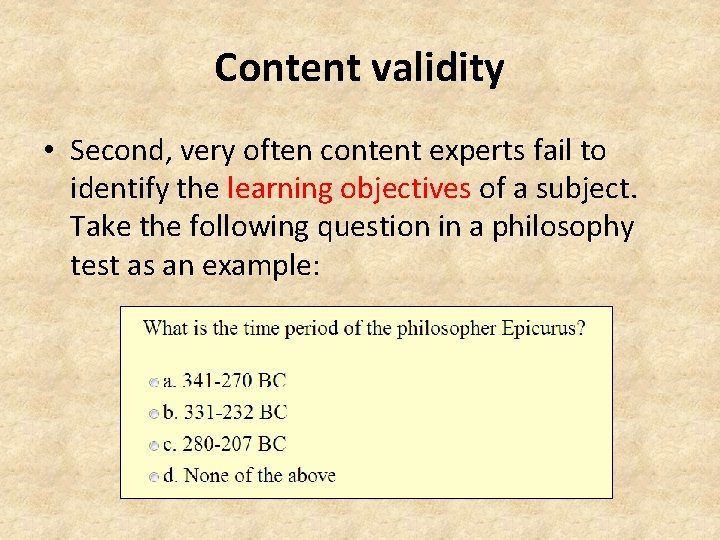

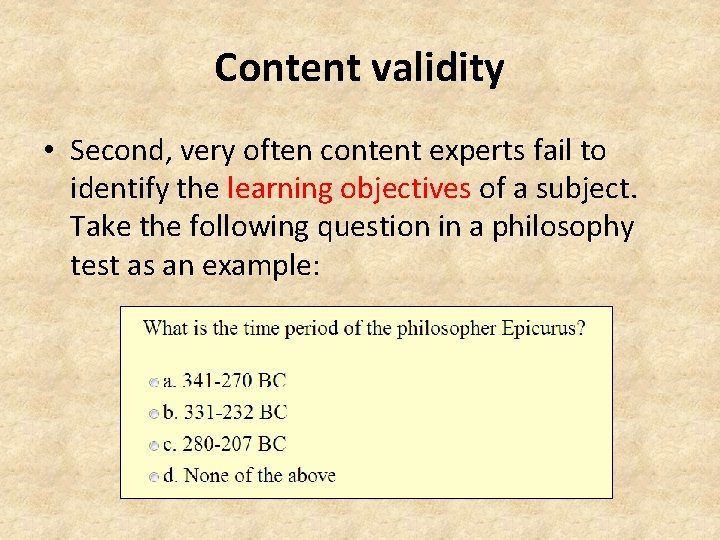

Content validity • Second, very often content experts fail to identify the learning objectives of a subject. Take the following question in a philosophy test as an example:

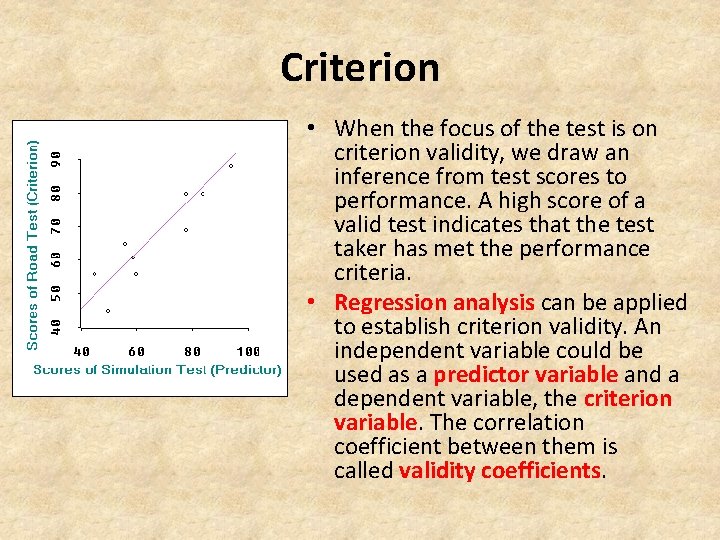

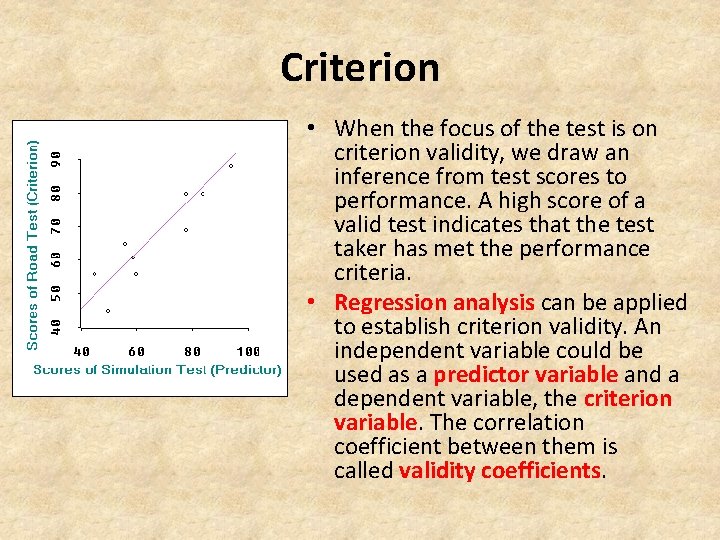

Criterion • When the focus of the test is on criterion validity, we draw an inference from test scores to performance. A high score on a valid test indicates that the test taker has met the performance criteria. • Regression analysis can be applied to establish criterion validity. The independent variable serves as the predictor variable and the dependent variable as the criterion variable. The correlation coefficient between them is called the validity coefficient.

Construct • When construct validity is emphasized, as the name implies, we draw an inference from test scores to a psychological construct. Because it is concerned with abstract and theoretical constructs, construct validity is also known as theoretical validity.

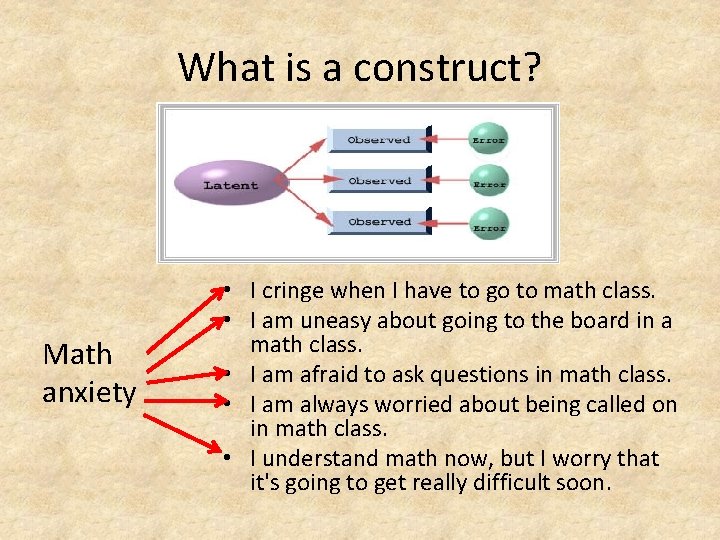

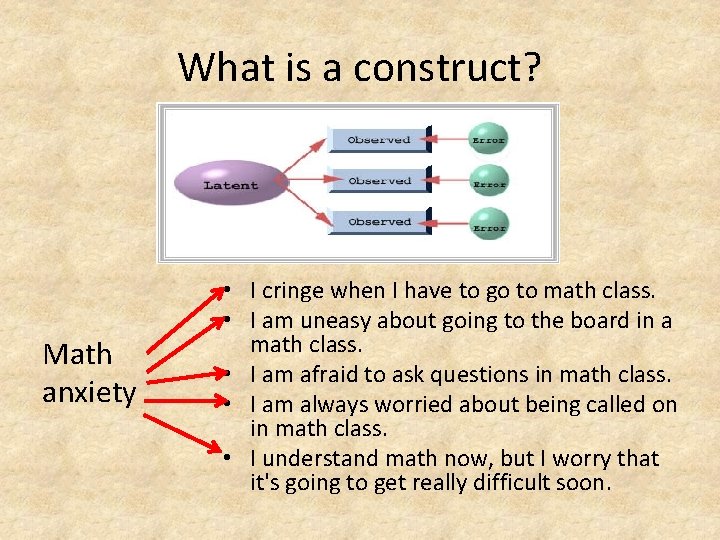

What is a construct? Math anxiety • I cringe when I have to go to math class. • I am uneasy about going to the board in a math class. • I am afraid to ask questions in math class. • I am always worried about being called on in math class. • I understand math now, but I worry that it's going to get really difficult soon.

Factor model • A factor model identifies the relationship between observed items and latent factors. For example, when a psychologist wants to study the causal relationship between math anxiety and job performance, he/she first has to define the constructs “math anxiety” and “job performance.” To accomplish this step, the psychologist needs to develop items that measure each defined construct.

Construct, dimension, subscale, factor, component • This construct has eight dimensions (e.g., intelligence has eight aspects). • This scale has eight subscales (e.g., the survey measures different but weakly related things). • The factor structure has eight factors/components (e.g., in factor analysis/PCA).

From reliability to validity • A psychologist employs Cronbach’s alpha to evaluate the internal consistency of observed items, and also applies factor analysis to extract latent constructs from these consistent observed variables. • If the factor structure indicates that the observed items cluster around one concept (construct), the construct is said to be unidimensional.
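Cronbach's alpha compares the sum of the item variances with the variance of the total score: alpha = k/(k - 1) × (1 - Σ item variances / total-score variance), where k is the number of items. A minimal stdlib sketch, with hypothetical responses:

```python
from statistics import variance

def cronbach_alpha(items):
    """Cronbach's alpha; items[j] is the list of scores on item j,
    one score per respondent (same respondent order for every item)."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total score
    item_var_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))

# Hypothetical responses: three items (rows) answered by four people (columns)
responses = [
    [1, 2, 3, 4],
    [2, 2, 4, 4],
    [1, 3, 3, 5],
]
print(f"alpha = {cronbach_alpha(responses):.3f}")  # prints 0.933
```

Sample variances are used throughout; because the same estimator appears in the numerator and denominator, the choice does not change alpha.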

Uni-dimensional vs. multi-dimensional • A survey/test that measures a single psychological construct is unidimensional. • A survey/test that has subscales measuring more than one construct is multidimensional. • Sometimes it is good to have one and only one dimension: the construct is fairly simple and there is not much debate about it, and your goal is to measure this particular construct, nothing else!

Uni-dimensional vs. multi-dimensional • Sometimes you may face criticism for reducing a complex phenomenon to one dimension: reductionism (e.g., intelligence has only a single g factor; religion is nothing more than a coping mechanism against anxiety). • To counteract reductionism, you need multidimensional measures.

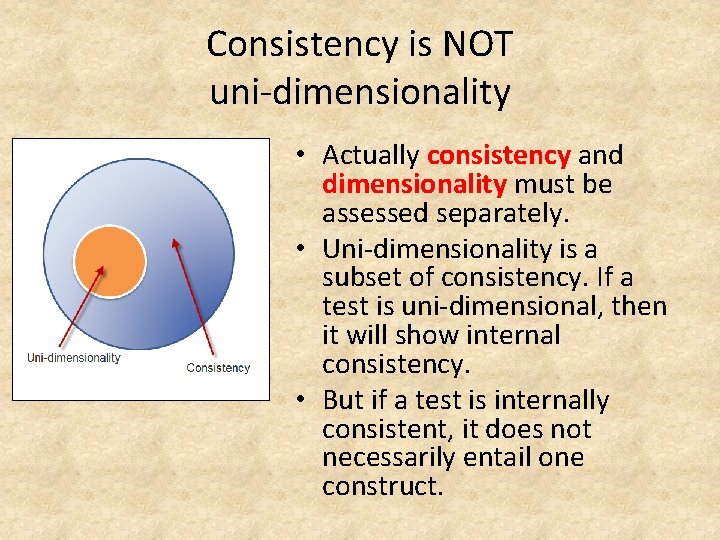

Overall Cronbach Coefficient Alpha • One may argue that because a high Cronbach's alpha indicates a high degree of internal consistency, the test or survey must be unidimensional rather than multidimensional, and thus there is no need to further investigate its subscales. This is a common misconception.

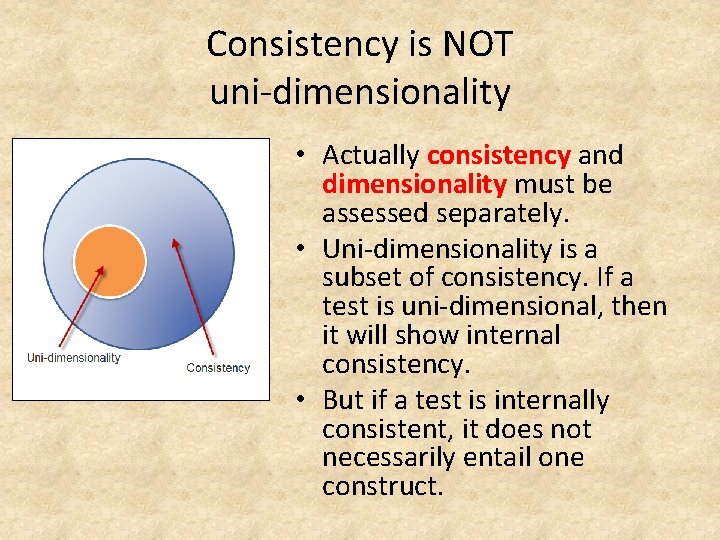

Consistency is NOT uni-dimensionality • Consistency and dimensionality must be assessed separately. • Uni-dimensionality is a subset of consistency: if a test is unidimensional, then it will show internal consistency. • But if a test is internally consistent, it does not necessarily measure only one construct.
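A small hypothetical case shows why a high alpha does not imply one construct. The sketch assumes 20 items forming two clusters of 10: items correlate 0.6 within a cluster and 0 across clusters, so the two constructs are completely unrelated. It computes standardized alpha from the mean inter-item correlation r̄, using alpha = k·r̄ / (1 + (k - 1)·r̄).

```python
def standardized_alpha(corr):
    """Standardized Cronbach's alpha from an inter-item correlation matrix."""
    k = len(corr)
    off_diag = [corr[i][j] for i in range(k) for j in range(k) if i != j]
    r_bar = sum(off_diag) / len(off_diag)  # mean inter-item correlation
    return k * r_bar / (1 + (k - 1) * r_bar)

def two_cluster_corr(items_per_cluster, r_within, r_between):
    """Hypothetical correlation matrix for two clusters of items (two constructs)."""
    k = 2 * items_per_cluster
    corr = [[1.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(k):
            if i != j:
                same_cluster = (i < items_per_cluster) == (j < items_per_cluster)
                corr[i][j] = r_within if same_cluster else r_between
    return corr

corr = two_cluster_corr(items_per_cluster=10, r_within=0.6, r_between=0.0)
alpha = standardized_alpha(corr)
print(f"standardized alpha = {alpha:.3f}")  # prints 0.888
```

Alpha is about 0.89 even though the items measure two unrelated constructs. The same matrix has two large eigenvalues (6.4 each) and eighteen of 0.4, which is the two-dimensional structure a factor analysis would reveal and alpha alone hides.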

Fallacy • If I am a man, I must be a human. But if I am a human, I may not be a man (I could be a woman). Reasoning from "if A then B" to "if B then A" is the fallacy known as "affirming the consequent."

Factor analysis • Exploratory factor analysis (EFA): Propose a factor model • Confirmatory factor analysis (CFA): confirm/verify the proposed factor model • You can do EFA in JMP, SAS, or SPSS (Base or Standard). In this class you need to learn the concepts only.

PCA and EFA • Principal components analysis aims to find the optimal way of collapsing many correlated variables into a small number of subsets so that the study is more manageable. The subsets do not need to make any theoretical sense; they are for convenience only. • Exploratory factor analysis is intended to identify the underlying theoretical structure of diverse variables. For example, if certain items load onto a subscale called intrinsic religious orientation, then the items must be related to this construct both mathematically and conceptually.
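The "collapsing" in PCA means finding weighted sums of the variables that capture as much variance as possible. The first principal component is the leading eigenvector of the correlation matrix, and its eigenvalue divided by the number of variables is the share of variance it explains. The stdlib sketch below finds only that first component by power iteration, using a hypothetical three-variable correlation matrix; real analyses would use a statistics package (JMP, SAS, SPSS, or a linear-algebra library) and extract all components.

```python
import math

def first_principal_component(corr, iterations=500):
    """Largest eigenvalue and its unit eigenvector of a correlation matrix,
    found by power iteration (a sketch: first component only)."""
    k = len(corr)
    vec = [1.0] + [0.5] * (k - 1)  # arbitrary non-zero starting vector
    for _ in range(iterations):
        new = [sum(corr[i][j] * vec[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in new))
        vec = [x / norm for x in new]
    # Rayleigh quotient gives the eigenvalue of the converged vector
    cv = [sum(corr[i][j] * vec[j] for j in range(k)) for i in range(k)]
    eigenvalue = sum(v * c for v, c in zip(vec, cv))
    return eigenvalue, vec

# Hypothetical correlation matrix: three variables that all correlate 0.5
corr = [
    [1.0, 0.5, 0.5],
    [0.5, 1.0, 0.5],
    [0.5, 0.5, 1.0],
]
eigenvalue, weights = first_principal_component(corr)
share = eigenvalue / len(corr)  # proportion of total standardized variance
print(f"eigenvalue = {eigenvalue:.3f}, share of variance = {share:.1%}")
print("weights:", [round(w, 3) for w in weights])
```

Here one component with equal weights (about 0.577 each) carries two thirds of the variance; whether that component means anything theoretically is not PCA's concern, which is exactly the contrast with EFA drawn above.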

Example of PCA: Insurance policy • The policy variables (Maitra & Yan): – Fire Protection Class – Number of Buildings in Policy – Number of Locations in Policy – Maximum Building Age – Building Coverage Indicator – Policy Age

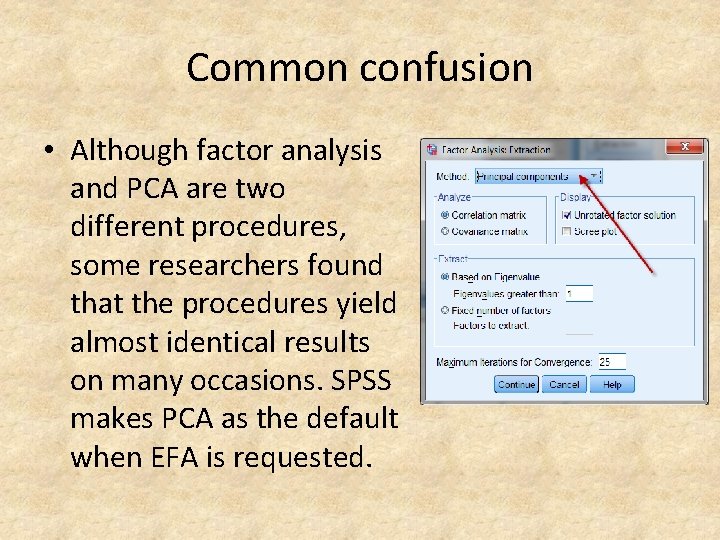

Common confusion • Although factor analysis and PCA are two different procedures, some researchers have found that they yield almost identical results on many occasions. SPSS uses PCA as the default extraction method when EFA is requested.

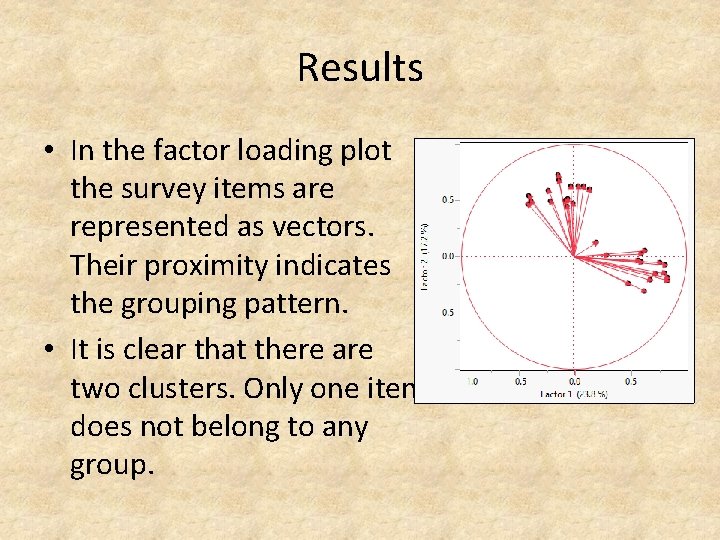

Example • Dr. Shaynah Neshama in Social Work developed a scale with two constructs (subscales): perception of human nature and perception of social justice. • She wants to know whether there are really only two constructs in the survey, and whether the items fit into the proposed subscales.

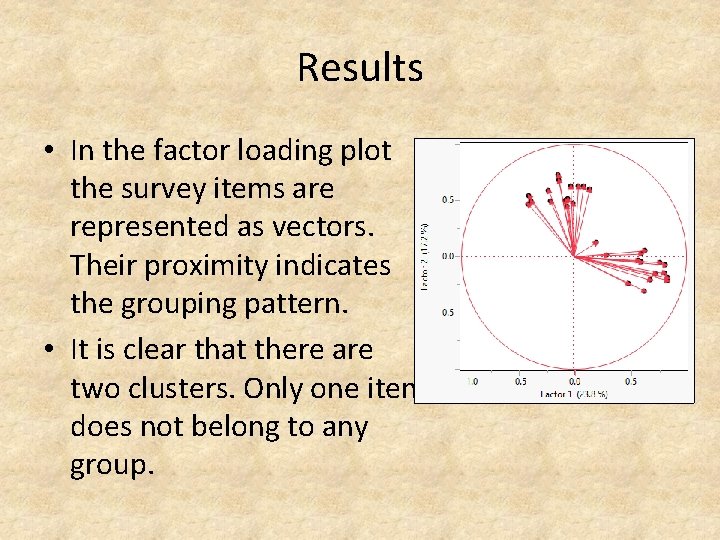

Results • In the factor loading plot, the survey items are represented as vectors; their proximity indicates the grouping pattern. • There are clearly two clusters. Only one item does not belong to either group.
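Reading the grouping pattern off a loading plot can also be done numerically: assign each item to the factor on which it loads most strongly, and flag items whose largest loading is too weak to belong anywhere. The loadings below are hypothetical (not the survey's actual results), and the 0.4 cutoff is an assumed rule of thumb, not a fixed standard.

```python
def assign_items(loadings, cutoff=0.4):
    """Assign each item to the factor (1-based) with its largest absolute
    loading, or to None if no loading reaches the cutoff."""
    assignment = {}
    for item, row in loadings.items():
        best = max(range(len(row)), key=lambda f: abs(row[f]))
        assignment[item] = best + 1 if abs(row[best]) >= cutoff else None
    return assignment

# Hypothetical two-factor loadings for five survey items
loadings = {
    "Q1": (0.72, 0.10),
    "Q2": (0.65, 0.05),
    "Q3": (0.08, 0.70),
    "Q4": (0.12, 0.66),
    "Q5": (0.21, 0.18),
}
print(assign_items(loadings))
# {'Q1': 1, 'Q2': 1, 'Q3': 2, 'Q4': 2, 'Q5': None}
```

Here Q5 plays the role of the item that belongs to neither cluster.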

• CFA is a component of Structural Equation Modeling (SEM). You test specific hypotheses based on model fit: you either reject or fail to reject the hypothesized model.

CFA • You cannot do CFA in SPSS Base • You can use: – SPSS AMOS – SAS – EQS – Mplus • You don’t need to know the computational procedures for the exam • If you are interested in CFA, please take my class 462 Research Methods II

Differential item functioning • Based on IRT, the instrument developer should know whether examinees of the same ability but from different groups have different probabilities of success on an item. Put simply, is the test biased against particular gender, ethnic, social, or economic groups? Differential item functioning (DIF) was developed to counteract test item bias. DIF tells you whether a test item functions differently for different groups. There are two types of DIF:
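The core question, whether examinees of the same ability but from different groups have different probabilities of success, can be illustrated with the two-parameter logistic (2PL) IRT model, P(θ) = 1 / (1 + e^(−a(θ − b))), where θ is ability, a is discrimination, and b is difficulty. This is a sketch only: the item parameters for the "reference" and "focal" groups are hypothetical, and only difficulty differs between them.

```python
import math

def p_correct(theta, a, b):
    """Two-parameter logistic (2PL) IRT model: probability of a correct answer."""
    return 1 / (1 + math.exp(-a * (theta - b)))

# Hypothetical item parameters estimated separately in two groups
ITEM_REFERENCE = {"a": 1.2, "b": 0.0}
ITEM_FOCAL = {"a": 1.2, "b": 0.5}  # same discrimination, harder for the focal group

for theta in (-1.0, 0.0, 1.0):
    p_ref = p_correct(theta, **ITEM_REFERENCE)
    p_foc = p_correct(theta, **ITEM_FOCAL)
    print(f"theta={theta:+.1f}  reference={p_ref:.2f}  focal={p_foc:.2f}  "
          f"gap={p_ref - p_foc:.2f}")
```

At every ability level the focal group has a lower chance of success on this item; that gap at equal ability, not a difference in group averages, is what DIF refers to.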

Caution • A statistical disparity does not necessarily imply that the test or some test items are biased. For example, some groups may do better than others on a test simply because they work harder. • You need to read the test items and evaluate the situation to find out whether any elements are biased against certain groups.

Possible bias and unfair situations • Not all examinees in the population have access to the necessary resources, e.g., a graduate school requires all applicants to take a test of SAS and SPSS (Premium). • There are irrelevant elements in the test that can substantively affect test performance, e.g., in a speed test, people who can type faster have an advantage; in an online test, people with access to a faster network have an advantage.

What should be done? • Standards for Educational and Psychological Testing (AERA, APA, & NCME, 2014): – Use a “universal” test design to remove barriers – Remove elements that might restrict an examinee’s ability to show what they know • Examples – Individuals with disabilities – Individuals from diverse language and cultural backgrounds – Older adults who are not familiar with technology