vFair: Latency-Aware Fair Storage Scheduling via Per-IO Cost-Based Differentiation

- Slides: 28

vFair: Latency-Aware Fair Storage Scheduling via Per-IO Cost-Based Differentiation. Hui Lu, Brendan Saltaformaggio, Ramana Kompella, Dongyan Xu. ACM Symposium on Cloud Computing 2015 (SoCC'15)

OUTLINE
- Introduction
- Background & Motivation
- Design
- Evaluation
- Conclusion

Introduction (1/4)
- In virtualized data centers, multiple virtual machines (VMs) are consolidated to access a shared storage system.
- Sharing physical hardware among many VMs offers several benefits:
  - Improved utilization
  - Agility in resource provisioning, as required by modern applications

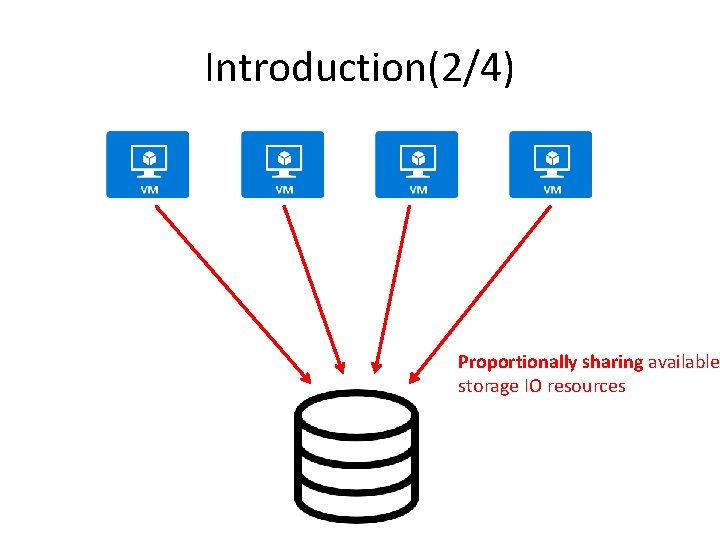

Introduction (2/4)
- Proportionally sharing the available storage IO resources

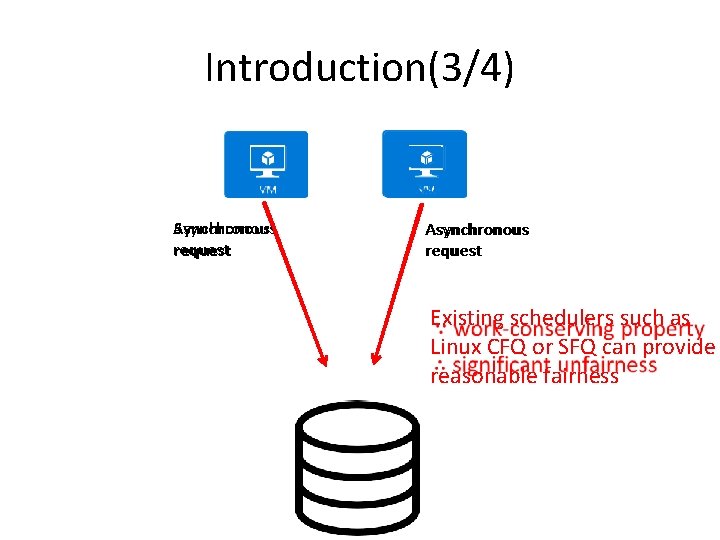

Introduction (3/4)

(Figure: a synchronous request stream and an asynchronous request stream)

Existing schedulers such as Linux CFQ or SFQ can provide reasonable fairness.

Introduction (4/4)
- vFair: a novel block-level proportional-share scheduling framework.
- It provides both strict proportional sharing of storage IO among multiple VMs and high storage utilization, regardless of IO patterns.

Background
- Storage access patterns:
  - Direction (read / write)
  - Location (sequential / random)
  - Parallelism (synchronous / asynchronous)
    - Synchronous => low IO concurrency
    - Asynchronous => high IO concurrency
  - Block size

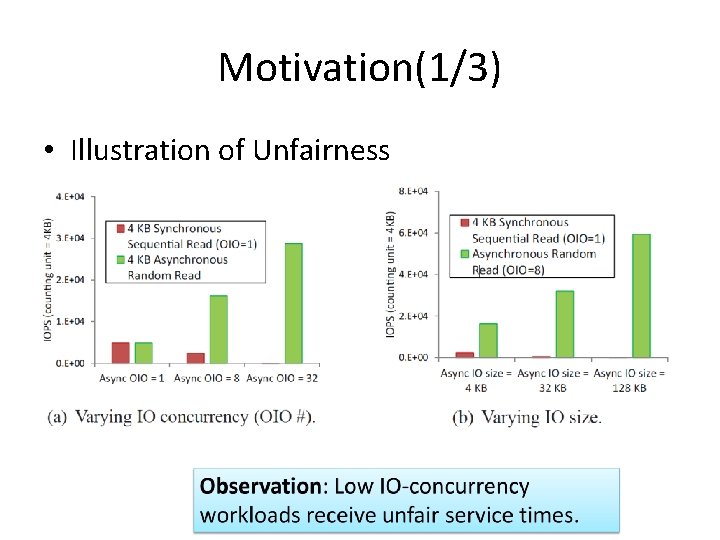

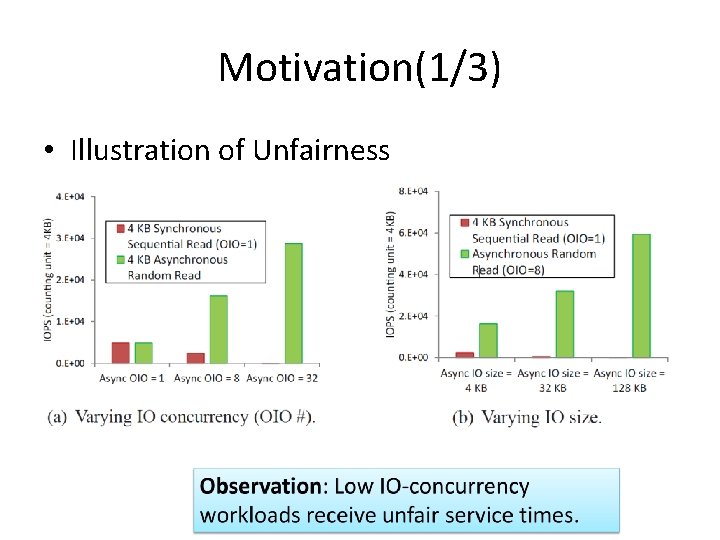

Motivation (1/3)
- Illustration of unfairness

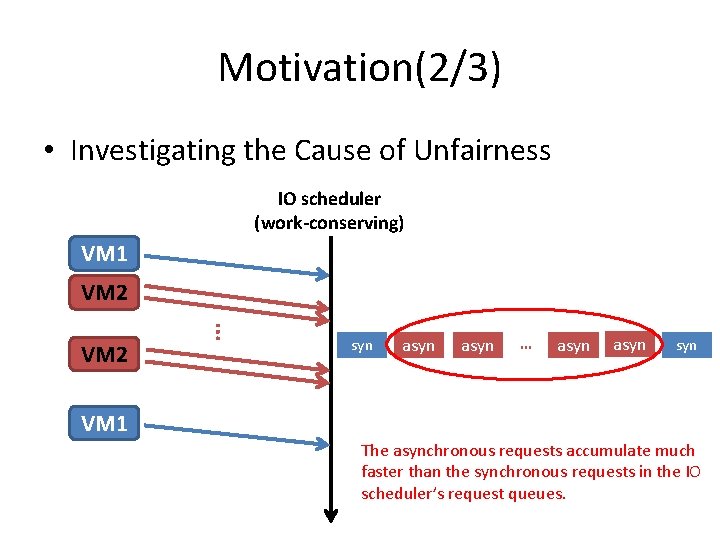

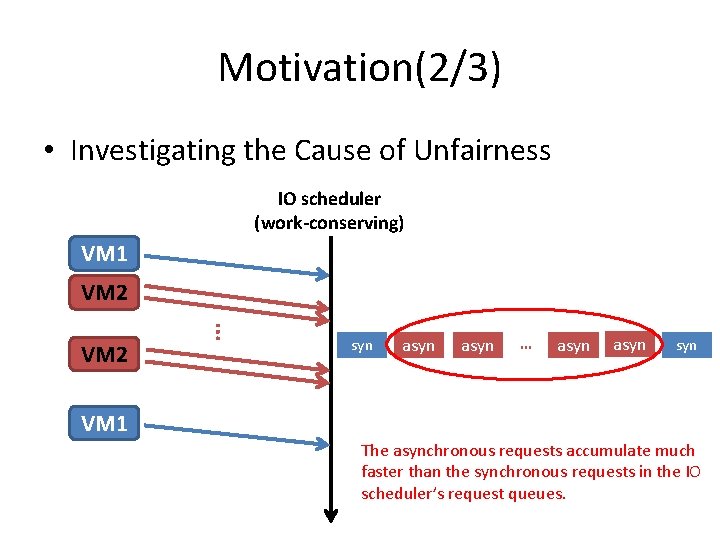

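Motivation (2/3)
- Investigating the cause of unfairness: a work-conserving IO scheduler

(Figure: synchronous (syn) and asynchronous (asyn) requests from VM 1 and VM 2 queued at a work-conserving IO scheduler)

The asynchronous requests accumulate much faster than the synchronous requests in the IO scheduler's request queues. A synchronous workload issues its next request only after the previous one completes, so it rarely has more than one request queued, whereas an asynchronous workload keeps many requests outstanding; a work-conserving scheduler therefore keeps dispatching the asynchronous backlog.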
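
A toy model makes this mechanism concrete. It assumes (hypothetically) equal-cost requests, a device that completes one request at a time from a FIFO dispatch queue of `device_depth` slots, a synchronous VM that keeps exactly one request in flight and reissues immediately, an asynchronous VM that is always backlogged, and a work-conserving scheduler that alternates between the VMs but gives a slot to whichever VM has a request when the other has none. `simulate` is an illustrative helper, not code from vFair or Linux.

```python
from collections import deque


def simulate(total_completions: int, device_depth: int) -> dict[str, int]:
    """Count completed requests per VM in a toy work-conserving scheduler.

    Hypothetical model: equal-cost requests, a FIFO device queue of
    `device_depth` slots served one at a time, a synchronous VM with at most
    one request in flight, and an asynchronous VM that is always backlogged.
    """
    device: deque[str] = deque()
    sync_in_flight = False
    turn = "sync"  # round-robin pointer between the two VMs
    completed = {"sync": 0, "async": 0}

    def refill() -> None:
        nonlocal sync_in_flight, turn
        # Work-conserving: keep every device slot busy while any VM has a request.
        while len(device) < device_depth:
            if turn == "sync" and not sync_in_flight:
                device.append("sync")
                sync_in_flight = True
            else:
                # Async VM's turn, or the sync VM has nothing queued: never idle.
                device.append("async")
            turn = "async" if turn == "sync" else "sync"

    refill()
    for _ in range(total_completions):
        done = device.popleft()
        completed[done] += 1
        if done == "sync":
            sync_in_flight = False  # the sync VM reissues right after completion
        refill()
    return completed


print(simulate(4000, device_depth=1))  # {'sync': 2000, 'async': 2000}
print(simulate(4000, device_depth=4))  # {'sync': 1000, 'async': 3000}
```

With a single dispatch slot the two VMs alternate evenly, but once the device queue holds four requests, the synchronous VM's lone request waits behind three asynchronous ones each time, so it completes only a quarter of the IOs.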

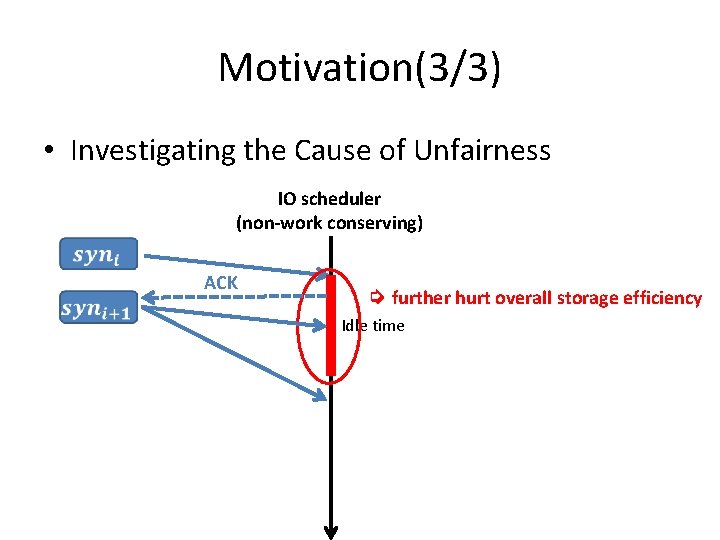

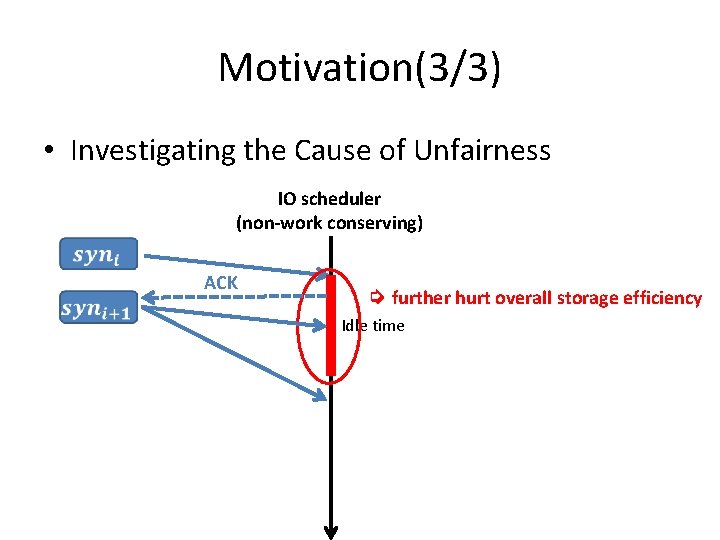

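Motivation (3/3)
- Investigating the cause of unfairness: a non-work-conserving IO scheduler

(Figure: the scheduler stays idle after a request is acknowledged (ACK), waiting for the same VM's next request)

The resulting idle time further hurts overall storage efficiency.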
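
A back-of-the-envelope calculation shows why idling costs efficiency. Assume (hypothetically) that each synchronous request needs `service_ms` of device time and that the VM needs `think_ms` after each acknowledgment before issuing its next request. A scheduler that waits for that next request leaves the device idle for `think_ms` every round, so the device is busy only `service_ms / (service_ms + think_ms)` of the time while serving that VM. The function name and parameters are illustrative.

```python
def busy_fraction_while_idling(service_ms: float, think_ms: float) -> float:
    """Fraction of time the device is busy if the scheduler waits out the VM's think time.

    Hypothetical model: one synchronous request per round, each round costing
    service_ms of device work followed by think_ms of forced idling.
    """
    if service_ms <= 0 or think_ms < 0:
        raise ValueError("service_ms must be positive and think_ms non-negative")
    return service_ms / (service_ms + think_ms)


print(busy_fraction_while_idling(4.0, 1.0))  # 0.8
print(busy_fraction_while_idling(0.1, 0.1))  # 0.5
```

The shorter the per-request service time relative to the think time (as on fast storage), the larger the share of device capacity that idling wastes.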

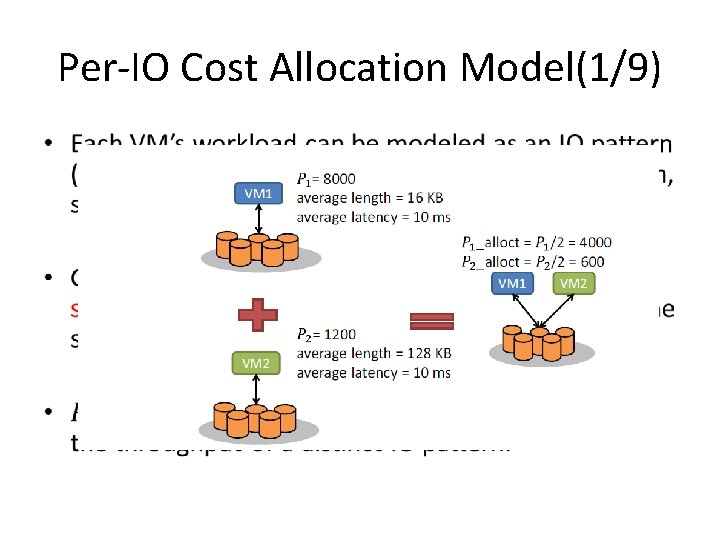

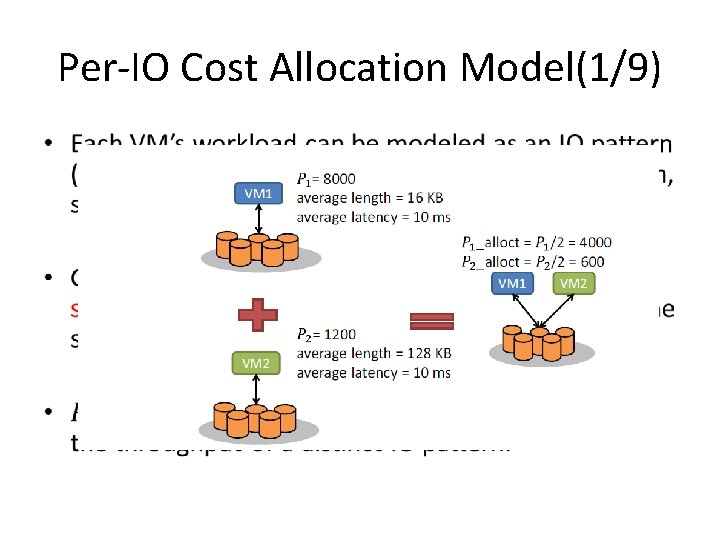

Per-IO Cost Allocation Model (1/9)

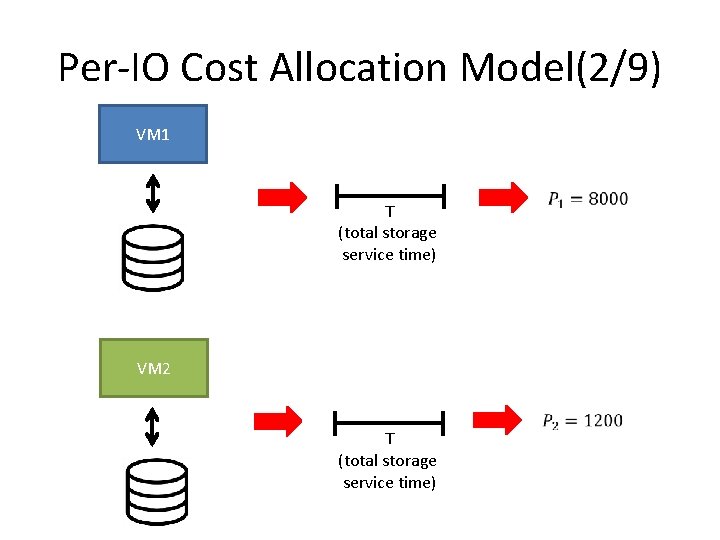

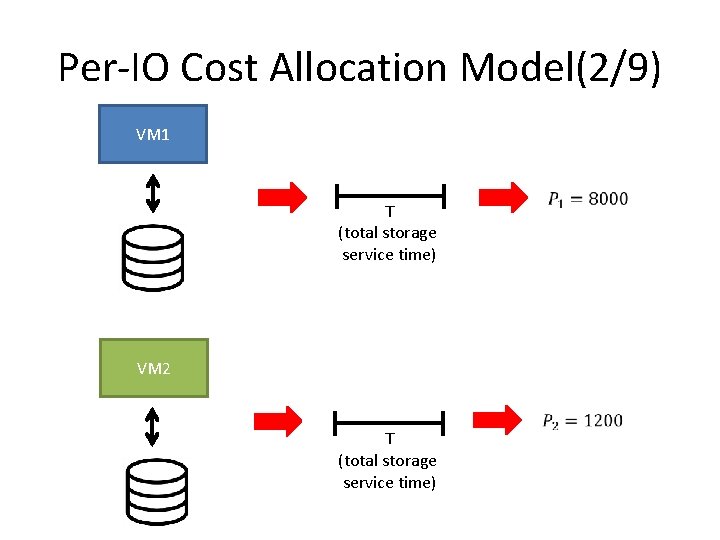

Per-IO Cost Allocation Model (2/9)

(Figure: VM 1 and VM 2, each annotated with T, its total storage service time)
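
vFair's exact per-IO cost formulas appear in figures not reproduced here. As a generic illustration of charging VMs by storage service time rather than by request count, the sketch below uses a standard weighted least-attained-service rule, not vFair's own model: it always dispatches the next IO of the VM with the smallest service time consumed per unit of weight. The per-IO costs, weights, and the function name are hypothetical.

```python
def share_service_time(
    per_io_cost: dict[str, int], weights: dict[str, int], budget: int
) -> dict[str, int]:
    """Greedy weighted sharing of device service time among always-backlogged VMs.

    Hypothetical model: every IO from a given VM costs a fixed amount of device
    time (per_io_cost), and the scheduler dispatches one IO at a time until the
    device has spent at least `budget` time units.
    """
    used = {vm: 0 for vm in per_io_cost}  # service time charged to each VM so far
    elapsed = 0
    while elapsed < budget:
        # Pick the VM that has consumed the least service time per unit of weight;
        # ties go to the VM listed first.
        vm = min(used, key=lambda v: used[v] / weights[v])
        used[vm] += per_io_cost[vm]
        elapsed += per_io_cost[vm]
    return used


# VM 1 issues small IOs (cost 1), VM 2 large IOs (cost 4); equal weights.
print(share_service_time({"VM 1": 1, "VM 2": 4}, {"VM 1": 1, "VM 2": 1}, 100))
# {'VM 1': 49, 'VM 2': 52}
```

Both VMs end up with nearly equal service time (within one IO's cost) even though VM 1 completed 49 IOs to VM 2's 13; equalizing request counts instead would hand the large-IO VM four times as much device time.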

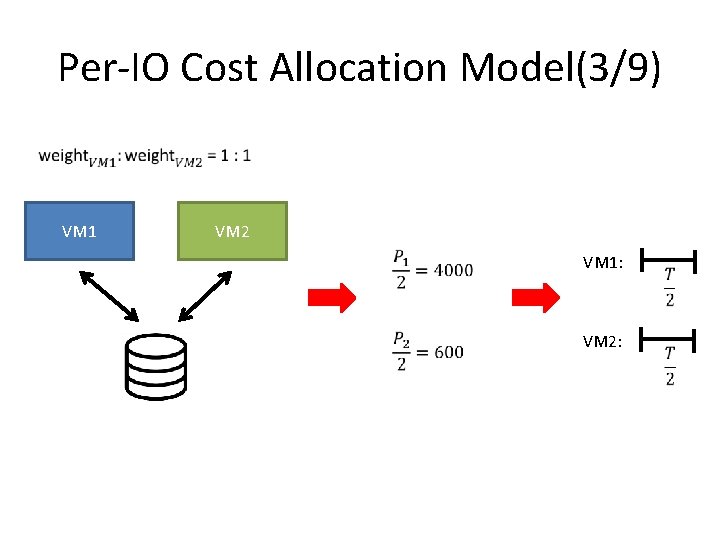

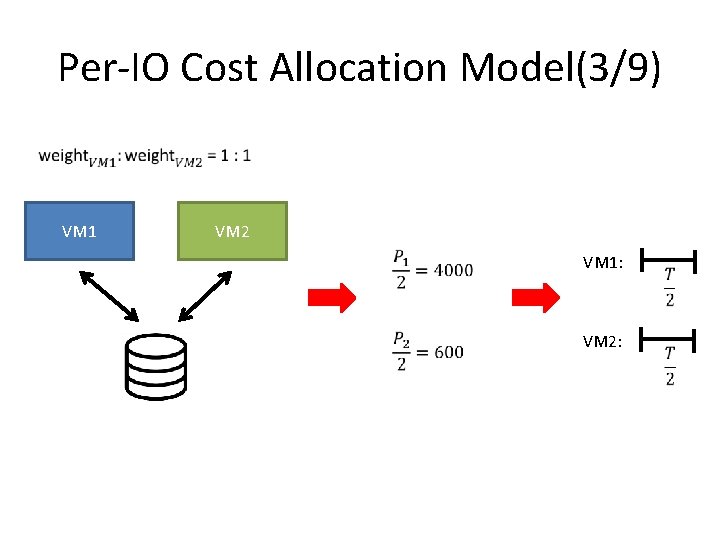

Per-IO Cost Allocation Model (3/9)

(Figure: per-VM panels for VM 1 and VM 2)

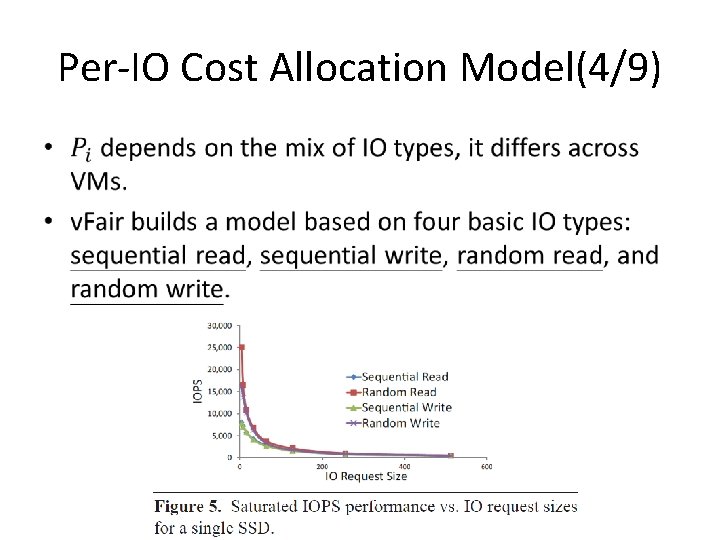

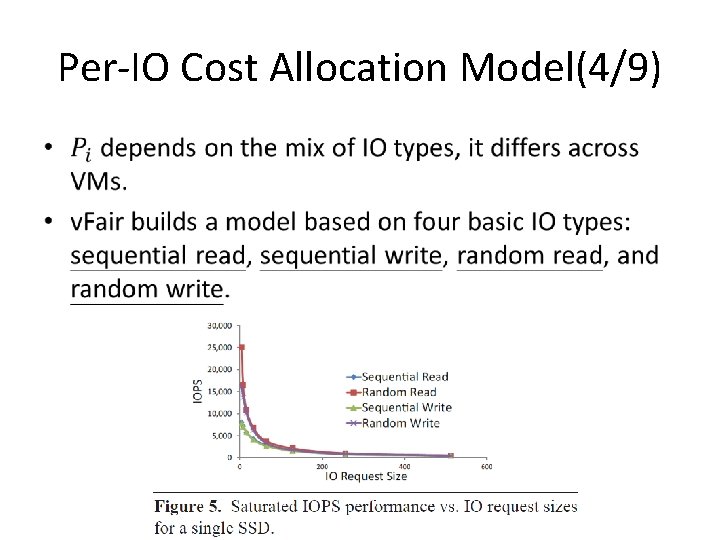

Per-IO Cost Allocation Model (4/9)

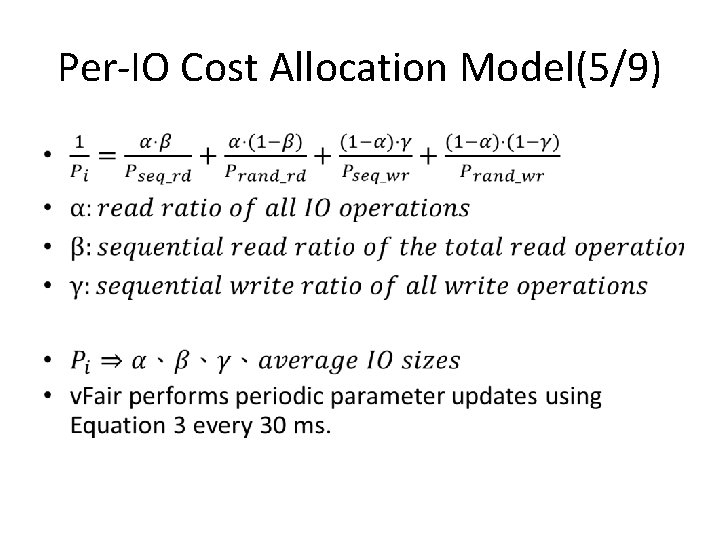

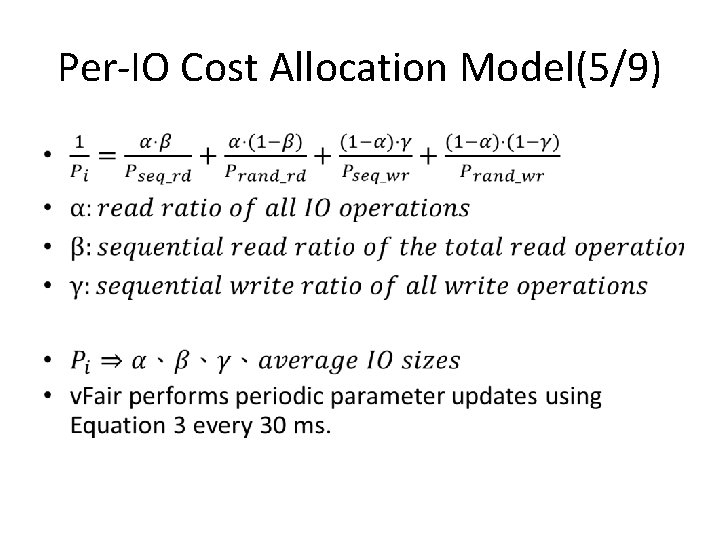

Per-IO Cost Allocation Model (5/9)

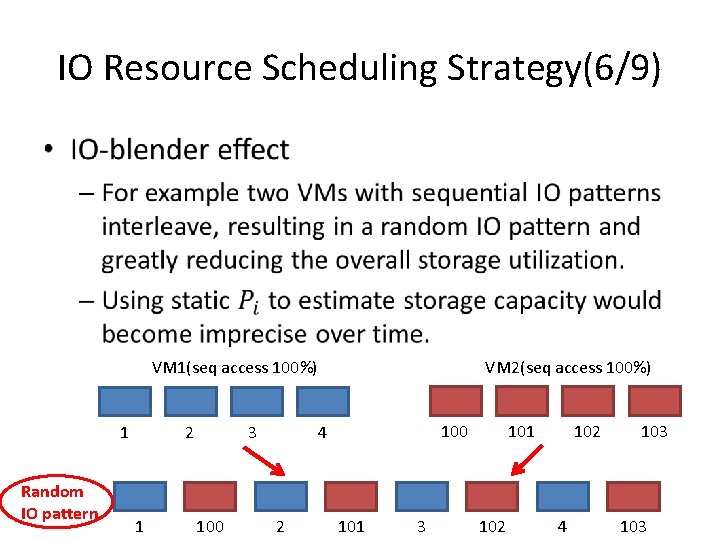

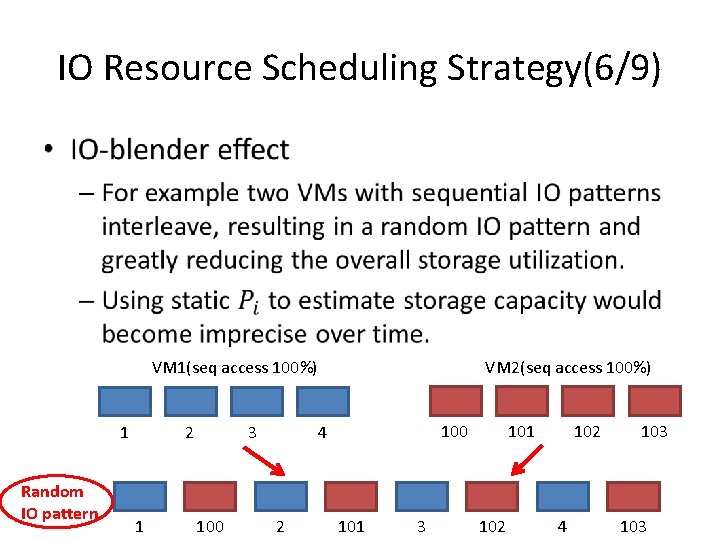

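IO Resource Scheduling Strategy (6/9)

(Figure: VM 1 and VM 2 each issue 100% sequential accesses, VM 1 to blocks 1, 2, 3, 4 and VM 2 to blocks 100, 101, 102, 103; interleaved at the shared storage, the two streams form a random IO pattern, the IO blender effect.)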
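
The figure's point can be reproduced with a toy model. Each VM's stream alone is perfectly sequential, but a simple round-robin merge (our assumption about how the shared queue interleaves them) leaves no two consecutive accesses on adjacent blocks. The block numbers come from the slide; `interleave` and `sequential_fraction` are hypothetical helpers.

```python
from itertools import chain, zip_longest


def interleave(*streams: list[int]) -> list[int]:
    """Merge per-VM block streams round-robin (a hypothetical shared-queue order)."""
    merged = chain.from_iterable(zip_longest(*streams))
    return [block for block in merged if block is not None]


def sequential_fraction(blocks: list[int]) -> float:
    """Fraction of consecutive accesses that target the next logical block."""
    if len(blocks) < 2:
        return 1.0
    hits = sum(1 for prev, cur in zip(blocks, blocks[1:]) if cur == prev + 1)
    return hits / (len(blocks) - 1)


vm1 = [1, 2, 3, 4]
vm2 = [100, 101, 102, 103]
print(sequential_fraction(vm1))  # 1.0
print(interleave(vm1, vm2))  # [1, 100, 2, 101, 3, 102, 4, 103]
print(sequential_fraction(interleave(vm1, vm2)))  # 0.0
```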

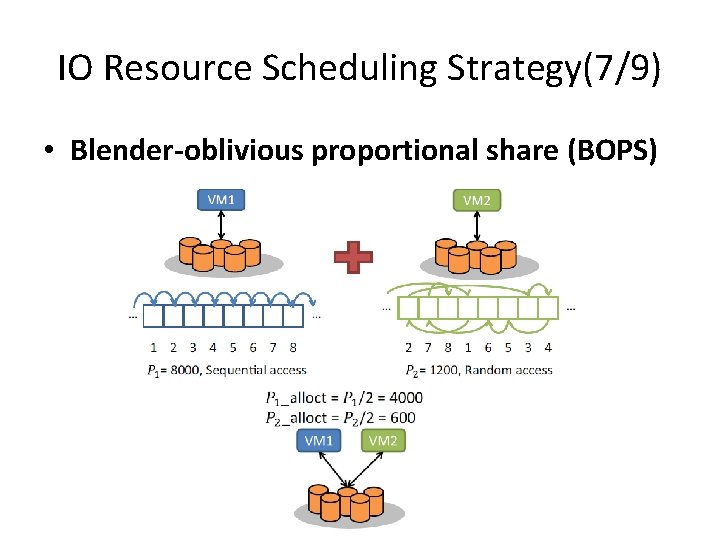

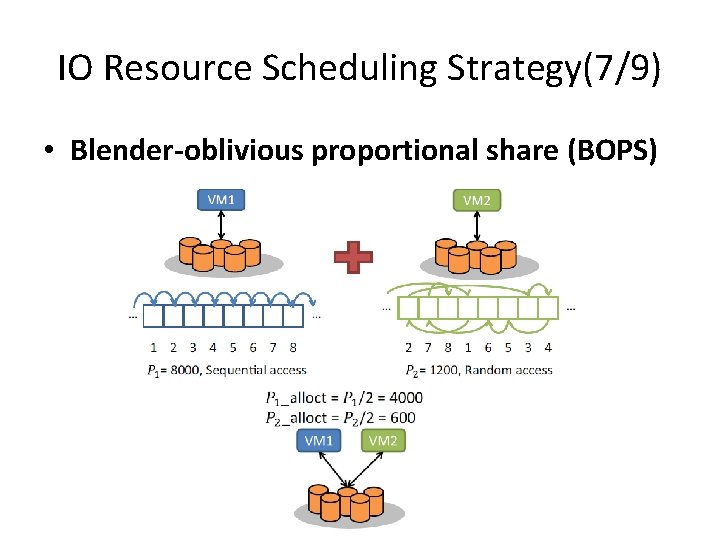

IO Resource Scheduling Strategy (7/9)
- Blender-oblivious proportional share (BOPS)

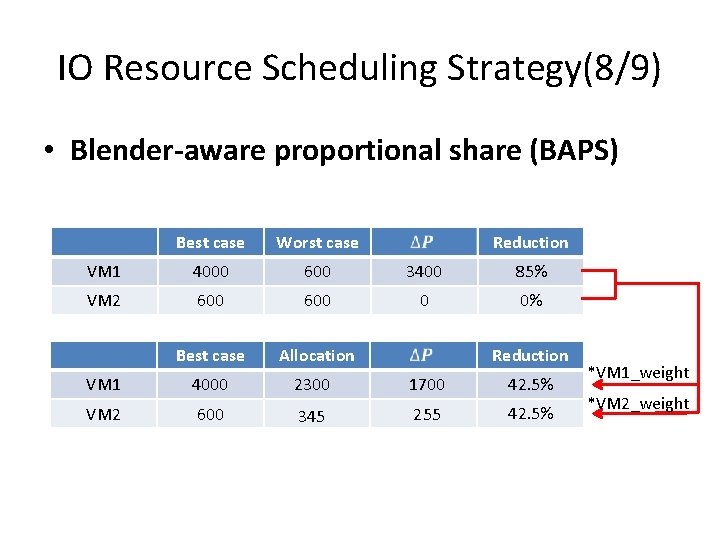

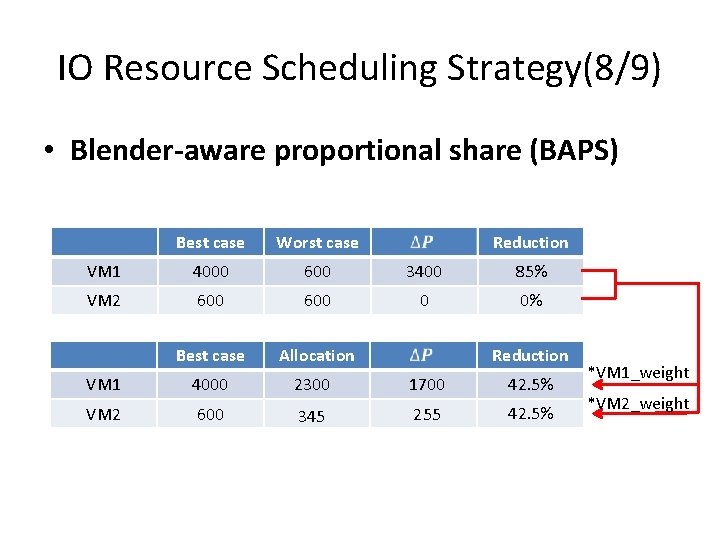

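IO Resource Scheduling Strategy (8/9)
- Blender-aware proportional share (BAPS)

| | Best case | Worst case | Reduction |
|---|---|---|---|
| VM 1 | 4000 | 600 | 3400 (85%) |
| VM 2 | 600 | 600 | 0 (0%) |

| | Best case | Allocation | Reduction |
|---|---|---|---|
| VM 1 | 4000 | 2300 | 1700 (42.5%) |
| VM 2 | 600 | 345 | 255 (42.5%) |

Slide annotation on the reductions: × VM 1_weight, × VM 2_weight.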
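
The BAPS numbers are consistent with the following reading, which is our reconstruction rather than a formula stated on the slide: each VM's own reduction ratio is 1 − worst/best, the common ratio is the weight-normalized average of these, and every VM's best-case throughput is scaled down by that common ratio. The slide does not give the weights; equal weights reproduce its numbers. The function name is hypothetical, and `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction


def baps_allocation(
    best: dict[str, int], worst: dict[str, int], weights: dict[str, int]
) -> tuple[Fraction, dict[str, Fraction]]:
    """Spread the blender-induced reduction across VMs by weight (reconstruction).

    Assumption: each VM's own reduction ratio is 1 - worst/best; the common
    ratio is their weight-normalized average, and every VM is scaled by it.
    """
    total_weight = sum(weights.values())
    common_reduction = sum(
        Fraction(weights[vm], total_weight) * (1 - Fraction(worst[vm], best[vm]))
        for vm in best
    )
    allocation = {vm: best[vm] * (1 - common_reduction) for vm in best}
    return common_reduction, allocation


ratio, alloc = baps_allocation(
    best={"VM 1": 4000, "VM 2": 600},
    worst={"VM 1": 600, "VM 2": 600},
    weights={"VM 1": 1, "VM 2": 1},  # assumed equal weights
)
print(float(ratio))  # 0.425
print({vm: int(v) for vm, v in alloc.items()})  # {'VM 1': 2300, 'VM 2': 345}
```

Under this reading, VM 1's 85% loss and VM 2's 0% loss average to a shared 42.5% reduction, which matches both rows of the allocation table.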

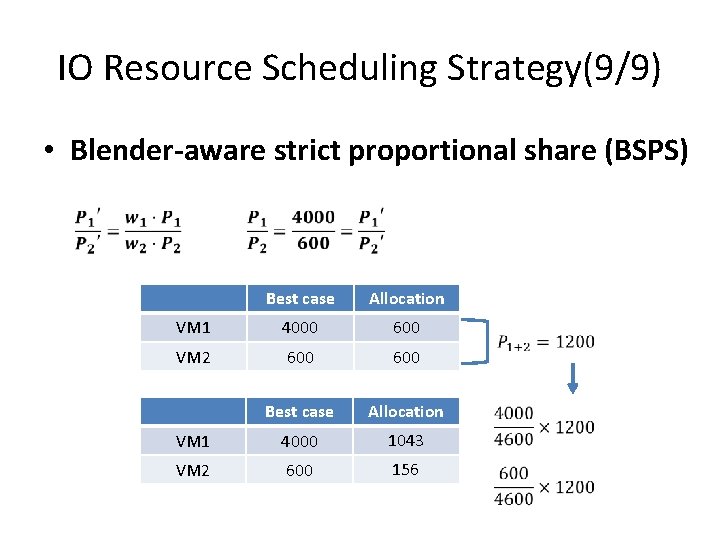

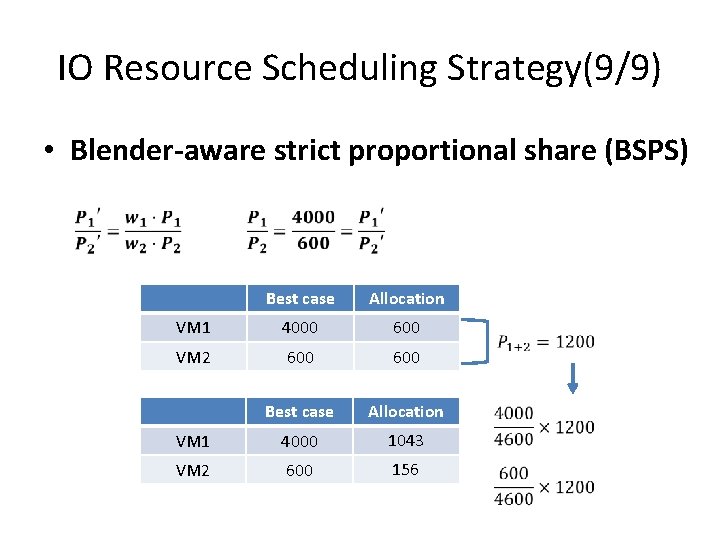

IO Resource Scheduling Strategy (9/9)
- Blender-aware strict proportional share (BSPS)

| | Best case | Allocation |
|---|---|---|
| VM 1 | 4000 | 600 |
| VM 2 | 600 | |

| | Best case | Allocation |
|---|---|---|
| VM 1 | 4000 | 1043 |
| VM 2 | 600 | 156 |

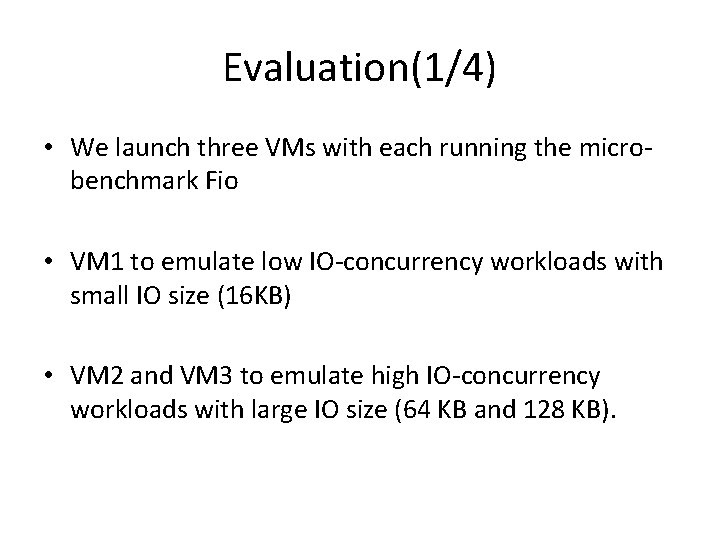

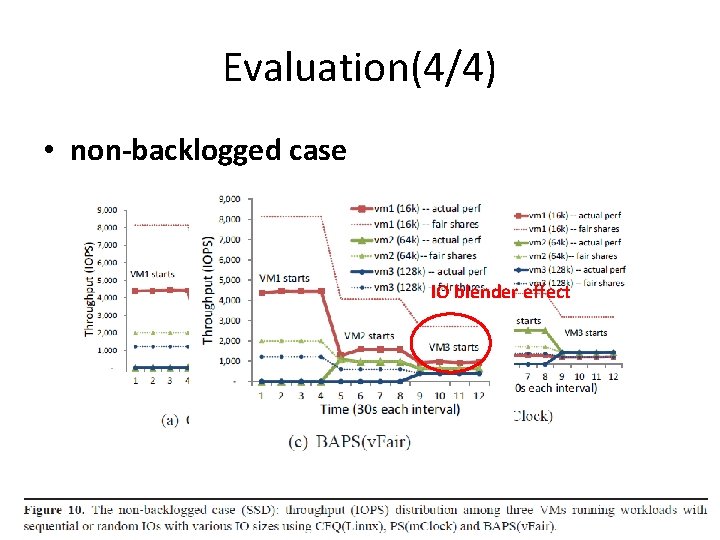

Evaluation (1/4)
- We launch three VMs, each running the Fio microbenchmark:
  - VM 1 emulates a low-IO-concurrency workload with a small IO size (16 KB).
  - VM 2 and VM 3 emulate high-IO-concurrency workloads with large IO sizes (64 KB and 128 KB, respectively).
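
One way to express such a setup is a fio job file per VM. The sketch below renders those job sections; only the block sizes come from the slide. Mapping low and high IO concurrency to `iodepth=1` and `iodepth=32`, the `libaio` engine, `rw=randread`, the 60-second runtime, and the device path `/dev/vdb` are all assumptions for illustration, and `fio_job` is a hypothetical helper.

```python
def fio_job(name: str, block_size_kb: int, iodepth: int) -> str:
    """Render one fio job section (INI format) for a guest VM.

    Only the block size comes from the slide; every other option value here
    is an assumption for illustration.
    """
    return "\n".join(
        [
            f"[{name}]",
            "ioengine=libaio",  # async engine, so iodepth > 1 keeps IOs in flight
            "direct=1",  # bypass the guest page cache
            "rw=randread",  # assumed; the slide gives no direction or locality
            f"bs={block_size_kb}k",
            f"iodepth={iodepth}",  # 1 ~ low IO concurrency, 32 ~ high (assumed)
            "time_based",
            "runtime=60",
            "filename=/dev/vdb",  # hypothetical guest data disk
        ]
    )


for vm, bs_kb, depth in [("vm1", 16, 1), ("vm2", 64, 32), ("vm3", 128, 32)]:
    print(fio_job(vm, bs_kb, depth), end="\n\n")
```

Each section could be saved to its own job file and passed to `fio` inside the corresponding VM.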

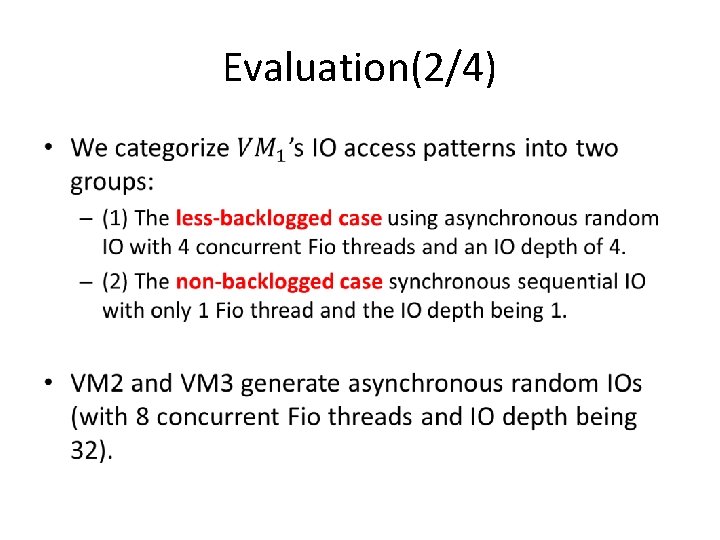

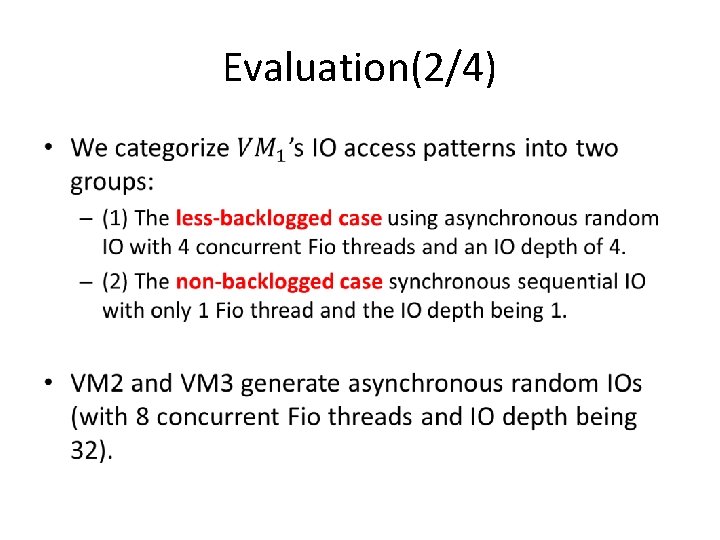

Evaluation (2/4)

Evaluation (3/4)
- Less-backlogged case

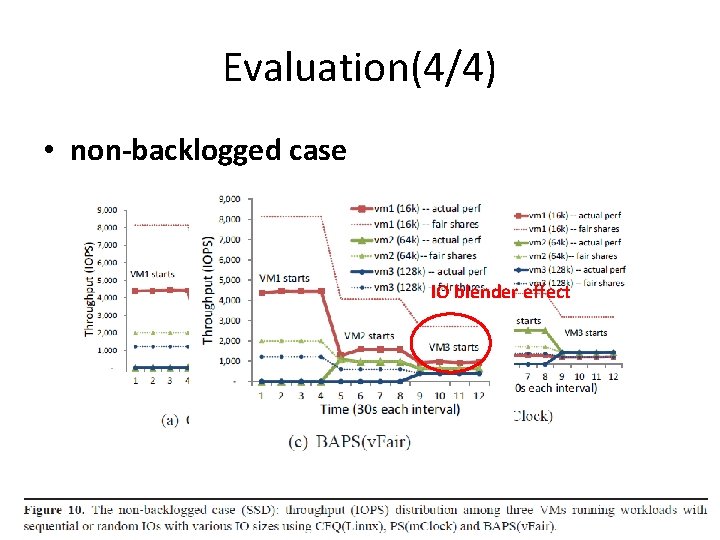

Evaluation (4/4)
- Non-backlogged case
- IO blender effect

Conclusion
- vFair achieves fairness among sharing VMs while maintaining high resource utilization, regardless of the VMs' workloads and IO patterns.
- Our evaluation with micro-benchmarks and realistic applications indicates the effectiveness of vFair compared with state-of-the-art techniques.