V De Florio KULeuven 2002 Basic Concepts Computer

© V. De Florio KULeuven 2002

Course contents
• Basic Concepts
• Computer Design
→ Computer Architectures for AI
• Computer Architectures in Practice

Types of Parallelism
• Focus: parallel systems and languages
• Types of parallelism (//):
  ◦ Functional //: deriving from the logic of a problem solution
  ◦ Data //: based on data structures that can be regarded as a (huge) number of independent units of work
    ▪ Various grains: images, matrices, neurons, genes, associations…
    ▪ Custom data-parallel languages: DAP-Prolog, Connection Machine Lisp and C*

Levels of Functional Parallelism
• At different grains
  ◦ Instruction-level (fine-grained) //
  ◦ Loop-level (middle-grained) //
  ◦ Procedure-level (large-grained) //
  ◦ Program-level (coarse-grained) //
• ILP and LLP: discussed in Part 2
• Procedure-level //: the unit executed in // is a procedure
• Program-level //: basic units are the user programs (inherently independent)

Types of parallel architectures
• Flynn’s taxonomy
  ◦ Single stream of instructions, executed by a single processor (SISD)
  ◦ Single stream of instructions, executed by multiple processors (SIMD)
  ◦ Multiple streams of instructions, executed by multiple processors (MIMD)
  ◦ Multiple streams of instructions, executed by a single processor (MISD)
    ▪ Only defined for the sake of symmetry (though…)
    ▪ Or for dependability strategies based on time redundancy
      ✓ Versions
      ✓ Redundant computations with voting
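The "redundant computations with voting" item can be illustrated with a small C sketch. It assumes the same computation has been run three times (for example, three versions executed one after another on one processor) and that the results are plain integers; the function name `vote3` and the `ok` flag are hypothetical names chosen for this illustration.

```c
/* Majority vote over three redundant results.
 * Returns the value that at least two runs agree on and sets *ok to 1.
 * If all three results differ, sets *ok to 0 and returns r1 as a placeholder. */
int vote3(int r1, int r2, int r3, int *ok)
{
    *ok = 1;
    if (r1 == r2 || r1 == r3)
        return r1;
    if (r2 == r3)
        return r2;
    *ok = 0;          /* no majority: the fault cannot be masked */
    return r1;
}
```

A single faulty run is masked by the other two; two differing faulty runs are detected but not corrected.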

Classification
• Data parallel (in the following)
  ◦ Vector architectures
  ◦ Associative and neural
  ◦ SIMD
  ◦ Systolic
• Functional parallel
  ◦ ILP
    ▪ VLIW and superscalar (Part 2)
  ◦ Thread-level //
  ◦ Process-level //
    ▪ Distributed-memory MIMD (multi-computers)
    ▪ Shared-memory MIMD (multi-processors)

Data-parallel architectures
• Source: part III of Sima/Fountain/Kacsuk
• Main idea: the computer works sequentially, but on a parallel data set
  ◦ E.g., on a per-matrix vs. a per-byte basis (numerical analysis)
  ◦ E.g., portions of images (image processing, machine vision, pattern recognition…)
  ◦ E.g., portions of databases (associative and neural)

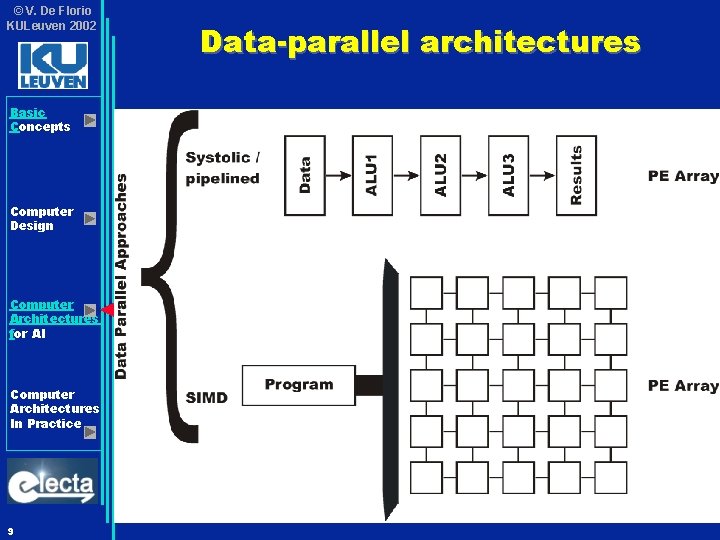

Data-parallel architectures
• This corresponds to four “classes” of data-parallel architectures:
  ◦ SIMD
  ◦ Systolic and pipelined
  ◦ Vector
  ◦ Associative and Neural
• The rest of this part focuses on SIMD, systolic/pipelined, associative and neural architectures

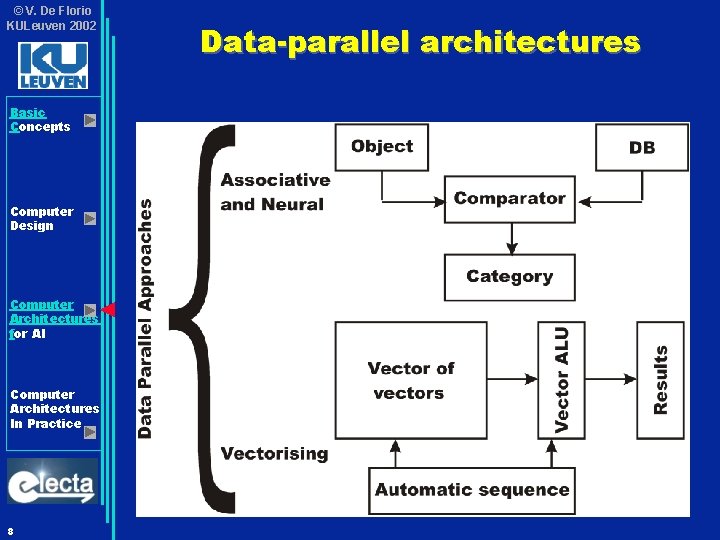



Data-parallel architectures
• Data-parallel architectures
  ◦ SIMD
  ◦ Associative and Neural
  ◦ Systolic and pipelined

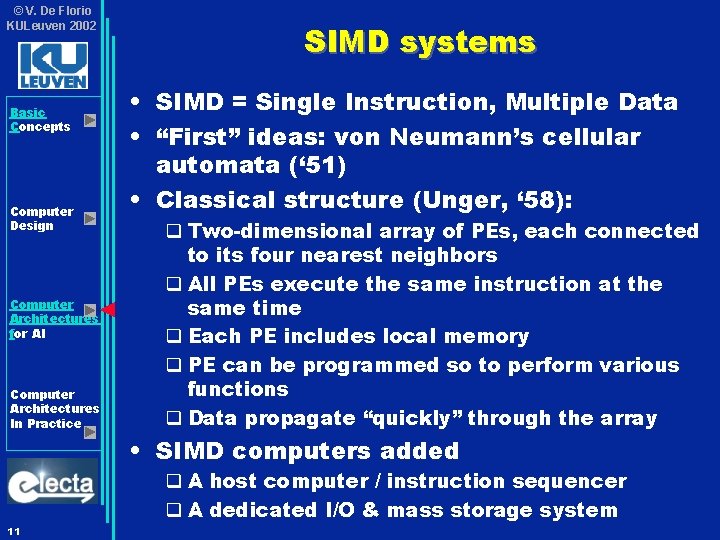

SIMD systems
• SIMD = Single Instruction, Multiple Data
• “First” ideas: von Neumann’s cellular automata (’51)
• Classical structure (Unger, ’58):
  ◦ Two-dimensional array of PEs, each connected to its four nearest neighbors
  ◦ All PEs execute the same instruction at the same time
  ◦ Each PE includes local memory
  ◦ PEs can be programmed to perform various functions
  ◦ Data propagate “quickly” through the array
• SIMD computers added
  ◦ A host computer / instruction sequencer
  ◦ A dedicated I/O & mass storage system
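The classical structure above can be sketched as a sequential C simulation. This is only an illustration under stated assumptions: the 4 x 4 array size, the function name `simd_step` and the chosen "instruction" (each PE replaces its local value with the sum of its four nearest neighbors) are hypothetical; a real SIMD array performs the update in all PEs at once, driven by the instruction sequencer.

```c
#include <string.h>

#define ROWS 4   /* hypothetical array size, chosen for illustration */
#define COLS 4

/* One lock-step "instruction": every PE replaces its local value with the
 * sum of its four nearest neighbors (missing neighbors at the border read 0).
 * The loops only simulate what the array does in parallel. */
void simd_step(int pe[ROWS][COLS])
{
    int next[ROWS][COLS];

    for (int r = 0; r < ROWS; r++) {
        for (int c = 0; c < COLS; c++) {
            int north = (r > 0)        ? pe[r - 1][c] : 0;
            int south = (r < ROWS - 1) ? pe[r + 1][c] : 0;
            int west  = (c > 0)        ? pe[r][c - 1] : 0;
            int east  = (c < COLS - 1) ? pe[r][c + 1] : 0;
            next[r][c] = north + south + west + east;
        }
    }
    /* Commit all results together, as if every PE finished simultaneously. */
    memcpy(pe, next, sizeof next);
}
```

Writing into a separate `next` array is what keeps the simulation faithful to lock-step execution: no PE ever sees a neighbor's already-updated value.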

SIMD systems

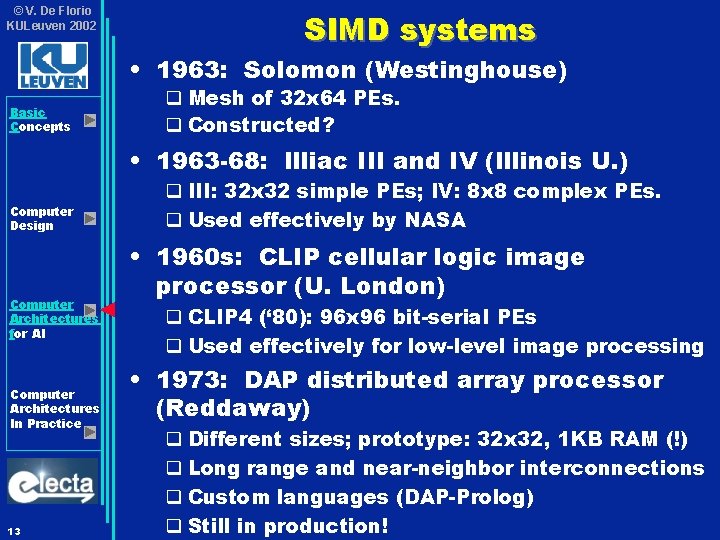

SIMD systems
• 1963: Solomon (Westinghouse)
  ◦ Mesh of 32 x 64 PEs
  ◦ Constructed?
• 1963-68: Illiac III and IV (Illinois U.)
  ◦ III: 32 x 32 simple PEs; IV: 8 x 8 complex PEs
  ◦ Used effectively by NASA
• 1960s: CLIP cellular logic image processor (U. London)
  ◦ CLIP 4 (’80): 96 x 96 bit-serial PEs
  ◦ Used effectively for low-level image processing
• 1973: DAP distributed array processor (Reddaway)
  ◦ Different sizes; prototype: 32 x 32, 1 KB RAM (!)
  ◦ Long-range and near-neighbor interconnections
  ◦ Custom languages (DAP-Prolog)
  ◦ Still in production!

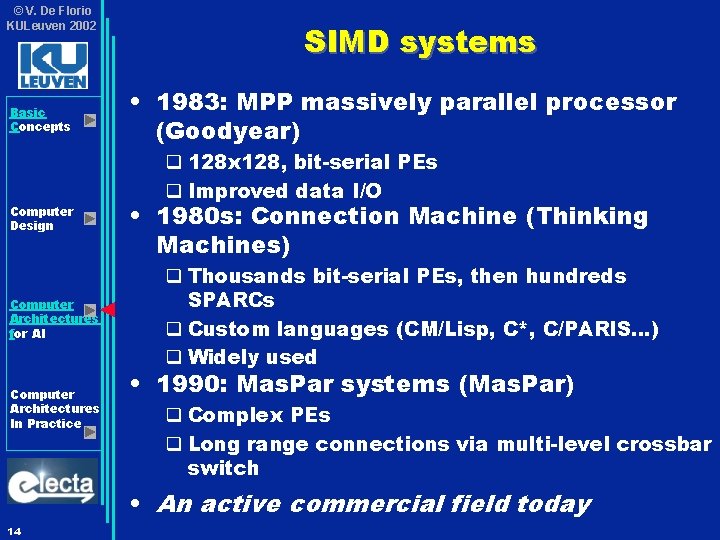

SIMD systems
• 1983: MPP massively parallel processor (Goodyear)
  ◦ 128 x 128 bit-serial PEs
  ◦ Improved data I/O
• 1980s: Connection Machine (Thinking Machines)
  ◦ Thousands of bit-serial PEs, then hundreds of SPARCs
  ◦ Custom languages (CM/Lisp, C*, C/PARIS…)
  ◦ Widely used
• 1990: MasPar systems (MasPar)
  ◦ Complex PEs
  ◦ Long-range connections via multi-level crossbar switch
• An active commercial field today

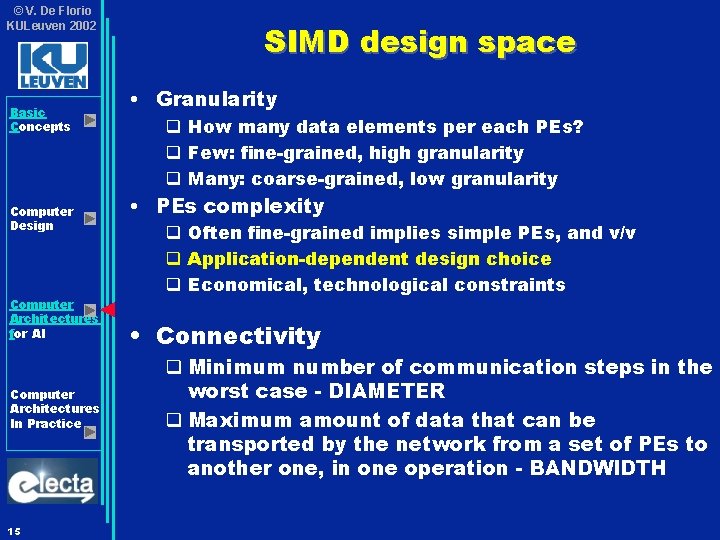

SIMD design space
• Granularity
  ◦ How many data elements per PE?
  ◦ Few: fine-grained, high granularity
  ◦ Many: coarse-grained, low granularity
• PE complexity
  ◦ Fine-grained often implies simple PEs, and vice versa
  ◦ Application-dependent design choice
  ◦ Economical, technological constraints
• Connectivity
  ◦ DIAMETER: minimum number of communication steps in the worst case
  ◦ BANDWIDTH: maximum amount of data that the network can transport from one set of PEs to another in one operation
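As a worked illustration of DIAMETER, the following C sketch computes it for two topologies. The function names are hypothetical, and the formulas assume a mesh without wrap-around links and a binary n-cube with 2^n nodes.

```c
/* Diameter of a rows x cols mesh without wrap-around links:
 * the worst case is corner to opposite corner. */
unsigned mesh_diameter(unsigned rows, unsigned cols)
{
    return (rows - 1) + (cols - 1);
}

/* Diameter of a binary n-cube (2^n nodes): two nodes differ in at most
 * n address bits, and each step corrects one bit. */
unsigned hypercube_diameter(unsigned dimensions)
{
    return dimensions;
}
```

For instance, a 128 x 128 mesh (16,384 PEs) has diameter 254, whereas a 14-dimensional binary cube with the same number of nodes has diameter 14. This is why long-range connections matter for large arrays.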

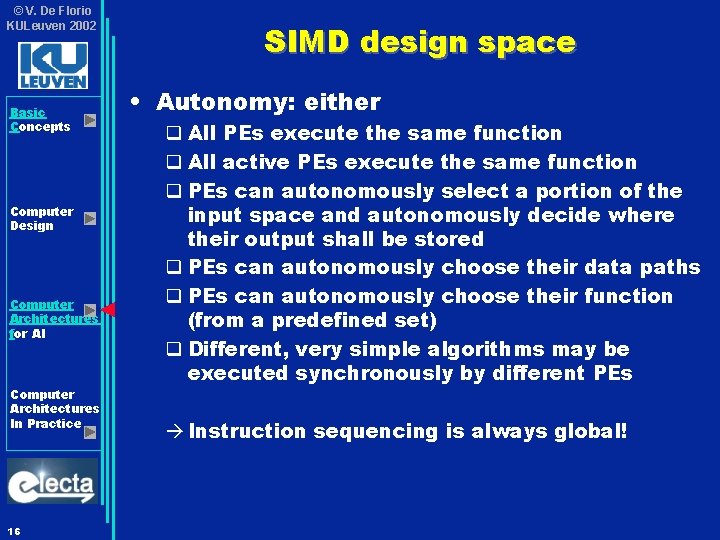

SIMD design space
• Autonomy: either
  ◦ All PEs execute the same function
  ◦ All active PEs execute the same function
  ◦ PEs can autonomously select a portion of the input space and autonomously decide where their output shall be stored
  ◦ PEs can autonomously choose their data paths
  ◦ PEs can autonomously choose their function (from a predefined set)
  ◦ Different, very simple algorithms may be executed synchronously by different PEs
→ Instruction sequencing is always global!
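The second autonomy level ("all active PEs execute the same function") can be sketched in C with an activity flag per PE. This is a minimal sequential simulation; the function name `masked_add`, the one-integer-per-PE layout and the broadcast instruction ("add k") are assumptions made for the illustration.

```c
#include <stddef.h>

/* Broadcast one instruction ("add k") to all n PEs. Only PEs whose
 * activity flag is set execute it; the others idle for this step.
 * Instruction sequencing stays global: every PE sees the same instruction. */
void masked_add(int *data, const unsigned char *active, size_t n, int k)
{
    for (size_t i = 0; i < n; i++) {
        if (active[i])
            data[i] += k;
    }
}
```

Data-dependent behavior is obtained by letting each PE compute its own flag first (for example, from a comparison on its local data), then broadcasting the instruction.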

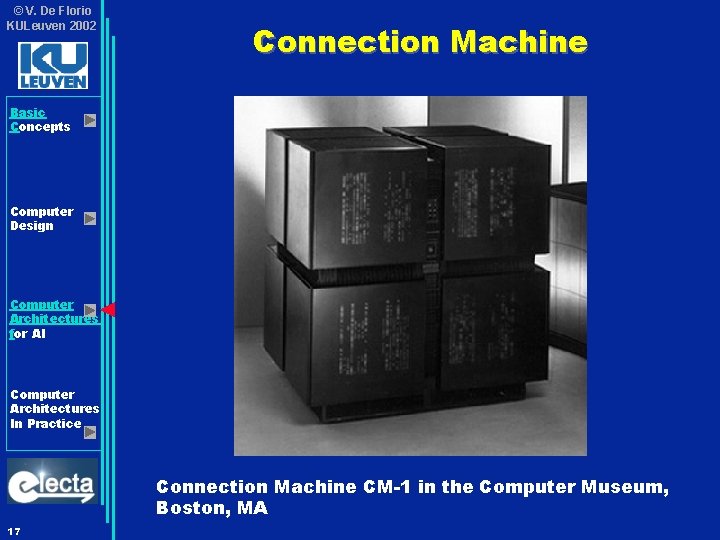

Connection Machine
Connection Machine CM-1 in the Computer Museum, Boston, MA

Connection Machine
• The Connection Machine was the first commercial computer designed expressly to work on simulating intelligence and life
• A massively parallel supercomputer with up to 65,536 processors
• Conceived by Danny Hillis while he was a graduate student under Marvin Minsky at the MIT Artificial Intelligence Lab

Connection Machine
• Modelled after the structure of a human brain: rather than relying on a single powerful processor to perform calculations one after another, the data was distributed over the tens of thousands of processors, all of which could perform calculations simultaneously
• The structures for communication and transfer of data between processors could change as needed depending on the nature of the problem, making the mutability of the connections between processors more important than the processors themselves, hence the name “Connection Machine”
  ◦ Initially, 1-bit processors!

Connection Machine
• CM-1: fine-grained
• CM-5: coarse-grained:
  ◦ Internal 64-bit organization
  ◦ 40 MHz (now much higher…)
  ◦ Data and control networks
  ◦ 4 FP vector processors with separate data paths to memory
  ◦ 32 MB memory (now much more…)
  ◦ Parallel versions of C and Fortran

Connection Machine
• Custom programming languages
  ◦ C/Paris (common C plus Paris library)
    ▪ Each processor executes the same action and communicates with its nearest neighbours
  ◦ C* (SIMD extension of C)
  ◦ *Lisp (SIMD extension of the functional language Lisp)
• The higher the number of processors, the lower the system reliability
  ◦ MTBF very high

Connection Machine
• References
  ◦ http://mission.base.com/tamiko/theory/cm_txts/di-frames.html
  ◦ Hillis, W. Daniel. The Connection Machine, MIT Press, Cambridge, MA, 1985
  ◦ Hillis, W. Daniel. "The Connection Machine," Scientific American, Vol. 256, June 1987, pp. 108-115

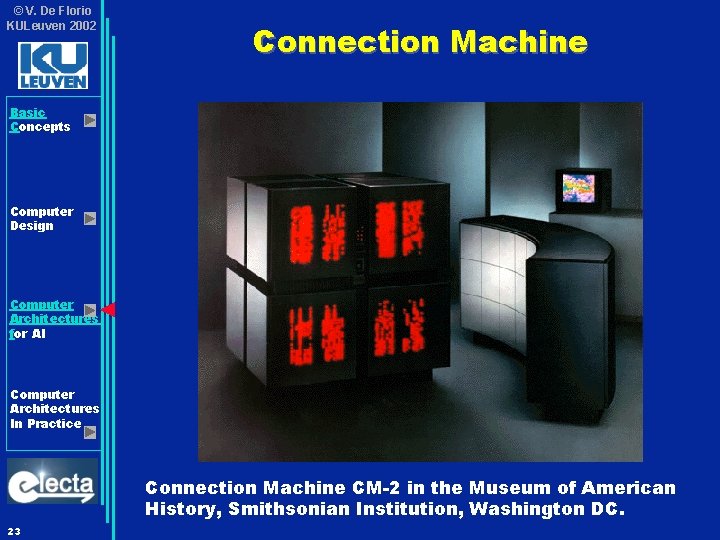

Connection Machine
Connection Machine CM-2 in the Museum of American History, Smithsonian Institution, Washington DC

Data-parallel architectures
• Data-parallel architectures
  ◦ SIMD
  ◦ Associative and Neural
  ◦ Systolic and pipelined

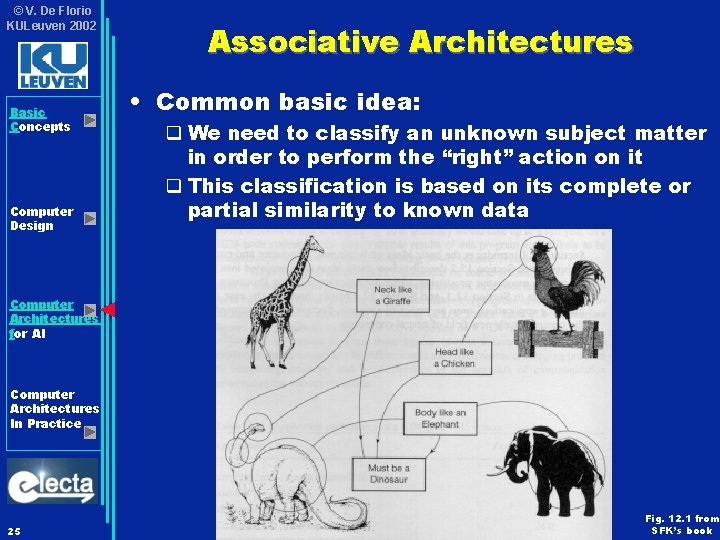

Associative Architectures
• Common basic idea:
  ◦ We need to classify an unknown subject matter in order to perform the “right” action on it
  ◦ This classification is based on its complete or partial similarity to known data
Fig. 12.1 from SFK’s book

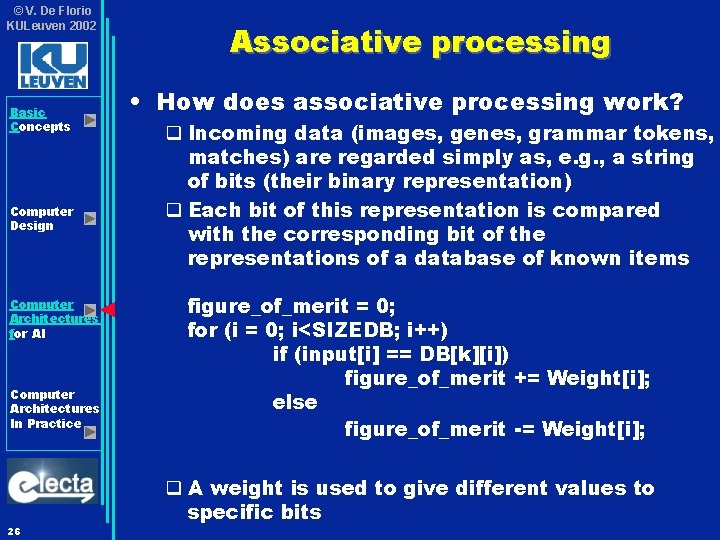

Associative processing
• How does associative processing work?
  ◦ Incoming data (images, genes, grammar tokens, matches) are regarded simply as, e.g., a string of bits (their binary representation)
  ◦ Each bit of this representation is compared with the corresponding bit of the representation of each known item in a database; for item k:

        /* SIZEDB is used here as the number of bits per item; k selects the database item */
        figure_of_merit = 0;
        for (i = 0; i < SIZEDB; i++) {
            if (input[i] == DB[k][i])
                figure_of_merit += Weight[i];   /* matching bit: reward */
            else
                figure_of_merit -= Weight[i];   /* differing bit: penalty */
        }

  ◦ A weight is used to give different values to specific bits

Associative processing
• Key choice: data item of the basic matching process
  ◦ Working with bits may be more difficult and less appropriate than working with more complex data items, e.g.:
    ▪ Images -> pixels
    ▪ Spell checker -> letters of the alphabet
    ▪ Speech recognition -> phonetic atoms

Parallel Associative Processing
• Where is parallelism in associative processing?
• At multiple levels
  ◦ One may compare all letters of each word in parallel
  ◦ Or do the same, but comparing sets of words in parallel
  ◦ Or do the same, but with multiple databases
  ◦ …and so forth
→ This is like working with parallelism at multiple levels:
  ◦ ILP
  ◦ Loop level
  ◦ Process level, etc.
• But the steady state can be sustained more easily
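The matching process and its parallelism can be sketched in C as follows. This is a sequential illustration, not an associative-hardware implementation: the names `NBITS`, `figure_of_merit` and `best_match`, the one-byte-per-bit encoding and the flat database layout are all assumptions. The point is that every iteration of both loops is independent of the others, so an associative machine may evaluate them simultaneously.

```c
#include <stddef.h>

#define NBITS 8   /* hypothetical: number of bits per item */

/* Weighted match score of the input against one stored item
 * (one array entry per bit, holding 0 or 1). */
int figure_of_merit(const unsigned char *input, const unsigned char *item,
                    const int *weight)
{
    int merit = 0;
    for (size_t i = 0; i < NBITS; i++)          /* bit level: independent */
        merit += (input[i] == item[i]) ? weight[i] : -weight[i];
    return merit;
}

/* Index of the best-matching item. db holds n_items (>= 1) items of NBITS
 * entries each, stored one after another. */
size_t best_match(const unsigned char *input, const unsigned char *db,
                  size_t n_items, const int *weight)
{
    size_t best = 0;
    int best_merit = figure_of_merit(input, db, weight);

    for (size_t k = 1; k < n_items; k++) {      /* item level: independent */
        int merit = figure_of_merit(input, db + k * NBITS, weight);
        if (merit > best_merit) {
            best_merit = merit;
            best = k;
        }
    }
    return best;
}
```

Only the final selection of the maximum needs the scores of all items; everything before it can proceed in parallel at the bit level, the item level, or across several databases.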

The Associative String Processor
• The Brunel ASP: a massively parallel, fine-grain associative architecture
• Currently developed by Aspex Microsystems Ltd., UK
• Building block: the ASP module
• A set of ASP modules is attached to a control bus and data communication networks
• The latter can be used to set up general-purpose and application-specific topologies
  ◦ Crossbar, mesh, binary n-cube, …

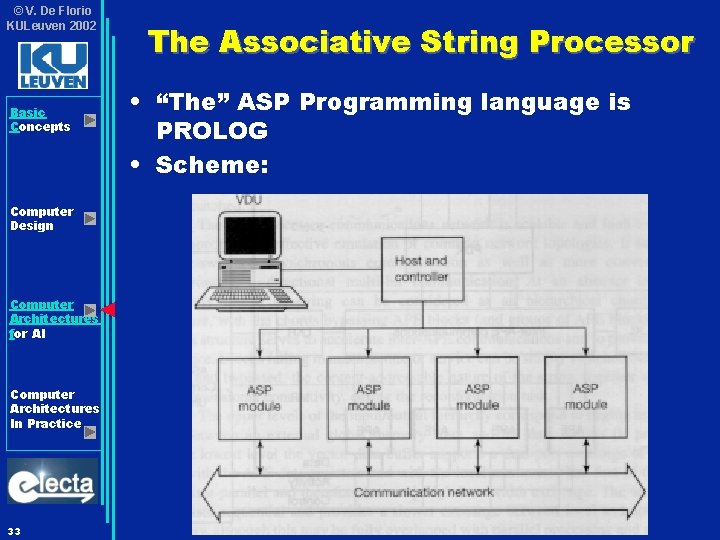

The Associative String Processor
• “The” ASP programming language is PROLOG
• Scheme:

Neural Computing
• Brief description (NNs are part of other courses in the MAI programme)
• Neural computers are trained rather than programmed
• After training, they can, e.g., classify an input datum as belonging to a certain class out of a set of possible classes
• Paradigms of Neural Computing include
  1. Classification
    ▪ Reduction in the quantity of information necessary to classify some entities
    ▪ Training set = (parameters, classifications)*
    ▪ Processing = (parametric description, ?)
    ▪ Multi-layer Perceptron (Rosenblatt, ’58)

Neural Computing
  2. Transformation
    ▪ Moving from one representation to another (e.g., converting a written text into speech)
    ▪ Same amount of data, but with a different representation
  3. Minimization
    ▪ Hopfield networks (Hopfield, ’82)
    ▪ A net of all-to-all connected binary threshold logic units with weighted connections between units
    ▪ A number of patterns are stored in the network
    ▪ A partial or corrupted pattern is input; the network recognizes it as one of its stored patterns
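The recall behavior of a Hopfield network can be sketched in C. This is a minimal illustration under assumptions not stated on the slide: units take the values +1/-1, patterns are stored with the Hebbian rule (weights are sums of pairwise products, no self-connections), and units are updated one at a time until nothing changes. The names `N`, `hopfield_store` and `hopfield_recall` are hypothetical.

```c
#include <stddef.h>

#define N 8   /* hypothetical number of units */

/* Store patterns with the Hebbian rule:
 * w[i][j] = sum over patterns of p[i] * p[j], with no self-connections. */
void hopfield_store(int w[N][N], int patterns[][N], size_t n_patterns)
{
    for (size_t i = 0; i < N; i++) {
        for (size_t j = 0; j < N; j++) {
            w[i][j] = 0;
            if (i == j)
                continue;
            for (size_t p = 0; p < n_patterns; p++)
                w[i][j] += patterns[p][i] * patterns[p][j];
        }
    }
}

/* Recall: each unit thresholds the weighted sum of the other units' states.
 * Sweeps are repeated until no unit changes (or max_sweeps is reached).
 * Returns the number of sweeps performed. */
int hopfield_recall(int w[N][N], int state[N], int max_sweeps)
{
    int sweeps = 0;
    int changed = 1;

    while (changed && sweeps < max_sweeps) {
        changed = 0;
        for (size_t i = 0; i < N; i++) {
            int h = 0;
            for (size_t j = 0; j < N; j++)
                h += w[i][j] * state[j];
            int updated = (h >= 0) ? 1 : -1;    /* threshold at zero */
            if (updated != state[i]) {
                state[i] = updated;
                changed = 1;
            }
        }
        sweeps++;
    }
    return sweeps;
}
```

Starting from a stored pattern with one unit flipped, the weighted sums of the remaining units pull the flipped unit back, so the state settles on the stored pattern. The weighted sums of all units are independent of each other, which is where a data-parallel machine can help.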

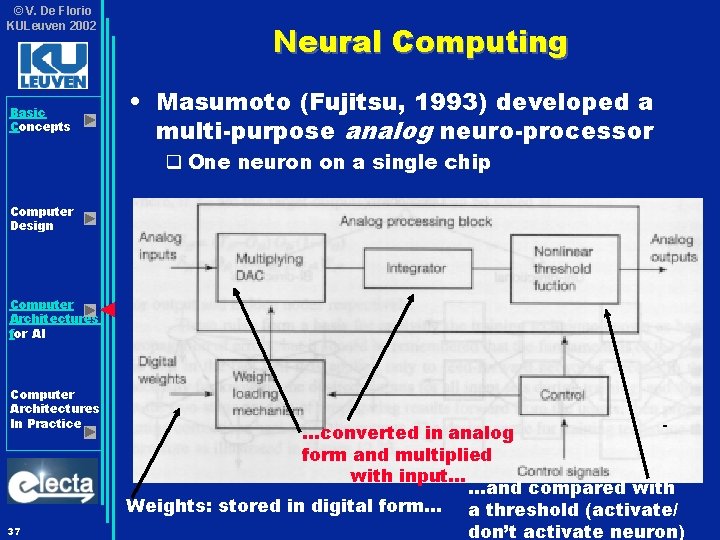

Neural Computing
• Masumoto (Fujitsu, 1993) developed a multi-purpose analog neuro-processor
  ◦ One neuron on a single chip
Figure labels: Weights: stored in digital form… …converted to analog form and multiplied with the input… …and compared with a threshold (activate / don’t activate neuron)

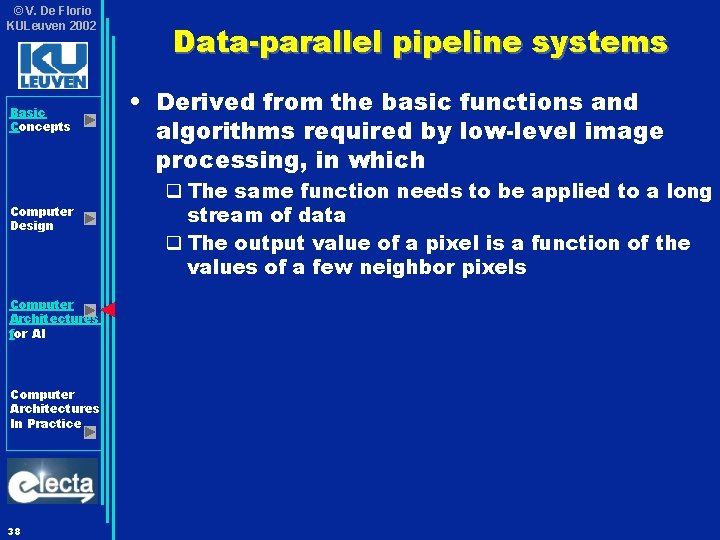

Data-parallel pipeline systems
• Derived from the basic functions and algorithms required by low-level image processing, in which
  ◦ The same function needs to be applied to a long stream of data
  ◦ The output value of a pixel is a function of the values of a few neighbor pixels

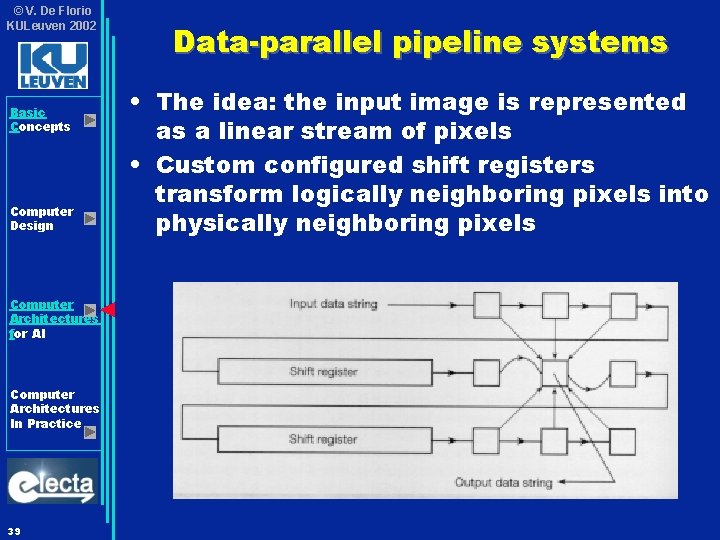

Data-parallel pipeline systems
• The idea: the input image is represented as a linear stream of pixels
• Custom-configured shift registers transform logically neighboring pixels into physically neighboring pixels
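The shift-register idea can be sketched in C. The sketch assumes a row-major pixel stream, a 3 x 3 neighborhood, and a plain sum as the neighborhood function; the image size and the names `W`, `H`, `SR_LEN` and `stream_3x3_sum` are hypothetical. The delay line is just long enough (two image rows plus three pixels) for all nine neighbors of a pixel to sit at fixed tap positions.

```c
#include <string.h>

#define W 5                    /* hypothetical image width  */
#define H 4                    /* hypothetical image height */
#define SR_LEN (2 * W + 3)     /* delay line spanning a 3x3 window */

/* Streams the image pixel by pixel through a shift register and writes the
 * 3x3 neighborhood sum of every interior pixel to out (border stays 0). */
void stream_3x3_sum(const int *in, int *out)
{
    int sr[SR_LEN] = {0};      /* sr[k] = pixel that arrived k steps ago */

    memset(out, 0, sizeof(int) * H * W);
    for (int t = 0; t < H * W; t++) {
        /* Shift by one position and insert the newest pixel. */
        memmove(&sr[1], &sr[0], sizeof(int) * (SR_LEN - 1));
        sr[0] = in[t];

        int r = t / W, c = t % W;      /* position of the newest pixel */
        if (r >= 2 && c >= 2) {        /* a full window is in the register */
            int sum = 0;
            for (int dr = 0; dr < 3; dr++)
                for (int dc = 0; dc < 3; dc++)
                    sum += sr[dr * W + dc];      /* fixed tap positions */
            out[(r - 1) * W + (c - 1)] = sum;    /* window center */
        }
    }
}
```

No address is ever computed for a neighbor: pixels one row apart in the image are exactly W positions apart in the register, which is how logical neighbors become physical neighbors.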

Data-parallel pipeline systems
• Example: the DIP-1 system (Gerritsen, ’78, TU Delft)
• Characteristics:
  ◦ Rather than shift registers, actual computation of the addresses of the neighboring pixels
  ◦ A mixture of fixed-function and programmable elements
  ◦ A set of binary-function, 3 x 3 neighborhood look-up tables
• Performance: much faster than leading-edge general-purpose workstations of 1978, for image processing only
• Usability:
  ◦ Too specialized
  ◦ Too difficult to program (microprogrammed machine)

Systolic architectures
• A specialization of SIMD, devised by Kung and Leiserson in 1978
• Named after an analogy with the pumping action of the heart:
• Data are “pumped” through the system, and fed to PEs that produce a stream of results
• What is systolic computing?

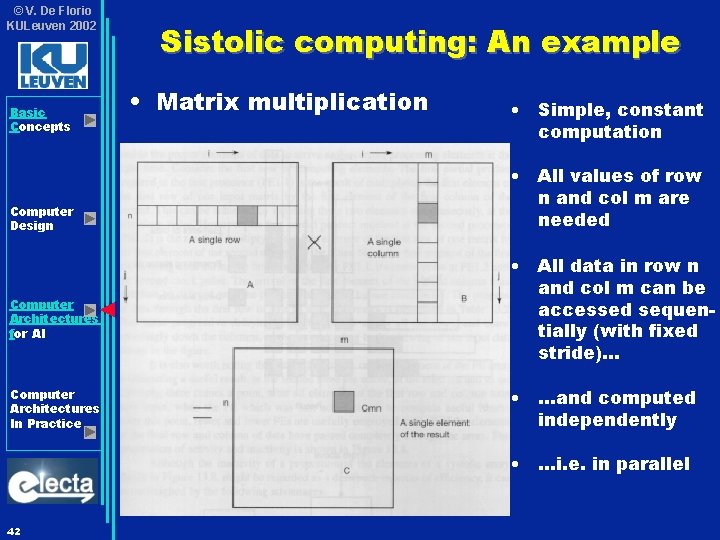

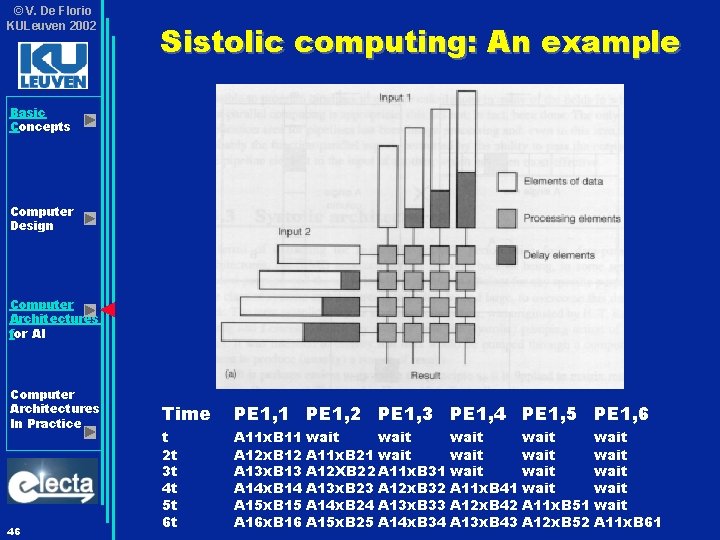

Systolic computing: An example
• Matrix multiplication
• Simple, constant computation
• All values of row n and column m are needed
• All data in row n and column m can be accessed sequentially (with fixed stride)…
• …and computed independently
• …i.e., in parallel

Systolic computing: An example
• Systolic computer:
  ◦ A square matrix of PEs is available
  ◦ Simple PE = multiplier, adder, storage unit
  ◦ Fixed-function PEs
  ◦ Each PE is only connected to its nearest neighbors
  ◦ Connections are unidirectional
  ◦ The clock pulses are the only control signal, pacing all PEs

Systolic computing: An example
(Figure: a PE with two inputs, i, and one output, o)

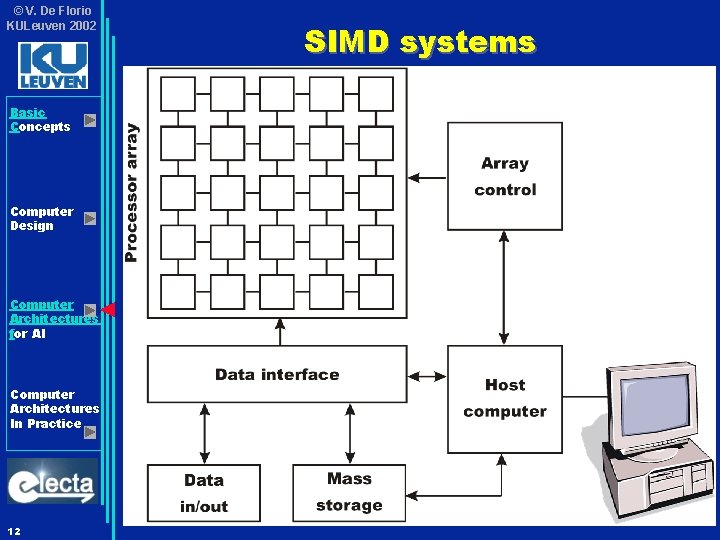

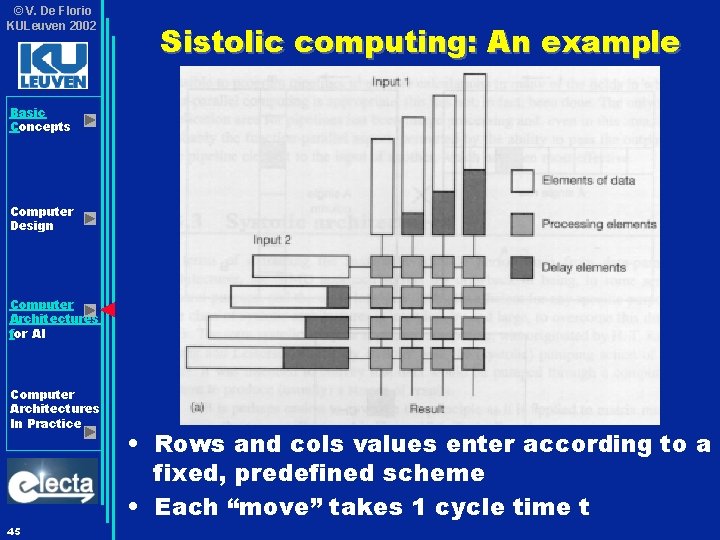

Systolic computing: An example
• Row and column values enter according to a fixed, predefined scheme
• Each “move” takes one cycle time t

Systolic computing: An example

| Time | PE 1,1 | PE 1,2 | PE 1,3 | PE 1,4 | PE 1,5 | PE 1,6 |
|------|--------|--------|--------|--------|--------|--------|
| t | A11 x B11 | wait | wait | | | |
| 2t | A12 x B12 | A11 x B21 | wait | | | |
| 3t | A13 x B13 | A12 x B22 | A11 x B31 | wait | | |
| 4t | A14 x B14 | A13 x B23 | A12 x B32 | A11 x B41 | wait | |
| 5t | A15 x B15 | A14 x B24 | A13 x B33 | A12 x B42 | A11 x B51 | wait |
| 6t | A16 x B16 | A15 x B25 | A14 x B34 | A13 x B43 | A12 x B52 | A11 x B61 |
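The systolic scheme can be simulated in C. The sketch below is one common arrangement, assumed here for illustration: each PE keeps its own partial sum, rows of A enter from the left and columns of B from the top, each delayed by one cycle per row or column, and every PE passes its A value to the right and its B value downwards. It uses the standard definition C = A x B; the 3 x 3 size and the name `systolic_matmul` are hypothetical.

```c
#define N 3   /* hypothetical matrix size */

/* Cycle-by-cycle simulation of an N x N systolic array computing c = a * b.
 * In each cycle every PE multiplies the two values it currently holds,
 * adds the product to its local sum, and hands the values on. */
void systolic_matmul(int a[N][N], int b[N][N], int c[N][N])
{
    int a_reg[N][N] = {{0}};   /* A value currently held by each PE */
    int b_reg[N][N] = {{0}};   /* B value currently held by each PE */

    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++)
            c[i][j] = 0;

    for (int t = 0; t < 3 * N - 2; t++) {
        /* Pump: A values move one PE to the right, B values one PE down. */
        for (int i = 0; i < N; i++)
            for (int j = N - 1; j > 0; j--)
                a_reg[i][j] = a_reg[i][j - 1];
        for (int j = 0; j < N; j++)
            for (int i = N - 1; i > 0; i--)
                b_reg[i][j] = b_reg[i - 1][j];

        /* Feed the edges: row i of A is delayed by i cycles,
         * column j of B by j cycles; zeros pad the skew. */
        for (int i = 0; i < N; i++) {
            int k = t - i;
            a_reg[i][0] = (k >= 0 && k < N) ? a[i][k] : 0;
        }
        for (int j = 0; j < N; j++) {
            int k = t - j;
            b_reg[0][j] = (k >= 0 && k < N) ? b[k][j] : 0;
        }

        /* Every PE performs the same multiply-accumulate. */
        for (int i = 0; i < N; i++)
            for (int j = 0; j < N; j++)
                c[i][j] += a_reg[i][j] * b_reg[i][j];
    }
}
```

With this skew, PE (i, j) receives the matching pair a[i][k] and b[k][j] in cycle i + j + k, which reproduces the diagonal wavefront of activity and the initial waiting PEs seen in the schedule. The whole product takes 3N - 2 cycles.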

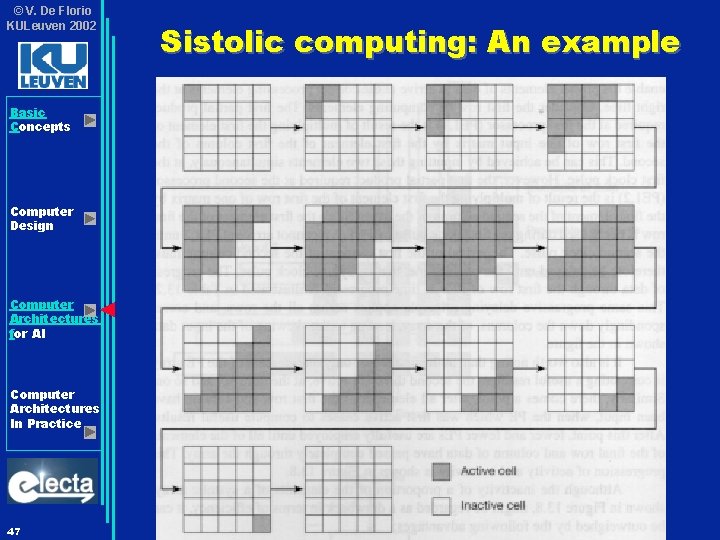

Systolic computing: An example


Q1 from Exam of 2 Sept 03
• A Genetic Algorithm (GA) is an evolutionary algorithm which generates each individual from some encoded form known as a “chromosome”. Chromosomes are combined or mutated to breed new individuals. “Crossover”, the kind of recombination of chromosomes found in reproduction in nature, is often also used in GAs. Here, an offspring's chromosome is created by joining segments chosen alternately from each of two parents' chromosomes, which are of fixed length. The resulting individual can then be evaluated through a fitness function. Only the best-fitting individuals are allowed to become officially part of the next generation. Generations follow generations until the fitness function reaches some threshold value.
• Assume a fixed population of n individuals and a fitness function f.
• Assume that CPUtime(f) = t ms

Q1… (continued)
• Sketch a computer architecture for this family of GAs for n and t very large
• Motivate your choices.

Solution
1. For n and t very large
  ◦ This means “a lot of computations”
    ✓ Is this a data-parallel or a functional-parallel problem?
      → The same function is applied to each work unit, so it is data parallel
    ✓ Which grain for the data?
      → n is very large, so coarse grain seems OK
    ✓ Which grain for the processing units?
      → t is very large, so coarse grain seems OK

Solution
• Architecture: one characterised by a data-parallel structure, able to operate on large chunks of data with powerful PEs
→ Tightly coupled MIMD (multiprocessor) or network of workstations
  1. Each processor does the same work on different data (SIMD scheme mapped onto a MIMD architecture)
  2. Each processor is quite powerful and can “crunch” a large work unit
    • Reduced need for synchronisation
  3. A master PE could dispatch work units on demand
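A rough feel for why coarse work units suit this problem can be obtained with a small C estimate. It rests on strong assumptions that are not part of the exam text: p identical PEs, one fitness evaluation per work unit, and negligible dispatch and communication cost. The function name `generation_time_ms` is hypothetical.

```c
/* Estimated time (ms) to evaluate the fitness of one generation when
 * n individuals, each costing t_ms, are dispatched over p identical PEs.
 * Dispatch and communication costs are assumed to be negligible. */
unsigned long generation_time_ms(unsigned long n, unsigned long t_ms,
                                 unsigned long p)
{
    unsigned long rounds = (n + p - 1) / p;   /* ceil(n / p) */
    return rounds * t_ms;
}
```

For example, with n = 1000, t = 50 ms and p = 16 the estimate is 63 rounds of 50 ms, i.e. 3150 ms, against 50000 ms on a single PE. When t is large, the neglected dispatch cost is small compared with each work unit, which is what makes dispatching on demand attractive.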

An exercise for you
• Now sketch a computer architecture for this family of GAs for n and t very small
• Motivate your choices.

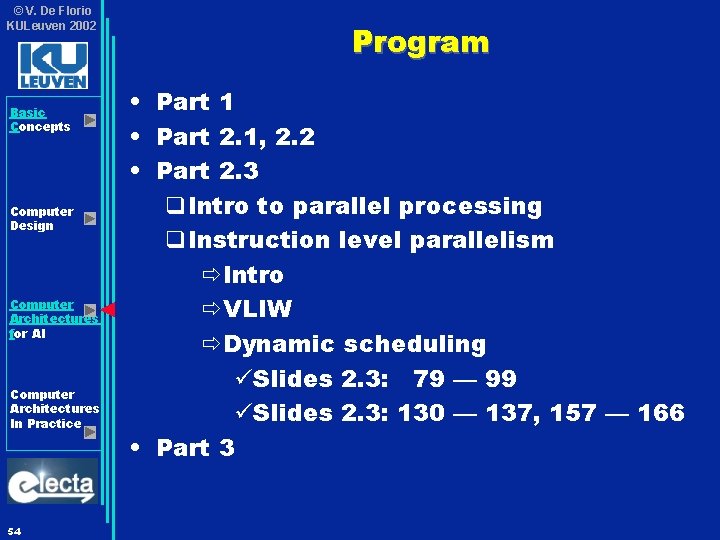

Program
• Part 1
• Part 2.1, 2.2
• Part 2.3
  ◦ Intro to parallel processing
  ◦ Instruction-level parallelism
    ▪ Intro
    ▪ VLIW
    ▪ Dynamic scheduling
      ✓ Slides 2.3: 79-99
      ✓ Slides 2.3: 130-137, 157-166
• Part 3


Course contents
• Basic Microprocessor Provisions
• Computer Design
• Computer Architectures for AI
→ Computer Architectures in Practice


Computer Architectures in Practice
• Trends, properties and selection criteria
  ◦ General-purpose microprocessor:
    ▪ Intel 80x86 series


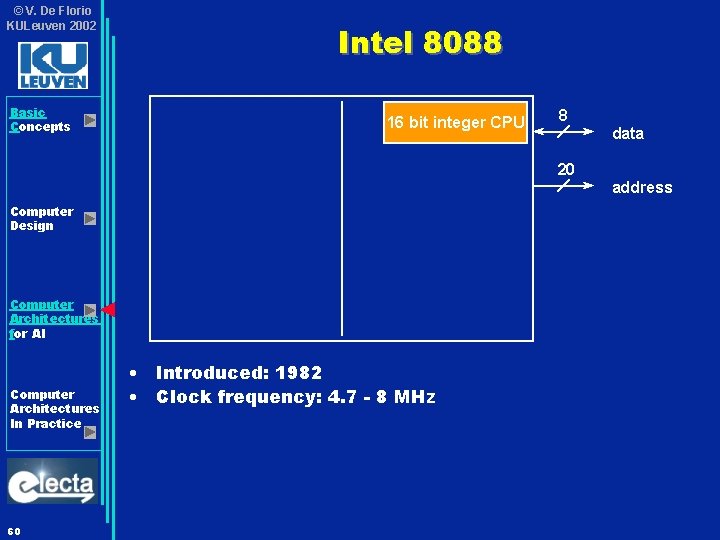

Intel 8088
Block diagram: 16-bit integer CPU; 8-bit data bus, 20-bit address bus
• Introduced: 1982
• Clock frequency: 4.7 - 8 MHz

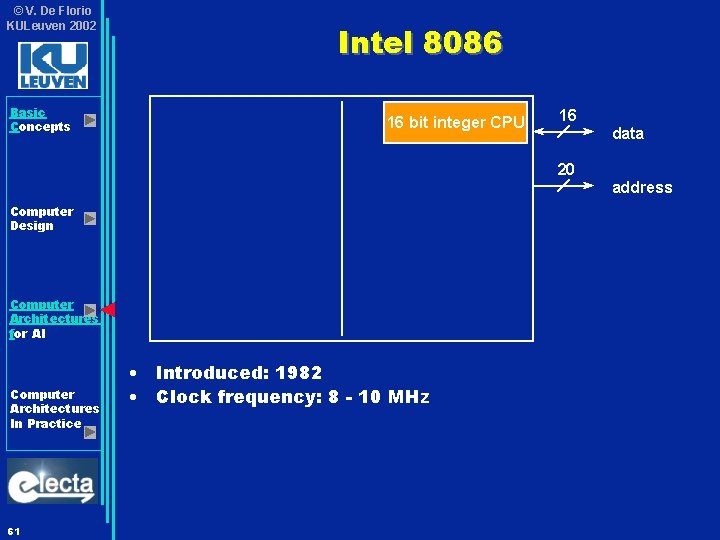

Intel 8086
Block diagram: 16-bit integer CPU; 16-bit data bus, 20-bit address bus
• Introduced: 1982
• Clock frequency: 8 - 10 MHz

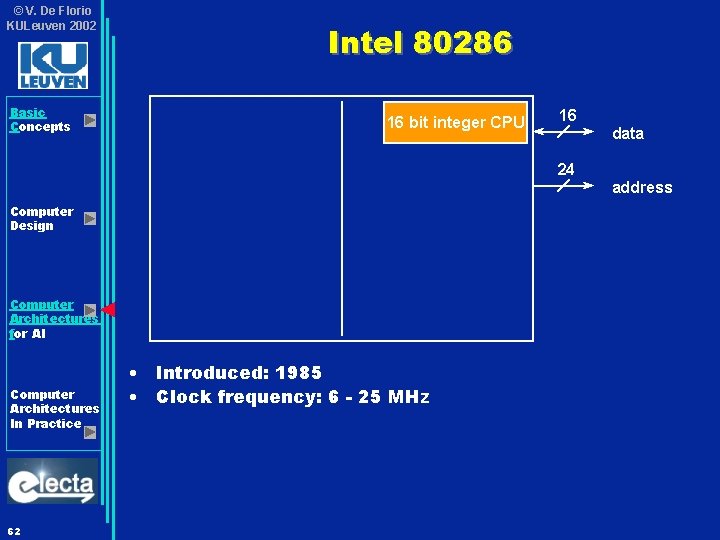

Intel 80286
Block diagram: 16-bit integer CPU; 16-bit data bus, 24-bit address bus
• Introduced: 1985
• Clock frequency: 6 - 25 MHz

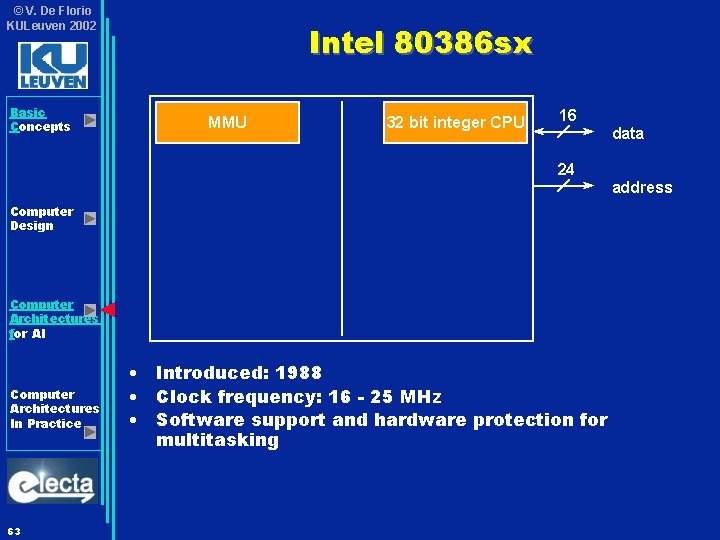

Intel 80386SX
Block diagram: MMU, 32-bit integer CPU; 16-bit data bus, 24-bit address bus
• Introduced: 1988
• Clock frequency: 16 - 25 MHz
• Software support and hardware protection for multitasking

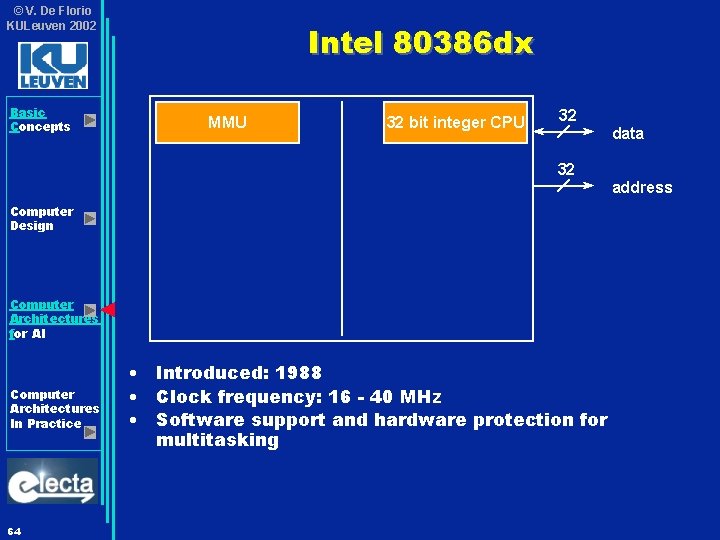

Intel 80386DX
Block diagram: MMU, 32-bit integer CPU; 32-bit data bus, 32-bit address bus
• Introduced: 1988
• Clock frequency: 16 - 40 MHz
• Software support and hardware protection for multitasking
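The address-bus widths quoted for these processors translate directly into the size of the physical address space. A small C sketch, assuming byte addressing (the function name `addressable_bytes` is hypothetical):

```c
#include <stdint.h>

/* Number of bytes addressable with the given number of address lines,
 * assuming byte addressing. Valid for address_lines < 64. */
uint64_t addressable_bytes(unsigned address_lines)
{
    return (uint64_t)1 << address_lines;
}
```

With 20 address lines this gives 1,048,576 bytes (1 Mbyte), with 24 lines 16 Mbyte, and with 32 lines 4 Gbyte.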

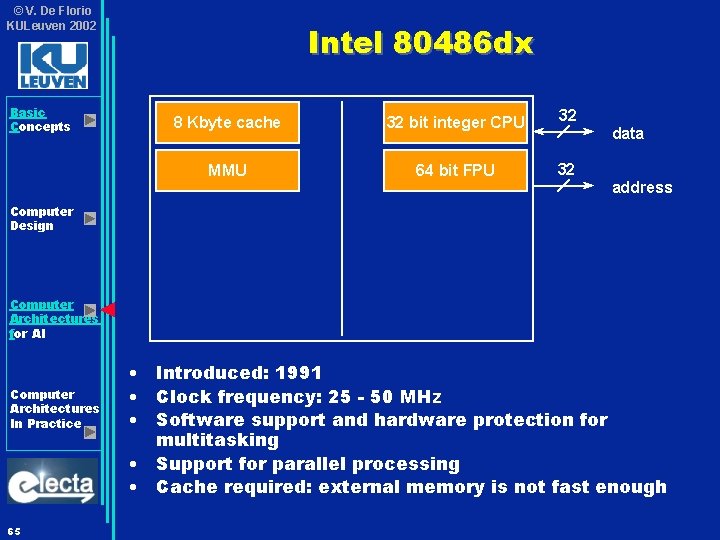

Intel 80486DX
Block diagram: 8 Kbyte cache, 32-bit integer CPU, MMU, 64-bit FPU; 32-bit data bus, 32-bit address bus
• Introduced: 1991
• Clock frequency: 25 - 50 MHz
• Software support and hardware protection for multitasking
• Support for parallel processing
• Cache required: external memory is not fast enough

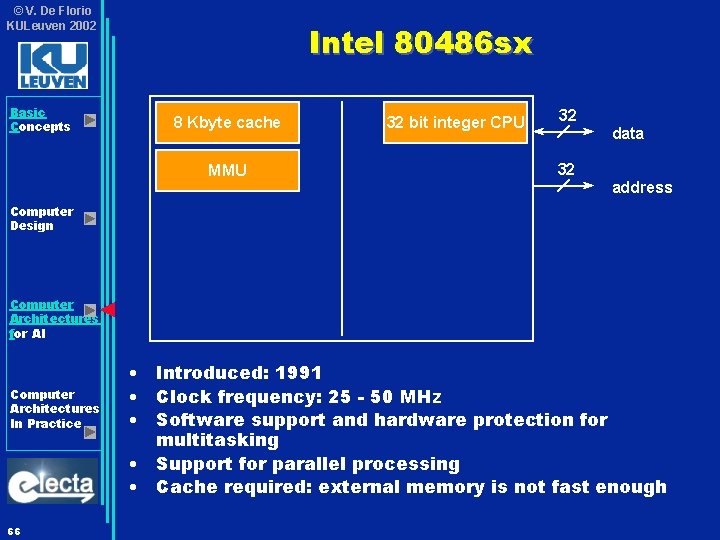

Intel 80486SX
Block diagram: 8 Kbyte cache, MMU, 32-bit integer CPU; 32-bit data bus, 32-bit address bus
• Introduced: 1991
• Clock frequency: 25 - 50 MHz
• Software support and hardware protection for multitasking
• Support for parallel processing
• Cache required: external memory is not fast enough

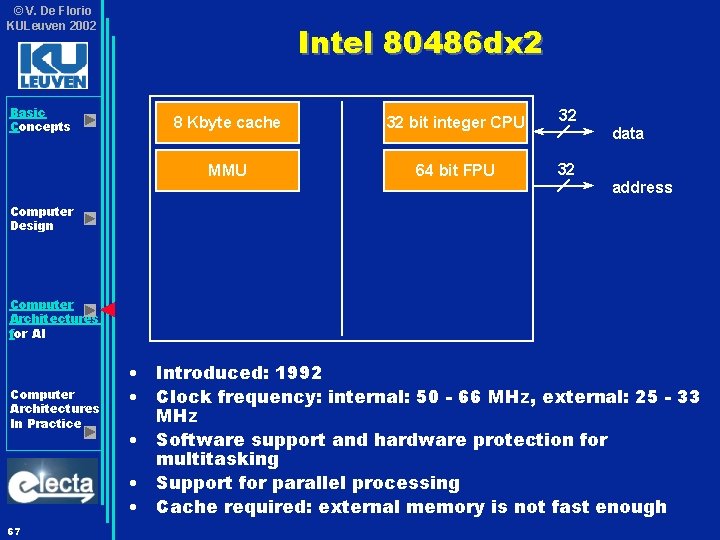

Intel 80486DX2
Block diagram: 8 Kbyte cache, 32-bit integer CPU, MMU, 64-bit FPU; 32-bit data bus, 32-bit address bus
• Introduced: 1992
• Clock frequency: internal: 50 - 66 MHz, external: 25 - 33 MHz
• Software support and hardware protection for multitasking
• Support for parallel processing
• Cache required: external memory is not fast enough

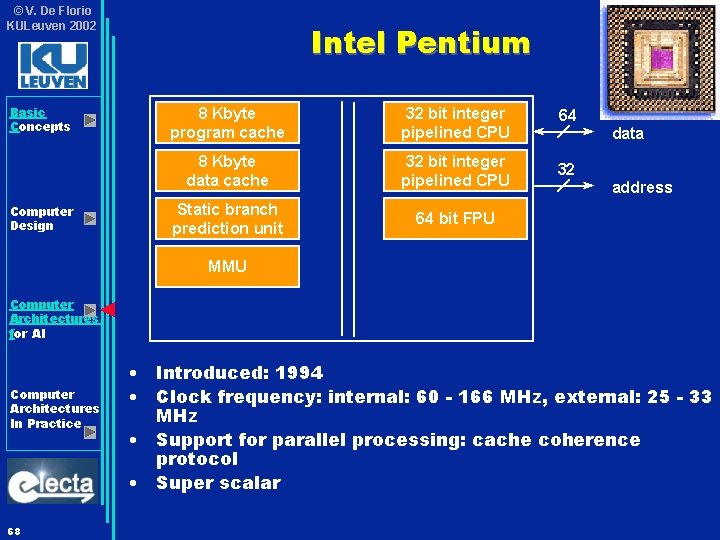

Intel Pentium
Block diagram: 8 Kbyte program cache, 8 Kbyte data cache, two 32-bit integer pipelined CPUs, static branch prediction unit, 64-bit FPU, MMU; 64-bit data bus, 32-bit address bus
• Introduced: 1994
• Clock frequency: internal: 60 - 166 MHz, external: 25 - 33 MHz
• Support for parallel processing: cache coherence protocol
• Superscalar

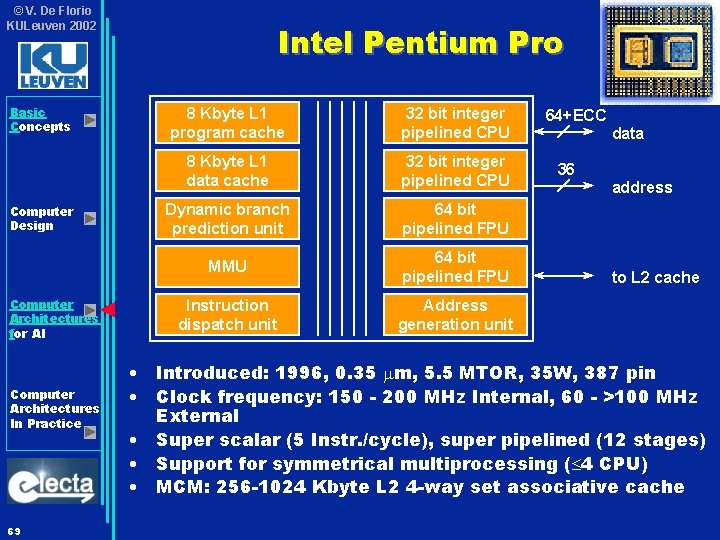

Intel Pentium Pro
Block diagram: 8 Kbyte L1 program cache, 8 Kbyte L1 data cache, two 32-bit integer pipelined CPUs, dynamic branch prediction unit, two 64-bit pipelined FPUs, MMU, instruction dispatch unit, address generation unit; 64+ECC data, 36 address, to L2 cache
• Introduced: 1996, 0.35 µm, 5.5 MTOR, 35 W, 387 pin
• Clock frequency: 150 - 200 MHz internal, 60 - >100 MHz external
• Superscalar (5 instr./cycle), superpipelined (12 stages)
• Support for symmetrical multiprocessing (4 CPU)
• MCM: 256-1024 Kbyte L2 4-way set-associative cache

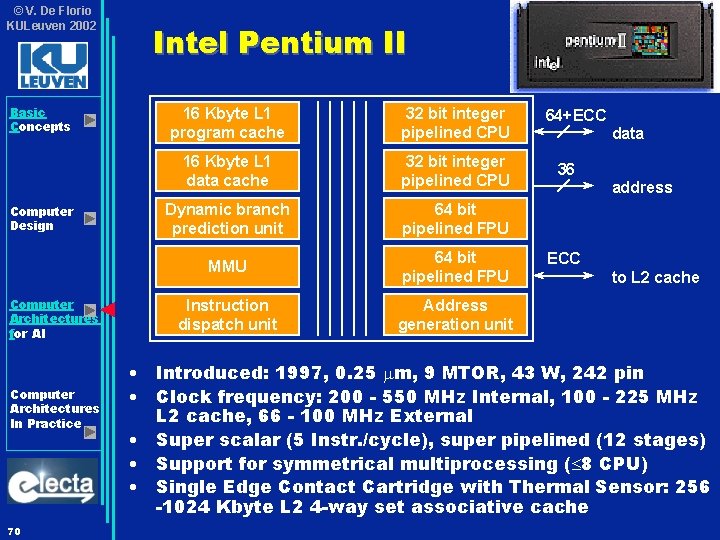

Intel Pentium II
Block diagram: 16 Kbyte L1 program cache, 16 Kbyte L1 data cache, two 32-bit integer pipelined CPUs, dynamic branch prediction unit, two 64-bit pipelined FPUs, MMU, instruction dispatch unit, address generation unit; 64+ECC data, 36 address, ECC to L2 cache
• Introduced: 1997, 0.25 µm, 9 MTOR, 43 W, 242 pin
• Clock frequency: 200 - 550 MHz internal, 100 - 225 MHz L2 cache, 66 - 100 MHz external
• Superscalar (5 instr./cycle), superpipelined (12 stages)
• Support for symmetrical multiprocessing (8 CPU)
• Single Edge Contact Cartridge with thermal sensor: 256-1024 Kbyte L2 4-way set-associative cache

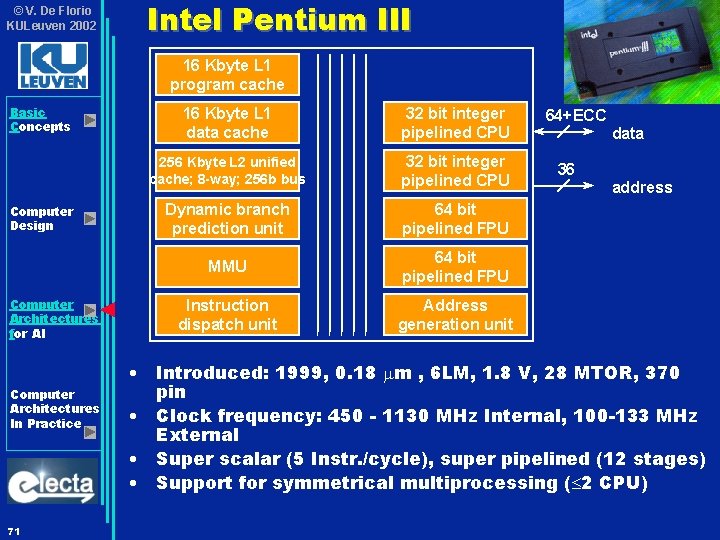

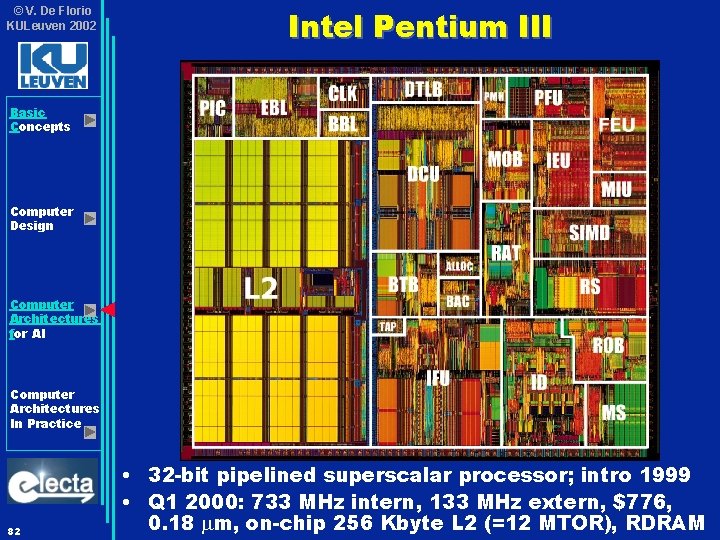

Intel Pentium III
Block diagram: 16 Kbyte L1 program cache, 16 Kbyte L1 data cache, 256 Kbyte L2 unified cache (8-way; 256 b bus), two 32-bit integer pipelined CPUs, dynamic branch prediction unit, two 64-bit pipelined FPUs, MMU, instruction dispatch unit, address generation unit; 64+ECC data, 36 address
• Introduced: 1999, 0.18 µm, 6 LM, 1.8 V, 28 MTOR, 370 pin
• Clock frequency: 450 - 1130 MHz internal, 100 - 133 MHz external
• Superscalar (5 instr./cycle), superpipelined (12 stages)
• Support for symmetrical multiprocessing (2 CPU)

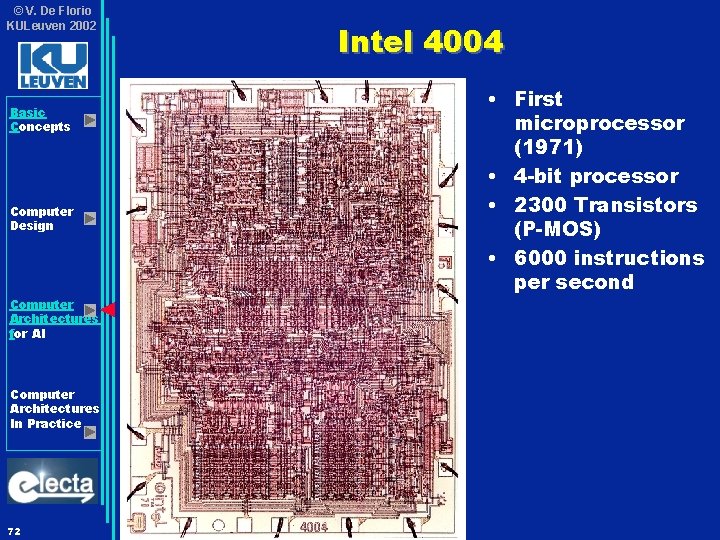

Intel 4004
• First microprocessor (1971)
• 4-bit processor
• 2300 transistors (P-MOS)
• 6000 instructions per second

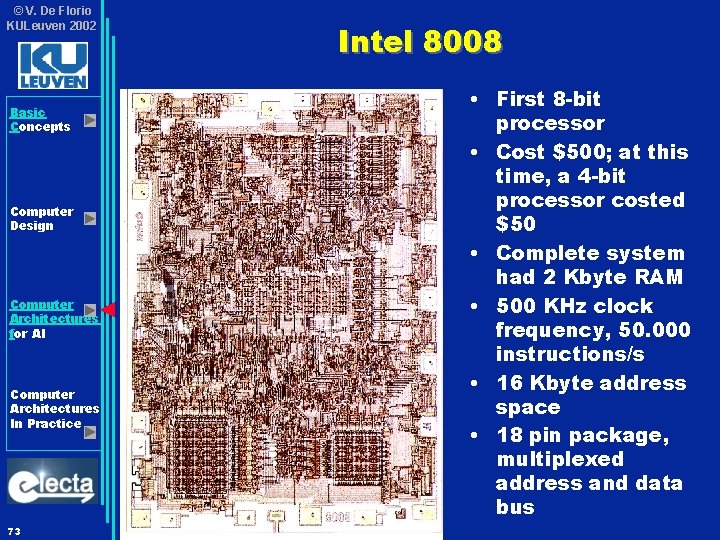

Intel 8008
• First 8-bit processor
• Cost $500; at that time, a 4-bit processor cost $50
• Complete system had 2 Kbyte RAM
• 500 kHz clock frequency, 50,000 instructions/s
• 16 Kbyte address space
• 18-pin package, multiplexed address and data bus

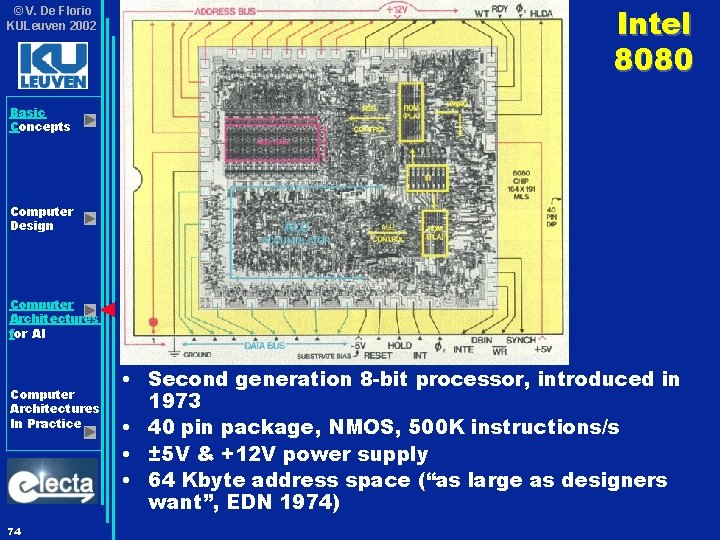

Intel 8080
• Second-generation 8-bit processor, introduced in 1973
• 40-pin package, NMOS, 500 K instructions/s
• ±5 V & +12 V power supply
• 64 Kbyte address space (“as large as designers want”, EDN 1974)

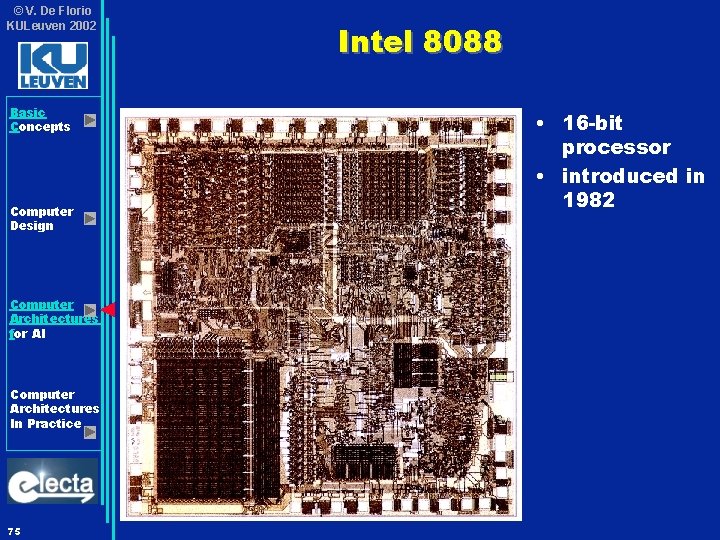

Intel 8088
• 16-bit processor
• Introduced in 1982

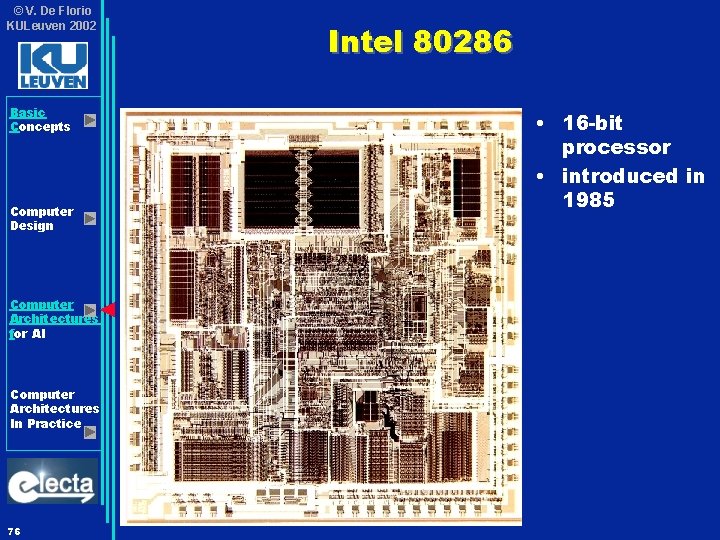

Intel 80286
• 16-bit processor
• Introduced in 1985

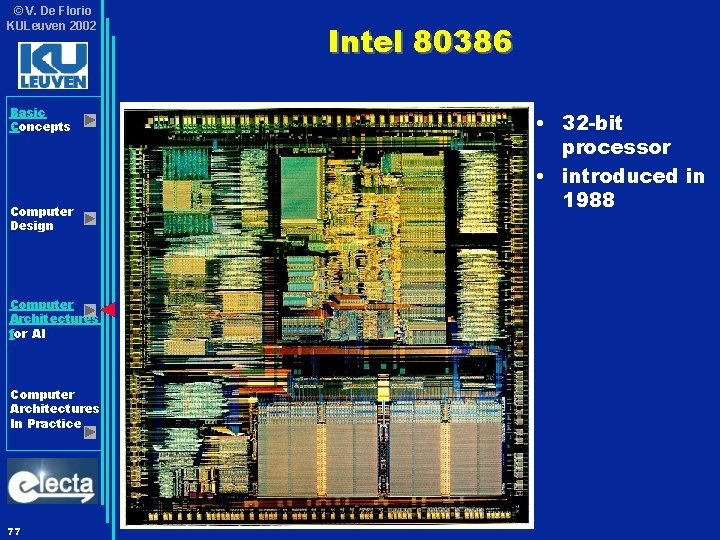

Intel 80386
• 32-bit processor
• Introduced in 1988

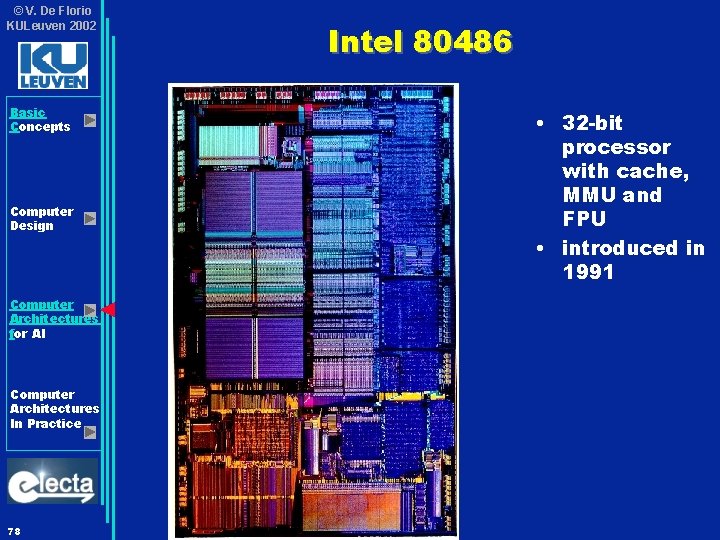

Intel 80486
• 32-bit processor with cache, MMU and FPU
• Introduced in 1991

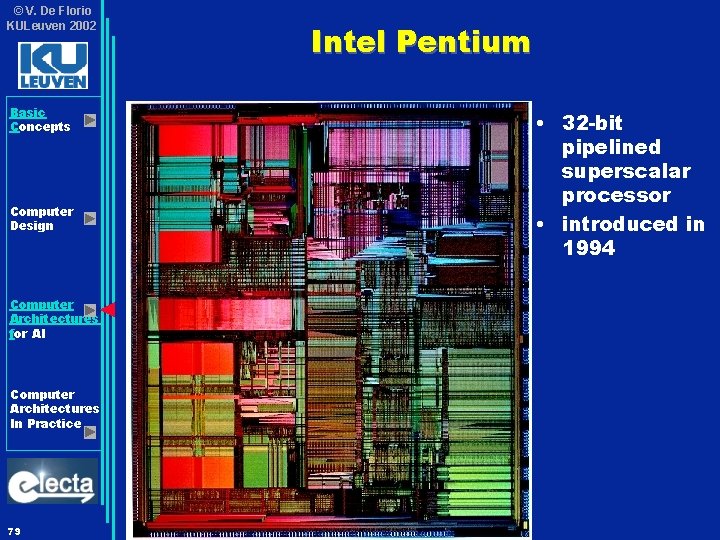

Intel Pentium
• 32-bit pipelined superscalar processor
• Introduced in 1994

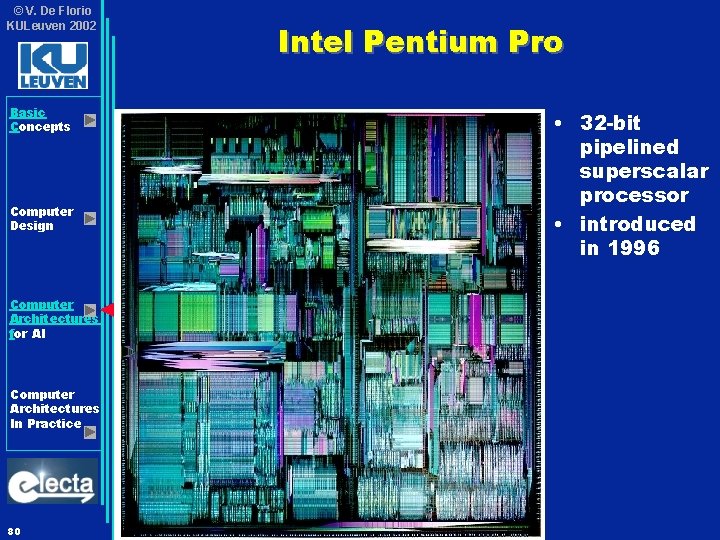

Intel Pentium Pro
• 32-bit pipelined superscalar processor
• Introduced in 1996

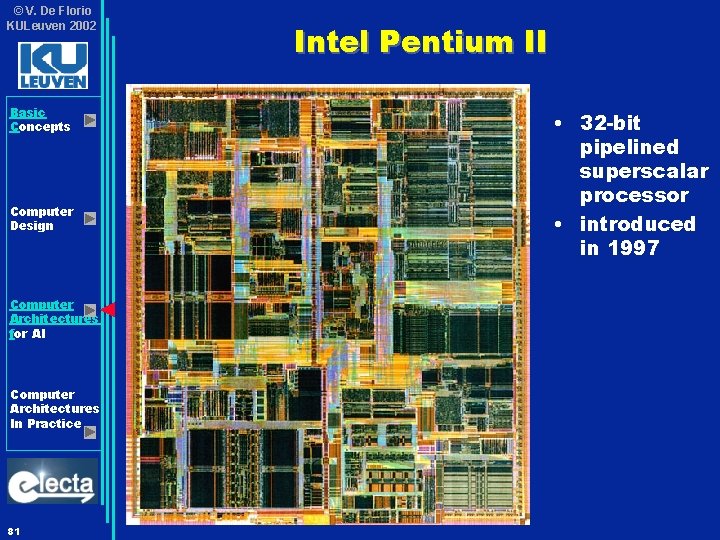

Intel Pentium II
• 32-bit pipelined superscalar processor
• Introduced in 1997

Intel Pentium III
• 32-bit pipelined superscalar processor; introduced in 1999
• Q1 2000: 733 MHz internal, 133 MHz external, $776, 0.18 µm, on-chip 256 Kbyte L2 (= 12 MTOR), RDRAM

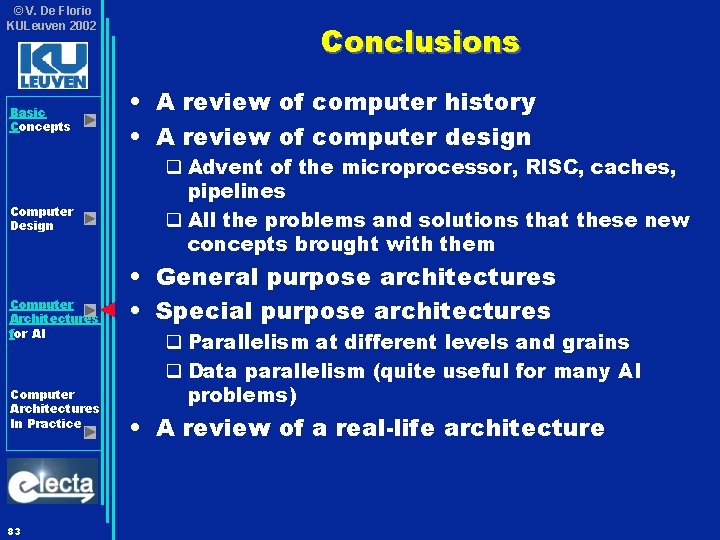

Conclusions
• A review of computer history
• A review of computer design
  ◦ Advent of the microprocessor, RISC, caches, pipelines
  ◦ All the problems and solutions that these new concepts brought with them
• General-purpose architectures
• Special-purpose architectures
  ◦ Parallelism at different levels and grains
  ◦ Data parallelism (quite useful for many AI problems)
• A review of a real-life architecture

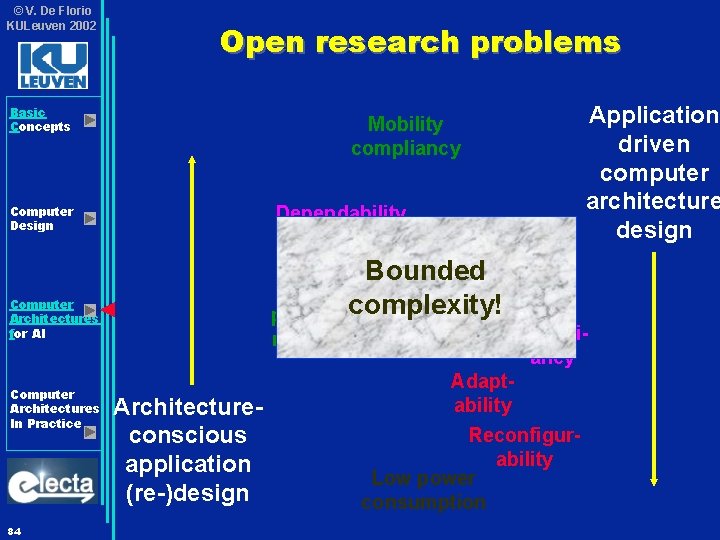

Open research problems
• Dependability
• High performance
• Application-driven computer architecture design
• Mobility compliancy
• Architecture-conscious application (re-)design
• Bounded complexity!
• Real-time compliancy
• Adaptability
• Reconfigurability
• Low power consumption
