Using Word-level Features to Better Predict Student Emotions

Using Word-level Features to Better Predict Student Emotions during Spoken Tutoring Dialogues
Mihai Rotaru, Diane J. Litman
DoD Group Meeting Presentation

Introduction
• Why is it important to detect and handle emotions?
• Emotion annotation
• Classification task
• Previous work

(Spoken) Tutoring dialogues
• Education
  - Classroom setting
  - Human (one-on-one) tutoring
  - Computer tutoring (ITS: Intelligent Tutoring Systems)
• Addressing the learning gap between human and computer tutoring
  - Dialogue-based ITS (e.g., Why2)
  - Improve the language understanding module of ITS
  - Incorporate affective reasoning
    - Connection between learning and student emotional state
    - Adding human-provided emotional scaffolding to a reading tutor increases student persistence (Aist et al., 2002)

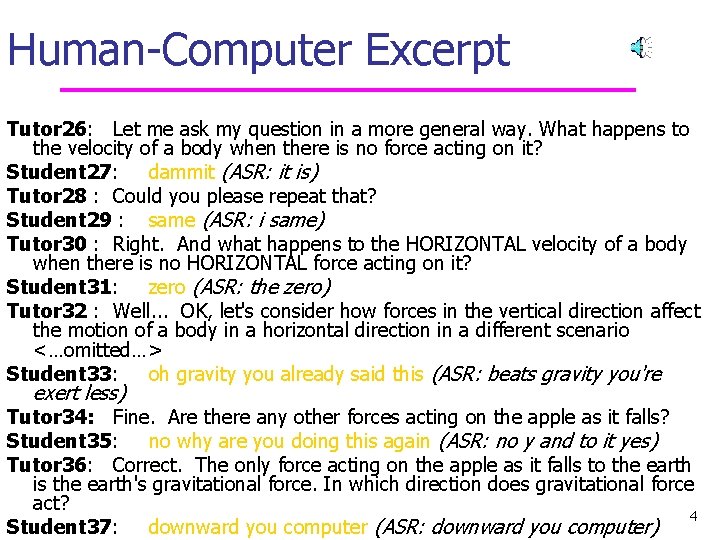

Human-Computer Excerpt
Tutor 26: Let me ask my question in a more general way. What happens to the velocity of a body when there is no force acting on it?
Student 27: dammit (ASR: it is)
Tutor 28: Could you please repeat that?
Student 29: same (ASR: i same)
Tutor 30: Right. And what happens to the HORIZONTAL velocity of a body when there is no HORIZONTAL force acting on it?
Student 31: zero (ASR: the zero)
Tutor 32: Well... OK, let's consider how forces in the vertical direction affect the motion of a body in a horizontal direction in a different scenario <…omitted…>
Student 33: oh gravity you already said this (ASR: beats gravity you're exert less)
Tutor 34: Fine. Are there any other forces acting on the apple as it falls?
Student 35: no why are you doing this again (ASR: no y and to it yes)
Tutor 36: Correct. The only force acting on the apple as it falls to the earth is the earth's gravitational force. In which direction does gravitational force act?
Student 37: downward you computer (ASR: downward you computer)

Affective reasoning
• Prerequisites
  - Dialogue-based ITS: Why2
  - Interaction via speech: ITSPOKE (Intelligent Tutoring SPOKEn dialogue system)
• Affective reasoning
  - Detect student emotions
  - Handle student emotions

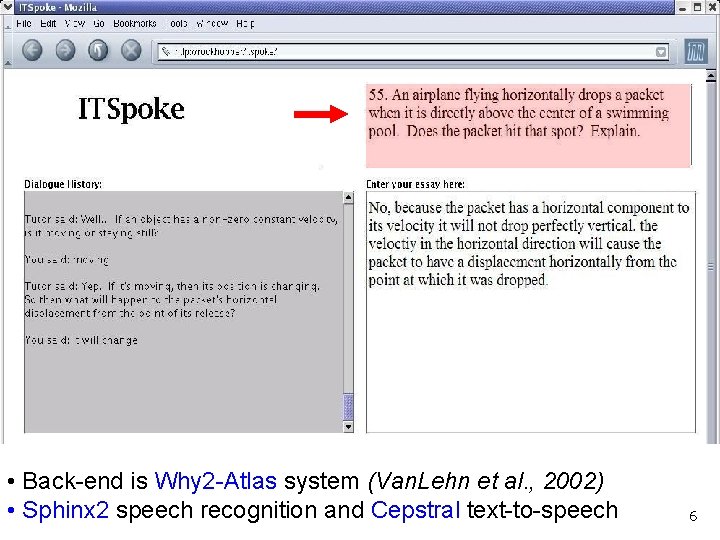

• Back-end is the Why2-Atlas system (VanLehn et al., 2002)
• Sphinx2 speech recognition and Cepstral text-to-speech

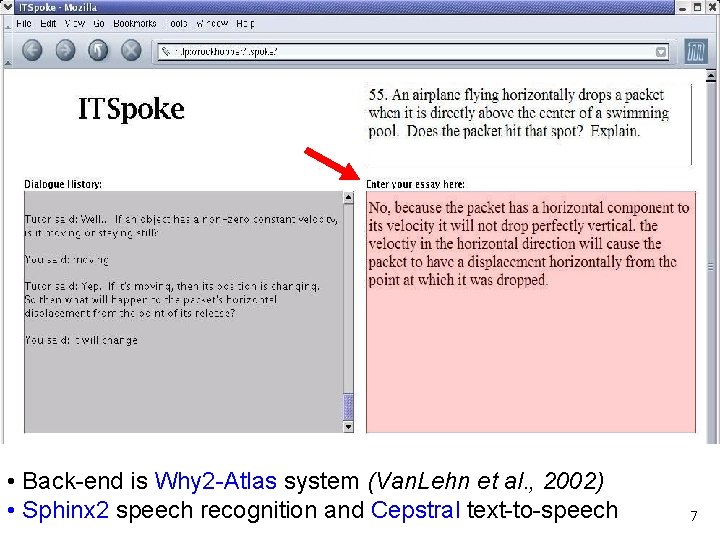


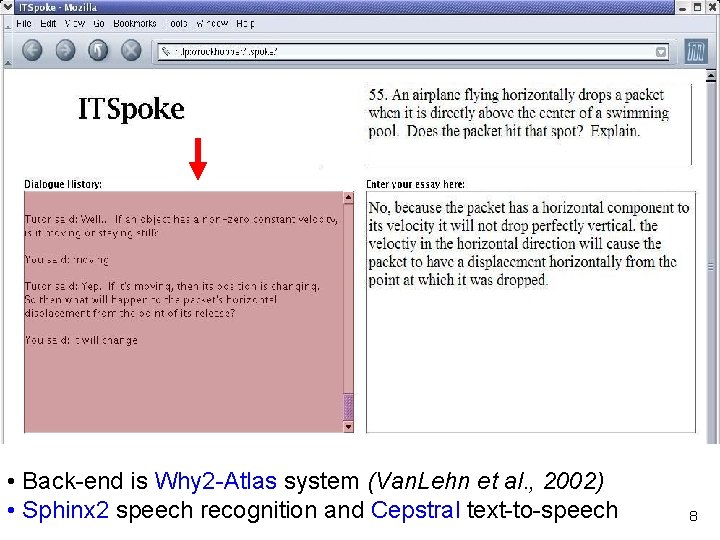


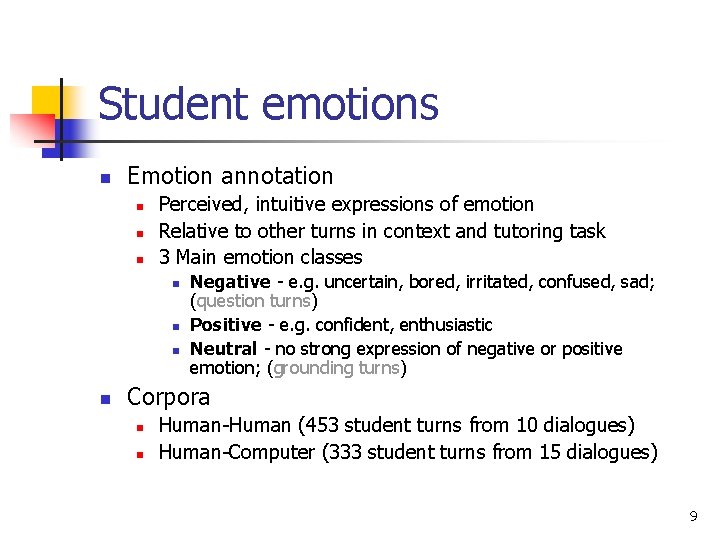

Student emotions
• Emotion annotation
  - Perceived, intuitive expressions of emotion
  - Relative to other turns in context and tutoring task
• 3 main emotion classes
  - Negative: e.g., uncertain, bored, irritated, confused, sad (question turns)
  - Positive: e.g., confident, enthusiastic
  - Neutral: no strong expression of negative or positive emotion (grounding turns)
• Corpora
  - Human-Human (453 student turns from 10 dialogues)
  - Human-Computer (333 student turns from 15 dialogues)

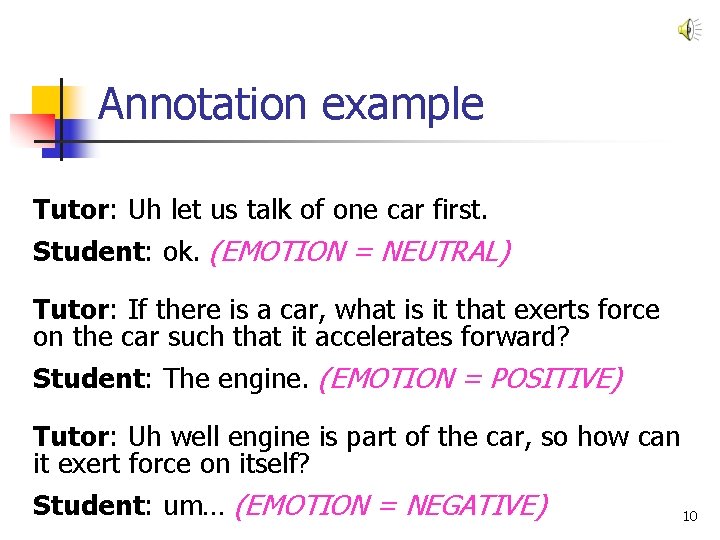

Annotation example
Tutor: Uh let us talk of one car first.
Student: ok. (EMOTION = NEUTRAL)
Tutor: If there is a car, what is it that exerts force on the car such that it accelerates forward?
Student: The engine. (EMOTION = POSITIVE)
Tutor: Uh well engine is part of the car, so how can it exert force on itself?
Student: um… (EMOTION = NEGATIVE)

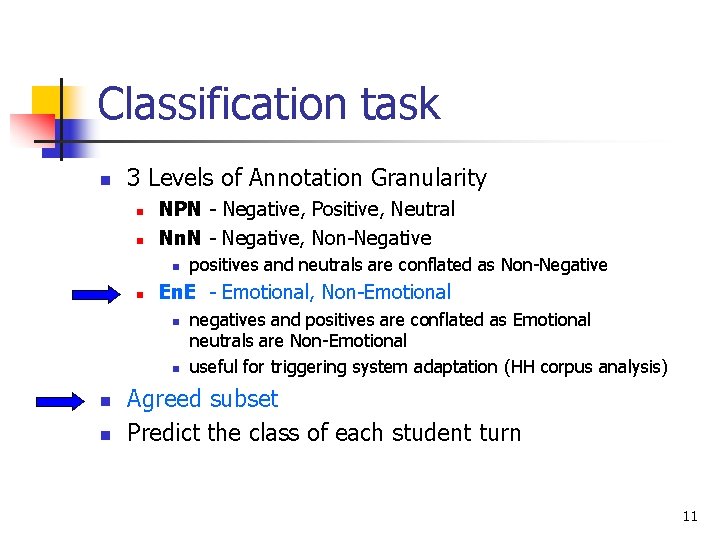

Classification task
• 3 levels of annotation granularity
  - NPN: Negative, Positive, Neutral
  - NnN: Negative, Non-Negative
    - positives and neutrals are conflated as Non-Negative
  - EnE: Emotional, Non-Emotional
    - negatives and positives are conflated as Emotional
    - neutrals are Non-Emotional
    - useful for triggering system adaptation (HH corpus analysis)
• Agreed subset (turns on which the annotators agreed)
• Predict the class of each student turn

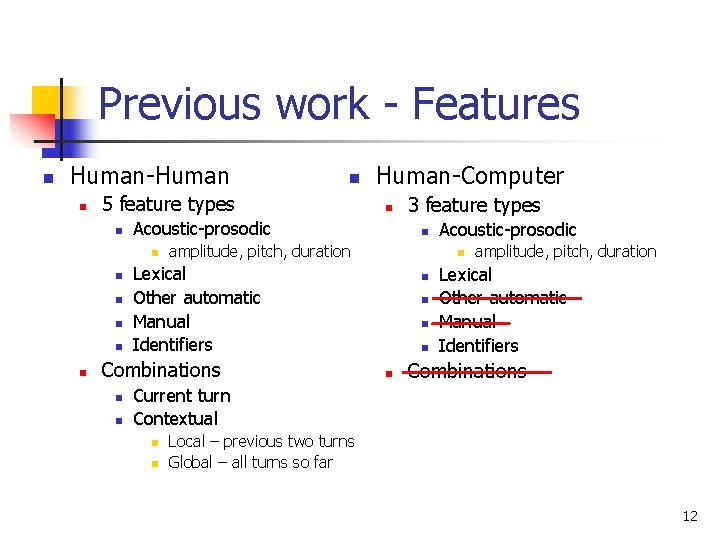
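The two binary granularities are deterministic relabelings of the three-way NPN labels. A minimal Python sketch of that conflation; the lowercase label strings and the `conflate` helper are hypothetical names chosen for illustration, not part of the authors' setup.

```python
# Hypothetical label strings; the conflation rules are the ones listed on the slide.
NPN_TO_NNN = {"negative": "negative", "positive": "non-negative", "neutral": "non-negative"}
NPN_TO_ENE = {"negative": "emotional", "positive": "emotional", "neutral": "non-emotional"}


def conflate(labels, scheme):
    """Map three-way NPN turn labels to a two-way scheme ("NnN" or "EnE")."""
    mapping = {"NnN": NPN_TO_NNN, "EnE": NPN_TO_ENE}[scheme]
    return [mapping[label] for label in labels]


if __name__ == "__main__":
    # The three annotated student turns from the annotation example.
    turns = ["neutral", "positive", "negative"]
    print(conflate(turns, "NnN"))
    print(conflate(turns, "EnE"))
```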

Previous work - Features
• Human-Human
  - 5 feature types
    - Acoustic-prosodic (3 feature types: amplitude, pitch, duration)
    - Lexical
    - Other automatic
    - Manual
    - Identifiers
  - Combinations
• Human-Computer
  - Same 5 feature types: acoustic-prosodic (amplitude, pitch, duration), lexical, other automatic, manual, identifiers
  - Combinations
  - Current turn vs. contextual features
    - Local: previous two turns
    - Global: all turns so far

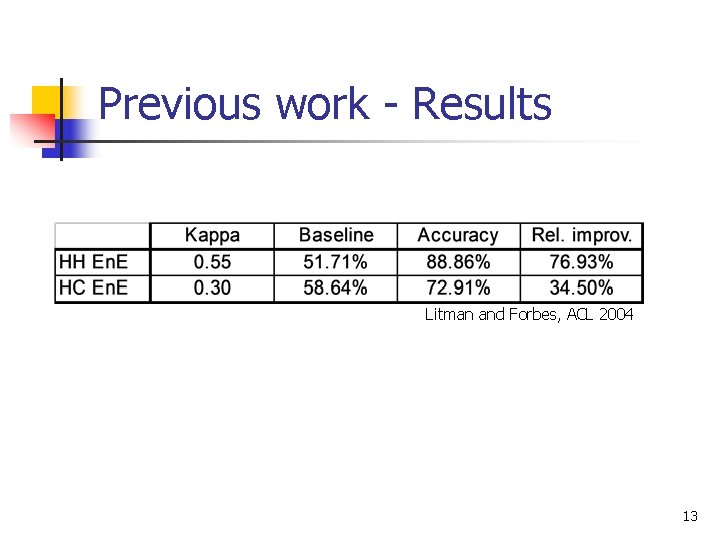

Previous work - Results (Litman and Forbes, ACL 2004)

How to improve?
• Use word-level features instead of turn-level features
  - Extend the pitch feature set
  - Simplified word-level emotion model

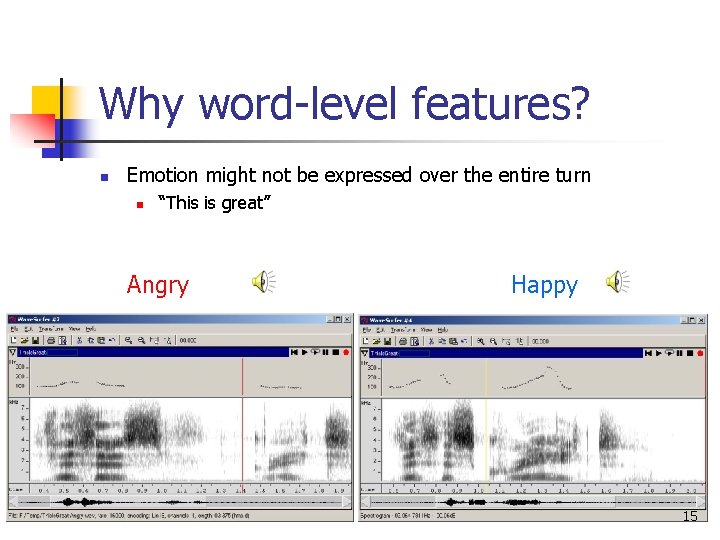

Why word-level features?
• Emotion might not be expressed over the entire turn
  - Example: "This is great" (Angry vs. Happy)

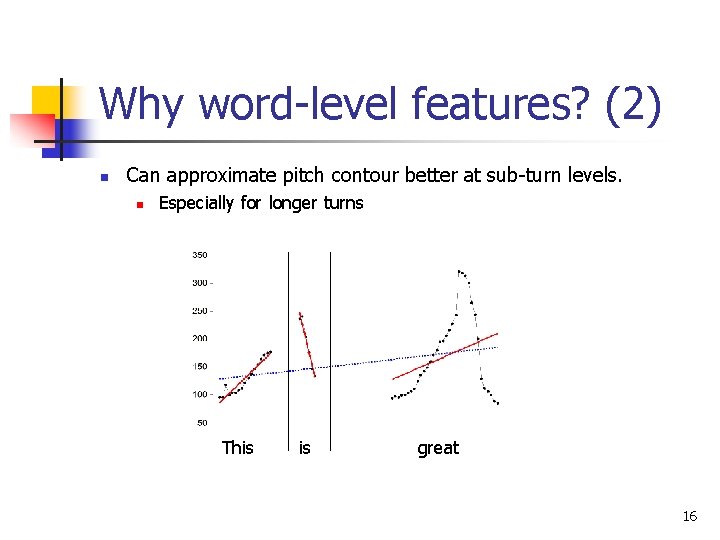

Why word-level features? (2)
• The pitch contour can be approximated better at the sub-turn level
  - Especially for longer turns
  - Example: "This is great"

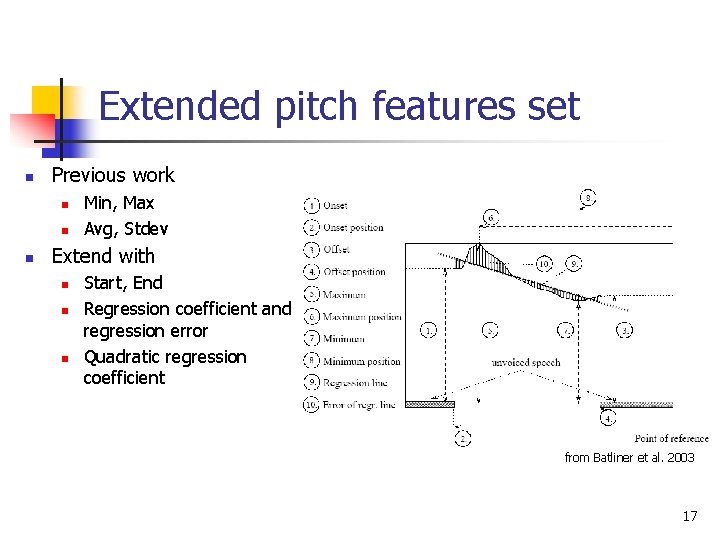
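The claim that a pitch contour is approximated better at the sub-turn level can be checked with ordinary least squares: fitting one straight line per word never leaves more squared error than fitting one line to the whole turn, because the turn-level line is itself one candidate fit for each word. A minimal sketch, assuming equally spaced pitch samples; the pitch values for "This is great" and the function names are hypothetical.

```python
def linear_fit_sse(ys):
    """Fit y = a + b*x by least squares (x = 0, 1, ..., n-1) and return the
    sum of squared residuals, i.e. how badly a straight line describes ys."""
    n = len(ys)
    if n < 3:
        return 0.0  # a line passes exactly through 1 or 2 points
    mean_x = (n - 1) / 2
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return sum((y - (intercept + slope * x)) ** 2 for x, y in enumerate(ys))


def turn_vs_word_error(word_contours):
    """Compare one line for the whole turn against one line per word."""
    whole_turn = [f0 for word in word_contours for f0 in word]
    turn_error = linear_fit_sse(whole_turn)
    word_error = sum(linear_fit_sse(word) for word in word_contours)
    return turn_error, word_error


if __name__ == "__main__":
    # Hypothetical pitch samples (Hz) for "This is great": rise, fall, rise-fall.
    contours = [[180, 190, 200], [200, 185, 170], [170, 210, 250, 240, 200]]
    turn_error, word_error = turn_vs_word_error(contours)
    print(f"turn-level SSE: {turn_error:.1f}, word-level SSE: {word_error:.1f}")
```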

Extended pitch feature set
• Previous work
  - Min, Max
  - Avg, Stdev
• Extend with
  - Start, End
  - Regression coefficient and regression error
  - Quadratic regression coefficient from Batliner et al. (2003)

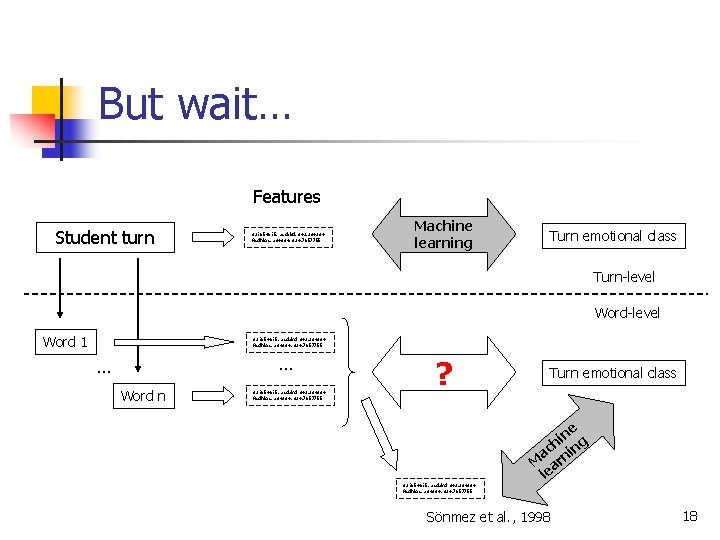

But wait…
[Diagram: turn-level pipeline (student turn → features → machine learning → turn emotional class) vs. word-level features (Word 1 … Word n, each with its own feature vector → ? → turn emotional class); Sönmez et al., 1998]

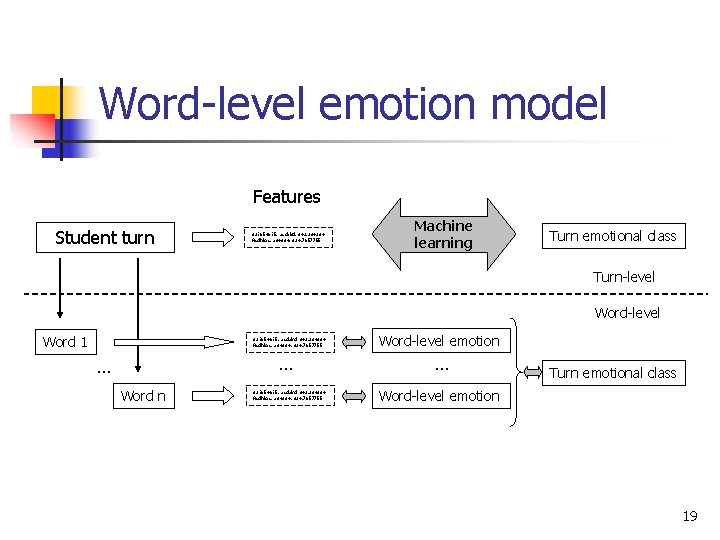

Word-level emotion model
[Diagram: word-level features (Word 1 … Word n) → machine learning → a word-level emotion for each word → turn emotional class; shown alongside the turn-level pipeline (student turn → features → machine learning → turn emotional class)]

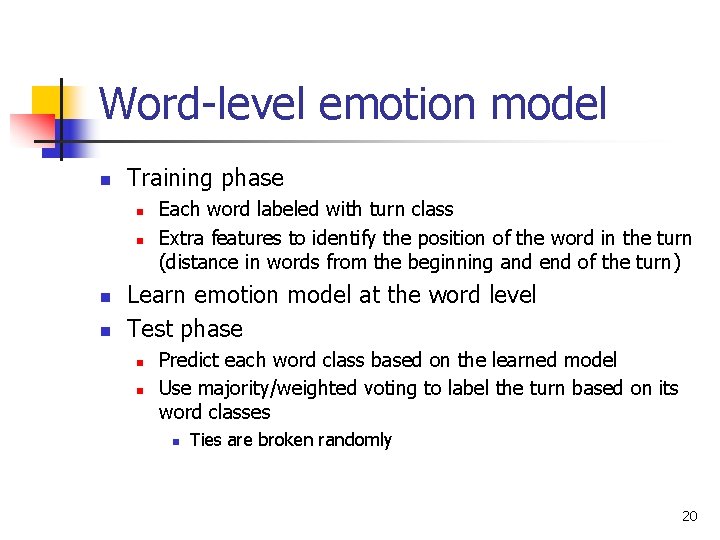

Word-level emotion model
• Training phase
  - Each word is labeled with its turn's class
  - Extra features identify the position of the word in the turn (distance in words from the beginning and end of the turn)
  - Learn the emotion model at the word level
• Test phase
  - Predict each word's class based on the learned model
  - Use majority/weighted voting to label the turn based on its word classes
    - Ties are broken randomly
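The training and test phases can be sketched as follows. This is a minimal illustration, assuming each word is already represented by a list of numeric features; a plain 1-nearest-neighbor learner stands in for the word-level classifier, only majority voting (not weighted voting) is shown, and positional distances are counted from 0. All function names are hypothetical.

```python
import math
import random
from collections import Counter


def with_position(turn_words):
    """Append two positional features to every word: distance in words from
    the start and from the end of the turn (0-based, an assumption)."""
    n = len(turn_words)
    return [features + [i, n - 1 - i] for i, features in enumerate(turn_words)]


def word_examples(turn_words, turn_label):
    """Training: every word inherits its turn's label."""
    return [(x, turn_label) for x in with_position(turn_words)]


def nearest_neighbor(train, x):
    """Stand-in word-level learner: 1-nearest neighbor, Euclidean distance."""
    return min(train, key=lambda example: math.dist(example[0], x))[1]


def predict_turn(train, turn_words, rng=random):
    """Test: classify every word, then label the turn by majority vote over
    the word labels; ties are broken randomly."""
    votes = Counter(nearest_neighbor(train, x) for x in with_position(turn_words))
    top = max(votes.values())
    return rng.choice(sorted(label for label, count in votes.items() if count == top))


if __name__ == "__main__":
    train = (word_examples([[1.0], [1.1]], "emotional")
             + word_examples([[5.0], [5.2], [4.9]], "non-emotional"))
    print(predict_turn(train, [[1.05], [0.9]]))
```

In practice the features would be normalized first, since raw positional counts and acoustic values are on different scales; WEKA's IB1, for example, normalizes numeric attributes before computing distances.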

Questions to answer
• Will word-level features work better than turn-level features for emotion prediction?
  - Yes
• If yes, where does the advantage come from?
  - Better prediction of longer turns
• Is there a feature set that offers robust performance?
  - Yes: the combination of pitch and lexical features at the word level

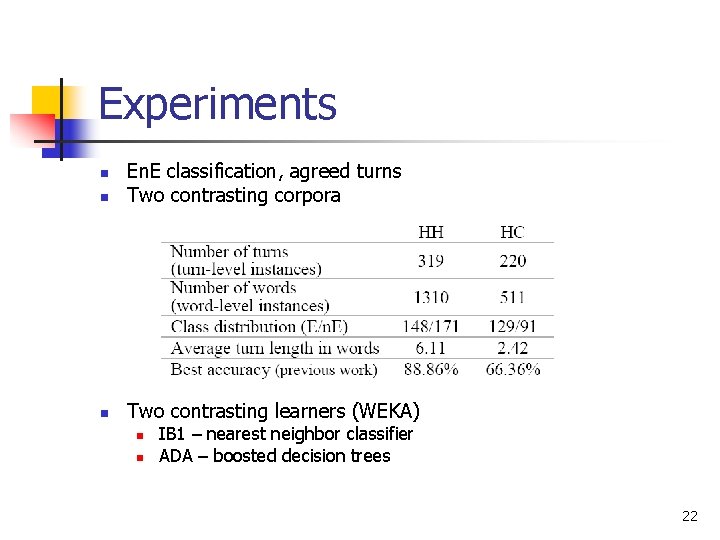

Experiments
• EnE classification, agreed turns
• Two contrasting corpora
• Two contrasting learners (WEKA)
  - IB1: nearest-neighbor classifier
  - ADA: boosted decision trees

Feature sets
• Only pitch and lexical features
• 6 sets of features
  - Turn level:
    - Lex-Turn: only lexical
    - Pitch-Turn: only pitch
    - PitchLex-Turn: lexical and prosodic
  - Word level:
    - Lex-Word: only lexical + positional
    - Pitch-Word: only pitch + positional
    - PitchLex-Word: lexical and prosodic + positional
• Baseline: majority class
• 10 x 10 cross-validation

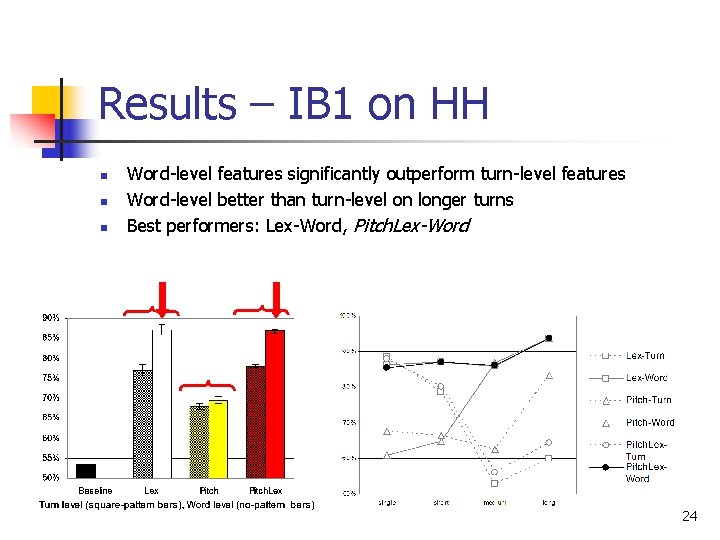
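The majority-class baseline under 10 x 10 cross-validation can be sketched in a few lines: shuffle the turns, split them into 10 folds, score each held-out fold, and repeat the whole procedure 10 times. A minimal Python sketch; the helper names are hypothetical, and unlike WEKA, which stratifies folds by class for nominal class attributes, this sketch uses plain random folds. Any other learner can be passed in place of `majority_baseline` as the `evaluate` callback.

```python
import random
from collections import Counter
from statistics import fmean


def majority_baseline(train_labels, test_labels):
    """Predict the most frequent training label for every test item; return accuracy."""
    majority = Counter(train_labels).most_common(1)[0][0]
    return fmean(label == majority for label in test_labels)


def cross_validate(labels, evaluate, folds=10, runs=10, seed=0):
    """Average accuracy over `runs` repetitions of `folds`-fold cross-validation."""
    rng = random.Random(seed)
    scores = []
    for _ in range(runs):
        order = list(range(len(labels)))
        rng.shuffle(order)                   # a new random split for every run
        for k in range(folds):
            test_idx = set(order[k::folds])  # every folds-th shuffled item
            train = [labels[i] for i in order if i not in test_idx]
            test = [labels[i] for i in test_idx]
            scores.append(evaluate(train, test))
    return fmean(scores)


if __name__ == "__main__":
    labels = ["non-emotional"] * 70 + ["emotional"] * 30  # hypothetical class split
    print(cross_validate(labels, majority_baseline))
```

For the word-level feature sets, folds would naturally be formed over turns so that words of one turn never appear in both training and test data; the slides do not say how this was handled.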

Results – IB1 on HH
• Word-level features significantly outperform turn-level features
• Word-level better than turn-level on longer turns
• Best performers: Lex-Word, PitchLex-Word

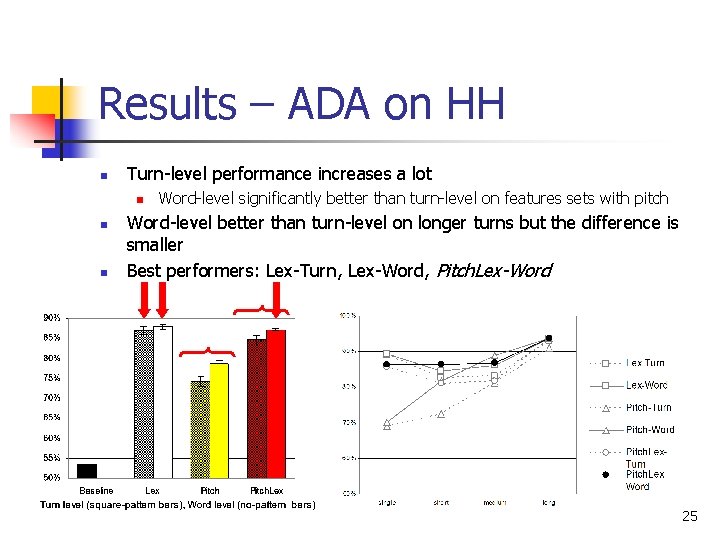

Results – ADA on HH
• Turn-level performance increases a lot (compared with IB1)
• Word-level significantly better than turn-level on feature sets with pitch
• Word-level better than turn-level on longer turns, but the difference is smaller
• Best performers: Lex-Turn, Lex-Word, PitchLex-Word

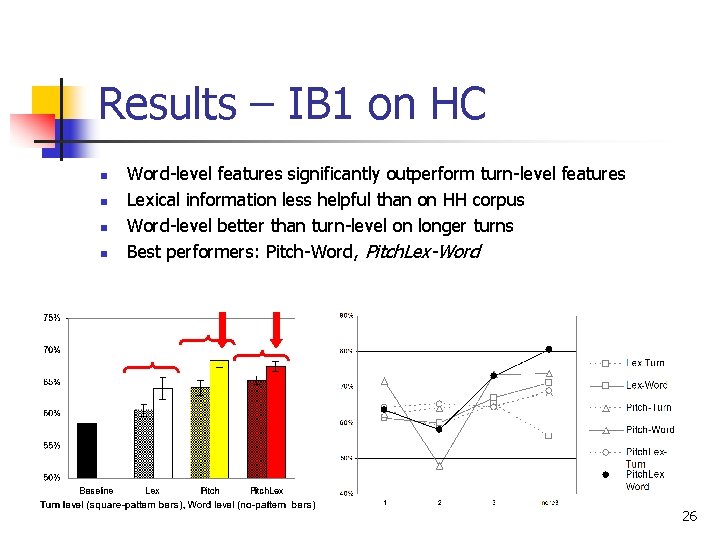

Results – IB1 on HC
• Word-level features significantly outperform turn-level features
• Lexical information less helpful than on the HH corpus
• Word-level better than turn-level on longer turns
• Best performers: Pitch-Word, PitchLex-Word

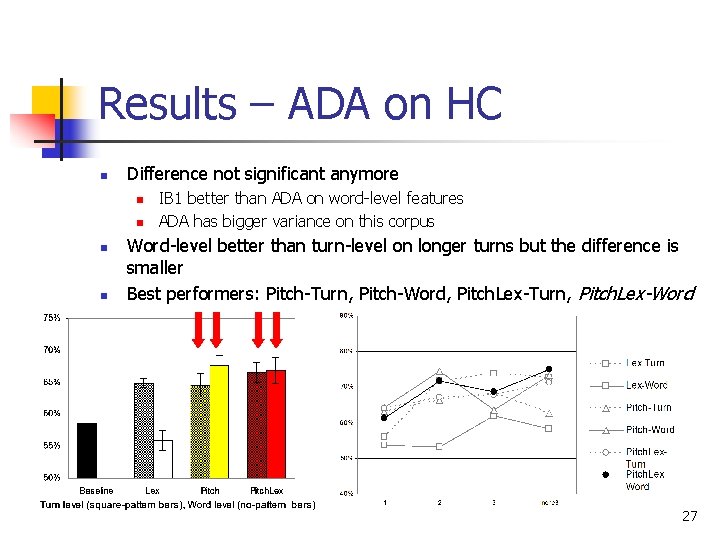

Results – ADA on HC
• The word-level vs. turn-level difference is no longer significant
  - IB1 better than ADA on word-level features
  - ADA has bigger variance on this corpus
• Word-level better than turn-level on longer turns, but the difference is smaller
• Best performers: Pitch-Turn, Pitch-Word, PitchLex-Turn, PitchLex-Word

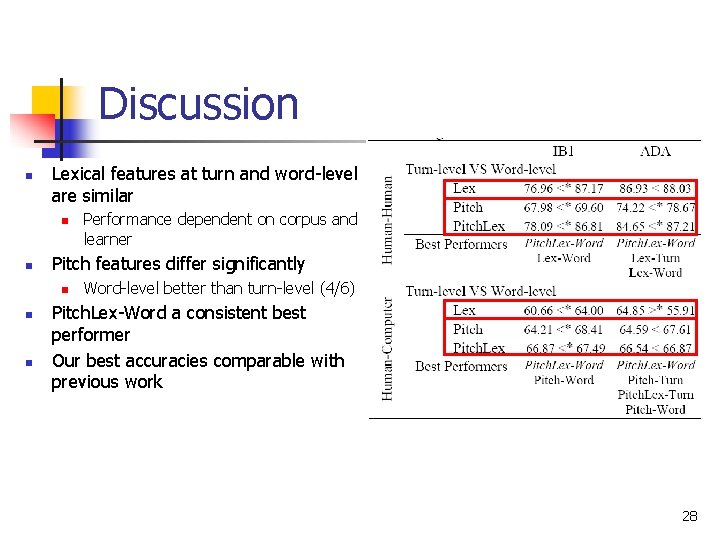

Discussion
• Lexical features at turn and word level are similar
  - Performance dependent on corpus and learner
• Pitch features differ significantly
  - Word-level better than turn-level (4/6)
• PitchLex-Word a consistent best performer
• Our best accuracies are comparable with previous work

Conclusions & Future work
• Word-level better than turn-level for emotion prediction
  - Even under a very simple word-level emotion model
  - Word-level better at predicting longer turns
• PitchLex-Word a consistent best performer
• Future work:
  - More refined word-level emotion models
    - HMMs
    - Co-training
    - Filter irrelevant words
  - Use the prosodic information left out
  - See if our conclusions generalize to detecting student uncertainty
  - Experiment with other sub-turn units (breath groups)
