Using WordNet Predicates for Multilingual Named Entity Recognition

- Slides: 29

Using WordNet Predicates for Multilingual Named Entity Recognition

Matteo Negri and Bernardo Magnini, ITC-irst Centro per la Ricerca Scientifica e Tecnologica, Trento, Italy ([negri, magnini]@itc.it)

January 23, 2004, GWC'04 - Brno (Czech Republic)

Outline

- Named Entity Recognition (NER)
- Rule-based approach using WordNet information
  - WordNet Predicates (language-independent)
  - Internal evidence: Word_Instances
  - External evidence: Word_Classes
- System architecture
- Experiments and results on English and Italian
- Future work

Named Entity Recognition (NER)

- Given a written text, identify and categorize:
  - entity names (e.g. persons, organizations, location names)
  - temporal expressions (e.g. dates and times)
  - numerical expressions (e.g. monetary values and percentages)
- NER is crucial for Information Extraction, Question Answering and Information Retrieval
  - Up to 10% of a newswire text may consist of proper names, dates, times, etc.

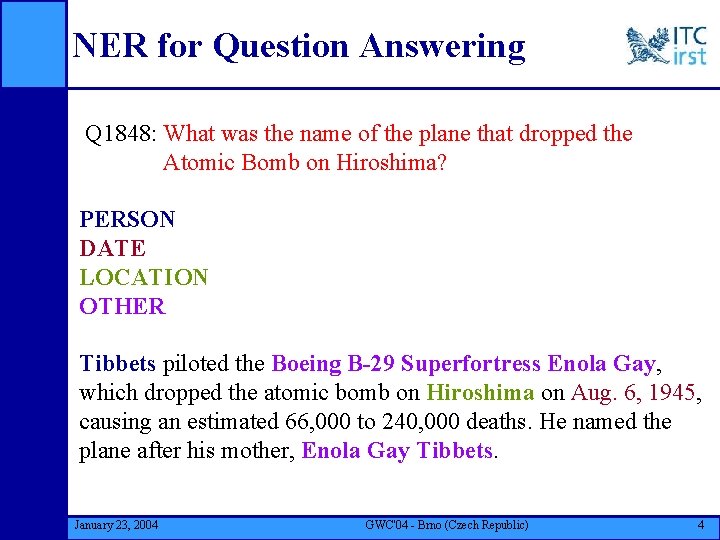

NER for Question Answering

Q1848: “What was the name of the plane that dropped the Atomic Bomb on Hiroshima?”

Answer passage, with entities marked by type (PERSON, DATE, LOCATION, OTHER):

“Tibbets piloted the Boeing B-29 Superfortress Enola Gay, which dropped the atomic bomb on Hiroshima on Aug. 6, 1945, causing an estimated 66,000 to 240,000 deaths. He named the plane after his mother, Enola Gay Tibbets.”

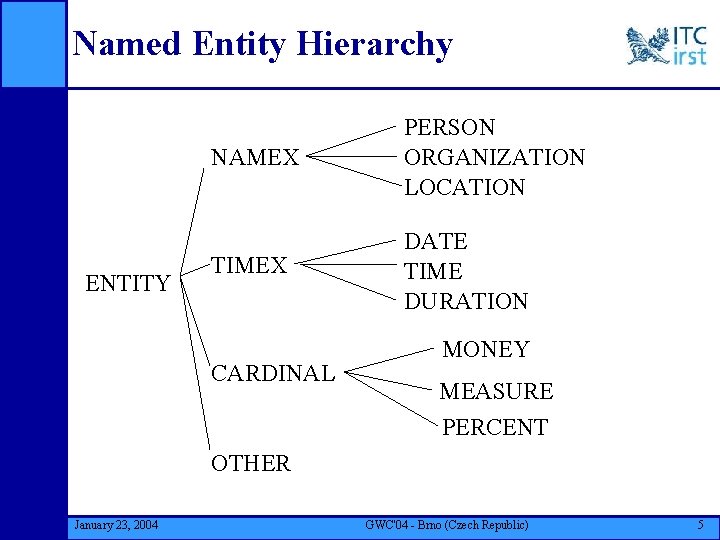

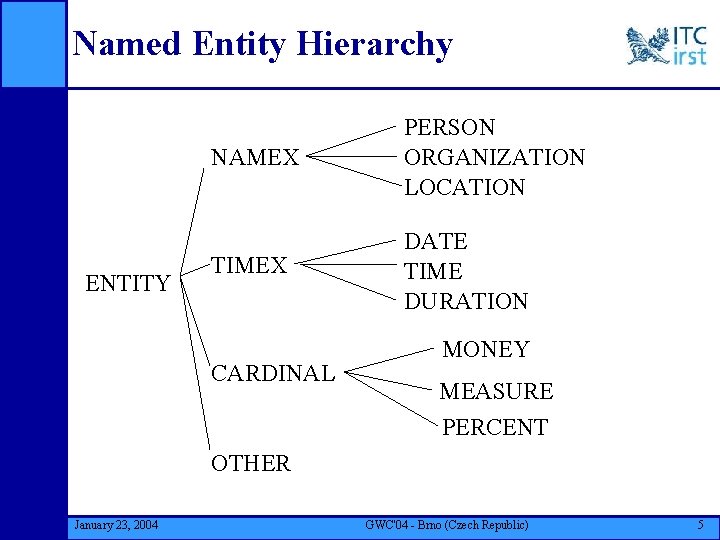

Named Entity Hierarchy

- ENTITY
  - NAMEX: PERSON, ORGANIZATION, LOCATION
  - TIMEX: DATE, TIME, DURATION
  - CARDINAL, MONEY, MEASURE, PERCENT
  - OTHER

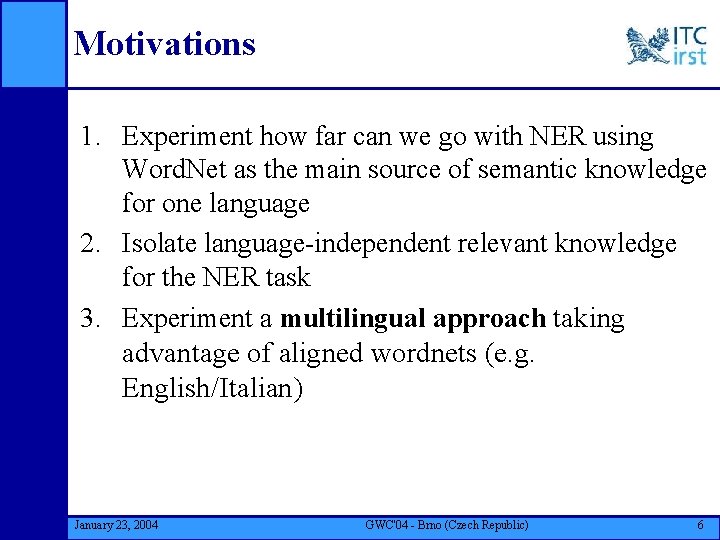

Motivations

1. Explore how far NER can go using WordNet as the main source of semantic knowledge for a single language
2. Isolate the language-independent knowledge relevant to the NER task
3. Test a multilingual approach that takes advantage of aligned wordnets (e.g. English/Italian)

Knowledge-Based NER

- Combination of a wide range of knowledge sources:
  - lexical, syntactic, and semantic features of the input text
  - world knowledge (e.g. gazetteers)
  - discourse-level information (e.g. co-reference resolution)

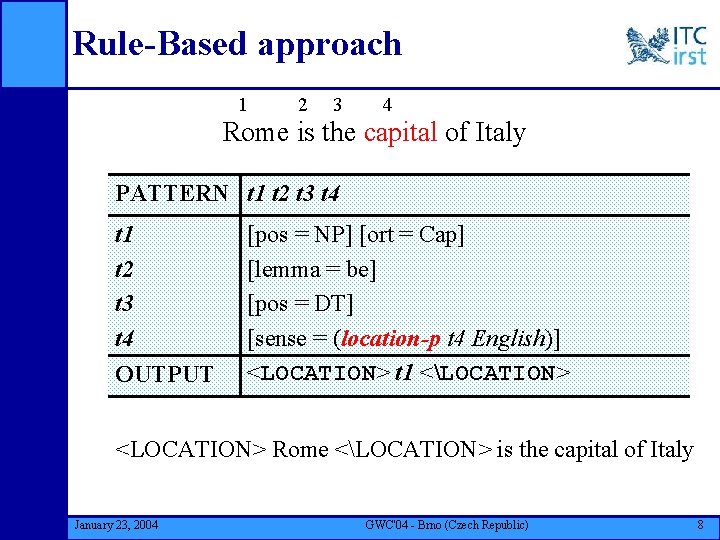

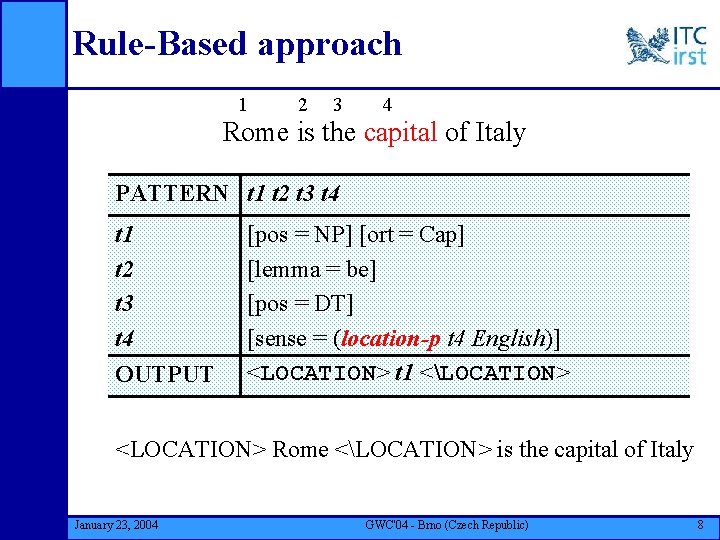

Rule-Based Approach

Example sentence, with token positions 1 to 4: “Rome is the capital of Italy”

- PATTERN:
  - t1: `[pos = NP] [ort = Cap]`
  - t2: `[lemma = be]`
  - t3: `[pos = DT]`
  - t4: `[sense = (location-p t4 English)]`
- OUTPUT: `<LOCATION>t1<LOCATION>`, i.e. `<LOCATION>Rome<LOCATION>` is the capital of Italy
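
To make the pattern notation concrete, here is a minimal Python sketch of matching a four-slot rule like the one above against a POS-tagged, lemmatized sentence. It is not NERD's rule engine: the `Token` class, `apply_rule`, and the tiny `LOCATION_CLASSES` set standing in for the WN-pred location-p are hypothetical, and the output uses an XML-style closing tag.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Token:
    text: str
    pos: str    # POS tag, e.g. "NP" for a proper noun, "DT" for a determiner
    lemma: str


# Hypothetical stand-in for the WN-pred location-p: a handful of English
# lemmas assumed to be LOCATION Word_Classes (trigger words).
LOCATION_CLASSES = {"capital", "city", "lake", "river"}


def location_p(word: str, language: str) -> bool:
    return language == "English" and word.lower() in LOCATION_CLASSES


# One test per pattern slot t1..t4, mirroring the constraints of the rule.
Constraint = Callable[[Token], bool]
PATTERN: List[Constraint] = [
    lambda t: t.pos == "NP" and t.text[:1].isupper(),  # t1: [pos = NP] [ort = Cap]
    lambda t: t.lemma == "be",                          # t2: [lemma = be]
    lambda t: t.pos == "DT",                            # t3: [pos = DT]
    lambda t: location_p(t.lemma, "English"),           # t4: [sense = (location-p t4 English)]
]


def apply_rule(tokens: List[Token]) -> List[str]:
    """Wrap t1 in a LOCATION tag wherever all four slot tests succeed."""
    out = [t.text for t in tokens]
    width = len(PATTERN)
    for i in range(len(tokens) - width + 1):
        window = tokens[i:i + width]
        if all(test(tok) for test, tok in zip(PATTERN, window)):
            out[i] = f"<LOCATION>{tokens[i].text}</LOCATION>"
    return out


sentence = [
    Token("Rome", "NP", "Rome"), Token("is", "VBZ", "be"),
    Token("the", "DT", "the"), Token("capital", "NN", "capital"),
    Token("of", "IN", "of"), Token("Italy", "NP", "Italy"),
]
print(" ".join(apply_rule(sentence)))
# prints: <LOCATION>Rome</LOCATION> is the capital of Italy
```

Only t1 is tagged: the evidence comes from the trigger word in t4, while "Italy" itself is left to other rules.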

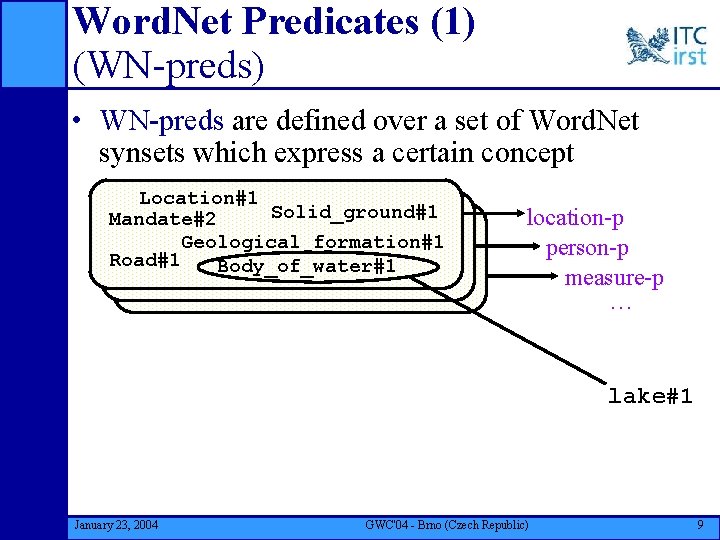

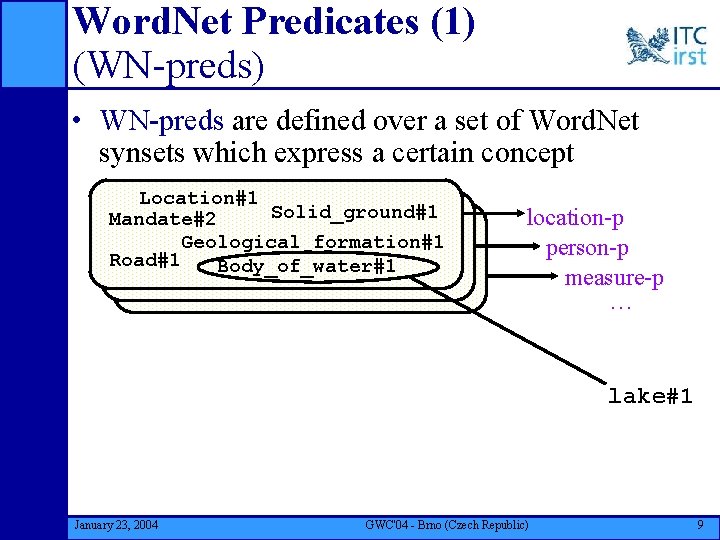

WordNet Predicates (1) (WN-preds)

- WN-preds are defined over a set of WordNet synsets that express a certain concept

[Figure: the predicate location-p defined over Location#1, Solid_ground#1, Mandate#2, Geological_formation#1, Road#1 and Body_of_water#1, with lake#1 below Body_of_water#1; other predicates shown: person-p, measure-p, …]

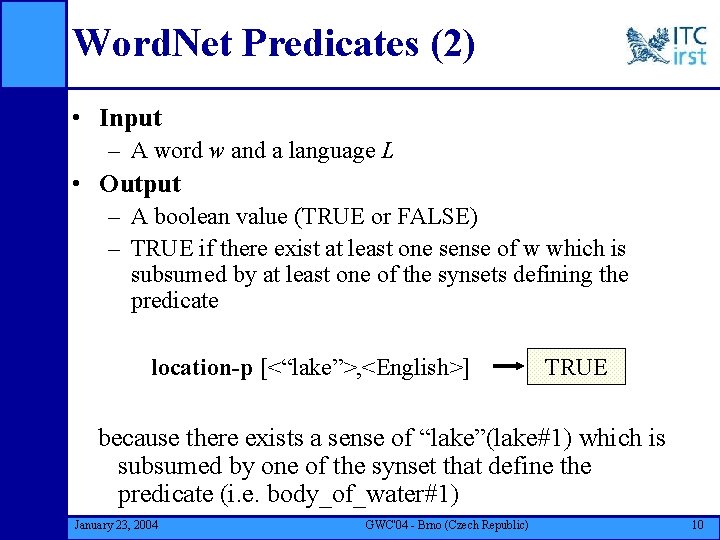

WordNet Predicates (2)

- Input: a word w and a language L
- Output: a boolean value (TRUE or FALSE)
  - TRUE if there exists at least one sense of w that is subsumed by at least one of the synsets defining the predicate
- Example: `location-p [<“lake”>, <English>]` is TRUE because there exists a sense of “lake” (lake#1) that is subsumed by one of the synsets defining the predicate (i.e. body_of_water#1)
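
The predicate semantics can be sketched in Python as a subsumption test over a hypernym graph. Everything here is assumed for illustration: the toy `HYPERNYMS` and `SENSES` tables stand in for WordNet, `LOCATION_P` lists only some of the defining synsets, and `wn_pred` is a hypothetical name. A synset is treated as subsumed by itself as well as by all its transitive hypernyms.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

# Hypothetical toy excerpt of a WordNet-like hypernym graph:
# synset -> its direct hypernyms.
HYPERNYMS: Dict[str, List[str]] = {
    "lake#1": ["body_of_water#1"],
    "lake#2": ["pigment#1"],          # a second, non-location sense
    "astronomer#1": ["physicist#1"],
    "physicist#1": ["scientist#1"],
    "scientist#1": ["person#1"],
}

# Hypothetical sense inventory: (word, language) -> candidate synsets.
SENSES: Dict[Tuple[str, str], List[str]] = {
    ("lake", "English"): ["lake#1", "lake#2"],
    ("astronomer", "English"): ["astronomer#1"],
}

# A WN-pred is characterised by the synsets that define it
# (only a subset of each predicate's defining synsets is shown).
LOCATION_P: FrozenSet[str] = frozenset(
    {"location#1", "road#1", "body_of_water#1", "solid_ground#1", "geological_formation#1"})
PERSON_P: FrozenSet[str] = frozenset({"person#1"})


def subsumers(synset: str) -> Set[str]:
    """The synset itself plus all of its transitive hypernyms."""
    seen: Set[str] = set()
    stack = [synset]
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(HYPERNYMS.get(current, []))
    return seen


def wn_pred(defining: FrozenSet[str], word: str, language: str) -> bool:
    """TRUE iff at least one sense of `word` is subsumed by a defining synset."""
    return any(subsumers(s) & defining for s in SENSES.get((word, language), []))


print(wn_pred(LOCATION_P, "lake", "English"))        # True, via lake#1 -> body_of_water#1
print(wn_pred(LOCATION_P, "astronomer", "English"))  # False
print(wn_pred(PERSON_P, "astronomer", "English"))    # True
```

The existential reading ("at least one sense") means no word-sense disambiguation is needed: the non-location sense lake#2 does not prevent location-p from holding.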

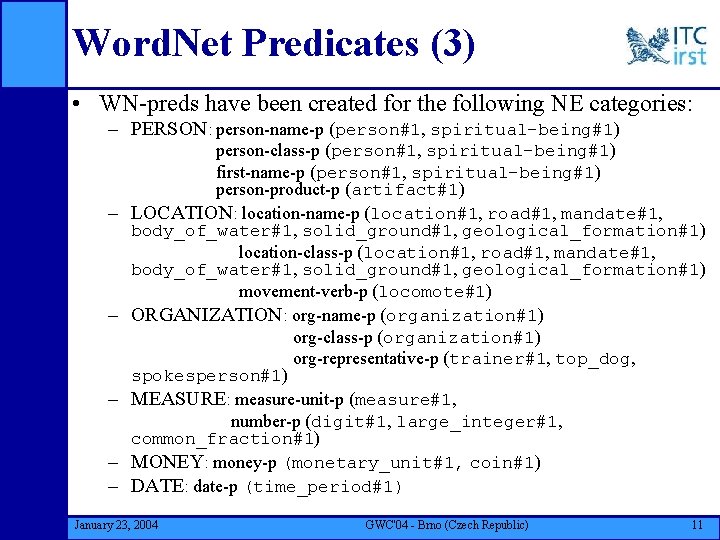

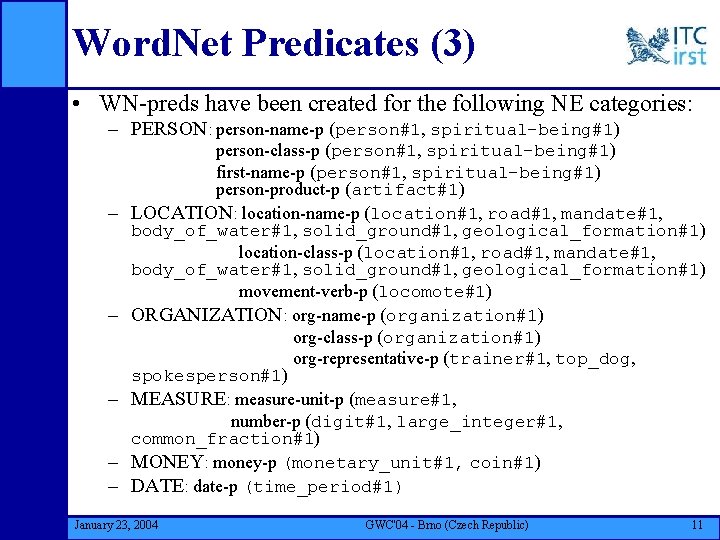

WordNet Predicates (3)

WN-preds have been created for the following NE categories:

- PERSON:
  - person-name-p (person#1, spiritual-being#1)
  - person-class-p (person#1, spiritual-being#1)
  - first-name-p (person#1, spiritual-being#1)
  - person-product-p (artifact#1)
- LOCATION:
  - location-name-p (location#1, road#1, mandate#1, body_of_water#1, solid_ground#1, geological_formation#1)
  - location-class-p (location#1, road#1, mandate#1, body_of_water#1, solid_ground#1, geological_formation#1)
  - movement-verb-p (locomote#1)
- ORGANIZATION:
  - org-name-p (organization#1)
  - org-class-p (organization#1)
  - org-representative-p (trainer#1, top_dog, spokesperson#1)
- MEASURE:
  - measure-unit-p (measure#1)
  - number-p (digit#1, large_integer#1, common_fraction#1)
- MONEY:
  - money-p (monetary_unit#1, coin#1)
- DATE:
  - date-p (time_period#1)

WordNet Predicates (4)

- The definition of a WN-pred is language-independent.
- With aligned wordnets, WN-preds can easily be parametrized for a given language without changing the predicate definition, e.g. `(location-p lake English)` and `(location-p lago Italian)`
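
A minimal sketch of that parametrization, assuming the Italian synsets are aligned to, and share identifiers with, the English ones. The toy tables and names are hypothetical; only the `SENSES` lookup depends on the language, while `LOCATION_P` is defined once.

```python
from typing import Dict, List

# Toy hypernym graph shared by both languages, since aligned wordnets
# share synset identifiers (hypothetical excerpt).
HYPERNYMS: Dict[str, List[str]] = {
    "lake#1": ["body_of_water#1"],
    "river#1": ["stream#1"],
    "stream#1": ["body_of_water#1"],
    "telescope#1": ["magnifier#1"],
}

# Language-specific lexicalizations: the only language-dependent table.
SENSES: Dict[str, Dict[str, List[str]]] = {
    "English": {"lake": ["lake#1"], "river": ["river#1"], "telescope": ["telescope#1"]},
    "Italian": {"lago": ["lake#1"], "fiume": ["river#1"], "telescopio": ["telescope#1"]},
}

# The predicate definition itself is language-independent.
LOCATION_P = frozenset({"location#1", "body_of_water#1"})


def subsumed(synset: str, defining: frozenset) -> bool:
    """Walk up the hypernym graph looking for one of the defining synsets."""
    stack, seen = [synset], set()
    while stack:
        current = stack.pop()
        if current in defining:
            return True
        if current not in seen:
            seen.add(current)
            stack.extend(HYPERNYMS.get(current, []))
    return False


def location_p(word: str, language: str) -> bool:
    return any(subsumed(s, LOCATION_P) for s in SENSES[language].get(word, []))


print(location_p("lake", "English"), location_p("lago", "Italian"))  # True True
print(location_p("telescopio", "Italian"))                            # False
```

Adding a third aligned language would only require a new entry in `SENSES`; the predicate and the graph walk stay unchanged.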

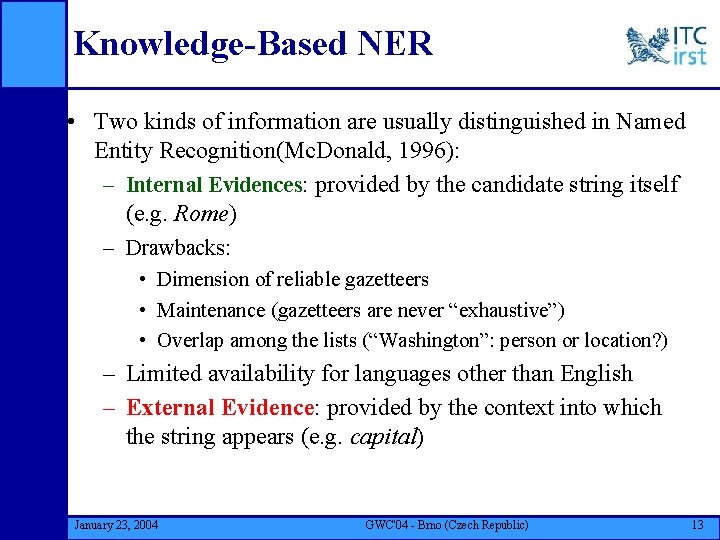

Knowledge-Based NER

Two kinds of information are usually distinguished in Named Entity Recognition (McDonald, 1996):

- Internal Evidence: provided by the candidate string itself (e.g. Rome)
  - Drawbacks:
    - size of reliable gazetteers
    - maintenance (gazetteers are never “exhaustive”)
    - overlap among the lists (“Washington”: person or location?)
    - limited availability for languages other than English
- External Evidence: provided by the context in which the string appears (e.g. capital)

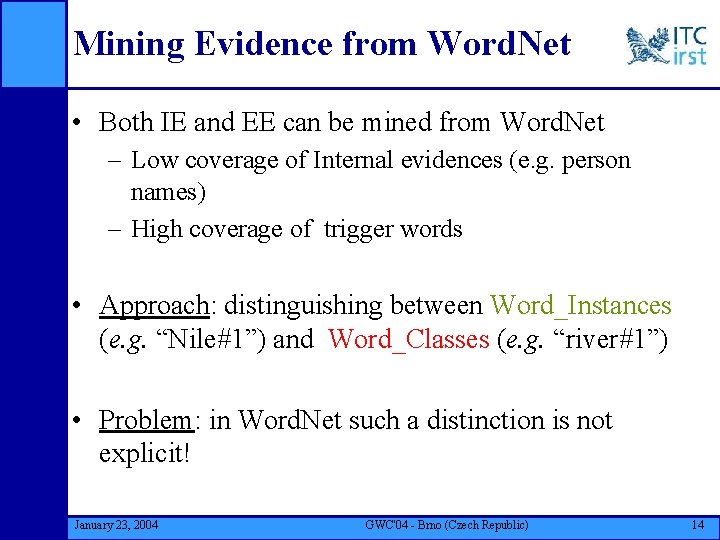

Mining Evidence from WordNet

- Both internal evidence (IE) and external evidence (EE) can be mined from WordNet
  - low coverage of internal evidence (e.g. person names)
  - high coverage of trigger words
- Approach: distinguish between Word_Instances (e.g. “Nile#1”) and Word_Classes (e.g. “river#1”)
- Problem: in WordNet this distinction is not explicit!

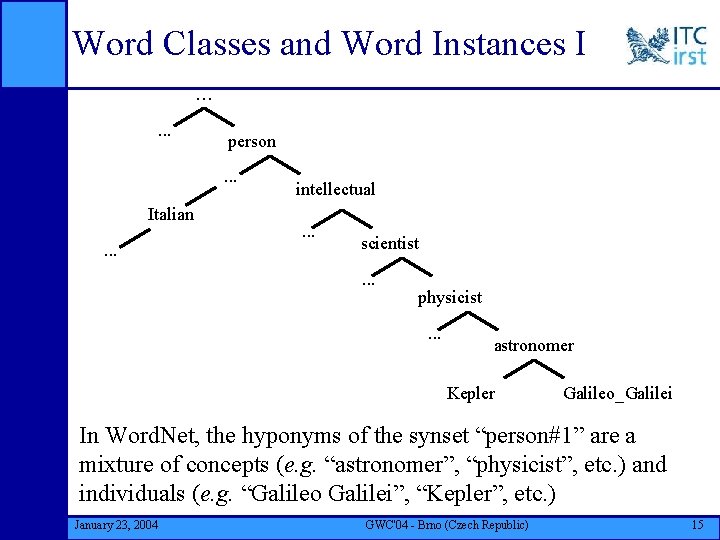

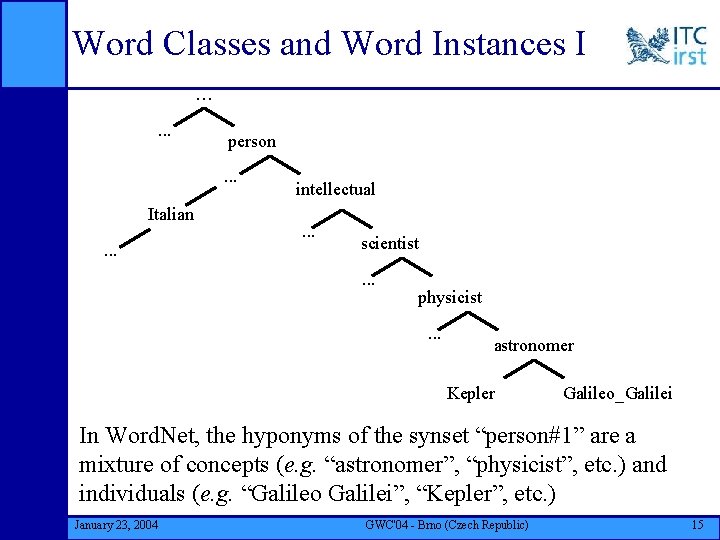

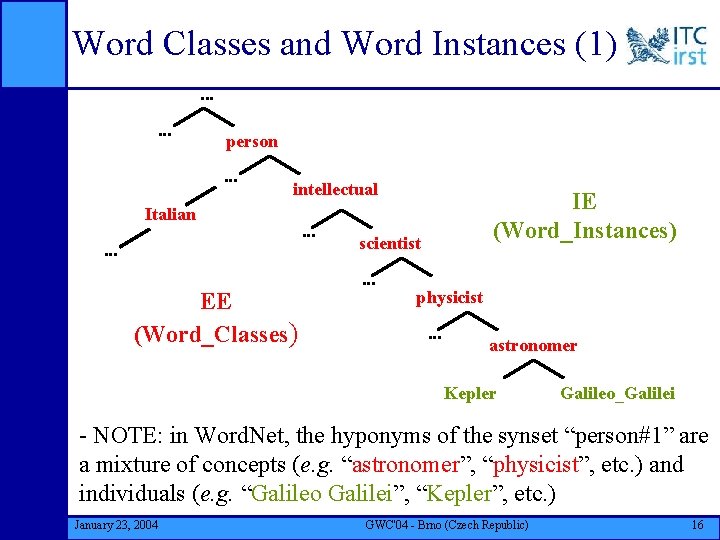

Word Classes and Word Instances (I)

[Figure: fragment of the WordNet hierarchy below person#1, showing intellectual, Italian, scientist, physicist, astronomer, and the individuals Kepler and Galileo_Galilei]

In WordNet, the hyponyms of the synset “person#1” are a mixture of concepts (e.g. “astronomer”, “physicist”) and individuals (e.g. “Galileo Galilei”, “Kepler”).

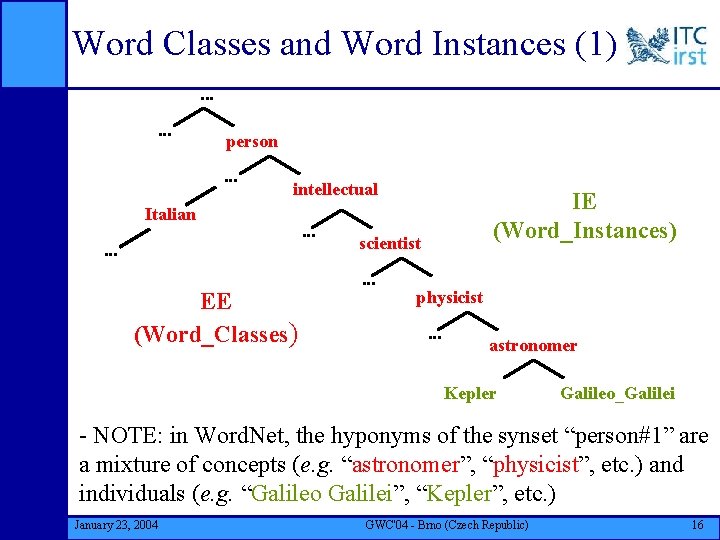

Word Classes and Word Instances (1)

[Figure: the same fragment below person#1, with the concepts marked as EE (Word_Classes) and the individuals Kepler and Galileo_Galilei marked as IE (Word_Instances)]

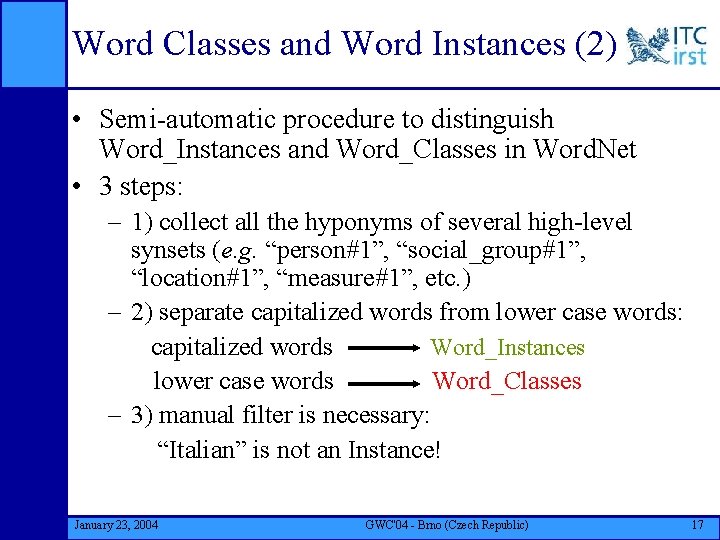

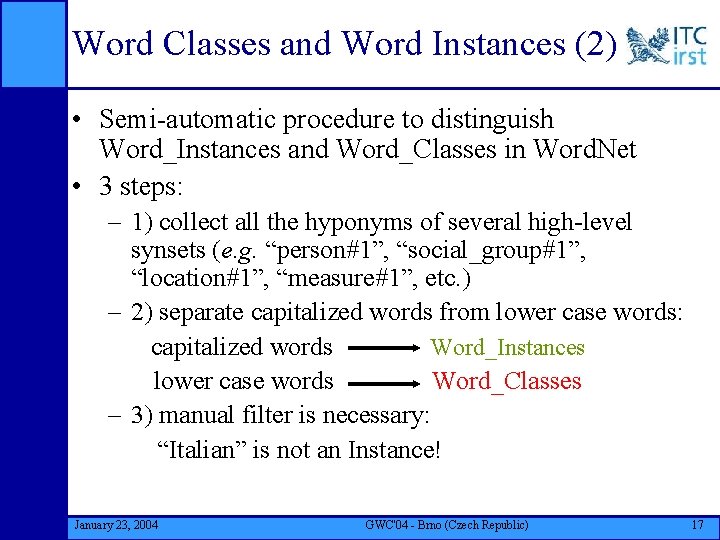

Word Classes and Word Instances (2)

- Semi-automatic procedure to distinguish Word_Instances from Word_Classes in WordNet, in 3 steps:
  1. collect all the hyponyms of several high-level synsets (e.g. “person#1”, “social_group#1”, “location#1”, “measure#1”)
  2. separate capitalized words from lower-case words: capitalized words → Word_Instances; lower-case words → Word_Classes
  3. apply a manual filter, which is necessary because some capitalized words are classes: “Italian” is not an Instance!
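
The three-step procedure can be sketched in Python as follows. The hyponym list is a hypothetical excerpt (in practice it would come from traversing WordNet below synsets such as person#1), and `MANUAL_CLASS_EXCEPTIONS` models the manual filter as a hand-written exception list, which is an assumption about how that filter could be recorded.

```python
from typing import Iterable, List, Set, Tuple

# Step 1 (hypothetical excerpt): hyponyms collected below person#1.
person_hyponyms = ["intellectual", "scientist", "physicist", "astronomer",
                   "Italian", "Kepler", "Galileo_Galilei"]

# Step 3: hand-curated exceptions, i.e. capitalized words that are classes.
MANUAL_CLASS_EXCEPTIONS: Set[str] = {"Italian"}


def split_instances_and_classes(
        words: Iterable[str], exceptions: Set[str]) -> Tuple[List[str], List[str]]:
    instances: List[str] = []
    classes: List[str] = []
    for word in words:
        # Step 2: capitalization is the first-pass signal for an instance...
        is_capitalized = word[:1].isupper()
        # ...and the manual filter overrides it.
        if is_capitalized and word not in exceptions:
            instances.append(word)
        else:
            classes.append(word)
    return instances, classes


instances, classes = split_instances_and_classes(person_hyponyms, MANUAL_CLASS_EXCEPTIONS)
print(instances)  # ['Kepler', 'Galileo_Galilei']
print(classes)    # ['intellectual', 'scientist', 'physicist', 'astronomer', 'Italian']
```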

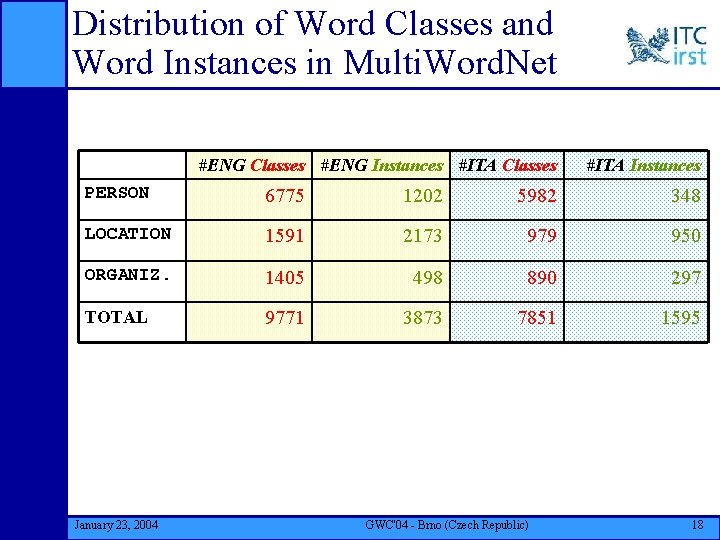

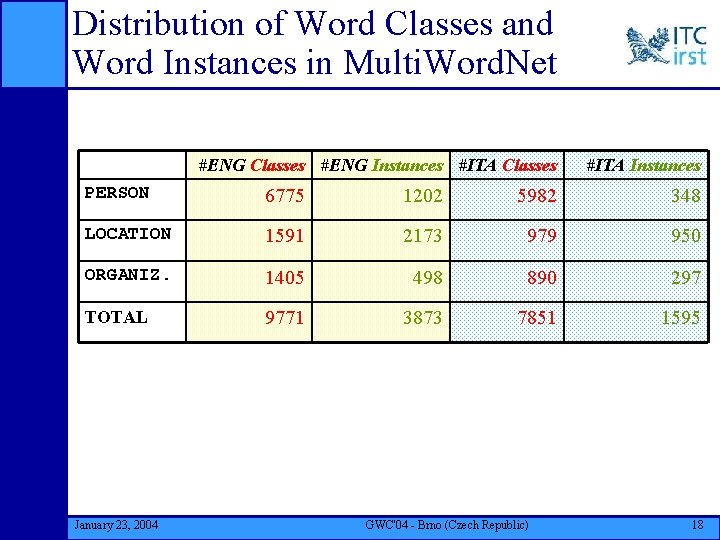

Distribution of Word Classes and Word Instances in MultiWordNet

| Category | ENG Classes (#) | ENG Instances (#) | ITA Classes (#) | ITA Instances (#) |
|---|---|---|---|---|
| PERSON | 6775 | 1202 | 5982 | 348 |
| LOCATION | 1591 | 2173 | 979 | 950 |
| ORGANIZATION | 1405 | 498 | 890 | 297 |
| TOTAL | 9771 | 3873 | 7851 | 1595 |

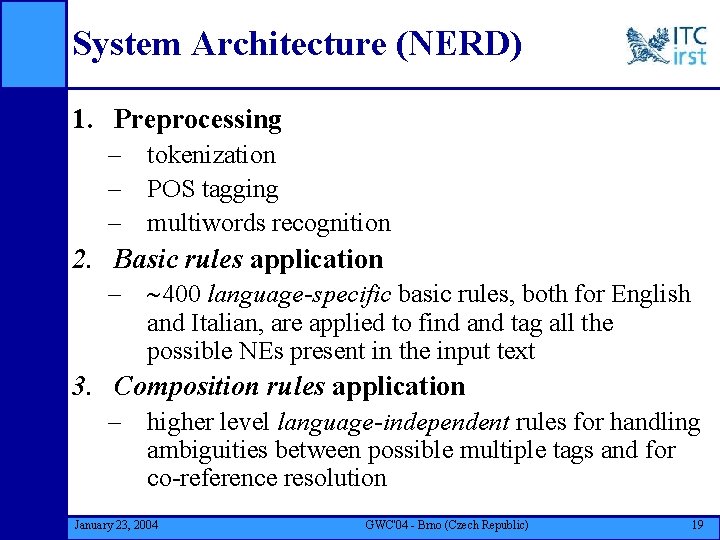

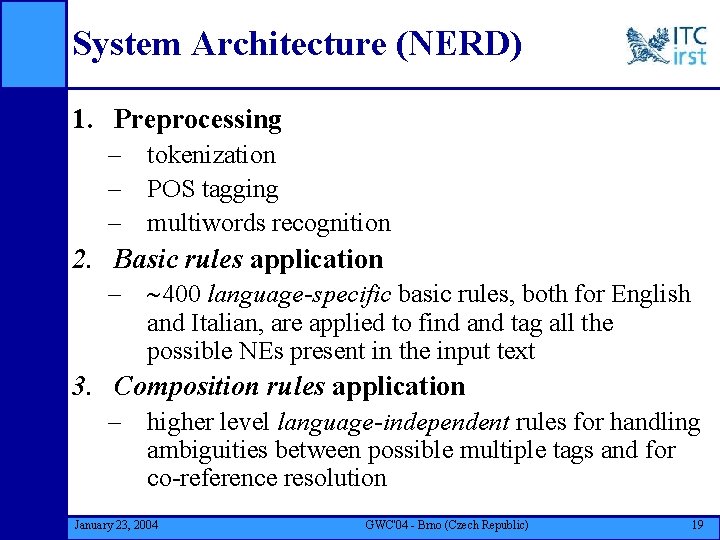

System Architecture (NERD)

1. Preprocessing
   - tokenization
   - POS tagging
   - multiword recognition
2. Basic rule application
   - 400 language-specific basic rules, for both English and Italian, are applied to find and tag all the possible NEs in the input text
3. Composition rule application
   - higher-level, language-independent rules that handle ambiguities between multiple possible tags and perform co-reference resolution

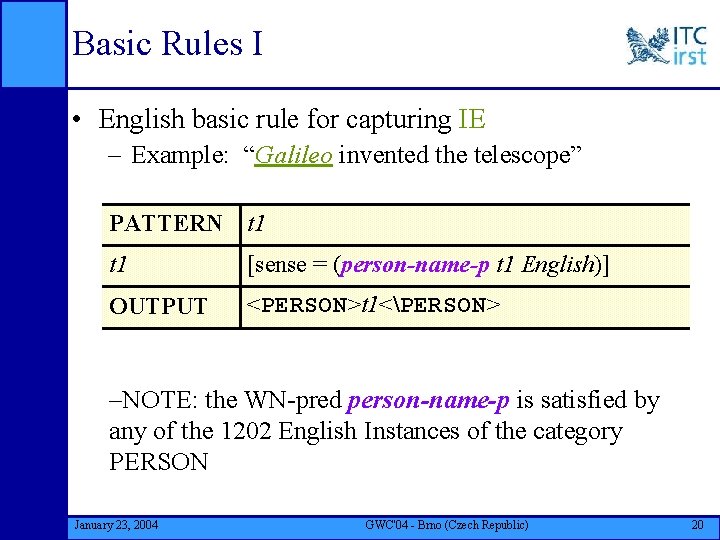

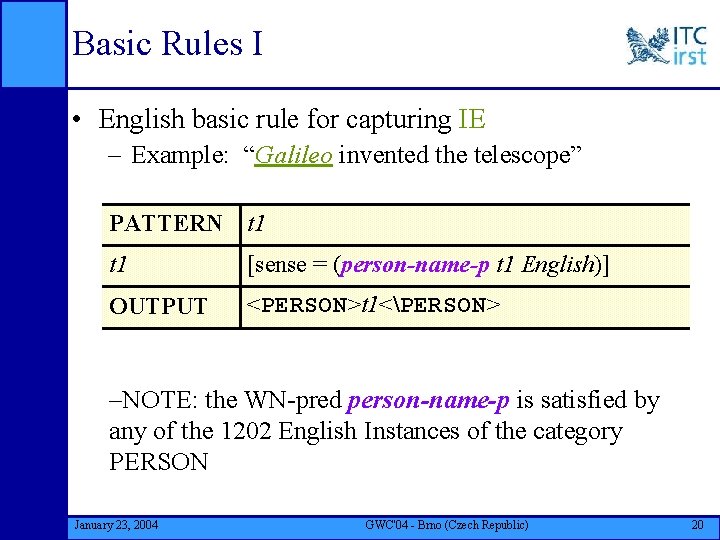

Basic Rules I

- English basic rule for capturing IE
  - Example: “Galileo invented the telescope”
  - PATTERN: t1 `[sense = (person-name-p t1 English)]`
  - OUTPUT: `<PERSON>t1<PERSON>`
- NOTE: the WN-pred person-name-p is satisfied by any of the 1202 English Instances of the category PERSON

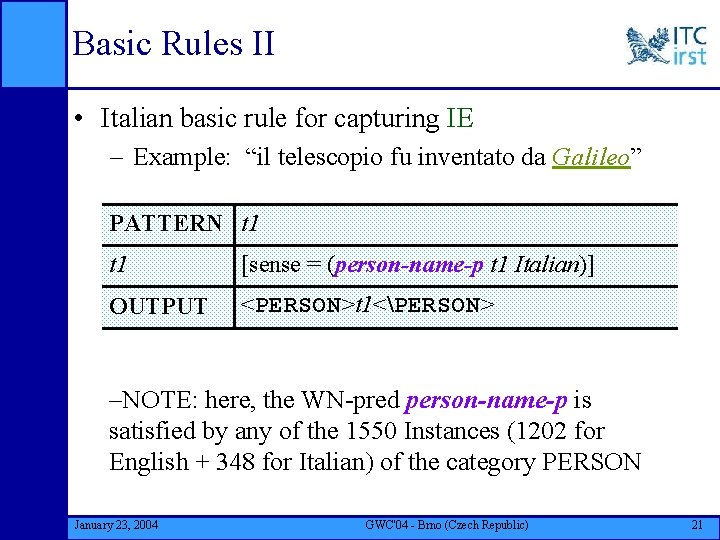

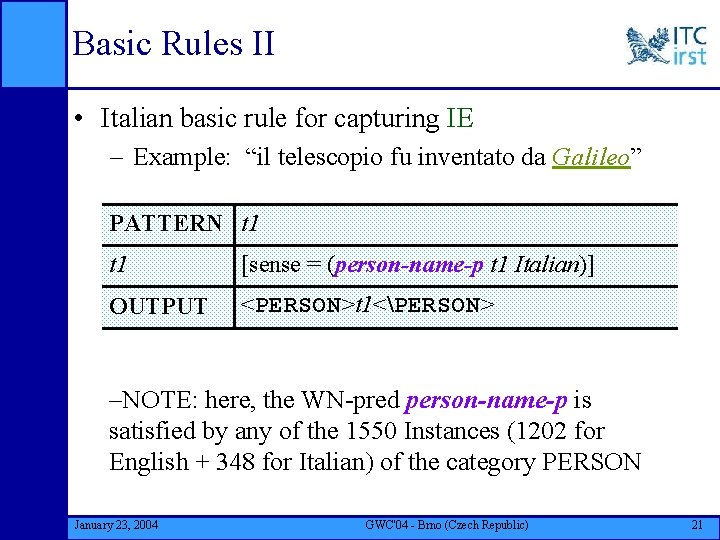

Basic Rules II

- Italian basic rule for capturing IE
  - Example: “il telescopio fu inventato da Galileo” (“the telescope was invented by Galileo”)
  - PATTERN: t1 `[sense = (person-name-p t1 Italian)]`
  - OUTPUT: `<PERSON>t1<PERSON>`
- NOTE: here, the WN-pred person-name-p is satisfied by any of the 1550 Instances (1202 English + 348 Italian) of the category PERSON
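
A sketch of how this basic rule might be parametrized by language, following the note that the Italian predicate is satisfied by both the English and the Italian Instances. The instance sets are tiny hypothetical excerpts, and `person_name_p` and `tag_person_names` are illustrative names, not the system's API.

```python
from typing import List, Set

# Hypothetical excerpts of the PERSON Word_Instances lists
# (the real lists hold 1202 English and 348 Italian instances).
ENGLISH_PERSON_INSTANCES: Set[str] = {"Galileo", "Kepler", "Reagan"}
ITALIAN_PERSON_INSTANCES: Set[str] = {"Manzoni"}


def person_name_p(word: str, language: str) -> bool:
    if language == "English":
        names = ENGLISH_PERSON_INSTANCES
    elif language == "Italian":
        # The Italian predicate also accepts the English instances (1202 + 348).
        names = ENGLISH_PERSON_INSTANCES | ITALIAN_PERSON_INSTANCES
    else:
        raise ValueError(f"unsupported language: {language}")
    return word in names


def tag_person_names(tokens: List[str], language: str) -> List[str]:
    """Basic rule: t1 [sense = (person-name-p t1 L)] -> <PERSON>t1</PERSON>."""
    return [f"<PERSON>{t}</PERSON>" if person_name_p(t, language) else t for t in tokens]


print(" ".join(tag_person_names("il telescopio fu inventato da Galileo".split(), "Italian")))
# prints: il telescopio fu inventato da <PERSON>Galileo</PERSON>
```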

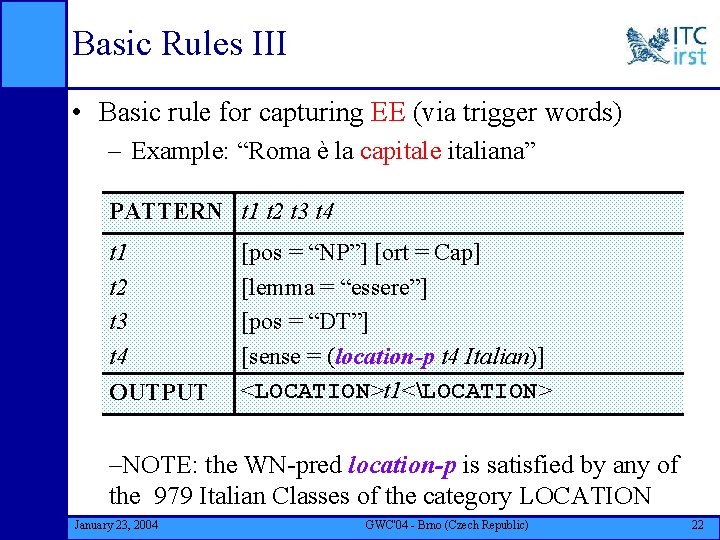

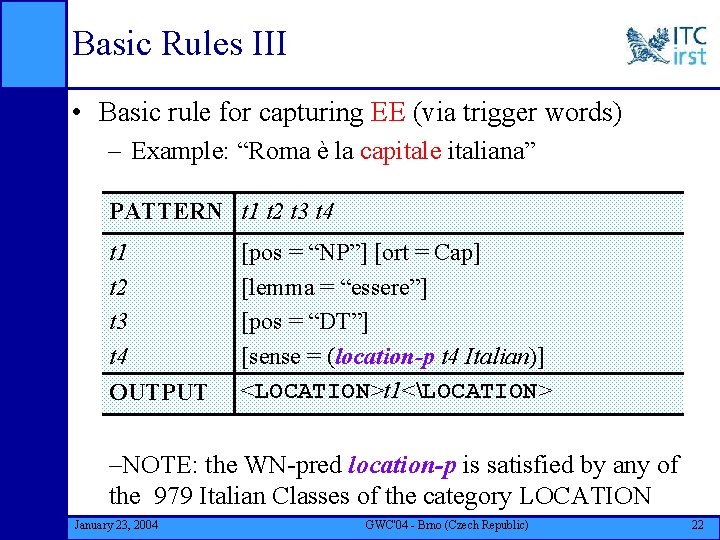

Basic Rules III

- Basic rule for capturing EE (via trigger words)
  - Example: “Roma è la capitale italiana” (“Rome is the Italian capital”)
  - PATTERN:
    - t1: `[pos = "NP"] [ort = Cap]`
    - t2: `[lemma = "essere"]` (“to be”)
    - t3: `[pos = "DT"]`
    - t4: `[sense = (location-p t4 Italian)]`
  - OUTPUT: `<LOCATION>t1<LOCATION>`
- NOTE: the WN-pred location-p is satisfied by any of the 979 Italian Classes of the category LOCATION

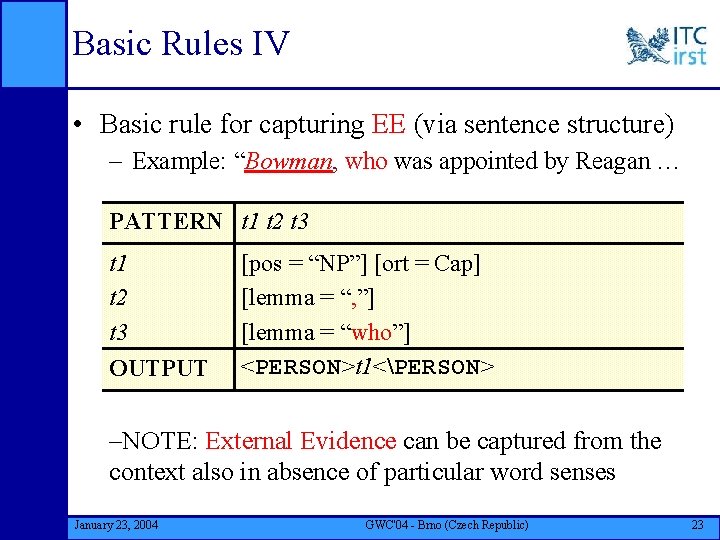

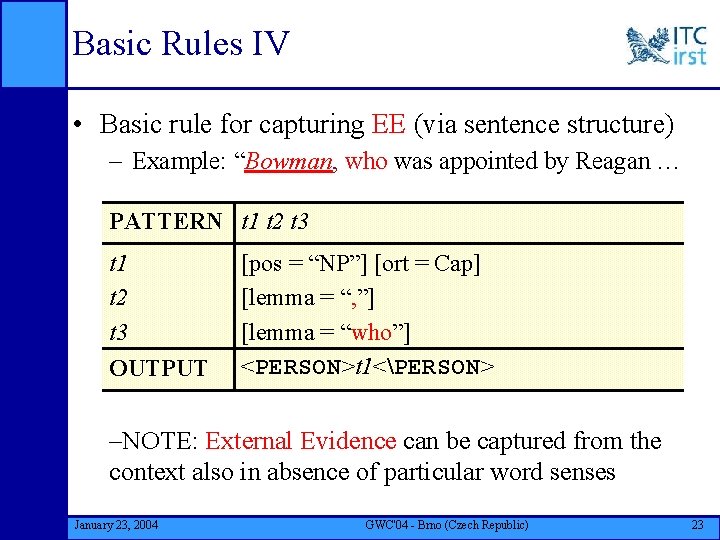

Basic Rules IV

- Basic rule for capturing EE (via sentence structure)
  - Example: “Bowman, who was appointed by Reagan …”
  - PATTERN:
    - t1: `[pos = "NP"] [ort = Cap]`
    - t2: `[lemma = ","]`
    - t3: `[lemma = "who"]`
  - OUTPUT: `<PERSON>t1<PERSON>`
- NOTE: external evidence can be captured from the context even in the absence of particular word senses
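
Because this rule checks only POS, capitalization and lemmas, it needs no WordNet lookup at all. A minimal Python sketch, with tokens assumed to be (text, POS, lemma) triples produced by the preprocessing stage; the function name is hypothetical.

```python
from typing import List, Tuple

Token = Tuple[str, str, str]  # (text, POS tag, lemma)


def tag_relative_clause_persons(tokens: List[Token]) -> List[str]:
    """t1 [pos = NP][ort = Cap], t2 [lemma = ","], t3 [lemma = "who"] -> <PERSON>t1</PERSON>.

    No WordNet sense is consulted: the context alone supplies the evidence.
    """
    out = [text for text, _, _ in tokens]
    for i in range(len(tokens) - 2):
        (text1, pos1, _), (_, _, lemma2), (_, _, lemma3) = tokens[i:i + 3]
        if pos1 == "NP" and text1[:1].isupper() and lemma2 == "," and lemma3 == "who":
            out[i] = f"<PERSON>{text1}</PERSON>"
    return out


sentence = [("Bowman", "NP", "Bowman"), (",", ",", ","), ("who", "WP", "who"),
            ("was", "VBD", "be"), ("appointed", "VBN", "appoint"),
            ("by", "IN", "by"), ("Reagan", "NP", "Reagan")]
print(" ".join(tag_relative_clause_persons(sentence)))
# prints: <PERSON>Bowman</PERSON> , who was appointed by Reagan
```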

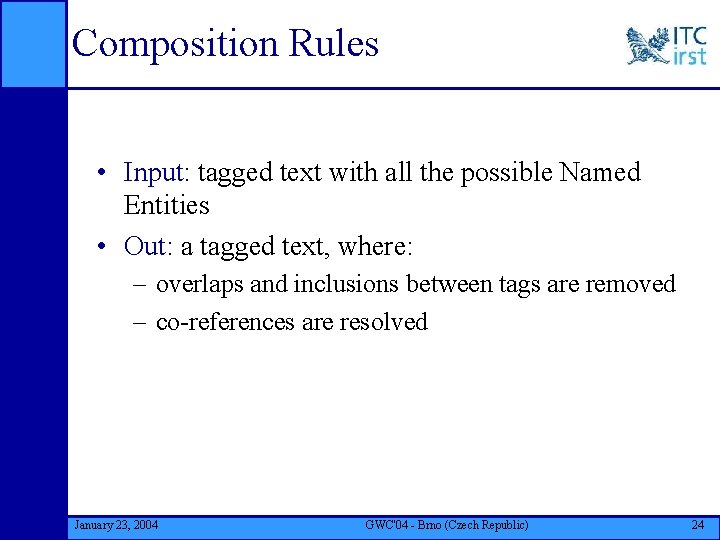

Composition Rules

- Input: tagged text with all the possible Named Entities
- Output: tagged text in which
  - overlaps and inclusions between tags are removed
  - co-references are resolved

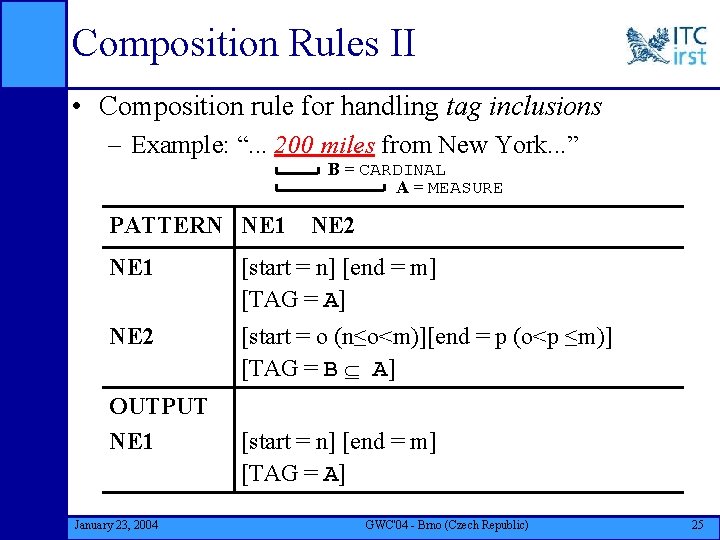

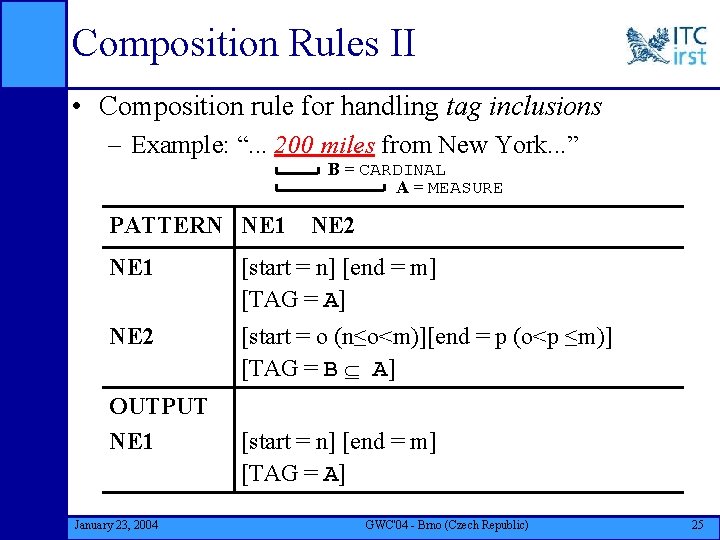

Composition Rules II

- Composition rule for handling tag inclusions
  - Example: “… 200 miles from New York …”, with A = MEASURE and B = CARDINAL
  - PATTERN: NE1 NE2, where
    - NE1: `[start = n] [end = m] [TAG = A]`
    - NE2: `[start = o (n≤o<m)] [end = p (o<p≤m)] [TAG = B A]`
  - OUTPUT: NE1 `[start = n] [end = m] [TAG = A]`
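
A minimal Python sketch of the inclusion rule over token spans, using the conditions n ≤ o < m and o < p ≤ m. Two choices here are assumptions rather than part of the rule as shown: the inner span must be strictly shorter (so two identical spans do not remove each other), and tags are not compared, since the `[TAG = B A]` relation is not fully legible. `NE` and `remove_inclusions` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class NE:
    start: int  # index of the first token
    end: int    # index one past the last token
    tag: str


def remove_inclusions(entities: List[NE]) -> List[NE]:
    """Drop each NE whose span lies inside a strictly longer NE's span."""
    def inside(inner: NE, outer: NE) -> bool:
        return (outer.start <= inner.start < outer.end       # n <= o < m
                and inner.start < inner.end <= outer.end     # o < p <= m
                and (inner.end - inner.start) < (outer.end - outer.start))
    return [ne for ne in entities if not any(inside(ne, other) for other in entities)]


# "200 miles from New York": tokens 0..4 = 200, miles, from, New, York
candidates = [NE(0, 2, "MEASURE"), NE(0, 1, "CARDINAL"), NE(3, 5, "LOCATION")]
print(remove_inclusions(candidates))
# keeps MEASURE (tokens 0-1) and LOCATION (tokens 3-4); CARDINAL (token 0) is dropped
```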

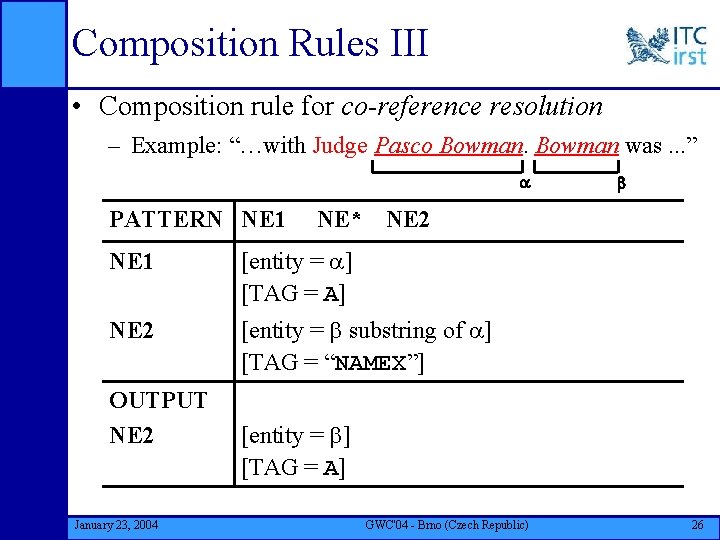

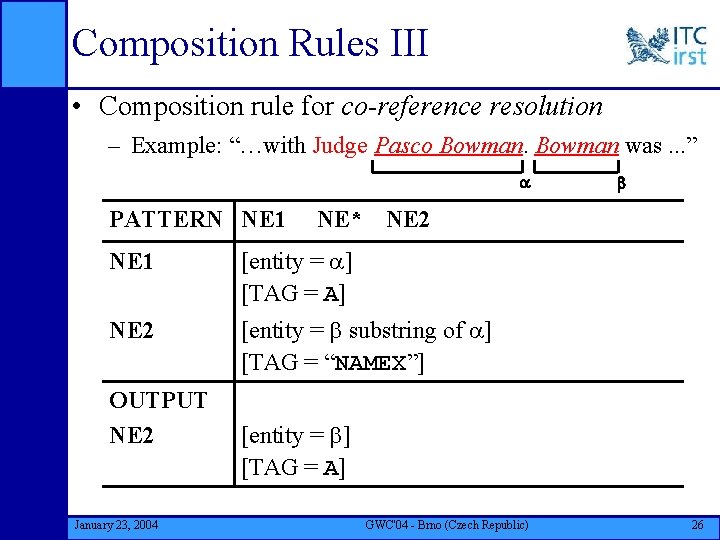

Composition Rules III

- Composition rule for co-reference resolution
  - Example: “…with Judge Pasco Bowman was…”
  - PATTERN: NE1 NE* NE2, where
    - NE1: `[entity = ] [TAG = A]`
    - NE2: `[entity = substring of ] [TAG = "NAMEX"]`
  - OUTPUT: NE2 `[entity = ] [TAG = A]`
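
The co-reference rule can be sketched as: an entity tagged only with the generic NAMEX inherits the type of an earlier typed entity whose string contains it. This Python sketch is hypothetical; in particular, searching the most recent antecedent first is an assumption, since the rule does not say which antecedent wins when several match.

```python
from typing import List, Tuple


def resolve_coreference(entities: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """entities: (surface string, tag) pairs in document order.

    An untyped "NAMEX" whose string is contained in an earlier typed NE
    inherits that NE's tag (most recent antecedent first).
    """
    resolved: List[Tuple[str, str]] = []
    for text, tag in entities:
        if tag == "NAMEX":
            for prev_text, prev_tag in reversed(resolved):
                if prev_tag != "NAMEX" and text in prev_text:
                    tag = prev_tag
                    break
        resolved.append((text, tag))
    return resolved


doc = [("Pasco Bowman", "PERSON"), ("Reagan", "PERSON"), ("Bowman", "NAMEX")]
print(resolve_coreference(doc))
# prints: [('Pasco Bowman', 'PERSON'), ('Reagan', 'PERSON'), ('Bowman', 'PERSON')]
```

Python's `in` performs a character-level substring test, so a fragment such as "Bow" would also match; comparing whole tokens would be a natural refinement.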

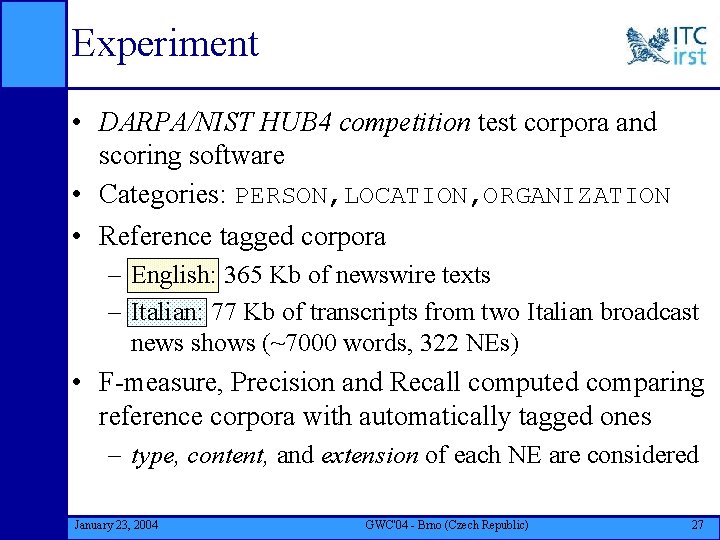

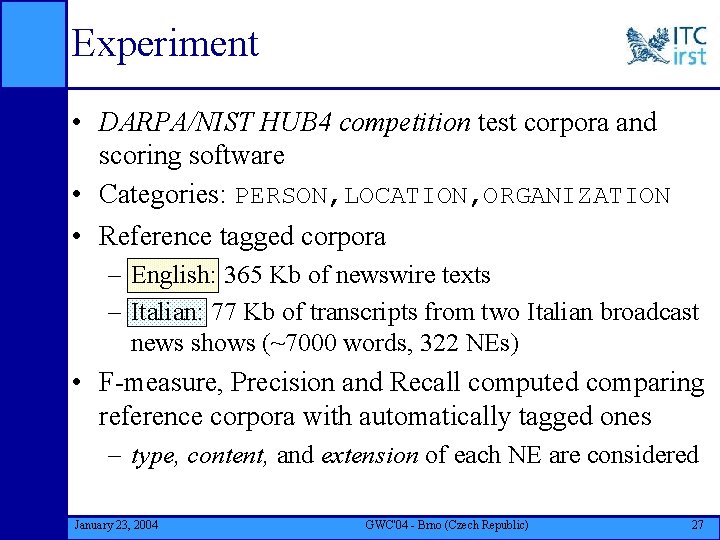

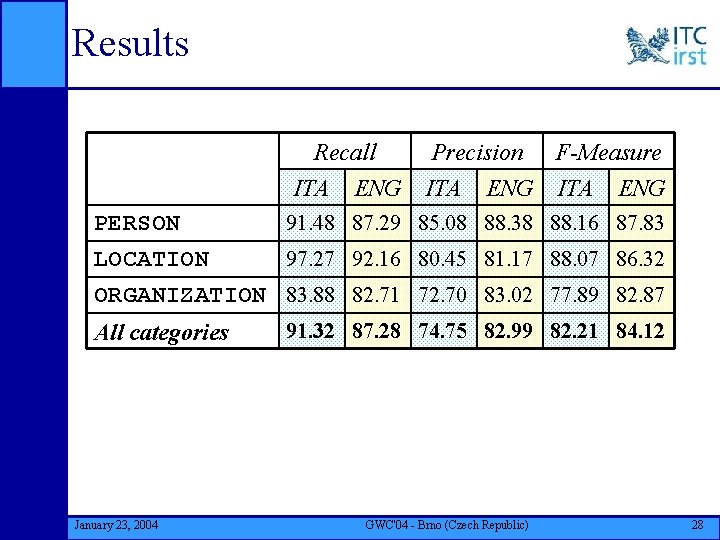

Experiment

- Test corpora and scoring software from the DARPA/NIST HUB-4 competition
- Categories: PERSON, LOCATION, ORGANIZATION
- Reference tagged corpora:
  - English: 365 Kb of newswire texts
  - Italian: 77 Kb of transcripts from two Italian broadcast news shows (~7000 words, 322 NEs)
- F-measure, Precision and Recall are computed by comparing the reference corpora with the automatically tagged ones; the type, content, and extent of each NE are all taken into account

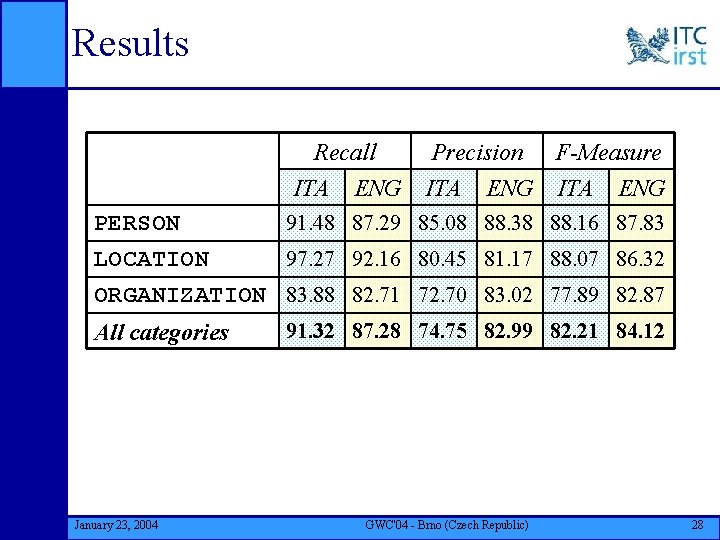

Results

| Category | Recall ITA | Recall ENG | Precision ITA | Precision ENG | F-Measure ITA | F-Measure ENG |
|---|---|---|---|---|---|---|
| PERSON | 91.48 | 87.29 | 85.08 | 88.38 | 88.16 | 87.83 |
| LOCATION | 97.27 | 92.16 | 80.45 | 81.17 | 88.07 | 86.32 |
| ORGANIZATION | 83.88 | 82.71 | 72.70 | 83.02 | 77.89 | 82.87 |
| All categories | 91.32 | 87.28 | 74.75 | 82.99 | 82.21 | 84.12 |
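
Assuming the scoring software reports the standard balanced F-measure, F = 2PR/(P + R), the per-category F values can be recomputed from the Precision and Recall columns. The check below agrees with the table to within about 0.01, a difference consistent with the inputs being rounded to two decimals. `f_measure` and `RESULTS` are names introduced for this sketch.

```python
def f_measure(precision: float, recall: float) -> float:
    """Balanced F-measure: harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (recall, precision, reported F) per category, copied from the Results table.
RESULTS = {
    ("PERSON", "ITA"): (91.48, 85.08, 88.16),
    ("PERSON", "ENG"): (87.29, 88.38, 87.83),
    ("LOCATION", "ITA"): (97.27, 80.45, 88.07),
    ("LOCATION", "ENG"): (92.16, 81.17, 86.32),
    ("ORGANIZATION", "ITA"): (83.88, 72.70, 77.89),
    ("ORGANIZATION", "ENG"): (82.71, 83.02, 82.87),
}

for (category, lang), (recall, precision, reported) in RESULTS.items():
    recomputed = f_measure(precision, recall)
    print(f"{category:<12} {lang}  recomputed {recomputed:.2f}  reported {reported:.2f}")
```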

Conclusion and Future Work

- We presented an NE recognition system based on information represented in WordNet
- Language-independent predicates for NEs have been defined
- Results on two languages show that the approach performs on a par with state-of-the-art rule-based systems
- The system has been successfully integrated into a QA system
- Future work:
  - move to WordNet 2.0
  - integrate gazetteers
  - use SUMO concepts