Using TDT Data to Improve BN Acoustic Models

Long Nguyen and Bing Xiang
STT Workshop, Martigny, Switzerland, Sept. 5-6, 2003

Overview
· TDT Data
· Selection procedure
  – Data pre-processing
  – Lightly-supervised decoding
  – Selection
· Experimental results
· Conclusion & future work

TDT Data
· TDT 2:
  – Jan 1998 to June 1998
  – Four sources (ABC, CNN, PRI, VOA)
  – 1034 shows, 633 hrs
· TDT 3:
  – Oct 1998 to Dec 1998
  – Six sources (ABC, CNN, MNB/MSN, NBC, PRI, VOA)
  – 731 shows, 475 hrs
· TDT 4:
  – Oct 2000 to Jan 2001
  – Same six sources as in TDT 3
  – 425 shows, 294 hrs

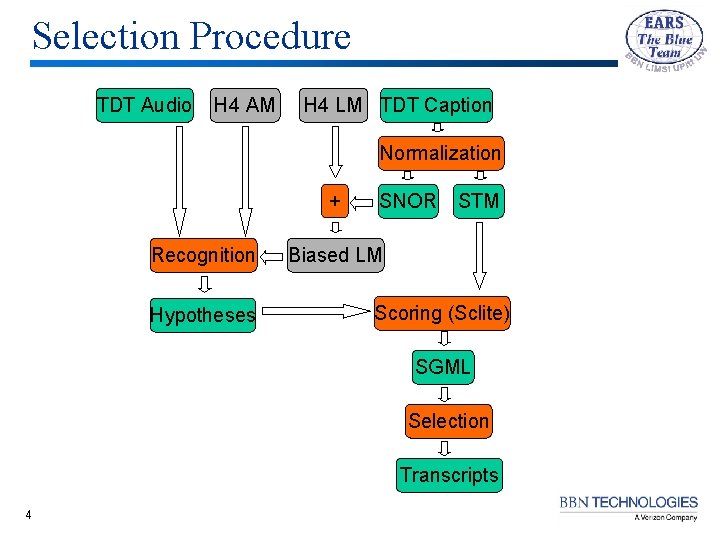

Selection Procedure
[Flowchart: TDT caption → normalization → SNOR and STM; H4 LM + SNOR → biased LM; TDT audio + H4 AM + biased LM → recognition → hypotheses; hypotheses vs. STM → scoring (sclite) → SGML → selection → transcripts]

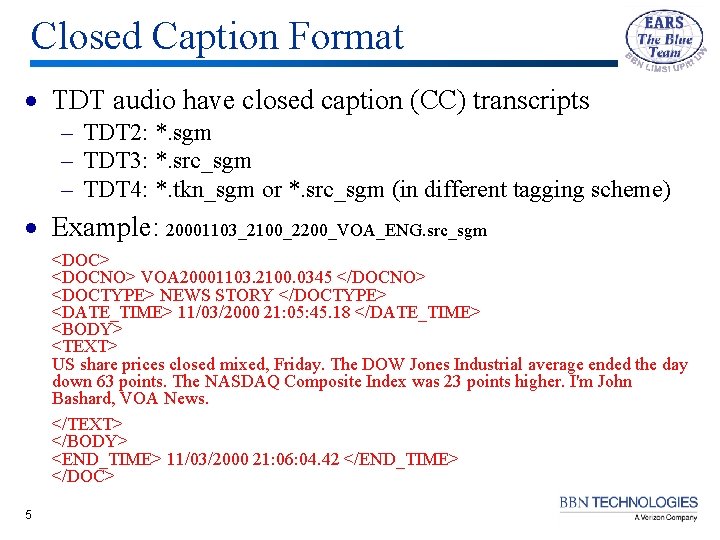

Closed Caption Format
· Much of the TDT audio has closed-caption (CC) transcripts
  – TDT 2: *.sgm
  – TDT 3: *.src_sgm
  – TDT 4: *.tkn_sgm or *.src_sgm (with a different tagging scheme)
· Example: 20001103_2100_2200_VOA_ENG.src_sgm

    <DOC>
    <DOCNO> VOA20001103.2100.0345 </DOCNO>
    <DOCTYPE> NEWS STORY </DOCTYPE>
    <DATE_TIME> 11/03/2000 21:05:45.18 </DATE_TIME>
    <BODY>
    <TEXT>
    US share prices closed mixed, Friday. The DOW Jones Industrial average ended the day down 63 points. The NASDAQ Composite Index was 23 points higher. I'm John Bashard, VOA News.
    </TEXT>
    </BODY>
    <END_TIME> 11/03/2000 21:06:04.42 </END_TIME>
    </DOC>

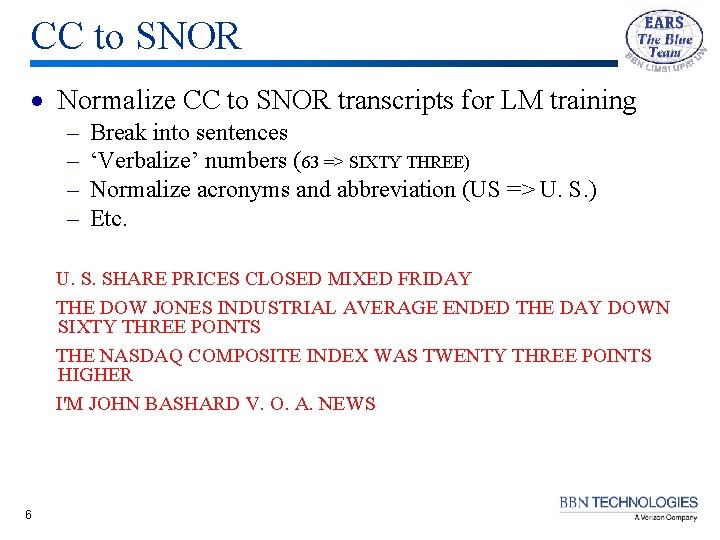

CC to SNOR
· Normalize CC to SNOR (Standard Normalized Orthographic Representation) transcripts for LM training
  – Break into sentences
  – 'Verbalize' numbers (63 => SIXTY THREE)
  – Normalize acronyms and abbreviations (US => U. S.)
  – Etc.
· Example:

    U. S. SHARE PRICES CLOSED MIXED FRIDAY
    THE DOW JONES INDUSTRIAL AVERAGE ENDED THE DAY DOWN SIXTY THREE POINTS
    THE NASDAQ COMPOSITE INDEX WAS TWENTY THREE POINTS HIGHER
    I'M JOHN BASHARD V. O. A. NEWS
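The normalization steps above can be sketched in a few lines of Python. This is a minimal illustration, not the normalizer used for this work: the number tables only cover 0 to 99, the acronym map (`ACRONYMS`) is a hypothetical two-entry stand-in, and the sentence splitter naively breaks after any `.`, `!` or `?`, so abbreviations such as "Mr." would need extra handling.

```python
import re
from typing import List

# Hypothetical, deliberately tiny tables; a real normalizer needs much larger
# number, acronym and abbreviation coverage.
ONES = ["ZERO", "ONE", "TWO", "THREE", "FOUR", "FIVE", "SIX", "SEVEN", "EIGHT",
        "NINE", "TEN", "ELEVEN", "TWELVE", "THIRTEEN", "FOURTEEN", "FIFTEEN",
        "SIXTEEN", "SEVENTEEN", "EIGHTEEN", "NINETEEN"]
TENS = ["", "", "TWENTY", "THIRTY", "FORTY", "FIFTY", "SIXTY", "SEVENTY",
        "EIGHTY", "NINETY"]
ACRONYMS = {"US": "U. S.", "VOA": "V. O. A."}  # spelled out letter by letter


def verbalize(n: int) -> str:
    """Spell out an integer in 0..99 (e.g. 63 -> 'SIXTY THREE')."""
    if n < 20:
        return ONES[n]
    tens, ones = divmod(n, 10)
    return TENS[tens] if ones == 0 else f"{TENS[tens]} {ONES[ones]}"


def cc_to_snor(caption: str) -> List[str]:
    """Convert caption text to upper-case SNOR, one sentence per entry."""
    snor = []
    # Naive sentence break after ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", caption.strip()):
        tokens = []
        for raw in sentence.split():
            word = raw.strip('.,!?;:"')      # drop punctuation, keep apostrophes
            if word in ACRONYMS:             # case-sensitive: "US" but not "us"
                tokens.append(ACRONYMS[word])
            elif word.isdigit() and int(word) < 100:
                tokens.append(verbalize(int(word)))
            elif word:
                tokens.append(word.upper())
        if tokens:
            snor.append(" ".join(tokens))
    return snor
```

Applied to the VOA caption, this reproduces the four SNOR sentences shown on the slide; the acronym lookup is case-sensitive so that the pronoun "us" is not spelled out as letters.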

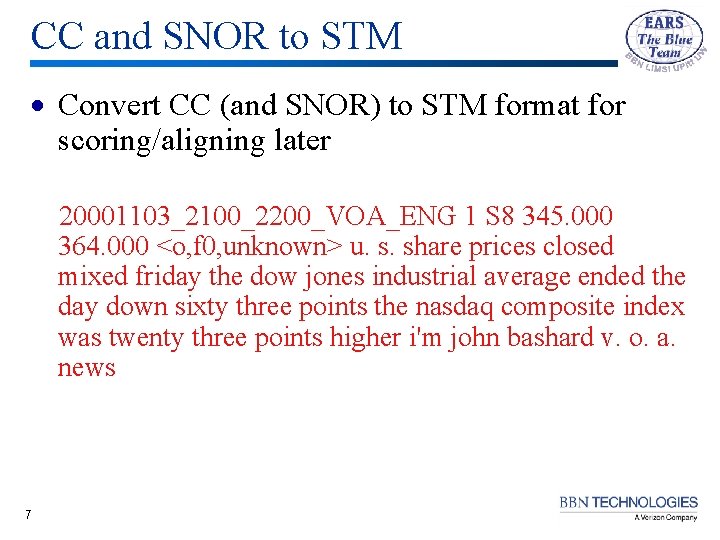

CC and SNOR to STM
· Convert CC (and SNOR) to STM format for scoring/aligning later

    20001103_2100_2200_VOA_ENG 1 S8 345.000 364.000 <o,f0,unknown> u. s. share prices closed mixed friday the dow jones industrial average ended the day down sixty three points the nasdaq composite index was twenty three points higher i'm john bashard v. o. a. news
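The STM record above can be derived from the caption's SGML time stamps. In the example, DATE_TIME 21:05:45.18 and END_TIME 21:06:04.42 fall 345.18 s and 364.42 s into a show that starts at 21:00 (the `2100` in the file name), while the STM line shows 345.000 and 364.000, so this sketch assumes times are truncated to whole seconds. The helper names, the fixed channel `1`, and passing the speaker ID in by hand are assumptions for illustration; shows that cross midnight are not handled.

```python
import math
from datetime import datetime


def seconds_into_show(stamp: str, show_start: str) -> float:
    """Offset (s) of an SGML time stamp such as '11/03/2000 21:05:45.18' from
    the show start 'HHMM' taken from the file name (e.g. '2100')."""
    t = datetime.strptime(stamp, "%m/%d/%Y %H:%M:%S.%f")
    start = t.replace(hour=int(show_start[:2]), minute=int(show_start[2:]),
                      second=0, microsecond=0)
    return (t - start).total_seconds()


def stm_line(show_id: str, speaker: str, begin: float, end: float,
             snor_text: str, label: str = "<o,f0,unknown>") -> str:
    """One STM record: file channel speaker begin end <label> transcript.
    Assumption: times are truncated to whole seconds, as in the example."""
    return (f"{show_id} 1 {speaker} {math.floor(begin):.3f} "
            f"{math.floor(end):.3f} {label} {snor_text.lower()}")
```

Lower-casing the SNOR text is all that is needed for the transcript field, since the spelled-out acronyms ("u. s.") and verbalized numbers are already in place.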

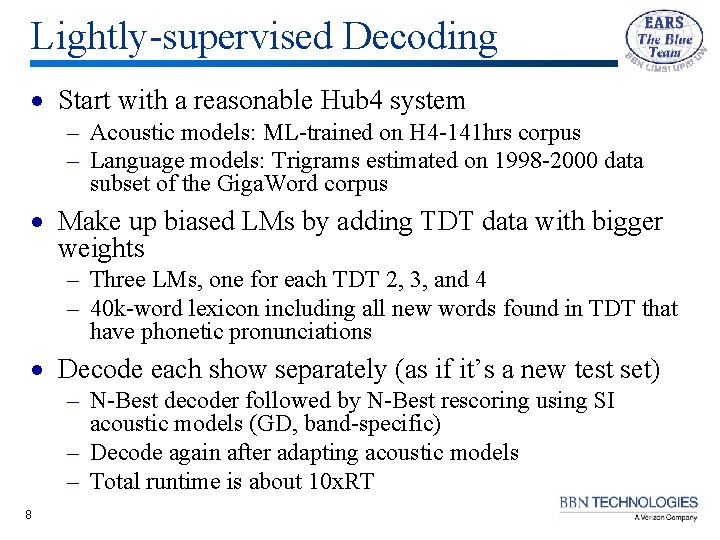

Lightly-supervised Decoding
· Start with a reasonable Hub 4 system
  – Acoustic models: ML-trained on the H4-141 hrs corpus
  – Language models: trigrams estimated on the 1998-2000 data subset of the GigaWord corpus
· Build biased LMs by adding the TDT caption text with higher weights
  – Three LMs, one each for TDT 2, 3, and 4
  – 40k-word lexicon including all new words found in TDT that have phonetic pronunciations
· Decode each show separately (as if it were a new test set)
  – N-best decoding followed by N-best rescoring with SI acoustic models (GD, band-specific)
  – Decode again after adapting the acoustic models
  – Total runtime is about 10xRT
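The slide does not say exactly how the TDT text is weighted; one common way to "add data with bigger weights" is to scale its n-gram counts before estimating the LM. The sketch below assumes that count-weighting scheme, uses bigrams instead of trigrams for brevity, and omits smoothing and the lexicon step; `weight` is a hypothetical tuning parameter.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Bigram = Tuple[str, str]


def bigram_counts(sentences: Iterable[str]) -> Counter:
    """Count bigrams, padding each SNOR sentence with <s> and </s>."""
    counts: Counter = Counter()
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        counts.update(zip(words, words[1:]))
    return counts


def biased_counts(base: Counter, tdt: Counter, weight: float) -> Dict[Bigram, float]:
    """Pool background and TDT counts, scaling the TDT counts by `weight`."""
    merged = {bigram: float(c) for bigram, c in base.items()}
    for bigram, c in tdt.items():
        merged[bigram] = merged.get(bigram, 0.0) + weight * c
    return merged


def mle_prob(counts: Dict[Bigram, float], prev: str, word: str) -> float:
    """Unsmoothed maximum-likelihood P(word | prev)."""
    history = sum(c for (p, _), c in counts.items() if p == prev)
    return counts.get((prev, word), 0.0) / history if history else 0.0
```

With `weight=1` the TDT text counts like any other text; raising it shifts probability mass toward the words and phrasing of the show's own captions, which is what makes the LM "biased" toward that show.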

Alignment
· Use sclite to align the hypotheses with the CC transcripts, taking advantage of the time-stamped word alignments stored in the SGML output

    C,"u.",345.270+345.440:
    C,"s.",345.440+345.610:
    C,"share",345.610+345.840:
    C,"prices",345.840+346.290:
    C,"closed",346.290+346.630:
    C,"mixed",346.630+347.000:
    C,"friday",347.000+347.490:
    C,"the",347.490+347.580:
    C,"dow",347.580+347.800:
    C,"jones",347.800+348.110:
    C,"industrial",348.110+348.720:
    C,"average",348.720+349.130:
    C,"ended",349.130+349.350:
    C,"the",349.350+349.430:
    C,"day",349.430+349.610:
    C,"down",349.610+350.020:
    C,"sixty",350.020+350.410:
    C,"three",350.410+350.680:
    C,"points",350.680+351.220:
    C,"the",351.840+351.940:
    C,"nasdaq",351.940+352.500:
    C,"composite",352.500+352.960:
    C,"index",352.960+353.330:
    C,"was",353.330+353.490:
    C,"twenty",353.490+353.800:
    C,"three",353.800+354.020:
    C,"points",354.020+354.380:
    C,"higher",354.380+354.860:
    C,"i'm",355.710+355.900:
    C,"john",355.900+356.180:
    I,,"burr",356.180+356.310:
    S,"bashard","shard",356.310+356.860:
    C,"v.",356.860+357.010:
    C,"o.",357.010+357.160:
    C,"a.",357.150+357.300:
    C,"news",357.300+358.060
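The alignment entries above follow the pattern `TYPE,"ref","hyp",begin+end`, separated by colons, with correct (C) words usually printed once. A minimal parser, assuming only that layout as it appears on this slide (deletions, `D`, are assumed to carry an empty hypothesis and no times), could use Python's standard `csv` module to handle the quoted fields:

```python
import csv
from typing import List, NamedTuple, Optional


class AlignedWord(NamedTuple):
    kind: str               # C=correct, S=substitution, I=insertion, D=deletion
    ref: str                # closed-caption word ("" for insertions)
    hyp: str                # decoder word ("" for deletions)
    begin: Optional[float]  # hypothesis start time in seconds, if present
    end: Optional[float]


def parse_path(path: str) -> List[AlignedWord]:
    """Split a colon-separated alignment path into AlignedWord records."""
    words = []
    for entry in path.split(":"):
        entry = entry.strip()
        if not entry:
            continue
        fields = next(csv.reader([entry]))      # respects the quoted words
        kind, times, middle = fields[0], fields[-1], fields[1:-1]
        if len(middle) == 1:                    # C printed once: ref == hyp
            ref = hyp = middle[0]
        else:
            ref, hyp = middle[0], middle[1]
        begin = end = None
        if "+" in times:
            begin, end = (float(t) for t in times.split("+"))
        words.append(AlignedWord(kind, ref, hyp, begin, end))
    return words
```

The hypothesis times are what make the selected words usable as acoustic training data: each correct word comes with the audio span the decoder assigned to it.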

Selection Strategy
· Search through the SGML file to select
  – Utterances having no errors
  – Phrases of 3+ contiguous correct words
· In effect, use only the subset of words on which the CC transcripts and the decoder's hypotheses agree
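The two selection rules can be expressed directly over a list of aligned words. This sketch assumes each word is a `(kind, word, begin, end)` tuple such as a parsed SGML entry would provide; the function name and the `min_run` parameter are illustrative. Applied to the end of the example utterance, it drops "i'm john" (only two correct words before the insertion) and keeps "v. o. a. news".

```python
from typing import List, Optional, Tuple

# (kind, word, begin, end): kind is C, S, I or D; times are hypothesis times.
Word = Tuple[str, str, Optional[float], Optional[float]]
Segment = Tuple[float, float, str]


def select(utterance: List[Word], min_run: int = 3) -> List[Segment]:
    """Keep an error-free utterance whole; otherwise keep only runs of at
    least `min_run` contiguous correct (C) words."""
    if utterance and all(w[0] == "C" for w in utterance):
        runs = [utterance]
    else:
        runs, current = [], []
        for w in utterance:
            if w[0] == "C":
                current.append(w)
            else:                  # any error ends the current run
                runs.append(current)
                current = []
        runs.append(current)
        runs = [r for r in runs if len(r) >= min_run]
    # Each selected run becomes (start time, end time, transcript).
    return [(r[0][2], r[-1][3], " ".join(w[1] for w in r)) for r in runs]
```

An error-free utterance is kept even if it is shorter than `min_run`, mirroring the first rule on the slide; the run-length threshold only applies once an utterance contains errors.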

Selection Results
· The amount of data selected from TDT 2, TDT 3 and TDT 4 (in hours)
· Only 68% of the TDT audio has CC (966/1402 hrs)
  – Determined by observing long passages of contiguous insertion errors (stretches of decoded speech with no caption text)
· Selection yield rate is 72% (702/966 hrs)
  – Or a 50% yield rate relative to the amount of raw audio data
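Two pieces of this slide can be made concrete. The first function is a hypothetical version of the uncaptioned-audio estimate: it sums long runs of contiguous insertions, with an assumed 30-second minimum run length (the slide gives no threshold). The second just reproduces the ratios; the exact values are 966/1402 ≈ 68.9%, 702/966 ≈ 72.7% and 702/1402 ≈ 50.1%, so the slide's 68% and 72% appear to be truncated rather than rounded.

```python
from typing import Dict, List, Tuple

Aligned = Tuple[str, float, float]   # (kind, begin, end) per aligned word


def uncaptioned_seconds(words: List[Aligned], min_seconds: float = 30.0) -> float:
    """Total duration of runs of contiguous insertions (I) lasting at least
    `min_seconds`: decoded speech with no caption text to align to."""
    total, run_start, run_end = 0.0, None, None
    for kind, begin, end in words + [("END", 0.0, 0.0)]:  # sentinel flushes last run
        if kind == "I":
            if run_start is None:
                run_start = begin
            run_end = end
            continue
        if run_start is not None and run_end - run_start >= min_seconds:
            total += run_end - run_start
        run_start = None
    return total


def rates(total_hrs: float, captioned_hrs: float, selected_hrs: float) -> Dict[str, float]:
    """Caption coverage and selection yields, as fractions."""
    return {
        "captioned": captioned_hrs / total_hrs,            # share of audio with CC
        "yield_of_captioned": selected_hrs / captioned_hrs,
        "yield_of_raw": selected_hrs / total_hrs,
    }
```

Short insertion runs (a stray "burr") are ordinary recognition errors; only long ones are treated as missing captions, which is why a duration threshold is needed at all.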

Scalability
· Trained 4 sets of acoustic models
  – ML, HLDA-SAT only
  – (not yet ready to use MMI training)
· System parameters grow as more data is added if the thresholds and/or criteria for speaker clustering, state clustering, and Gaussian mixing stay fixed
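A toy calculation shows why fixed thresholds make the system grow with the data. It assumes, purely for illustration, that one diagonal-covariance Gaussian is allotted per `min_frames_per_gaussian` frames of training data, a 10 ms frame shift, and 39-dimensional features; none of these values come from the system described here.

```python
FRAMES_PER_HOUR = 360_000  # assumption: 10 ms frame shift (100 frames/s)


def num_gaussians(total_frames: int, min_frames_per_gaussian: int) -> int:
    """Toy rule: allot one Gaussian per `min_frames_per_gaussian` frames."""
    return max(1, total_frames // min_frames_per_gaussian)


def num_parameters(total_frames: int, min_frames_per_gaussian: int, dim: int) -> int:
    # Diagonal-covariance Gaussian: dim means + dim variances + 1 mixture weight.
    return num_gaussians(total_frames, min_frames_per_gaussian) * (2 * dim + 1)


for hours in (150, 300, 600):
    print(hours, num_parameters(hours * FRAMES_PER_HOUR, 5000, 39))
```

Under such a rule, each doubling of the training hours doubles the parameter count, so the clustering thresholds would need to be revisited if model size is to stay bounded.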

Experimental Results
· Tested on the BN dev03 test set (h4d03)
· Used the same RT03 evaluation LMs
· Doubling the data (150 => 300 hrs) gave a 0.7% absolute reduction in WER
· Doubling again (300 => 600 hrs) gave an additional 0.6% absolute reduction

Un-Adapted Results in Detail
· Significant WER reduction across all shows when adding the TDT 4 data to the Hub-4 BN data

Adapted Results in Detail
· No noticeable reduction observed for the MSN and NBC shows when adding the TDT 2 data
  – These two sources are not part of the TDT 2 corpus

Summary
· Proposed an effective strategy for automatically selecting BN audio data that has closed-caption transcripts as (additional) acoustic training data
· 68% of the TDT audio data is captioned
· Selection yield rate is 72% of the captioned data
· Adding 450 hrs of selected data from the TDT 2 and TDT 4 corpora provides a 1.3% absolute reduction in WER on the BN dev03 test set

Future Work
· Obtain results when adding the TDT 3 data
· Improve the biased LMs and retry
· Understand the differences/errors in aligning the hypotheses with the closed captions to refine the selection criteria
· Cooperate with other sites to speed up and improve the data selection process
· Use MMI training with this large amount of training data
