
Using Systems Thinking in Evaluation Capacity Building: the Systems Evaluation Protocol (SEP)
Margaret A. Johnson, Ph.D. Candidate; Wanda Casillas, Ph.D. Candidate; Jen Brown Urban, Ph.D.; William Trochim, Ph.D.

What do you think? You can’t do evaluation capacity building without doing systems thinking.

An even bigger claim… All thinking is systems thinking.

This might be easier to see… Systems thinking is one element of evaluative thinking.

So what is systems thinking?
• It’s causal loop analysis!
• No, it’s social network analysis!
• No, it’s system dynamics…

Central systems thinking (ST) themes in evaluation
Williams on common patterns in ST:
- perspectives
- boundaries
- entangled systems
Patton on systems framework premises in system dynamics modeling:
- the whole is greater than the sum of its parts; parts are interdependent
- the focus is on interconnected relationships
- systems are composed of subsystems; context matters
- system boundaries are necessary but arbitrary

The Big Picture
“Systems evaluation considers the complex factors inherent within the larger structure or system within which the program is embedded. The goal is to accomplish high-quality evaluation with integration across organizational levels and structures.”
~William Trochim, Principal Investigator

A conceptual framework
“We mine the systems literature for its heuristic value, as opposed to using systems methodologies (i.e., system dynamics, network analysis) in evaluation work.”
~Dr. Jen Brown Urban, Co-Principal Investigator

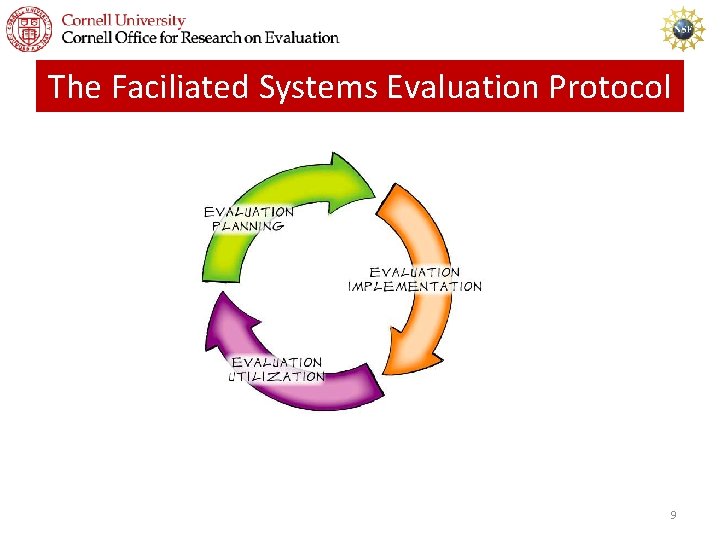

The Facilitated Systems Evaluation Protocol

SEP as a multi-level intervention
• Program level: programs may be simple and linear, though not always
• Cohort level: facilitation of the SEP (group learning) by the Cornell team is complicated, with many moving parts requiring specialization and coordination
• System level: the emerging network of participants (past, present, and incoming cohorts) within their larger program systems is complex and unpredictable, and not centrally controlled

Streams of ST meeting in the SEP
• General Systems Theory: part-whole relationships, local and global scale
• Ecological theory: static and dynamic processes, boundaries
• Evolutionary theory: ontogeny and phylogeny
• System dynamics: causal pathways, feedback
• Network theory: multiple perspectives
• Complexity theory: simple rules and emergence

Zeroing in on the program level
Protocol process, generally
Specific steps in the planning protocol:
• Lifecycle analysis and alignment
• Stakeholder analysis
• Boundary analysis
• Causal pathway modeling

Walking the steps of the Protocol
• Simple rules lead to complex results
• Feedback, iteration, and learning

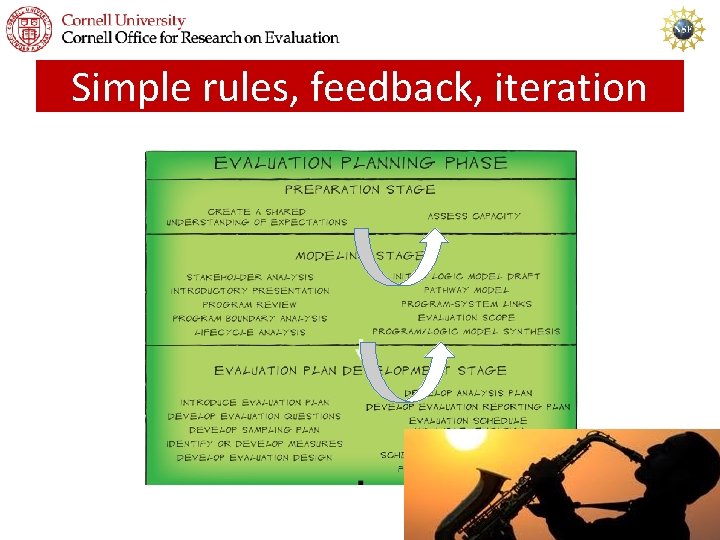

Simple rules, feedback, iteration

Lifecycle analysis and alignment
• Static and dynamic processes
• Ontogeny
• Phylogeny
• Co-evolution and symbiosis

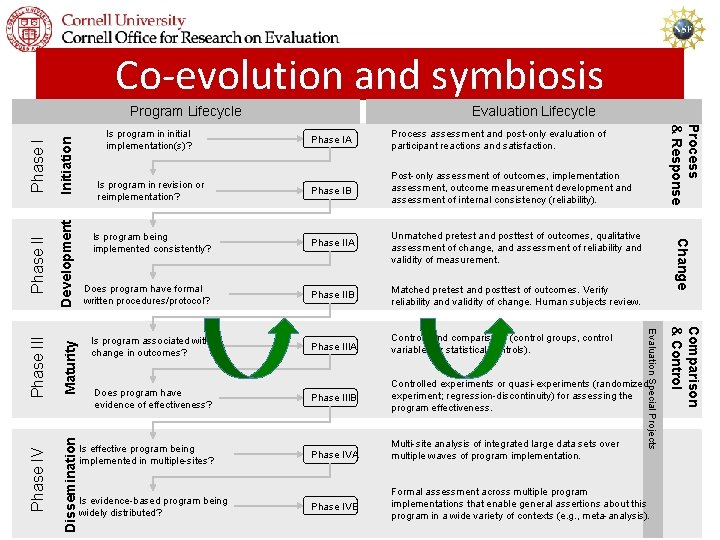

Co-evolution and symbiosis

Program Lifecycle aligned with Evaluation Lifecycle (evaluation phases: Phase I Process & Response; Phase II Change; Phase III Comparison & Control; Phase IV Evaluation Special Projects)

| Program lifecycle | Guiding question | Evaluation phase | Evaluation approach |
|---|---|---|---|
| Initiation | Is program in initial implementation(s)? | IA | Process assessment and post-only evaluation of participant reactions and satisfaction. |
| Initiation | Is program in revision or reimplementation? | IB | Post-only assessment of outcomes, implementation assessment, outcome measurement development and assessment of internal consistency (reliability). |
| Development | Is program being implemented consistently? | IIA | Unmatched pretest and posttest of outcomes, qualitative assessment of change, and assessment of reliability and validity of measurement. |
| Development | Does program have formal written procedures/protocol? | IIB | Matched pretest and posttest of outcomes. Verify reliability and validity of change. Human subjects review. |
| Maturity | Is program associated with change in outcomes? | IIIA | Controls and comparisons (control groups, control variables or statistical controls). |
| Maturity | Does program have evidence of effectiveness? | IIIB | Controlled experiments or quasi-experiments (randomized experiment; regression-discontinuity) for assessing program effectiveness. |
| Dissemination | Is effective program being implemented in multiple sites? | IVA | Multi-site analysis of integrated large data sets over multiple waves of program implementation. |
| Dissemination | Is evidence-based program being widely distributed? | IVB | Formal assessment across multiple program implementations that enables general assertions about this program in a wide variety of contexts (e.g., meta-analysis). |
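
The alignment idea in the lifecycle table can be expressed as a small lookup: each evaluation sub-phase belongs to one program lifecycle stage, and an evaluation plan is "aligned" when the two match. The sketch below is a hypothetical illustration, not part of the SEP materials: the names `LIFECYCLE`, `suggest_evaluation_phase`, and `is_aligned` are invented here, and it assumes (an assumption, not a stated SEP rule) that the guiding questions form an ordered ladder, so the furthest question answered "yes" marks where the program stands.

```python
from typing import Dict, List, Tuple

# (evaluation sub-phase, program lifecycle stage, guiding question),
# listed in the order of the lifecycle table: IA, IB, IIA, ..., IVB.
LIFECYCLE: List[Tuple[str, str, str]] = [
    ("IA", "Initiation", "Is program in initial implementation(s)?"),
    ("IB", "Initiation", "Is program in revision or reimplementation?"),
    ("IIA", "Development", "Is program being implemented consistently?"),
    ("IIB", "Development", "Does program have formal written procedures/protocol?"),
    ("IIIA", "Maturity", "Is program associated with change in outcomes?"),
    ("IIIB", "Maturity", "Does program have evidence of effectiveness?"),
    ("IVA", "Dissemination", "Is effective program being implemented in multiple sites?"),
    ("IVB", "Dissemination", "Is evidence-based program being widely distributed?"),
]


def suggest_evaluation_phase(answers: Dict[str, bool]) -> str:
    """Return the furthest sub-phase whose guiding question is answered yes.

    Hypothetical rule (assumption): questions are treated as an ordered
    ladder. `answers` maps sub-phase labels such as "IIA" to True/False.
    """
    suggested = "IA"  # assumed default for a program with no "yes" answers
    for phase, _stage, _question in LIFECYCLE:
        if answers.get(phase, False):
            suggested = phase
    return suggested


def is_aligned(program_stage: str, evaluation_phase: str) -> bool:
    """True when the evaluation sub-phase belongs to the program's stage."""
    stage_of = {phase: stage for phase, stage, _question in LIFECYCLE}
    return stage_of[evaluation_phase] == program_stage


if __name__ == "__main__":
    answers = {"IA": True, "IB": True, "IIA": True}
    print(suggest_evaluation_phase(answers))       # IIA
    print(is_aligned("Development", "IIIB"))       # False: experiment too early
```

Under these assumptions, a program still in Development that plans a randomized experiment (IIIB) is flagged as misaligned, which is the kind of mismatch the lifecycle analysis step is meant to surface.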

Stakeholder analysis
• Part-whole relationships
• Local and global scale
• Multiple perspectives

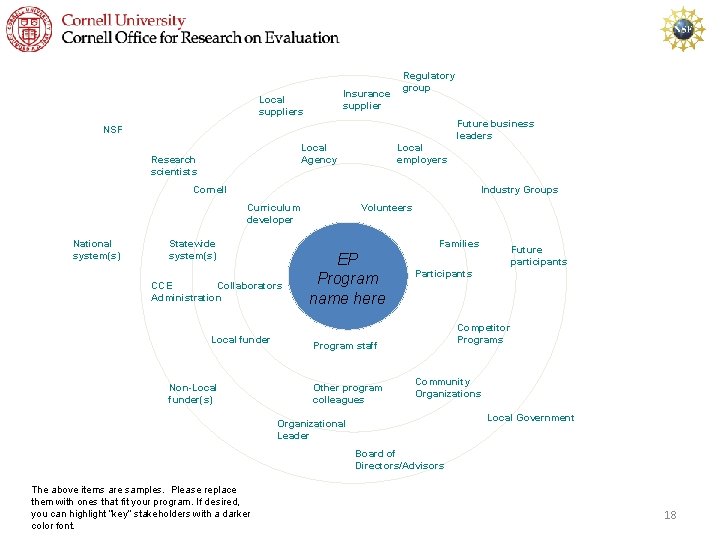

Stakeholder map template, centered on “EP Program name here”. Sample stakeholders: Insurance supplier; Local suppliers; Regulatory group; Future business leaders; NSF; Local Agency; Research scientists; Local employers; Cornell; Industry Groups; Curriculum developer; National system(s); Statewide system(s); Families; CCE; Collaborators; Administration; Local funder; Non-Local funder(s); Volunteers; Participants; Competitor Programs; Program staff; Other program colleagues; Future participants; Community Organizations; Local Government; Organizational Leader; Board of Directors/Advisors.
The above items are samples. Please replace them with ones that fit your program. If desired, you can highlight “key” stakeholders with a darker color font.

Boundary analysis
Boundaries

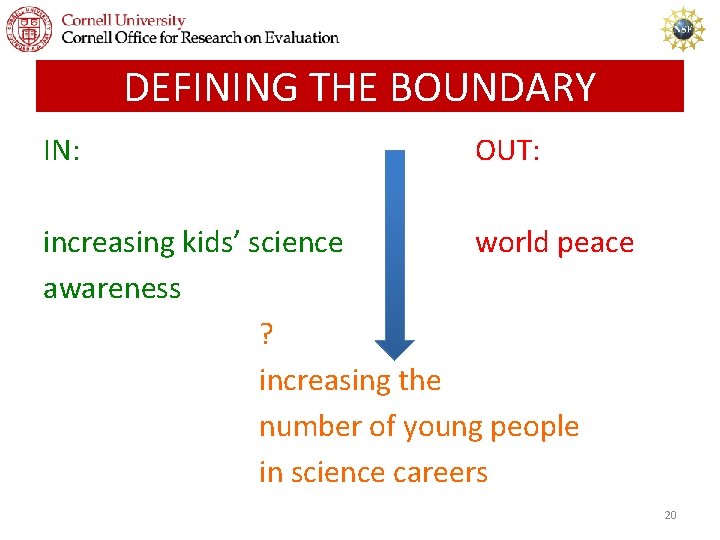

Defining the boundary
• IN: increasing kids’ science awareness
• OUT: world peace
• ?: increasing the number of young people in science careers

Causal pathway modeling
Causal pathways

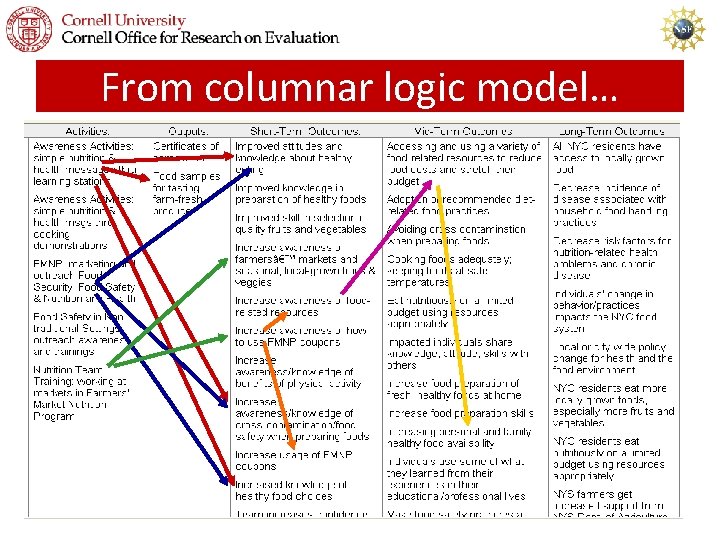

From columnar logic model…

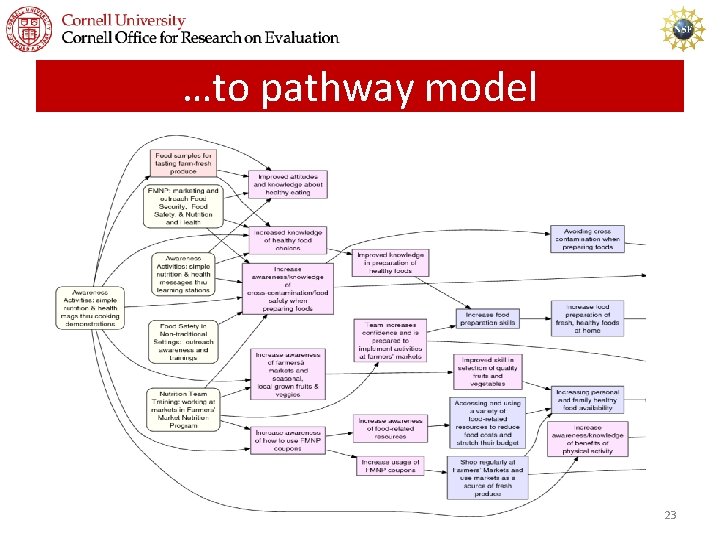

…to pathway model
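Unlike a columnar logic model, which lists activities, outputs, and outcomes in separate columns, a pathway model draws the specific links between them. One way to make those links concrete is to store the model as a directed graph and enumerate every activity-to-outcome pathway. This is a hypothetical sketch: the program elements, the `short:`/`medium:`/`long:` labels, `PATHWAY_MODEL`, and `causal_pathways` are illustrative assumptions rather than SEP tooling, and the depth-first search assumes the model contains no feedback loops (a cycle would make it recurse forever).

```python
from typing import Dict, List

# Hypothetical pathway model: each element maps to the elements it is
# expected to lead to. Elements with no successors are end outcomes.
PATHWAY_MODEL: Dict[str, List[str]] = {
    "workshop: hands-on experiments": ["short: science awareness"],
    "workshop: career mentoring": ["short: knowledge of science careers"],
    "short: science awareness": ["medium: interest in science courses"],
    "short: knowledge of science careers": ["medium: interest in science courses"],
    "medium: interest in science courses": ["long: young people in science careers"],
    "long: young people in science careers": [],
}


def causal_pathways(model: Dict[str, List[str]], start: str) -> List[List[str]]:
    """List every path from `start` to an element with no further outcomes.

    Depth-first search over the graph; assumes the model is acyclic.
    """
    next_steps = model.get(start, [])
    if not next_steps:
        return [[start]]
    paths: List[List[str]] = []
    for step in next_steps:
        for tail in causal_pathways(model, step):
            paths.append([start] + tail)
    return paths


if __name__ == "__main__":
    for activity in ("workshop: hands-on experiments", "workshop: career mentoring"):
        for path in causal_pathways(PATHWAY_MODEL, activity):
            print(" -> ".join(path))
```

Listing pathways this way makes visible where separate activities converge on a shared outcome (here, both workshops feed "interest in science courses"), information a columnar layout leaves implicit.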

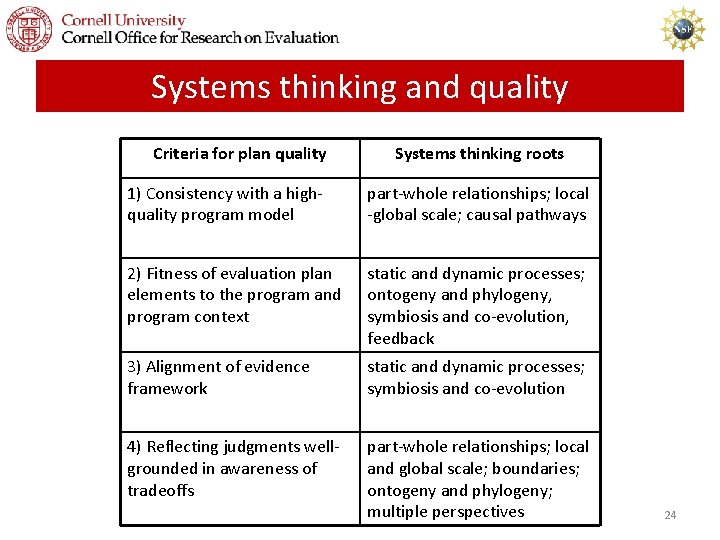

Systems thinking and quality

| Criteria for plan quality | Systems thinking roots |
|---|---|
| 1) Consistency with a high-quality program model | part-whole relationships; local and global scale; causal pathways |
| 2) Fitness of evaluation plan elements to the program and program context | static and dynamic processes; ontogeny and phylogeny; symbiosis and co-evolution; feedback |
| 3) Alignment of evidence framework | static and dynamic processes; symbiosis and co-evolution |
| 4) Reflecting judgments well-grounded in awareness of tradeoffs | part-whole relationships; local and global scale; boundaries; ontogeny and phylogeny; multiple perspectives |

Thank you!
This presentation is based on work by the research and facilitation team at the Cornell Office for Research on Evaluation, led by Dr. William Trochim. For more information on the Systems Evaluation Protocol, see http://core.human.cornell.edu/AEA_CONFERENCE.CFM
