Using SVM for Expression Microarray Data Mining

- Slides: 15

Using SVM for Expression Micro-array Data Mining: Data Mining Final Project

Chong Shou, Apr. 17, 2007

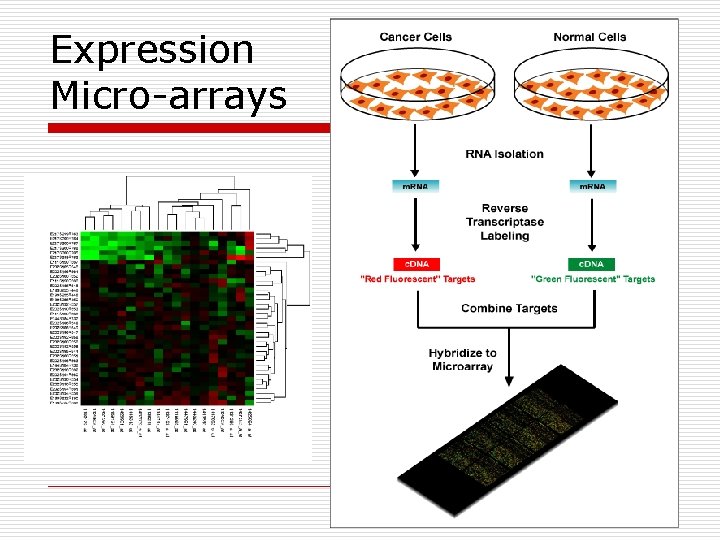

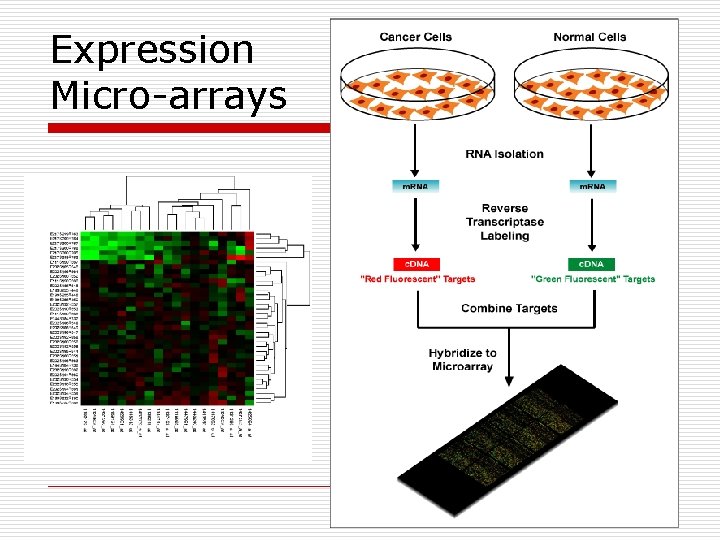

Expression Micro-arrays

- Data: an n × m data matrix
  - n: number of genes under investigation
  - m = 79: number of conditions under which gene expression levels are measured
  - Expression level: expression under a given condition is compared to a reference expression level; the value is positive if the gene is up-regulated and negative if it is down-regulated
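A signed expression level of this kind is commonly obtained as a log ratio against the reference. The slides do not state the exact transform, so the base-2 logarithm, the function name `log_ratio`, and the intensities below are assumptions for illustration only.

```python
import math

def log_ratio(measured, reference):
    """Signed expression level: > 0 if up-regulated, < 0 if down-regulated.

    Assumption: a base-2 log ratio; the slides do not name the transform.
    """
    if measured <= 0 or reference <= 0:
        raise ValueError("intensities must be positive")
    return math.log2(measured / reference)

# Hypothetical intensities for one gene under three conditions.
reference = 100.0
measured = [200.0, 100.0, 25.0]
levels = [log_ratio(x, reference) for x in measured]
print(levels)  # doubled, unchanged, and quartered relative to the reference
```

Each row of the n × m data matrix would then hold one such value per condition for a single gene.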

Expression Micro-arrays (cont)

- Genes that work together in a biological process are believed to have similar expression patterns
- We should therefore be able to find similar patterns among genes with related functions
- We can use this information to infer (predict) the functions of unknown genes

Supervised Learning

- Biological knowledge gathered from experiments is used to label selected genes with a set of classes; these labeled genes form the training set
- Training set: 2467 genes
- Test set: 3754 genes

GO (Gene Ontology)

- Use GO as the functional annotation for the selected genes
- Classes:
  - Respiration
  - TCA cycle (biochemical process that produces energy)
  - Histone (protein that helps package DNA)
  - Ribosome (protein complex that assembles amino acids into proteins)
  - Proteolysis (process that degrades proteins)
  - Meiosis (process that produces reproductive cells)

Advantages of Using SVM

- Classical supervised learning method
- Can use a variety of similarity functions (kernels)
- Can handle high-dimensional data: here, 79 conditions

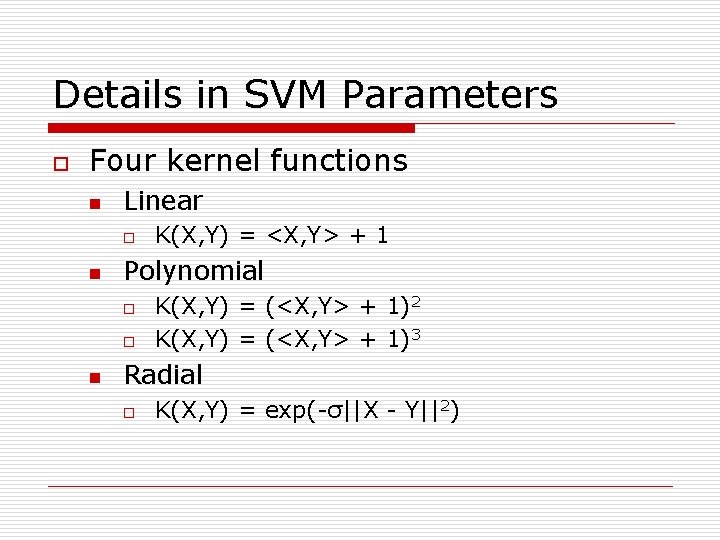

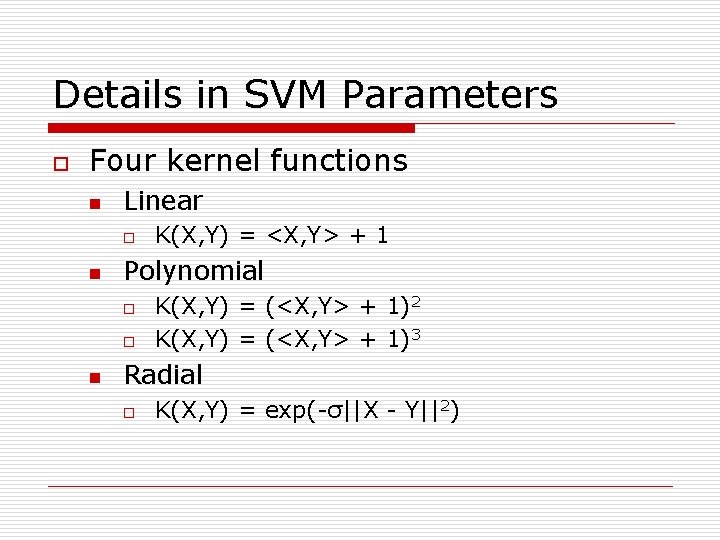

Details in SVM Parameters

- Four kernel functions
  - Linear
    - $K(X, Y) = \langle X, Y \rangle + 1$
  - Polynomial
    - $K(X, Y) = (\langle X, Y \rangle + 1)^2$
    - $K(X, Y) = (\langle X, Y \rangle + 1)^3$
  - Radial
    - $K(X, Y) = \exp(-\sigma \lVert X - Y \rVert^2)$
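The four kernels listed on this slide can be sketched in plain Python as follows. The function names, the example vectors, and the value `sigma = 0.1` are illustrative assumptions; the slides do not give the σ that was used. The radial kernel follows the slide's form, in which σ multiplies the squared distance.

```python
import math

def dot(x, y):
    """Inner product <X, Y> of two equal-length expression vectors."""
    return sum(a * b for a, b in zip(x, y))

def linear_kernel(x, y):
    # K(X, Y) = <X, Y> + 1
    return dot(x, y) + 1.0

def polynomial_kernel(x, y, degree):
    # K(X, Y) = (<X, Y> + 1)^degree, with degree 2 or 3 on the slide
    return (dot(x, y) + 1.0) ** degree

def radial_kernel(x, y, sigma):
    # K(X, Y) = exp(-sigma * ||X - Y||^2)
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sigma * sq_dist)

# Two hypothetical genes measured under three conditions.
x = [1.0, 0.0, -1.0]
y = [1.0, 2.0, 0.0]

print(linear_kernel(x, y))         # 2.0
print(polynomial_kernel(x, y, 2))  # 4.0
print(polynomial_kernel(x, y, 3))  # 8.0
print(radial_kernel(x, y, 0.1))    # exp(-0.5), since ||X - Y||^2 = 5
```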

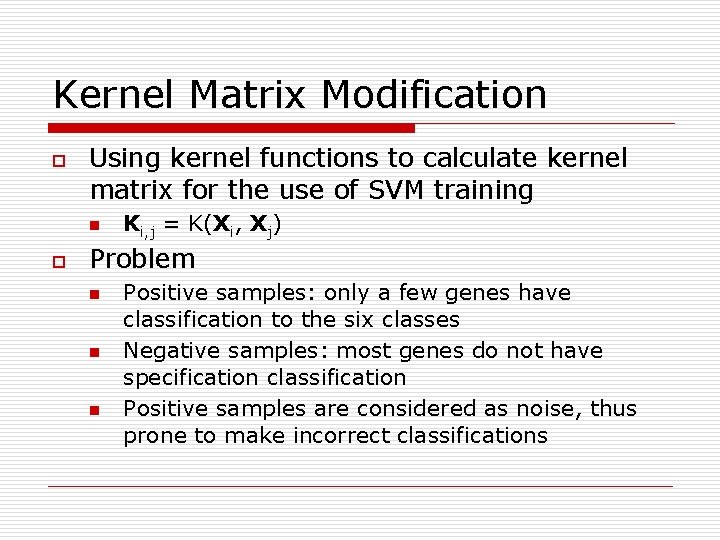

Kernel Matrix Modification

- Kernel functions are used to compute the kernel matrix for SVM training
  - $K_{i,j} = K(X_i, X_j)$
- Problem
  - Positive samples: only a few genes are annotated with one of the six classes
  - Negative samples: most genes have no specific classification
  - Positive samples tend to be treated as noise, so the classifier is prone to misclassifying them
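Computing the kernel matrix amounts to evaluating the kernel on every pair of genes. The sketch below uses the linear kernel from the earlier slide on a hypothetical three-gene data set; the names `kernel_matrix` and `dot_plus_one` are assumptions made for illustration.

```python
def dot_plus_one(x, y):
    """Linear kernel K(X, Y) = <X, Y> + 1."""
    return sum(a * b for a, b in zip(x, y)) + 1.0

def kernel_matrix(rows, kernel):
    """Return the symmetric matrix with entries K[i][j] = kernel(rows[i], rows[j])."""
    n = len(rows)
    K = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            # The kernel is symmetric, so each pair is evaluated only once.
            K[i][j] = K[j][i] = kernel(rows[i], rows[j])
    return K

# Hypothetical expression vectors: three genes, two conditions each.
genes = [[1.0, -1.0], [0.5, 0.5], [-2.0, 1.0]]
K = kernel_matrix(genes, dot_plus_one)
for row in K:
    print(row)
```

The matrix has one row and one column per gene, which is why a training set of 2467 genes gives a 2467 × 2467 matrix.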

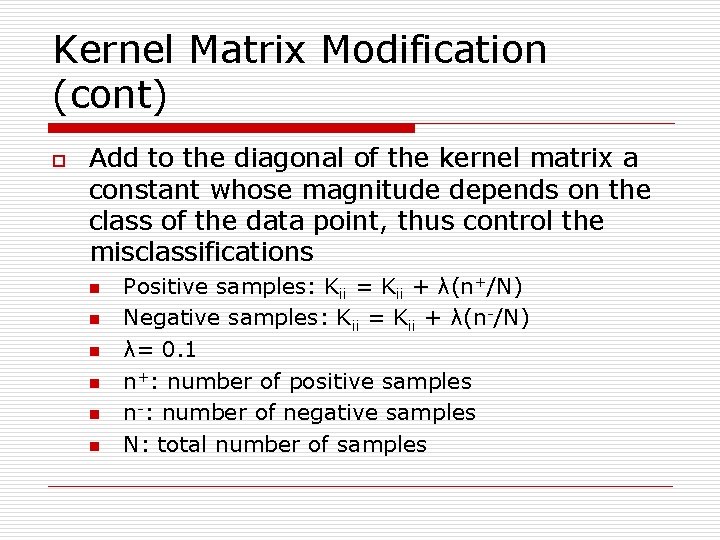

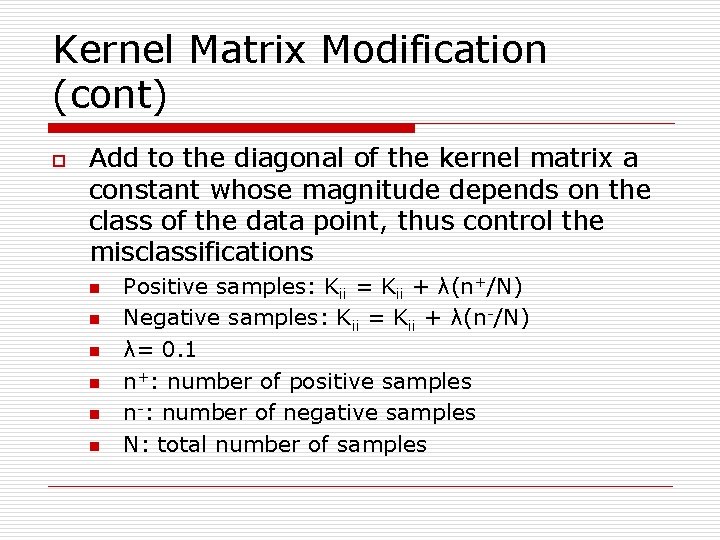

Kernel Matrix Modification (cont)

- Add to the diagonal of the kernel matrix a constant whose magnitude depends on the class of the data point, which controls the misclassifications
  - Positive samples: $K_{ii} \leftarrow K_{ii} + \lambda (n_+ / N)$
  - Negative samples: $K_{ii} \leftarrow K_{ii} + \lambda (n_- / N)$
  - $\lambda = 0.1$
  - $n_+$: number of positive samples
  - $n_-$: number of negative samples
  - $N$: total number of samples
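The diagonal update can be sketched as below, with λ = 0.1 as stated on the slide. The function name, the +1/-1 label encoding, and the small 4 × 4 matrix are assumptions chosen only to make the arithmetic easy to follow.

```python
def add_class_dependent_diagonal(K, labels, lam=0.1):
    """Return a copy of K with lam * (n_class / N) added to each diagonal entry.

    labels[i] is +1 for a positive sample and -1 for a negative one
    (an assumed encoding; the slides do not specify one).
    """
    n_total = len(labels)
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = n_total - n_pos
    shifted = [row[:] for row in K]  # leave the input matrix untouched
    for i, y in enumerate(labels):
        fraction = n_pos / n_total if y == 1 else n_neg / n_total
        shifted[i][i] += lam * fraction
    return shifted

# Hypothetical kernel matrix for one positive and three negative samples.
K = [[1.0, 0.2, 0.1, 0.0],
     [0.2, 1.0, 0.3, 0.1],
     [0.1, 0.3, 1.0, 0.4],
     [0.0, 0.1, 0.4, 1.0]]
labels = [1, -1, -1, -1]
K_mod = add_class_dependent_diagonal(K, labels)
print([K_mod[i][i] for i in range(4)])
```

With one positive sample out of four, the positive diagonal entry grows by 0.1 × 1/4 and each negative entry by 0.1 × 3/4; off-diagonal entries are unchanged.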

Other Supervised Learning Methods

- Classification tree
  - rpart
  - moc
- k-Nearest Neighbors
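For comparison with the SVM, a k-nearest-neighbors classifier can be sketched in a few lines. This is not the project's implementation: the Euclidean distance, `k = 3`, the +1/-1 labels, and the toy data are all assumptions made for illustration.

```python
import math
from collections import Counter

def knn_predict(train_rows, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest rows."""
    neighbors = sorted(
        ((math.dist(row, query), label)
         for row, label in zip(train_rows, train_labels)),
        key=lambda pair: pair[0],  # sort by distance only
    )
    votes = Counter(label for _, label in neighbors[:k])
    return votes.most_common(1)[0][0]

# Hypothetical expression vectors (two conditions) with class labels.
train_rows = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1], [-1.0, -1.0], [-1.1, -0.9]]
train_labels = [1, 1, 1, -1, -1]

print(knn_predict(train_rows, train_labels, [1.0, 0.9]))    # 1
print(knn_predict(train_rows, train_labels, [-1.0, -0.8]))  # -1
```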

Model Evaluation

- Confusion matrix
  - FP, FN, TP, TN
- Cost function
  - $C_M = FP_M + 2 \cdot FN_M$
  - $M$: learning method
  - FN has the larger weight
- Save function
  - $S_M = C_N - C_M$
  - $C_N$: cost if all samples are labeled negative
  - Models with large $S_M$ are preferred
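The cost and save functions can be worked through on a small example. The function names, the +1/-1 label encoding, and the eight hypothetical predictions below are assumptions; only the formulas come from the slide.

```python
def confusion_counts(true_labels, predicted_labels):
    """Count TP, FP, TN, FN for labels encoded as +1 (positive) / -1 (negative)."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for truth, predicted in zip(true_labels, predicted_labels):
        if predicted == 1:
            counts["TP" if truth == 1 else "FP"] += 1
        else:
            counts["FN" if truth == 1 else "TN"] += 1
    return counts

def cost(true_labels, predicted_labels):
    # C_M = FP_M + 2 * FN_M: a false negative costs twice a false positive.
    c = confusion_counts(true_labels, predicted_labels)
    return c["FP"] + 2 * c["FN"]

def savings(true_labels, predicted_labels):
    # S_M = C_N - C_M, where C_N is the cost of labeling every sample negative.
    all_negative = [-1] * len(true_labels)
    return cost(true_labels, all_negative) - cost(true_labels, predicted_labels)

# Hypothetical results: 3 true positives in the data, 1 missed, 1 false alarm.
true_labels = [1, 1, 1, -1, -1, -1, -1, -1]
predicted = [1, 1, -1, 1, -1, -1, -1, -1]

print(confusion_counts(true_labels, predicted))  # TP=2, FP=1, TN=4, FN=1
print(cost(true_labels, predicted))              # 1 + 2*1 = 3
print(savings(true_labels, predicted))           # 6 - 3 = 3
```

Labeling everything negative here costs 2 × 3 = 6, so a method is only worth using if its own cost falls below that baseline.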

Prediction

- Use the SVM on the test set to predict gene function
- Compare the classification results with GO

Some Problems

- High data matrix dimension: 2467 × 2467 for the training set, which requires a long running time on a PC
- “predict” function in R

Questions?