Using Statistics in Research Psych 231 Research Methods


Using Statistics in Research Psych 231: Research Methods in Psychology

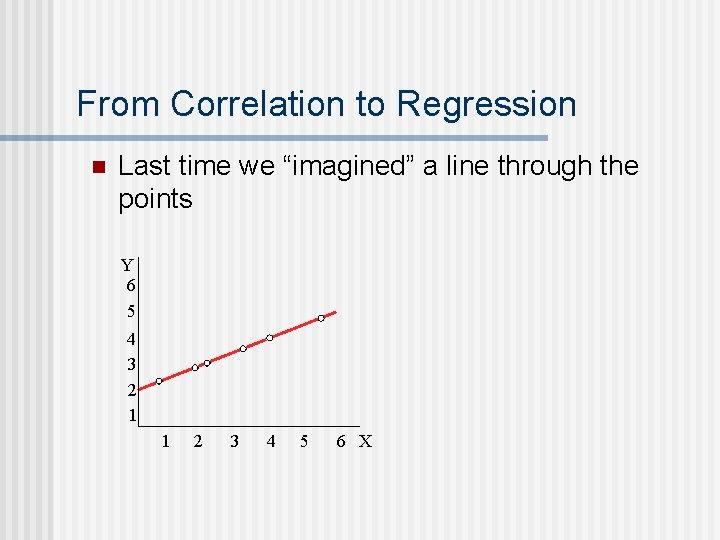

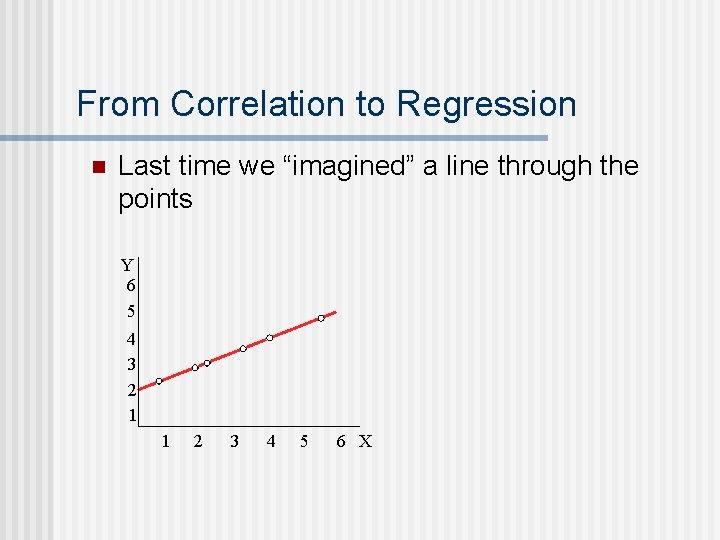

From Correlation to Regression

- Last time we “imagined” a line through the points

[Figure: scatterplot of Y against X, both axes running from 1 to 6]

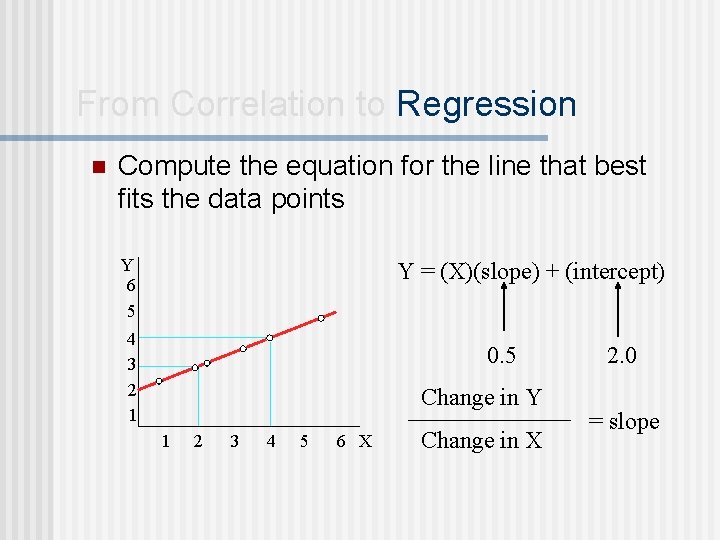

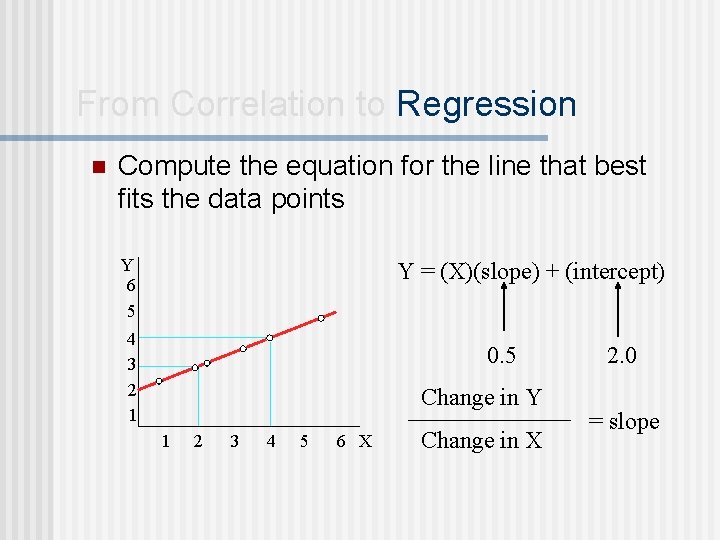

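From Correlation to Regression

- Compute the equation for the line that best fits the data points: $Y = (X)(\text{slope}) + (\text{intercept})$
- $\text{slope} = \dfrac{\text{change in } Y}{\text{change in } X}$
- In this example, slope = 0.5 and intercept = 2.0 (the value of Y where the line crosses X = 0)

[Figure: the best-fitting line drawn through the scatterplot, with the change in Y and the change in X marked]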
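The slides don't say how the best-fitting line is found. A common choice is ordinary least squares, which picks the slope and intercept that minimize the sum of squared vertical distances between the points and the line. The sketch below assumes that method; `fit_line` is a hypothetical helper name, and the three data points are made up so that they lie exactly on the line with slope 0.5 and intercept 2.0.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for Y = (X)(slope) + (intercept)."""
    mx, my = mean(xs), mean(ys)
    # slope = sum of (X deviation * Y deviation) / sum of squared X deviations
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    # the least-squares line always passes through (mean of X, mean of Y)
    intercept = my - slope * mx
    return slope, intercept

# Hypothetical data points chosen to lie on the example line
xs = [2, 4, 6]
ys = [3, 4, 5]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # 0.5 2.0
```

With real data the points scatter around the line, and the same formulas still return the single line with the smallest total squared error.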

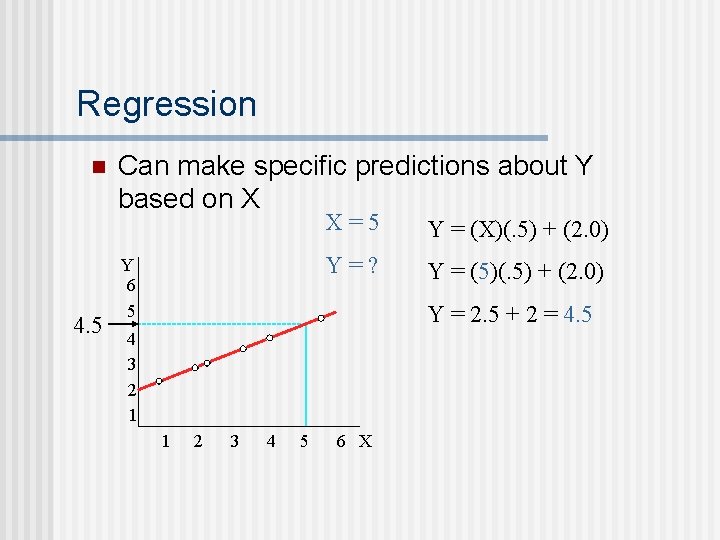

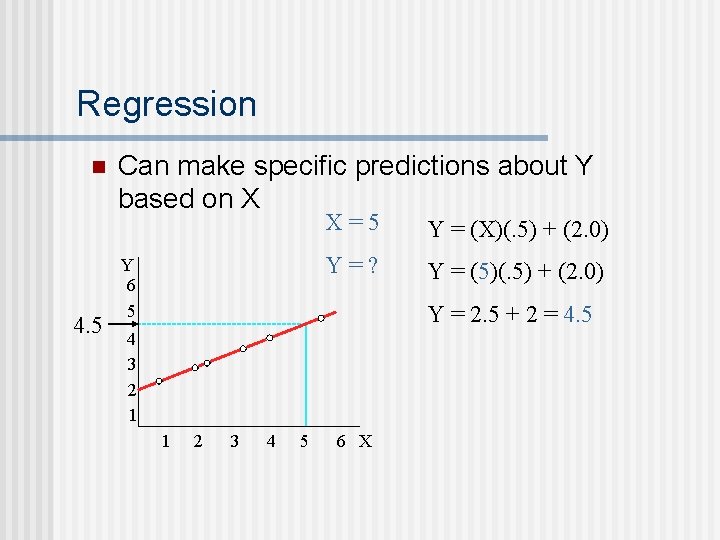

Regression

- Can make specific predictions about Y based on X
- Example: if X = 5, then $Y = (5)(0.5) + 2.0 = 2.5 + 2.0 = 4.5$

[Figure: the regression line $Y = (X)(0.5) + 2.0$ on the scatterplot (axes 1 to 6), with the predicted value Y = 4.5 marked at X = 5]
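Once the slope and intercept are known, a prediction is just the line's equation evaluated at a new X. A minimal sketch, assuming the example line from this slide; `predict` is a hypothetical name with the slide's values as defaults.

```python
def predict(x, slope=0.5, intercept=2.0):
    """Predicted Y for a given X, using Y = (X)(slope) + (intercept)."""
    return x * slope + intercept

print(predict(5))  # 4.5, matching the worked example
```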

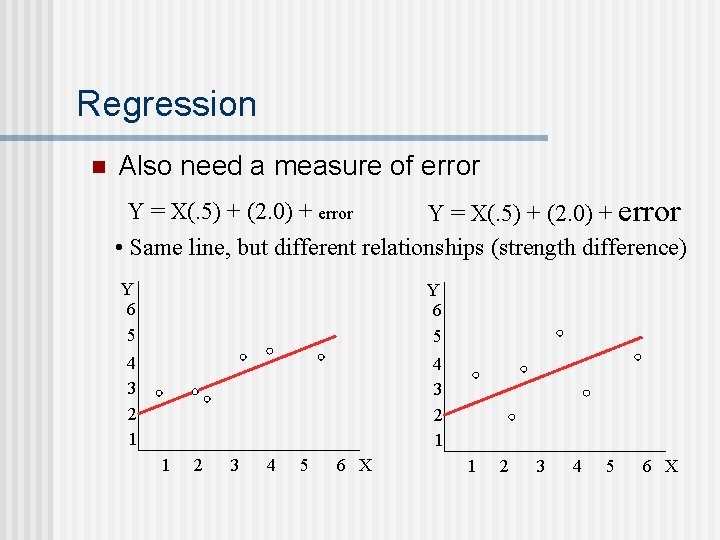

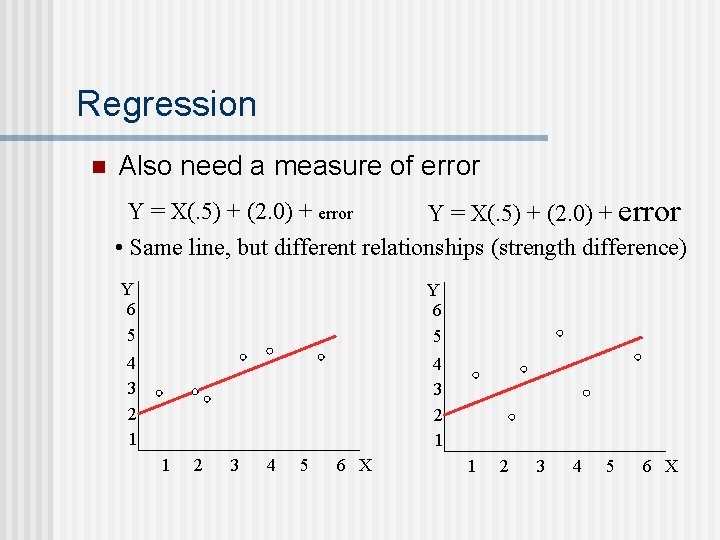

Regression

- Also need a measure of error: $Y = (X)(0.5) + 2.0 + \text{error}$
  - Same line, but different relationships (a difference in strength)

[Figure: scatterplot of Y against X with the regression line, axes 1 to 6]
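To see how two data sets can share a line but differ in strength, the sketch below fits both with ordinary least squares (an assumed method) and then reports Pearson's r and the sum of squared errors (the squared distances of the points from the line). Both data sets are hypothetical: they scatter symmetrically around $Y = (X)(0.5) + 2.0$, one by ±0.25 and one by ±1.0, so the fitted line comes out the same. `fit_and_r` is a hypothetical helper name.

```python
import math
from statistics import mean

def fit_and_r(xs, ys):
    """Least-squares slope and intercept, Pearson's r, and the sum of squared errors."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / math.sqrt(sxx * syy)
    # error = observed Y minus the Y predicted by the line
    sse = sum((y - (x * slope + intercept)) ** 2 for x, y in zip(xs, ys))
    return slope, intercept, r, sse

xs = [1, 2, 3, 4, 5, 6]
# Hypothetical data: same underlying line, different amounts of error
tight = [2.75, 2.75, 3.5, 4.0, 4.25, 5.25]   # errors of +/-0.25
loose = [3.5, 2.0, 3.5, 4.0, 3.5, 6.0]       # errors of +/-1.0

for label, ys in [("tight", tight), ("loose", loose)]:
    slope, intercept, r, sse = fit_and_r(xs, ys)
    print(label, round(slope, 2), round(intercept, 2), round(r, 2), round(sse, 2))
# tight: slope 0.5, intercept 2.0, r about 0.97, SSE 0.25
# loose: slope 0.5, intercept 2.0, r about 0.72, SSE 4.0
```

The slope and intercept alone cannot distinguish the two cases; the error term (summarized here by r and the squared errors) is what captures the strength of the relationship.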

Cautions with correlation & regression

- Don’t make causal claims
- Don’t extrapolate (don’t predict Y for X values outside the range you actually measured)
- Extreme scores (outliers) can strongly influence the calculated relationship
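A small sketch of the outlier caution, assuming ordinary least squares and hypothetical data: six points lie exactly on $Y = (X)(0.5) + 2.0$, then a single extreme score is added. `slope_of` is a hypothetical helper name.

```python
from statistics import mean

def slope_of(xs, ys):
    """Least-squares slope of Y on X."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

# Hypothetical points lying exactly on Y = (X)(0.5) + 2.0
xs = [1, 2, 3, 4, 5, 6]
ys = [x * 0.5 + 2.0 for x in xs]
print(slope_of(xs, ys))            # 0.5

# Add one extreme score far from the rest of the data
xs_out = xs + [12]
ys_out = ys + [0.0]
print(slope_of(xs_out, ys_out))    # the slope is now negative
```

One point out of seven is enough to flip the direction of the calculated relationship, which is why it is worth plotting the data before trusting a correlation or regression line.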

Inferential Statistics

- Purpose: to make claims about populations based on data collected from samples
- What’s the big deal? Example experiment:
  - Group A gets a treatment to improve memory
  - Group B gets no treatment (control)
  - After the treatment period, test both groups for memory
- Results:
  - Group A’s average memory score is 80%
  - Group B’s is 76%
- Is the 4% difference a “real” difference (statistically significant), or is it just sampling error?
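One way to see why the question matters is to simulate experiments in which the treatment does nothing at all. The sketch below assumes a hypothetical population of memory scores (mean 76, SD 10) and 20 people per group; none of these values come from the example. Both groups are drawn from the same population, so every difference between them is pure sampling error.

```python
import random

random.seed(231)  # fixed seed so the run is reproducible

# Hypothetical population parameters and group size (assumed, not from the example)
POP_MEAN, POP_SD, N_PER_GROUP = 76, 10, 20

def group_mean(n):
    """Mean of one random sample of n scores drawn from the population."""
    return sum(random.gauss(POP_MEAN, POP_SD) for _ in range(n)) / n

# Many "experiments" where H0 is true: both groups come from the SAME population
n_experiments = 10_000
big_diffs = 0
for _ in range(n_experiments):
    diff = group_mean(N_PER_GROUP) - group_mean(N_PER_GROUP)
    if abs(diff) >= 4:
        big_diffs += 1

proportion = big_diffs / n_experiments
print(f"Share of no-effect experiments with |difference| >= 4 points: {proportion:.3f}")
```

Under these assumptions, roughly one no-effect experiment in five produces a difference of 4 points or more, so a 4-point gap by itself does not settle the question. Inferential statistics formalize this comparison between the observed difference and the differences chance alone would produce.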

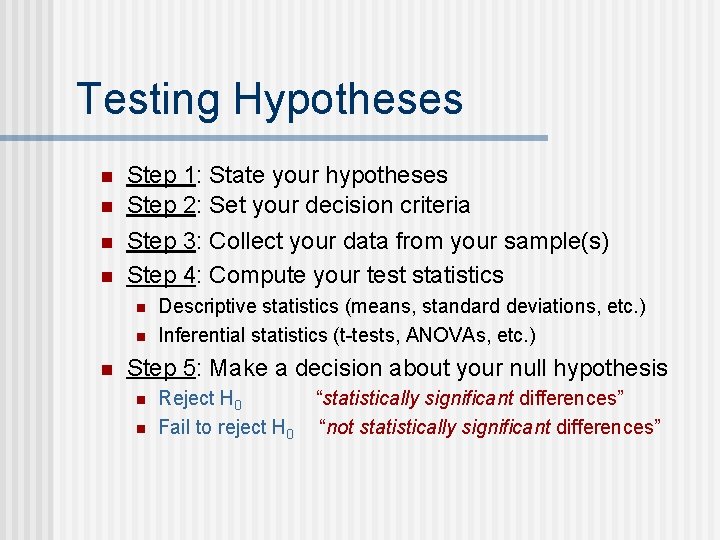

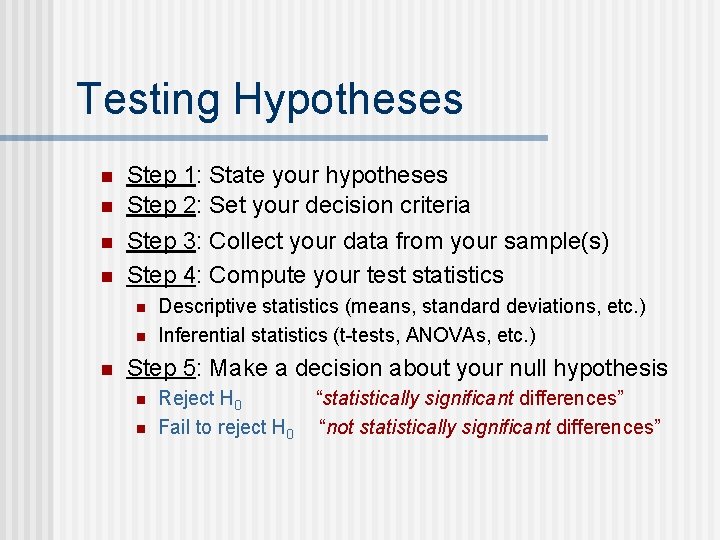

Testing Hypotheses

- Step 1: State your hypotheses
- Step 2: Set your decision criteria
- Step 3: Collect your data from your sample(s)
- Step 4: Compute your test statistics
- Step 5: Make a decision about your null hypothesis
  - “Reject $H_0$”
  - “Fail to reject $H_0$”
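The five steps can be sketched end to end in code. The slides haven't introduced a specific test yet, so this sketch swaps in an exact two-sided permutation test as an illustration: if $H_0$ is true, the group labels are arbitrary, so the observed difference is compared with the differences from every possible relabeling of the pooled scores. The scores are hypothetical, chosen so the group means match the example (80 and 76), and `exact_mean` is a hypothetical helper name.

```python
from fractions import Fraction
from itertools import combinations

def exact_mean(scores):
    """Mean as an exact fraction, so ties with the observed difference aren't lost to rounding."""
    return Fraction(sum(scores), len(scores))

# Step 1: state the hypotheses
H0 = "mean of Group A = mean of Group B"
HA = "mean of Group A != mean of Group B"

# Step 2: set the decision criterion
ALPHA = 0.05

# Step 3: collect data (hypothetical memory scores in %, means 80 and 76)
group_a = [82, 78, 85, 80, 75]   # treatment
group_b = [74, 79, 76, 72, 79]   # control

# Step 4: compute the test statistic and its p-value (exact permutation test)
observed = exact_mean(group_a) - exact_mean(group_b)
pooled = group_a + group_b
extreme = total = 0
# Under H0, every split of the pooled scores into groups of the original
# sizes was equally likely, so enumerate all of them.
for idx in combinations(range(len(pooled)), len(group_a)):
    chosen = set(idx)
    a = [pooled[i] for i in chosen]
    b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
    total += 1
    if abs(exact_mean(a) - exact_mean(b)) >= abs(observed):
        extreme += 1
p_value = extreme / total

# Step 5: make a decision about H0
decision = "Reject H0" if p_value <= ALPHA else "Fail to reject H0"
print(f"H0: {H0}")
print(f"difference = {float(observed)}, p = {p_value:.3f} ({total} relabelings) -> {decision}")
```

The p-value here is the share of relabelings that give a difference at least as large as the one observed; comparing it with alpha is the Step 5 decision.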

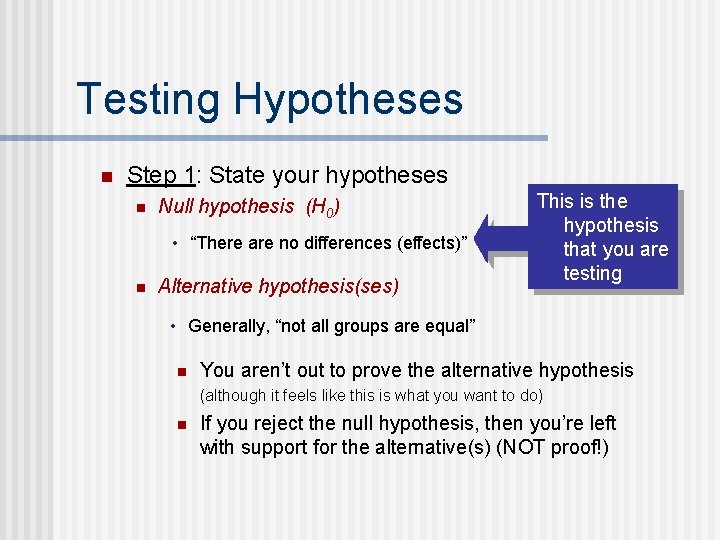

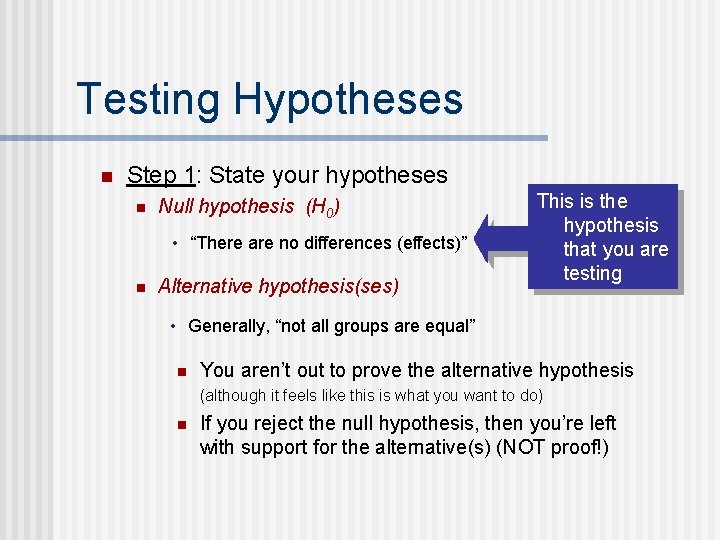

Testing Hypotheses

- Step 1: State your hypotheses
  - Null hypothesis ($H_0$)
    - “There are no differences (effects)”
    - This is the hypothesis that you are testing
  - Alternative hypothesis (or hypotheses)
    - Generally, “not all groups are equal”
  - You aren’t out to prove the alternative hypothesis (although it feels like this is what you want to do)
  - If you reject the null hypothesis, then you’re left with support for the alternative(s) (NOT proof!)

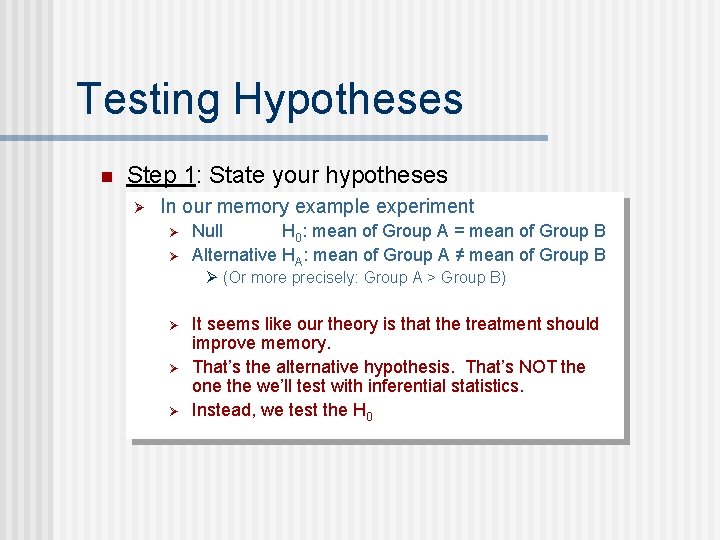

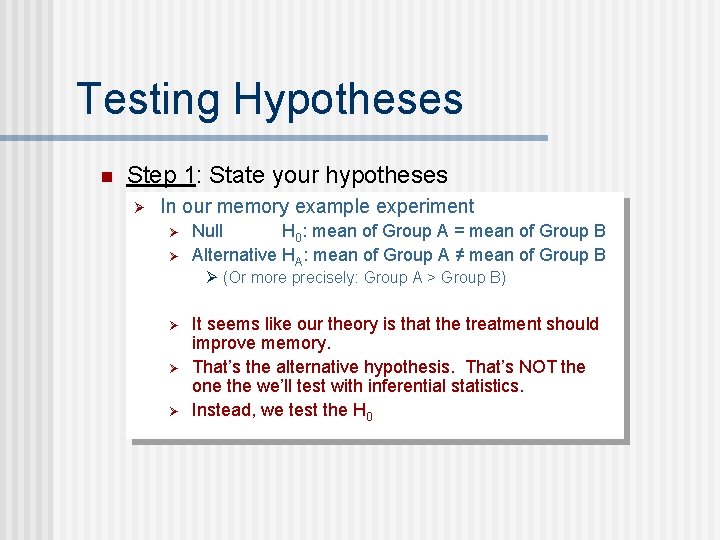

Testing Hypotheses

- Step 1: State your hypotheses
  - In our memory example experiment:
    - Null $H_0$: mean of Group A = mean of Group B
    - Alternative $H_A$: mean of Group A ≠ mean of Group B
      - (Or, more precisely: Group A > Group B)
  - It seems like our theory is that the treatment should improve memory. That’s the alternative hypothesis.
  - That’s NOT the one that we’ll test with inferential statistics. Instead, we test $H_0$.
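The two versions of $H_A$ on this slide correspond to a two-tailed test (A ≠ B, differences in either direction count as evidence) and a one-tailed test (A > B, only differences in the predicted direction count). A minimal sketch using a normal (z) approximation, which is an assumption here; the standard error of 2.5 is a hypothetical value, not something computed from the example.

```python
from statistics import NormalDist

# Hypothetical inputs: observed difference of 4 points, assumed standard error of 2.5
diff, standard_error = 4.0, 2.5
z = diff / standard_error
std_normal = NormalDist()  # mean 0, SD 1

# H_A: A != B (non-directional, two-tailed): extreme results in either tail count
p_two_tailed = 2 * (1 - std_normal.cdf(abs(z)))
# H_A: A > B (directional, one-tailed): only large positive differences count
p_one_tailed = 1 - std_normal.cdf(z)

print(f"z = {z:.2f}, two-tailed p = {p_two_tailed:.3f}, one-tailed p = {p_one_tailed:.3f}")
```

When the result falls in the predicted direction, the one-tailed p is half the two-tailed p. The direction has to be chosen before looking at the data, which is why stating the hypotheses is Step 1.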

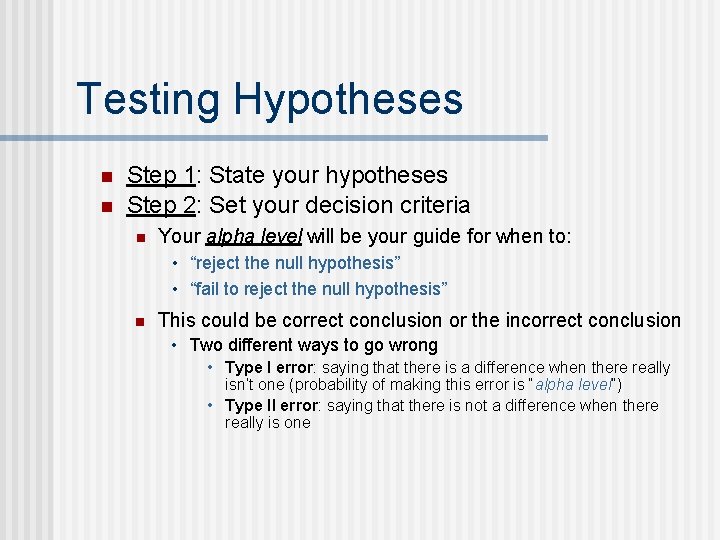

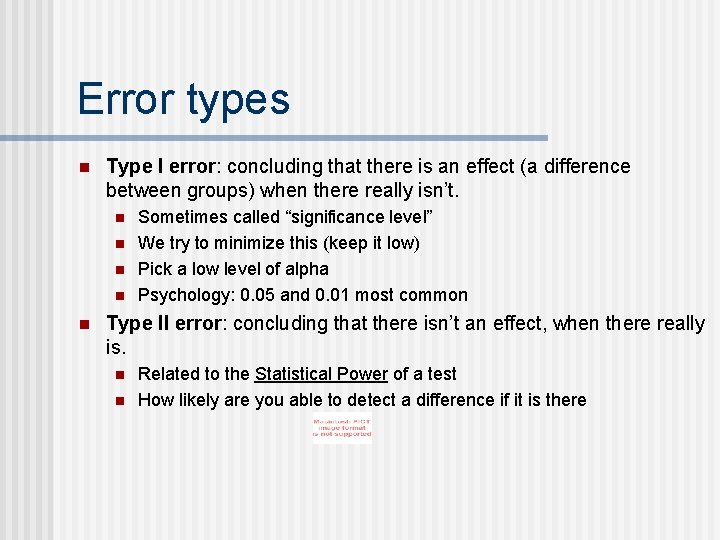

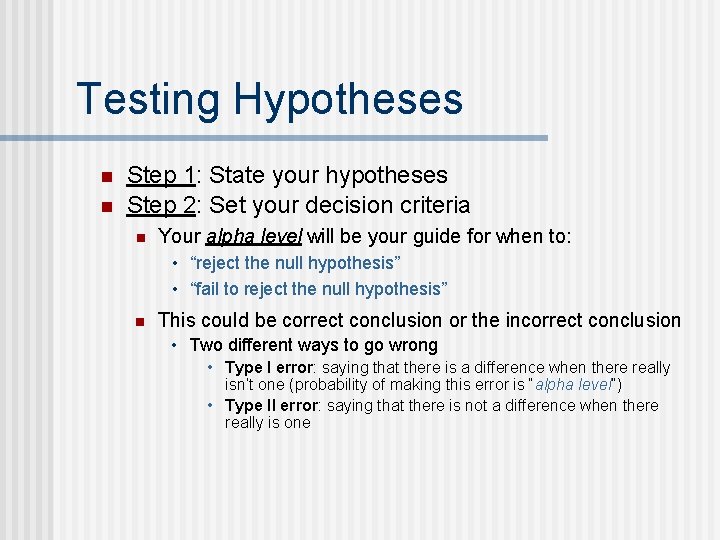

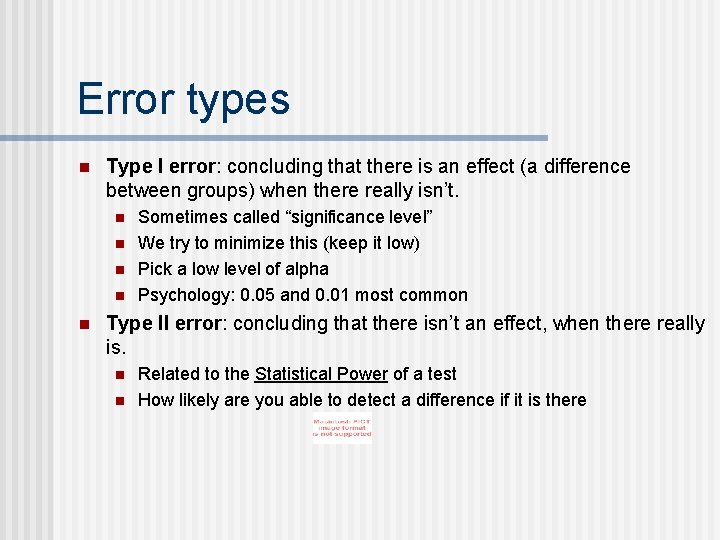

Testing Hypotheses

- Step 1: State your hypotheses
- Step 2: Set your decision criteria
  - Your alpha level will be your guide for when to:
    - “reject the null hypothesis”
    - “fail to reject the null hypothesis”
  - Either decision could be the correct conclusion or an incorrect one
  - Two different ways to go wrong:
    - Type I error: saying that there is a difference when there really isn’t one (the probability of making this error is the “alpha level”)
    - Type II error: saying that there is not a difference when there really is one

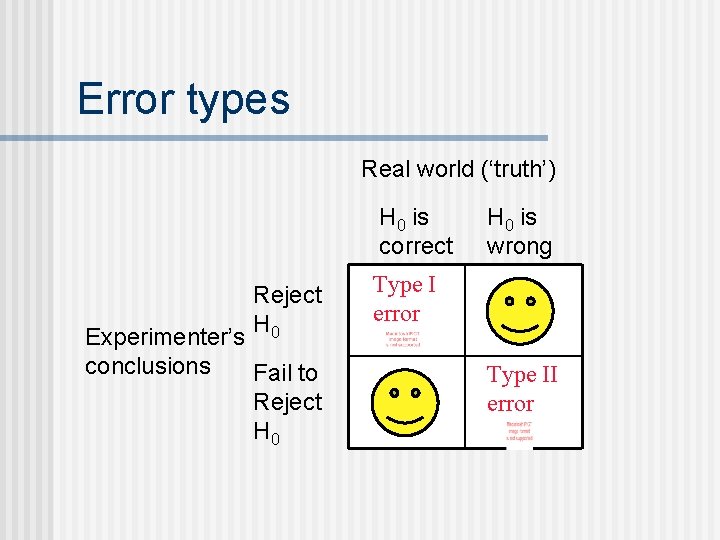

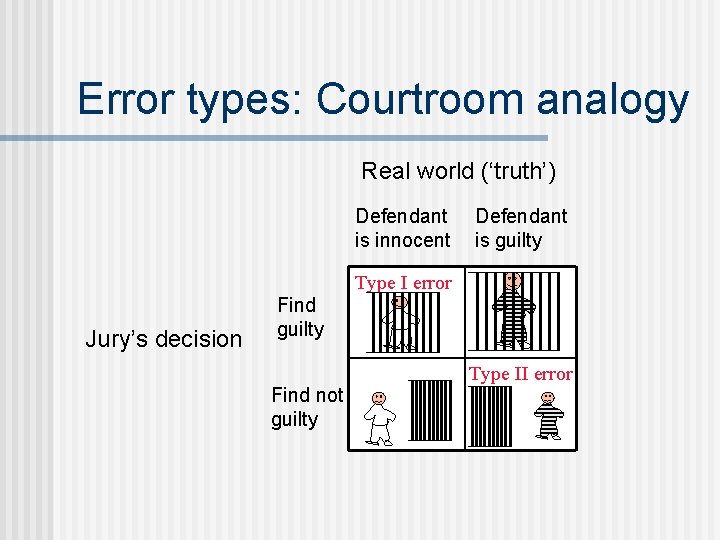

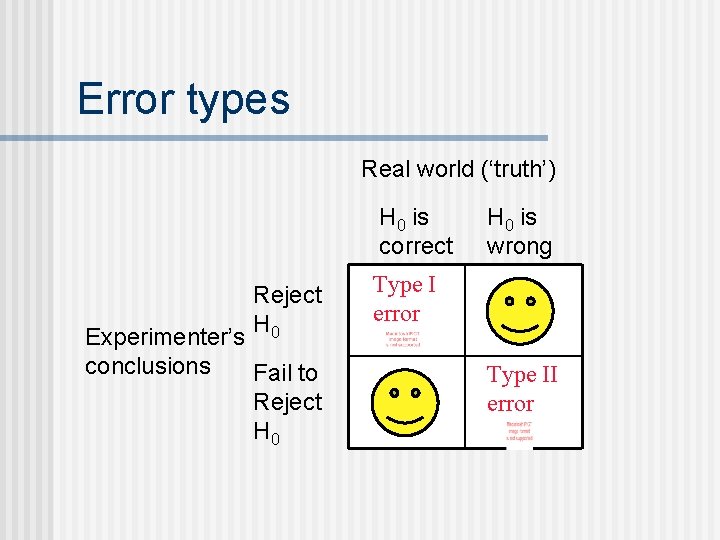

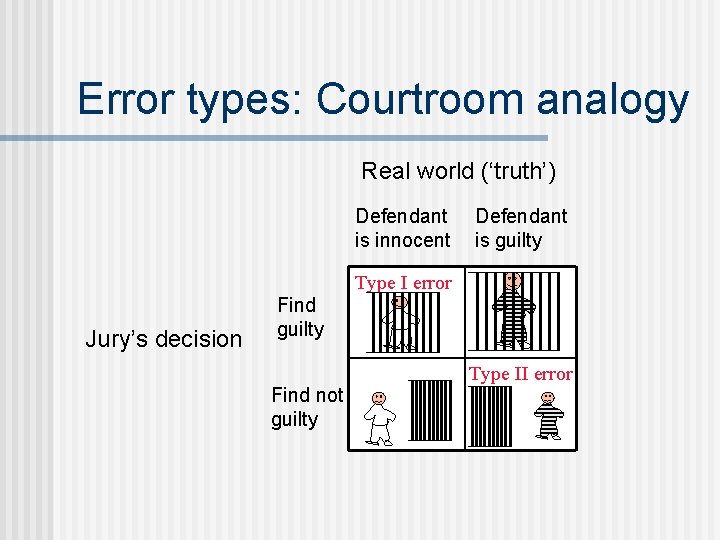

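Error types

| Experimenter’s conclusions | Real world (‘truth’): $H_0$ is correct | Real world (‘truth’): $H_0$ is wrong |
|---|---|---|
| Reject $H_0$ | Type I error | Correct decision |
| Fail to reject $H_0$ | Correct decision | Type II error |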
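The table can also be read as a small decision rule: the outcome depends on two facts, what is true in the real world and what the experimenter concluded. A sketch, with `classify` as a hypothetical function name.

```python
def classify(h0_is_true: bool, rejected_h0: bool) -> str:
    """Return which cell of the error-types table a decision falls into."""
    if rejected_h0 and h0_is_true:
        return "Type I error"       # found an effect that isn't there
    if not rejected_h0 and not h0_is_true:
        return "Type II error"      # missed an effect that is there
    return "Correct decision"

for h0_is_true in (True, False):
    for rejected_h0 in (True, False):
        print(f"H0 true={h0_is_true!s:5}  rejected={rejected_h0!s:5}  ->  "
              f"{classify(h0_is_true, rejected_h0)}")
```

In a real study only the second argument is ever known, which is why error rates are discussed as probabilities rather than checked case by case.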

Error types: Courtroom analogy

| Jury’s decision | Real world (‘truth’): defendant is innocent | Real world (‘truth’): defendant is guilty |
|---|---|---|
| Find guilty | Type I error | Correct decision |
| Find not guilty | Correct decision | Type II error |

Error types

- Type I error: concluding that there is an effect (a difference between groups) when there really isn’t one
  - Its probability (alpha) is sometimes called the “significance level”
  - We try to minimize this (keep it low) by picking a low level of alpha
  - Psychology: 0.05 and 0.01 are most common
- Type II error: concluding that there isn’t an effect when there really is one
  - Related to the statistical power of a test
  - Power: how likely you are to detect a difference if it is there
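Both error rates can be estimated by simulation. The sketch below assumes normally distributed scores with a known population SD of 10, so a simple two-tailed z-test can stand in for the tests covered later; the effect size of 4 points and the group sizes are hypothetical. When $H_0$ is true every rejection is a Type I error, so the rejection rate should land near alpha; when there is a real effect, the rejection rate is the power, and 1 minus power is the Type II error rate.

```python
import math
import random
from statistics import NormalDist

random.seed(231)  # reproducible run

ALPHA = 0.05
POP_SD = 10.0  # assumed known, so a z-test can be used
z_crit = NormalDist().inv_cdf(1 - ALPHA / 2)  # two-tailed cutoff (about 1.96)

def experiment_rejects(true_effect, n):
    """Simulate one two-group experiment; return True if H0 is rejected."""
    a = [random.gauss(76 + true_effect, POP_SD) for _ in range(n)]
    b = [random.gauss(76, POP_SD) for _ in range(n)]
    se = POP_SD * math.sqrt(2 / n)  # standard error of the difference in means
    z = (sum(a) / n - sum(b) / n) / se
    return abs(z) > z_crit

def rejection_rate(true_effect, n, runs=4000):
    """Share of simulated experiments that reject H0."""
    return sum(experiment_rejects(true_effect, n) for _ in range(runs)) / runs

type_1_rate = rejection_rate(true_effect=0, n=20)   # H0 true: rejections are Type I errors
power_n20 = rejection_rate(true_effect=4, n=20)     # H0 false: rejections are correct
power_n100 = rejection_rate(true_effect=4, n=100)

print(f"Type I error rate ~ {type_1_rate:.3f} (alpha = {ALPHA})")
print(f"Power with n = 20 per group:  {power_n20:.3f} (Type II rate ~ {1 - power_n20:.3f})")
print(f"Power with n = 100 per group: {power_n100:.3f}")
```

Under these assumptions, the Type I rate stays close to alpha regardless of sample size, while power grows as the groups get larger.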

Testing Hypotheses

- Step 1: State your hypotheses
- Step 2: Set your decision criteria
- Step 3: Collect your data from your sample(s)
- Step 4: Compute your test statistics
  - Descriptive statistics (means, standard deviations, etc.)
  - Inferential statistics (t-tests, ANOVAs, etc.)
- Step 5: Make a decision about your null hypothesis
  - Reject $H_0$: “statistically significant differences”
  - Fail to reject $H_0$: “not statistically significant differences”
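Step 4 usually produces both kinds of numbers. The sketch below computes descriptive statistics and then one common form of the independent-samples t statistic (the pooled-variance version); t-tests are covered in detail next time, so treat this as a preview rather than the course's procedure. The scores are hypothetical, chosen so the group means match the example (80 and 76). Turning t into a p-value requires the t distribution, which Python's standard library does not provide; a statistics package such as SciPy would normally handle that step.

```python
import math
from statistics import mean, stdev, variance

# Hypothetical memory scores (%); group means match the example (80 vs 76)
group_a = [82, 78, 85, 80, 75]   # treatment
group_b = [74, 79, 76, 72, 79]   # control

# Descriptive statistics
for name, g in [("A", group_a), ("B", group_b)]:
    print(f"Group {name}: mean = {mean(g):.1f}, SD = {stdev(g):.2f}, n = {len(g)}")

# Inferential statistic: independent-samples t with a pooled variance estimate
n_a, n_b = len(group_a), len(group_b)
df = n_a + n_b - 2
pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
se_diff = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))  # standard error of the difference
t = (mean(group_a) - mean(group_b)) / se_diff
print(f"t({df}) = {t:.2f}")
```

The t statistic is the observed difference divided by an estimate of how large differences typically are due to sampling error alone.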

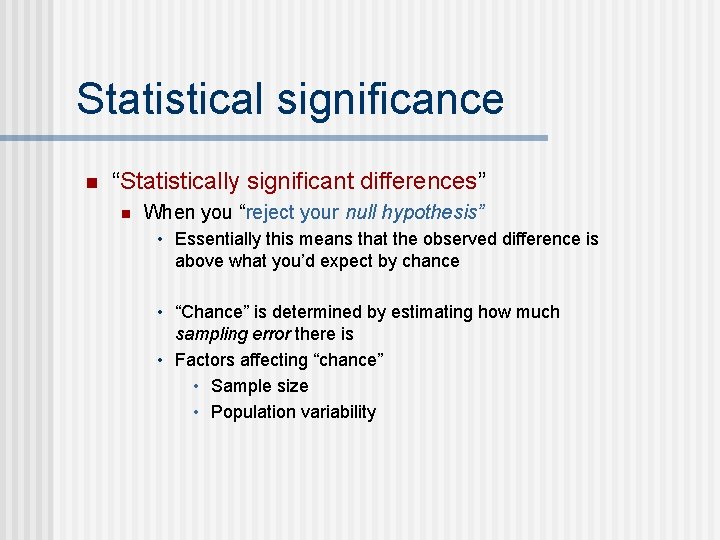

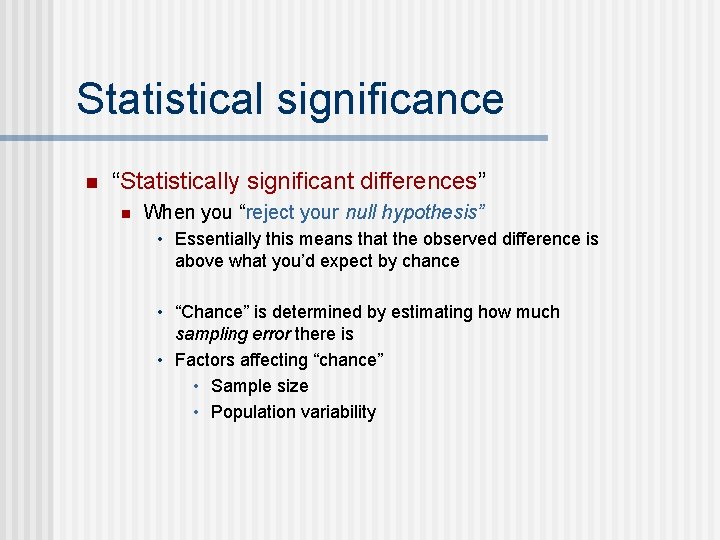

Statistical significance

- “Statistically significant differences”
  - When you “reject your null hypothesis”
    - Essentially, this means that the observed difference is above what you’d expect by chance
    - “Chance” is determined by estimating how much sampling error there is
    - Factors affecting “chance”:
      - Sample size
      - Population variability

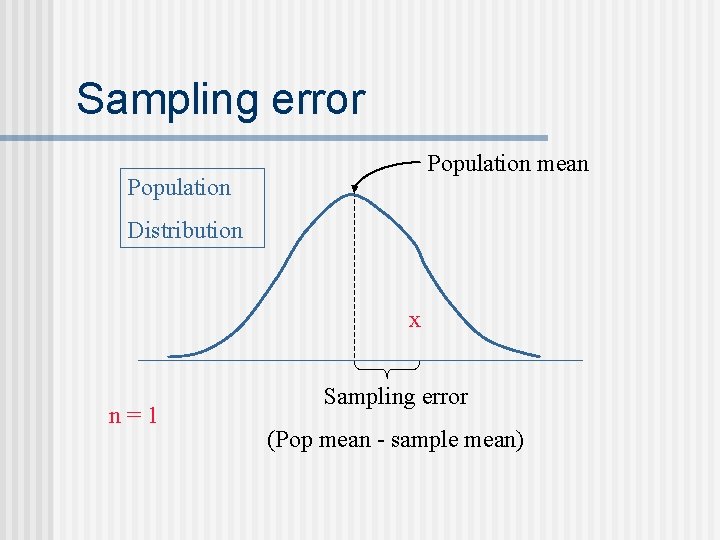

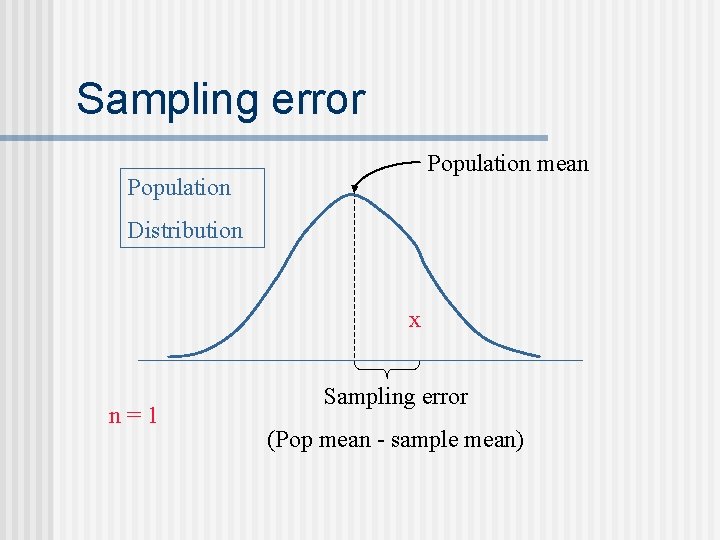

Sampling error

[Figure: a population distribution with the population mean marked and a single sampled score (n = 1); sampling error = population mean - sample mean]

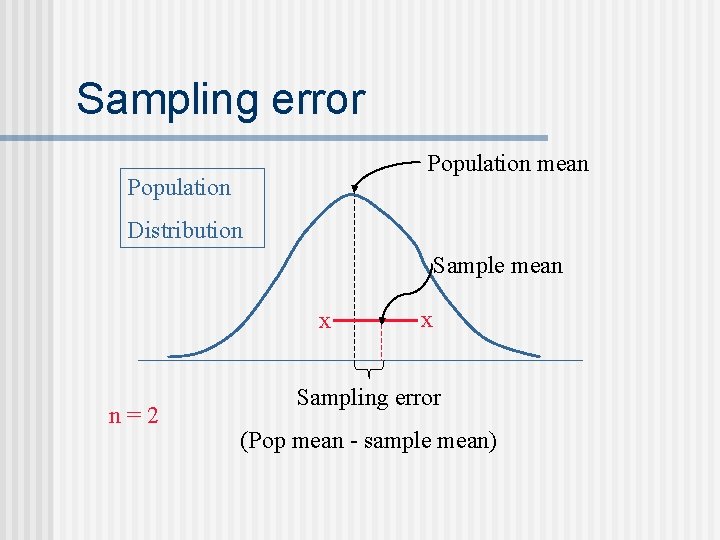

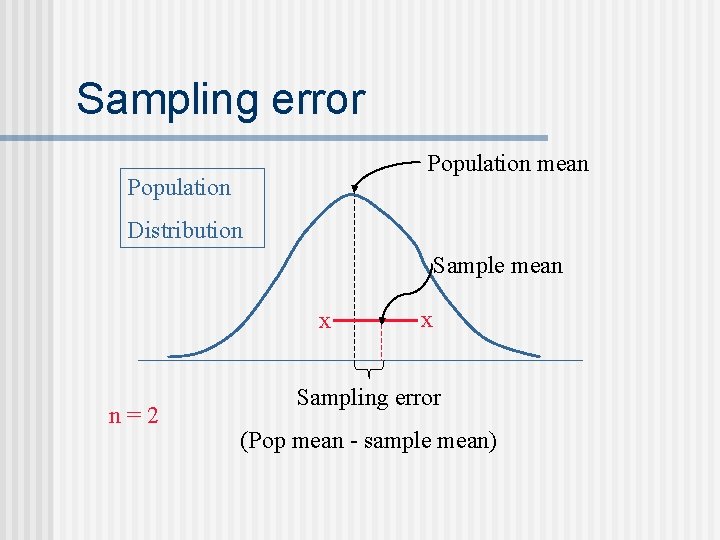

Sampling error

[Figure: the same population distribution with a sample of n = 2 and its sample mean marked; sampling error = population mean - sample mean]

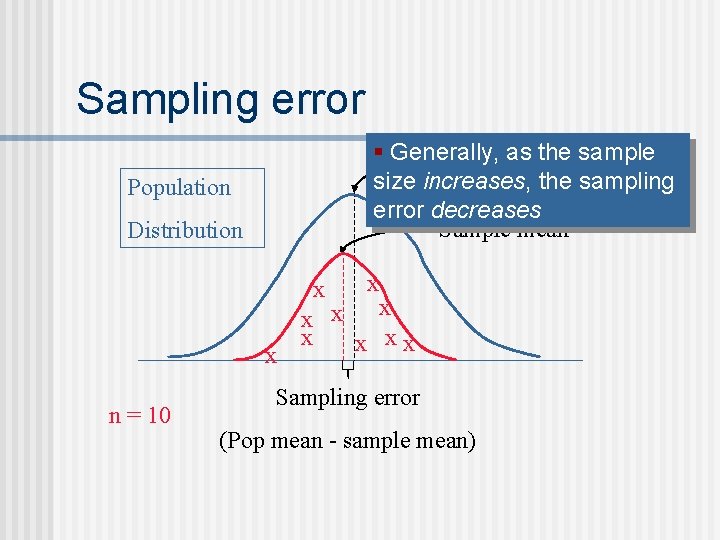

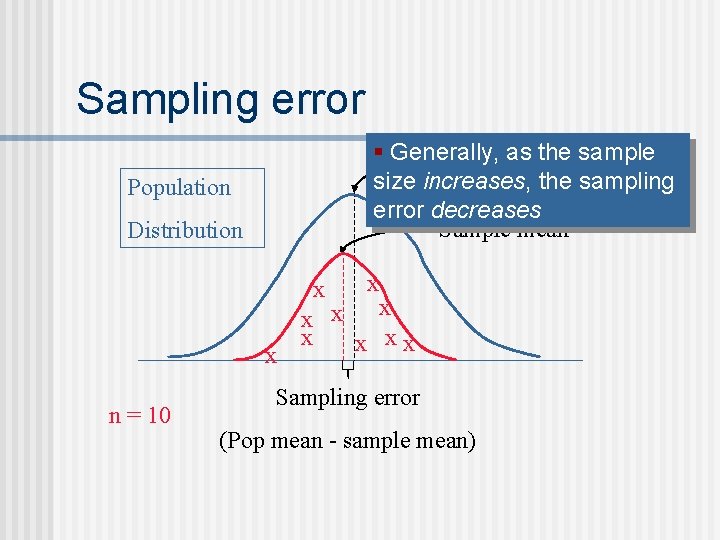

Sampling error

- Generally, as the sample size increases, the sampling error decreases

[Figure: the population distribution with a sample of n = 10 and its sample mean marked; sampling error = population mean - sample mean]
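The sample sizes in these figures (n = 1, 2, 10) can be reproduced in a quick simulation. The sketch below builds a hypothetical population of 10,000 roughly normal scores (mean 76, SD 10, both assumed), draws many random samples of each size, and averages the absolute sampling error |population mean - sample mean|; n = 100 is added for contrast.

```python
import random
from statistics import fmean

random.seed(231)  # reproducible run

# Hypothetical population: 10,000 scores, roughly normal with mean 76 and SD 10
population = [random.gauss(76, 10) for _ in range(10_000)]
pop_mean = fmean(population)

def avg_sampling_error(n, draws=2000):
    """Average |population mean - sample mean| over many random samples of size n."""
    return fmean(abs(pop_mean - fmean(random.sample(population, n))) for _ in range(draws))

errors = {n: avg_sampling_error(n) for n in (1, 2, 10, 100)}
for n, err in errors.items():
    print(f"n = {n:>3}: average sampling error = {err:.2f}")
```

The average error shrinks steadily as n grows, though not in proportion to n: going from 10 to 100 scores cuts the error by only about a factor of three.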

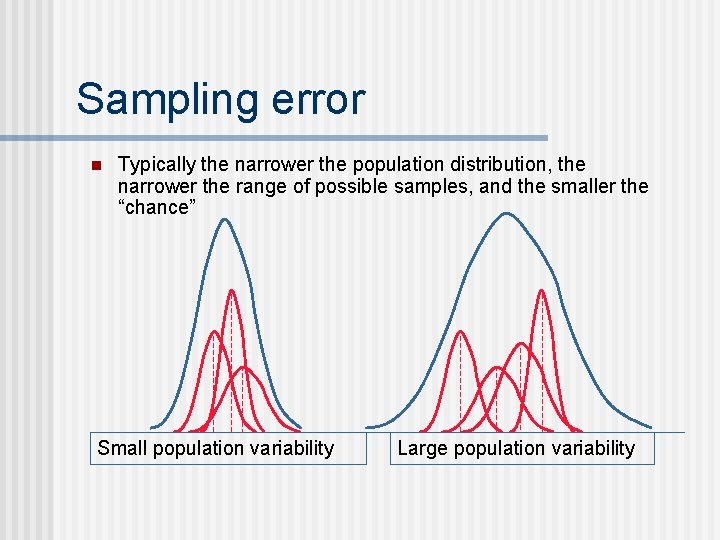

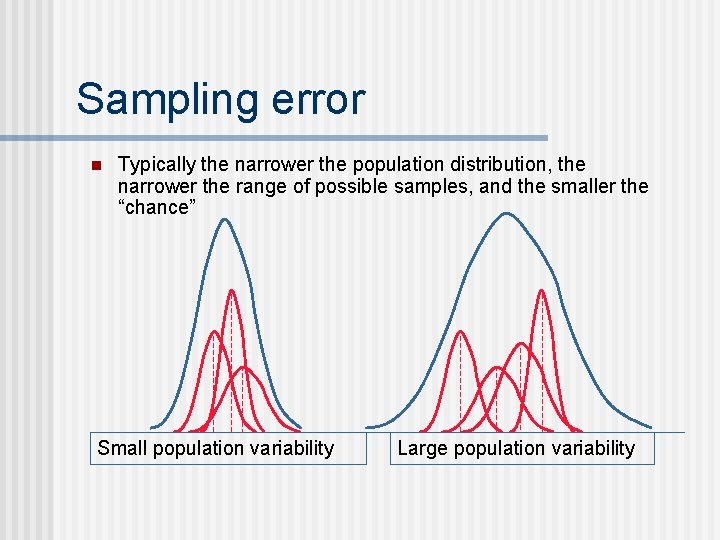

Sampling error

- Typically, the narrower the population distribution, the narrower the range of possible samples, and the smaller the “chance”

[Figure: two population distributions, one with small population variability and one with large population variability]
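The same kind of simulation shows the effect of population variability. This sketch holds the sample size fixed at 10 and draws from two hypothetical normal populations with the same mean (76) but different SDs (5 and 20); all values are assumptions for illustration, and `avg_sampling_error` is a hypothetical helper name.

```python
import random
from statistics import fmean

random.seed(231)  # reproducible run

def avg_sampling_error(pop_sd, n=10, draws=2000, pop_mean=76.0):
    """Average |population mean - sample mean| for samples of size n
    drawn from a normal population with the given SD."""
    total = 0.0
    for _ in range(draws):
        sample_mean = fmean(random.gauss(pop_mean, pop_sd) for _ in range(n))
        total += abs(pop_mean - sample_mean)
    return total / draws

small_var = avg_sampling_error(pop_sd=5)
large_var = avg_sampling_error(pop_sd=20)
print(f"small population variability (SD 5):  {small_var:.2f}")
print(f"large population variability (SD 20): {large_var:.2f}")
```

With the sample size held constant, the more variable population produces sample means that stray much further from the population mean.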

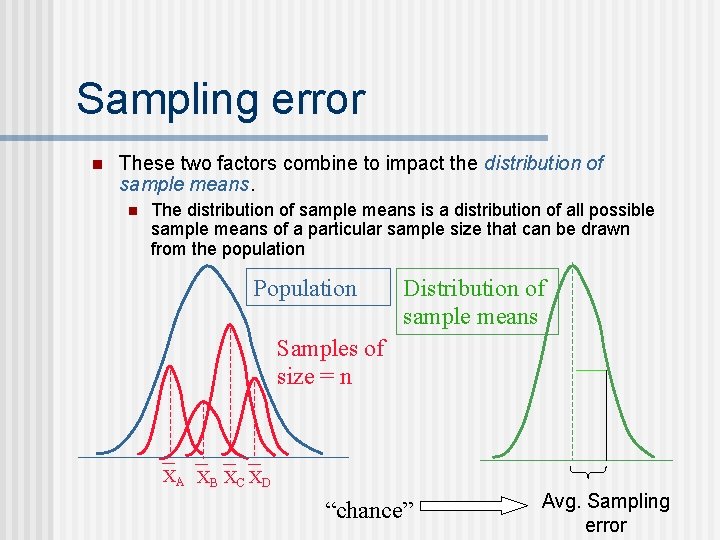

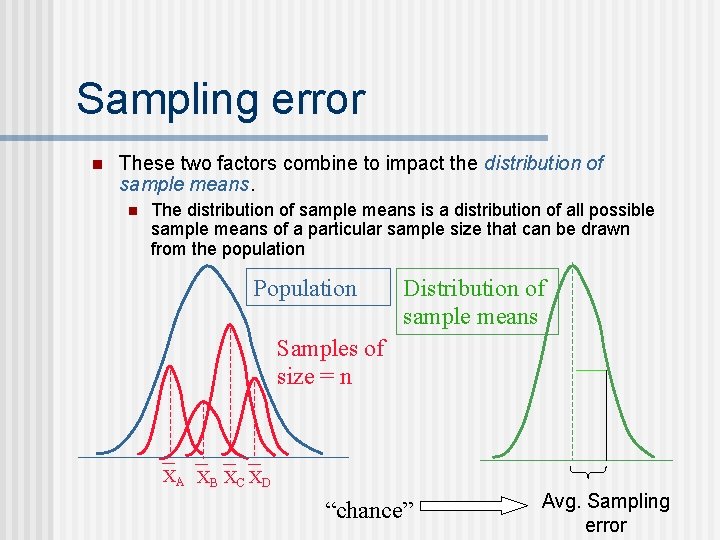

Sampling error

- These two factors combine to impact the distribution of sample means.
- The distribution of sample means is a distribution of all possible sample means of a particular sample size that can be drawn from the population

[Figure: samples of size n drawn from the population, with sample means $\bar{X}_A$, $\bar{X}_B$, $\bar{X}_C$, $\bar{X}_D$ forming the distribution of sample means; its spread represents “chance” (the average sampling error)]
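For random samples, the spread of the distribution of sample means (the standard error of the mean) is $\sigma_{\bar{X}} = \sigma / \sqrt{n}$, which combines both factors in one formula: it grows with population variability ($\sigma$) and shrinks with sample size ($n$). The sketch below approximates the distribution of sample means by drawing 5,000 samples of size 25 from a hypothetical normal population (mean 76, SD 10, both assumed) and compares the simulated spread with the formula.

```python
import math
import random
from statistics import fmean, pstdev

random.seed(231)  # reproducible run

POP_MEAN, POP_SD = 76.0, 10.0   # hypothetical population parameters
n = 25                          # sample size

# Approximate the distribution of sample means with many samples of size n
sample_means = [fmean(random.gauss(POP_MEAN, POP_SD) for _ in range(n)) for _ in range(5000)]

simulated_spread = pstdev(sample_means)    # SD of the simulated sample means
predicted_spread = POP_SD / math.sqrt(n)   # standard error of the mean: sigma / sqrt(n)
print(f"mean of sample means = {fmean(sample_means):.2f} (population mean {POP_MEAN})")
print(f"SD of sample means   = {simulated_spread:.2f} (sigma / sqrt(n) = {predicted_spread:.2f})")
```

The sample means center on the population mean, and their spread matches $\sigma / \sqrt{n}$ closely; that spread is what the slides call "chance".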

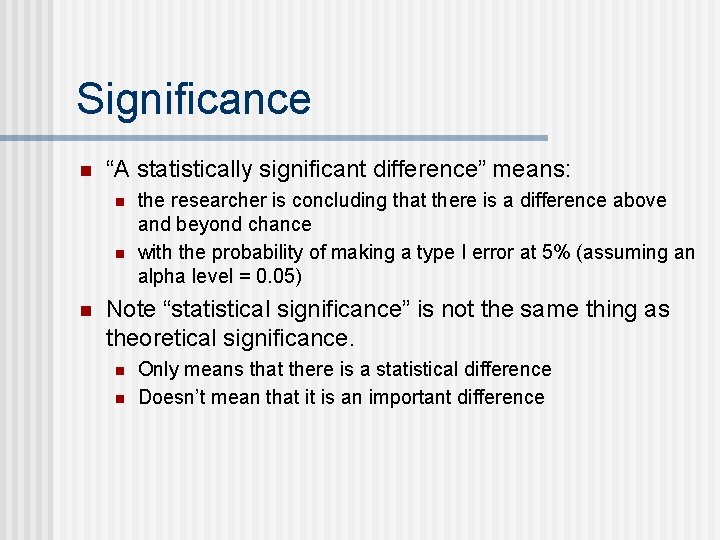

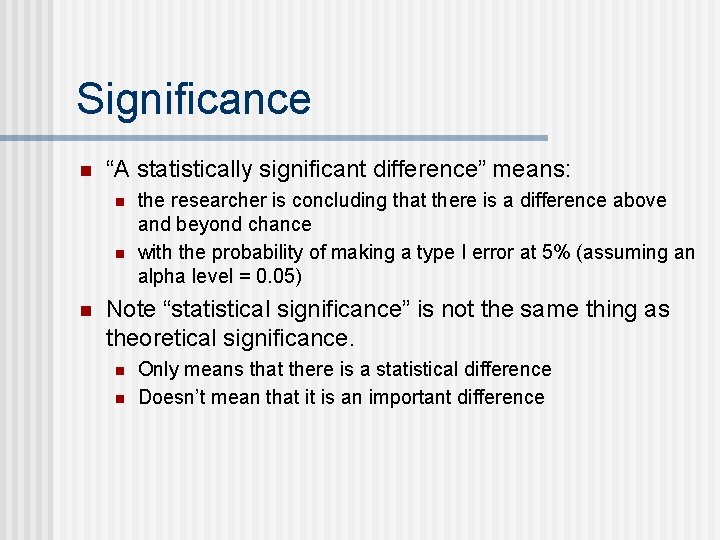

Significance

- “A statistically significant difference” means:
  - the researcher is concluding that there is a difference above and beyond chance
  - with the probability of making a Type I error at 5% (assuming an alpha level = 0.05)
- Note: “statistical significance” is not the same thing as theoretical significance
  - It only means that there is a statistical difference
  - It doesn’t mean that it is an important difference
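A worked example of the gap between statistical and practical importance, using made-up numbers: a half-point difference, an assumed population SD of 10, and 10,000 participants per group. The p-value uses a two-tailed normal (z) approximation, and the effect size is Cohen's d (the difference in SD units), which is introduced here as an illustration rather than taken from the slides.

```python
import math
from statistics import NormalDist

# Hypothetical study: very large groups, tiny true difference
diff = 0.5        # difference between group means (points)
sigma = 10.0      # assumed population SD
n = 10_000        # participants per group

se = sigma * math.sqrt(2 / n)              # standard error of the difference
z = diff / se
p = 2 * (1 - NormalDist().cdf(abs(z)))     # two-tailed p-value (normal approximation)
cohens_d = diff / sigma                    # effect size in SD units

print(f"z = {z:.2f}, p = {p:.4f}, Cohen's d = {cohens_d:.2f}")
```

The difference is statistically significant at alpha = 0.05, yet it amounts to one-twentieth of a standard deviation, well below the 0.2 that Cohen's conventions label a "small" effect.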

Non-Significance

- Failing to reject the null hypothesis
  - Generally, we are not interested in “accepting the null hypothesis” (remember, we can’t prove things, only disprove them)
  - Usually check to see if you made a Type II error (failed to detect a difference that is really there)
    - Check the statistical power of your test
      - Is the sample size too small?
      - Are the effects that you’re looking for really small?
    - Check your controls: maybe there is too much variability
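Checking power can be done before collecting data by estimating how many participants are needed. The sketch below uses the standard normal-approximation formula for comparing two group means with a two-tailed test, $n = 2\left(\frac{(z_{1-\alpha/2} + z_{\text{power}})\,\sigma}{\delta}\right)^2$ per group, where $\delta$ is the true difference. The effect sizes and SD are hypothetical, and `n_per_group` is a hypothetical helper name; a t-based calculation would give slightly larger numbers for small samples.

```python
import math
from statistics import NormalDist

def n_per_group(effect, sigma, alpha=0.05, power=0.80):
    """Approximate participants per group for a two-tailed, two-group comparison
    (normal approximation): n = 2 * ((z_{1-alpha/2} + z_power) * sigma / effect) ** 2."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) * sigma / effect) ** 2)

# Hypothetical: detect a 4-point difference when the population SD is 10
print(n_per_group(effect=4, sigma=10))
# Halving the effect roughly quadruples the required sample size
print(n_per_group(effect=2, sigma=10))
```

Both items on the checklist show up in the formula: small effects (small $\delta$) and high variability (large $\sigma$) each push the required sample size up.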

Next time: Inferential Statistical Tests

- Different statistical tests
  - “Generic test”
  - T-test
  - Analysis of Variance (ANOVA)