Using services in DIRAC (A. Tsaregorodtsev, CPPM, Marseille)

- Slides: 19

Using services in DIRAC. A. Tsaregorodtsev, CPPM, Marseille. 2nd ARDA Workshop, 21-23 June 2004, CERN; LHCb week, 27 May 2004, CERN.

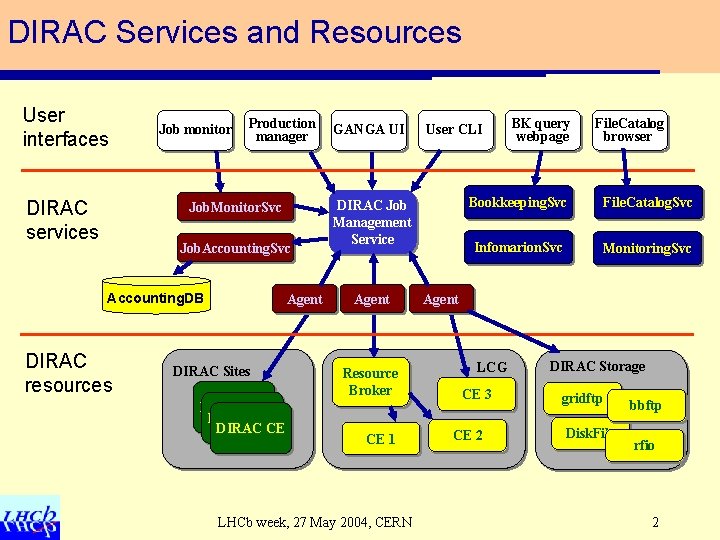

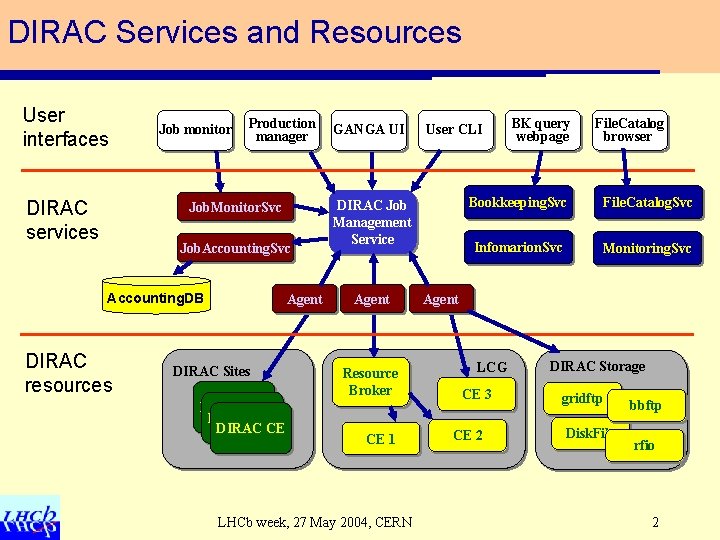

DIRAC Services and Resources

[Architecture diagram with three tiers:]

- User interfaces: Job monitor, Production manager, GANGA UI, User CLI, BK query webpage, FileCatalog browser
- DIRAC services: DIRAC Job Management Service, JobMonitorSvc, JobAccountingSvc (with AccountingDB), BookkeepingSvc, FileCatalogSvc, InformationSvc, MonitoringSvc
- DIRAC resources: Agents at DIRAC Sites (DIRAC CE) and on LCG (Resource Broker, CE 1, CE 2, CE 3); DIRAC Storage (DiskFile; gridftp, bbftp, rfio)

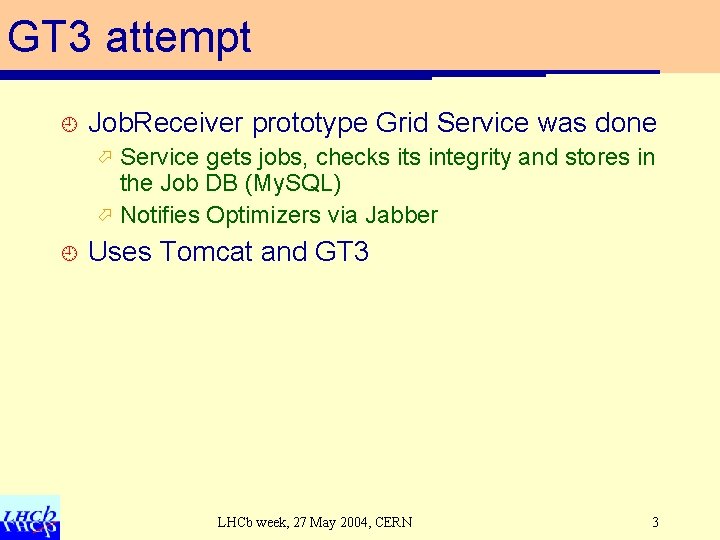

GT3 attempt

- JobReceiver prototype Grid Service was done
  - Service gets jobs, checks their integrity and stores them in the Job DB (MySQL)
  - Notifies Optimizers via Jabber
- Uses Tomcat and GT3

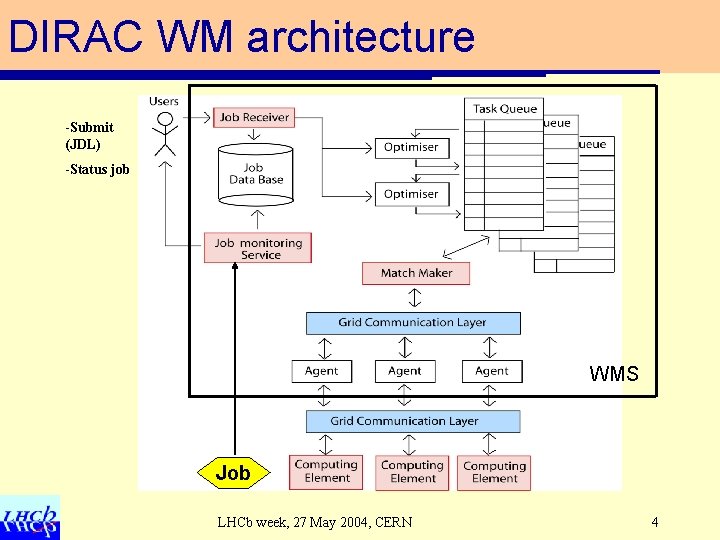

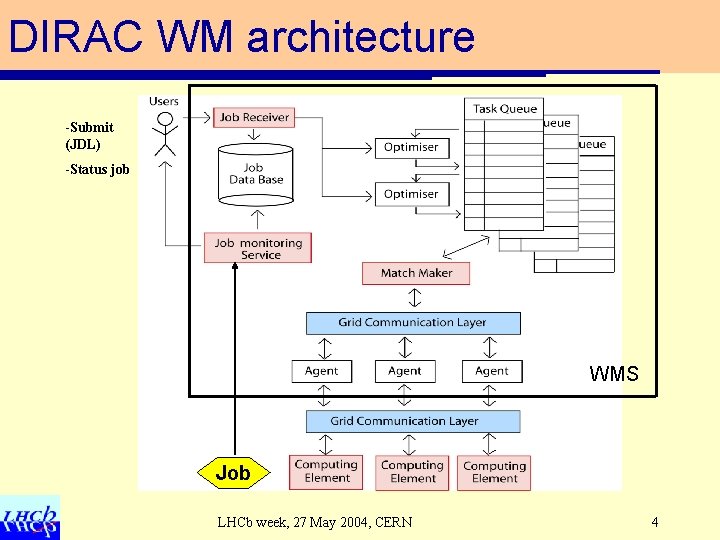

DIRAC WM architecture

[Diagram: job source, WMS, and job. Arrow labels: Submit (JDL), Status.]

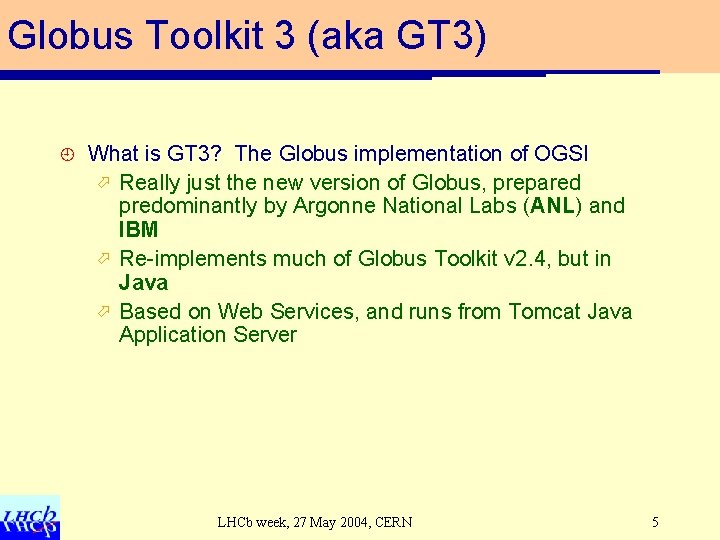

Globus Toolkit 3 (aka GT3)

- What is GT3?
  - The Globus implementation of OGSI
  - Really just the new version of Globus, prepared predominantly by Argonne National Laboratory (ANL) and IBM
  - Re-implements much of Globus Toolkit v2.4, but in Java
  - Based on Web Services, and runs from the Tomcat Java application server

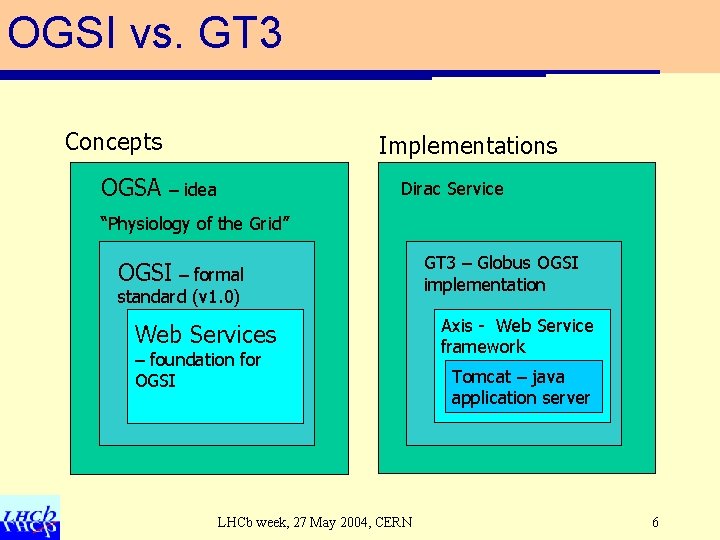

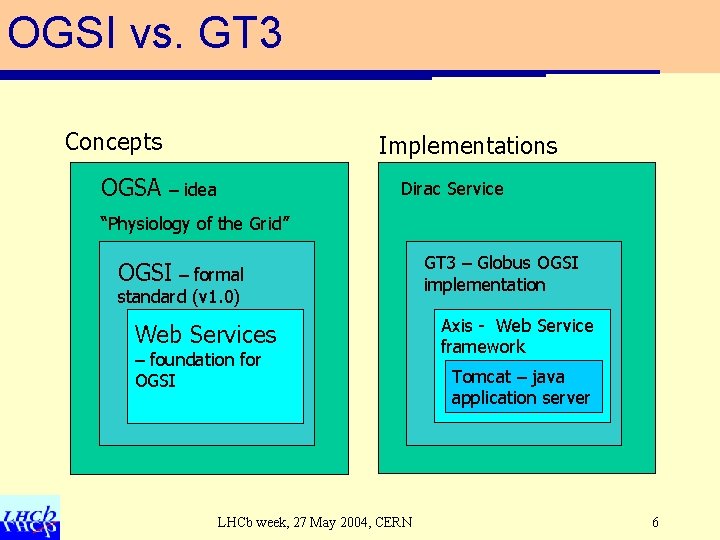

OGSI vs. GT3

- Concepts
  - OGSA: the idea (“Physiology of the Grid”)
  - OGSI: formal standard (v1.0)
  - Web Services: foundation for OGSI
- Implementations
  - DIRAC Service
  - GT3: Globus OGSI implementation
  - Axis: Web Service framework
  - Tomcat: Java application server

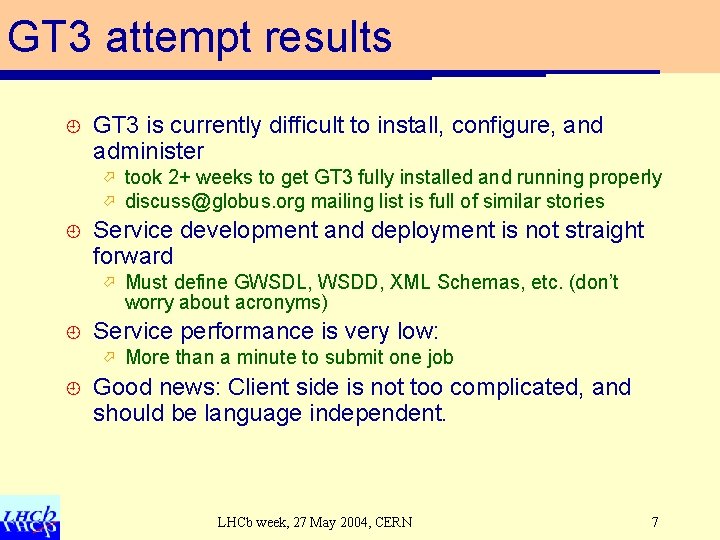

GT3 attempt results

- GT3 is currently difficult to install, configure, and administer
  - Took 2+ weeks to get GT3 fully installed and running properly
  - The discuss@globus.org mailing list is full of similar stories
- Service development and deployment is not straightforward
  - Must define GWSDL, WSDD, XML Schemas, etc. (don’t worry about acronyms)
- Service performance is very low:
  - More than a minute to submit one job
- Good news: the client side is not too complicated, and should be language independent.

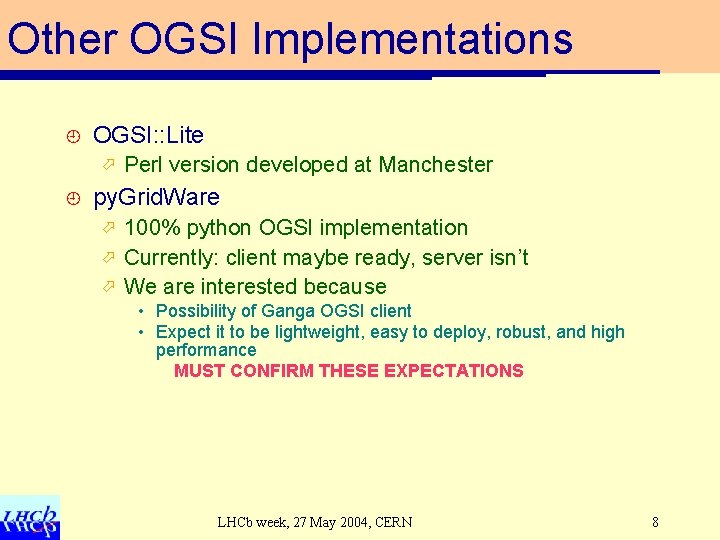

Other OGSI Implementations

- OGSI::Lite
  - Perl version developed at Manchester
- pyGridWare
  - 100% Python OGSI implementation
  - Currently: client maybe ready, server isn’t
  - We are interested because
    - Possibility of a Ganga OGSI client
    - Expect it to be lightweight, easy to deploy, robust, and high performance
- MUST CONFIRM THESE EXPECTATIONS

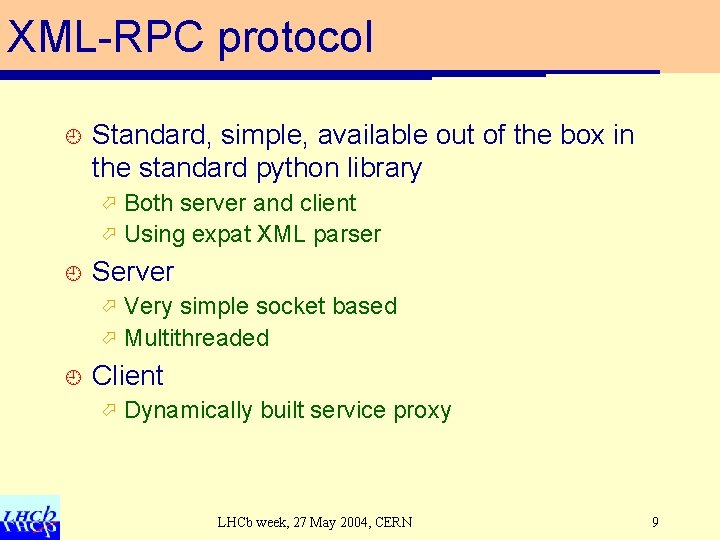

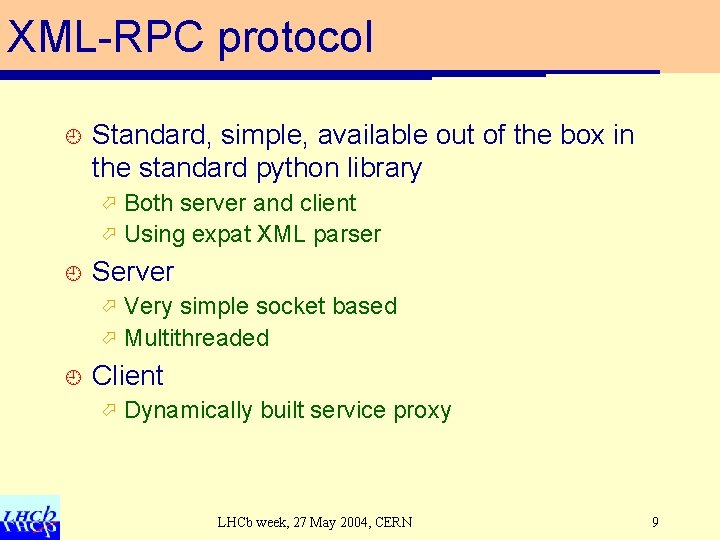

XML-RPC protocol

- Standard, simple, available out of the box in the standard Python library
  - Both server and client
  - Using the expat XML parser
- Server
  - Very simple, socket based
  - Multithreaded
- Client
  - Dynamically built service proxy
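The server and client pieces listed on this slide can be sketched with the current Python 3 standard library (`xmlrpc.server`, `xmlrpc.client`, `socketserver`). This is a minimal illustration, not the DIRAC code: the `ping` method and the helper names are hypothetical, and the module names differ from those available in 2004.

```python
import threading
from socketserver import ThreadingMixIn
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


class ThreadedXMLRPCServer(ThreadingMixIn, SimpleXMLRPCServer):
    """Socket-based XML-RPC server that handles each request in its own thread."""
    daemon_threads = True


def ping(name):
    # Hypothetical service method, used only for illustration.
    return "hello " + name


def start_server(host="127.0.0.1", port=0):
    """Start the server in a background thread; port 0 lets the OS pick a free port."""
    server = ThreadedXMLRPCServer((host, port), logRequests=False)
    server.register_function(ping)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call_ping_once():
    """Start a server, call it through a dynamically built proxy, then shut it down."""
    server = start_server()
    host, port = server.server_address
    # ServerProxy creates the remote method object on attribute access,
    # so no client-side stub has to be generated in advance.
    proxy = ServerProxy("http://%s:%d" % (host, port))
    try:
        return proxy.ping("DIRAC")
    finally:
        server.shutdown()
        server.server_close()
```

Calling `call_ping_once()` exercises the whole round trip on the local machine; a real service would keep the server running and register its own methods.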

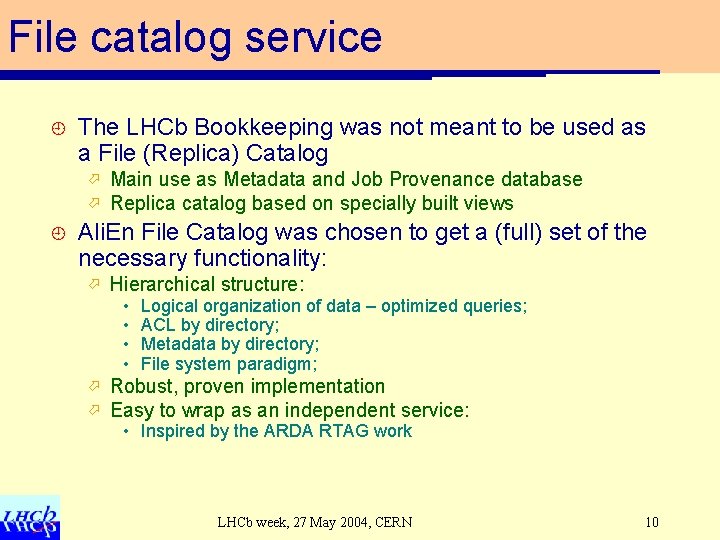

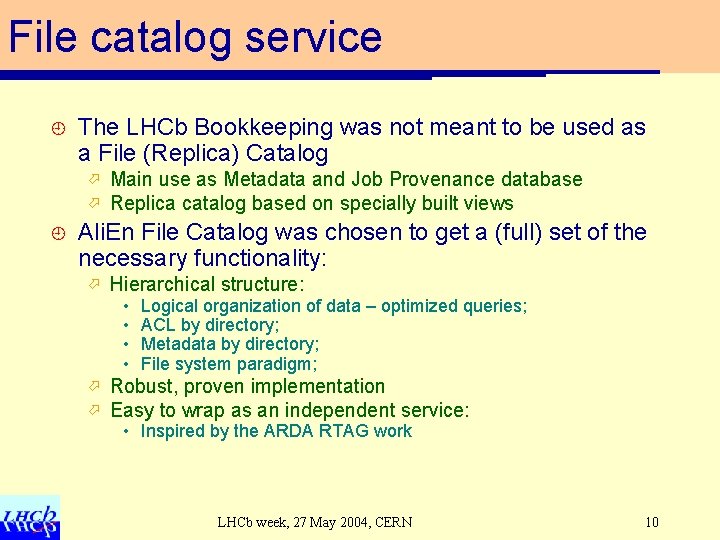

File catalog service

- The LHCb Bookkeeping was not meant to be used as a File (Replica) Catalog
  - Main use as Metadata and Job Provenance database
  - Replica catalog based on specially built views
- AliEn File Catalog was chosen to get a (full) set of the necessary functionality:
  - Hierarchical structure:
    - Logical organization of data: optimized queries
    - ACL by directory
    - Metadata by directory
    - File system paradigm
  - Robust, proven implementation
  - Easy to wrap as an independent service:
    - Inspired by the ARDA RTAG work

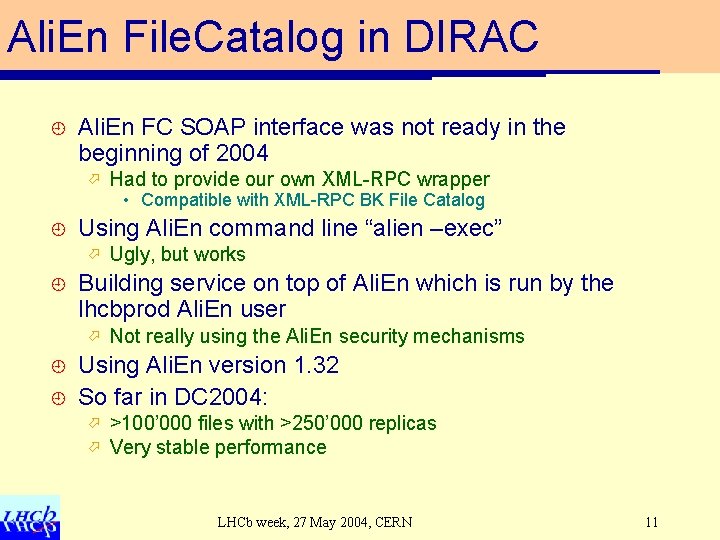

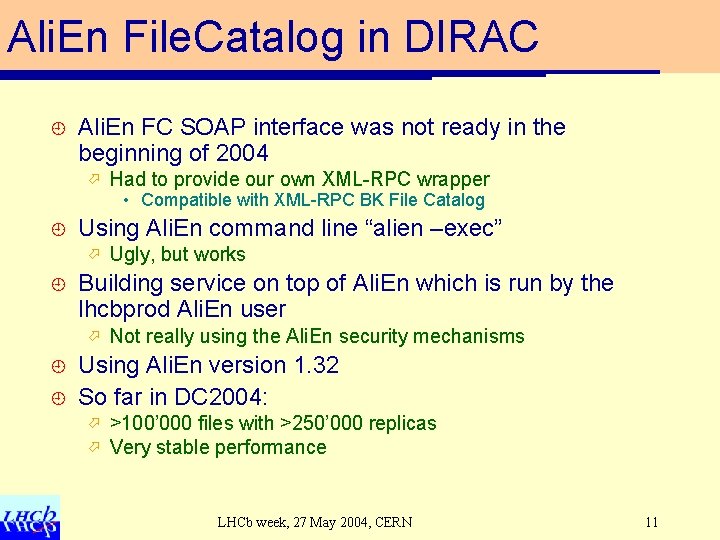

AliEn FileCatalog in DIRAC

- AliEn FC SOAP interface was not ready in the beginning of 2004
  - Had to provide our own XML-RPC wrapper
    - Compatible with XML-RPC BK File Catalog
- Using AliEn command line “alien –exec”
  - Ugly, but works
- Building service on top of AliEn which is run by the lhcbprod AliEn user
  - Not really using the AliEn security mechanisms
- Using AliEn version 1.32
- So far in DC 2004:
  - >100'000 files with >250'000 replicas
  - Very stable performance
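Wrapping a command-line tool as a service method, as described on this slide, can be sketched as below. The exact AliEn invocation is not given here, so the sketch uses a stand-in command (the Python interpreter echoing its arguments); `make_cli_method`, `STAND_IN_PREFIX`, and the returned dictionary layout are all assumptions made for illustration.

```python
import subprocess
import sys


def make_cli_method(command_prefix):
    """Return a function that runs command_prefix + args and reports the outcome."""
    def method(*args):
        result = subprocess.run(
            list(command_prefix) + [str(a) for a in args],
            capture_output=True,
            text=True,
            timeout=60,
        )
        # A plain dict of int and str values can be marshalled by XML-RPC.
        return {"status": result.returncode, "output": result.stdout.strip()}
    return method


# Stand-in for the real catalog command line: it only echoes its arguments.
STAND_IN_PREFIX = [sys.executable, "-c", "import sys; print(' '.join(sys.argv[1:]))"]
echo_catalog_command = make_cli_method(STAND_IN_PREFIX)
```

A function built this way could be registered on an XML-RPC server with `register_function`, which is one plausible reading of the "ugly, but works" approach: every remote call costs a new process, but no catalog internals need to be touched.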

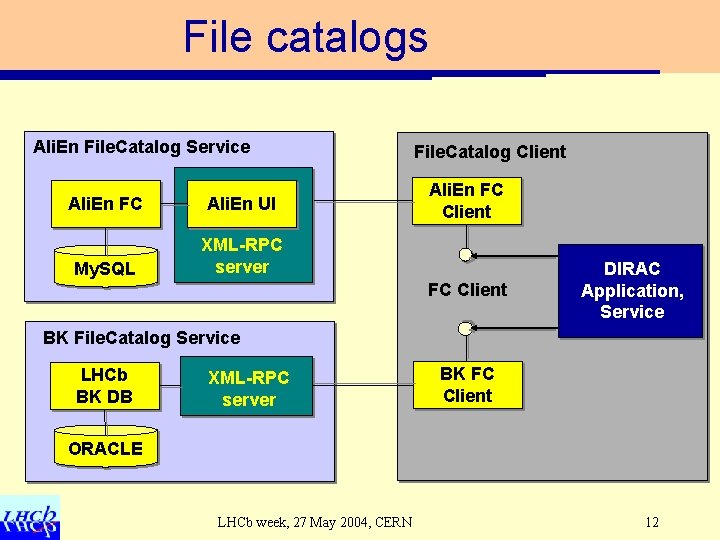

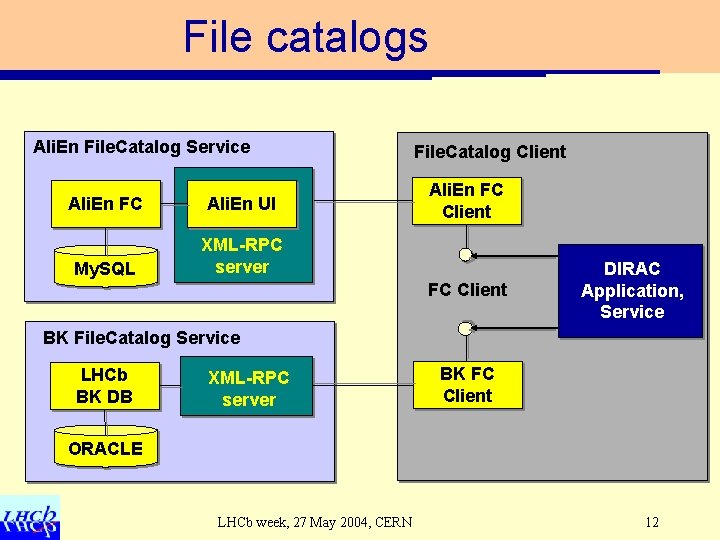

File catalogs

[Diagram: a DIRAC application or service uses one FileCatalog client interface to reach two catalog services:]

- AliEn FileCatalog Service: XML-RPC server, AliEn FC Client, AliEn UI, AliEn FC (MySQL)
- BK FileCatalog Service: XML-RPC server, BK FC Client, LHCb BK DB (ORACLE)

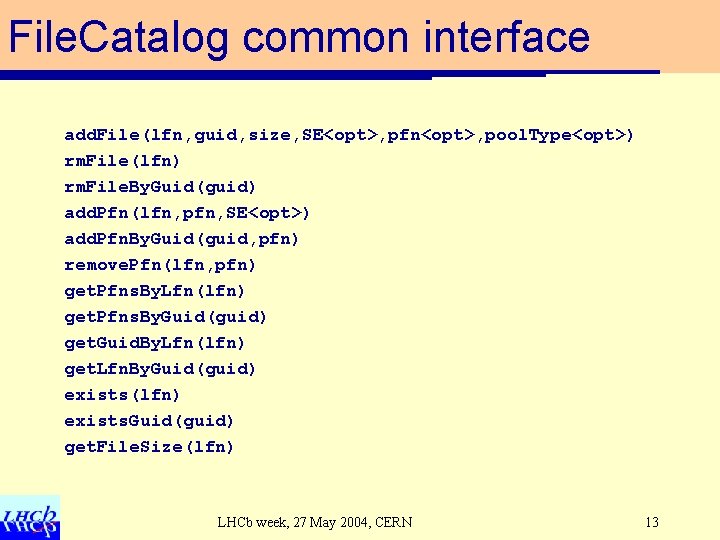

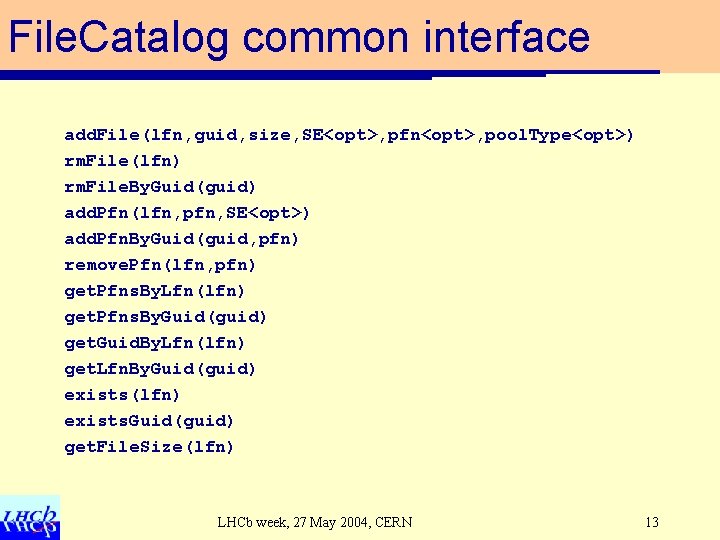

FileCatalog common interface

- addFile(lfn, guid, size, SE<opt>, pfn<opt>, poolType<opt>)
- rmFile(lfn)
- rmFileByGuid(guid)
- addPfn(lfn, pfn, SE<opt>)
- addPfnByGuid(guid, pfn)
- removePfn(lfn, pfn)
- getPfnsByLfn(lfn)
- getPfnsByGuid(guid)
- getGuidByLfn(lfn)
- getLfnByGuid(guid)
- exists(lfn)
- existsGuid(guid)
- getFileSize(lfn)
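To make the shape of this interface concrete, here is a toy in-memory catalog implementing a subset of the methods. Only the method names and argument lists come from the slide; the return values (booleans, sorted lists, `None` for unknown entries) and the storage layout are assumptions, and the `ByGuid` variants not shown would follow the same pattern.

```python
class InMemoryFileCatalog:
    """Toy catalog: maps each LFN to its GUID, size and replicas (PFN -> SE)."""

    def __init__(self):
        self._files = {}        # lfn -> {"guid", "size", "poolType", "pfns"}
        self._lfn_by_guid = {}  # guid -> lfn

    def addFile(self, lfn, guid, size, SE="", pfn="", poolType=""):
        if lfn in self._files or guid in self._lfn_by_guid:
            return False  # assumed behaviour: refuse duplicates
        self._files[lfn] = {"guid": guid, "size": size,
                            "poolType": poolType, "pfns": {}}
        self._lfn_by_guid[guid] = lfn
        if pfn:
            self._files[lfn]["pfns"][pfn] = SE
        return True

    def rmFile(self, lfn):
        entry = self._files.pop(lfn, None)
        if entry is None:
            return False
        del self._lfn_by_guid[entry["guid"]]
        return True

    def addPfn(self, lfn, pfn, SE=""):
        if lfn not in self._files:
            return False
        self._files[lfn]["pfns"][pfn] = SE
        return True

    def removePfn(self, lfn, pfn):
        entry = self._files.get(lfn)
        if entry is None or pfn not in entry["pfns"]:
            return False
        del entry["pfns"][pfn]
        return True

    def getPfnsByLfn(self, lfn):
        entry = self._files.get(lfn)
        return sorted(entry["pfns"]) if entry else []

    def getGuidByLfn(self, lfn):
        entry = self._files.get(lfn)
        return entry["guid"] if entry else None

    def getLfnByGuid(self, guid):
        return self._lfn_by_guid.get(guid)

    def exists(self, lfn):
        return lfn in self._files

    def existsGuid(self, guid):
        return guid in self._lfn_by_guid

    def getFileSize(self, lfn):
        entry = self._files.get(lfn)
        return entry["size"] if entry else None
```

Because client code depends only on these method names, the same calls could be directed at either catalog service behind an XML-RPC proxy.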

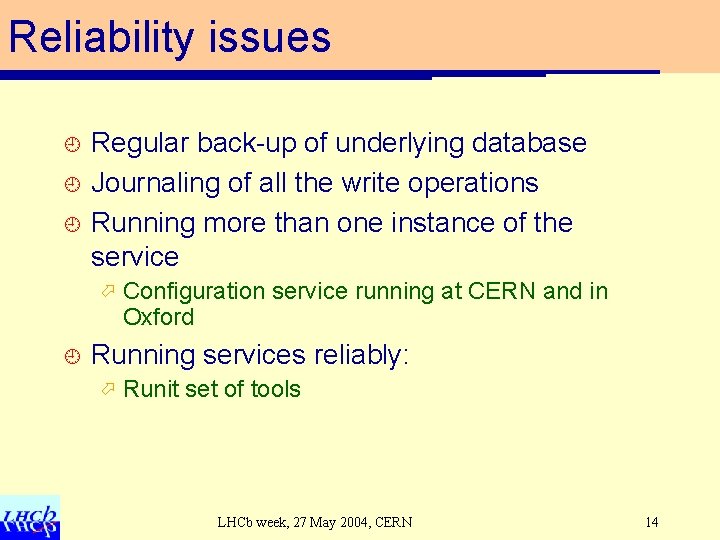

Reliability issues

- Regular back-up of the underlying database
- Journaling of all the write operations
- Running more than one instance of the service
  - Configuration service running at CERN and in Oxford
- Running services reliably:
  - Runit set of tools
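Journaling of write operations can be sketched as an append-only file with one record per call, which can later be replayed against a restored backend. The journal format (one JSON object per line), the class names, and the replay mechanism below are assumptions for illustration; the slides do not describe how DIRAC implemented it.

```python
import json
import os
import tempfile


class WriteJournal:
    """Append-only journal: one JSON line per write operation."""

    def __init__(self, path):
        self.path = path

    def record(self, method, *args):
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps({"method": method, "args": list(args)}) + "\n")
            f.flush()
            os.fsync(f.fileno())  # push the entry to disk before returning

    def replay(self, target):
        """Re-apply every journaled call to target; return the number replayed."""
        count = 0
        if not os.path.exists(self.path):
            return count
        with open(self.path, encoding="utf-8") as f:
            for line in f:
                entry = json.loads(line)
                getattr(target, entry["method"])(*entry["args"])
                count += 1
        return count


class CallRecorder:
    """Stand-in backend that just remembers the calls replayed into it."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        def method(*args):
            self.calls.append((name, args))
        return method


def demo_journal():
    with tempfile.TemporaryDirectory() as tmp:
        journal = WriteJournal(os.path.join(tmp, "journal.log"))
        journal.record("addPfn", "/lhcb/f1", "pfn1")
        journal.record("removePfn", "/lhcb/f1", "pfn1")
        backend = CallRecorder()
        journal.replay(backend)
        return backend.calls
```

Combined with regular database back-ups, replaying the journal entries written since the last back-up is one way to recover writes that the back-up missed.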

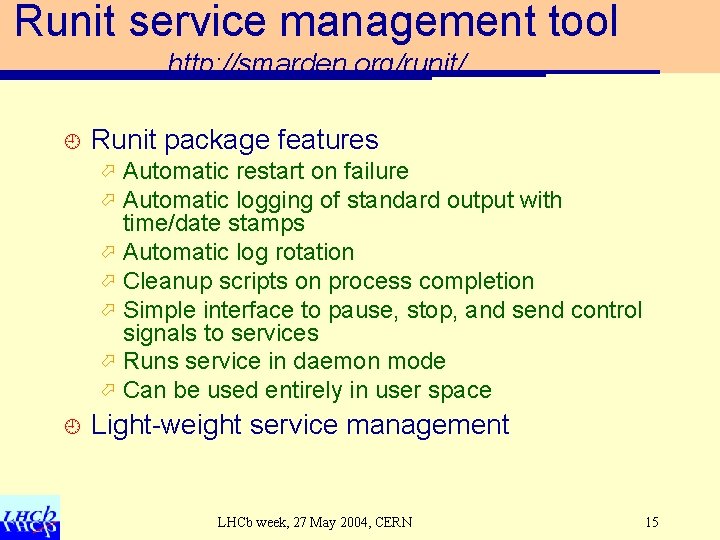

Runit service management tool

http://smarden.org/runit/

- Runit package features
  - Automatic restart on failure
  - Automatic logging of standard output with time/date stamps
  - Automatic log rotation
  - Cleanup scripts on process completion
  - Simple interface to pause, stop, and send control signals to services
  - Runs service in daemon mode
  - Can be used entirely in user space
- Light-weight service management

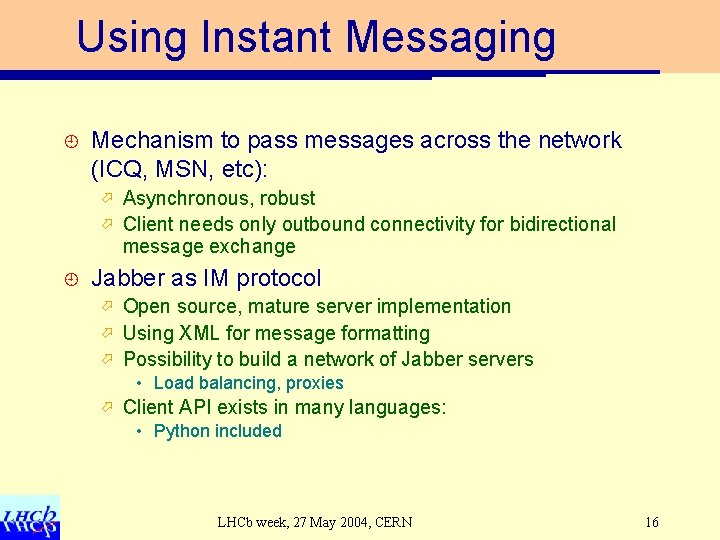

Using Instant Messaging

- Mechanism to pass messages across the network (ICQ, MSN, etc.):
  - Asynchronous, robust
  - Client needs only outbound connectivity for bidirectional message exchange
- Jabber as IM protocol
  - Open source, mature server implementation
  - Using XML for message formatting
  - Possibility to build a network of Jabber servers
    - Load balancing, proxies
  - Client API exists in many languages:
    - Python included

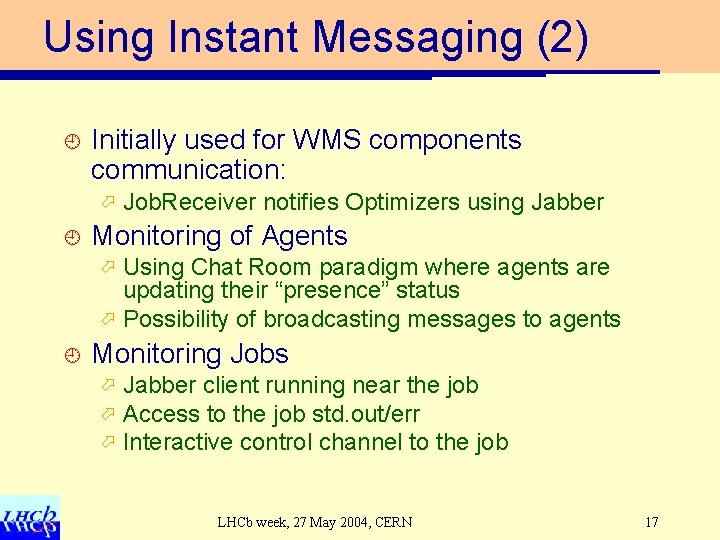

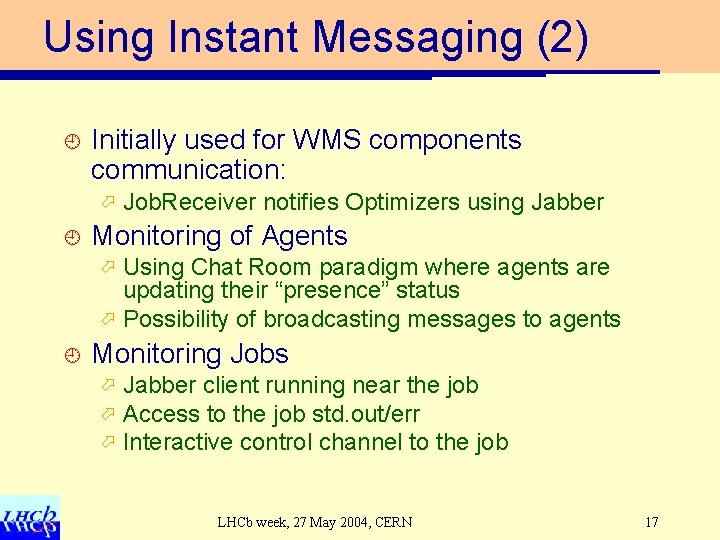

Using Instant Messaging (2)

- Initially used for WMS components communication:
  - JobReceiver notifies Optimizers using Jabber
- Monitoring of Agents
  - Using Chat Room paradigm where agents are updating their “presence” status
  - Possibility of broadcasting messages to agents
- Monitoring Jobs
  - Jabber client running near the job
  - Access to the job stdout/stderr
  - Interactive control channel to the job

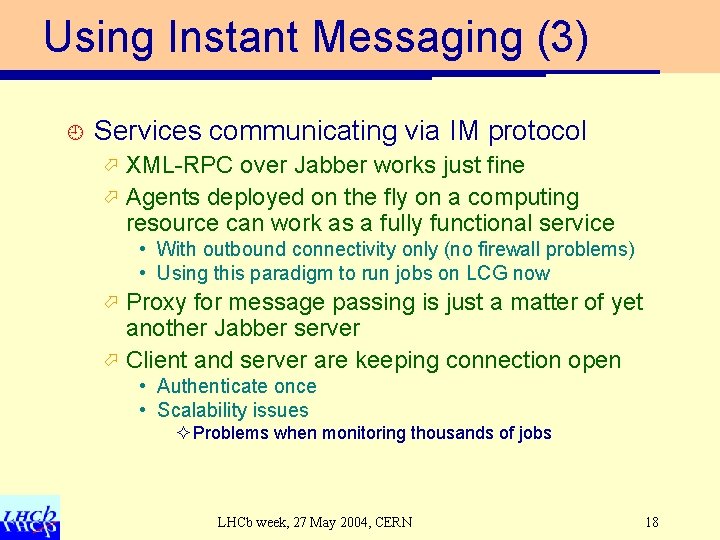

Using Instant Messaging (3)

- Services communicating via IM protocol
  - XML-RPC over Jabber works just fine
  - Agents deployed on the fly on a computing resource can work as a fully functional service
    - With outbound connectivity only (no firewall problems)
    - Using this paradigm to run jobs on LCG now
  - Proxy for message passing is just a matter of yet another Jabber server
  - Client and server are keeping the connection open
    - Authenticate once
    - Scalability issues
      - Problems when monitoring thousands of jobs
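The idea of carrying XML-RPC over a messaging channel can be illustrated without a Jabber library: the call is marshalled to an XML string, sent as a message body, and the response comes back the same way. In the sketch below two in-process queues stand in for the Jabber connection, and `getJobStatus` is a hypothetical method; only `xmlrpc.client.dumps` and `xmlrpc.client.loads` are real standard-library calls.

```python
import queue
import xmlrpc.client


def encode_call(method, *params):
    """Marshal a method call into an XML-RPC request string."""
    return xmlrpc.client.dumps(tuple(params), methodname=method)


def handle_message(body, functions):
    """Agent side: decode a request, run the method, marshal the response."""
    params, method = xmlrpc.client.loads(body)
    result = functions[method](*params)
    return xmlrpc.client.dumps((result,), methodresponse=True)


def decode_response(body):
    """Client side: unmarshal the single return value of a response."""
    params, _ = xmlrpc.client.loads(body)
    return params[0]


def demo_round_trip():
    # The two queues stand in for messages exchanged through a Jabber server.
    to_agent, to_client = queue.Queue(), queue.Queue()
    functions = {"getJobStatus": lambda job_id: "job %d running" % job_id}

    to_agent.put(encode_call("getJobStatus", 42))
    to_client.put(handle_message(to_agent.get(), functions))
    return decode_response(to_client.get())
```

Since both sides only send and receive messages through the server, the agent needs no inbound port, which matches the outbound-connectivity point made on the slide.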

Conclusions

- Building services from the existing components is rather easy;
  - Securing services is not easy
- Having more than one implementation of services is a must;
- Instant Messaging is very promising for creating dynamically deployed, light-weight services (agents).