Using Sensitivity Analysis and Visualization Techniques to Open Black Box Data Mining Models

Using Sensitivity Analysis and Visualization Techniques to Open Black Box Data Mining Models. Information Sciences 225 (2013) 1-17. Publisher: Elsevier. Impact factor 2015/2016: 4.95 © Mai Elshehaly

Authors • Paulo Cortez • Mark J. Embrechts

Motivation (why) • Data mining (DM) addresses the need to extract useful knowledge from raw data

Examples of Useful Knowledge

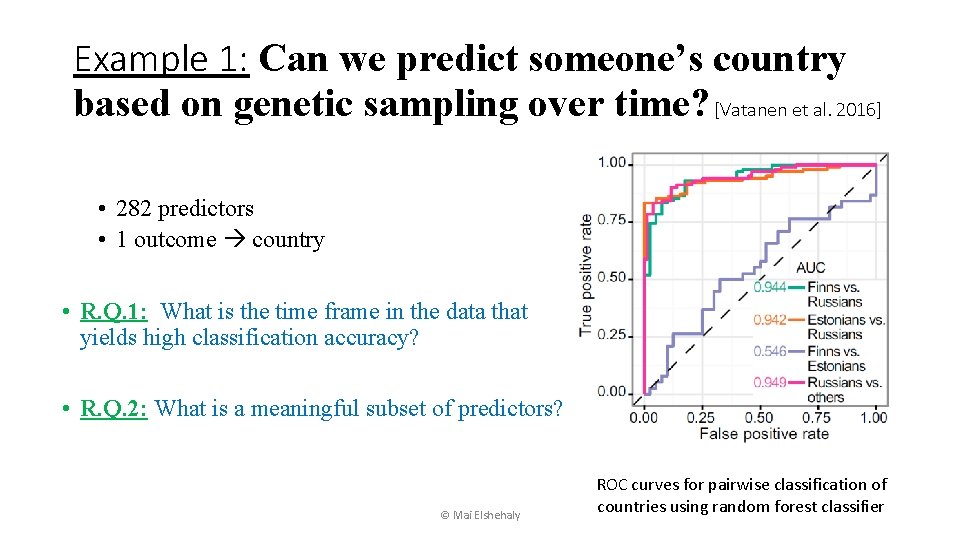

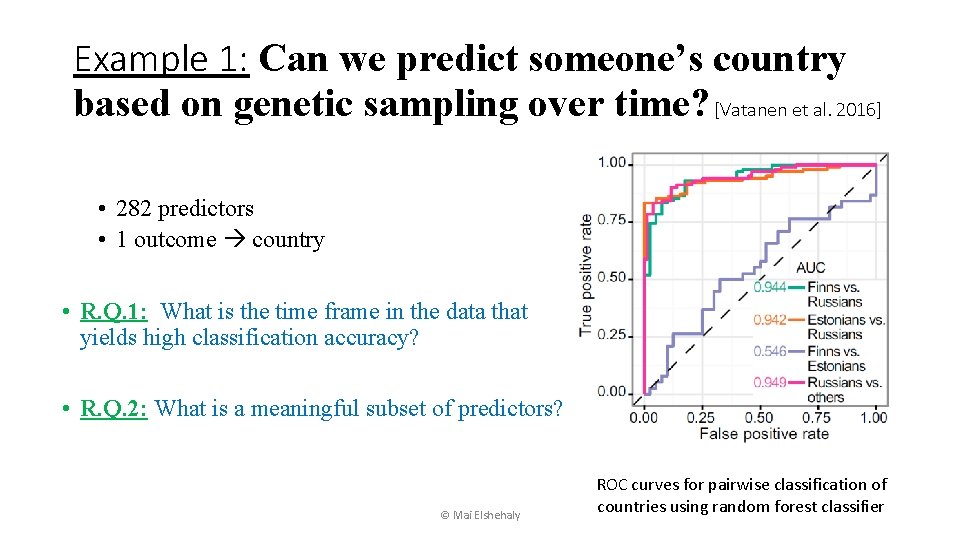

Example 1: Can we predict someone’s country based on genetic sampling over time? [Vatanen et al. 2016] • 282 predictors • 1 outcome (country) • RQ 1: What time frame in the data yields high classification accuracy? • RQ 2: What is a meaningful subset of predictors? • Figure: ROC curves for pairwise classification of countries using a random forest classifier

![Example 2: Can we classify videos by facial expression? (Tam et al. 2017)](https://slidetodoc.com/presentation_image_h2/a5f84f8c377aea6fa7047ec75bd4d818/image-6.jpg)

Example 2: Can we classify videos by facial expression? [Tam et al. 2017]

![Example 3: Can we predict class dropout in MOOCs? (Chen et al. 2017)](https://slidetodoc.com/presentation_image_h2/a5f84f8c377aea6fa7047ec75bd4d818/image-7.jpg)

Example 3: Can we predict class dropout in MOOCs? [Chen et al. 2017]

Example 4: Can we predict the popularity of posts on social media (number of reshares)? [Chen Siming et al. 2017]

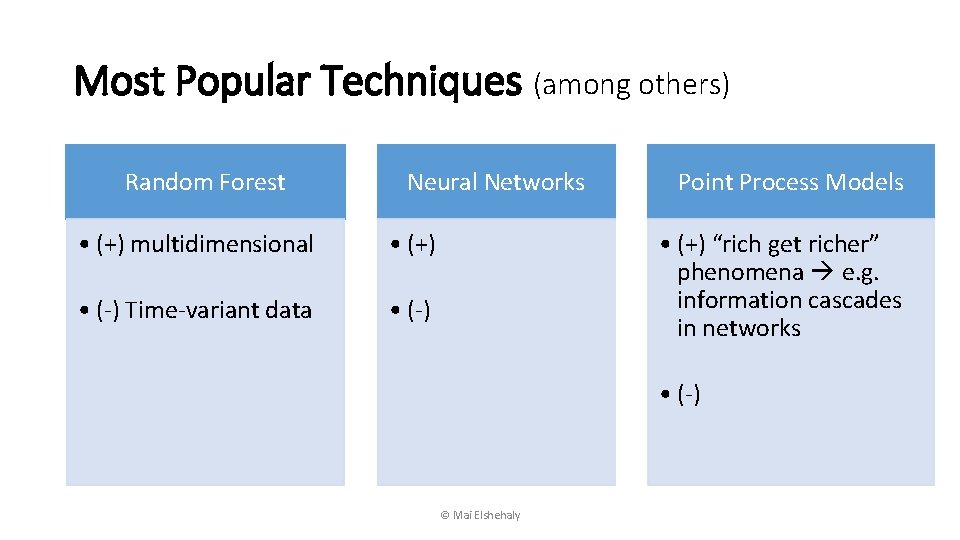

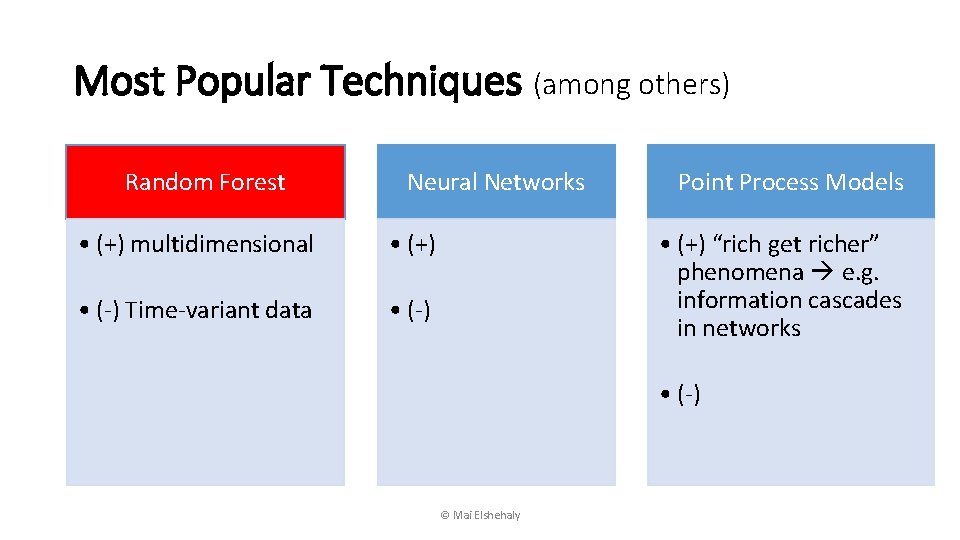

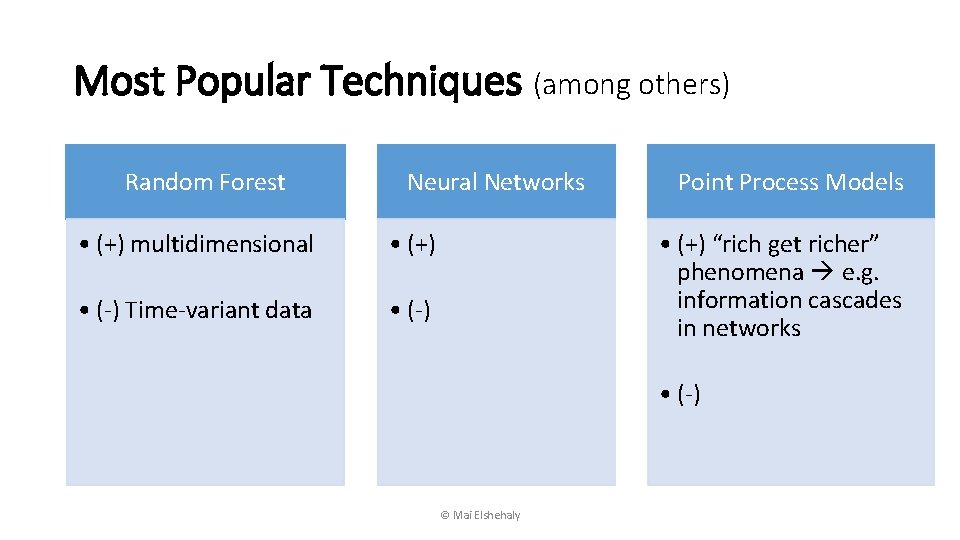

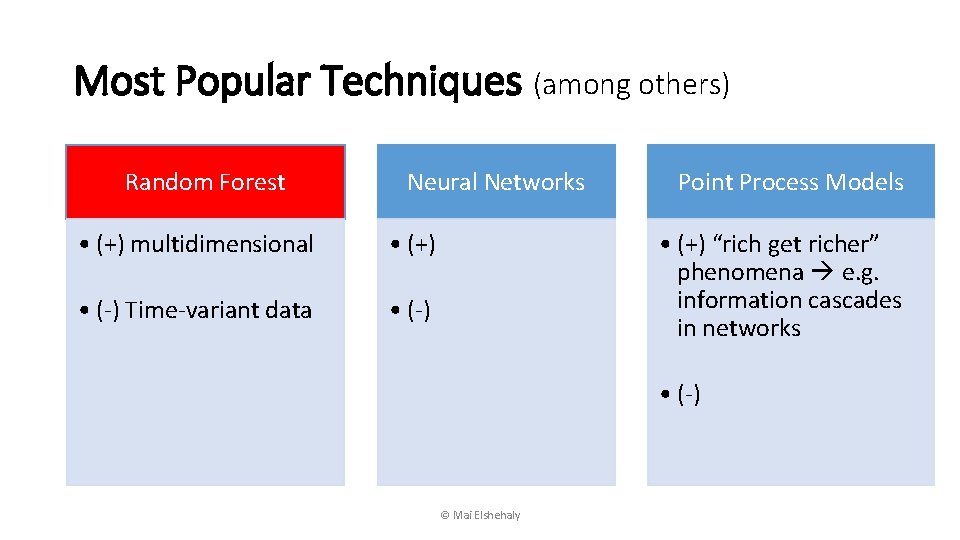

Most Popular Techniques (among others) • Random Forest, Neural Networks: (+) multidimensional data; (-) time-variant data • Point Process Models: (+) “rich get richer” phenomena, e.g. information cascades in networks

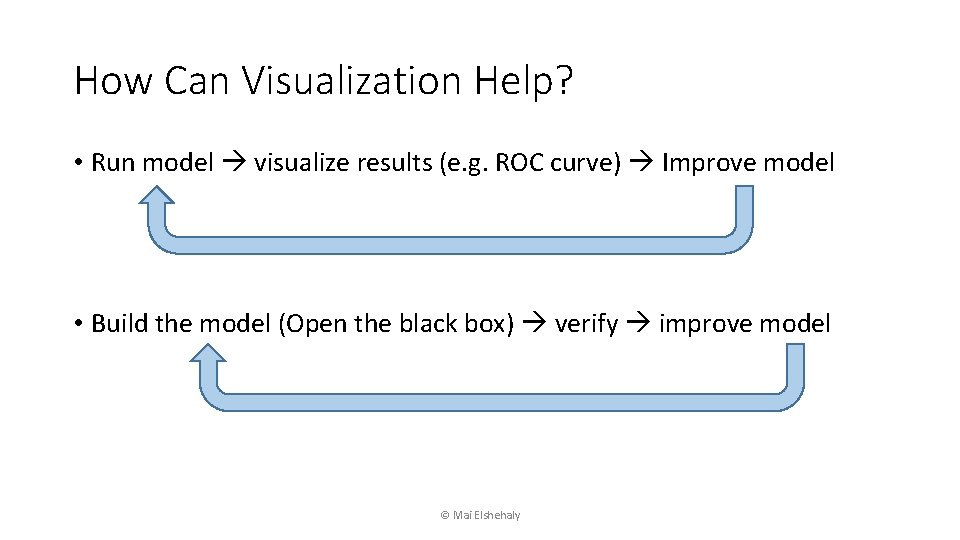

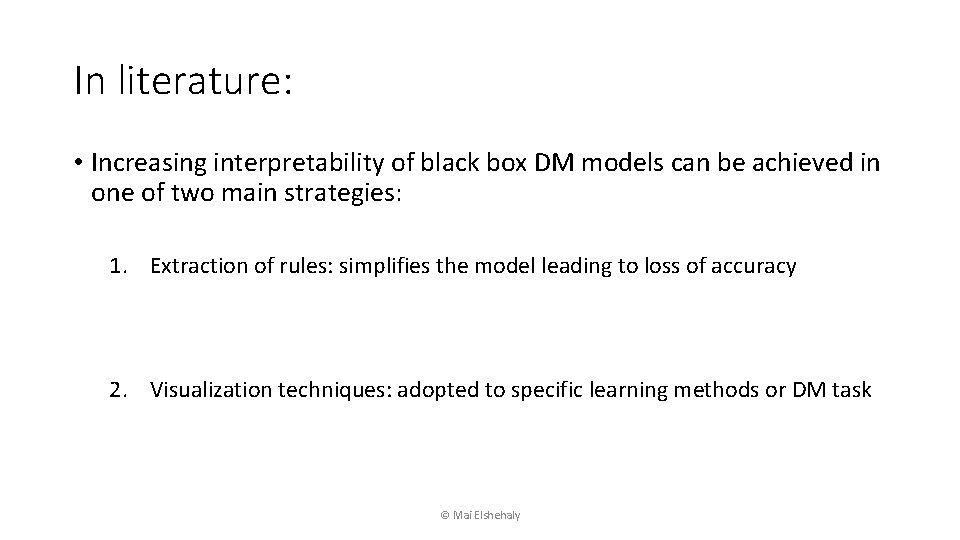

Challenges in Data Mining • The worth of a DM model can be determined by its: • Predictive capability • Computational requirements • Explanatory power • Many DM models are black boxes: too complex to be understood by humans

In the literature: • There are two main strategies for increasing the interpretability of black box DM models: 1. Extraction of rules: simplifies the model, which can lead to a loss of accuracy 2. Visualization techniques: usually adapted to specific learning methods or DM tasks

How Can Visualization Help? • Run model → visualize results (e.g., ROC curve) → improve model • Build the model → open the black box → verify → improve model

Contributions (what) • A novel visualization approach for extracting human-understandable knowledge from black box DM models • Five sensitivity analysis (SA) methods (three of which are new) • Four measures of input importance (one new) • Adaptation of the SA methods and measures to handle discrete variables and classification tasks • A new synthetic dataset for evaluation • Visualization plots for SA results: input importance bars, coloristic curve, surface and contour plots • Demonstrations on three black box models (NN, SVM, and RF) and one white box model (decision tree)

Proposed Approach (how) • Describes: • SA approaches • Visualization techniques • Learning methods • Datasets • Tests on: • Synthetic data • Real-world data

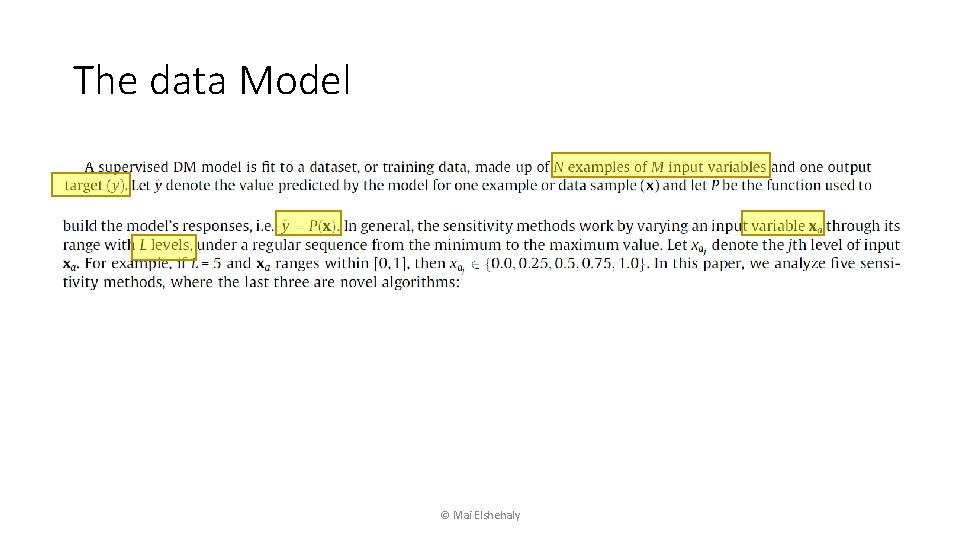

The Data Model

What just happened? • The authors characterized the data that is input to their system using an abstract model • This abstract model characterizes the different components of the data without going into domain-specific details (e.g., we don’t know whether the N samples and M variables are N patient samples with M genes, N cars with M locations, etc.)

Note to us: Section 3.1: Data Abstraction • Write an abstract description of the dataset you will work on. • Use mathematical notation to represent its different components. • Identify any high-level features or properties of the data that will influence your design. • Reuse the terminology and symbols introduced in this section throughout the paper to refer to data components.

Measures of sensitivity • What is sensitivity? • How the outcomes of a classifier change as one or more predictors change • What does it tell us? • The effect that input components (predictors) have on the classification of data • A measure of input importance

Example: A Classifier to Make a Decision • Amr Diab concert in Ismailia! Should you go or not? • x1: Is the weather good? • x2: Is the ticket affordable? • x3: Does your best friend want to go with you? • Decision: yes/no

Example: A Classifier to Make a Decision • If x1 = 1, x2 = 1, and x3 = 1 → go • If x1 = 1, x2 = 1, and x3 = 0 → ? • If x1 = 0, x2 = 0, and x3 = 1 → ? • The neuron needs to learn what is important to you to know when to output 1 (yes!) • A neuron fires if the value it calculates reaches a specified threshold

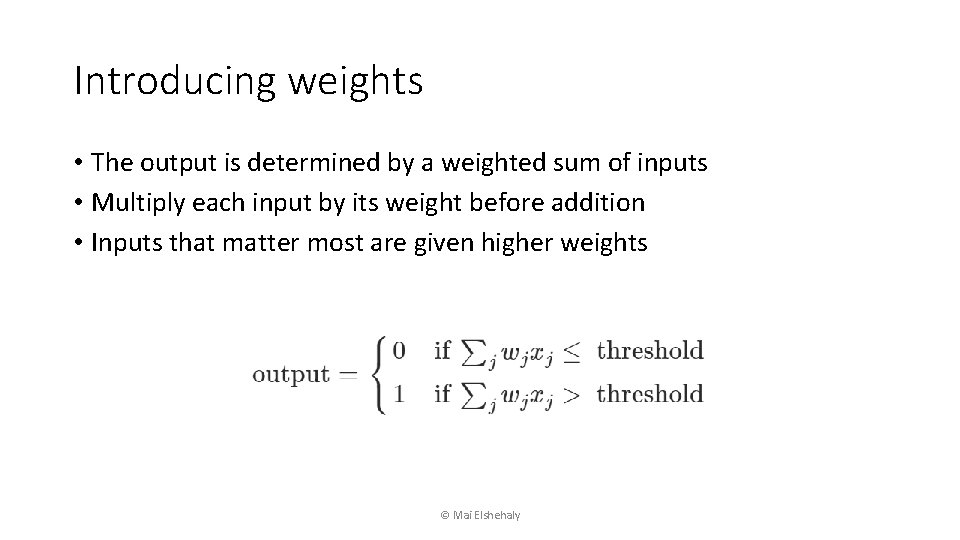

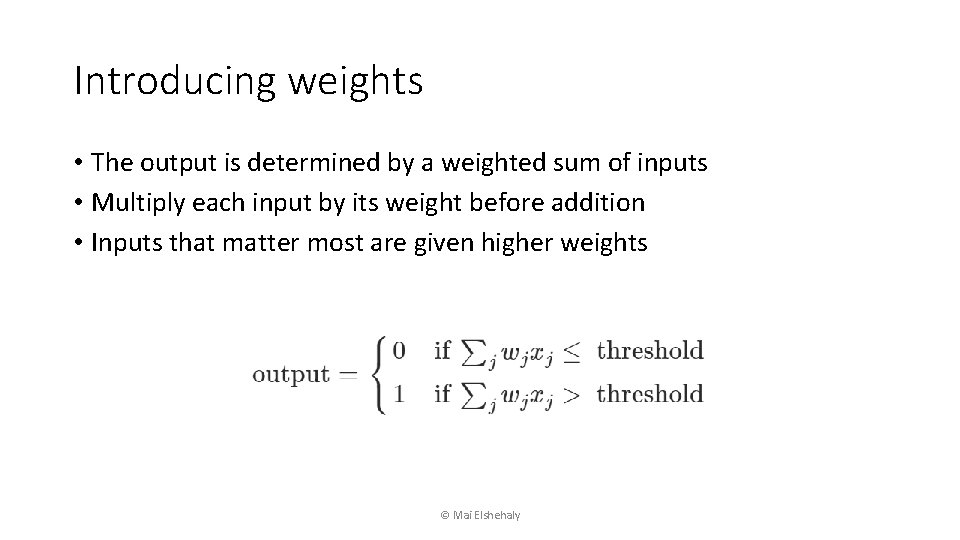

Example: A Classifier to Make a Decision • We can train the neuron to fire (say “yes” to Amr Diab!) if any two of the three conditions are satisfied • That is: • If the ticket is affordable and your friend is coming, you don’t care if it’s pouring rain (x1 = 0, x2 = 1, x3 = 1) • If the weather is good and your friend is coming, you don’t care if the ticket costs over 1,000 L.E. (x1 = 1, x2 = 0, x3 = 1) • If the weather is good and the ticket is affordable, you don’t care if your friend wants to watch Barney instead of going with you (x1 = 1, x2 = 1, x3 = 0)

Example: A Classifier to Make a Decision • In this case, the neuron outputs 1 if the sum x1 + x2 + x3 ≥ 2 • So we set the threshold to 2 for the neuron to fire • Is this good enough? • The above example treats all input conditions equally! • What if one of the inputs matters more to you than the others?
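The unweighted rule above (fire when at least two of the three conditions hold) can be checked exhaustively. This is a minimal sketch; the function name `neuron` is hypothetical and the inputs are assumed to be 0/1 values.

```python
from itertools import product


def neuron(x, threshold=2):
    """Unweighted threshold neuron: fire (1) when enough conditions hold."""
    return 1 if sum(x) >= threshold else 0


# x = (x1 good weather, x2 affordable ticket, x3 friend is coming)
for x in product([0, 1], repeat=3):
    print(x, "go" if neuron(x) else "don't go")
```

Exactly four of the eight input combinations make the neuron fire: the three "any two" cases listed above plus the case where all three conditions hold.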

Introducing weights • The output is determined by a weighted sum of inputs • Multiply each input by its weight before addition • Inputs that matter most are given higher weights

Example: Make a decision (cont.) • If the ticket price matters most, we can set w2 = 6 • Since you care equally about the weather and your friend, you can set w1 = w3 = 2 • Now set threshold = 5 • In case x1 = 0, x2 = 1, x3 = 1: • w1x1 + w2x2 + w3x3 = 2*0 + 6*1 + 2*1 = 8 > 5 → GO • In case x1 = 0, x2 = 1, x3 = 0: • w1x1 + w2x2 + w3x3 = 2*0 + 6*1 + 2*0 = 6 > 5 → GO • In case x1 = 1, x2 = 0, x3 = 1: • w1x1 + w2x2 + w3x3 = 2*1 + 6*0 + 2*1 = 4 < 5 → DON’T GO

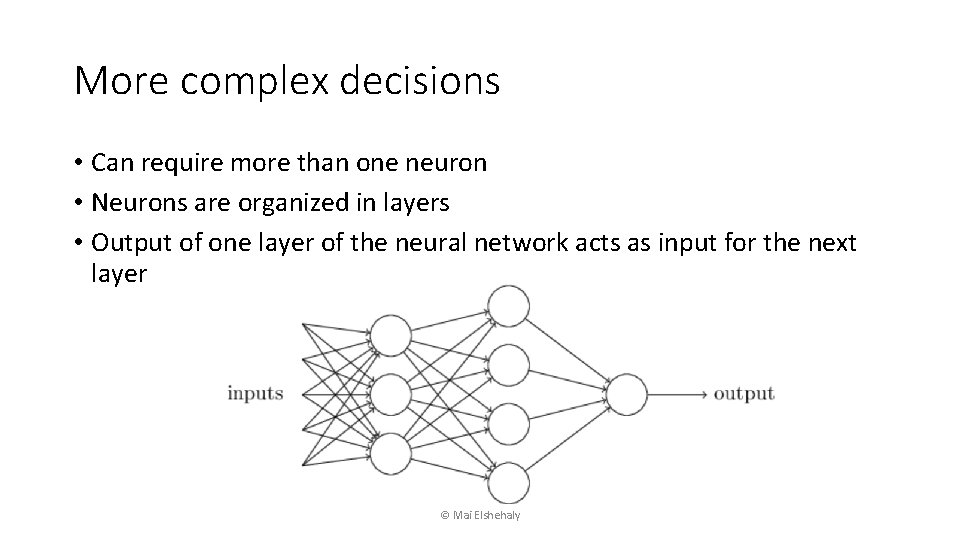

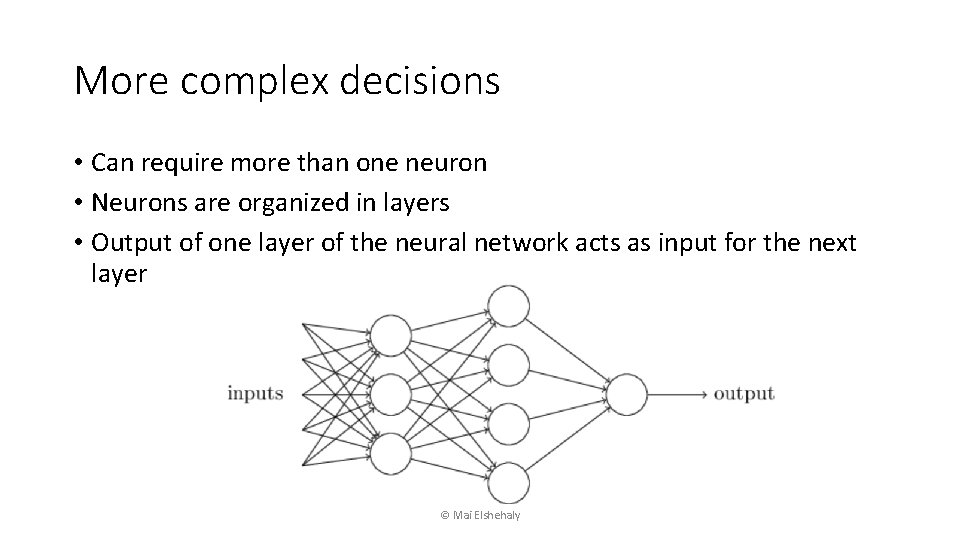
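The weighted-sum arithmetic from the "Make a decision (cont.)" slide can be reproduced directly. A minimal sketch using the slide's weights (w1 = 2, w2 = 6, w3 = 2) and threshold 5; the function name `weighted_neuron` is hypothetical.

```python
def weighted_neuron(x, w=(2, 6, 2), threshold=5):
    """Weighted threshold neuron with the weights and threshold from the slide."""
    total = sum(wi * xi for wi, xi in zip(w, x))
    return total, ("GO" if total >= threshold else "DON'T GO")


# The three cases worked out on the slide
for x in [(0, 1, 1), (0, 1, 0), (1, 0, 1)]:
    print(x, *weighted_neuron(x))
```

With these weights the ticket price alone decides: any input with x2 = 1 sums to at least 6, and any input with x2 = 0 sums to at most 4. In sensitivity-analysis terms, x2 is the only input whose change can flip the output.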

More complex decisions • Can require more than one neuron • Neurons are organized in layers • Output of one layer of the neural network acts as input for the next layer
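A classic decision that a single threshold neuron cannot make is "go if exactly one of two conditions holds" (XOR), because no single weighted sum and threshold separates those cases. Two layers can, with the hidden layer's outputs feeding the output neuron. A minimal sketch with hand-picked (not learned) weights; all names are hypothetical.

```python
def step_neuron(inputs, weights, threshold):
    """Fire (1) when the weighted sum of inputs reaches the threshold."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) >= threshold else 0


def two_layer_xor(x1, x2):
    # Hidden layer: both neurons read the raw inputs
    h_or = step_neuron((x1, x2), (1, 1), threshold=1)   # at least one condition
    h_and = step_neuron((x1, x2), (1, 1), threshold=2)  # both conditions
    # Output layer: its inputs are the hidden layer's outputs
    return step_neuron((h_or, h_and), (1, -1), threshold=1)


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, two_layer_xor(x1, x2))
```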

Another Classification Example: Cat or Dog?

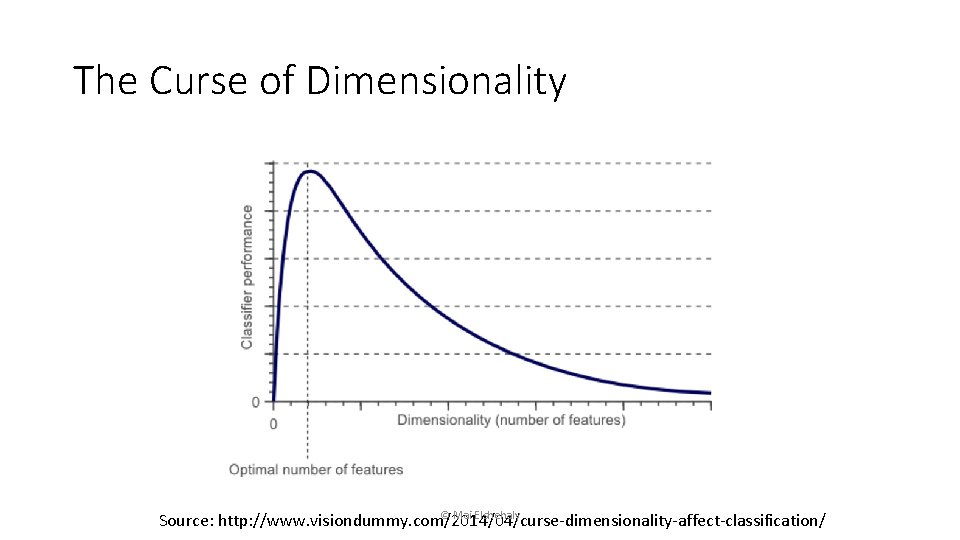

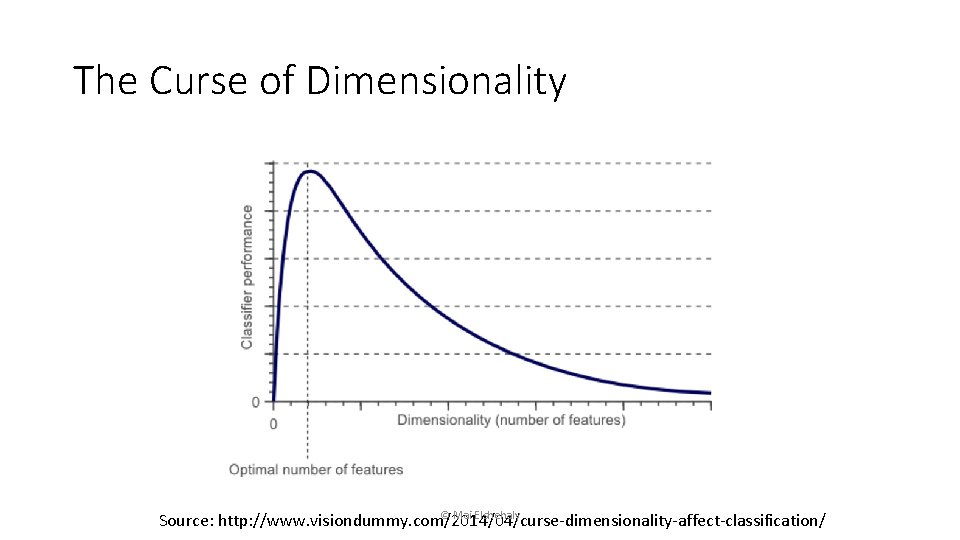

The Curse of Dimensionality (Source: http://www.visiondummy.com/2014/04/curse-dimensionality-affect-classification/)

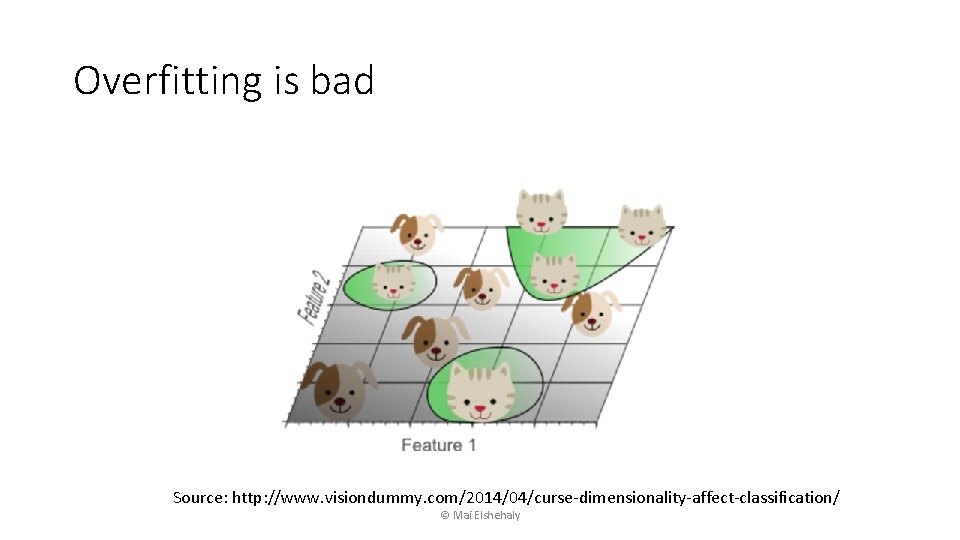

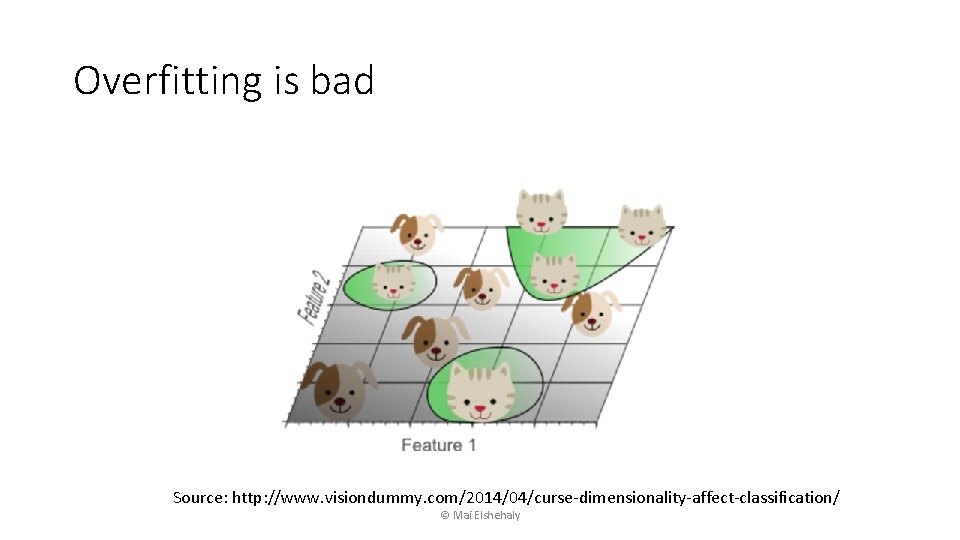

Overfitting is bad (Source: http://www.visiondummy.com/2014/04/curse-dimensionality-affect-classification/)

Avoiding overfitting • The amount of training data needed grows exponentially with the number of dimensions • Keep the number of dimensions manageable by finding the most important features
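One simplified way to see the exponential growth: split every feature into the same number of bins and ask how many samples it takes to put at least one sample in every cell of the resulting grid. The choice of 10 bins is an illustrative assumption, and `samples_needed` is a hypothetical helper.

```python
def samples_needed(bins_per_feature, n_features):
    """Samples needed to place one sample in every cell of a regular grid."""
    return bins_per_feature ** n_features


for d in (1, 2, 3, 10):
    print(f"{d} feature(s): {samples_needed(10, d):,} samples")
```

Going from 3 to 10 features raises the requirement from a thousand samples to ten billion, which is why dropping unimportant features helps.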

Remember • The worth of a DM model can be determined by its: • Predictive capability • Computational requirements • Explanatory power • Feature importance helps with all three

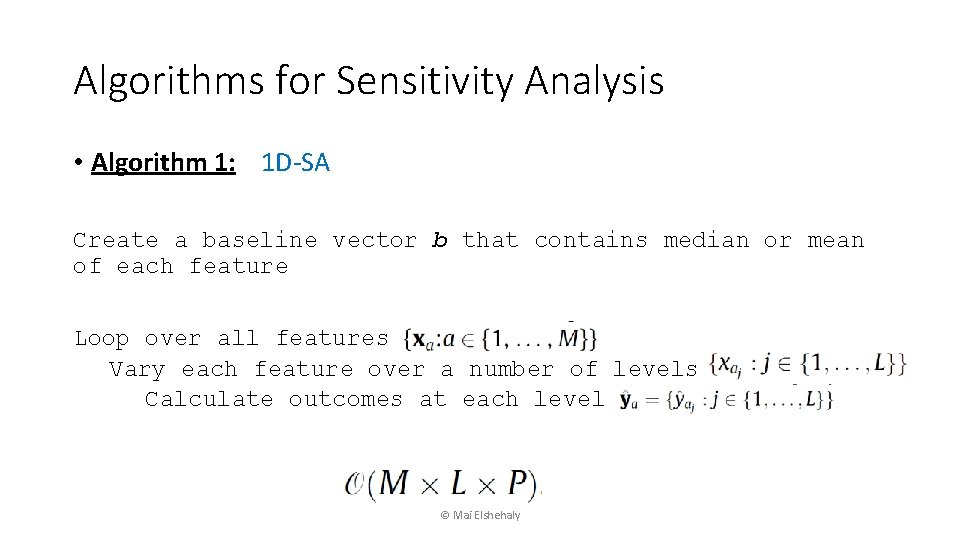

Algorithms for Sensitivity Analysis • Algorithm 1: 1D-SA • Create a baseline vector b that contains the median or mean of each feature • Loop over all features: vary each feature over a number of levels while the other features stay at their baseline values • Calculate the outcome at each level

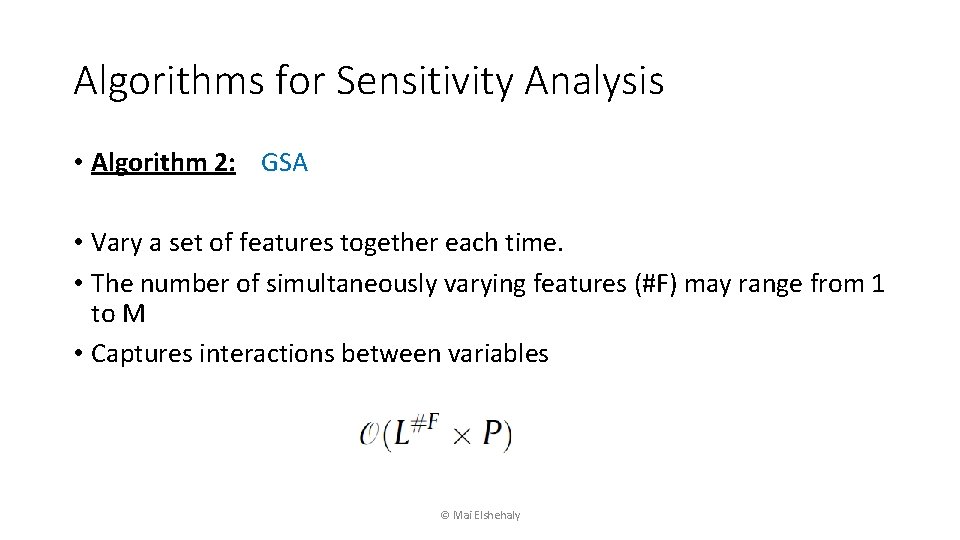

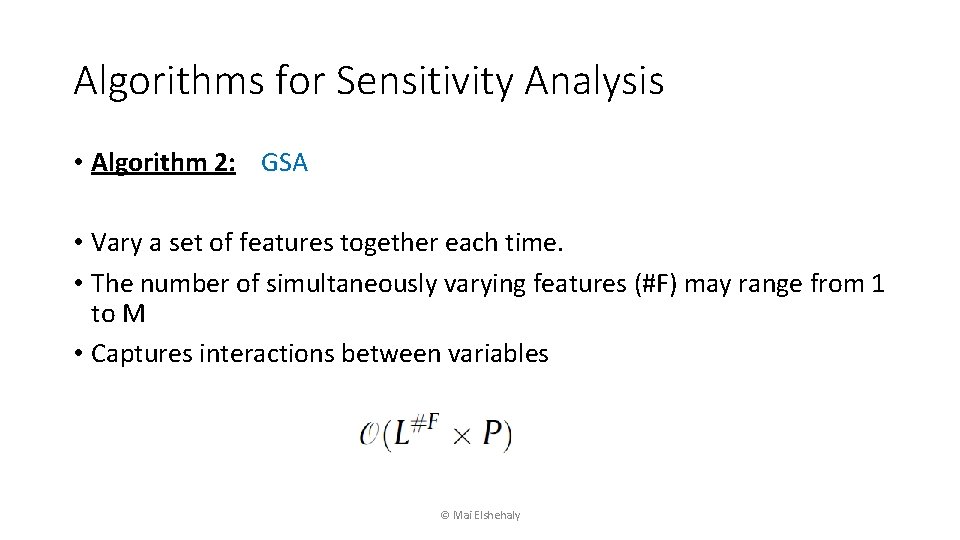
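The 1D-SA steps above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: `one_d_sa`, `toy_model`, and the tiny dataset are hypothetical, the baseline uses the median (the slide allows median or mean), and the levels are assumed to be evenly spaced between each feature's observed minimum and maximum.

```python
from statistics import median


def one_d_sa(model, data, levels=5):
    """1-D SA sketch: vary one feature at a time, others held at the baseline.

    model  -- any callable mapping a feature list to a number (assumed)
    data   -- list of records (lists of numbers) for the baseline and ranges
    levels -- number of evenly spaced levels per feature (must be >= 2)
    """
    columns = list(zip(*data))
    baseline = [median(col) for col in columns]  # baseline vector b
    results = {}
    for a, col in enumerate(columns):
        lo, hi = min(col), max(col)
        grid = [lo + j * (hi - lo) / (levels - 1) for j in range(levels)]
        responses = []
        for value in grid:
            x = list(baseline)
            x[a] = value  # only feature a moves
            responses.append(model(x))
        results[a] = (grid, responses)
    return results


def toy_model(x):  # hypothetical black box: x[2] has no effect
    return 2 * x[0] + 0.5 * x[1]


data = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [0.5, 0.2, 0.9]]
for a, (grid, responses) in one_d_sa(toy_model, data).items():
    print(f"x{a}: response range = {max(responses) - min(responses):.2f}")
```

Running it prints response ranges of 2.00, 0.50, and 0.00 for x0, x1, and x2: the feature the toy model ignores produces a flat response, which is what 1D-SA is meant to reveal.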

Algorithms for Sensitivity Analysis • Algorithm 2: GSA • Vary a set of features simultaneously in each run • The number of simultaneously varying features (#F) may range from 1 to M • Captures interactions between variables

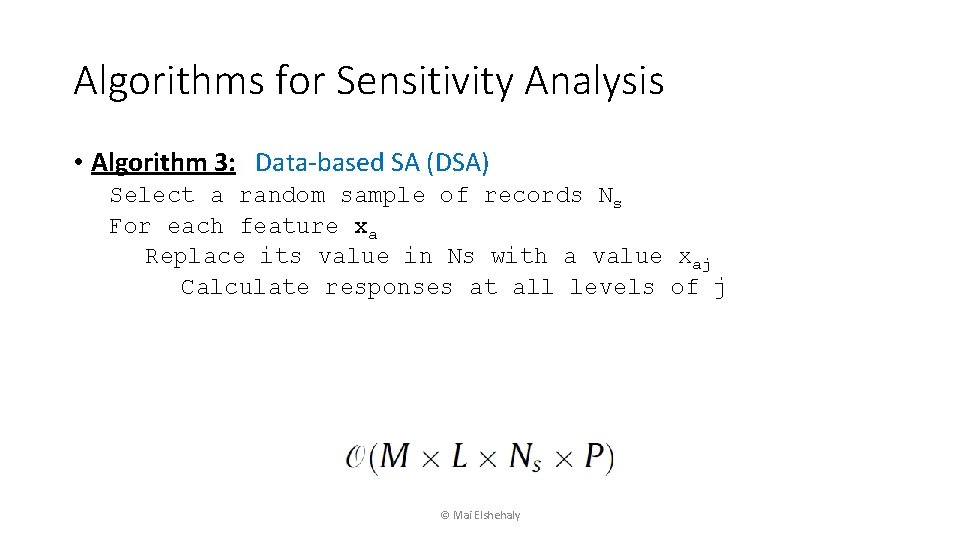

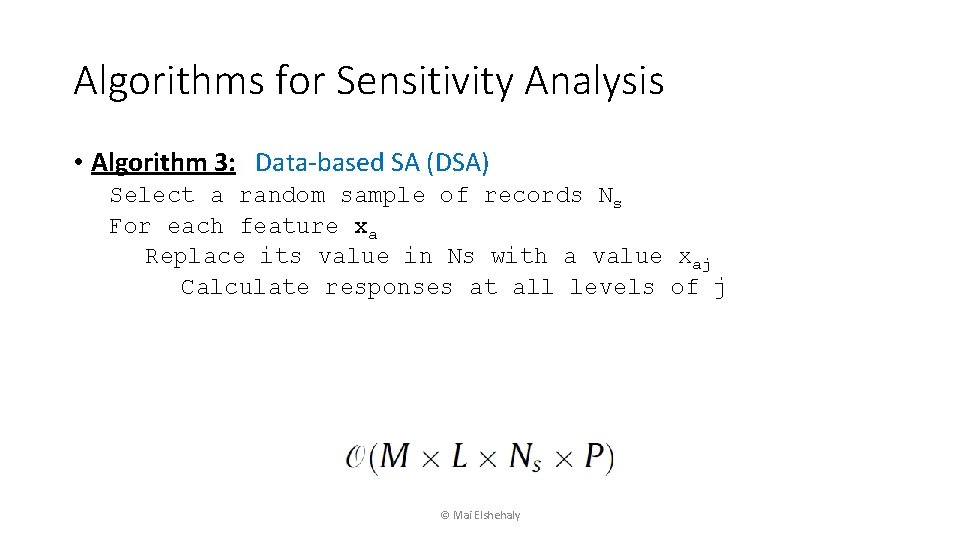
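A minimal sketch of the GSA idea: the chosen features are varied together over every combination of their levels, while the remaining features stay at a median baseline. The full-grid design, the median baseline, and the names `global_sa` and `interaction_model` are assumptions for illustration. The cost is levels ** #F model evaluations, so it grows quickly as #F approaches M.

```python
from itertools import product
from statistics import median


def global_sa(model, data, features, levels=3):
    """GSA sketch: vary the listed features jointly over a full grid of levels.

    With a single feature this reduces to 1-D SA for that feature.
    """
    columns = list(zip(*data))
    baseline = [median(col) for col in columns]
    grids = []
    for a in features:
        lo, hi = min(columns[a]), max(columns[a])
        grids.append([lo + j * (hi - lo) / (levels - 1) for j in range(levels)])
    results = []
    for values in product(*grids):  # every combination of levels
        x = list(baseline)
        for a, v in zip(features, values):
            x[a] = v
        results.append((values, model(x)))
    return results


def interaction_model(x):  # hypothetical: x0 only matters when x1 is nonzero
    return x[0] * x[1]


data = [[0.0, 0.0], [1.0, 1.0], [0.5, 0.5]]
for (v0, v1), y in global_sa(interaction_model, data, features=(0, 1)):
    print(f"x0={v0:.1f} x1={v1:.1f} -> {y:.2f}")
```

At the median baseline (x1 = 0.5), varying x0 alone shows a moderate linear effect; the joint grid also shows that this effect disappears when x1 = 0, which is the kind of interaction the slide says GSA captures.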

Algorithms for Sensitivity Analysis • Algorithm 3: Data-based SA (DSA) • Select a random sample Ns of records from the data • For each feature xa: replace its value in every record of Ns with a level xaj • Calculate the responses at all levels j

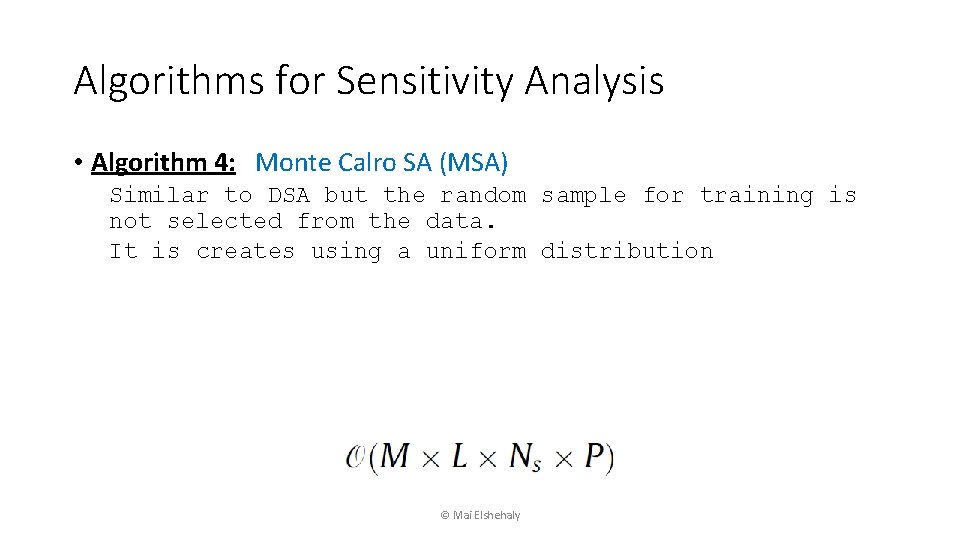

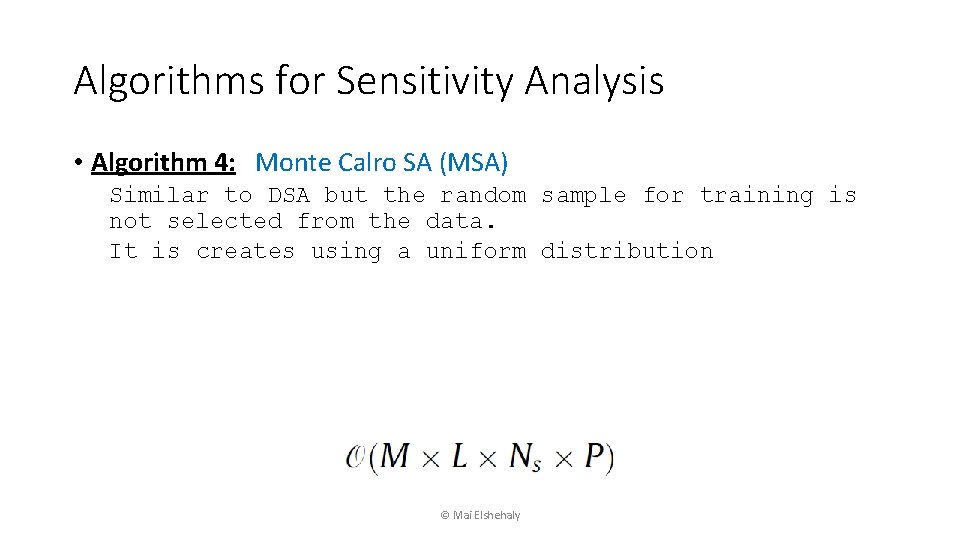
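The DSA steps above can be sketched as follows. Unlike 1D-SA, the features that are not being varied keep their observed values from real records instead of a single baseline. Summarizing each level by the mean response over the sample is an assumption made here for illustration, as are the names `data_based_sa` and `toy_model`.

```python
import random
from statistics import mean


def data_based_sa(model, data, feature, levels, sample_size, seed=0):
    """DSA sketch: overwrite one feature in a random sample of real records.

    Each level is summarized by the mean response over the sample (assumed).
    """
    rng = random.Random(seed)
    sample = rng.sample(data, min(sample_size, len(data)))
    responses = []
    for value in levels:
        outputs = []
        for record in sample:
            x = list(record)    # copy: the original data stay untouched
            x[feature] = value  # the other features keep their observed values
            outputs.append(model(x))
        responses.append(mean(outputs))
    return responses


def toy_model(x):  # hypothetical black box
    return 3 * x[0] + x[1]


data = [[0.1, 0.2], [0.4, 0.9], [0.7, 0.3], [0.9, 0.5]]
print(data_based_sa(toy_model, data, feature=0, levels=[0.0, 0.5, 1.0], sample_size=3))
```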

Algorithms for Sensitivity Analysis • Algorithm 4: Monte Carlo SA (MSA) • Similar to DSA, but the random sample of records is not selected from the data; instead, it is generated from a uniform distribution

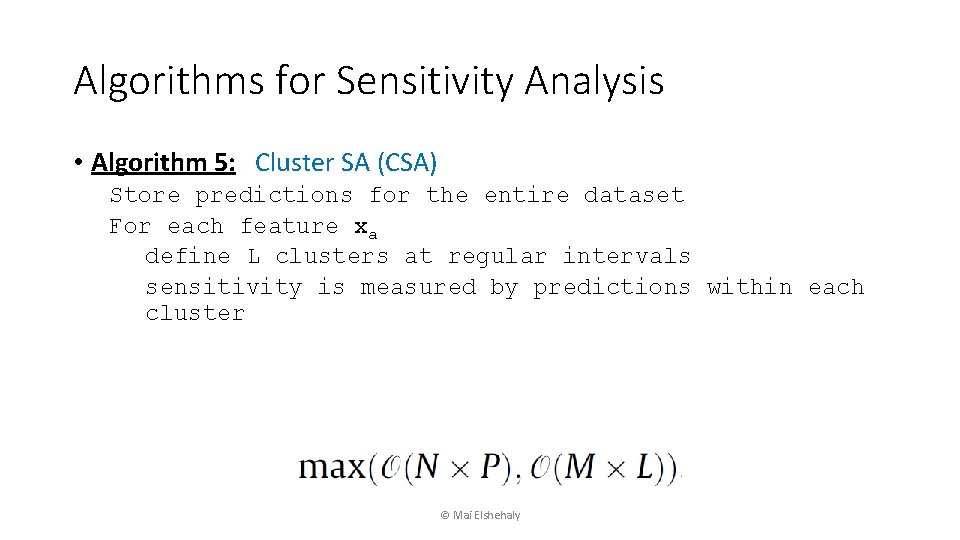

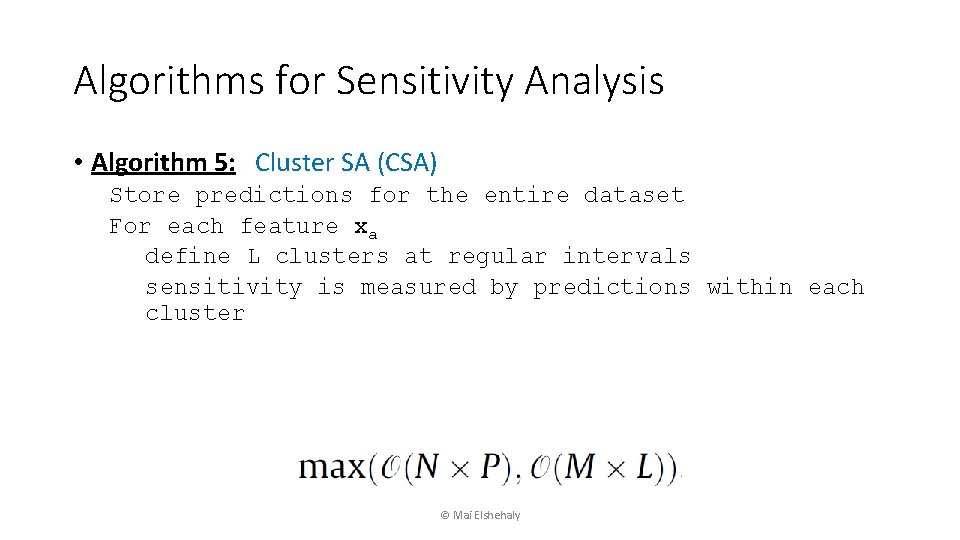
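A matching sketch for MSA: the records are generated uniformly within assumed per-feature bounds rather than drawn from the dataset, and then processed exactly as in DSA. The bounds, the sample size, the mean aggregation, and the names `monte_carlo_sa` and `toy_model` are illustrative assumptions.

```python
import random
from statistics import mean


def monte_carlo_sa(model, bounds, feature, levels, n_samples=1000, seed=0):
    """MSA sketch: like DSA, but the records are synthetic.

    bounds -- one (low, high) pair per feature; values are drawn uniformly
    """
    rng = random.Random(seed)
    sample = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_samples)]
    responses = []
    for value in levels:
        outputs = []
        for record in sample:
            x = list(record)
            x[feature] = value
            outputs.append(model(x))
        responses.append(mean(outputs))
    return responses


def toy_model(x):  # hypothetical black box
    return x[0] ** 2 + x[1]


print(monte_carlo_sa(toy_model, bounds=[(0, 1), (0, 1)], feature=0, levels=[0.0, 0.5, 1.0]))
```

The same synthetic sample is reused for every level, so differences between levels are not masked by sampling noise.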

Algorithms for Sensitivity Analysis • Algorithm 5: Cluster SA (CSA) • Store the predictions for the entire dataset • For each feature xa, define L clusters at regular intervals of its values • Sensitivity is measured using the predictions within each cluster

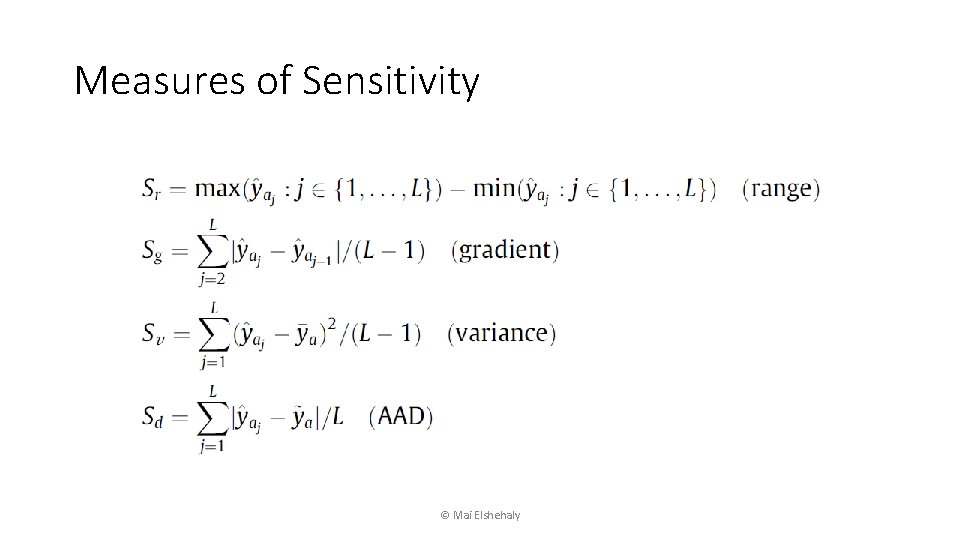

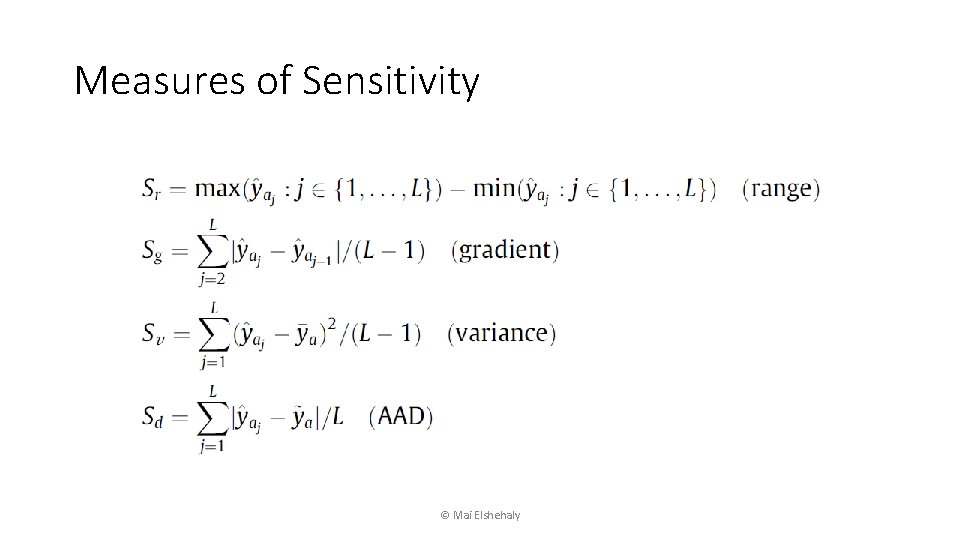
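A minimal CSA sketch: the model is queried once for every record, and the stored predictions are then grouped by equal-width intervals ("clusters at regular intervals") of one feature. Summarizing each cluster by its mean prediction is an assumption for illustration; `cluster_sa` and `toy_model` are hypothetical names.

```python
from statistics import mean


def cluster_sa(predictions, data, feature, n_clusters):
    """CSA sketch: group stored predictions by equal-width intervals of a feature.

    Returns (interval center, mean prediction, or None if the interval is empty).
    """
    values = [record[feature] for record in data]
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_clusters
    groups = [[] for _ in range(n_clusters)]
    for v, p in zip(values, predictions):
        # Equal-width bins; the maximum value falls into the last bin
        idx = min(int((v - lo) / width), n_clusters - 1) if width > 0 else 0
        groups[idx].append(p)
    centers = [lo + (k + 0.5) * width for k in range(n_clusters)]
    return [(c, mean(g) if g else None) for c, g in zip(centers, groups)]


def toy_model(x):  # hypothetical black box (a threshold classifier)
    return 1 if x[0] > 0.5 else 0


data = [[0.0], [0.1], [0.9], [1.0]]
predictions = [toy_model(x) for x in data]  # stored once; not queried again
print(cluster_sa(predictions, data, feature=0, n_clusters=2))
```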

Measures of Sensitivity

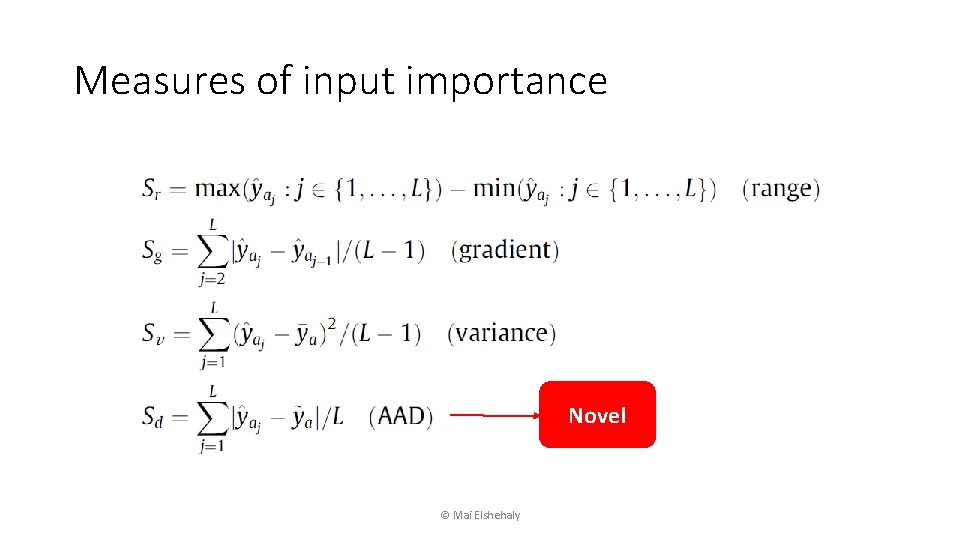

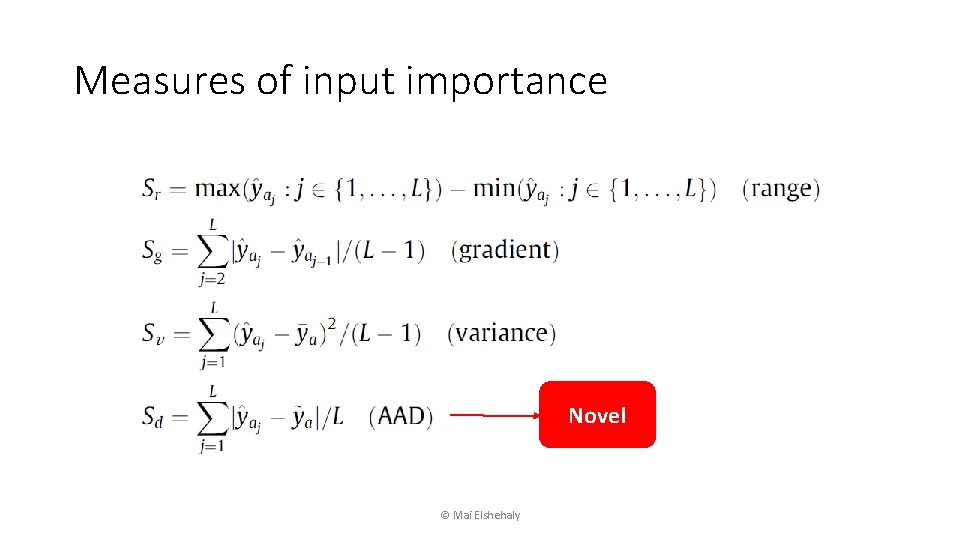

Measures of Input Importance (one of them novel)

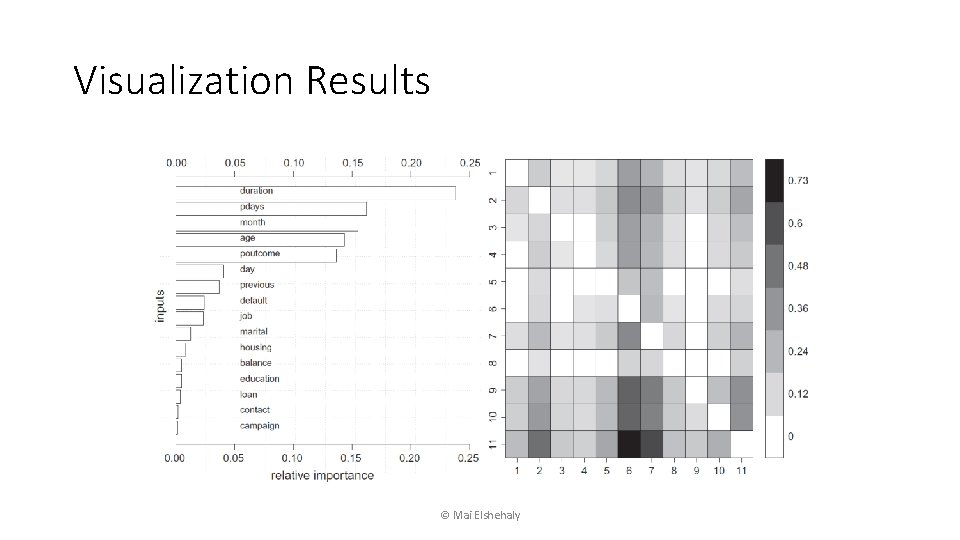

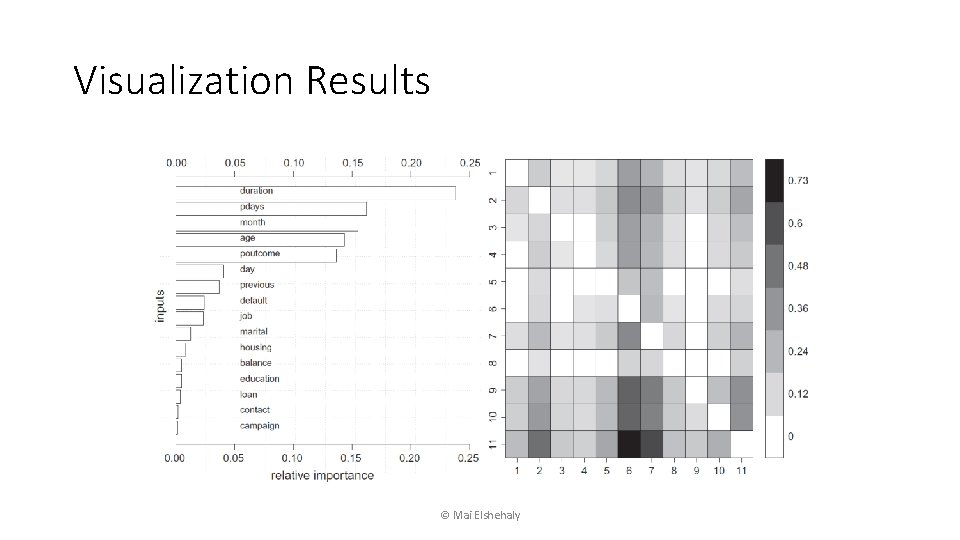
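The specific measures on these slides are shown only as images. As a general illustration, a sensitivity score can summarize how much a model's responses moved while one feature was varied, and the scores can then be normalized into relative importances that sum to 100%. The two summaries used here (range and variance of the responses), the normalization, and the function names are assumptions for this sketch, not a reproduction of the slide's measures.

```python
from statistics import pvariance


def sensitivity(responses, measure="range"):
    """Summarize how much the model output moved as one feature was varied."""
    if measure == "range":
        return max(responses) - min(responses)
    if measure == "variance":
        return pvariance(responses)
    raise ValueError(f"unknown measure: {measure}")


def relative_importance(responses_per_feature, measure="range"):
    """Normalize per-feature sensitivities so they sum to 100%."""
    scores = {a: sensitivity(r, measure) for a, r in responses_per_feature.items()}
    total = sum(scores.values())
    return {a: (100.0 * s / total if total else 0.0) for a, s in scores.items()}


# Hypothetical SA responses per feature (e.g., from a 1D-SA run)
responses = {"x1": [0.0, 1.0, 2.0], "x2": [1.0, 1.25, 1.5], "x3": [1.0, 1.0, 1.0]}
print(relative_importance(responses))
```

With the range measure, x1 receives 80% of the importance, x2 20%, and the flat x3 none; these are the numbers an input importance bar plot would display.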

Visualization Results

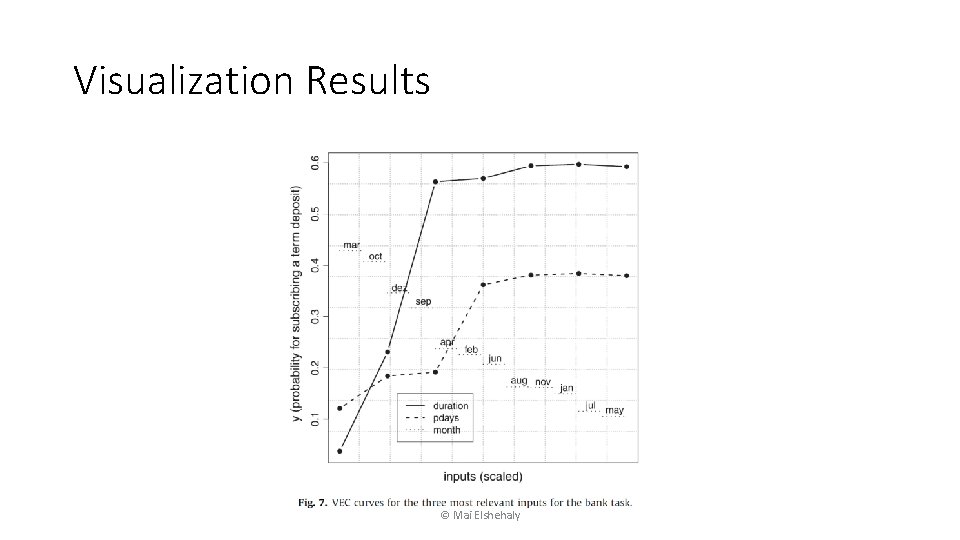

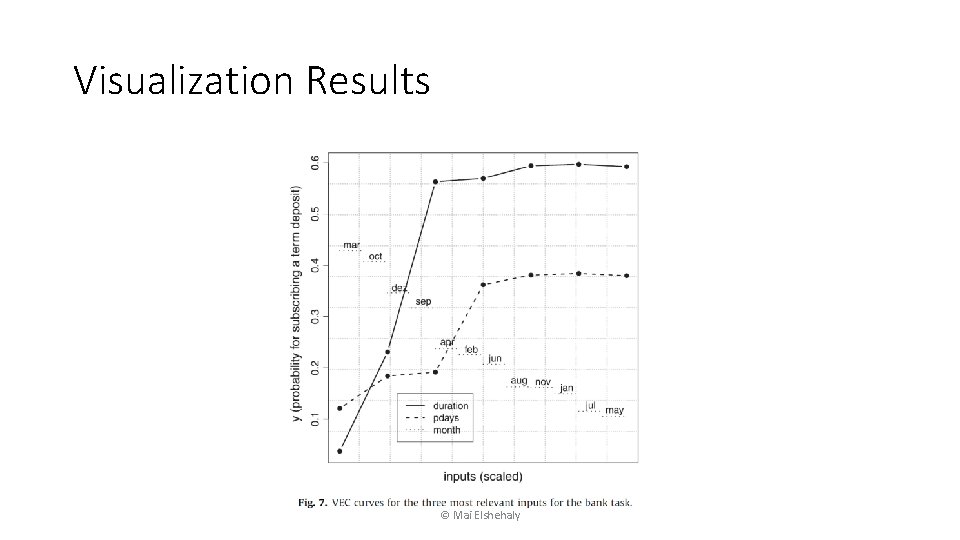

What’s Our Take-Home? • Many data mining techniques are black boxes • We need to open the box and understand: 1. What are the most important features? 2. How do the features interact and together affect the outcomes? 3. What combinations of features yield the highest prediction accuracy while avoiding overfitting? • Always start with data abstraction

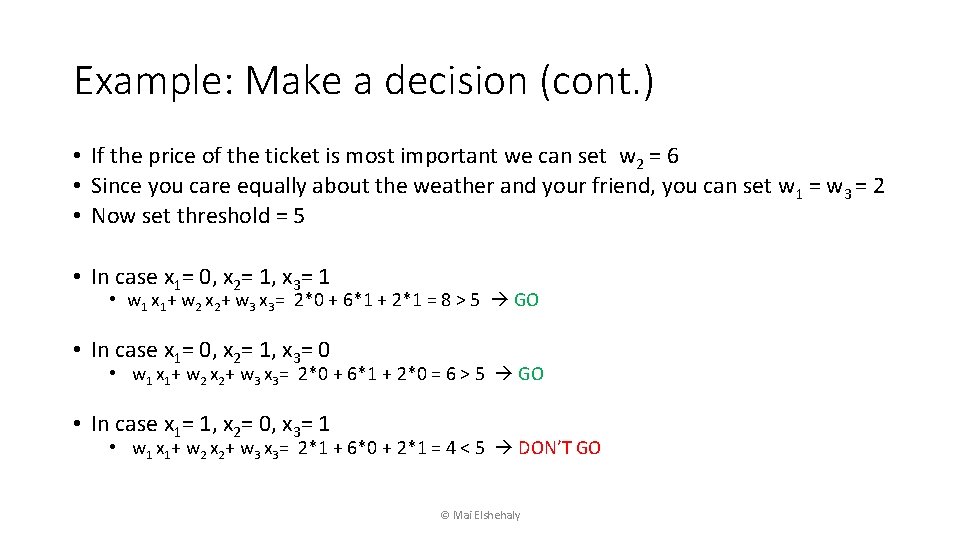

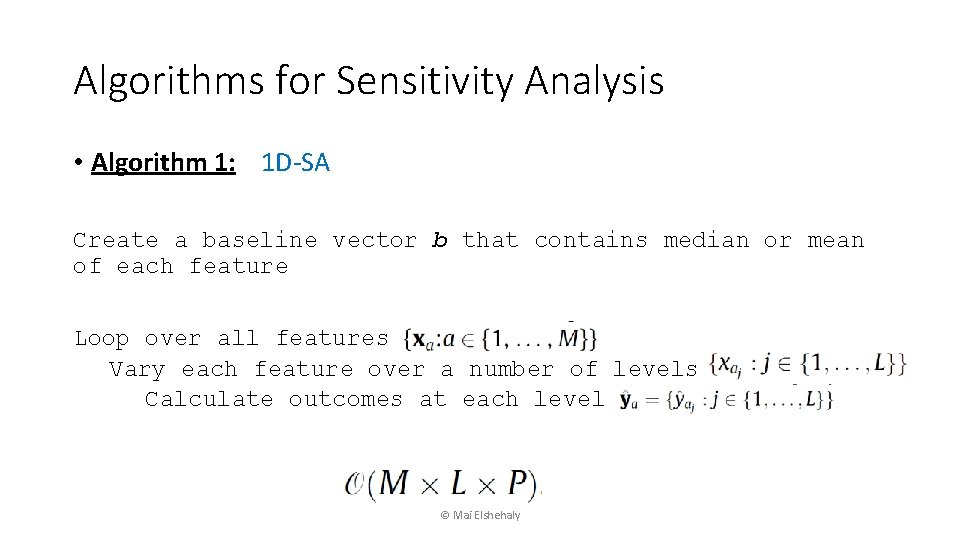

![Example: VA Task T1: Progressively explore the quality of prediction based on different levels of groupings of genes (features)](https://slidetodoc.com/presentation_image_h2/a5f84f8c377aea6fa7047ec75bd4d818/image-45.jpg)

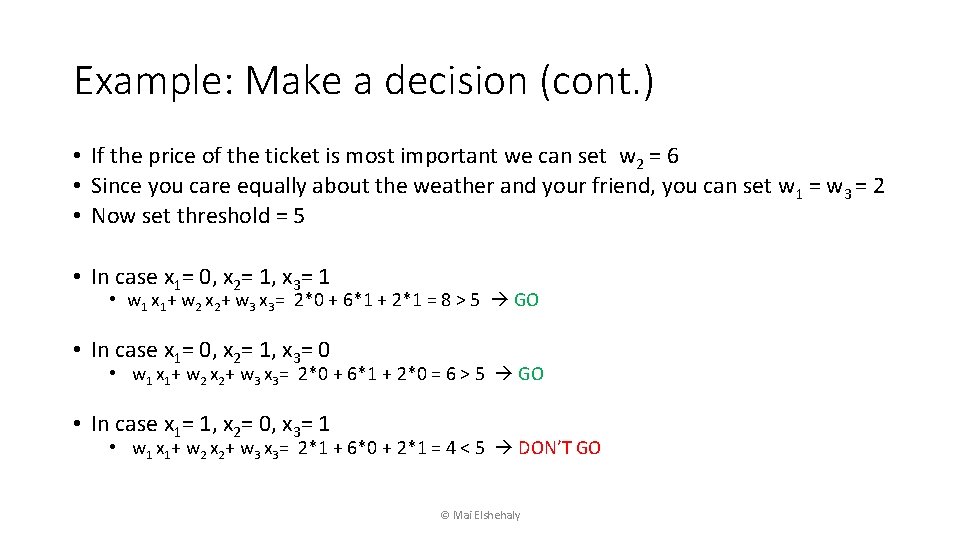

Example: VA Task [T1]: Progressively explore the quality of prediction based on different levels of groupings of genes (features).

Idea: • We can use parallel coordinates to visualize the most important features and the effect of varying their levels on the outcome

Brainstorming for the VAST Challenge: Data Abstraction for Mini Challenge 1