Using Qualitative Research Strategies for Evaluation and Assessment


Using Qualitative Research Strategies for Evaluation and Assessment of Grants

Donna Harp Ziegenfuss, Ed.D., Associate Librarian, Marriott Library, donna.ziegenfuss@utah.edu

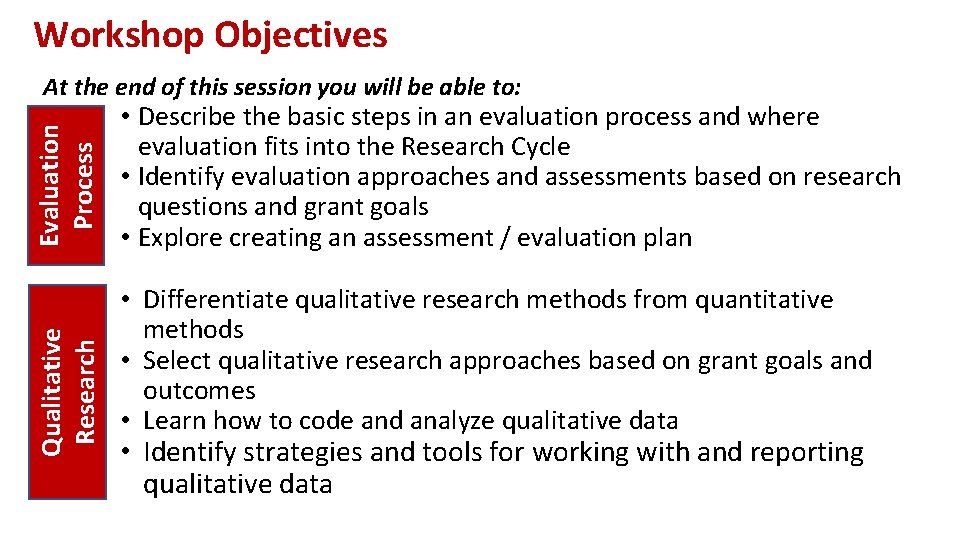

Workshop Objectives: Qualitative Research Evaluation Process

At the end of this session you will be able to:

- Describe the basic steps in an evaluation process and where evaluation fits into the Research Cycle
- Identify evaluation approaches and assessments based on research questions and grant goals
- Explore creating an assessment / evaluation plan
- Differentiate qualitative research methods from quantitative methods
- Select qualitative research approaches based on grant goals and outcomes
- Learn how to code and analyze qualitative data
- Identify strategies and tools for working with and reporting qualitative data

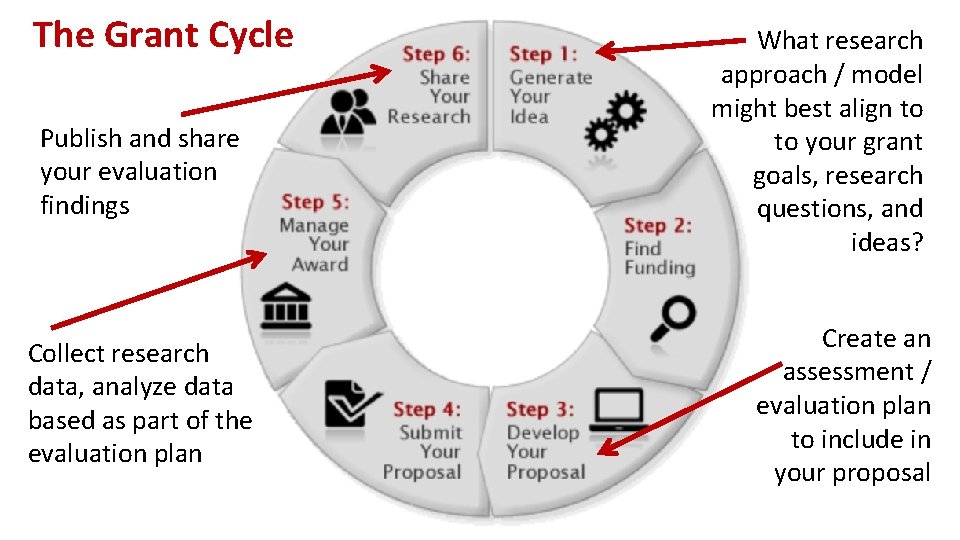

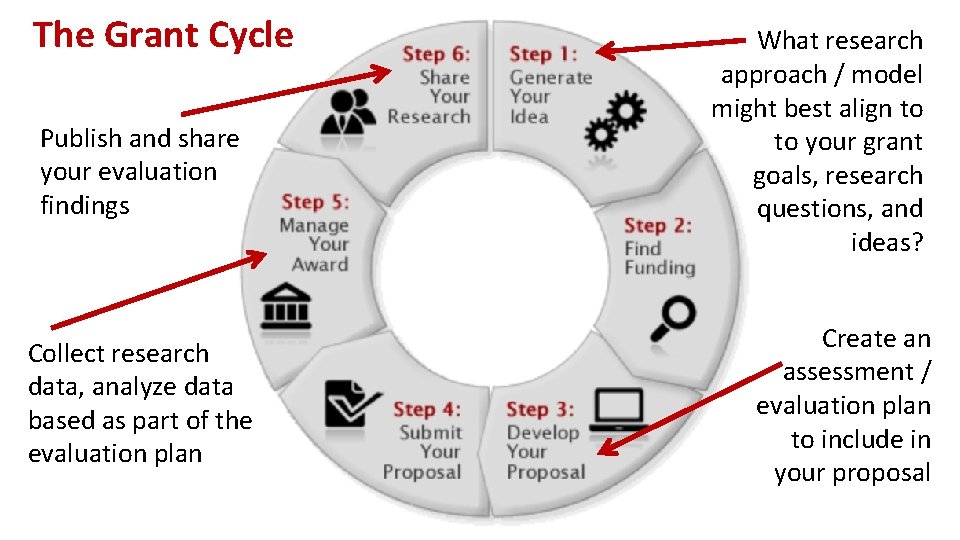

The Grant Cycle

- Publish and share your evaluation findings
- Collect research data and analyze the data as part of the evaluation plan
- What research approach / model might best align to your grant goals, research questions, and ideas?
- Create an assessment / evaluation plan to include in your proposal

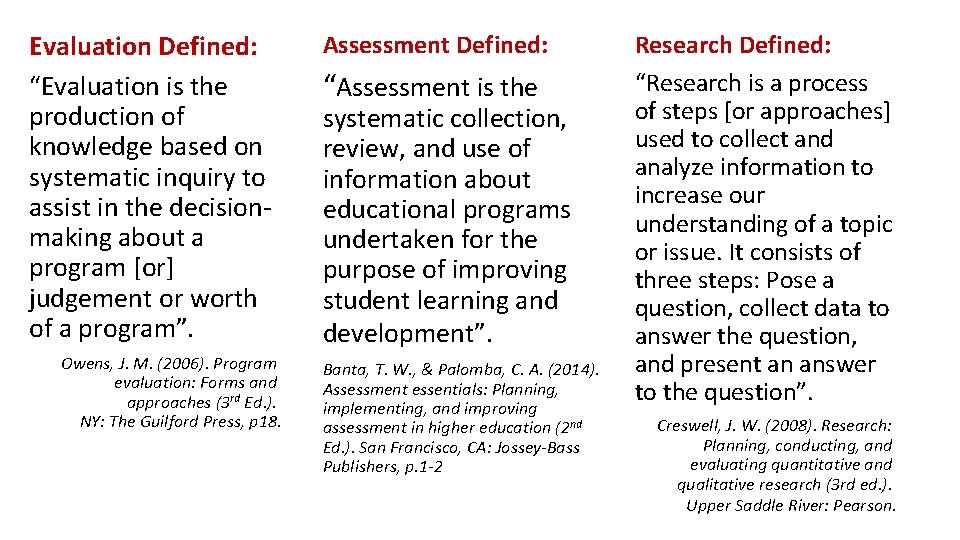

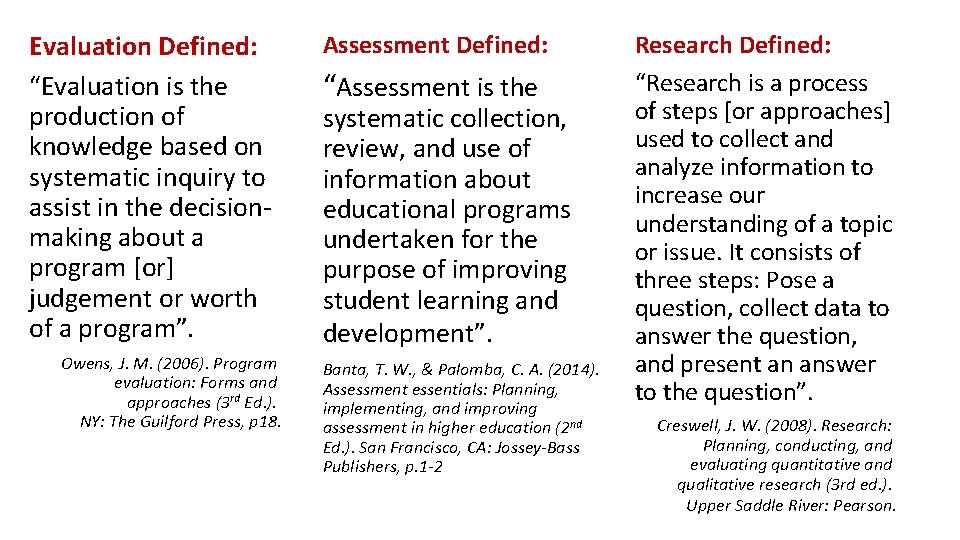

Evaluation Defined: “Evaluation is the production of knowledge based on systematic inquiry to assist in the decision-making about a program [or] judgement or worth of a program”. Owens, J. M. (2006). Program evaluation: Forms and approaches (3rd ed.). NY: The Guilford Press, p. 18.

Assessment Defined: “Assessment is the systematic collection, review, and use of information about educational programs undertaken for the purpose of improving student learning and development”. Banta, T. W., & Palomba, C. A. (2014). Assessment essentials: Planning, implementing, and improving assessment in higher education (2nd ed.). San Francisco, CA: Jossey-Bass Publishers, pp. 1-2.

Research Defined: “Research is a process of steps [or approaches] used to collect and analyze information to increase our understanding of a topic or issue. It consists of three steps: Pose a question, collect data to answer the question, and present an answer to the question”. Creswell, J. W. (2008). Research: Planning, conducting, and evaluating quantitative and qualitative research (3rd ed.). Upper Saddle River: Pearson.

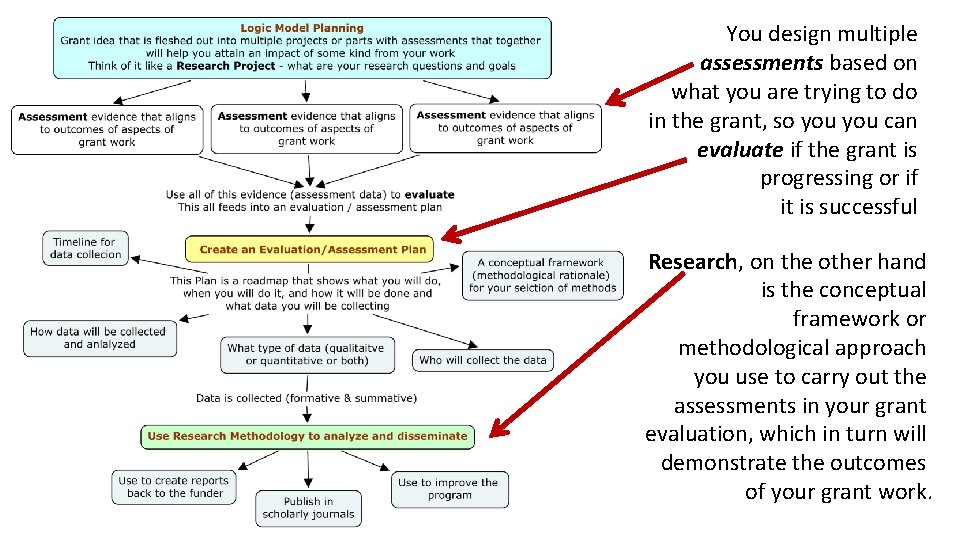

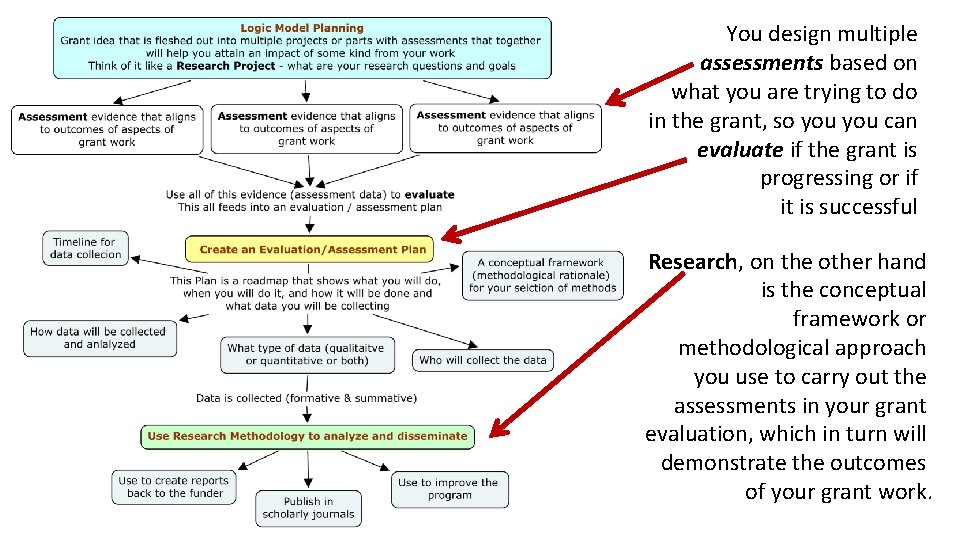

You design multiple assessments based on what you are trying to do in the grant, so you can evaluate whether the grant is progressing or whether it is successful.

Research, on the other hand, is the conceptual framework or methodological approach you use to carry out the assessments in your grant evaluation, which in turn will demonstrate the outcomes of your grant work.

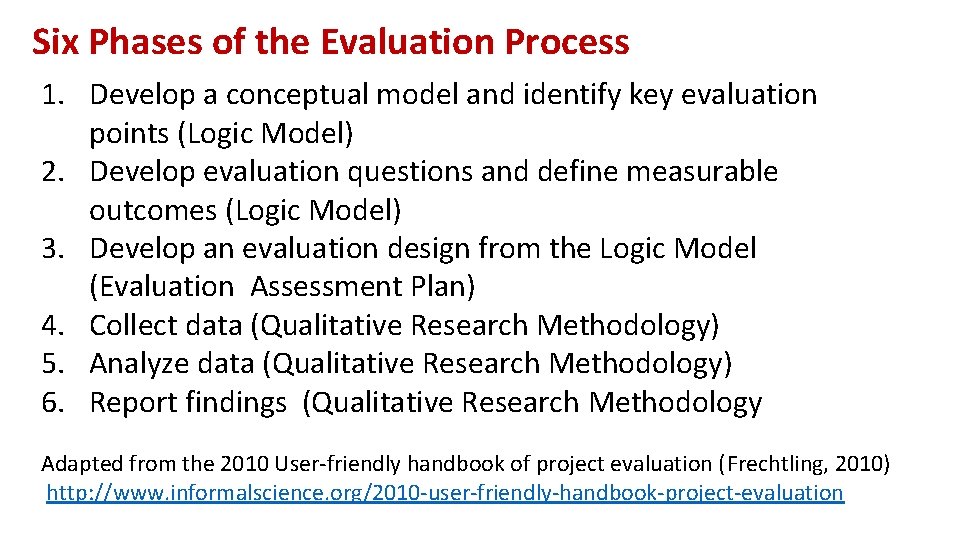

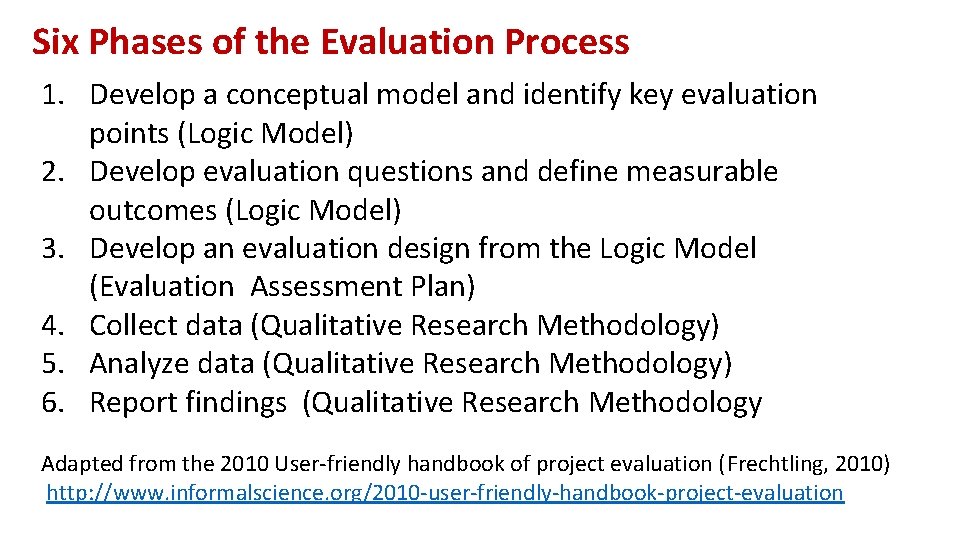

Six Phases of the Evaluation Process

1. Develop a conceptual model and identify key evaluation points (Logic Model)
2. Develop evaluation questions and define measurable outcomes (Logic Model)
3. Develop an evaluation design from the Logic Model (Evaluation Assessment Plan)
4. Collect data (Qualitative Research Methodology)
5. Analyze data (Qualitative Research Methodology)
6. Report findings (Qualitative Research Methodology)

Adapted from the 2010 User-friendly handbook of project evaluation (Frechtling, 2010): http://www.informalscience.org/2010-user-friendly-handbook-project-evaluation

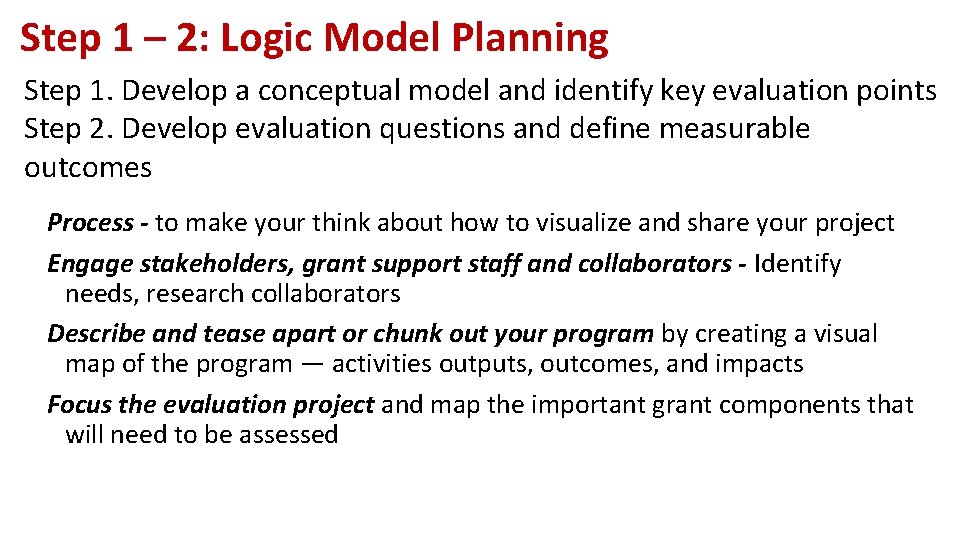

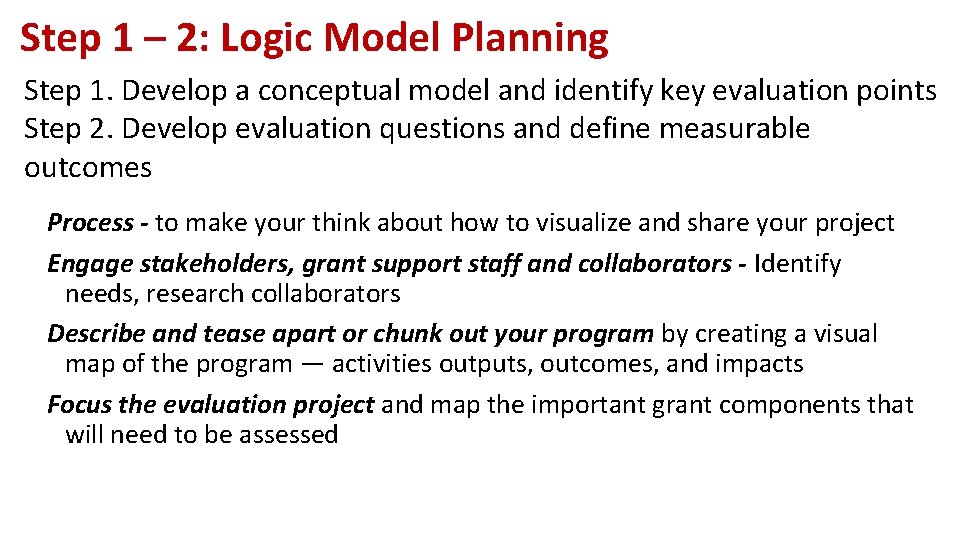

Steps 1 – 2: Logic Model Planning

Step 1. Develop a conceptual model and identify key evaluation points

Step 2. Develop evaluation questions and define measurable outcomes

- Process: to make you think about how to visualize and share your project
- Engage stakeholders, grant support staff, and collaborators: identify needs and research collaborators
- Describe and tease apart, or chunk out, your program by creating a visual map of the program: activities, outputs, outcomes, and impacts
- Focus the evaluation project and map the important grant components that will need to be assessed

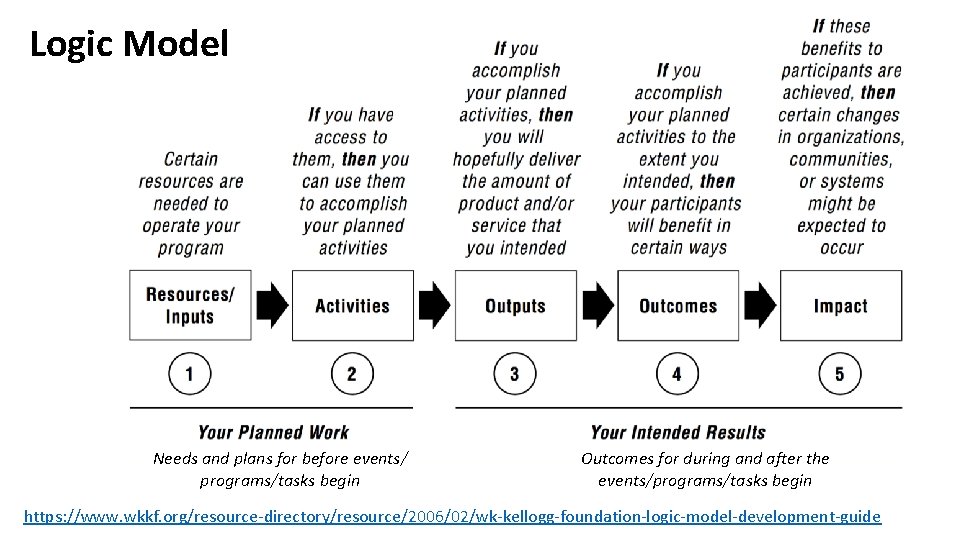

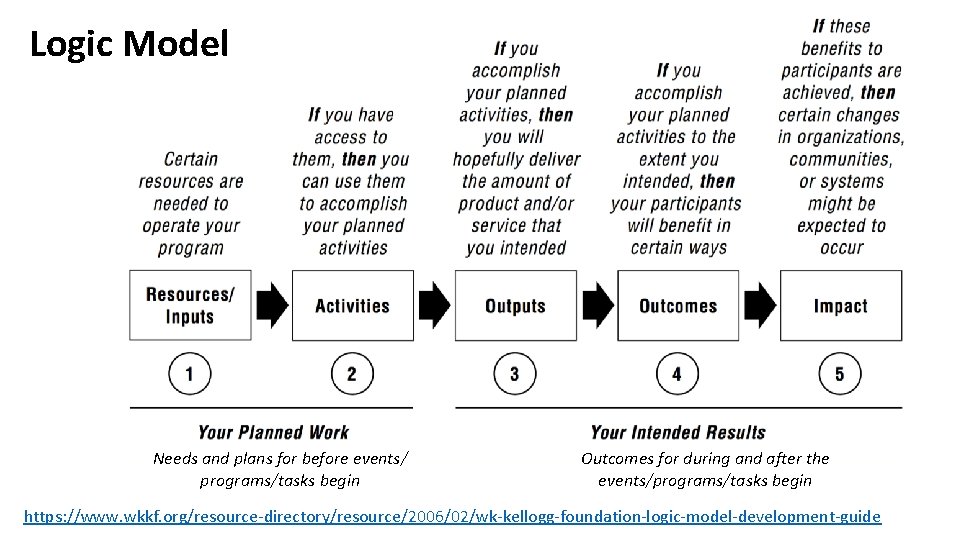

Logic Model

- Needs and plans: for before the events/programs/tasks begin
- Outcomes: for during and after the events/programs/tasks

Source: https://www.wkkf.org/resource-directory/resource/2006/02/wk-kellogg-foundation-logic-model-development-guide

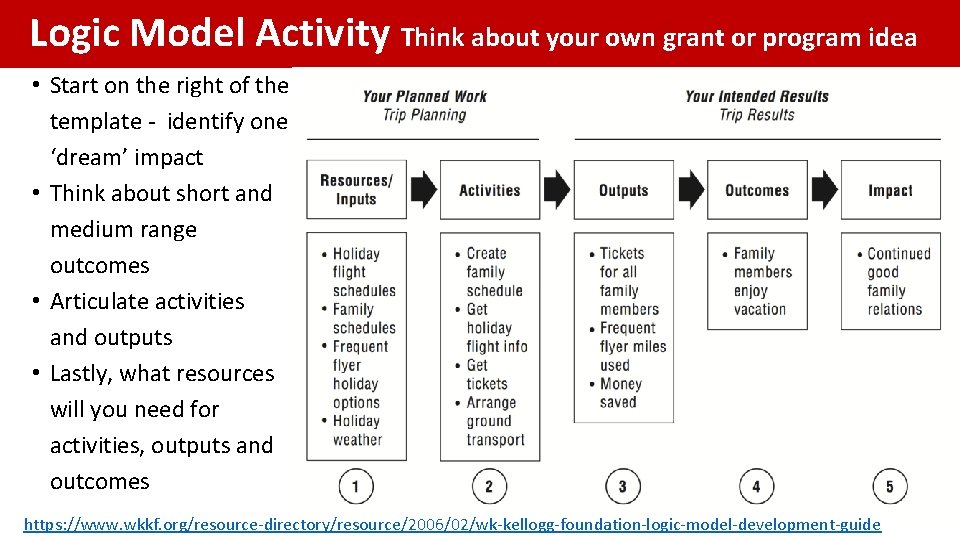

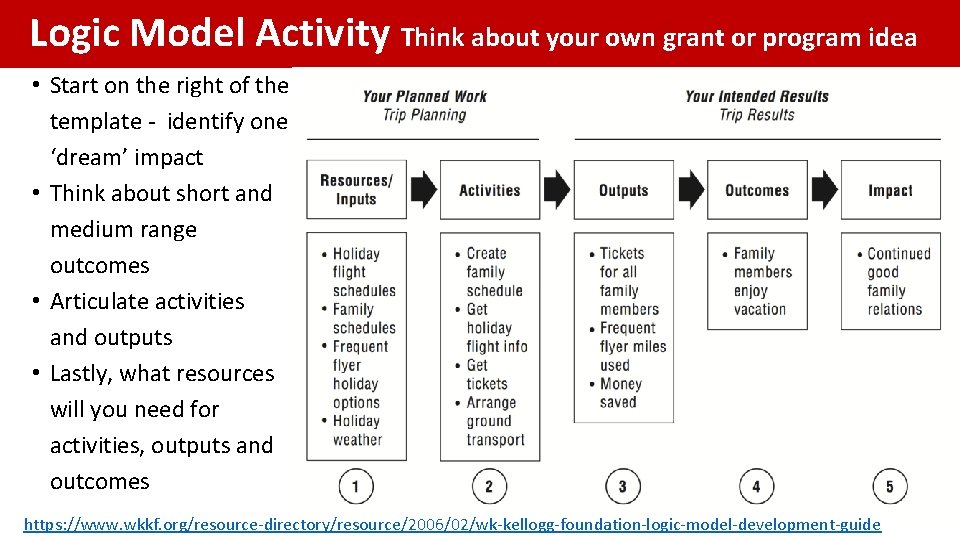

Logic Model Activity

Think about your own grant or program idea:

- Start on the right of the template: identify one ‘dream’ impact
- Think about short and medium range outcomes
- Articulate activities and outputs
- Lastly, what resources will you need for activities, outputs, and outcomes?

Source: https://www.wkkf.org/resource-directory/resource/2006/02/wk-kellogg-foundation-logic-model-development-guide

Step 3. Develop an Evaluation Plan Design from the Logic Model (Evaluation/Assessment Plan)

Why do a Logic Model and Evaluation Plan?

- Required by many funding agencies and demonstrates program effectiveness
- Could also be used to support a need for more funding and grants
- Visual roadmap to measure the impact the program is having and document accomplishments
- Improve the implementation and effectiveness of programs with comprehensive planning upfront
- Provide transparency and ethical responsibility so others can see exactly what you are doing and replicate results
- Better manage limited resources
- Good management and quality improvement tool: gain insight into effective strategies for improving performance by reviewing the plan

What could an evaluation plan measure?

Criteria could include measuring:

- Efforts – quality and quantity of inputs
- Performance – quality and quantity of outputs and grant activities
- Efficiency or Quality – of the innovation or program
- Implementation success – did you do what you planned to do
- Student Learning – progress of student learning, how students are learning
- Equity – distribution and fairness of a program or project, or equity of resource allocation and usage
- Impact – on particular stakeholders and organizations

Types of Evaluation Plans - Formative Evaluation

Involves monitoring the “process” while the program is ongoing (Trochim, 2006)

- Tells you if you’re on track
- Points to possible areas for improvement
- Helps you make changes as you go
- Can be combined with summative evaluation

Could include:

- Needs Assessment
- Program Implementation Evaluation
- Grant Process Evaluation
- Grant Program Evaluation
- Project Evaluation

Types of Evaluation Plans - Summative Evaluation

Involves assessing the outcome at the conclusion of the program and measures the change that has occurred as a result of the program (Trochim, 2006)

- Shows what impact you have had on the problem or project under evaluation
- Helps justify program
- Can be combined with formative evaluation

Could include:

- Outcome Evaluation, or comparing interventions or assessments over time
  - Ex. control group, comparison group, pre- and post-testing
  - The data collected is most often quantitative but may be qualitative given the nature of the program or project
- Impact Evaluation
- Cost-Effectiveness and Cost-Benefit Analysis

Tips for Evaluation Plans

- Evaluation is a continuous process and is planned with the program
- The methodological and data collection approach depends on the goals and the questions to be answered
- Quantitative and qualitative research address different types of questions
- Determine who will be studied and when
- Consider sampling, use of comparison groups, sequencing of activities or projects, as well as frequency of data collection
- Involve the internal and external evaluators in the grant planning process from the beginning, starting with the Logic Model process

Tips for Evaluation Plans (cont.)

- Think about the type of data you will collect
  - Primary Data – data you will collect during the grant and research from surveys, assessments, interviews, and observations
  - Secondary Data – available but already collected: NSSE data, department survey data, student data, other records
- You do not have to use complicated quantitative methodology; it depends on goals and research questions
- You can use pre- and post-tests, attitude surveys, interviews and focus groups, document analysis
- Make the alignment between activities and outcomes explicit
- Explain clearly what measures you are going to use to measure success, progress, impact

Tips for Evaluation Plans (cont.)

- Negative findings are as useful as positive findings
- Make the evaluation plan clear and specific enough that it can be replicated by other researchers
- External evaluation often strengthens a proposal, and is required by some funders
- Show the sustainability of the project as part of the evaluation plan: how will you continue after the grant?
- Think about an evaluation budget as part of your grant (usually about 5 to 10%); some funders require more
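As a quick worked example of the 5 to 10% budget guideline, the sketch below computes the range for a grant total. The function name and the $200,000 figure are hypothetical and chosen only for illustration; your funder's requirements take precedence.

```python
def evaluation_budget_range(total_grant, low_pct=5, high_pct=10):
    """Return the (low, high) evaluation budget for a grant total.

    Percentages are whole numbers; 5 and 10 mirror the rule of thumb
    on the slide and are not a funder requirement.
    """
    return total_grant * low_pct / 100, total_grant * high_pct / 100


# Hypothetical grant total, for illustration only
low, high = evaluation_budget_range(200_000)
print(f"Set aside roughly ${low:,.0f} to ${high:,.0f} for evaluation")
```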

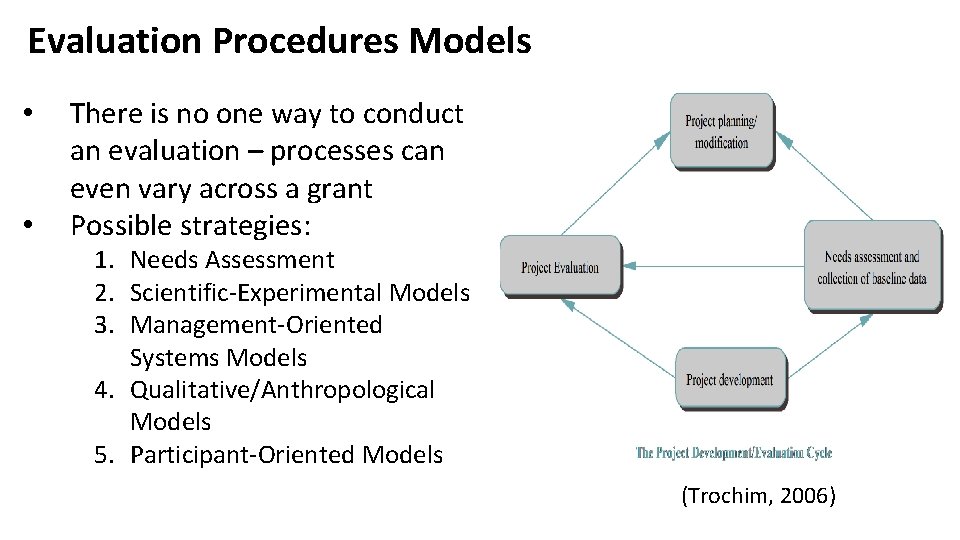

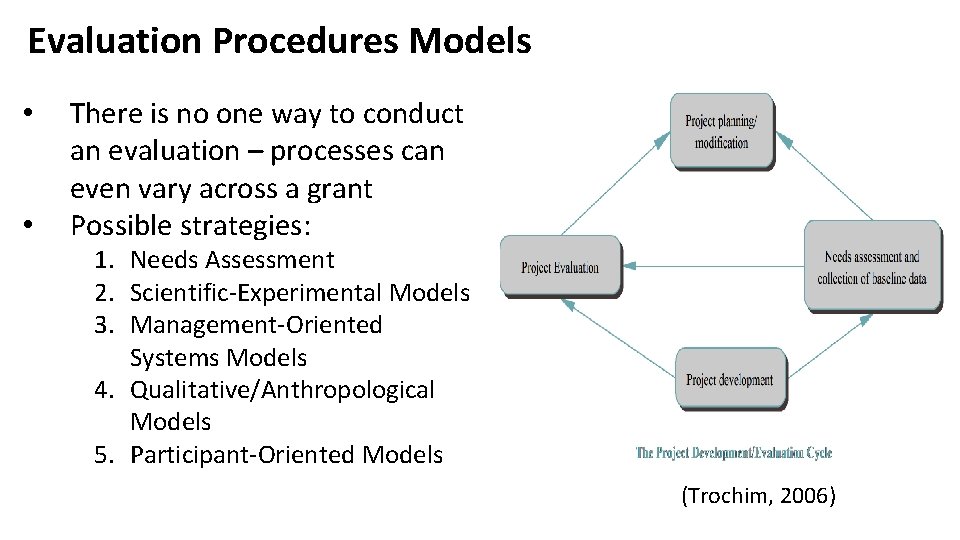

Evaluation Procedures Models

There is no one way to conduct an evaluation; processes can even vary across a grant.

Possible strategies:

1. Needs Assessment
2. Scientific-Experimental Models
3. Management-Oriented Systems Models
4. Qualitative/Anthropological Models
5. Participant-Oriented Models

(Trochim, 2006)

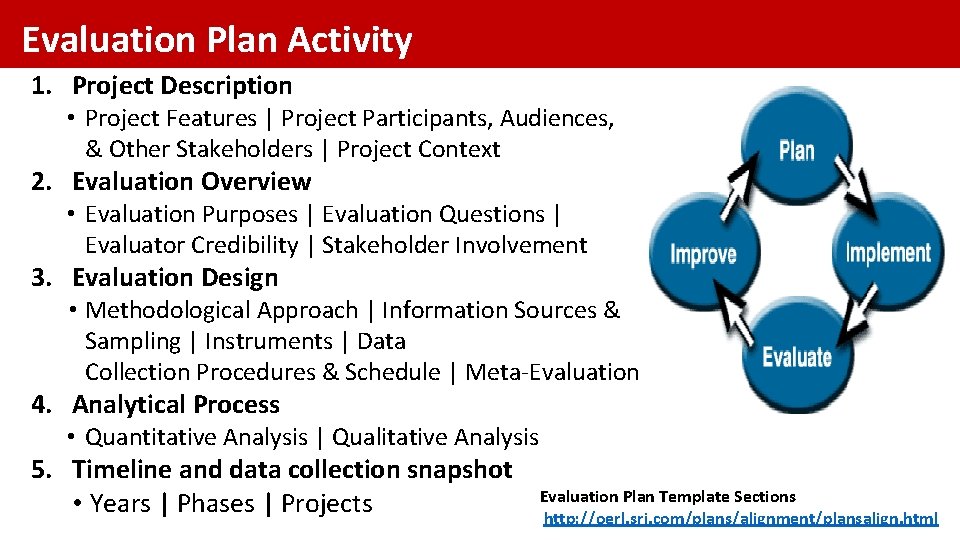

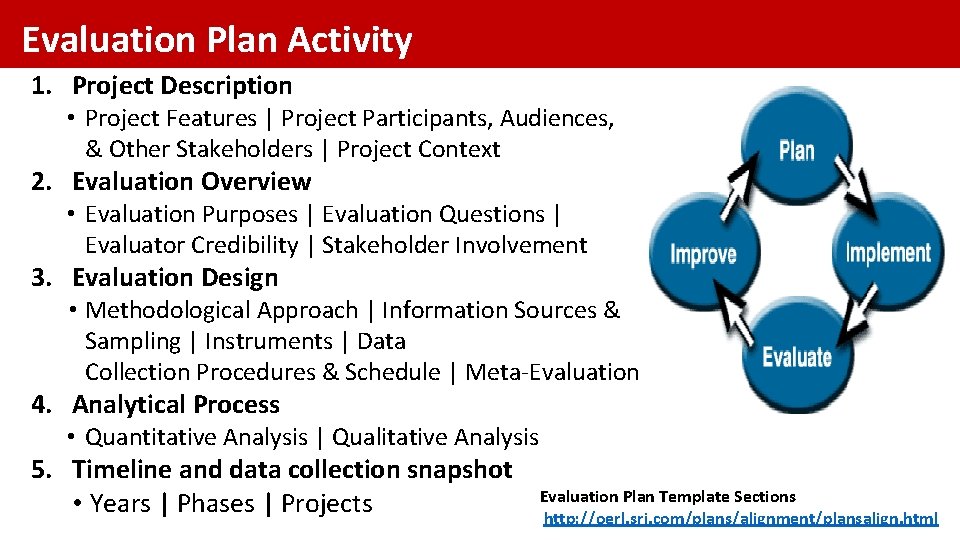

Evaluation Plan Activity

Evaluation Plan Template Sections (http://oerl.sri.com/plans/alignment/plansalign.html):

1. Project Description: Project Features | Project Participants, Audiences, & Other Stakeholders | Project Context
2. Evaluation Overview: Evaluation Purposes | Evaluation Questions | Evaluator Credibility | Stakeholder Involvement
3. Evaluation Design: Methodological Approach | Information Sources & Sampling | Instruments | Data Collection Procedures & Schedule | Meta-Evaluation
4. Analytical Process: Quantitative Analysis | Qualitative Analysis
5. Timeline and data collection snapshot: Years | Phases | Projects

Steps 4-6: Detailing Out the Qualitative Research Component of the Evaluation Plan

- Use research questions instead of hypotheses
- Take a holistic approach that studies the culture, group, and individual in the real-world setting
- Strive for “Thick Description”
- Focus on seeing the world through the eyes of the participants
- Context is critical: strive to make sense of phenomena in terms of the meaning of human behavior
- Researcher integrity is an essential component: trust and confidentiality
- Meaning resides in language, and therefore qualitative research focuses on studying text

(Jackson & Verberg, 2002)

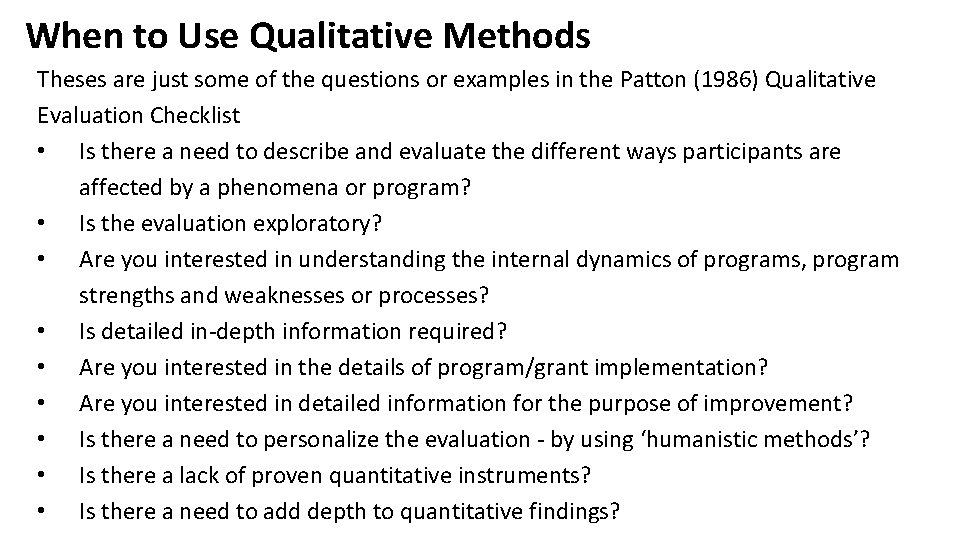

When to Use Qualitative Methods

These are just some of the questions or examples in the Patton (1986) Qualitative Evaluation Checklist:

- Is there a need to describe and evaluate the different ways participants are affected by a phenomenon or program?
- Is the evaluation exploratory?
- Are you interested in understanding the internal dynamics of programs, program strengths and weaknesses, or processes?
- Is detailed in-depth information required?
- Are you interested in the details of program/grant implementation?
- Are you interested in detailed information for the purpose of improvement?
- Is there a need to personalize the evaluation by using ‘humanistic methods’?
- Is there a lack of proven quantitative instruments?
- Is there a need to add depth to quantitative findings?

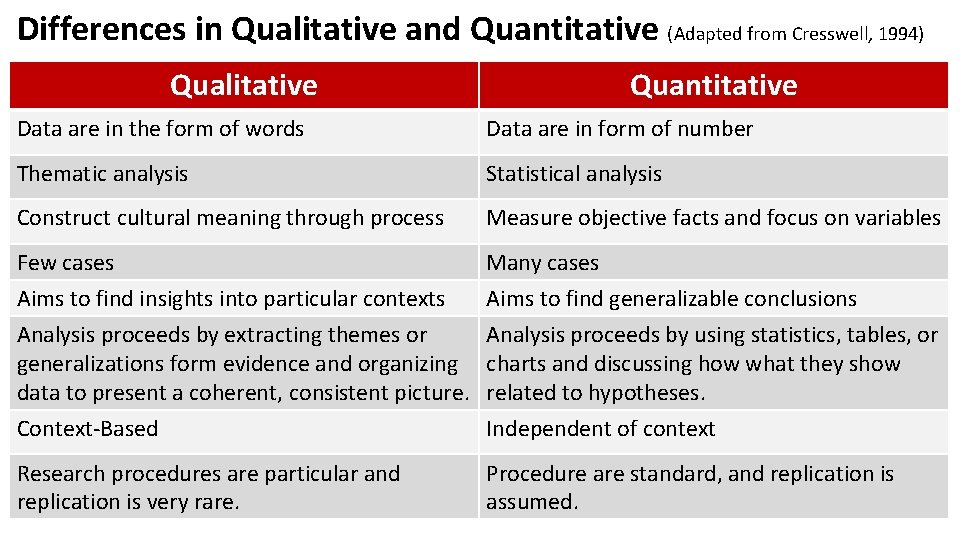

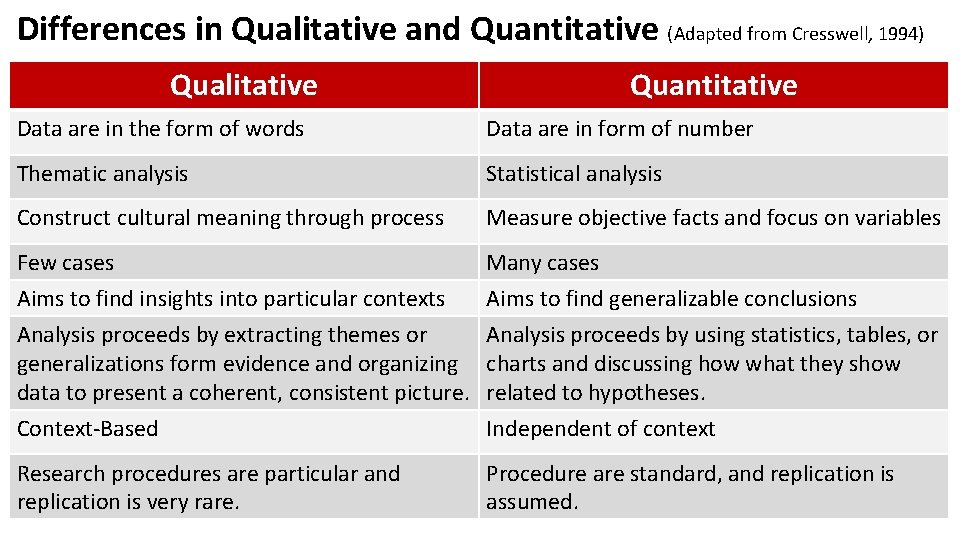

Differences in Qualitative and Quantitative (Adapted from Creswell, 1994)

| Qualitative | Quantitative |
| --- | --- |
| Data are in the form of words | Data are in the form of numbers |
| Thematic analysis | Statistical analysis |
| Construct cultural meaning through process | Measure objective facts and focus on variables |
| Few cases | Many cases |
| Aims to find insights into particular contexts | Aims to find generalizable conclusions |
| Analysis proceeds by extracting themes or generalizations from evidence and organizing data to present a coherent, consistent picture | Analysis proceeds by using statistics, tables, or charts and discussing how what they show relates to hypotheses |
| Context-based | Independent of context |
| Research procedures are particular, and replication is very rare | Procedures are standard, and replication is assumed |

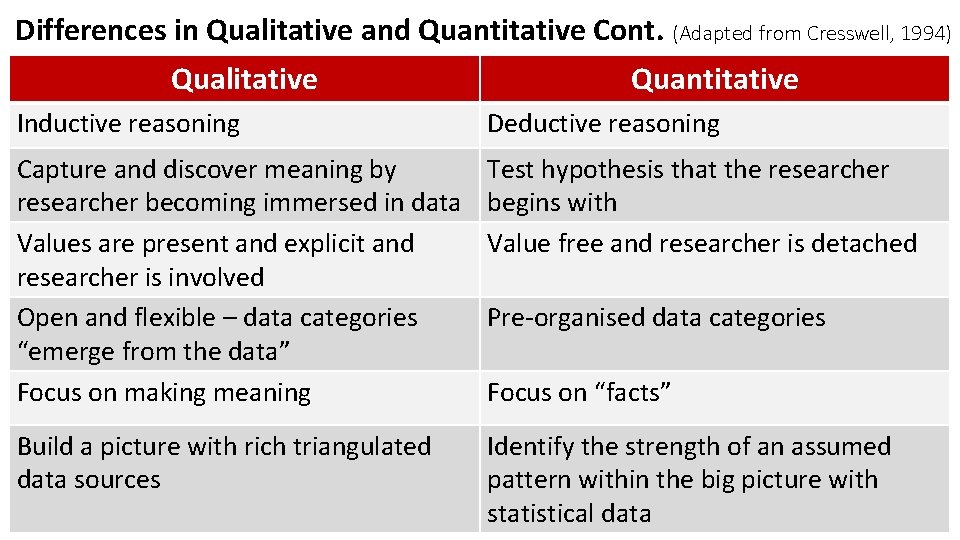

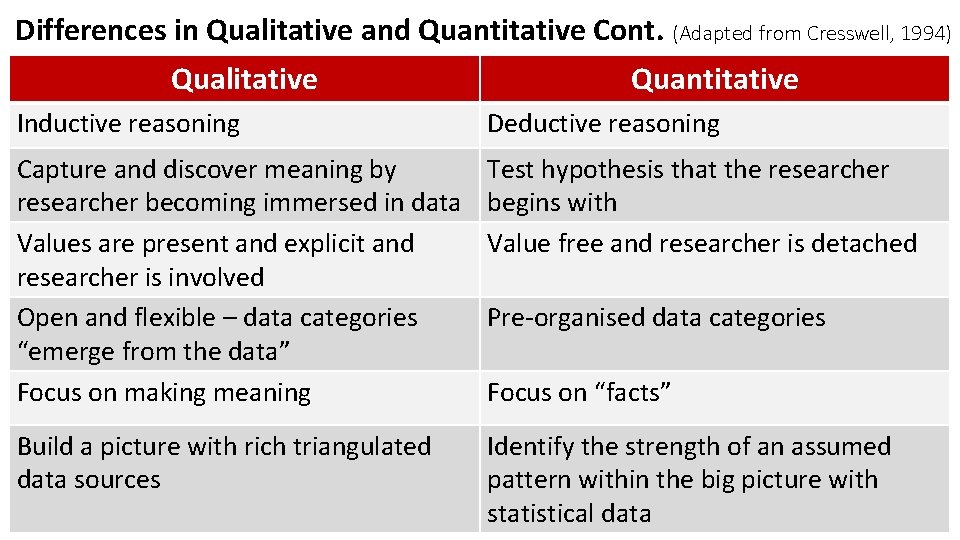

Differences in Qualitative and Quantitative, cont. (Adapted from Creswell, 1994)

| Qualitative | Quantitative |
| --- | --- |
| Inductive reasoning | Deductive reasoning |
| Capture and discover meaning by researcher becoming immersed in data | Test hypothesis that the researcher begins with |
| Values are present and explicit and researcher is involved | Value free and researcher is detached |
| Open and flexible – data categories “emerge from the data” | Pre-organised data categories |
| Focus on making meaning | Focus on “facts” |
| Build a picture with rich triangulated data sources | Identify the strength of an assumed pattern within the big picture with statistical data |

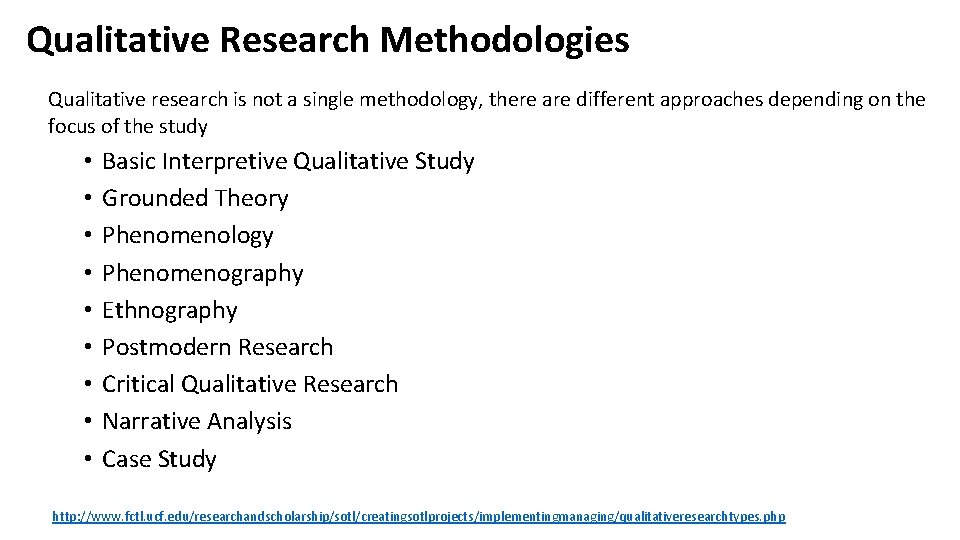

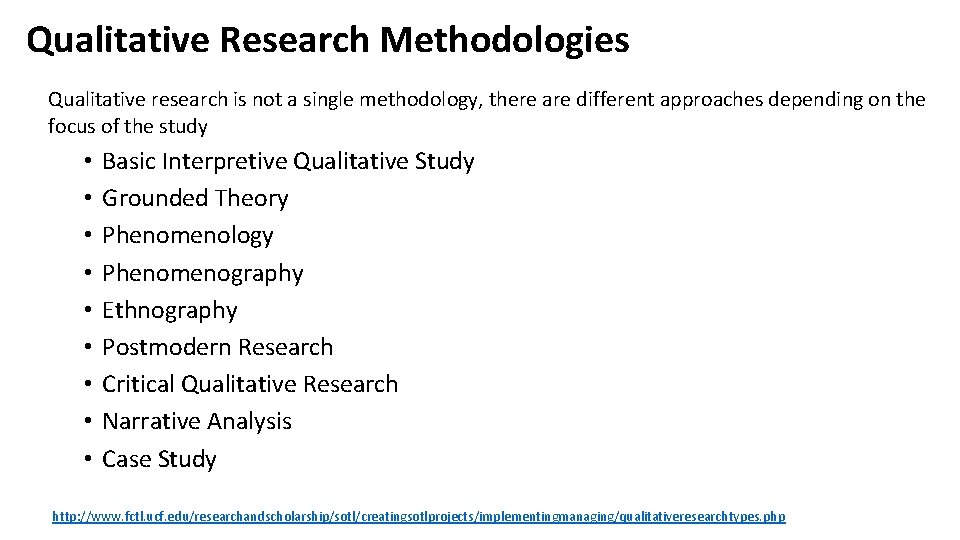

Qualitative Research Methodologies

Qualitative research is not a single methodology; there are different approaches depending on the focus of the study:

- Basic Interpretive Qualitative Study
- Grounded Theory
- Phenomenology
- Phenomenography
- Ethnography
- Postmodern Research
- Critical Qualitative Research
- Narrative Analysis
- Case Study

Source: http://www.fctl.ucf.edu/researchandscholarship/sotl/creatingsotlprojects/implementingmanaging/qualitativeresearchtypes.php

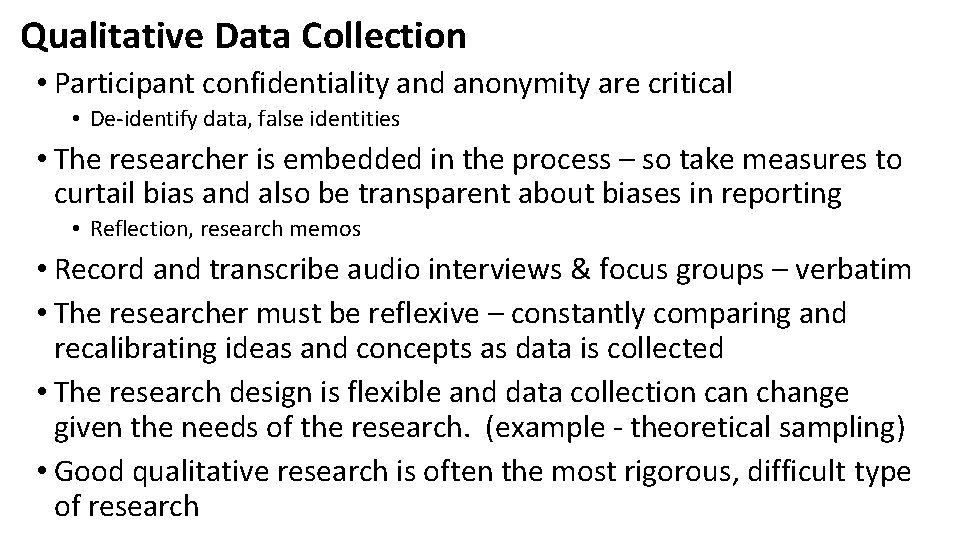

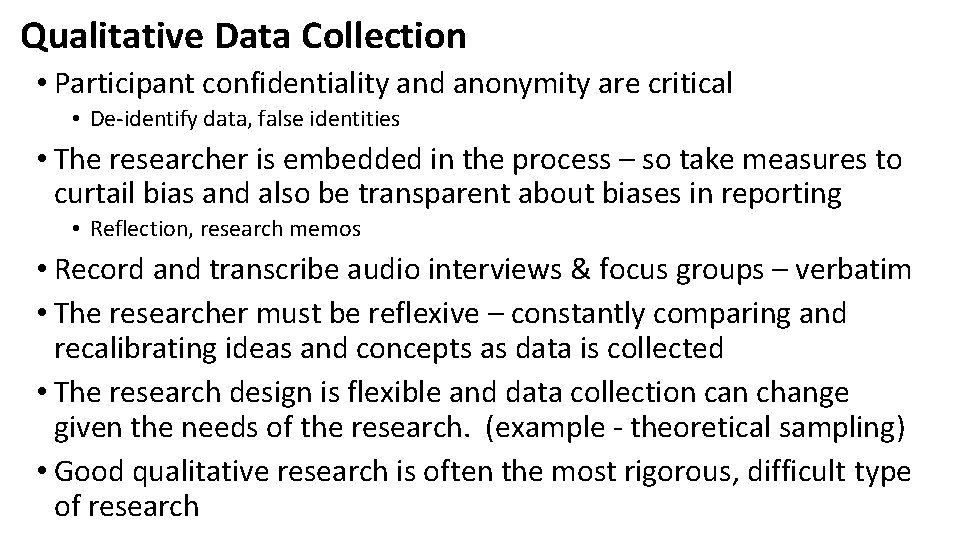

Qualitative Data Collection

- Participant confidentiality and anonymity are critical
  - De-identify data, false identities
- The researcher is embedded in the process, so take measures to curtail bias and also be transparent about biases in reporting
  - Reflection, research memos
- Record and transcribe audio interviews & focus groups verbatim
- The researcher must be reflexive: constantly comparing and recalibrating ideas and concepts as data is collected
- The research design is flexible and data collection can change given the needs of the research (example: theoretical sampling)
- Good qualitative research is often the most rigorous, difficult type of research
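The de-identification step above can be sketched in a few lines of Python. This is a minimal illustration, not a complete anonymization procedure: the names, the `pseudonymize` helper, and the "Participant N" labels are all hypothetical, and real transcripts usually also need places, job titles, and other indirect identifiers removed by hand.

```python
import re


def pseudonymize(text, names):
    """Replace each real name with a stable label such as 'Participant 1'.

    Returns the cleaned text and the name-to-label key, which should be
    stored separately from the data.
    """
    mapping = {name: f"Participant {i}" for i, name in enumerate(names, start=1)}
    for name, alias in mapping.items():
        # \b limits the match to whole words, so 'Sam' does not match 'Sample'
        text = re.sub(rf"\b{re.escape(name)}\b", alias, text)
    return text, mapping


# Hypothetical transcript excerpt and participant names
transcript = "Maria said the workshop helped. Later, Maria and Sam co-taught."
clean, key = pseudonymize(transcript, ["Maria", "Sam"])
print(clean)
```

Keeping the key in a separate, access-controlled file lets the researcher return to a participant for member checks without exposing identities in the working data.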

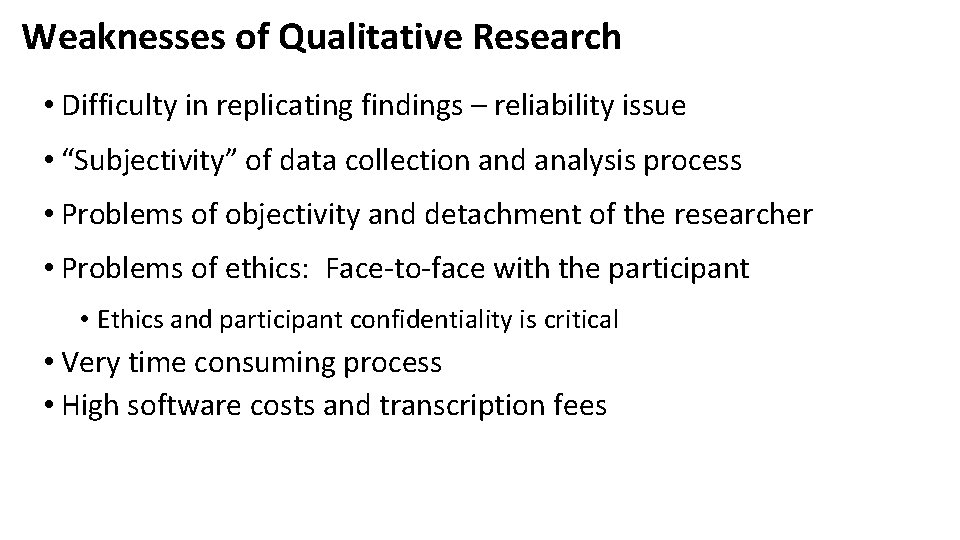

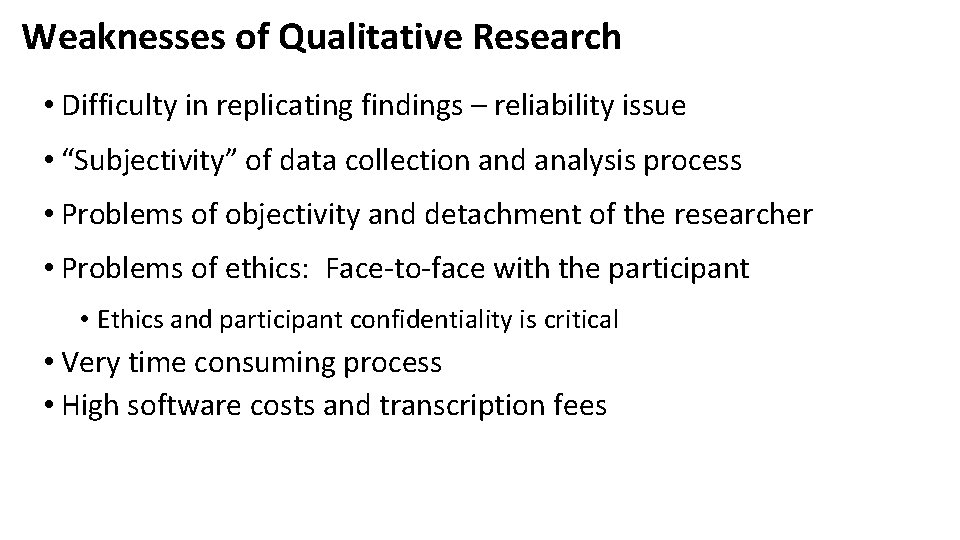

Weaknesses of Qualitative Research

- Difficulty in replicating findings: a reliability issue
- “Subjectivity” of the data collection and analysis process
- Problems of objectivity and detachment of the researcher
- Problems of ethics: face-to-face with the participant
- Ethics and participant confidentiality are critical
- Very time-consuming process
- High software costs and transcription fees

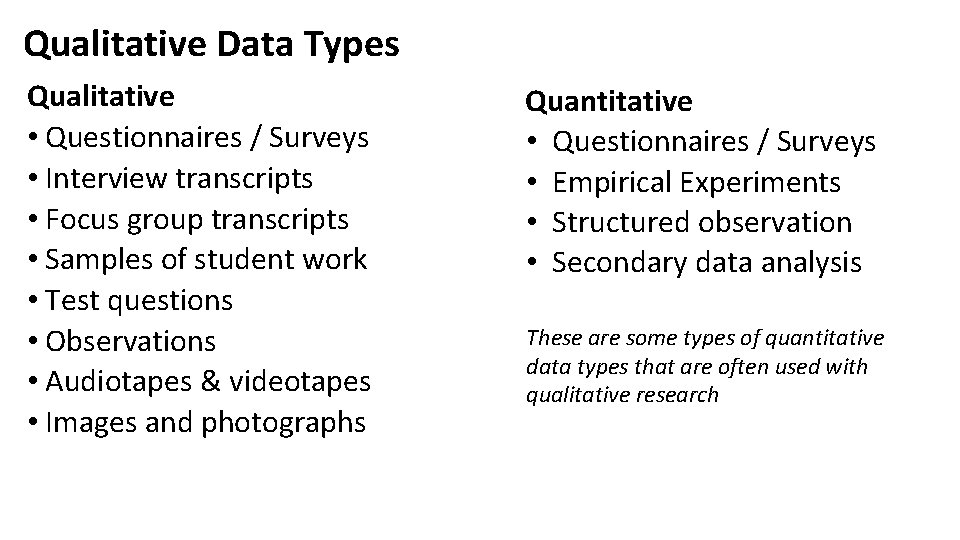

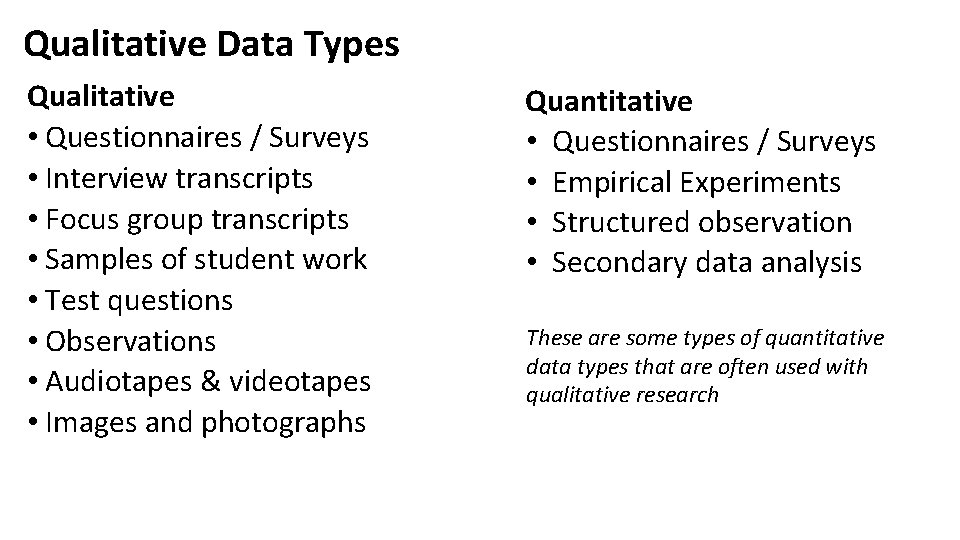

Qualitative Data Types

Qualitative:

- Questionnaires / Surveys
- Interview transcripts
- Focus group transcripts
- Samples of student work
- Test questions
- Observations
- Audiotapes & videotapes
- Images and photographs

Quantitative (some types of quantitative data that are often used with qualitative research):

- Questionnaires / Surveys
- Empirical Experiments
- Structured observation
- Secondary data analysis

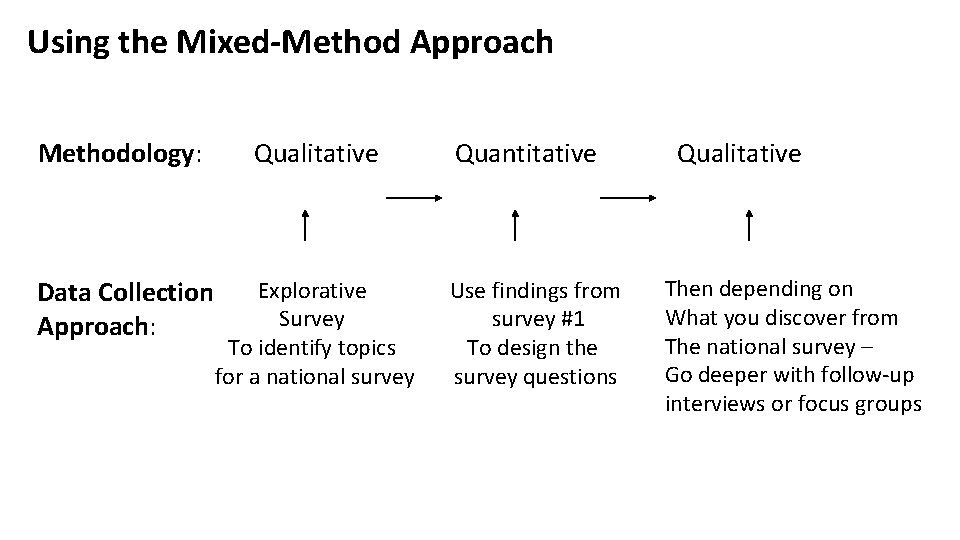

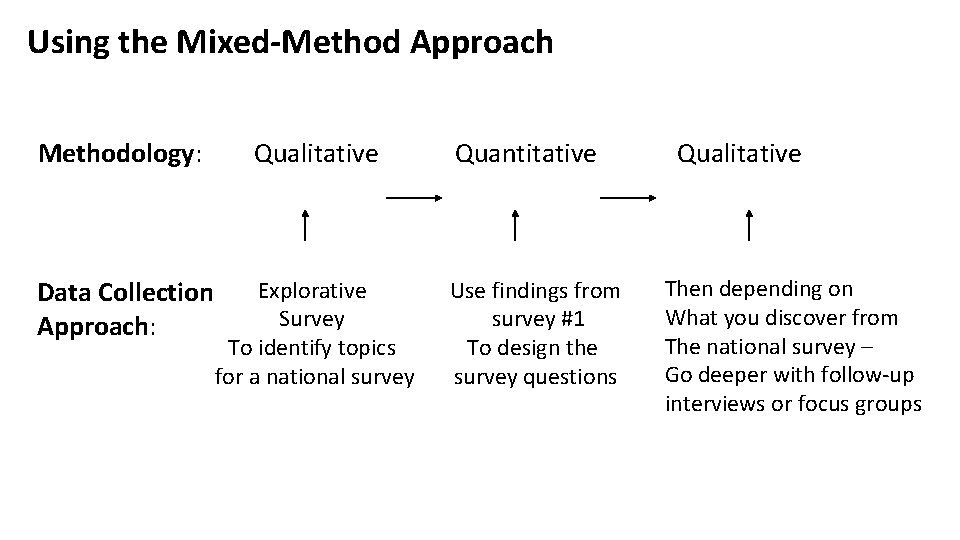

Using the Mixed-Method Approach

Methodology sequence: Qualitative, then Quantitative, then Qualitative

Data collection approach:

1. Explorative survey to identify topics for a national survey
2. Use findings from survey #1 to design the survey questions
3. Then, depending on what you discover from the national survey, go deeper with follow-up interviews or focus groups

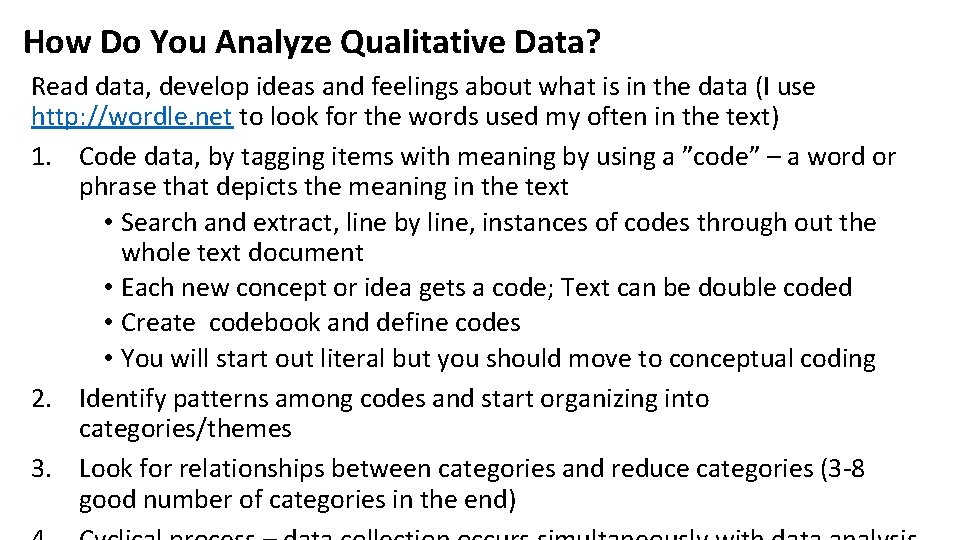

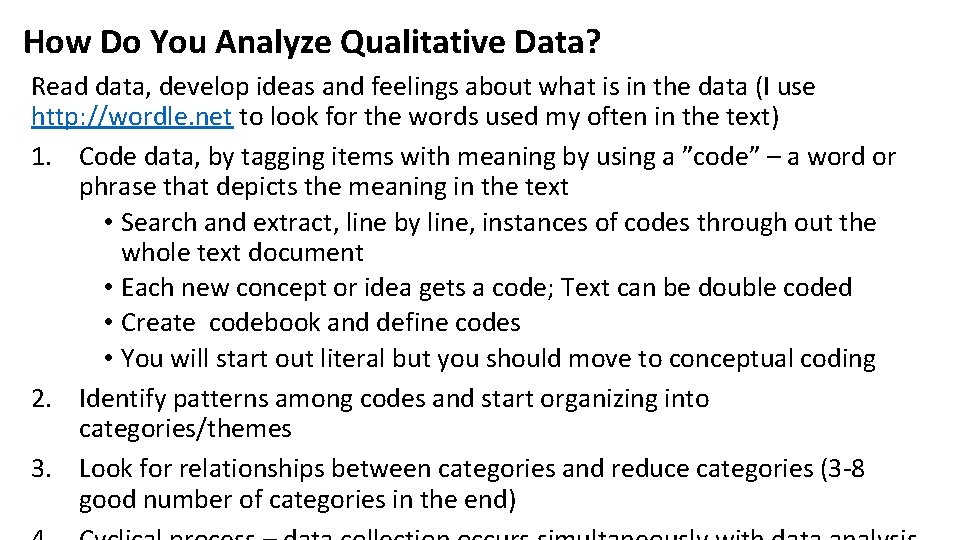

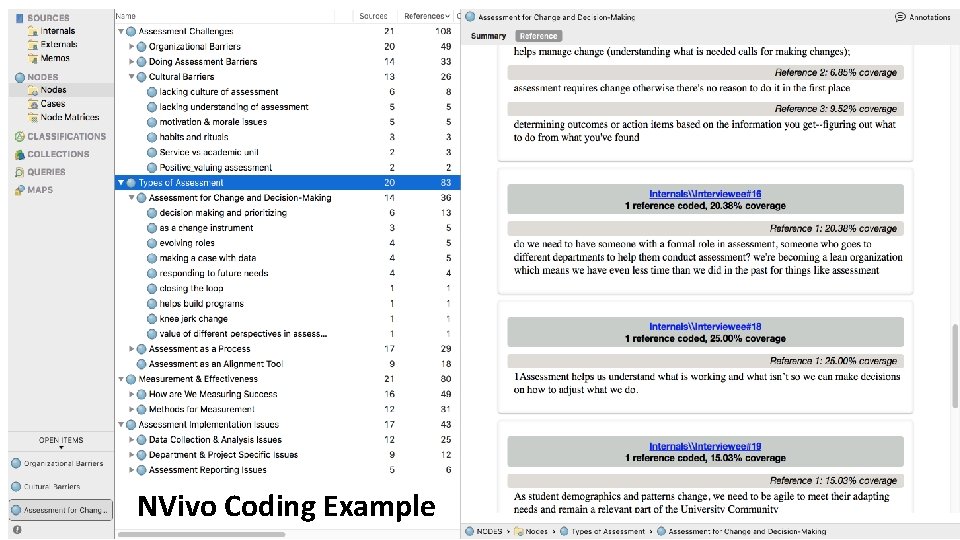

How Do You Analyze Qualitative Data?

Read the data and develop ideas and feelings about what is in the data (I use http://wordle.net to look for the words used most often in the text).

1. Code data by tagging items with meaning using a “code”: a word or phrase that depicts the meaning in the text
   - Search and extract, line by line, instances of codes throughout the whole text document
   - Each new concept or idea gets a code; text can be double coded
   - Create a codebook and define codes
   - You will start out literal, but you should move to conceptual coding
2. Identify patterns among codes and start organizing into categories/themes
3. Look for relationships between categories and reduce categories (3-8 is a good number of categories in the end)
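The first-read and coding steps above can be roughed out in Python. This is only a sketch under stated assumptions: the stopword list, the codebook, its keywords, and the sample lines are all hypothetical, and keyword matching is a crude, literal first pass that does not replace the researcher's own line-by-line reading and conceptual coding.

```python
import re
from collections import Counter

# Small illustrative stopword list; a real one would be longer
STOPWORDS = {"the", "a", "and", "to", "of", "i", "it", "was", "in", "my", "but", "me", "by"}


def word_counts(text):
    """Count non-stopword words: a plain-text stand-in for a word cloud."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


# Hypothetical codebook: code name -> keywords suggesting the code applies
CODEBOOK = {
    "time_pressure": ["deadline", "rushed"],
    "peer_support": ["colleague", "mentor"],
}


def code_lines(lines, codebook):
    """Tag each line with every matching code; a line can be double coded."""
    coded = []
    for line in lines:
        lower = line.lower()
        codes = [code for code, keywords in codebook.items()
                 if any(k in lower for k in keywords)]
        coded.append((line, codes))
    return coded


lines = [
    "I felt rushed by the grant deadline.",
    "My mentor helped me meet the deadline.",
    "The workshop room was cold.",
]
counts = word_counts(" ".join(lines))
coded = code_lines(lines, CODEBOOK)
for line, codes in coded:
    print(codes, "|", line)
```

Lines that come back with no codes, like the third one here, are the ones to reread by hand: they either need a new code in the codebook or are not relevant to the research question.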

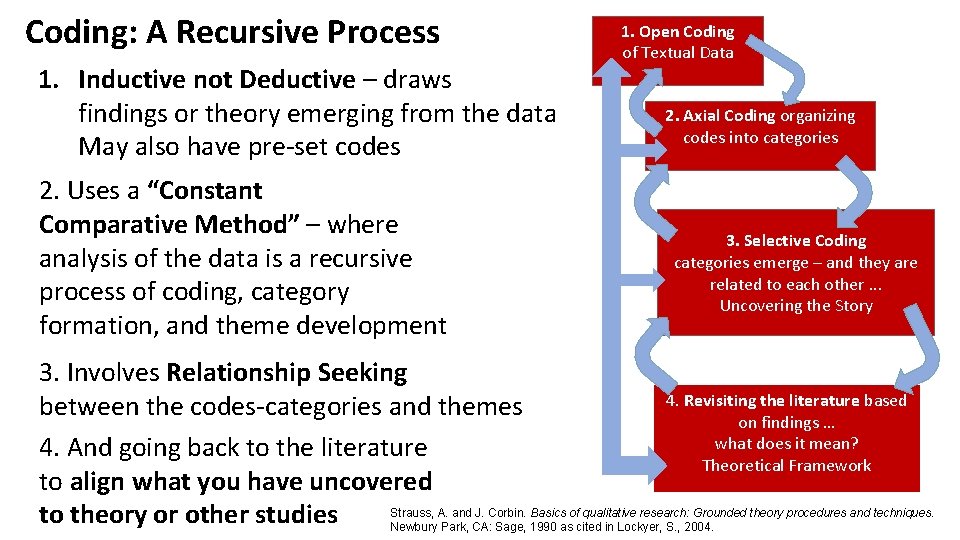

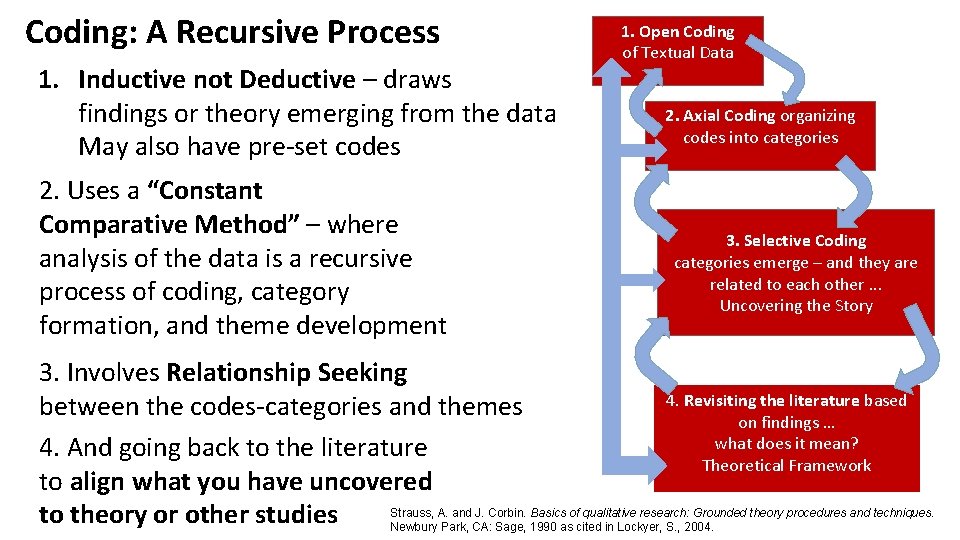

Coding: A Recursive Process

1. Inductive, not deductive: draws findings or theory emerging from the data. May also have pre-set codes.
2. Uses a “Constant Comparative Method”, where analysis of the data is a recursive process of coding, category formation, and theme development
3. Involves relationship seeking between the codes, categories, and themes … what does it mean?
4. And going back to the literature to align what you have uncovered to theory or other studies

Stages shown in the diagram:

1. Open Coding of textual data
2. Axial Coding: organizing codes into categories
3. Selective Coding: categories emerge, and they are related to each other … uncovering the story
4. Revisiting the literature based on findings (Theoretical Framework)

Strauss, A. and J. Corbin. Basics of qualitative research: Grounded theory procedures and techniques. Newbury Park, CA: Sage, 1990, as cited in Lockyer, S., 2004.

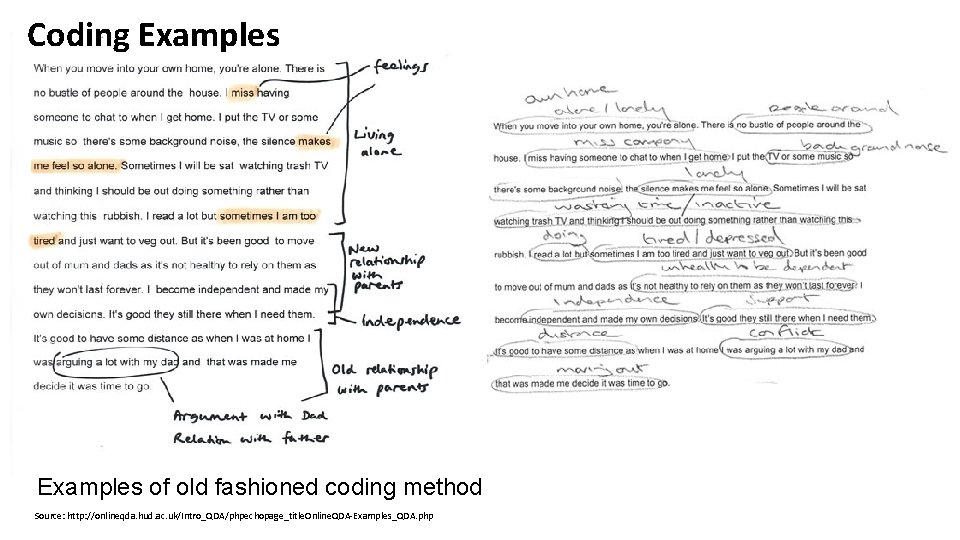

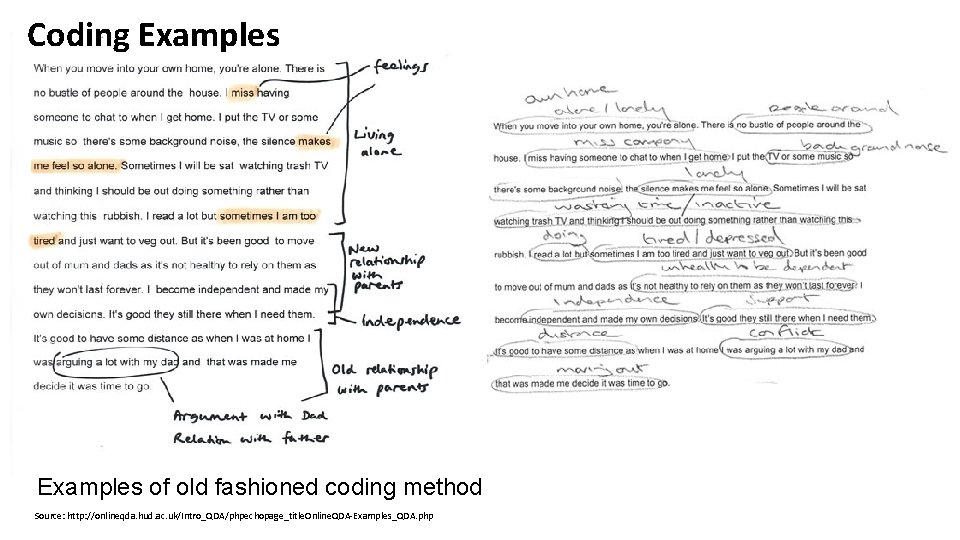

Coding: Examples of the old-fashioned coding method

Source: http://onlineqda.hud.ac.uk/Intro_QDA/phpechopage_titleOnlineQDA-Examples_QDA.php

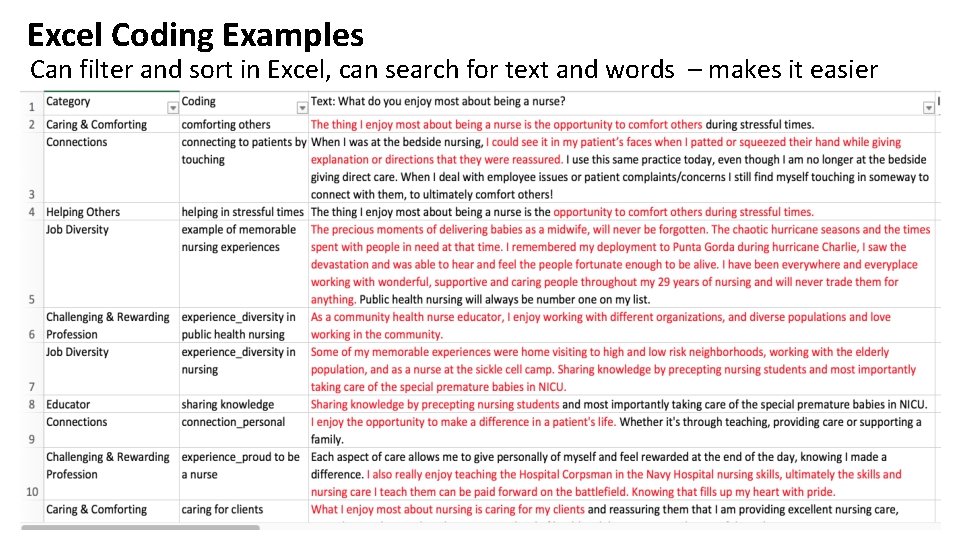

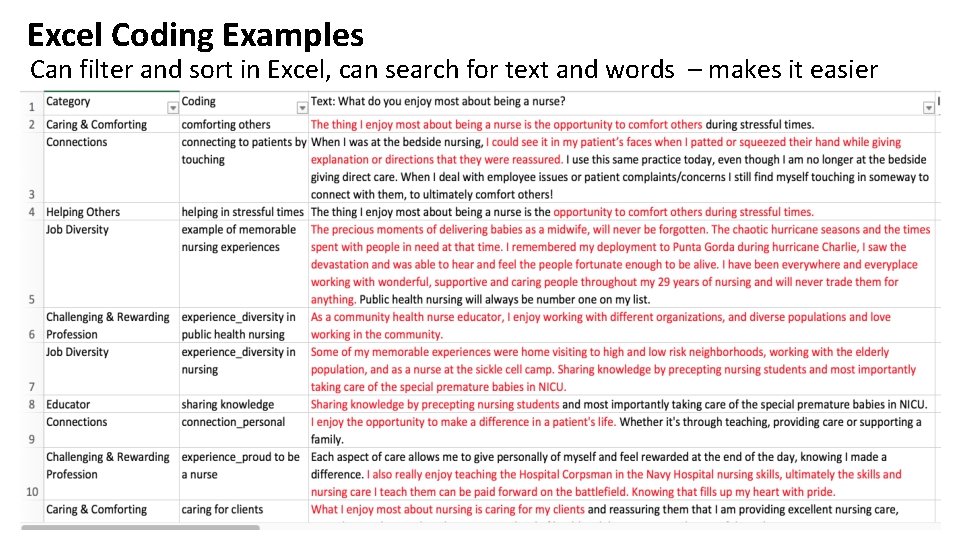

Excel Coding Examples

You can filter and sort coded data in Excel, and search for text and words, which makes analysis easier.
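The same filter, sort, and search operations can be sketched in plain Python for readers who keep coded segments outside a spreadsheet. The column names (`participant`, `code`, `text`) and the rows are hypothetical; each dictionary stands in for one spreadsheet row.

```python
# Each dict is one coded segment, like one row in a spreadsheet (hypothetical data)
segments = [
    {"participant": "P1", "code": "peer_support", "text": "My mentor checked in weekly."},
    {"participant": "P2", "code": "time_pressure", "text": "The deadline felt impossible."},
    {"participant": "P1", "code": "time_pressure", "text": "I rushed the final report."},
]


def filter_by_code(rows, code):
    """Keep only the rows tagged with the given code (like a column filter)."""
    return [r for r in rows if r["code"] == code]


def search_text(rows, term):
    """Case-insensitive search of the segment text (like Find in a sheet)."""
    return [r for r in rows if term.lower() in r["text"].lower()]


# Sort by participant, then by code (like a two-level sort)
by_participant = sorted(segments, key=lambda r: (r["participant"], r["code"]))
time_rows = filter_by_code(segments, "time_pressure")
hits = search_text(segments, "Deadline")

for row in time_rows:
    print(row["participant"], "|", row["text"])
```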

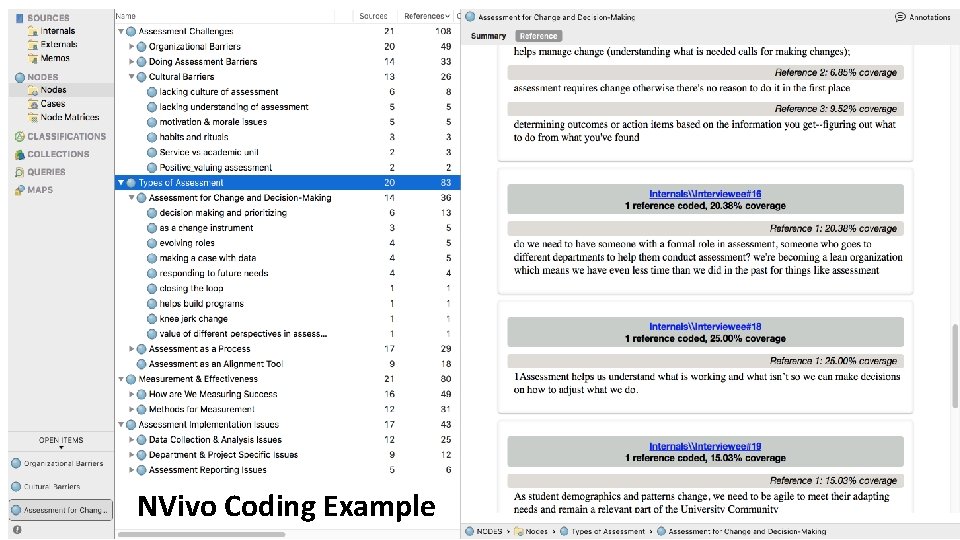

NVivo Coding Example

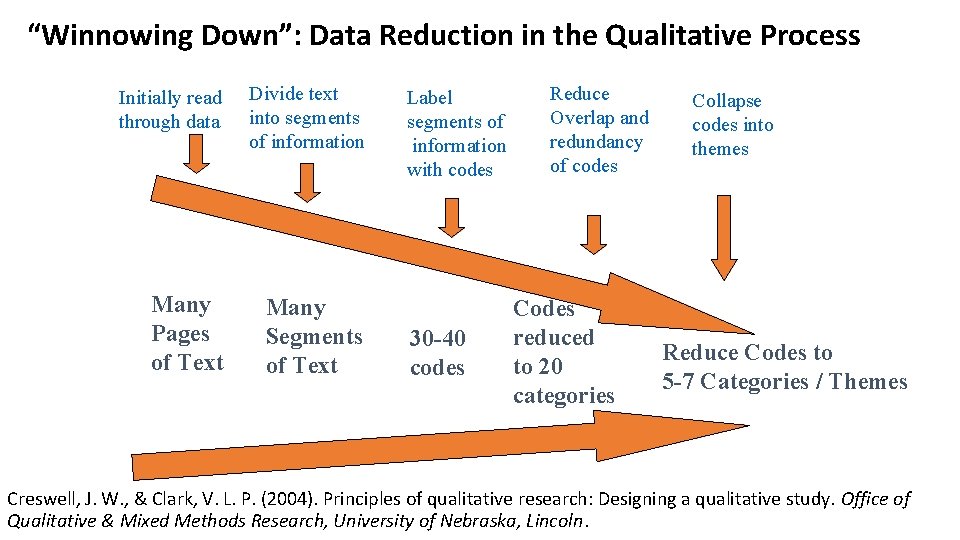

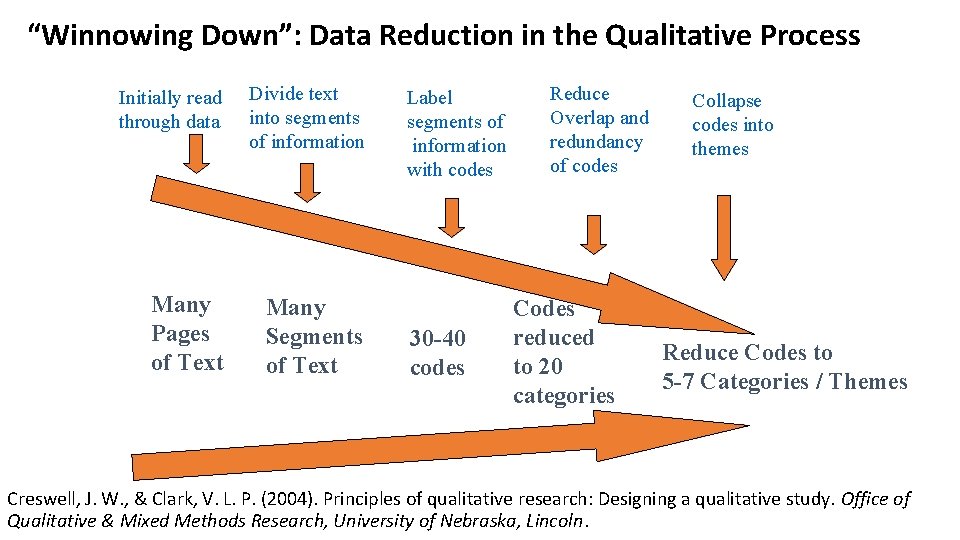

“Winnowing Down”: Data Reduction in the Qualitative Process

1. Initially read through data (many pages of text)
2. Divide text into segments of information (many segments of text)
3. Label segments of information with codes (30-40 codes)
4. Reduce overlap and redundancy of codes (codes reduced to 20 categories)
5. Collapse codes into themes (reduce codes to 5-7 categories / themes)

Creswell, J. W., & Clark, V. L. P. (2004). Principles of qualitative research: Designing a qualitative study. Office of Qualitative & Mixed Methods Research, University of Nebraska, Lincoln.
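The final collapsing step can be illustrated with a small Python sketch. The code names, theme names, and the code-to-theme mapping are hypothetical; in practice the mapping is an analytic decision made by the researcher, and the script only tallies the result.

```python
from collections import Counter, defaultdict

# Hypothetical mapping decided by the researcher: code -> theme
CODE_TO_THEME = {
    "deadline_stress": "Time pressure",
    "rushed_work": "Time pressure",
    "mentor_help": "Support",
    "peer_feedback": "Support",
}

# One entry per coded segment of text (hypothetical data)
coded_segments = [
    "deadline_stress", "mentor_help", "rushed_work",
    "deadline_stress", "peer_feedback",
]


def collapse(codes, mapping):
    """Roll codes up into themes; return segment counts and codes per theme."""
    theme_counts = Counter(mapping[c] for c in codes)
    theme_codes = defaultdict(set)
    for c in codes:
        theme_codes[mapping[c]].add(c)
    return theme_counts, dict(theme_codes)


theme_counts, theme_codes = collapse(coded_segments, CODE_TO_THEME)
for theme, n in theme_counts.most_common():
    print(f"{theme}: {n} segments from codes {sorted(theme_codes[theme])}")
```

A code missing from the mapping raises a `KeyError`, which is a useful prompt to decide where that code belongs before reporting themes.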

Short Coding Activity

- Try your hand at coding a short excerpt in your packet
- Share your codes with a peer or a group around you
- How similar was your coding?
- Report back

Image source: http://www.angelastockman.com/wordpress/wp-content/uploads/2016/02/CODING-QUALITATIVE-DATA-768x1024.png
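One simple way to put a number on "how similar was your coding?" is the share of codes two people have in common. The sketch below uses the Jaccard index (shared codes divided by all distinct codes); this measure is an illustrative choice rather than something the workshop prescribes, and the code names are hypothetical.

```python
def jaccard(codes_a, codes_b):
    """Shared codes divided by all distinct codes used by either coder."""
    a, b = set(codes_a), set(codes_b)
    if not a and not b:
        return 1.0  # two empty code sets agree trivially
    return len(a & b) / len(a | b)


# Hypothetical codes two peers applied to the same excerpt
coder_1 = {"time_pressure", "peer_support", "funding"}
coder_2 = {"time_pressure", "peer_support", "isolation"}

overlap = jaccard(coder_1, coder_2)
print(f"Overlap: {overlap:.0%}")
print("Only coder 1:", sorted(coder_1 - coder_2))
print("Only coder 2:", sorted(coder_2 - coder_1))
```

The codes that only one person used are often the most useful part of the discussion, since they show where the codebook definitions need tightening.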

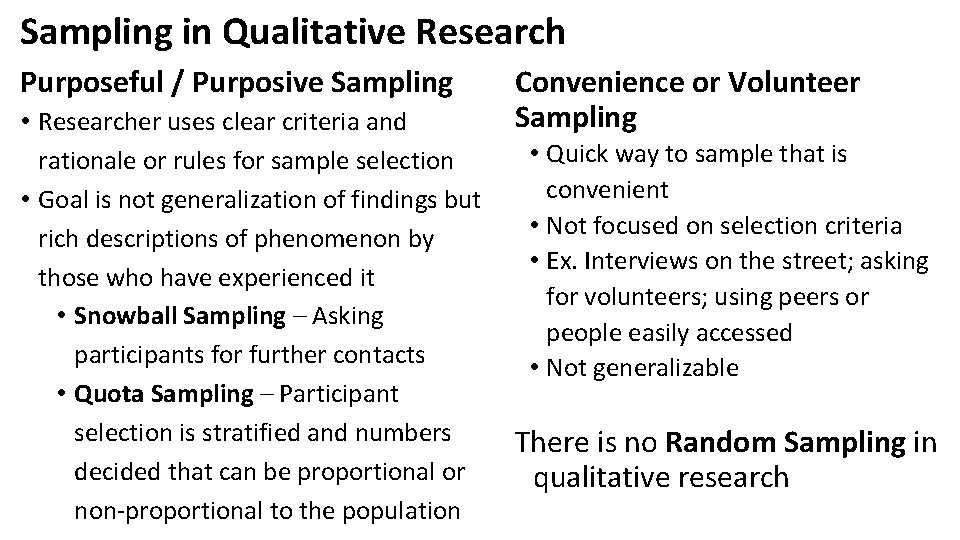

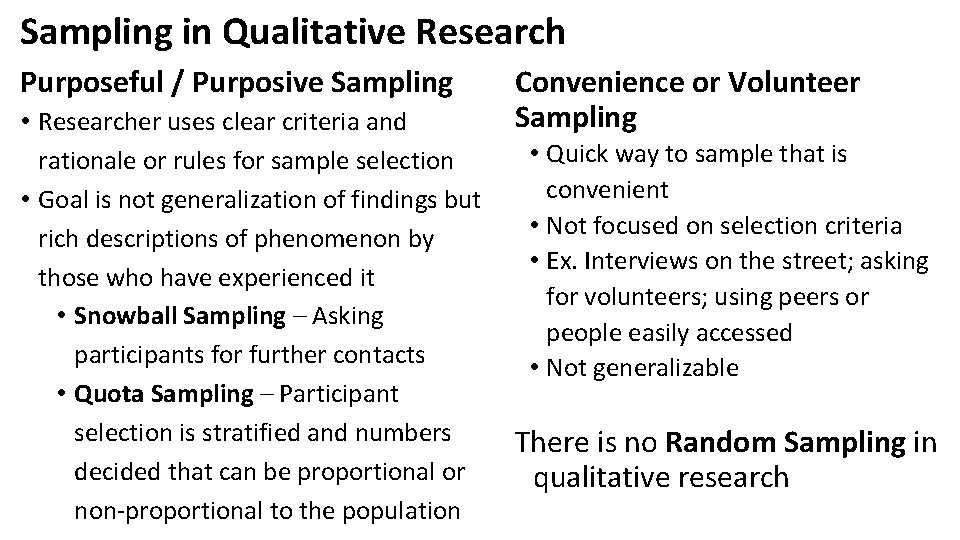

Sampling in Qualitative Research

Purposeful / Purposive Sampling

- Researcher uses clear criteria and rationale or rules for sample selection
- Goal is not generalization of findings but rich descriptions of the phenomenon by those who have experienced it
- Snowball Sampling – asking people for further contacts
- Quota Sampling – participant selection is stratified, and the numbers decided can be proportional or non-proportional to the population

Convenience or Volunteer Sampling

- Quick way to sample that is convenient
- Not focused on selection criteria
- Ex. interviews on the street; asking for volunteers; using peers or easily accessed participants
- Not generalizable

There is no Random Sampling in qualitative research
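For proportional quota sampling, the number of participants per group follows directly from each group's share of the population. The sketch below shows that arithmetic; the group names, population sizes, and the largest-remainder rule for handing out leftover seats are assumptions made for illustration. A non-proportional quota would simply set the numbers by hand instead.

```python
def proportional_quotas(population, sample_size):
    """Split sample_size across groups in proportion to group size.

    Uses integer arithmetic, then gives any leftover seats to the groups
    with the largest remainders so the quotas sum to sample_size.
    """
    total = sum(population.values())
    quotas = {g: (n * sample_size) // total for g, n in population.items()}
    leftover = sample_size - sum(quotas.values())
    by_remainder = sorted(
        population,
        key=lambda g: (population[g] * sample_size) % total,
        reverse=True,
    )
    for g in by_remainder[:leftover]:
        quotas[g] += 1
    return quotas


# Hypothetical population counts by stakeholder group
population = {"faculty": 50, "staff": 30, "students": 120}
quotas = proportional_quotas(population, 20)
print(quotas)
```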

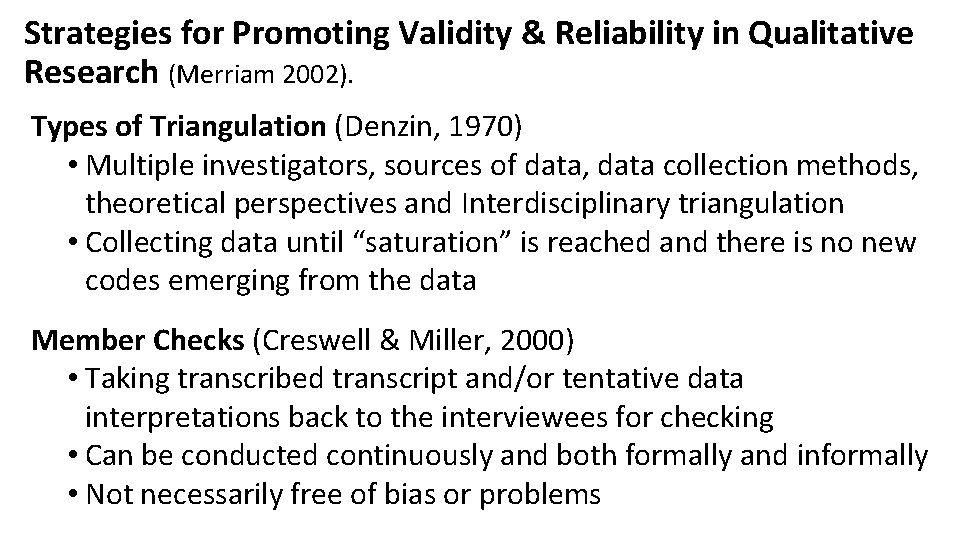

Strategies for Promoting Validity & Reliability in Qualitative Research (Merriam 2002)

Types of Triangulation (Denzin, 1970)

- Multiple investigators, sources of data, data collection methods, theoretical perspectives, and interdisciplinary triangulation
- Collecting data until “saturation” is reached and there are no new codes emerging from the data

Member Checks (Creswell & Miller, 2000)

- Taking the transcript and/or tentative data interpretations back to the interviewees for checking
- Can be conducted continuously and both formally and informally
- Not necessarily free of bias or problems
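Saturation can be tracked informally by counting how many new codes each successive interview contributes. The sketch below does that; the interview codes are hypothetical, and treating two consecutive interviews with no new codes as "saturated" (`run=2`) is an assumption for illustration, not an established threshold.

```python
def new_codes_per_interview(interviews):
    """For each interview, in order, count codes not seen in earlier ones."""
    seen = set()
    new_counts = []
    for codes in interviews:
        fresh = set(codes) - seen
        new_counts.append(len(fresh))
        seen |= fresh
    return new_counts


def saturation_point(new_counts, run=2):
    """Return the 1-based interview number that starts the first streak of
    `run` consecutive interviews with no new codes, or None if there is none."""
    streak = 0
    for i, n in enumerate(new_counts, start=1):
        streak = streak + 1 if n == 0 else 0
        if streak == run:
            return i - run + 1
    return None


# Hypothetical codes applied to four interviews, in collection order
interviews = [
    ["time_pressure", "peer_support"],
    ["peer_support", "funding"],
    ["time_pressure"],
    ["funding", "peer_support"],
]
new_counts = new_codes_per_interview(interviews)
point = saturation_point(new_counts)
print(new_counts, point)
```

A count like this only supports the judgment call; whether data collection can stop still depends on the research questions and on who has not yet been heard from.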

Other Strategies for Promoting Validity & Reliability in Qualitative Research (Merriam 2002)

Researcher’s Reflexivity

- Critical self-reflection by the researcher to identify and make transparent biases that may impact the investigation
- “Bracketing”, or setting aside one’s biases and personal views on a topic, by keeping a diary of personal thoughts and decisions during planning, implementation, and analysis

Transferability refers to the generalizability of the study findings to other settings, populations, and contexts

- The report must provide sufficient detail about the collection and analysis of data so that the reader can assess the study and use the report to reproduce it (think of a cookbook metaphor)
- Lack of transferability is viewed as a weakness of qualitative methods

Other Strategies for Promoting Validity & Reliability in Qualitative Research (Merriam 2002)

An Audit Trail (Lincoln and Guba, 1985)

- A record of the detailed account of the methods, procedures, and decision points in carrying out the study
- Six categories of audit trail materials:
  1. Raw data (interview guides, notes, documents)
  2. Data reduction and analysis products
  3. Data reconstruction and synthesis products (e.g. data analysis sheets)
  4. Process notes (journal)
  5. Materials relating to intentions and dispositions (inquiry proposal, journal, peer debriefing notes)
  6. Information relevant to any instrument development

Ten Ways to Avoid Drowning in Qualitative Data: Try it in your Classroom

1. “As you begin learning from the data you are gathering, narrow your study
2. Then, narrow your view within this smaller study (start small)
3. Use analytic questions to refine your perspective and begin limiting the data you gather
4. Plan your next study in response to the findings from your last study
5. Reflect on your findings as you go, and make helpful notations
6. Share the hunches you’re developing with your students, and invite them to help you tighten your focus
7. Research your topic, and use what others are learning to inform your work
8. Create metaphors and analogies for your discoveries
9. Use visual devices to represent the story of your learning
10. Code your data”

Angela Stockman Blog: http://www.angelastockman.com/blog/2016/02/14/want-to-go-gradeless-ten-ways-to-avoid-drowning-in-data/

References - Evaluation

- Banta, T. W., & Palomba, C. A. (2014). Assessment essentials: Planning, implementing, and improving assessment in higher education (2nd ed.). San Francisco, CA: Jossey-Bass Publishers, pp. 1-2.
- Better Evaluation Resources. http://www.betterevaluation.org/en/commissioners_guide/step5
- Center for the Advancement of Informal Science Education Evaluation Resources. http://www.informalscience.org/evaluation
- Corporation for National & Community Service Evaluation Resources. https://www.nationalservice.gov/resources/evaluation
- Evaluation Plan Template Sections. http://oerl.sri.com/plans/alignment/plansalign.html
- Frechtling (2010). 2010 User-friendly handbook of project evaluation. http://www.informalscience.org/2010-user-friendly-handbook-project-evaluation
- Jackson, W., & Verberg, N. (2002). Approaches to methods. Methods: doing social research. Toronto: Pearson Prentice Hall.
- NSF (2004). A Guide for Proposal Writing (nsf04016, Criteria for Evaluation: Intellectual merit and broader impacts). http://www.nsf.gov/pubs/2004/nsf04016_4.htm
- Owens, J. M. (2006). Program evaluation: Forms and approaches (3rd ed.). NY: The Guilford Press, p. 18.
- Pell Institute Evaluation 101 Toolkit. http://toolkit.pellinstitute.org/evaluation-101/
- The Kellogg Foundation Logic Model Guidebook. https://www.wkkf.org/resource-directory/resource/2006/02/wk-kellogg-foundation-logic-model-development-guide
- Trochim, W. (2006). Research Knowledge Base Website. Available at: https://www.socialresearchmethods.net

References - Qualitative Research

- Angela Stockman Blog. http://www.angelastockman.com/blog/2016/02/14/want-to-go-gradeless-ten-ways-to-avoid-drowning-in-data/
- Creswell, J. W. (1994). Research Design: Quantitative and Qualitative Approaches. Thousand Oaks, CA: Sage.
- Creswell, J. W., & Miller, D. L. (2000). Determining validity in qualitative inquiry. Theory into Practice, 39(3), 124-130.
- Creswell, J. W., & Clark, V. L. P. (2004). Principles of qualitative research: Designing a qualitative study. Office of Qualitative & Mixed Methods Research, University of Nebraska, Lincoln.
- Creswell, J. W. (2008). Research: Planning, conducting, and evaluating quantitative and qualitative research (3rd ed.). Upper Saddle River: Pearson.
- Denzin, N. K. (1970). Strategies of multiple triangulation (pp. 297-313). In N. K. Denzin (Ed.), The research act in sociology: A theoretical introduction to sociological method. New York: McGraw-Hill.
- Lincoln, Y. S., & Guba, E. G. (1985). Naturalistic inquiry. Beverly Hills, CA: Sage.
- Merriam, S. B. (2002). Qualitative research in practice: Examples for discussion and analysis. San Francisco, CA: Jossey-Bass.
- Online QDA website. http://onlineqda.hud.ac.uk/Intro_QDA/phpechopage_titleOnlineQDA-Examples_QDA.php
- Strauss, A. and J. Corbin. Basics of qualitative research: Grounded theory procedures and techniques. Newbury Park, CA: Sage, 1990, as cited in Lockyer, S., 2004.
- University of Central Florida Faculty Center. Available at: http://www.fctl.ucf.edu/researchandscholarship/sotl/creatingsotlprojects/implementingmanaging/qualitativeresearchtypes.php