Using Pattern-Models to Guide SSD Deployment for Big Data in HPC Systems

Junjie Chen¹, Philip C. Roth², Yong Chen¹

¹ Data-Intensive Scalable Computing Lab (DISCL), Department of Computer Science, Texas Tech University
² Oak Ridge National Laboratory

IEEE BigData '13

Background

- HPC applications are increasingly data-intensive
  - Scientific simulations have already reached 100 TB – 1 PB of data volume, projected to reach 10 PB – 100 PB on upcoming exascale systems
  - Data collected from instruments is growing rapidly too; for example, a global climate model with 100 × 120 km grid cells manages PBs of data
- This trend brings critical challenges
  - Efficient I/O access demands
  - Highly efficient storage systems

Storage Media

- Over 90% of all data in the world is stored on magnetic media (hard disk drives, HDDs)
  - Invented by IBM in 1956
  - The mechanism has remained the same since then
  - Various mechanical moving parts
- High latency, slow random access performance, unreliable, power hungry
- Large capacity, low cost (USD 0.10/GB), impressive sequential access performance

Emerging Storage Media

- Non-volatile storage-class memory (SCM)
  - Flash-memory based solid state drives (SSDs), PCRAM, NRAM, …
  - Use microchips that retain data in non-volatile memory (an array of floating-gate transistors isolated by an insulating layer)
- Superior performance, high bandwidth, low latency (esp. for random accesses), less susceptible to physical shock, power efficient
- Low capacity, high cost (USD 0.90-2/GB), block erasure, wear-out (10K-100K P/E cycles)

Pictured: Intel® X25-E SSD

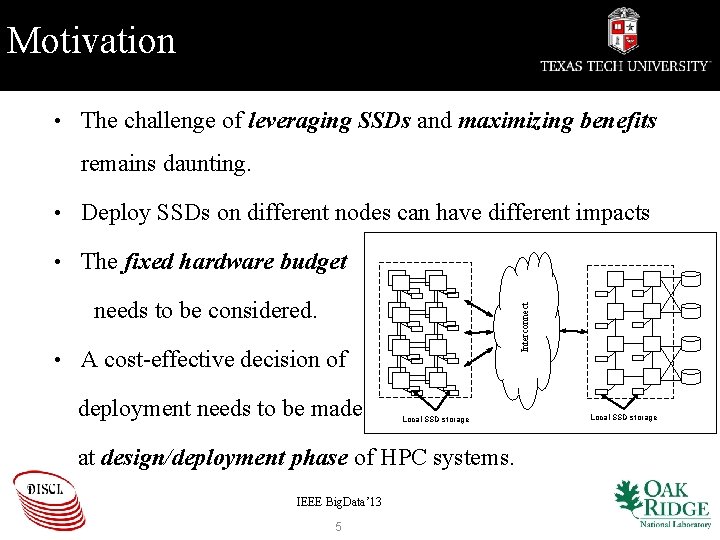

Motivation

- Leveraging SSDs and maximizing their benefits remains a daunting challenge.
- Deploying SSDs on different nodes can have different impacts.
- The fixed hardware budget needs to be considered.
- A cost-effective deployment decision needs to be made at the design/deployment phase of HPC systems.

Figure labels: Interconnect; Local SSD storage

Our Study

- Investigate different deployment strategies
  - Compute side and storage side
  - Characteristics of SSDs, ratios, access patterns
  - Consider a fixed hardware budget
- Pattern-Model Guided Deployment Approach
  - Considers the I/O access pattern of workloads
  - Considers SSD characteristics via a performance model

Our Contributions

- We propose a pattern-model guided deployment approach.
- We introduce a performance model to quantitatively analyze different SSD deployment strategies.
- We try to answer the question of how SSDs should be utilized for big data applications in HPC systems.
- We have carried out initial experimental tests to verify the proposed approach.

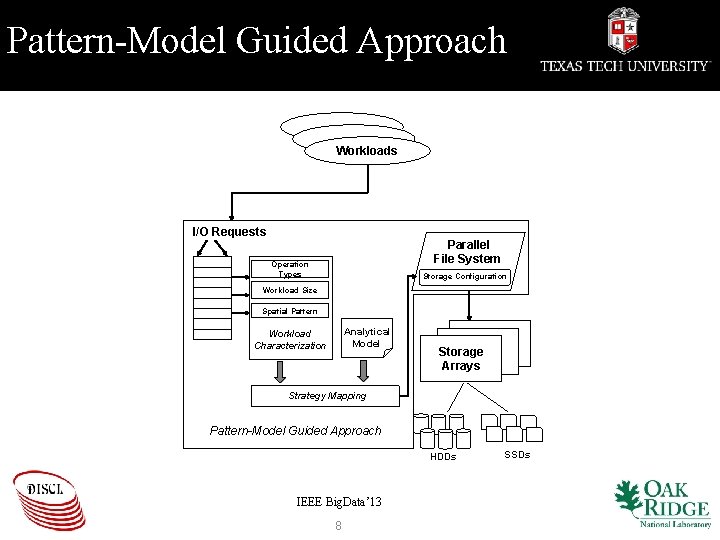

Pattern-Model Guided Approach

Diagram labels: Workloads; I/O Requests; Parallel File System; Operation Types; Workload Size; Spatial Pattern; Workload Characterization; Analytical Model; Strategy Mapping; Storage Configuration; Storage Arrays (HDDs, SSDs)

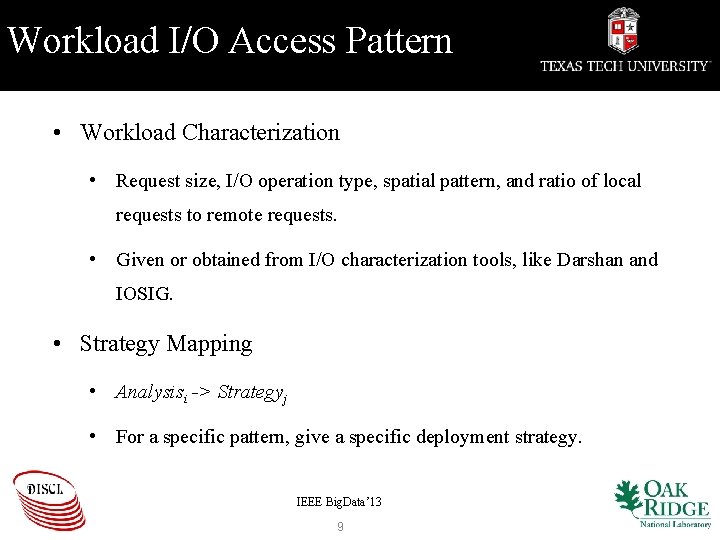

Workload I/O Access Pattern

- Workload Characterization
  - Request size, I/O operation type, spatial pattern, and ratio of local requests to remote requests
  - Given, or obtained from I/O characterization tools such as Darshan and IOSIG
- Strategy Mapping
  - $\mathrm{Analysis}_i \rightarrow \mathrm{Strategy}_j$
  - Each specific pattern maps to a specific deployment strategy
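The characterization-then-mapping step can be pictured as a record plus a lookup. The following is only a minimal sketch: the class `WorkloadPattern`, the function `map_strategy`, and the table `EXAMPLE_TABLE` are hypothetical names, and the table entries are placeholders chosen for illustration, not mappings reported by the study.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class WorkloadPattern:
    """The four characteristics named on the slide (field names are hypothetical)."""
    request_size_bytes: int
    operation: str               # "read" or "write"
    spatial_pattern: str         # "sequential" or "random"
    local_to_remote_ratio: float


# Placeholder table: keys are (operation, spatial pattern); values are one of the
# three deployment strategies. These entries are illustrative assumptions only.
EXAMPLE_TABLE: Dict[Tuple[str, str], str] = {
    ("read", "random"): "compute-side",
    ("write", "sequential"): "storage-side",
}


def map_strategy(pattern: WorkloadPattern,
                 table: Dict[Tuple[str, str], str]) -> Optional[str]:
    """Return the strategy mapped to this pattern, or None if the table has no entry."""
    return table.get((pattern.operation, pattern.spatial_pattern))
```

In practice the table would be filled in from the analytical model rather than written by hand; a characterization tool such as Darshan or IOSIG would supply the field values.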

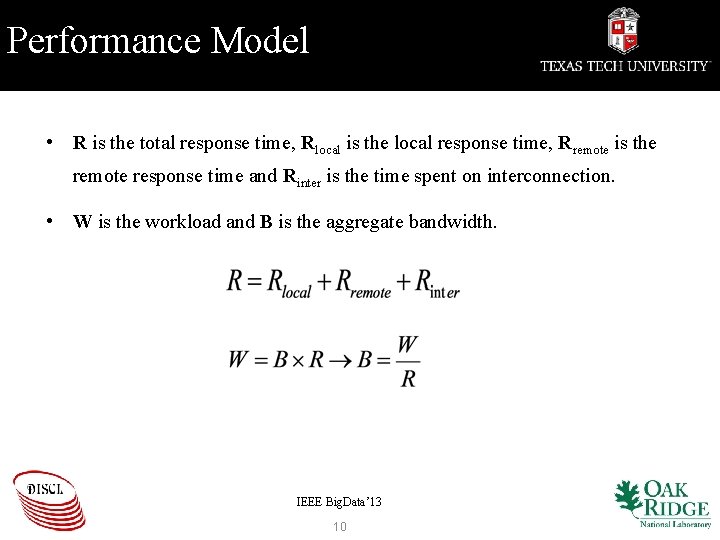

Performance Model

- $R$ is the total response time, $R_{local}$ is the local response time, $R_{remote}$ is the remote response time, and $R_{inter}$ is the time spent on the interconnect.
- $W$ is the workload and $B$ is the aggregate bandwidth.
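The model's equations appear only in the slide image and are not reproduced in the text. As a rough sketch, assuming the total response time is the sum of the three components and the aggregate bandwidth is workload divided by total response time (both are assumptions, not confirmed by the slide text), the relationship between the symbols could be written as follows. The function names are hypothetical.

```python
def total_response_time(r_local: float, r_remote: float, r_inter: float) -> float:
    """Assumed additive form: R = R_local + R_remote + R_inter (seconds)."""
    return r_local + r_remote + r_inter


def aggregate_bandwidth(workload_bytes: float, r_total: float) -> float:
    """Assumed form: B = W / R (bytes per second)."""
    if r_total <= 0:
        raise ValueError("total response time must be positive")
    return workload_bytes / r_total
```

Under these assumptions, any deployment that shortens one of the three components without lengthening the others raises the aggregate bandwidth for the same workload.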

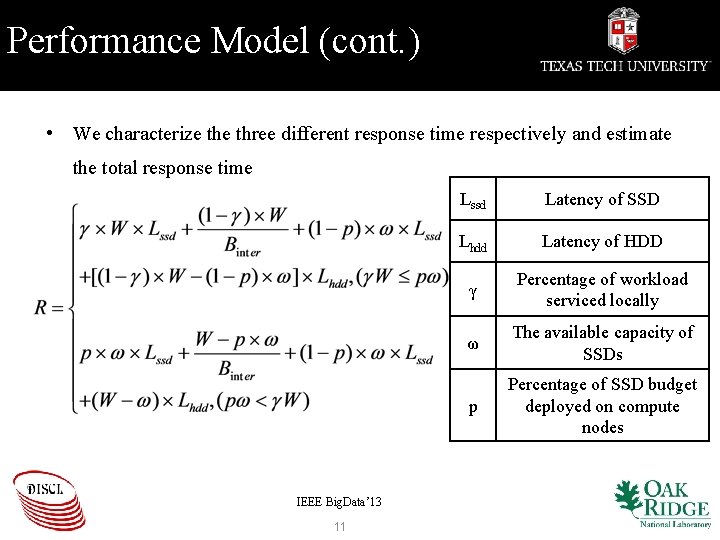

Performance Model (cont.)

- We characterize the three response times separately and then estimate the total response time.

| Symbol | Meaning |
| --- | --- |
| $L_{ssd}$ | Latency of SSD |
| $L_{hdd}$ | Latency of HDD |
| $\gamma$ | Percentage of workload serviced locally |
| $\omega$ | The available capacity of SSDs |
| $p$ | Percentage of SSD budget deployed on compute nodes |

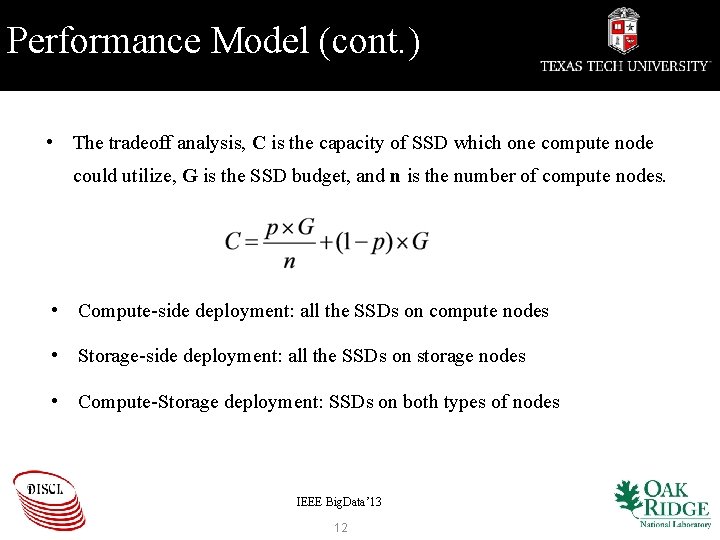

Performance Model (cont.)

- Tradeoff analysis: $C$ is the SSD capacity that one compute node can utilize, $G$ is the SSD budget, and $n$ is the number of compute nodes.
- Compute-side deployment: all SSDs on compute nodes
- Storage-side deployment: all SSDs on storage nodes
- Compute-storage deployment: SSDs on both types of nodes
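The three strategies can be read as settings of $p$, the share of the SSD budget placed on compute nodes. The sketch below assumes $G$ is expressed as total SSD capacity and that the compute-side share is divided evenly across the $n$ compute nodes, so $C = pG/n$. That even split is an assumption, since the slide's own formula is in an image; the function names are hypothetical.

```python
from typing import Tuple


def strategy_name(p: float) -> str:
    """Classify a deployment by p, the fraction of SSD budget on compute nodes."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if p == 1.0:
        return "compute-side"      # all SSDs on compute nodes
    if p == 0.0:
        return "storage-side"      # all SSDs on storage nodes
    return "compute-storage"       # SSDs on both types of nodes


def split_budget(g: float, p: float, n: int) -> Tuple[float, float]:
    """Return (C, storage_side_capacity) under the assumed even split C = p * G / n."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if n <= 0:
        raise ValueError("n must be positive")
    compute_total = p * g
    return compute_total / n, g - compute_total
```

For example, `split_budget(1000.0, 0.25, 10)` places a quarter of the capacity on compute nodes, which gives each of the 10 nodes 25.0 units and leaves 750.0 units on the storage side.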

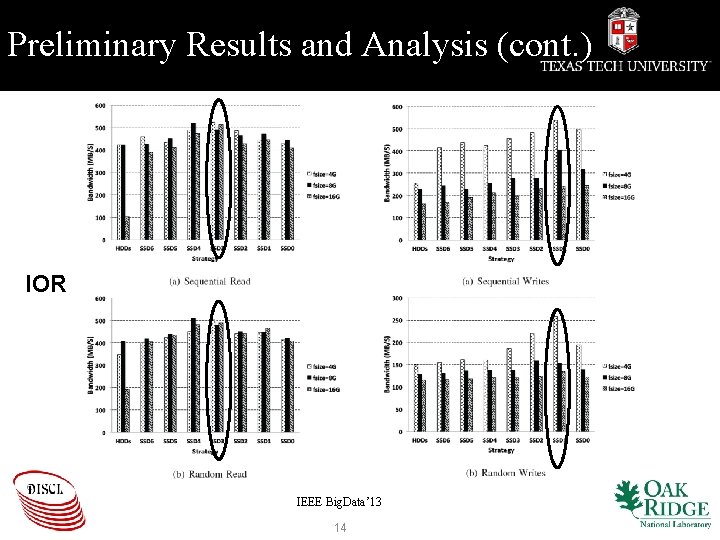

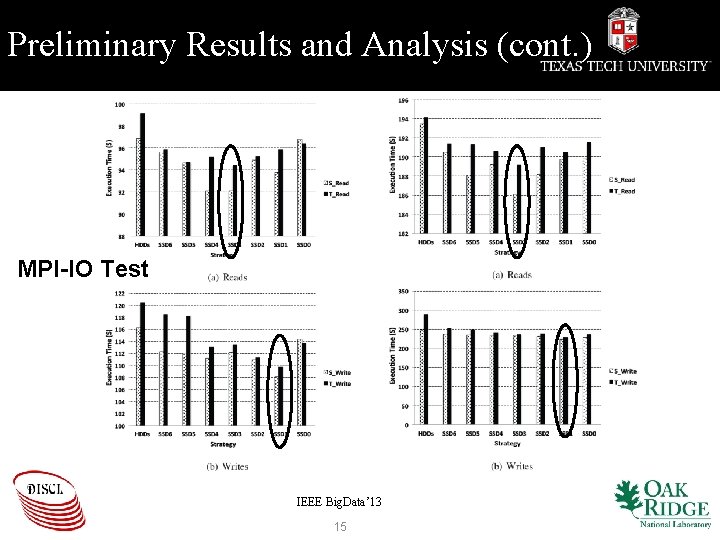

Preliminary Results and Analysis

- IOR
  - Tested the aggregate bandwidth/execution time
  - Varied the file size and tested sequential read and write and random read and write performance
- MPI-IO Test
  - Tested the aggregate bandwidth/execution time
  - With different file sizes and operation types (sequential read and write, random read and write)
- Both benchmarks were run with ratio γ = ¼.

Preliminary Results and Analysis (cont.): IOR

Preliminary Results and Analysis (cont.): MPI-IO Test

Conclusion

- Flash-memory based SSDs are promising storage devices in the storage hierarchy of HPC systems.
- Different SSD deployment strategies can affect performance under a fixed hardware budget.
- We proposed a pattern-model guided approach
  - Models the performance impact of various deployment strategies
  - Considers workload characterization and device characteristics
  - Maps to a deployment strategy
- This study provides a possible solution for guiding such placement and deployment strategies.

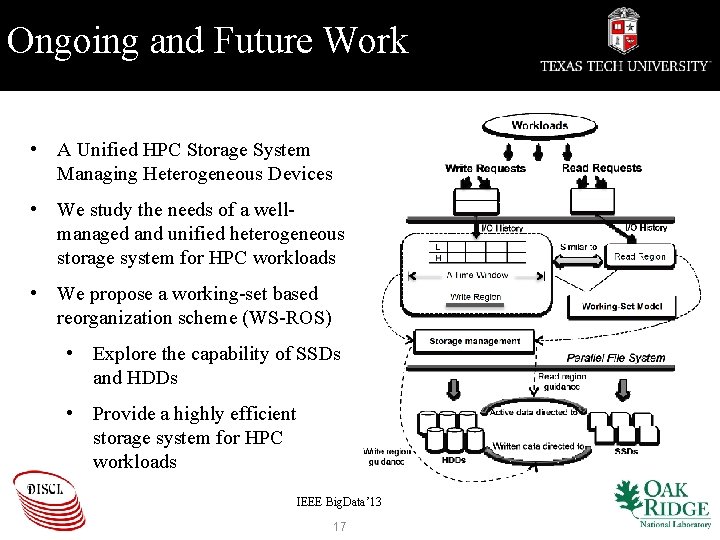

Ongoing and Future Work

- A Unified HPC Storage System Managing Heterogeneous Devices
  - We study the need for a well-managed, unified heterogeneous storage system for HPC workloads
  - We propose a working-set based reorganization scheme (WS-ROS)
  - Explore the capabilities of SSDs and HDDs
  - Provide a highly efficient storage system for HPC workloads

Q&A

Thank You

Please visit our website: http://discl.cs.ttu.edu

ACKNOWLEDGEMENT: This research is sponsored in part by the Advanced Scientific Computing Research program, Office of Science, U.S. Department of Energy. This research is also sponsored in part by a Texas Tech University startup grant and the National Science Foundation under NSF grant CNS-1162488. The work was performed in part at the Oak Ridge National Laboratory, which is managed by UT-Battelle, LLC under Contract No. DE-AC05-00OR22725. Accordingly, the U.S. Government retains a non-exclusive, royalty-free license to publish or reproduce the published form of this contribution, or allow others to do so, for U.S. Government purposes.
