Using Natural Language Program Analysis to Locate and Understand Action-Oriented Concerns

- Slides: 27

Using Natural Language Program Analysis to Locate and Understand Action-Oriented Concerns • David Shepherd, Zachary P. Fry, Emily Hill, Lori Pollock, and K. Vijay-Shanker • Presented by: Paul Heintzelman

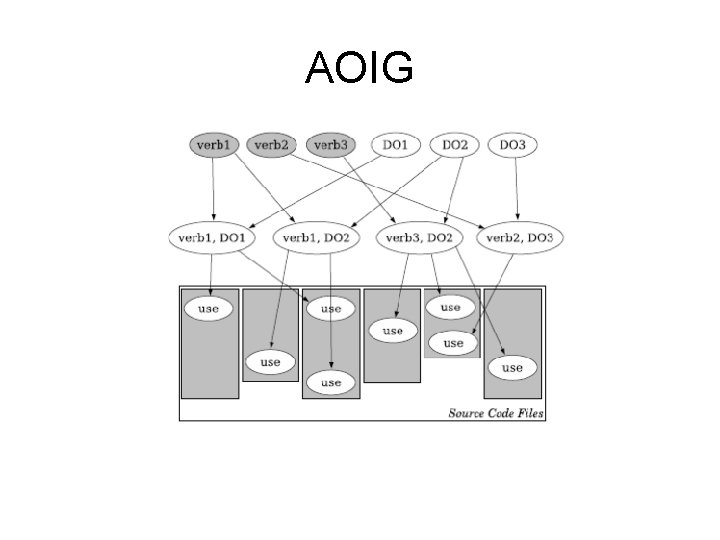

Global Concepts • Concept assignment problem • Hybrid of structural and natural language information • Concern comprehension • Action-oriented relations between identifiers – Represented by the Action-Oriented Identifier Graph (AOIG) model

Why Action-Oriented Concerns? • In OOP – Code is organized around objects • Objects correspond to nouns • Objects and actions conflict – Organizing code by objects scatters actions across many classes • Therefore, in OOP, action-oriented concerns tend to be scattered and harder to locate

Paper Contributions • AOIG – Interactive query expansion algorithm – Result graph construction algorithm – An Eclipse plug-in • Evaluation – Comparison of the tools' search effectiveness – Per-task analysis – Comparison of user effort

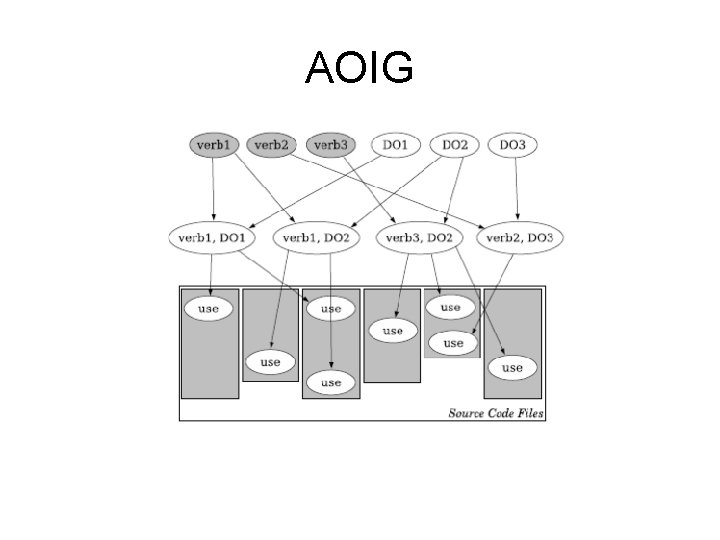

AOIG
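As a rough illustration of the AOIG, here is a minimal Python sketch. It assumes a simplified structure with four kinds of nodes: verbs, direct objects, verb-direct object pairs, and "uses" (here just the names of methods in which a pair occurs). The class `AOIG`, its methods, and the example method names are hypothetical and do not represent the paper's implementation.

```python
from collections import defaultdict


class AOIG:
    """Hypothetical, simplified action-oriented identifier graph.

    Assumed node kinds: verbs, direct objects, (verb, direct object) pairs,
    and uses (here, just the names of methods in which a pair occurs).
    """

    def __init__(self):
        # verb -> pairs containing that verb
        self.verb_to_pairs = defaultdict(set)
        # direct object -> pairs containing that direct object
        self.object_to_pairs = defaultdict(set)
        # (verb, direct object) pair -> methods in which the pair is used
        self.pair_to_uses = defaultdict(set)

    def add_use(self, verb, direct_object, method):
        """Record that `method` performs the action `verb direct_object`."""
        pair = (verb, direct_object)
        self.verb_to_pairs[verb].add(pair)
        self.object_to_pairs[direct_object].add(pair)
        self.pair_to_uses[pair].add(method)

    def uses_of(self, verbs, direct_objects):
        """Methods whose pair has a verb in `verbs` AND an object in `direct_objects`."""
        result = set()
        for verb in verbs:
            for pair in self.verb_to_pairs.get(verb, ()):
                if pair[1] in direct_objects:
                    result |= self.pair_to_uses[pair]
        return result


# Hypothetical example data
g = AOIG()
g.add_use("complete", "word", "CompletionEngine.completeWord")
g.add_use("finish", "word", "Editor.finishWord")
g.add_use("complete", "line", "Editor.completeLine")
print(sorted(g.uses_of({"complete", "finish"}, {"word"})))
# ['CompletionEngine.completeWord', 'Editor.finishWord']
```

Routing a lookup through pair nodes means that a verb and an object that only ever appear separately, for example in unrelated methods, do not produce a match. A purely lexical search would not make this distinction.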

State of the Art • Search-based approaches – Lexical searches • Tend to return over-general results – Information retrieval • Does not separate verbs from objects • Relies on word frequency • Program navigation – Uses structural information, e.g., call and inheritance graphs – Accurate but difficult to seed • Dynamic approaches – Require a test case that exercises the concept

Challenges • Mapping high-level concepts to queries – Aid the user in mapping concepts • Searching with both high precision and high recall – Search an NLP-based representation of the concern • Understanding large result sets – Return results as an explorable graph

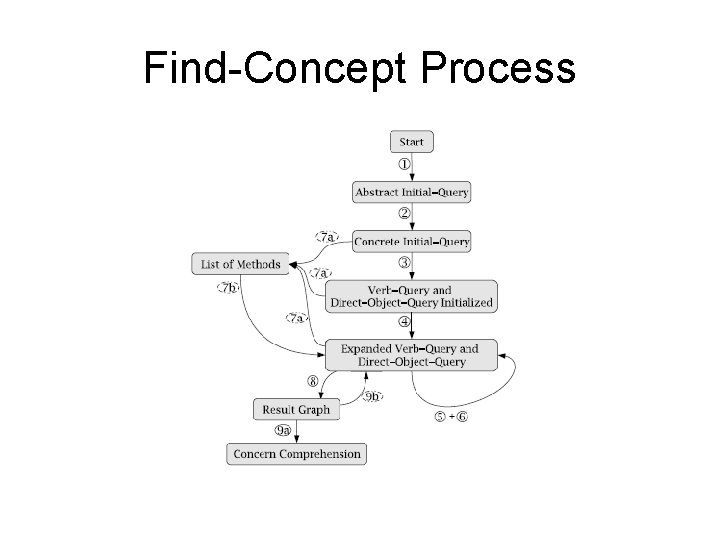

Overview of Approach • User formulates a query – The query must be expressed as verb-direct object pairs • User expands the query – Recommendations are based on the query words and the source code • Find-Concept searches the AOIG – User interacts with the result graph

Independent Variables • Search tools – Find-Concept – ELex: built-in Eclipse search – GES: Google Eclipse Search (modified) • Search tasks – Application-concept pairs • Human subjects – 13 professional programmers – 5 graduate students

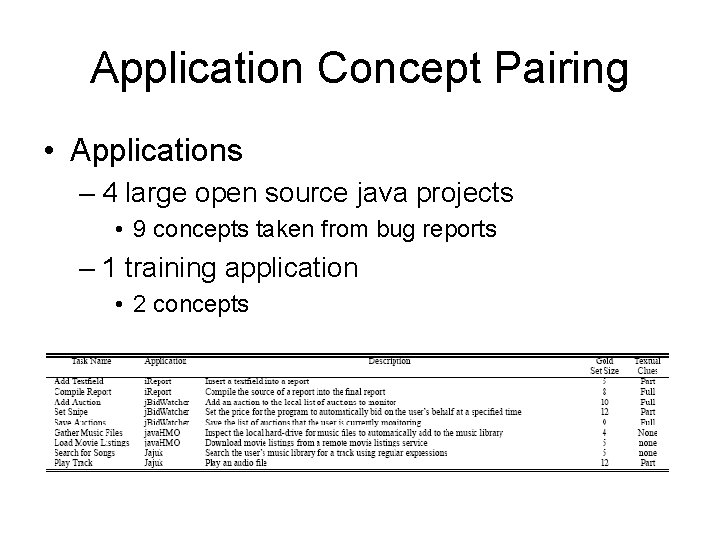

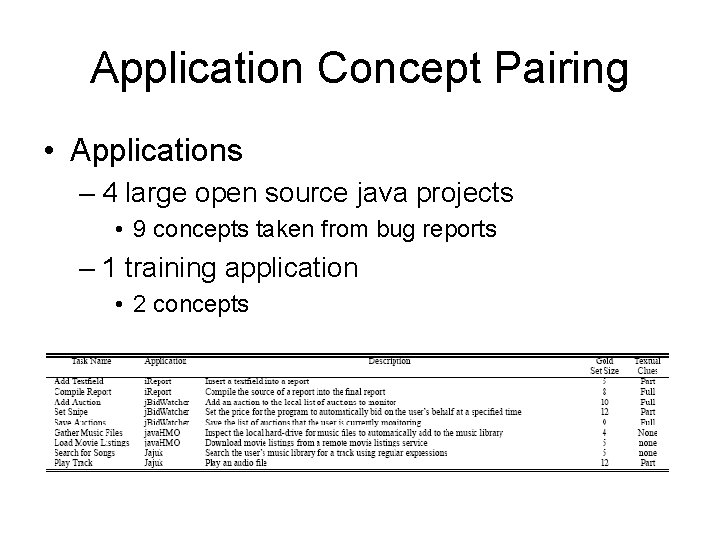

Application-Concept Pairing • Applications – 4 large open-source Java projects • 9 concepts taken from bug reports – 1 training application • 2 training concepts

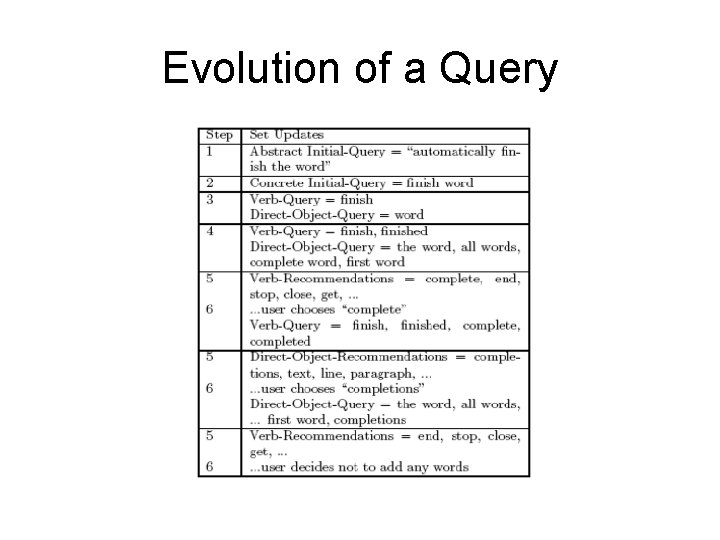

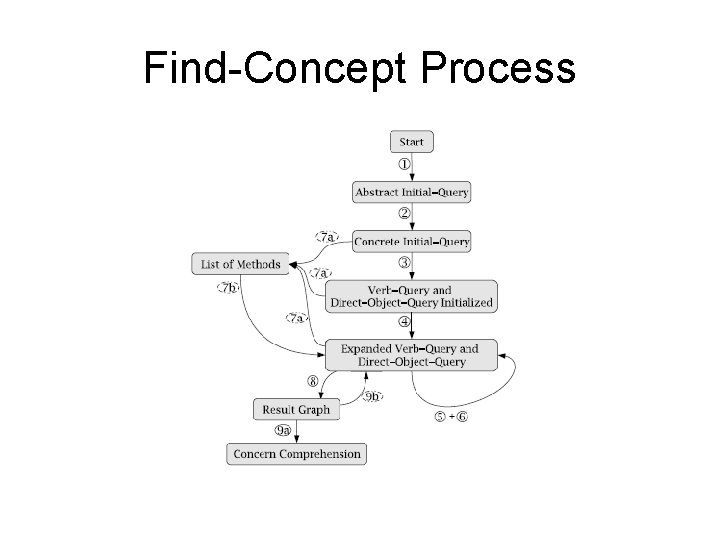

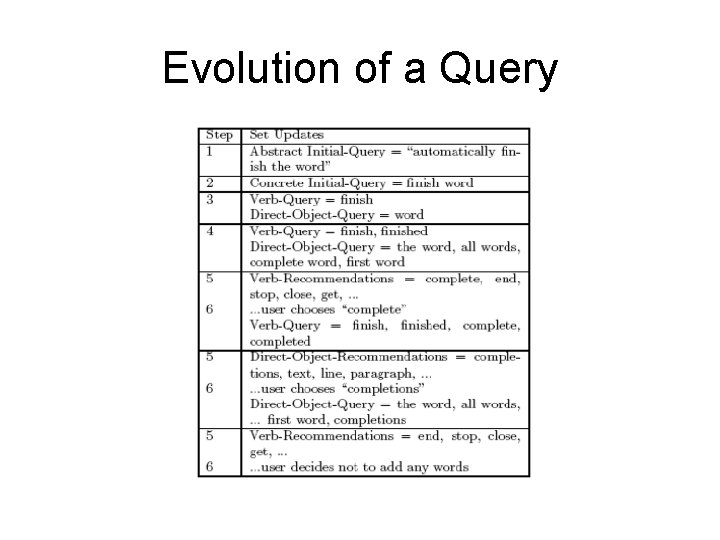

Forming the Initial Query • User generates an abstract initial query – e.g., “automatically finish the word” • User decomposes the abstract query into verb-direct object pairs – e.g., “finish” and “word” • Find-Concept maintains both a verb query and a direct object query • Initial query expansion – User is presented with alternative forms of the words in both queries
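Keeping verbs and direct objects in two separate queries suggests that a query covers every combination of its verbs and objects. The slides do not spell out the matching rule. Under that assumption, the verb-direct object pairs being searched for form a Cartesian product. `searched_pairs` is a hypothetical helper used only for illustration.

```python
from itertools import product


def searched_pairs(verb_query, object_query):
    """Hypothetical: all (verb, direct object) pairs implied by the two queries."""
    # Sorting makes the output order deterministic for display.
    return sorted(product(sorted(verb_query), sorted(object_query)))


pairs = searched_pairs({"finish", "complete"}, {"word"})
print(pairs)  # [('complete', 'word'), ('finish', 'word')]
```

With this representation, adding one word to either query enlarges the search by a whole row or column of pairs rather than by a single phrase.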

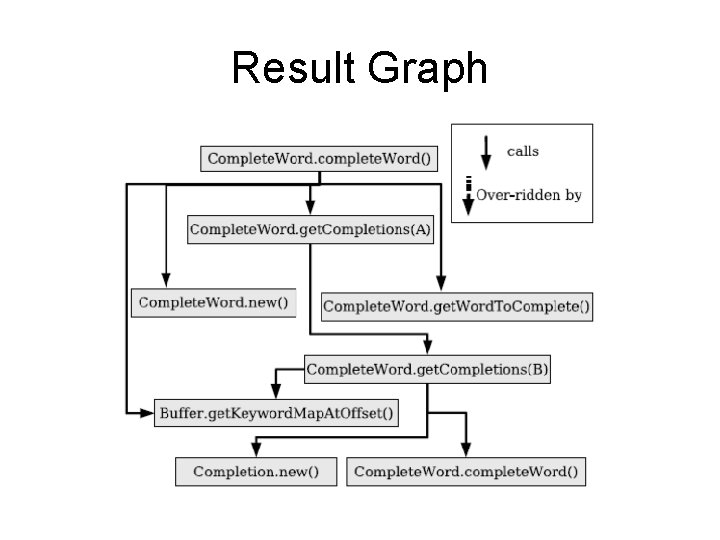

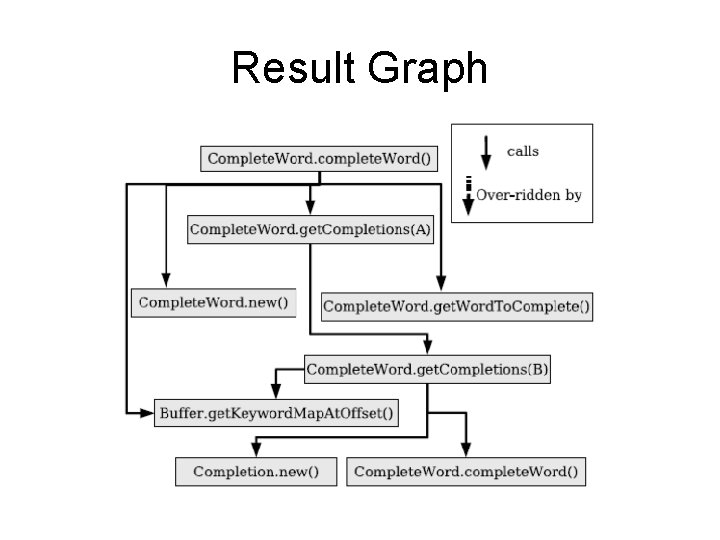

Query Expansion • Iterative steps – Generate a recommended list • Words with similar semantics are weighted more heavily than words with similar use • 10 ranked recommendations – User examines the recommendations • User selects words to add to the queries • User can view a list of methods matching the current queries • Stop when the user is satisfied – Augment the user's query with get, set, execute, and construct • Use the AOIG to map verb-direct object pairs to source code – Generate the result graph
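The non-interactive parts of the expansion step can be sketched as follows: rank candidate words so that similar-semantics evidence outweighs similar-use evidence, keep the top 10, and add the generic verbs once the user stops. The numeric weights, the evidence-count inputs, and the choice to add the generic verbs to the verb query are all assumptions. The slides say only that semantics is weighted more heavily than use. The user's selection loop is not modeled.

```python
# Hypothetical weights: the slides only say semantics counts more than use.
SEMANTIC_WEIGHT = 2.0
USE_WEIGHT = 1.0
MAX_RECOMMENDATIONS = 10
# Generic verbs added once the user stops expanding (from the slides).
GENERIC_VERBS = ("get", "set", "execute", "construct")


def rank_recommendations(semantic_hits, use_hits, already_in_query):
    """Rank candidate words; each *_hits dict maps word -> evidence count."""
    scores = {}
    for word, count in semantic_hits.items():
        scores[word] = scores.get(word, 0.0) + SEMANTIC_WEIGHT * count
    for word, count in use_hits.items():
        scores[word] = scores.get(word, 0.0) + USE_WEIGHT * count
    candidates = [w for w in scores if w not in already_in_query]
    # Highest score first; ties broken alphabetically for determinism.
    candidates.sort(key=lambda w: (-scores[w], w))
    return candidates[:MAX_RECOMMENDATIONS]


def finalize_verb_query(verb_query):
    """Assumed: the generic verbs are added to the verb query at the end."""
    return set(verb_query) | set(GENERIC_VERBS)


recs = rank_recommendations(
    semantic_hits={"complete": 1, "finished": 1},
    use_hits={"word": 2, "complete": 1},
    already_in_query={"finish"},
)
print(recs)  # ['complete', 'finished', 'word']
```

Under these assumed weights, a word that is both a synonym and a neighbor ("complete") ranks first. A word supported only by use evidence ("word") needs twice as many hits to tie with a single semantic hit.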

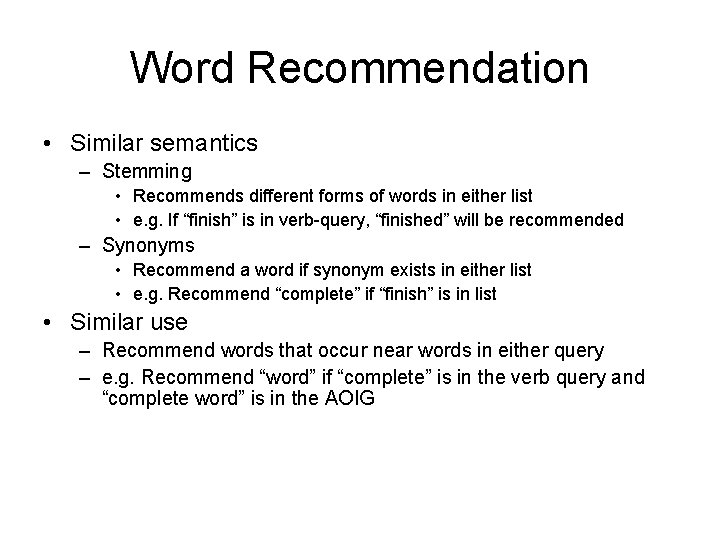

Word Recommendation • Similar semantics – Stemming • Recommends other forms of words in either list • e.g., if “finish” is in the verb query, “finished” is recommended – Synonyms • Recommends a word if one of its synonyms is in either list • e.g., recommends “complete” if “finish” is in a list • Similar use – Recommends words that occur near words in either query – e.g., recommends “word” if “complete” is in the verb query and “complete word” occurs in the AOIG
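The three recommendation sources can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the tool's algorithm. The suffix-stripping `naive_stem` stands in for a real stemmer such as Porter's. The `SYNONYMS` table stands in for a lexical database. The `vocabulary` and `aoig_pairs` inputs are hypothetical stand-ins for the words and pairs extracted from the source code.

```python
# Hypothetical synonym table; a real tool would use a lexical database.
SYNONYMS = {
    "finish": {"complete", "end"},
    "complete": {"finish"},
}
SUFFIXES = ("ing", "ed", "es", "s")


def naive_stem(word):
    """Very rough suffix stripper (an assumption; not the Porter stemmer)."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def recommend(verb_query, object_query, vocabulary, aoig_pairs):
    """Return (similar-semantics, similar-use) recommendations not yet queried."""
    queried = set(verb_query) | set(object_query)
    stems = {naive_stem(w) for w in queried}

    # Stemming: other forms of a queried word that occur in the code.
    semantic = {w for w in vocabulary if naive_stem(w) in stems}
    # Synonyms of any queried word.
    for w in queried:
        semantic |= SYNONYMS.get(w, set())

    # Similar use: words paired with a queried word somewhere in the AOIG.
    use = set()
    for verb, obj in aoig_pairs:
        if verb in verb_query:
            use.add(obj)
        if obj in object_query:
            use.add(verb)

    return semantic - queried, use - queried


semantic, use = recommend(
    verb_query={"finish", "complete"},
    object_query=set(),
    vocabulary={"finished", "finishing", "word"},
    aoig_pairs={("complete", "word"), ("draw", "line")},
)
print(sorted(semantic), sorted(use))  # ['end', 'finished', 'finishing'] ['word']
```

The two result sets are kept separate so that, as in the ranking step, semantic matches can be weighted above use matches.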

Evolution of a Query

Result Graph

Find-Concept Process

Research Questions • Which search tool is most effective at locating concerns by forming and executing a query? • Which search tool requires the least amount of human effort to form an effective query?

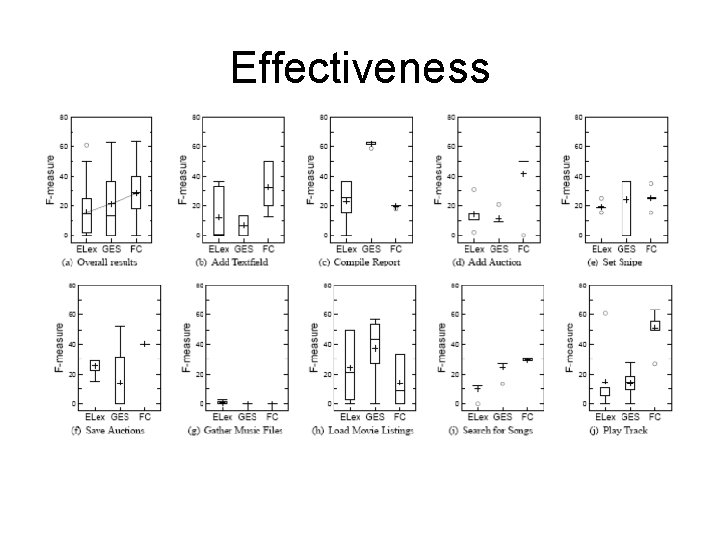

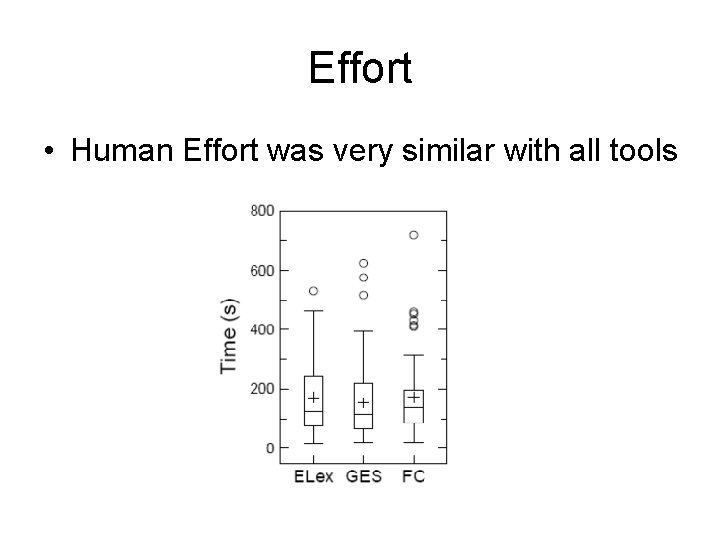

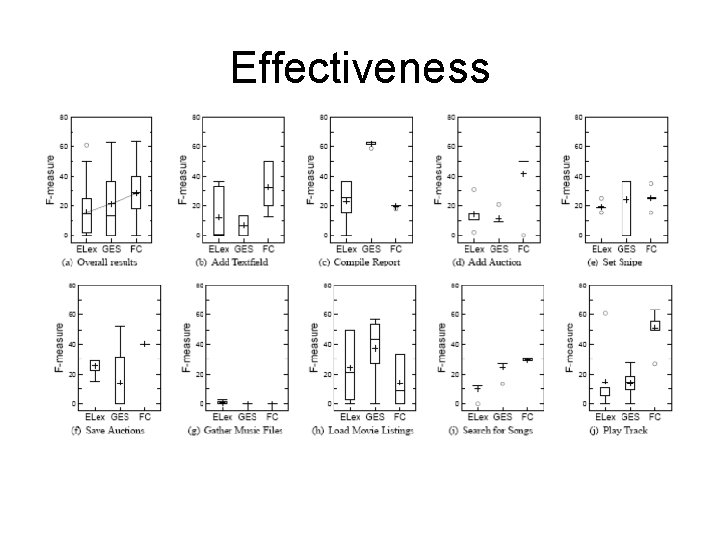

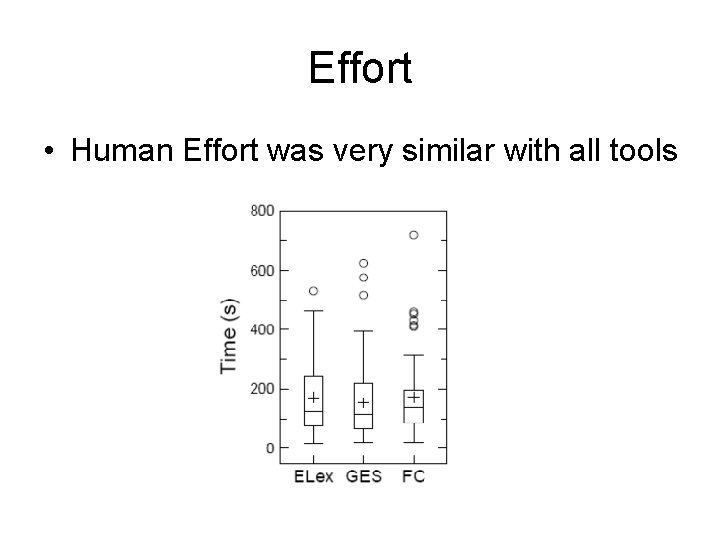

Evaluation • Effectiveness – Measured with the f-measure, the harmonic mean of precision and recall • F = (2 × precision × recall) / (precision + recall) – Each tool's result set is compared to the evaluation set • 90% of the evaluation set was generated by a member unfamiliar with this work • Effort – Measured as the time required to form each query
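To make the effectiveness measure concrete, here is a small sketch that computes precision, recall, and the f-measure for one result set against an evaluation set. The function name and the example method names are hypothetical. Returning 0.0 when nothing relevant is found is a convention assumed here to avoid division by zero.

```python
def f_measure(result_set, evaluation_set):
    """Harmonic mean of precision and recall for a result set vs. an evaluation set."""
    result_set, evaluation_set = set(result_set), set(evaluation_set)
    relevant_found = len(result_set & evaluation_set)
    if relevant_found == 0:
        return 0.0  # assumed convention; also avoids dividing by zero
    precision = relevant_found / len(result_set)    # share of results that are relevant
    recall = relevant_found / len(evaluation_set)   # share of relevant items found
    return 2 * precision * recall / (precision + recall)


# Hypothetical methods: 2 of 4 results are relevant; 2 of 3 relevant methods found.
score = f_measure({"a.m1", "a.m2", "b.m3", "c.m4"}, {"a.m1", "a.m2", "d.m5"})
print(round(score, 3))  # 0.571
```

Here precision is 1/2 and recall is 2/3, so F = (2/3) / (7/6) = 4/7. Because the harmonic mean is pulled toward the smaller of the two values, a tool cannot score well by maximizing recall with a huge result set.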

Experimental Setup • Training – Subjects are guided through using each tool on the two training tasks • Task setup – Users are presented with concepts in visual form – Users confirm that they understand each task

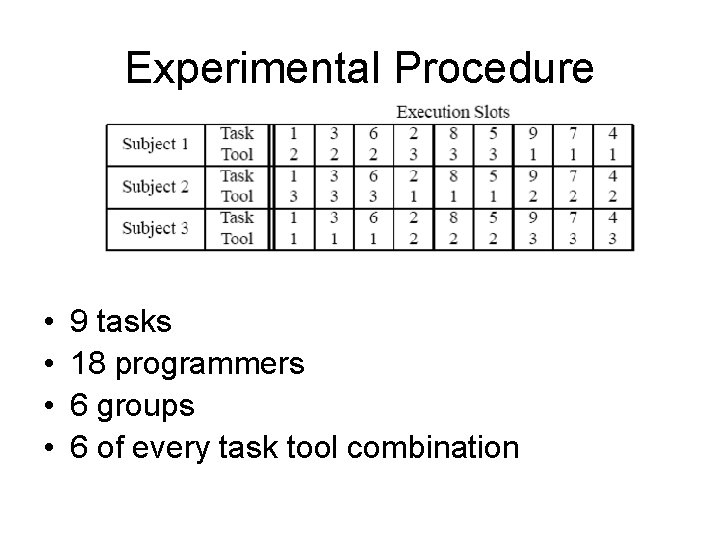

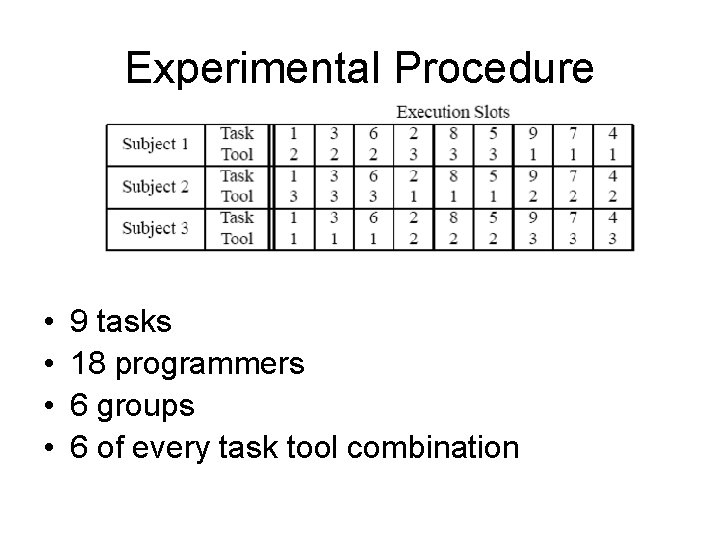

Experimental Procedure • 9 tasks • 18 programmers • 6 groups • 6 of every task-tool combination
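The counts above are mutually consistent if, as assumed here, every programmer performs all 9 tasks, each task with one of the 3 tools, and the 6 groups are equal in size:

```latex
\begin{aligned}
\text{task executions} &= 18 \text{ programmers} \times 9 \text{ tasks} = 162 \\
\text{task-tool combinations} &= 9 \text{ tasks} \times 3 \text{ tools} = 27 \\
\text{executions per combination} &= 162 / 27 = 6 \\
\text{programmers per group} &= 18 / 6 = 3
\end{aligned}
```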

Results • Find-Concept vs. ELex – Consistently outperformed ELex • Find-Concept vs. GES – Outperformed GES on 4 tasks – Performed equally to GES on 3 tasks – Outperformed by GES on 2 tasks • AOIG to blame?

Effectiveness

Effort • Human effort was very similar across all tools

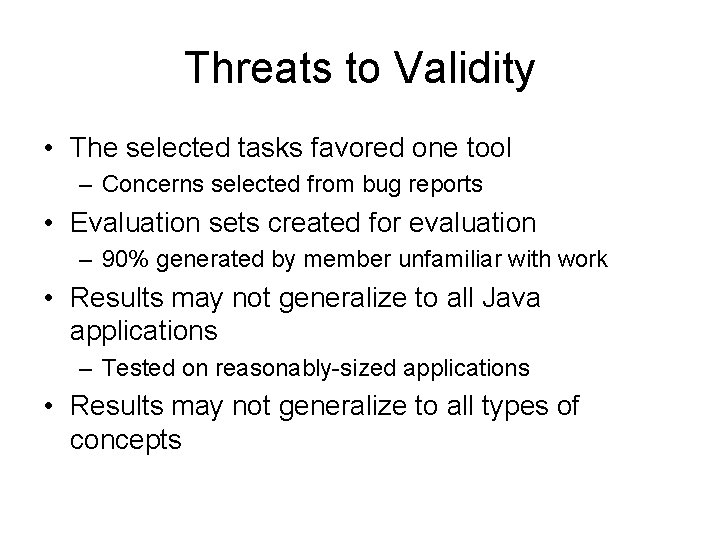

Threats to Validity • The selected tasks may favor one tool – Concerns were selected from bug reports • Evaluation sets were created specifically for this study – 90% generated by a member unfamiliar with the work • Results may not generalize to all Java applications – Tested on reasonably sized applications • Results may not generalize to all types of concepts

Conclusion • Interactive query expansion algorithm • Result graph construction algorithm • Find-Concept performs well against state-of-the-art tools • All evaluated tools required similar human effort

Future Work • Create a more effective AOIGBuilder • Evaluate the effect of an application's quality and size on results • Evaluate the effect of incorporating naming conventions • Study how many tasks focus on actions • Automate query expansion

Additional Threats to Validity • Effort and effectiveness are not truly independent • Relies heavily on an unjustified heuristic – Augmenting the query • Search tools are often used in conjunction with structural tools