Using Historic Patterns in Both Cache Prefetching and Cache Replacement
Jim Gast & Laura Spencer
CS 703, Spring 2000

Motivation
• Disk I/O is slow and not getting faster
  – 10 milliseconds for an unexpected open
• Memory is cheap and getting cheaper
  – Cache more, read ahead, stay ahead
• LRU is a lousy algorithm
• Program modification is hard
• History is (comparatively) easy

New metadata per file
• Uses (actual opens from actual programs)
• Cache-behind successes
• Prefetch successes, prefetch tries
• Prefetch failures
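A minimal sketch of the per-file record, assuming each counter is a plain integer stored with the file. The class name `FileHistory`, the field names, and the derived `prefetch_accuracy` ratio are hypothetical illustrations rather than the authors' data layout, and reading "cache-behind success" as a reopen served from cache is an assumption.

```python
from dataclasses import dataclass


@dataclass
class FileHistory:
    """Hypothetical per-file counters mirroring the slide's list."""
    uses: int = 0                    # actual opens from actual programs
    cache_behind_successes: int = 0  # assumed: reopens served because the file stayed cached
    prefetch_tries: int = 0          # prefetches issued for this file
    prefetch_successes: int = 0      # prefetched, then actually opened
    prefetch_failures: int = 0       # prefetched, then evicted without an open

    def prefetch_accuracy(self) -> float:
        """Hypothetical derived metric: fraction of prefetch tries that paid off."""
        if self.prefetch_tries == 0:
            return 0.0
        return self.prefetch_successes / self.prefetch_tries
```

For example, a file with 4 prefetch tries and 3 prefetch successes would report an accuracy of 0.75.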

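Store history in triads
• Historically, file E is typically followed by
  – file F after interval $I_{E \to F}$ with bias $B_{E \to F}$
• And E, F is often followed by
  – file G after interval $I_{EF \to G}$ with bias $B_{EF \to G}$
• Bias is the conditional probability; chaining two biases gives the probability that E is followed by F and then G
  – $P(F, G \mid E) = P(F \mid E)\,P(G \mid E, F) = B_{E \to F}\,B_{EF \to G}$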
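The triad bookkeeping can be sketched as follows, assuming the history is learned from a list of (timestamp, filename) opens. `TriadHistory` and its methods are hypothetical names; each interval is measured from the previous open to the predicted one, and each bias is a simple ratio of counts.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class TriadHistory:
    """Hypothetical sketch: learn E->F pairs and (E, F)->G triads from an open trace."""

    def __init__(self) -> None:
        self.opens: Dict[str, int] = defaultdict(int)
        self.pairs: Dict[Tuple[str, str], int] = defaultdict(int)
        self.triads: Dict[Tuple[str, str, str], int] = defaultdict(int)
        self.pair_time: Dict[Tuple[str, str], float] = defaultdict(float)
        self.triad_time: Dict[Tuple[str, str, str], float] = defaultdict(float)

    def record(self, trace: List[Tuple[float, str]]) -> None:
        """Count every open, every adjacent pair, and every adjacent triad."""
        for i, (t, name) in enumerate(trace):
            self.opens[name] += 1
            if i >= 1:
                t_prev, prev = trace[i - 1]
                self.pairs[(prev, name)] += 1
                self.pair_time[(prev, name)] += t - t_prev   # one sample of I_{E->F}
            if i >= 2:
                _, first = trace[i - 2]
                t_prev, prev = trace[i - 1]
                key = (first, prev, name)
                self.triads[key] += 1
                self.triad_time[key] += t - t_prev           # one sample of I_{EF->G}

    def bias_pair(self, e: str, f: str) -> float:
        """B_{E->F}: fraction of opens of E that were followed by F."""
        n = self.opens.get(e, 0)
        return self.pairs.get((e, f), 0) / n if n else 0.0

    def bias_triad(self, e: str, f: str, g: str) -> float:
        """B_{EF->G}: fraction of E, F sequences that were followed by G."""
        n = self.pairs.get((e, f), 0)
        return self.triads.get((e, f, g), 0) / n if n else 0.0

    def interval_pair(self, e: str, f: str) -> float:
        """Mean seconds from an open of E to the following open of F."""
        n = self.pairs.get((e, f), 0)
        return self.pair_time.get((e, f), 0.0) / n if n else 0.0

    def chained_bias(self, e: str, f: str, g: str) -> float:
        """P(F, G | E) = B_{E->F} * B_{EF->G}."""
        return self.bias_pair(e, f) * self.bias_triad(e, f, g)


# Toy trace (made-up timestamps): E is opened three times, twice followed by F.
h = TriadHistory()
h.record([(0.00, "E"), (0.01, "F"), (0.02, "G"),
          (1.00, "E"), (1.01, "F"), (1.03, "H"),
          (2.00, "E"), (2.02, "X")])
print(h.bias_pair("E", "F"))           # 2 of 3 opens of E: about 0.667
print(h.chained_bias("E", "F", "G"))   # (2/3) * (1/2): about 0.333
```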

Simplifying Assumptions
• Every file is just one page
• Unique file name implies unique disk address
• Ignore writes
• Any file open takes 10 milliseconds
  – No disk contention

Prediction Algorithms
• NONE
• Kroeger & Long
  – 3rd-order trie
  – We added expected request time
• Ours
  – 5th-order trie simulated using recursion on the 3rd-order trie
  – Including expected request time

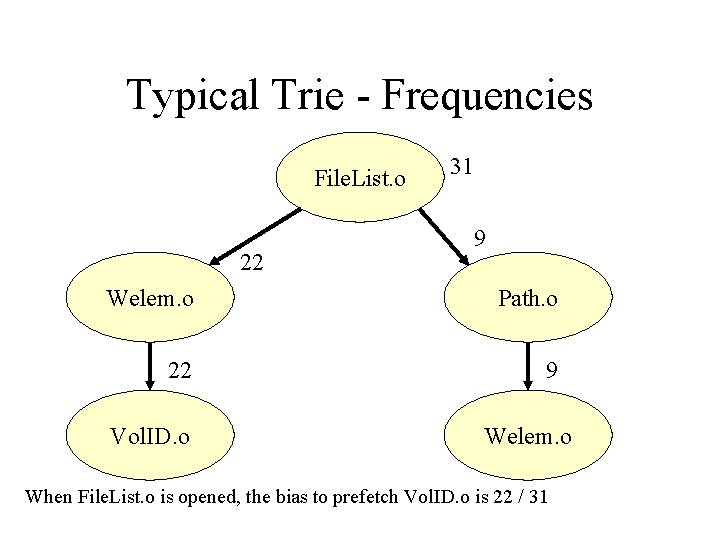

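Typical Trie - Frequencies
[Figure: trie rooted at FileList.o (31 opens); FileList.o is followed by Welem.o (22) and Path.o (9); Welem.o is then followed by VolID.o (22), and Path.o by Welem.o (9)]
When FileList.o is opened, the bias to prefetch VolID.o is 22/31 (about 71%).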

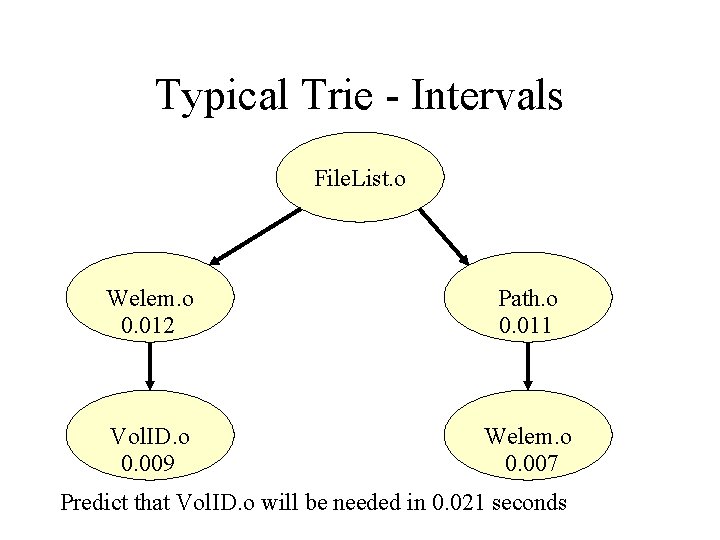

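Typical Trie - Intervals
[Figure: trie rooted at FileList.o labelled with intervals in seconds: FileList.o to Welem.o 0.012, FileList.o to Path.o 0.011, Welem.o to VolID.o 0.009, Path.o to Welem.o 0.007]
Predict that VolID.o will be needed in 0.021 seconds (0.012 + 0.009).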
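The two trie slides can be combined in one small sketch that returns both the bias and the expected request time for a file one or two opens ahead. It assumes each node stores its count plus the mean interval since its parent's open, and that expected time is the sum of intervals along the path; `Node` and `predict` are hypothetical names, while the counts and intervals are the slides' numbers.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    count: int              # times this path through the trie was observed
    interval: float = 0.0   # assumed: mean seconds since the parent file's open
    children: Dict[str, "Node"] = field(default_factory=dict)


# Counts and intervals copied from the two "Typical Trie" slides.
file_list = Node(31, 0.0, {
    "Welem.o": Node(22, 0.012, {"VolID.o": Node(22, 0.009)}),
    "Path.o": Node(9, 0.011, {"Welem.o": Node(9, 0.007)}),
})


def predict(root: Node, target: str) -> Optional[Tuple[float, float]]:
    """Highest-bias (bias, expected seconds until open) for target, 1 or 2 opens ahead."""
    options: List[Tuple[float, float]] = []
    for name, child in root.children.items():
        bias = child.count / root.count
        if name == target:
            options.append((bias, child.interval))
        grandchild = child.children.get(target)
        if grandchild is not None:
            options.append((bias * grandchild.count / child.count,
                            child.interval + grandchild.interval))
    return max(options, key=lambda o: o[0]) if options else None


bias, delay = predict(file_list, "VolID.o")
print(f"bias {bias:.2f}, needed in {delay:.3f} s")   # bias 0.71, needed in 0.021 s
```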

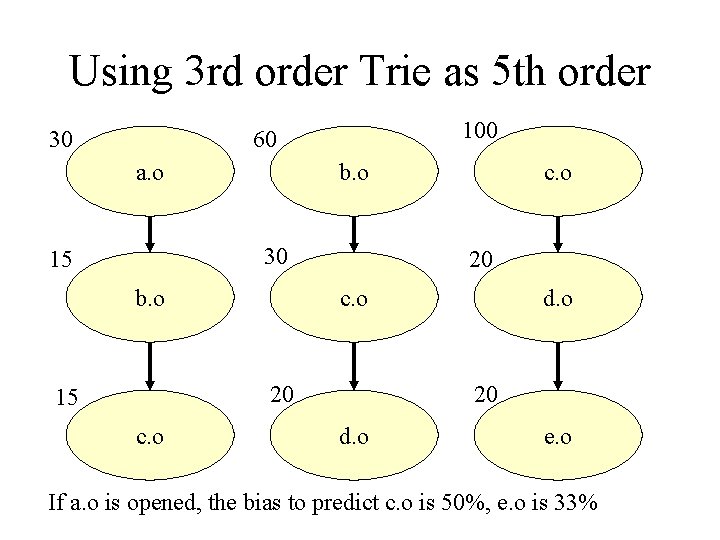

Using 3rd-Order Trie as 5th-Order
[Figure: third-order trie over a.o, b.o, c.o, d.o, and e.o, with root counts a.o 30, b.o 60, c.o 100]
If a.o is opened, the bias to predict c.o is 50% and the bias to predict e.o is 33%.

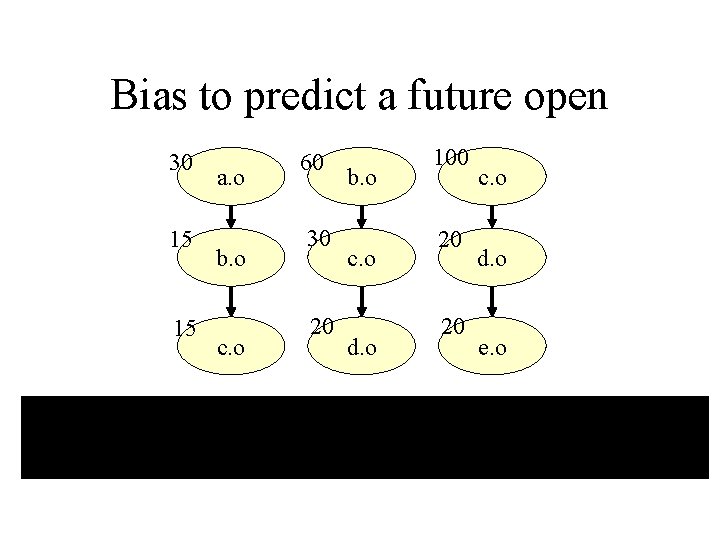

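Bias to predict a future open

| File | Count | Next | Count | Then | Count |
|---|---|---|---|---|---|
| a.o | 30 | b.o | 15 | c.o | 15 |
| b.o | 60 | c.o | 30 | d.o | 20 |
| c.o | 100 | d.o | 20 | e.o | 20 |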
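The recursion that stretches the 3rd-order trie to 5th order can be sketched by sliding a two-file context forward and multiplying biases: from a.o, (15/30)(15/15) = 50% for c.o, then 50% x (20/30) = 33% for d.o, then 33% x (20/20) = 33% for e.o. This is a sketch under assumptions: the trie is flattened into a hypothetical path-to-count dictionary, `children` and `predict` are illustrative names, and when two paths reach the same file the larger bias is kept.

```python
from typing import Dict, Iterator, Tuple

# Third-order trie flattened into path -> count, using the table's numbers.
COUNTS: Dict[Tuple[str, ...], int] = {
    ("a.o",): 30, ("a.o", "b.o"): 15, ("a.o", "b.o", "c.o"): 15,
    ("b.o",): 60, ("b.o", "c.o"): 30, ("b.o", "c.o", "d.o"): 20,
    ("c.o",): 100, ("c.o", "d.o"): 20, ("c.o", "d.o", "e.o"): 20,
}


def children(prefix: Tuple[str, ...]) -> Iterator[Tuple[str, int]]:
    """Files observed right after `prefix`, with how often each followed it."""
    for path, count in COUNTS.items():
        if len(path) == len(prefix) + 1 and path[:-1] == prefix:
            yield path[-1], count


def predict(opened: str, max_depth: int = 4) -> Dict[str, float]:
    """Bias for each file up to max_depth opens after `opened` (5th order when 4)."""
    biases: Dict[str, float] = {}

    def note(name: str, bias: float) -> None:
        # Assumption: if two paths reach the same file, keep the larger bias.
        biases[name] = max(biases.get(name, 0.0), bias)

    def walk(prev: str, cur: str, bias: float, depth: int) -> None:
        # `depth` is how many opens ahead the next predicted file would be.
        context = COUNTS.get((prev, cur))
        if context is None or depth > max_depth:
            return
        for nxt, count in children((prev, cur)):
            chained = bias * count / context     # multiply by B_{prev,cur -> nxt}
            note(nxt, chained)
            walk(cur, nxt, chained, depth + 1)   # slide the 3rd-order window forward

    total = COUNTS.get((opened,), 0)
    for nxt, count in children((opened,)):
        note(nxt, count / total)                 # B_{opened -> nxt}
        walk(opened, nxt, count / total, 2)
    return biases


for name, bias in sorted(predict("a.o").items()):
    print(f"{name}: {bias:.0%}")   # b.o: 50%, c.o: 50%, d.o: 33%, e.o: 33%
```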

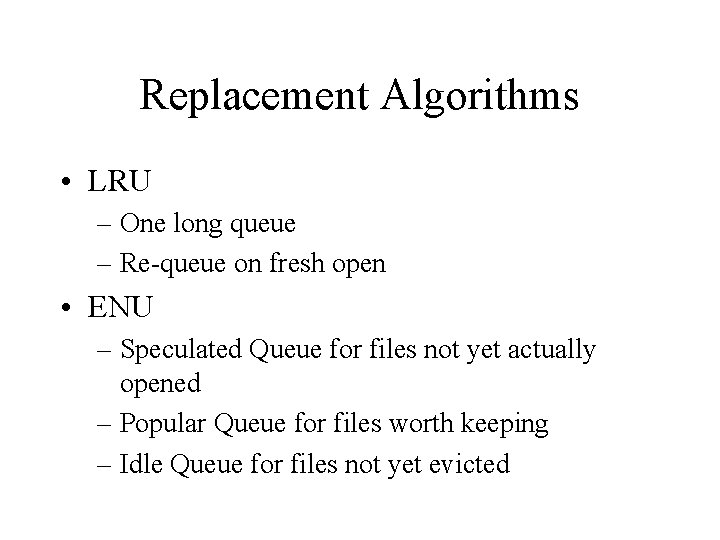

Replacement Algorithms
• LRU
  – One long queue
  – Re-queue on fresh open
• ENU
  – Speculated queue for files not yet actually opened
  – Popular queue for files worth keeping
  – Idle queue for files not yet evicted
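The LRU baseline ("one long queue, re-queue on fresh open") can be sketched with Python's `collections.OrderedDict`; the class name `LRUCache` and the one-page-per-file capacity are assumptions in line with the simplifying assumptions, not the simulator's code.

```python
from collections import OrderedDict
from typing import Optional


class LRUCache:
    """One long queue: a fresh open moves the file to the most-recent end."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity               # in files (one page each)
        self.queue: "OrderedDict[str, None]" = OrderedDict()

    def open(self, name: str) -> Optional[str]:
        """Record an open; return the evicted file name, if any."""
        if name in self.queue:
            self.queue.move_to_end(name)       # re-queue on fresh open
            return None
        self.queue[name] = None
        if len(self.queue) > self.capacity:
            victim, _ = self.queue.popitem(last=False)   # least recently opened
            return victim
        return None
```

For example, with capacity 2, opening a.o, b.o, a.o, then c.o evicts b.o, because reopening a.o re-queued it.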

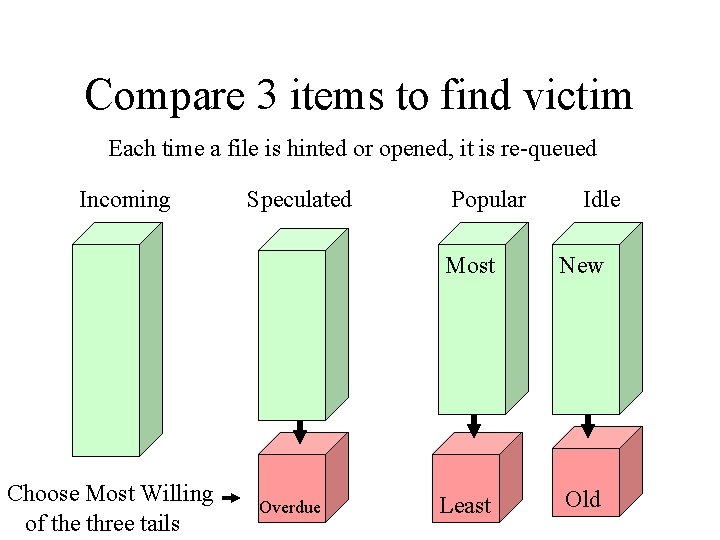

Compare 3 Items to Find Victim
• Each time a file is hinted or opened, it is re-queued
• Choose the most willing of the three tails
[Figure: queues labelled Incoming, Speculated, Popular, and Idle, ordered from Most New to Least Old; label: Overdue]
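Victim selection over the three ENU queues can be sketched as follows. The slides do not give a formula for willingness, so this sketch takes it as a caller-supplied function; `ThreeQueueCache`, `touch`, and `toy_willingness` (older entries more willing, Idle weighted highest) are hypothetical and only illustrate comparing the three tails.

```python
from collections import deque
from typing import Callable, Deque, Dict, Optional, Tuple

Entry = Tuple[str, float]   # (file name, time it was last hinted or opened)


class ThreeQueueCache:
    """Hypothetical Speculated/Popular/Idle cache; willingness is caller-supplied."""

    QUEUES = ("speculated", "popular", "idle")

    def __init__(self, capacity: int,
                 willingness: Callable[[str, Entry, float], float]) -> None:
        self.capacity = capacity
        self.willingness = willingness
        # Left end holds the newest entry; the right end is the tail (oldest).
        self.queues: Dict[str, Deque[Entry]] = {q: deque() for q in self.QUEUES}

    def _forget(self, name: str) -> None:
        for q in self.queues.values():
            for entry in [e for e in q if e[0] == name]:
                q.remove(entry)

    def touch(self, name: str, queue: str, now: float) -> Optional[str]:
        """Re-queue `name` at the new end of `queue`; return the victim if one is evicted."""
        self._forget(name)
        self.queues[queue].appendleft((name, now))
        if sum(len(q) for q in self.queues.values()) > self.capacity:
            return self._evict(now)
        return None

    def _evict(self, now: float) -> str:
        # Only the three tails are compared; the most willing one is evicted.
        tails = [q for q in self.QUEUES if self.queues[q]]
        victim_queue = max(tails, key=lambda q: self.willingness(q, self.queues[q][-1], now))
        return self.queues[victim_queue].pop()[0]


def toy_willingness(queue: str, entry: Entry, now: float) -> float:
    """Made-up score: older entries are more willing; idle most, popular least."""
    weight = {"speculated": 1.0, "popular": 0.5, "idle": 2.0}[queue]
    return weight * (now - entry[1])


cache = ThreeQueueCache(capacity=3, willingness=toy_willingness)
cache.touch("a.o", "popular", now=0.0)
cache.touch("b.o", "idle", now=1.0)
cache.touch("c.o", "speculated", now=2.0)
victim = cache.touch("d.o", "speculated", now=3.0)
print(victim)   # b.o: idle tail scores 2.0 * 2 = 4.0, beating 1.0 and 1.5
```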

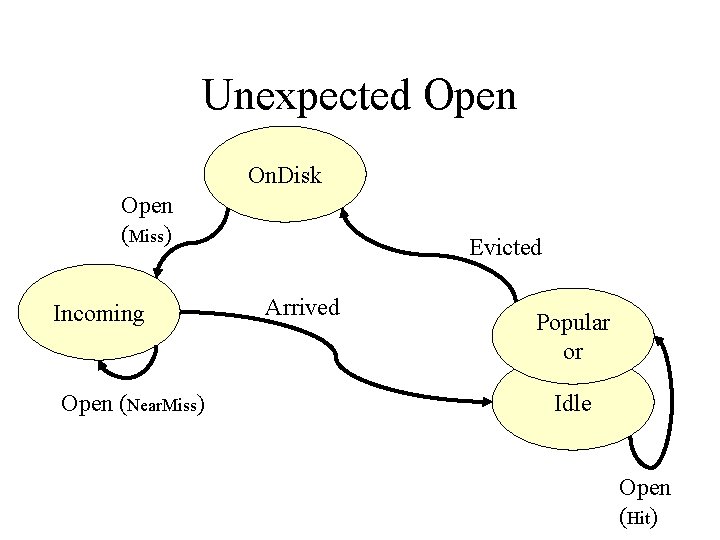

Unexpected Open
[Figure: state diagram with states On Disk, Incoming, and Popular or Idle; transitions Open (Miss), Open (Near Miss), Arrived, Open (Hit), and Evicted]

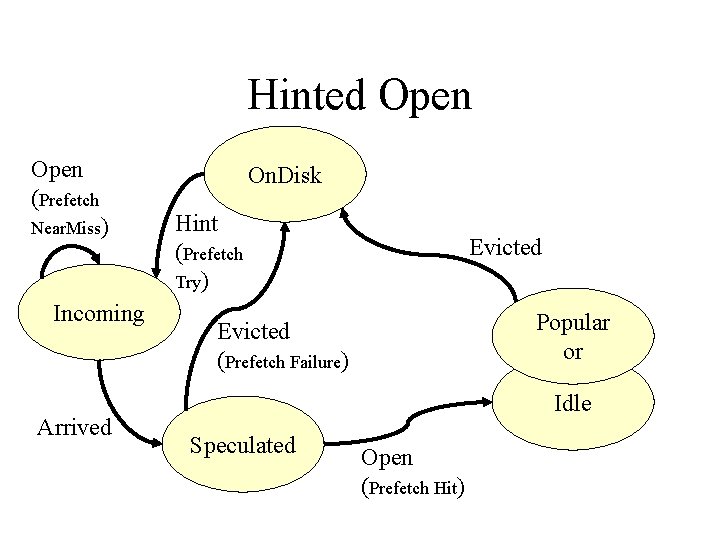

Hinted Open
[Figure: state diagram with states On Disk, Incoming, Speculated, and Popular or Idle; transitions Hint (Prefetch Try), Open (Prefetch Near Miss), Arrived, Open (Prefetch Hit), Evicted (Prefetch Failure), and Evicted]
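The outcomes labelled on the unexpected-open and hinted-open diagrams can be tied together in one small state machine. This is a sketch under assumptions: `State`, `FileTracker`, and the returned strings are hypothetical; Popular and Idle are merged into one `CACHED` state, and an actual open is treated as confirming any outstanding hint.

```python
from enum import Enum, auto
from typing import Dict


class State(Enum):
    ON_DISK = auto()
    INCOMING = auto()     # read issued, data not yet arrived
    SPECULATED = auto()   # prefetched by a hint, not yet actually opened
    CACHED = auto()       # Popular or Idle


class FileTracker:
    """Hypothetical tracker that labels events with the names used on the diagrams."""

    def __init__(self) -> None:
        self.state: Dict[str, State] = {}
        self.hinted: Dict[str, bool] = {}   # True while a prefetch is unconfirmed

    def _get(self, name: str) -> State:
        return self.state.get(name, State.ON_DISK)

    def hint(self, name: str) -> str:
        if self._get(name) is State.ON_DISK:
            self.state[name], self.hinted[name] = State.INCOMING, True
            return "prefetch try"
        return "already cached or incoming"

    def arrived(self, name: str) -> None:
        if self._get(name) is State.INCOMING:
            self.state[name] = State.SPECULATED if self.hinted.get(name) else State.CACHED

    def open(self, name: str) -> str:
        s, hinted = self._get(name), self.hinted.get(name, False)
        self.hinted[name] = False            # an actual open confirms any hint
        if s is State.ON_DISK:
            self.state[name] = State.INCOMING
            return "miss"
        if s is State.INCOMING:
            return "prefetch near miss" if hinted else "near miss"
        if s is State.SPECULATED:
            self.state[name] = State.CACHED
            return "prefetch hit"
        return "hit"

    def evict(self, name: str) -> str:
        s = self._get(name)
        self.state[name], self.hinted[name] = State.ON_DISK, False
        return "prefetch failure" if s is State.SPECULATED else "evicted"
```

For example, `hint("z.o")`, then `arrived("z.o")`, then `open("z.o")` yields "prefetch hit", while evicting a speculated file before any open yields "prefetch failure".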

Trace-driven Simulation
• Seer traces from UCLA
• Nine Linux servers
  – Software development environment
• Every file open for several weeks
  – We only used one week from one server

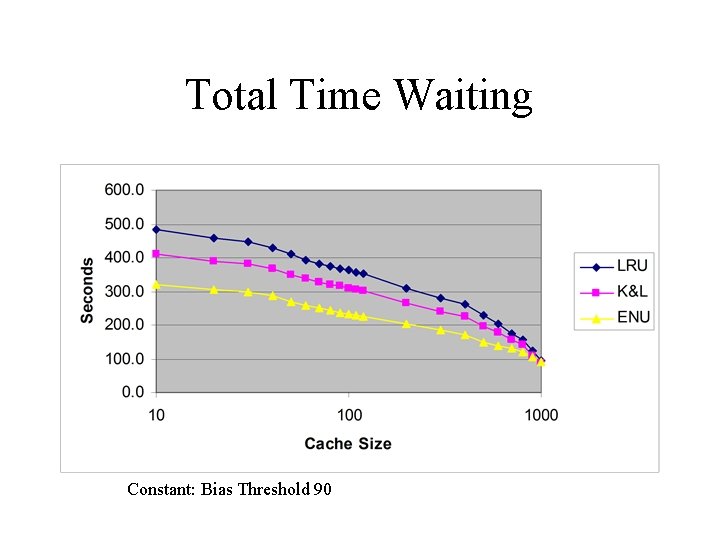

Simulation
• 21 cache sizes
  – 10 pages = much smaller than the working set
  – 1000 pages = much larger than the working set
• Various levels of aggression
  – 90% bias threshold (conservative)
  – 10% bias threshold (aggressive)
• Compared LRU, K&L, Pop, ENU
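One way to read the bias threshold, sketched under assumptions: a predicted file is prefetched only when its bias meets the threshold, and the chosen files are issued soonest-expected first. The function `choose_prefetches` and the ordering rule are hypothetical; the example predictions reuse the FileList.o trie numbers (biases 22/31 and 9/31, intervals in seconds).

```python
from typing import Dict, List, Tuple


def choose_prefetches(predictions: Dict[str, Tuple[float, float]],
                      threshold: float) -> List[str]:
    """Files whose bias meets the threshold, soonest expected request first.

    predictions maps file name -> (bias, expected seconds until it is opened).
    """
    chosen = [(when, name) for name, (bias, when) in predictions.items()
              if bias >= threshold]
    return [name for _, name in sorted(chosen)]


# Predictions after FileList.o is opened, using the trie slides' numbers.
predictions = {"Welem.o": (22 / 31, 0.012), "Path.o": (9 / 31, 0.011),
               "VolID.o": (22 / 31, 0.021)}
print(choose_prefetches(predictions, 0.90))   # conservative: []
print(choose_prefetches(predictions, 0.10))   # aggressive: ['Path.o', 'Welem.o', 'VolID.o']
```

A conservative threshold issues nothing here, since no single prediction reaches 90%; an aggressive one prefetches all three, including Path.o at a bias of only about 29%.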

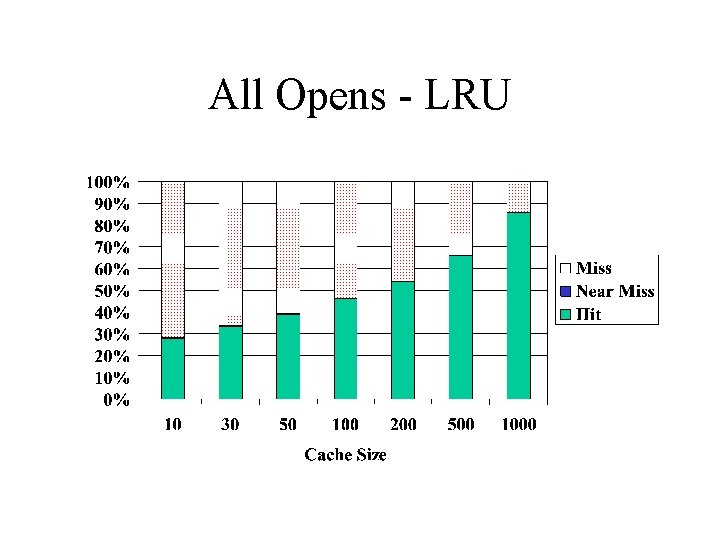

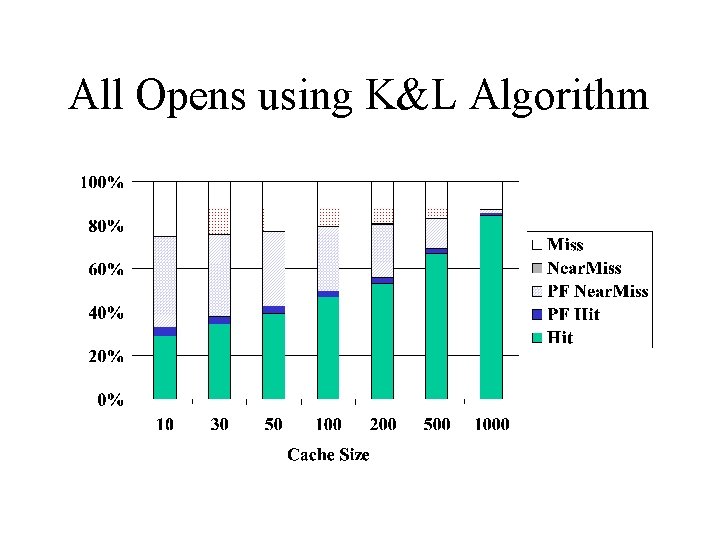

Measurements
• Total Time Waiting
  – And Time Fully Busy
• Hits & prefetch Hits
• Near Misses & prefetch Near Misses
• Prefetch Failures
• Memory Pressure
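Total Time Waiting can be sketched from the simplifying assumption that every disk open takes 10 ms. The per-outcome costs are assumptions: a miss waits the full 10 ms, a near miss (plain or prefetch) waits only for the rest of the read already in flight, and hits cost nothing. `total_wait` and its event format are hypothetical.

```python
from typing import Iterable, Tuple

DISK_OPEN_SECONDS = 0.010   # slide assumption: every open from disk takes 10 ms


def total_wait(events: Iterable[Tuple[str, float]]) -> float:
    """Sum the time programs spend blocked on opens.

    Each event is (outcome, seconds the read had already been in flight when the
    open arrived); this event format is hypothetical.
    """
    waited = 0.0
    for outcome, in_flight in events:
        if outcome == "miss":
            waited += DISK_OPEN_SECONDS                         # full disk read
        elif outcome in ("near miss", "prefetch near miss"):
            waited += max(0.0, DISK_OPEN_SECONDS - in_flight)   # rest of the read
        # "hit" and "prefetch hit" cost nothing under this model
    return waited


trace = [("miss", 0.0), ("near miss", 0.004), ("prefetch hit", 0.0),
         ("prefetch near miss", 0.008)]
print(f"{total_wait(trace) * 1000:.1f} ms")   # 10 + 6 + 0 + 2 = 18.0 ms
```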

Total Time Waiting (Constant: Bias Threshold 90%)

All Opens - LRU

All Opens using K&L Algorithm

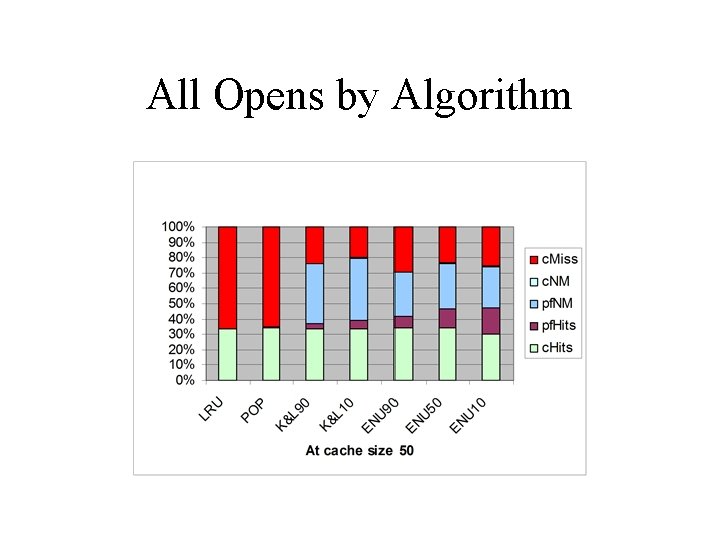

All Opens by Algorithm

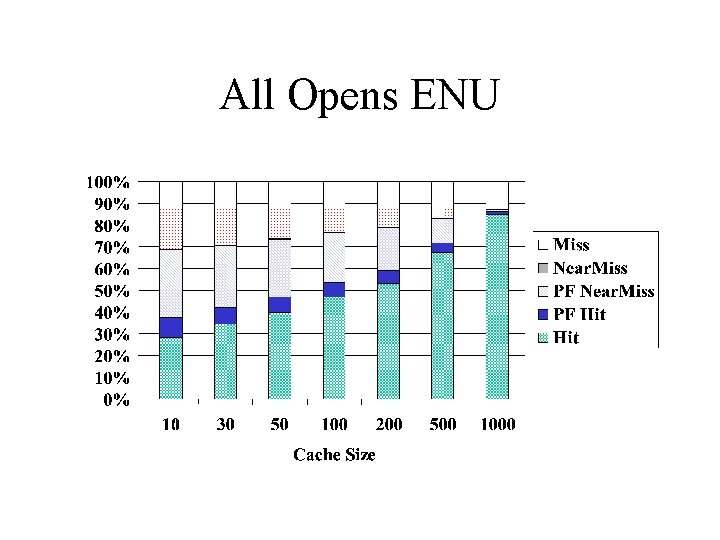

All Opens - ENU

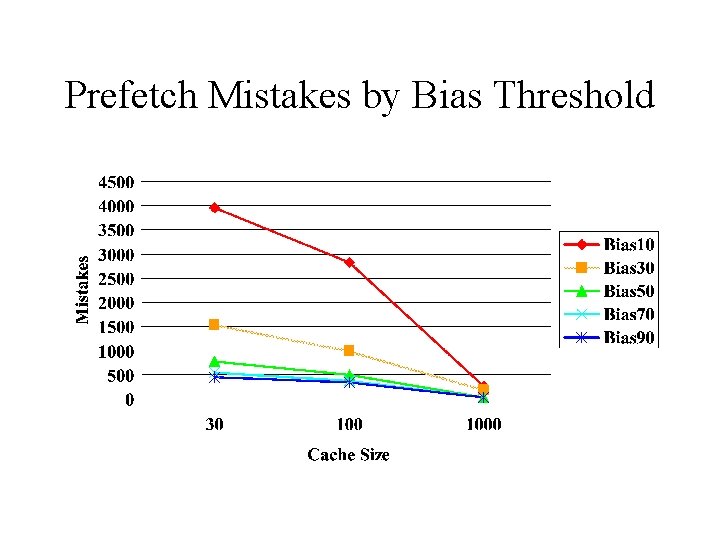

Prefetch Mistakes by Bias Threshold

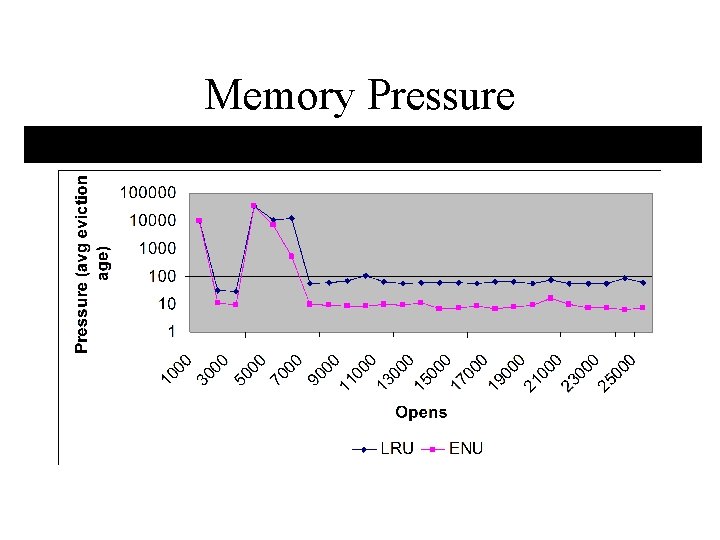

Memory Pressure

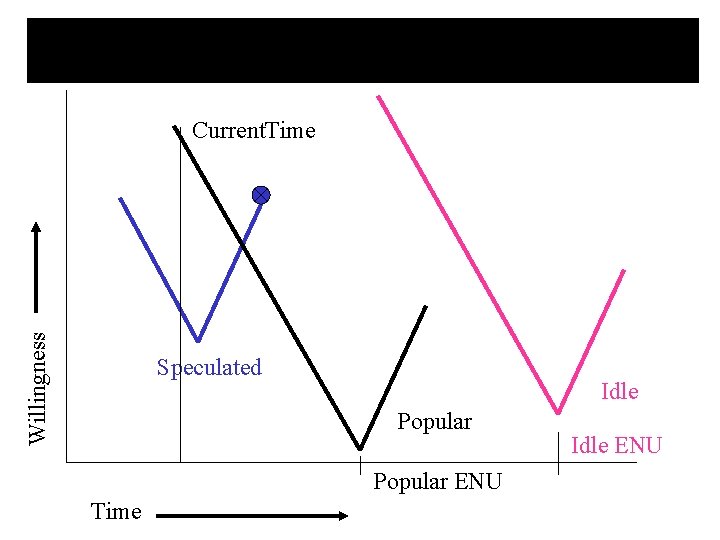

Willingness
[Figure: willingness versus time for Speculated, Idle, and Popular files; labels: Current Time, ENU Time, Idle ENU]

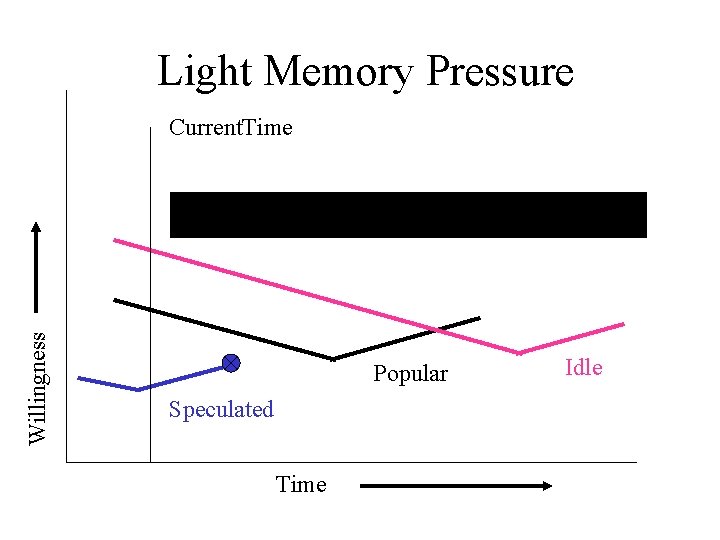

Light Memory Pressure
[Figure: willingness versus time for Popular, Speculated, and Idle files, with Current Time marked]

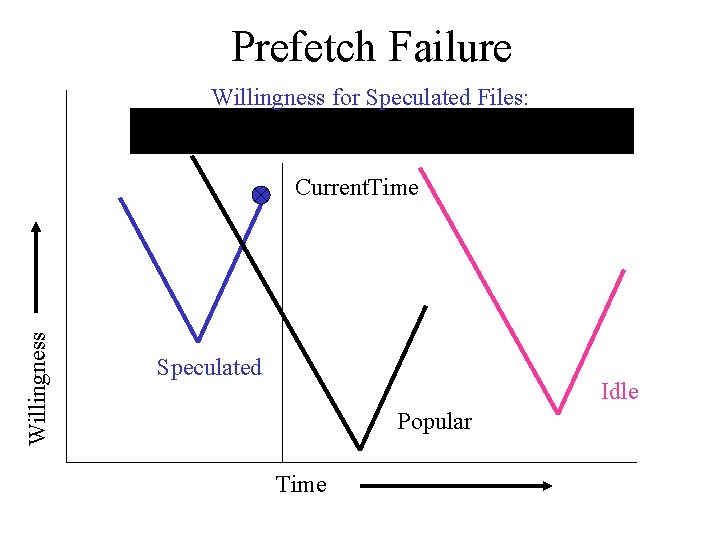

Prefetch Failure Willingness for Speculated Files
[Figure: willingness versus time for Speculated, Idle, and Popular files, with Current Time marked]

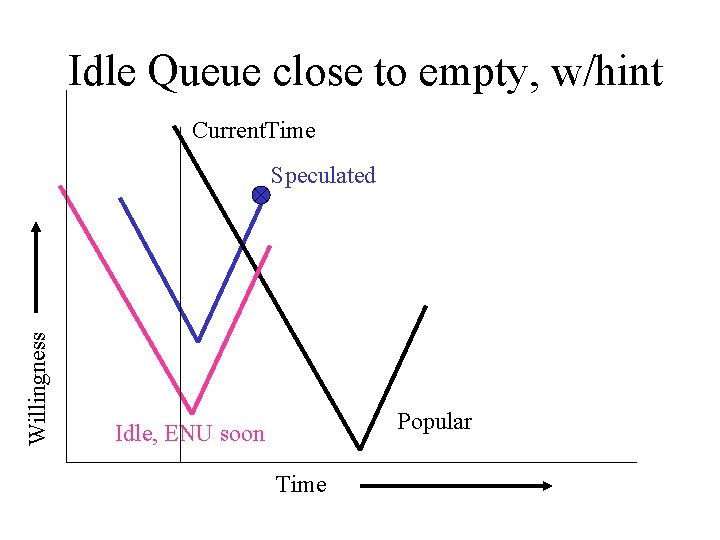

Idle Queue Close to Empty, with Hint
[Figure: willingness versus time for Speculated, Popular, and Idle files, with Current Time marked; label: Idle, ENU soon]

Future Work
• Block Level
  – Intelligent disks
• Model dirty pages
• Better Simulation & Traces
  – Interleaved requests, limited disks
• Real Kernel Mods
  – Study long strings without the trace-time anomaly
• Refinements to measurements
  – Willingness, Popularity
