Using emulation for RTL performance verification June 4

- Slides: 11

Using emulation for RTL performance verification, June 4, 2014. Dae Seo Cha, Infrastructure Design Center, System LSI Division, Samsung Electronics Co., Ltd.

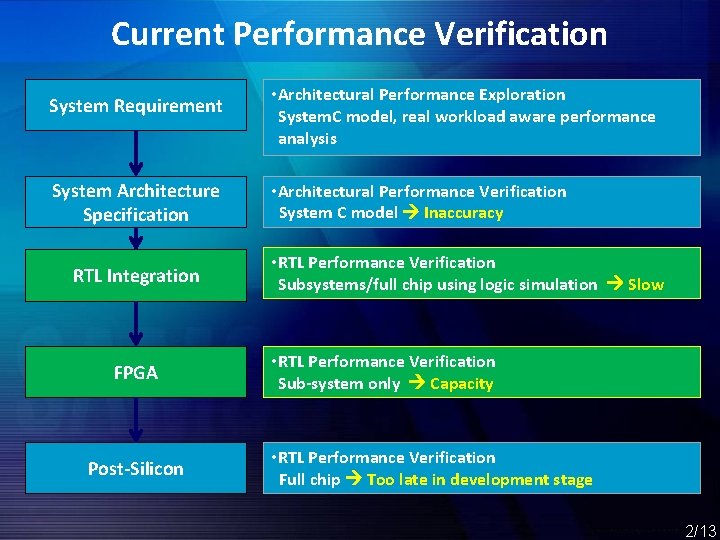

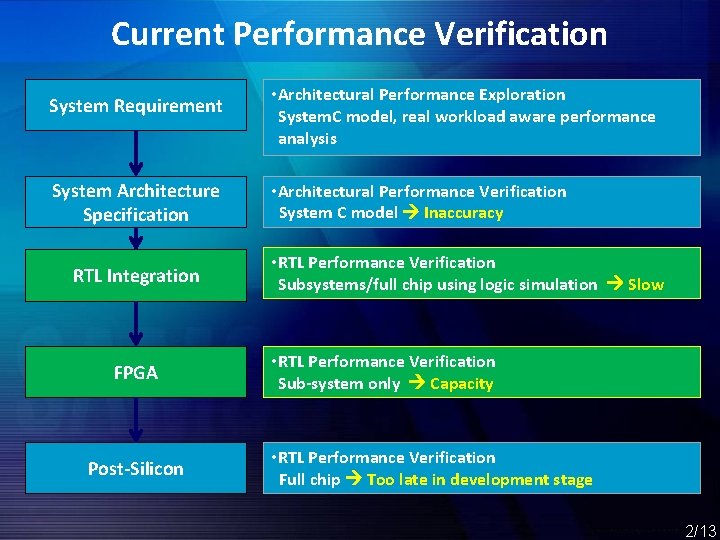

Current Performance Verification

Development flow: System Requirement → System Architecture → Specification → RTL → Integration → FPGA → Post-Silicon

- Architectural Performance Exploration: SystemC model, real-workload-aware performance analysis
- Architectural Performance Verification: SystemC model. Limitation: inaccuracy
- RTL Performance Verification: subsystems or full chip using logic simulation. Limitation: slow
- RTL Performance Verification: sub-system only. Limitation: capacity
- RTL Performance Verification: full chip. Limitation: too late in the development stage

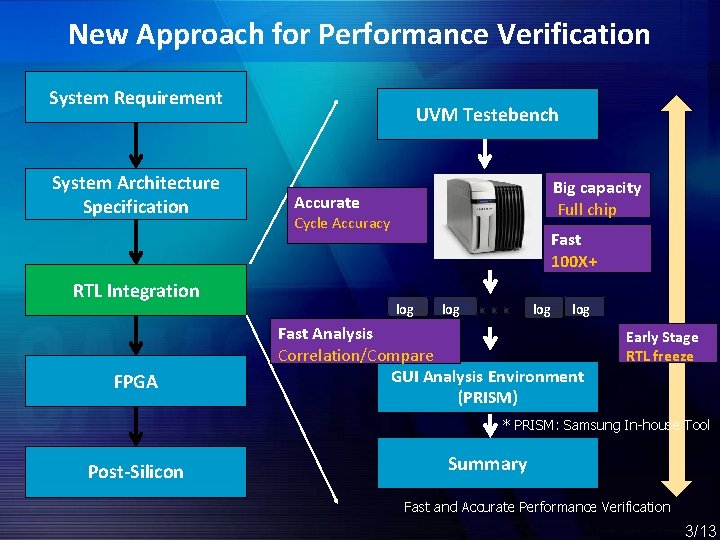

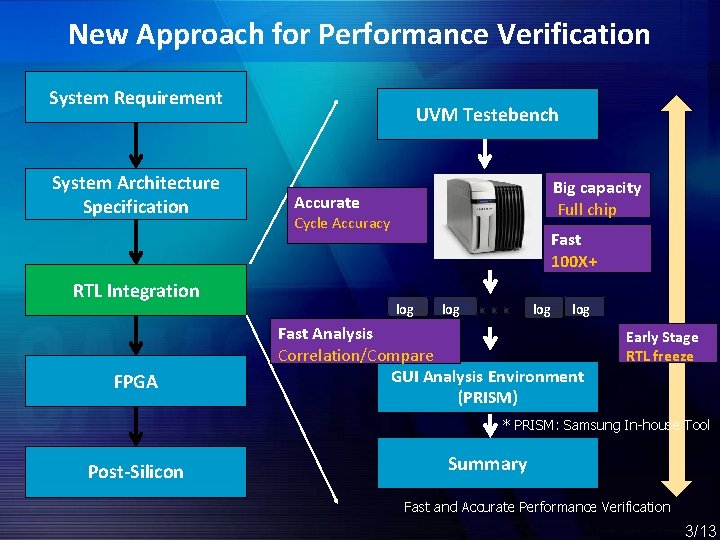

New Approach for Performance Verification

Development flow: System Requirement → System Architecture → Specification → RTL → Integration → FPGA → Post-Silicon

- UVM testbench
- Big capacity: full chip
- Accurate: cycle accuracy
- Fast: 100X+
- Fast analysis of logs: correlation/compare in a GUI analysis environment (PRISM*)
- Early stage: RTL freeze
- Summary: fast and accurate performance verification

\* PRISM: Samsung in-house tool

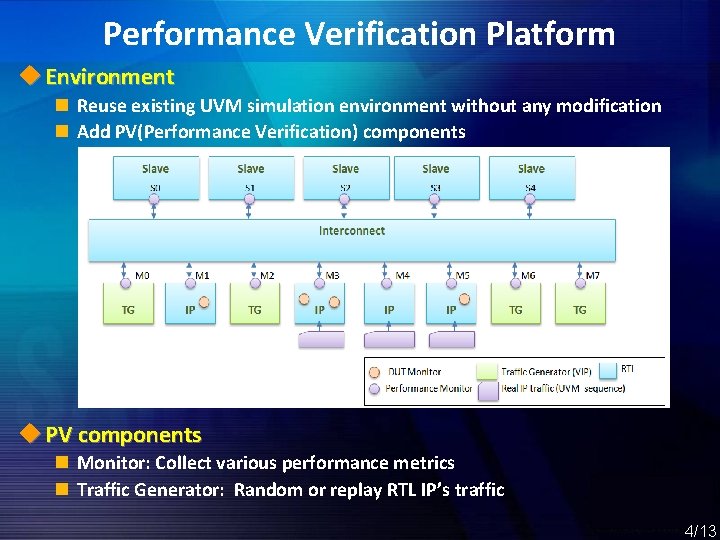

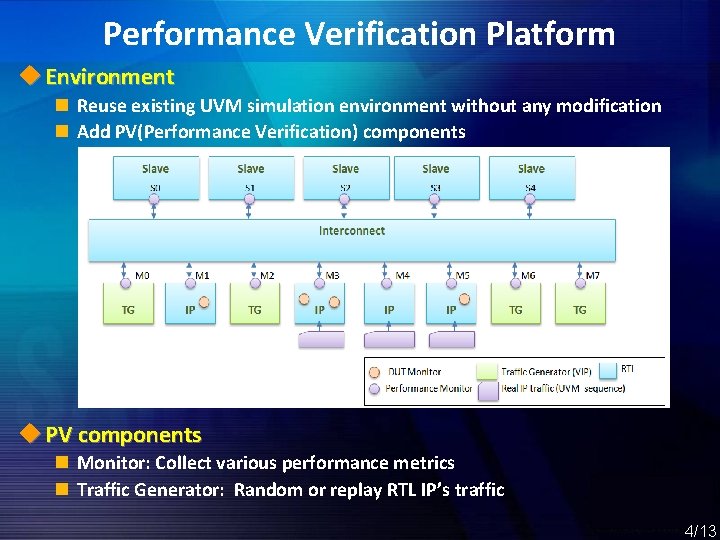

Performance Verification Platform

- Environment
  - Reuse the existing UVM simulation environment without any modification
  - Add PV (Performance Verification) components
- PV components
  - Monitor: collects various performance metrics
  - Traffic Generator: generates random traffic or replays an RTL IP's traffic
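The two traffic-generator modes (random or replay) can be sketched in Python as follows. This is a minimal illustration, not the presenters' UVM component: the `Txn` fields, the 64-byte address alignment, the burst lengths, and the function names `random_traffic` and `replay_traffic` are all assumptions made for the example.

```python
import random
from dataclasses import dataclass
from typing import Iterator, List


@dataclass(frozen=True)
class Txn:
    """One bus transaction. The field set is an illustrative assumption."""
    cycle: int       # issue cycle
    addr: int        # byte address
    is_write: bool   # True for a write, False for a read
    length: int      # burst length in beats


def random_traffic(n: int, seed: int = 0, addr_bits: int = 32) -> Iterator[Txn]:
    """Random mode: yield n transactions with random gaps, addresses and bursts."""
    rng = random.Random(seed)            # seeded so that a run can be reproduced
    cycle = 0
    for _ in range(n):
        cycle += rng.randint(1, 16)      # assumed gap of 1..16 cycles
        addr = rng.randrange(0, 1 << addr_bits, 64)  # assumed 64-byte alignment
        yield Txn(cycle, addr, rng.random() < 0.5, rng.choice([1, 4, 8, 16]))


def replay_traffic(trace: List[Txn]) -> Iterator[Txn]:
    """Replay mode: re-issue previously captured transactions in cycle order."""
    for txn in sorted(trace, key=lambda t: t.cycle):
        yield txn
```

Seeding the random mode keeps a failing scenario reproducible, while the replay mode would take its trace from traffic captured on a real RTL IP.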

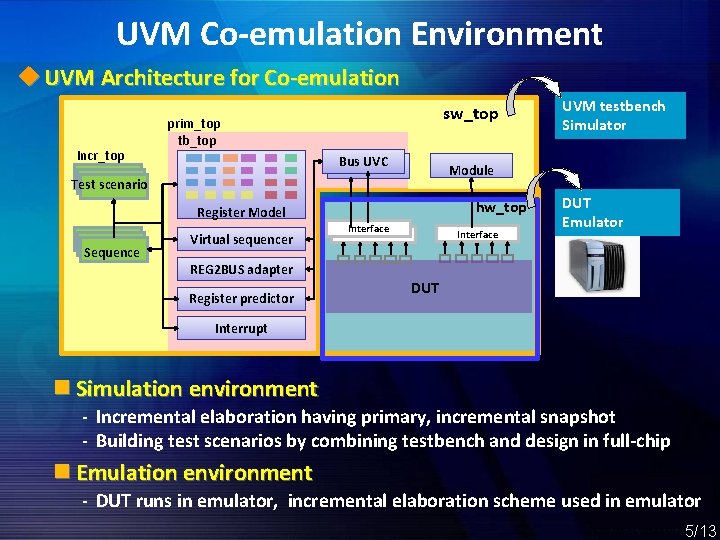

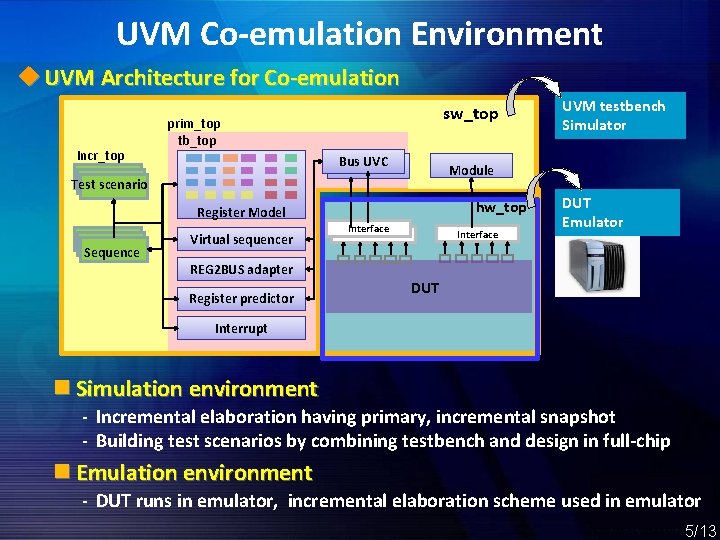

UVM Co-emulation Environment

- UVM architecture for co-emulation
  - Diagram labels: Incr_top, sw_top, prim_top, tb_top, hw_top; UVM testbench (simulator side) with test scenario, sequence, virtual sequencer, register model, REG2BUS adapter, register predictor, AXI UVC, bus module; interface; DUT (emulator side) with bus and interrupt
  - Simulation environment
    - Incremental elaboration with primary and incremental snapshots
    - Test scenarios are built by combining the testbench and the design at full-chip level
  - Emulation environment
    - The DUT runs in the emulator; the incremental elaboration scheme is also used in the emulator

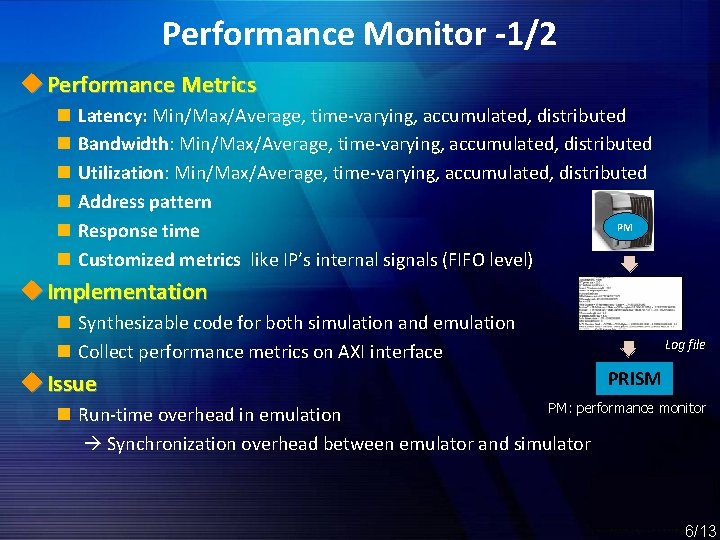

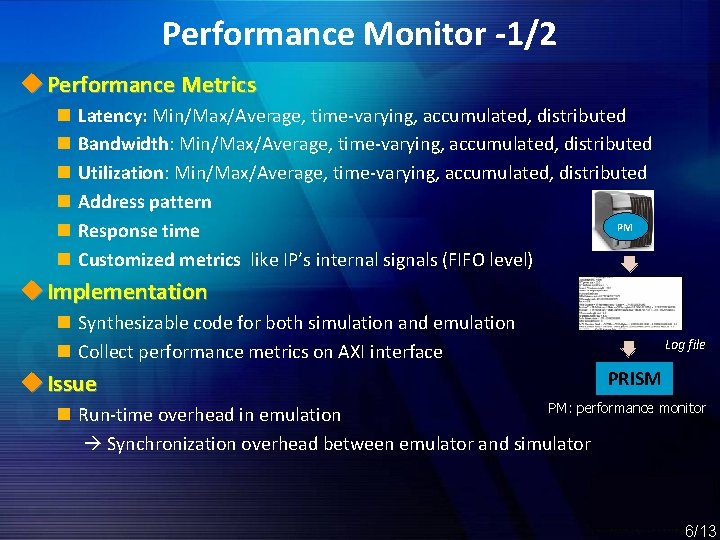

Performance Monitor 1/2

- Performance metrics
  - Latency: min/max/average, time-varying, accumulated, distributed
  - Bandwidth: min/max/average, time-varying, accumulated, distributed
  - Utilization: min/max/average, time-varying, accumulated, distributed
  - Address pattern
  - Response time
  - Customized metrics such as an IP's internal signals (FIFO level)
- Implementation
  - Synthesizable code for both simulation and emulation
  - Collects performance metrics on the AXI interface
  - Data flow: PM → log file → PRISM (PM: performance monitor)
- Issue
  - Run-time overhead in emulation: synchronization overhead between the emulator and the simulator
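The metric definitions above can be made concrete with a short Python sketch that post-processes monitor samples. The slides do not show the log format, so the `Sample` record (start cycle, end cycle, byte count) and every function name below are assumptions for illustration only.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Sample:
    """One monitored transaction. The record layout is a hypothetical one."""
    start: int    # cycle in which the request was accepted
    end: int      # cycle in which the response completed
    nbytes: int   # payload size in bytes


def latency_stats(samples: List[Sample]) -> Dict[str, float]:
    """Min/max/average latency in cycles."""
    if not samples:
        raise ValueError("no samples")
    lat = [s.end - s.start for s in samples]
    return {"min": min(lat), "max": max(lat), "avg": sum(lat) / len(lat)}


def bandwidth(samples: List[Sample], total_cycles: int) -> float:
    """Accumulated bandwidth in bytes per cycle over the whole run."""
    return sum(s.nbytes for s in samples) / total_cycles


def utilization(busy_cycles: int, total_cycles: int) -> float:
    """Fraction of cycles in which the interface transferred data."""
    return busy_cycles / total_cycles


def latency_histogram(samples: List[Sample], bin_width: int) -> Dict[int, int]:
    """Distributed latency: count of samples per latency bin (keyed by bin start)."""
    return dict(Counter(((s.end - s.start) // bin_width) * bin_width for s in samples))
```

For example, three samples with latencies of 10, 20 and 5 cycles give a minimum of 5, a maximum of 20, and one entry in each of the 0, 10 and 20 bins when `bin_width` is 10.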

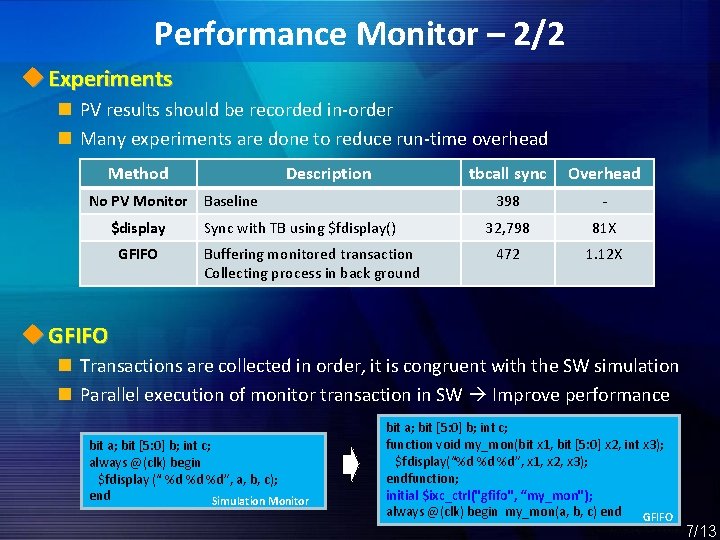

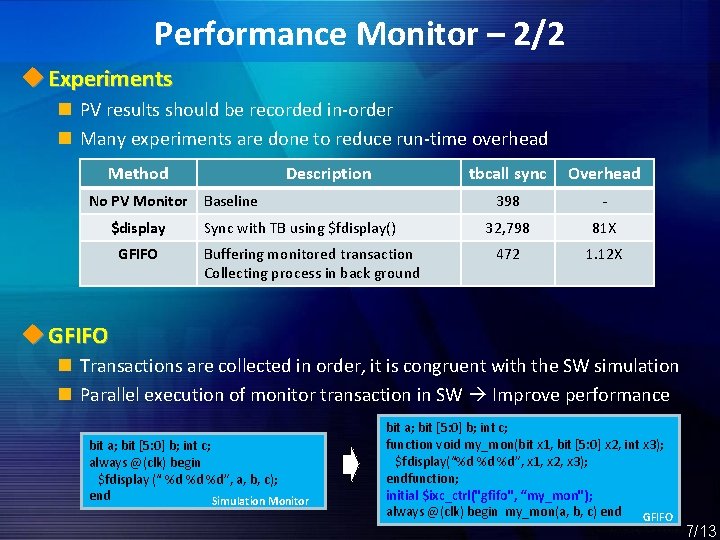

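Performance Monitor 2/2

- Experiments
  - PV results should be recorded in order
  - Many experiments were done to reduce run-time overhead

| Method | Description | tbcall sync | Overhead |
| --- | --- | --- | --- |
| No PV Monitor | Baseline | 398 | - |
| $display | Sync with TB using $fdisplay() | 32,798 | 81X |
| GFIFO | Buffering monitored transactions; the collecting process runs in the background | 472 | 1.12X |

- GFIFO
  - Transactions are collected in order, consistent with the SW simulation
  - Monitor transactions execute in parallel in SW, which improves performance

Simulation monitor:

```systemverilog
bit a; bit [5:0] b; int c;
int fd;  // log file handle; the slide omits it, and the file name is a placeholder
initial fd = $fopen("pv_monitor.log", "w");

// Every call synchronizes the emulator with the testbench
always @(clk) begin
  $fdisplay(fd, "%d %d %d", a, b, c);
end
```

GFIFO:

```systemverilog
bit a; bit [5:0] b; int c;
int fd;  // log file handle; the slide omits it, and the file name is a placeholder
initial fd = $fopen("pv_monitor.log", "w");

function void my_mon(bit x1, bit [5:0] x2, int x3);
  $fdisplay(fd, "%d %d %d", x1, x2, x3);
endfunction

// Register my_mon so that its calls are buffered through the GFIFO
initial $ixc_ctrl("gfifo", "my_mon");

always @(clk) begin
  my_mon(a, b, c);
end
```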
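The buffering idea behind GFIFO can be illustrated with a small Python analogy. It is not the emulator's implementation: the class name `BufferedMonitor` and its methods are invented for this sketch. The producer side only enqueues a record and returns, while a single background worker drains the queue, so the log keeps the order in which records were produced.

```python
import queue
import threading
from typing import List, Optional, Tuple

Record = Tuple[int, int, int]  # (a, b, c), mirroring the monitored signals


class BufferedMonitor:
    """Hypothetical analogy of a buffered monitor: enqueue now, log later."""

    def __init__(self) -> None:
        self._fifo: "queue.Queue[Optional[Record]]" = queue.Queue()
        self.log: List[Record] = []
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def record(self, a: int, b: int, c: int) -> None:
        """Called on the fast path: no formatting or I/O, just an enqueue."""
        self._fifo.put((a, b, c))

    def _drain(self) -> None:
        """Background collector: a single consumer preserves FIFO order."""
        while True:
            item = self._fifo.get()
            if item is None:        # sentinel pushed by close()
                break
            self.log.append(item)   # stands in for the slow $fdisplay-style write

    def close(self) -> None:
        """Flush everything still buffered and stop the worker."""
        self._fifo.put(None)
        self._worker.join()
```

A typical use is to call `record(a, b, c)` once per monitored event and `close()` at the end of the test; after `close()` returns, `log` holds every record in issue order.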

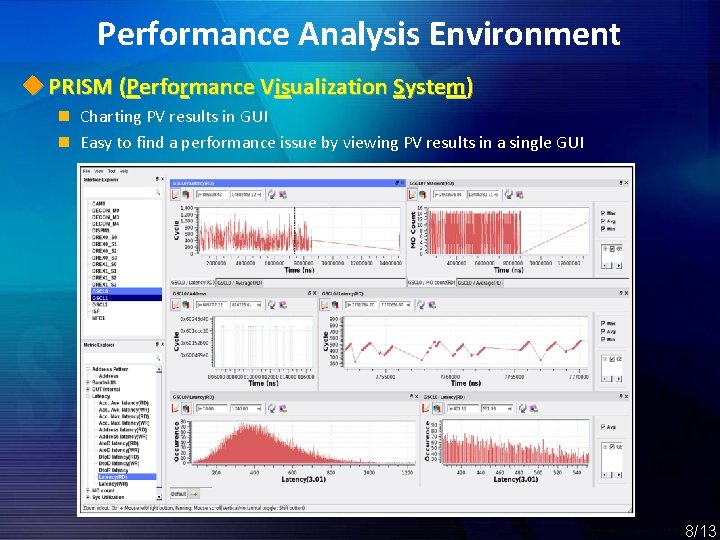

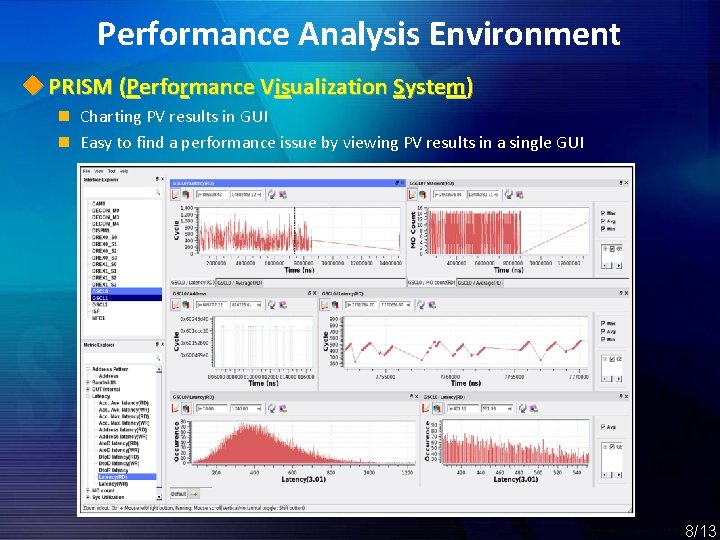

Performance Analysis Environment

- PRISM (Performance Visualization System)
  - Charts PV results in a GUI
  - Performance issues are easy to find by viewing all PV results in a single GUI
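PRISM itself is an in-house tool and its internals are not described in the slides. As a rough idea of the kind of data series such a chart needs, the following Python sketch turns raw transfer events into a time-varying bandwidth series. The event format `(cycle, nbytes)` and the function name `windowed_bandwidth` are assumptions.

```python
from typing import Dict, List, Tuple


def windowed_bandwidth(
    events: List[Tuple[int, int]], window: int
) -> List[Tuple[int, float]]:
    """Return (window_start_cycle, bytes_per_cycle) for consecutive windows.

    events: (cycle, nbytes) pairs, in any order.
    window: window length in cycles; must be positive.
    Windows without traffic are reported as 0.0 so that a chart shows idle gaps.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    if not events:
        return []
    totals: Dict[int, int] = {}
    for cycle, nbytes in events:
        start = (cycle // window) * window   # window this event falls into
        totals[start] = totals.get(start, 0) + nbytes
    first, last = min(totals), max(totals)
    return [(w, totals.get(w, 0) / window) for w in range(first, last + window, window)]
```

Plotting the second element of each pair against the first gives the time-varying bandwidth curve; a dip to zero or a sustained plateau is the sort of pattern a viewer would look for.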

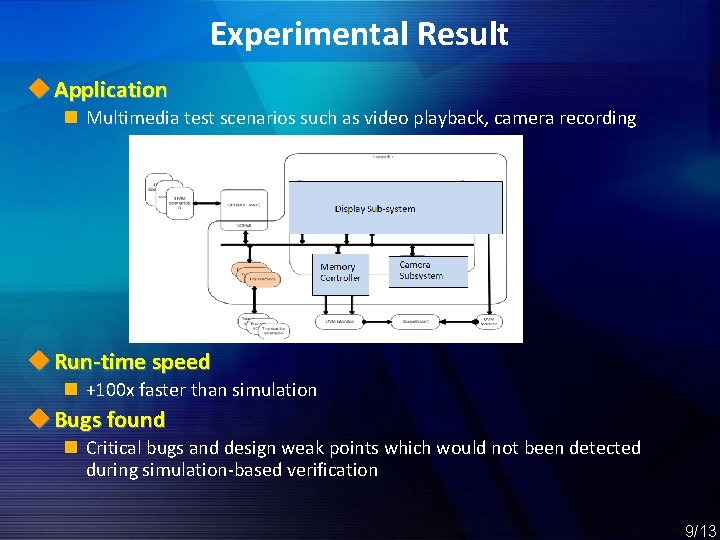

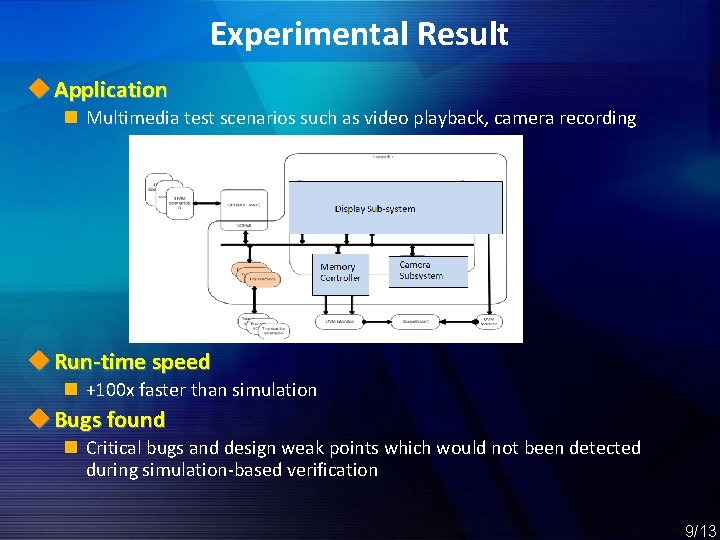

Experimental Result

- Application
  - Multimedia test scenarios such as video playback and camera recording
- Run-time speed
  - More than 100x faster than simulation
- Bugs found
  - Critical bugs and design weak points that would not have been detected during simulation-based verification

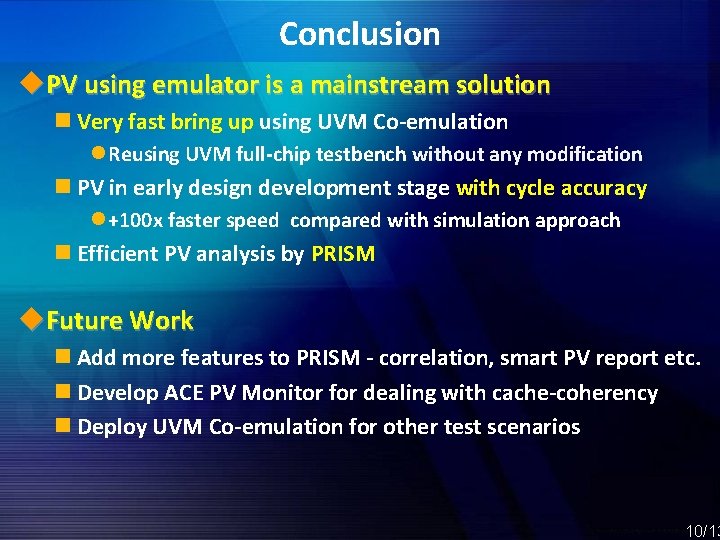

Conclusion

- PV using an emulator is a mainstream solution
  - Very fast bring-up using UVM co-emulation
    - Reuses the UVM full-chip testbench without any modification
  - PV in the early design development stage with cycle accuracy
    - More than 100x faster than the simulation approach
  - Efficient PV analysis with PRISM
- Future work
  - Add more features to PRISM: correlation, smart PV report, etc.
  - Develop an ACE PV monitor for dealing with cache coherency
  - Deploy UVM co-emulation for other test scenarios

Thank you