Using Citation Analysis to Study Evaluation Influence: Strengths and Limitations of the Methodology

- Slides: 18

Using Citation Analysis to Study Evaluation Influence: Strengths and Limitations of the Methodology

Lija Greenseid, Ph.D.
American Evaluation Association Conference, Orlando, Florida, November 12, 2009

This material is based on work supported by the National Science Foundation under Grant No. REC 0438545. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

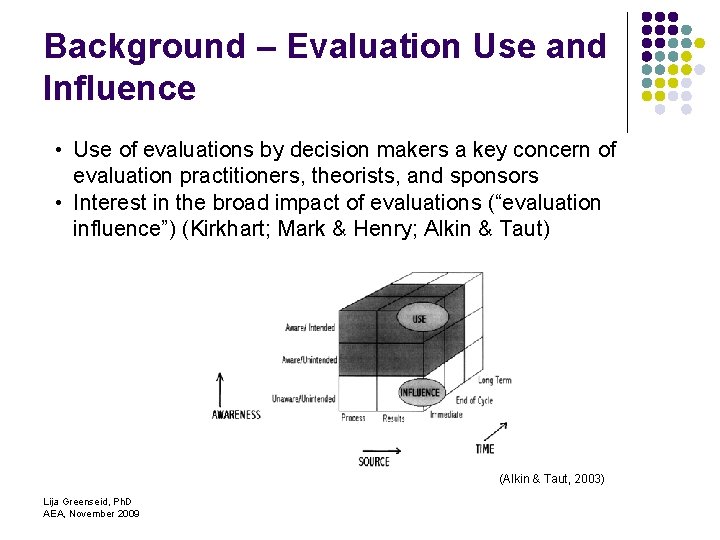

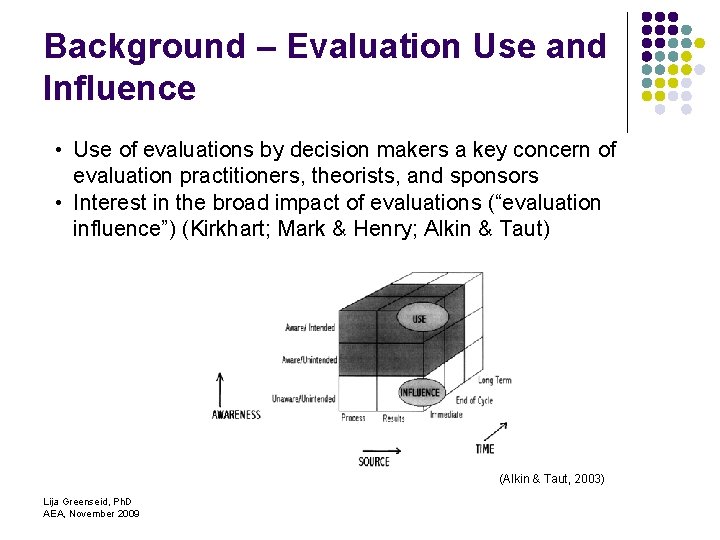

Background – Evaluation Use and Influence

- Use of evaluations by decision makers a key concern of evaluation practitioners, theorists, and sponsors
- Interest in the broad impact of evaluations (“evaluation influence”) (Kirkhart; Mark & Henry; Alkin & Taut)

(Alkin & Taut, 2003)

Lija Greenseid, Ph.D., AEA, November 2009

Context

Four large-scale, multi-site NSF-funded program evaluations or evaluation technical assistance programs

- Evaluations funded for 4-10 years
- Evaluation budgets: $1 to $6.5 million
- Evaluated between 20 and 350 projects
- Produced between 24 and 98 evaluation products
- Dissemination part of two contracts

How can evaluation influence be measured?

This study examined the usefulness of citation analysis as a method for assessing evaluation influence

- Assess the impact of knowledge generated through evaluations
- Examine use and influence of evaluation products (e.g., reports, publications, instruments, presentations) through examining the extent and patterns in citations of the products

Delimitations

- Evaluation products only one possible source of evaluation influence
- Citation analysis proposed as one possible way of measuring the influence of evaluation products

Method

- Collected citation data using:
  - ISI Web of Science
  - Google Scholar (scholar.google.com)
  - Google search engine (www.google.com)
- Analyzed distributions of citation counts, levels, content, and network maps
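As a rough illustration of how citation records gathered from several sources might be pooled before counting, the sketch below groups citing works by evaluation product and counts each citing work once. The record layout, the identifiers, and the choice to de-duplicate across sources are all assumptions made for this example; the slides do not describe how overlapping hits were handled.

```python
from collections import defaultdict

# Hypothetical records: (source, evaluation product, citing work).
# None of these identifiers come from the study's data.
records = [
    ("Web of Science", "product-A", "work-1"),
    ("Google Scholar", "product-A", "work-1"),  # same citing work found twice
    ("Google Scholar", "product-A", "work-2"),
    ("Google", "product-B", "work-3"),
]


def pooled_citation_counts(records):
    """Count unique citing works per product across all sources."""
    citing = defaultdict(set)
    for _source, product, work in records:
        citing[product].add(work)
    return {product: len(works) for product, works in citing.items()}


counts = pooled_citation_counts(records)
print(counts)  # {'product-A': 2, 'product-B': 1}
```

Using a set per product keeps a citing work that turns up in more than one source from being counted twice.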

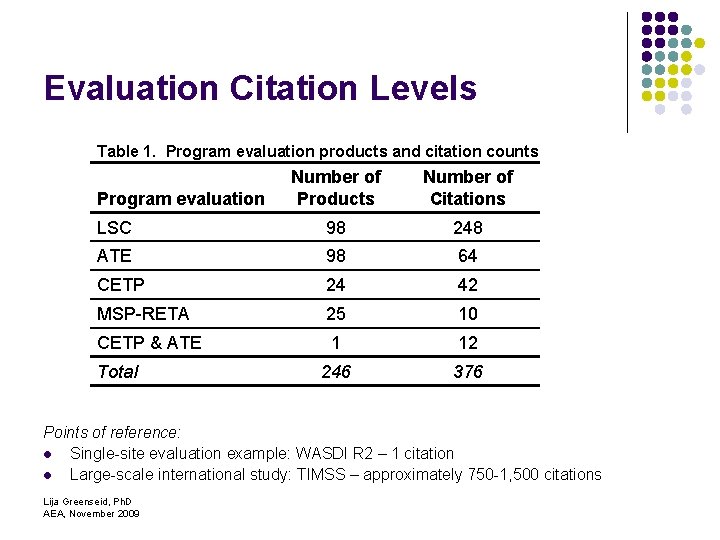

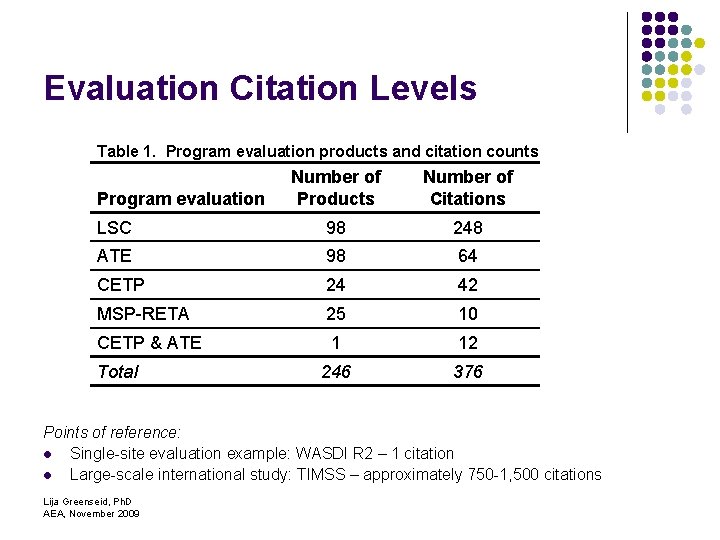

Evaluation Citation Levels

Table 1. Program evaluation products and citation counts

| Program evaluation | Number of Products | Number of Citations |
|---|---|---|
| LSC | 98 | 248 |
| ATE | 98 | 64 |
| CETP | 24 | 42 |
| MSP-RETA | 25 | 10 |
| CETP & ATE | 1 | 12 |
| Total | 246 | 376 |

Points of reference:

- Single-site evaluation example: WASDI R 2 – 1 citation
- Large-scale international study: TIMSS – approximately 750-1,500 citations

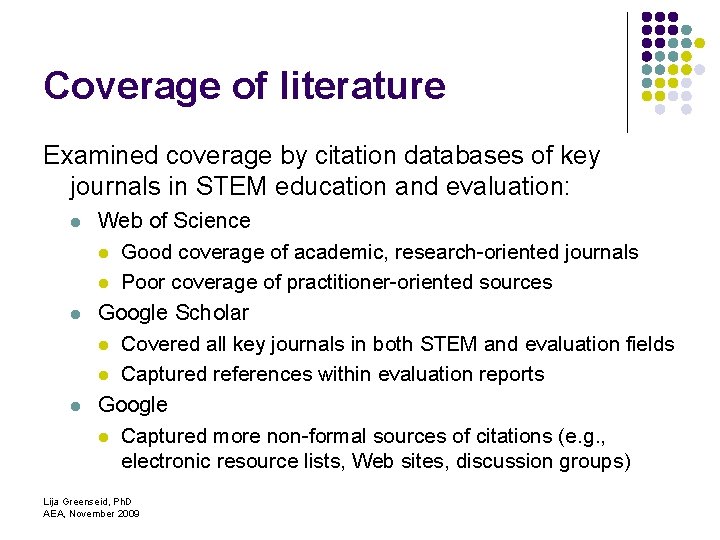

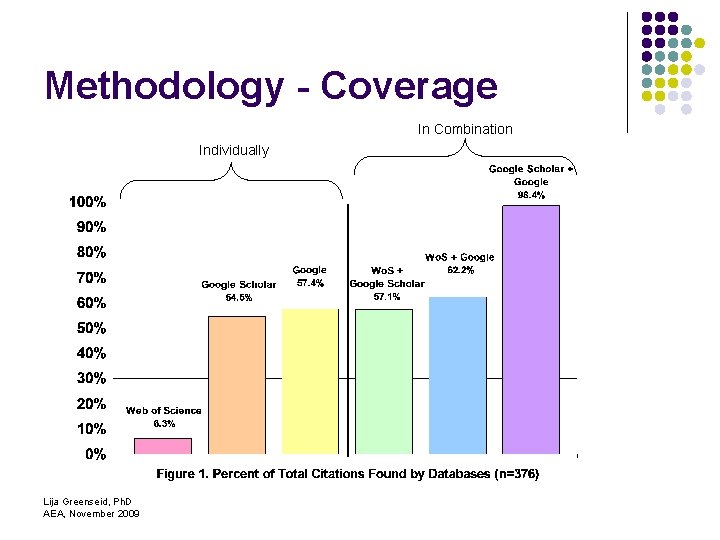

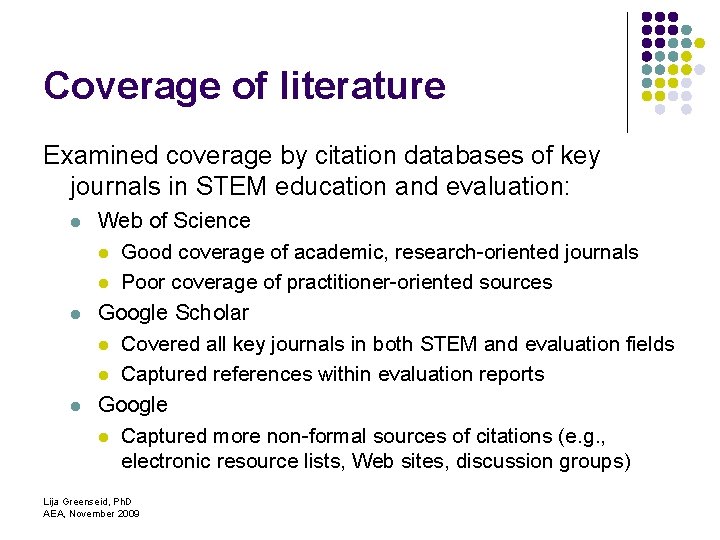

Coverage of literature

Examined coverage by citation databases of key journals in STEM education and evaluation:

- Web of Science
  - Good coverage of academic, research-oriented journals
  - Poor coverage of practitioner-oriented sources
- Google Scholar
  - Covered all key journals in both STEM and evaluation fields
  - Captured references within evaluation reports
- Google
  - Captured more non-formal sources of citations (e.g., electronic resource lists, Web sites, discussion groups)

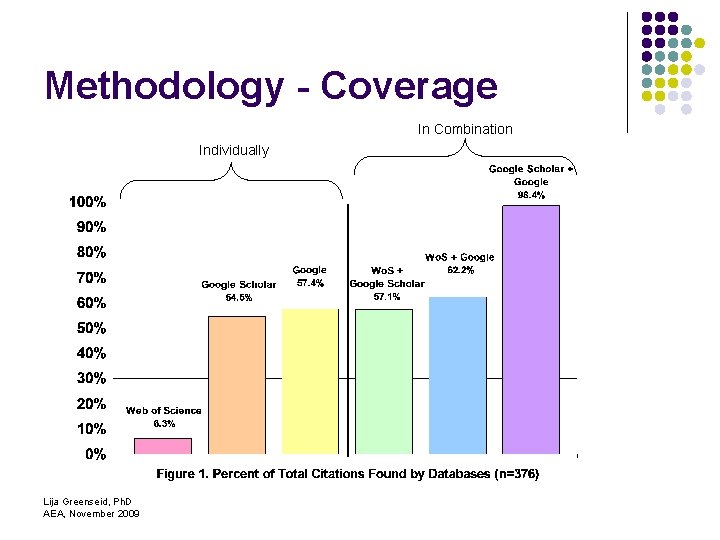

Methodology - Coverage

Figure panels: In Combination; Individually

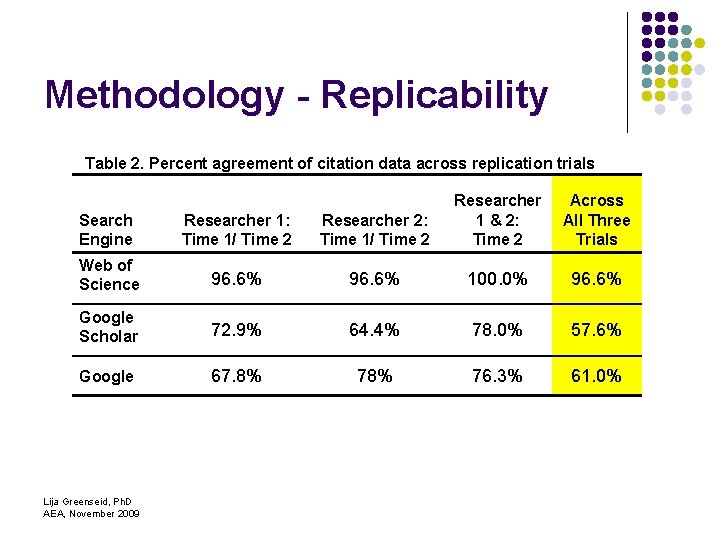

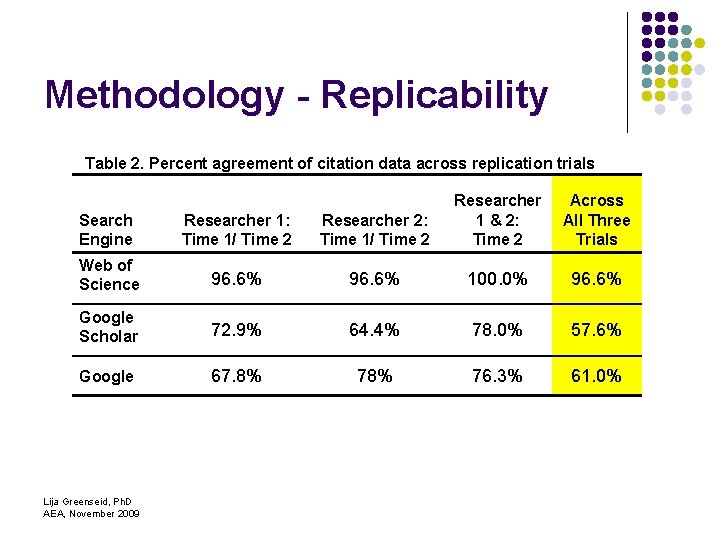

Methodology - Replicability

Table 2. Percent agreement of citation data across replication trials

Trials compared: Researcher 1: Time 1/Time 2; Researcher 2: Time 1/Time 2; Researcher 1 & 2: Time 2; Across All Three Trials

- Web of Science: 96.6%, 100.0%, 96.6%
- Google Scholar: 72.9%, 64.4%, 78.0%, 57.6%
- Google: 67.8%, 76.3%, 61.0%
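One plausible reading of "percent agreement" is the share of evaluation products for which two search trials returned the same citation count. The sketch below computes that figure under this assumption; the slides do not state the exact definition used, and the product labels and counts are hypothetical.

```python
def percent_agreement(trial_a, trial_b):
    """Share of products (in %) whose citation counts match in both trials."""
    if not trial_a or trial_a.keys() != trial_b.keys():
        raise ValueError("both trials must cover the same non-empty set of products")
    matches = sum(trial_a[p] == trial_b[p] for p in trial_a)
    return 100.0 * matches / len(trial_a)


# Hypothetical counts for five products; not data from the study.
time_1 = {"p1": 3, "p2": 0, "p3": 7, "p4": 1, "p5": 2}
time_2 = {"p1": 3, "p2": 0, "p3": 8, "p4": 1, "p5": 2}

agreement = percent_agreement(time_1, time_2)
print(f"{agreement:.1f}%")  # 80.0%
```

Only product `p3` differs between the two hypothetical trials, so four of the five counts agree.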

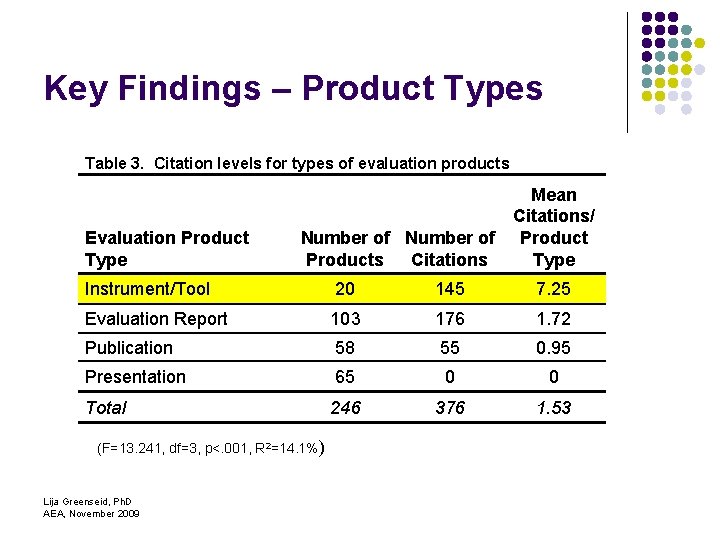

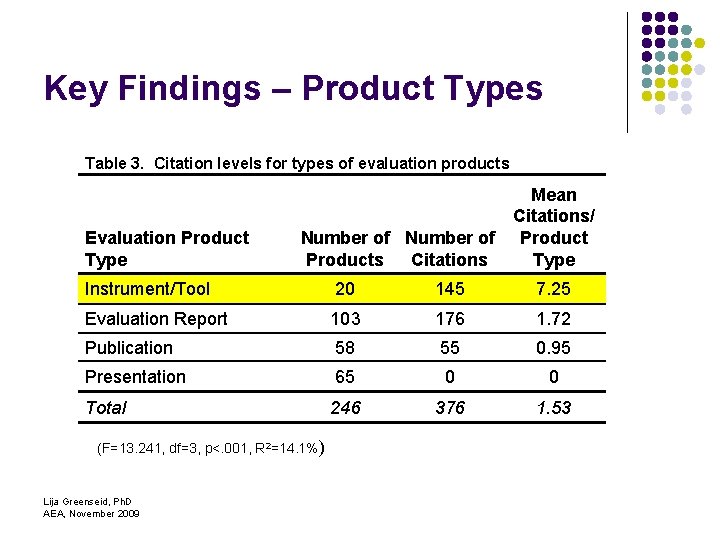

Key Findings – Product Types

Table 3. Citation levels for types of evaluation products

| Evaluation Product Type | Number of Products | Citations | Mean Citations/Product |
|---|---|---|---|
| Instrument/Tool | 20 | 145 | 7.25 |
| Evaluation Report | 103 | 176 | 1.72 |
| Publication | 58 | 55 | 0.95 |
| Presentation | 65 | 0 | 0 |
| Total | 246 | 376 | 1.53 |

(F = 13.241, df = 3, p < .001, R² = 14.1%)
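The means in Table 3 and the reported test statistics can be cross-checked with a few lines of arithmetic. The sketch below recomputes citations per product from the table's counts and derives F from the reported R², assuming a one-way ANOVA across the four product types with 246 − 4 = 242 within-group degrees of freedom. That design is an assumption; the slide reports only df = 3.

```python
# Counts from Table 3: product type -> (number of products, citations).
table_3 = {
    "Instrument/Tool": (20, 145),
    "Evaluation Report": (103, 176),
    "Publication": (58, 55),
    "Presentation": (65, 0),
}

means = {kind: cites / n for kind, (n, cites) in table_3.items()}
total_products = sum(n for n, _ in table_3.values())
total_citations = sum(c for _, c in table_3.values())
overall_mean = total_citations / total_products

# Assumption: one-way ANOVA over the four product types, so
# df_between = 4 - 1 and df_within = 246 - 4.
r_squared = 0.141
df_between = len(table_3) - 1
df_within = total_products - len(table_3)
f_stat = (r_squared / df_between) / ((1 - r_squared) / df_within)

for kind, mean in means.items():
    print(f"{kind}: {mean:.2f}")
print(f"Overall: {overall_mean:.2f}")
print(f"F({df_between}, {df_within}) = {f_stat:.3f}")
```

Under these assumptions the recomputed F comes out at about 13.241, matching the reported value. The recomputed mean for evaluation reports is 176/103 ≈ 1.71, slightly below the 1.72 shown on the slide; the difference may reflect rounding or transcription.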

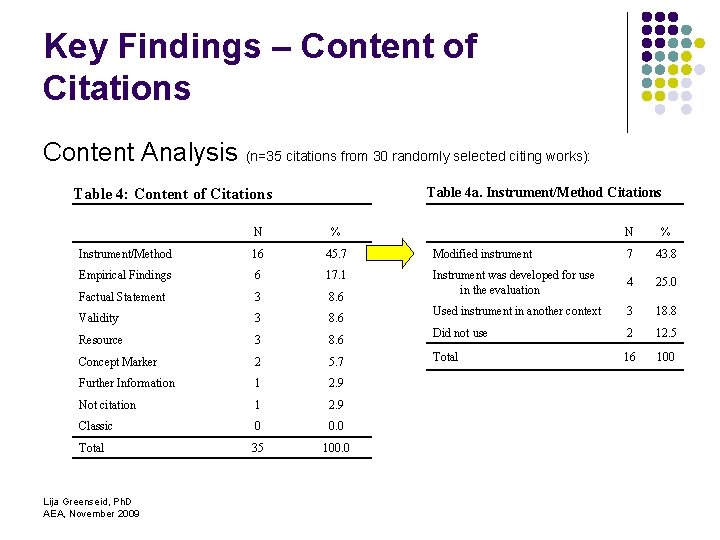

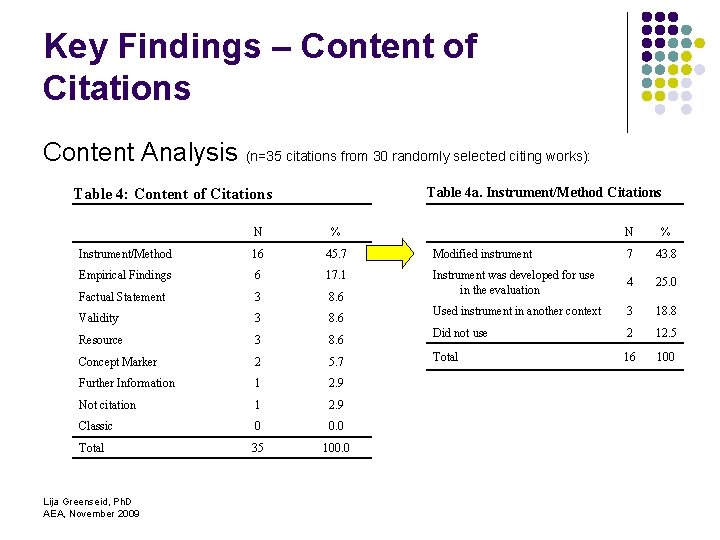

Key Findings – Content of Citations

Content Analysis (n=35 citations from 30 randomly selected citing works):

Table 4: Content of Citations

| Content | N | % |
|---|---|---|
| Instrument/Method | 16 | 45.7 |
| Empirical Findings | 6 | 17.1 |
| Factual Statement | 3 | 8.6 |
| Validity | 3 | 8.6 |
| Resource | 3 | 8.6 |
| Concept Marker | 2 | 5.7 |
| Further Information | 1 | 2.9 |
| Not citation | 1 | 2.9 |
| Classic | 0 | 0.0 |
| Total | 35 | 100.0 |

Table 4a. Instrument/Method Citations

| Instrument/Method use | N | % |
|---|---|---|
| Modified instrument | 7 | 43.8 |
| Instrument was developed for use in the evaluation | 4 | 25.0 |
| Used instrument in another context | 3 | 18.8 |
| Did not use | 2 | 12.5 |
| Total | 16 | 100 |
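The percentage column in Table 4 follows directly from the category counts. The sketch below reproduces it from the counts on the slide, assuming each value is rounded half-up to one decimal place; the rounding rule is an assumption, as the slide does not state it.

```python
from decimal import Decimal, ROUND_HALF_UP

# Category counts from Table 4 (n = 35 coded citations).
table_4 = {
    "Instrument/Method": 16, "Empirical Findings": 6, "Factual Statement": 3,
    "Validity": 3, "Resource": 3, "Concept Marker": 2,
    "Further Information": 1, "Not citation": 1, "Classic": 0,
}


def percentages(counts):
    """Return each count as a percentage of the total, rounded half-up to 1 dp."""
    total = sum(counts.values())
    return {
        label: (Decimal(100 * n) / Decimal(total)).quantize(
            Decimal("0.1"), rounding=ROUND_HALF_UP)
        for label, n in counts.items()
    }


shares = percentages(table_4)
for label, pct in shares.items():
    print(f"{label}: {pct}%")
```

Because each value is rounded separately, the rounded column sums to 100.1 rather than exactly 100.0.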

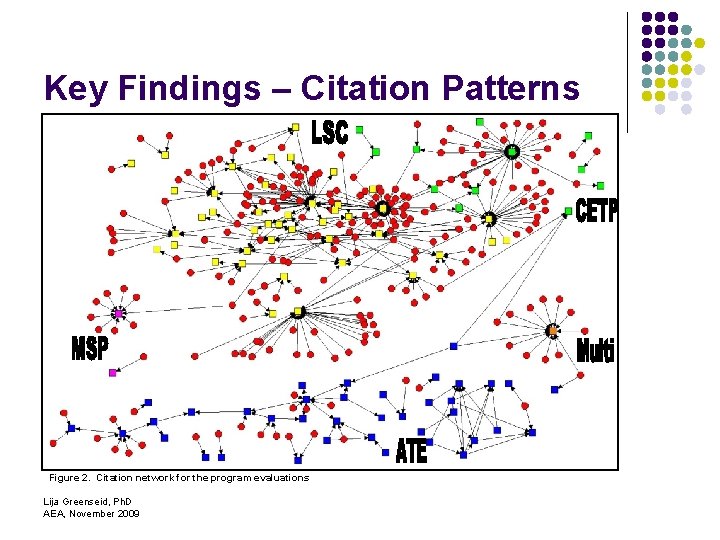

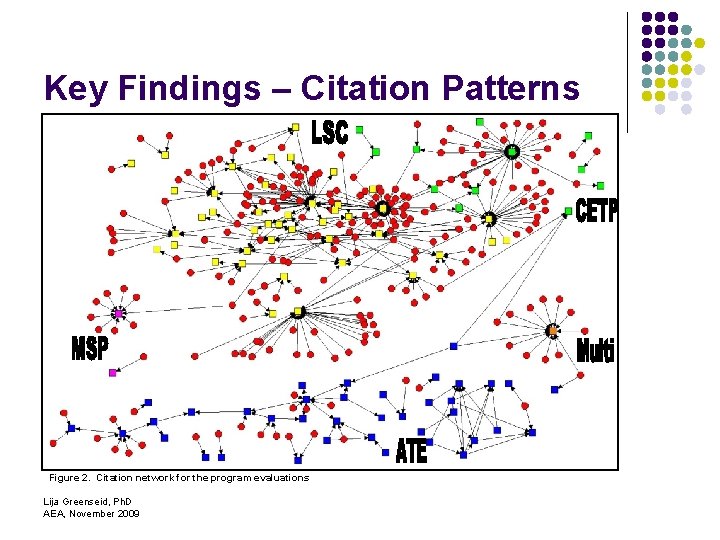

Key Findings – Citation Patterns

Figure 2. Citation network for the program evaluations
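A citation network of this kind can be represented as a set of directed links from citing works to evaluation products. The sketch below is a minimal illustration using plain dictionaries; the node names and edges are hypothetical and do not reproduce the network in the figure.

```python
from collections import defaultdict

# Hypothetical edges (citing work -> evaluation product); illustration only.
edges = [
    ("work-1", "LSC-report"),
    ("work-2", "LSC-report"),
    ("work-2", "ATE-survey"),
    ("work-3", "ATE-survey"),
    ("work-3", "LSC-report"),
]


def build_network(edges):
    """Return (citing works per product, products cited per work)."""
    cited_by = defaultdict(set)
    cites = defaultdict(set)
    for work, product in edges:
        cited_by[product].add(work)
        cites[work].add(product)
    return cited_by, cites


cited_by, cites = build_network(edges)
# Number of distinct citing works per product (the product's in-degree).
in_degree = {product: len(works) for product, works in cited_by.items()}
# Works citing more than one product link otherwise separate clusters.
bridges = sorted(w for w, products in cites.items() if len(products) > 1)

print(in_degree)  # {'LSC-report': 3, 'ATE-survey': 2}
print(bridges)    # ['work-2', 'work-3']
```

In-degree gives the citation count per product, while works that cite several products show where the citation patterns of different evaluations overlap.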

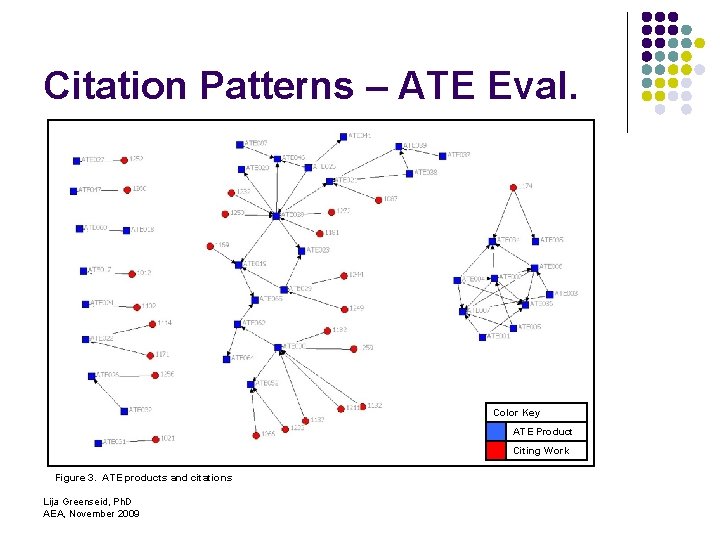

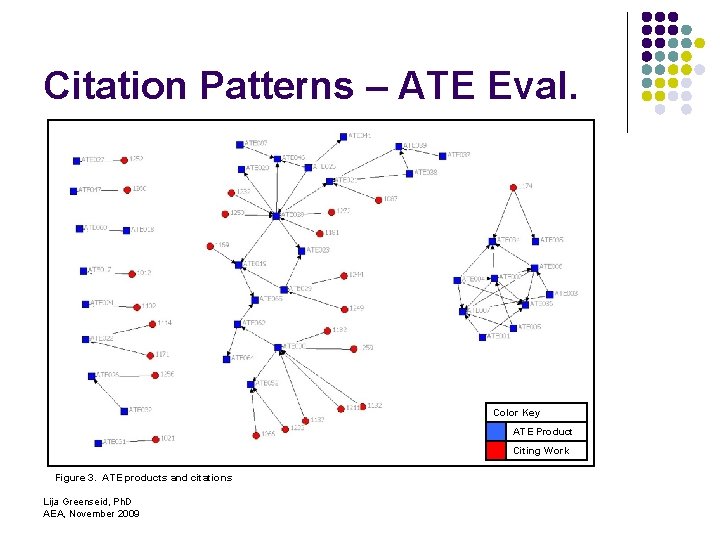

Citation Patterns – ATE Eval.

Figure 3. ATE products and citations

Color key: ATE Product; Citing Work

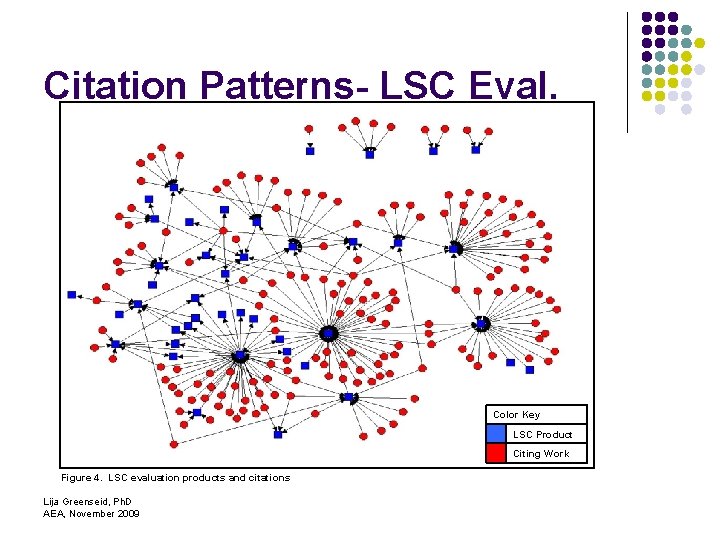

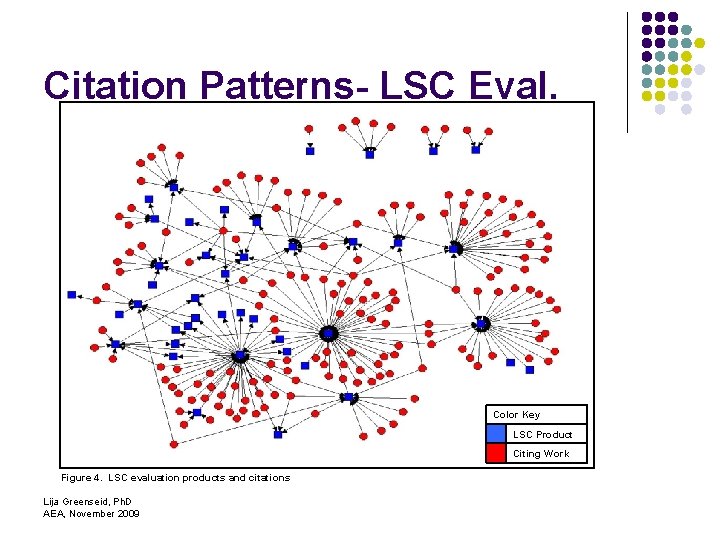

Citation Patterns – LSC Eval.

Figure 4. LSC evaluation products and citations

Color key: LSC Product; Citing Work

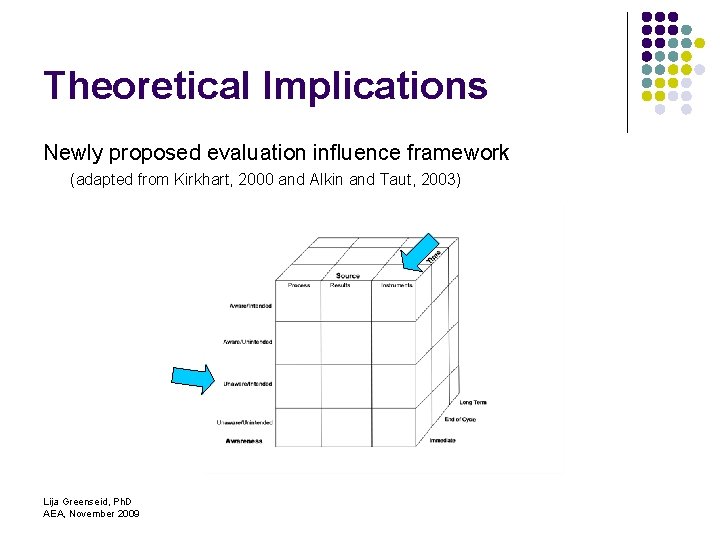

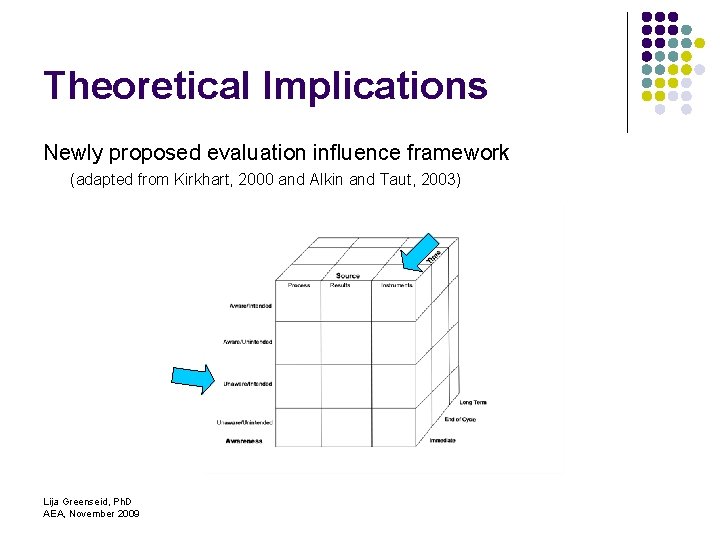

Theoretical Implications

Newly proposed evaluation influence framework (adapted from Kirkhart, 2000 and Alkin and Taut, 2003)

Conclusions

Citation analysis is useful to a limited extent

- Helpful for comparing patterns of influence
- Best suited for comparative study of large-scale evaluations
- Measures only one limited type of evaluation influence – arising from products
- Citation counts underestimate true levels
- Highly labor-intensive process for collecting and managing data

Contact Information

Lija Greenseid, Ph.D.
Senior Evaluator
Professional Data Analysts, Inc.
www.PDAstats.com
Minneapolis, MN
lija@pdastats.com