Use of a High Level Language in High Performance Biomechanics Simulations

- Slides: 24

Use of a High Level Language in High Performance Biomechanics Simulations

Katherine Yelick, Armando Solar-Lezama, Jimmy Su, Dan Bonachea, Amir Kamil

U.C. Berkeley and LBNL

Collaborators: S. Graham, P. Hilfinger, P. Colella, K. Datta, E. Givelberg, N. Mai, T. Wen, C. Bell, P. Hargrove, J. Duell, C. Iancu, W. Chen, P. Husbands, M. Welcome, R. Nishtala

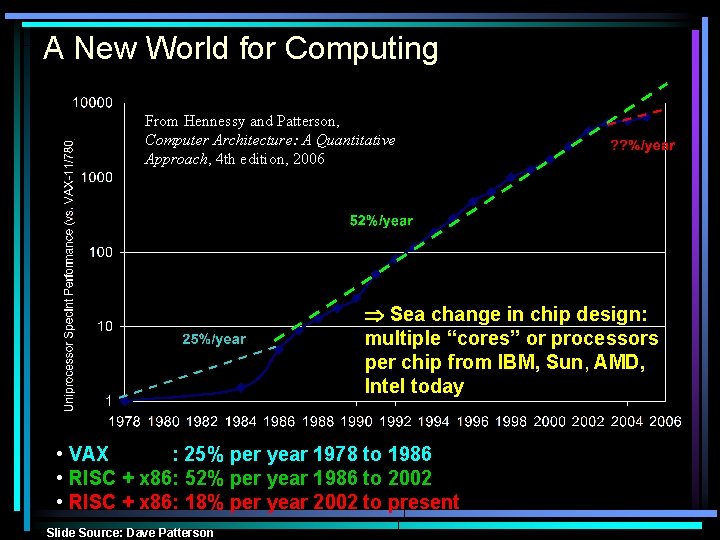

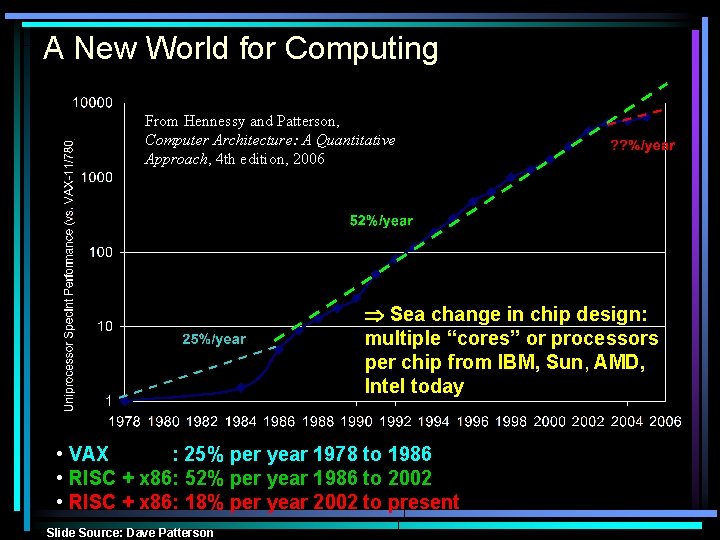

A New World for Computing

From Hennessy and Patterson, Computer Architecture: A Quantitative Approach, 4th edition, 2006

Sea change in chip design: multiple “cores” or processors per chip from IBM, Sun, AMD, Intel today

- VAX: 25% per year 1978 to 1986
- RISC + x86: 52% per year 1986 to 2002
- RISC + x86: 18% per year 2002 to present

Slide Source: Dave Patterson

Why Is the Computer Industry Worried?

- For 20 years, hardware designers have taken care of performance
- Now they will produce only parallel processors
  - Double number of cores every 18-24 months
  - Uniprocessor performance relatively flat
- Performance is a software problem
- All software will be parallel
- Programming options:
  - Libraries: OpenMP (scalability?), MPI (usability?)
  - Languages: parallel C, Fortran, Java, Matlab

Titanium: High Level Language for Scientific Computing

- Titanium is an object-oriented language based on Java
- Additional language support
  - Multidimensional arrays
  - Value classes (Complex type)
  - Fast memory management
  - Scalable parallelism model with locality
- Implementation strategy
  - Titanium compiler translates Titanium to C with calls to communication library (GASNet), no JVM
  - Portable across machines with C compilers
  - Cross-language calls to C/F/MPI possible

Joint work with Titanium group

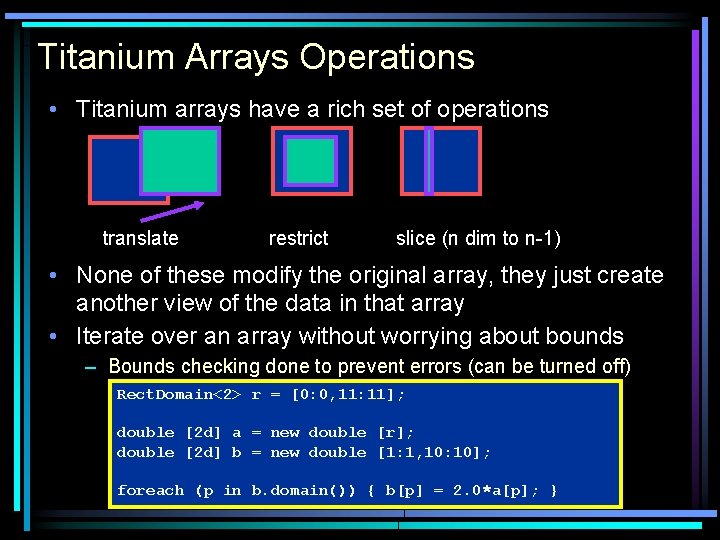

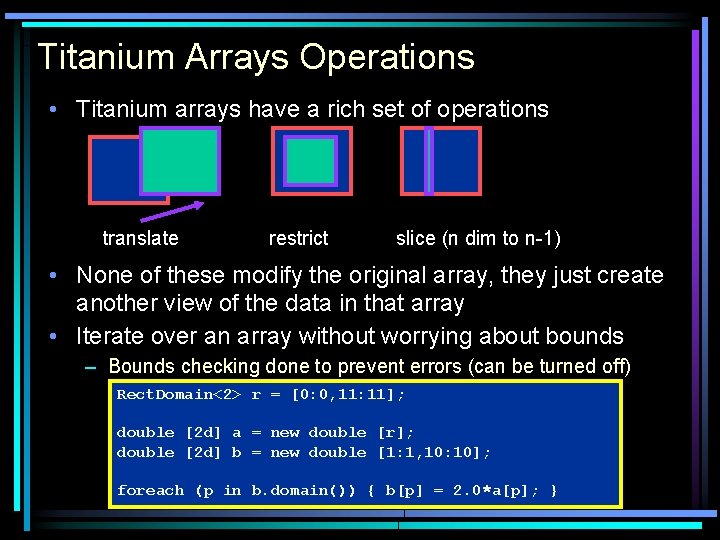

Titanium Array Operations

- Titanium arrays have a rich set of operations: translate, restrict, slice (n dim to n-1)
- None of these modify the original array; they just create another view of the data in that array
- Iterate over an array without worrying about bounds
  - Bounds checking done to prevent errors (can be turned off)

Example:

    RectDomain<2> r = [0:0, 11:11];
    double [2d] a = new double [r];
    double [2d] b = new double [1:1, 10:10];
    foreach (p in b.domain()) { b[p] = 2.0*a[p]; }
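The array slide above can be mimicked outside Titanium. The following is a minimal Python sketch, not Titanium's implementation: `RectDomain` and `DomainArray` are hypothetical stand-ins, and it assumes the slide's `[0:0, 11:11]` means the rectangle with corners (0,0) and (11,11). It shows iteration over a domain with bounds checking, and a `restrict` view that shares storage with the original array.

```python
from itertools import product

class RectDomain:
    """Hypothetical inclusive rectangle of index points, e.g. (0,0)..(11,11)."""
    def __init__(self, lo, hi):
        self.lo, self.hi = tuple(lo), tuple(hi)

    def __iter__(self):
        # Visit every index point in the rectangle.
        return product(*(range(l, h + 1) for l, h in zip(self.lo, self.hi)))

    def __contains__(self, p):
        return all(l <= x <= h for l, x, h in zip(self.lo, p, self.hi))

class DomainArray:
    """Hypothetical array indexed by the points of a RectDomain."""
    def __init__(self, domain, fill=0.0, storage=None):
        self.domain = domain
        # A view passes in the parent's storage, so no data is copied.
        self.storage = storage if storage is not None else {p: fill for p in domain}

    def _check(self, p):
        if p not in self.domain:
            raise IndexError(f"{p} is outside the array's domain")

    def __getitem__(self, p):
        self._check(p)
        return self.storage[p]

    def __setitem__(self, p, value):
        self._check(p)
        self.storage[p] = value

    def restrict(self, sub):
        # Another view of the same data, limited to a smaller domain.
        if sub.lo not in self.domain or sub.hi not in self.domain:
            raise ValueError("restricted domain must lie inside the array")
        return DomainArray(sub, storage=self.storage)

a = DomainArray(RectDomain((0, 0), (11, 11)), fill=1.5)
b = DomainArray(RectDomain((1, 1), (10, 10)))
for p in b.domain:           # plays the role of: foreach (p in b.domain())
    b[p] = 2.0 * a[p]

interior = a.restrict(b.domain)
interior[(1, 1)] = 7.0       # writes through to a, since the view shares storage
```

Because the loop ranges over `b`'s domain, which lies inside `a`'s, no index can fall out of bounds; the check in `_check` only fires on a programming error.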

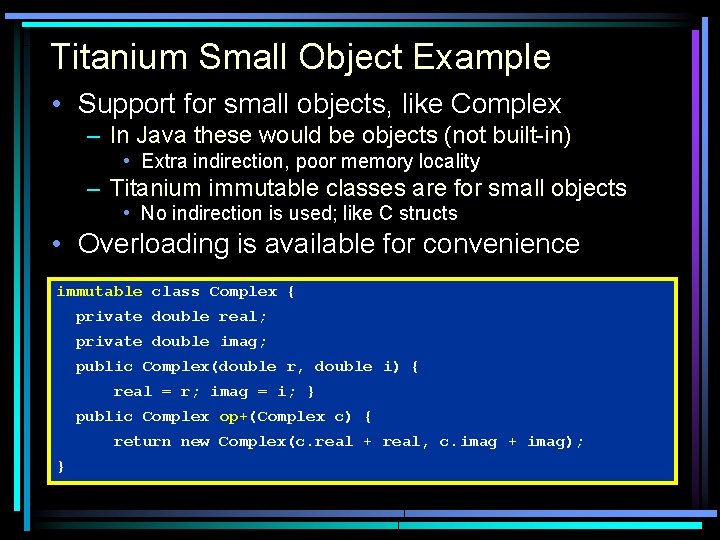

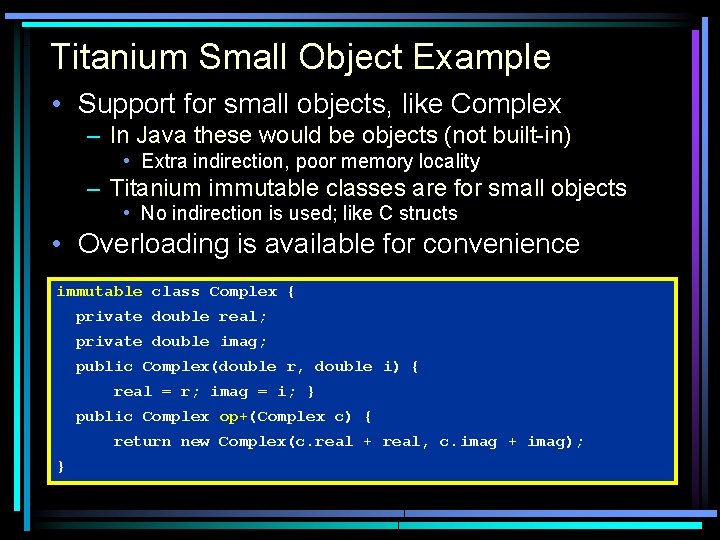

Titanium Small Object Example

- Support for small objects, like Complex
  - In Java these would be objects (not built-in)
    - Extra indirection, poor memory locality
  - Titanium immutable classes are for small objects
    - No indirection is used; like C structs
  - Overloading is available for convenience

Example:

    immutable class Complex {
      private double real;
      private double imag;
      public Complex(double r, double i) { real = r; imag = i; }
      public Complex op+(Complex c) {
        return new Complex(c.real + real, c.imag + imag);
      }
    }
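For readers without a Titanium compiler, a rough Python analogue of the immutable `Complex` class is sketched below. It reproduces only two of the properties on the slide, immutability and operator overloading; Python still allocates each instance on the heap, so the struct-like, indirection-free layout that Titanium provides is not captured here.

```python
from dataclasses import dataclass

@dataclass(frozen=True)       # frozen: fields cannot be reassigned after construction
class Complex:
    real: float
    imag: float

    def __add__(self, other):
        # Counterpart of Titanium's op+: returns a new value, never mutates.
        return Complex(self.real + other.real, self.imag + other.imag)

z = Complex(1.0, 2.0) + Complex(0.5, -2.0)
```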

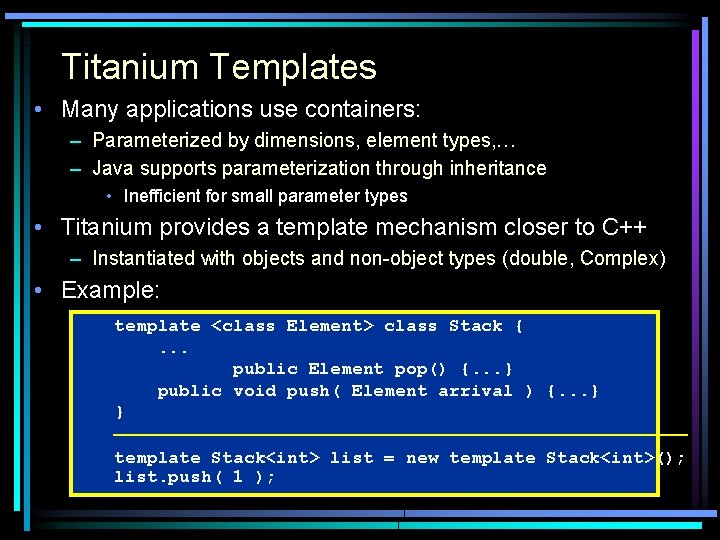

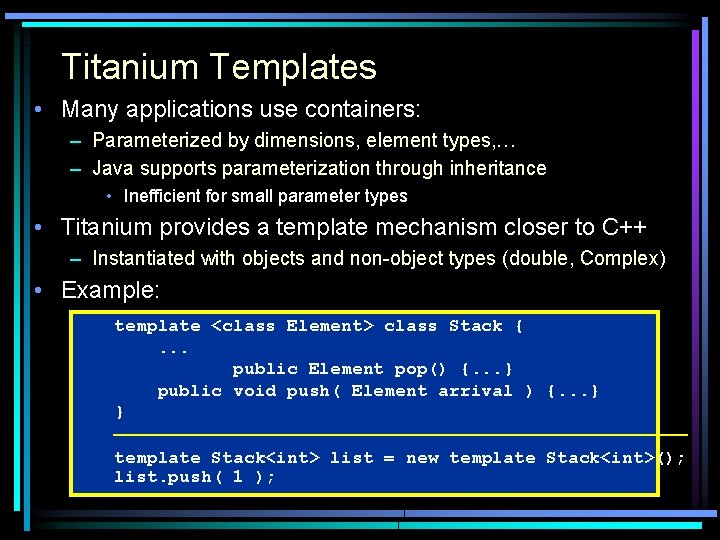

Titanium Templates

- Many applications use containers:
  - Parameterized by dimensions, element types, …
  - Java supports parameterization through inheritance
    - Inefficient for small parameter types
- Titanium provides a template mechanism closer to C++
  - Instantiated with objects and non-object types (double, Complex)

Example:

    template <class Element> class Stack {
      ...
      public Element pop() {...}
      public void push( Element arrival ) {...}
    }

    template Stack<int> list = new template Stack<int>();
    list.push( 1 );

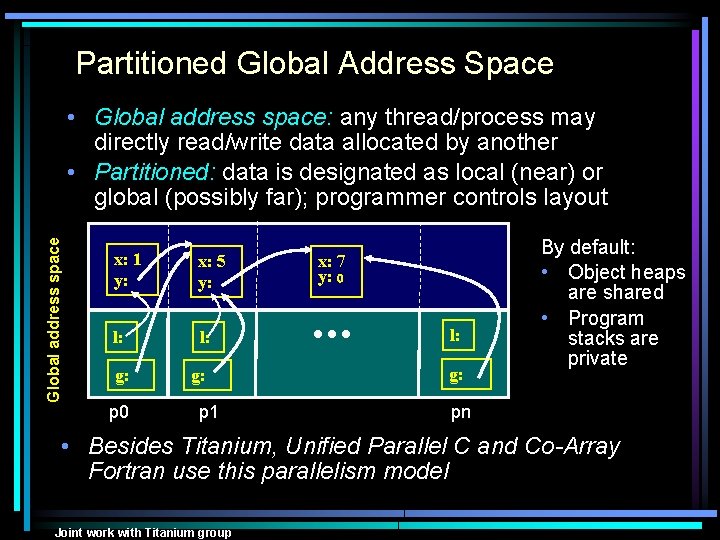

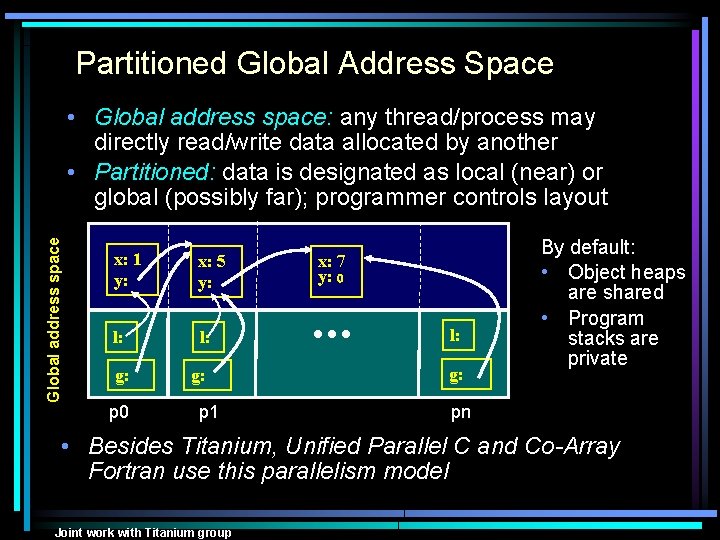

Partitioned Global Address Space

- Global address space: any thread/process may directly read/write data allocated by another
- Partitioned: data is designated as local (near) or global (possibly far); programmer controls layout
- By default:
  - Object heaps are shared
  - Program stacks are private
- Besides Titanium, Unified Parallel C and Co-Array Fortran use this parallelism model

[Figure: global address space spanning processes p0, p1, …, pn; each holds objects with fields x and y (x: 1, x: 5, x: 7) plus local (l) and global (g) pointers]

Joint work with Titanium group
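The local/global distinction can be illustrated with a small single-process simulation. This is a sketch under stated assumptions, not how Titanium or GASNet represent pointers: `GlobalPtr` and `PGAS` are hypothetical names, each rank's shared heap is a plain dictionary, and a counter stands in for the network traffic that a remote access would cause on a real machine.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GlobalPtr:
    owner: int   # rank whose partition holds the object
    key: str     # name of the object within that partition

class PGAS:
    """Hypothetical simulated address space with one heap partition per rank."""
    def __init__(self, nranks):
        self.heaps = [dict() for _ in range(nranks)]
        self.remote_accesses = 0   # stand-in for network gets/puts

    def alloc(self, rank, key, value):
        self.heaps[rank][key] = value
        return GlobalPtr(rank, key)

    def read(self, me, ptr):
        # Any rank may read any object; only the cost differs.
        if ptr.owner != me:
            self.remote_accesses += 1
        return self.heaps[ptr.owner][ptr.key]

    def write(self, me, ptr, value):
        if ptr.owner != me:
            self.remote_accesses += 1
        self.heaps[ptr.owner][ptr.key] = value

space = PGAS(nranks=2)
x0 = space.alloc(0, "x", 1)      # lives in rank 0's partition
x1 = space.alloc(1, "x", 5)      # lives in rank 1's partition

near = space.read(0, x0)         # local: no communication counted
far = space.read(0, x1)          # global: counted as one remote access
```

The programmer-visible point is that both reads use the same syntax; the partitioning only determines which of them is cheap.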

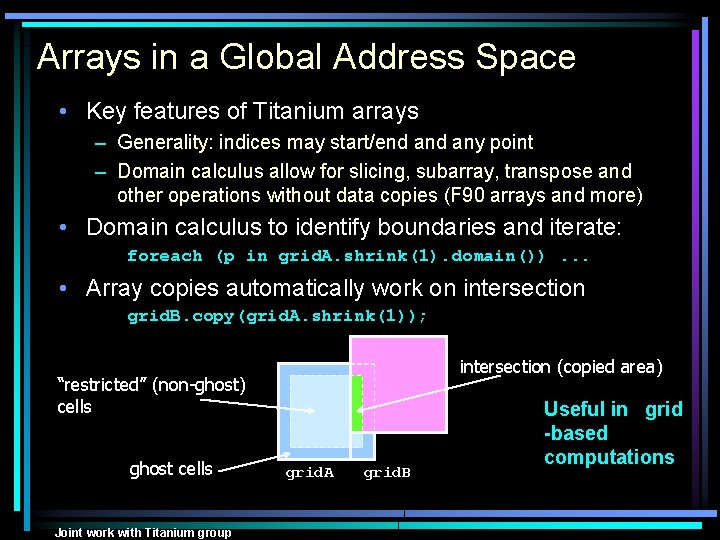

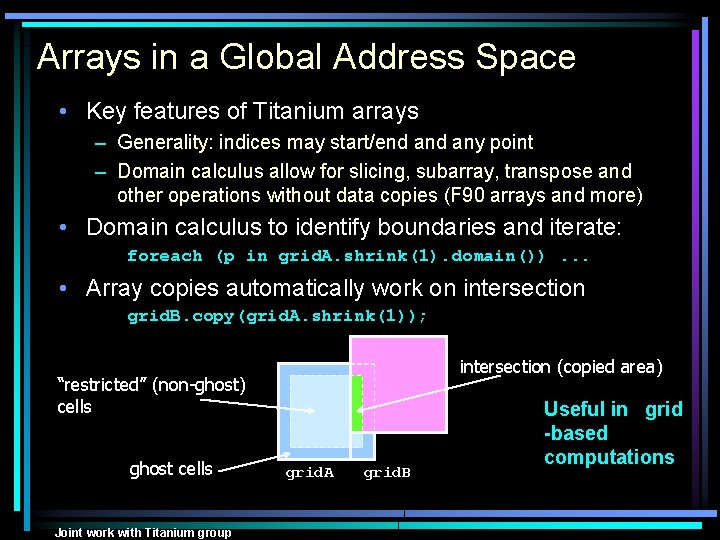

Arrays in a Global Address Space

- Key features of Titanium arrays
  - Generality: indices may start/end at any point
  - Domain calculus allows for slicing, subarray, transpose and other operations without data copies (F90 arrays and more)
- Domain calculus to identify boundaries and iterate: `foreach (p in gridA.shrink(1).domain()) ...`
- Array copies automatically work on intersection: `gridB.copy(gridA.shrink(1));`
- Useful in grid-based computations

[Figure: gridA and gridB, showing ghost cells, “restricted” (non-ghost) cells, and the intersection (copied area)]

Joint work with Titanium group
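The ghost-cell exchange described above comes down to three domain operations: shrink, intersect, and copy over the intersection. The Python sketch below illustrates that idea on two overlapping 2D grids. `Rect`, `Grid`, and `copy_from` are hypothetical names chosen for this example (Titanium's own call is `copy`), and both grids live in one process here rather than on different processors.

```python
from itertools import product

class Rect:
    """Hypothetical inclusive index rectangle."""
    def __init__(self, lo, hi):
        self.lo, self.hi = tuple(lo), tuple(hi)

    def shrink(self, k):
        # Drop k layers of cells from every side.
        return Rect([l + k for l in self.lo], [h - k for h in self.hi])

    def intersect(self, other):
        return Rect([max(a, b) for a, b in zip(self.lo, other.lo)],
                    [min(a, b) for a, b in zip(self.hi, other.hi)])

    def points(self):
        # Empty when the rectangle is degenerate (some hi < lo).
        return product(*(range(l, h + 1) for l, h in zip(self.lo, self.hi)))

class Grid:
    def __init__(self, domain, fill=0.0, cells=None):
        self.domain = domain
        self.cells = cells if cells is not None else {p: fill for p in domain.points()}

    def shrink(self, k):
        # View of the non-ghost cells; shares storage with the full grid.
        return Grid(self.domain.shrink(k), cells=self.cells)

    def copy_from(self, src):
        # Copy only where the two domains overlap; return the cell count.
        overlap = self.domain.intersect(src.domain)
        count = 0
        for p in overlap.points():
            self.cells[p] = src.cells[p]
            count += 1
        return count

grid_a = Grid(Rect((0, 0), (5, 5)), fill=1.0)   # interior is (1,1)..(4,4)
grid_b = Grid(Rect((4, 0), (9, 5)), fill=0.0)   # column x=4 is a ghost column of grid_b
copied = grid_b.copy_from(grid_a.shrink(1))     # fills grid_b's ghosts from grid_a's interior
```

No index arithmetic appears at the call site; the intersection determines which cells move, which is the convenience the slide attributes to the domain calculus.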

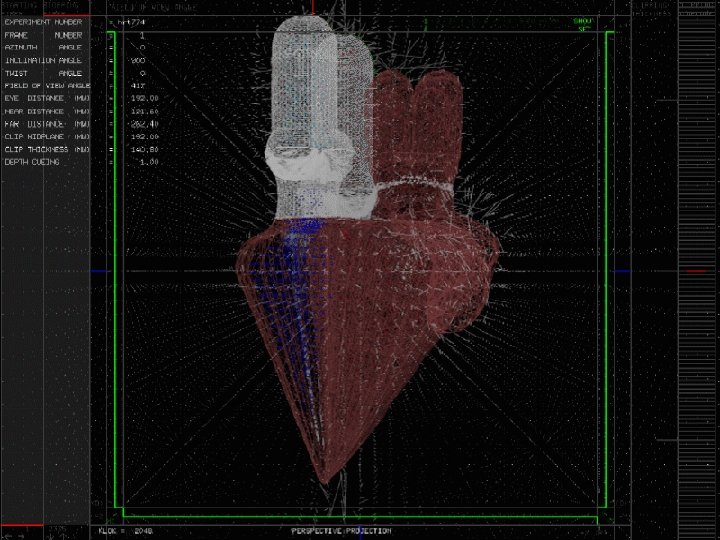

Immersed Boundaries in Biomechanics

- Fluid flow within the body is one of the major challenges, e.g.,
  - Blood through the heart
  - Coagulation of platelets in clots
  - Effect of sound waves on the inner ear
  - Movement of bacteria
- A key problem is modeling an elastic structure immersed in a fluid
  - Irregular moving boundaries
  - Wide range of scales
  - Vary by structure, connectivity, viscosity, external forces, internally-generated forces, etc.

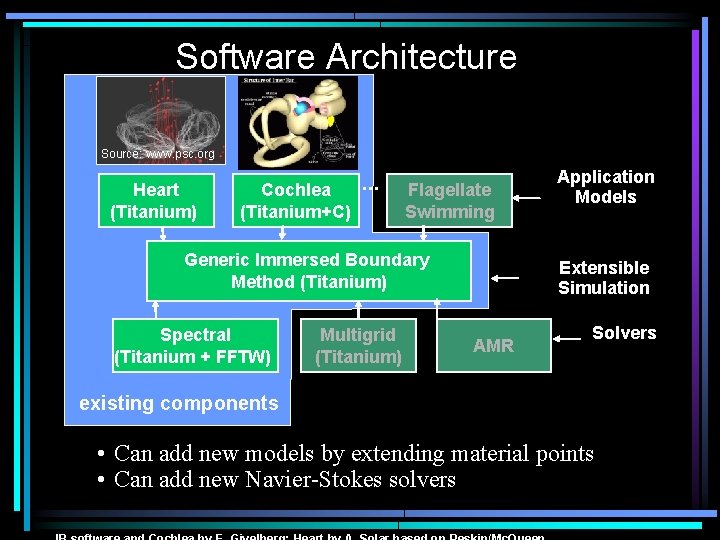

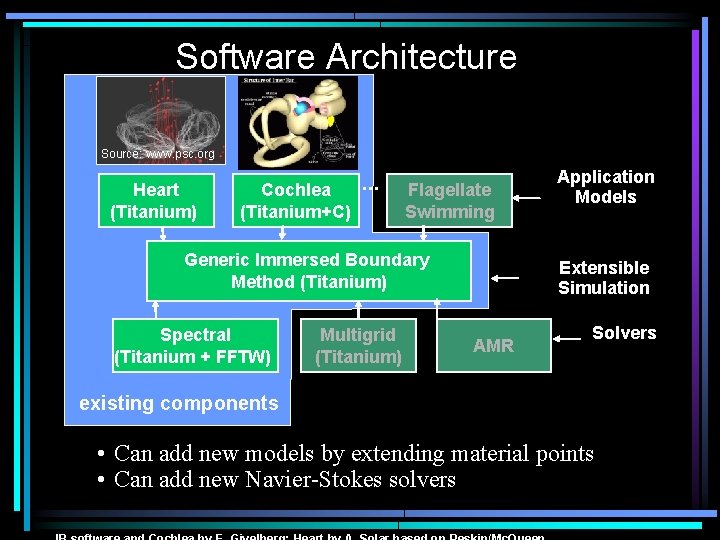

Software Architecture

- Application models: Heart (Titanium), Cochlea (Titanium+C), Flagellate Swimming, …
- Extensible simulation: Generic Immersed Boundary Method (Titanium)
- Solvers: Spectral (Titanium + FFTW), Multigrid (Titanium), AMR
- Can add new models by extending material points
- Can add new Navier-Stokes solvers

[Diagram legend: existing components]

Source: www.psc.org

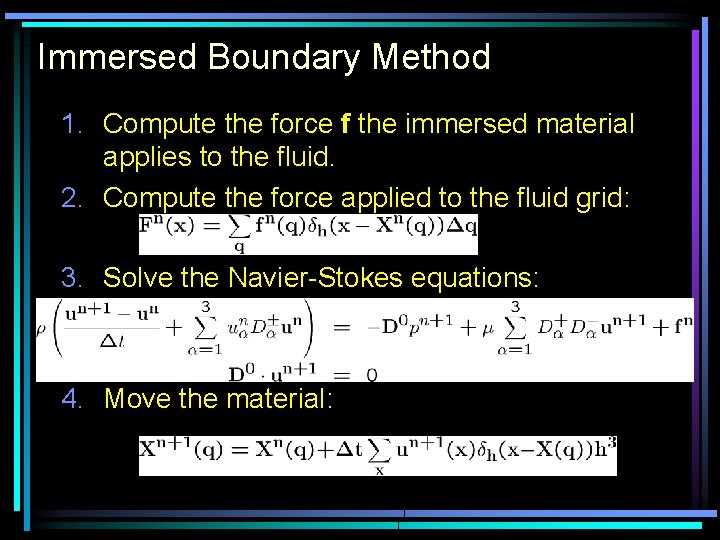

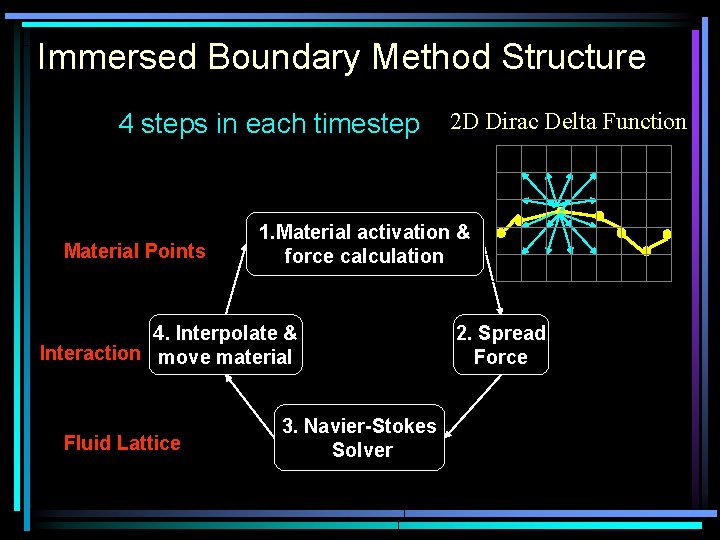

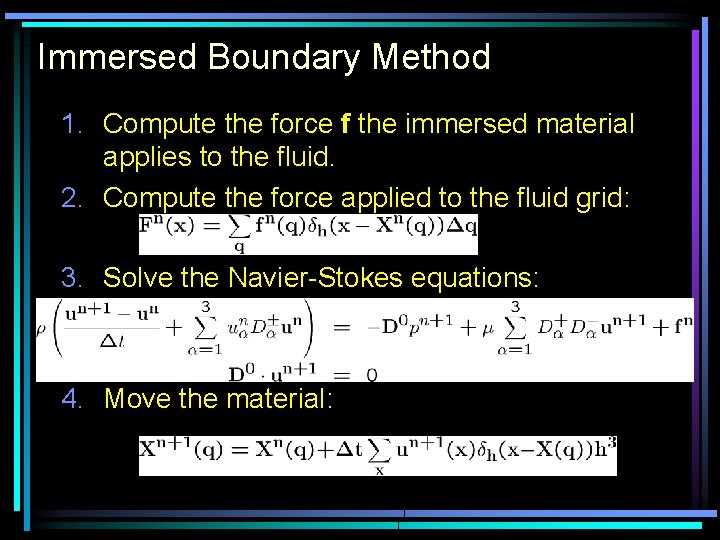

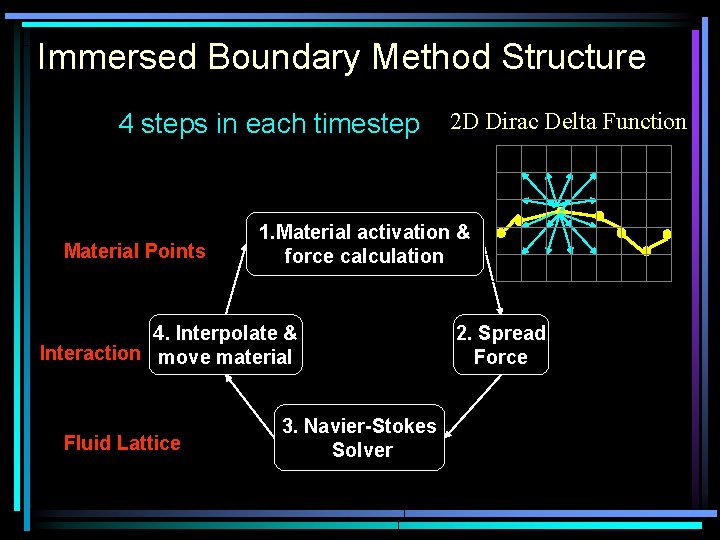

Immersed Boundary Method

1. Compute the force f the immersed material applies to the fluid.
2. Compute the force applied to the fluid grid.
3. Solve the Navier-Stokes equations.
4. Move the material.
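The equations that accompanied steps 2 to 4 on this slide did not survive extraction. For reference, the standard continuous formulation of the immersed boundary method is given below; the notation is an assumption, not necessarily what the slide showed. Here $\mathbf{X}(s,t)$ is the position of the material point with label $s$, $\mathbf{f}(s,t)$ is the force density computed in step 1 from the material model, $\mathbf{u}$ and $p$ are the fluid velocity and pressure, and $\rho$ and $\mu$ are the fluid density and viscosity.

$$
\mathbf{F}(\mathbf{x},t) = \int \mathbf{f}(s,t)\,\delta\big(\mathbf{x}-\mathbf{X}(s,t)\big)\,ds
$$

$$
\rho\left(\frac{\partial \mathbf{u}}{\partial t} + \mathbf{u}\cdot\nabla\mathbf{u}\right) = -\nabla p + \mu\,\Delta\mathbf{u} + \mathbf{F},
\qquad \nabla\cdot\mathbf{u} = 0
$$

$$
\frac{\partial \mathbf{X}}{\partial t}(s,t) = \mathbf{u}\big(\mathbf{X}(s,t),t\big) = \int \mathbf{u}(\mathbf{x},t)\,\delta\big(\mathbf{x}-\mathbf{X}(s,t)\big)\,d\mathbf{x}
$$

The same Dirac delta function appears in the first and third equations: it spreads material forces onto the fluid grid in step 2 and interpolates the fluid velocity back to the material in step 4.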

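Immersed Boundary Method Structure

4 steps in each timestep:

1. Material activation & force calculation (material points)
2. Spread force (material points to fluid lattice)
3. Navier-Stokes solver (fluid lattice)
4. Interpolate & move material (fluid lattice to material points)

[Diagram: material points and fluid lattice interact through a 2D Dirac delta function]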
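The four-step loop can be made concrete with a deliberately small example. The Python sketch below is an illustration under heavy simplifying assumptions, not the Titanium code: it is 1D and periodic rather than 2D or 3D, it uses a two-point hat function as the discrete delta, the material is a single point on a spring (`anchor`, `k` are hypothetical parameters), and step 3 is a placeholder velocity update rather than a Navier-Stokes solve.

```python
import math

N = 16
h = 1.0 / N                      # periodic 1D fluid lattice on [0, 1)

def stencil(X):
    """Lattice indices and hat-function weights surrounding position X."""
    s = X / h
    j = math.floor(s)
    w1 = s - j
    return [(j % N, 1.0 - w1), ((j + 1) % N, w1)]

def spread(X, f):
    """Step 2: spread a point force f at X onto the lattice as a force density."""
    F = [0.0] * N
    for i, w in stencil(X):
        F[i] += f * w / h        # w / h is the discrete delta evaluated at lattice point i
    return F

def interpolate(u, X):
    """Step 4 (first half): lattice velocity interpolated to the material point."""
    return sum(u[i] * w for i, w in stencil(X))

def timestep(X, u, anchor, k=1.0, rho=1.0, dt=0.01):
    f = -k * (X - anchor)                               # 1. material force (spring law)
    F = spread(X, f)                                    # 2. spread force to the lattice
    u = [ui + dt * Fi / rho for ui, Fi in zip(u, F)]    # 3. placeholder, NOT Navier-Stokes
    X = X + dt * interpolate(u, X)                      # 4. interpolate and move material
    return X, u

X1, u1 = timestep(0.5, [0.0] * N, anchor=0.4)
```

Two properties carry over to the real method: spreading conserves total force (the lattice force density times `h` sums to `f`), and interpolation reproduces a constant velocity field exactly. In a parallel code, `spread` and `interpolate` are the scatter and gather operations between irregular material points and the regular fluid lattice.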

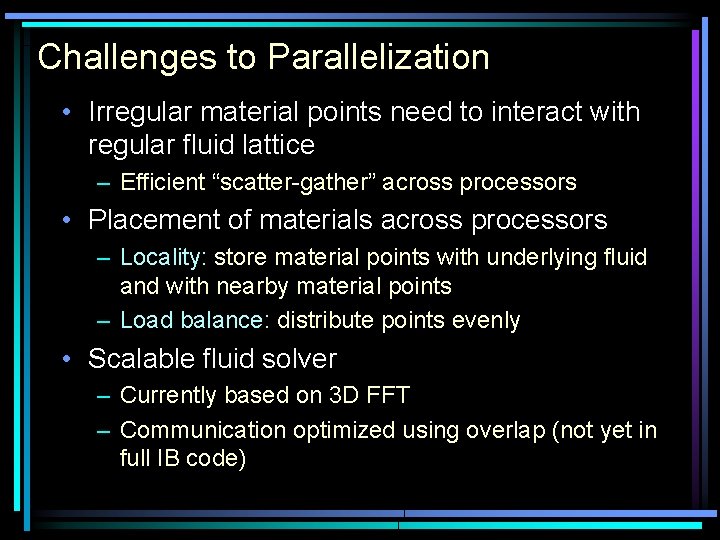

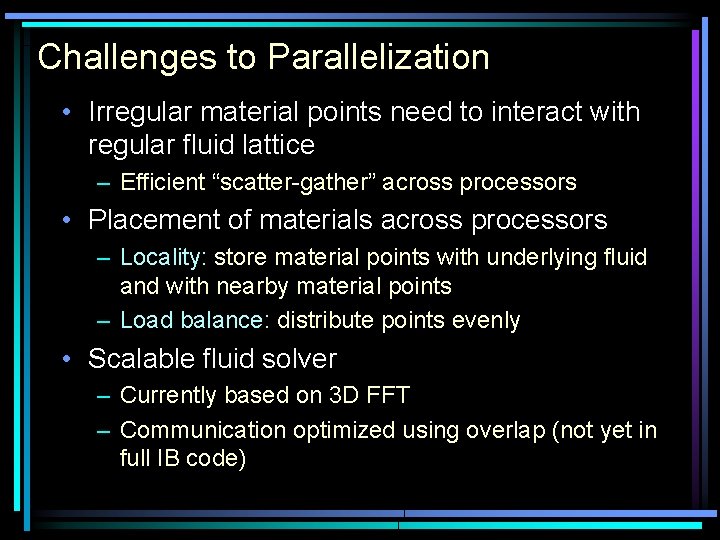

Challenges to Parallelization

- Irregular material points need to interact with regular fluid lattice
  - Efficient “scatter-gather” across processors
- Placement of materials across processors
  - Locality: store material points with underlying fluid and with nearby material points
  - Load balance: distribute points evenly
- Scalable fluid solver
  - Currently based on 3D FFT
  - Communication optimized using overlap (not yet in full IB code)

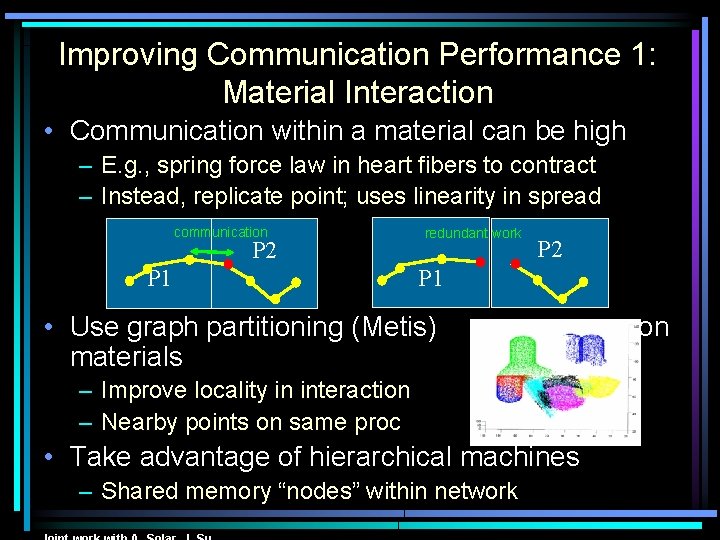

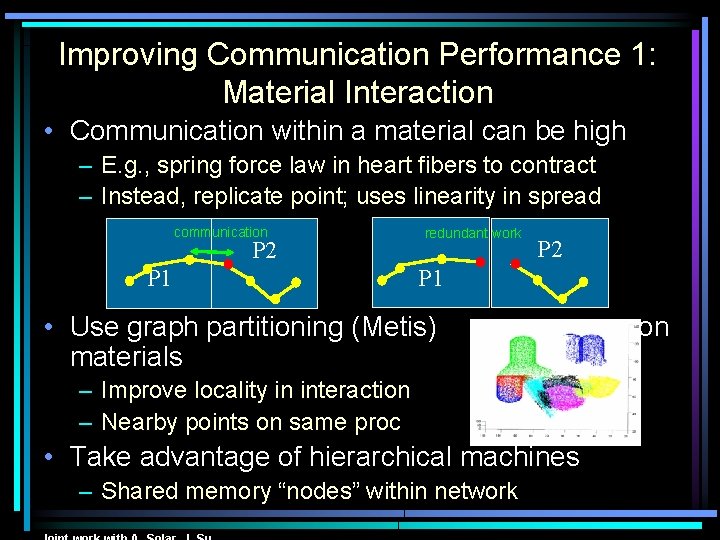

Improving Communication Performance 1: Material Interaction

- Communication within a material can be high
  - E.g., spring force law in heart fibers to contract
  - Instead, replicate point; uses linearity in spread
- Use graph partitioning (Metis) on materials
  - Improve locality in interaction
  - Nearby points on same proc
- Take advantage of hierarchical machines
  - Shared memory “nodes” within network

[Diagram: processors P1 and P2, contrasting communication with redundant work]

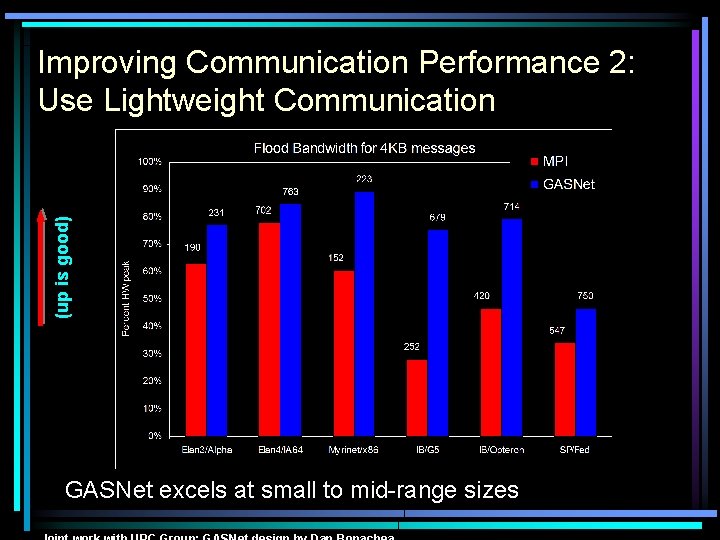

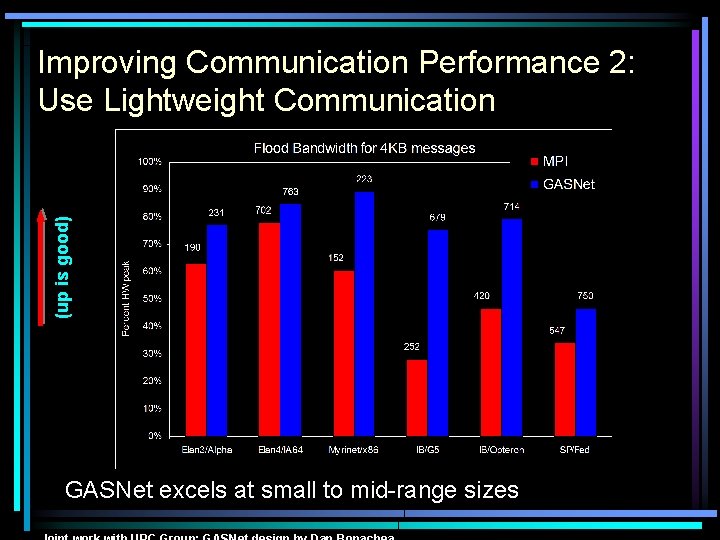

Improving Communication Performance 2: Use Lightweight Communication

- GASNet excels at small to mid-range sizes

[Chart note: up is good]

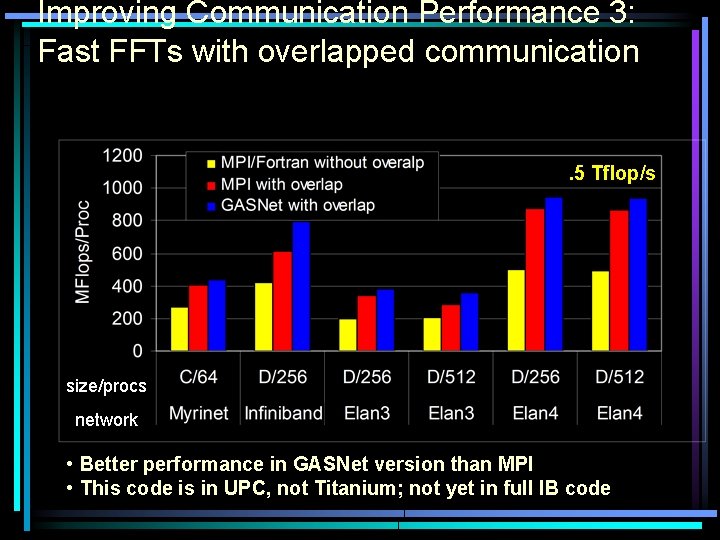

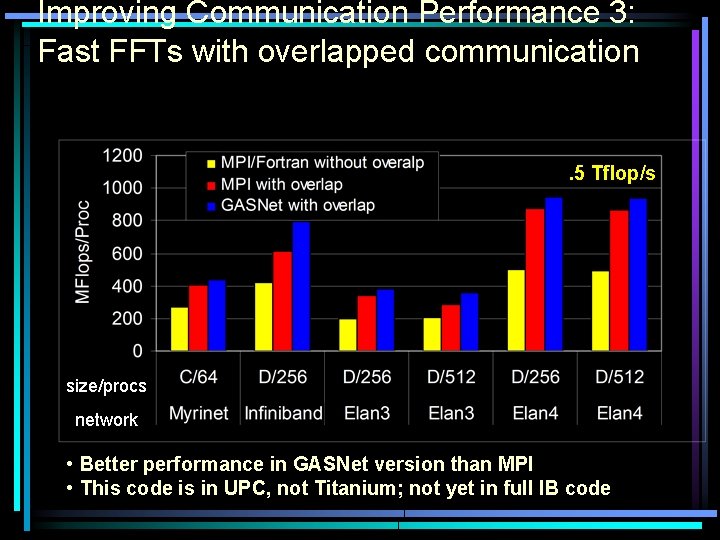

Improving Communication Performance 3: Fast FFTs with overlapped communication

- Better performance in GASNet version than MPI
- This code is in UPC, not Titanium; not yet in full IB code

[Chart: performance by problem size/procs and network; annotated .5 Tflop/s]

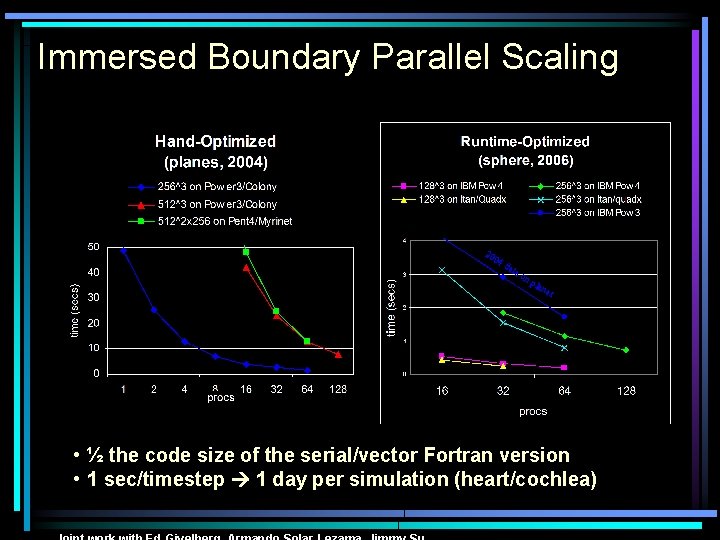

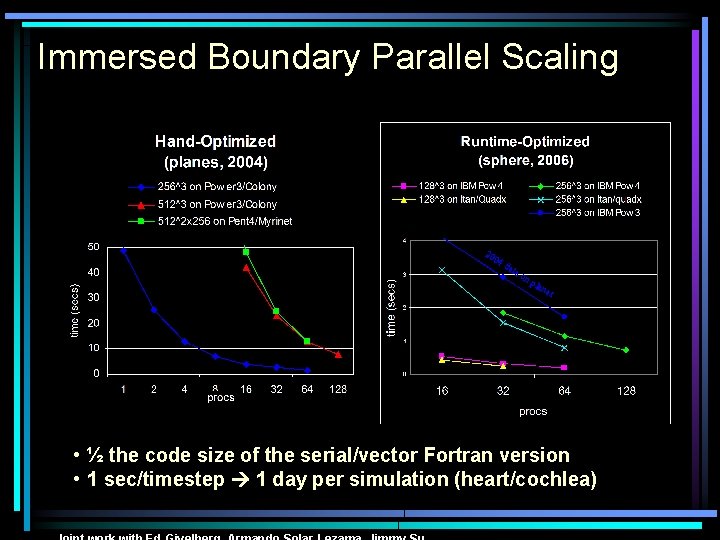

Immersed Boundary Parallel Scaling

- ½ the code size of the serial/vector Fortran version
- 1 sec/timestep → 1 day per simulation (heart/cochlea)

[Chart annotation: 2004 data on planes]

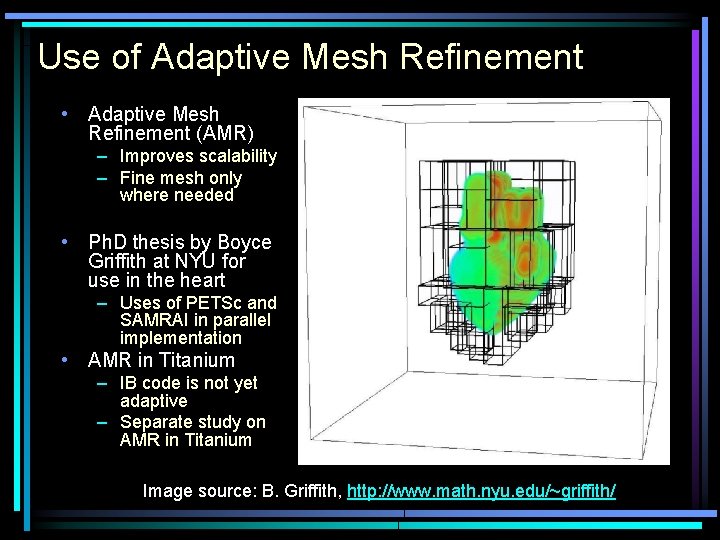

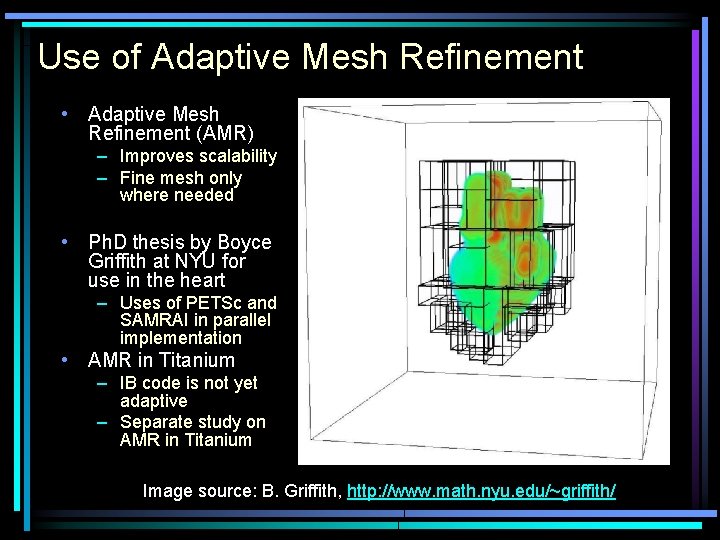

Use of Adaptive Mesh Refinement

- Adaptive Mesh Refinement (AMR)
  - Improves scalability
  - Fine mesh only where needed
- PhD thesis by Boyce Griffith at NYU for use in the heart
  - Uses PETSc and SAMRAI in parallel implementation
- AMR in Titanium
  - IB code is not yet adaptive
  - Separate study on AMR in Titanium

Image source: B. Griffith, http://www.math.nyu.edu/~griffith/

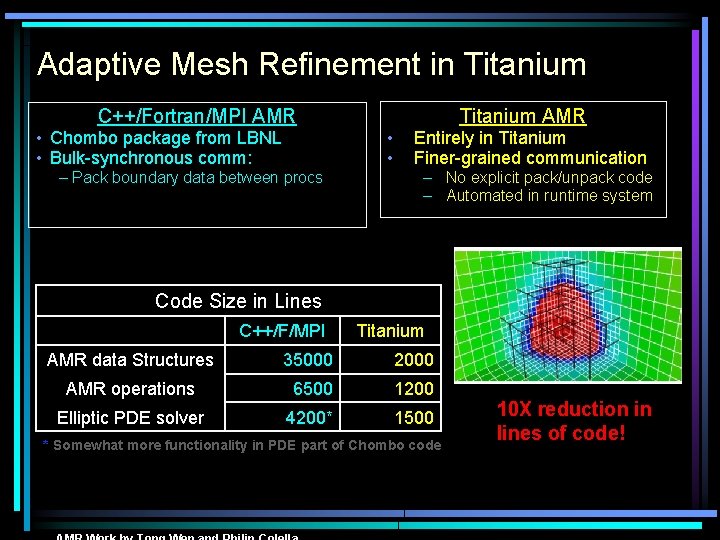

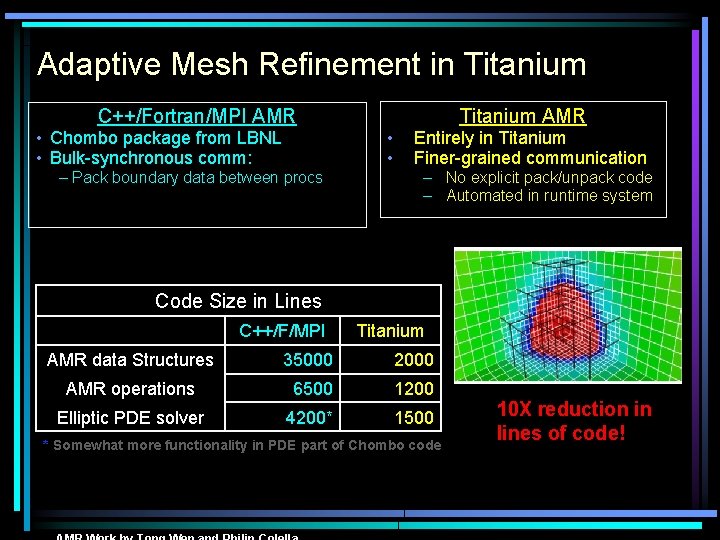

Adaptive Mesh Refinement in Titanium

C++/Fortran/MPI AMR

- Chombo package from LBNL
- Bulk-synchronous comm:
  - Pack boundary data between procs

Titanium AMR

- Entirely in Titanium
- Finer-grained communication
  - No explicit pack/unpack code
  - Automated in runtime system

Code Size in Lines

| | C++/F/MPI | Titanium |
|---|---|---|
| AMR data structures | 35000 | 2000 |
| AMR operations | 6500 | 1200 |
| Elliptic PDE solver | 4200* | 1500 |

\* Somewhat more functionality in PDE part of Chombo code

10X reduction in lines of code!
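The "10X" figure can be checked directly from the line counts on the slide. The short Python calculation below uses only those numbers; the dictionary names are illustrative.

```python
# Line counts as given on the slide (Chombo vs. Titanium AMR).
chombo = {"AMR data structures": 35000, "AMR operations": 6500, "Elliptic PDE solver": 4200}
titanium = {"AMR data structures": 2000, "AMR operations": 1200, "Elliptic PDE solver": 1500}

total_chombo = sum(chombo.values())
total_titanium = sum(titanium.values())
overall_ratio = total_chombo / total_titanium

# Per-component reduction factors, to see where the savings come from.
per_component = {name: chombo[name] / titanium[name] for name in chombo}
```

The totals are 45,700 and 4,700 lines, a ratio of about 9.7. Most of the reduction comes from the data structures (17.5x), while the PDE solver shrinks by only 2.8x, and the slide notes that the Chombo solver has somewhat more functionality.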

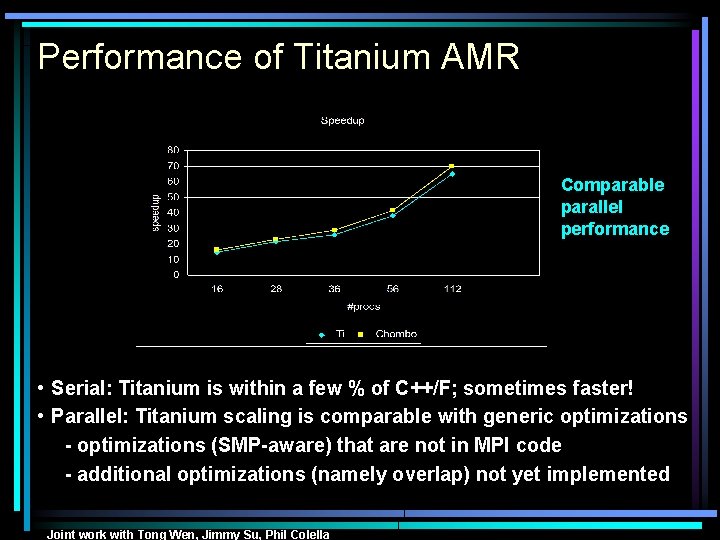

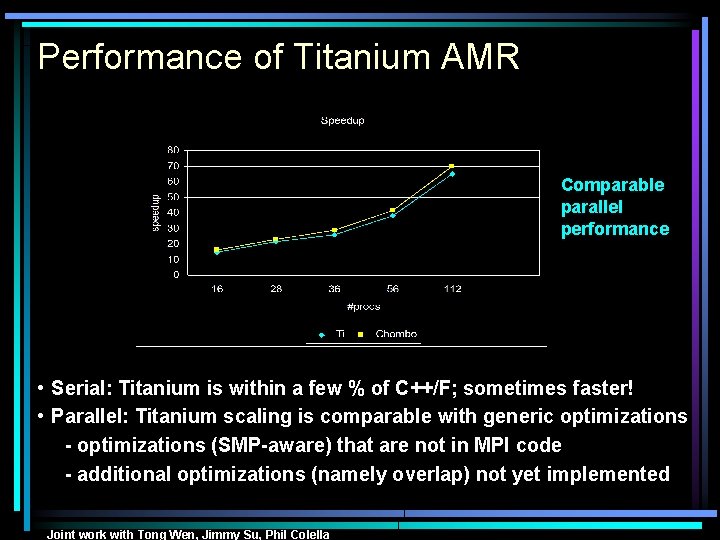

Performance of Titanium AMR

- Serial: Titanium is within a few % of C++/F; sometimes faster!
- Parallel: Titanium scaling is comparable with generic optimizations
  - optimizations (SMP-aware) that are not in MPI code
  - additional optimizations (namely overlap) not yet implemented

[Chart caption: Comparable parallel performance]

Joint work with Tong Wen, Jimmy Su, Phil Colella

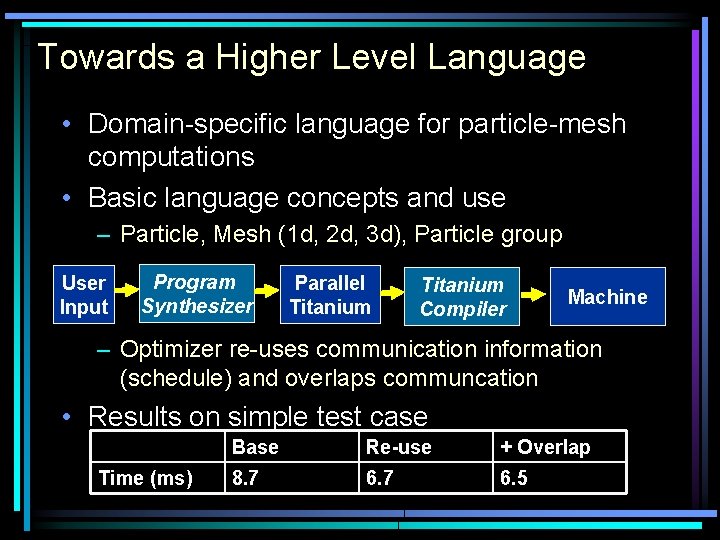

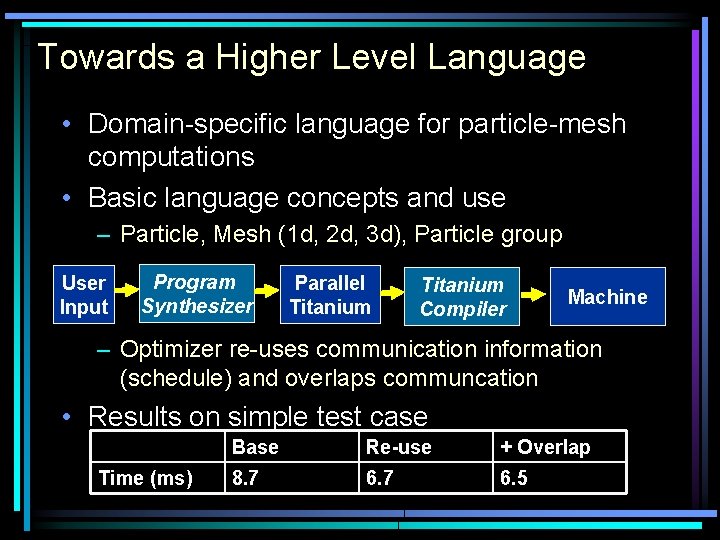

Towards a Higher Level Language

- Domain-specific language for particle-mesh computations
- Basic language concepts and use
  - Particle, Mesh (1d, 2d, 3d), Particle group
  - Optimizer re-uses communication information (schedule) and overlaps communication
- Results on simple test case

| | Base | Re-use + Overlap |
|---|---|---|
| Time (ms) | 8.7 | 6.5 |

[Diagram: User Input → Program Synthesizer → Parallel Titanium → Compiler → Machine]

Conclusions

- All software will soon be parallel
  - End of the single processor scaling era
- Titanium is a high level parallel language
  - Support for scientific computing
  - High performance and scalable
  - Highly portable across serial / parallel machines
  - Download: http://titanium.cs.berkeley.edu
- Immersed boundary method framework
  - Designed for extensibility
  - Demonstrations on heart and cochlea simulations
  - Some optimizations done in compiler / runtime
  - Contact: {jimmysu, yelick}@cs.berkeley.edu