Use Cases at the Intersection of Apache Spark/Hadoop


Use Cases at the Intersection of Apache Spark/Hadoop and IBM z Analytics
Eberhard Hechler, Executive Architect, DB2 Analytics Accelerator Development, IBM Germany R&D Lab
Session Code: F15 | Thursday, 17 November 2016, 8:30-9:30 | Platform: DB2 for z/OS

Disclaimer
© Copyright IBM Corporation 2016. All rights reserved. U.S. Government Users Restricted Rights - Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. IBM’s statements regarding its plans, directions, and intent are subject to change or withdrawal without notice at IBM’s sole discretion. Information regarding potential future products is intended to outline our general product direction and it should not be relied on in making a purchasing decision. The information mentioned regarding potential future products is not a commitment, promise, or legal obligation to deliver any material, code or functionality. Information about potential future products may not be incorporated into any contract. The development, release, and timing of any future features or functionality described for our products remains at our sole discretion. IBM, the IBM logo, ibm.com, DB2, and DB2 for z/OS are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. If these and other IBM trademarked terms are marked on their first occurrence in this information with a trademark symbol (® or ™), these symbols indicate U.S. registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at “Copyright and trademark information” at www.ibm.com/legal/copytrade.shtml. Other company, product, or service names may be trademarks or service marks of others.

Objectives
1. Review key use case scenarios at the intersection of Spark and z Analytics with DB2 for z/OS and the DB2 Analytics Accelerator.
2. Understand the value of the Hadoop and Spark ecosystem for enriching analytical insight on DB2 for z/OS.
3. Gain insight into making z data available for analytics using Hadoop and Spark.
4. Dive into the details of Big SQL for Hadoop, its functionality, and its integration with DB2.
5. Demo of Spark integration with DB2 for z/OS.

Agenda
• What is Apache Spark? RDDs / DataFrames / Datasets
• IBM Open Platform with Apache Spark and Apache Hadoop; IBM BigInsights
• Spark on z/OS
• Technical Integration Points: Use Case Scenarios
• Summary

What is Apache Spark?

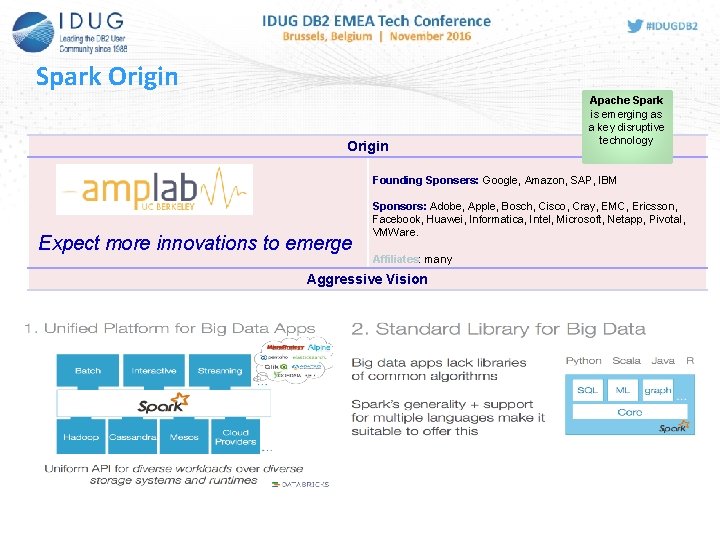

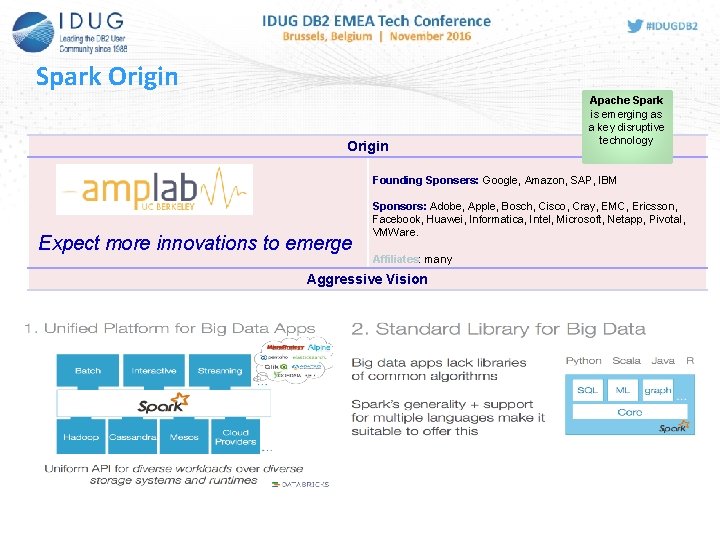

Spark Origin
Apache Spark is emerging as a key disruptive technology, and more innovations are expected to emerge.
• Founding sponsors: Google, Amazon, SAP, IBM
• Sponsors: Adobe, Apple, Bosch, Cisco, Cray, EMC, Ericsson, Facebook, Huawei, Informatica, Intel, Microsoft, NetApp, Pivotal, VMware
• Affiliates: many
• Aggressive vision

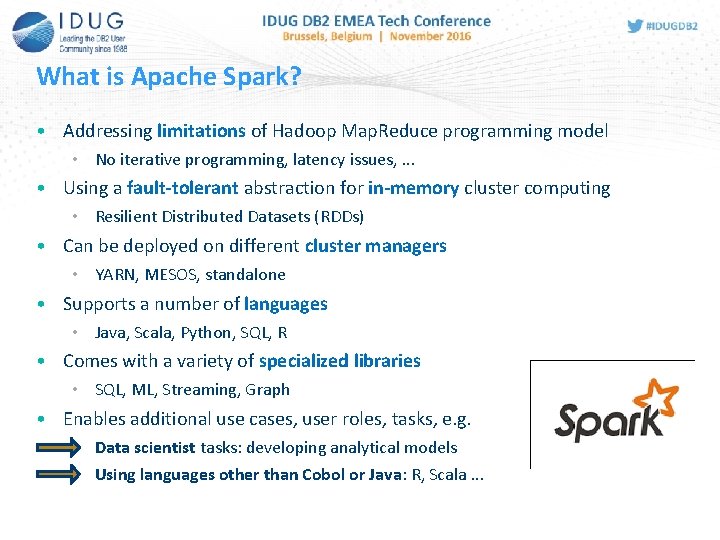

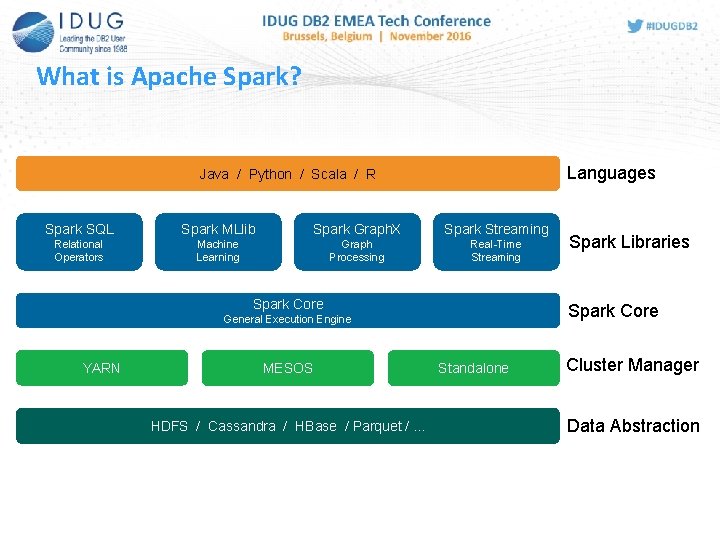

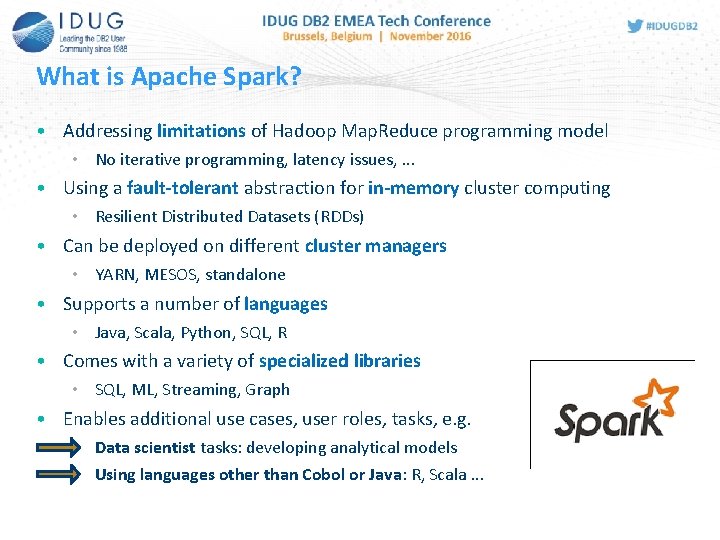

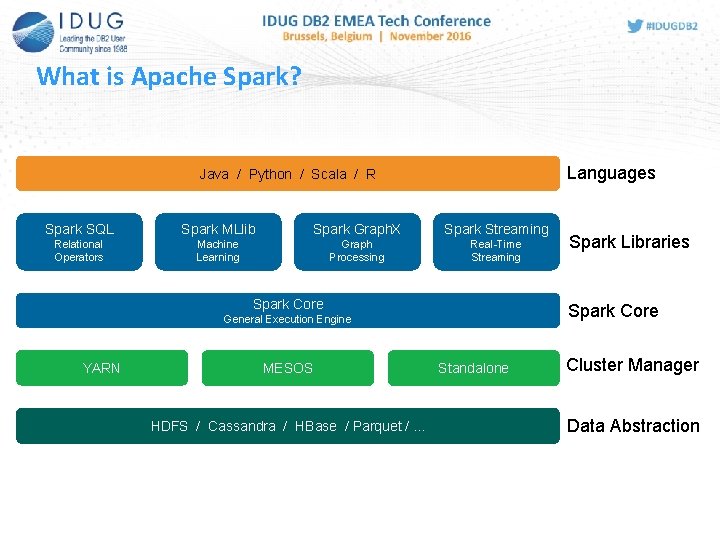

What is Apache Spark?
• Addresses limitations of the Hadoop MapReduce programming model
  – No support for iterative programming, latency issues, ...
• Uses a fault-tolerant abstraction for in-memory cluster computing
  – Resilient Distributed Datasets (RDDs)
• Can be deployed on different cluster managers
  – YARN, Mesos, standalone
• Supports a number of languages
  – Java, Scala, Python, SQL, R
• Comes with a variety of specialized libraries
  – SQL, ML, Streaming, Graph
• Enables additional use cases, user roles, and tasks, e.g.
  – Data scientist tasks: developing analytical models
  – Using languages other than COBOL or Java: R, Scala, ...

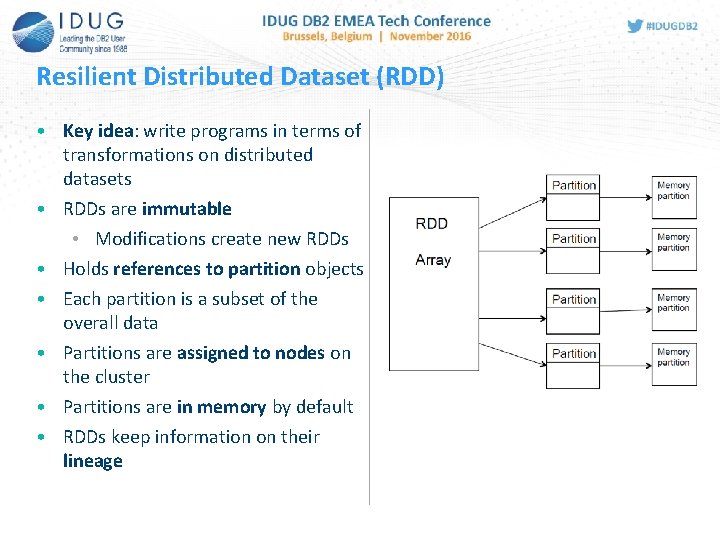

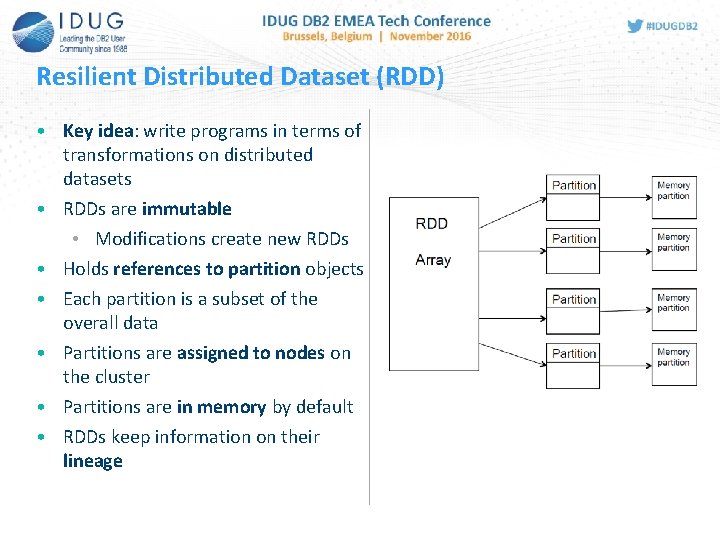

Resilient Distributed Dataset (RDD)
• Key idea: write programs in terms of transformations on distributed datasets
• RDDs are immutable
  – Modifications create new RDDs
• An RDD holds references to partition objects
  – Each partition is a subset of the overall data
  – Partitions are assigned to nodes on the cluster
  – Partitions are processed in memory (and kept there when the RDD is cached)
• RDDs keep information on their lineage, i.e. the transformations that produced them, so lost partitions can be recomputed
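To make immutability and lineage concrete, here is a minimal pure-Python sketch. It is not Spark's API: `ToyRDD`, `lose_partition`, and `lineage` are hypothetical names. Each transformation returns a new object that records its parent and the function that derives each partition, so a partition dropped from the in-memory cache can be rebuilt from lineage instead of from a replica.

```python
from typing import Callable, Dict, List, Optional


class ToyRDD:
    """Hypothetical, simplified model of an RDD (not the Spark API)."""

    def __init__(self, num_partitions: int,
                 compute_fn: Callable[[int], List],
                 parent: Optional["ToyRDD"] = None,
                 op: str = "source"):
        self.num_partitions = num_partitions
        self._compute_fn = compute_fn      # how to (re)build partition i
        self.parent = parent               # lineage: the RDD this one derives from
        self.op = op                       # name of the transformation that created it
        self._cache: Dict[int, List] = {}  # partitions currently held in memory

    @classmethod
    def parallelize(cls, data: List, num_partitions: int) -> "ToyRDD":
        # Round-robin split: partition i holds data[i], data[i + n], ...
        return cls(num_partitions, lambda i: data[i::num_partitions])

    def map(self, f: Callable) -> "ToyRDD":
        # Does not modify self: returns a NEW RDD derived from the parent's partitions
        return ToyRDD(self.num_partitions,
                      lambda i: [f(x) for x in self.partition(i)],
                      parent=self, op="map")

    def partition(self, i: int) -> List:
        if i not in self._cache:
            self._cache[i] = self._compute_fn(i)  # (re)compute via lineage
        return self._cache[i]

    def lose_partition(self, i: int) -> None:
        # Simulates a node failure: the in-memory copy of partition i disappears
        self._cache.pop(i, None)

    def lineage(self) -> List[str]:
        ops, node = [], self
        while node is not None:
            ops.append(node.op)
            node = node.parent
        return ops[::-1]

    def collect(self) -> List:
        return [x for i in range(self.num_partitions) for x in self.partition(i)]


if __name__ == "__main__":
    base = ToyRDD.parallelize([1, 2, 3, 4, 5, 6], num_partitions=2)
    doubled = base.map(lambda x: x * 2)
    print(doubled.collect())   # [2, 6, 10, 4, 8, 12]
    doubled.lose_partition(1)
    print(doubled.collect())   # same result, partition 1 rebuilt from lineage
    print(doubled.lineage())   # ['source', 'map']
```

The parent `base` is never changed by `map`; in real Spark the equivalent information lives in each RDD's dependencies and compute function.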

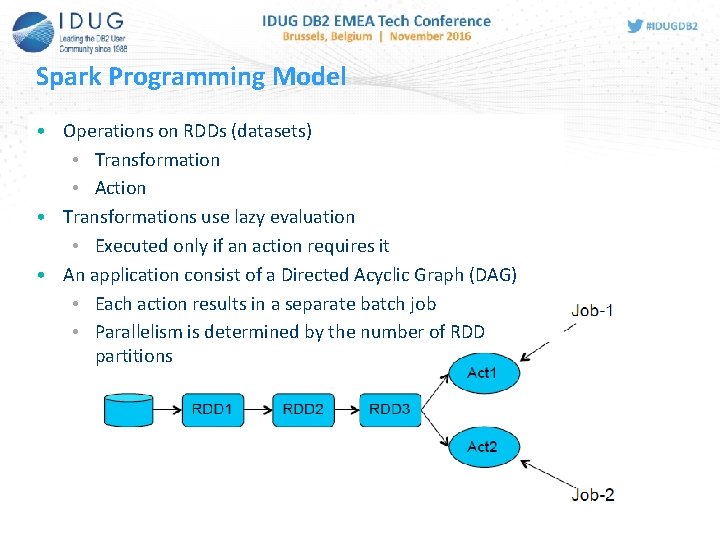

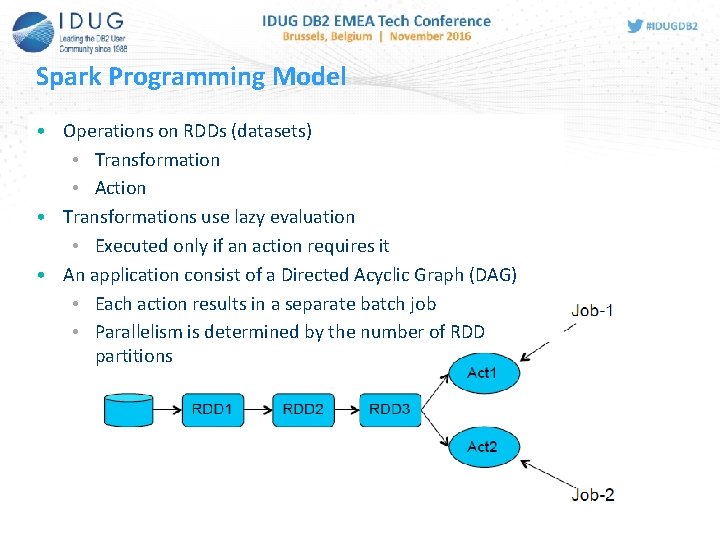

Spark Programming Model
• Operations on RDDs (datasets)
  – Transformations
  – Actions
• Transformations use lazy evaluation
  – They are executed only when an action requires them
• An application consists of a Directed Acyclic Graph (DAG) of operations
  – Each action results in a separate batch job
• Parallelism is determined by the number of RDD partitions
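The transformation/action split and lazy evaluation can be illustrated with a small pure-Python toy. This is an assumption-laden sketch, not Spark: `LazyDataset` and its `jobs_run` counter are hypothetical. Transformations only extend a recorded plan; each action executes the whole plan again as a separate "job".

```python
from typing import Callable, List


class LazyDataset:
    """Hypothetical toy (not Spark): transformations are recorded, actions execute."""

    def __init__(self, source: List, steps=None):
        self._source = source
        self._steps = list(steps or [])  # recorded plan of ("map" | "filter", fn)
        self.jobs_run = 0                # how many times an action executed the plan

    # Transformations: nothing is computed, a new dataset with a longer plan is returned
    def map(self, f: Callable) -> "LazyDataset":
        return LazyDataset(self._source, self._steps + [("map", f)])

    def filter(self, pred: Callable) -> "LazyDataset":
        return LazyDataset(self._source, self._steps + [("filter", pred)])

    def _run(self) -> List:
        self.jobs_run += 1
        out = []
        for x in self._source:
            keep = True
            for kind, fn in self._steps:
                if kind == "map":
                    x = fn(x)
                elif not fn(x):          # filter step rejected the record
                    keep = False
                    break
            if keep:
                out.append(x)
        return out

    # Actions: trigger execution of the recorded plan
    def collect(self) -> List:
        return self._run()

    def count(self) -> int:
        return len(self._run())


if __name__ == "__main__":
    calls = []

    def square(x):
        calls.append(x)                  # records every real invocation
        return x * x

    ds = LazyDataset([1, 2, 3, 4]).map(square).filter(lambda v: v % 2 == 0)
    print(calls)          # [] : no work done yet
    print(ds.collect())   # [4, 16]
    print(ds.count())     # 2, the plan is executed again
```

Because `count()` re-runs `square` over the source, the toy also shows why Spark users cache an RDD that several actions reuse.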

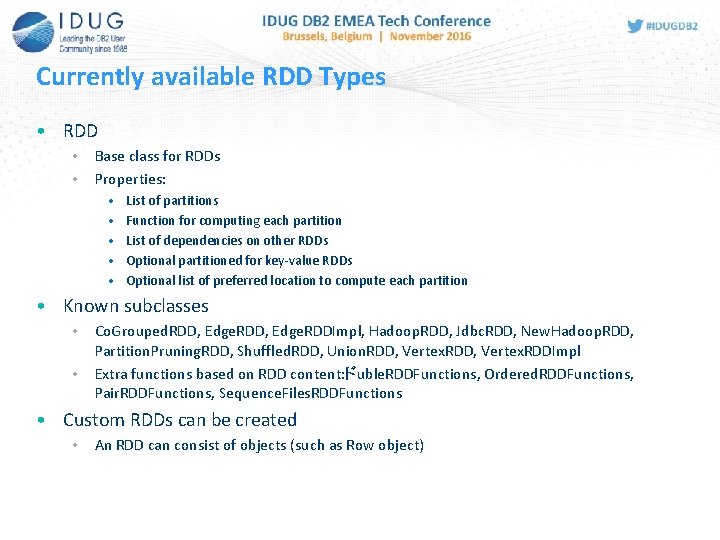

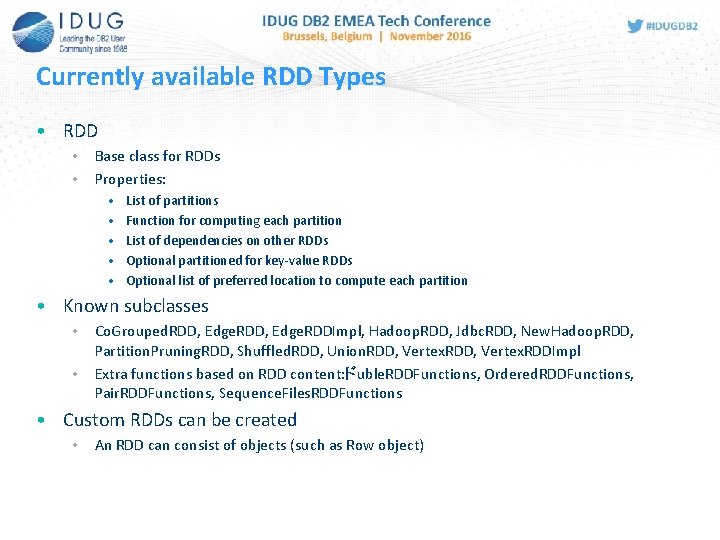

Currently Available RDD Types
• RDD
  – Base class for RDDs
  – Properties:
    – List of partitions
    – Function for computing each partition
    – List of dependencies on other RDDs
    – Optional partitioner for key-value RDDs
    – Optional list of preferred locations to compute each partition
• Known subclasses
  – CoGroupedRDD, EdgeRDDImpl, HadoopRDD, JdbcRDD, NewHadoopRDD, PartitionPruningRDD, ShuffledRDD, UnionRDD, VertexRDDImpl
• Extra functions based on RDD content: DoubleRDDFunctions, OrderedRDDFunctions, PairRDDFunctions, SequenceFileRDDFunctions
• Custom RDDs can be created
• An RDD can consist of objects (such as Row objects)
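The "optional partitioner for key-value RDDs" property decides which partition each key lands in, so that all records with the same key meet in one place for operations such as `groupByKey`. Spark's `HashPartitioner` uses the key's `hashCode` modulo the number of partitions (kept non-negative). The sketch below is a hypothetical stand-in that uses `zlib.crc32` instead, only because Python's built-in `hash()` of strings is randomized per process; the account keys are made-up sample data.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple


def partition_for(key: str, num_partitions: int) -> int:
    """Hypothetical hash partitioner: the same key always maps to the same partition."""
    # crc32 returns a non-negative int in Python 3, so the modulo is in [0, n)
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_pairs(pairs: List[Tuple[str, int]],
                    num_partitions: int) -> Dict[int, List[Tuple[str, int]]]:
    """Assign each (key, value) record to a partition based on its key only."""
    parts: Dict[int, List[Tuple[str, int]]] = defaultdict(list)
    for key, value in pairs:
        parts[partition_for(key, num_partitions)].append((key, value))
    return dict(parts)


if __name__ == "__main__":
    records = [("acct-1", 10), ("acct-2", 5), ("acct-1", 7), ("acct-3", 1)]
    for pid, recs in sorted(partition_pairs(records, 3).items()):
        print(pid, recs)   # both "acct-1" records always share a partition
```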

What is Apache Spark? (stack diagram)
• Languages: Java / Python / Scala / R
• Spark libraries: Spark SQL (relational operators), Spark MLlib (machine learning), Spark GraphX (graph processing), Spark Streaming (real-time streaming)
• Spark Core: general execution engine
• Cluster managers: YARN, Mesos, standalone cluster manager
• Data abstraction over HDFS / Cassandra / HBase / Parquet / ...

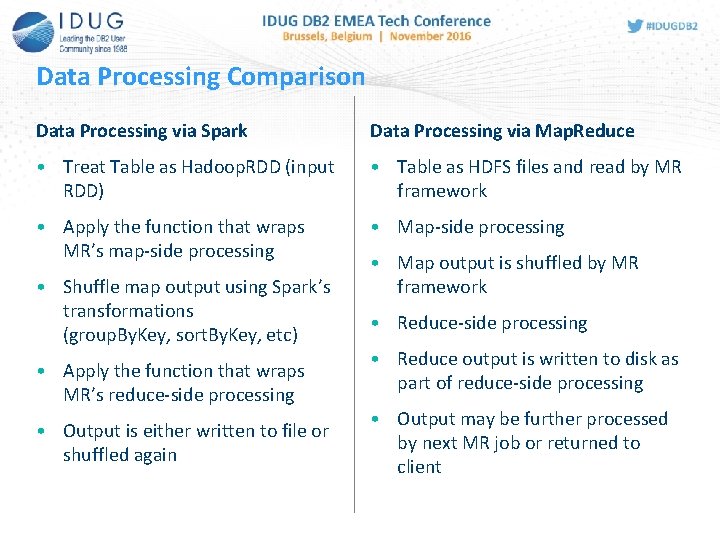

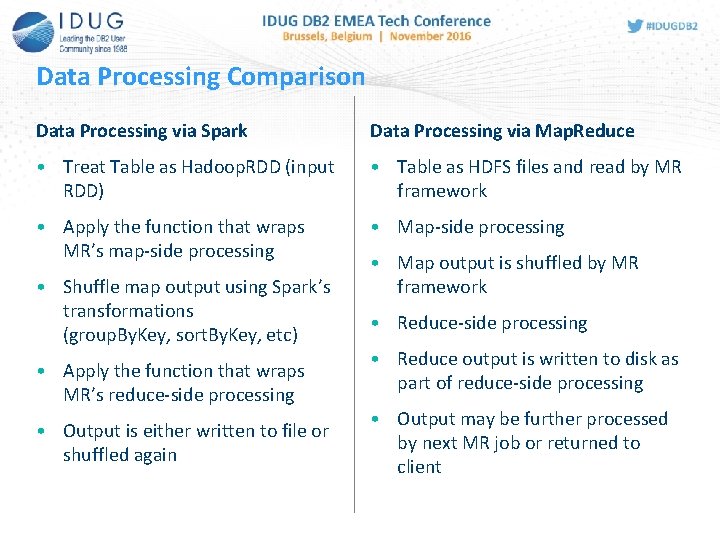

Data Processing Comparison
Data processing via Spark:
• Treat the table as a HadoopRDD (input RDD)
• Apply the function that wraps MR’s map-side processing
• Shuffle the map output using Spark’s transformations (groupByKey, sortByKey, etc.)
• Apply the function that wraps MR’s reduce-side processing
• Output is either written to file or shuffled again
Data processing via MapReduce:
• The table is stored as HDFS files and read by the MR framework
• Map-side processing
• Map output is shuffled by the MR framework
• Reduce-side processing
• Reduce output is written to disk as part of reduce-side processing
• Output may be further processed by the next MR job or returned to the client
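The Spark column above (wrap the map-side function, shuffle with groupByKey/sortByKey, wrap the reduce-side function) can be sketched in plain Python with a word count. All function names here are hypothetical; the point is only the three-stage shape, run in one process instead of across a cluster.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

Pair = Tuple[str, int]


def map_side(line: str) -> List[Pair]:
    # MR-style map function: emit (word, 1) for every token
    return [(word.lower(), 1) for word in line.split()]


def reduce_side(key: str, values: Iterable[int]) -> Pair:
    # MR-style reduce function: sum all counts for one key
    return key, sum(values)


def run_job(lines: List[str],
            mapper: Callable[[str], List[Pair]],
            reducer: Callable[[str, Iterable[int]], Pair]) -> List[Pair]:
    # 1) map side: apply the wrapped map function to every input record
    mapped = [kv for line in lines for kv in mapper(line)]
    # 2) shuffle: group values by key (like groupByKey) and order keys (like sortByKey)
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in mapped:
        groups[key].append(value)
    # 3) reduce side: apply the wrapped reduce function to each key group
    return [reducer(key, groups[key]) for key in sorted(groups)]


if __name__ == "__main__":
    print(run_job(["a b a", "B c"], map_side, reduce_side))
    # [('a', 2), ('b', 2), ('c', 1)]
```

In PySpark the same pipeline would typically be written as `rdd.flatMap(lambda line: line.split()).map(lambda w: (w, 1)).reduceByKey(operator.add)`, where the output stays in memory for further transformations instead of being written to disk between jobs.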

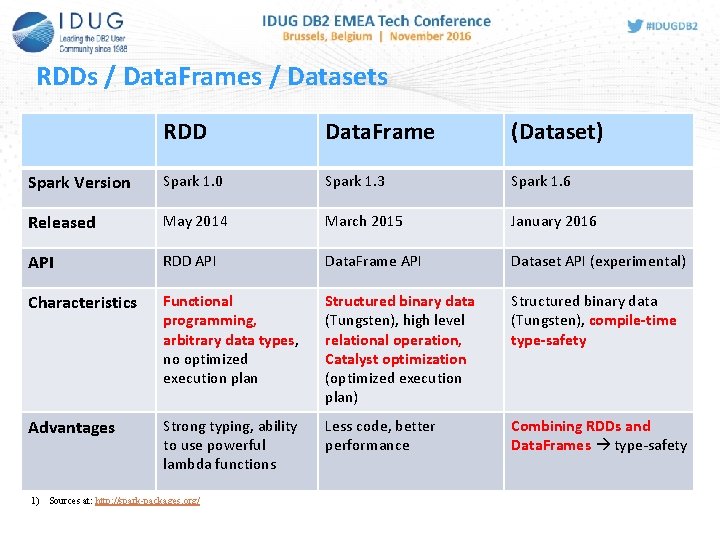

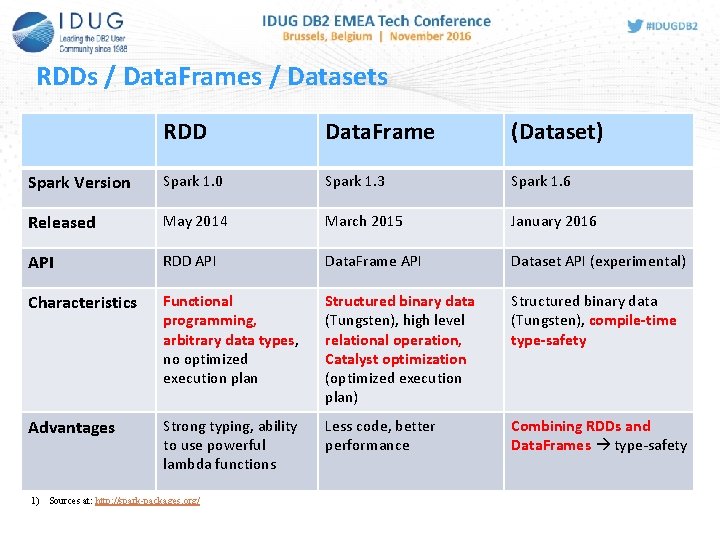

RDDs / DataFrames / Datasets

| | RDD | DataFrame | Dataset |
|---|---|---|---|
| Spark version | Spark 1.0 | Spark 1.3 | Spark 1.6 |
| Released | May 2014 | March 2015 | January 2016 |
| API | RDD API | DataFrame API | Dataset API (experimental) |
| Characteristics | Functional programming, arbitrary data types, no optimized execution plan | Structured binary data (Tungsten), high-level relational operations, Catalyst optimization (optimized execution plan) | Structured binary data (Tungsten), compile-time type safety |
| Advantages | Strong typing, ability to use powerful lambda functions | Less code, better performance | Combines RDD and DataFrame benefits; type safety |

1) Sources at: http://spark-packages.org/

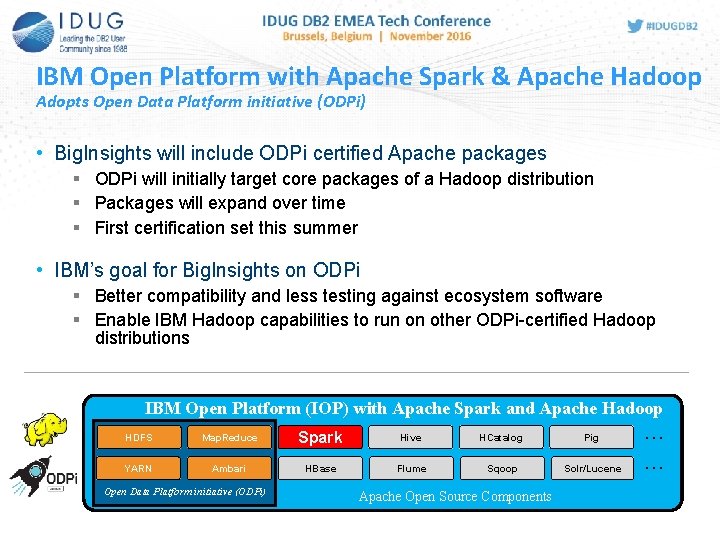

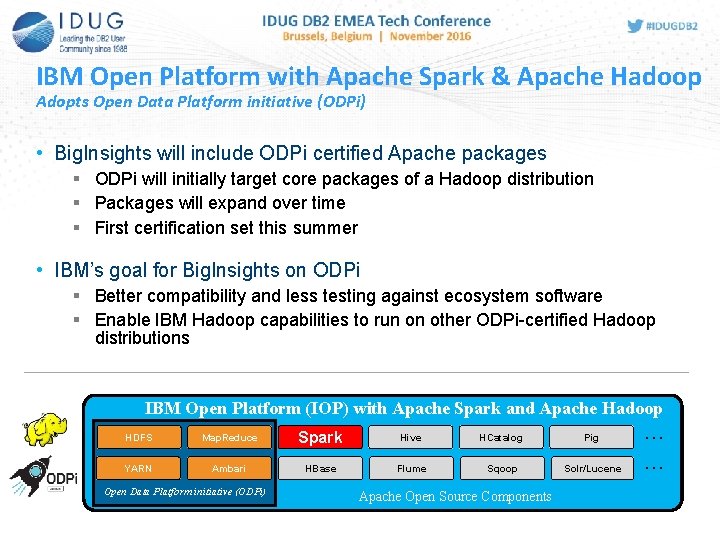

IBM Open Platform with Apache Spark & Apache Hadoop
Adopts the Open Data Platform initiative (ODPi)
• BigInsights will include ODPi-certified Apache packages
  § ODPi will initially target the core packages of a Hadoop distribution
  § Packages will expand over time
  § First certification set this summer
• IBM’s goal for BigInsights on ODPi
  § Better compatibility and less testing against ecosystem software
  § Enable IBM Hadoop capabilities to run on other ODPi-certified Hadoop distributions
Diagram: IBM Open Platform (IOP) with Apache Spark and Apache Hadoop, based on ODPi, comprising Apache open source components such as HDFS, YARN, MapReduce, Hive, HCatalog, HBase, Spark, Pig, Flume, Sqoop, Solr/Lucene, and Ambari

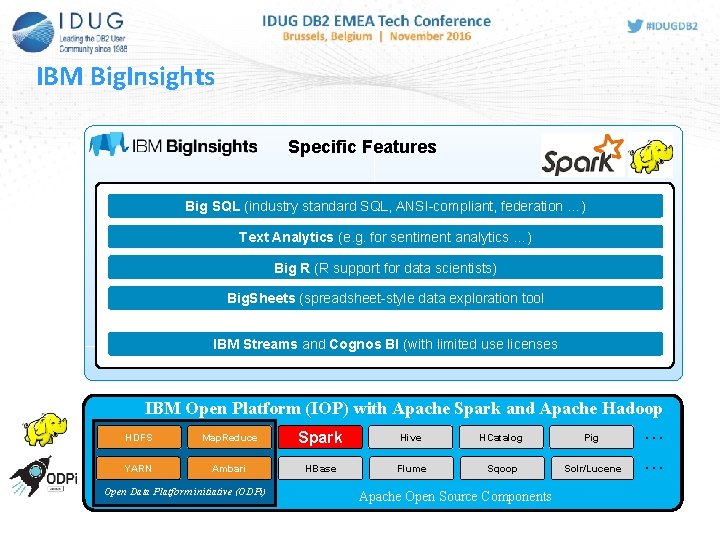

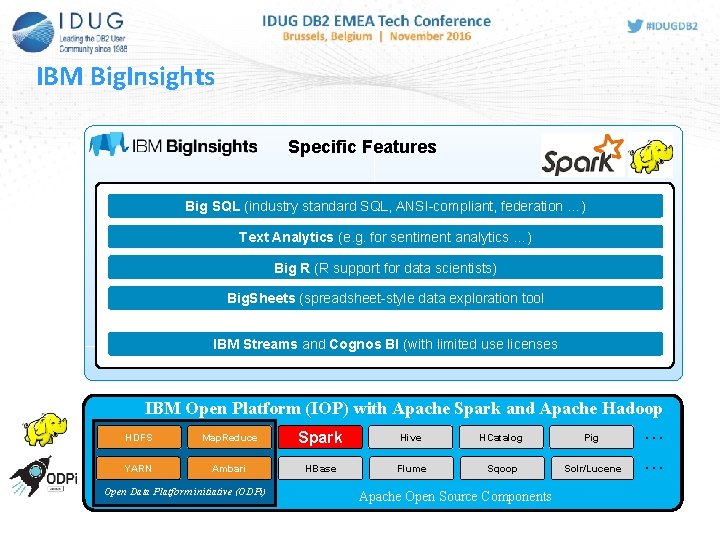

IBM BigInsights Specific Features
• Big SQL (industry-standard SQL, ANSI-compliant, federation, ...)
• Text Analytics (e.g. for sentiment analytics, ...)
• Big R (R support for data scientists)
• BigSheets (spreadsheet-style data exploration tool)
• IBM Streams and Cognos BI (with limited-use licenses)
Diagram: these features are layered on top of IBM Open Platform (IOP) with Apache Spark and Apache Hadoop (ODPi-based Apache open source components).

Spark on z/OS

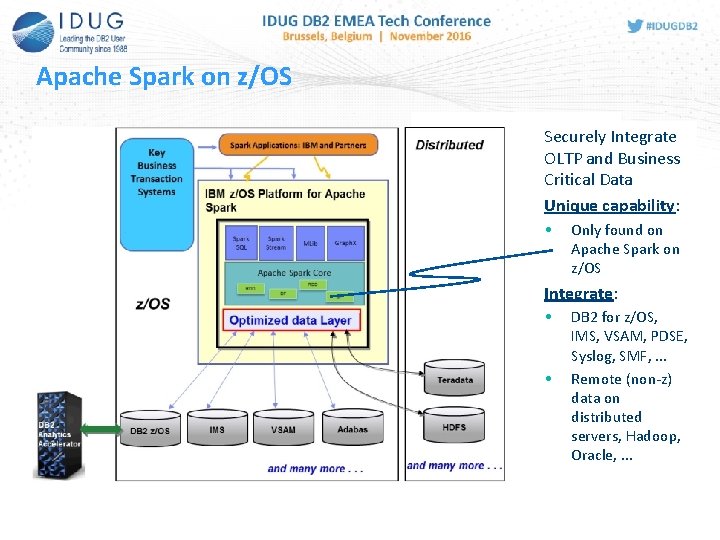

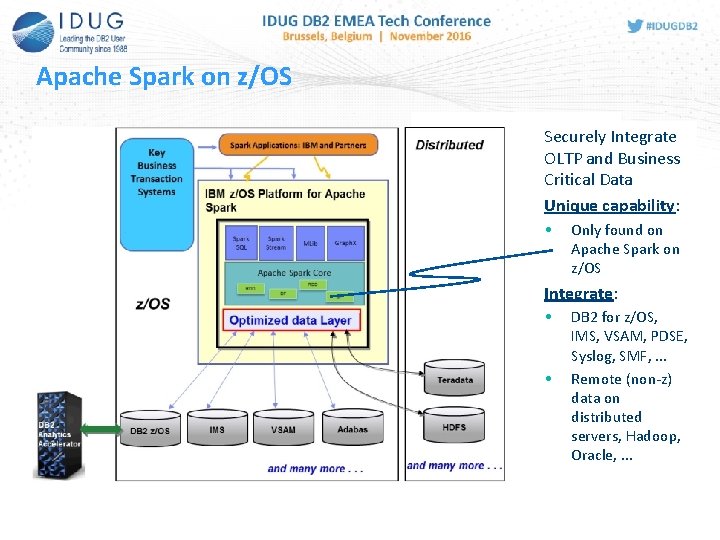

Apache Spark on z/OS: Securely Integrate OLTP and Business-Critical Data
Unique capability:
• Only found in Apache Spark on z/OS
Integrate:
• DB2 for z/OS, IMS, VSAM, PDSE, Syslog, SMF, ...
• Remote (non-z) data on distributed servers, Hadoop, Oracle, ...

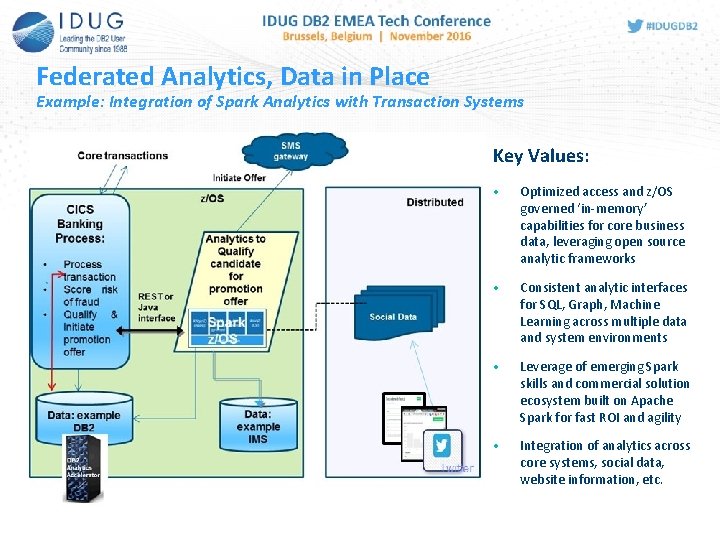

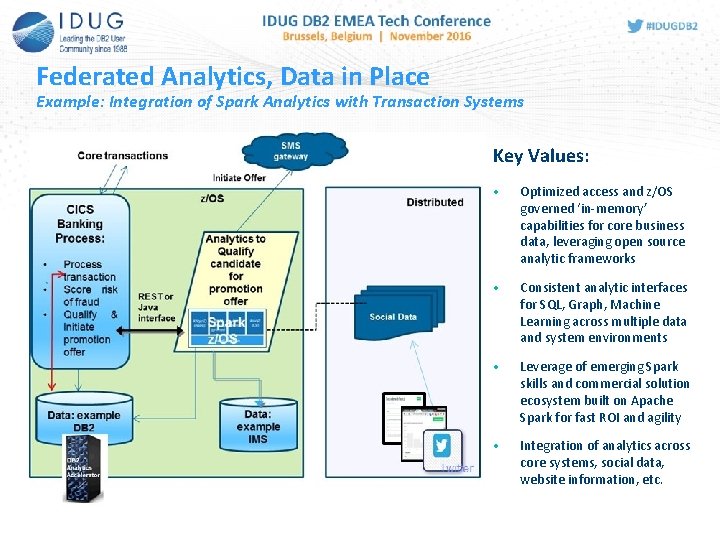

Federated Analytics, Data in Place
Example: Integration of Spark Analytics with Transaction Systems
Key values:
• Optimized access and z/OS-governed ‘in-memory’ capabilities for core business data, leveraging open source analytic frameworks
• Consistent analytic interfaces for SQL, graph, and machine learning across multiple data and system environments
• Leveraging emerging Spark skills and the commercial solution ecosystem built on Apache Spark for fast ROI and agility
• Integration of analytics across core systems, social data, website information, etc.

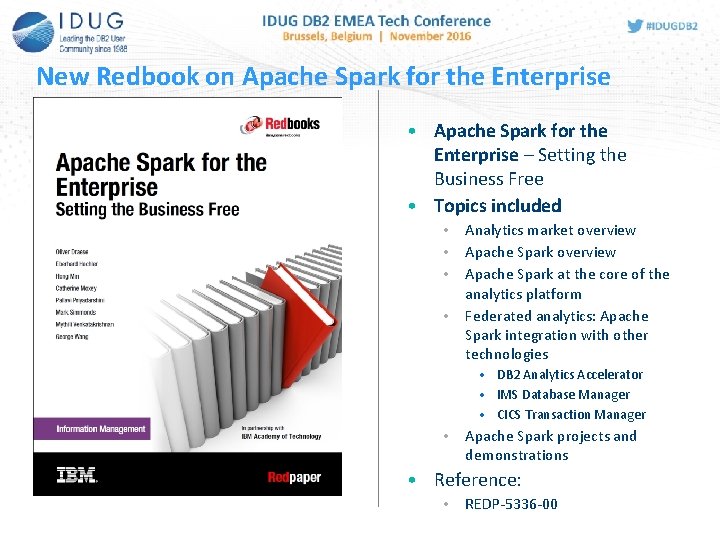

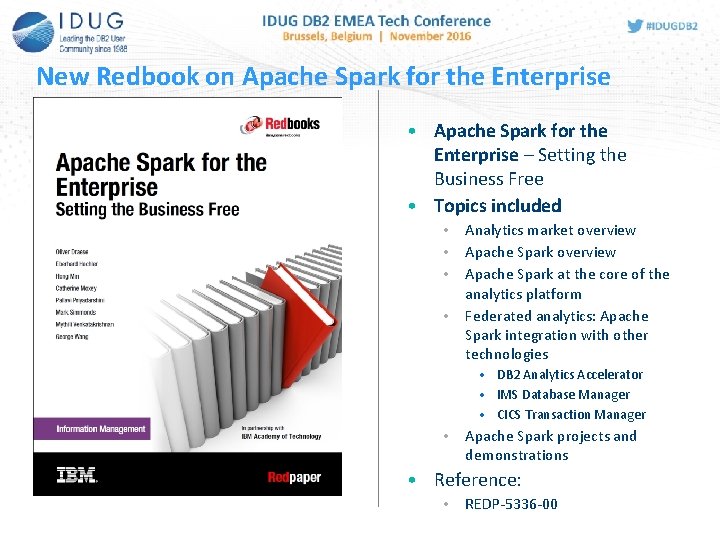

New Redbook on Apache Spark for the Enterprise
• Apache Spark for the Enterprise: Setting the Business Free
• Topics included:
  – Analytics market overview
  – Apache Spark at the core of the analytics platform
  – Federated analytics: Apache Spark integration with other technologies (DB2 Analytics Accelerator, IMS Database Manager, CICS Transaction Manager)
  – Apache Spark projects and demonstrations
• Reference: REDP-5336-00

Technical Integration Points: Use Case Scenarios

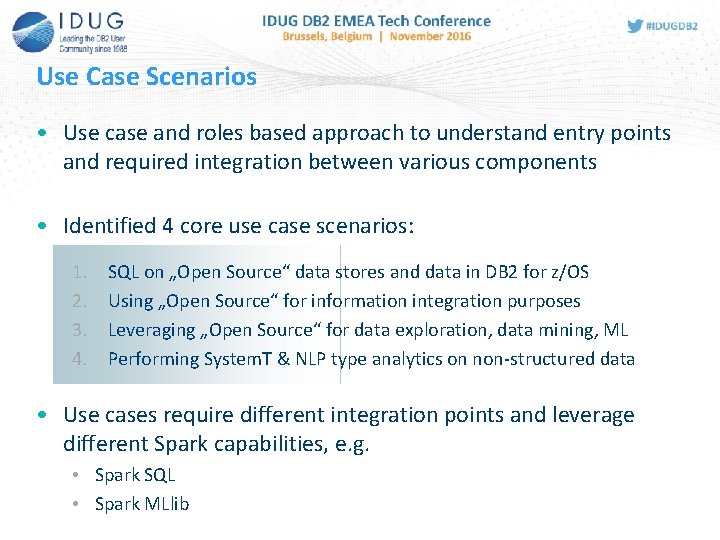

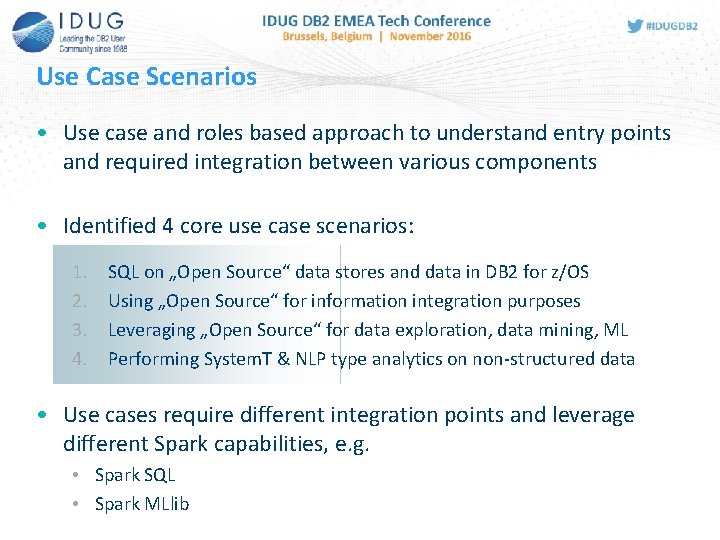

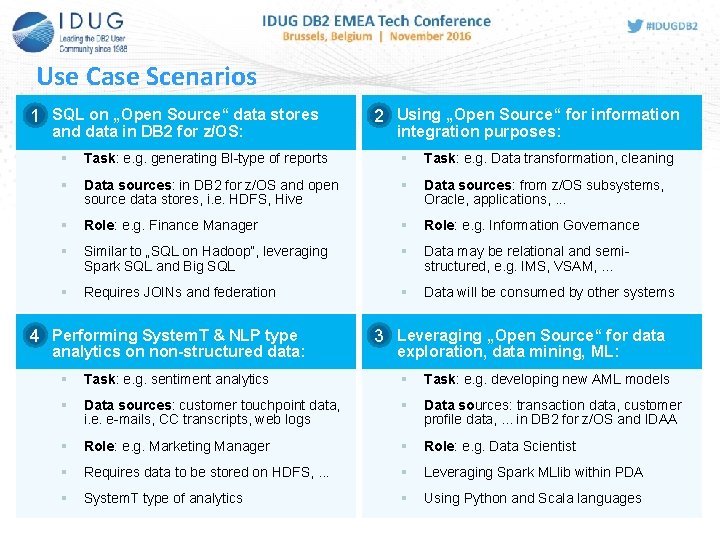

Use Case Scenarios
• A use-case- and role-based approach to understanding entry points and the required integration between various components
• Identified 4 core use case scenarios:
  1. SQL on "Open Source" data stores and data in DB2 for z/OS
  2. Using "Open Source" for information integration purposes
  3. Leveraging "Open Source" for data exploration, data mining, ML
  4. Performing SystemT & NLP-type analytics on non-structured data
• Use cases require different integration points and leverage different Spark capabilities, e.g.
  – Spark SQL
  – Spark MLlib

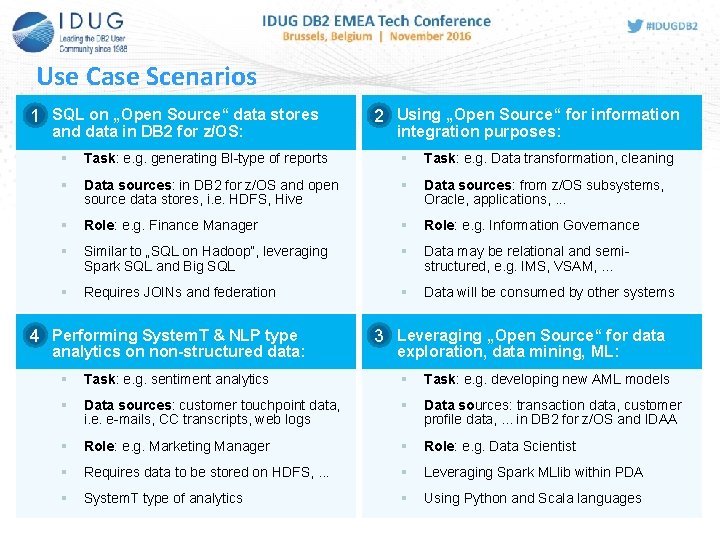

Use Case Scenarios
1. SQL on "Open Source" data stores and data in DB2 for z/OS:
  § Task: e.g. generating BI-type reports
  § Data sources: in DB2 for z/OS and open source data stores, e.g. HDFS, Hive
  § Role: e.g. Finance Manager
  § Similar to "SQL on Hadoop", leveraging Spark SQL and Big SQL
  § Requires JOINs and federation
2. Using "Open Source" for information integration purposes:
  § Task: e.g. data transformation, cleaning
  § Data sources: from z/OS subsystems, Oracle, applications, ...
  § Role: e.g. Information Governance
  § Data may be relational and semi-structured, e.g. IMS, VSAM, ...
  § Data will be consumed by other systems
3. Leveraging "Open Source" for data exploration, data mining, ML:
  § Task: e.g. developing new AML models
  § Data sources: transaction data, customer profile data, ... in DB2 for z/OS and IDAA
  § Role: e.g. Data Scientist
  § Leveraging Spark MLlib within PDA
  § Using Python and Scala languages
4. Performing SystemT & NLP-type analytics on non-structured data:
  § Task: e.g. sentiment analytics
  § Data sources: customer touchpoint data, e.g. e-mails, CC transcripts, web logs
  § Role: e.g. Marketing Manager
  § Requires data to be stored on HDFS, ...
  § SystemT-type analytics

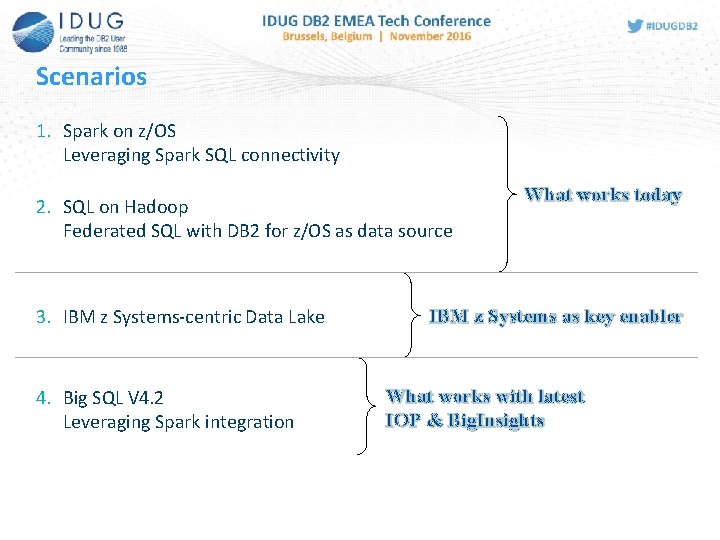

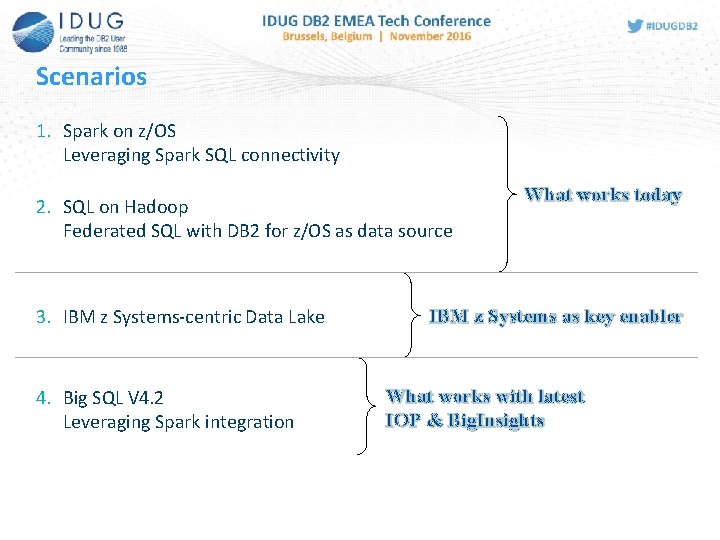

Scenarios
1. Spark on z/OS: leveraging Spark SQL connectivity
2. SQL on Hadoop: federated SQL with DB2 for z/OS as data source
3. IBM z Systems-centric data lake
4. Big SQL V4.2: leveraging Spark integration
Diagram labels: What works today; IBM z Systems as key enabler; What works with latest IOP & BigInsights

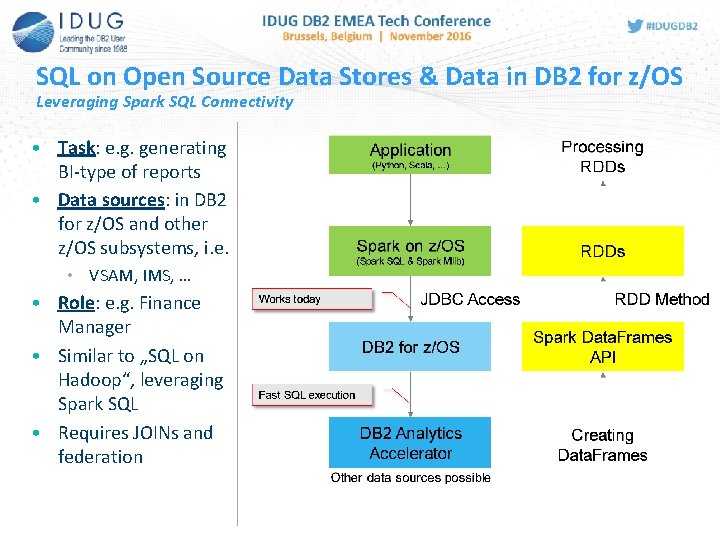

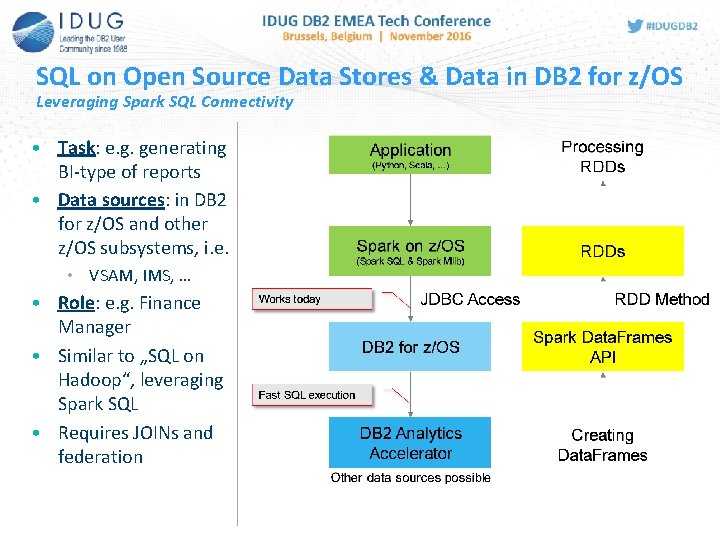

SQL on Open Source Data Stores & Data in DB2 for z/OS: Leveraging Spark SQL Connectivity
• Task: e.g. generating BI-type reports
• Data sources: in DB2 for z/OS and other z/OS subsystems, e.g.
  – VSAM, IMS, ...
• Role: e.g. Finance Manager
• Similar to "SQL on Hadoop", leveraging Spark SQL
• Requires JOINs and federation

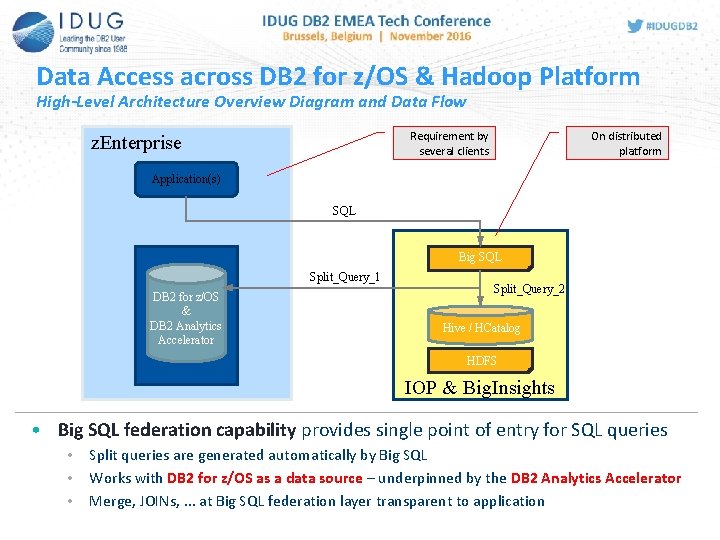

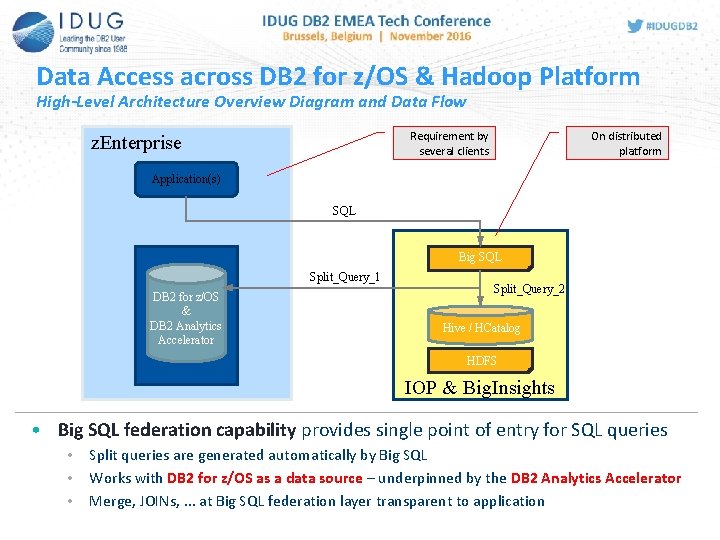

Data Access across DB2 for z/OS & Hadoop Platform: High-Level Architecture Overview
Diagram and data flow (requested by several clients):
• Application(s) send SQL to Big SQL, running in IOP & BigInsights on the distributed platform
• Big SQL sends Split_Query_1 to DB2 for z/OS & DB2 Analytics Accelerator on zEnterprise
• Big SQL sends Split_Query_2 to Hive / HCatalog over HDFS
Key points:
• The Big SQL federation capability provides a single point of entry for SQL queries
• Split queries are generated automatically by Big SQL
• Works with DB2 for z/OS as a data source, underpinned by the DB2 Analytics Accelerator
• Merges, JOINs, ... are performed at the Big SQL federation layer, transparent to the application
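The split-query flow can be mimicked on a small scale to show what the federation layer does. In this hedged sketch, two independent in-memory SQLite databases stand in for DB2 for z/OS and Hive/HDFS; the table names, columns, and sample rows are hypothetical. Big SQL generates and pushes down the split queries automatically, whereas here they are written by hand, and the final JOIN happens in the "federation layer" function, invisible to its caller.

```python
import sqlite3
from typing import Dict, List, Tuple


def make_sources() -> Tuple[sqlite3.Connection, sqlite3.Connection]:
    # Hypothetical stand-ins: "zdb" plays DB2 for z/OS, "hive" plays Hive over HDFS
    zdb = sqlite3.connect(":memory:")
    zdb.execute("CREATE TABLE customer (cust_id INTEGER, name TEXT, segment TEXT)")
    zdb.executemany("INSERT INTO customer VALUES (?, ?, ?)",
                    [(1, "Ada", "gold"), (2, "Bo", "silver"), (3, "Cy", "gold")])
    hive = sqlite3.connect(":memory:")
    hive.execute("CREATE TABLE weblog (cust_id INTEGER, page TEXT)")
    hive.executemany("INSERT INTO weblog VALUES (?, ?)",
                     [(1, "/loans"), (1, "/cards"), (3, "/loans"), (4, "/home")])
    return zdb, hive


def federated_query(zdb: sqlite3.Connection, hive: sqlite3.Connection,
                    segment: str) -> List[Tuple[str, int]]:
    """Web-page visits per customer of one segment, joined across both sources."""
    # Split query 1: sent to the relational source, with the filter pushed down
    customers: Dict[int, str] = dict(zdb.execute(
        "SELECT cust_id, name FROM customer WHERE segment = ?", (segment,)))
    # Split query 2: sent to the Hadoop side, aggregating before data moves
    visits = hive.execute(
        "SELECT cust_id, COUNT(*) FROM weblog GROUP BY cust_id").fetchall()
    # Merge / JOIN at the federation layer, transparent to the calling application
    return sorted((customers[cid], n) for cid, n in visits if cid in customers)


if __name__ == "__main__":
    z, h = make_sources()
    print(federated_query(z, h, "gold"))   # [('Ada', 2), ('Cy', 1)]
```

Pushing the filter and the aggregation into each split query keeps the amount of data moved between platforms small, which is the main reason to let the federation layer generate split queries rather than copying whole tables.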

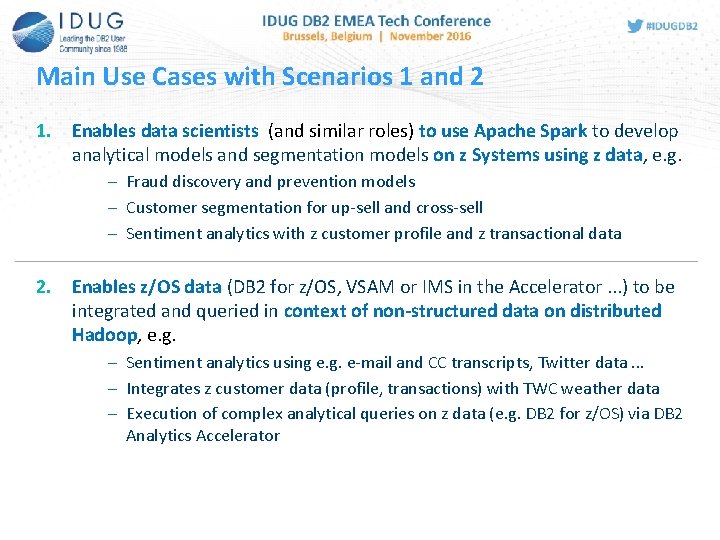

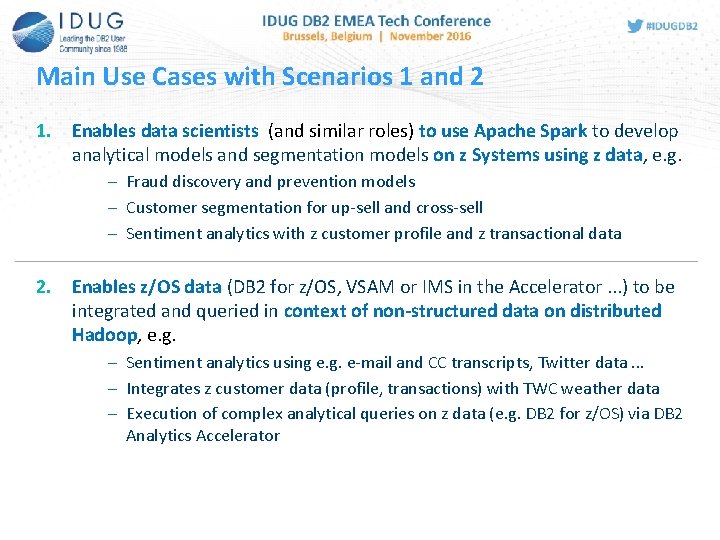

Main Use Cases with Scenarios 1 and 2
1. Enables data scientists (and similar roles) to use Apache Spark to develop analytical models and segmentation models on z Systems using z data, e.g.
  – Fraud discovery and prevention models
  – Customer segmentation for up-sell and cross-sell
  – Sentiment analytics with z customer profile and z transactional data
2. Enables z/OS data (DB2 for z/OS, VSAM, or IMS in the Accelerator, ...) to be integrated and queried in the context of non-structured data on distributed Hadoop, e.g.
  – Sentiment analytics using e.g. e-mail and CC transcripts, Twitter data, ...
  – Integration of z customer data (profile, transactions) with TWC weather data
  – Execution of complex analytical queries on z data (e.g. DB2 for z/OS) via the DB2 Analytics Accelerator

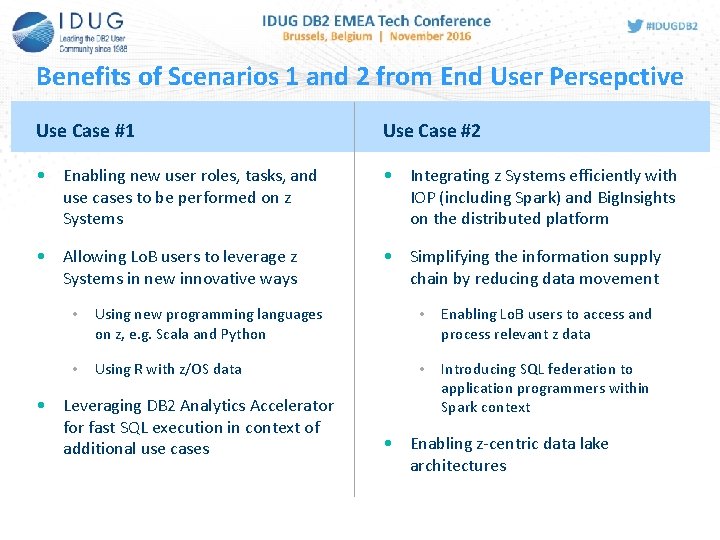

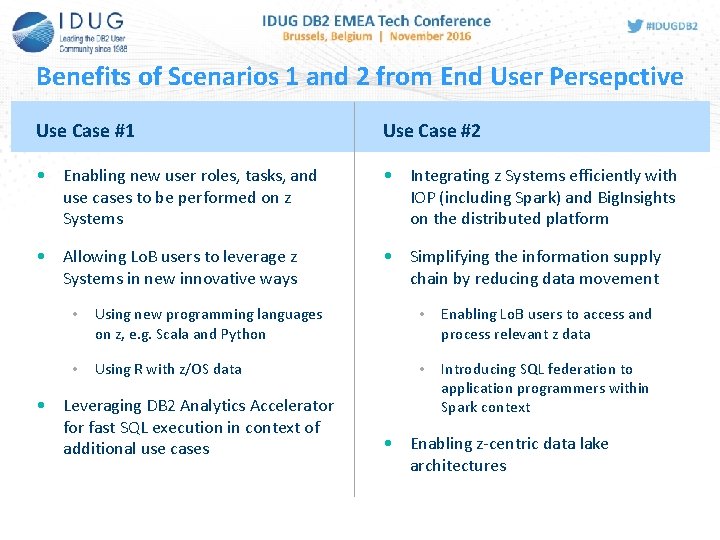

Benefits of Scenarios 1 and 2 from an End User Perspective
Use Case #1:
• Enabling new user roles, tasks, and use cases to be performed on z Systems
• Allowing LoB users to leverage z Systems in new, innovative ways
• Using new programming languages on z, e.g. Scala and Python
• Using R with z/OS data
• Leveraging DB2 Analytics Accelerator fast SQL execution in the context of additional use cases
Use Case #2:
• Integrating z Systems efficiently with IOP (including Spark) and BigInsights on the distributed platform
• Simplifying the information supply chain by reducing data movement
• Enabling LoB users to access and process relevant z data
• Introducing SQL federation to application programmers within a Spark context
• Enabling z-centric data lake architectures

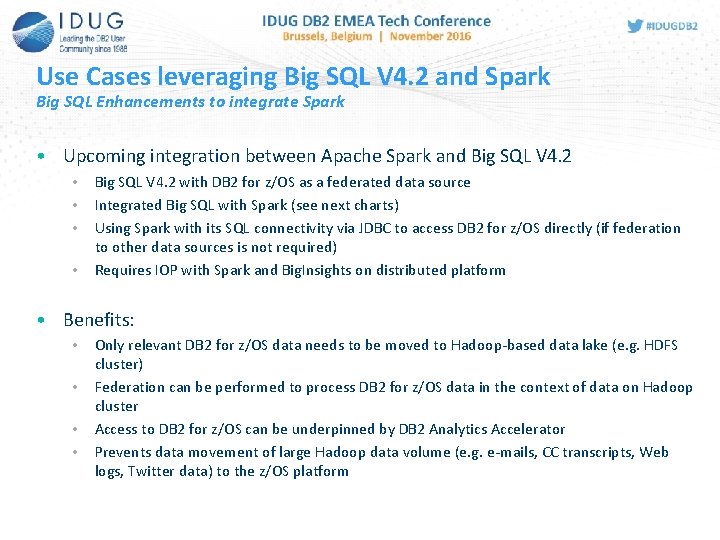

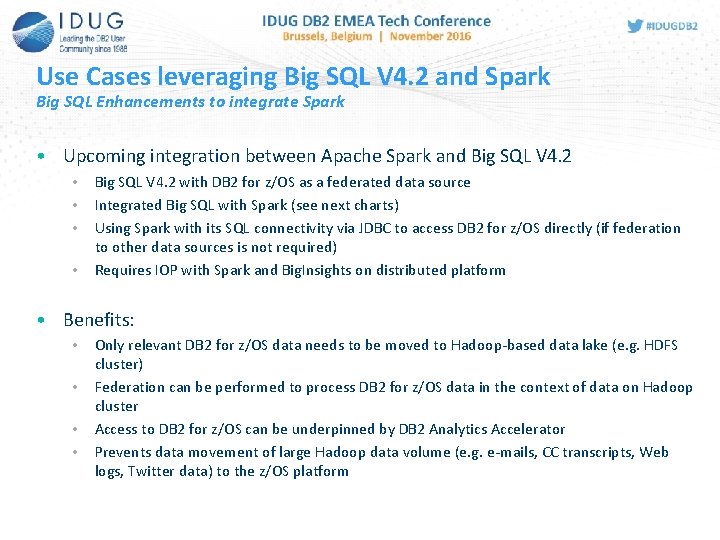

Use Cases Leveraging Big SQL V4.2 and Spark: Big SQL Enhancements to Integrate Spark
• Upcoming integration between Apache Spark and Big SQL V4.2
  – Big SQL V4.2 with DB2 for z/OS as a federated data source
  – Integrated Big SQL with Spark (see next charts)
  – Using Spark with its SQL connectivity via JDBC to access DB2 for z/OS directly (if federation to other data sources is not required)
  – Requires IOP with Spark and BigInsights on the distributed platform
• Benefits:
  – Only relevant DB2 for z/OS data needs to be moved to the Hadoop-based data lake (e.g. HDFS cluster)
  – Federation can be performed to process DB2 for z/OS data in the context of data on the Hadoop cluster
  – Access to DB2 for z/OS can be underpinned by the DB2 Analytics Accelerator
  – Prevents moving large Hadoop data volumes (e.g. e-mails, CC transcripts, Web logs, Twitter data) to the z/OS platform
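For the "Spark via JDBC to DB2 for z/OS directly" path, the main work is configuring Spark's built-in JDBC data source. The helper below is a hypothetical sketch that only assembles the option map; the host, port, location name, and table are placeholders you would replace, and it assumes the IBM Data Server Driver for JDBC and SQLJ (class `com.ibm.db2.jcc.DB2Driver`) is on the Spark classpath. Passing a parenthesized subquery as `dbtable` is one way to move only the relevant DB2 for z/OS rows and columns, in line with the first benefit above.

```python
from typing import Dict, Optional


def db2_zos_jdbc_options(host: str, port: int, location: str,
                         table_or_query: str, user: str, password: str,
                         partition_column: Optional[str] = None,
                         lower_bound: int = 0, upper_bound: int = 0,
                         num_partitions: int = 1) -> Dict[str, str]:
    """Hypothetical helper: options for Spark's JDBC source reading DB2 for z/OS."""
    opts = {
        # Type 4 JDBC URL: jdbc:db2://<host>:<port>/<DB2 location name>
        "url": f"jdbc:db2://{host}:{port}/{location}",
        "driver": "com.ibm.db2.jcc.DB2Driver",
        # A table name, or "(SELECT ...) AS T" so only the needed data is read
        "dbtable": table_or_query,
        "user": user,
        "password": password,
    }
    if partition_column is not None:
        # Spark splits the read into num_partitions range queries on this column
        opts.update({
            "partitionColumn": partition_column,
            "lowerBound": str(lower_bound),
            "upperBound": str(upper_bound),
            "numPartitions": str(num_partitions),
        })
    return opts


if __name__ == "__main__":
    opts = db2_zos_jdbc_options(
        "zhost.example.com", 446, "DB2LOC",          # placeholder connection values
        "(SELECT CUST_ID, SEGMENT FROM CUST) AS T",  # hypothetical table
        "user", "secret", partition_column="CUST_ID",
        lower_bound=1, upper_bound=1000000, num_partitions=4)
    print(opts["url"])
```

With PySpark available, the options would be used as `spark.read.format("jdbc").options(**opts).load()`. Whether a query is routed to the DB2 Analytics Accelerator is decided on the DB2 side (for example through the CURRENT QUERY ACCELERATION special register), not by Spark.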

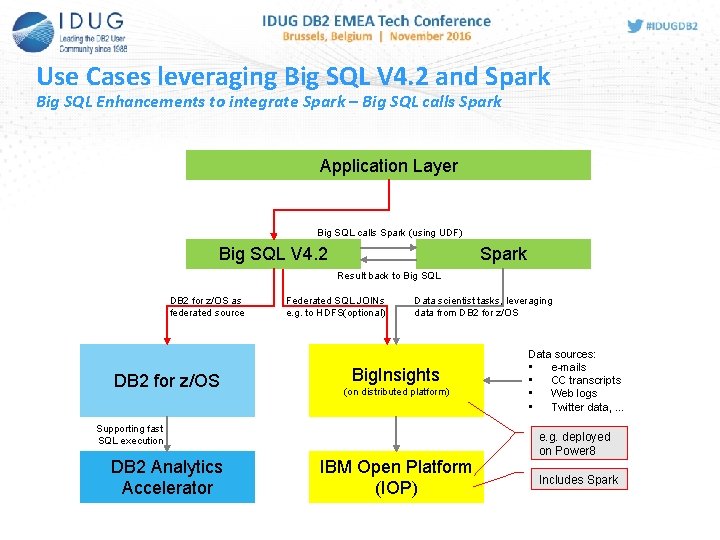

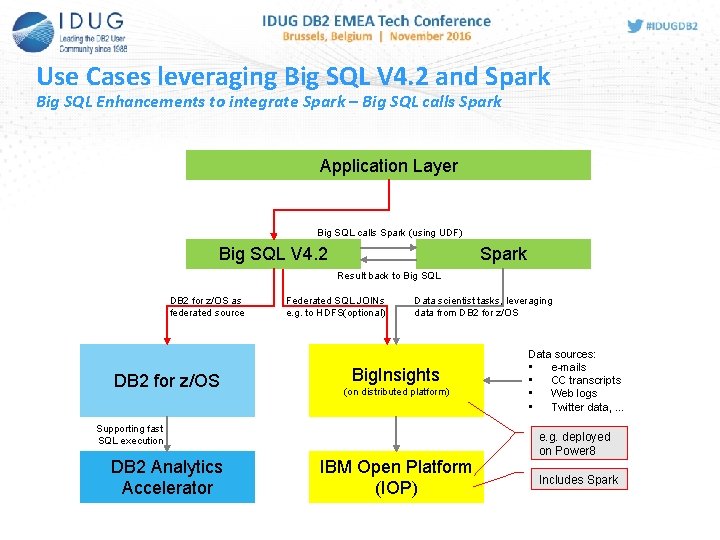

Use Cases Leveraging Big SQL V4.2 and Spark: Big SQL Enhancements to Integrate Spark (Big SQL Calls Spark)
Diagram:
• The application layer calls Big SQL V4.2 on BigInsights (distributed platform, e.g. deployed on POWER8), with IBM Open Platform (IOP), which includes Spark
• Big SQL calls Spark (using a UDF); Spark returns the result to Big SQL
• Data scientist tasks, leveraging data from DB2 for z/OS
• DB2 for z/OS as a federated source; federated SQL JOINs, e.g. to HDFS (optional)
• DB2 Analytics Accelerator supporting fast SQL execution
• Data sources: e-mails, CC transcripts, Web logs, Twitter data, ...

Summary

Summary
• Spark on z/OS and Spark as part of IOP with BigInsights on distributed platforms enable new, innovative use case scenarios for z Systems clients, e.g.
  – Fraud discovery and prevention
  – Customer segmentation
  – Up-sell & cross-sell
  – Sentiment analytics
• Start embracing new themes, e.g.
  – z-centric data lake concepts
  – Leveraging Spark on z/OS
  – Integrating value from the distributed ecosystem
• Study the reference material
• Consider a development partnership with IBM

Eberhard Hechler
IBM Germany R&D Lab
ehechler@de.ibm.com
Session Code: F15
Use Cases at the Intersection of Apache Spark/Hadoop and IBM z Analytics
Please fill out your session evaluation before leaving!