UQC152H3 Advanced OS Memory Management

- Slides: 22

UQC152H3 Advanced OS Memory Management under Linux

Memory Addressing • Segmentation and paging background • Segmentation – Intel segmentation hardware – Linux use of Intel segmentation • Paging – Intel paging hardware – Linux use of Intel paging • Memory layout – Kernel layout in physical memory – Process virtual memory layout – Kernel virtual memory layout

Segmentation Background • Motivated by logical use of memory areas – Code, heap, stack, etc. • Base + offset – Segment registers contain base • Segments variable size (usually large) • Process as collection of segments – No notion of linear, contiguous memory – Similar to “multi-stream” files (Mac) • Requires “segment table”

Paging Background • Motivated by notion of linear, contiguous, "virtual" memory (space) – Every process has its own "zero" address • Uniform sized chunks (pages – 4 K on Intel) • Virtually contiguous pages may be physically scattered • Virtual space may have "holes" • Page table translates virtual "pages" to physical "page frames"

Intel Segmentation/Paging • Intel address terminology: – Logical – segment + offset – Linear (virtual) – 0..4 GB (64 GB with PAE) – Physical • Logical -> Linear -> Physical • Paging can be disabled • Segmentation required – Though you can just have one big segment
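The logical -> linear step can be sketched in a few lines of C. This is a simplified model, not the hardware's full check: the `struct segment` type and the `logical_to_linear()` name are hypothetical, and only a base and a byte-granular limit are modelled.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical, simplified segment: a base and a byte-granular limit. */
struct segment {
    uint32_t base;   /* linear address where the segment starts */
    uint32_t limit;  /* highest valid offset inside the segment */
};

/* Logical -> linear: add the offset to the segment base.
 * Returns false when the offset lies beyond the segment limit. */
bool logical_to_linear(const struct segment *seg, uint32_t offset,
                       uint32_t *linear)
{
    if (offset > seg->limit)
        return false;
    *linear = seg->base + offset;
    return true;
}
```

With "one big segment" (base 0, limit 0xFFFFFFFF) the linear address equals the offset, which is why a flat segment effectively switches segmentation off.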

Intel Segmentation Hardware • Segment registers: cs, ss, ds, es, fs, gs – Indices ("selectors") into "segment descriptor tables" • Segment descriptor tables: GDT, LDT – Global and local (per process, in theory) – Each holds up to 8192 descriptors • Segment descriptors: 8 bytes each – Base/limit, code/data privileges, type – Cached in read-only registers when segment registers are loaded • Task State Segment (TSS) descriptor – Special segment for holding process context
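A selector is a 16-bit value: on IA-32 the upper 13 bits index the descriptor table, bit 2 picks GDT or LDT, and the low 2 bits carry the requested privilege level. The sketch below decodes those fields; the struct and function names are made up for illustration.

```c
#include <stdint.h>

/* Fields of a 16-bit IA-32 segment selector (names are illustrative). */
struct selector_fields {
    unsigned index; /* bits 15..3: slot in the descriptor table */
    unsigned ti;    /* bit 2: 0 = GDT, 1 = LDT */
    unsigned rpl;   /* bits 1..0: requested privilege level */
};

struct selector_fields decode_selector(uint16_t sel)
{
    struct selector_fields f;
    f.index = sel >> 3;
    f.ti    = (sel >> 2) & 1u;
    f.rpl   = sel & 3u;
    return f;
}

/* Byte offset of the descriptor within its table: 8 bytes per descriptor. */
uint32_t descriptor_offset(uint16_t sel)
{
    return (uint32_t)(sel >> 3) * 8u;
}
```

A 13-bit index gives 2^13 = 8192 slots per table. As an example value, selector 0x23 decodes to index 4, GDT, privilege level 3.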

Segmentation in Linux • History – Early versions segmented; now paged – Using "shared" segments simplifies things – Some RISC chips don't support segmentation • Linux only uses GDT – LDTs allocated for Windows emulation – Each process has TSS and LDT descriptors – TSS is per-process; LDT is shared

Linux Descriptor Allocation • GDT holds 8192 descriptors – 4 primary, 4 APM, 4 unused – 8180 left / 2 -> 4090 processes (limit in 2.2) • Primary (shared) segments – Kernel code, kernel data – User code, user data – Segments overlap in linear address space • 2.4 removes 4 K process restriction
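The 4090-process limit follows directly from the descriptor budget on the slide. A minimal worked version of that arithmetic, with a hypothetical helper name:

```c
/* Descriptor budget from the slide: every process consumes one TSS
 * descriptor and one LDT descriptor in the GDT. The function name is
 * hypothetical; the figures passed in come from the slide. */
int max_processes(int gdt_entries, int reserved, int per_process)
{
    return (gdt_entries - reserved) / per_process;
}
```

Calling `max_processes(8192, 12, 2)` yields 4090: 12 reserved slots (4 primary, 4 APM, 4 unused) leave 8180, shared between two descriptors per process.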

Intel Paging Hardware • Page table "maps" linear address space – Some pages may be invalid – Address space grows/shrinks (mapping) • New regions mapped by DLLs, mmap(), brk() – Pages (linear) vs. page frames (physical) • Page may map to different frame after swap – Page tables stored in kernel data segment • Intel page size: 4 K or 4 M ("jumbo pages") – Jumbo pages reduce table size • Paging "enabled" by setting the PG bit in the cr0 register
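To see why jumbo pages reduce table size, it helps to count entries. The sketch below assumes 4-byte entries (32-bit paging without PAE); the function names are illustrative.

```c
#include <stdint.h>

/* Number of mapping entries needed to cover `space_bytes` of address
 * space with pages of `page_bytes` each. */
uint64_t entries_needed(uint64_t space_bytes, uint64_t page_bytes)
{
    return space_bytes / page_bytes;
}

/* Memory consumed by those entries, assuming `entry_bytes` per entry
 * (4 bytes for 32-bit non-PAE paging, an assumption of this sketch). */
uint64_t mapping_bytes(uint64_t space_bytes, uint64_t page_bytes,
                       uint64_t entry_bytes)
{
    return entries_needed(space_bytes, page_bytes) * entry_bytes;
}
```

Covering 4 GB with 4 K pages takes 1,048,576 entries (4 MB of entries); with 4 M pages it takes 1024 entries (4 KB), which fits in a single page directory.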

Intel Two-Level Paging • "Page table" is actually a two-level tree – Page Directory (root) – Page Tables (children) – Pages (grandchildren) • Linear address carved into three pieces – Directory (10), Table (10), Offset (12) – Entries: frame # + bookkeeping bits • Bookkeeping bits: – Present, Accessed, Dirty – Read/Write, User/Supervisor, Page Size • Jumbo pages – Map entire 4 GB address space with just top-level Directory – No need for Tables! (Kernel uses this technique)
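The 10/10/12 split and the resulting lookup can be sketched in user-space C. This is a simulation under simplifying assumptions: the directory holds C pointers rather than frame numbers, only the Present bit (bit 0) is checked, and all type and function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define PTE_PRESENT 0x001u       /* bit 0 of an entry: Present */
#define FRAME_MASK  0xFFFFF000u  /* upper 20 bits: frame base address */

struct linear_parts { uint32_t dir, table, offset; };

/* Carve a 32-bit linear address into Directory (10), Table (10), Offset (12). */
struct linear_parts split_linear(uint32_t addr)
{
    struct linear_parts p;
    p.dir    = addr >> 22;
    p.table  = (addr >> 12) & 0x3FFu;
    p.offset = addr & 0xFFFu;
    return p;
}

/* Simulated page directory: each slot points to a 1024-entry page table,
 * or is NULL when no table is present for that 4 M region. */
struct page_dir { uint32_t *tables[1024]; };

/* Walk directory -> table -> frame. Returns 1 and stores the physical
 * address on success, 0 if the mapping is absent (a "page fault"). */
int walk(const struct page_dir *pgd, uint32_t addr, uint32_t *phys)
{
    struct linear_parts p = split_linear(addr);
    const uint32_t *pt = pgd->tables[p.dir];
    if (pt == NULL)
        return 0;
    uint32_t pte = pt[p.table];
    if (!(pte & PTE_PRESENT))
        return 0;
    *phys = (pte & FRAME_MASK) | p.offset;
    return 1;
}
```

For example, linear address 0x08048123 splits into directory 32, table 72, offset 0x123; if table entry 72 holds frame 0x00ABC000 with the Present bit set, the walk yields physical address 0x00ABC123.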

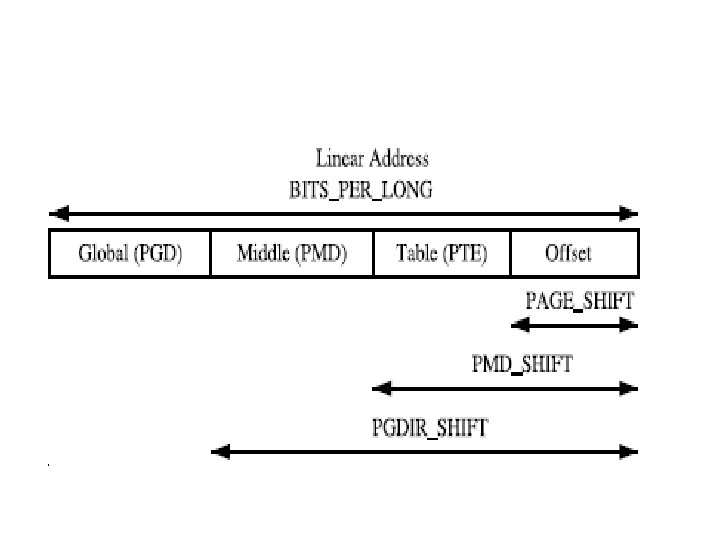

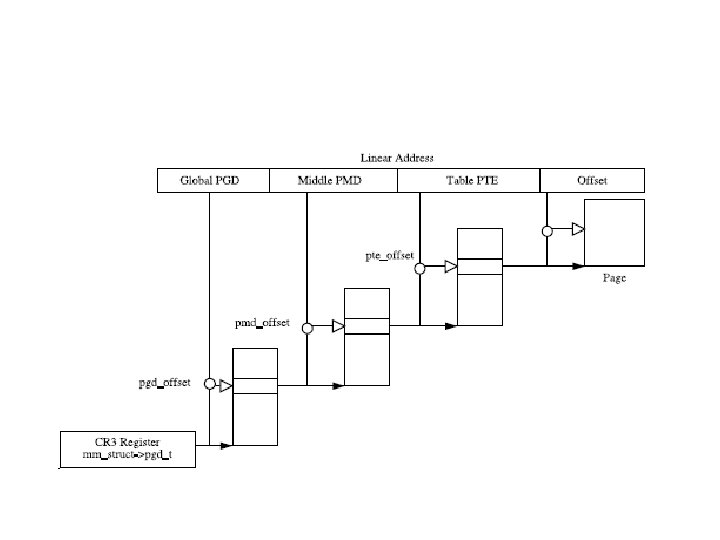

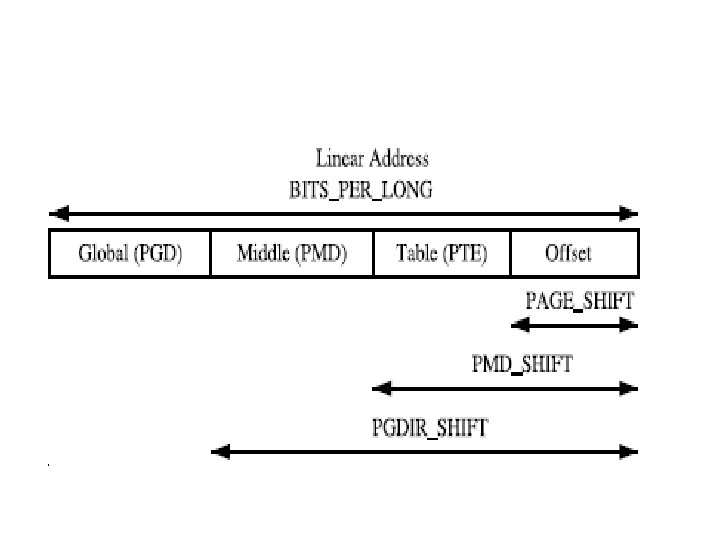

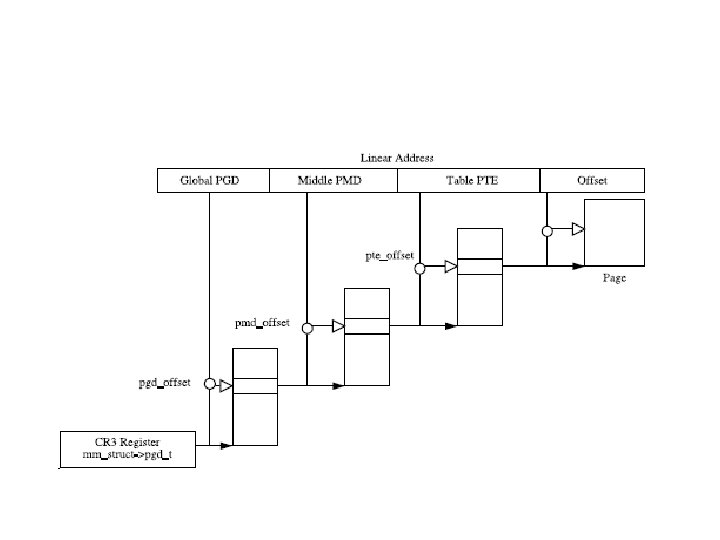

Three-Level Paging • How big are page tables on a 64-bit architecture? – Sparc, Alpha, Itanium – Assume 16 K pages => 32 M per process! • Better idea: three-level paging trees – Page Global Directory (pgd) – Page Middle Directory (pmd) – Page Table (pt) • Carve linear address into 4 pieces • Conceptual paging model – On Intel, page middle directory is compiled out

Aside: Caching • Exploit temporal and spatial locality • L1 and L2 caches (on chip) • Cache "lines" (32 bytes on Intel) • Kernel developer goals: – Keep frequently used struct fields in the same cache line! – Spread lines out for large data structures to keep cache utilized – The "Cache Police"
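The "same cache line" goal can be checked with `offsetof`. The struct below is a made-up example, the 32-byte line size is the figure from the slide, and the check assumes the struct itself starts on a cache-line boundary.

```c
#include <stddef.h>
#include <stdint.h>

#define CACHE_LINE 32  /* bytes per line, figure taken from the slide */

/* Hypothetical structure: hot fields grouped first, cold fields after. */
struct counter_block {
    uint32_t hits;       /* hot: touched on every lookup */
    uint32_t misses;     /* hot */
    uint32_t last_seen;  /* hot */
    char     name[52];   /* cold: read only when reporting */
    uint32_t created;    /* cold */
};

/* True when two field offsets fall in the same cache line, assuming the
 * enclosing struct is aligned to a cache-line boundary. */
int same_cache_line(size_t off_a, size_t off_b)
{
    return off_a / CACHE_LINE == off_b / CACHE_LINE;
}
```

Here `hits` and `last_seen` (offsets 0 and 8) share line 0, so one fill serves all the hot fields, while `created` (offset 64) sits two lines further on.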

TLB • Translation Look-aside Buffer – Virtual to physical cache • Must be flushed (invalidated) when address space mappings change • A significant cost of context switch
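A toy model makes the flush cost visible. The sketch assumes a tiny direct-mapped TLB, which is a simplification (real TLBs are typically set-associative or fully associative), and every name in it is hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define TLB_SLOTS 16  /* arbitrary size for the sketch */

struct tlb_entry { bool valid; uint32_t vpn; uint32_t pfn; };
struct tlb { struct tlb_entry slot[TLB_SLOTS]; };

/* Invalidate every cached translation, as after an address space switch. */
void tlb_flush(struct tlb *t)
{
    memset(t, 0, sizeof *t);
}

/* Cache a virtual-page -> page-frame translation (direct-mapped slot). */
void tlb_insert(struct tlb *t, uint32_t vpn, uint32_t pfn)
{
    struct tlb_entry *e = &t->slot[vpn % TLB_SLOTS];
    e->valid = true;
    e->vpn = vpn;
    e->pfn = pfn;
}

/* Returns true on a hit; on a miss the caller would walk the page tables. */
bool tlb_lookup(const struct tlb *t, uint32_t vpn, uint32_t *pfn)
{
    const struct tlb_entry *e = &t->slot[vpn % TLB_SLOTS];
    if (!e->valid || e->vpn != vpn)
        return false;
    *pfn = e->pfn;
    return true;
}
```

After `tlb_flush()` every lookup misses until the entries are refilled by page table walks, which is the hidden cost of a context switch that the slide refers to.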

Paging in Linux • Three-level scheme – Middle directories "collapsed" w/ macro tricks – No bits assigned for middle directory • On context switch (address space change) – Save/load registers in TSS – Install new top-level directory (write cr3) – Flush TLB • Lots of macros for: – Allocating/deallocating, altering, querying… – Page directories, tables, entries – Examples: • set_pte(), mk_pte(), pte_young(), new_page_tables()
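One way to picture the "collapsed" middle directory is a generic splitter whose per-level bit widths are parameters: giving the middle level zero bits makes the three-level scheme behave like two levels. This is a conceptual sketch, not the kernel's macro implementation; the struct and function names are hypothetical and the widths in the usage note are illustrative.

```c
#include <stdint.h>

/* Bit widths of each piece of a linear address (hypothetical type). */
struct paging_layout { unsigned pgd_bits, pmd_bits, pte_bits, page_shift; };

struct indices { uint64_t pgd, pmd, pte, offset; };

/* Mask with the low n bits set; n == 0 yields an empty mask. */
static uint64_t low_bits(unsigned n)
{
    return n >= 64 ? ~0ULL : (1ULL << n) - 1;
}

/* Carve an address into pgd / pmd / pte / offset pieces. Assumes the four
 * widths together total fewer than 64 bits. With pmd_bits == 0 the pmd
 * index is always 0, i.e. the middle level is folded away. */
struct indices split_address(const struct paging_layout *l, uint64_t addr)
{
    unsigned pte_shift = l->page_shift;
    unsigned pmd_shift = pte_shift + l->pte_bits;
    unsigned pgd_shift = pmd_shift + l->pmd_bits;
    struct indices ix;
    ix.offset = addr & low_bits(l->page_shift);
    ix.pte    = (addr >> pte_shift) & low_bits(l->pte_bits);
    ix.pmd    = (addr >> pmd_shift) & low_bits(l->pmd_bits);
    ix.pgd    = (addr >> pgd_shift) & low_bits(l->pgd_bits);
    return ix;
}
```

With the layout {10, 0, 10, 12}, address 0xC0100000 gives pgd 768, pmd 0, pte 256, offset 0, matching the Intel two-level split. A layout such as {10, 10, 10, 13} exercises all three levels.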

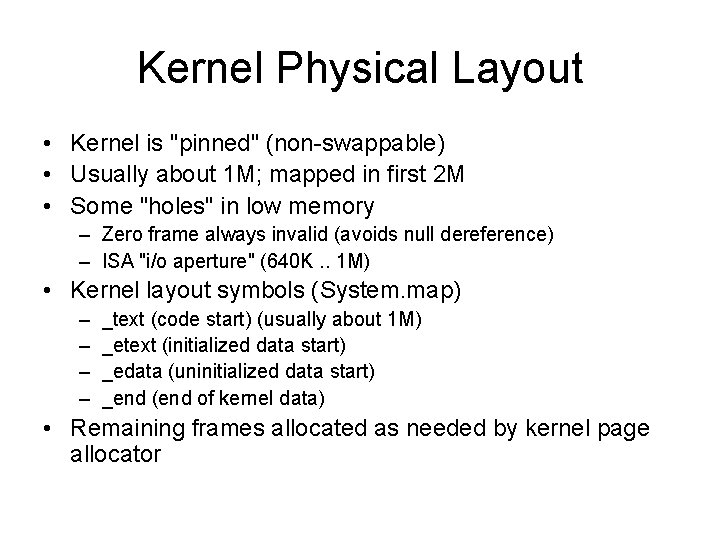

Kernel Physical Layout • Kernel is "pinned" (non-swappable) • Usually about 1 M; mapped in first 2 M • Some "holes" in low memory – Zero frame always invalid (avoids null dereference) – ISA "i/o aperture" (640 K..1 M) • Kernel layout symbols (System.map) – _text (code start) (usually about 1 M) – _etext (initialized data start) – _edata (uninitialized data start) – _end (end of kernel data) • Remaining frames allocated as needed by kernel page allocator

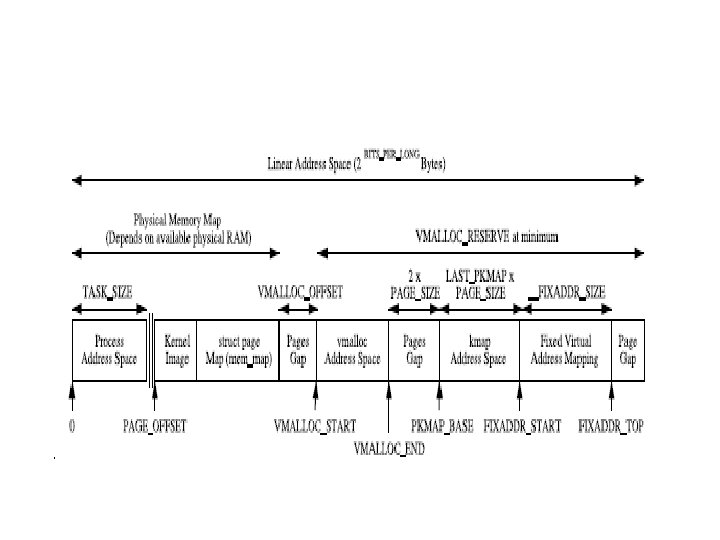

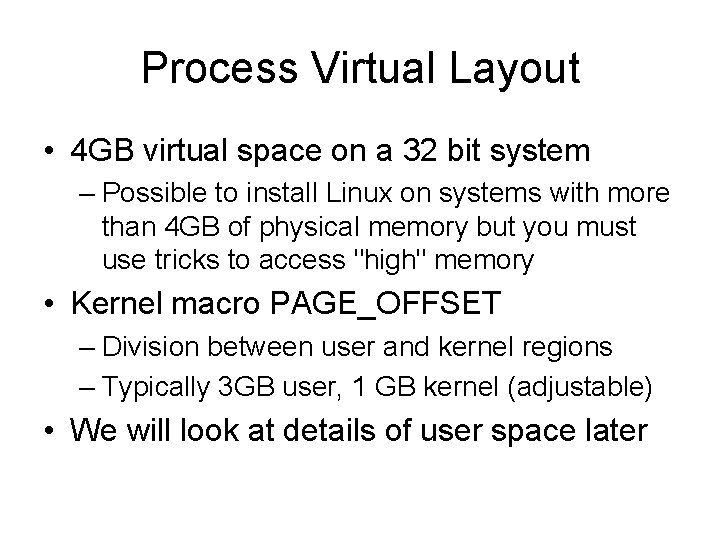

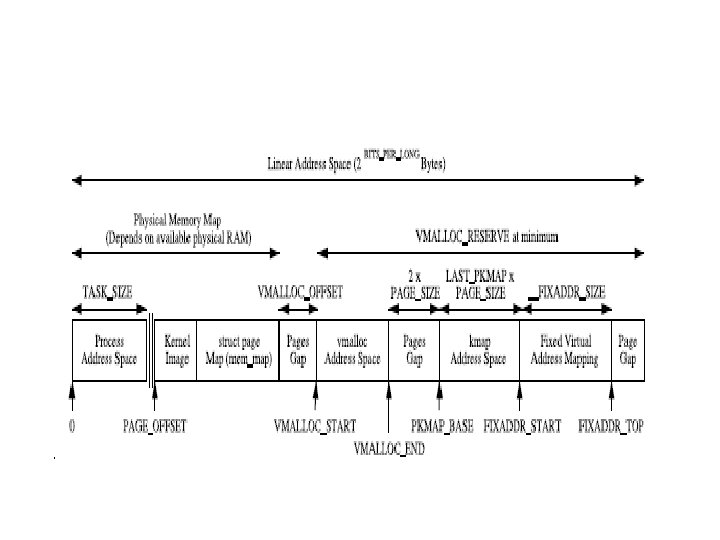

Process Virtual Layout • 4 GB virtual space on a 32-bit system – Linux can be installed on systems with more than 4 GB of physical memory, but the kernel must use tricks to access "high" memory • Kernel macro PAGE_OFFSET – Division between user and kernel regions – Typically 3 GB user, 1 GB kernel (adjustable) • We will look at details of user space later

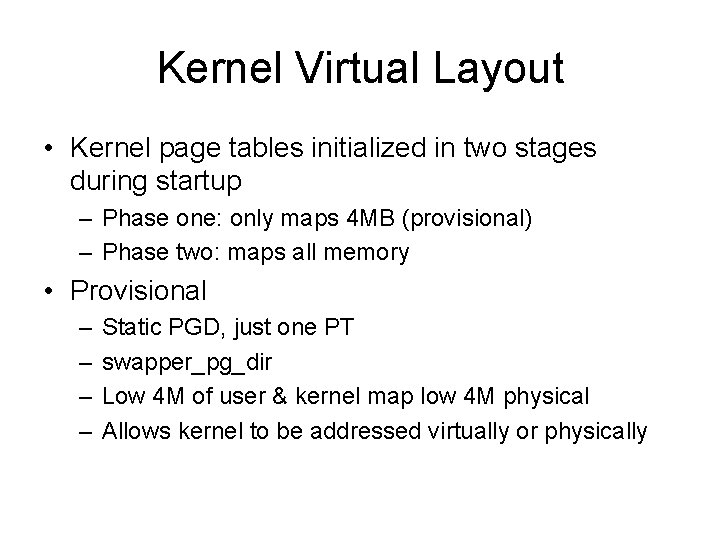

Kernel Virtual Layout • Kernel page tables initialized in two stages during startup – Phase one: only maps 4 MB (provisional) – Phase two: maps all memory • Provisional – Static PGD, just one PT – swapper_pg_dir – Low 4 M of user & kernel map low 4 M physical – Allows kernel to be addressed virtually or physically

Final Kernel Virtual Layout • Kernel code – Operates using linear (virtual) addresses – Macros to go from virtual to physical and back • __pa(virtual), __va(physical) • Kernel mapped using jumbo pages • Kernel virtual address space – Low physical frames (containing kernel) mapped to virtual addresses starting at PAGE_OFFSET – Remaining frames allocated on demand by kernel and user processes and mapped to virtual addresses
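For the directly mapped low frames, converting between physical and kernel virtual addresses is a constant offset. The user-space sketch below mirrors that arithmetic under the typical 3 GB / 1 GB split; the `sketch_` names and the constant are stand-ins for illustration, not the kernel's own definitions.

```c
#include <stdint.h>

/* Assumed user/kernel boundary for the typical 3 GB / 1 GB split. */
#define SKETCH_PAGE_OFFSET 0xC0000000u

/* Kernel virtual -> physical for the directly mapped region. */
uint32_t sketch_pa(uint32_t virt)
{
    return virt - SKETCH_PAGE_OFFSET;
}

/* Physical -> kernel virtual for the directly mapped region. */
uint32_t sketch_va(uint32_t phys)
{
    return phys + SKETCH_PAGE_OFFSET;
}

/* Addresses at or above the boundary belong to the kernel region. */
int is_kernel_address(uint32_t virt)
{
    return virt >= SKETCH_PAGE_OFFSET;
}
```

A kernel loaded at physical 1 M (0x00100000) is therefore visible at virtual 0xC0100000, and an address such as 0x08048000 falls in the user region.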

Summary • Intel hybrid segmented/paged architecture • Segment support used minimally • Linux has a conceptual 3-level page table • Kernel is mapped into high memory of every process address space but is stored in low physical memory