Unsupervised Machine Learning Claudio Duran Cannistraci lab BIOTEC

Unsupervised Machine Learning

Claudio Duran, Cannistraci lab, BIOTEC; Robert Haase, Myers lab, MPI CBG; Mahmood Nazari, ABX-Cro / Schroeder lab, BIOTEC; Martin Weigert, Myers lab, MPI CBG

https://www.biotec.tu-dresden.de/research/cannistraci/lecture.html

28.05.2019

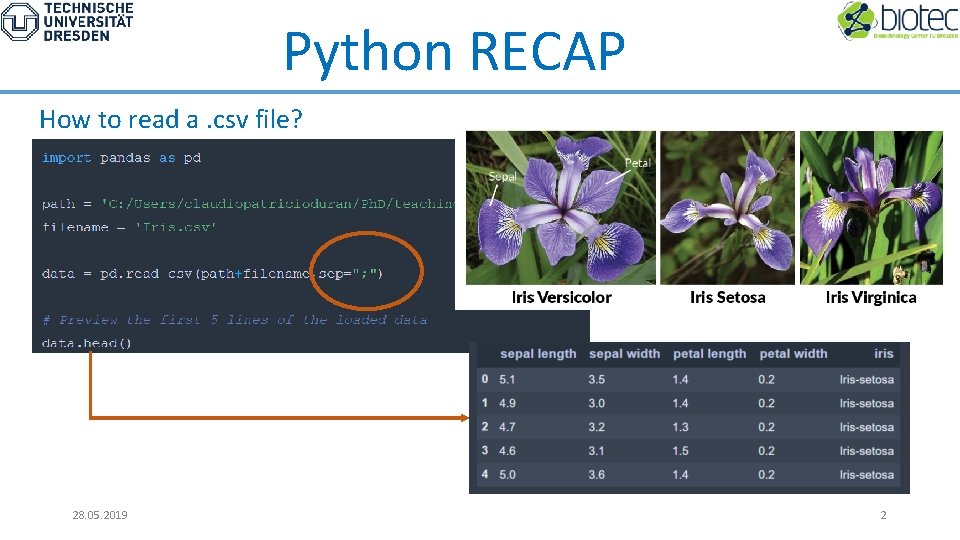

Python RECAP

How to read a .csv file?

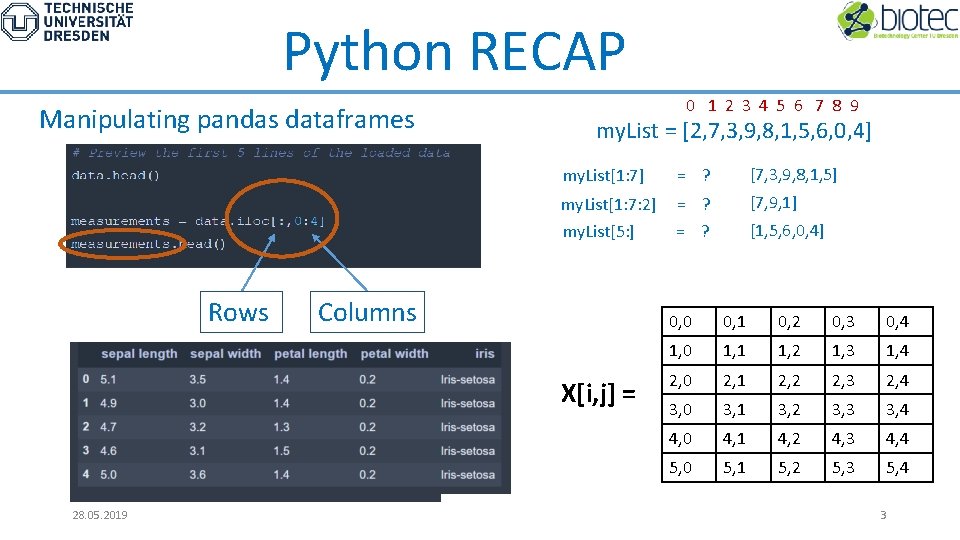
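As a reminder, a minimal sketch of reading a .csv file with pandas. The CSV content and column names here are made up for illustration; in practice you would pass a file path (for example a hypothetical `"data.csv"`) to `pd.read_csv` instead of an in-memory buffer.

```python
import io

import pandas as pd

# Hypothetical CSV content, used only so the example runs without a file on disk.
csv_text = "label,pixel0,pixel1\n5,0,12\n0,3,255\n"

# pd.read_csv accepts a path or any file-like object.
df = pd.read_csv(io.StringIO(csv_text))

print(df.head())   # first rows of the dataframe
print(df.shape)    # (number of rows, number of columns)
```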

Python RECAP

Manipulating pandas dataframes

Rows

Index: 0 1 2 3 4 5 6 7 8 9

myList = [2, 7, 3, 9, 8, 1, 5, 6, 0, 4]

myList[1:7] = ? [7, 3, 9, 8, 1, 5]

myList[1:7:2] = ? [7, 9, 1]

myList[5:] = ? [1, 5, 6, 0, 4]

Columns

X[i, j] = element in row i and column j:

0,0 0,1 0,2 0,3 0,4

1,0 1,1 1,2 1,3 1,4

2,0 2,1 2,2 2,3 2,4

3,0 3,1 3,2 3,3 3,4

4,0 4,1 4,2 4,3 4,4

5,0 5,1 5,2 5,3 5,4

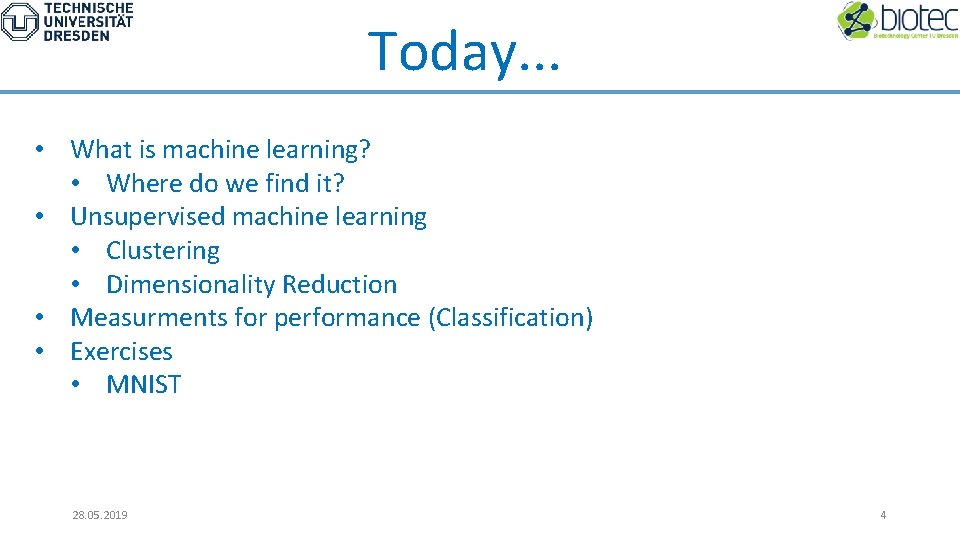
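The slicing rules above can be tried directly. This is a small sketch: the 6 x 5 dataframe `X` is filled with placeholder numbers, and `iloc` is used as one common way to index a dataframe by row and column position.

```python
import numpy as np
import pandas as pd

my_list = [2, 7, 3, 9, 8, 1, 5, 6, 0, 4]

rows_1_to_6 = my_list[1:7]      # start at index 1, stop before index 7
every_second = my_list[1:7:2]   # same range, taking every second element
from_5 = my_list[5:]            # from index 5 to the end

# Placeholder 6 x 5 dataframe holding the values 0..29, row by row.
X = pd.DataFrame(np.arange(30).reshape(6, 5))

element = X.iloc[2, 3]          # row 2, column 3
first_rows = X.iloc[1:3, :]     # rows 1 and 2, all columns
some_cols = X.iloc[:, [0, 4]]   # all rows, columns 0 and 4
```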

Today...

• What is machine learning?
• Where do we find it?
• Unsupervised machine learning
• Clustering
• Dimensionality Reduction
• Performance measurements (Classification)
• Exercises
• MNIST

Machine learning

Use of AI to give systems the ability to learn from experience.

• Search engines
• Anti-spam software
• Credit card transactions
• Digital cameras (face recognition)

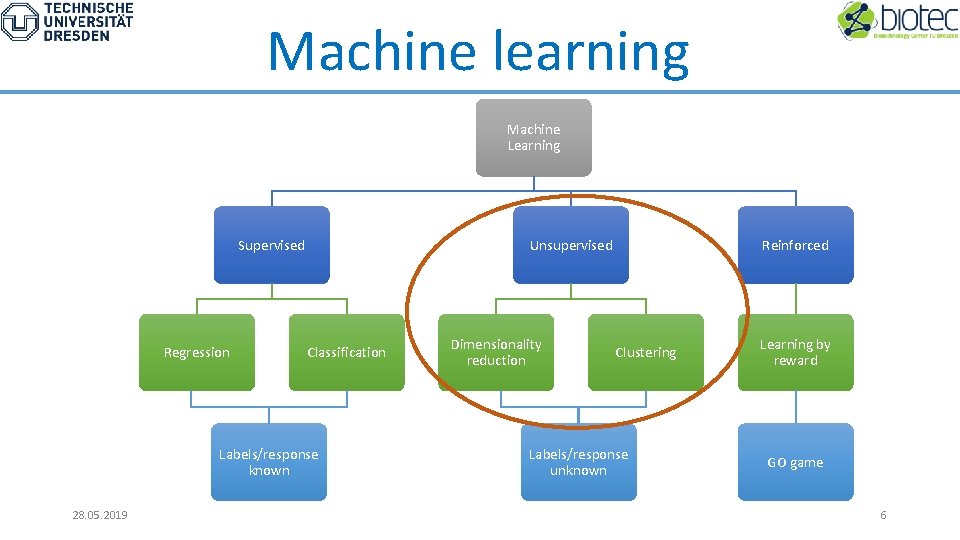

Machine learning

• Supervised (labels/response known): Regression, Classification
• Unsupervised (labels/response unknown): Dimensionality reduction, Clustering
• Reinforced (learning by reward): e.g. the game of Go

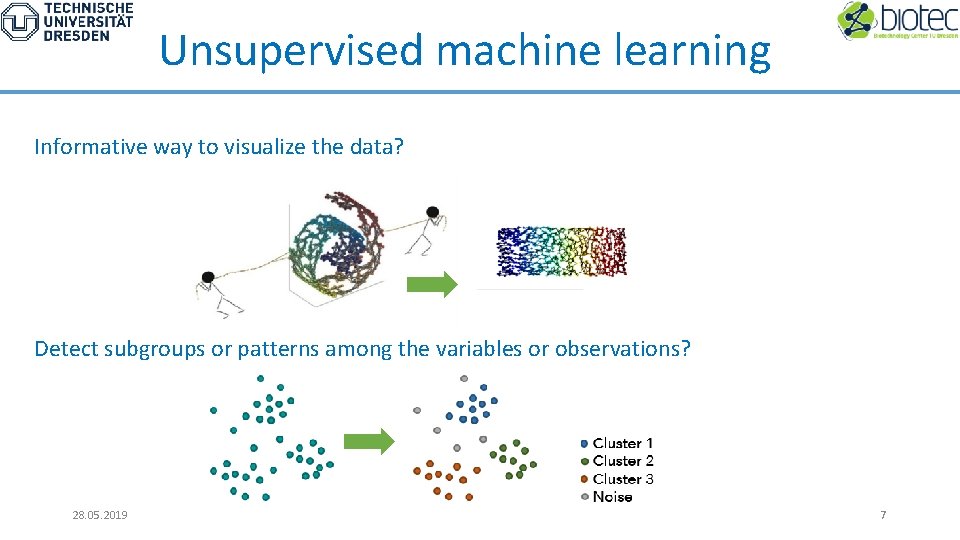

Unsupervised machine learning

• Is there an informative way to visualize the data?
• Can we detect subgroups or patterns among the variables or observations?

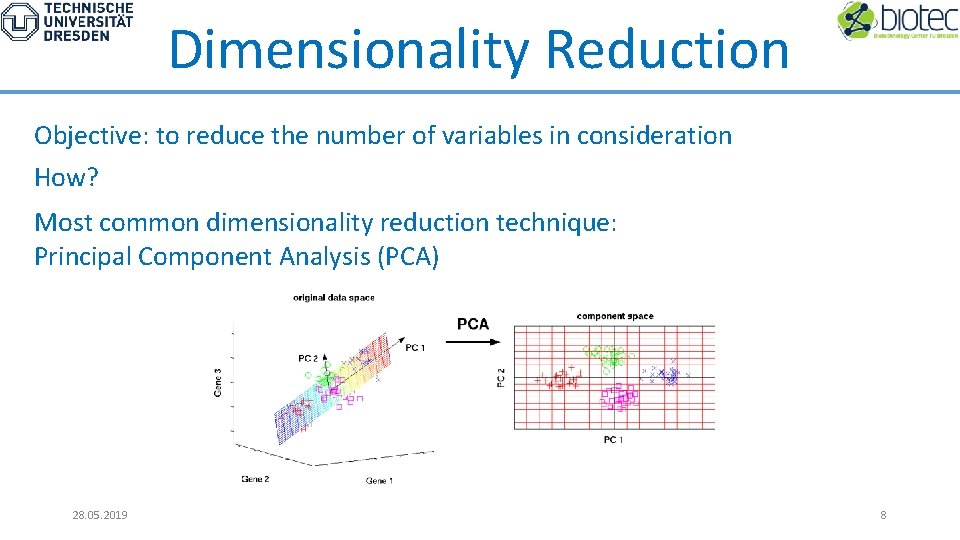

Dimensionality Reduction

Objective: to reduce the number of variables under consideration.

How? The most common dimensionality reduction technique is Principal Component Analysis (PCA).

PCA

Finds a sequence of linear combinations of the variables that have maximal variance and are mutually uncorrelated.

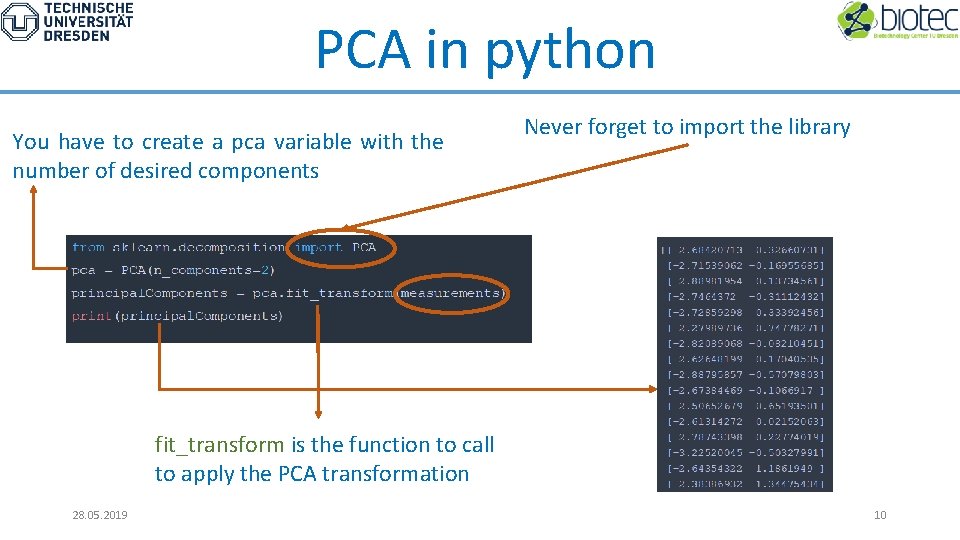
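To make the definition concrete, here is a minimal NumPy sketch of PCA via the singular value decomposition of the centered data. The toy data and all variable names are assumptions made for illustration. It shows that the resulting components come out ordered by decreasing variance and that their covariance matrix is diagonal, i.e. they are mutually uncorrelated.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (assumption): 200 samples of 3 correlated variables.
latent = rng.normal(size=(200, 2))
mixing = np.array([[2.0, 1.0, 0.5], [0.0, 1.0, -1.0]])
X = latent @ mixing + 0.1 * rng.normal(size=(200, 3))

# Center each variable, then decompose.
Xc = X - X.mean(axis=0)
U, S, Vt = np.linalg.svd(Xc, full_matrices=False)

# Rows of Vt are the linear combinations (principal directions);
# projecting the centered data on them gives the principal components.
scores = Xc @ Vt.T

# Variance of each component, in decreasing order.
explained_variance = S**2 / (len(X) - 1)

# Covariance of the components: off-diagonal entries are (numerically) zero.
cov_scores = np.cov(scores, rowvar=False)
print(np.round(cov_scores, 6))
```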

PCA in python

• Never forget to import the library.
• You have to create a pca variable with the number of desired components.
• fit_transform is the function to call to apply the PCA transformation.

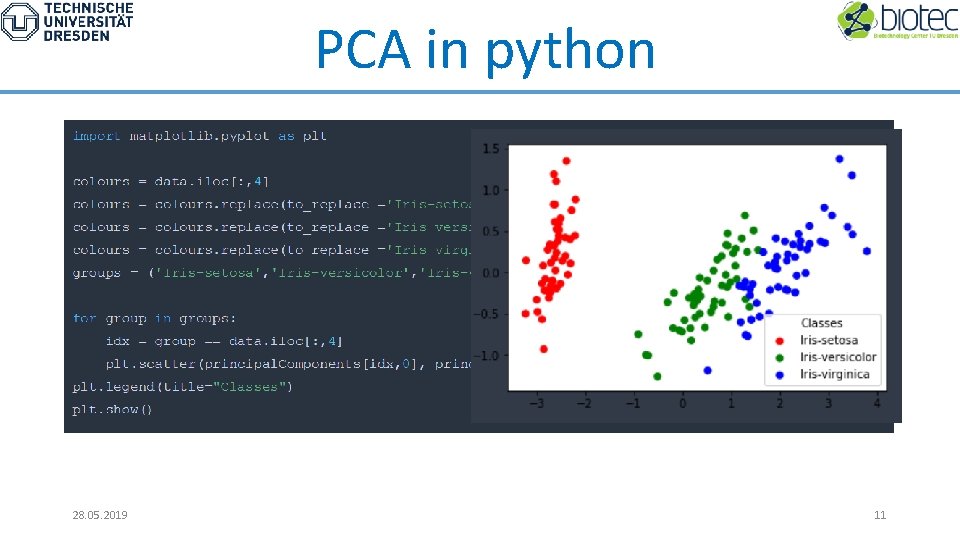
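The three points above could look as follows with scikit-learn. This is a sketch, not necessarily the code on the slide: the random matrix `X` is placeholder data, and the choice of two components is an assumption.

```python
import numpy as np
from sklearn.decomposition import PCA  # import the library first

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 10))         # placeholder data: 100 samples, 10 variables

pca = PCA(n_components=2)              # pca variable with the desired number of components
X_2d = pca.fit_transform(X)            # fit the model and apply the transformation

print(X_2d.shape)                      # one row per sample, one column per component
print(pca.explained_variance_ratio_)   # fraction of variance kept by each component
```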

PCA in python

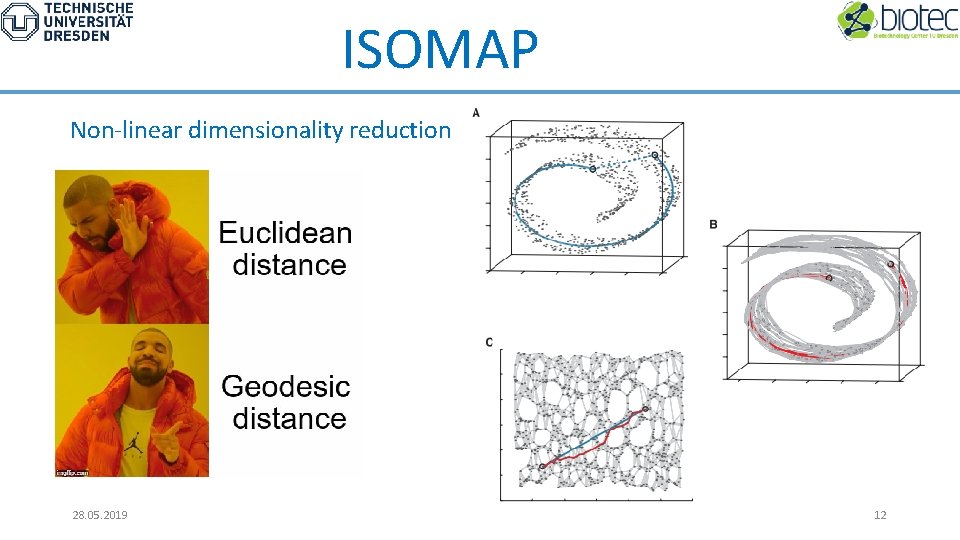

ISOMAP

Non-linear dimensionality reduction

ISOMAP

In Python it is used in the same way as PCA.

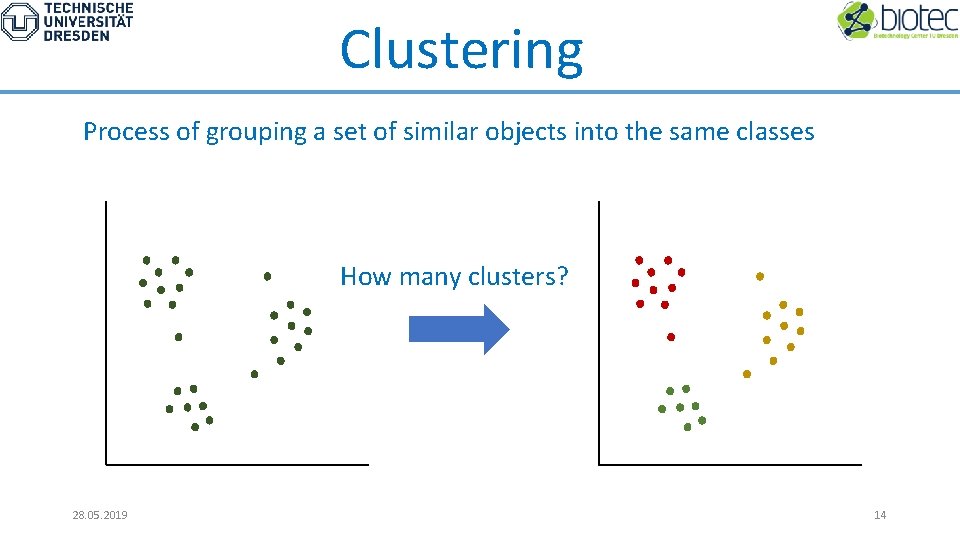
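A hedged sketch of ISOMAP with scikit-learn, following the same pattern as PCA (create the object, then call `fit_transform`). The spiral-shaped toy data and the value `n_neighbors=10` are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.manifold import Isomap

rng = np.random.default_rng(0)

# Toy non-linear data (assumption): points along a spiral in 3D.
t = np.sort(rng.uniform(0, 3 * np.pi, 150))
X = np.column_stack([t * np.cos(t), t * np.sin(t), rng.uniform(0, 1, 150)])

iso = Isomap(n_neighbors=10, n_components=2)  # neighbourhood size is a tunable choice
X_iso = iso.fit_transform(X)

print(X_iso.shape)
```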

Clustering

Process of grouping similar objects into the same class.

How many clusters?

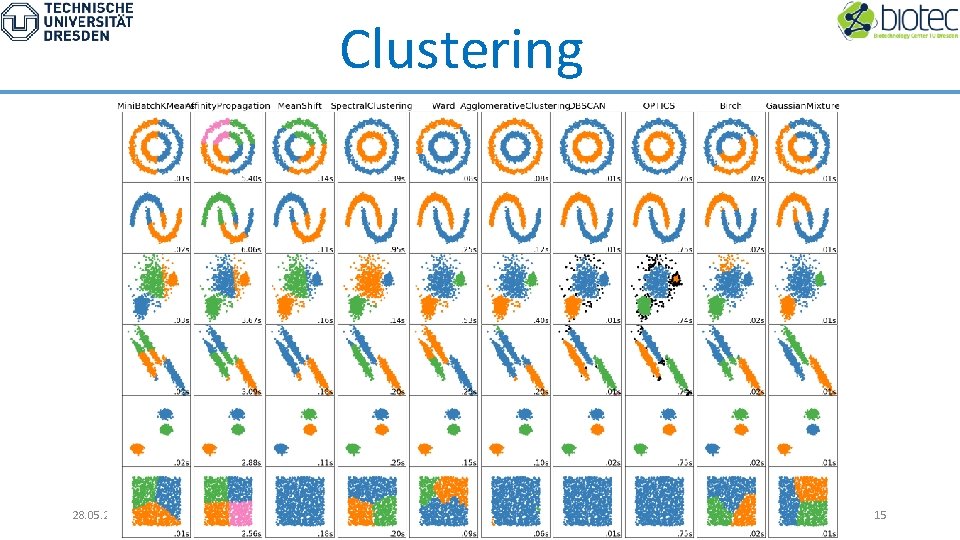

Clustering

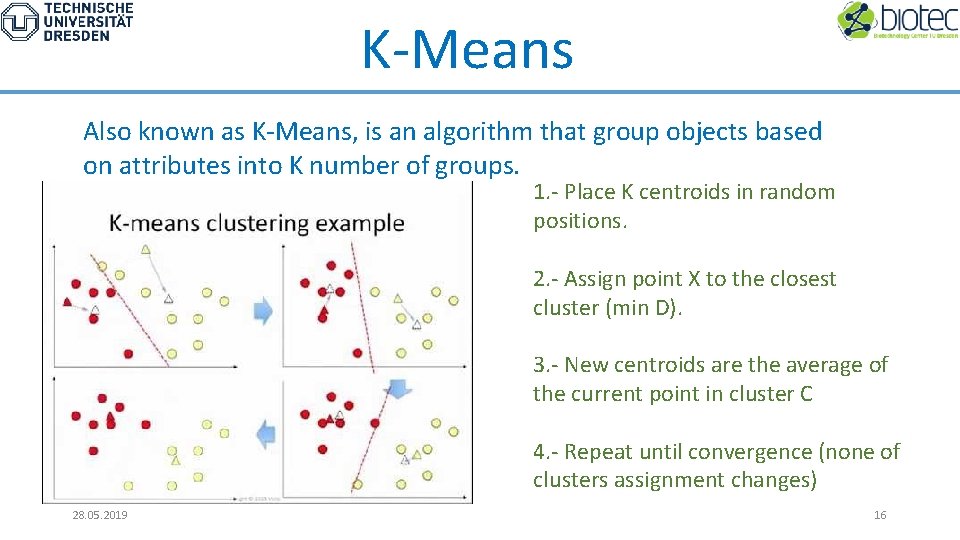

K-Means

K-Means is an algorithm that groups objects, based on their attributes, into K groups.

1. Place K centroids at random positions.
2. Assign each point X to the closest centroid (minimum distance D).
3. Recompute each centroid as the average of the points currently in its cluster C.
4. Repeat steps 2 and 3 until convergence (no cluster assignment changes).

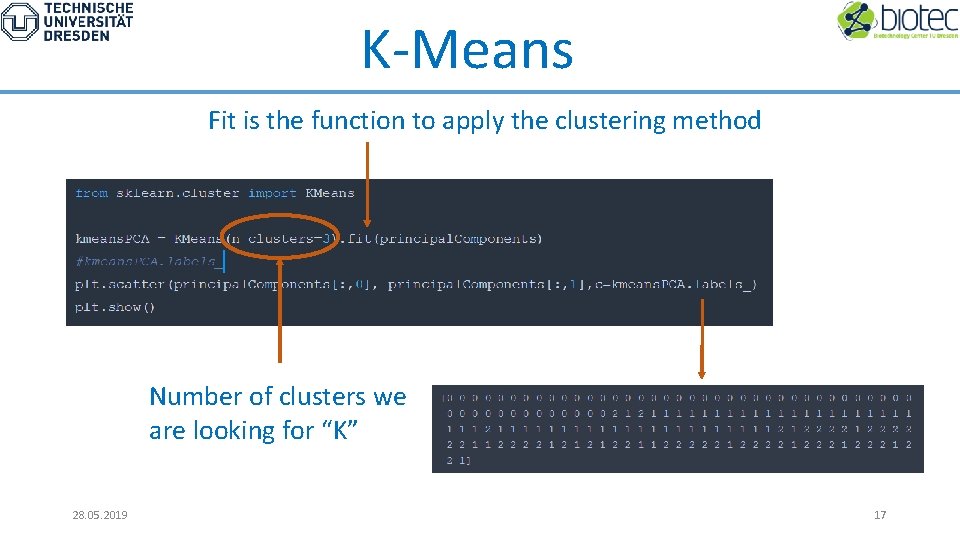
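The four steps can be written out in a few lines of NumPy. This is a teaching sketch under stated assumptions, not the scikit-learn implementation: the function name `kmeans`, the Euclidean distance, the initialisation at randomly chosen data points, and the toy data are all choices made here for illustration.

```python
import numpy as np

def kmeans(X, k, rng, max_iter=100):
    # Step 1: start from k centroids placed at randomly chosen data points.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    labels = None
    for _ in range(max_iter):
        # Step 2: assign each point to its closest centroid (Euclidean distance).
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        new_labels = dists.argmin(axis=1)
        # Step 4: stop as soon as no assignment changes.
        if labels is not None and np.array_equal(new_labels, labels):
            break
        labels = new_labels
        # Step 3: move each centroid to the average of the points assigned to it.
        for j in range(k):
            if np.any(labels == j):
                centroids[j] = X[labels == j].mean(axis=0)
    return labels, centroids

rng = np.random.default_rng(0)
# Toy data (assumption): two well-separated groups of 50 points each.
X = np.vstack([
    rng.normal(0.0, 0.5, size=(50, 2)),
    rng.normal(5.0, 0.5, size=(50, 2)),
])

labels, centroids = kmeans(X, k=2, rng=rng)
print(centroids)
```

Because the starting centroids are random, different runs can converge to different solutions on less clearly separated data.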

K-Means

• The number of clusters we are looking for is "K".
• fit is the function that applies the clustering method.

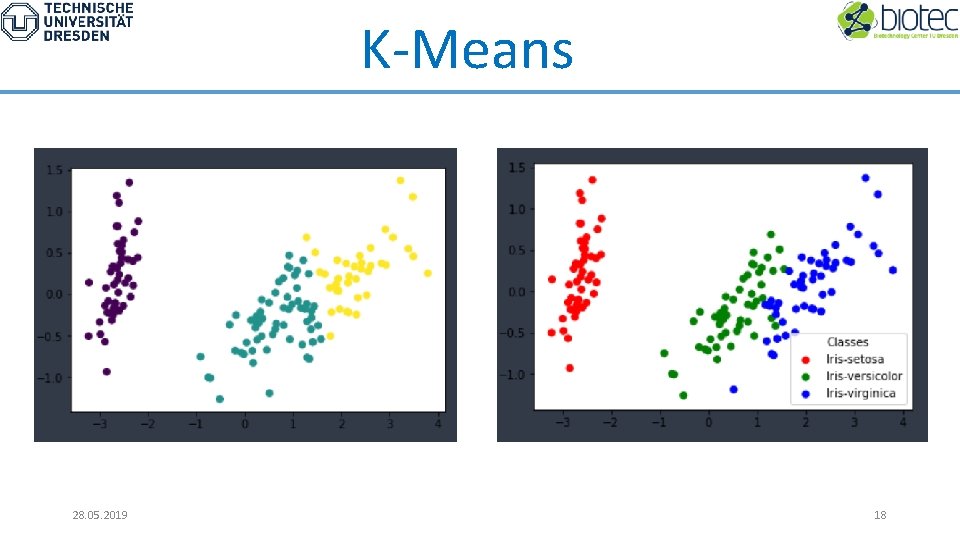
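With scikit-learn, this could look like the sketch below. The toy data, `n_init=10` and `random_state=0` are assumptions made so the example is reproducible.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(0)
# Toy data (assumption): three groups of 40 points each.
X = np.vstack([
    rng.normal([0.0, 0.0], 0.5, size=(40, 2)),
    rng.normal([5.0, 5.0], 0.5, size=(40, 2)),
    rng.normal([0.0, 5.0], 0.5, size=(40, 2)),
])

kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)  # K = 3
kmeans.fit(X)                                             # apply the clustering

labels = kmeans.labels_            # cluster index assigned to each sample
centers = kmeans.cluster_centers_  # coordinates of the K centroids
print(labels[:10], centers.shape)
```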

K-Means

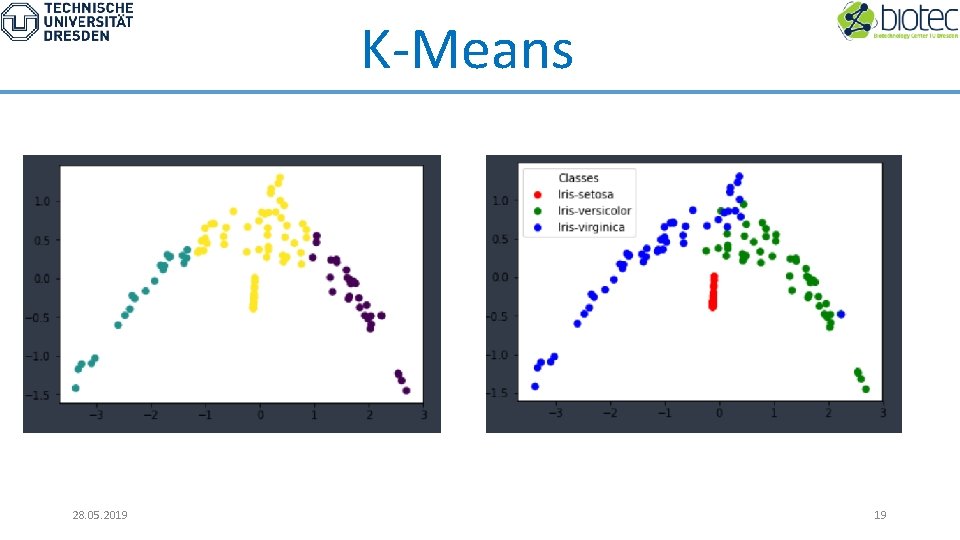

K-Means

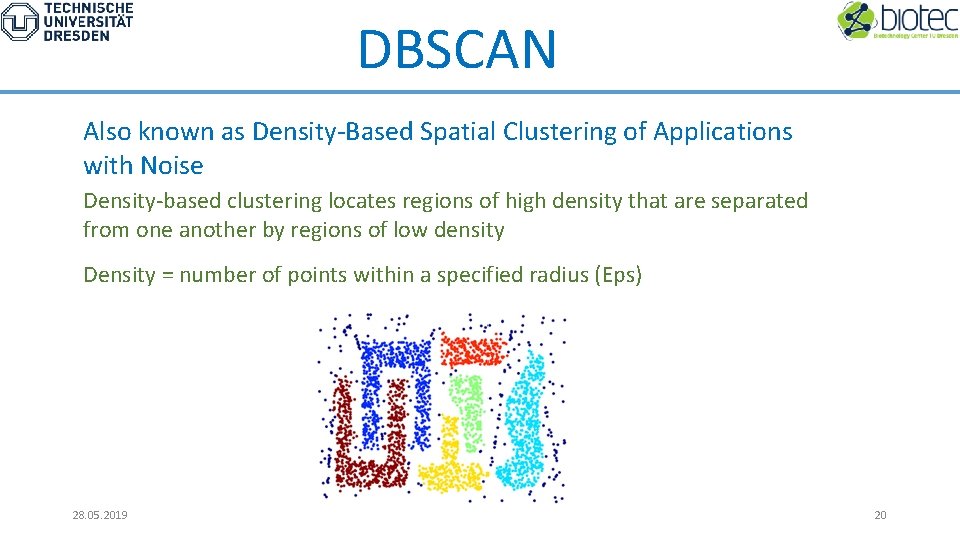

DBSCAN

Short for Density-Based Spatial Clustering of Applications with Noise.

Density-based clustering locates regions of high density that are separated from one another by regions of low density.

Density = number of points within a specified radius (Eps)

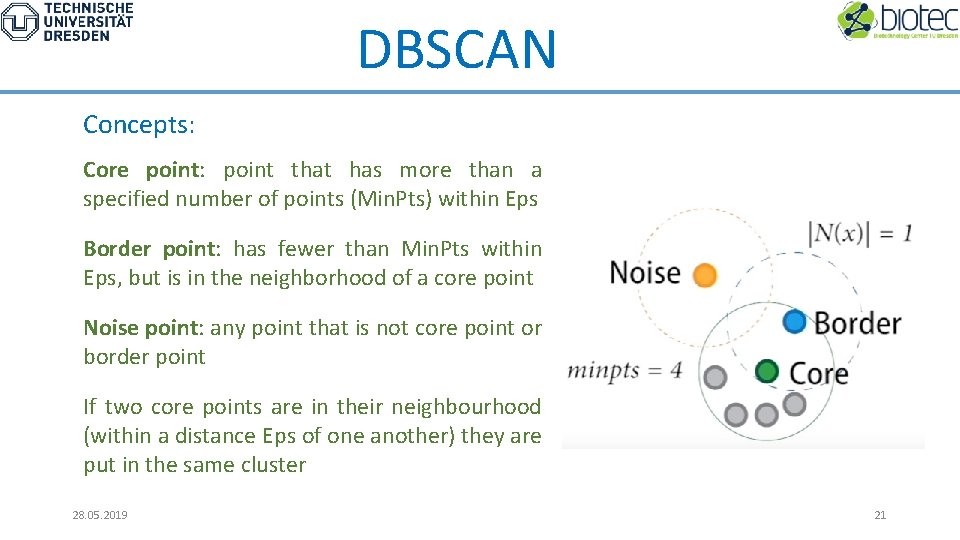

DBSCAN

Concepts:

• Core point: a point that has more than a specified number of points (MinPts) within Eps.
• Border point: a point that has fewer than MinPts within Eps, but is in the neighbourhood of a core point.
• Noise point: any point that is neither a core point nor a border point.

If two core points are in each other's neighbourhood (within a distance Eps of one another), they are put in the same cluster.

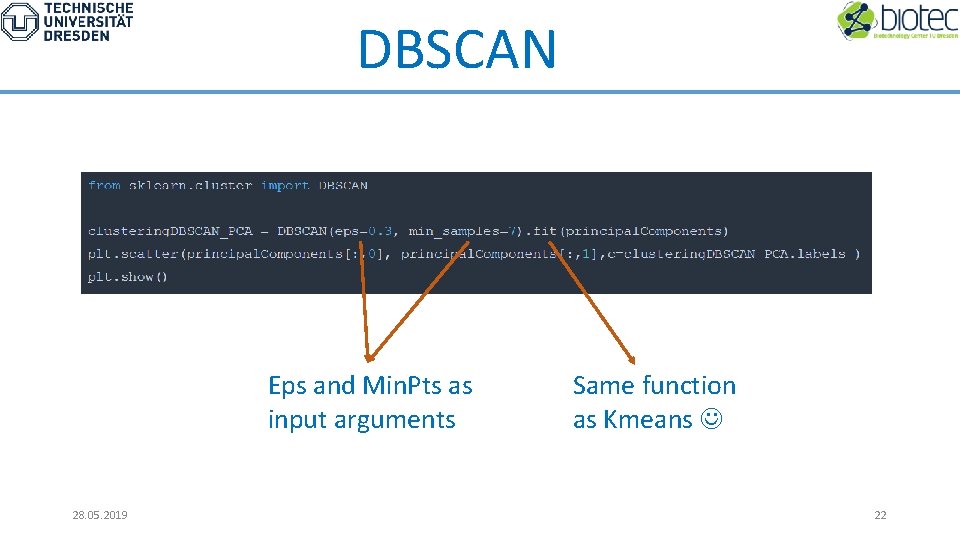
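The three point types can be illustrated with a short NumPy sketch. The function name `classify_points` and the six example points are hypothetical. Note one assumption about the threshold: this sketch counts the point itself among its neighbours and requires at least `min_pts` of them, which is the convention scikit-learn uses for `min_samples`; other texts state the threshold slightly differently.

```python
import numpy as np

def classify_points(X, eps, min_pts):
    # Pairwise Euclidean distances between all points.
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    neighbours = dists <= eps                      # includes the point itself
    # Core: at least min_pts points (itself included) within eps.
    is_core = neighbours.sum(axis=1) >= min_pts
    # Border: not core, but within eps of at least one core point.
    near_core = (neighbours & is_core[None, :]).any(axis=1)
    is_border = ~is_core & near_core
    # Noise: neither core nor border.
    is_noise = ~is_core & ~is_border
    return is_core, is_border, is_noise

# Hypothetical points: a small square, one point attached to it, one far away.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1], [2, 1], [10, 10]], dtype=float)
is_core, is_border, is_noise = classify_points(X, eps=1.0, min_pts=3)

print(is_core)    # the four corners of the square
print(is_border)  # the point at (2, 1), next to the core point (1, 1)
print(is_noise)   # the isolated point at (10, 10)
```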

DBSCAN

• Eps and MinPts are the input arguments.
• The same function as for K-Means (fit) applies the clustering.

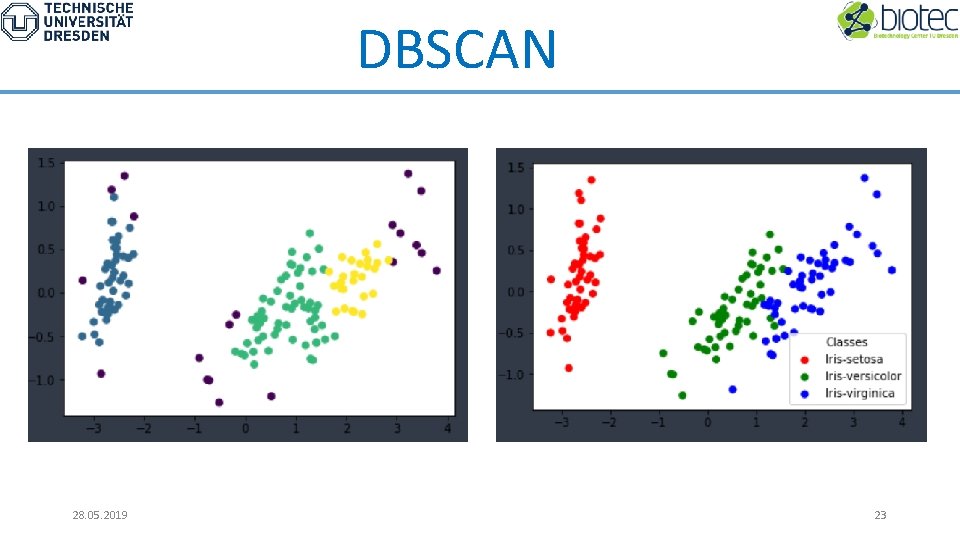
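A sketch of the corresponding scikit-learn call, where Eps and MinPts are passed as `eps` and `min_samples`. The toy data and the parameter values are assumptions; in scikit-learn, points labelled `-1` are noise.

```python
import numpy as np
from sklearn.cluster import DBSCAN

rng = np.random.default_rng(0)
# Toy data (assumption): two dense groups plus one isolated point.
X = np.vstack([
    rng.normal([0.0, 0.0], 0.2, size=(50, 2)),
    rng.normal([5.0, 5.0], 0.2, size=(50, 2)),
    [[20.0, 20.0]],
])

db = DBSCAN(eps=0.8, min_samples=5)  # Eps and MinPts
db.fit(X)                            # same call as for K-Means

labels = db.labels_                  # -1 marks noise points
n_clusters = len(set(labels.tolist())) - (1 if -1 in labels else 0)
print(n_clusters, labels[-1])
```

Unlike K-Means, the number of clusters is not an input here; it follows from the density parameters.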

DBSCAN

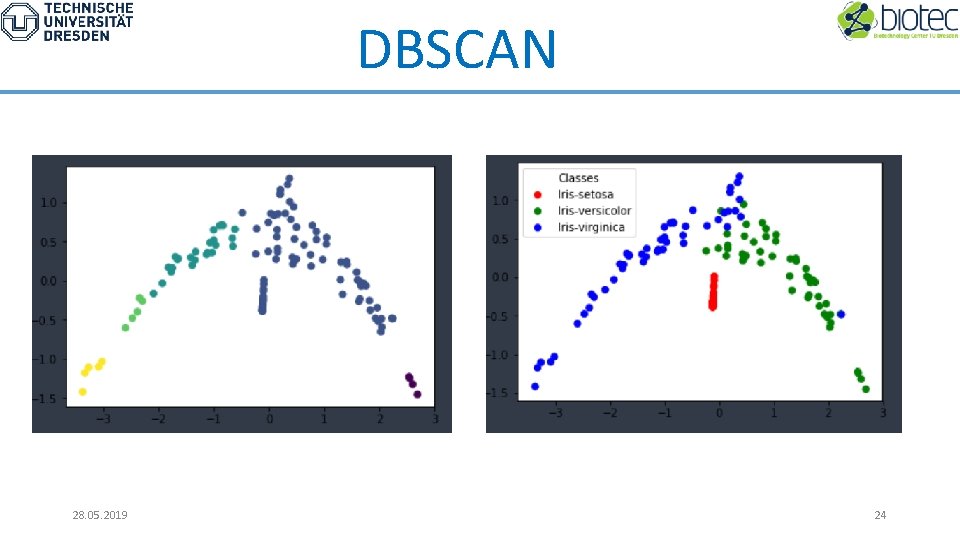

DBSCAN

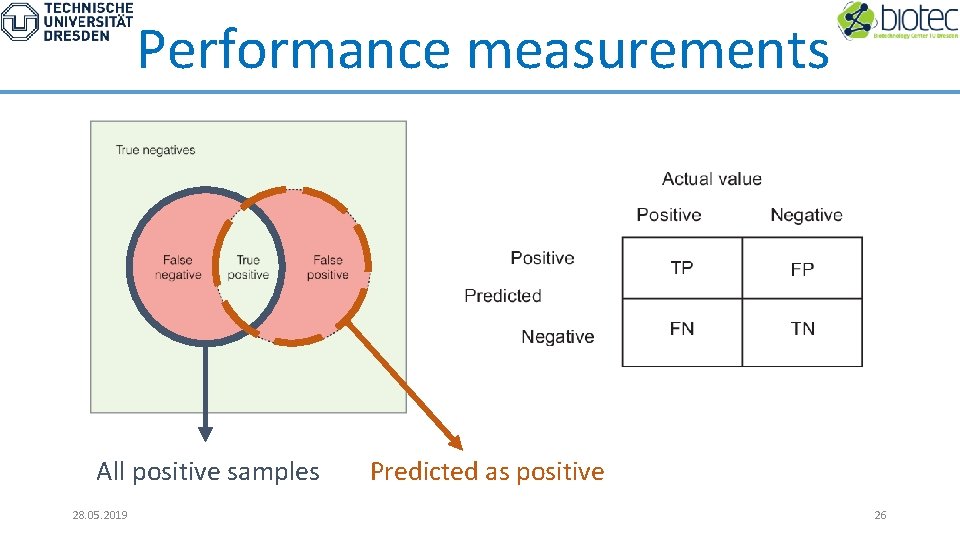

Performance measurements

Usually applied in binary classification tasks (supervised learning):

- Accuracy
- Sensitivity (or recall, or true positive rate [TPR])
- Specificity (or true negative rate [TNR])
- Precision (or positive predictive value [PPV])
- F-score
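These measures are all computed from the four counts of a binary confusion matrix: true positives (TP), true negatives (TN), false positives (FP) and false negatives (FN). The sketch below uses the standard definitions; the function name `binary_metrics` and the example labels are made up, and the F-score shown is the F1 variant (harmonic mean of precision and recall).

```python
def binary_metrics(y_true, y_pred):
    # Count the four outcomes, with 1 = positive and 0 = negative.
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    # Note: no guard against empty denominators in this sketch.
    sensitivity = tp / (tp + fn)          # recall, TPR
    specificity = tn / (tn + fp)          # TNR
    precision = tp / (tp + fp)            # PPV
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f_score": 2 * precision * sensitivity / (precision + sensitivity),
    }

# Made-up example: 4 positive and 6 negative samples.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```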

Performance measurements

Figure labels: all positive samples; samples predicted as positive.

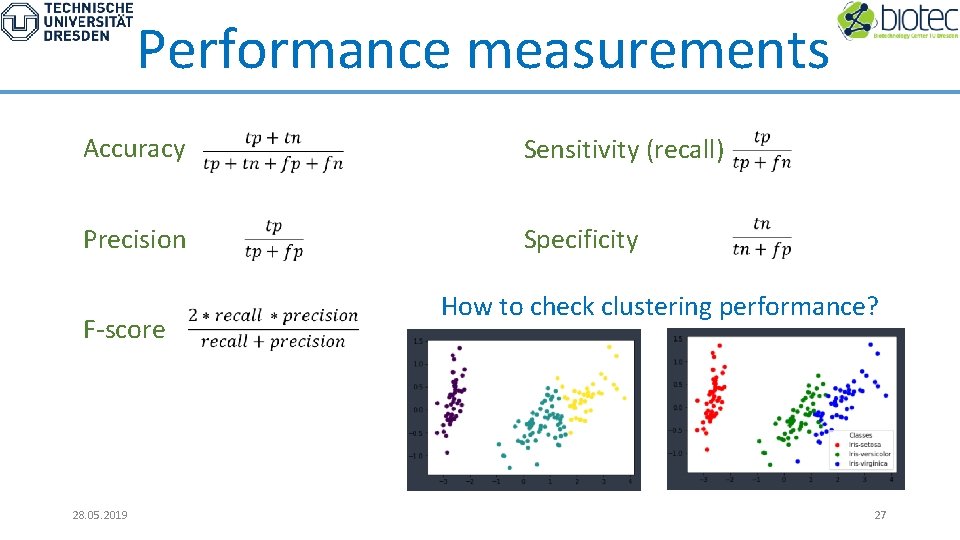

Performance measurements

Accuracy, Sensitivity (recall), Specificity, Precision, F-score

How to check clustering performance?
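One possible way to score a clustering when the true labels are known: give every cluster the most frequent true label among its members, then count how many samples agree with it. This is only a sketch of one option (often called purity), not necessarily the measure intended in the lecture; the function name `cluster_accuracy` and the example labels are made up.

```python
import numpy as np

def cluster_accuracy(true_labels, cluster_labels):
    true_labels = np.asarray(true_labels)
    cluster_labels = np.asarray(cluster_labels)
    correct = 0
    for c in np.unique(cluster_labels):
        members = true_labels[cluster_labels == c]
        # Size of the largest group of identical true labels in this cluster.
        _, counts = np.unique(members, return_counts=True)
        correct += counts.max()
    # Well-assigned samples divided by the total number of samples.
    return correct / len(true_labels)

# Made-up example: cluster indices need not match the class numbers.
true_labels = [0, 0, 0, 1, 1, 1, 2, 2, 2]
cluster_labels = [1, 1, 1, 0, 0, 2, 2, 2, 2]
acc = cluster_accuracy(true_labels, cluster_labels)
print(acc)
```

Keep in mind that this score tends to increase with the number of clusters, and that DBSCAN noise points (label -1 in scikit-learn) are treated here as one more cluster.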

Exercises!

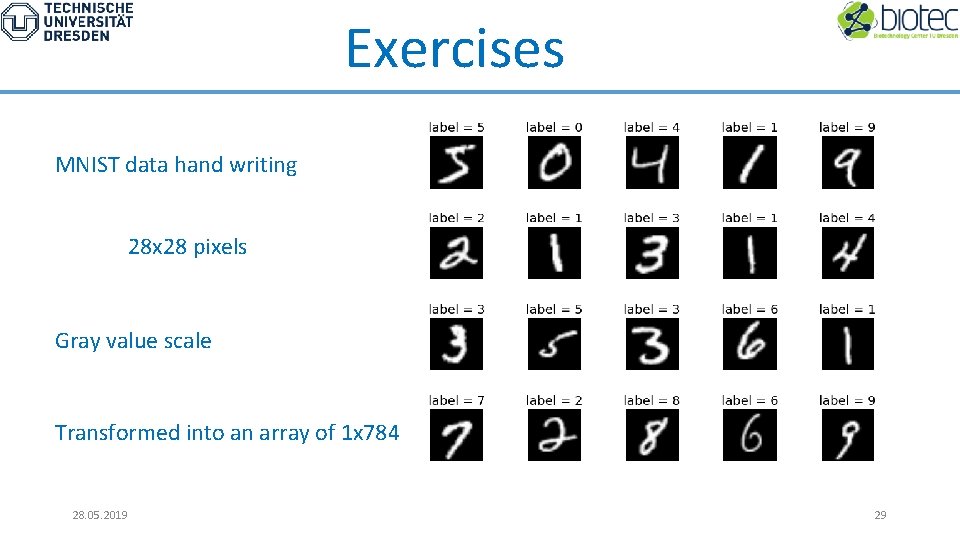

Exercises

MNIST data: handwritten digits

• 28 x 28 pixels
• Gray value scale
• Transformed into an array of 1 x 784

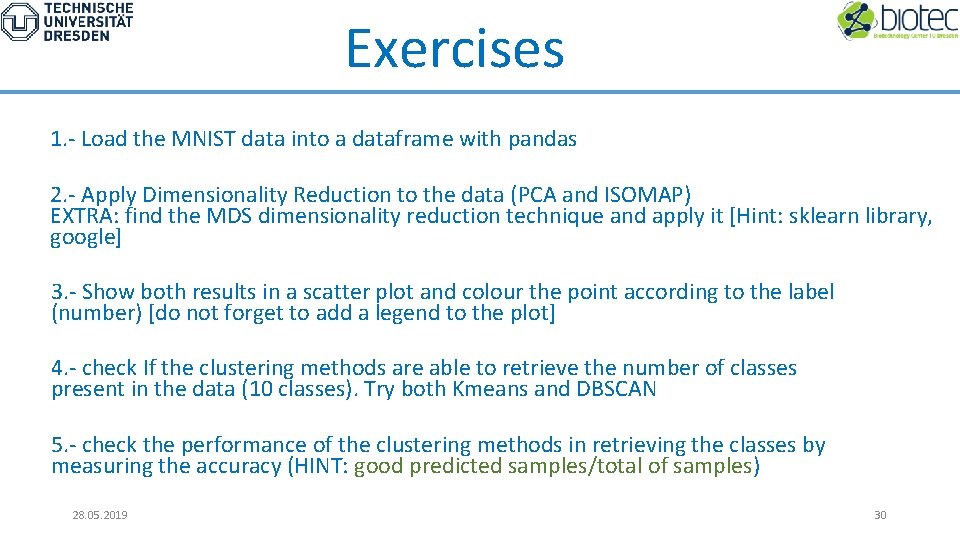
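The transformation from a 28 x 28 image to a 1 x 784 array is a plain reshape, and it can be undone in the same way, for instance to display a digit again. The sketch below uses a placeholder array instead of real MNIST pixels.

```python
import numpy as np

# Placeholder "image" (assumption): values 0..783 instead of real gray values.
image = np.arange(784).reshape(28, 28)

row = image.reshape(1, 784)      # flatten row by row into a 1 x 784 array
restored = row.reshape(28, 28)   # back to the 28 x 28 image

print(row.shape, restored.shape)
```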

Exercises

1. Load the MNIST data into a dataframe with pandas.
2. Apply dimensionality reduction to the data (PCA and ISOMAP). EXTRA: look up the MDS dimensionality reduction technique and apply it [Hint: sklearn library, Google].
3. Show both results in a scatter plot and colour the points according to the label (number) [do not forget to add a legend to the plot].
4. Check whether the clustering methods are able to retrieve the number of classes present in the data (10 classes). Try both K-Means and DBSCAN.
5. Check the performance of the clustering methods in retrieving the classes by measuring the accuracy (HINT: correctly predicted samples / total number of samples).
