
Unsupervised learning

- The Hebb rule: neurons that fire together wire together.
- PCA
- RF development with PCA

Classical Conditioning

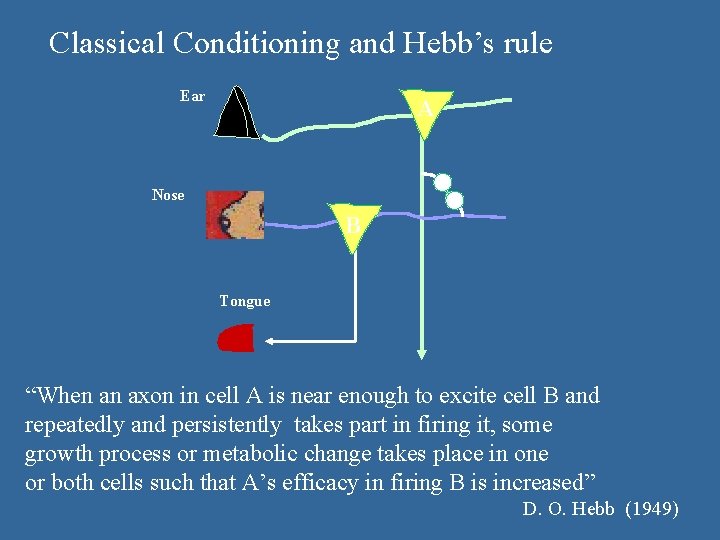

Classical Conditioning and Hebb’s rule

[Figure: inputs from ear, nose, and tongue; cells A and B]

“When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A’s efficiency, as one of the cells firing B, is increased.” D. O. Hebb (1949)

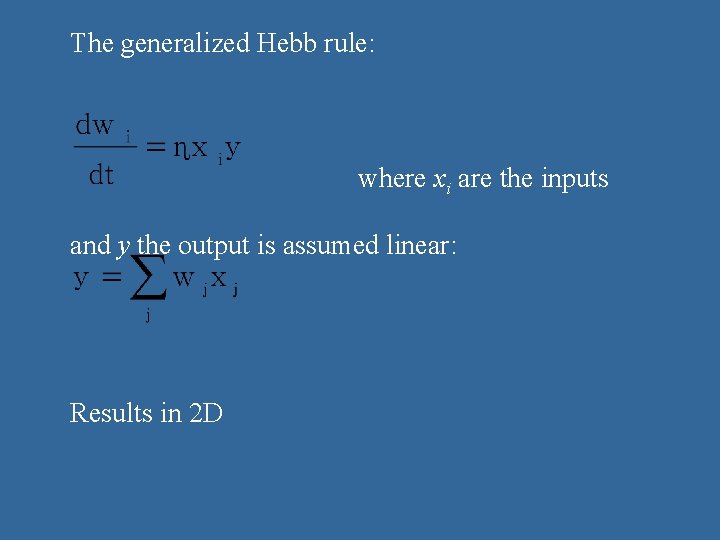

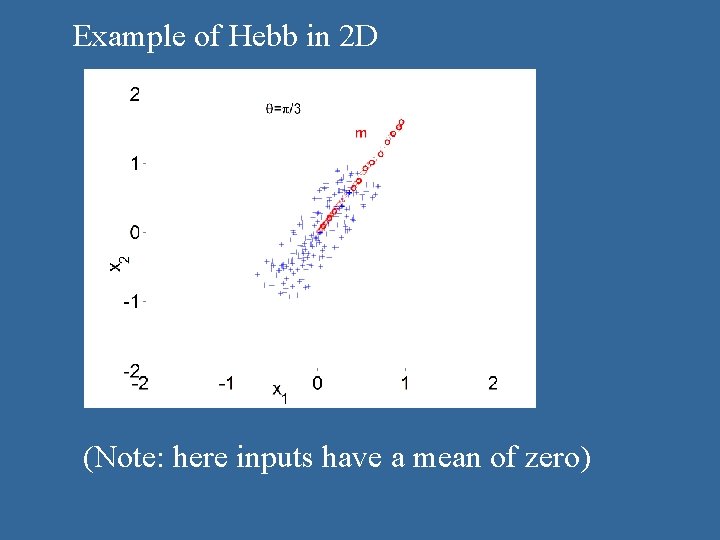

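The generalized Hebb rule changes each weight $w_i$ as a function of its input $x_i$ and the output $y$, where $x_i$ are the inputs and the output $y$ is assumed linear:

$$y = \sum_j w_j x_j$$

Results in 2D: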

Example of Hebb in 2D

[Figure: weight vector w] (Note: here the inputs have a mean of zero.)
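
The 2D behaviour can be sketched numerically. The snippet below is a minimal illustration, not the simulation behind the slide figure: the input covariance, learning rate `eta`, initial weights, and step count are all assumed values chosen for the example. It applies the plain Hebb update $\Delta w = \eta\, x\, y$ with a linear neuron to zero-mean inputs and records the weight norm.

```python
import numpy as np

def hebb_2d(n_steps=2000, eta=0.01, seed=0):
    """Plain Hebb rule on zero-mean 2D inputs (all parameters are assumptions)."""
    rng = np.random.default_rng(seed)
    # Hypothetical input covariance with positively correlated components.
    cov = np.array([[1.0, 0.8], [0.8, 1.0]])
    x = rng.multivariate_normal(mean=[0.0, 0.0], cov=cov, size=n_steps)
    w = np.array([0.1, -0.05])  # arbitrary small initial weights
    norms = [np.linalg.norm(w)]
    for xt in x:
        y = w @ xt             # linear neuron: y = sum_j w_j x_j
        w = w + eta * xt * y   # Hebb update: delta w = eta * x * y
        norms.append(np.linalg.norm(w))
    return w, np.array(norms), cov

w, norms, cov = hebb_2d()

# Compare the final direction of w with the top eigenvector of the covariance.
eigvals, eigvecs = np.linalg.eigh(cov)   # eigenvalues in ascending order
u_max = eigvecs[:, -1]
alignment = abs(w @ u_max) / np.linalg.norm(w)
```

In this sketch `alignment` ends up close to 1 while `norms` keeps growing, which is the instability that motivates a stabilized rule.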

On the board:

- Solve a simple linear first-order ODE
- Fixed points and their stability for a nonlinear ODE
- Describe potential and gradient descent

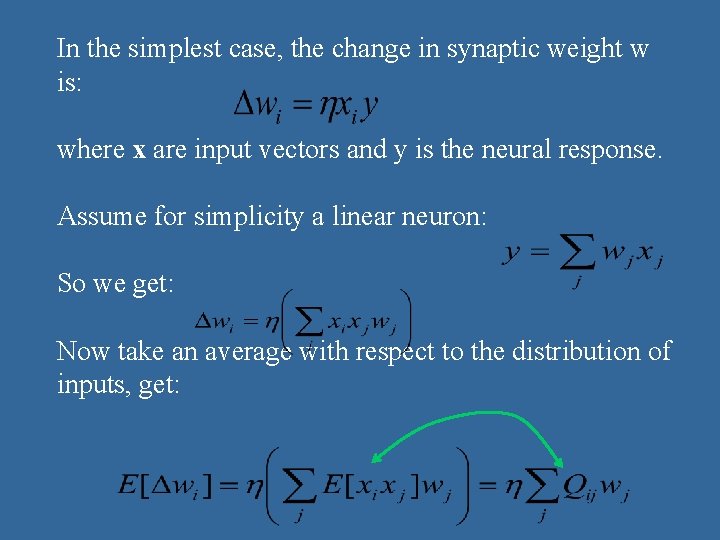

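In the simplest case, the change in synaptic weight w is:

$$\Delta w_i = \eta\, x_i\, y$$

where x are input vectors and y is the neural response. Assume for simplicity a linear neuron:

$$y = \sum_j w_j x_j$$

So we get:

$$\Delta w_i = \eta \sum_j x_i x_j w_j$$

Now take an average with respect to the distribution of inputs to get:

$$\langle \Delta w_i \rangle = \eta \sum_j \langle x_i x_j \rangle w_j = \eta \sum_j Q_{ij} w_j, \qquad Q_{ij} = \langle x_i x_j \rangle$$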

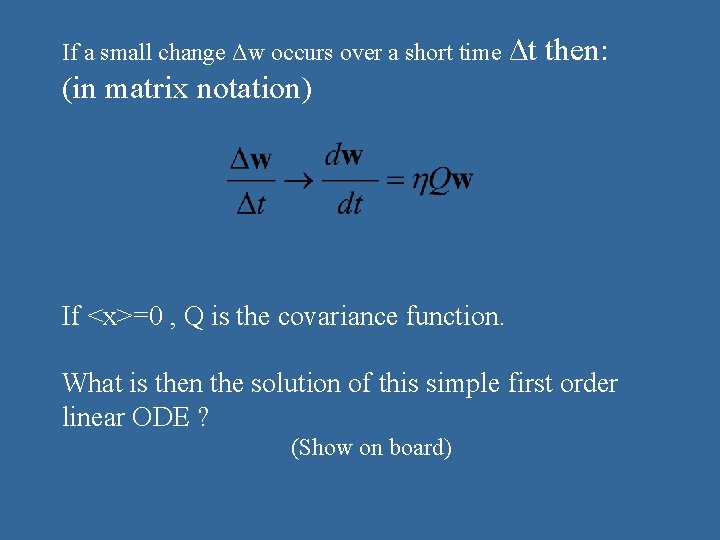

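If a small change Δw occurs over a short time Δt then (in matrix notation):

$$\frac{d\mathbf{w}}{dt} = \eta\, Q\, \mathbf{w}, \qquad Q = \langle \mathbf{x}\mathbf{x}^T \rangle$$

If $\langle \mathbf{x} \rangle = 0$, Q is the covariance matrix of the inputs. What is then the solution of this simple first-order linear ODE? (Show on board)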
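
One way to check a candidate solution of $d\mathbf{w}/dt = \eta Q \mathbf{w}$ is to expand $\mathbf{w}$ in the eigenvectors $\mathbf{u}_a$ of the symmetric matrix Q, which gives $\mathbf{w}(t) = \sum_a (\mathbf{u}_a \cdot \mathbf{w}(0))\, e^{\eta \lambda_a t}\, \mathbf{u}_a$, and compare it with direct numerical integration. The sketch below does this for an assumed 2×2 matrix Q, learning rate, and initial condition; none of these values come from the slides.

```python
import numpy as np

# Hypothetical symmetric Q with eigenvalues 1 and 3, and assumed eta and w(0).
Q = np.array([[2.0, 1.0], [1.0, 2.0]])
eta = 0.1
w0 = np.array([1.0, 0.0])

lam, U = np.linalg.eigh(Q)  # columns of U are orthonormal eigenvectors

def w_analytic(t):
    """Eigen-expansion solution: each mode grows as exp(eta * lambda_a * t)."""
    c = U.T @ w0                        # coefficients of w(0) in the eigenbasis
    return U @ (c * np.exp(eta * lam * t))

def w_euler(t, dt=1e-4):
    """Forward-Euler integration of dw/dt = eta * Q w, for comparison."""
    w = w0.copy()
    for _ in range(int(round(t / dt))):
        w = w + dt * eta * (Q @ w)
    return w

t_end = 5.0
wa = w_analytic(t_end)
we = w_euler(t_end)
```

Because the mode with the largest eigenvalue grows fastest, the direction of `wa` approaches the top eigenvector as `t_end` increases, while its length grows without bound.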

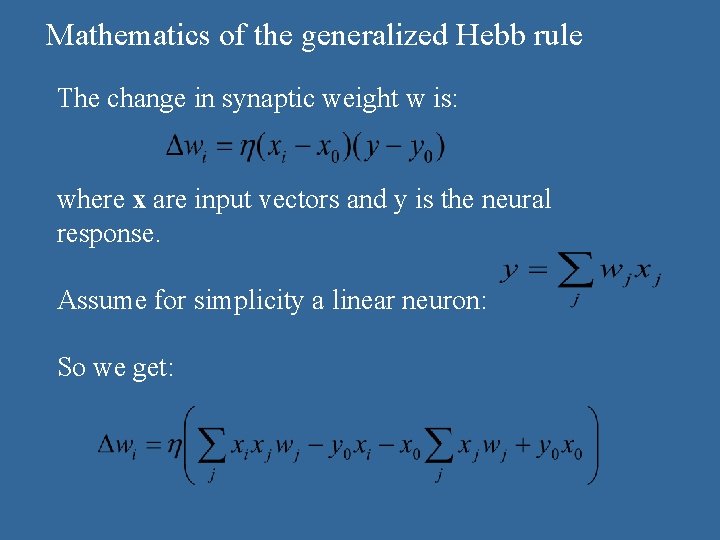

Mathematics of the generalized Hebb rule The change in synaptic weight w is: where x are input vectors and y is the neural response. Assume for simplicity a linear neuron: So we get:

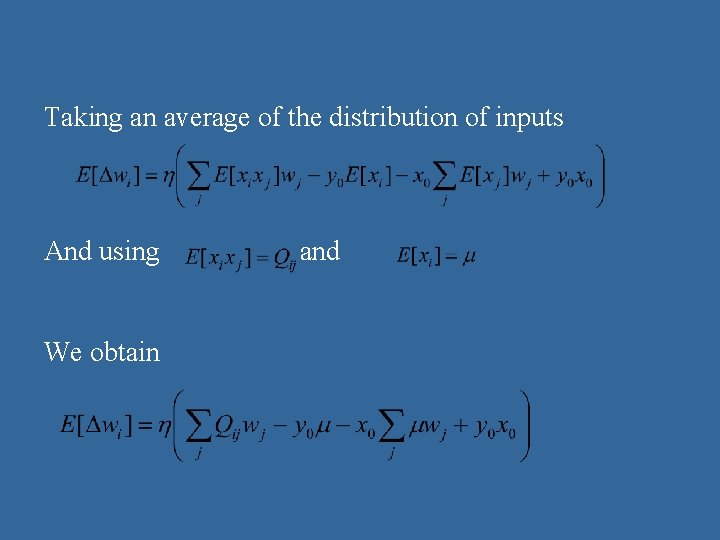

Taking an average of the distribution of inputs And using We obtain and

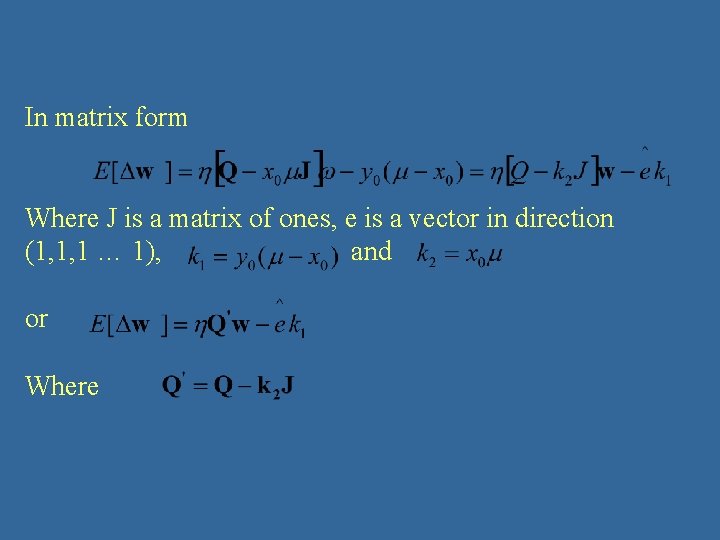

In matrix form Where J is a matrix of ones, e is a vector in direction (1, 1, 1 … 1), and or Where

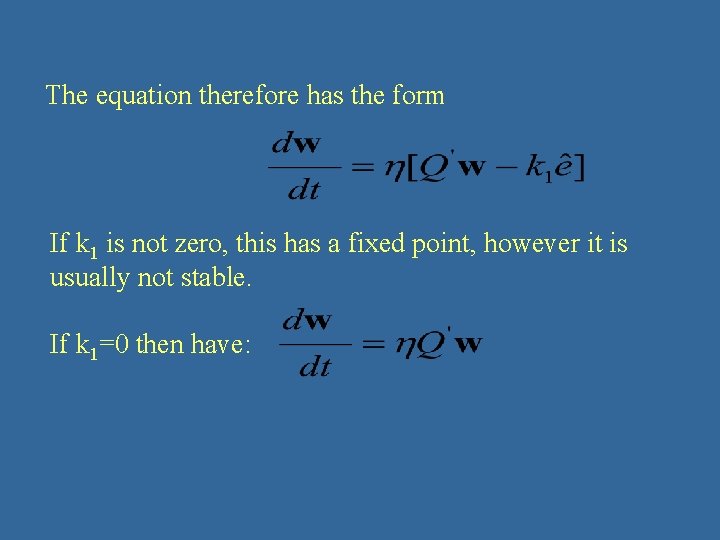

The equation therefore has the form If k 1 is not zero, this has a fixed point, however it is usually not stable. If k 1=0 then have:
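
The instability of the fixed point can be illustrated numerically. The sketch below assumes the averaged dynamics take the form $d\mathbf{w}/dt = \eta\,(Q'\mathbf{w} + k_1 \mathbf{e})$ with $Q' = Q - k_2 J$; this form and every numerical value (`eta`, `k1`, `k2`, `Q`) are assumptions made for the example. Under that assumption the fixed point is $\mathbf{w}^* = -k_1 Q'^{-1}\mathbf{e}$, and a small perturbation grows whenever $Q'$ has a positive eigenvalue.

```python
import numpy as np

# All values below are hypothetical, chosen only to illustrate the argument.
eta, k1, k2 = 0.1, 0.5, 0.2
Q = np.array([[1.0, 0.6], [0.6, 1.0]])
J = np.ones((2, 2))       # matrix of ones
e = np.ones(2)            # vector in direction (1, 1)
Qp = Q - k2 * J           # Q' in the text

# Fixed point of dw/dt = eta * (Q' w + k1 e): solve Q' w* = -k1 e.
w_star = np.linalg.solve(Qp, -k1 * e)
residual = Qp @ w_star + k1 * e

# Growth rates of perturbations around w*: eta times the eigenvalues of Q'.
growth_rates = eta * np.linalg.eigvalsh(Qp)

# Integrate from a slightly perturbed state and measure the drift away from w*.
perturbation = np.array([1e-3, -1e-3])
w = w_star + perturbation
dt = 0.01
for _ in range(5000):
    w = w + dt * eta * (Qp @ w + k1 * e)
distance = np.linalg.norm(w - w_star)
```

Here both entries of `growth_rates` are positive, so `distance` ends up several times larger than the initial perturbation.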

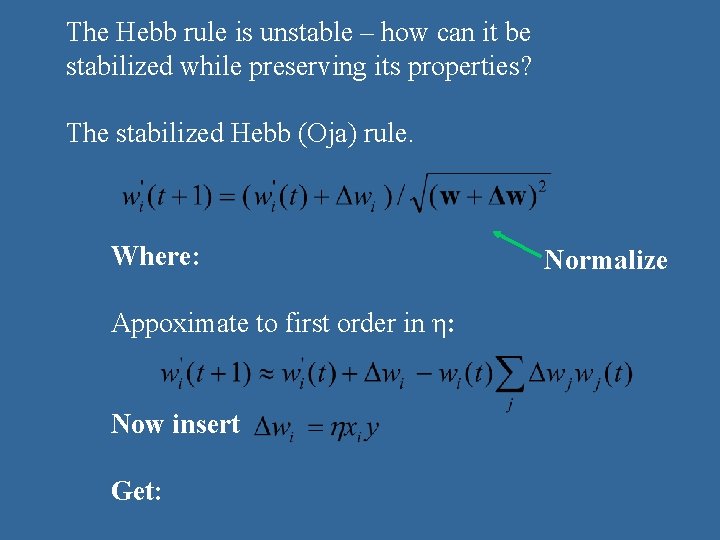

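The Hebb rule is unstable. How can it be stabilized while preserving its properties?

The stabilized Hebb (Oja) rule. Normalize the weights after each Hebbian step:

$$w_i(t+1) = \frac{w_i(t) + \eta\, x_i\, y}{\big| \mathbf{w}(t) + \eta\, \mathbf{x}\, y \big|}$$

where $|\mathbf{w}(t)| = 1$. Approximate to first order in η:

$$\big| \mathbf{w}(t) + \eta\, \mathbf{x}\, y \big|^{-1} \approx 1 - \eta\, y \sum_j w_j x_j$$

Now insert this into the normalized update to get:

$$w_i(t+1) \approx w_i(t) + \eta\, x_i\, y - \eta\, y \Big( \sum_j w_j x_j \Big) w_i(t)$$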

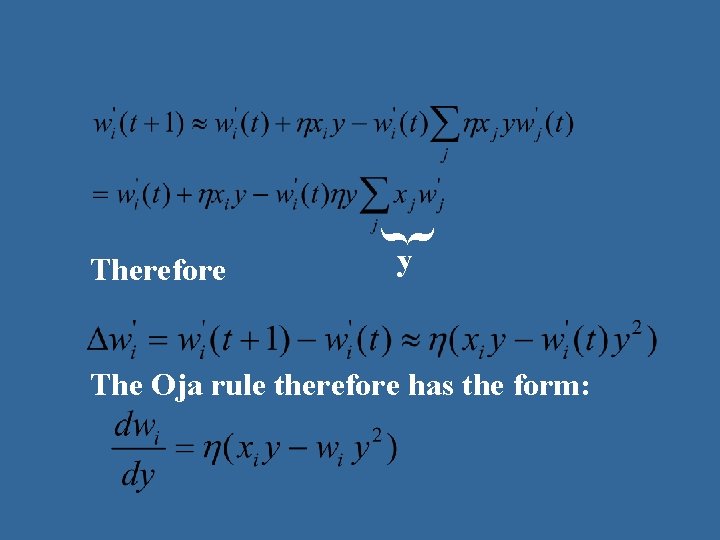

Since $\sum_j w_j x_j = y$, the Oja rule therefore has the form:

$$\Delta w_i = \eta\,(x_i\, y - w_i\, y^2)$$
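
The first-order approximation can be checked numerically: for a unit-length weight vector, one explicitly normalized Hebb step and one Oja step $\Delta w_i = \eta\,(x_i y - w_i y^2)$ should differ only at order $\eta^2$. The sketch below uses an arbitrary unit vector `w` and input `x` (illustrative values, not taken from the slides) and compares the two updates at two learning rates.

```python
import numpy as np

# Arbitrary illustrative values: a unit-length weight vector and one input.
w = np.array([0.6, 0.8])
x = np.array([1.0, 2.0])
y = w @ x  # linear neuron output

def normalized_hebb_step(eta):
    """Hebb step followed by explicit renormalization to unit length."""
    v = w + eta * x * y
    return v / np.linalg.norm(v)

def oja_step(eta):
    """Oja update: w + eta * (x*y - w*y^2)."""
    return w + eta * y * (x - y * w)

# Difference between the two updates at two learning rates a factor 10 apart.
errors = {eta: np.linalg.norm(normalized_hebb_step(eta) - oja_step(eta))
          for eta in (1e-3, 1e-4)}
ratio = errors[1e-3] / errors[1e-4]
```

If the two updates agree to first order in η, reducing η by a factor of 10 should shrink the difference by roughly a factor of 100, which is what `ratio` measures.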

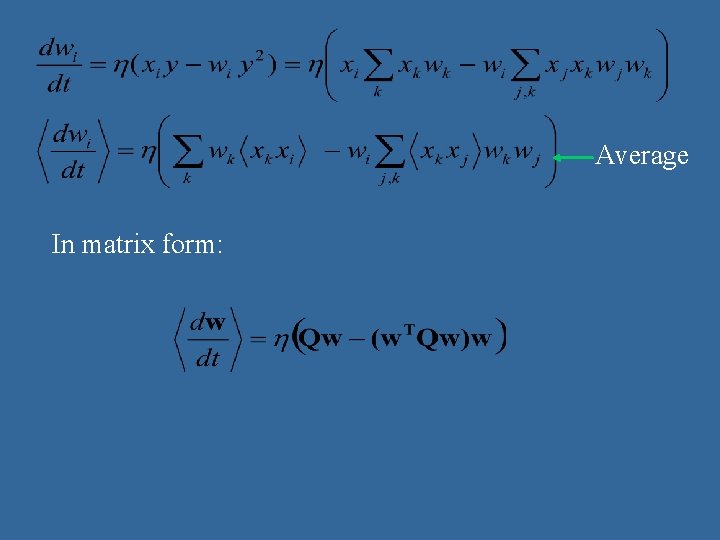

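Averaging over the inputs, in matrix form:

$$\frac{d\mathbf{w}}{dt} = \eta \big[ Q \mathbf{w} - (\mathbf{w}^T Q\, \mathbf{w})\, \mathbf{w} \big]$$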

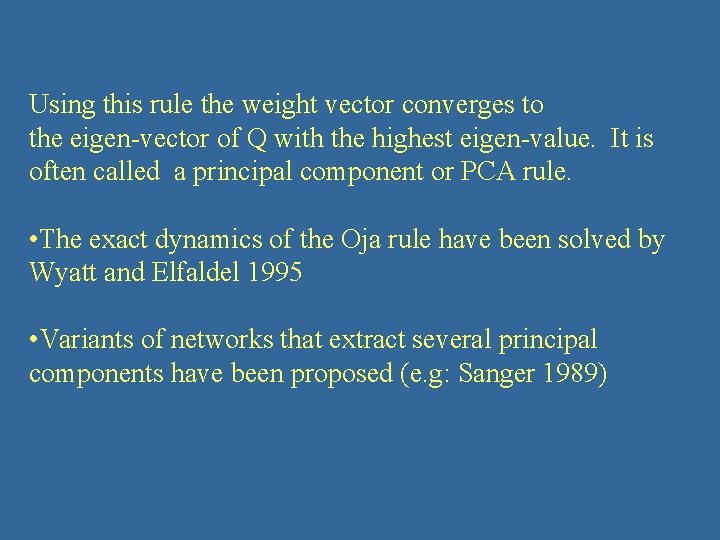

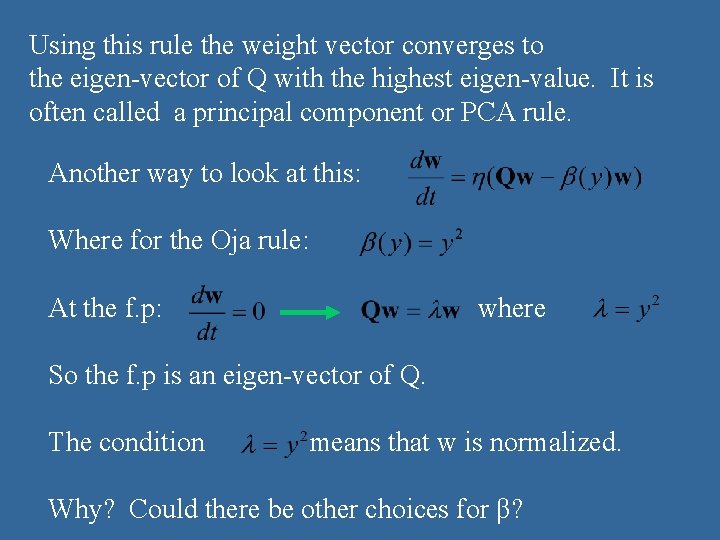

Using this rule, the weight vector converges to the eigenvector of Q with the highest eigenvalue. It is often called a principal component or PCA rule.

- The exact dynamics of the Oja rule have been solved by Wyatt and Elfadel (1995).
- Variants of networks that extract several principal components have been proposed (e.g., Sanger 1989).
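
The convergence claim can be illustrated with a short simulation. This is a minimal sketch under assumed values: the input covariance, learning rate `eta`, and sample count are hypothetical, and the online update $\Delta \mathbf{w} = \eta\, y\,(\mathbf{x} - y\, \mathbf{w})$ is used as the Oja rule. The learned weight vector is compared with the top eigenvector of the sample correlation matrix.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical zero-mean inputs with an assumed covariance.
cov = np.array([[3.0, 1.0], [1.0, 2.0]])
x = rng.multivariate_normal([0.0, 0.0], cov, size=20000)

eta = 0.005
w = rng.normal(size=2)
w /= np.linalg.norm(w)              # start from a random unit vector

for xt in x:
    y = w @ xt                      # linear neuron output
    w += eta * y * (xt - y * w)     # Oja update: eta * (x*y - w*y^2)

# Reference: top eigenvector of the sample correlation matrix Q = <x x^T>.
Q = x.T @ x / len(x)
lam, U = np.linalg.eigh(Q)          # eigenvalues in ascending order
u1 = U[:, -1]

alignment = abs(w @ u1) / np.linalg.norm(w)
norm_w = np.linalg.norm(w)
```

With these settings `alignment` should be close to 1 and `norm_w` close to 1, up to fluctuations that scale with the learning rate.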

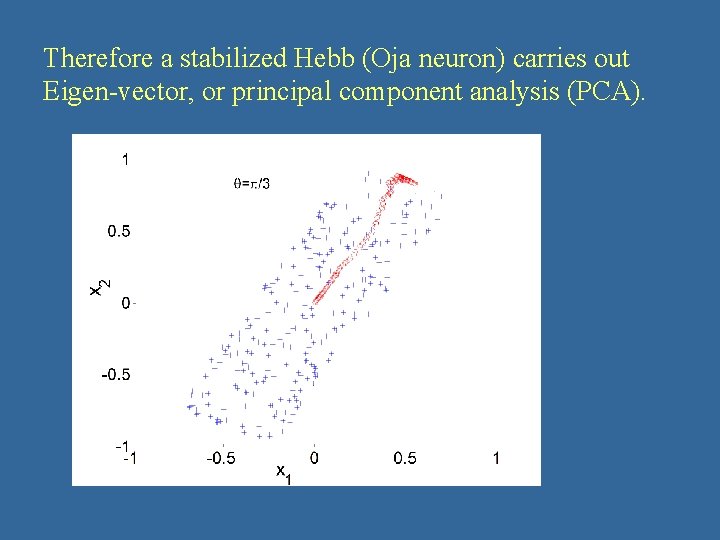

Therefore a stabilized Hebb (Oja) neuron carries out eigenvector, or principal component, analysis (PCA).

Another way to look at this:

$$\frac{d\mathbf{w}}{dt} = \eta \big[ Q \mathbf{w} - \beta(\mathbf{w})\, \mathbf{w} \big]$$

where for the Oja rule:

$$\beta(\mathbf{w}) = \mathbf{w}^T Q\, \mathbf{w}$$

At the fixed point (f.p.):

$$Q \mathbf{w} = \beta\, \mathbf{w} \quad \text{where} \quad \beta = \mathbf{w}^T Q\, \mathbf{w}$$

So the f.p. is an eigenvector of Q. The condition $\beta = \mathbf{w}^T Q\, \mathbf{w}$ means that w is normalized. Why? Could there be other choices for β?

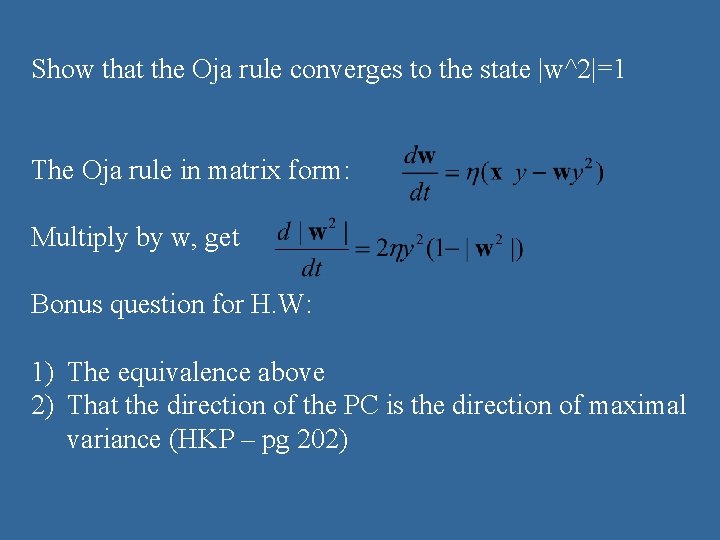

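Show that the Oja rule converges to the state $|\mathbf{w}|^2 = 1$.

The Oja rule in matrix form:

$$\frac{d\mathbf{w}}{dt} = \eta \big[ Q \mathbf{w} - (\mathbf{w}^T Q\, \mathbf{w})\, \mathbf{w} \big]$$

Multiply by $\mathbf{w}^T$ to get:

$$\mathbf{w}^T \frac{d\mathbf{w}}{dt} = \frac{1}{2} \frac{d|\mathbf{w}|^2}{dt} = \eta\,(\mathbf{w}^T Q\, \mathbf{w})\,(1 - |\mathbf{w}|^2)$$

Since $\mathbf{w}^T Q\, \mathbf{w} \ge 0$ for a correlation matrix Q, $|\mathbf{w}|^2$ grows when it is below 1 and shrinks when it is above 1.

Bonus questions for homework. Show:

1) The equivalence above
2) That the direction of the PC is the direction of maximal variance (HKP, p. 202)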
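
The norm argument can be checked by integrating the averaged Oja dynamics $d\mathbf{w}/dt = \eta\,[Q\mathbf{w} - (\mathbf{w}^T Q \mathbf{w})\,\mathbf{w}]$ from initial conditions with $|\mathbf{w}|$ both larger and smaller than 1. The matrix Q, the rate `eta`, the step size `dt`, and the starting points below are assumed values for illustration only.

```python
import numpy as np

# Hypothetical positive-definite correlation matrix and integration settings.
Q = np.array([[2.0, 0.5], [0.5, 1.0]])
eta, dt = 0.5, 0.01

def final_norm_sq(w0, n_steps=5000):
    """Forward-Euler integration of the averaged Oja rule; returns |w|^2."""
    w = np.array(w0, dtype=float)
    for _ in range(n_steps):
        w = w + dt * eta * (Q @ w - (w @ Q @ w) * w)
    return float(w @ w)

norm_from_large = final_norm_sq([3.0, -2.0])    # starts with |w|^2 = 13
norm_from_small = final_norm_sq([0.05, 0.02])   # starts with |w|^2 << 1
```

Both runs should end with a squared norm very close to 1, consistent with the sign of $(1 - |\mathbf{w}|^2)$ in the equation for $d|\mathbf{w}|^2/dt$.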

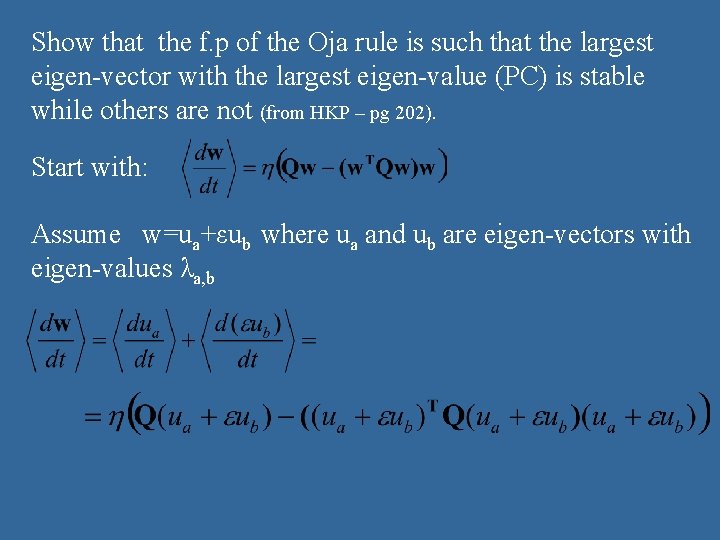

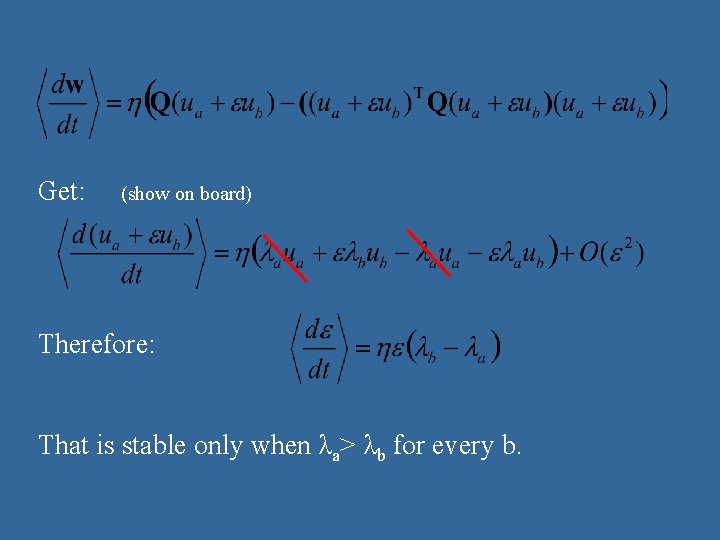

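Show that, among the fixed points (f.p.) of the Oja rule, the eigenvector with the largest eigenvalue (the PC) is stable while the others are not (from HKP, p. 202). Start with:

$$\frac{d\mathbf{w}}{dt} = \eta \big[ Q \mathbf{w} - (\mathbf{w}^T Q\, \mathbf{w})\, \mathbf{w} \big]$$

Assume $\mathbf{w} = \mathbf{u}_a + \varepsilon\, \mathbf{u}_b$, where $\mathbf{u}_a$ and $\mathbf{u}_b$ are orthonormal eigenvectors of Q with eigenvalues $\lambda_a$ and $\lambda_b$.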

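Get (show on board):

$$\frac{d\mathbf{w}}{dt} = \eta \big[ \varepsilon\,(\lambda_b - \lambda_a)\, \mathbf{u}_b - \varepsilon^2 \lambda_b\, \mathbf{u}_a - \varepsilon^3 \lambda_b\, \mathbf{u}_b \big]$$

Therefore, to first order in ε:

$$\frac{d\varepsilon}{dt} = \eta\,(\lambda_b - \lambda_a)\,\varepsilon$$

That is stable only when $\lambda_a > \lambda_b$ for every b.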
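
The same conclusion can be checked by linearizing the averaged Oja dynamics around each eigenvector. Differentiating $f(\mathbf{w}) = \eta\,[Q\mathbf{w} - (\mathbf{w}^T Q \mathbf{w})\,\mathbf{w}]$ for symmetric Q gives the Jacobian $\eta\,[Q - (\mathbf{w}^T Q \mathbf{w})\, I - 2\, \mathbf{w}\,(Q\mathbf{w})^T]$. The sketch below evaluates it at every unit eigenvector of an assumed 3×3 positive-definite matrix Q (a hypothetical example, not from the slides) and tests whether all growth rates are negative.

```python
import numpy as np

# Hypothetical symmetric positive-definite Q with distinct eigenvalues.
Q = np.array([[3.0, 1.0, 0.0],
              [1.0, 2.0, 0.5],
              [0.0, 0.5, 1.0]])
eta = 1.0

lam, U = np.linalg.eigh(Q)  # ascending eigenvalues, orthonormal eigenvectors

def oja_jacobian(w):
    """Jacobian of eta * (Q w - (w^T Q w) w) with respect to w (Q symmetric)."""
    n = len(w)
    return eta * (Q - (w @ Q @ w) * np.eye(n) - 2.0 * np.outer(w, Q @ w))

# A fixed point is stable if every eigenvalue of the Jacobian has negative real part.
stable = []
for a in range(len(lam)):
    rates = np.linalg.eigvals(oja_jacobian(U[:, a])).real
    stable.append(bool(np.all(rates < 0)))
```

At the eigenvector $\mathbf{u}_a$ the rates are $\eta(\lambda_b - \lambda_a)$ for each $b \neq a$ and $-2\eta\lambda_a$ along $\mathbf{u}_a$ itself, so in this example only the last entry of `stable`, corresponding to the largest eigenvalue, is `True`.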
