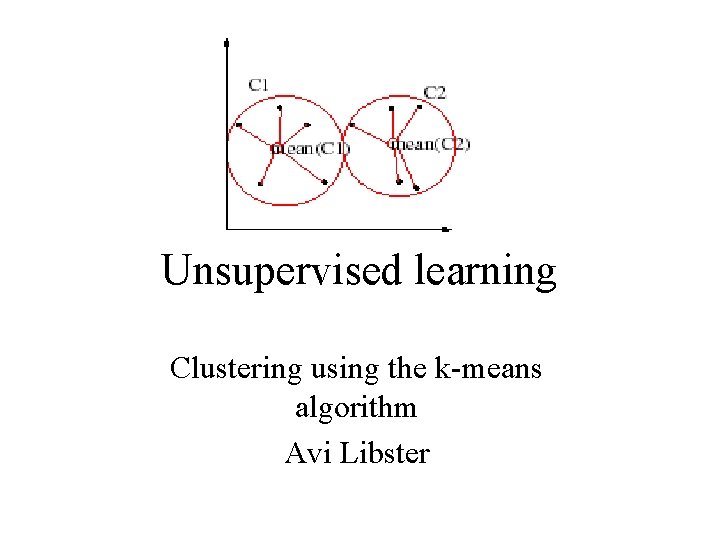

Unsupervised learning Clustering using the kmeans algorithm Avi

- Slides: 65

Unsupervised learning Clustering using the k-means algorithm Avi Libster

clustering • Used when we have a very large data set with very high dimensionality and lots of complex structure. • Basic assumption : attributes of the data are independent.

Cluster Analysis • Given a collection of data points in space , which might be high dimensional one, the goal is to find structure in the data: organize that data into sensible groups, so that each group will contain points that are near in some sense. • We want points in the same cluster to have high intersimilarity and low outersimilarity compared to points from different clusters.

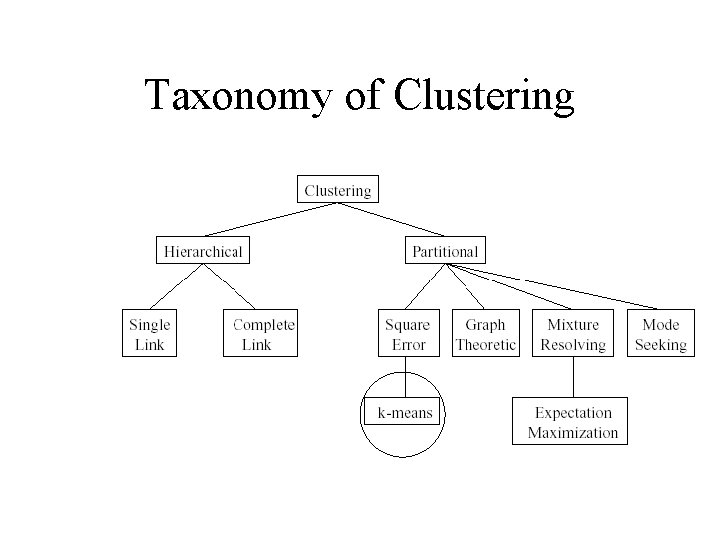

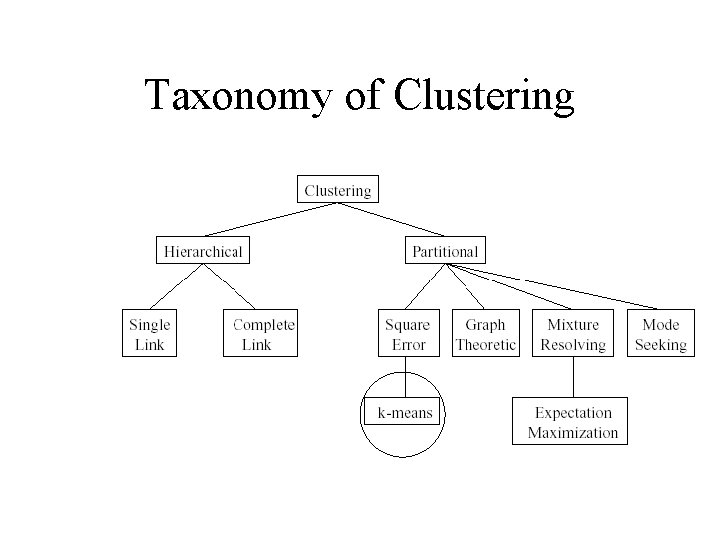

Taxonomy of Clustering

What is K-means • • An unsupervised learning algorithm Used for partitioning datasets. Simple to use Based on the minimization of the square error.

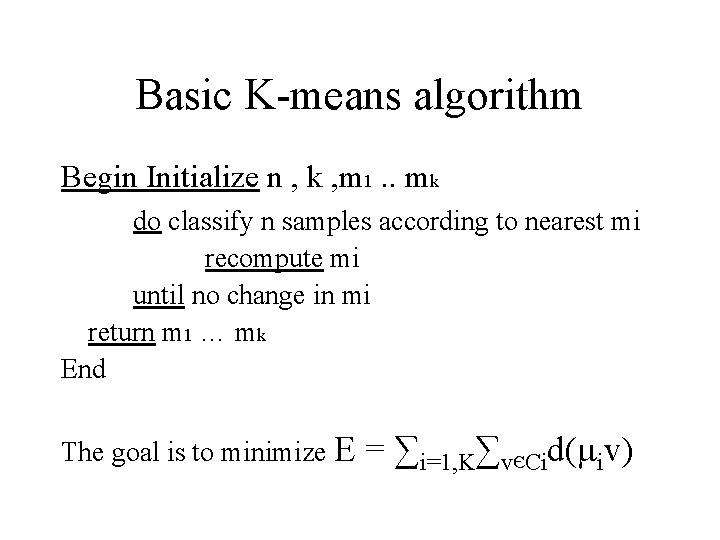

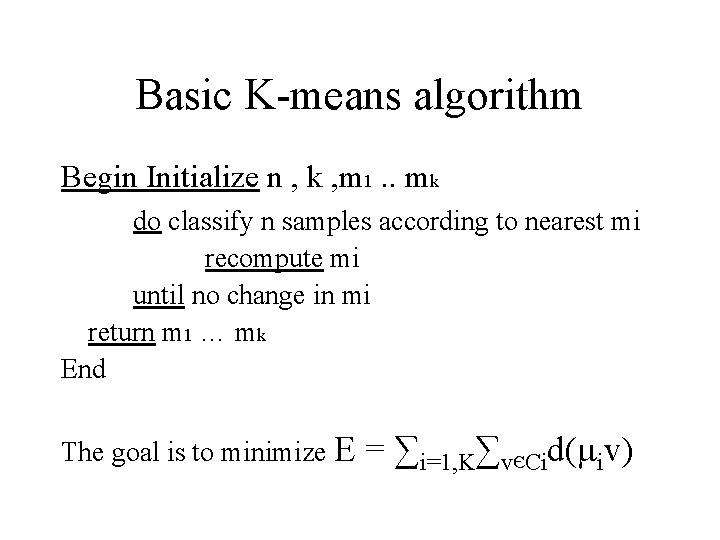

Basic K-means algorithm Begin Initialize n , k , m 1. . mk do classify n samples according to nearest mi recompute mi until no change in mi return m 1 … mk End The goal is to minimize E = ∑i=1, K∑vЄCid(μiv)

And now for something completely different… *Pictures * Adopted from http: //www. cs. ucr. edu/~eamonn/teaching/cs 170 materials/Machine. Learning 3. ppt

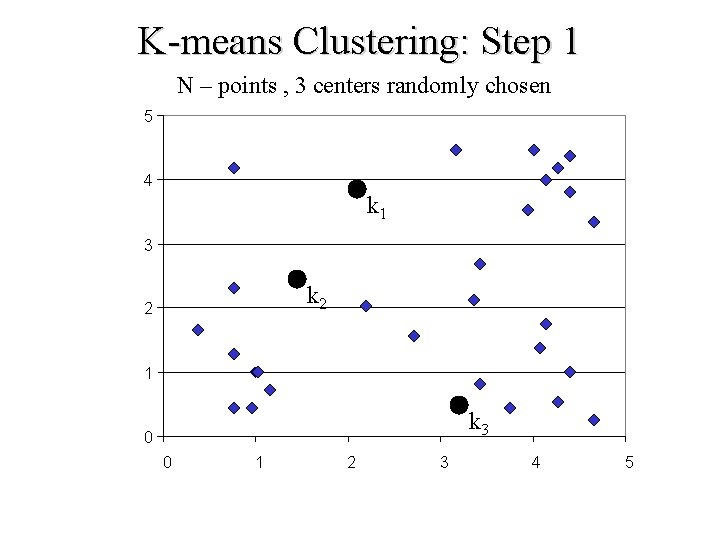

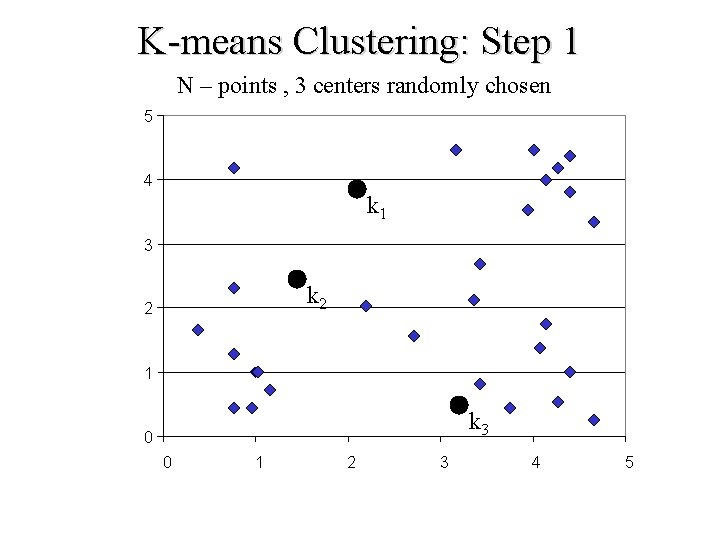

K-means Clustering: Step 1 N – points , 3 centers randomly chosen 5 4 k 1 3 k 2 2 1 k 3 0 0 1 2 3 4 5

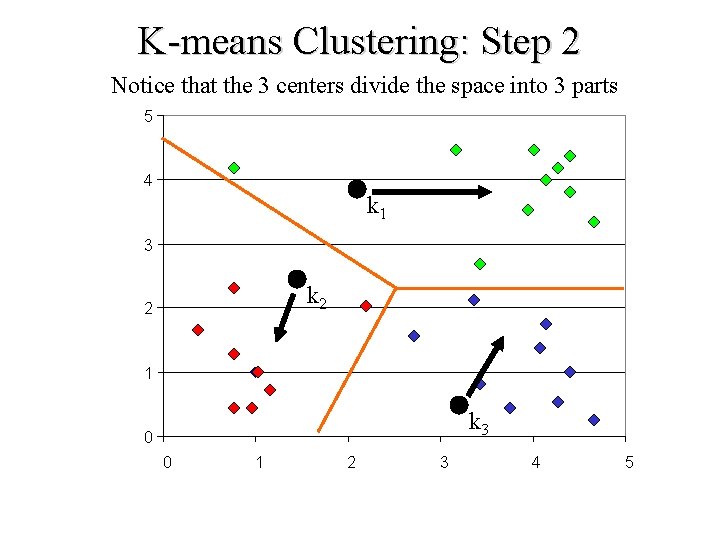

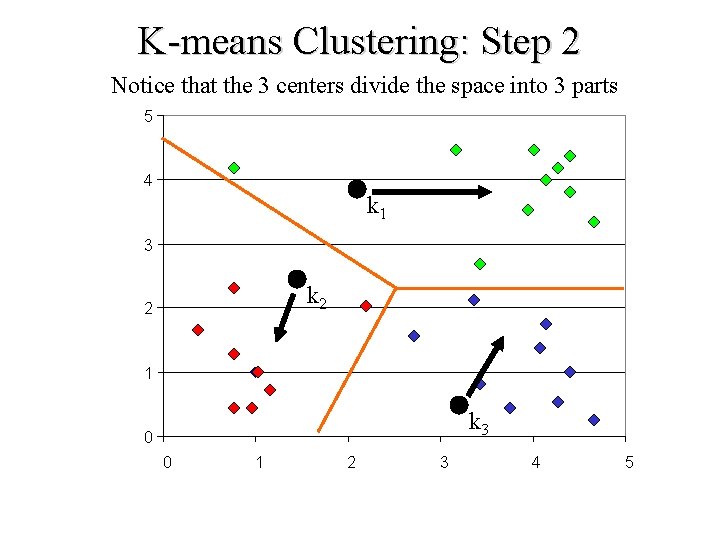

K-means Clustering: Step 2 Notice that the 3 centers divide the space into 3 parts 5 4 k 1 3 k 2 2 1 k 3 0 0 1 2 3 4 5

K-means Clustering: Step 3 New centers are calculated according to the instances of each K. 5 4 k 1 3 2 k 3 k 2 1 0 0 1 2 3 4 5

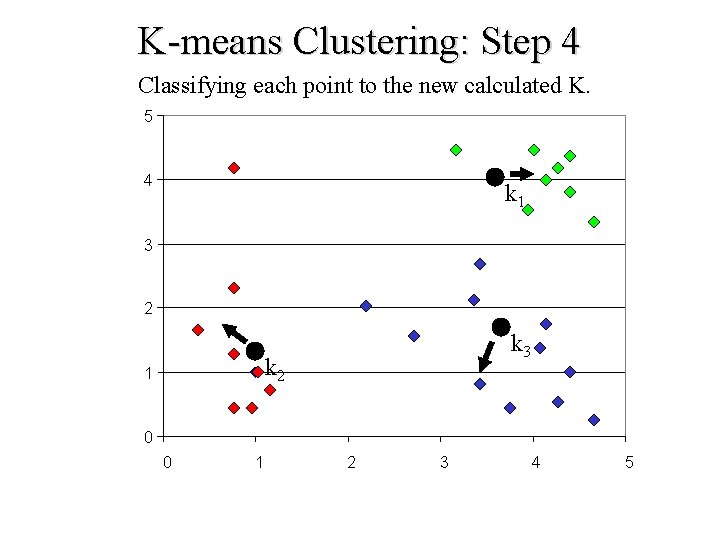

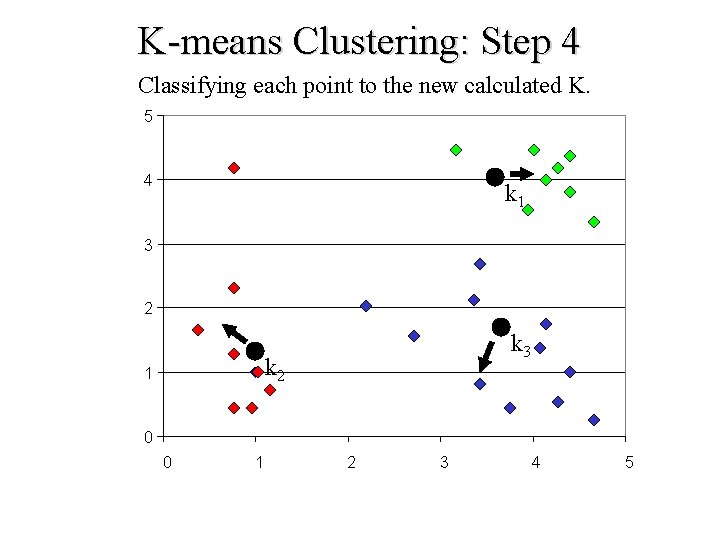

K-means Clustering: Step 4 Classifying each point to the new calculated K. 5 4 k 1 3 2 k 3 k 2 1 0 0 1 2 3 4 5

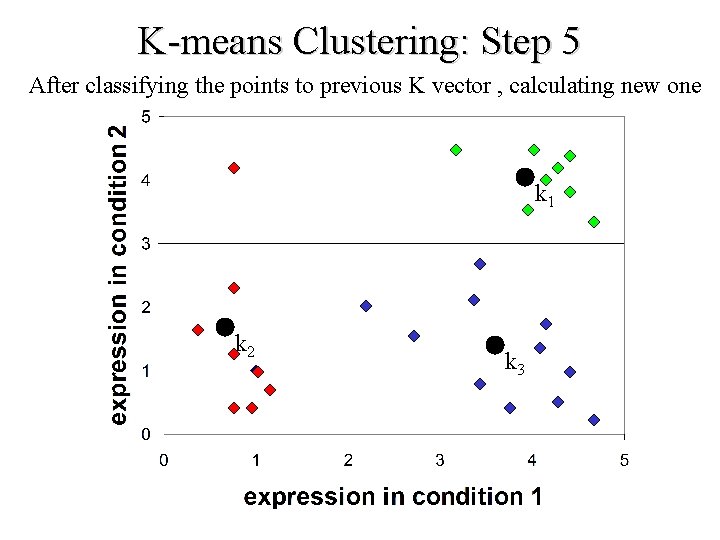

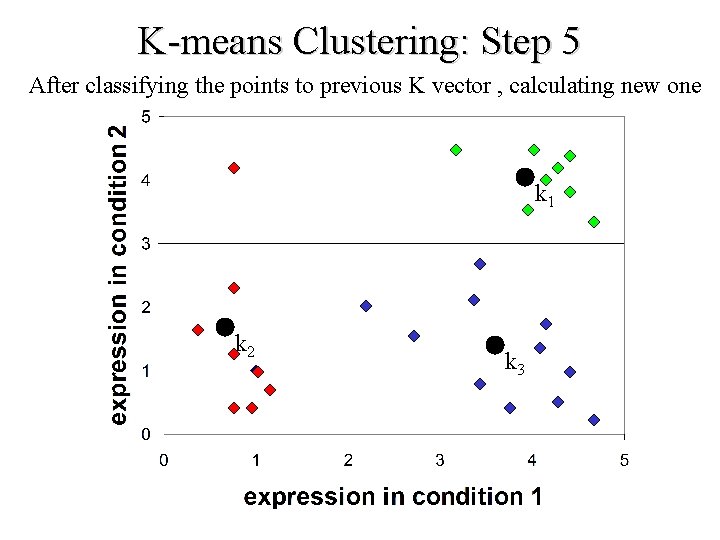

K-means Clustering: Step 5 After classifying the points to previous K vector , calculating new one k 1 k 2 k 3

Classic K-Means Strengths First and furthermost VERY easy to implement and understand. Nice results for a simple algorithm.

Classical K-Means - Strengths K-means can be viewed as a stochastic hill climbing procedure. we are looking for local optimum and not global optimum (as opposed to genetic or deterministic annealing algorithms which look for global optimum).

Why Hill climbing ? Actually Hill climbing can be a misleading term in this context. The hill climbing Is not done over the dataset points , but over the means values. When the k-means algorithm is running we actually change the values of the means (k of those). The changes of the means is somewhat dependent on each other.

Hill climbing continued… • The algorithm is said to converge when we don’t change the means values. • This happens when dmi/dt = 0. in the phase plane created from the means values we have reached the top of the hill (stable point or saddle point).

K-means Strengths continued… K-means complexity is easily derived from the algorithm : O(ndc. T) n – number of samples d – number of features (usually the dimension of the samples) k – number of centers checked T – number of iterations When the datasets are not to large and of low dimensions the average time of running is not high.

Using K-means strengths The following are real life situations in which K-means is used as the key clustering algorithm. During the presentation of the samples, I would like to emphasize some important points considering the implementation of kmeans.

Sample : Understanding Gene regulation using Expression array cluster analysis

Scheme of gene expression research Pairwise Measures Clustering Motif Searching/Network Construction Integrated Analysis (NMR/SNP/Clinic/…. )

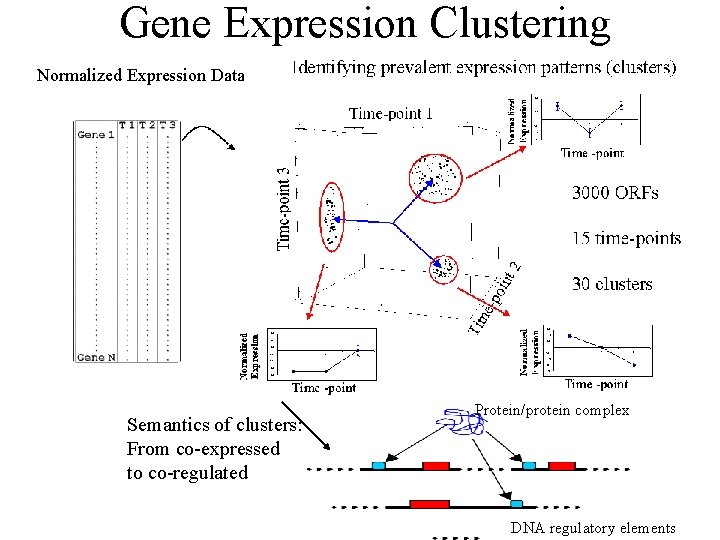

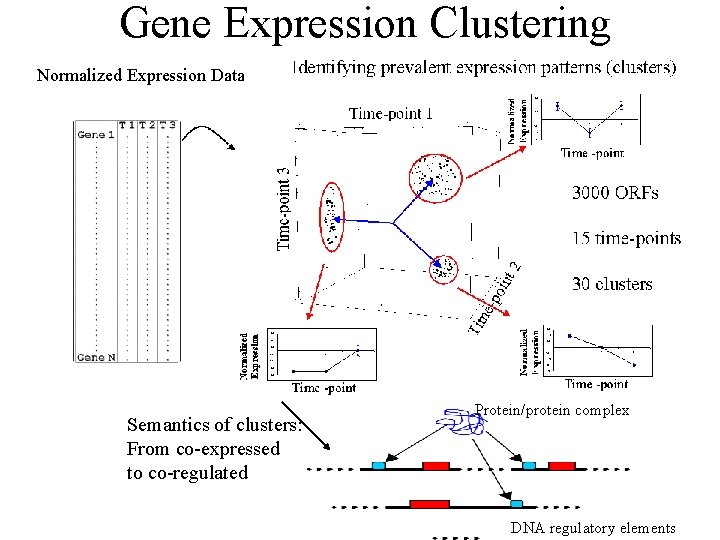

Gene Expression Clustering Normalized Expression Data Semantics of clusters: From co-expressed to co-regulated Protein/protein complex DNA regulatory elements

The meaning of gene clusters • genes that are consistently either up- or downregulated in given set of conditions. Down or upregulation may shade light on the causes of biological processes • patterns of gene expression and grouping genes into expression classes might provide much greater insight into their biological function and relevance.

Why should clusters emerge ? Genes contained in a particular pathway, or that respond to some environmental change , should be co-regulated and consequently, should show similar patterns of expression. from that fact one can see that the main goal is to identify genes which show similar patterns of expressions. Examples: 1. 2. If gene a expression is rising, gene b expression is rising too (might be because gene a encodes a protein which regulates the expression of b). Gene a is always expressed with gene b (it might happen because of the co-regulation by same protein).

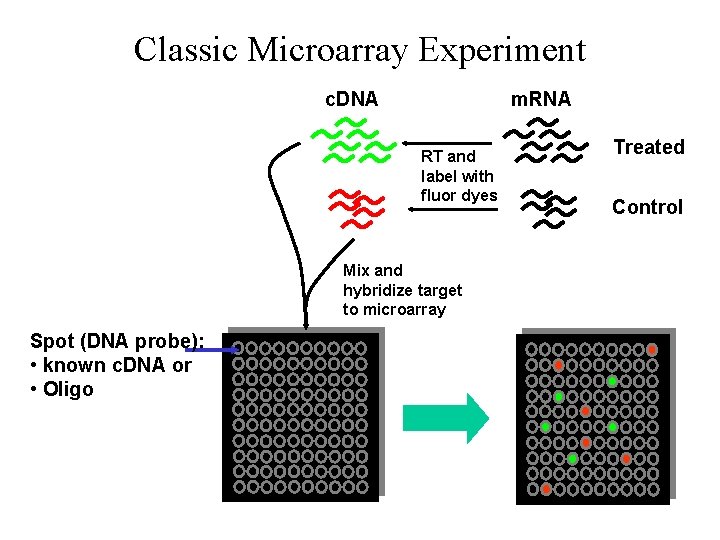

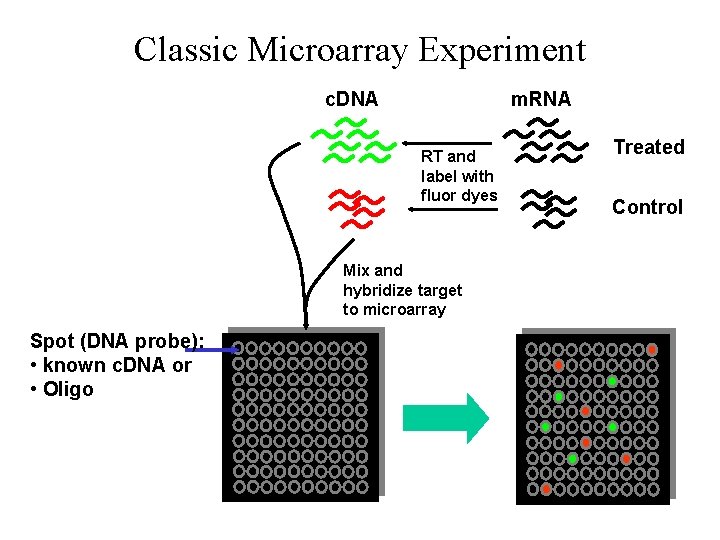

Classic Microarray Experiment c. DNA m. RNA RT and label with fluor dyes Mix and hybridize target to microarray Spot (DNA probe): • known c. DNA or • Oligo Treated Control

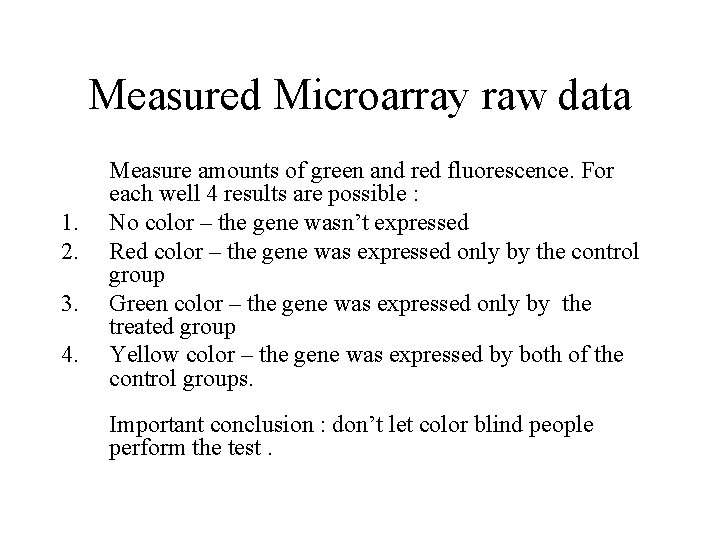

Measured Microarray raw data 1. 2. 3. 4. Measure amounts of green and red fluorescence. For each well 4 results are possible : No color – the gene wasn’t expressed Red color – the gene was expressed only by the control group Green color – the gene was expressed only by the treated group Yellow color – the gene was expressed by both of the control groups. Important conclusion : don’t let color blind people perform the test.

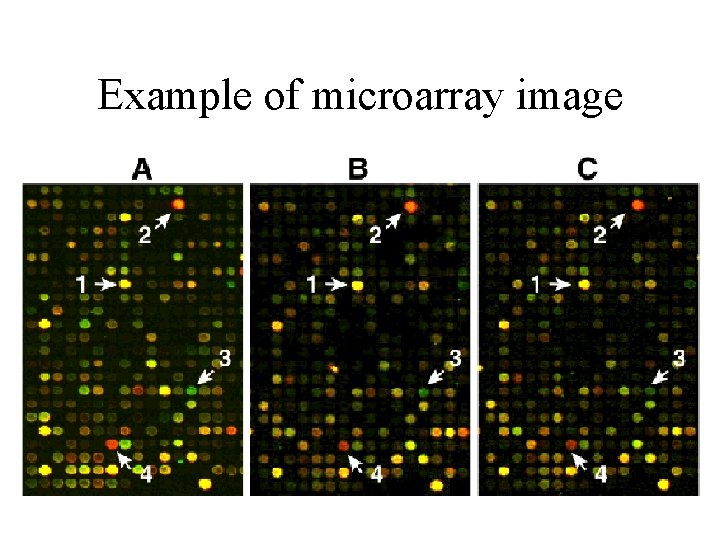

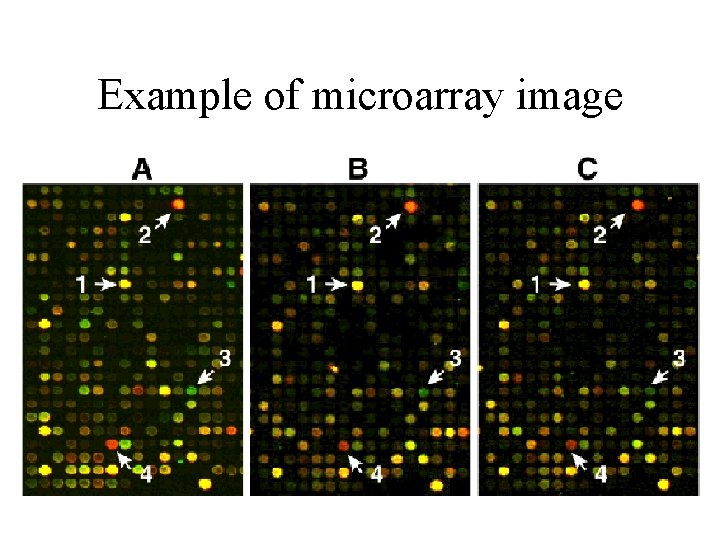

Example of microarray image

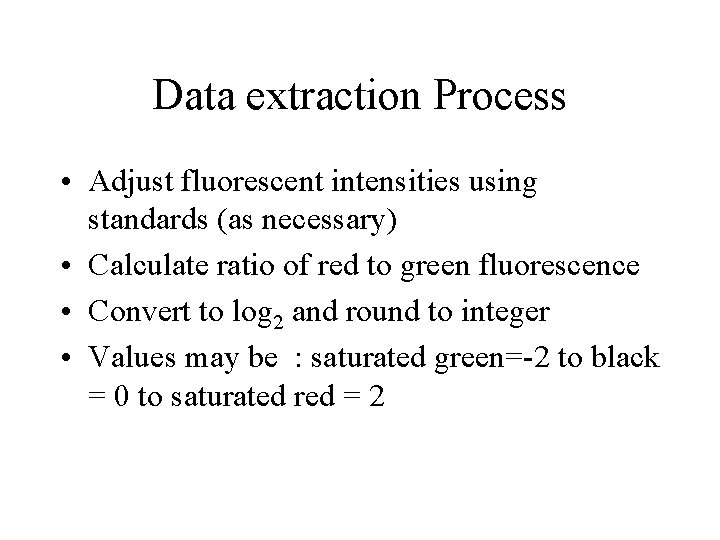

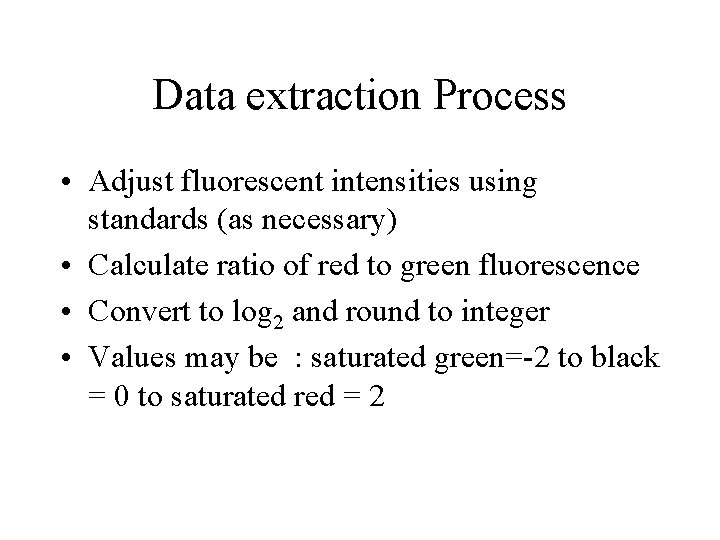

Data extraction Process • Adjust fluorescent intensities using standards (as necessary) • Calculate ratio of red to green fluorescence • Convert to log 2 and round to integer • Values may be : saturated green=-2 to black = 0 to saturated red = 2

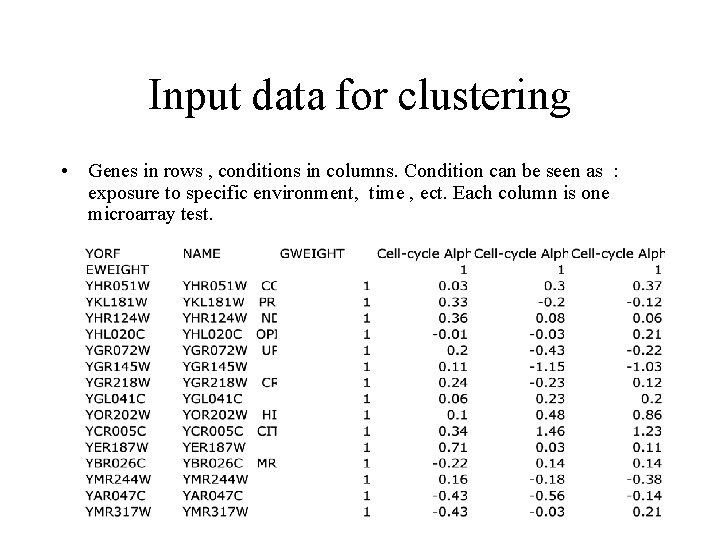

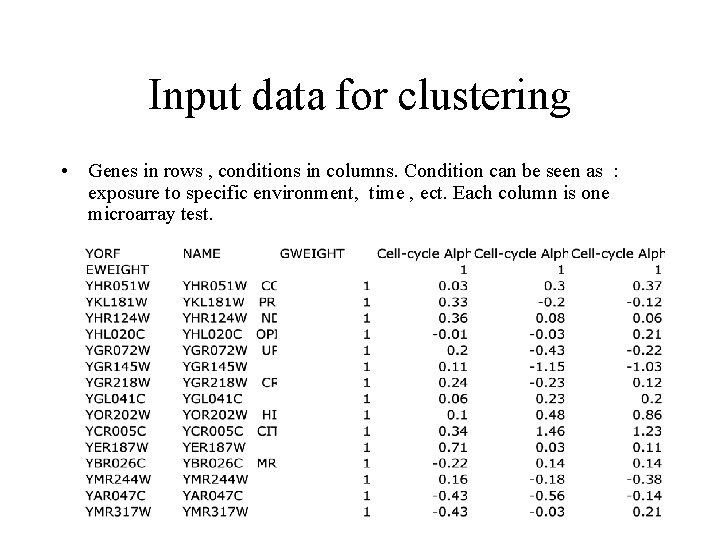

Input data for clustering • Genes in rows , conditions in columns. Condition can be seen as : exposure to specific environment, time , ect. Each column is one microarray test.

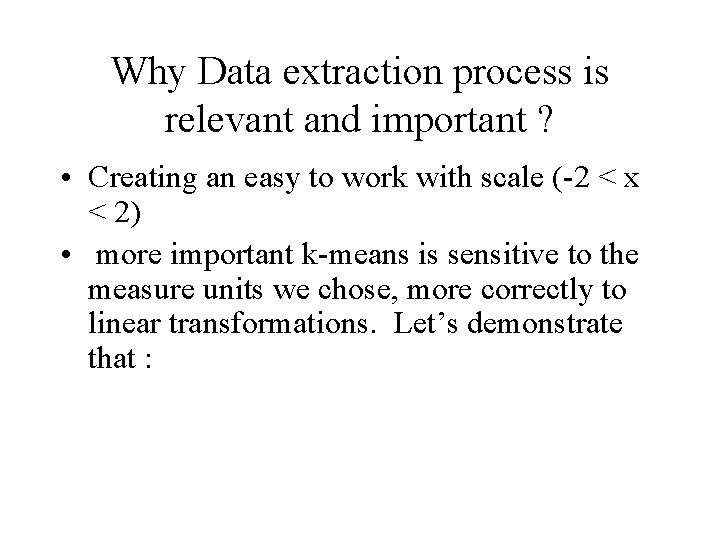

Why Data extraction process is relevant and important ? • Creating an easy to work with scale (-2 < x < 2) • more important k-means is sensitive to the measure units we chose, more correctly to linear transformations. Let’s demonstrate that :

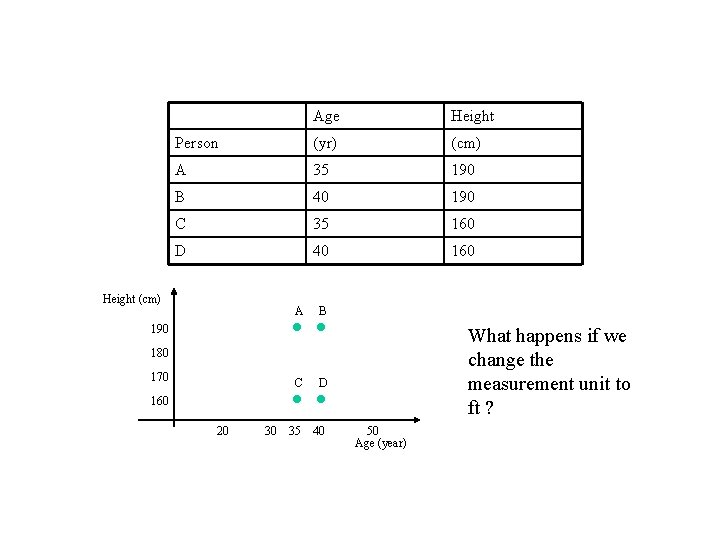

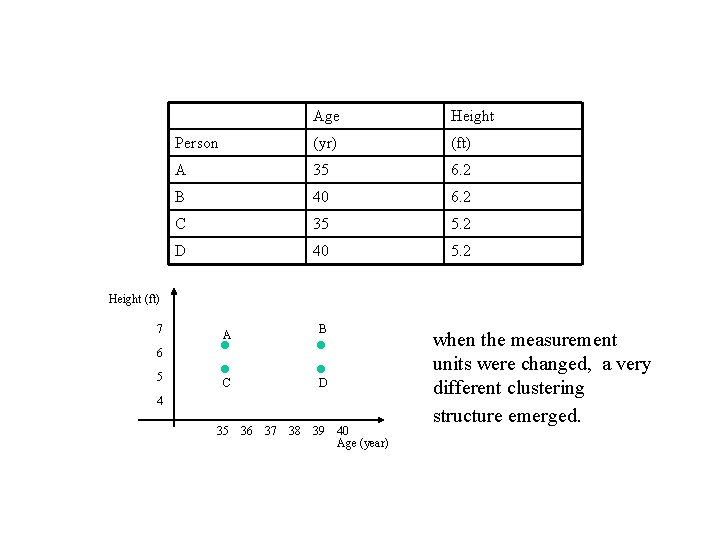

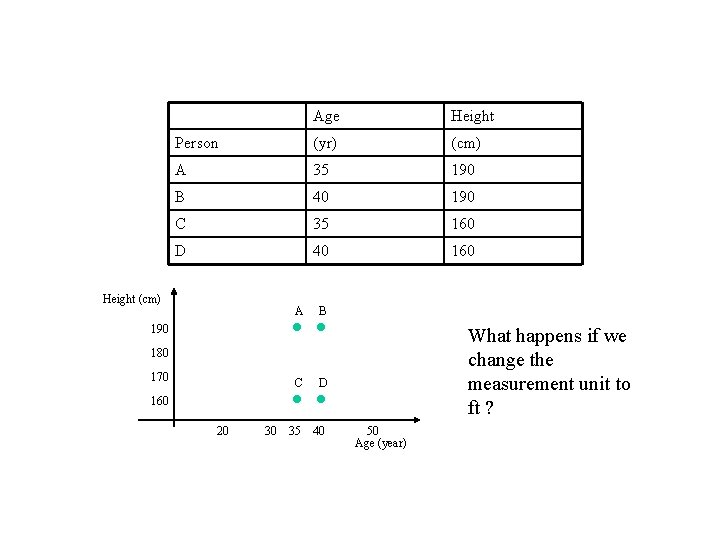

Age Height Person (yr) (cm) A 35 190 B 40 190 C 35 160 D 40 160 Height (cm) A B 190 What happens if we change the measurement unit to ft ? 180 170 C D 35 40 160 20 30 50 Age (year)

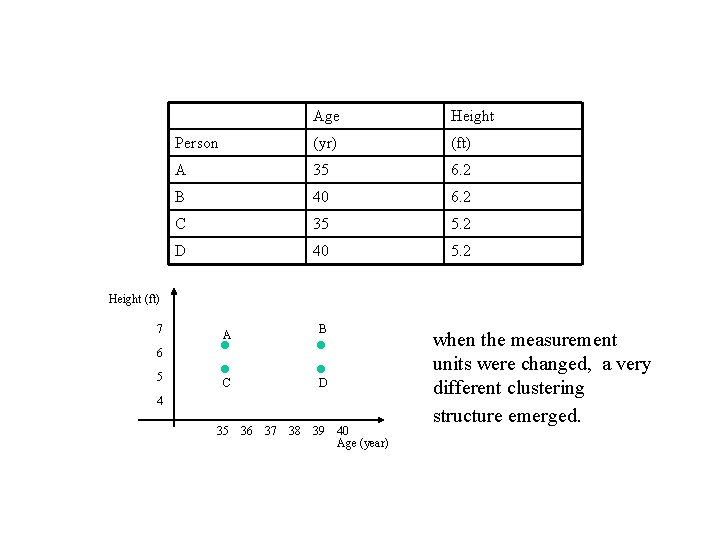

Age Height Person (yr) (ft) A 35 6. 2 B 40 6. 2 C 35 5. 2 D 40 5. 2 Height (ft) 7 A B C D 6 5 4 35 36 37 38 39 40 Age (year) when the measurement units were changed, a very different clustering structure emerged.

How to overcome measure unit problems ? • It’s clear that if k-means algorithm is to be used the data should be normalized and standardized. let’s just have a brief look on the dataset structure …

Dataset structure We are dealing with a Multivariate Dataset composed of p variables (p microarrays tests done) for n independent observations (genes). We represent it using n x p matrix M consisting of vectors X 1 through Xn each of length p.

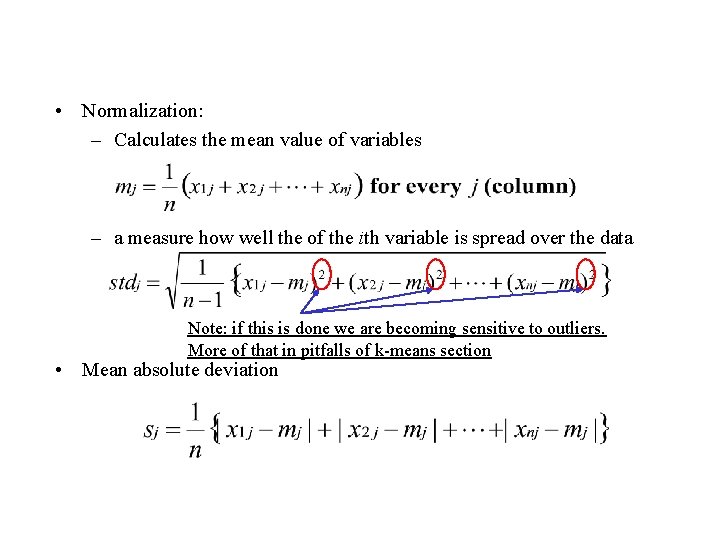

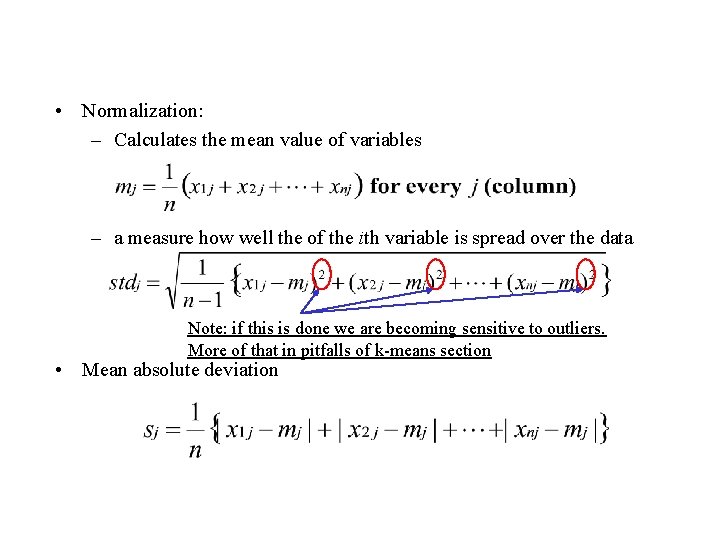

• Normalization: – Calculates the mean value of variables – a measure how well the of the ith variable is spread over the data Note: if this is done we are becoming sensitive to outliers. More of that in pitfalls of k-means section • Mean absolute deviation

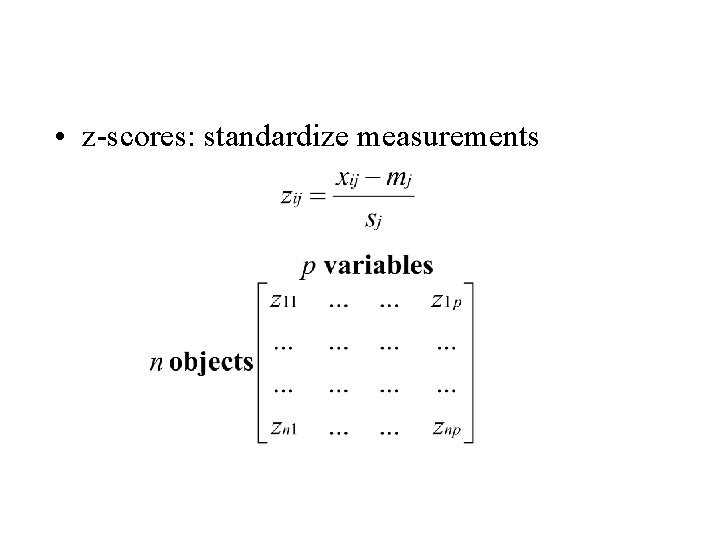

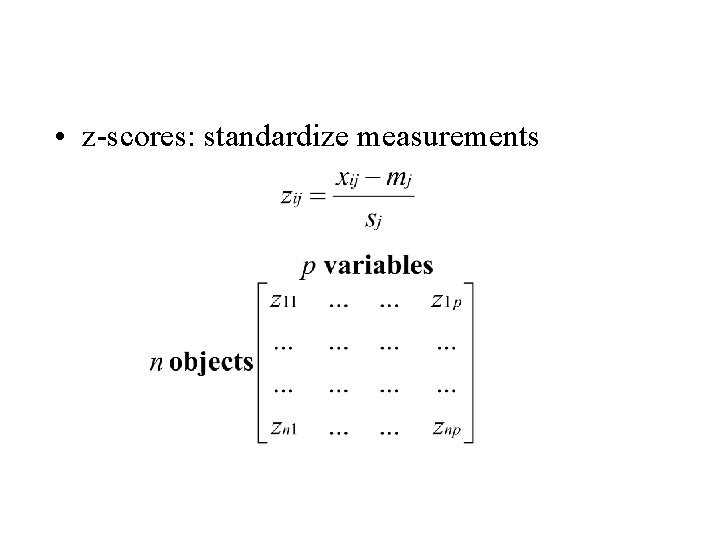

• z-scores: standardize measurements

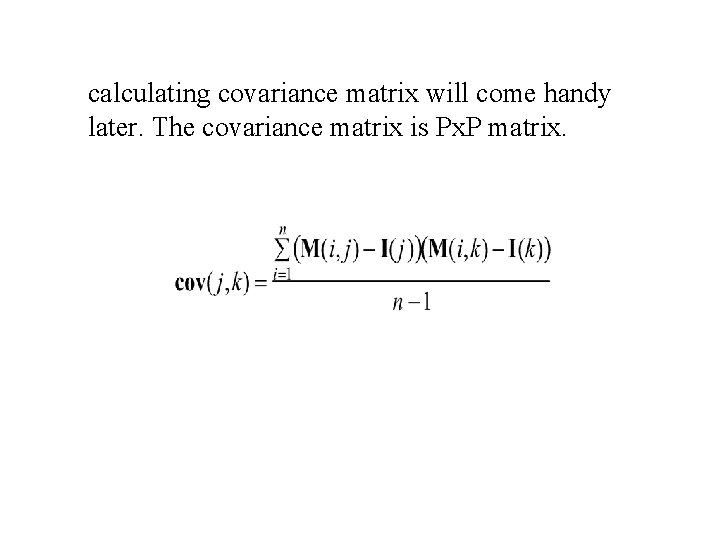

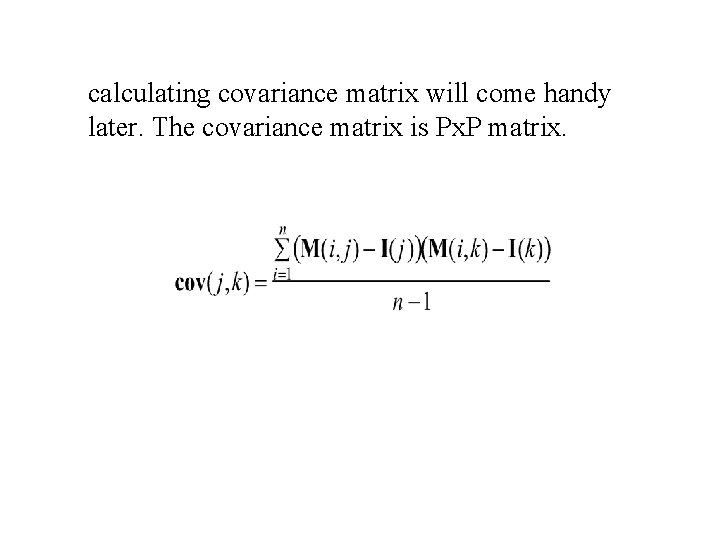

calculating covariance matrix will come handy later. The covariance matrix is Px. P matrix.

before running k-means on the data it’s also a good idea to do mean centering. Mean centering reduces the effect of the variables with the largest values (column) , which obscure other important differences. but When applying the previous steps to the data you should be cautious. When data is being standardized it may cause some damages to the clusters structure , because of the reduced effects , from variables with a big contribution, being divided by large sj.

Back to microarrays world

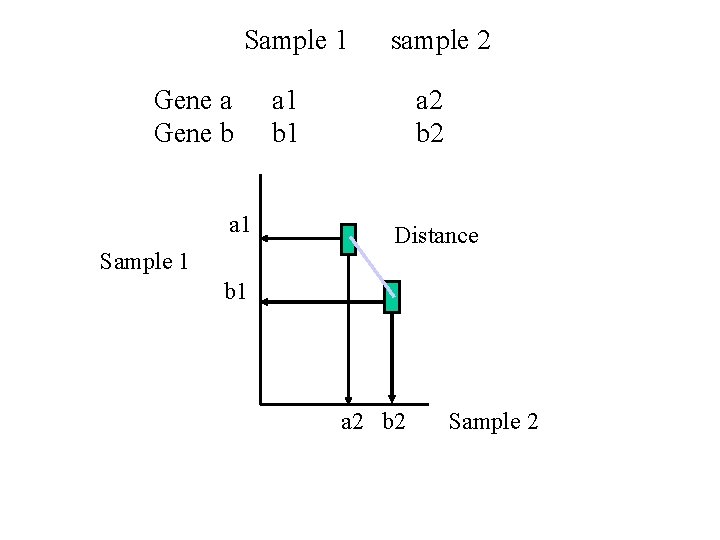

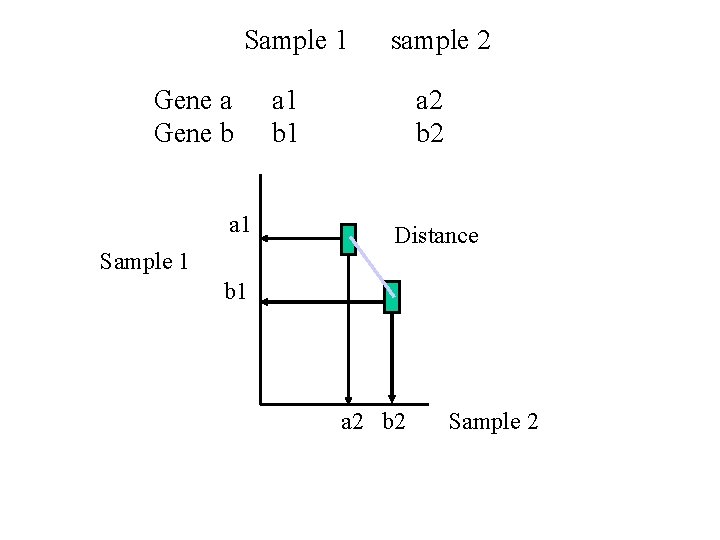

Sample 1 Gene a Gene b a 1 Sample 1 sample 2 a 1 b 1 a 2 b 2 Distance b 1 a 2 b 2 Sample 2

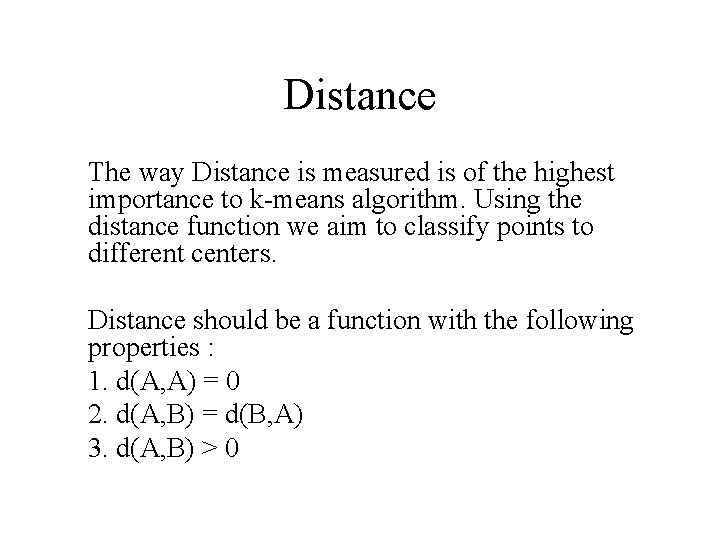

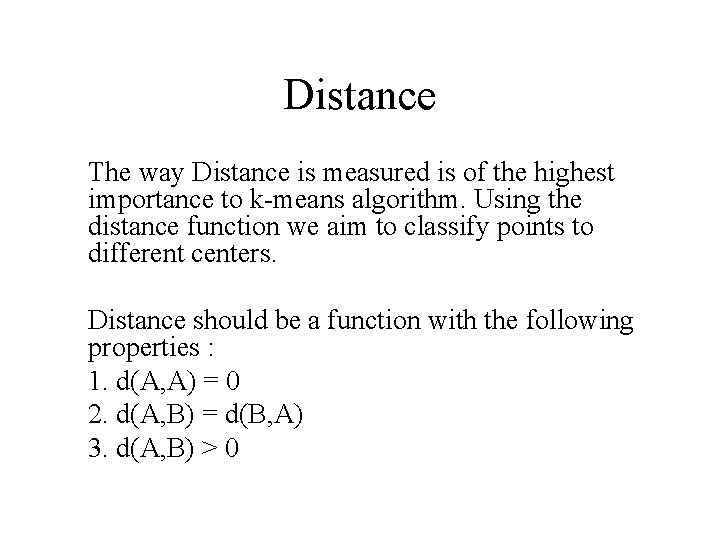

Distance The way Distance is measured is of the highest importance to k-means algorithm. Using the distance function we aim to classify points to different centers. Distance should be a function with the following properties : 1. d(A, A) = 0 2. d(A, B) = d(B, A) 3. d(A, B) > 0

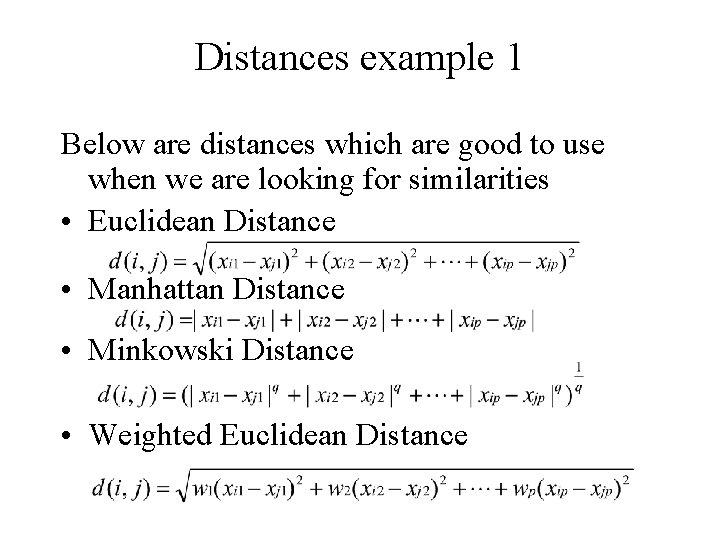

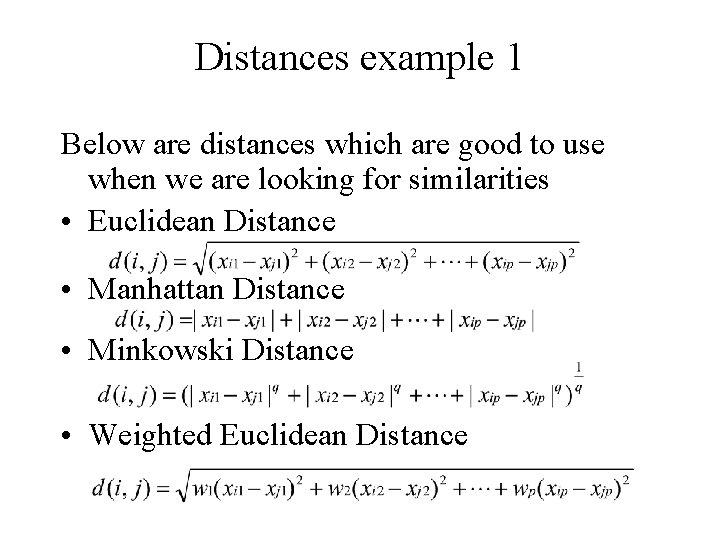

Distances example 1 Below are distances which are good to use when we are looking for similarities • Euclidean Distance • Manhattan Distance • Minkowski Distance • Weighted Euclidean Distance

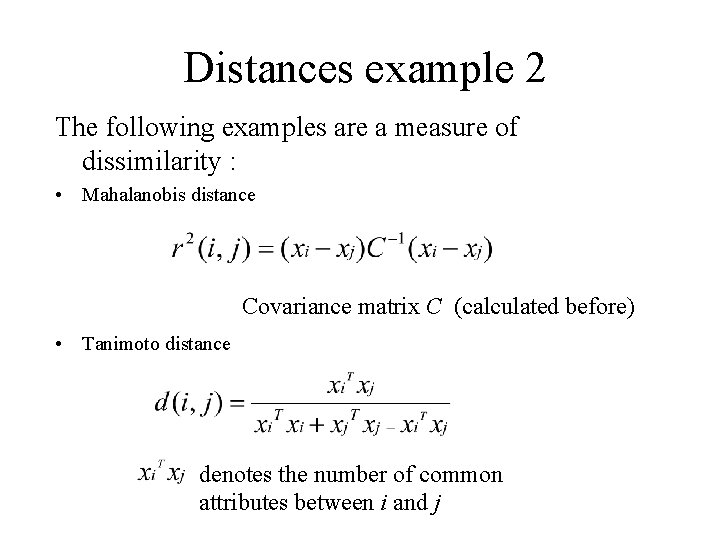

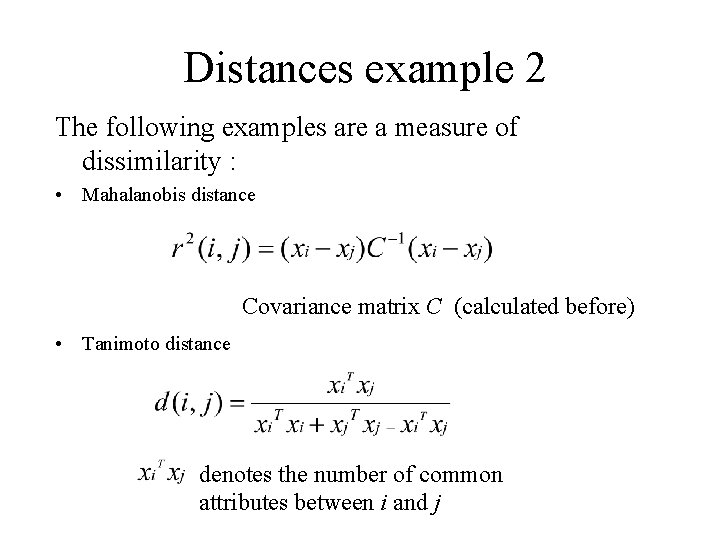

Distances example 2 The following examples are a measure of dissimilarity : • Mahalanobis distance Covariance matrix C (calculated before) • Tanimoto distance denotes the number of common attributes between i and j

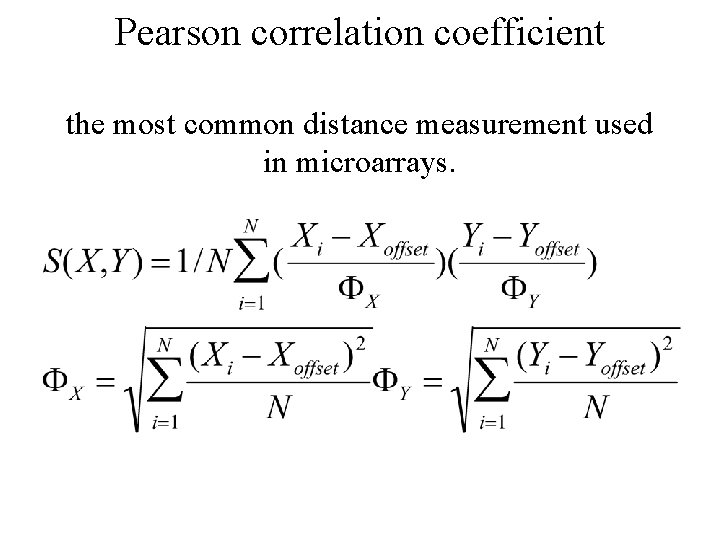

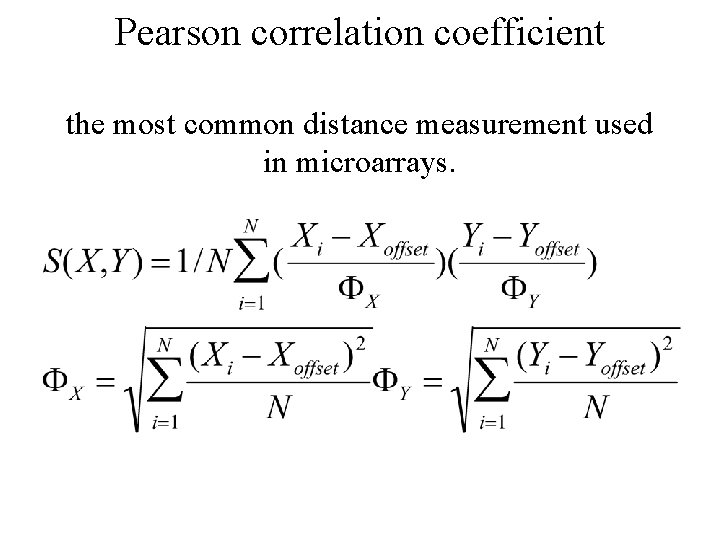

Pearson correlation coefficient the most common distance measurement used in microarrays.

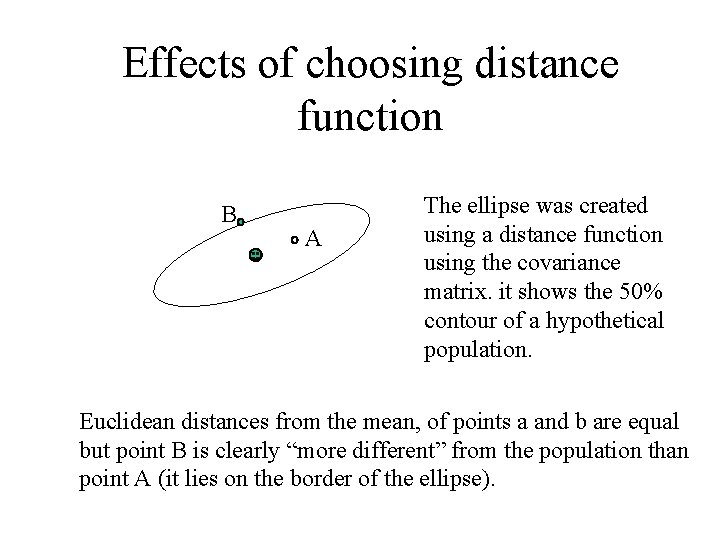

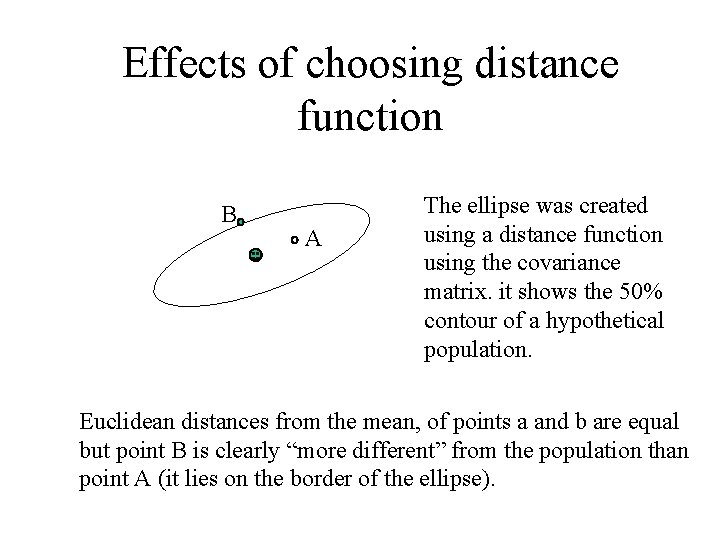

Effects of choosing distance function B A The ellipse was created using a distance function using the covariance matrix. it shows the 50% contour of a hypothetical population. Euclidean distances from the mean, of points a and b are equal but point B is clearly “more different” from the population than point A (it lies on the border of the ellipse).

Result from microarrays data analysis

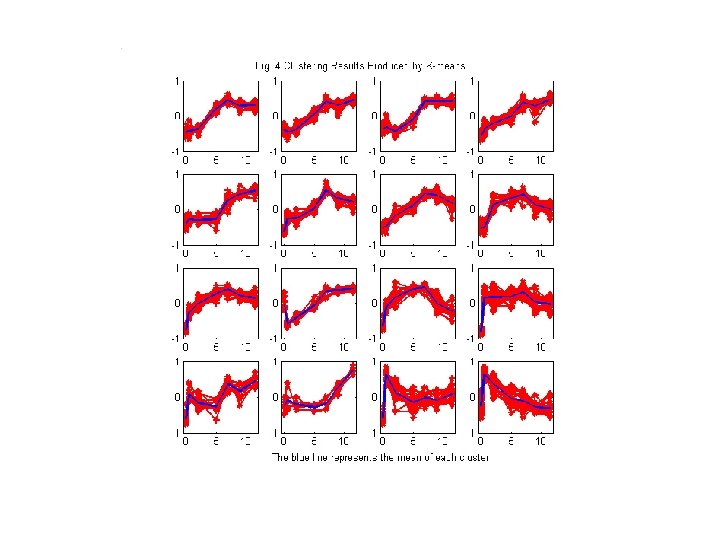

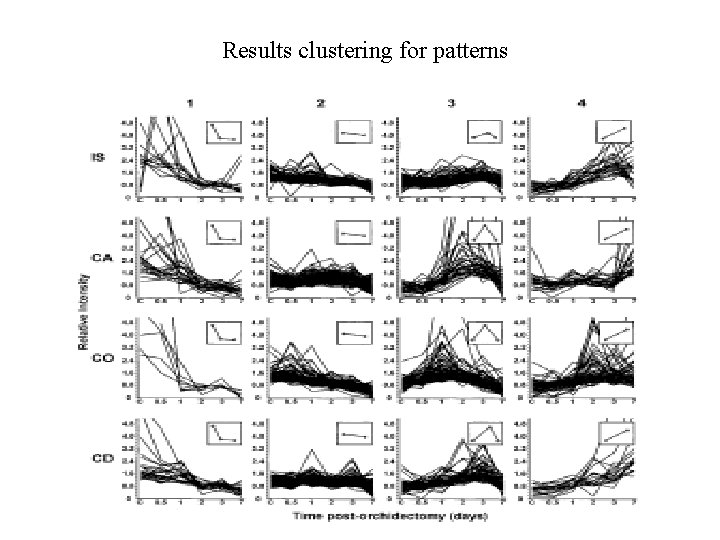

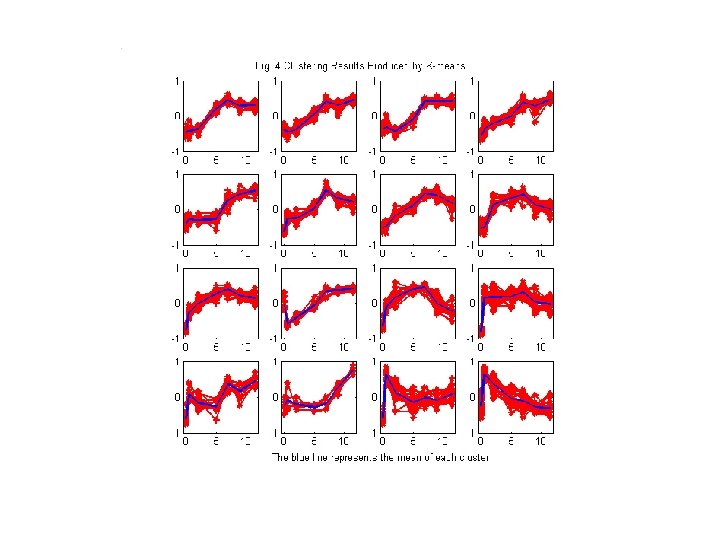

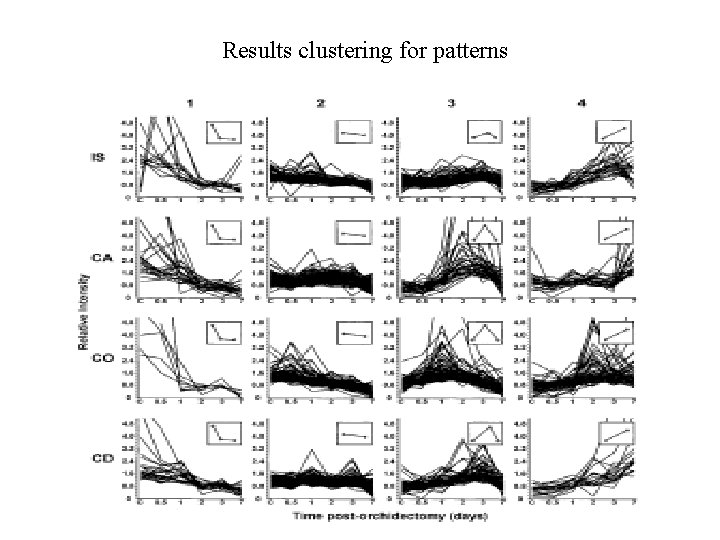

Results clustering for patterns

Problems with K-means type algorithms • Clusters are approximately spherical • Local optimum may be incorrect and influenced by the choice of the first K means values • High dimensionality is a problem • The value of K is an input parameter • Sensitive to outliers

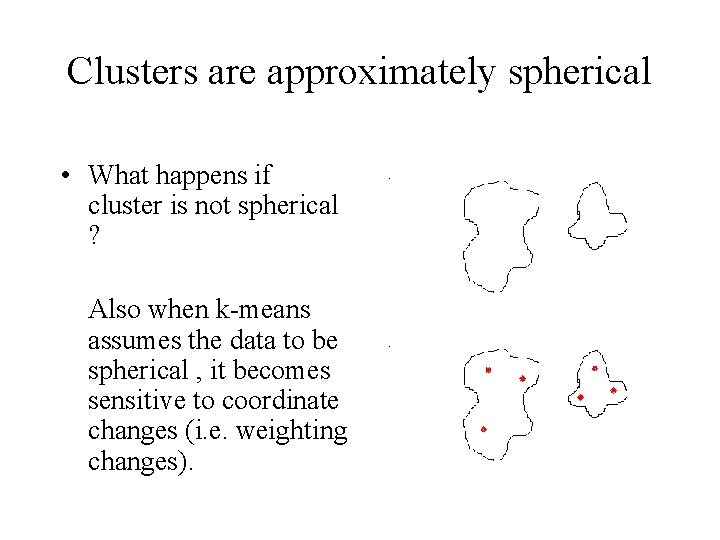

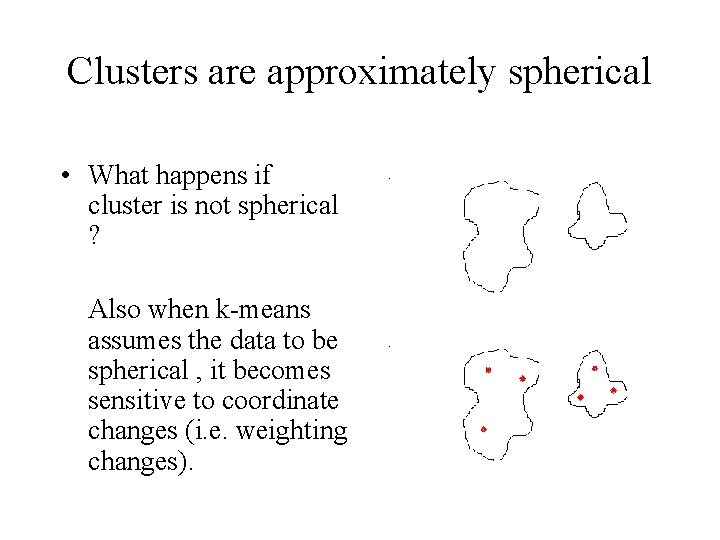

Clusters are approximately spherical • What happens if cluster is not spherical ? Also when k-means assumes the data to be spherical , it becomes sensitive to coordinate changes (i. e. weighting changes).

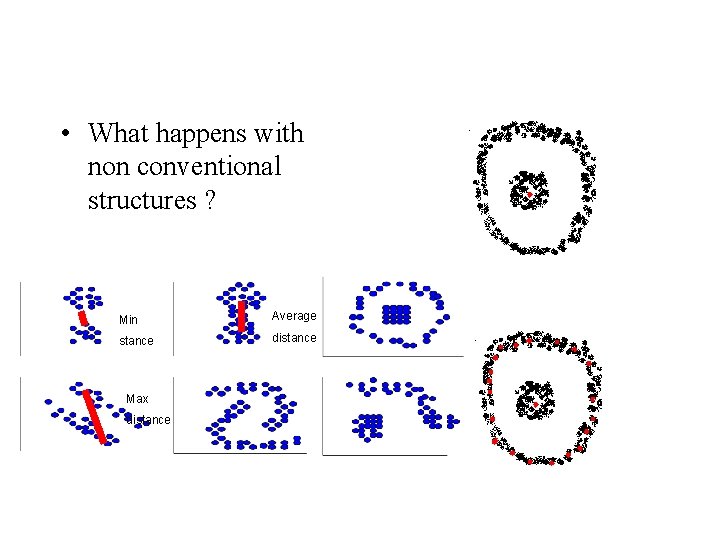

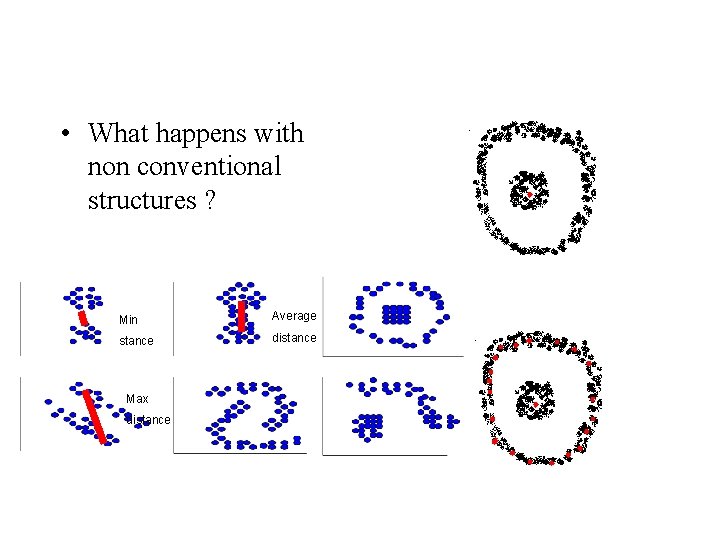

• What happens with non conventional structures ? Min Average stance distance Max distance

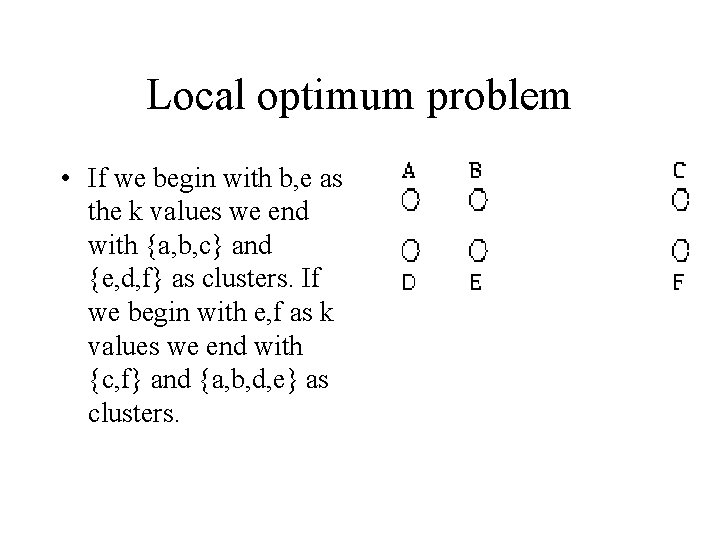

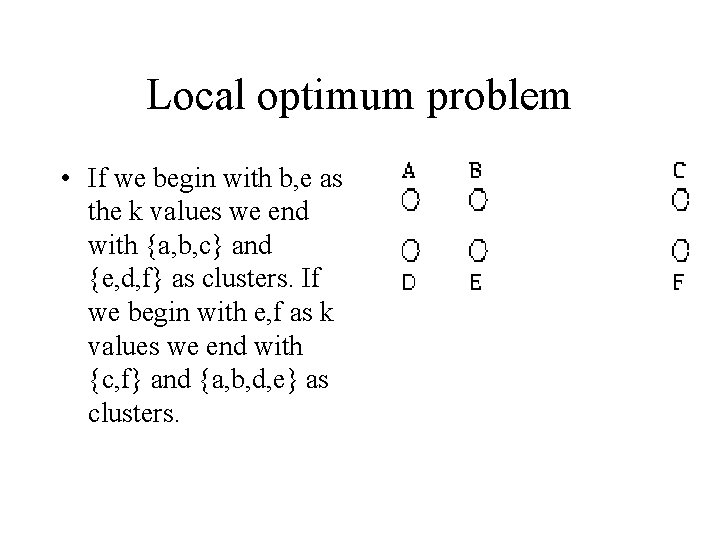

Local optimum problem • If we begin with b, e as the k values we end with {a, b, c} and {e, d, f} as clusters. If we begin with e, f as k values we end with {c, f} and {a, b, d, e} as clusters.

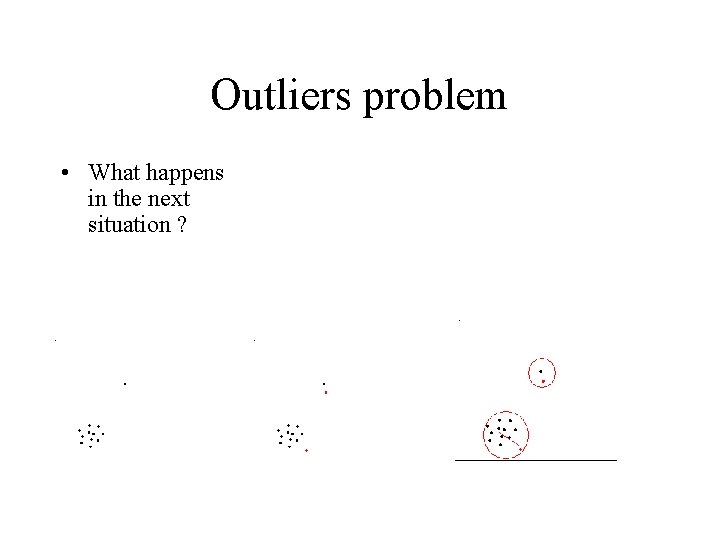

Outliers problem • What happens in the next situation ?

High dimensionality Poses a computational problem. The higher the dimension , the more resources are required. Also a linear connection exists between the dimension and running time.

Dimensions reduction Using PCA (principle component analysis) we can guess the dimensions which are most important to us (most changes of data occur in them). After finding the proper dimensions we reduce the data set dimensions accordingly.

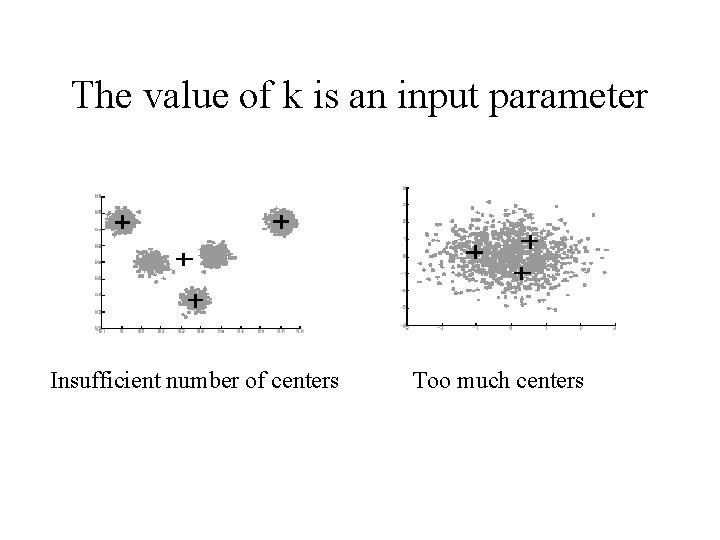

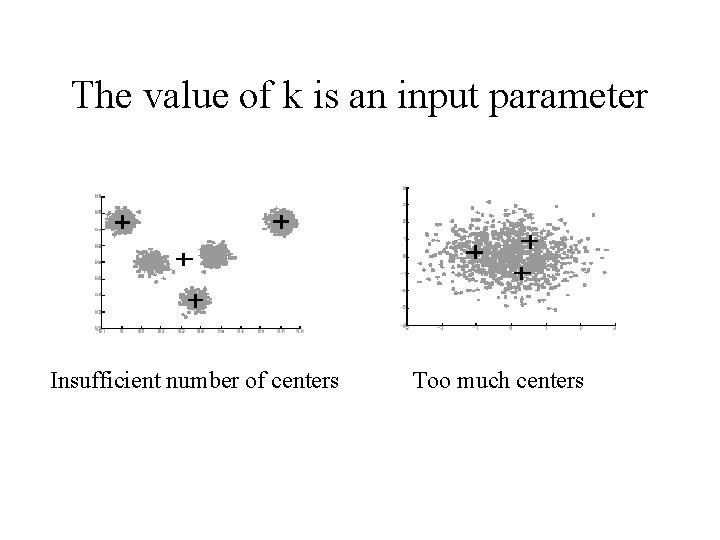

The value of k is an input parameter Insufficient number of centers Too much centers

Variation K-means • Instead of fixed number of centers , the number of centers change as the algorithm runs. • G-means is an example of such an algorithm.

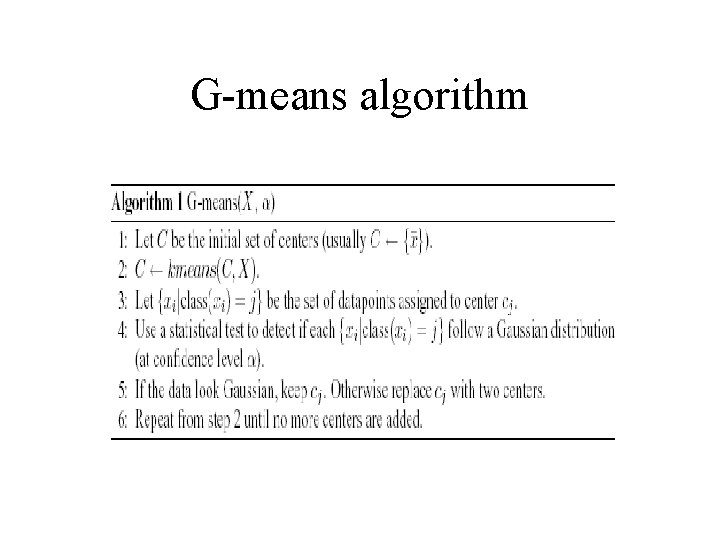

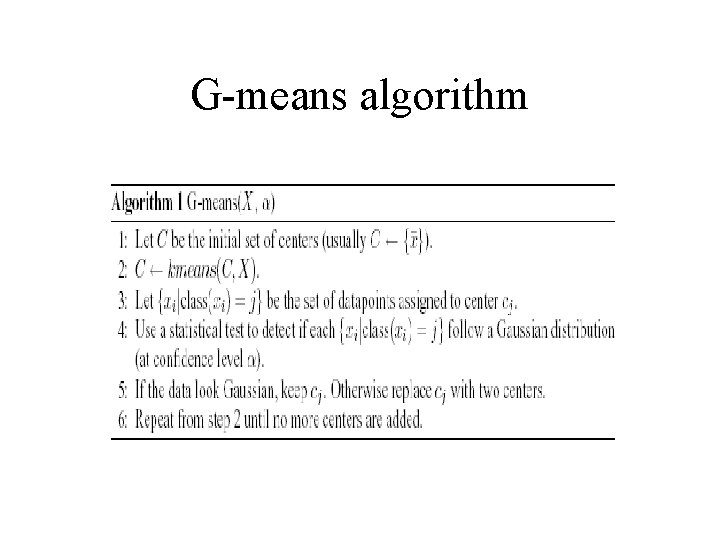

G-means algorithm

Ideas behind G-means • Each cluster adheres to unimodal distribution , such as Gaussian. • Doesn’t presume prior or domain specific knowledge • Increment of the number of centers occurs only if we have a cluster without gaussian distribution. • Statistical test is used to determine whether clusters have gaussian distributions or not.

Testing clusters for Gaussian fit we need a test to detect whether the data assigned to a center is sampled from a gaussian. The alternative hypotheses are: • H 0 – the data around the center is sampled from a gaussian • H 1 – the data around the center is not sampled from a gaussian Accepting the null H means we believe that one center is sufficient and we shouldn’t split the clusters. Regecting the null H, then we want to split the cluster

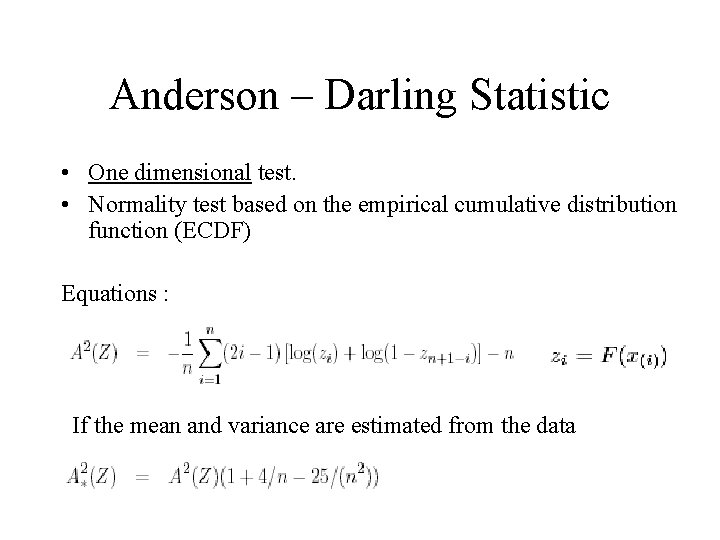

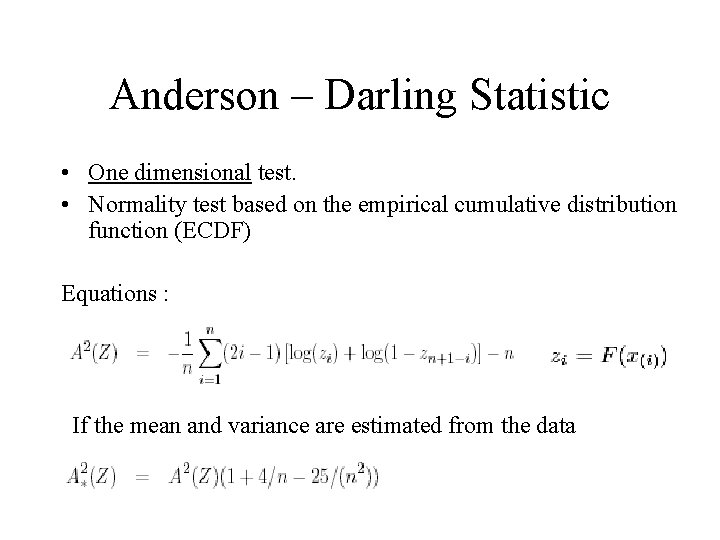

Anderson – Darling Statistic • One dimensional test. • Normality test based on the empirical cumulative distribution function (ECDF) Equations : If the mean and variance are estimated from the data

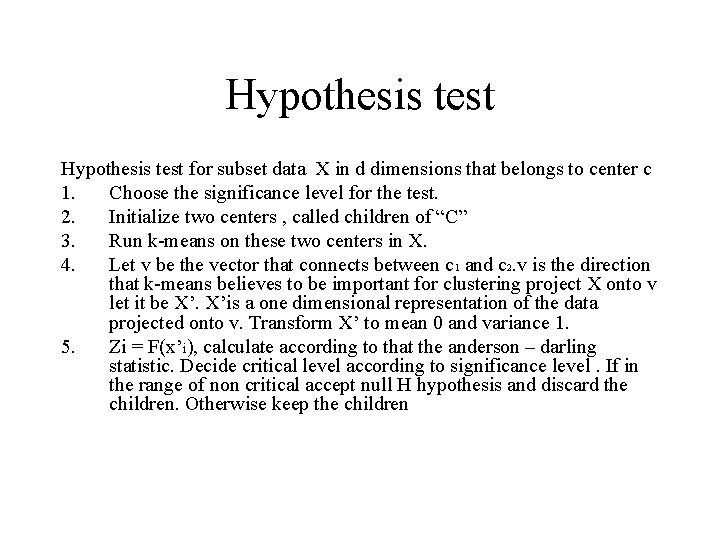

Hypothesis test for subset data X in d dimensions that belongs to center c 1. Choose the significance level for the test. 2. Initialize two centers , called children of “C” 3. Run k-means on these two centers in X. 4. Let v be the vector that connects between c 1 and c 2. v is the direction that k-means believes to be important for clustering project X onto v let it be X’. X’is a one dimensional representation of the data projected onto v. Transform X’ to mean 0 and variance 1. 5. Zi = F(x’i), calculate according to that the anderson – darling statistic. Decide critical level according to significance level. If in the range of non critical accept null H hypothesis and discard the children. Otherwise keep the children

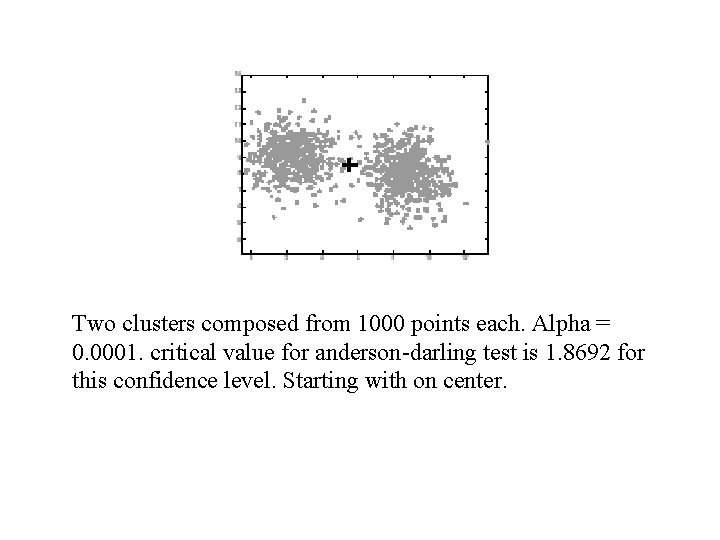

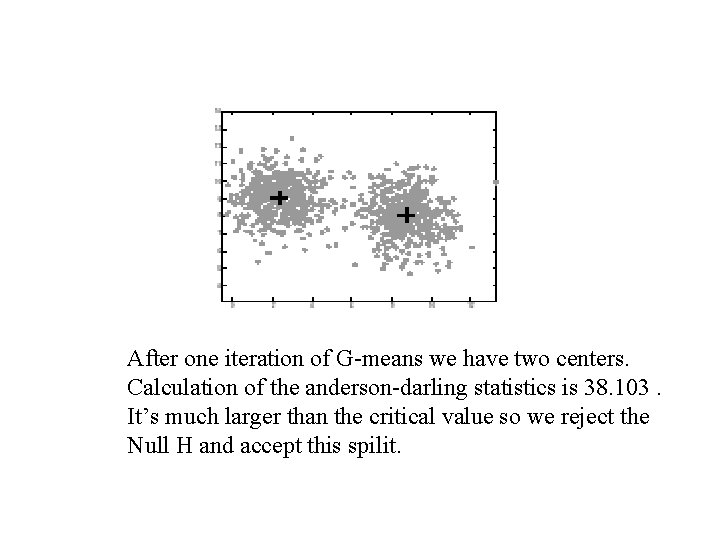

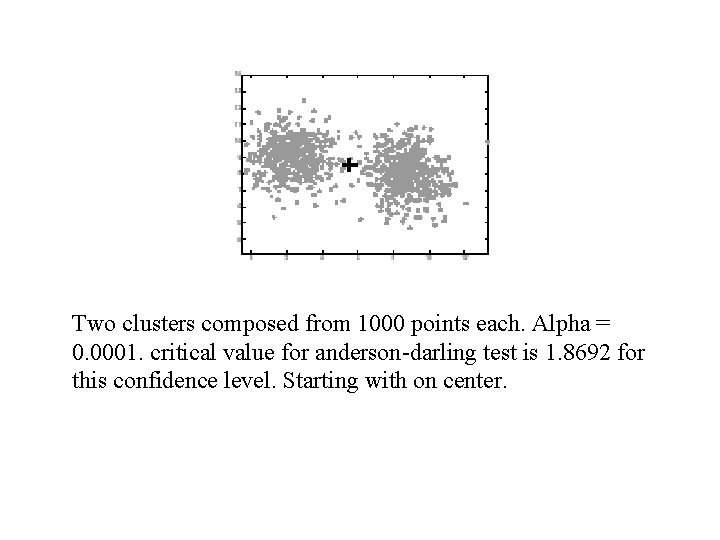

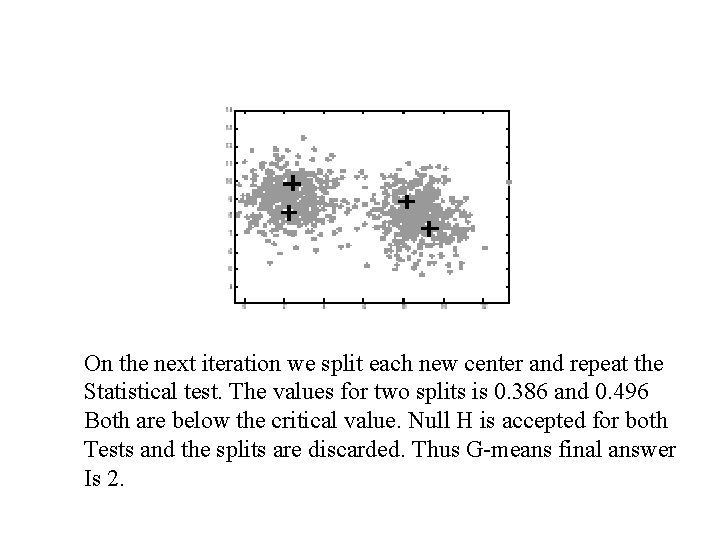

Two clusters composed from 1000 points each. Alpha = 0. 0001. critical value for anderson-darling test is 1. 8692 for this confidence level. Starting with on center.

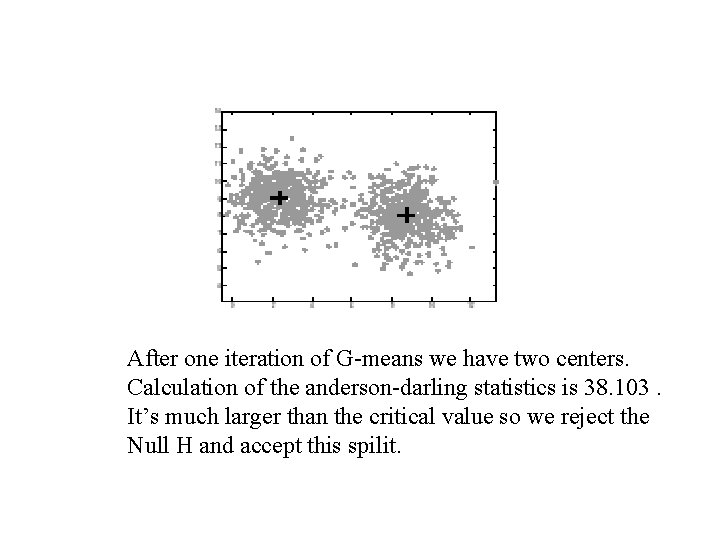

After one iteration of G-means we have two centers. Calculation of the anderson-darling statistics is 38. 103. It’s much larger than the critical value so we reject the Null H and accept this spilit.

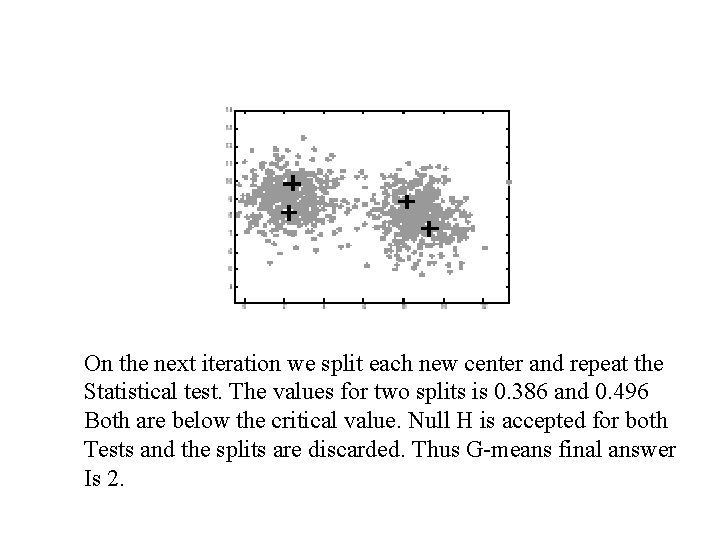

On the next iteration we split each new center and repeat the Statistical test. The values for two splits is 0. 386 and 0. 496 Both are below the critical value. Null H is accepted for both Tests and the splits are discarded. Thus G-means final answer Is 2.

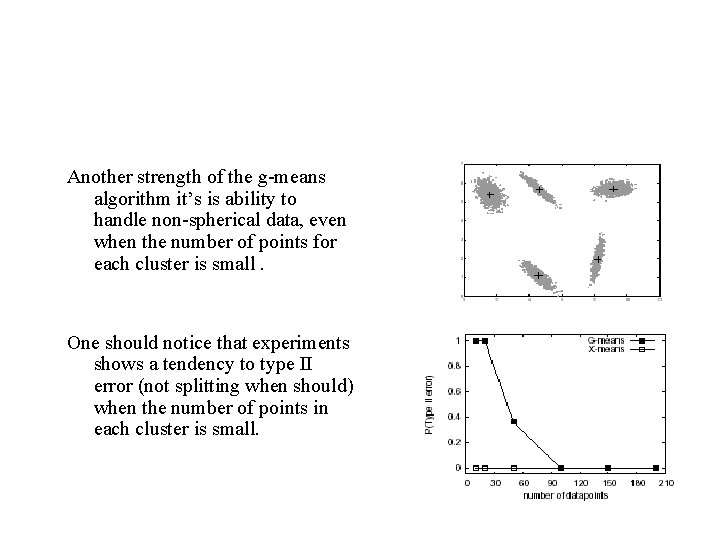

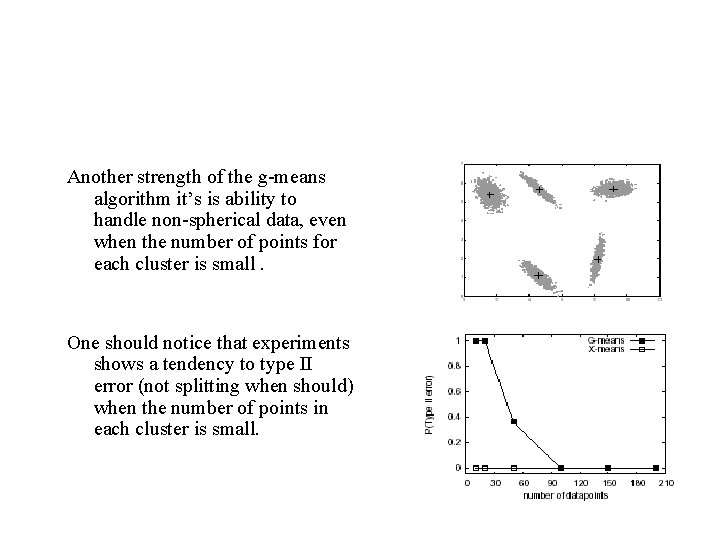

Another strength of the g-means algorithm it’s is ability to handle non-spherical data, even when the number of points for each cluster is small. One should notice that experiments shows a tendency to type II error (not splitting when should) when the number of points in each cluster is small.