Unsupervised Ensemble Based Learning for Insider Threat Detection

Pallabi Parveen, Nate McDaniel, Varun S. Hariharan, Bhavani Thuraisingham and Latifur Khan
Department of Computer Science, The University of Texas at Dallas

Outline
- Insider Threat
- LZW & Quantized Dictionary
- Concept Drift
- Experiments & Results

What is the Problem?
Definition of an Insider: An insider is someone who exploits, or has the intention to exploit, his/her legitimate access to assets for unauthorised purposes. For example, over time, legitimate users may enter commands that read or write private data, or install malicious software.

Motivation
- Computer Crime and Security Survey 2001
  - $377 million in financial losses due to attacks
  - 49% reported incidents of unauthorized network access by insiders
- WikiLeaks Breach Highlights Insider Security Threat: Even the toughest security systems sometimes have a soft center that can be exploited by someone who has passed rigorous screening
  http://www.scientificamerican.com/article.cfm?id=wikileaks-insider-threat

Challenges/Issues
- Reduce the false alarm rate without sacrificing the threat detection rate
- Threat detection is challenging because insiders mask and adapt their behavior to resemble legitimate system use

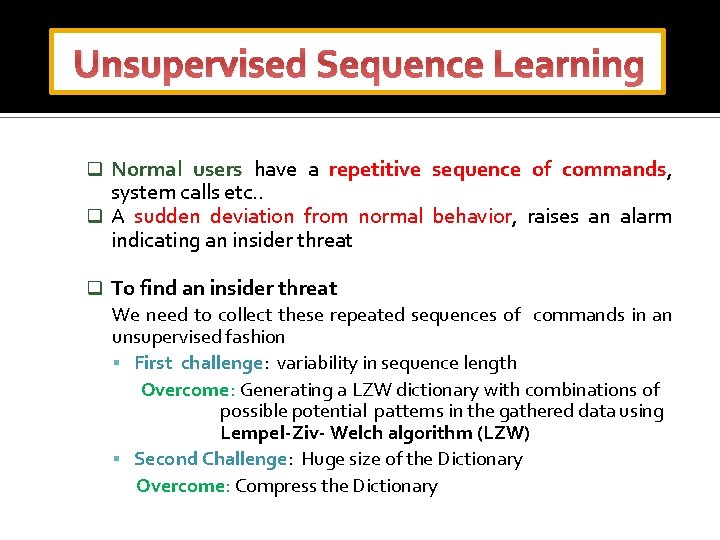

Unsupervised Sequence Learning
- Normal users have repetitive sequences of commands, system calls, etc.
- A sudden deviation from normal behavior raises an alarm indicating an insider threat
- To find an insider threat, we need to collect these repeated sequences of commands in an unsupervised fashion
- First challenge: variability in sequence length
  Overcome: generate an LZW dictionary of the possible patterns in the gathered data using the Lempel-Ziv-Welch (LZW) algorithm
- Second challenge: huge size of the dictionary
  Overcome: compress the dictionary

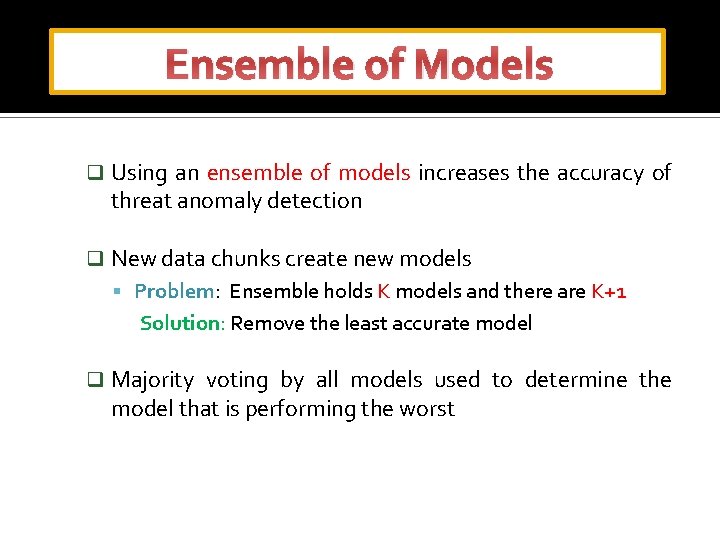

Ensemble of Models
- Using an ensemble of models increases the accuracy of anomaly detection
- New data chunks create new models
  Problem: the ensemble holds K models, and a new chunk produces model K+1
  Solution: remove the least accurate model
- Majority voting by all models is used to determine which model is performing the worst
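
The replacement policy on this slide can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes each model is a callable that returns `True` when it flags an item as anomalous, treats the majority vote as stand-in ground truth, and drops the model that agrees with the majority least often. The names `majority_vote` and `update_ensemble` are hypothetical.

```python
from collections import Counter


def majority_vote(votes):
    """Return the label chosen by most models for one item."""
    return Counter(votes).most_common(1)[0][0]


def update_ensemble(models, new_model, chunk, k):
    """Add new_model; if more than k models remain, drop the least accurate.

    Assumption: accuracy is measured as agreement with the majority vote
    of all candidate models on the items of the latest chunk.
    """
    candidates = list(models) + [new_model]
    if len(candidates) <= k:
        return candidates

    # predictions[m][i] is the verdict of model m on item i of the chunk
    predictions = [[model(item) for item in chunk] for model in candidates]
    consensus = [majority_vote(column) for column in zip(*predictions)]

    # Count how often each model agrees with the majority verdict
    agreement = [
        sum(p == c for p, c in zip(preds, consensus))
        for preds in predictions
    ]
    worst = agreement.index(min(agreement))
    return candidates[:worst] + candidates[worst + 1:]
```

An odd number of voters avoids tied votes; with an even number, this sketch resolves a tie in favour of the label seen first.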

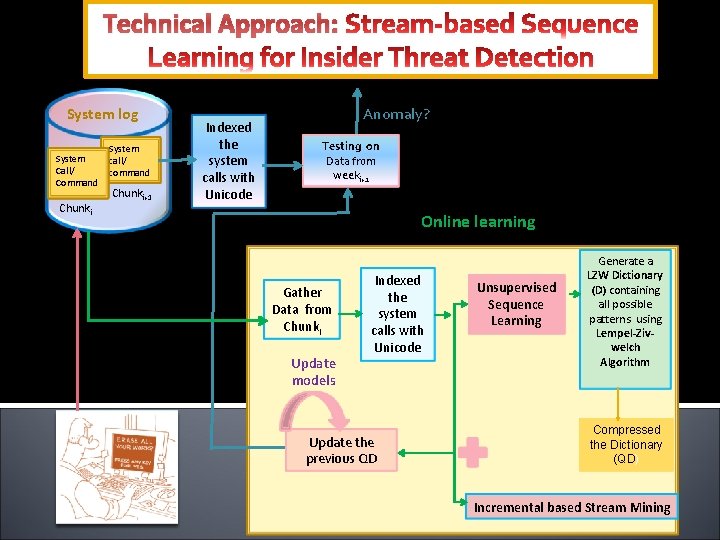

Technical Approach
[Flow diagram. Recoverable labels:]
- System log: system calls/commands grouped into chunks (chunk i, chunk i+1)
- Index the system calls with Unicode
- Unsupervised sequence learning: generate an LZW dictionary (D) containing all possible patterns using the Lempel-Ziv-Welch algorithm, then compress the dictionary (QD)
- Incremental stream mining (online learning): gather data from chunk i, index the system calls with Unicode, update the previous QD, update models
- Testing on data from week i+1: anomaly?
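
The "index the system calls with Unicode" step turns a sequence of commands into a string, so that string algorithms such as LZW can run on it. The following is a small sketch under stated assumptions: the function name `index_commands`, the lookup-table layout and the starting code point `0x4E00` are illustrative choices, not details given in the slides.

```python
def index_commands(commands, table=None, base=0x4E00):
    """Map each distinct command to one Unicode character.

    Returns the encoded string and the command-to-character table.
    Passing the table back in on the next chunk keeps the mapping stable.
    The base code point is an arbitrary assumption.
    """
    table = {} if table is None else table
    encoded = []
    for command in commands:
        if command not in table:
            # Assign the next unused code point to a newly seen command
            table[command] = chr(base + len(table))
        encoded.append(table[command])
    return "".join(encoded), table


# Usage: the same command always maps to the same character
encoded, table = index_commands(["ls", "cd", "ls", "vim"])
```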

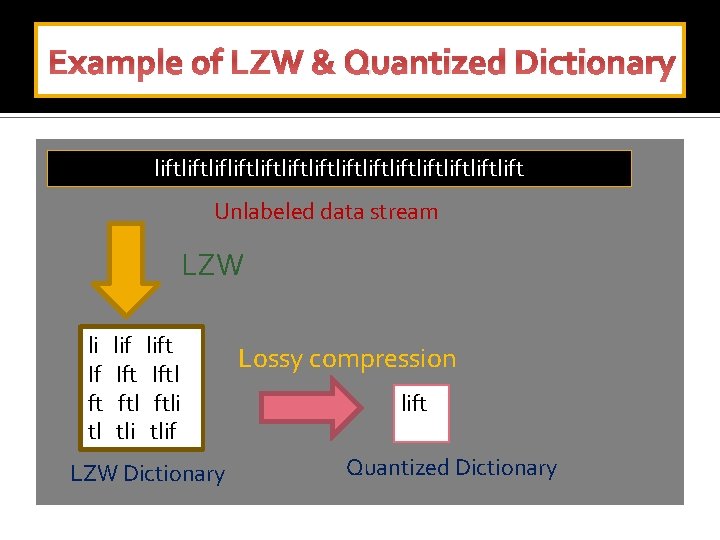

Example of LZW & Quantized Dictionary
- Unlabeled data stream: liftliftliftliftliftliftlift
- LZW dictionary: li, if, ft, tl, lift, iftl, ftli, tlif
- Quantized dictionary (after lossy compression): lift
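
To make the dictionary-building step concrete, here is a minimal sketch of the phrase-collection part of LZW, run on the example stream from this slide. It only records the phrases LZW adds to its dictionary (no output codes are emitted), and the function name `lzw_dictionary` is hypothetical. A standard LZW pass also adds three-symbol phrases (lif, ift, ftl, tli) that do not appear in the surviving slide text.

```python
def lzw_dictionary(stream):
    """Return the multi-symbol phrases LZW adds, in insertion order."""
    known = set(stream)   # start with every single symbol in the stream
    phrases = []
    current = ""
    for symbol in stream:
        candidate = current + symbol
        if candidate in known:
            # Keep extending the current phrase while it is already known
            current = candidate
        else:
            # New phrase: record it and restart from the current symbol
            known.add(candidate)
            phrases.append(candidate)
            current = symbol
    return phrases


print(lzw_dictionary("lift" * 7))
# → ['li', 'if', 'ft', 'tl', 'lif', 'ftl', 'lift', 'tli', 'ift', 'tlif', 'ftli', 'iftl']
```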

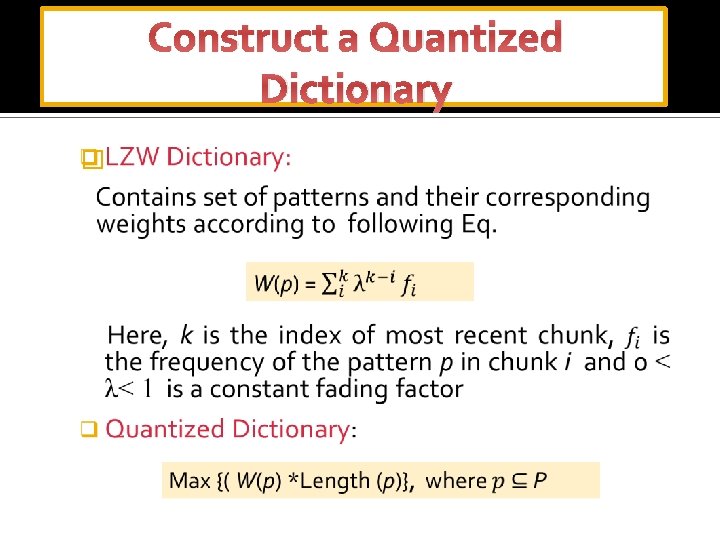

Construct a Quantized Dictionary

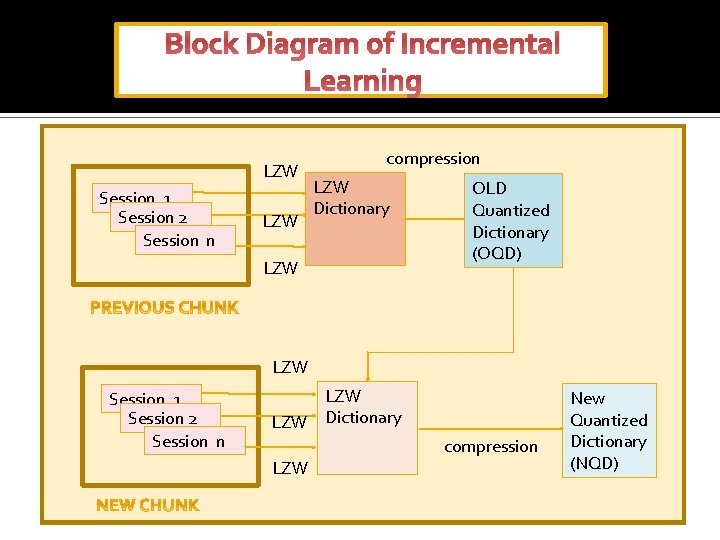

Block Diagram of Incremental Learning
[Block diagram. Recoverable labels:]
- One path: Session 1, Session 2, ..., Session n; LZW; LZW dictionary; compression; old quantized dictionary (OQD)
- A second path: Session 1, Session 2, ..., Session n; LZW; LZW dictionary; compression; new quantized dictionary (NQD)

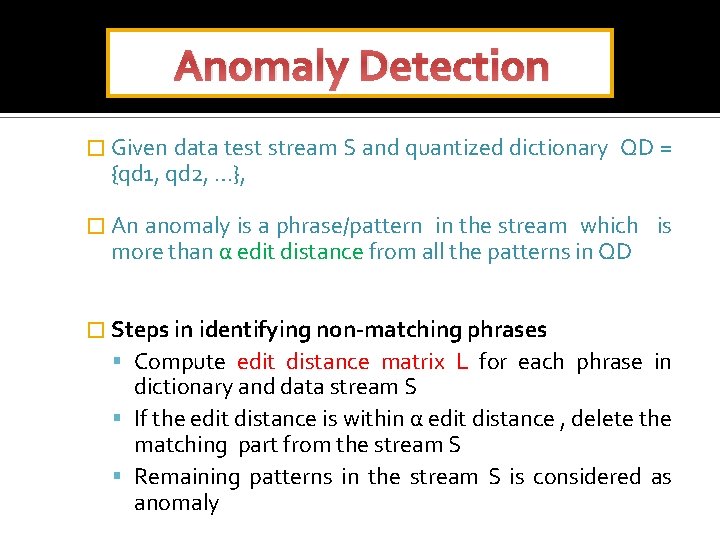

Anomaly Detection
- Given a test data stream S and a quantized dictionary QD = {qd_1, qd_2, …}
- An anomaly is a phrase/pattern in the stream that is more than α edit distance from every pattern in QD
- Steps in identifying non-matching phrases:
  1. Compute the edit distance matrix L between each phrase in the dictionary and the data stream S
  2. If a part of the stream is within edit distance α of a phrase, delete the matching part from S
  3. The patterns remaining in S are considered anomalies
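
The steps above can be sketched as follows. This is a simplified illustration rather than the authors' algorithm: it assumes each dictionary pattern is compared against a stream window of the same length as the pattern, scans the stream left to right, and collects whatever is never matched. The names `edit_distance` and `find_anomalies` are hypothetical.

```python
def edit_distance(a, b):
    """Levenshtein distance between two strings (row-by-row dynamic programming)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, 1):
        current = [i]
        for j, char_b in enumerate(b, 1):
            current.append(min(
                previous[j] + 1,                        # deletion
                current[j - 1] + 1,                     # insertion
                previous[j - 1] + (char_a != char_b),   # substitution or match
            ))
        previous = current
    return previous[-1]


def find_anomalies(stream, qd, alpha):
    """Return the stream segments that match no pattern in qd within alpha."""
    anomalies = []
    pending = ""   # unmatched symbols collected so far
    i = 0
    while i < len(stream):
        match = next(
            (p for p in qd
             if len(stream) - i >= len(p)
             and edit_distance(stream[i:i + len(p)], p) <= alpha),
            None,
        )
        if match is not None:
            # Matched part is removed from the stream; flush any leftovers
            if pending:
                anomalies.append(pending)
                pending = ""
            i += len(match)
        else:
            pending += stream[i]
            i += 1
    if pending:
        anomalies.append(pending)
    return anomalies


print(find_anomalies("liftliftxyzlift", ["lift"], alpha=0))  # → ['xyz']
```

A larger α tolerates more variation: with `alpha=1`, the stream `"liftlixtlift"` yields no anomalies because `"lixt"` is one substitution away from `"lift"`.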

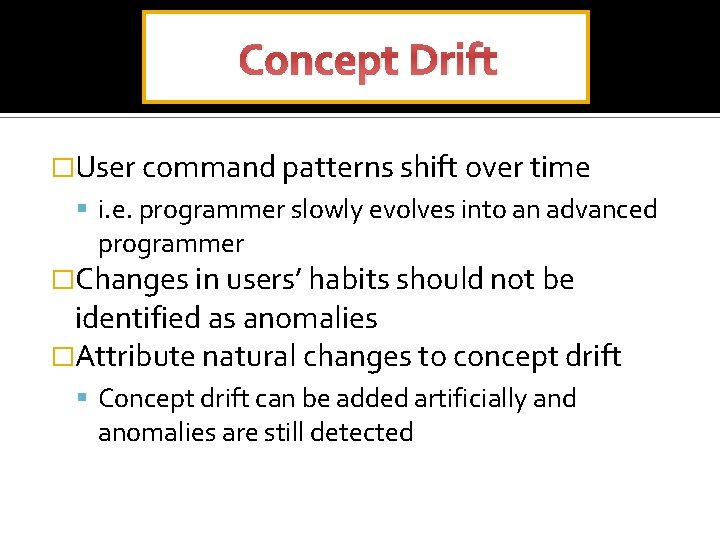

Concept Drift
- User command patterns shift over time, e.g. a programmer slowly evolves into an advanced programmer
- Changes in users' habits should not be identified as anomalies
- Attribute natural changes to concept drift
- Concept drift can be added artificially, and anomalies are still detected

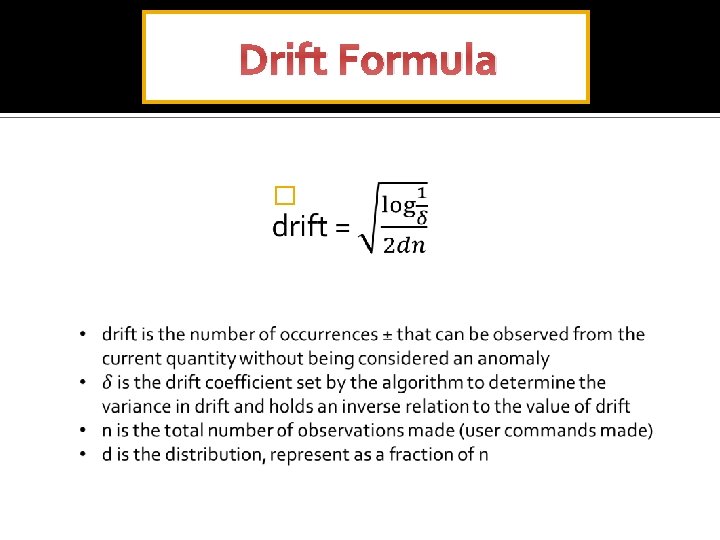

Drift Formula


Drift Example
- drift = [0.7071, 1.1180, 1.5811, 1.5811]
- Min/Max distributions = [0.42929/0.57071, 0.08820/0.31180, 0/0.25811, 0/0.25811]
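
The min/max values in this example are numerically consistent with shifting each base frequency down and up by 0.1 × drift and clipping the lower bound at zero. Neither the base frequencies `[0.5, 0.2, 0.1, 0.1]` nor the 0.1 factor appear in the surviving slide text; both are inferred from the numbers. The sketch below is therefore only a check on the arithmetic under those assumptions, not the authors' drift formula, and the function name `min_max_distribution` is hypothetical.

```python
def min_max_distribution(base, drift, scale=0.1):
    """Return (min, max) bounds for each base frequency.

    Assumptions (inferred, not from the slides): each bound is
    base -/+ scale * drift, clipped to the range [0, 1].
    """
    bounds = []
    for p, d in zip(base, drift):
        delta = scale * d
        bounds.append((max(0.0, p - delta), min(1.0, p + delta)))
    return bounds


drift = [0.7071, 1.1180, 1.5811, 1.5811]
base = [0.5, 0.2, 0.1, 0.1]          # assumed base frequencies
for low, high in min_max_distribution(base, drift):
    print(f"{low:.5f}/{high:.5f}")
# → 0.42929/0.57071
# → 0.08820/0.31180
# → 0.00000/0.25811
# → 0.00000/0.25811
```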

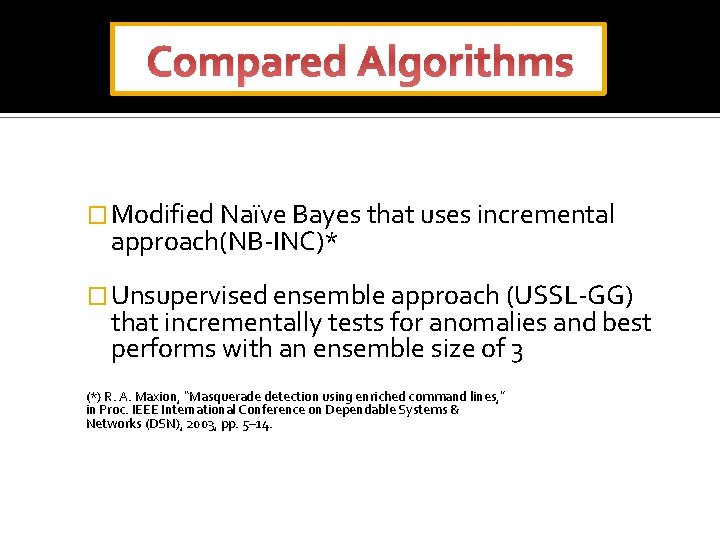

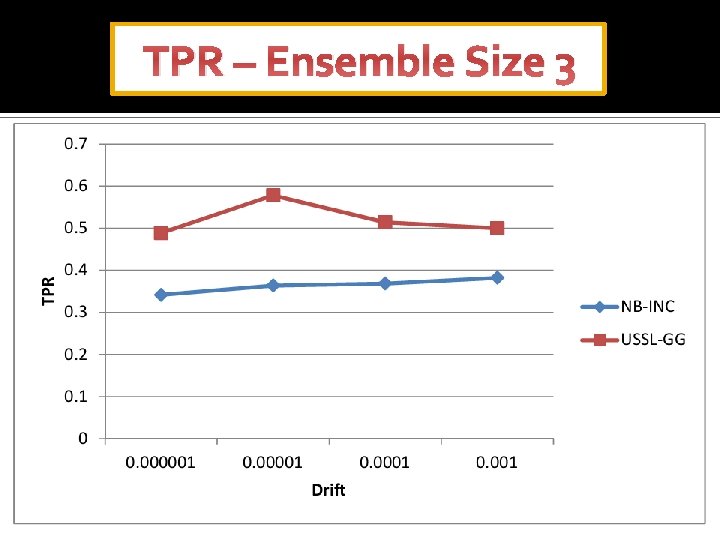

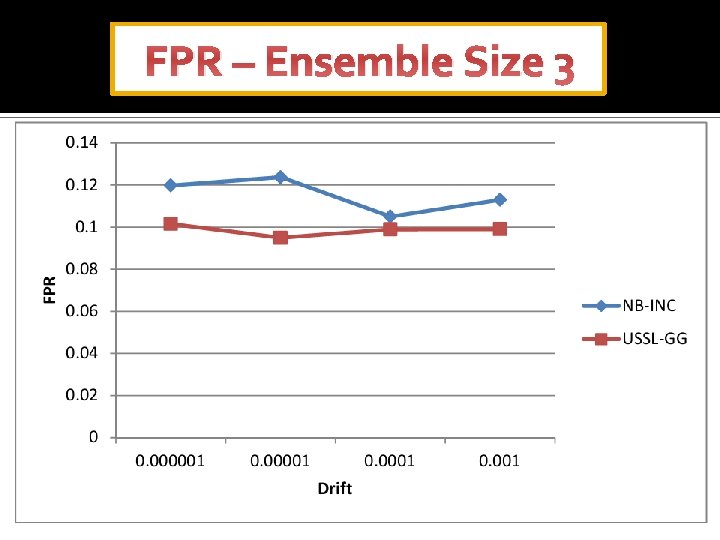

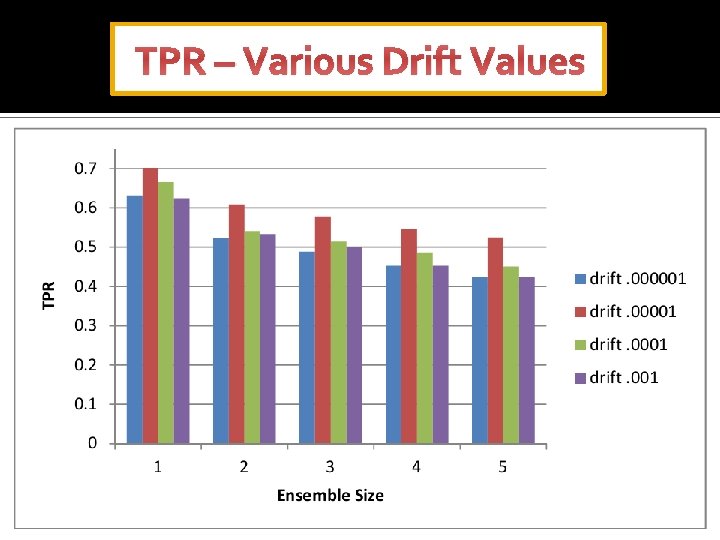

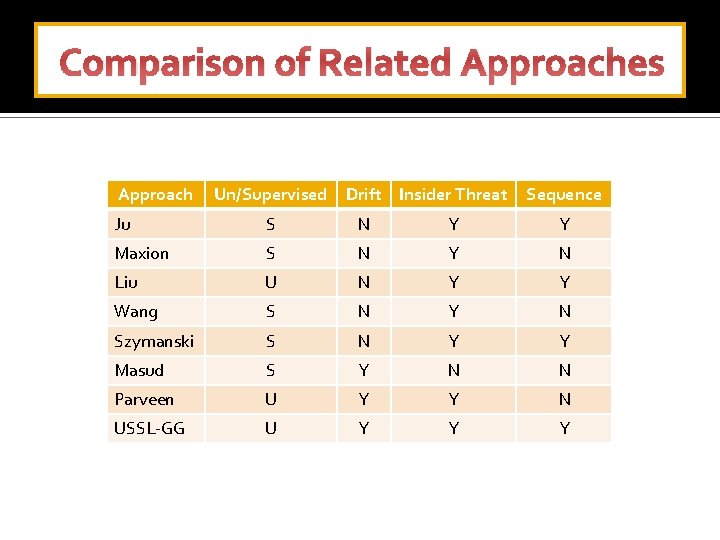

Compared Algorithms
- Modified Naïve Bayes that uses an incremental approach (NB-INC)*
- Unsupervised ensemble approach (USSL-GG) that incrementally tests for anomalies and performs best with an ensemble size of 3

(*) R. A. Maxion, “Masquerade detection using enriched command lines,” in Proc. IEEE International Conference on Dependable Systems & Networks (DSN), 2003, pp. 5–14.

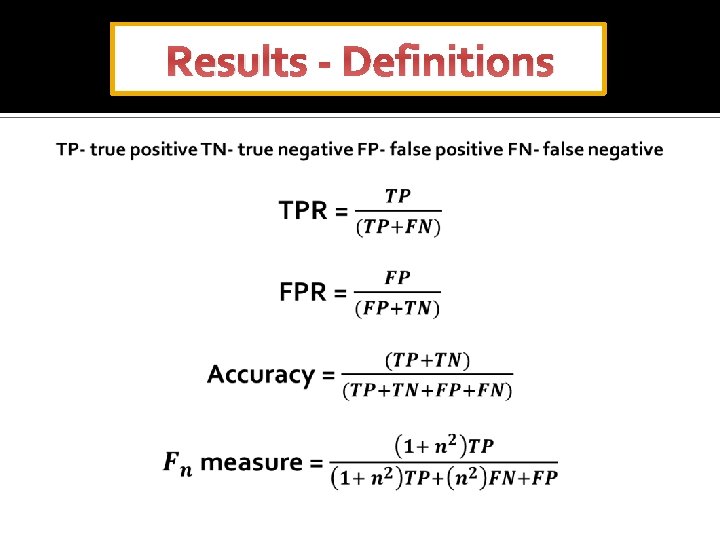

Results - Definitions

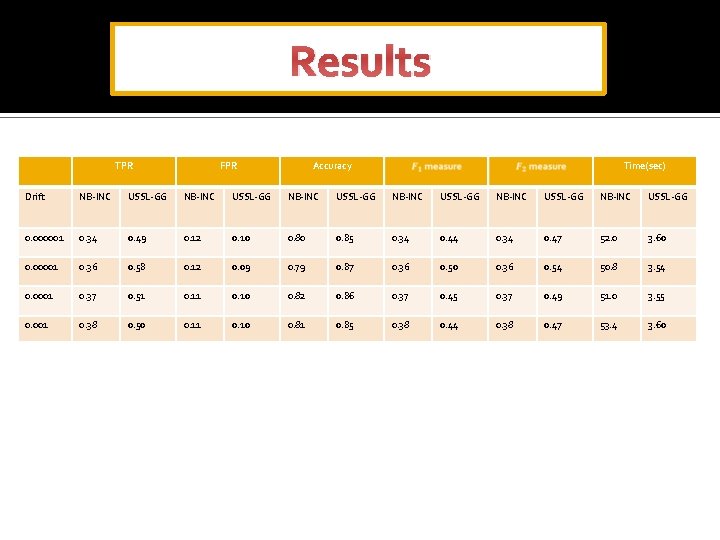
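
The definitions on this slide survive only as an image. The sketch below uses the standard confusion-matrix definitions of the metrics reported in the results (TPR, FPR, accuracy); it assumes the slide follows the same conventions, and the function name `detection_metrics` is hypothetical.

```python
def detection_metrics(tp, fp, tn, fn):
    """Compute TPR, FPR and accuracy from confusion-matrix counts.

    tp: anomalies correctly flagged      fp: normal items wrongly flagged
    tn: normal items correctly passed    fn: anomalies missed
    """
    tpr = tp / (tp + fn) if (tp + fn) else 0.0   # true positive rate
    fpr = fp / (fp + tn) if (fp + tn) else 0.0   # false positive rate
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return {"TPR": tpr, "FPR": fpr, "Accuracy": accuracy}


# Usage with made-up counts: 10 anomalies, 90 normal items
metrics = detection_metrics(tp=5, fp=10, tn=80, fn=5)
```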

Results
Metrics: TPR, FPR, Accuracy, Time (sec); algorithms: NB-INC, USSL-GG
- Drift 0.000001: 0.34 0.49 0.12 0.10 0.85 0.34 0.44 0.34 0.47 52.0 3.60
- Drift 0.00001: 0.36 0.58 0.12 0.09 0.79 0.87 0.36 0.50 0.36 0.54 50.8 3.54
- Drift 0.0001: 0.37 0.51 0.10 0.82 0.86 0.37 0.45 0.37 0.49 51.0 3.55
- Drift 0.001: 0.38 0.50 0.11 0.10 0.81 0.85 0.38 0.44 0.38 0.47 53.4 3.60

TPR – Ensemble Size 3

FPR – Ensemble Size 3

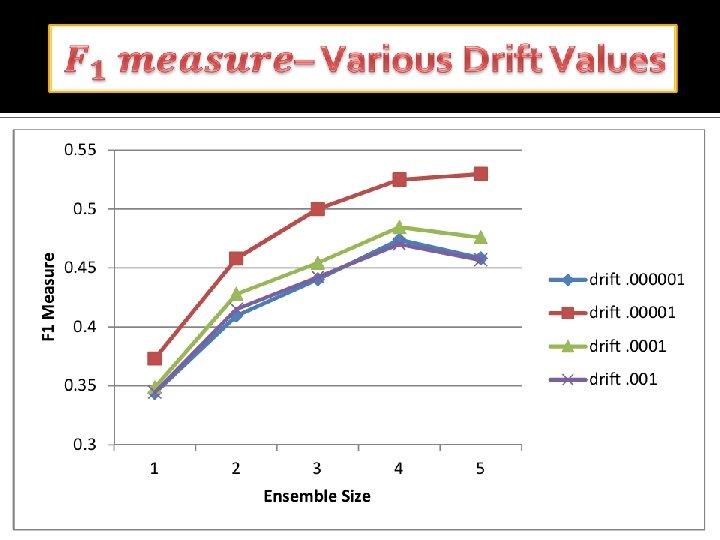

TPR – Various Drift Values

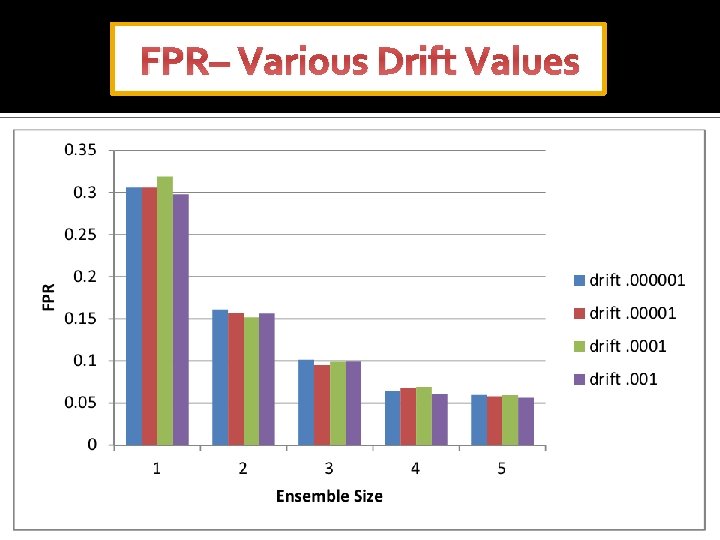

FPR – Various Drift Values

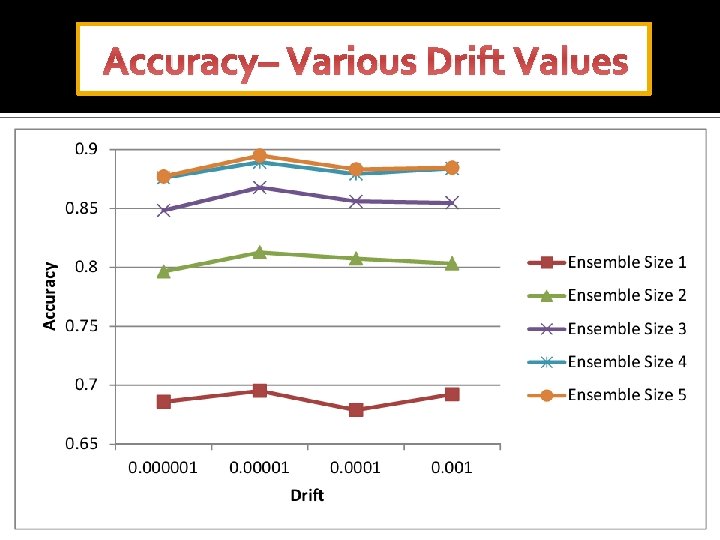

Accuracy – Various Drift Values

Accomplishments so far
- Ensemble-based stream mining effectively detects insider threats while coping with evolving concept drift
- Our approach adopts advantages from stream mining, compression and ensembles:
  - Compression gives unsupervised learning
  - Stream mining offers adaptive learning
  - Ensembles increase accuracy under concept drift

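Comparison of Related Approaches
(S = supervised, U = unsupervised; Y = yes, N = no)

| Approach | Un/Supervised | Drift | Insider Threat | Sequence |
|---|---|---|---|---|
| Ju | S | N | Y | Y |
| Maxion | S | N | Y | N |
| Liu | U | N | Y | Y |
| Wang | S | N | Y | N |
| Szymanski | S | N | Y | Y |
| Masud | S | Y | N | N |
| Parveen | U | Y | Y | N |
| USSL-GG | U | Y | Y | Y |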

What remains to be accomplished?
- Update existing models based on user feedback
- Update and refine models on ground truth when it is available

THANKS
