Unrolling: A principled method to develop deep neural networks

Unrolling: A principled method to develop deep neural networks

Chris Metzler, Ali Mousavi, Richard Baraniuk

Rice University

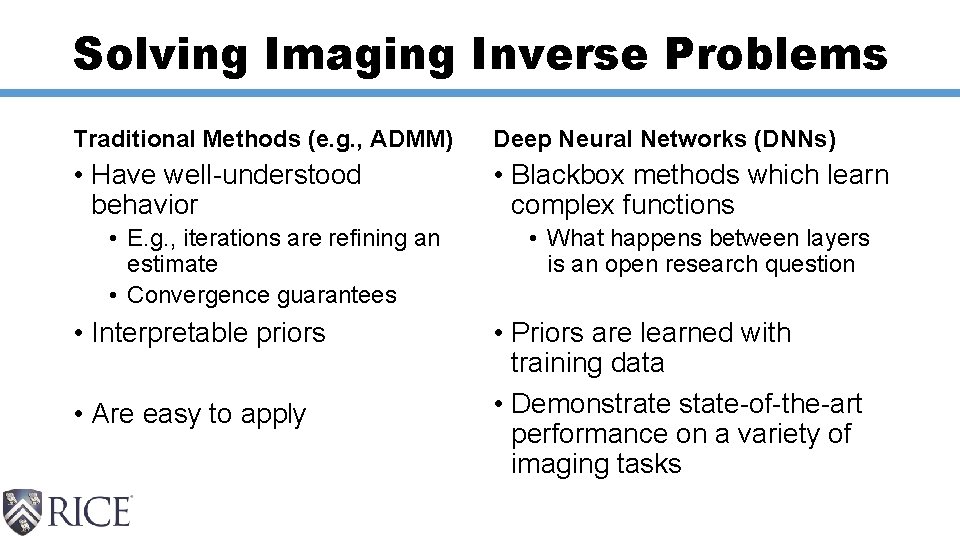

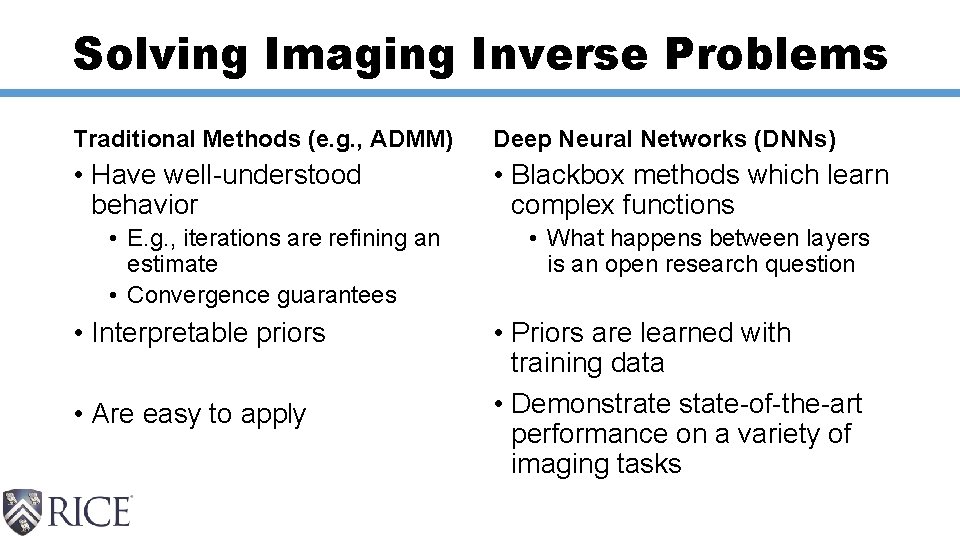

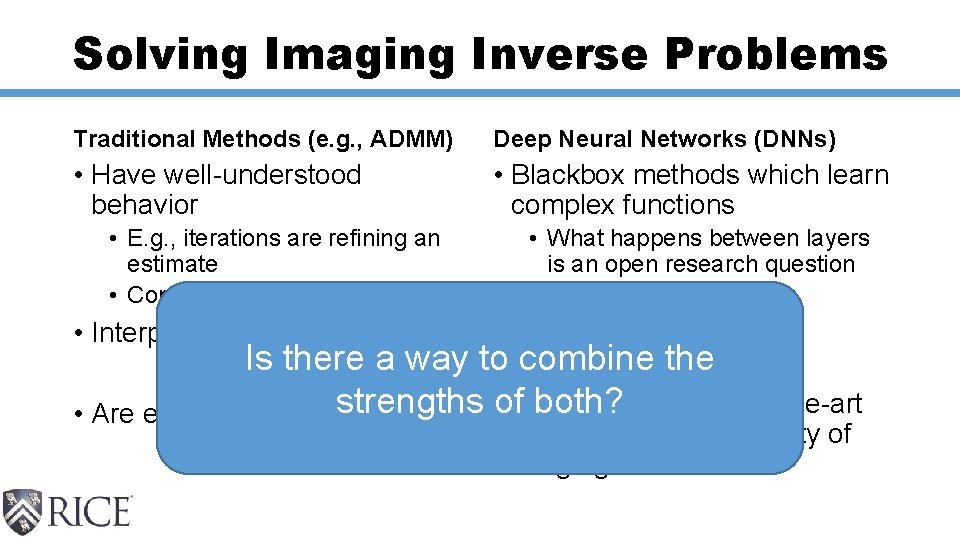

Solving Imaging Inverse Problems

Traditional Methods (e.g., ADMM)

- Have well-understood behavior
  - E.g., iterations are refining an estimate
- Convergence guarantees
- Interpretable priors
- Are easy to apply

Deep Neural Networks (DNNs)

- Blackbox methods which learn complex functions
- What happens between layers is an open research question
- Priors are learned with training data
- Demonstrate state-of-the-art performance on a variety of imaging tasks

Is there a way to combine the strengths of both?

This talk

- Describe unrolling, a process to turn an iterative algorithm into a deep neural network
  - Can use training data
  - Is interpretable
  - Maintains convergence and performance guarantees
- Apply unrolling to the Denoising-based AMP algorithm to solve the compressive imaging problem

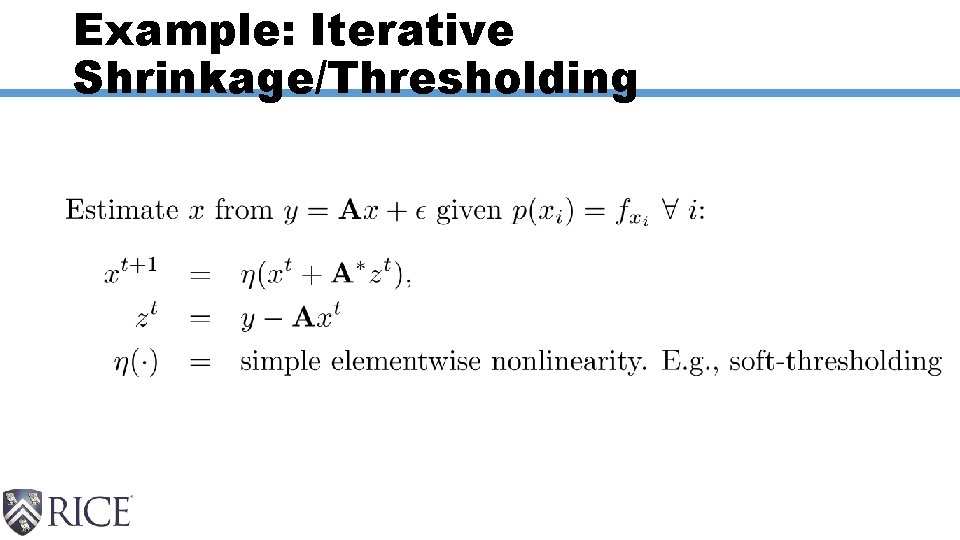

Unrolling an algorithm

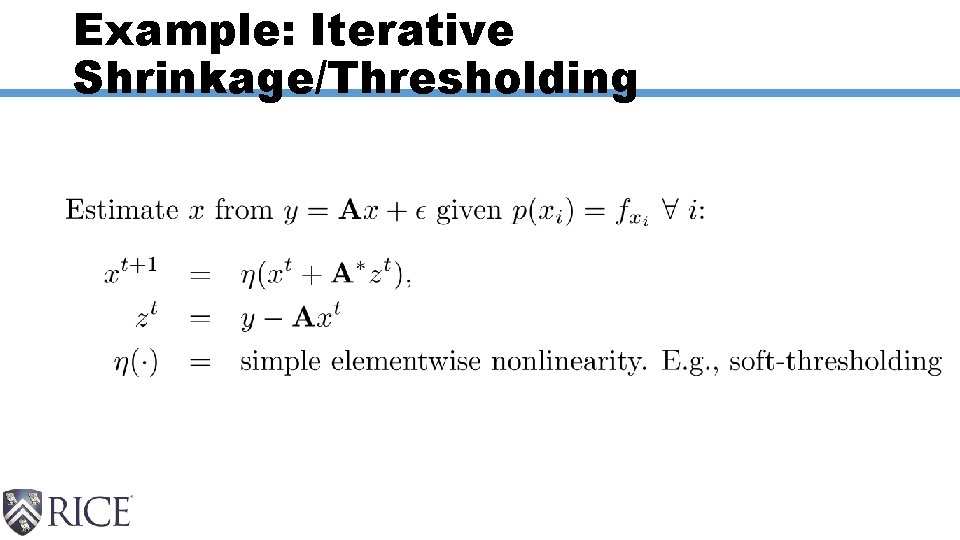

Example: Iterative Shrinkage/Thresholding
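The slide content for this example survives only as images, so here is a minimal sketch of the standard iterative shrinkage/thresholding algorithm (ISTA) for the problem min 0.5·||y − Ax||² + λ||x||₁. The function names, the step size 1/L, and the toy problem sizes are assumptions chosen for illustration, not values taken from the talk.

```python
import numpy as np

def soft_threshold(v, tau):
    """Elementwise shrinkage: sign(v) * max(|v| - tau, 0)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def ista(y, A, lam, num_iters=200):
    """Iterative shrinkage/thresholding for 0.5*||y - A x||^2 + lam*||x||_1."""
    # Step size 1/L, where L is the squared largest singular value of A.
    step = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    for _ in range(num_iters):
        residual = y - A @ x
        # Gradient step on the data term, then shrinkage for the l1 term.
        x = soft_threshold(x + step * (A.T @ residual), step * lam)
    return x

# Toy sparse recovery problem (sizes are illustrative assumptions).
rng = np.random.default_rng(0)
m, n, k = 50, 100, 5
lam = 0.01
A = rng.standard_normal((m, n)) / np.sqrt(m)
x_true = np.zeros(n)
x_true[rng.choice(n, k, replace=False)] = rng.standard_normal(k)
y = A @ x_true
x_hat = ista(y, A, lam, num_iters=500)
```

Each pass through the loop has the same structure: a linear operation followed by an elementwise nonlinearity. That repeated structure is what makes the algorithm a candidate for unrolling.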

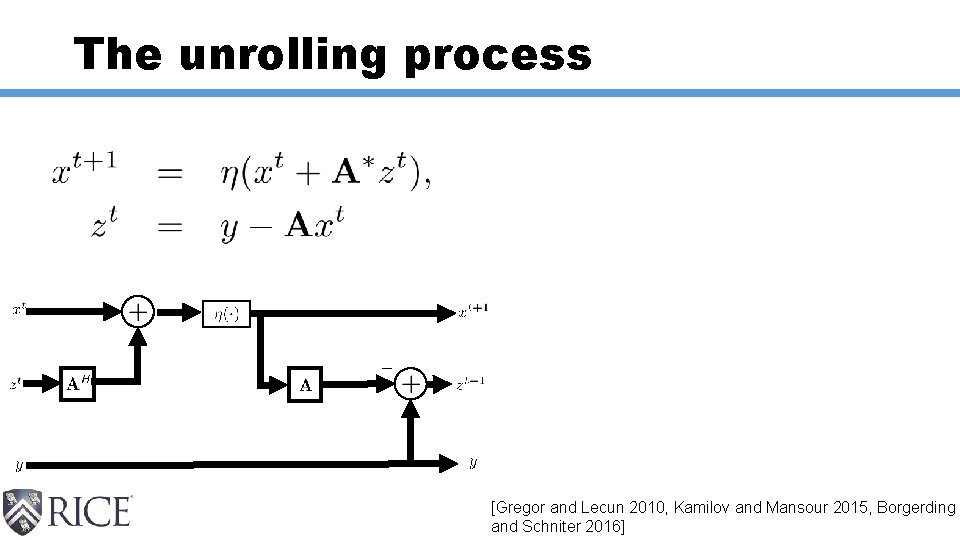

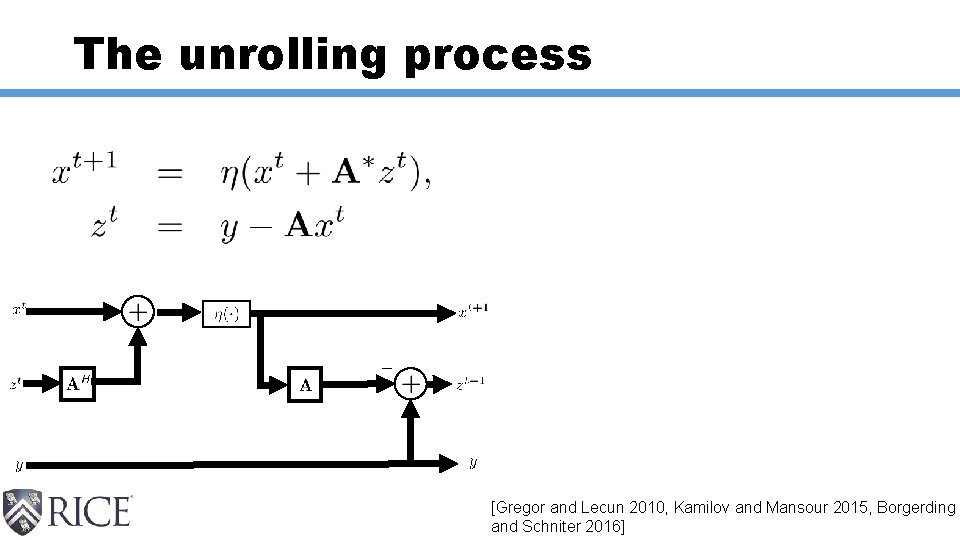

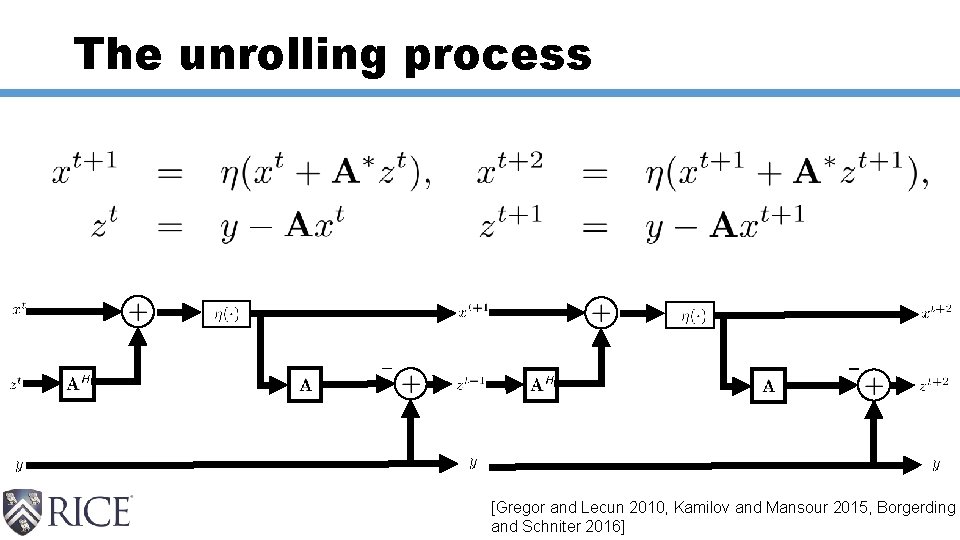

The unrolling process [Gregor and LeCun 2010, Kamilov and Mansour 2015, Borgerding and Schniter 2016]

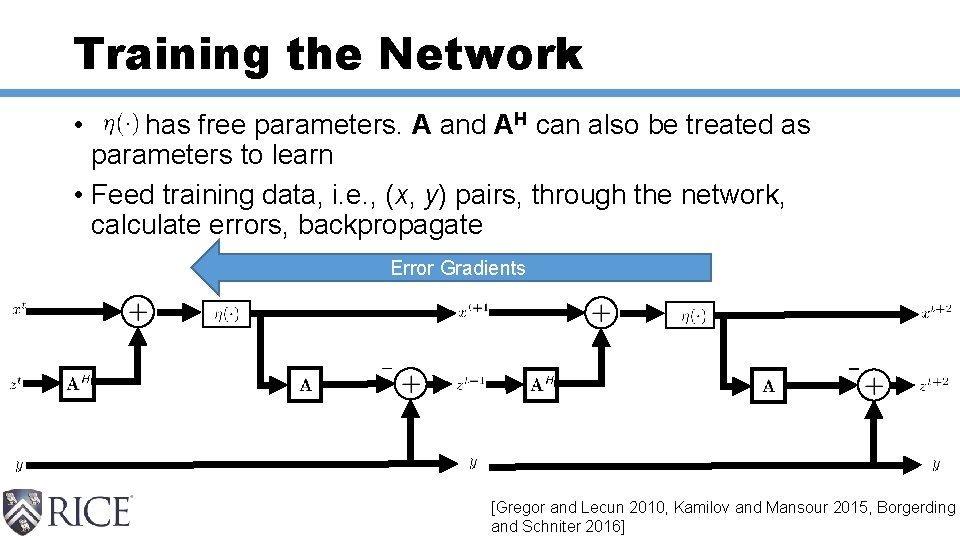

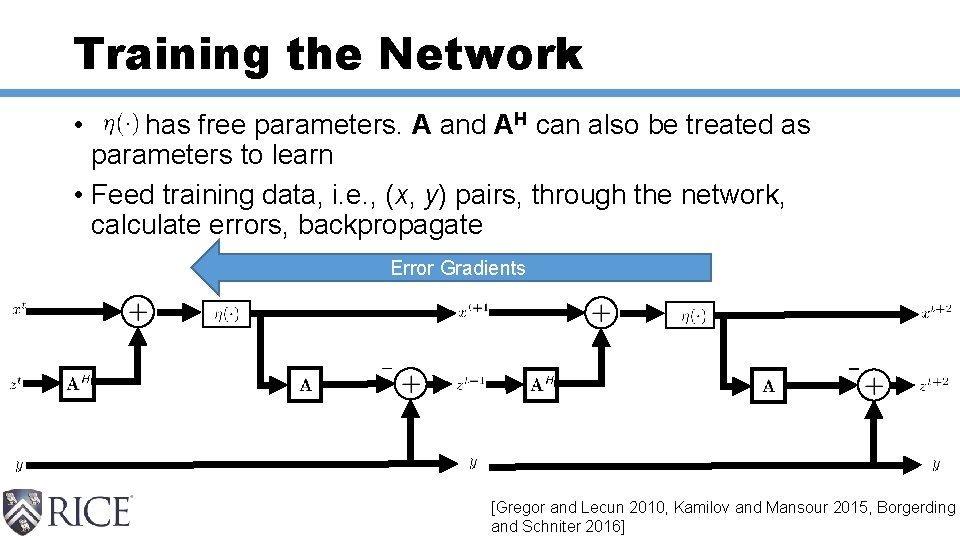

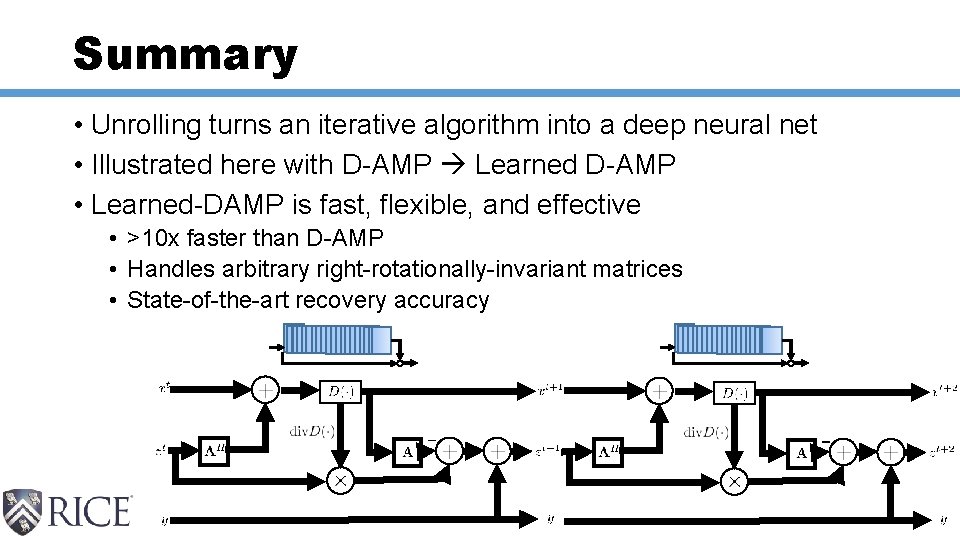
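As a rough sketch of the unrolling process named above, the snippet below fixes the number of ISTA iterations and gives every iteration ("layer") its own copy of the parameters. The parameter layout (a matrix `B` and a threshold `tau` per layer) is an assumption for illustration and is not the exact parameterization of any of the cited papers.

```python
import numpy as np

def soft_threshold(v, tau):
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def init_unrolled_ista(A, lam, num_layers):
    """One parameter dict per layer, initialised so the network equals ISTA."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2
    return [{"B": step * A.T, "tau": step * lam} for _ in range(num_layers)]

def unrolled_ista_forward(y, A, layers):
    """Forward pass: each layer is one ISTA iteration with its own parameters."""
    x = np.zeros(A.shape[1])
    for layer in layers:
        x = soft_threshold(x + layer["B"] @ (y - A @ x), layer["tau"])
    return x

rng = np.random.default_rng(0)
m, n, lam = 20, 40, 0.05
A = rng.standard_normal((m, n)) / np.sqrt(m)
y = rng.standard_normal(m)

layers = init_unrolled_ista(A, lam, num_layers=10)
x_net = unrolled_ista_forward(y, A, layers)

# Reference: run the plain ISTA iteration for the same number of steps.
step = 1.0 / np.linalg.norm(A, 2) ** 2
x_ref = np.zeros(n)
for _ in range(10):
    x_ref = soft_threshold(x_ref + step * A.T @ (y - A @ x_ref), step * lam)
```

Before any training, the network output matches 10 iterations of ISTA. Training then adjusts each layer's `B` and `tau` independently.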

Training the Network

- The unrolled network has free parameters. A and A^H can also be treated as parameters to learn
- Feed training data, i.e., (x, y) pairs, through the network, calculate errors, and backpropagate the error gradients

[Gregor and LeCun 2010, Kamilov and Mansour 2015, Borgerding and Schniter 2016]
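One common way to write the training step down, assuming a squared-error loss (the slide says only "calculate errors", so the loss is an assumption), is the following. Here f_θ denotes the unrolled network with parameters θ, (x_i, y_i) are the N training pairs, and η is a learning rate; all of these symbols are introduced for illustration.

```latex
\hat{\theta} = \arg\min_{\theta} \; \frac{1}{N} \sum_{i=1}^{N} \left\| f_{\theta}(y_i) - x_i \right\|_2^2,
\qquad
\theta \leftarrow \theta - \eta \, \nabla_{\theta} \, \frac{1}{N} \sum_{i=1}^{N} \left\| f_{\theta}(y_i) - x_i \right\|_2^2
```

Backpropagation computes the gradient on the right by applying the chain rule through the layers of the unrolled network.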

![Performance [Borgerding and Schniter 2016]](https://slidetodoc.com/presentation_image_h/191d68fcb25f85254ec59032d27bbadf/image-10.jpg)

Performance [Borgerding and Schniter 2016]

Unrolling Denoising-based Approximate Message Passing

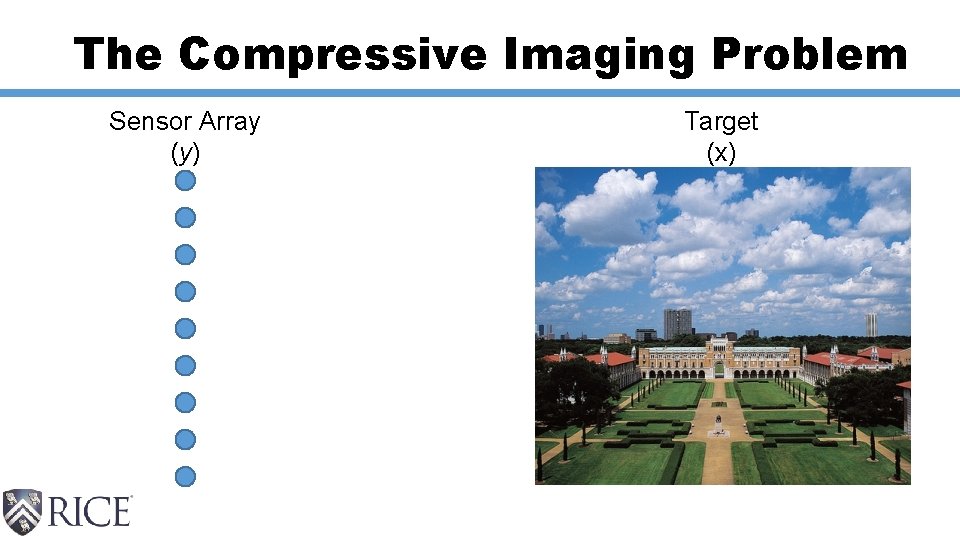

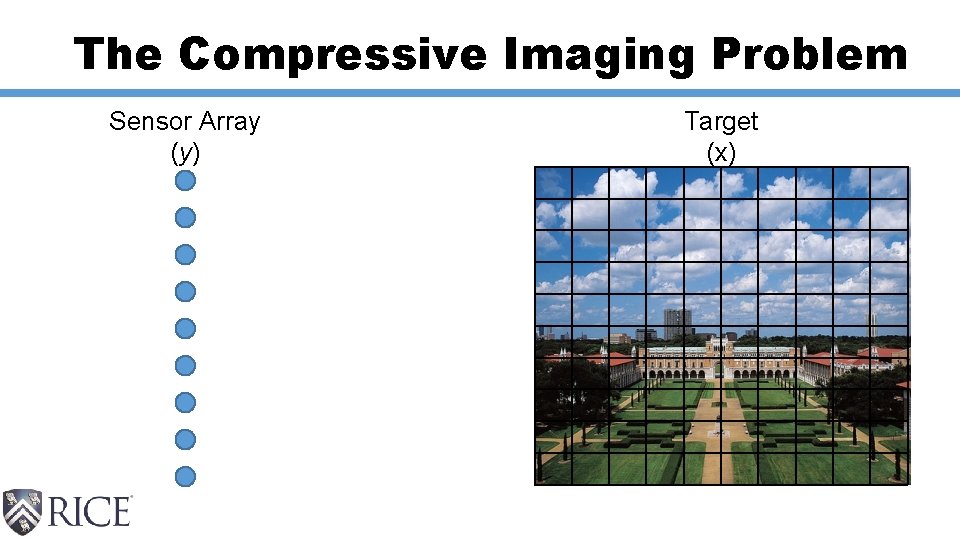

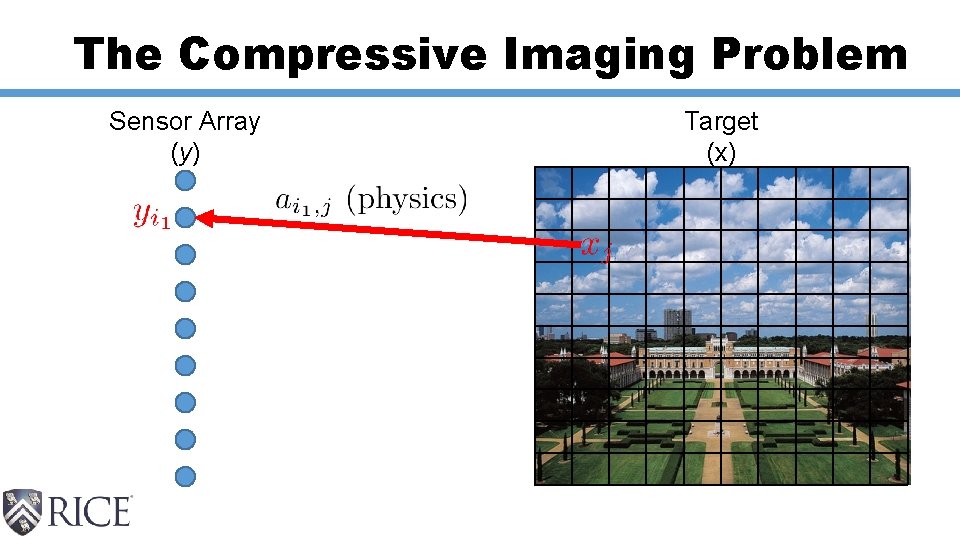

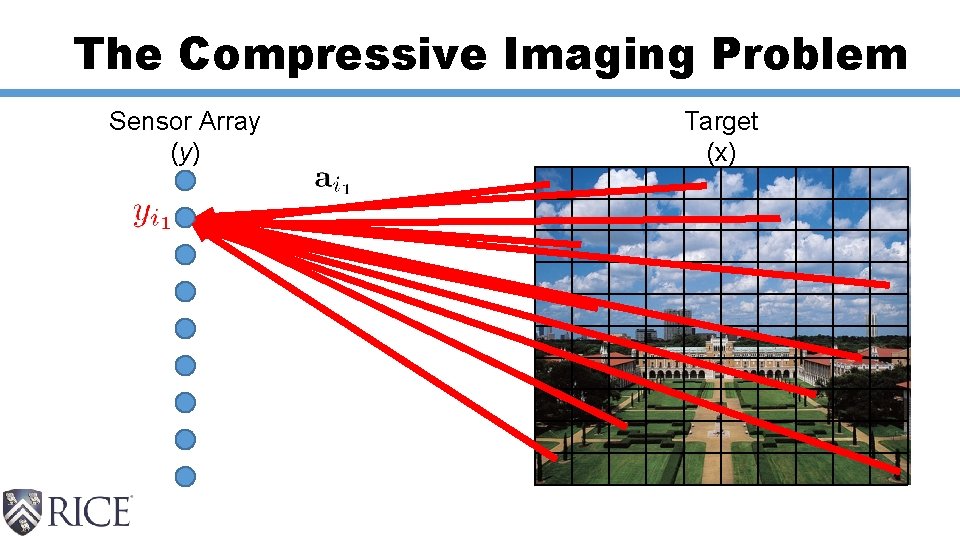

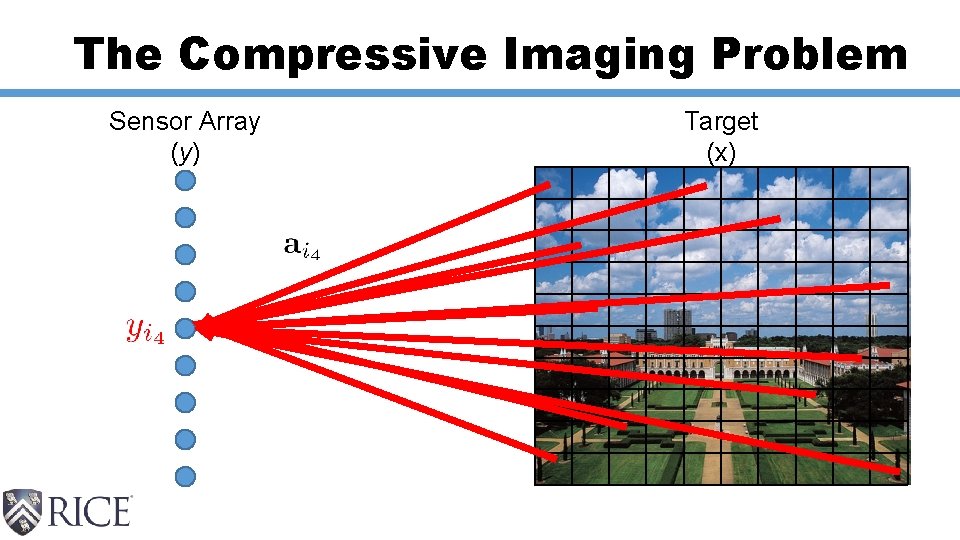

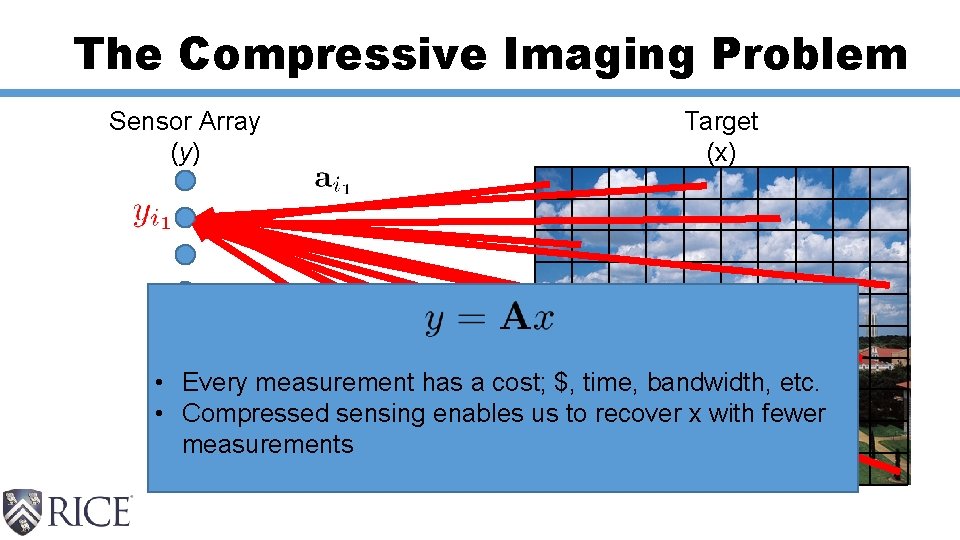

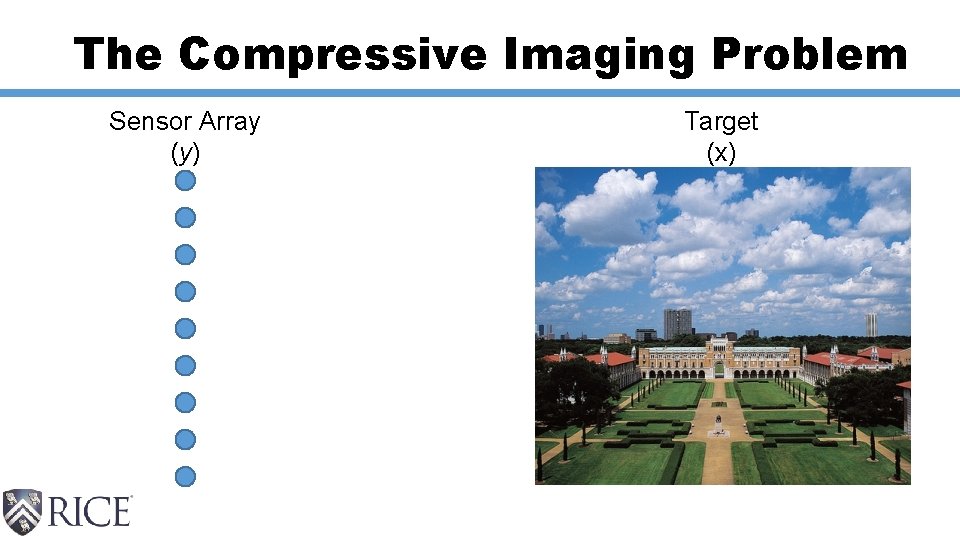

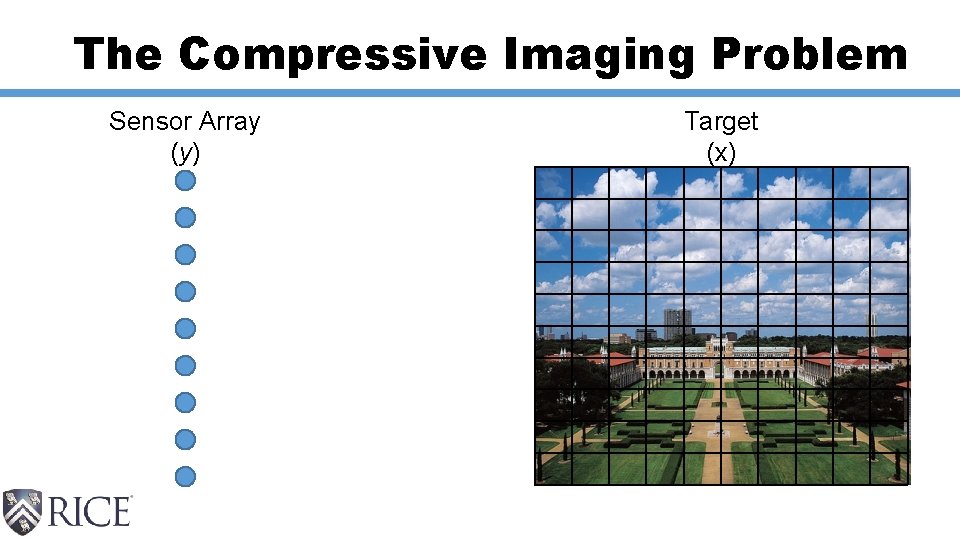

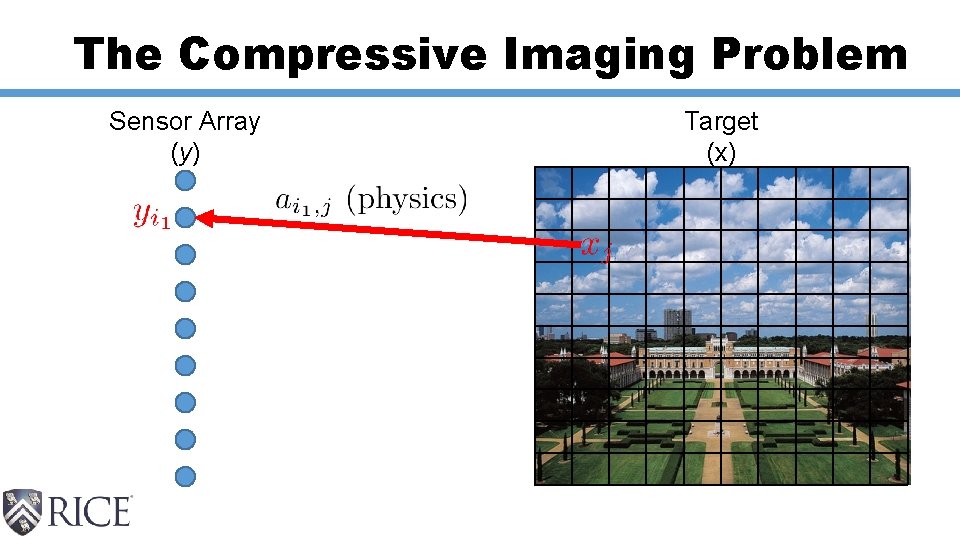

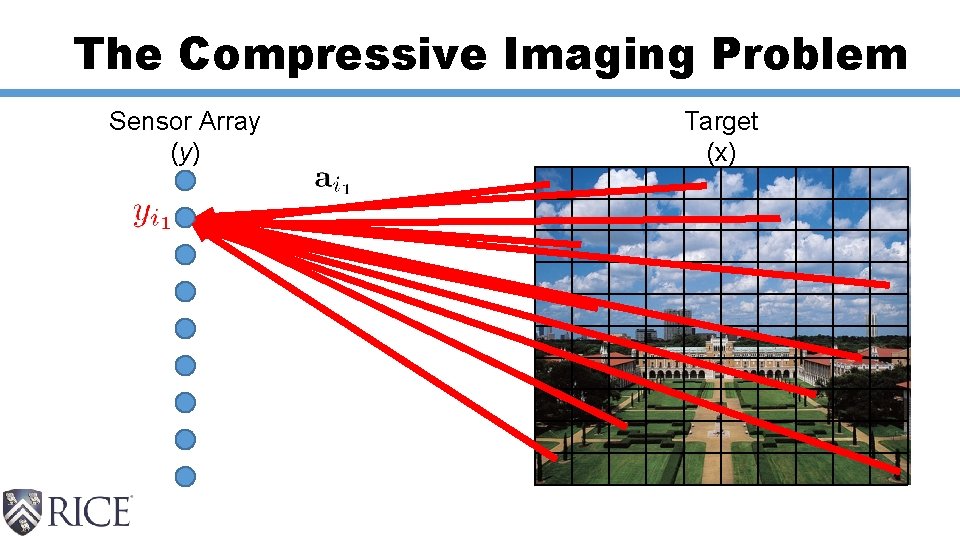

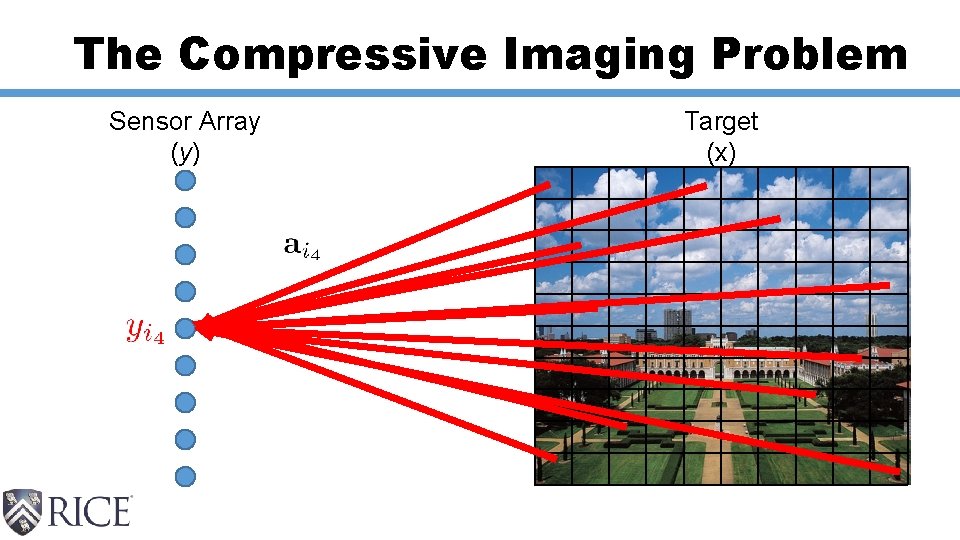

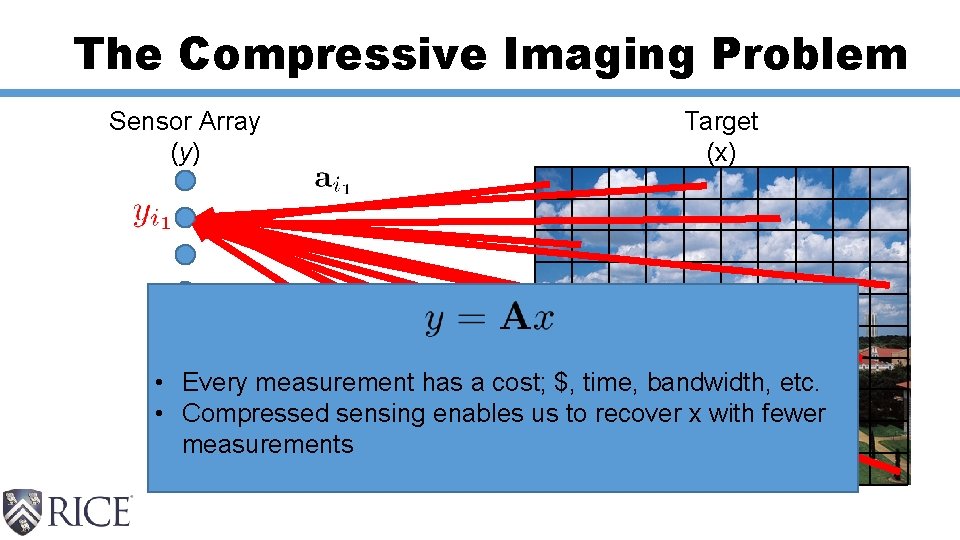

The Compressive Imaging Problem

Figure labels: Sensor Array (y), Target (x)

- Every measurement has a cost: $, time, bandwidth, etc.
- Compressed sensing enables us to recover x with fewer measurements
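To make the measurement model concrete, here is a small sketch of taking compressive measurements y = Ax + noise with an i.i.d. Gaussian matrix. The function name, the 32 × 32 image size, the 10% sampling rate, and the noise level are all illustrative assumptions.

```python
import numpy as np

def compressive_measurements(x, sampling_rate, noise_std, rng):
    """Return (y, A) with y = A x + noise and m = sampling_rate * n rows."""
    n = x.size
    m = int(round(sampling_rate * n))
    # i.i.d. Gaussian measurement matrix with roughly unit-norm columns.
    A = rng.standard_normal((m, n)) / np.sqrt(m)
    y = A @ x + noise_std * rng.standard_normal(m)
    return y, A

rng = np.random.default_rng(0)
x = rng.random(32 * 32)  # stand-in for a vectorized 32 x 32 target image
y, A = compressive_measurements(x, sampling_rate=0.10, noise_std=0.01, rng=rng)
print(y.size, "measurements for", x.size, "unknowns")
```

With far fewer measurements than unknowns the linear system is underdetermined, so recovering x requires prior knowledge about what natural images look like.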

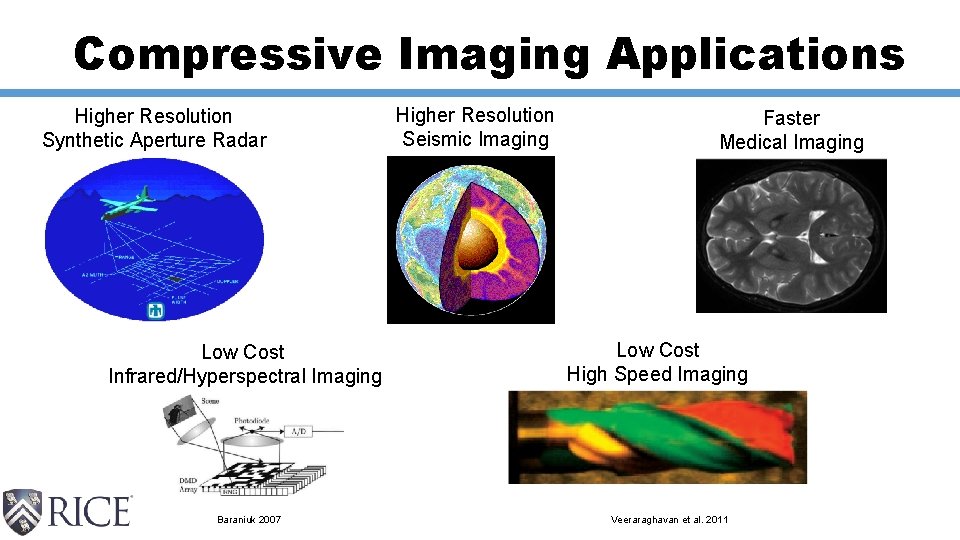

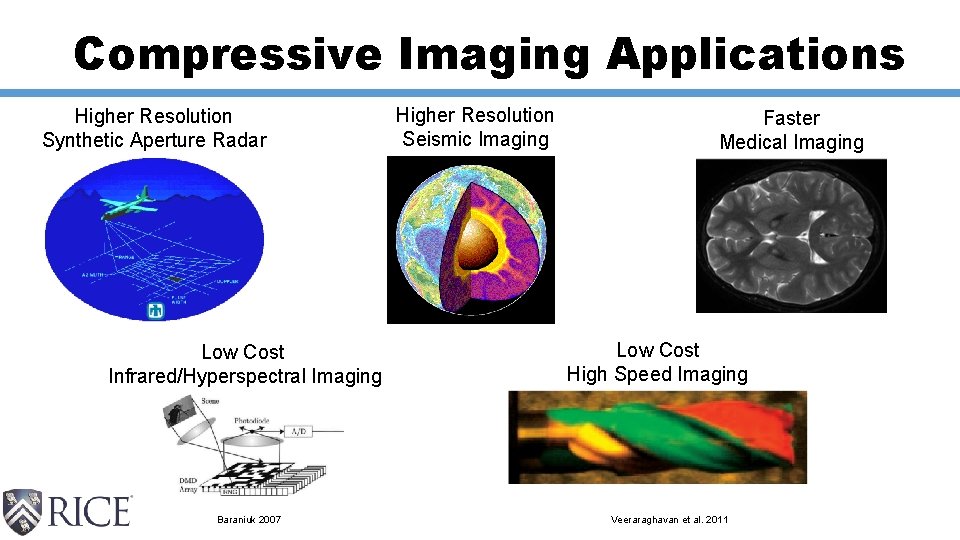

Compressive Imaging Applications

- Higher Resolution Synthetic Aperture Radar
- Low Cost Infrared/Hyperspectral Imaging [Baraniuk 2007]
- Higher Resolution Seismic Imaging
- Faster Medical Imaging
- Low Cost High Speed Imaging [Veeraraghavan et al. 2011]

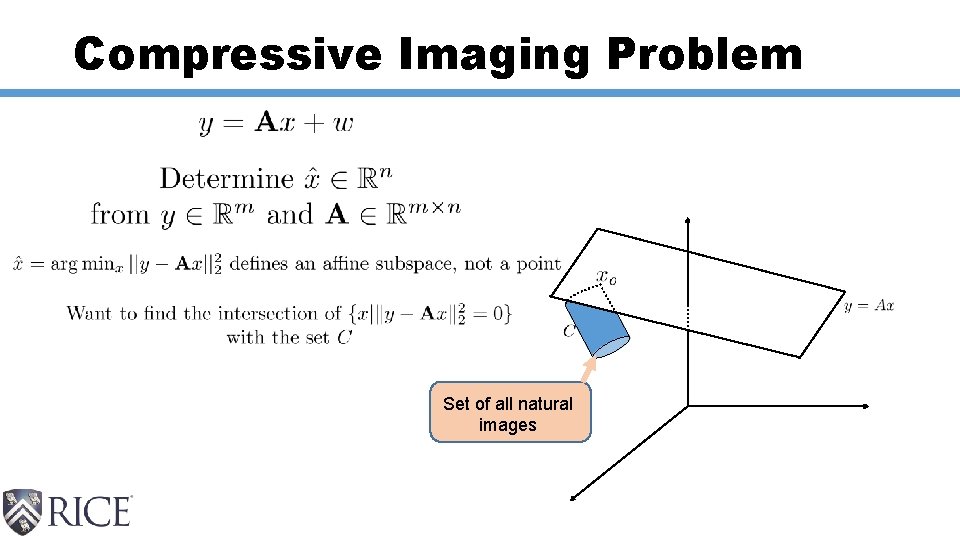

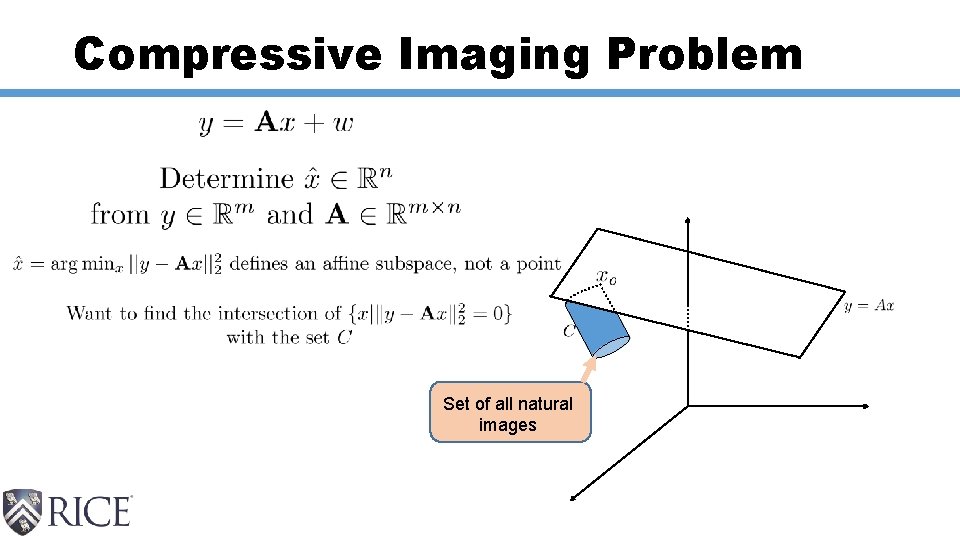

Compressive Imaging Problem

Figure label: Set of all natural images

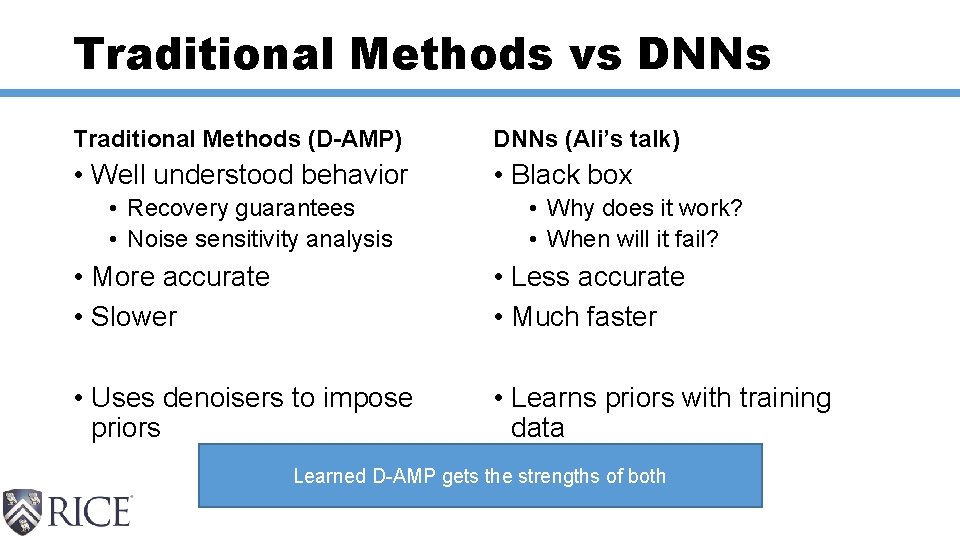

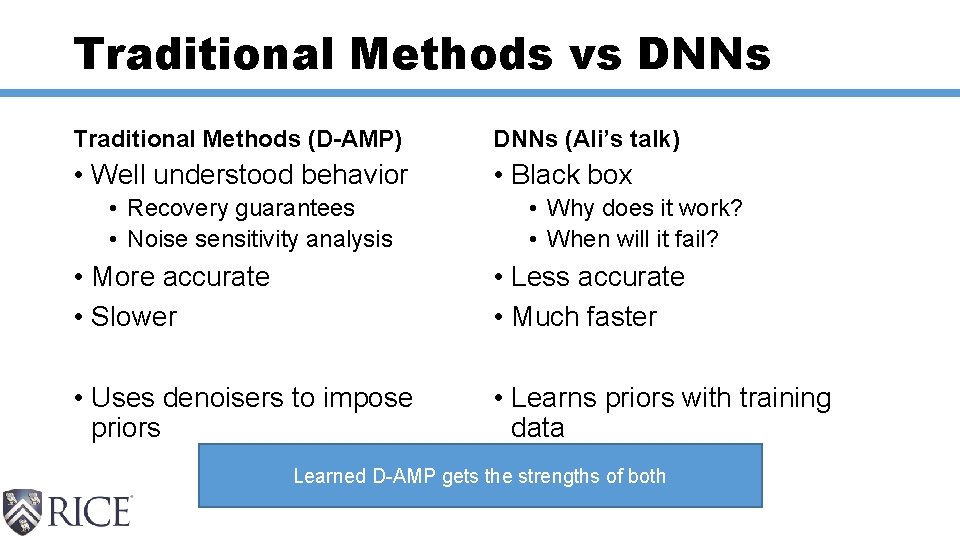

Traditional Methods vs DNNs

Traditional Methods (D-AMP)

- Well understood behavior
  - Recovery guarantees
  - Noise sensitivity analysis
- More accurate
- Slower
- Uses denoisers to impose priors

DNNs (Ali’s talk)

- Black box
  - Why does it work?
  - When will it fail?
- Less accurate
- Much faster
- Learns priors with training data

Learned D-AMP gets the strengths of both

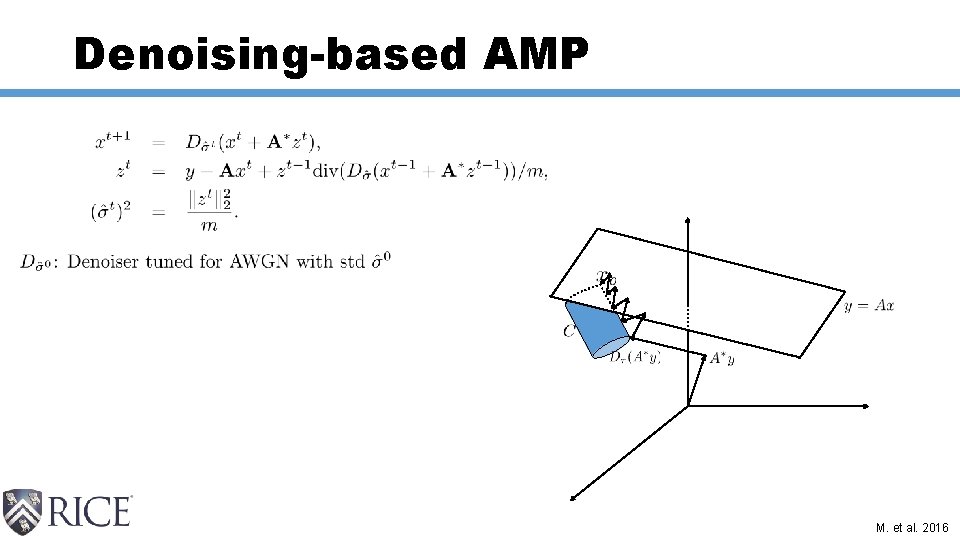

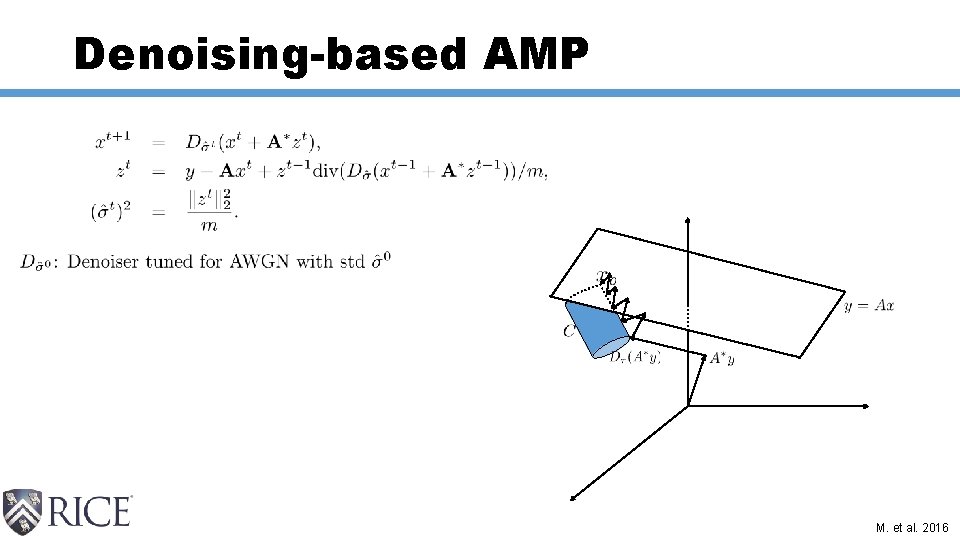

Denoising-based AMP [Metzler et al. 2016]
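The D-AMP slides survive only as images, so the following is a hedged sketch of the iteration as it is usually stated: denoise the pseudo-data x + Aᵀz, then update the residual z with an Onsager correction that depends on the divergence of the denoiser. The soft-thresholding denoiser is a simple stand-in (the talk uses image denoisers such as BM3D), and the Monte Carlo divergence estimate, function names, and problem sizes are assumptions for illustration.

```python
import numpy as np

def soft_threshold_denoiser(r, sigma):
    """Stand-in denoiser (assumption): soft thresholding at the noise level."""
    return np.sign(r) * np.maximum(np.abs(r) - sigma, 0.0)

def mc_divergence(denoiser, r, sigma, rng, eps=1e-3):
    """Monte Carlo estimate of the divergence of the denoiser at r."""
    b = rng.standard_normal(r.shape)
    return b @ (denoiser(r + eps * b, sigma) - denoiser(r, sigma)) / eps

def damp(y, A, denoiser, num_iters=30, seed=0):
    rng = np.random.default_rng(seed)
    m, n = A.shape
    x = np.zeros(n)
    z = y.copy()
    for _ in range(num_iters):
        sigma = np.linalg.norm(z) / np.sqrt(m)   # estimated effective noise level
        r = x + A.T @ z                          # pseudo-data: signal plus noise
        x = denoiser(r, sigma)
        div = mc_divergence(denoiser, r, sigma, rng)
        z = y - A @ x + z * div / m              # residual with Onsager correction
    return x

# Toy noiseless sparse problem (sizes are illustrative assumptions).
rng = np.random.default_rng(1)
m, n, k = 250, 500, 10
A = rng.standard_normal((m, n)) / np.sqrt(m)
x_true = np.zeros(n)
x_true[rng.choice(n, k, replace=False)] = rng.standard_normal(k)
y = A @ x_true
x_hat = damp(y, A, soft_threshold_denoiser)
```

Because the denoiser is passed in as a function, swapping in a different one leaves the rest of the loop unchanged. That is the property exploited when a trainable denoiser is placed inside the unrolled algorithm.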

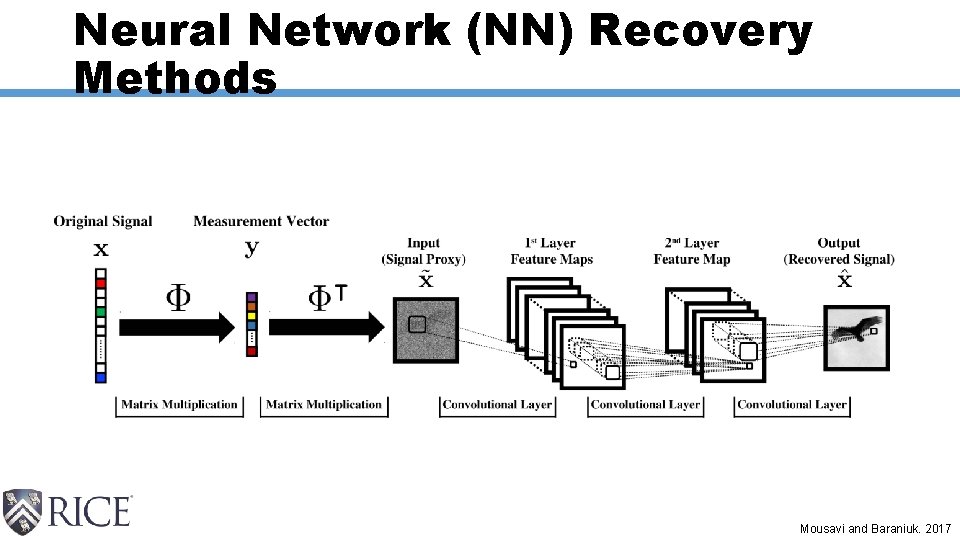

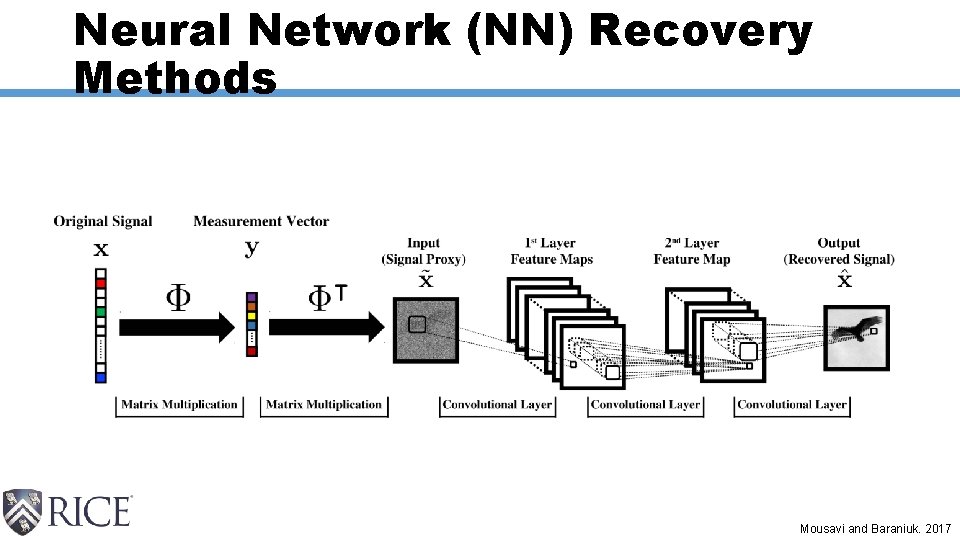

Neural Network (NN) Recovery Methods [Mousavi and Baraniuk 2017]

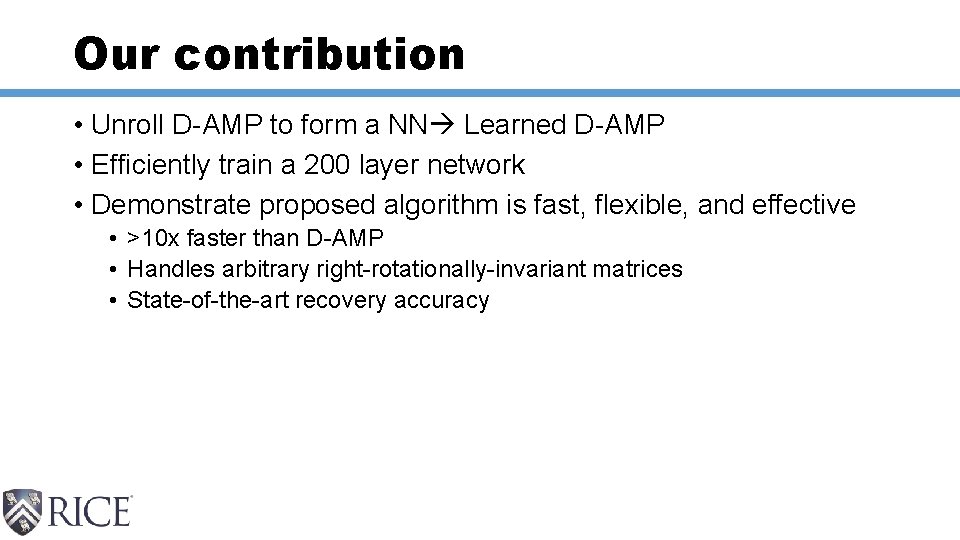

Our contribution

- Unroll D-AMP to form a NN: Learned D-AMP
- Efficiently train a 200-layer network
- Demonstrate that the proposed algorithm is fast, flexible, and effective
  - >10x faster than D-AMP
  - Handles arbitrary right-rotationally-invariant matrices
  - State-of-the-art recovery accuracy

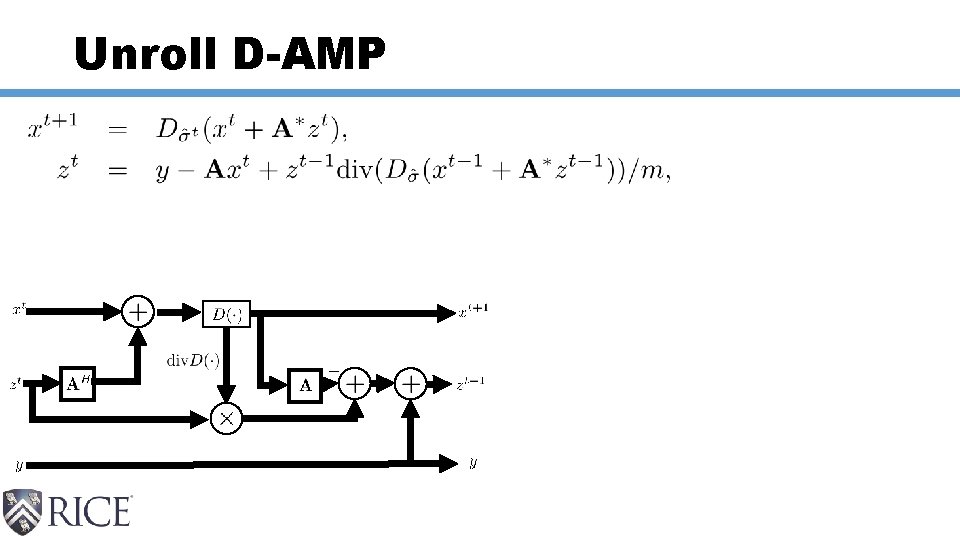

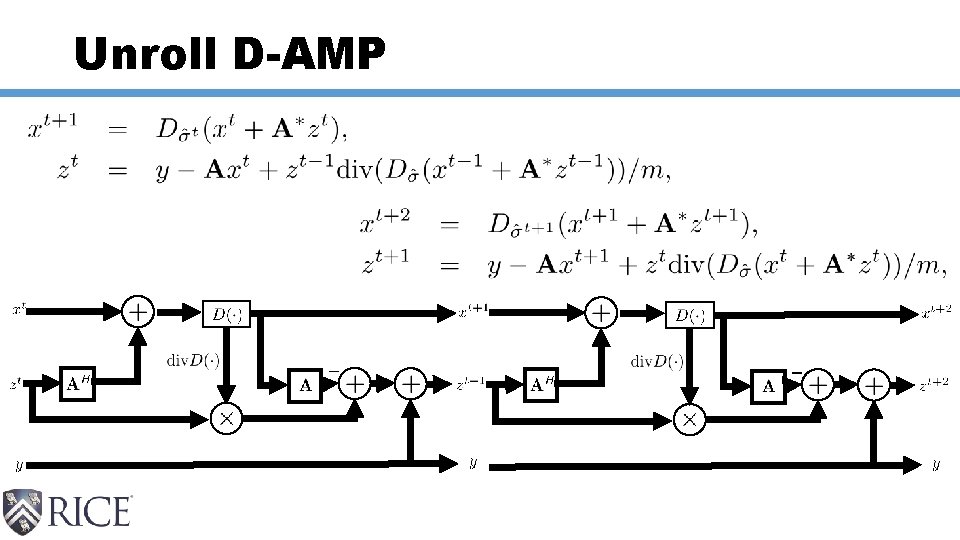

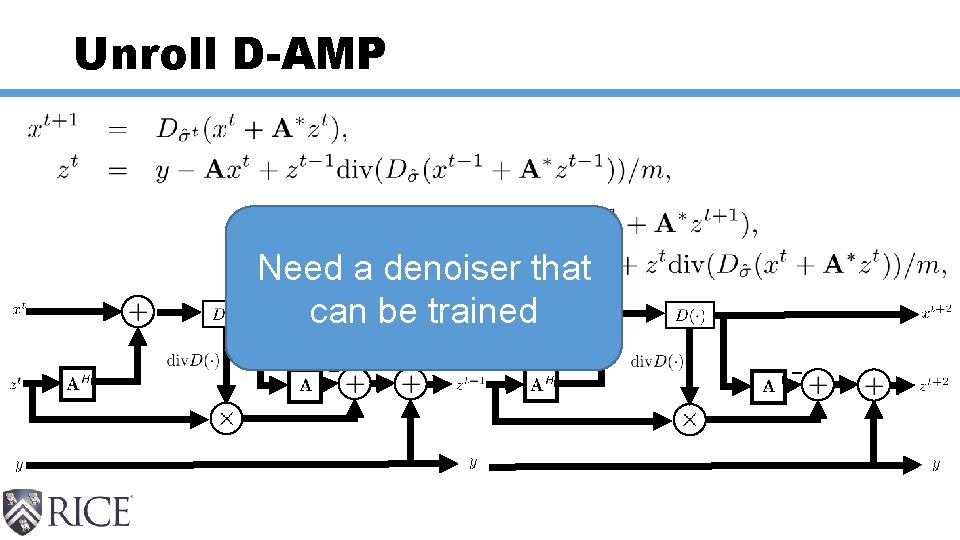

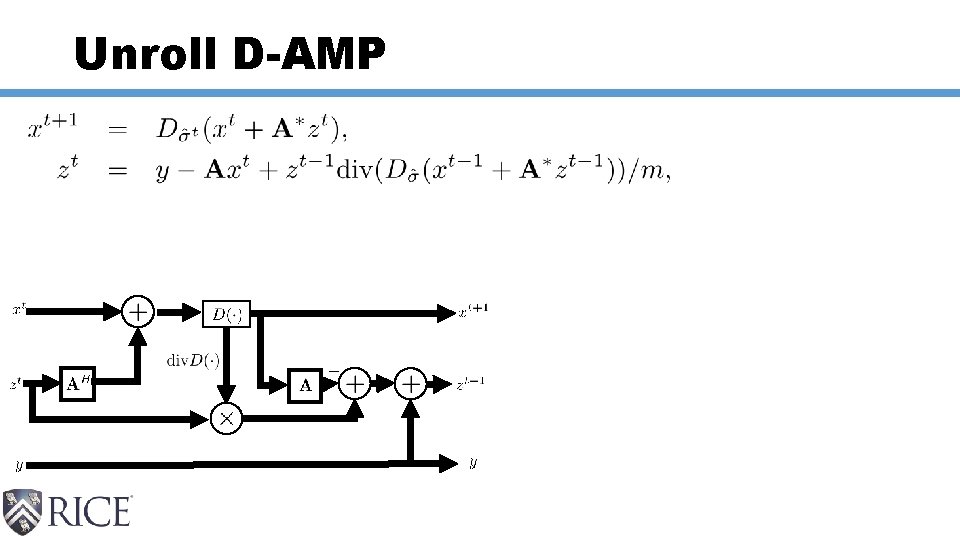

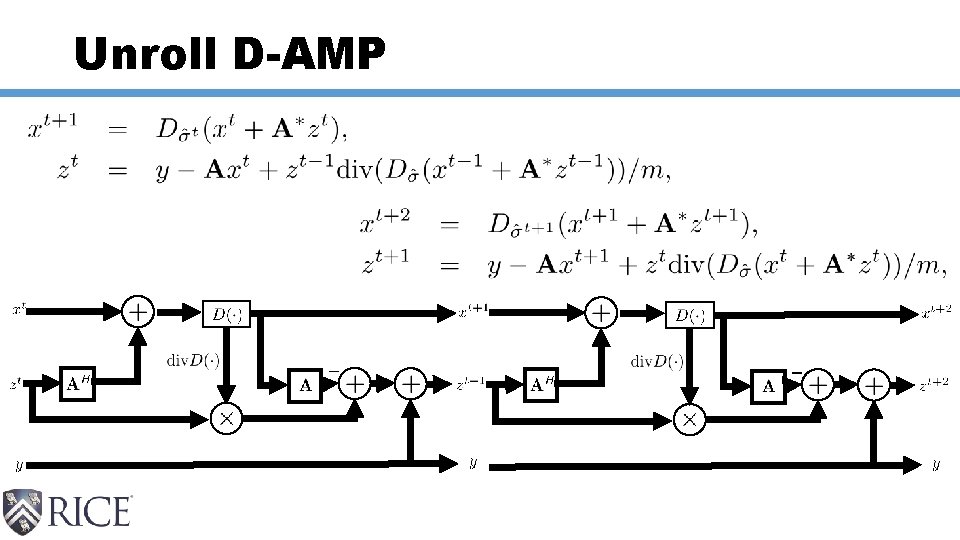

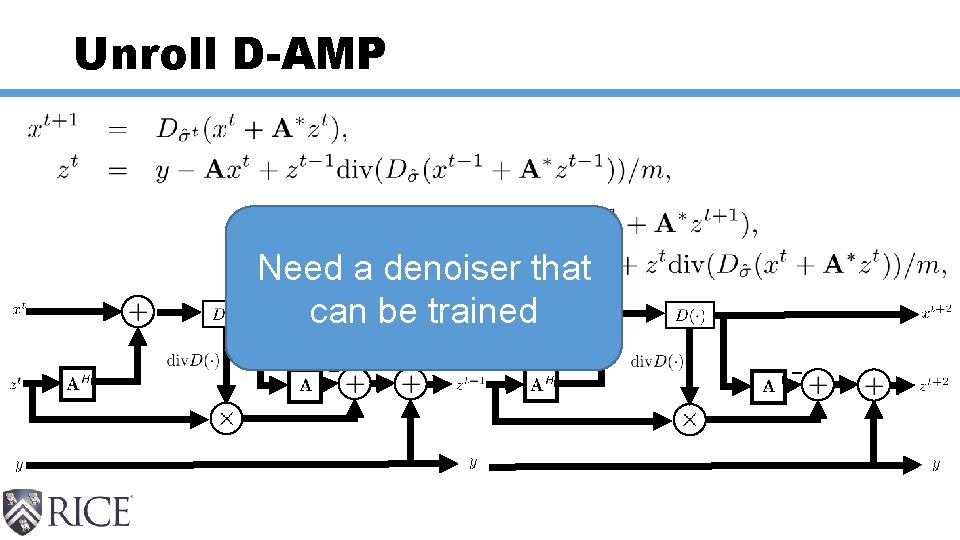

Unroll D-AMP

- Need a denoiser that can be trained

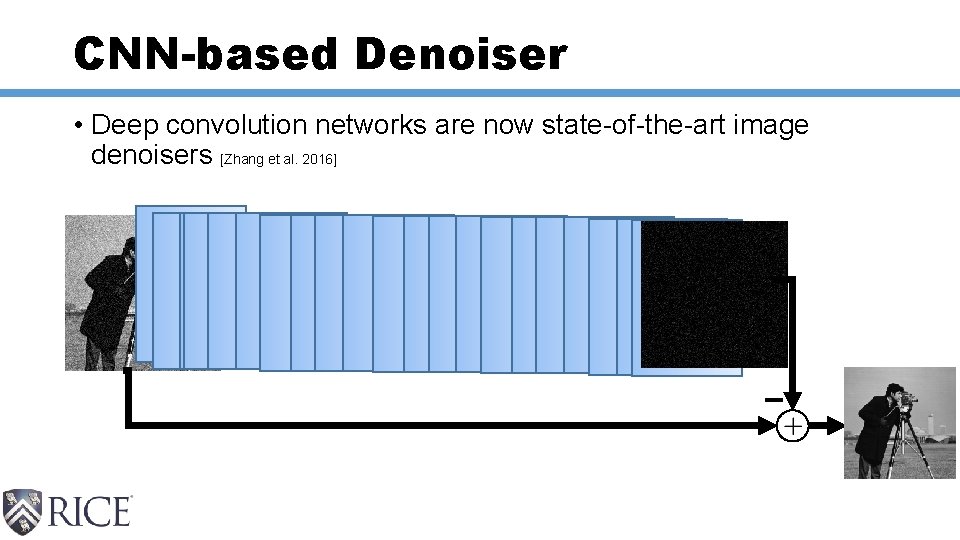

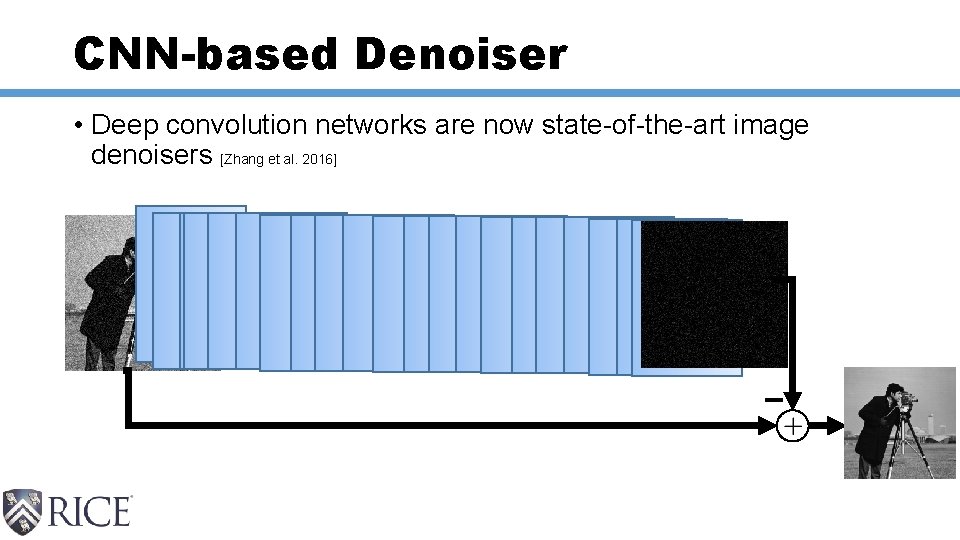

CNN-based Denoiser

- Deep convolutional networks are now state-of-the-art image denoisers [Zhang et al. 2016]

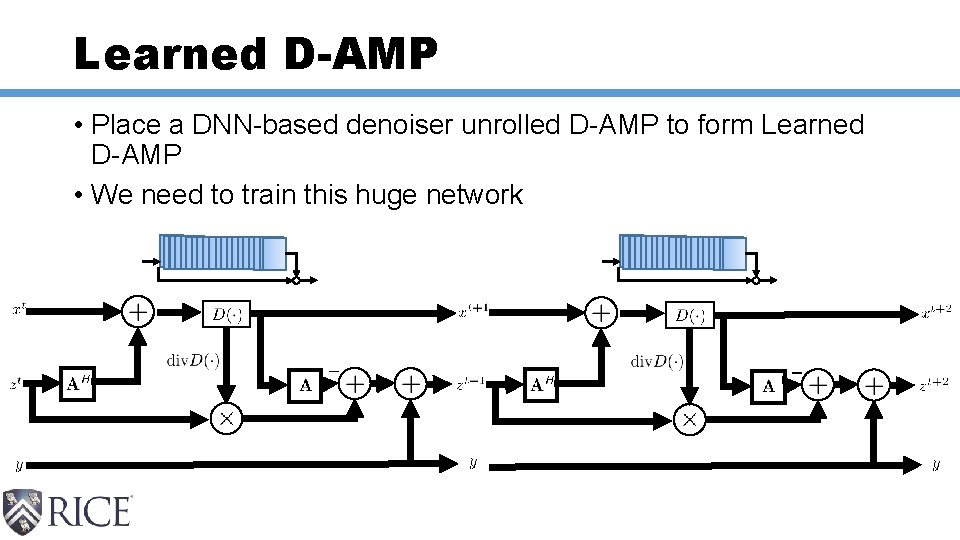

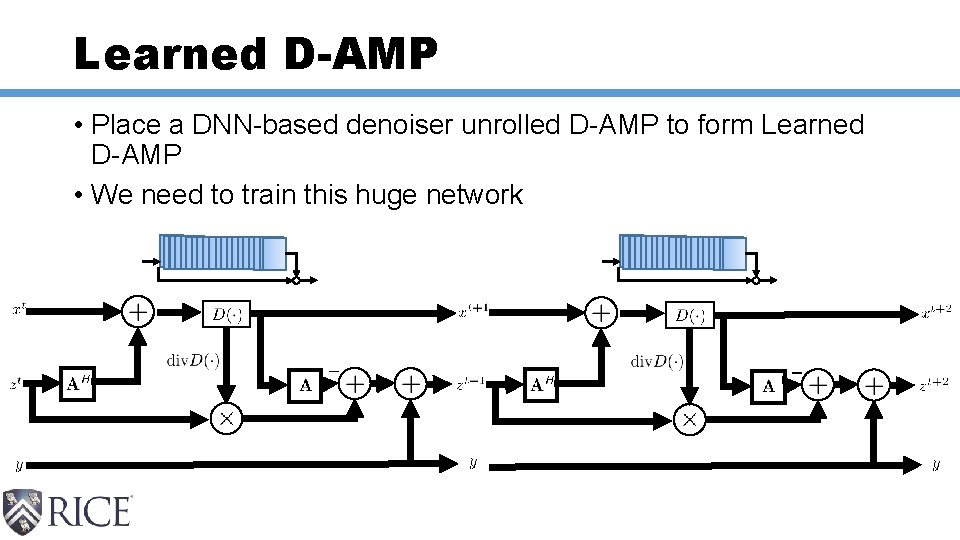

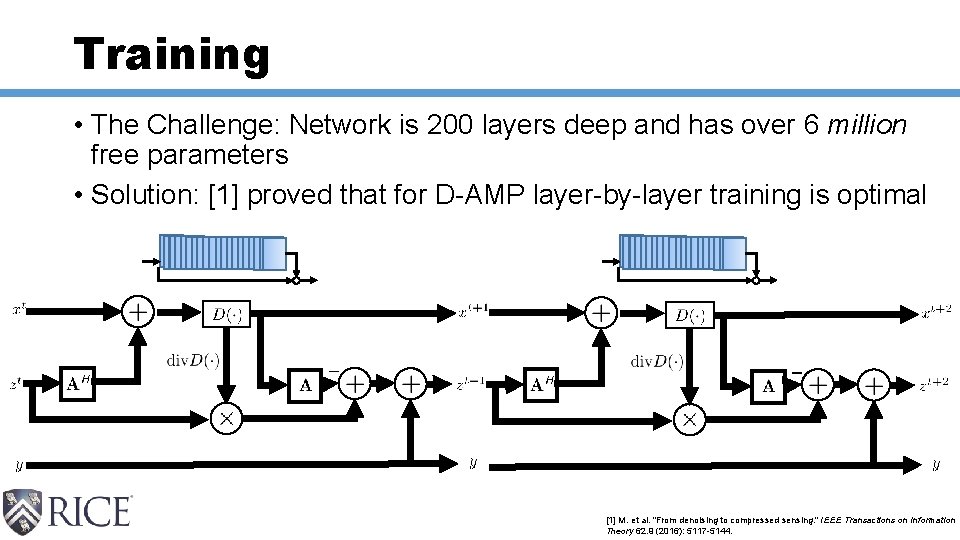

Learned D-AMP

- Place a DNN-based denoiser into unrolled D-AMP to form Learned D-AMP
- We need to train this huge network

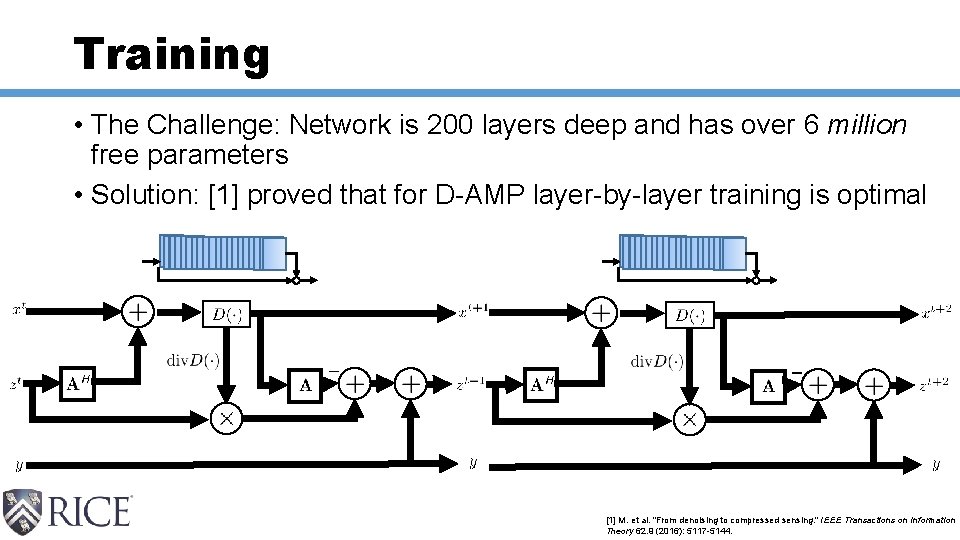

Training

- The challenge: the network is 200 layers deep and has over 6 million free parameters
- Solution: [1] proved that for D-AMP, layer-by-layer training is optimal

[1] Metzler et al. "From denoising to compressed sensing." IEEE Transactions on Information Theory 62.9 (2016): 5117-5144.

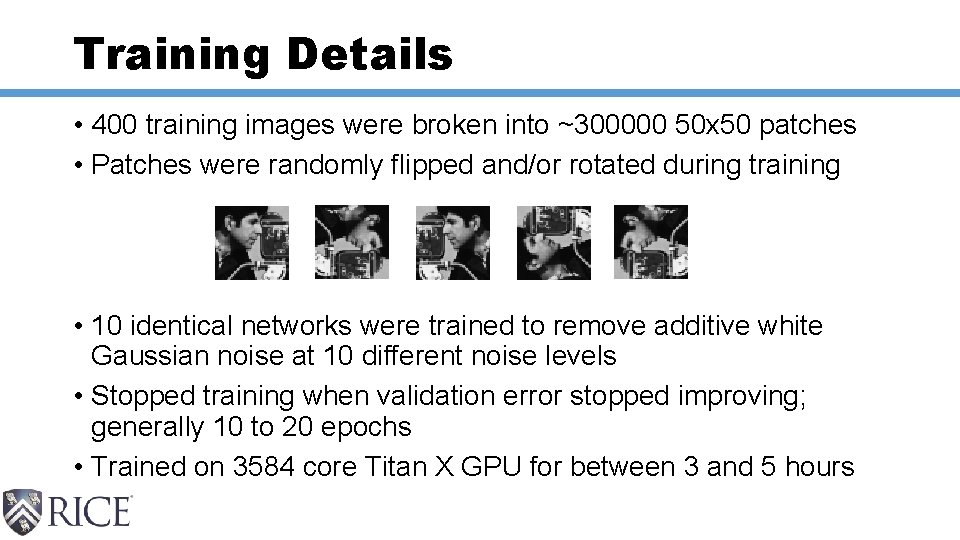

Training Details

- 400 training images were broken into ~300,000 patches of 50 × 50 pixels
- Patches were randomly flipped and/or rotated during training
- 10 identical networks were trained to remove additive white Gaussian noise at 10 different noise levels
- Training stopped when the validation error stopped improving, generally after 10 to 20 epochs
- Trained on a 3584-core Titan X GPU for between 3 and 5 hours
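The data preparation steps listed above can be sketched roughly as follows. The patch stride, the 50% flip probability, and the noise level are assumptions, since the slide gives only the patch size and the kinds of augmentation.

```python
import numpy as np

def extract_patches(image, patch_size=50, stride=50):
    """Cut a 2-D image into patch_size x patch_size patches on a regular grid."""
    h, w = image.shape
    return np.stack([
        image[i:i + patch_size, j:j + patch_size]
        for i in range(0, h - patch_size + 1, stride)
        for j in range(0, w - patch_size + 1, stride)
    ])

def augment(patch, rng):
    """Randomly flip a patch and rotate it by a multiple of 90 degrees."""
    if rng.random() < 0.5:
        patch = np.fliplr(patch)
    return np.rot90(patch, k=int(rng.integers(4)))

def add_awgn(patch, sigma, rng):
    """Add white Gaussian noise with standard deviation sigma."""
    return patch + sigma * rng.standard_normal(patch.shape)

rng = np.random.default_rng(0)
image = rng.random((100, 150))            # stand-in for one training image
patches = extract_patches(image)          # 2 rows x 3 columns of patches
clean = augment(patches[0], rng)
noisy = add_awgn(clean, sigma=0.1, rng=rng)   # (noisy, clean) is one training pair
```

A smaller stride produces overlapping patches and therefore more training examples per image.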

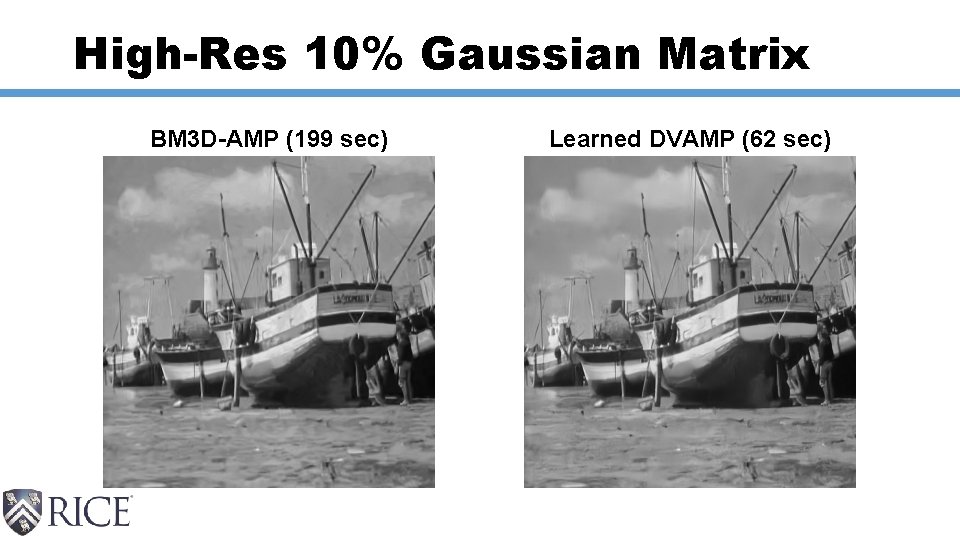

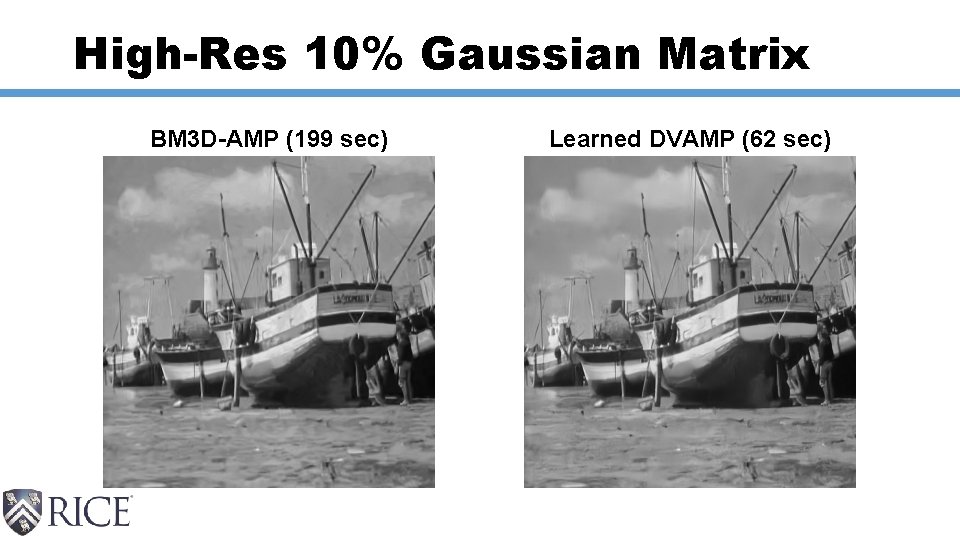

High-Res 10% Gaussian Matrix: BM3D-AMP (199 sec) vs. Learned DVAMP (62 sec)

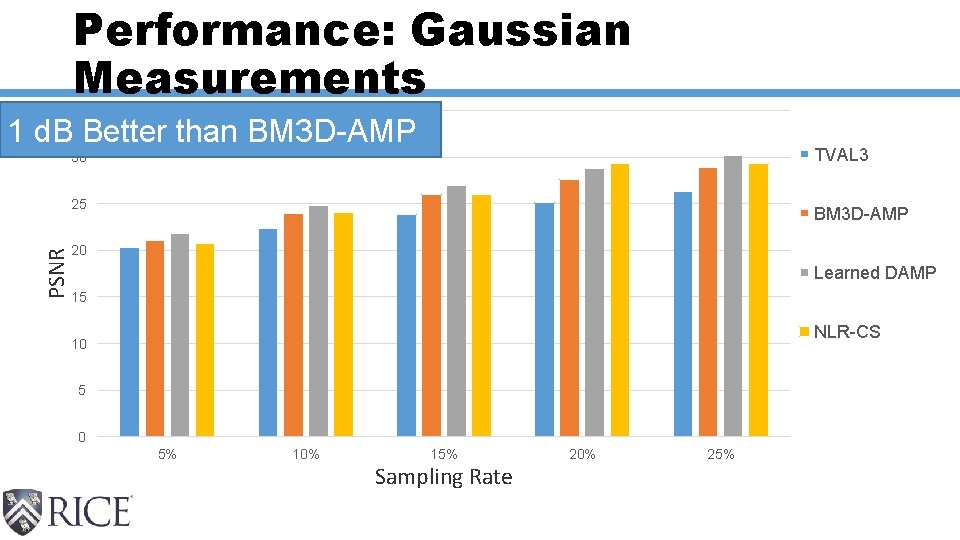

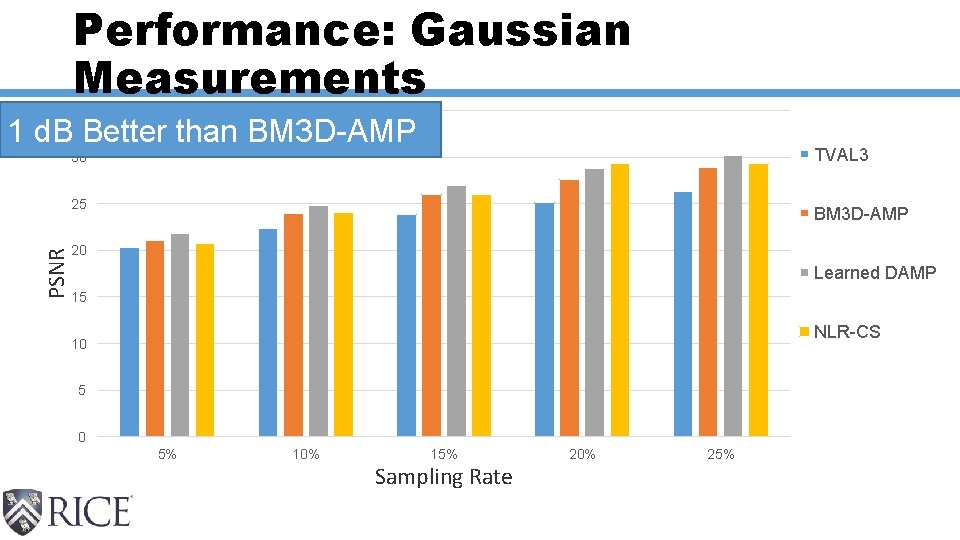

Performance: Gaussian Measurements

[Plot: PSNR (dB) vs. sampling rate (5% to 25%) for TVAL3, BM3D-AMP, Learned D-AMP, and NLR-CS. Annotation: 1 dB better than BM3D-AMP.]
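For reference, PSNR, the metric on the vertical axis of this plot, can be computed as in the sketch below. The peak value of 255 assumes 8-bit images, which the slide does not state.

```python
import numpy as np

def psnr(x_hat, x, peak=255.0):
    """Peak signal-to-noise ratio in dB between a reconstruction and the truth."""
    diff = np.asarray(x_hat, dtype=float) - np.asarray(x, dtype=float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(peak ** 2 / mse)

x = np.zeros((8, 8))
x_hat = np.full((8, 8), 25.5)   # uniform error of 10% of the peak value
print(psnr(x_hat, x))
```

Because the scale is logarithmic, a 1 dB gain corresponds to reducing the mean squared error by a factor of 10^0.1, or about 21%.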

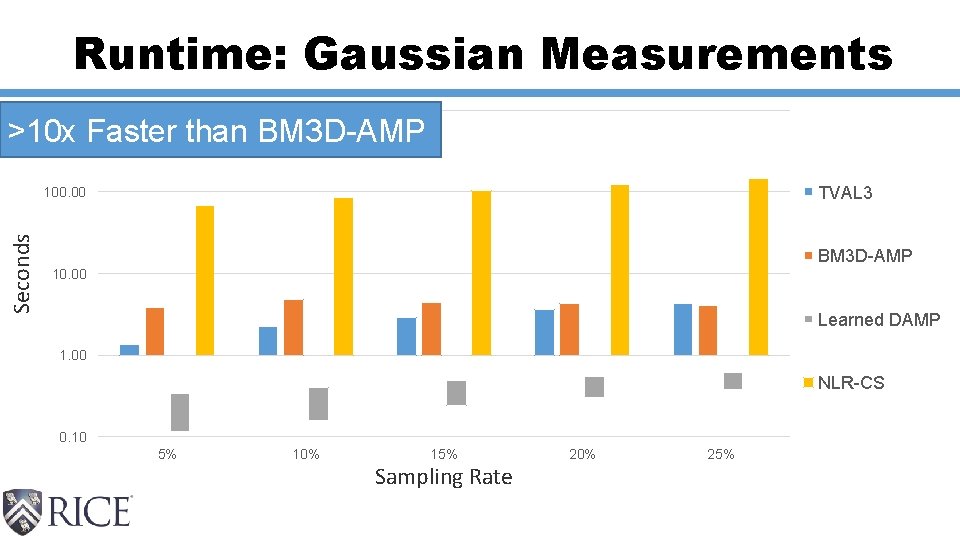

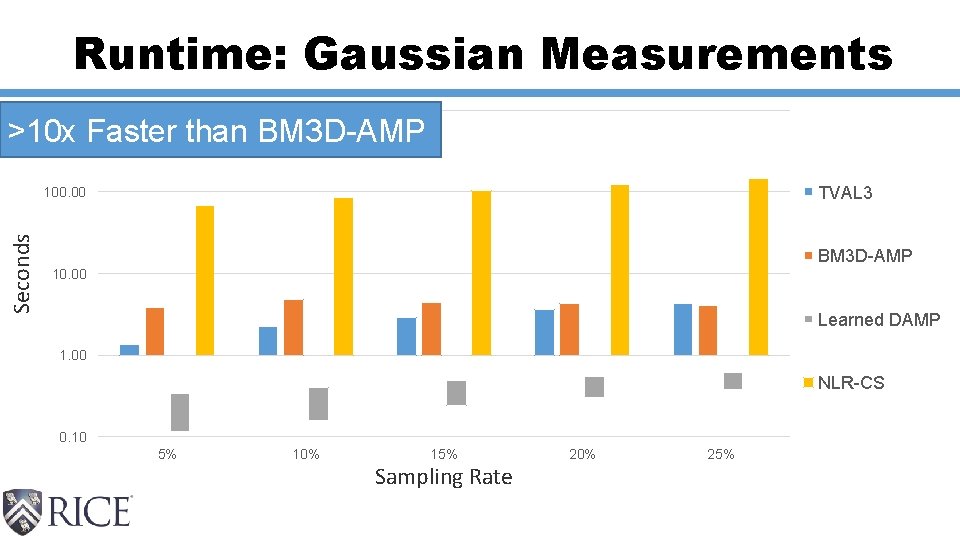

Runtime: Gaussian Measurements

[Plot: runtime in seconds (log scale, 0.1 to 1000) vs. sampling rate (5% to 25%) for TVAL3, BM3D-AMP, Learned D-AMP, and NLR-CS. Annotation: >10x faster than BM3D-AMP.]

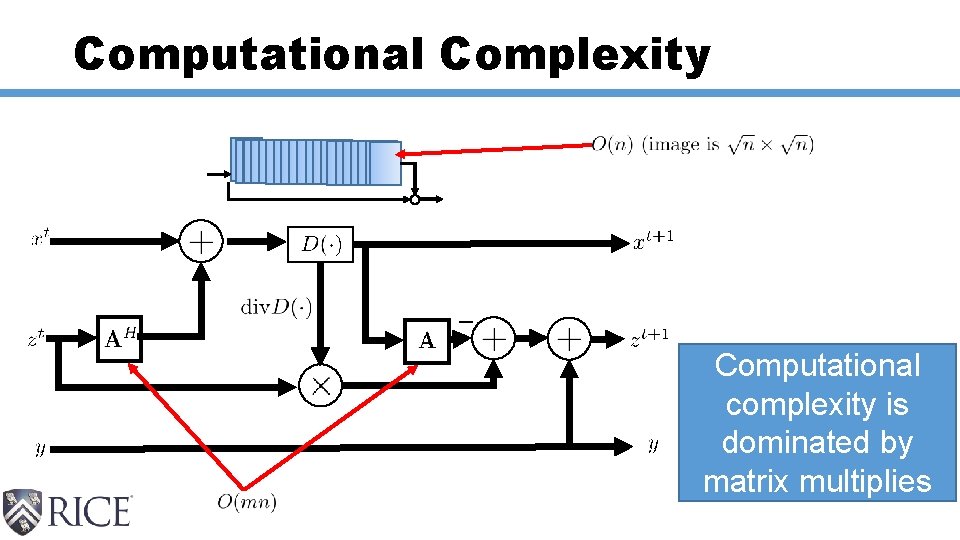

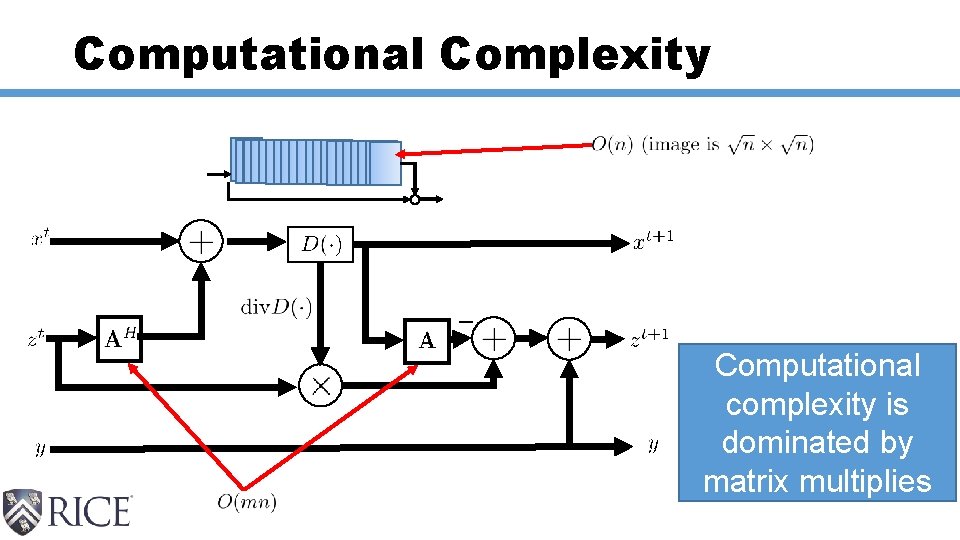

Computational Complexity

- Computational complexity is dominated by matrix multiplies
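To illustrate why the matrix multiplies matter, the sketch below implements a coded-diffraction-style operator using FFTs. A dense m × n Gaussian matrix costs O(mn) per multiply, while this structured operator costs O(n log n). The construction here (random phase mask, 2-D FFT, random subsampling) is a common textbook form and an assumption; the talk does not spell out its exact operator.

```python
import numpy as np

def make_coded_diffraction_op(shape, m, rng):
    """Return (forward, adjoint) for A = S F D: mask, 2-D FFT, subsample."""
    n = shape[0] * shape[1]
    phase = np.exp(2j * np.pi * rng.random(shape))   # diagonal D: random phases
    keep = rng.choice(n, size=m, replace=False)      # S: keep m Fourier samples
    scale = np.sqrt(n / m)                           # gives unit-norm columns

    def forward(x):
        return scale * np.fft.fft2(phase * x, norm="ortho").ravel()[keep]

    def adjoint(y):
        full = np.zeros(n, dtype=complex)
        full[keep] = scale * y
        return np.conj(phase) * np.fft.ifft2(full.reshape(shape), norm="ortho")

    return forward, adjoint

rng = np.random.default_rng(0)
shape, m = (32, 32), 256
forward, adjoint = make_coded_diffraction_op(shape, m, rng)

x = rng.random(shape)
y = rng.standard_normal(m) + 1j * rng.standard_normal(m)
lhs = np.vdot(forward(x), y)   # <A x, y>
rhs = np.vdot(x, adjoint(y))   # <x, A^H y>
```

The two inner products agree, which confirms that `adjoint` really is the conjugate transpose of `forward`. An iterative algorithm needs both operators at every iteration or layer.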

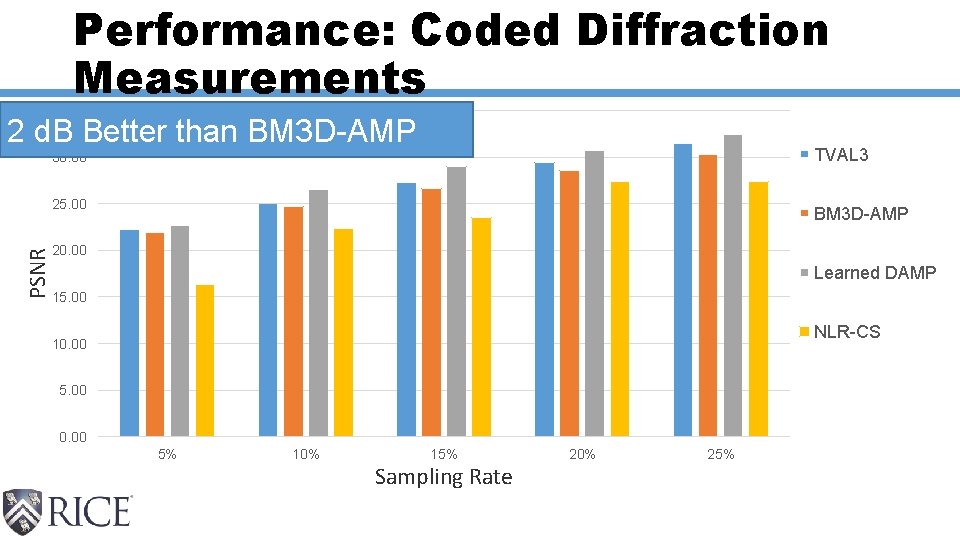

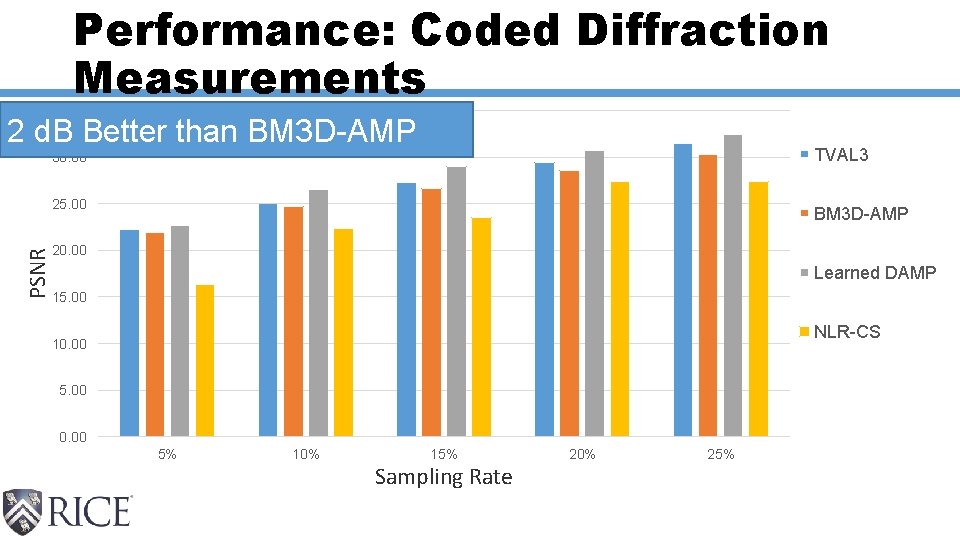

Performance: Coded Diffraction Measurements

[Plot: PSNR (dB) vs. sampling rate (5% to 25%) for TVAL3, BM3D-AMP, Learned D-AMP, and NLR-CS. Annotation: 2 dB better than BM3D-AMP.]

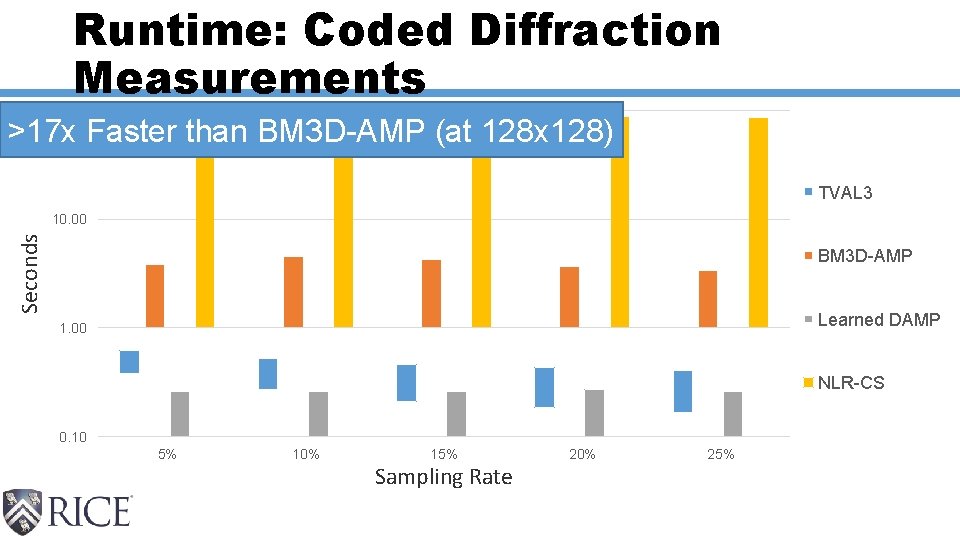

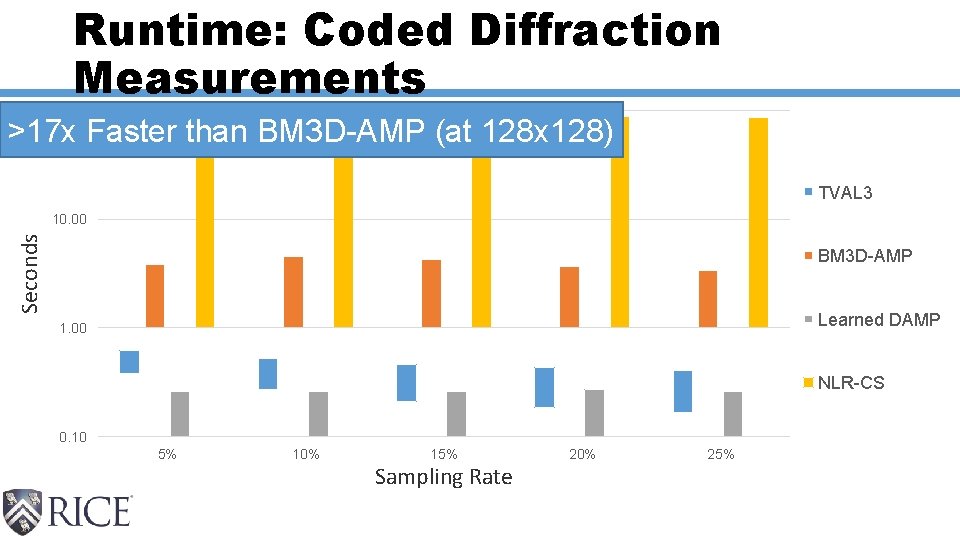

Runtime: Coded Diffraction Measurements

[Plot: runtime in seconds (log scale, 0.1 to 100) vs. sampling rate (5% to 25%) for TVAL3, BM3D-AMP, Learned D-AMP, and NLR-CS. Annotation: >17x faster than BM3D-AMP (at 128 × 128).]

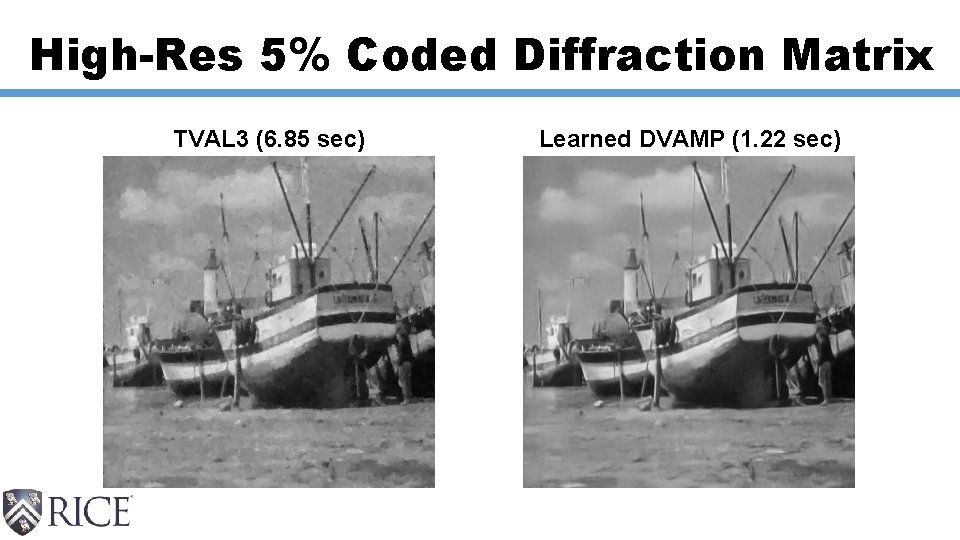

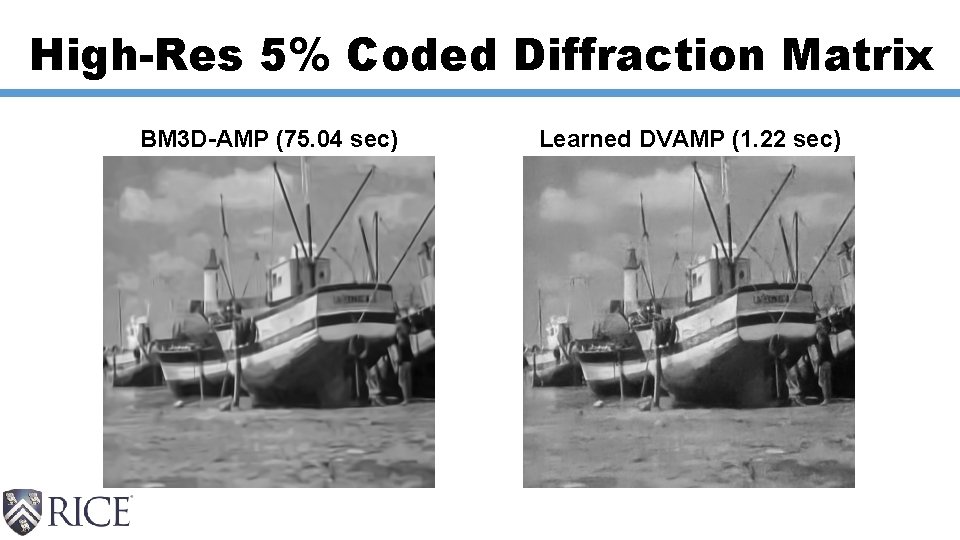

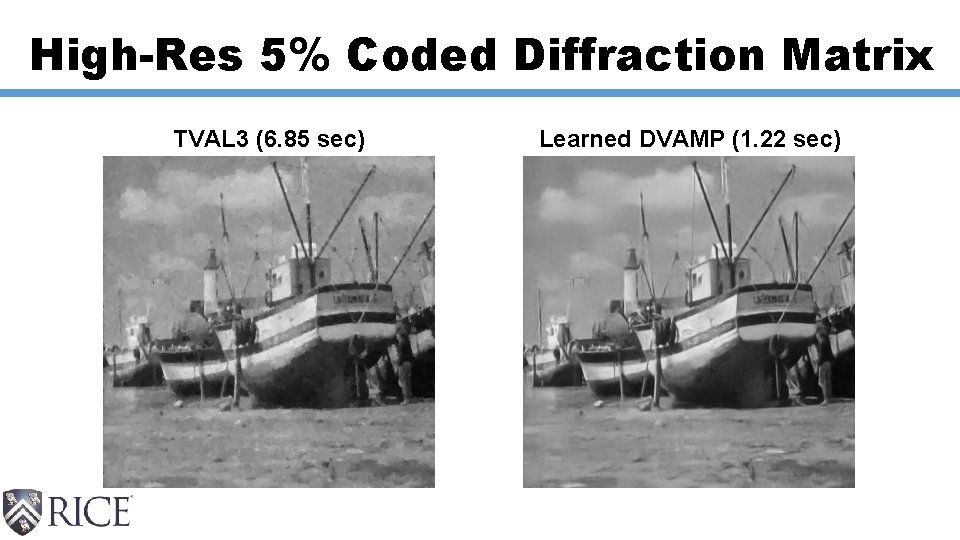

High-Res 5% Coded Diffraction Matrix: TVAL3 (6.85 sec) vs. Learned DVAMP (1.22 sec)

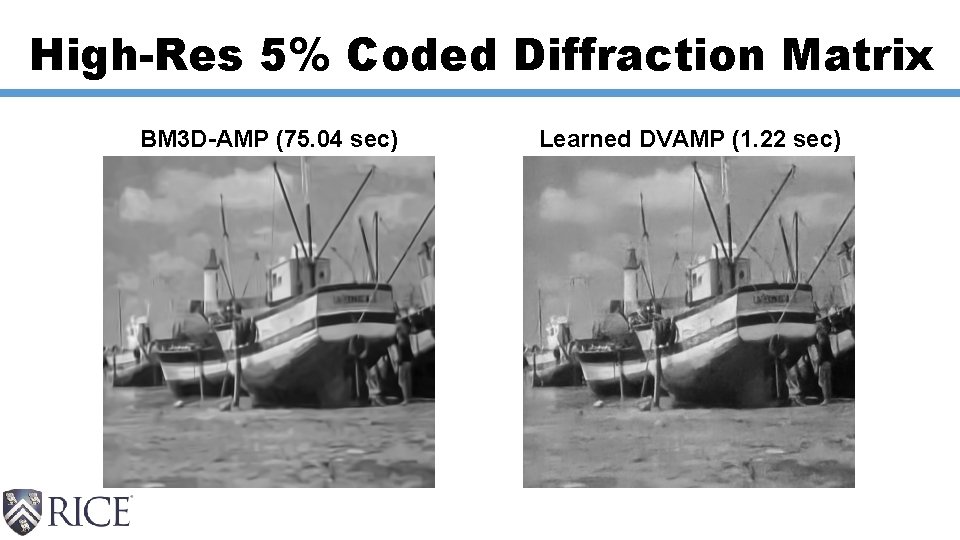

High-Res 5% Coded Diffraction Matrix: BM3D-AMP (75.04 sec) vs. Learned DVAMP (1.22 sec)

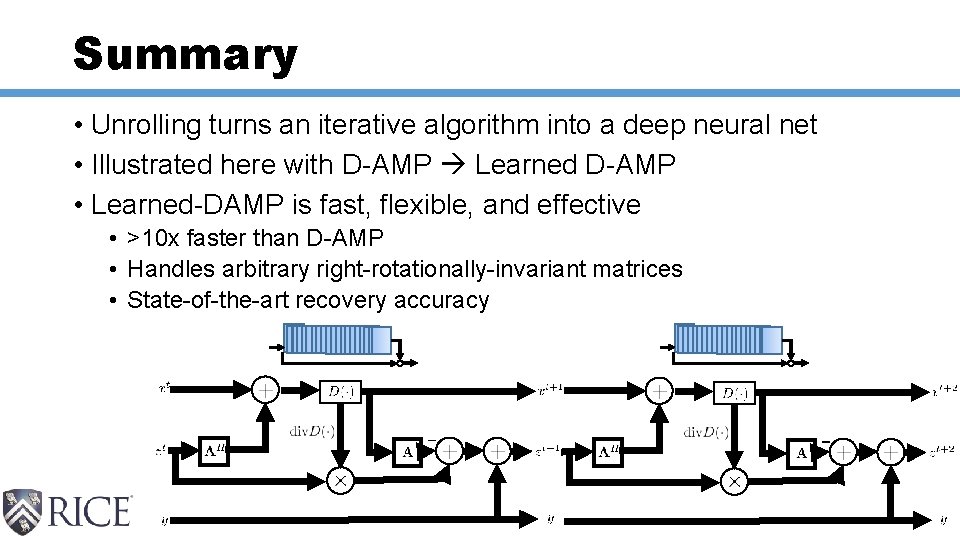

Summary

- Unrolling turns an iterative algorithm into a deep neural net
- Illustrated here with D-AMP, which becomes Learned D-AMP
- Learned D-AMP is fast, flexible, and effective
  - >10x faster than D-AMP
  - Handles arbitrary right-rotationally-invariant matrices
  - State-of-the-art recovery accuracy

Acknowledgments

- NSF GRFP