
Università di Pisa

Parsing

Giuseppe Attardi, Dipartimento di Informatica, Università di Pisa

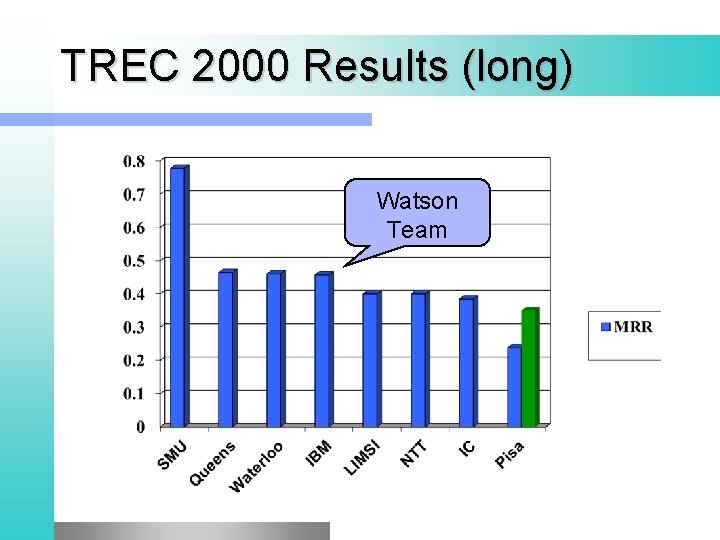

Question Answering at TREC

- Consists of answering a set of 500 fact-based questions, e.g. “When was Mozart born?”
- Systems were allowed to return 5 ranked answer snippets to each question.
  - IR think
  - Mean Reciprocal Rank (MRR) scoring: 1, 0.5, 0.33, 0.25, 0.2, 0 for 1, 2, 3, 4, 5, 6+ doc
  - Mainly Named Entity answers (person, place, date, …)
- From 2002 systems are only allowed to return a single exact answer
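The MRR scoring rule above can be sketched in a few lines. This is a minimal illustration, not the official TREC scorer: the function names, the exact-match correctness test and the toy runs are assumptions made for the example.

```python
def reciprocal_rank(ranked_answers, is_correct, max_rank=5):
    """Return 1/rank of the first correct answer within max_rank, else 0."""
    for rank, answer in enumerate(ranked_answers[:max_rank], start=1):
        if is_correct(answer):
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs):
    """runs: list of (ranked_answers, gold_answer) pairs, one per question.

    Correctness is plain string equality here, which is an assumption
    of this sketch.
    """
    scores = [
        reciprocal_rank(answers, lambda a, g=gold: a == g)
        for answers, gold in runs
    ]
    return sum(scores) / len(scores) if scores else 0.0


# Toy data (hypothetical): correct at rank 1, at rank 2, and at rank 6.
runs = [
    (["1756", "1791"], "1756"),
    (["Vienna", "Salzburg"], "Salzburg"),
    (["a", "b", "c", "d", "e", "f"], "f"),
]
print(mean_reciprocal_rank(runs))  # (1 + 0.5 + 0) / 3 = 0.5
```

An answer found only at rank 6 or later contributes 0, matching the scoring table on the slide.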

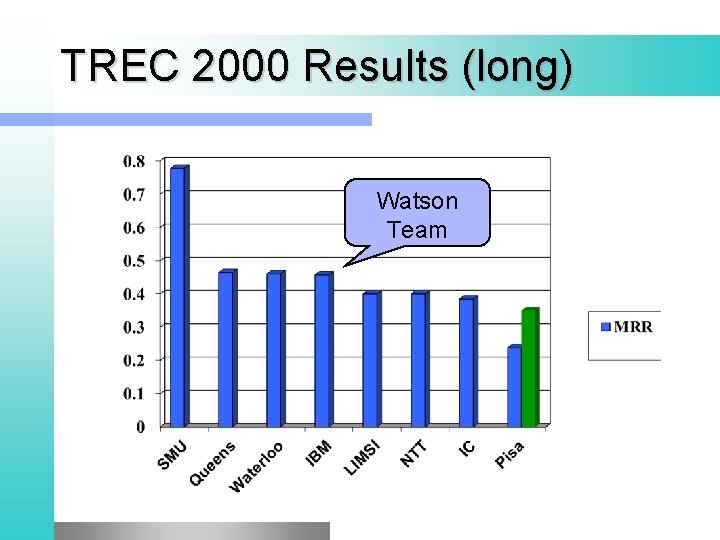

TREC 2000 Results (long) Watson Team

Falcon

- The Falcon system from SMU was by far the best-performing system at TREC 2000
- It used NLP and performed deep semantic processing

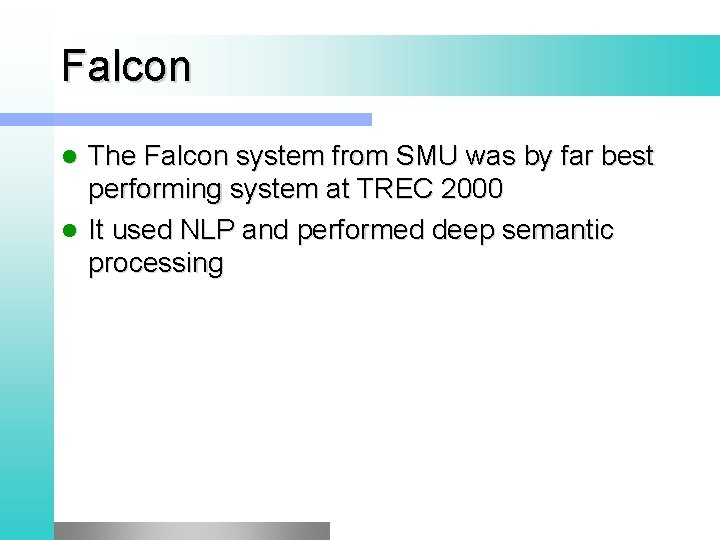

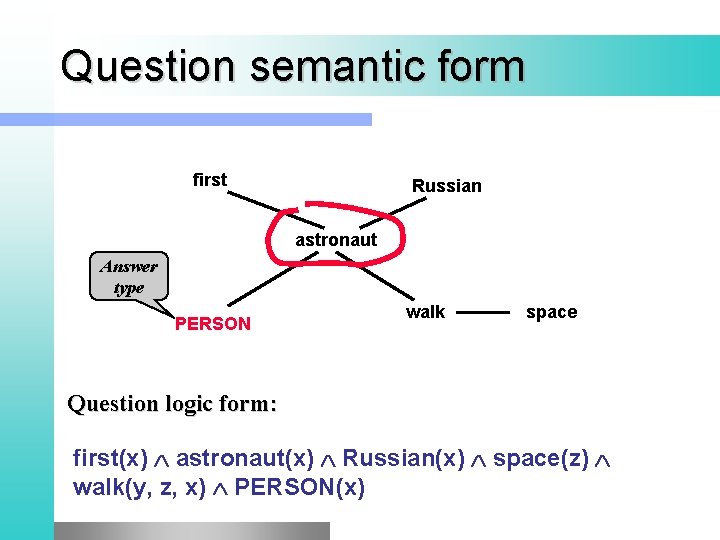

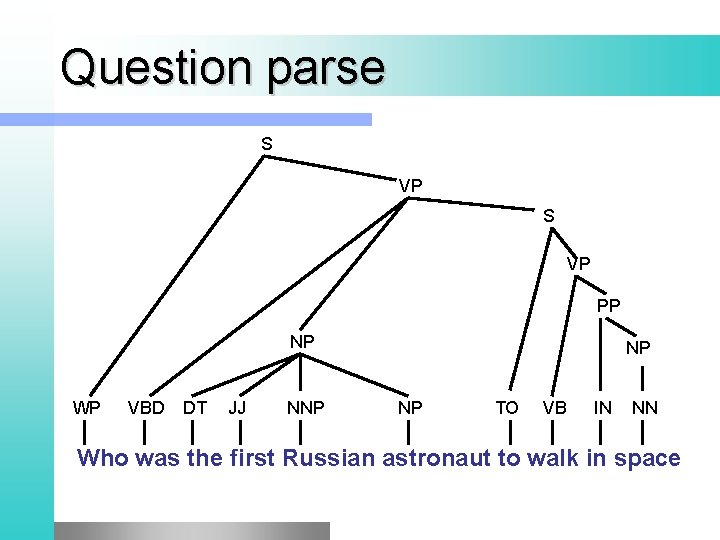

Question parse

Who was the first Russian astronaut to walk in space

(Parse tree figure; node labels: S, VP, PP, NP, WP, VBD, DT, JJ, NNP, TO, VB, IN, NN)

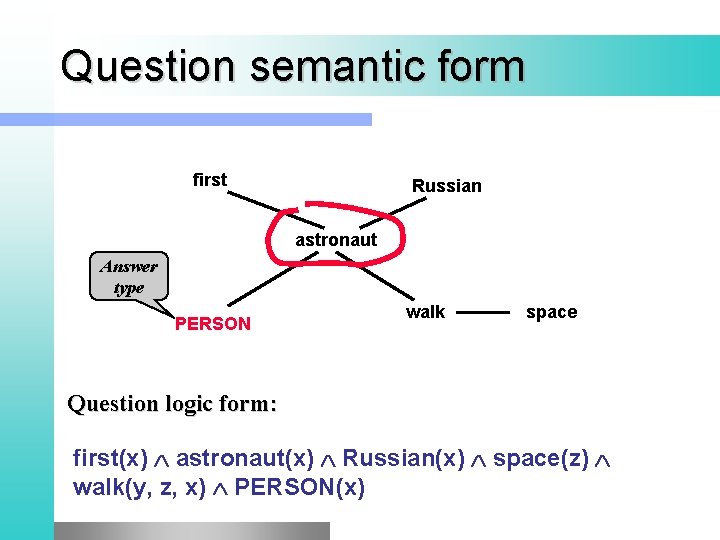

Question semantic form

(Figure: semantic graph with nodes first, Russian, astronaut, walk, space)

- Answer type: PERSON
- Question logic form: first(x) ∧ astronaut(x) ∧ Russian(x) ∧ space(z) ∧ walk(y, z, x) ∧ PERSON(x)

Parsing in QA

- Top systems in TREC 2005 perform parsing of queries and answer paragraphs
- Some use specially built parsers
- Parsers are slow: ~1 min/sentence

Practical Uses of Parsing

- Google Knowledge Graph is enriched with relations extracted from dependency trees
- Google index parses all documents
- Google Translator applies dependency parsing to sentences
- Sentiment analysis improves with dependency parsing

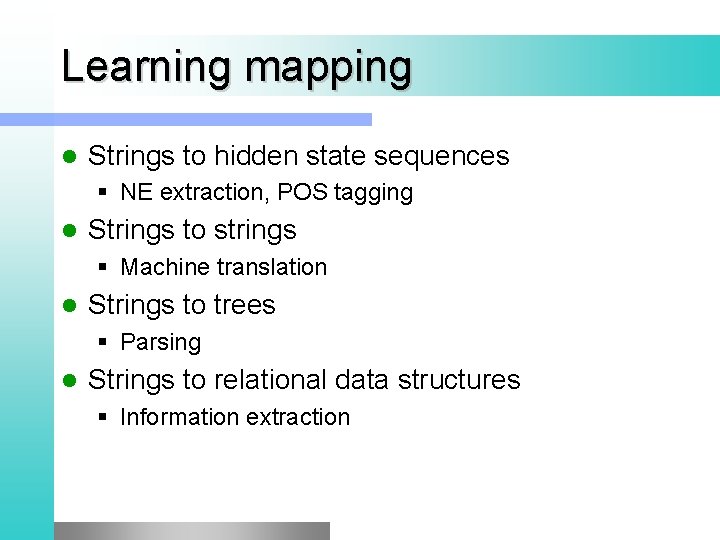

Statistical Methods in NLP

- Some NLP problems:
  - Information extraction: named entities, relationships between entities, etc.
  - Finding linguistic structure: part-of-speech tagging, chunking, parsing
- Can be cast as learning a mapping:
  - Strings to hidden state sequences: NE extraction, POS tagging
  - Strings to strings: machine translation
  - Strings to trees: parsing
  - Strings to relational data structures: information extraction

Techniques

- Log-linear (Maximum Entropy) taggers
- Probabilistic context-free grammars (PCFGs)
- Discriminative methods: Conditional MRFs, Perceptron, Kernel methods

Learning mapping

- Strings to hidden state sequences
  - NE extraction, POS tagging
- Strings to strings
  - Machine translation
- Strings to trees
  - Parsing
- Strings to relational data structures
  - Information extraction

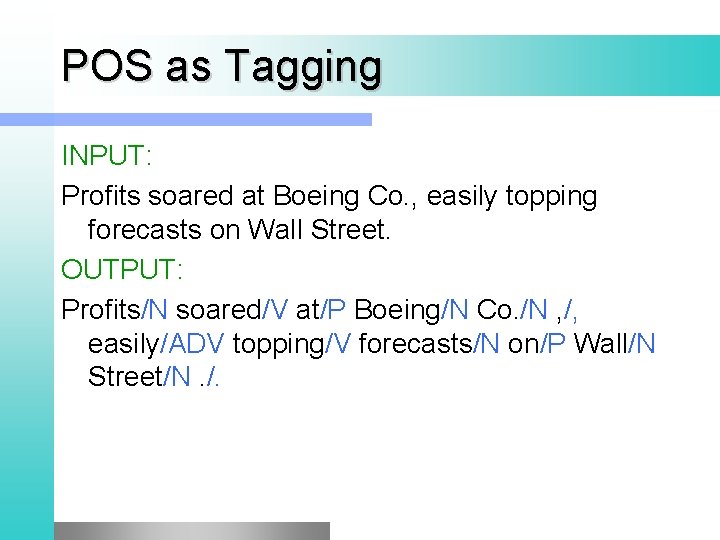

POS as Tagging

INPUT: Profits soared at Boeing Co., easily topping forecasts on Wall Street.

OUTPUT: Profits/N soared/V at/P Boeing/N Co./N ,/, easily/ADV topping/V forecasts/N on/P Wall/N Street/N ./.

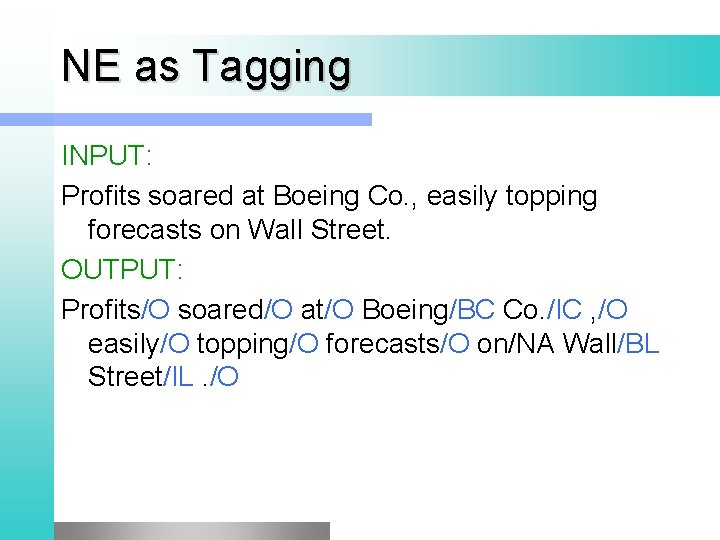

NE as Tagging

INPUT: Profits soared at Boeing Co., easily topping forecasts on Wall Street.

OUTPUT: Profits/O soared/O at/O Boeing/BC Co./IC ,/O easily/O topping/O forecasts/O on/O Wall/BL Street/IL ./O
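Once entities are encoded as per-token tags, recovering the entity spans is a simple scan over the tagged sequence. The sketch below assumes that a tag starting with `B` begins an entity, a tag starting with `I` continues it, and the remaining letter is the entity type (read here as C = company, L = location, which is an interpretation of the slide's tag names). The function name `decode_entities` is hypothetical.

```python
def decode_entities(tagged):
    """Group (token, tag) pairs into (entity text, type) spans.

    Assumes tags of the form 'B<type>' / 'I<type>'; any other tag
    (such as 'O') is treated as outside an entity.
    """
    entities, current, current_type = [], [], None
    for token, tag in tagged:
        if tag.startswith("B"):
            if current:
                entities.append((" ".join(current), current_type))
            current, current_type = [token], tag[1:]
        elif tag.startswith("I") and current and tag[1:] == current_type:
            current.append(token)
        else:
            if current:
                entities.append((" ".join(current), current_type))
            current, current_type = [], None
    if current:
        entities.append((" ".join(current), current_type))
    return entities


tagged = [
    ("Profits", "O"), ("soared", "O"), ("at", "O"),
    ("Boeing", "BC"), ("Co.", "IC"), (",", "O"),
    ("easily", "O"), ("topping", "O"), ("forecasts", "O"),
    ("on", "O"), ("Wall", "BL"), ("Street", "IL"), (".", "O"),
]
print(decode_entities(tagged))  # [('Boeing Co.', 'C'), ('Wall Street', 'L')]
```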

Parsing Technology

Constituent Parsing

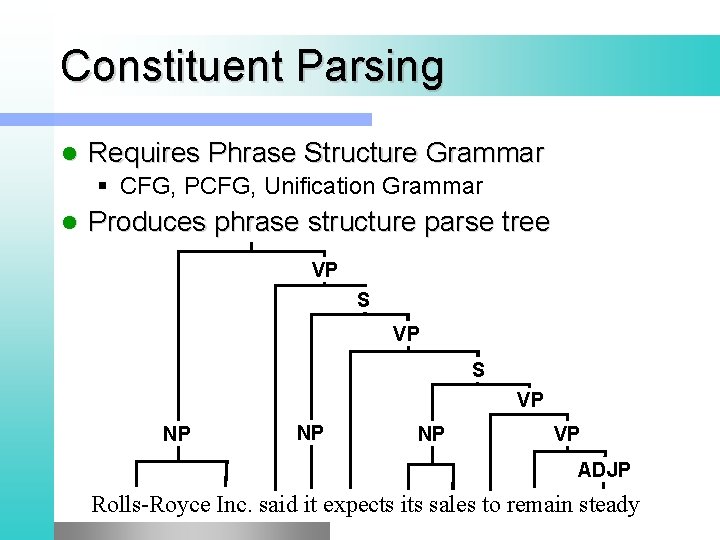

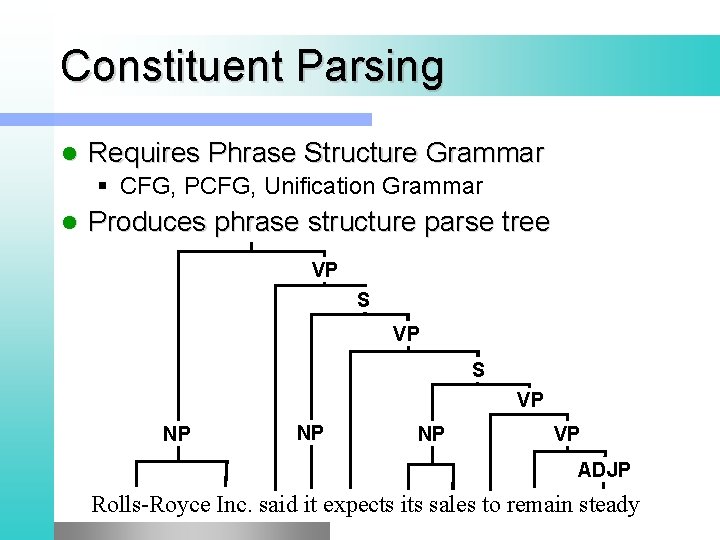

Constituent Parsing

- Requires Phrase Structure Grammar
  - CFG, PCFG, Unification Grammar
- Produces phrase structure parse tree

(Figure: parse tree with nodes S, VP, NP, ADJP over the sentence "Rolls-Royce Inc. said it expects its sales to remain steady")

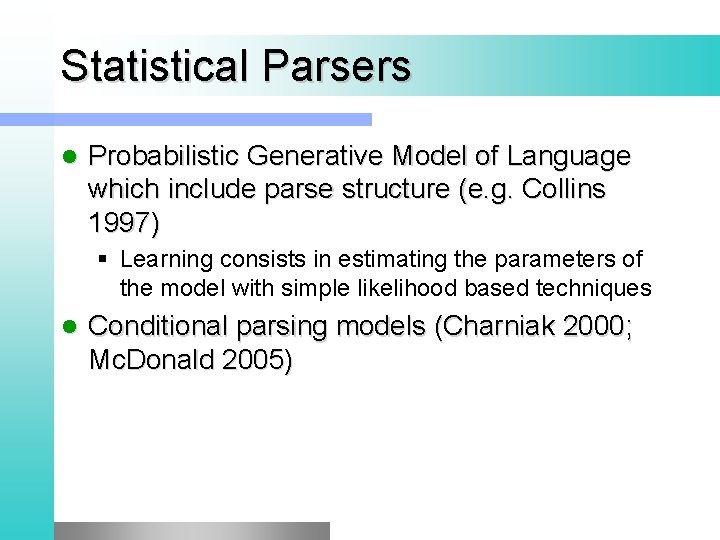

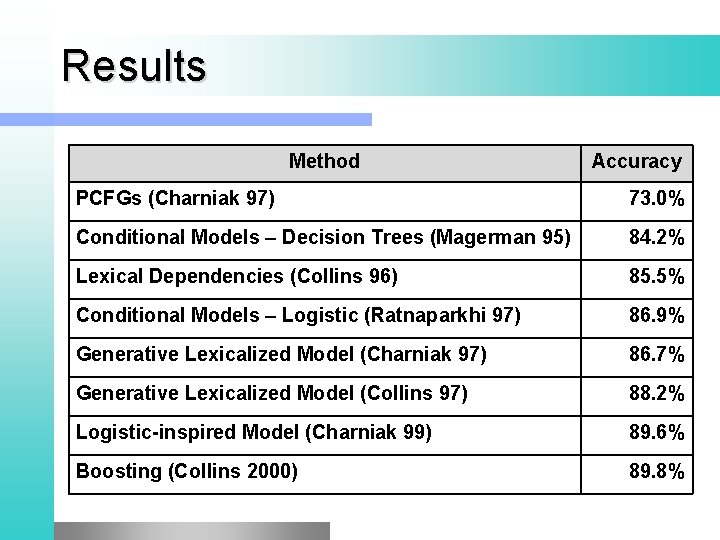

Statistical Parsers

- Probabilistic generative model of language that includes parse structure (e.g. Collins 1997)
  - Learning consists of estimating the parameters of the model with simple likelihood-based techniques
- Conditional parsing models (Charniak 2000; McDonald 2005)

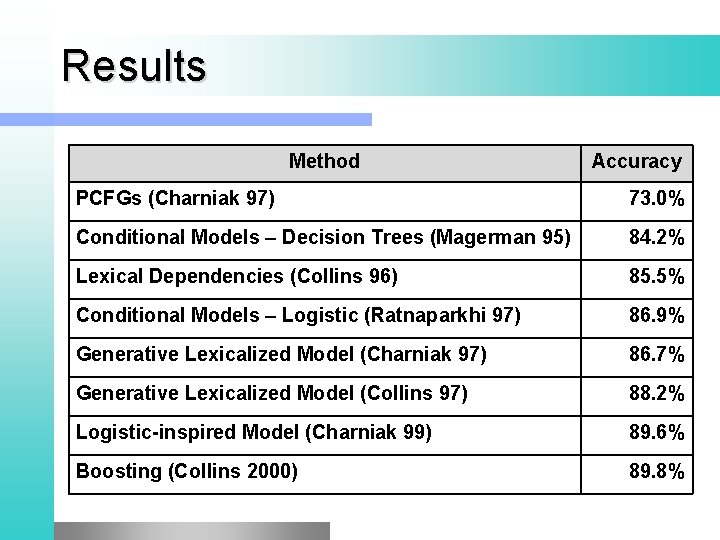

Results

| Method | Accuracy |
|---|---|
| PCFGs (Charniak 97) | 73.0% |
| Conditional Models – Decision Trees (Magerman 95) | 84.2% |
| Lexical Dependencies (Collins 96) | 85.5% |
| Conditional Models – Logistic (Ratnaparkhi 97) | 86.9% |
| Generative Lexicalized Model (Charniak 97) | 86.7% |
| Generative Lexicalized Model (Collins 97) | 88.2% |
| Logistic-inspired Model (Charniak 99) | 89.6% |
| Boosting (Collins 2000) | 89.8% |

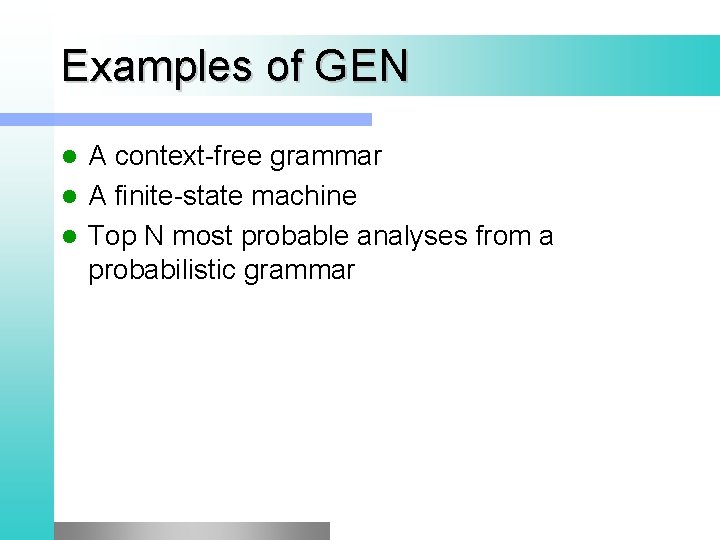

Linear Models for Parsing and Tagging

- Three components:
  - GEN is a function from a string to a set of candidates
  - F maps a candidate to a feature vector
  - W is a parameter vector

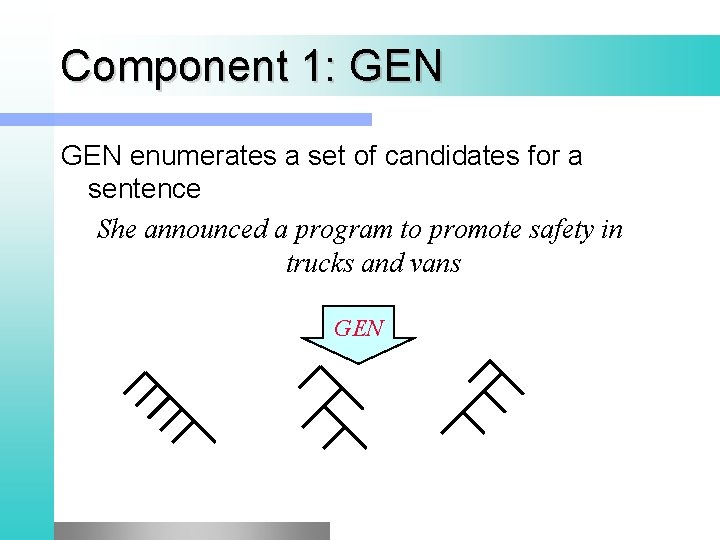

Component 1: GEN

- GEN enumerates a set of candidates for a sentence

(Figure: GEN applied to "She announced a program to promote safety in trucks and vans")

Examples of GEN

- A context-free grammar
- A finite-state machine
- Top N most probable analyses from a probabilistic grammar

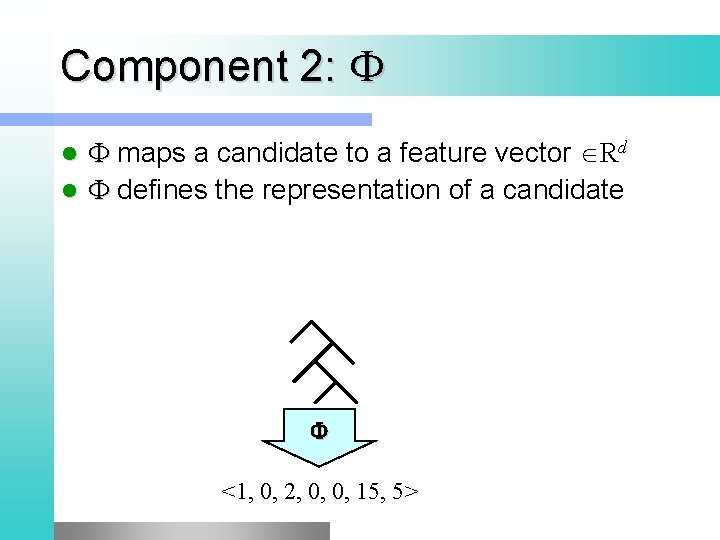

Component 2: F

- F maps a candidate to a feature vector in $\mathbb{R}^d$
- F defines the representation of a candidate

(Figure: F maps a parse tree to a vector such as <1, 0, 2, 0, 0, 15, 5>)

Feature

- A “feature” is a function on a structure, e.g., h(x) = number of times the subtree A → B C is seen in x
- Feature vector: a set of functions $h_1, \dots, h_d$ defines a feature vector $F(x) = \langle h_1(x), h_2(x), \dots, h_d(x) \rangle$

Component 3: W

- W is a parameter vector in $\mathbb{R}^d$
- F · W maps a candidate to a real-valued score

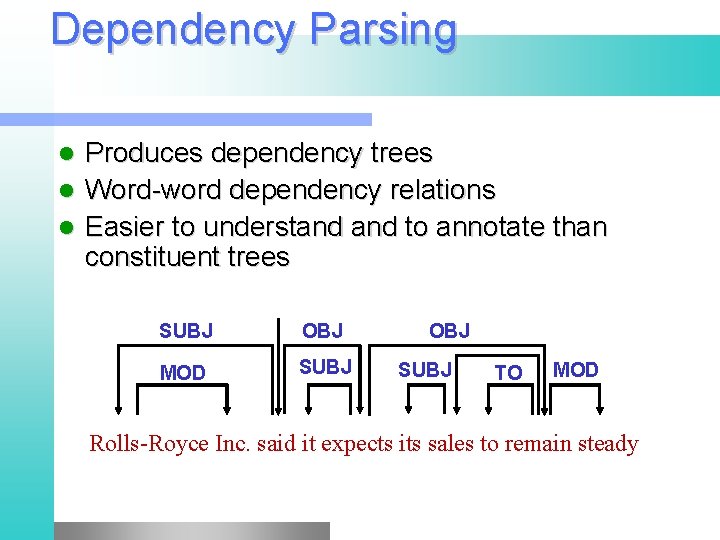

Putting it all together

- X is a set of sentences, Y is a set of possible outputs (e.g. trees)
- Need to learn a function F : X → Y
- GEN, **F** (the feature map) and **W** define $F(x) = \arg\max_{y \in \mathrm{GEN}(x)} \mathbf{F}(y) \cdot \mathbf{W}$
- Choose the highest scoring tree as the most plausible structure
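The three components combine into a one-line decision rule: score every candidate produced by GEN with the dot product of its feature vector and W, and keep the best. The sketch below is illustrative only; `toy_gen`, `toy_features` and the weight values are hypothetical stand-ins, not a real grammar or trained model.

```python
def score(features, weights):
    """Dot product of a candidate's feature vector with the parameter vector."""
    return sum(f * w for f, w in zip(features, weights))


def predict(sentence, gen, feature_map, weights):
    """Return the highest scoring candidate among gen(sentence)."""
    return max(gen(sentence), key=lambda y: score(feature_map(y), weights))


def toy_gen(sentence):
    """Hypothetical GEN: two bracketings of the sentence, flat and nested."""
    words = sentence.split()
    flat = "(S " + " ".join(words) + ")"
    nested = "(S (NP " + words[0] + ") (VP " + " ".join(words[1:]) + "))"
    return [flat, nested]


def toy_features(candidate):
    """Hypothetical feature map: [number of brackets, number of NP nodes]."""
    return [candidate.count("("), candidate.count("NP")]


weights = [-0.5, 2.0]  # made-up parameters: penalize brackets, reward NPs
best = predict("She left", toy_gen, toy_features, weights)
print(best)  # (S (NP She) (VP left))
```

Learning then amounts to choosing W so that the correct structure outscores the other candidates on the training data.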

Dependency Parsing

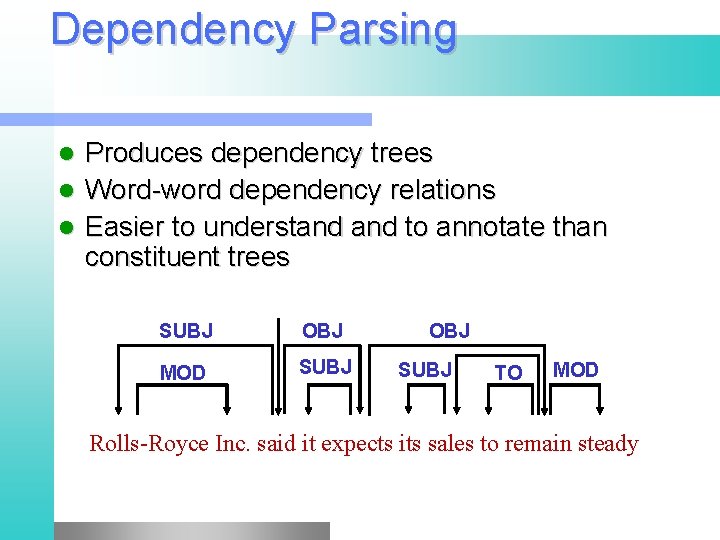

Dependency Parsing

- Produces dependency trees
- Word-word dependency relations
- Easier to understand and to annotate than constituent trees

(Figure: dependency tree with arcs labeled SUBJ, OBJ, MOD, TO over the sentence "Rolls-Royce Inc. said it expects its sales to remain steady")

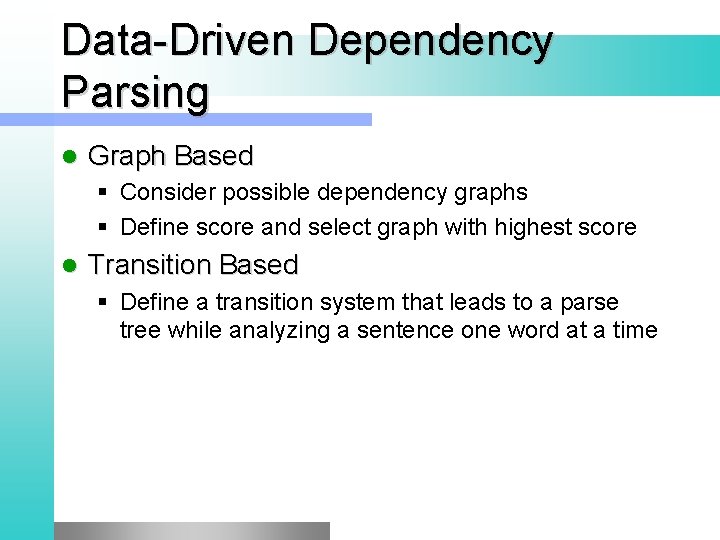

Data-Driven Dependency Parsing

- Graph Based
  - Consider possible dependency graphs
  - Define score and select graph with highest score
- Transition Based
  - Define a transition system that leads to a parse tree while analyzing a sentence one word at a time

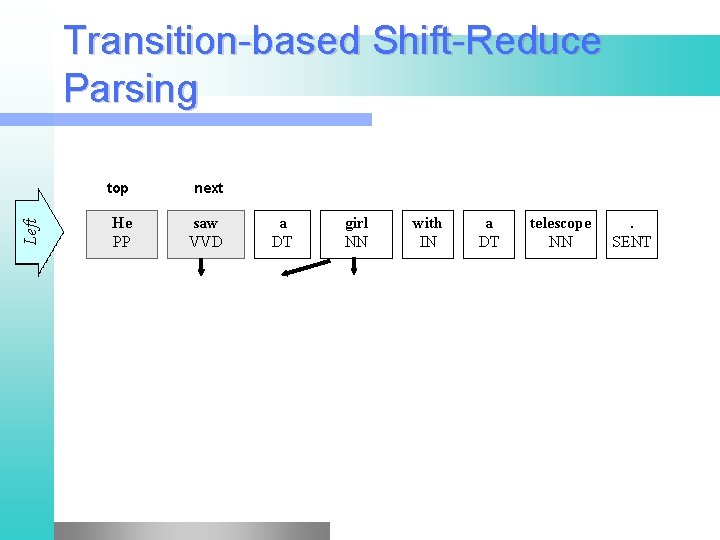

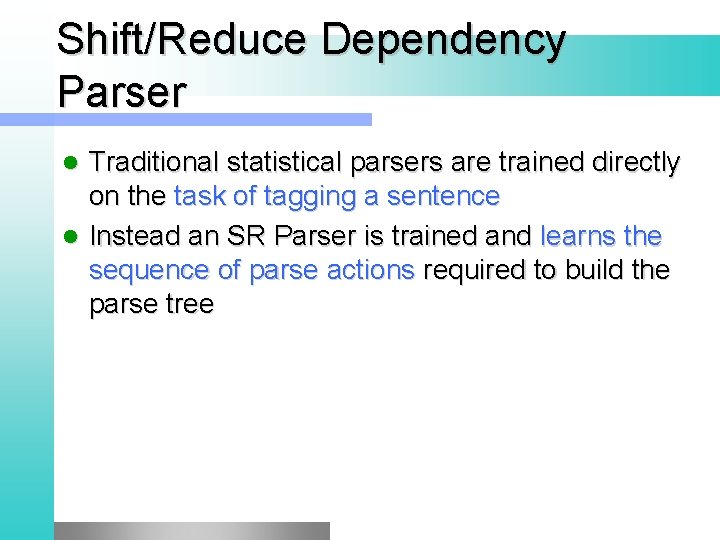

Transition-based Shift-Reduce Parsing

(Figure: actions Shift, Right, Left applied between the "top" and "next" tokens of the tagged sentence He/PP saw/VVD a/DT girl/NN with/IN a/DT telescope/NN ./SENT)

Shift/Reduce Dependency Parser

- Traditional statistical parsers are trained directly on the task of tagging a sentence
- Instead, an SR parser is trained and learns the sequence of parse actions required to build the parse tree

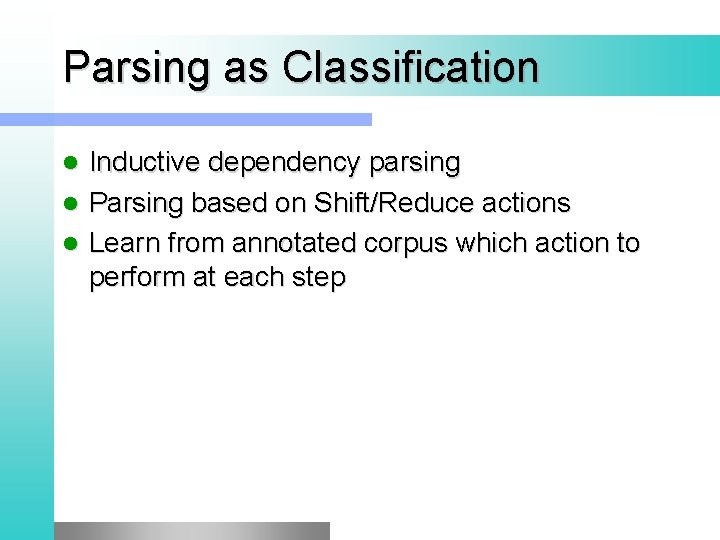

Grammar Not Required

- A traditional parser requires a grammar for generating candidate trees
- An inductive parser needs no grammar

Parsing as Classification

- Inductive dependency parsing
- Parsing based on Shift/Reduce actions
- Learn from annotated corpus which action to perform at each step

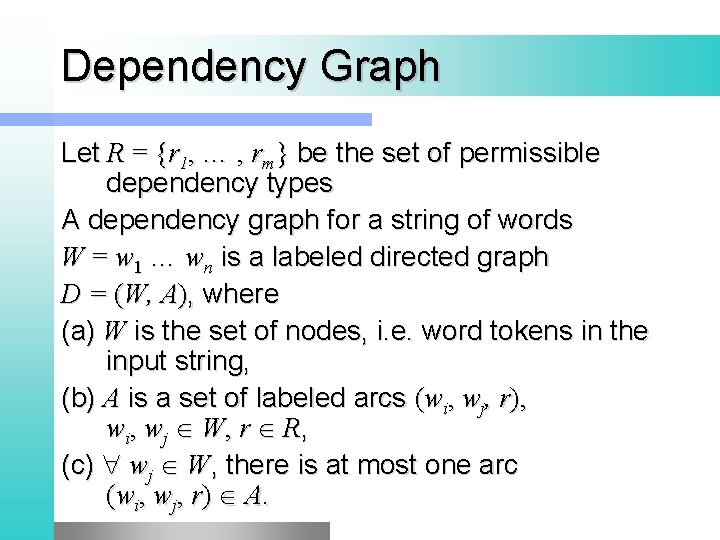

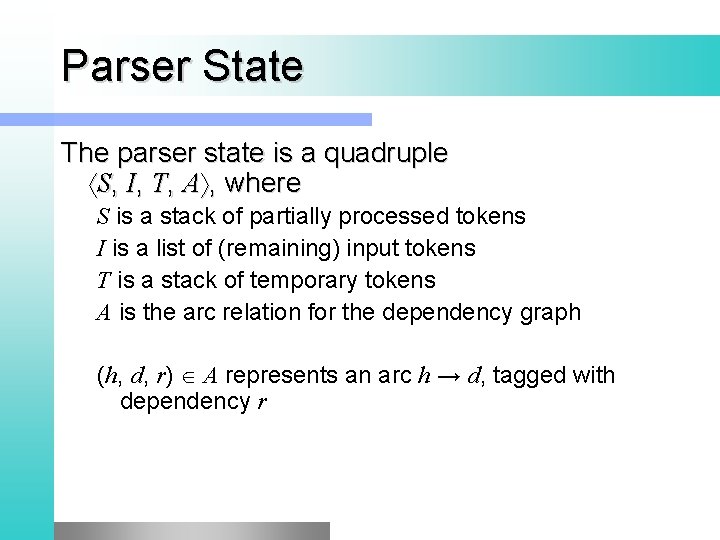

Dependency Graph

Let $R = \{r_1, \dots, r_m\}$ be the set of permissible dependency types. A dependency graph for a string of words $W = w_1 \dots w_n$ is a labeled directed graph $D = (W, A)$, where

- (a) $W$ is the set of nodes, i.e. word tokens in the input string,
- (b) $A$ is a set of labeled arcs $(w_i, w_j, r)$, with $w_i, w_j \in W$, $r \in R$,
- (c) $\forall w_j \in W$, there is at most one arc $(w_i, w_j, r) \in A$.

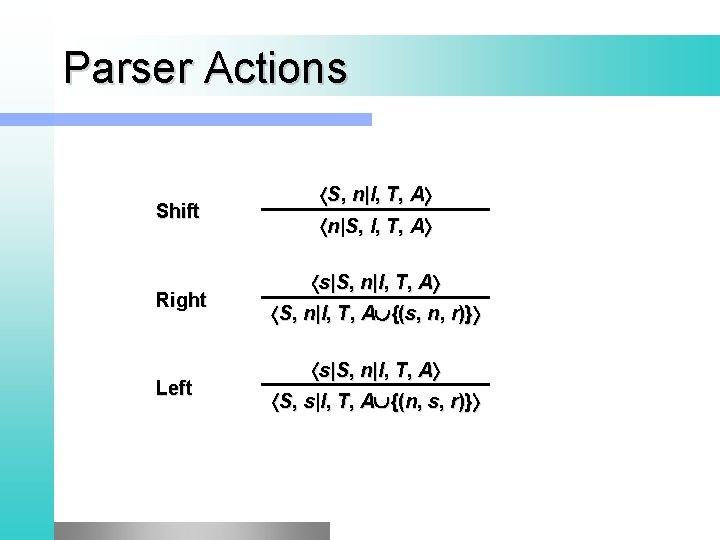

Parser State

The parser state is a quadruple $\langle S, I, T, A \rangle$, where

- S is a stack of partially processed tokens
- I is a list of (remaining) input tokens
- T is a stack of temporary tokens
- A is the arc relation for the dependency graph

$(h, d, r) \in A$ represents an arc h → d, tagged with dependency r

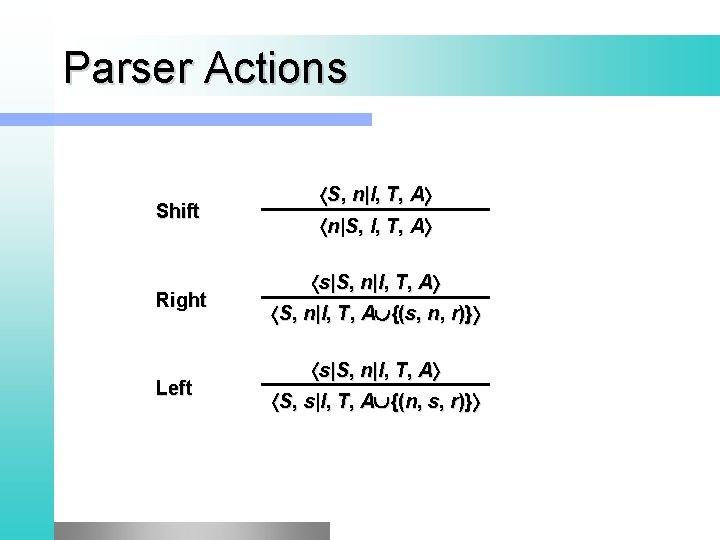

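Parser Actions

- Shift: $\langle S, n|I, T, A \rangle \Rightarrow \langle n|S, I, T, A \rangle$
- Right: $\langle s|S, n|I, T, A \rangle \Rightarrow \langle S, n|I, T, A \cup \{(s, n, r)\} \rangle$
- Left: $\langle s|S, n|I, T, A \rangle \Rightarrow \langle S, s|I, T, A \cup \{(n, s, r)\} \rangle$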

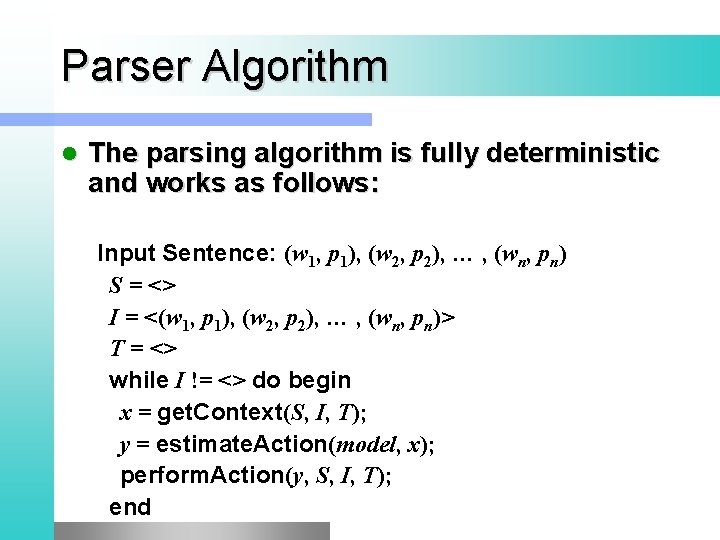

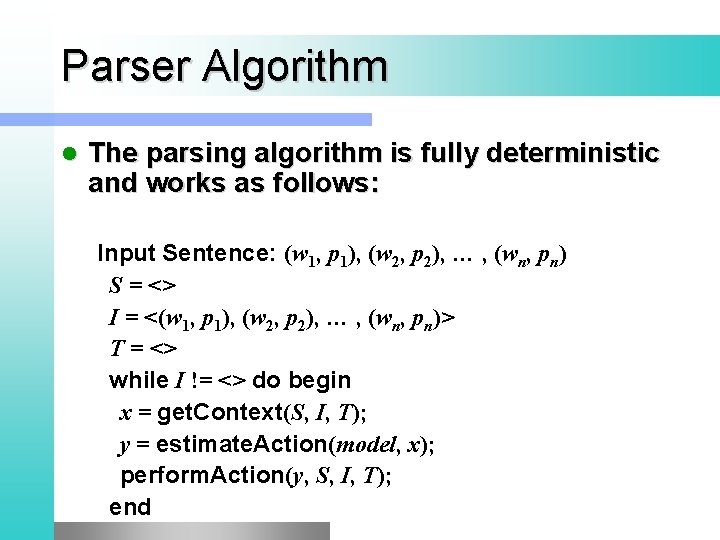

Parser Algorithm

- The parsing algorithm is fully deterministic and works as follows:

Input Sentence: (w1, p1), (w2, p2), …, (wn, pn)

    S = <>
    I = <(w1, p1), (w2, p2), …, (wn, pn)>
    T = <>
    while I != <> do begin
        x = getContext(S, I, T);
        y = estimateAction(model, x);
        performAction(y, S, I, T);
    end
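The loop above can be made runnable with the three basic actions (Shift, Right, Left). The following is a minimal sketch, not DeSR's implementation: the trained classifier is replaced by a hypothetical lookup table (`toy_estimate_action`), the context is reduced to two POS tags, and the dependency labels are invented for the example. Arcs are stored as triples in the order written on the Parser Actions slide, (s, n, r) for Right and (n, s, r) for Left.

```python
def get_context(S, I, T):
    """Context for the classifier: POS of the stack top (or None) and of the next token."""
    top_pos = S[-1][1] if S else None
    return (top_pos, I[0][1])


def toy_estimate_action(context):
    """Hypothetical stand-in for estimateAction(model, x): a fixed lookup table."""
    table = {
        ("PP", "VVD"): ("Right", "SUBJ"),
        ("VVD", "PP"): ("Left", "OBJ"),
    }
    return table.get(context, ("Shift", None))


def perform_action(action, r, S, I, T, A):
    """Apply one transition; falls back to Shift when the stack is empty."""
    if action == "Shift" or not S:
        S.append(I.pop(0))
    elif action == "Right":
        s = S.pop()
        A.add((s[0], I[0][0], r))   # arc triple (s, n, r)
    elif action == "Left":
        s = S.pop()
        n = I.pop(0)
        A.add((n[0], s[0], r))      # arc triple (n, s, r)
        I.insert(0, s)              # s becomes the next input token
    else:
        raise ValueError("unknown action: %r" % (action,))


def parse(sentence, estimate_action):
    """sentence: list of (word, pos) pairs; returns the set of arc triples."""
    S, I, T, A = [], list(sentence), [], set()
    while I:
        x = get_context(S, I, T)
        action, r = estimate_action(x)
        perform_action(action, r, S, I, T, A)
    return A


arcs = parse([("He", "PP"), ("saw", "VVD"), ("her", "PP")], toy_estimate_action)
print(sorted(arcs))  # [('He', 'saw', 'SUBJ'), ('her', 'saw', 'OBJ')]
```

Every action either consumes an input token or pops the stack, so the loop terminates after a number of steps linear in the sentence length.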

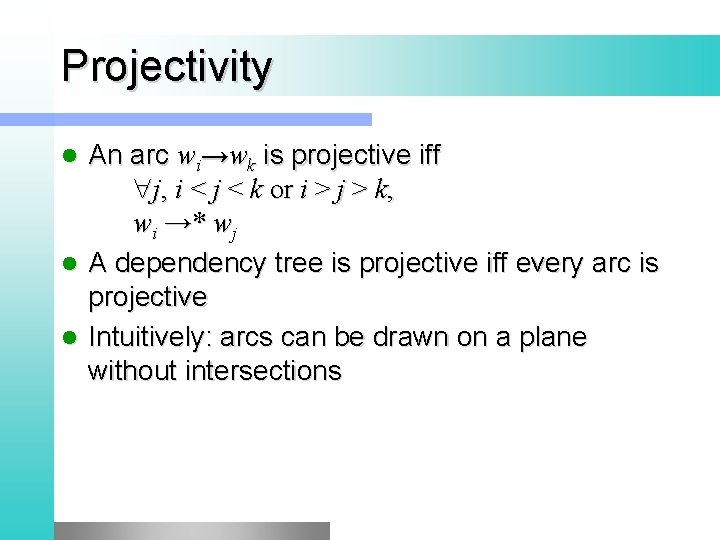

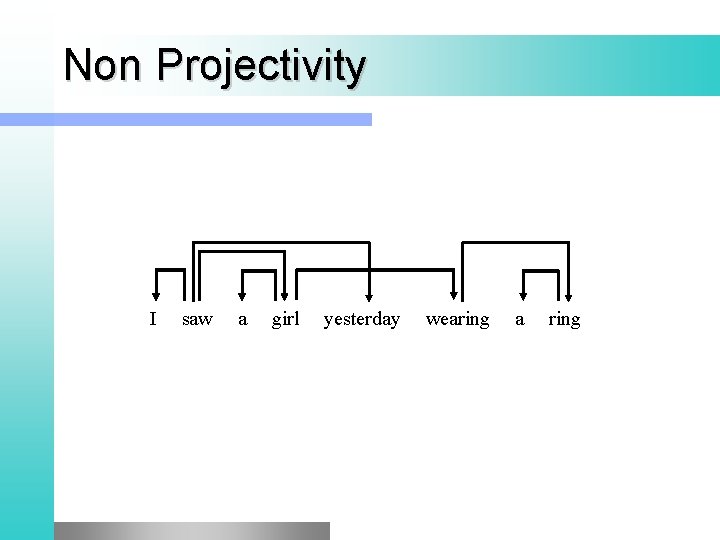

Projectivity

- An arc $w_i \to w_k$ is projective iff $\forall j$, $i < j < k$ or $i > j > k$, $w_i \to^* w_j$
- A dependency tree is projective iff every arc is projective
- Intuitively: arcs can be drawn on a plane without intersections
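The definition translates directly into a check over a head table. In this sketch a tree is a dictionary mapping each word position to the position of its head (`None` for the root); this representation, the function names and the head assignments in the two toy sentences are assumptions made for illustration.

```python
def is_descendant(heads, ancestor, node):
    """True if ancestor ->* node, following head links upward from node."""
    seen = set()
    while node is not None and node not in seen:
        if node == ancestor:
            return True
        seen.add(node)
        node = heads.get(node)
    return False


def is_projective_arc(heads, head, dep):
    """Arc head -> dep is projective iff every word strictly between them descends from head."""
    lo, hi = min(head, dep), max(head, dep)
    return all(is_descendant(heads, head, j) for j in range(lo + 1, hi))


def is_projective_tree(heads):
    """A tree is projective iff every arc is projective."""
    return all(
        is_projective_arc(heads, h, d) for d, h in heads.items() if h is not None
    )


# "I saw a girl wearing a ring" (positions 1..7, assumed analysis)
projective = {1: 2, 2: None, 3: 4, 4: 2, 5: 4, 6: 7, 7: 5}
# "I saw a girl yesterday wearing a ring" (positions 1..8, assumed analysis):
# "yesterday" (5) attaches to "saw" (2) but lies inside the arc girl (4) -> wearing (6)
non_projective = {1: 2, 2: None, 3: 4, 4: 2, 5: 2, 6: 4, 7: 8, 8: 6}

print(is_projective_tree(projective))      # True
print(is_projective_tree(non_projective))  # False
```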

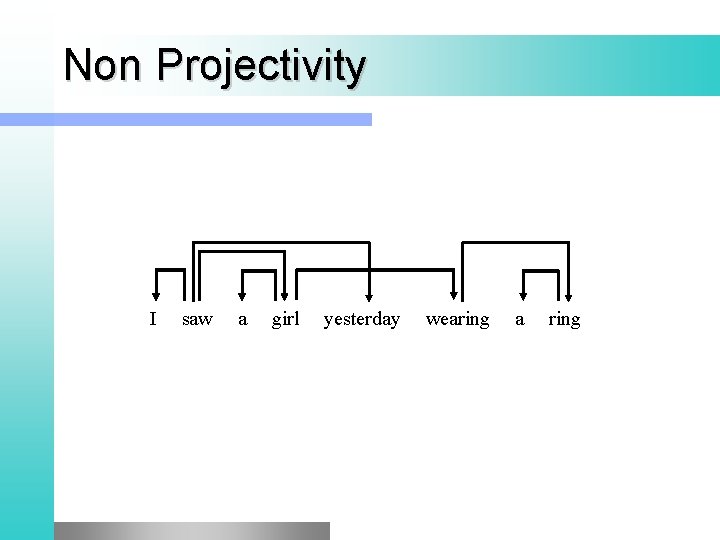

Non Projectivity I saw a girl yesterday wearing a ring

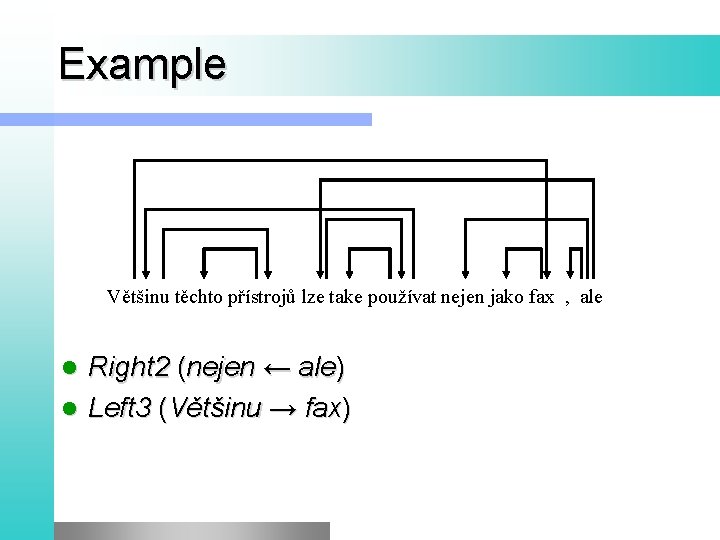

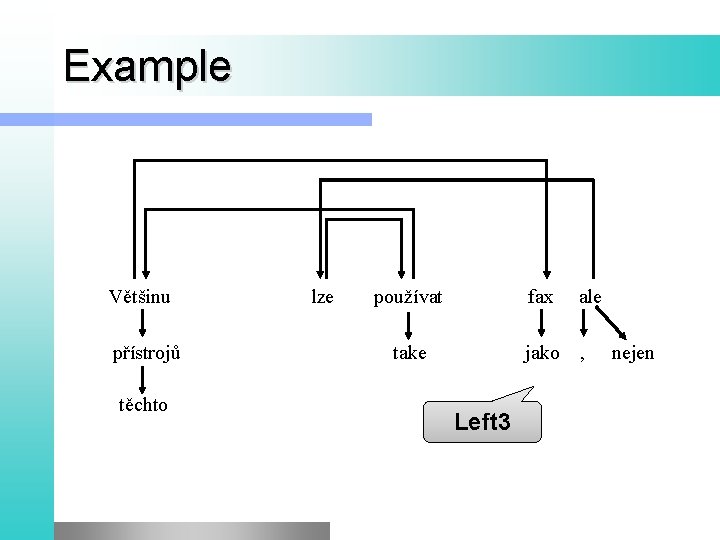

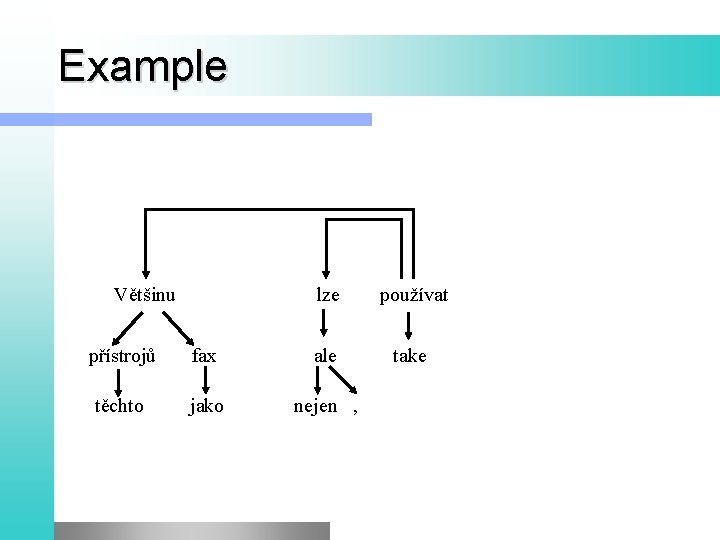

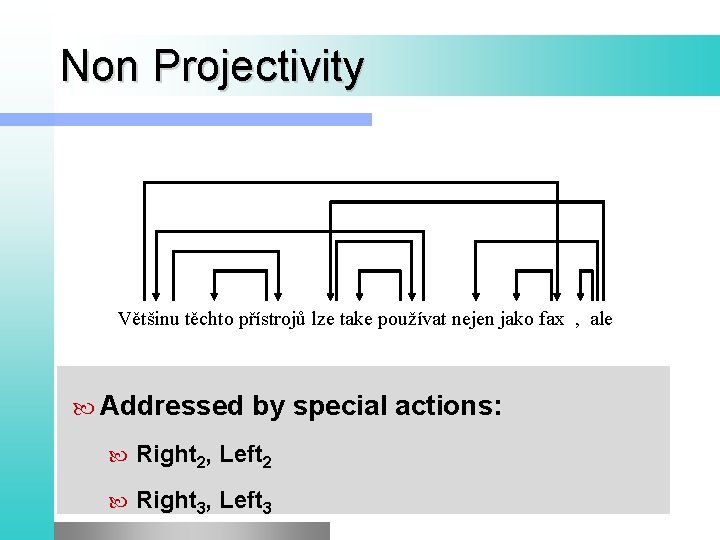

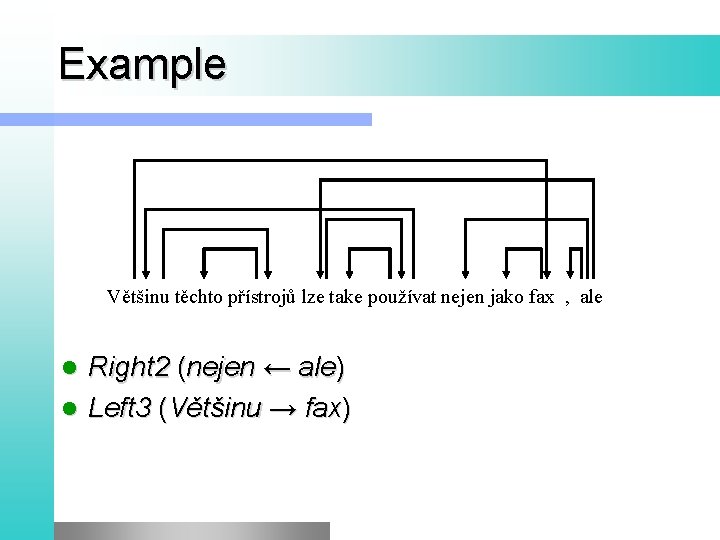

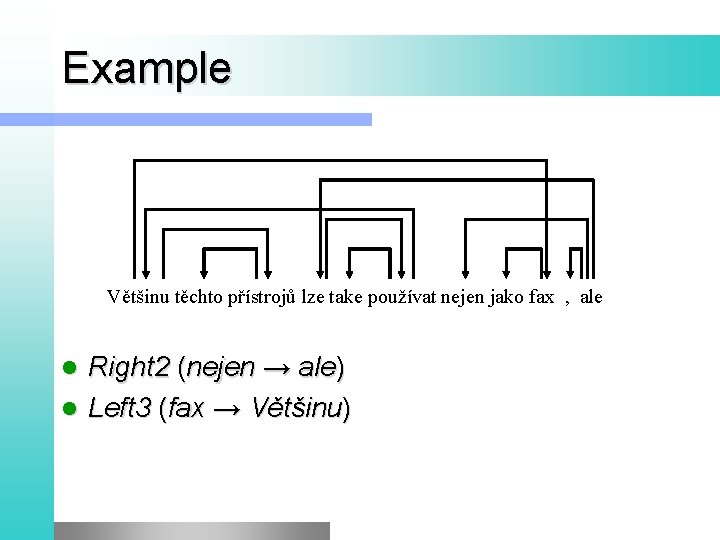

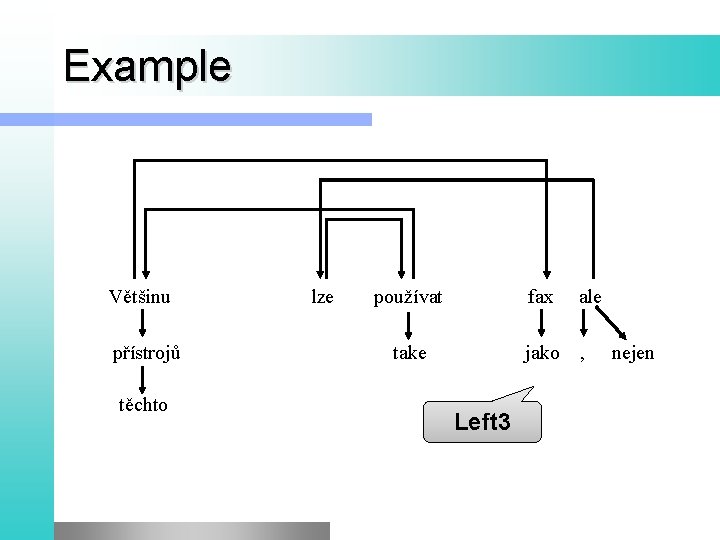

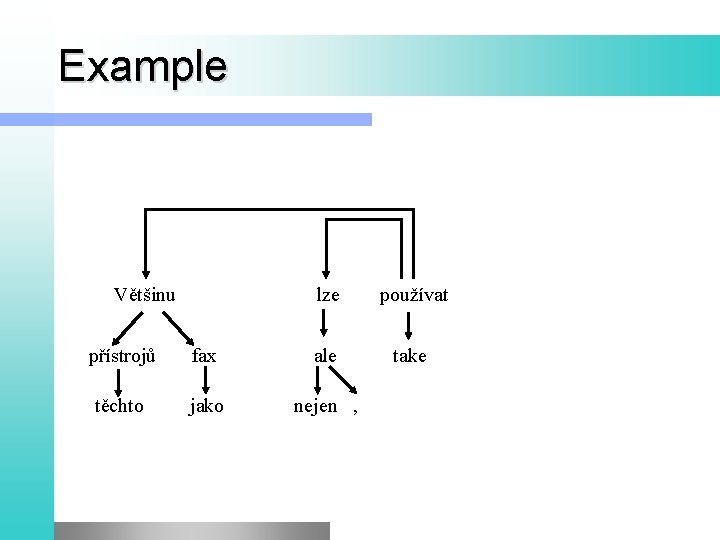

Non-Projectivity

Většinu těchto přístrojů lze take používat nejen jako fax , ale

Addressed by special actions:

- Right2, Left2
- Right3, Left3

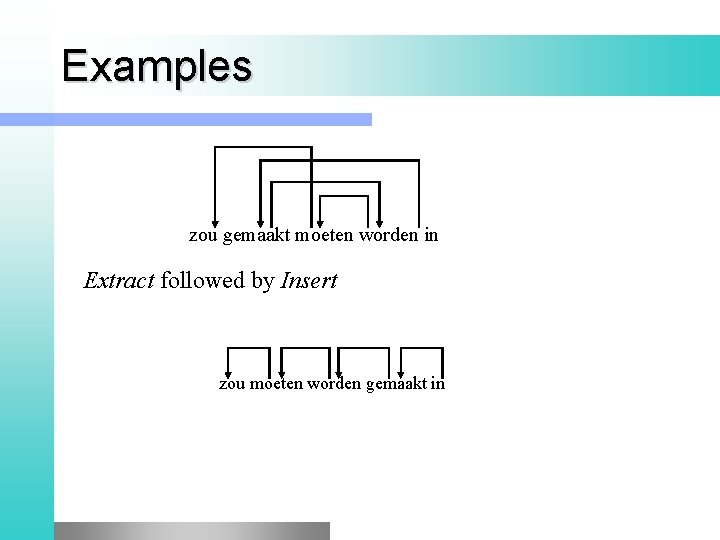

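Actions for non-projective arcs

- Right2: $\langle s_1|s_2|S, n|I, T, A \rangle \Rightarrow \langle s_1|S, n|I, T, A \cup \{(s_2, r, n)\} \rangle$
- Left2: $\langle s_1|s_2|S, n|I, T, A \rangle \Rightarrow \langle s_2|S, s_1|I, T, A \cup \{(n, r, s_2)\} \rangle$
- Right3: $\langle s_1|s_2|s_3|S, n|I, T, A \rangle \Rightarrow \langle s_1|s_2|S, n|I, T, A \cup \{(s_3, r, n)\} \rangle$
- Left3: $\langle s_1|s_2|s_3|S, n|I, T, A \rangle \Rightarrow \langle s_2|s_3|S, s_1|I, T, A \cup \{(n, r, s_3)\} \rangle$
- Extract: $\langle s_1|s_2|S, n|I, T, A \rangle \Rightarrow \langle n|s_1|S, I, s_2|T, A \rangle$
- Insert: $\langle S, I, s_1|T, A \rangle \Rightarrow \langle s_1|S, I, T, A \rangle$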

Example

Většinu těchto přístrojů lze take používat nejen jako fax , ale

- Right2 (nejen ← ale)
- Left3 (Většinu → fax)

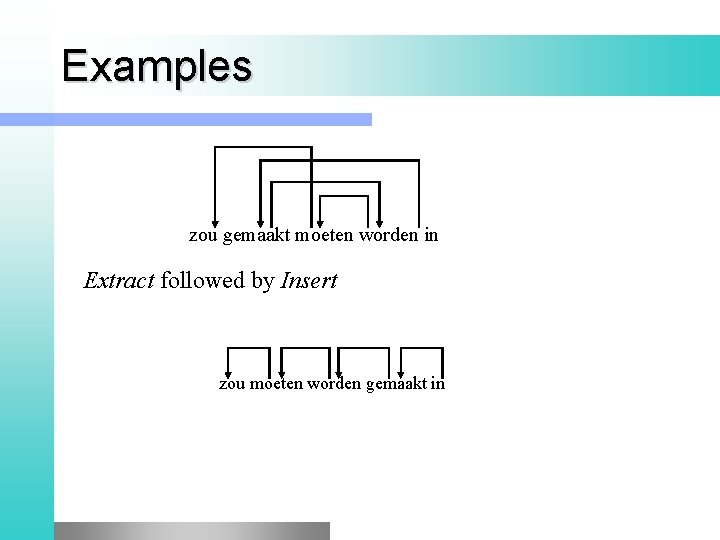

Examples

zou gemaakt moeten worden in

Extract followed by Insert

zou moeten worden gemaakt in

Example

Většinu těchto přístrojů lze take používat nejen jako fax , ale

- Right2 (nejen → ale)
- Left3 (fax → Většinu)

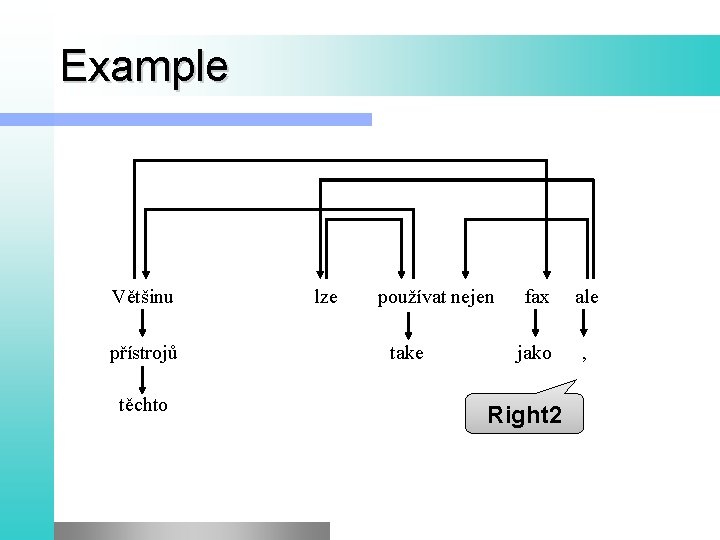

Example (tree figure): Right2

Example (tree figure): Left3

Example (tree figure)

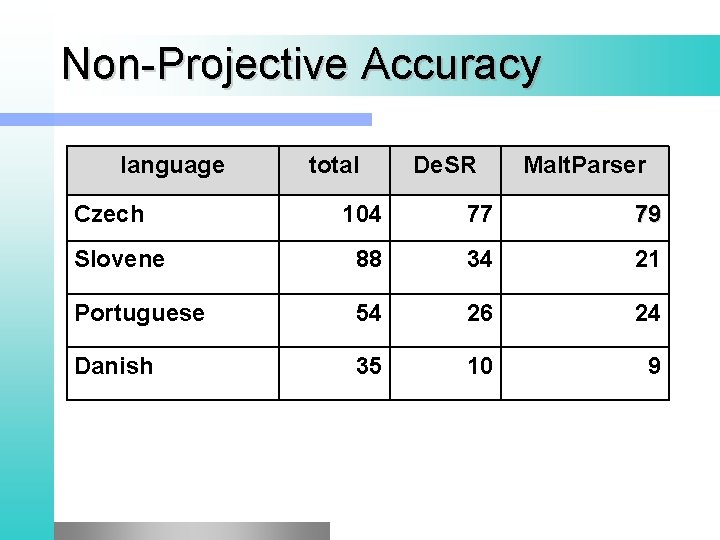

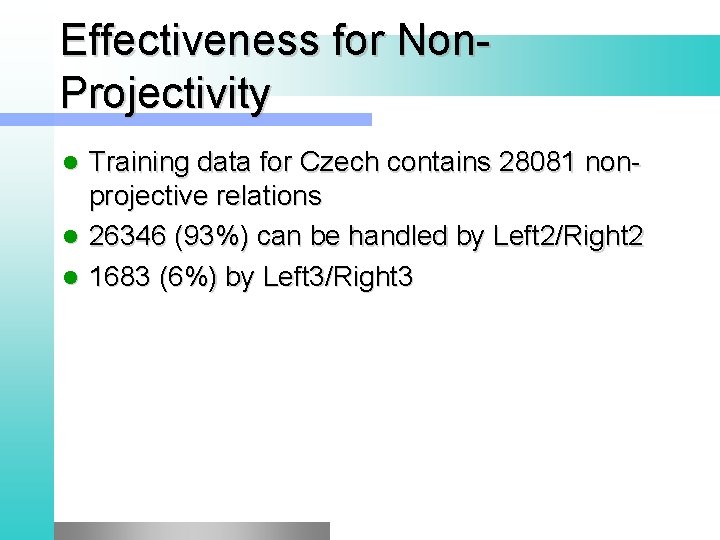

Effectiveness for Non-Projectivity

- Training data for Czech contains 28081 non-projective relations
- 26346 (93%) can be handled by Left2/Right2
- 1683 (6%) by Left3/Right3

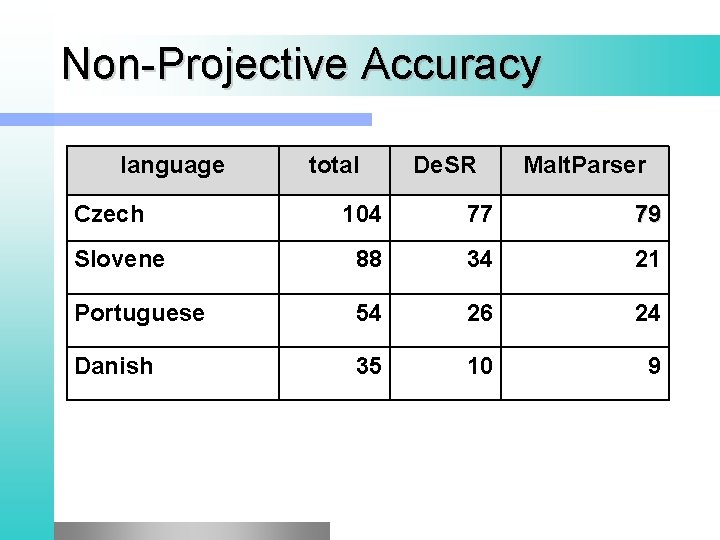

Non-Projective Accuracy

| Language | Total | DeSR | MaltParser |
|---|---|---|---|
| Czech | 104 | 77 | 79 |
| Slovene | 88 | 34 | 21 |
| Portuguese | 54 | 26 | 24 |
| Danish | 35 | 10 | 9 |

Learning Phase

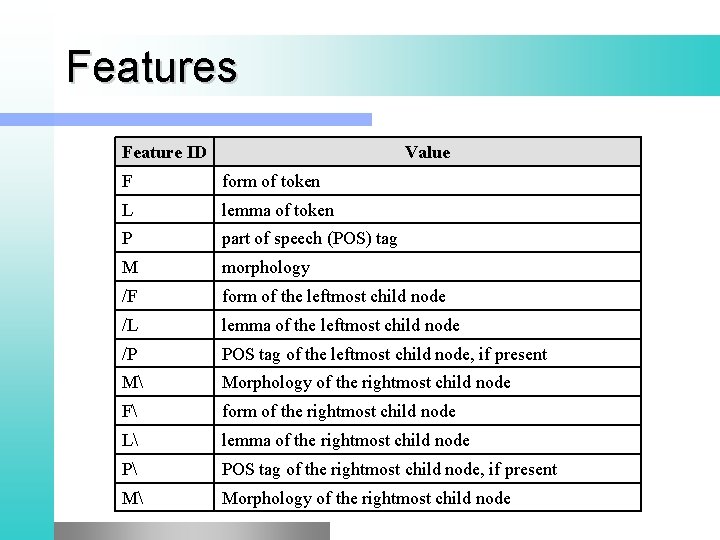

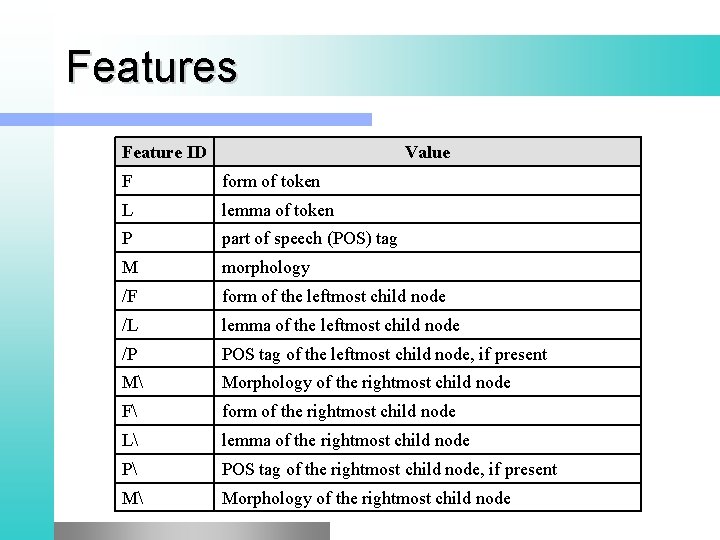

Features

| Feature ID | Value |
|---|---|
| F | form of token |
| L | lemma of token |
| P | part of speech (POS) tag |
| M | morphology |
| /F | form of the leftmost child node |
| /L | lemma of the leftmost child node |
| /P | POS tag of the leftmost child node, if present |
| /M | morphology of the leftmost child node |
| F\ | form of the rightmost child node |
| L\ | lemma of the rightmost child node |
| P\ | POS tag of the rightmost child node, if present |
| M\ | morphology of the rightmost child node |

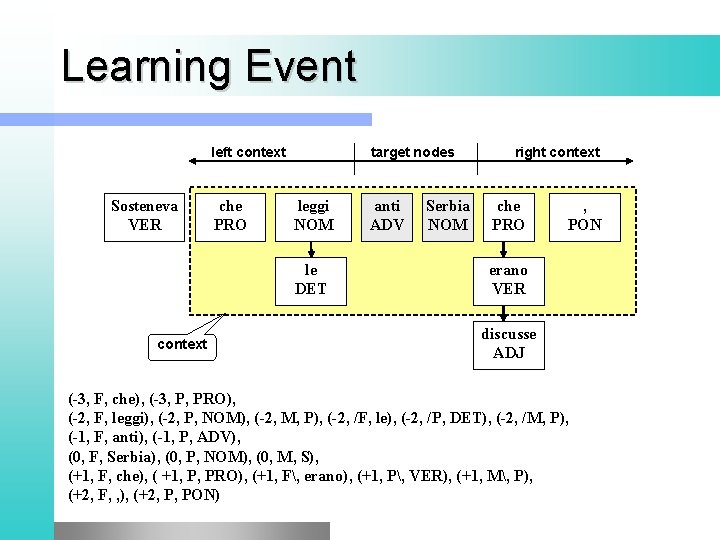

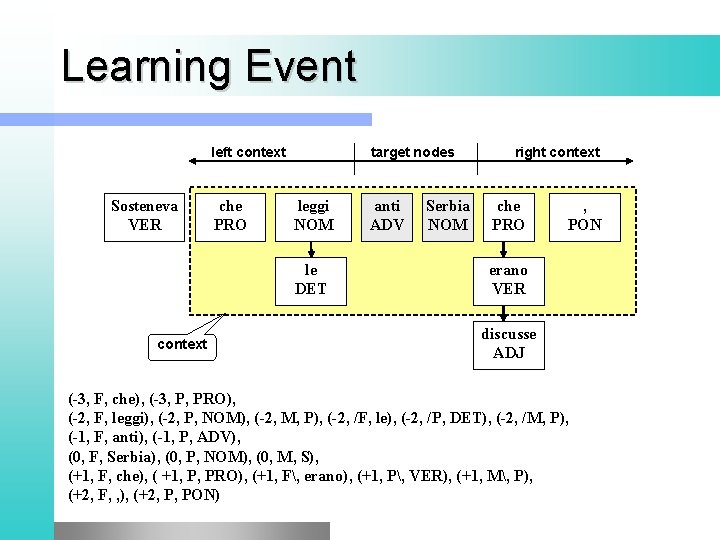

Learning Event

(Figure labels: left context, target nodes, context, right context; tokens: Sosteneva/VER che/PRO leggi/NOM le/DET anti/ADV Serbia/NOM che/PRO ,/PON erano/VER discusse/ADJ)

Extracted features:

(-3, F, che), (-3, P, PRO), (-2, F, leggi), (-2, P, NOM), (-2, M, P), (-2, /F, le), (-2, /P, DET), (-2, /M, P), (-1, F, anti), (-1, P, ADV), (0, F, Serbia), (0, P, NOM), (0, M, S), (+1, F, che), (+1, P, PRO), (+1, F, erano), (+1, P, VER), (+1, M, P), (+2, F, ,), (+2, P, PON)
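A learning event is a bag of (position, feature ID, value) triples collected around the target token. The sketch below shows one way such triples could be produced; it simplifies the parser's notion of position to a plain offset in a token list and ignores child-node features, so it is an illustration under those assumptions rather than the feature extractor actually used. The function name `extract_features` is hypothetical.

```python
def extract_features(tokens, target, offsets=(-1, 0, 1), feature_ids=("F", "P", "M")):
    """Collect (offset, feature ID, value) triples around tokens[target].

    tokens: list of dicts keyed by feature ID (F = form, P = POS, M = morphology).
    Features missing from a token are skipped.
    """
    features = []
    for offset in offsets:
        position = target + offset
        if 0 <= position < len(tokens):
            for fid in feature_ids:
                value = tokens[position].get(fid)
                if value is not None:
                    features.append((offset, fid, value))
    return features


tokens = [
    {"F": "anti", "P": "ADV"},
    {"F": "Serbia", "P": "NOM", "M": "S"},
    {"F": "che", "P": "PRO"},
]
for triple in extract_features(tokens, target=1):
    print(triple)
```

With the three tokens above, the result contains the triples for `anti`, `Serbia` and `che` in that order, for example `(0, 'M', 'S')` for the target token.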

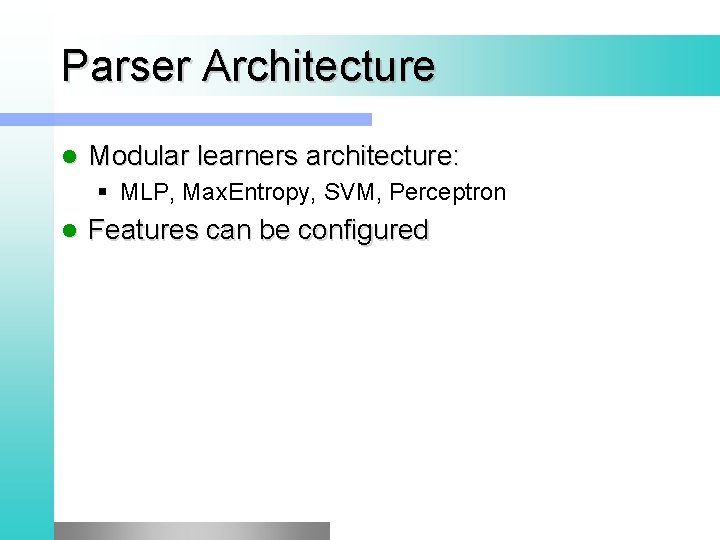

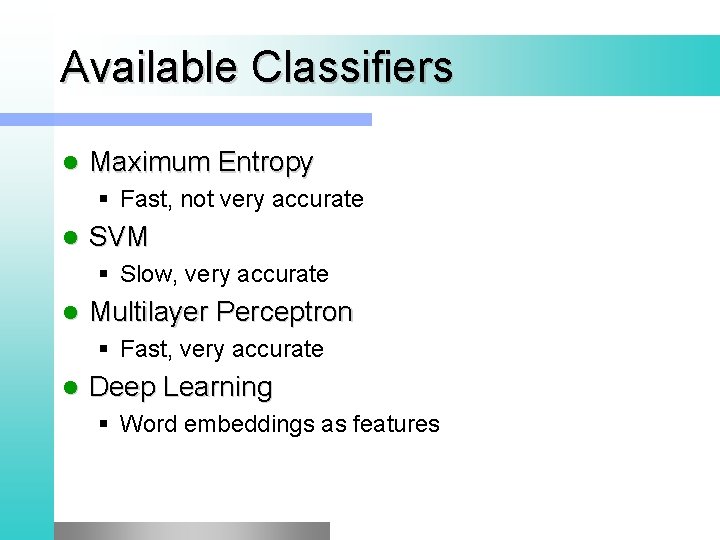

DeSR (Dependency Shift Reduce)

- Multilanguage statistical transition-based dependency parser
- Linear algorithm
- Capable of handling non-projectivity
- Trained on 28 languages
- Available from: http://desr.sourceforge.net/

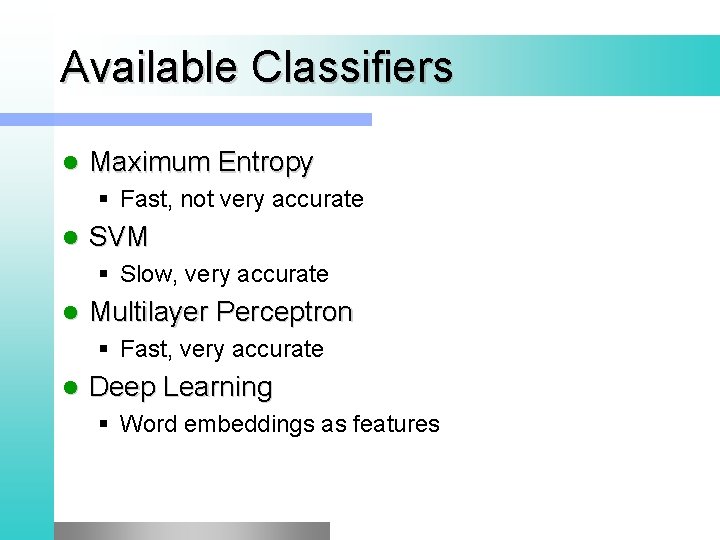

Parser Architecture

- Modular learners architecture:
  - MLP, MaxEntropy, SVM, Perceptron
- Features can be configured

Available Classifiers

- Maximum Entropy
  - Fast, not very accurate
- SVM
  - Slow, very accurate
- Multilayer Perceptron
  - Fast, very accurate
- Deep Learning
  - Word embeddings as features

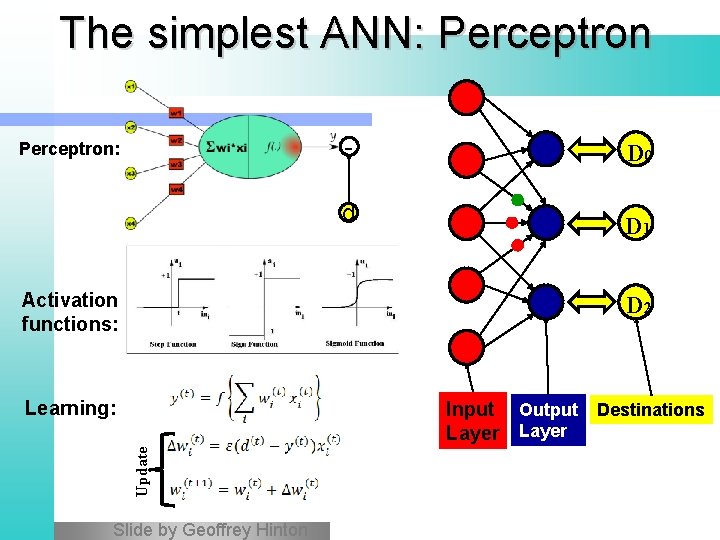

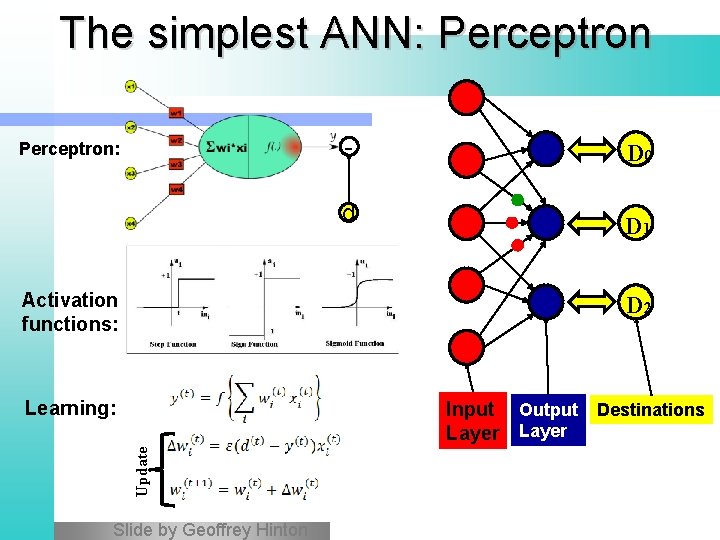

The simplest ANN: Perceptron

(Figure: input layer and output layer with destinations D0, D1, D2; the perceptron definition, activation functions and learning update appear as formulas on the original slide)

Slide by Geoffrey Hinton

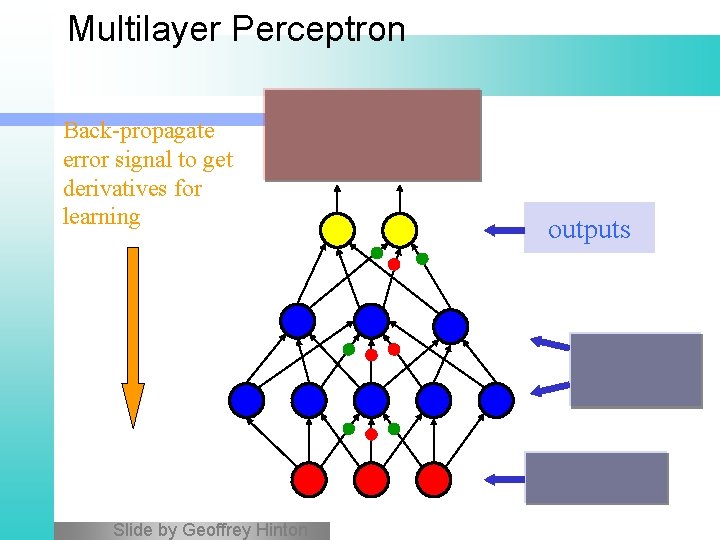

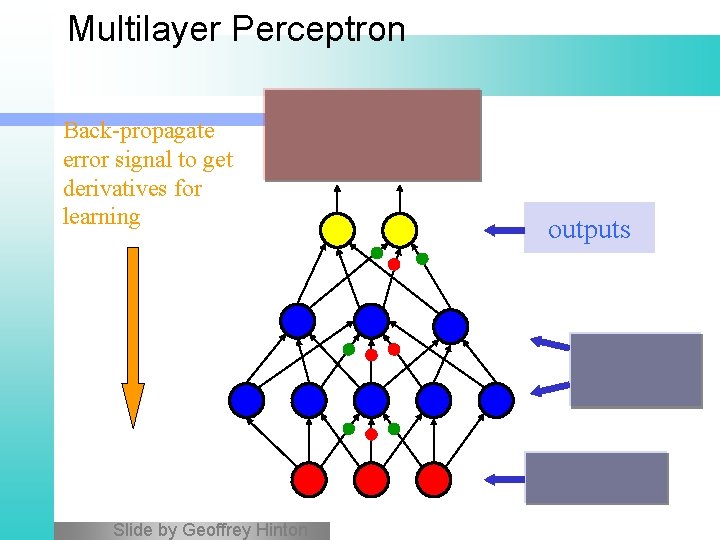

Multilayer Perceptron

- Compare outputs with correct answer to get error signal
- Back-propagate error signal to get derivatives for learning

(Figure: input vector, hidden layers, outputs)

Slide by Geoffrey Hinton

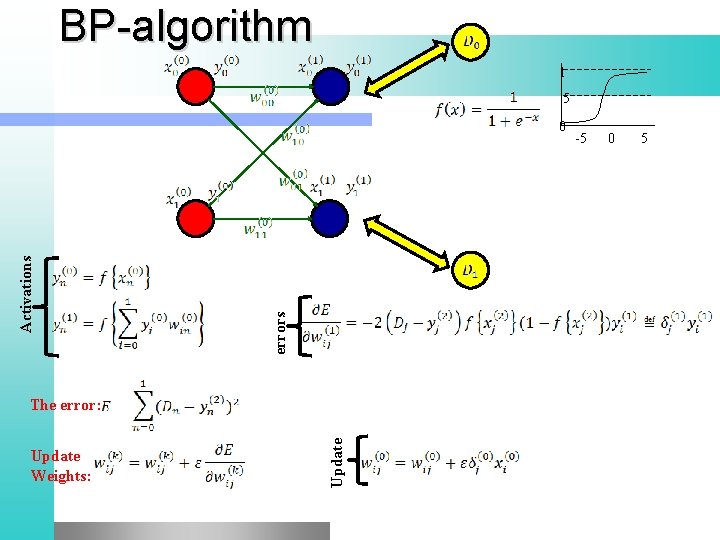

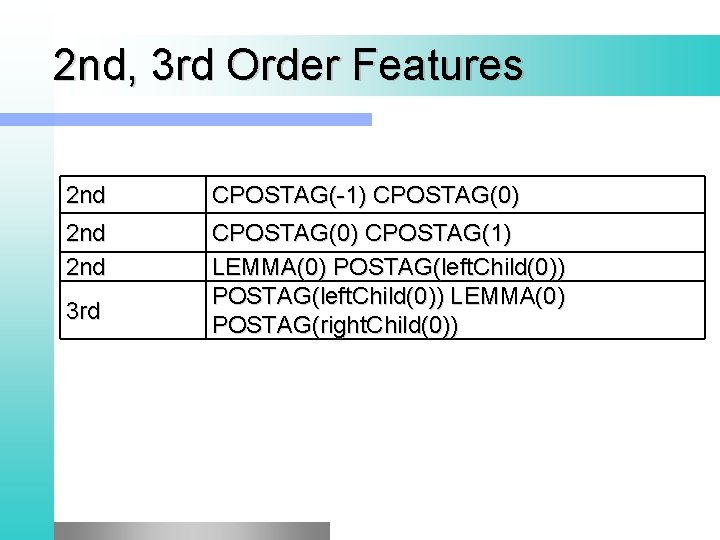

BP-algorithm

(Figure: plot of activations and errors, horizontal axis from -5 to 5; the weight update and error formulas appear on the original slide)

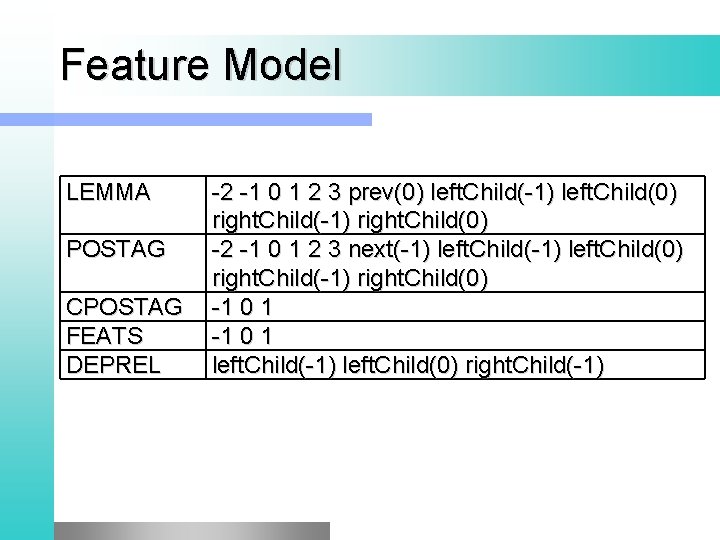

Feature Model

Features: LEMMA, POSTAG, CPOSTAG, FEATS, DEPREL

Token positions listed on the slide:

- -2 -1 0 1 2 3 prev(0) leftChild(-1) leftChild(0) rightChild(-1) rightChild(0)
- -2 -1 0 1 2 3 next(-1) leftChild(0) rightChild(-1) rightChild(0)
- -1 0 1 leftChild(-1) leftChild(0) rightChild(-1)

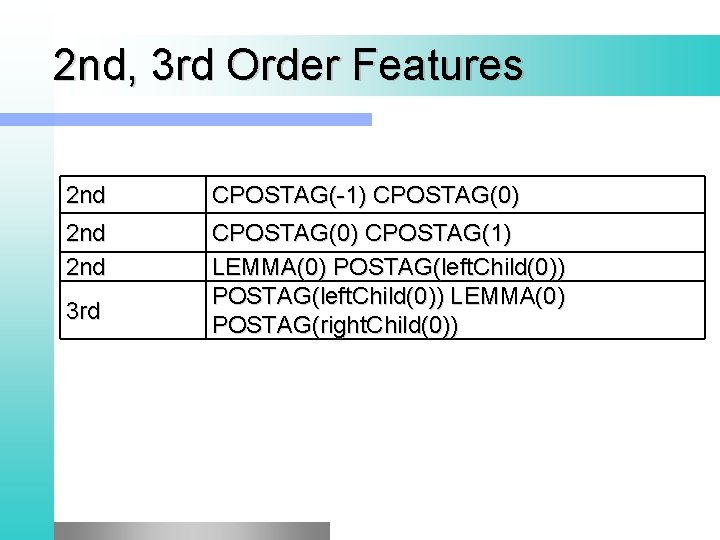

2nd, 3rd Order Features

- 2nd: CPOSTAG(-1) CPOSTAG(0)
- 2nd: CPOSTAG(0) CPOSTAG(1)
- LEMMA(0) POSTAG(leftChild(0))
- LEMMA(0) POSTAG(rightChild(0))
- 3rd

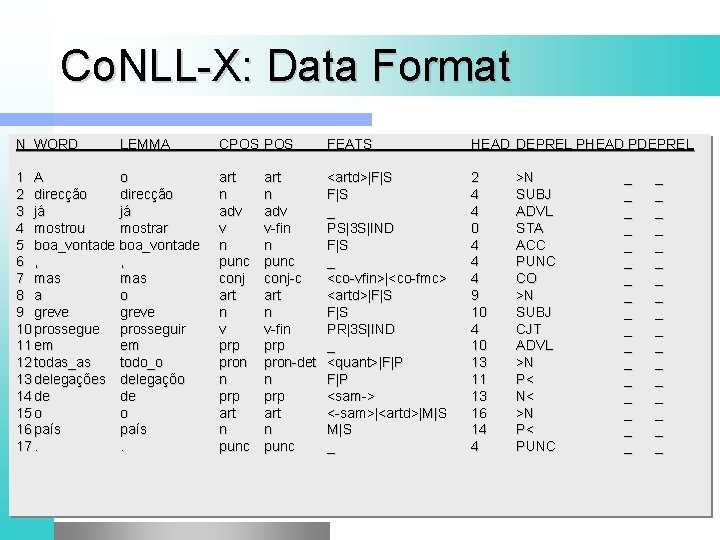

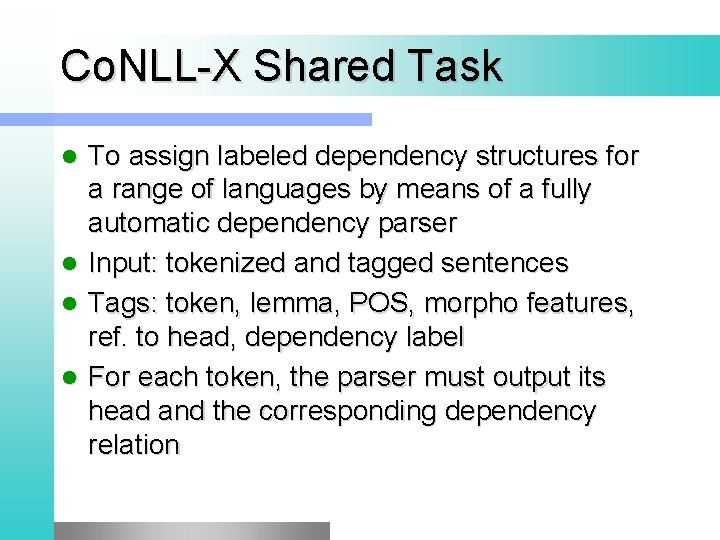

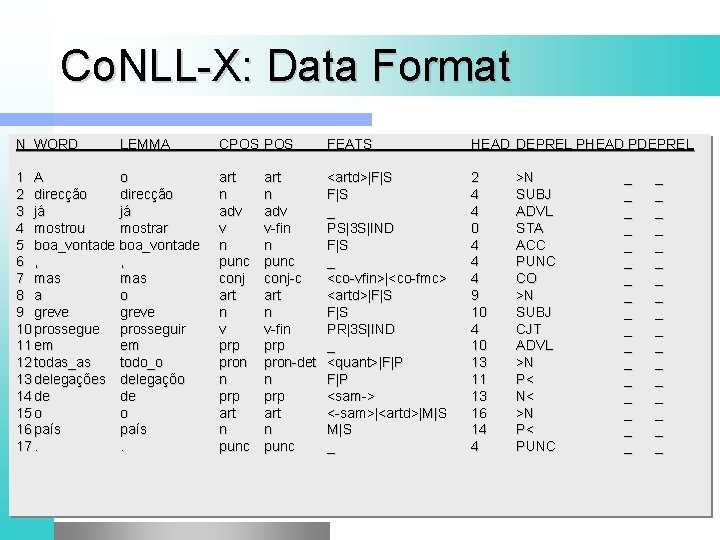

CoNLL-X Shared Task

- To assign labeled dependency structures for a range of languages by means of a fully automatic dependency parser
- Input: tokenized and tagged sentences
- Tags: token, lemma, POS, morpho features, ref. to head, dependency label
- For each token, the parser must output its head and the corresponding dependency relation

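CoNLL-X: Data Format

| N | WORD | LEMMA | CPOS | POS | FEATS | HEAD | DEPREL | PHEAD | PDEPREL |
|---|---|---|---|---|---|---|---|---|---|
| 1 | A | o | art | art | `<artd>\|F\|S` | 2 | `>N` | _ | _ |
| 2 | direcção | | n | n | | 4 | SUBJ | _ | _ |
| 3 | já | já | adv | adv | _ | 4 | ADVL | _ | _ |
| 4 | mostrou | mostrar | v | v-fin | `PS\|3S\|IND` | 0 | STA | _ | _ |
| 5 | boa_vontade | | n | n | `F\|S` | 4 | ACC | _ | _ |
| 6 | , | , | punc | punc | _ | 4 | PUNC | _ | _ |
| 7 | mas | | conj | conj-c | `<co-vfin>\|<co-fmc>` | 4 | CO | _ | _ |
| 8 | a | o | art | art | `<artd>\|F\|S` | 9 | `>N` | _ | _ |
| 9 | greve | | n | n | | 10 | SUBJ | _ | _ |
| 10 | prossegue | prosseguir | v | v-fin | `PR\|3S\|IND` | 4 | CJT | _ | _ |
| 11 | em | em | prp | prp | _ | 10 | ADVL | _ | _ |
| 12 | todas_as | todo_o | pron | pron-det | `<quant>\|F\|P` | 13 | `>N` | _ | _ |
| 13 | delegações | delegaçõo | n | n | | 11 | `P<` | _ | _ |
| 14 | de | de | prp | prp | `<sam->` | 13 | `N<` | _ | _ |
| 15 | o | o | art | art | `<-sam>\|<artd>\|M\|S` | 16 | `>N` | _ | _ |
| 16 | país | | n | n | | 14 | `P<` | _ | _ |
| 17 | . | . | punc | punc | _ | 4 | PUNC | _ | _ |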
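Files in this format are plain text with one token per line, ten tab-separated columns, and a blank line between sentences, so a reader takes only a few lines. The sketch below uses the column names from the slide as dictionary keys; the function name `parse_conllx` is hypothetical, and the sample consists of rows 3 and 4 of the example above.

```python
FIELDS = ["N", "WORD", "LEMMA", "CPOS", "POS",
          "FEATS", "HEAD", "DEPREL", "PHEAD", "PDEPREL"]


def parse_conllx(text):
    """Split CoNLL-X text into sentences, each a list of token dictionaries."""
    sentences, current = [], []
    for line in text.splitlines():
        line = line.strip()
        if not line:                      # blank line closes a sentence
            if current:
                sentences.append(current)
                current = []
            continue
        token = dict(zip(FIELDS, line.split("\t")))
        token["N"] = int(token["N"])
        token["HEAD"] = int(token["HEAD"])  # 0 marks the root
        current.append(token)
    if current:
        sentences.append(current)
    return sentences


sample = (
    "3\tjá\tjá\tadv\tadv\t_\t4\tADVL\t_\t_\n"
    "4\tmostrou\tmostrar\tv\tv-fin\tPS|3S|IND\t0\tSTA\t_\t_\n"
)
sentences = parse_conllx(sample)
for token in sentences[0]:
    print(token["N"], token["WORD"], token["HEAD"], token["DEPREL"])
```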

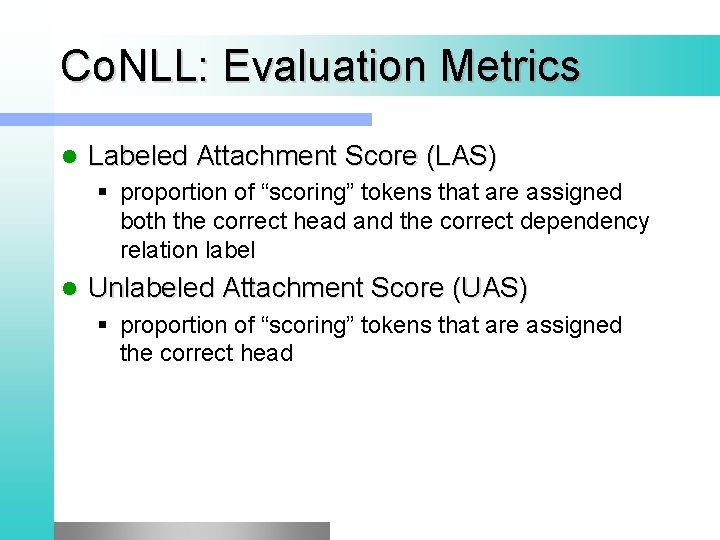

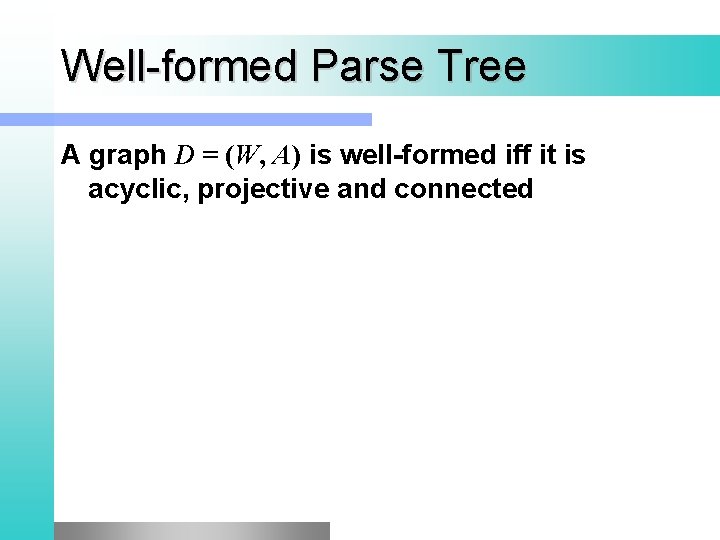

CoNLL: Evaluation Metrics

- Labeled Attachment Score (LAS)
  - proportion of “scoring” tokens that are assigned both the correct head and the correct dependency relation label
- Unlabeled Attachment Score (UAS)
  - proportion of “scoring” tokens that are assigned the correct head
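Both scores are simple proportions over the scoring tokens. A minimal sketch, assuming that gold and predicted analyses are given as parallel lists of (head, label) pairs and that non-scoring tokens have already been filtered out; the function name and the toy labels are hypothetical.

```python
def attachment_scores(gold, predicted):
    """Return (LAS, UAS) for parallel lists of (head, deprel) pairs."""
    if not gold or len(gold) != len(predicted):
        raise ValueError("gold and predicted must be non-empty and of equal length")
    total = len(gold)
    uas_hits = sum(1 for (gh, _), (ph, _) in zip(gold, predicted) if gh == ph)
    las_hits = sum(1 for g, p in zip(gold, predicted) if g == p)
    return las_hits / total, uas_hits / total


gold = [(2, "SUBJ"), (0, "ROOT"), (4, "DET"), (2, "OBJ")]
predicted = [(2, "SUBJ"), (0, "ROOT"), (4, "MOD"), (3, "OBJ")]

las, uas = attachment_scores(gold, predicted)
print(las, uas)  # 0.5 0.75
```

The third token has the right head but the wrong label, so it counts for UAS only; the fourth has the wrong head and counts for neither.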

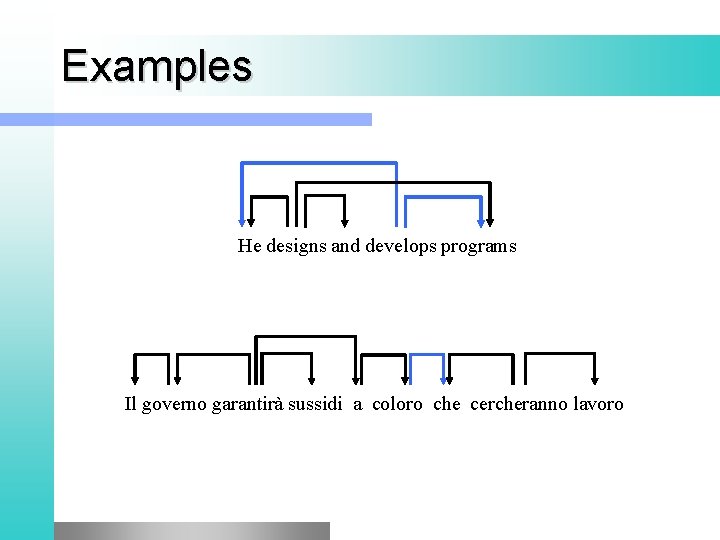

Well-formed Parse Tree A graph D = (W, A) is well-formed iff it is acyclic, projective and connected

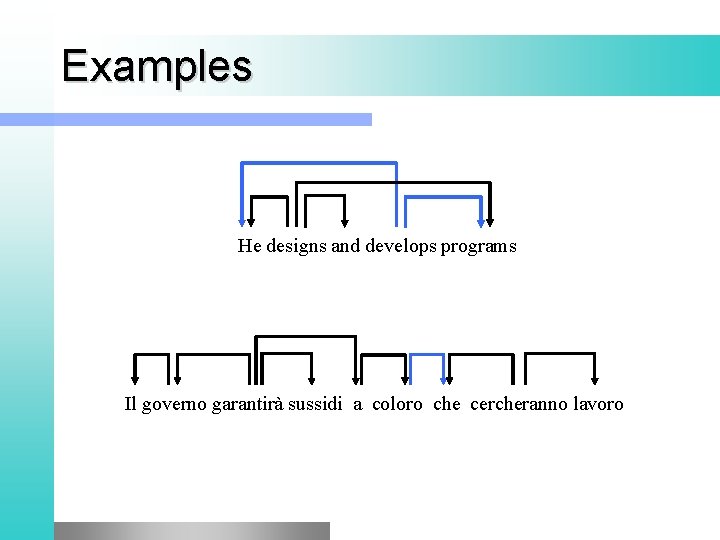

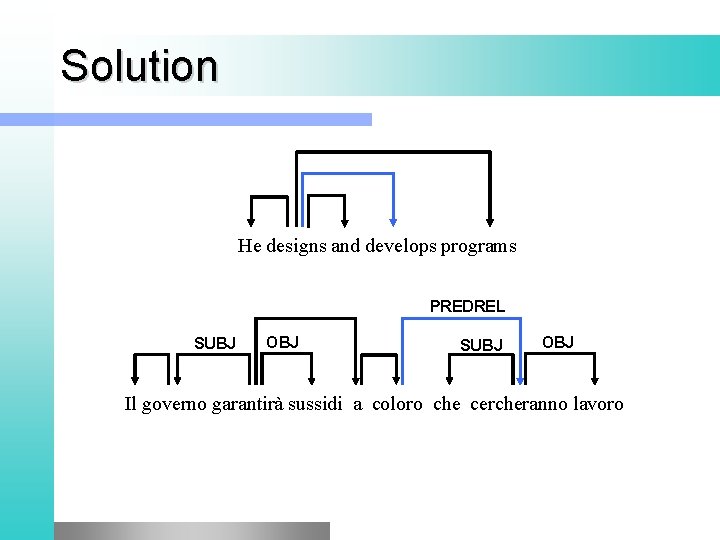

Examples He designs and develops programs Il governo garantirà sussidi a coloro che cercheranno lavoro

Solution

He designs and develops programs

Il governo garantirà sussidi a coloro che cercheranno lavoro

(Figure arc labels: PREDREL, SUBJ, OBJ)

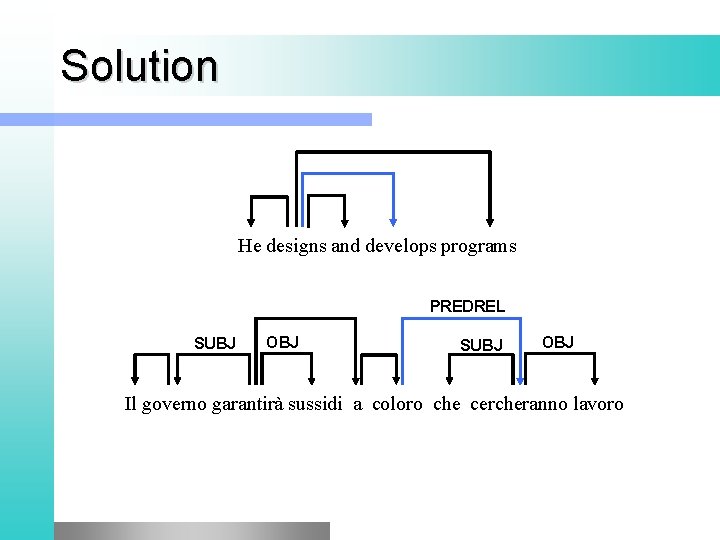

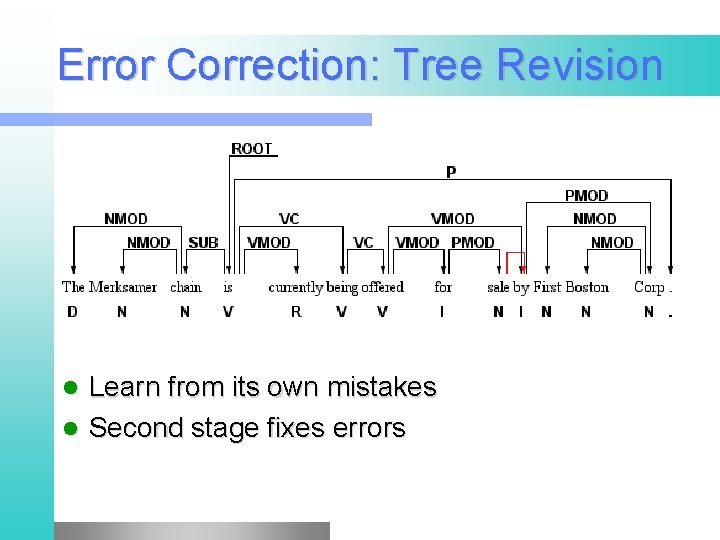

Error Correction: Tree Revision

- Learn from its own mistakes
- Second stage fixes errors

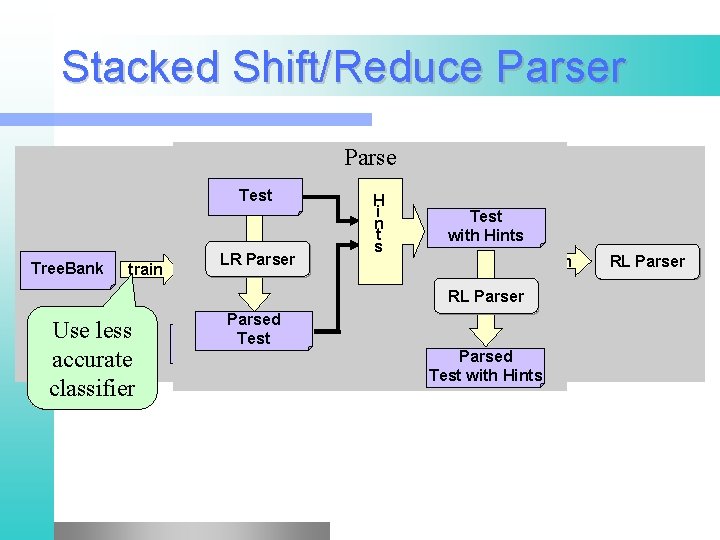

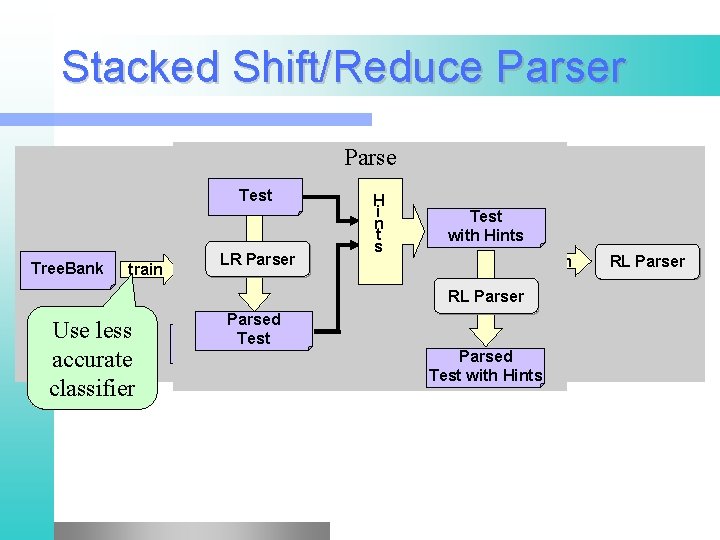

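Stacked Shift/Reduce Parser

(Diagram: an LR parser is trained on the Train TreeBank using a less accurate classifier and parses both Train and Test, producing a Parsed Test TreeBank; its output supplies hints; an RL parser is trained on the TreeBank with hints and parses the Test with hints, producing the Parsed Test with hints)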

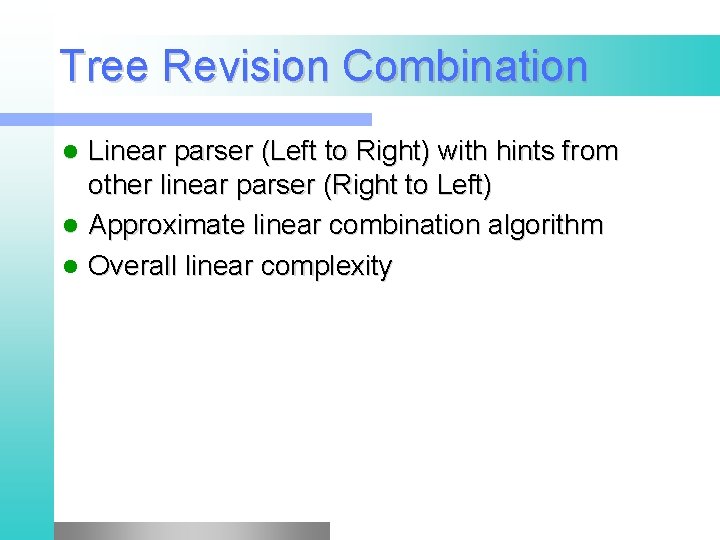

Tree Revision Combination

- Linear parser (Left to Right) with hints from other linear parser (Right to Left)
- Approximate linear combination algorithm
- Overall linear complexity

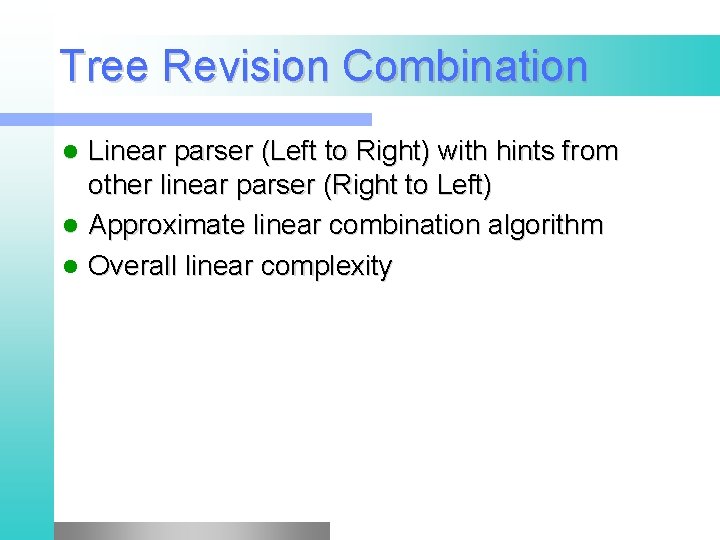

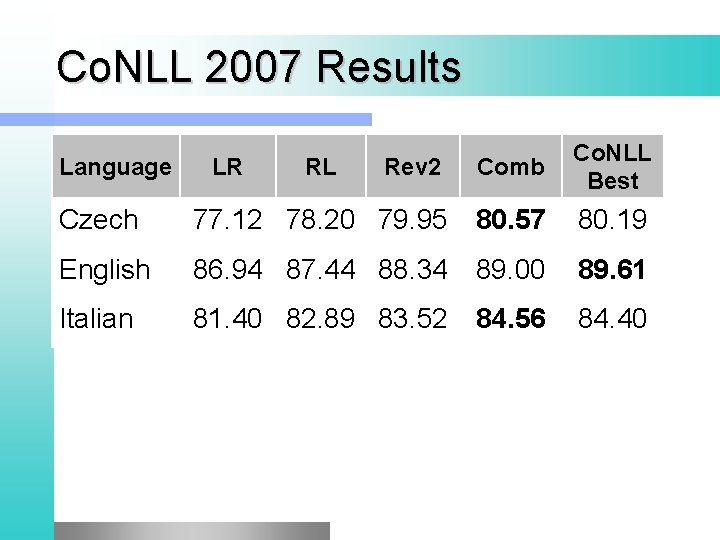

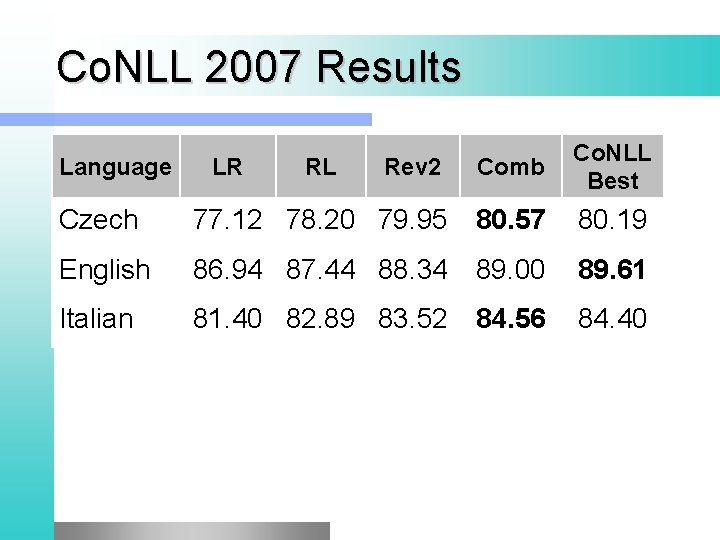

CoNLL 2007 Results

| Language | LR | RL | Rev2 | Comb | CoNLL Best |
|---|---|---|---|---|---|
| Czech | 77.12 | 78.20 | 79.95 | 80.57 | 80.19 |
| English | 86.94 | 87.44 | 88.34 | 89.00 | 89.61 |
| Italian | 81.40 | 82.89 | 83.52 | 84.56 | 84.40 |

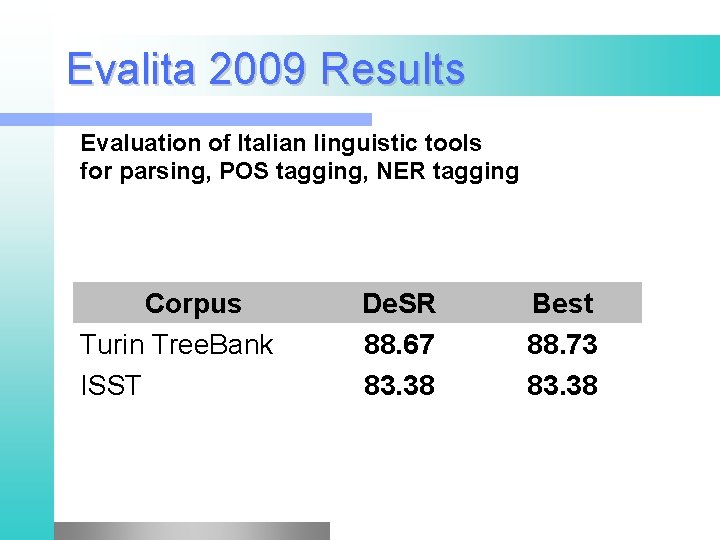

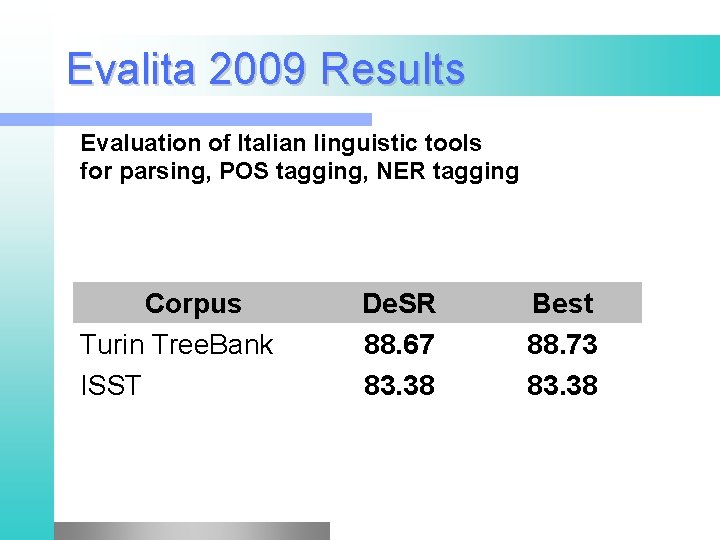

Evalita 2009 Results

Evaluation of Italian linguistic tools for parsing, POS tagging, NER tagging

| Corpus | DeSR | Best |
|---|---|---|
| Turin TreeBank | 88.67 | 88.73 |
| ISST | 83.38 | 83.38 |

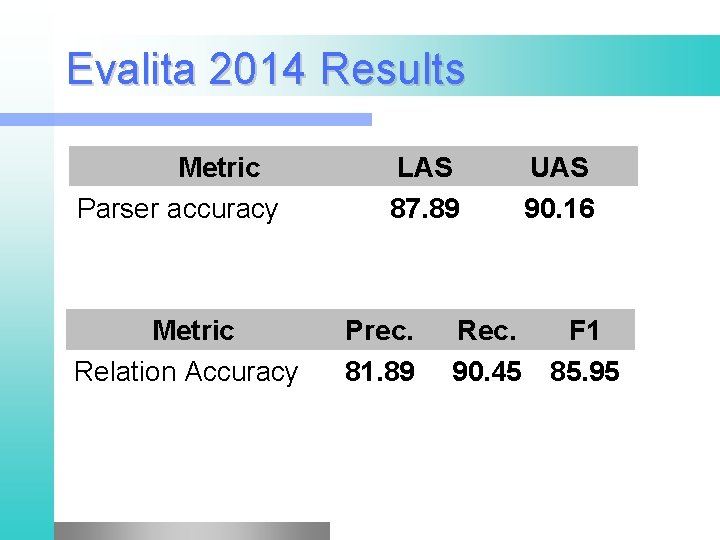

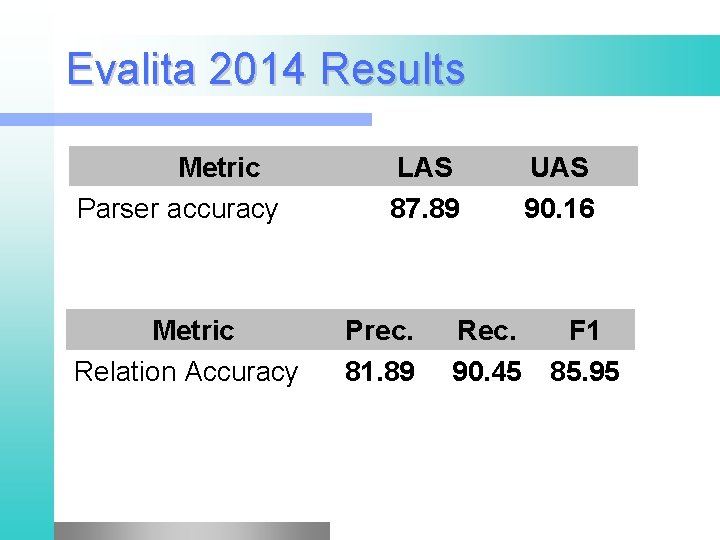

Evalita 2014 Results

| Metric | Parser accuracy |
|---|---|
| LAS | 87.89 |
| UAS | 90.16 |

| Metric | Relation accuracy |
|---|---|
| Prec. | 81.89 |
| Rec. | 90.45 |
| F1 | 85.95 |

Dependencies encode relational structure Relation Extraction with Stanford Dependencies

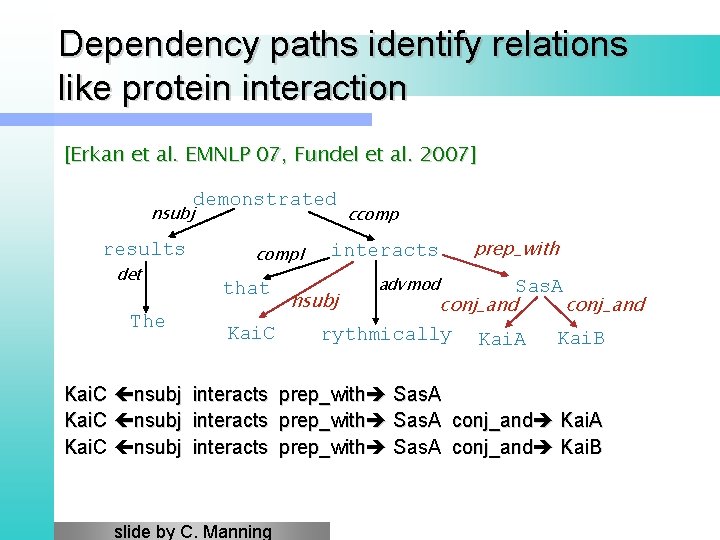

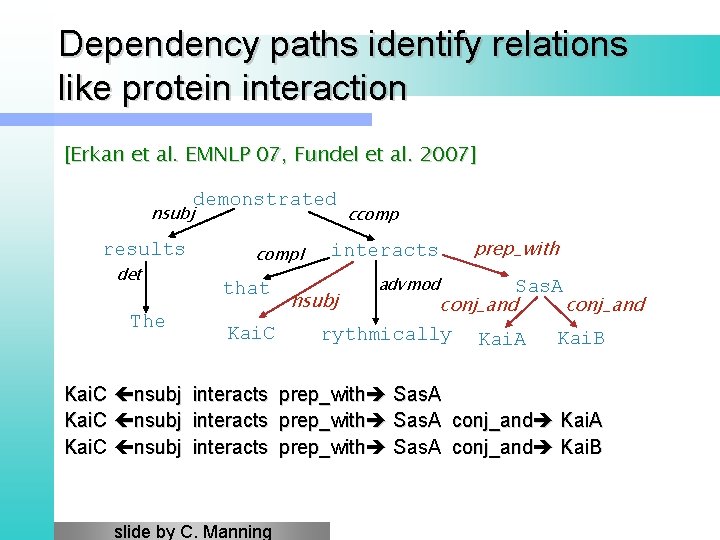

Dependency paths identify relations like protein interaction [Erkan et al. EMNLP 07, Fundel et al. 2007]

(Figure: dependency graph over the words The, results, demonstrated, that, KaiC, interacts, rhythmically, SasA, KaiA, KaiB, with arcs nsubj, det, compl, ccomp, advmod, prep_with, conj_and)

Extracted paths:

- KaiC nsubj interacts prep_with SasA
- KaiC nsubj interacts prep_with SasA conj_and KaiA
- KaiC nsubj interacts prep_with SasA conj_and KaiB

slide by C. Manning


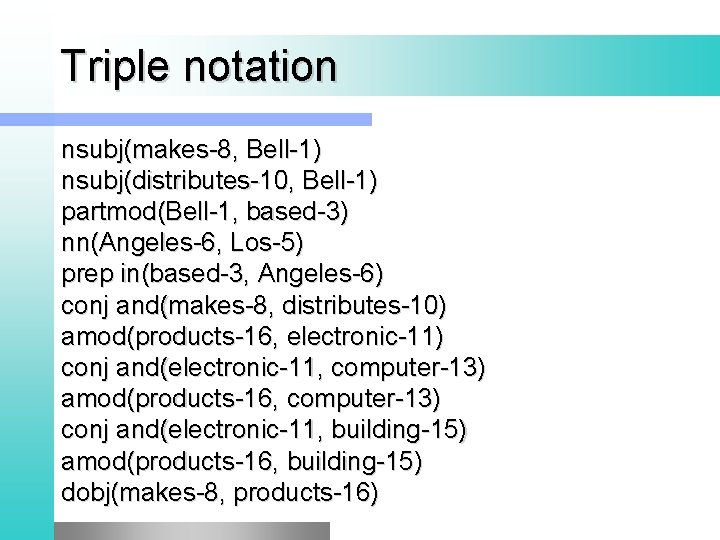

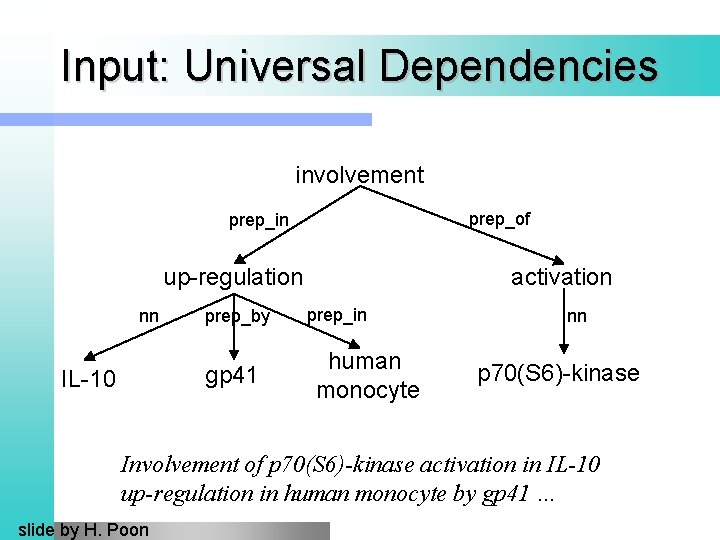

Universal Dependencies [de Marneffe et al. LREC 2006]

- The basic dependency representation is projective
- It can be generated by postprocessing headed phrase structure parses (Penn Treebank syntax)
- It can also be generated directly by dependency parsers

(Figure: dependency tree rooted at "jumped" over the words the, little, boy, jumped, over, the, fence, with arcs nsubj, det, amod, prep, pobj, det)

slide by C. Manning

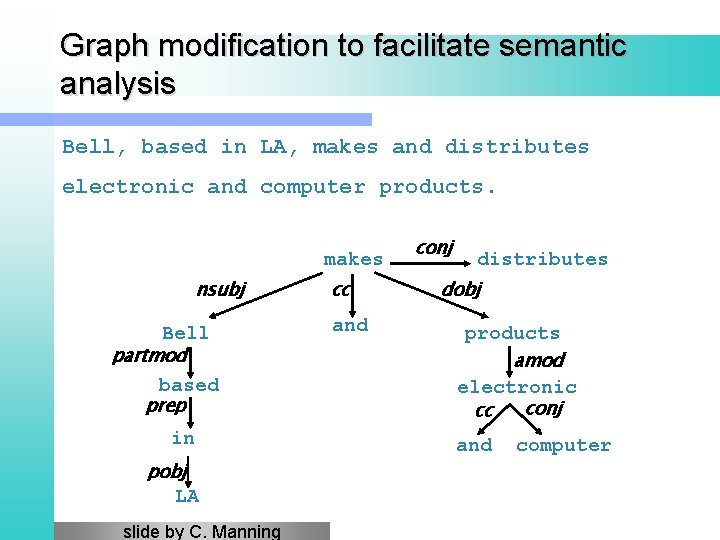

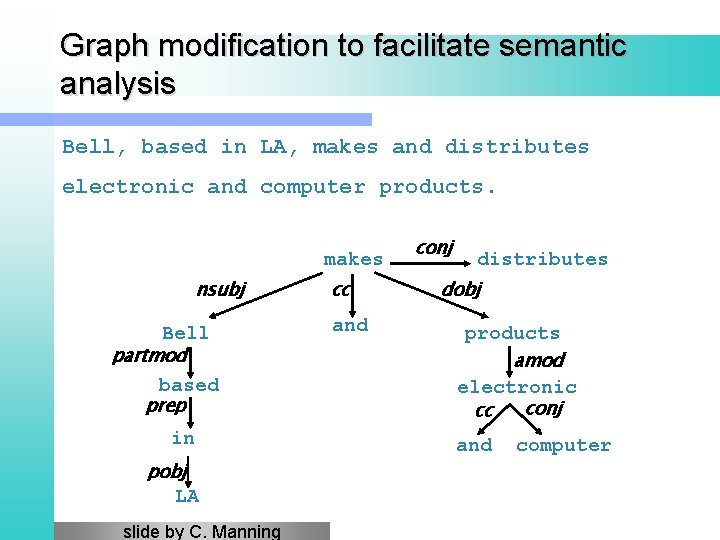

Graph modification to facilitate semantic analysis

Bell, based in LA, makes and distributes electronic and computer products.

(Figure: basic dependency graph rooted at "makes", with arcs nsubj, partmod, prep, pobj, cc, conj, dobj, amod)

slide by C. Manning

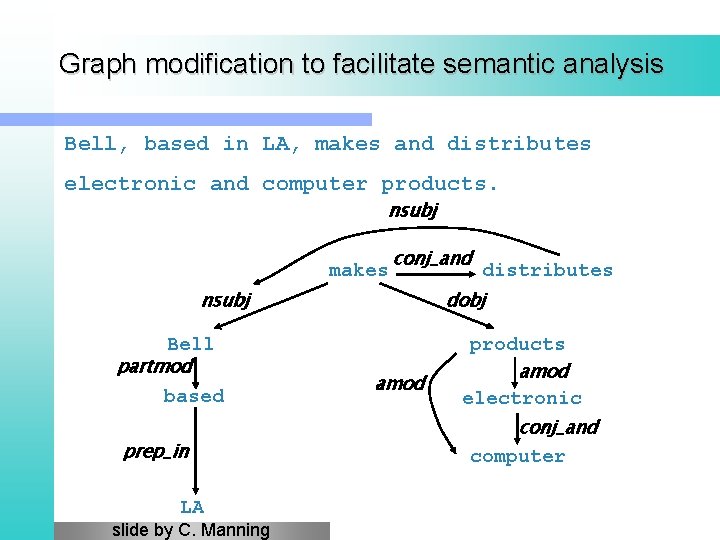

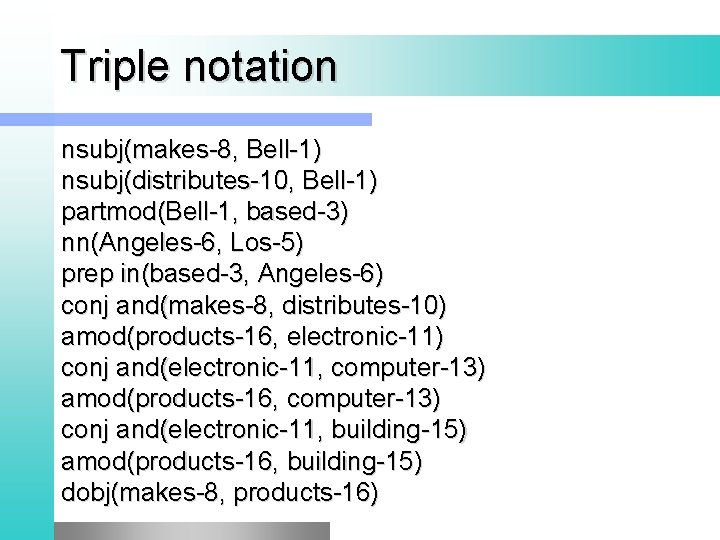

Triple notation

- nsubj(makes-8, Bell-1)
- nsubj(distributes-10, Bell-1)
- partmod(Bell-1, based-3)
- nn(Angeles-6, Los-5)
- prep_in(based-3, Angeles-6)
- conj_and(makes-8, distributes-10)
- amod(products-16, electronic-11)
- conj_and(electronic-11, computer-13)
- amod(products-16, computer-13)
- conj_and(electronic-11, building-15)
- amod(products-16, building-15)
- dobj(makes-8, products-16)
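Triples in this notation are easy to read back into structured form with a regular expression. The sketch below is one possible reader, not a tool from the Stanford distribution; the pattern and the function name `parse_triple` are assumptions made for the example.

```python
import re

# relation(governor-index, dependent-index)
TRIPLE = re.compile(r"^(\w+)\((.+)-(\d+),\s*(.+)-(\d+)\)$")


def parse_triple(text):
    """Parse 'rel(gov-i, dep-j)' into (rel, (gov, i), (dep, j))."""
    match = TRIPLE.match(text.strip())
    if match is None:
        raise ValueError("not a dependency triple: %r" % (text,))
    rel, gov, gov_index, dep, dep_index = match.groups()
    return rel, (gov, int(gov_index)), (dep, int(dep_index))


print(parse_triple("nsubj(makes-8, Bell-1)"))
# ('nsubj', ('makes', 8), ('Bell', 1))
print(parse_triple("prep_in(based-3, Angeles-6)"))
# ('prep_in', ('based', 3), ('Angeles', 6))
```

Keeping the token index next to each word distinguishes repeated words in a sentence, which is why the notation carries it.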

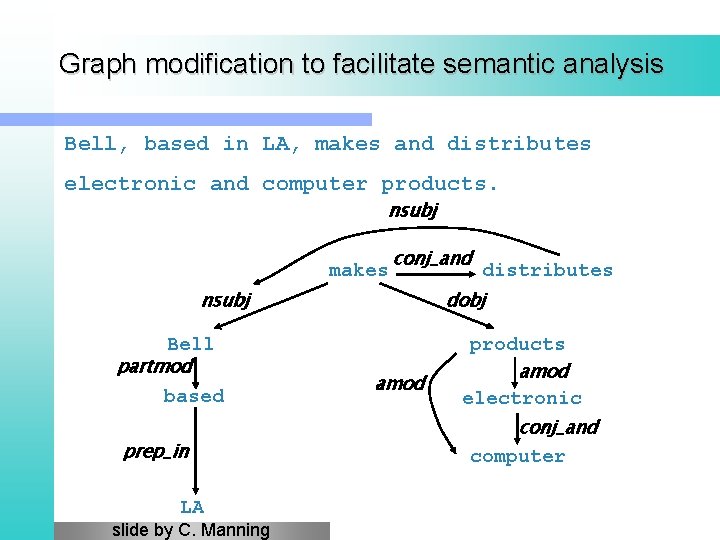

Graph modification to facilitate semantic analysis

Bell, based in LA, makes and distributes electronic and computer products.

(Figure: collapsed dependency graph with arcs nsubj, conj_and, partmod, prep_in, dobj, amod; Bell is nsubj of both makes and distributes)

slide by C. Manning


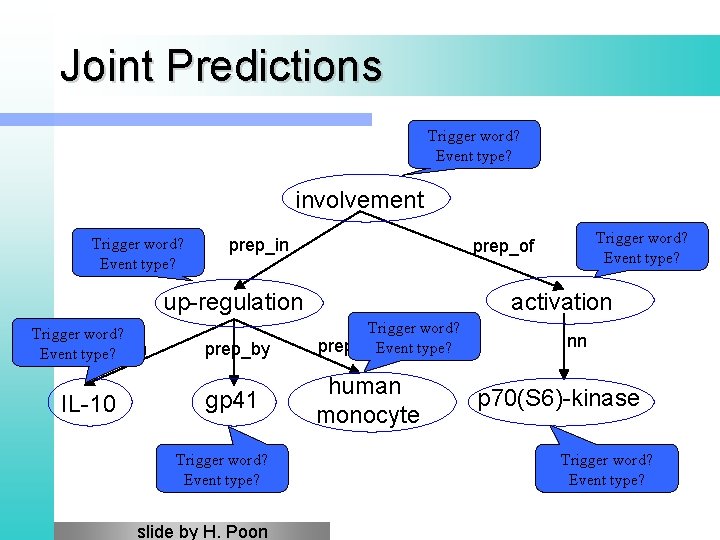

BioNLP 2009/2011 Relation extraction shared tasks [Björne et al. 2009]

slide by C. Manning

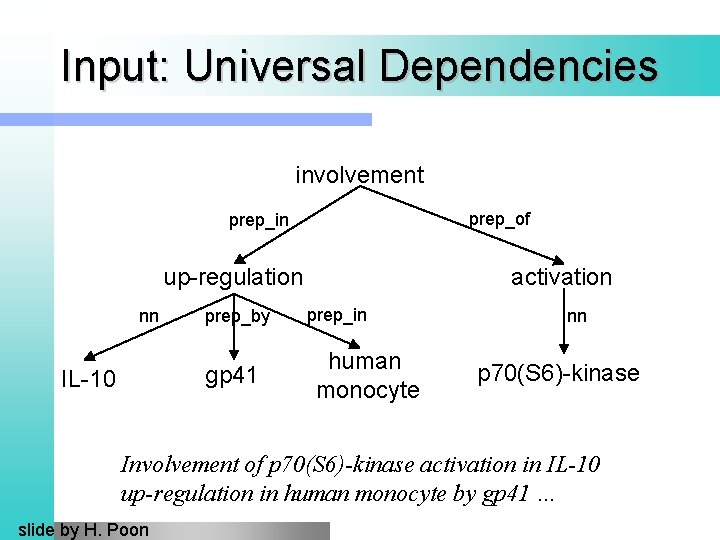

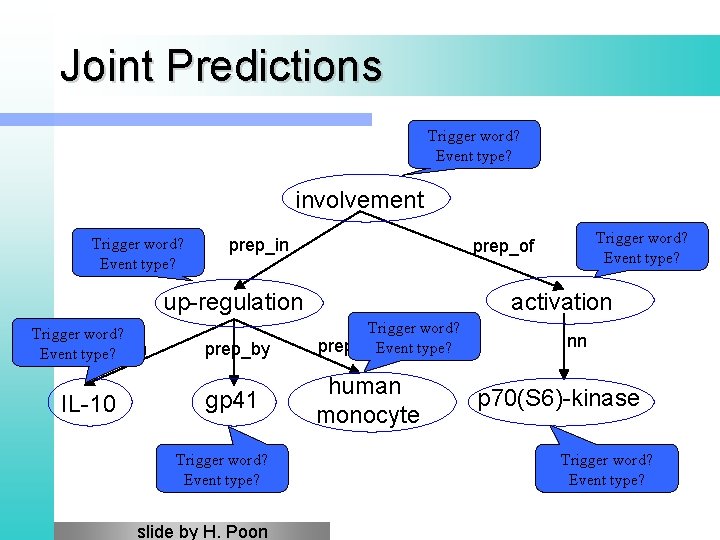

Input: Universal Dependencies

Involvement of p70(S6)-kinase activation in IL-10 up-regulation in human monocyte by gp41 …

(Figure: dependency graph over involvement, activation, p70(S6)-kinase, up-regulation, IL-10, human monocyte, gp41, with arcs prep_of, prep_in, prep_by, nn)

slide by H. Poon

Joint Predictions

(Figure: the same dependency graph; for each node (involvement, up-regulation, IL-10, gp41, activation, human monocyte, p70(S6)-kinase) the questions "Trigger word?" and "Event type?" are predicted jointly)

slide by H. Poon

References

- G. Attardi. Experiments with a Multilanguage Non-Projective Dependency Parser. Proc. of the Tenth Conference on Natural Language Learning, New York, NY, 2006.
- G. Attardi, F. Dell'Orletta. Reverse Revision and Linear Tree Combination for Dependency Parsing. Proc. of NAACL HLT 2009, 2009.
- G. Attardi, F. Dell'Orletta, M. Simi, J. Turian. Accurate Dependency Parsing with a Stacked Multilayer Perceptron. Proc. of Workshop Evalita 2009, ISBN 978-88-903581-1-1, 2009.
- H. Yamada, Y. Matsumoto. Statistical Dependency Analysis with Support Vector Machines. Proc. IWPT, 2003.
- M. T. Kromann. Optimality parsing and local cost functions in discontinuous grammars. Proc. FG-MOL, 2001.
- D. Cer, M. de Marneffe, D. Jurafsky, C. Manning. Parsing to Stanford Dependencies: Trade-offs between speed and accuracy. Proc. of LREC-10, 2010.