Universal Morphological Analysis using Structured Nearest Neighbor Prediction

Young-Bum Kim, João V. Graça, and Benjamin Snyder
University of Wisconsin-Madison
28 July, 2011

Unsupervised NLP

- Unsupervised learning in NLP has become popular: 27 papers in this year's ACL+EMNLP
- Relies on inductive bias, encoded in the model structure or learning algorithm
  - Example: an HMM for POS induction encodes transitional regularity

[Figure: the sentence "I like to read" with an unknown tag (?) for each word]

Inductive Biases

- Formulated with weak empirical grounding (or left implicit)
- Single, simple bias for all languages
  - Consequences: low performance, complicated models, fragility, language dependence

Our approach: learn a complex, universal bias using labeled languages, i.e. empirically learn what the space of plausible human languages looks like, to guide unsupervised learning.

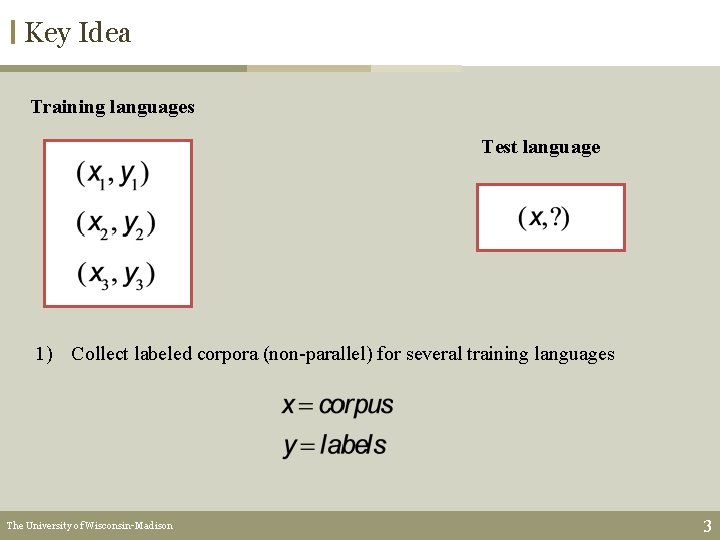

Key Idea

Figure: training languages and test language.

1) Collect labeled corpora (non-parallel) for several training languages

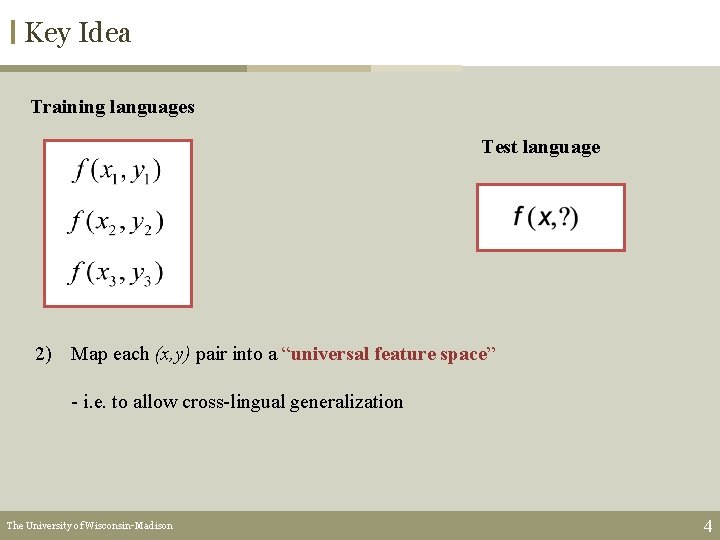

Key Idea

Figure: training languages and test language.

2) Map each (x, y) pair into a “universal feature space”, i.e. to allow cross-lingual generalization

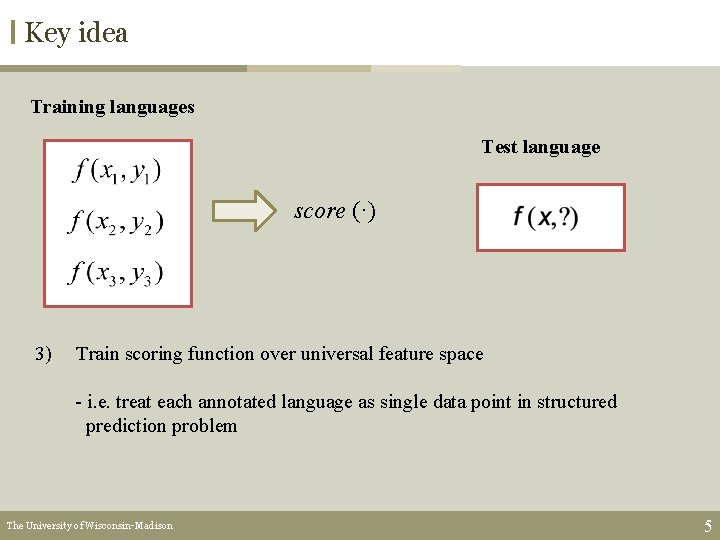

Key Idea

Figure: training languages, test language, score(·).

3) Train a scoring function over the universal feature space, i.e. treat each annotated language as a single data point in a structured prediction problem

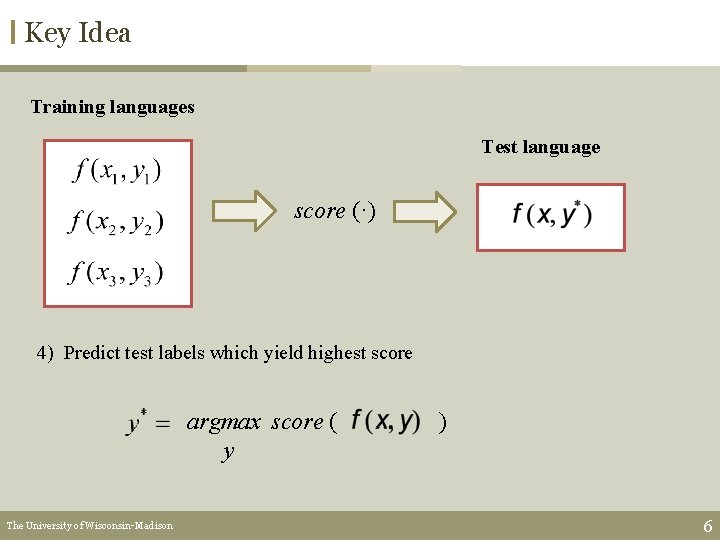

Key Idea

Figure: training languages, test language, score(·).

4) Predict the test labels that yield the highest score: $\hat{y} = \arg\max_{y} \operatorname{score}(x, y)$

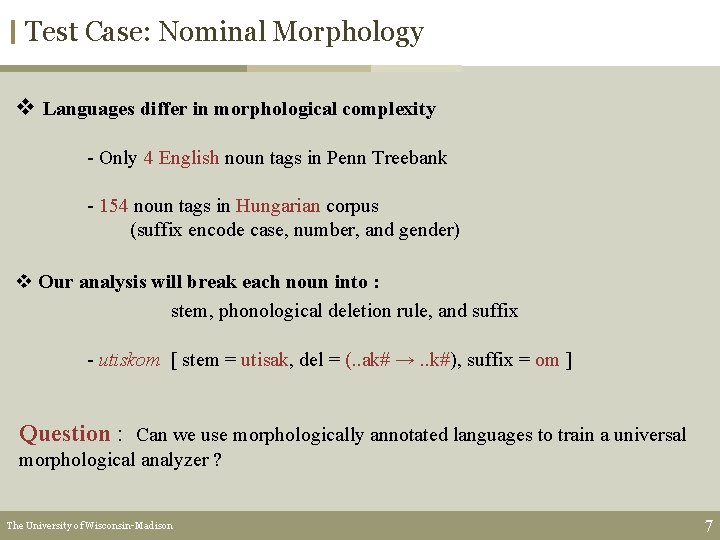

Test Case: Nominal Morphology

- Languages differ in morphological complexity
  - Only 4 English noun tags in the Penn Treebank
  - 154 noun tags in the Hungarian corpus (suffixes encode case, number, and gender)
- Our analysis will break each noun into: stem, phonological deletion rule, and suffix
  - utiskom [ stem = utisak, del = (..ak# → ..k#), suffix = om ]

Question: Can we use morphologically annotated languages to train a universal morphological analyzer?
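To make the three-part analysis concrete, here is a minimal sketch of how a (stem, deletion rule, suffix) triple could be recombined into the surface word. The `Analysis` type and `surface_form` function are hypothetical names introduced for illustration, and the deletion rule is assumed to be a simple rewrite of the stem's ending.

```python
from typing import NamedTuple, Optional, Tuple


class Analysis(NamedTuple):
    """Hypothetical container for one noun analysis (not from the slides)."""
    stem: str
    # Deletion rule as (stem ending before, stem ending after), or None.
    deletion: Optional[Tuple[str, str]]
    suffix: str


def surface_form(analysis: Analysis) -> str:
    """Apply the deletion rule to the stem ending, then attach the suffix."""
    stem = analysis.stem
    if analysis.deletion is not None:
        before, after = analysis.deletion
        if not stem.endswith(before):
            raise ValueError(f"rule {before!r} -> {after!r} does not apply to {stem!r}")
        stem = stem[: len(stem) - len(before)] + after
    return stem + analysis.suffix


# The slide's example: stem = utisak, del = (..ak# -> ..k#), suffix = om
print(surface_form(Analysis(stem="utisak", deletion=("ak", "k"), suffix="om")))  # utiskom
```

The analyzer's task is the inverse of this function: given only the surface word, recover the triple.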

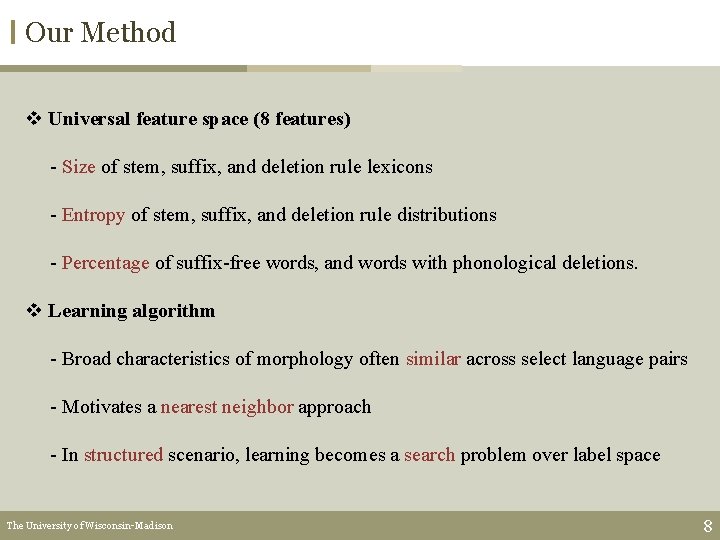

Our Method

- Universal feature space (8 features)
  - Size of stem, suffix, and deletion rule lexicons
  - Entropy of stem, suffix, and deletion rule distributions
  - Percentage of suffix-free words, and of words with phonological deletions
- Learning algorithm
  - Broad characteristics of morphology are often similar across select language pairs
  - Motivates a nearest neighbor approach
  - In the structured scenario, learning becomes a search problem over the label space
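The eight features listed above can be sketched as a function from a set of analyses to a vector. This is a rough illustration only: the function name `universal_features`, the tuple layout, the normalization of lexicon sizes by the number of word types, and the use of base-2 entropy are all assumptions, since the slides do not specify them.

```python
import math
from collections import Counter
from typing import List, Optional, Sequence, Tuple

# One analysis per word type: (stem, deletion rule or None, suffix; "" means suffix-free).
WordAnalysis = Tuple[str, Optional[str], str]


def entropy(counts: Counter) -> float:
    """Shannon entropy (bits) of the empirical distribution given by counts."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def universal_features(analyses: Sequence[WordAnalysis]) -> List[float]:
    """Map a full analysis of a language to an 8-dimensional feature vector."""
    n = len(analyses)
    if n == 0:
        raise ValueError("need at least one analyzed word type")
    stems = Counter(stem for stem, _, _ in analyses)
    suffixes = Counter(suffix for _, _, suffix in analyses if suffix)
    deletions = Counter(rule for _, rule, _ in analyses if rule is not None)
    return [
        len(stems) / n,       # stem lexicon size (normalized: an assumption)
        len(suffixes) / n,    # suffix lexicon size
        len(deletions) / n,   # deletion rule lexicon size
        entropy(stems),       # entropy of stem distribution
        entropy(suffixes),    # entropy of suffix distribution
        entropy(deletions),   # entropy of deletion rule distribution
        sum(1 for _, _, suffix in analyses if not suffix) / n,        # suffix-free words
        sum(1 for _, rule, _ in analyses if rule is not None) / n,    # words with deletions
    ]


toy = [("walk", None, ""), ("walk", None, "s"), ("walk", None, "ed"), ("talk", None, "s")]
print(universal_features(toy))
```

Because every feature is a corpus-level statistic rather than a property of individual strings, the same vector can be computed for any language, which is what allows comparison across languages.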

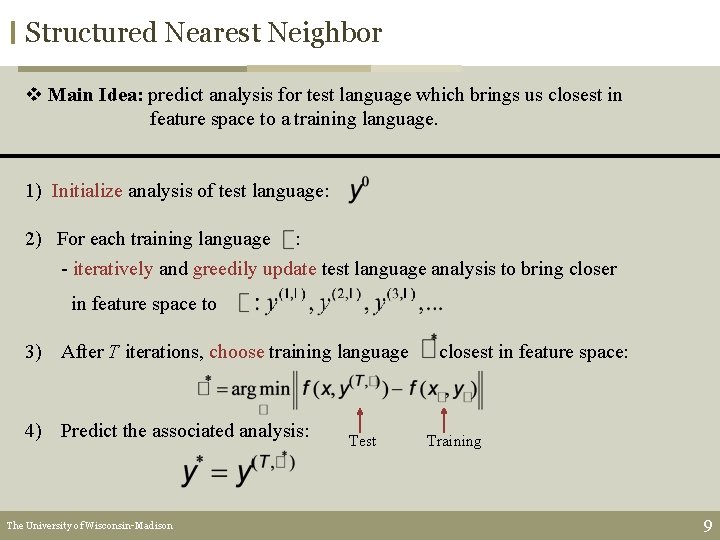

Structured Nearest Neighbor

- Main idea: predict the analysis for the test language that brings us closest in feature space to a training language.

1) Initialize the analysis of the test language.
2) For each training language: iteratively and greedily update the test language analysis to bring it closer in feature space to that training language.
3) After T iterations, choose the training language closest in feature space.
4) Predict the associated analysis.

Figure labels: Test, Training.
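The four steps can be sketched as a generic greedy search. This is a simplified illustration, not the authors' implementation: the names `structured_nearest_neighbor`, `features`, and `candidate_updates` are hypothetical, Euclidean distance is an assumption, and the toy example uses integers in place of real morphological analyses.

```python
import math
from typing import Callable, Dict, Iterable, Sequence, Tuple, TypeVar

A = TypeVar("A")  # type of an analysis; a placeholder for illustration


def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    """Unweighted Euclidean distance between two feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def structured_nearest_neighbor(
    initial: A,
    train_features: Dict[str, Sequence[float]],
    features: Callable[[A], Sequence[float]],
    candidate_updates: Callable[[A], Iterable[A]],
    iterations: int,
) -> Tuple[float, str, A]:
    """Return (distance, training language, analysis) for the closest match."""
    best = None
    for lang, target in train_features.items():
        analysis = initial  # step 1: every search starts from the same initialization
        for _ in range(iterations):  # step 2: greedy updates toward this language
            current = euclidean(features(analysis), target)
            options = list(candidate_updates(analysis))
            if not options:
                break
            candidate = min(options, key=lambda a: euclidean(features(a), target))
            if euclidean(features(candidate), target) >= current:
                break  # no candidate moves closer; stop early
            analysis = candidate
        dist = euclidean(features(analysis), target)
        if best is None or dist < best[0]:  # step 3: keep the closest language
            best = (dist, lang, analysis)
    if best is None:
        raise ValueError("need at least one training language")
    return best  # step 4: the associated analysis is the prediction


# Toy usage: an "analysis" is an integer and its single feature is its value.
result = structured_nearest_neighbor(
    initial=0,
    train_features={"A": (5.0,), "B": (-2.0,)},
    features=lambda a: (float(a),),
    candidate_updates=lambda a: [a - 1, a + 1],
    iterations=3,
)
print(result)  # (0.0, 'B', -2)
```

In the toy run, three iterations are enough to reach language B exactly but not language A, so B is selected as the nearest neighbor.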

Structured Nearest Neighbor

Training languages:

Initialize test language labels:

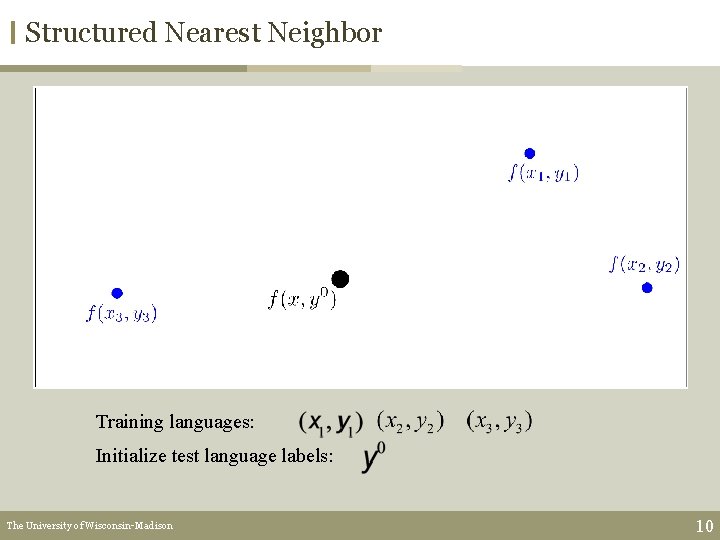

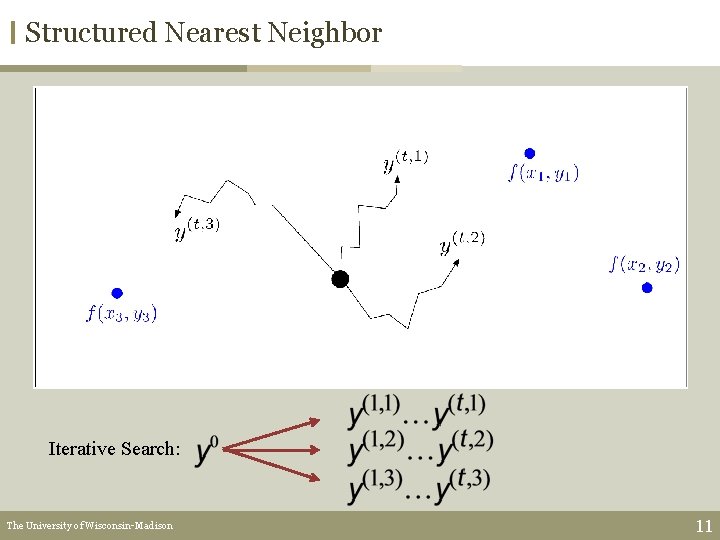

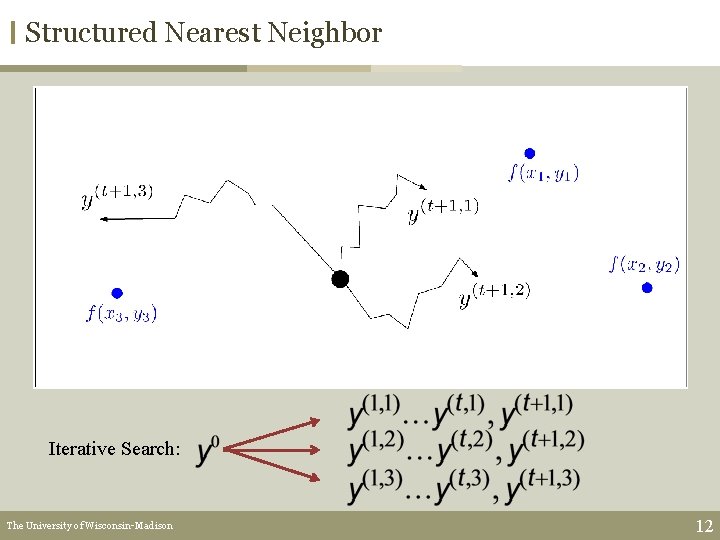

Structured Nearest Neighbor

Iterative Search:

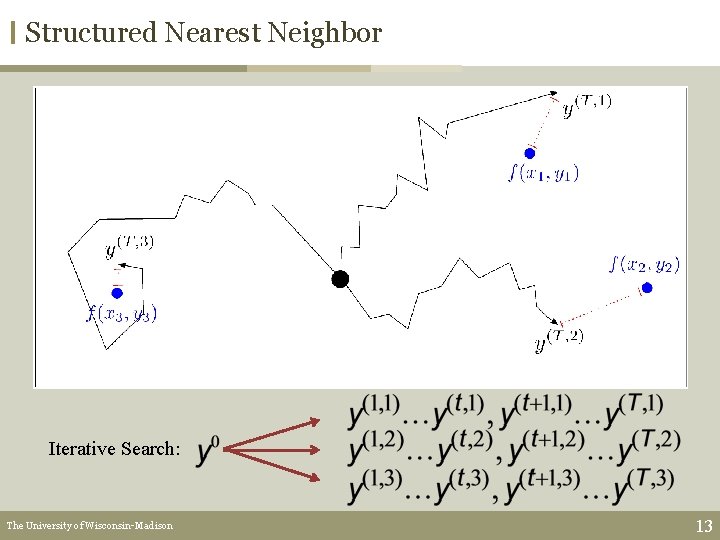

Structured Nearest Neighbor

Iterative Search:

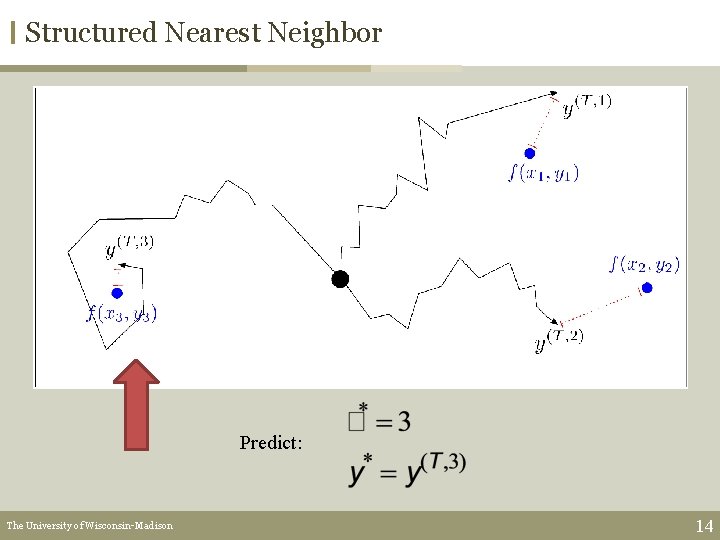

Structured Nearest Neighbor

Predict:

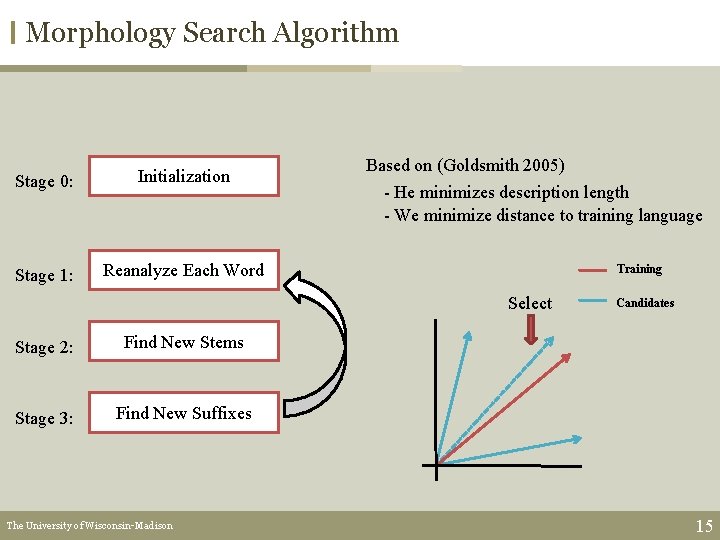

Morphology Search Algorithm

- Stage 0: Initialization
- Stage 1: Reanalyze Each Word
- Stage 2: Find New Stems
- Stage 3: Find New Suffixes

Based on (Goldsmith 2005):
- He minimizes description length
- We minimize distance to training language

Figure labels: Training, Candidates, Select.

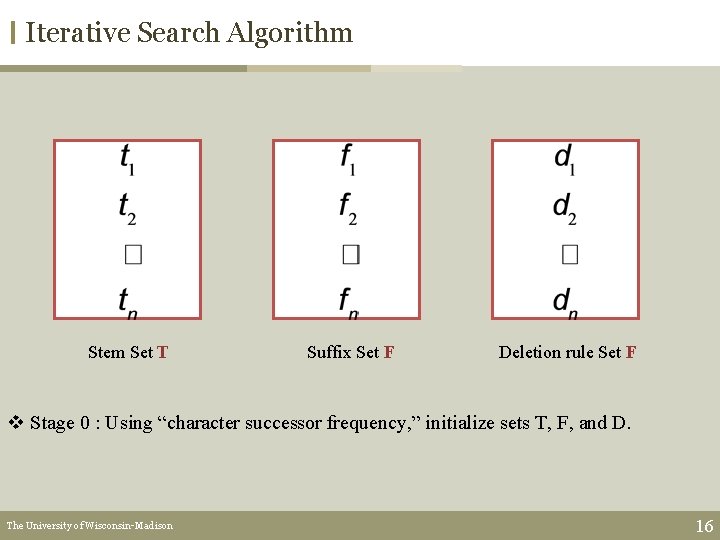

Iterative Search Algorithm

Figure labels: Stem Set T, Suffix Set F, Deletion Rule Set D.

- Stage 0: Using “character successor frequency,” initialize sets T, F, and D.
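The slides do not spell out how character successor frequency is used for initialization. The following is a minimal sketch of one common variant: count how many distinct characters can follow each word prefix, then split a word at the prefix with the most distinct successors. The function names, the `min_successors` threshold, and the tie-breaking are assumptions, and deletion rules (set D) are not handled here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def successor_counts(words: Iterable[str]) -> Dict[str, int]:
    """For each prefix, count distinct next characters ('#' marks end of word)."""
    successors = defaultdict(set)
    for word in words:
        for i in range(1, len(word) + 1):
            next_char = word[i] if i < len(word) else "#"
            successors[word[:i]].add(next_char)
    return {prefix: len(chars) for prefix, chars in successors.items()}


def initial_split(word: str, counts: Dict[str, int], min_successors: int = 2) -> Tuple[str, str]:
    """Split at the proper prefix with the most distinct successors, if any qualifies."""
    if len(word) < 2:
        return word, ""
    cut = max(range(1, len(word)), key=lambda i: counts.get(word[:i], 0))
    if counts.get(word[:cut], 0) < min_successors:
        return word, ""  # no branching point: treat the word as suffix-free
    return word[:cut], word[cut:]


vocab = ["walk", "walks", "walked", "walking", "talk", "talks", "talked"]
counts = successor_counts(vocab)
stems = {initial_split(w, counts)[0] for w in vocab}
suffixes = {initial_split(w, counts)[1] for w in vocab} - {""}
print(sorted(stems), sorted(suffixes))  # ['talk', 'walk'] ['ed', 'ing', 's']
```

The intuition is that many different characters can follow a stem boundary, while inside a morpheme the next character is largely predictable.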

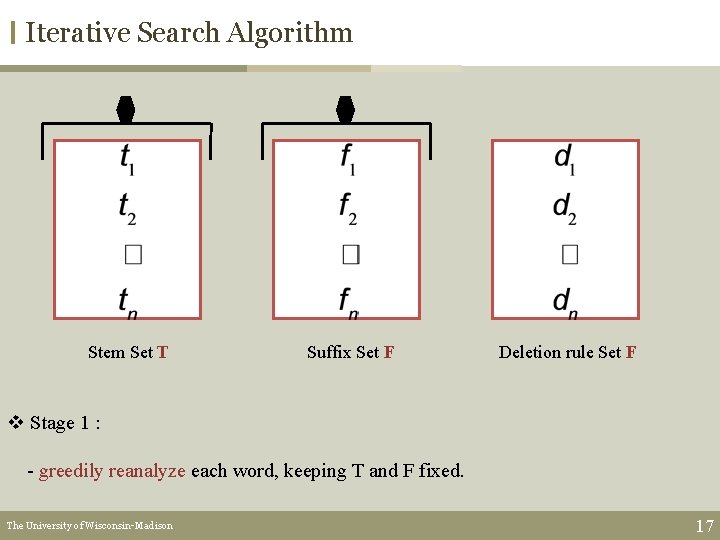

Iterative Search Algorithm

Figure labels: Stem Set T, Suffix Set F, Deletion Rule Set D.

- Stage 1: Reanalyze each word
  - Greedily reanalyze each word, keeping T and F fixed.

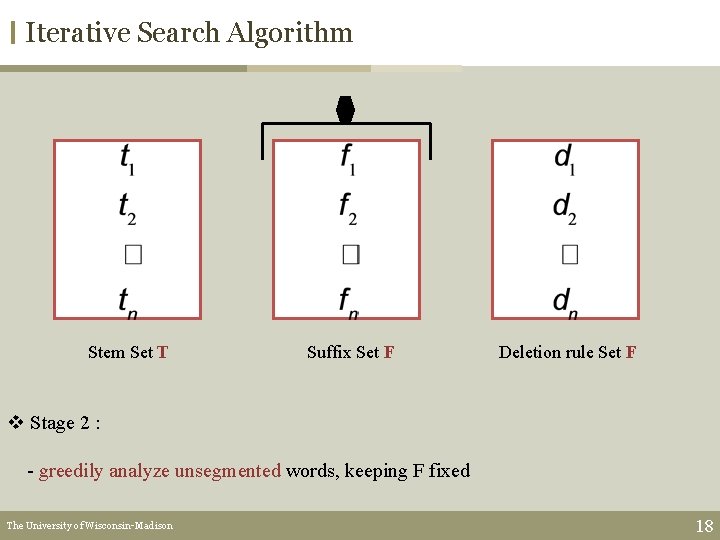

Iterative Search Algorithm

Figure labels: Stem Set T, Suffix Set F, Deletion Rule Set D.

- Stage 2: Find new stems
  - Greedily analyze unsegmented words, keeping F fixed.

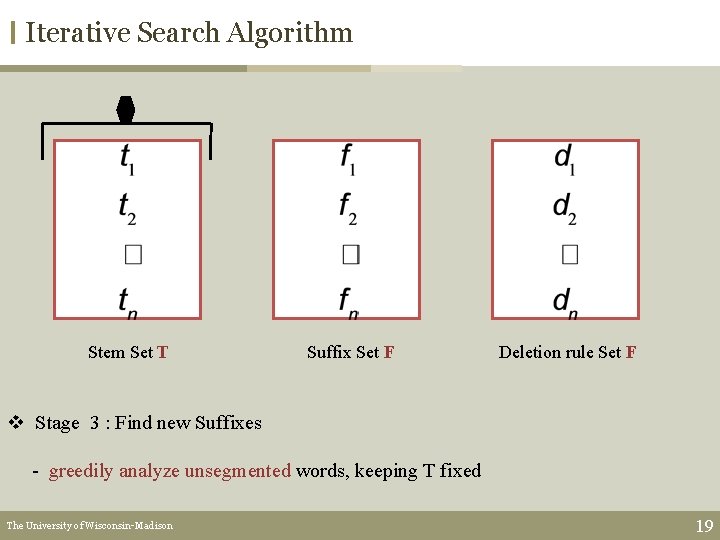

Iterative Search Algorithm

Figure labels: Stem Set T, Suffix Set F, Deletion Rule Set D.

- Stage 3: Find new suffixes
  - Greedily analyze unsegmented words, keeping T fixed.

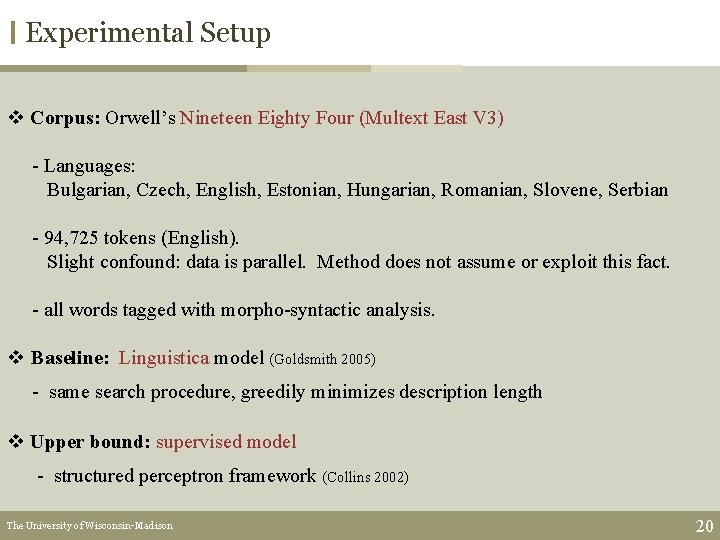

Experimental Setup

- Corpus: Orwell’s Nineteen Eighty-Four (Multext-East V3)
  - Languages: Bulgarian, Czech, English, Estonian, Hungarian, Romanian, Slovene, Serbian
  - 94,725 tokens (English). Slight confound: the data is parallel. The method does not assume or exploit this fact.
  - All words tagged with morpho-syntactic analysis.
- Baseline: Linguistica model (Goldsmith 2005)
  - Same search procedure, greedily minimizes description length
- Upper bound: supervised model
  - Structured perceptron framework (Collins 2002)

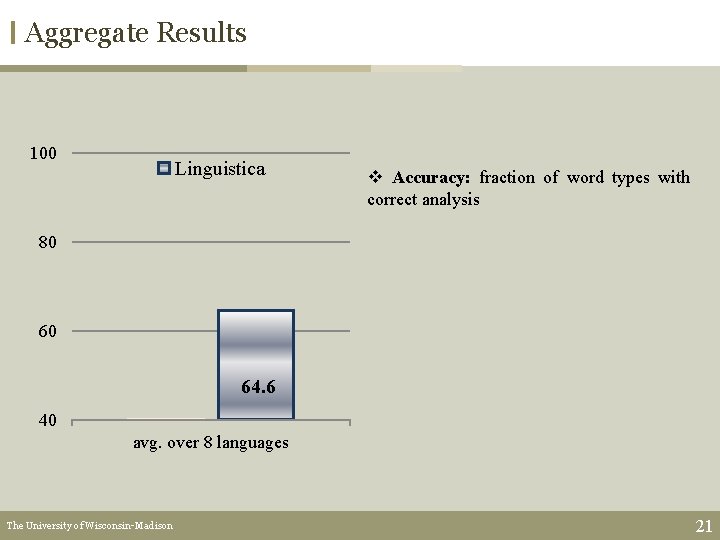

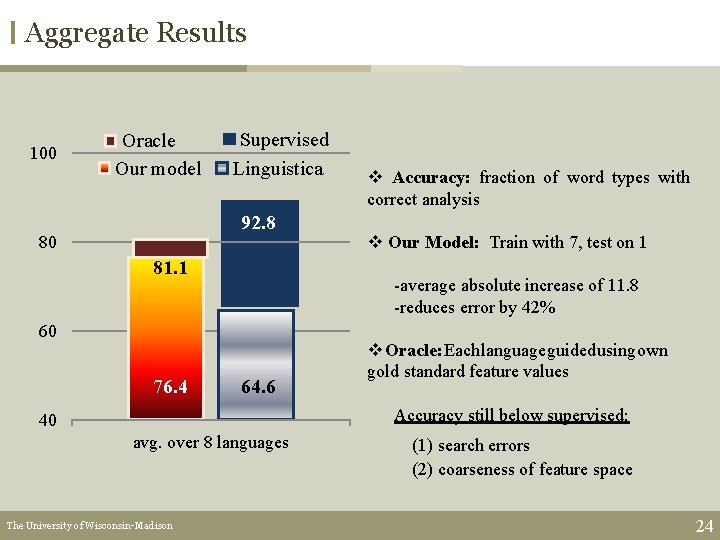

Aggregate Results

- Accuracy: fraction of word types with correct analysis

Bar chart (accuracy, avg. over 8 languages): Linguistica 64.6

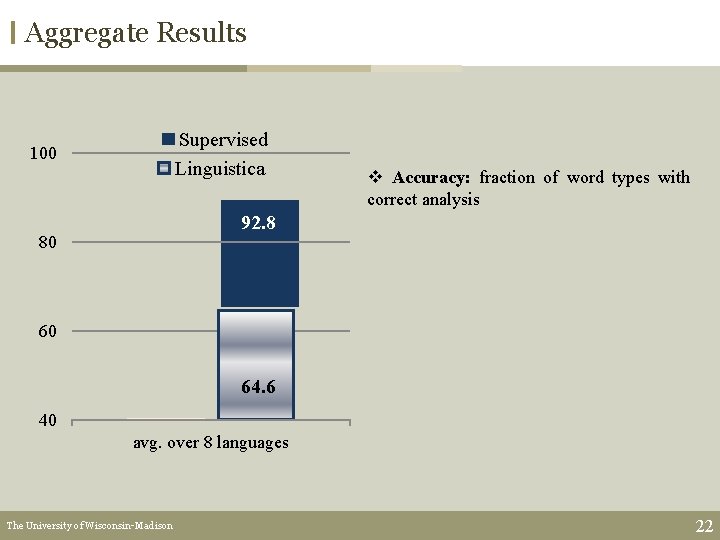

Aggregate Results

- Accuracy: fraction of word types with correct analysis

Bar chart (accuracy, avg. over 8 languages): Linguistica 64.6, Supervised 92.8

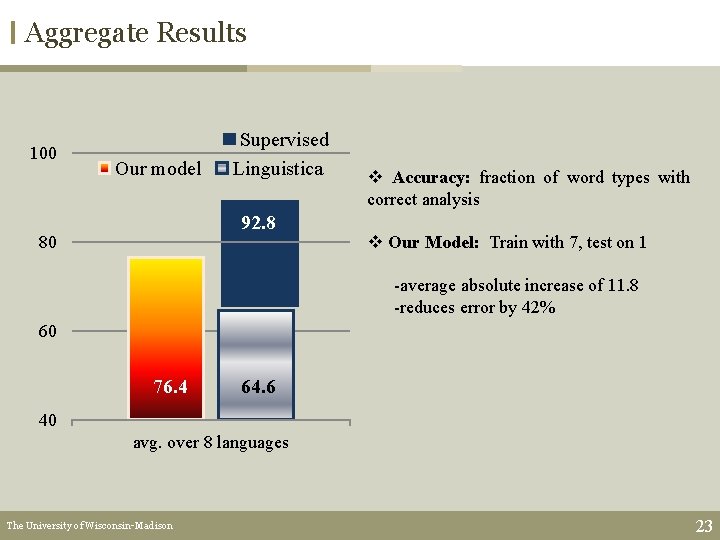

Aggregate Results

- Accuracy: fraction of word types with correct analysis
- Our model: train with 7, test on 1
  - Average absolute increase of 11.8
  - Reduces error by 42%

Bar chart (accuracy, avg. over 8 languages): Linguistica 64.6, Our model 76.4, Supervised 92.8

Aggregate Results

- Accuracy: fraction of word types with correct analysis
- Our model: train with 7, test on 1
  - Average absolute increase of 11.8
  - Reduces error by 42%
- Oracle: each language guided using its own gold standard feature values
  - Accuracy still below supervised: (1) search errors, (2) coarseness of feature space

| Model | Accuracy (avg. over 8 languages) |
|---|---|
| Linguistica | 64.6 |
| Our model | 76.4 |
| Oracle | 81.1 |
| Supervised | 92.8 |
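The slide does not define how the 42% error reduction is computed. As a quick check of the arithmetic, the sketch below compares two possible readings using only the averages reported above. That the figure is measured against the supervised upper bound rather than against 100% accuracy is an inference from the numbers, not something the slides state.

```python
# Average accuracies reported on the slide.
linguistica = 64.6
our_model = 76.4
supervised = 92.8

# Absolute gain over the Linguistica baseline.
gain = our_model - linguistica

# Reading 1 (assumed): error is the gap to the supervised upper bound.
reduction_vs_supervised = gain / (supervised - linguistica)

# Reading 2 (for comparison): error is the gap to 100% accuracy.
reduction_vs_perfect = gain / (100.0 - linguistica)

print(f"absolute gain: {gain:.1f}")                              # 11.8
print(f"vs. supervised bound: {reduction_vs_supervised:.1%}")    # 41.8%
print(f"vs. perfect accuracy: {reduction_vs_perfect:.1%}")       # 33.3%
```

Only the first reading reproduces the reported 42%, which suggests the remaining gap to the supervised model is the error being reduced.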

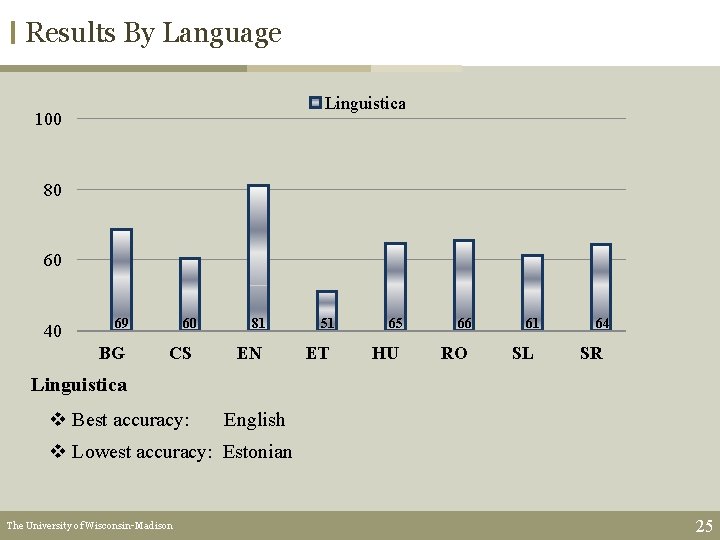

Results By Language

| Accuracy (%) | BG | CS | EN | ET | HU | RO | SL | SR |
|---|---|---|---|---|---|---|---|---|
| Linguistica | 69 | 60 | 81 | 51 | 65 | 66 | 61 | 64 |

Linguistica:
- Best accuracy: English
- Lowest accuracy: Estonian

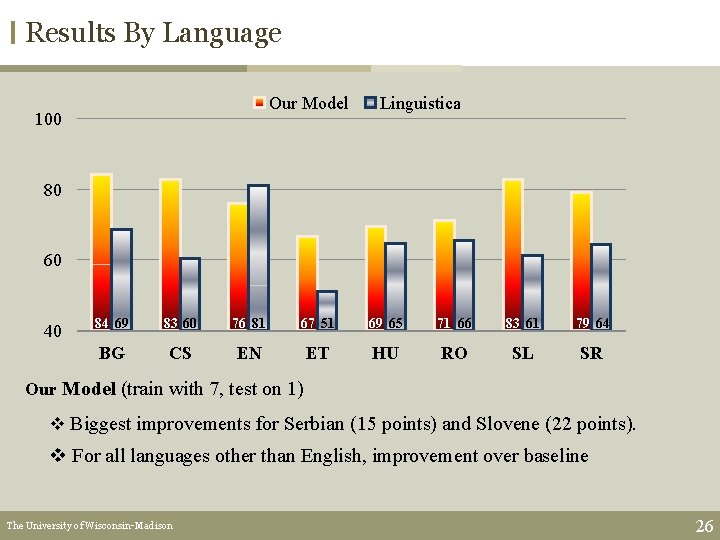

Results By Language

| Accuracy (%) | BG | CS | EN | ET | HU | RO | SL | SR |
|---|---|---|---|---|---|---|---|---|
| Our Model | 84 | 83 | 76 | 67 | 69 | 71 | 83 | 79 |
| Linguistica | 69 | 60 | 81 | 51 | 65 | 66 | 61 | 64 |

Our Model (train with 7, test on 1):
- Biggest improvements for Serbian (15 points) and Slovene (22 points).
- For all languages other than English, improvement over baseline

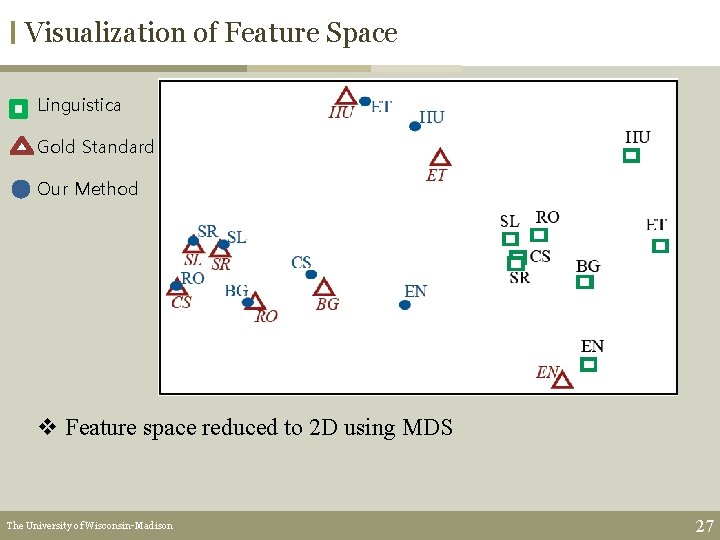

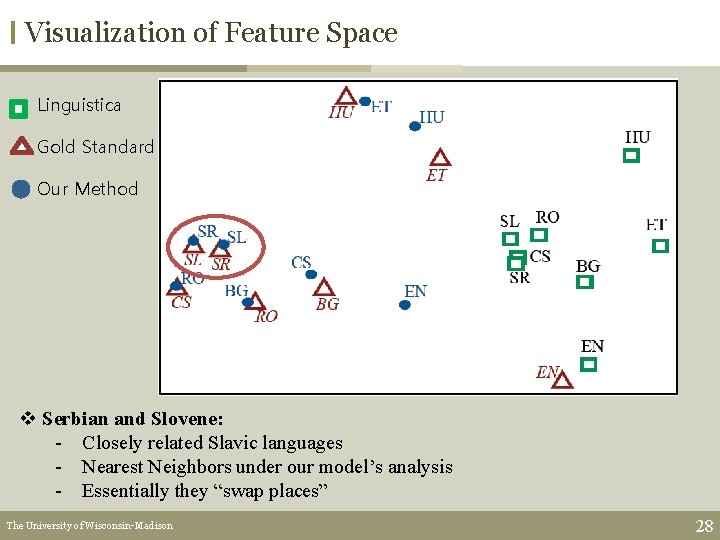

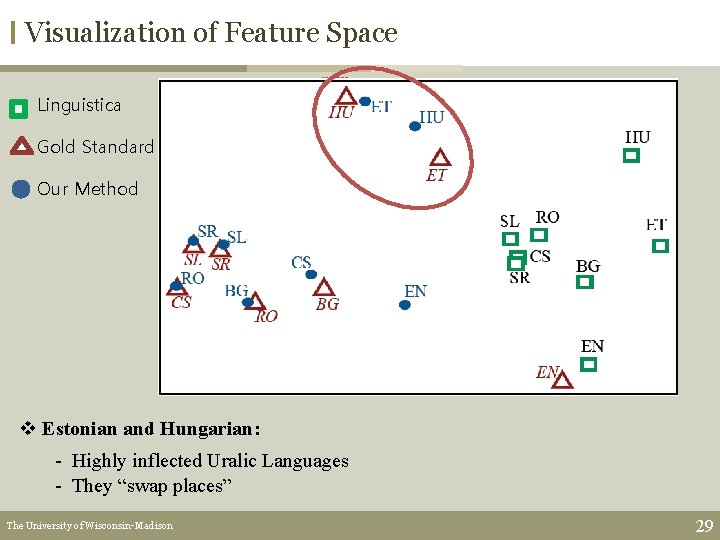

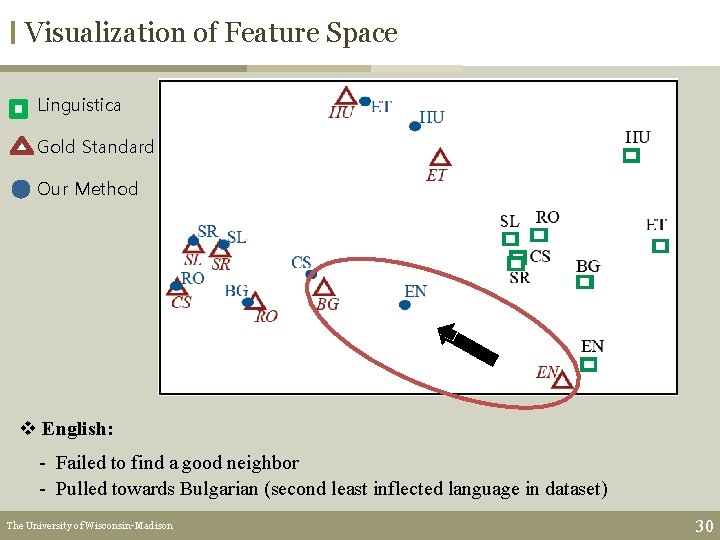

Visualization of Feature Space

Figure panels: Linguistica, Gold Standard, Our Method.

- Feature space reduced to 2D using MDS

Visualization of Feature Space

Figure panels: Linguistica, Gold Standard, Our Method.

- Serbian and Slovene:
  - Closely related Slavic languages
  - Nearest neighbors under our model’s analysis
  - Essentially they “swap places”

Visualization of Feature Space

Figure panels: Linguistica, Gold Standard, Our Method.

- Estonian and Hungarian:
  - Highly inflected Uralic languages
  - They “swap places”

Visualization of Feature Space

Figure panels: Linguistica, Gold Standard, Our Method.

- English:
  - Failed to find a good neighbor
  - Pulled towards Bulgarian (second least inflected language in dataset)

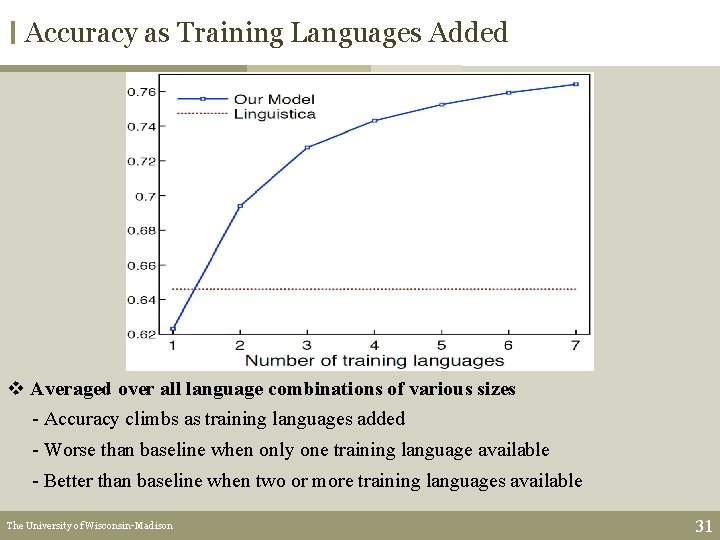

Accuracy as Training Languages Added

- Averaged over all language combinations of various sizes
  - Accuracy climbs as training languages are added
  - Worse than baseline when only one training language is available
  - Better than baseline when two or more training languages are available

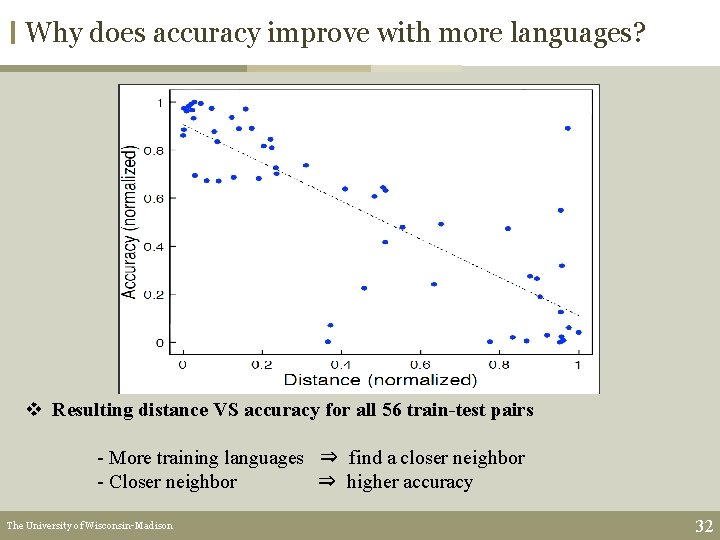

Why does accuracy improve with more languages?

- Resulting distance vs. accuracy for all 56 train-test pairs
  - More training languages ⇒ find a closer neighbor
  - Closer neighbor ⇒ higher accuracy

Summary

Main idea: recast unsupervised learning as cross-lingual structured prediction.

Test case: morphological analysis of 8 languages.

- Formulated universal feature space for morphology
- Developed novel structured nearest neighbor approach
- Our method yields substantial accuracy gains

Future Work

- Shortcoming
  - Uniform weighting of dimensions in the universal feature space
  - Some features may be more important than others
- Future work: learn distance metric on universal feature space
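To illustrate what a non-uniform weighting would change, here is a small sketch of a weighted Euclidean distance. It is purely hypothetical: the function `weighted_distance` and the hand-set weights are for illustration only, and the slides do not say what form a learned metric would take or how it would be trained.

```python
import math
from typing import Sequence


def weighted_distance(u: Sequence[float], v: Sequence[float], weights: Sequence[float]) -> float:
    """Euclidean distance with a non-negative weight per feature dimension."""
    if not (len(u) == len(v) == len(weights)):
        raise ValueError("vectors and weights must have the same length")
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    return math.sqrt(sum(w * (a - b) ** 2 for a, b, w in zip(u, v, weights)))


# Uniform weights reproduce the ordinary Euclidean distance.
print(weighted_distance([0.0, 0.0], [3.0, 4.0], [1.0, 1.0]))  # 5.0
# Setting a weight to zero ignores that dimension entirely.
print(weighted_distance([0.0, 0.0], [3.0, 4.0], [1.0, 0.0]))  # 3.0
```

Under such a metric, the choice of nearest training language could change depending on which features are emphasized.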

Thank You
