

- Slides: 19

(Un)fairness in Machine Learning

Aaron Roth, University of Pennsylvania

Machine Learning and Social Norms

- Sample norms: privacy, fairness, transparency, accountability…
- Possible approaches
  - “traditional”: legal, regulatory, watchdog
  - Embed social norms in data, algorithms, models
- Case study: privacy-preserving machine learning
  - “single”, strong, definition (differential privacy)
  - almost every ML algorithm has a private version
- Fair machine learning
  - not so much…
  - impossibility results

(Un)Fairness Where?

- Data (input)
  - e.g. more arrests where there are more police
  - Label should be “committed a crime”, but is “convicted of a crime”
  - try to “correct” bias
- Models (output)
  - e.g. discriminatory treatment of subpopulations
  - build or “post-process” models with subpopulation guarantees
  - equality of false positive/negative rates; calibration
- Algorithms (process)
  - learning algorithm generating data through its decisions
  - e.g. don’t learn outcomes of denied mortgages
  - lack of clear train/test division
  - design (sequential) algorithms that are fair
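The "equality of false positive/negative rates" criterion for models can be made concrete with a small check. The following is only a sketch under stated assumptions: the function name `group_error_rates`, the binary 0/1 label encoding, and the toy data are illustrative and do not come from the talk.

```python
from collections import defaultdict


def group_error_rates(y_true, y_pred, groups):
    """Return {group: (false_positive_rate, false_negative_rate)}.

    Labels and predictions are assumed to be 0/1 (hypothetical encoding).
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for yt, yp, g in zip(y_true, y_pred, groups):
        c = counts[g]
        if yt == 1:
            c["pos"] += 1
            if yp == 0:
                c["fn"] += 1  # true positive case predicted negative
        else:
            c["neg"] += 1
            if yp == 1:
                c["fp"] += 1  # true negative case predicted positive
    rates = {}
    for g, c in counts.items():
        fpr = c["fp"] / c["neg"] if c["neg"] else float("nan")
        fnr = c["fn"] / c["pos"] if c["pos"] else float("nan")
        rates[g] = (fpr, fnr)
    return rates


# Toy data, made up for illustration only.
y_true = [1, 0, 1, 0, 1, 0, 0, 1]
y_pred = [1, 0, 0, 0, 1, 1, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
rates = group_error_rates(y_true, y_pred, groups)
for g, (fpr, fnr) in sorted(rates.items()):
    print(g, "FPR:", fpr, "FNR:", fnr)
```

A model satisfying this criterion would show (approximately) equal rates across groups; in the toy data the two groups differ sharply.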

Theory and Empirics

- Empirical:
  - Messy, complicated, many entangled problems.
  - Important for diagnosing the extent of the problem.
  - Important for validating modelling assumptions, validating conclusions!
- Theory:
  - Important to define precisely what you mean.
  - And to make promises about your algorithms.
  - Methodology:
    - pick a learning framework and a technical definition of fairness
    - explore consequences: fair algorithms and (matching) impossibility/lower bounds
    - understand inherent “costs” of fairness

![What Should Fairness Mean?](https://slidetodoc.com/presentation_image_h/c5e225aa2730c877a93fc1e71ae409ea/image-7.jpg)

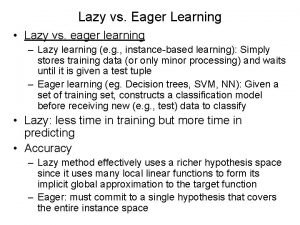

What Should Fairness Mean?

- Idea [DHPRZ 12]: “Similar people should be treated similarly”
  - Similar in what metric?
- An attractive metric already present in many models…
  - “True Quality”
  - What you are trying to predict!
  - Problem: You don’t know it.

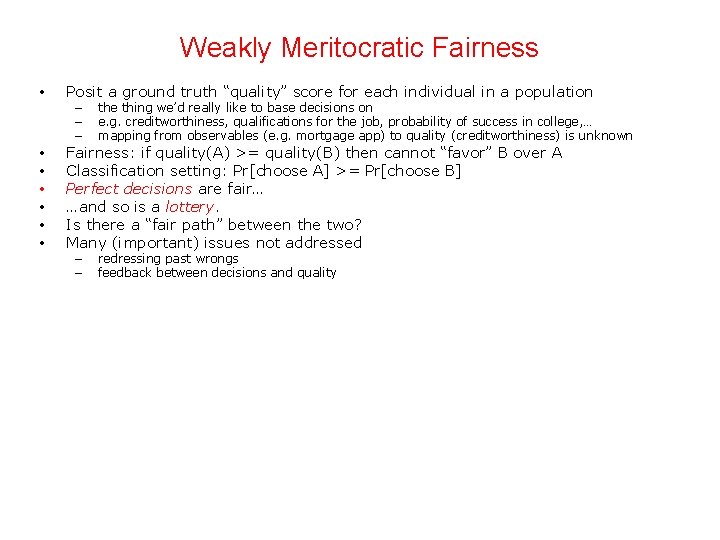

Weakly Meritocratic Fairness

- Posit a ground truth “quality” score for each individual in a population
  - the thing we’d really like to base decisions on
  - e.g. creditworthiness, qualifications for the job, probability of success in college, …
  - mapping from observables (e.g. mortgage app) to quality (creditworthiness) is unknown
- Fairness: if quality(A) >= quality(B) then cannot “favor” B over A
  - Classification setting: Pr[choose A] >= Pr[choose B]
- Perfect decisions are fair… and so is a lottery. Is there a “fair path” between the two?
- Many (important) issues not addressed
  - redressing past wrongs
  - feedback between decisions and quality
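The classification-setting condition, Pr[choose A] >= Pr[choose B] whenever quality(A) >= quality(B), can be checked directly when qualities are known. This is a minimal sketch for intuition only: the function name `is_weakly_meritocratic` and the example numbers are hypothetical, and in practice the true qualities are not observable.

```python
def is_weakly_meritocratic(quality, choose_prob):
    """Check: quality[a] >= quality[b] implies choose_prob[a] >= choose_prob[b].

    Both arguments are dicts keyed by individual (illustrative interface).
    """
    people = list(quality)
    for a in people:
        for b in people:
            if quality[a] >= quality[b] and choose_prob[a] < choose_prob[b]:
                return False  # b is favored over a despite a's quality
    return True


# Made-up qualities for three individuals.
quality = {"x": 0.9, "y": 0.5, "z": 0.2}

perfect = {"x": 1.0, "y": 0.0, "z": 0.0}    # always pick the best
lottery = {"x": 1 / 3, "y": 1 / 3, "z": 1 / 3}  # uniform at random
inverted = {"x": 0.0, "y": 0.0, "z": 1.0}   # always pick the worst

print(is_weakly_meritocratic(quality, perfect))
print(is_weakly_meritocratic(quality, lottery))
print(is_weakly_meritocratic(quality, inverted))
```

The first two cases correspond to the slide's observation that both perfect decisions and a lottery satisfy the definition.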

![Fairness in Sequential Decision-Making [JKMR 16, JKMNR 17]](https://slidetodoc.com/presentation_image_h/c5e225aa2730c877a93fc1e71ae409ea/image-9.jpg)

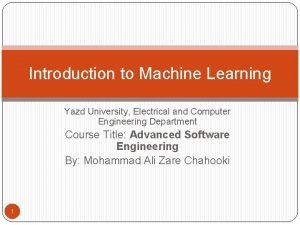

Fairness in Sequential Decision-Making [JKMR 16, JKMNR 17]

- Contextual bandit settings
- Each round, some number of individuals arrive, possibly from different demographic subpopulations
- We can choose some number to “accept”, but must do so fairly at every round
- Unknown but parametric function mapping observed features to true quality
- Classical algorithm ignoring fairness: UCB, “optimism under uncertainty”
  - asymptotically optimal/fair, but badly violates fairness along the way
- FairUCB: introduce technique of confidence interval chaining
- Linear case: fair, no-regret algorithm with regret: [bound shown on the slide; not preserved in the text]
- Tight dependence on k (vs. linear for UCB)
- Quantify the cost of fairness
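The slide names confidence interval chaining without spelling it out. A rough sketch of the idea, under the assumption that it works as follows: start from the arm with the highest upper confidence bound, collect every arm whose interval overlaps it directly or through a chain of overlapping intervals, and pick uniformly at random among those arms. The function names `chained_set` and `fair_choice` and the interval values are hypothetical, and how the intervals are computed is left out entirely.

```python
import random


def chained_set(intervals):
    """Indices of arms chained to the arm with the highest upper bound.

    intervals: list of (lower, upper) confidence bounds, one per arm.
    """
    top = max(range(len(intervals)), key=lambda i: intervals[i][1])
    chain = {top}
    low = intervals[top][0]  # lowest lower bound reached by the chain so far
    changed = True
    while changed:
        changed = False
        for i, (lo, hi) in enumerate(intervals):
            # An arm joins if its interval reaches into the chained region.
            if i not in chain and hi >= low:
                chain.add(i)
                low = min(low, lo)
                changed = True
    return chain


def fair_choice(intervals, rng=random):
    """Pick uniformly among chained arms, since they cannot yet be separated."""
    return rng.choice(sorted(chained_set(intervals)))


# Made-up intervals: arms 0, 1, 2 overlap in a chain; arm 3 is clearly worse.
intervals = [(0.6, 0.9), (0.5, 0.7), (0.3, 0.55), (0.0, 0.2)]
print(chained_set(intervals))
print(fair_choice(intervals))
```

Plain UCB would always play arm 0 here; choosing uniformly within the chain avoids favoring an arm whose true quality might be lower than that of another chained arm.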

Hard to Empirically Test

Less satisfying theoretically, but…

- Makes no assumptions about data generating process
- Easy to train on real data: can evaluate entire “Pareto curve” trading off fairness and accuracy.
- Can test concrete hypotheses on data.
- Like: “Is disparate treatment important for fairness?”
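One way to picture the “Pareto curve” is to sweep the weight on a fairness penalty and record accuracy and unfairness at each setting. The sketch below is a stand-in, not the method from the talk: it assumes a one-feature linear model, a penalty equal to the squared gap between the two groups' mean predictions, and made-up data. The names `fit_penalized` and `group_mean` are hypothetical.

```python
def group_mean(values, groups, g):
    members = [v for v, grp in zip(values, groups) if grp == g]
    return sum(members) / len(members)


def fit_penalized(xs, ys, groups, lam):
    """Minimize MSE + lam * (mean prediction gap between groups 0 and 1)^2.

    For the model y = w*x + b the gap is w*d, with d the gap in mean x,
    so the minimizer has the closed form w = cov(x, y) / (var(x) + lam*d^2).
    """
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    var_x = sum((x - x_bar) ** 2 for x in xs) / n
    cov_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / n
    d = group_mean(xs, groups, 0) - group_mean(xs, groups, 1)
    w = cov_xy / (var_x + lam * d ** 2)
    b = y_bar - w * x_bar
    mse = sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n
    unfairness = (w * d) ** 2
    return mse, unfairness


# Made-up data: group 1 has systematically larger feature values.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.2, 1.9, 3.2, 3.8, 5.1, 6.1]
groups = [0, 0, 0, 1, 1, 1]

curve = []
for lam in [0.0, 0.1, 1.0, 10.0]:
    mse, unfairness = fit_penalized(xs, ys, groups, lam)
    curve.append((lam, mse, unfairness))
    print(f"lam={lam:<5} mse={mse:.3f} unfairness={unfairness:.3f}")
```

As the penalty weight grows, the error rises and the unfairness measure falls; plotting the two against each other traces the trade-off.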

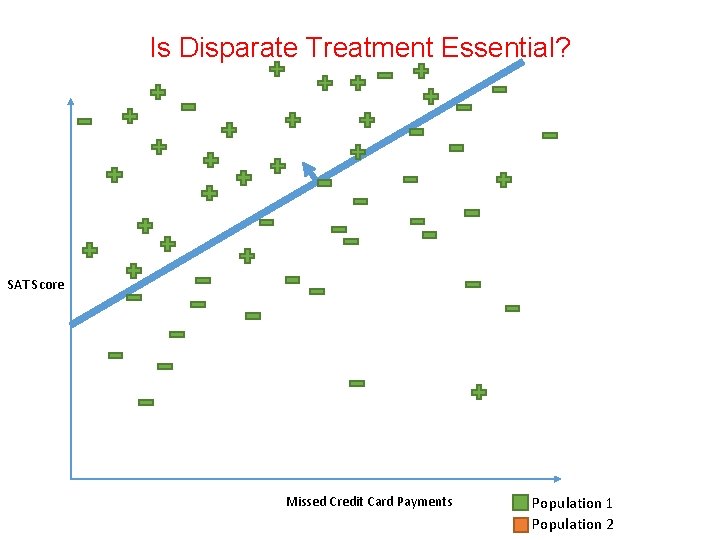

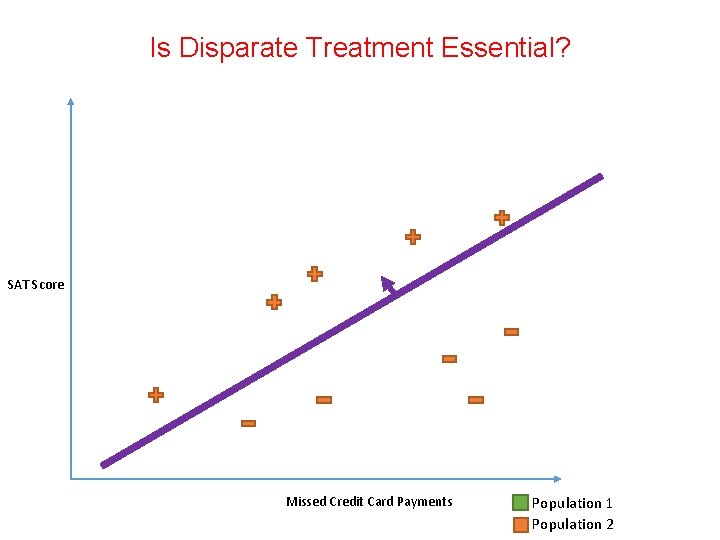

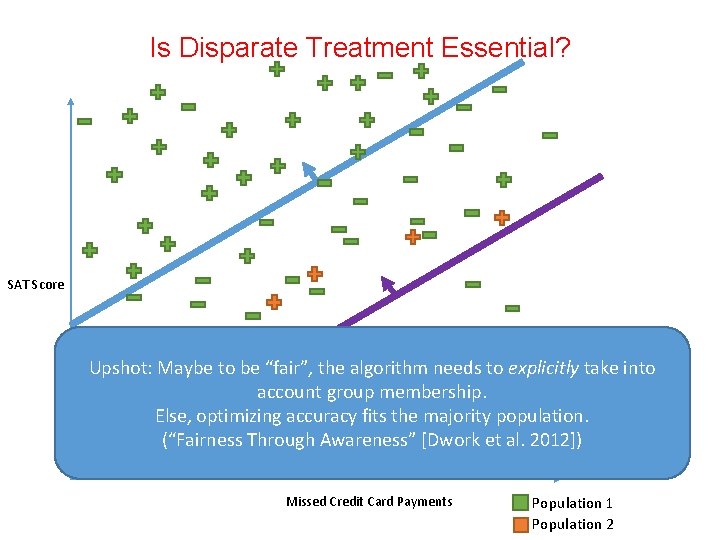

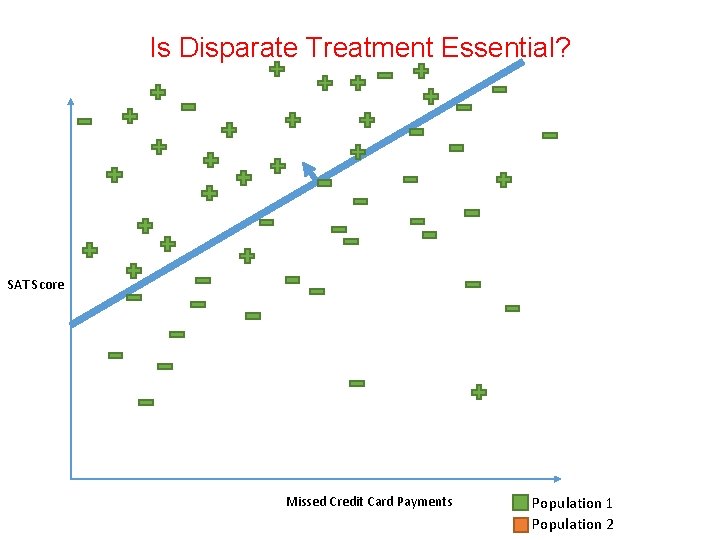

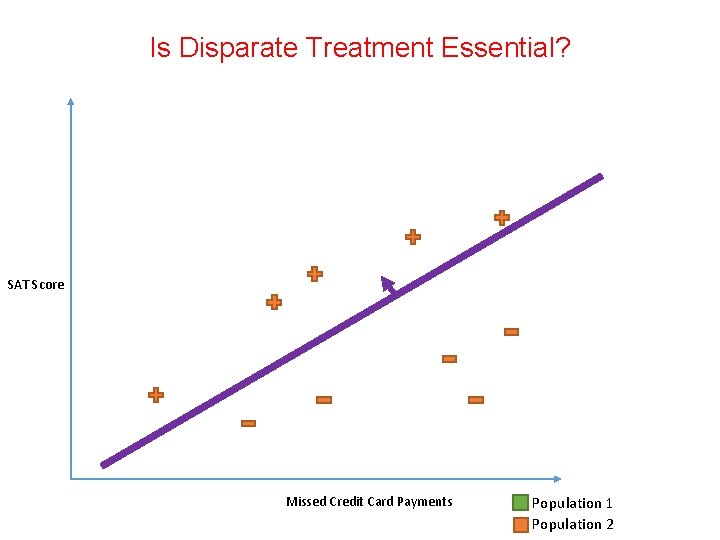

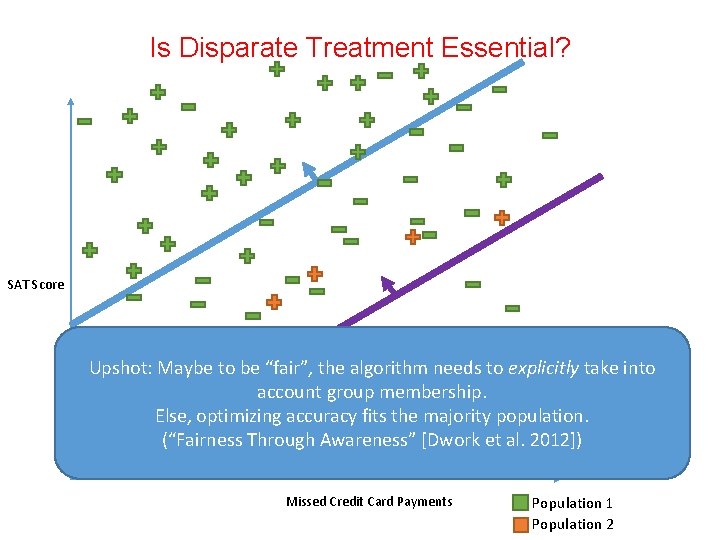


Is Disparate Treatment Essential?

[Figure: SAT Score vs. Missed Credit Card Payments, Population 1 and Population 2]

Upshot: Maybe to be “fair”, the algorithm needs to explicitly take into account group membership. Otherwise, optimizing accuracy fits the majority population. (“Fairness Through Awareness” [Dwork et al. 2012])
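The upshot can be illustrated with synthetic data in which a feature predicts the outcome in opposite directions for two populations. Everything here is an assumption made for illustration: the data are invented, the helper names `fit_line` and `mse` are hypothetical, and a one-feature least-squares line stands in for whatever model is actually used.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    var_x = sum((x - x_bar) ** 2 for x in xs)
    cov_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = cov_xy / var_x
    return slope, y_bar - slope * x_bar


def mse(model, xs, ys):
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Majority population: outcome increases with the feature.
pop1_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
pop1_y = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
# Minority population: outcome decreases with the feature.
pop2_x = [0.0, 1.0, 2.0]
pop2_y = [4.0, 3.0, 2.0]

# One model for everyone, blind to group membership.
pooled = fit_line(pop1_x + pop2_x, pop1_y + pop2_y)
# A separate model for the minority population (uses group membership).
separate = fit_line(pop2_x, pop2_y)

pooled_minority_mse = mse(pooled, pop2_x, pop2_y)
separate_minority_mse = mse(separate, pop2_x, pop2_y)
print("minority MSE, pooled model:  ", pooled_minority_mse)
print("minority MSE, separate model:", separate_minority_mse)
```

The pooled line follows the majority trend, so its error on the minority population is much larger than that of a model fit with group membership taken into account.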

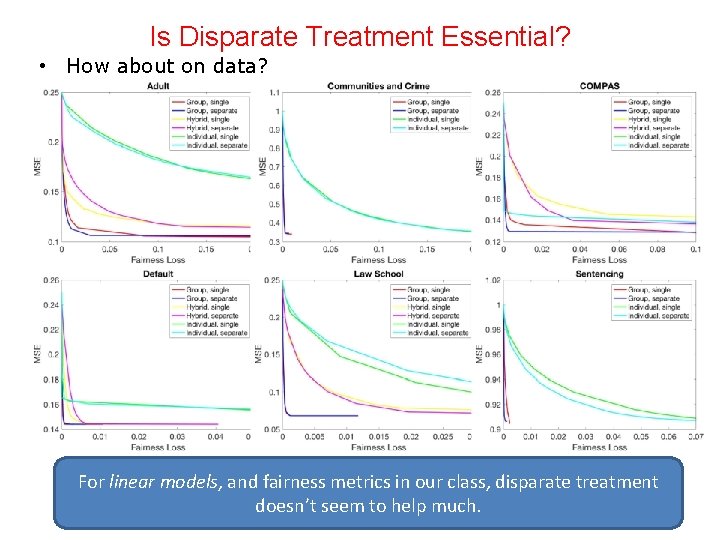

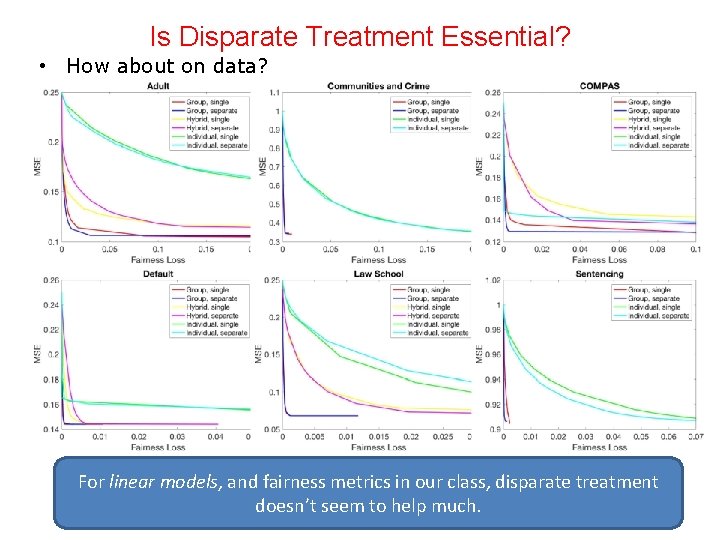

Is Disparate Treatment Essential?

- How about on data? For linear models, and fairness metrics in our class, disparate treatment doesn’t seem to help much.

![Can apply “Weakly Meritocratic Fairness” in other settings](https://slidetodoc.com/presentation_image_h/c5e225aa2730c877a93fc1e71ae409ea/image-16.jpg)

Can apply “Weakly Meritocratic Fairness” in other settings

- Reinforcement Learning [JJKMR 17]
  - state-based learning with discounted future reward
  - modeling “affirmative action” vs. “myopic meritocracy”
- Incentives for Myopic Agents [KKMPRVW 17]
  - regulatory models
  - Can’t design the algorithm; can incentivize the actor (with partial information)
- Cross-Population Ranking [KRW 17]
  - e.g. faculty candidates in different/incomparable subareas
  - want to compare by true subpopulation distributions (CDF rank)
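Comparing candidates by CDF rank means ranking each one by where they fall within their own subpopulation rather than by raw score. A minimal sketch, assuming the empirical CDF of an observed sample stands in for the true (unknown) subpopulation distribution; the function name `cdf_rank`, the subarea names, and the scores are all made up.

```python
from bisect import bisect_right


def cdf_rank(score, population_scores):
    """Fraction of the subpopulation scoring at or below `score`."""
    ordered = sorted(population_scores)
    return bisect_right(ordered, score) / len(ordered)


# Raw scores live on incomparable scales in the two subareas.
theory_scores = [10, 20, 30, 40]
systems_scores = [100, 300, 500, 700, 900]

theory_candidate = cdf_rank(30, theory_scores)
systems_candidate = cdf_rank(500, systems_scores)
print("theory candidate rank: ", theory_candidate)
print("systems candidate rank:", systems_candidate)
```

Although 500 is a far larger raw number than 30, the theory candidate sits higher within their own subarea, which is the comparison CDF rank is meant to capture.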

Lots of recent work

- See www.fatml.org
- Some highlights:
  - “Fairness Through Awareness,” Dwork, Hardt, Pitassi, Reingold, Zemel. ITCS 2012.
  - “Inherent Tradeoffs of Fair Determination of Risk Scores,” Kleinberg, Mullainathan, Raghavan. ITCS 2016.
  - “Fair Prediction with Disparate Impact: A Study of Bias in Recidivism Prediction,” Chouldechova. 2017.
  - “Equal Opportunity in Supervised Learning,” Hardt, Price, Srebro. NIPS 2016.

From This Talk:

- Fairness in Learning: Classic and Contextual Bandits. Joseph, Kearns, Morgenstern, Roth. NIPS 2016.
- Fairness Incentives for Myopic Agents. Kannan, Kearns, Morgenstern, Pai, Roth, Vohra, Wu. EC 2017.
- Fairness in Reinforcement Learning. Jabbari, Joseph, Kearns, Morgenstern, Roth. ICML 2017.
- Meritocratic Fairness for Cross Population Selection. Kearns, Roth, Wu. ICML 2017.
- Fair Algorithms for Infinite and Contextual Bandits. Joseph, Kearns, Morgenstern, Neel, Roth. 2017.
- A Convex Framework for Fair Regression. Berk, Heidari, Jabbari, Joseph, Kearns, Morgenstern, Neel, Roth. 2017.

Collaborators: Richard Berk, Hoda Heidari, Shahin Jabbari, Matthew Joseph, Sampath Kannan, Michael Kearns, Jamie Morgenstern, Seth Neel, Mallesh Pai, Rakesh Vohra, Steven Wu

Thanks!
