Understanding AlphaGo

- Slides: 24

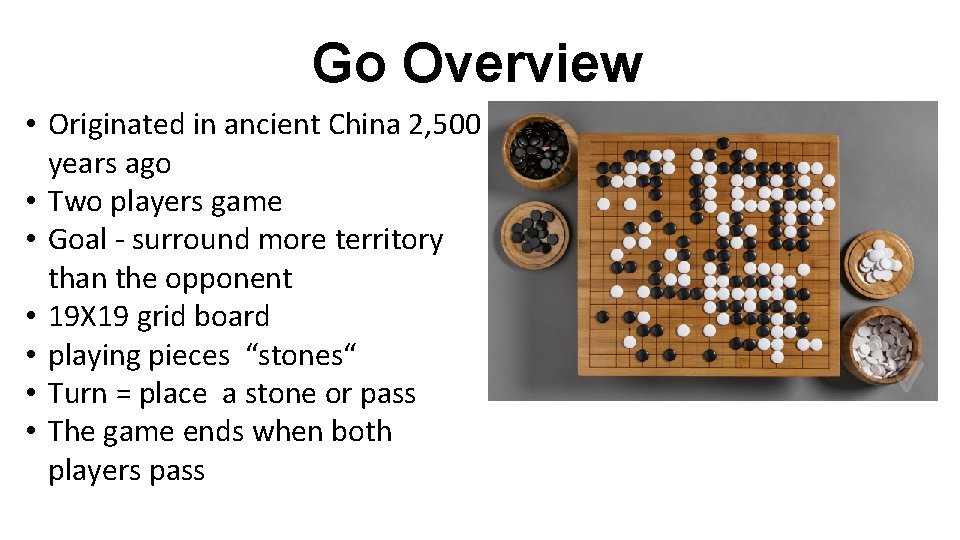

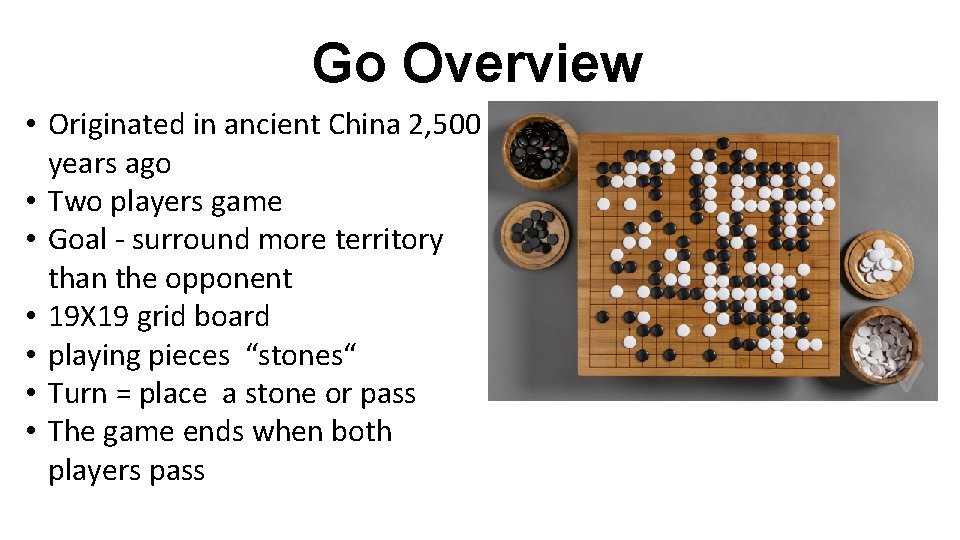

Go Overview
• Originated in ancient China about 2,500 years ago
• Two-player game
• Goal: surround more territory than the opponent
• Played on a 19 × 19 grid board
• The playing pieces are called "stones"
• On each turn, a player either places a stone or passes
• The game ends when both players pass in succession

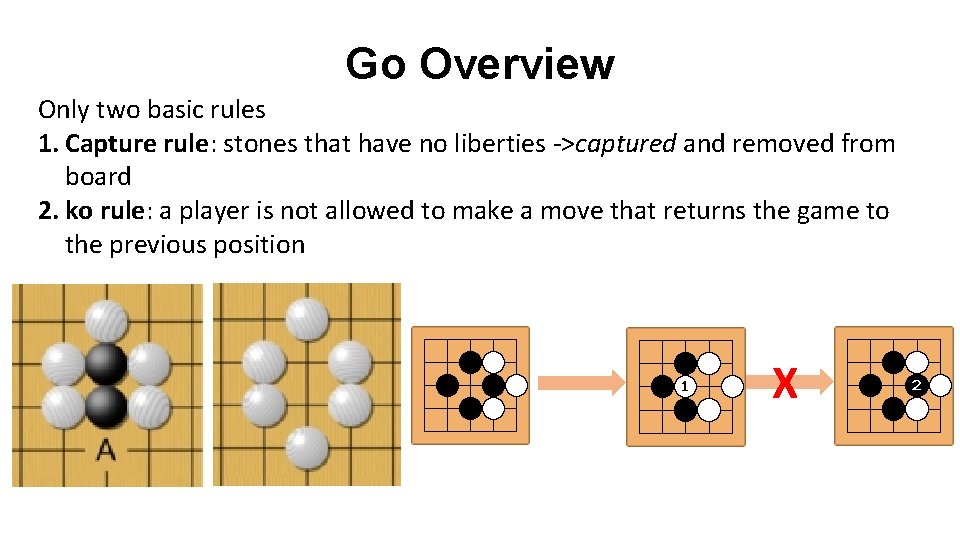

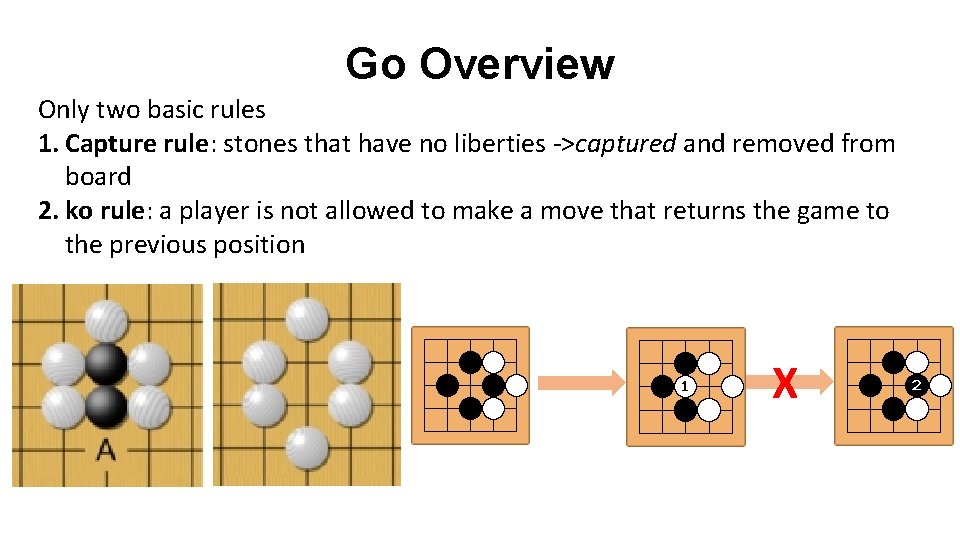

Go Overview
Only two basic rules:
1. Capture rule: stones that have no liberties (no adjacent empty points) are captured and removed from the board
2. Ko rule: a player is not allowed to make a move that returns the game to the previous position
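The capture rule comes down to counting a group's liberties. Below is a minimal sketch, assuming a hypothetical board encoding (a list of rows with '.' for empty, 'B' for black, 'W' for white). It flood-fills a group of connected stones, collects its liberties, and removes groups that have none. The ko rule and the order in which captures are resolved after a move are not modeled here.

```python
from typing import List, Set, Tuple

Board = List[List[str]]  # hypothetical encoding: '.' empty, 'B' black, 'W' white
Point = Tuple[int, int]


def group_and_liberties(board: Board, row: int, col: int) -> Tuple[Set[Point], Set[Point]]:
    """Flood-fill the group of stones containing (row, col); return (stones, liberties)."""
    color = board[row][col]
    size = len(board)
    stones: Set[Point] = set()
    liberties: Set[Point] = set()
    stack = [(row, col)]
    while stack:
        r, c = stack.pop()
        if (r, c) in stones:
            continue
        stones.add((r, c))
        # Only orthogonal neighbours count in Go (no diagonals).
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < size and 0 <= nc < size:
                if board[nr][nc] == ".":
                    liberties.add((nr, nc))
                elif board[nr][nc] == color:
                    stack.append((nr, nc))
    return stones, liberties


def remove_captured(board: Board, color: str) -> int:
    """Capture rule: remove every `color` group with no liberties; return stones removed."""
    removed = 0
    seen: Set[Point] = set()
    for r in range(len(board)):
        for c in range(len(board)):
            if board[r][c] == color and (r, c) not in seen:
                stones, liberties = group_and_liberties(board, r, c)
                seen |= stones
                if not liberties:
                    for sr, sc in stones:
                        board[sr][sc] = "."
                    removed += len(stones)
    return removed


# A white stone surrounded on all four sides has no liberties and is captured.
board = [list(".B."), list("BWB"), list(".B.")]
print(remove_captured(board, "W"))  # 1
```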

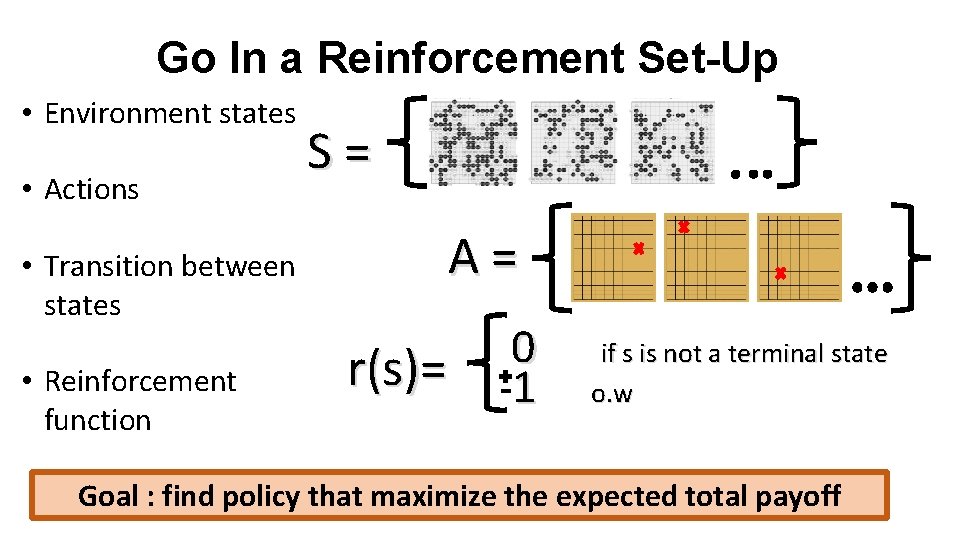

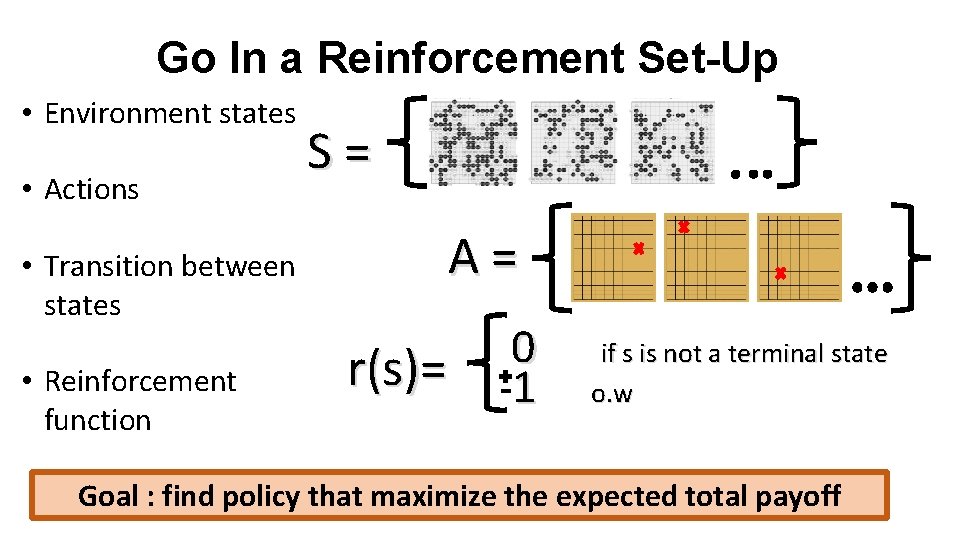

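Go in a Reinforcement Learning Setup
• Environment states S: Go board positions
• Actions A: legal moves (place a stone or pass)
• Transitions between states: each move produces the next board position
• Reinforcement (reward) function:
$$r(s) = \begin{cases} 0 & \text{if } s \text{ is not a terminal state} \\ \pm 1 & \text{otherwise } (+1 \text{ for a win}, -1 \text{ for a loss}) \end{cases}$$
• Goal: find a policy that maximizes the expected total payoff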

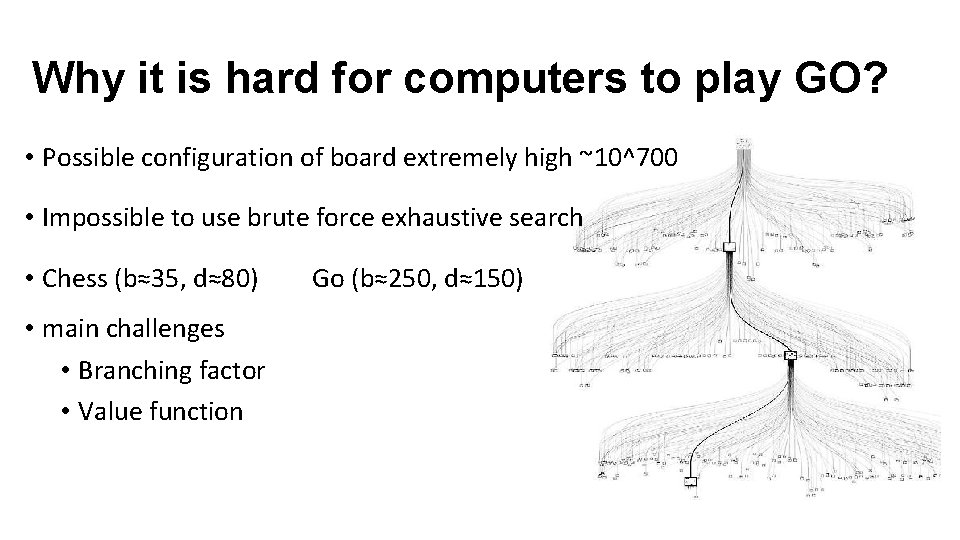

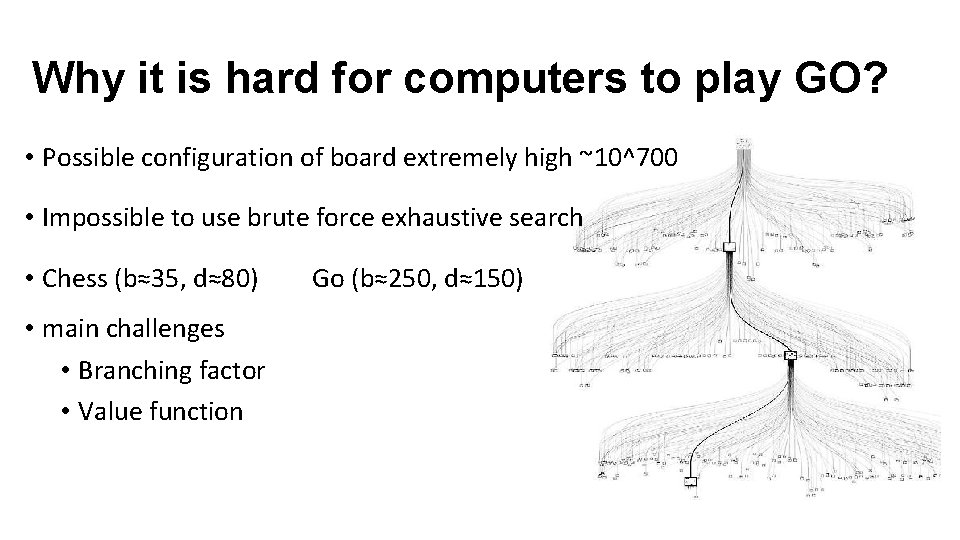

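Why is it hard for computers to play Go?
• The search space is extremely large: exhaustive search would explore roughly b^d ≈ 250^150 ≈ 10^360 move sequences (b = breadth, the number of legal moves per position; d = depth, the game length)
• Brute-force exhaustive search is therefore impossible
• Chess: b ≈ 35, d ≈ 80; Go: b ≈ 250, d ≈ 150
• Main challenges:
  • Branching factor (the breadth of the search)
  • Value function (evaluating a position without searching to the end of the game)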
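The size of a full game tree can be estimated as b^d. A minimal sketch of that arithmetic, working in log10 to avoid huge integers, using the breadth and depth figures quoted on the slide:

```python
import math


def log10_tree_size(breadth: float, depth: float) -> float:
    """Return log10(b ** d): the order of magnitude of move sequences in a full search tree."""
    return depth * math.log10(breadth)


for game, b, d in (("Chess", 35, 80), ("Go", 250, 150)):
    print(f"{game}: {b}^{d} ~ 10^{log10_tree_size(b, d):.0f}")
# Chess: 35^80 ~ 10^124
# Go: 250^150 ~ 10^360
```

Go's search space is larger than chess's by more than 200 orders of magnitude, which is why both the breadth (via a policy) and the depth (via a value function) have to be cut down.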

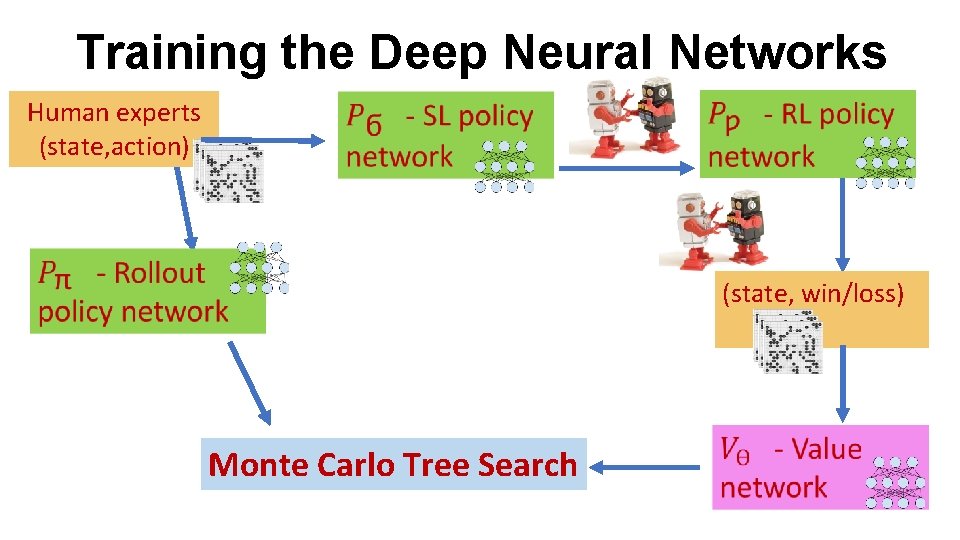

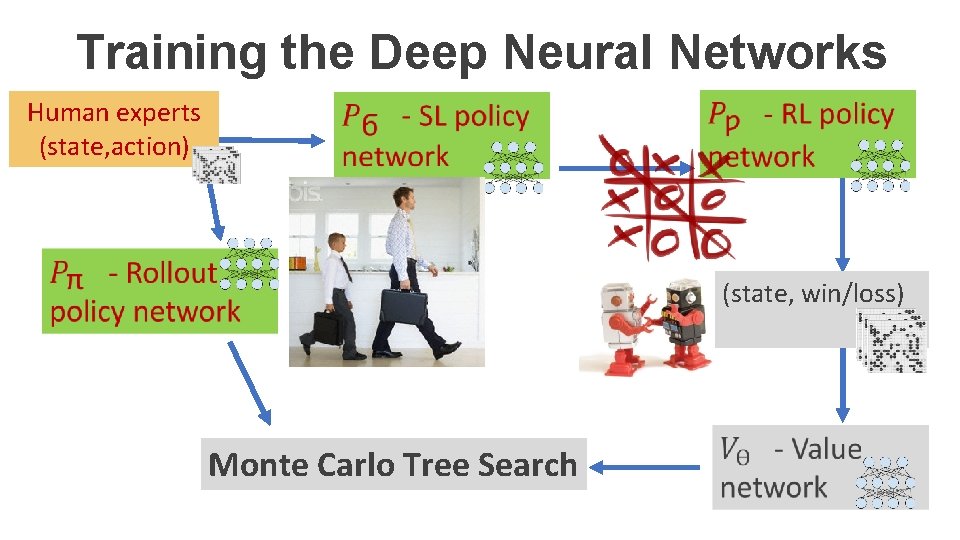

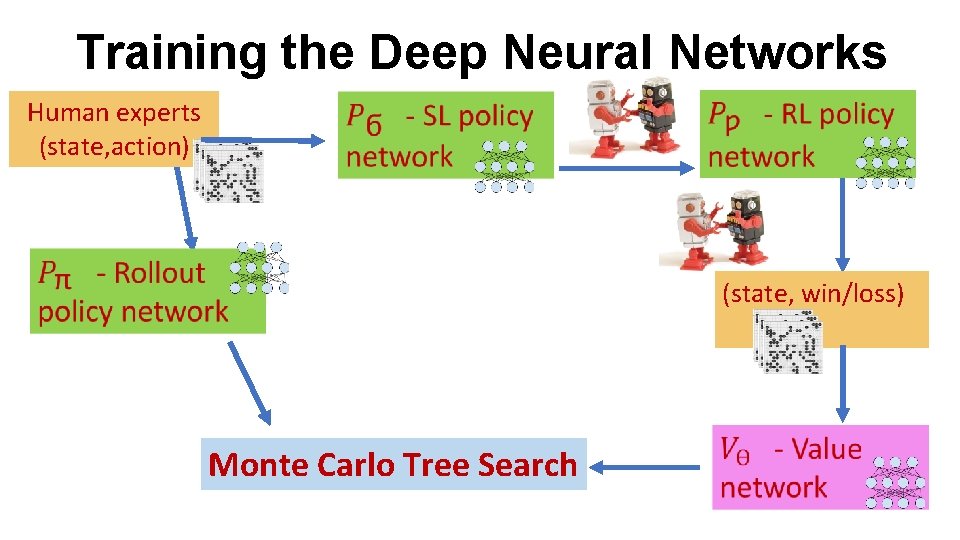

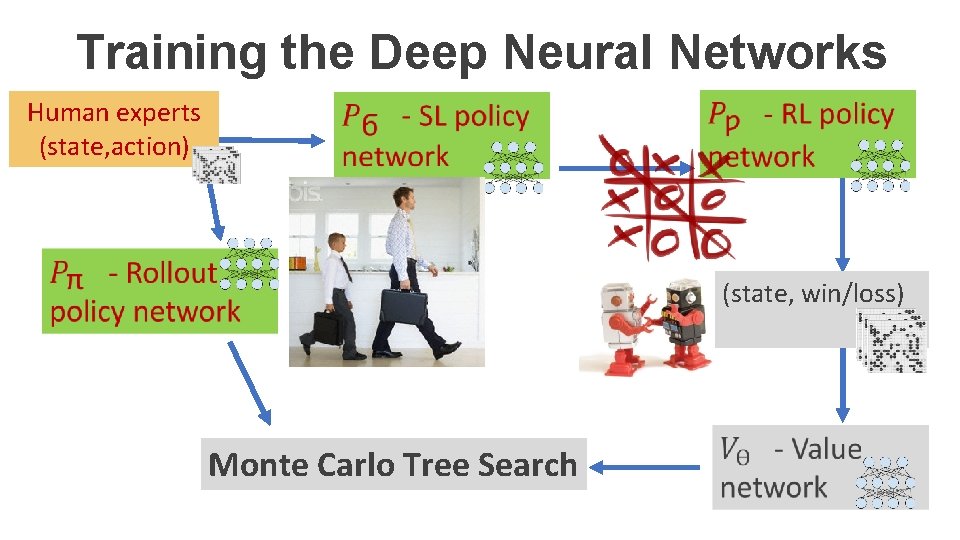

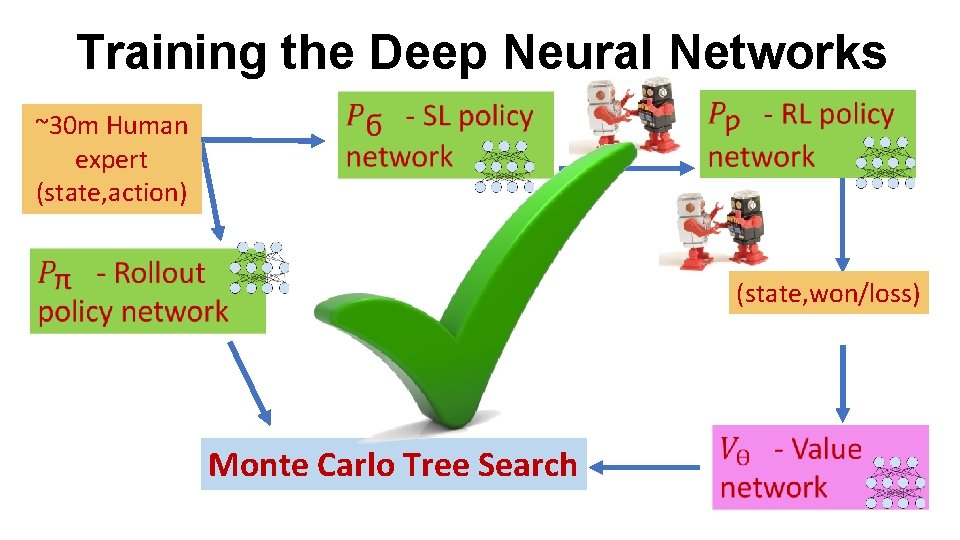

Training the Deep Neural Networks
(Pipeline figure: human expert (state, action) data and (state, win/loss) data train the networks used by Monte Carlo Tree Search)

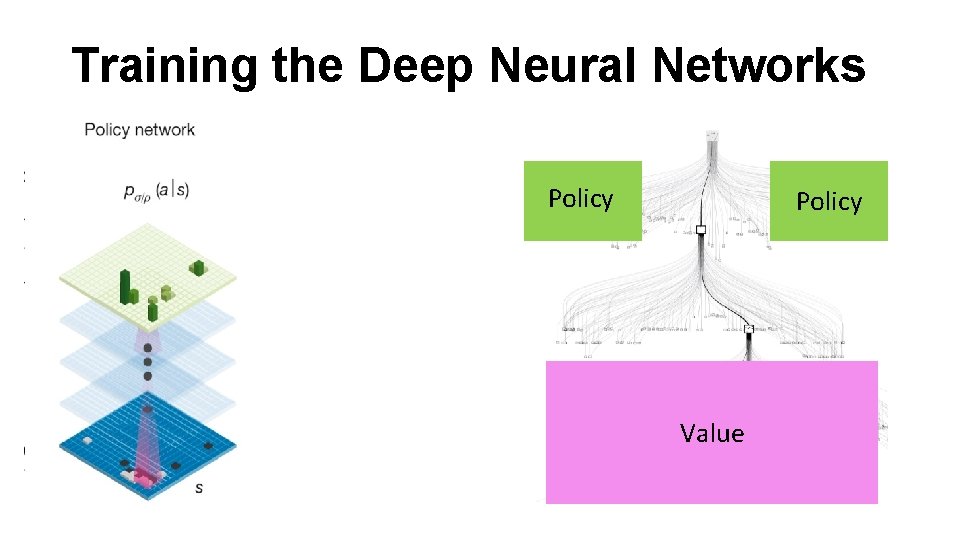

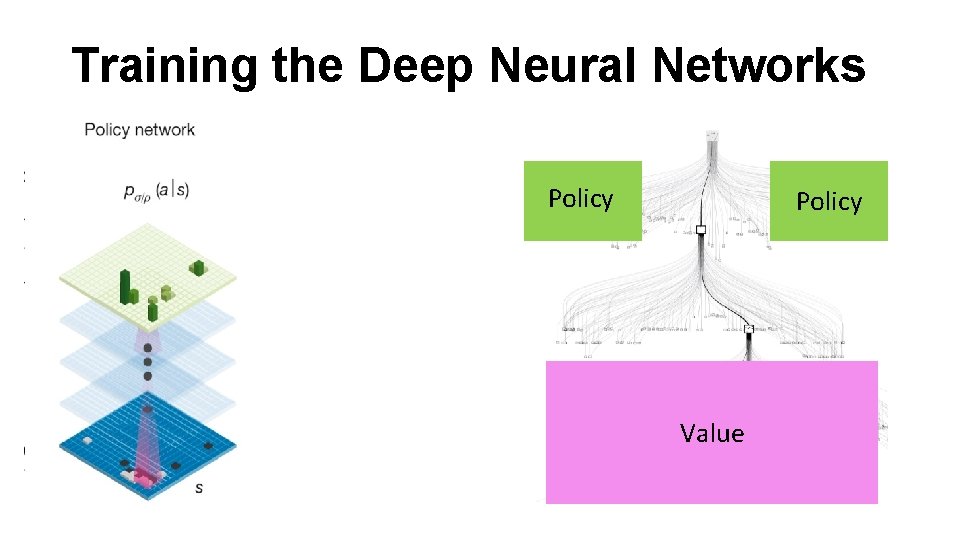

Training the Deep Neural Networks: the policy network and the value network

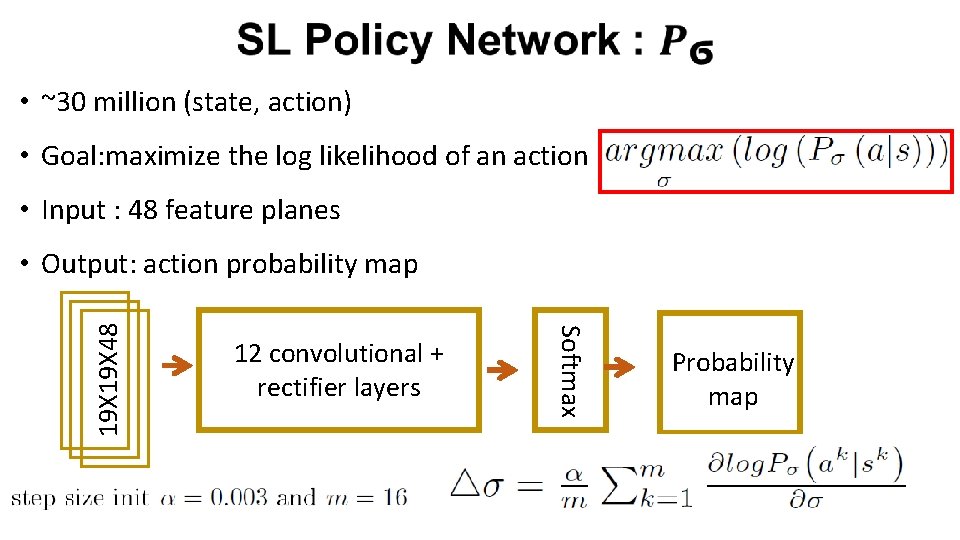

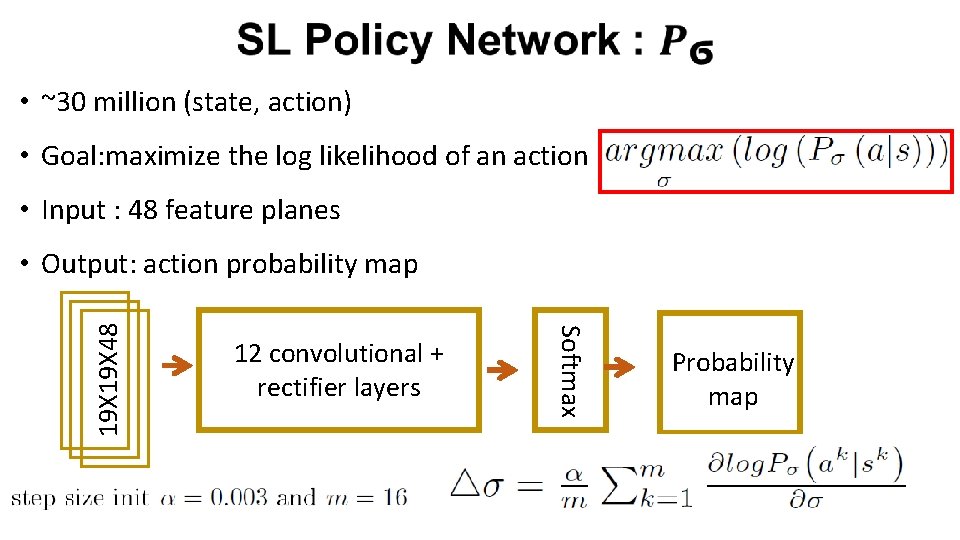

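Supervised learning (SL) policy network
• Training data: ~30 million (state, action) pairs from human expert games
• Goal: maximize the log likelihood of the expert's action in each state
• Input: 19 × 19 × 48 feature planes
• Architecture: 12 convolutional + rectifier layers, followed by a softmax
• Output: a probability map over all moves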
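The "maximize the log likelihood" objective can be shown on the network's output layer alone. This is a minimal sketch under a hypothetical simplification: the 361 output logits are treated as directly trainable parameters instead of being produced by the 12 convolutional layers. It uses the standard softmax gradient, d log p(a|s) / d logit_k = 1[k = a] − p_k.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: turns raw scores into a probability map."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def log_likelihood(logits: List[float], expert_action: int) -> float:
    """log p(a | s) of the expert's move under the softmax policy."""
    return math.log(softmax(logits)[expert_action])


def sga_step(logits: List[float], expert_action: int, lr: float = 0.1) -> List[float]:
    """One stochastic gradient ascent step on log p(a | s)."""
    probs = softmax(logits)
    return [x + lr * ((1.0 if k == expert_action else 0.0) - p)
            for k, (x, p) in enumerate(zip(logits, probs))]


# Hypothetical example: one logit per intersection of a 19 x 19 board, all equal at start.
logits = [0.0] * (19 * 19)
before = log_likelihood(logits, expert_action=72)
logits = sga_step(logits, expert_action=72)
after = log_likelihood(logits, expert_action=72)
print(after > before)  # True: the expert's move became more likely
```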

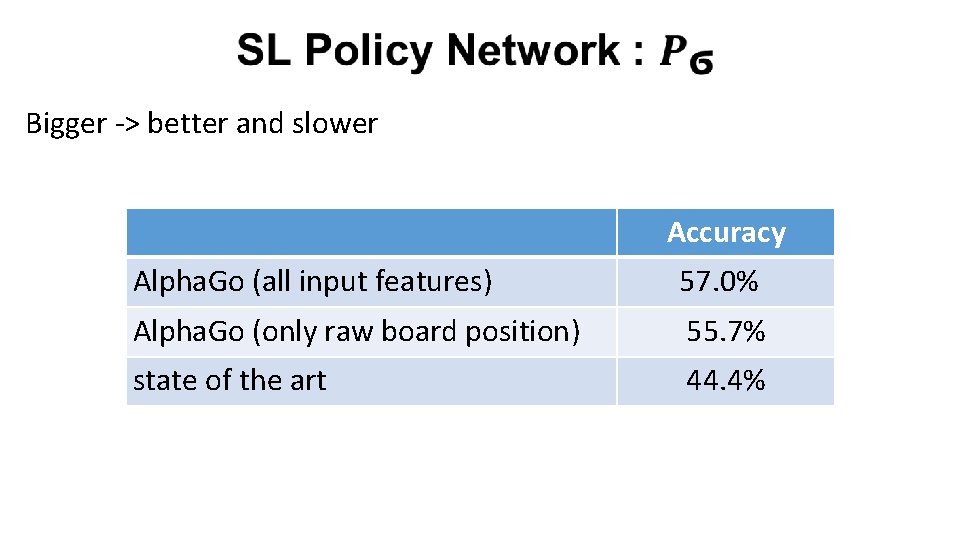

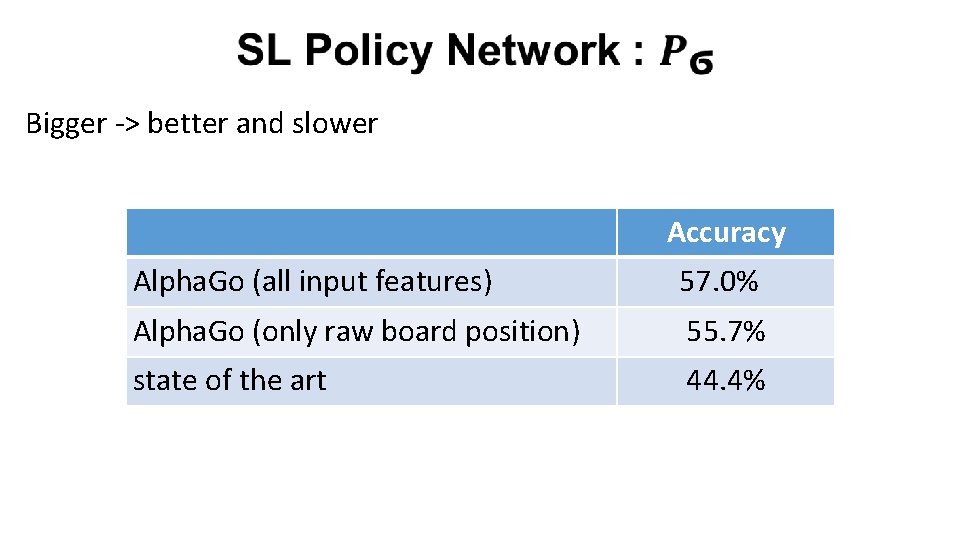

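Bigger networks → better accuracy, but slower evaluation

| Policy network | Accuracy (predicting the expert move) |
|---|---|
| AlphaGo (all input features) | 57.0% |
| AlphaGo (raw board position only) | 55.7% |
| Previous state of the art | 44.4% |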

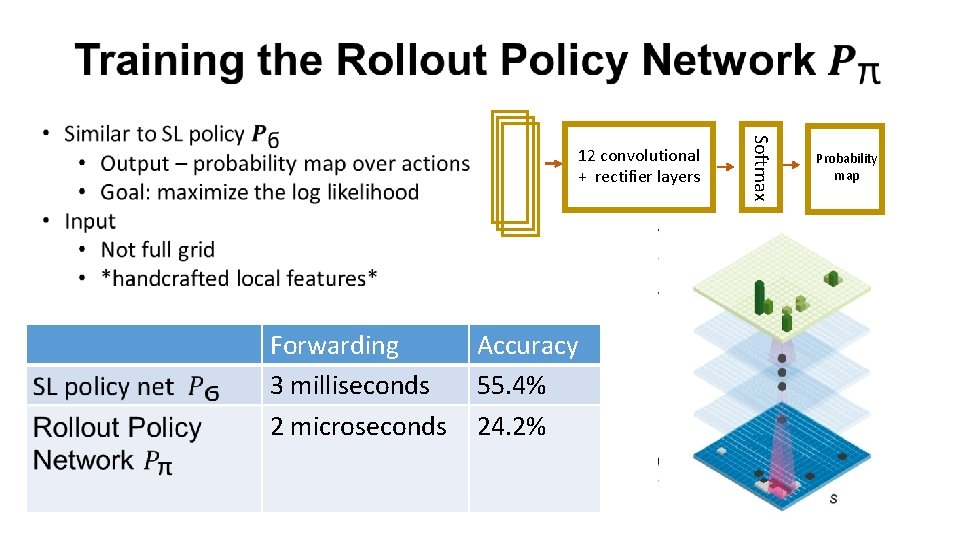

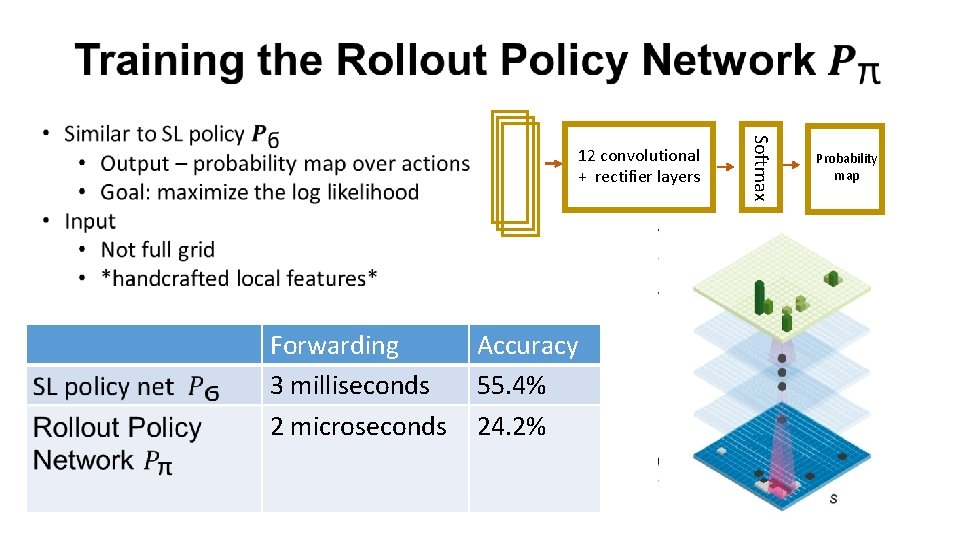

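Fast rollout policy vs. SL policy network (the SL network is 12 convolutional + rectifier layers with a softmax output producing a probability map)

| | SL policy network | Rollout policy |
|---|---|---|
| Forward pass time | 3 milliseconds | 2 microseconds |
| Accuracy | 55.4% | 24.2% |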
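The rollout policy trades accuracy for speed. In AlphaGo it is a linear softmax over small pattern features, so choosing a move costs a few dot products instead of a deep network pass. The sketch below shows that shape. The three features, their weights and the candidate moves are hypothetical placeholders, not AlphaGo's actual pattern set.

```python
import math
import random
from typing import Dict, List


def rollout_policy_probs(move_features: Dict[str, List[float]],
                         weights: List[float]) -> Dict[str, float]:
    """Linear softmax: score each legal move by weights · features, then normalize."""
    scores = {m: sum(w * f for w, f in zip(weights, feats))
              for m, feats in move_features.items()}
    top = max(scores.values())
    exps = {m: math.exp(s - top) for m, s in scores.items()}
    total = sum(exps.values())
    return {m: e / total for m, e in exps.items()}


def sample_move(probs: Dict[str, float], rng: random.Random) -> str:
    """Sample one move in proportion to its probability (rollouts are stochastic)."""
    moves = list(probs)
    return rng.choices(moves, weights=[probs[m] for m in moves], k=1)[0]


# Hypothetical features per move: [captures stones, escapes atari, adjacent to last move]
weights = [2.0, 1.5, 0.5]
candidates = {"D4": [1.0, 0.0, 1.0], "Q16": [0.0, 0.0, 0.0], "C3": [0.0, 1.0, 0.0]}
probs = rollout_policy_probs(candidates, weights)
move = sample_move(probs, random.Random(0))
print(max(probs, key=probs.get))  # D4 has the highest score (2.5)
```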

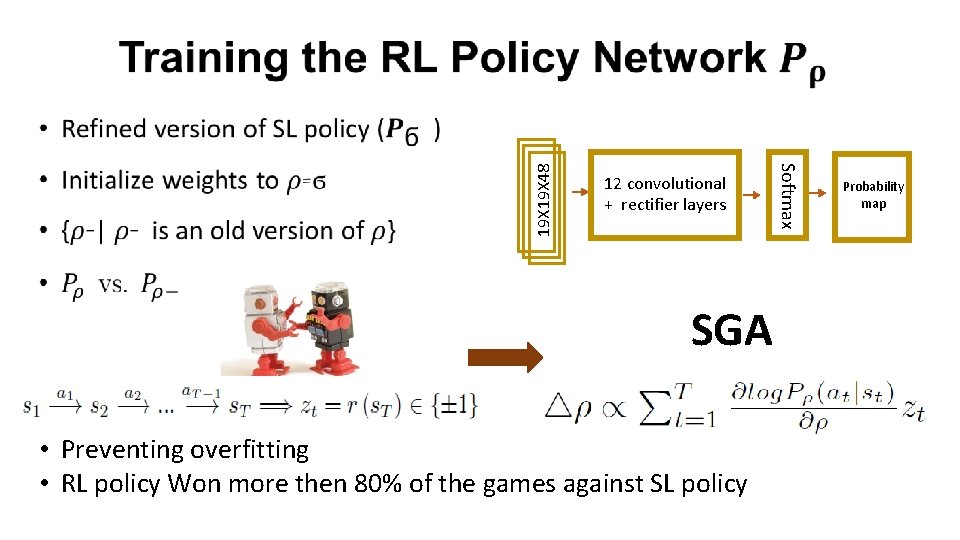

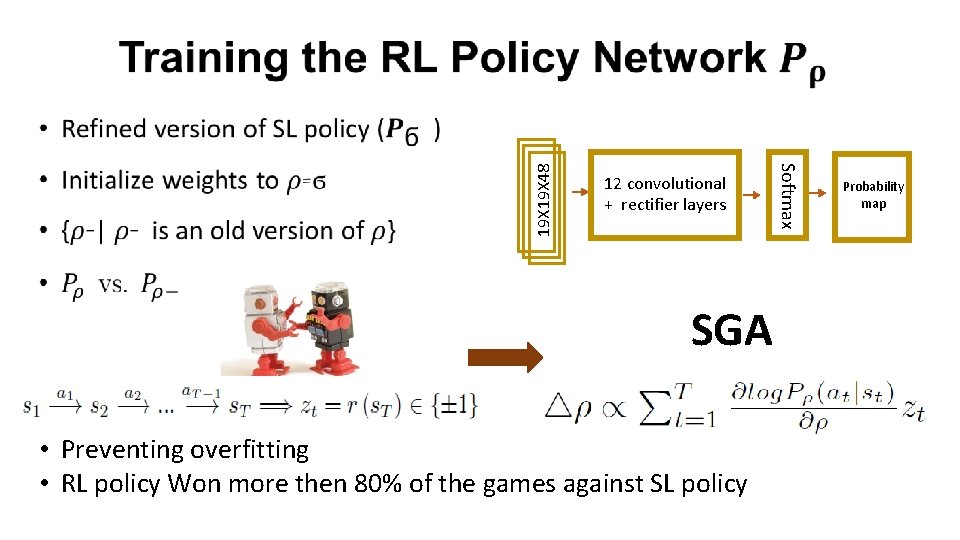

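Reinforcement learning (RL) policy network
• Same architecture as the SL policy network (12 convolutional + rectifier layers, softmax output producing a probability map), initialized with the SL policy's weights
• Trained by self-play with stochastic gradient ascent (SGA) to maximize the expected game outcome
• Preventing overfitting: opponents are drawn at random from a pool of earlier versions of the policy, rather than always playing the current one
• The RL policy won more than 80% of its games against the SL policy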
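The RL policy's update is a policy gradient (REINFORCE-style) step: after a self-play game with outcome z, nudge the log probability of every move the learner played by lr · z. A minimal sketch, under a hypothetical simplification where one shared logit vector stands in for the network, so p(a_t | s_t) is the same softmax at every move:

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_update(logits: List[float], actions: List[int], z: float,
                     lr: float = 0.1) -> List[float]:
    """One policy-gradient step: logits += lr * z * sum_t grad log p(a_t).

    z is the final outcome from the learner's perspective: +1 win, -1 loss.
    """
    probs = softmax(logits)
    grad = [0.0] * len(logits)
    for a in actions:
        for k, p in enumerate(probs):
            grad[k] += (1.0 if k == a else 0.0) - p
    return [x + lr * z * g for x, g in zip(logits, grad)]


# The learner played move 1 twice; a win reinforces it, a loss discourages it.
won = reinforce_update([0.0, 0.0, 0.0], actions=[1, 1], z=+1.0)
lost = reinforce_update([0.0, 0.0, 0.0], actions=[1, 1], z=-1.0)
print(softmax(won)[1] > 1 / 3 > softmax(lost)[1])  # True
```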

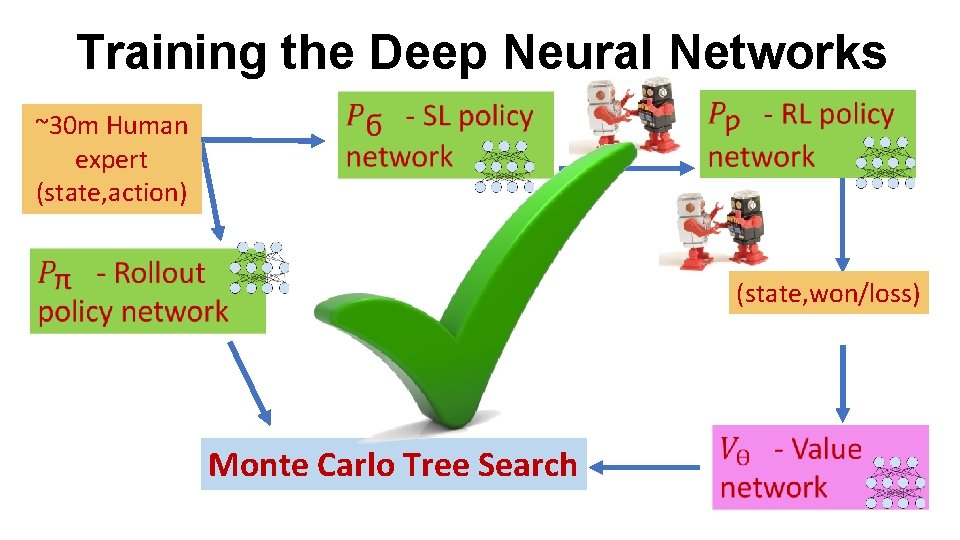

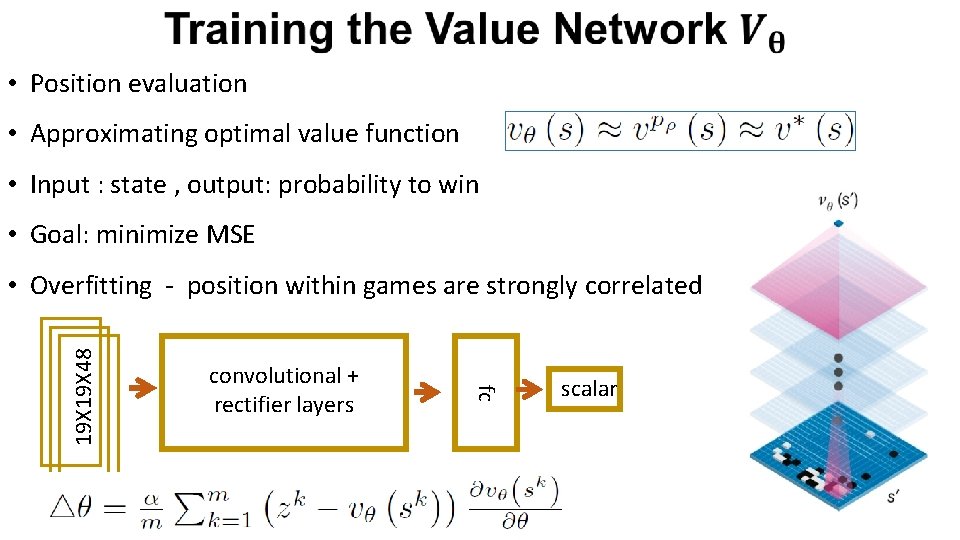

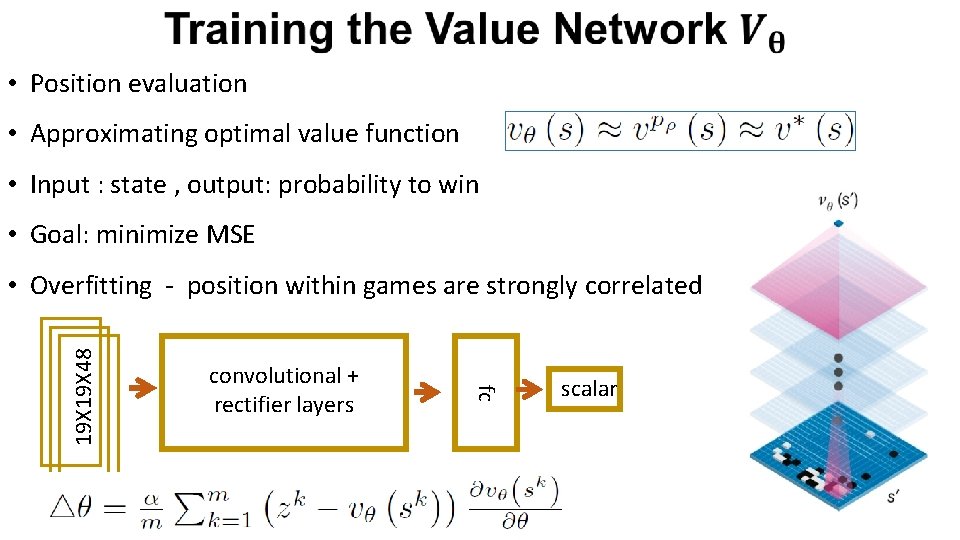

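Value network
• Position evaluation: approximates the optimal value function
• Input: a state (19 × 19 × 48 feature planes); output: a single scalar, the probability of winning
• Architecture: convolutional + rectifier layers followed by fully connected (fc) layers
• Goal: minimize the mean squared error (MSE) between the prediction and the game outcome
• Overfitting: successive positions within a game are strongly correlated (they differ by a single stone but share the same outcome), so AlphaGo trains on one position sampled from each of ~30 million distinct self-play games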
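The two ideas on this slide, decorrelated training data and an MSE objective, fit in a few lines. A minimal sketch, assuming a hypothetical encoding in which each game is a list of opaque position labels with a final outcome z = +1 (win) or −1 (loss):

```python
import random
from typing import List, Tuple

Game = List[str]             # hypothetical: a game as a list of encoded positions
Example = Tuple[str, float]  # (position, outcome z)


def one_position_per_game(games: List[Game], outcomes: List[float],
                          rng: random.Random) -> List[Example]:
    """Sample a single position from each game so training examples are not correlated."""
    return [(rng.choice(game), z) for game, z in zip(games, outcomes)]


def mse(predictions: List[float], targets: List[float]) -> float:
    """Mean squared error between predicted values v(s) and game outcomes z."""
    return sum((v - z) ** 2 for v, z in zip(predictions, targets)) / len(targets)


games = [["g0_p1", "g0_p2", "g0_p3"], ["g1_p1", "g1_p2"]]
examples = one_position_per_game(games, [1.0, -1.0], random.Random(0))
print(len(examples))                  # 2: one example per game
print(mse([0.5, -0.5], [1.0, -1.0]))  # 0.25
```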

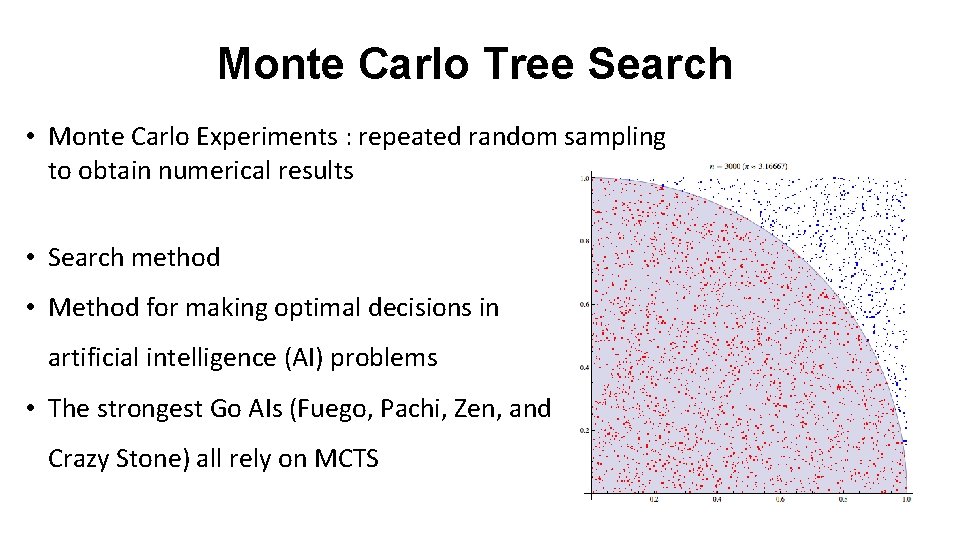

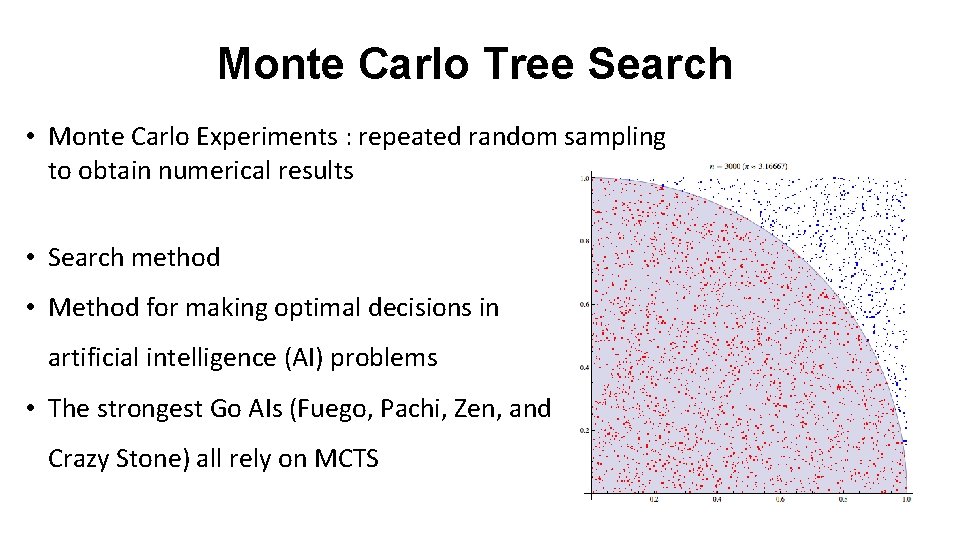

Monte Carlo Tree Search
• Monte Carlo experiments: repeated random sampling to obtain numerical results
• A search method for making (near-)optimal decisions in artificial intelligence (AI) problems
• The strongest Go programs before AlphaGo (Fuego, Pachi, Zen, and Crazy Stone) all rely on MCTS

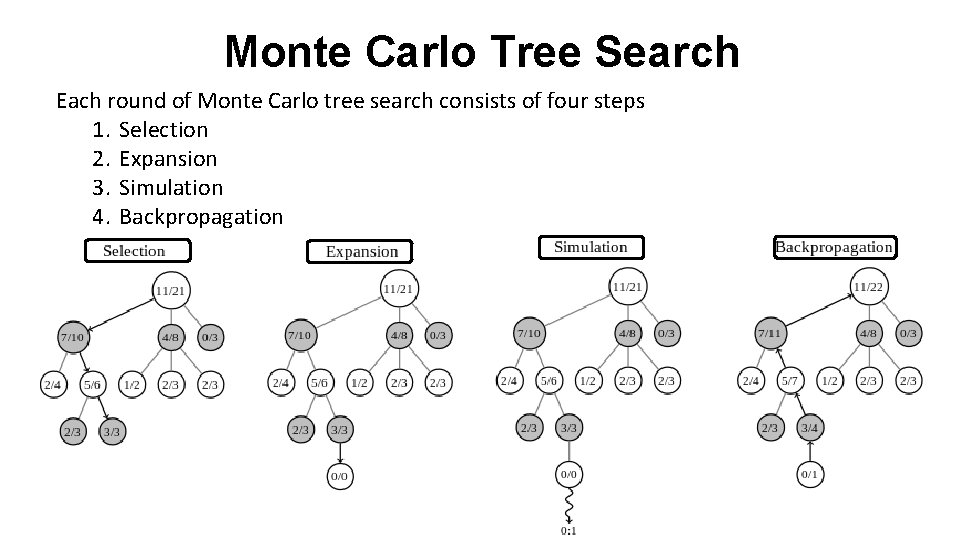

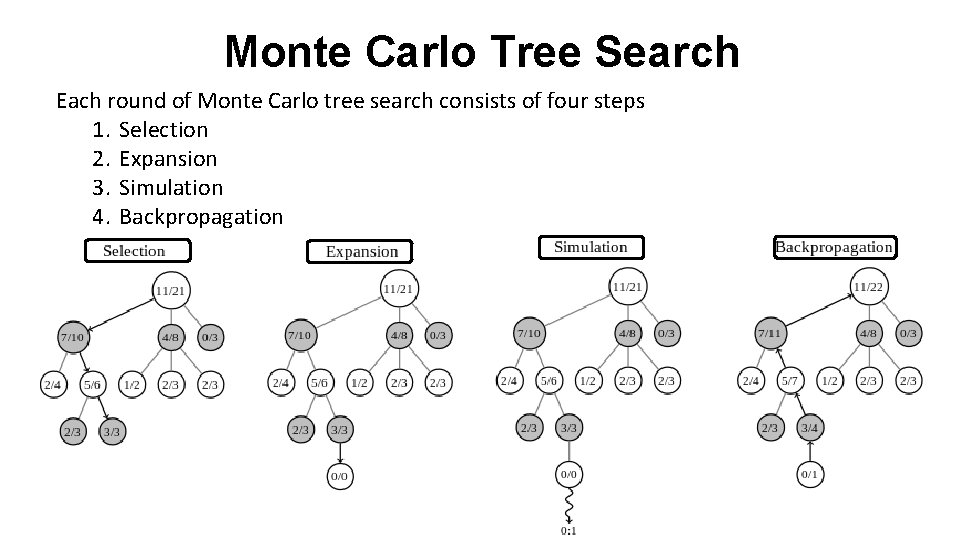

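Monte Carlo Tree Search
Each round of Monte Carlo tree search consists of four steps:
1. Selection: starting at the root, repeatedly pick a child until reaching a node with unexplored moves
2. Expansion: add one new child node for an unexplored move
3. Simulation: play a random game (a rollout) from the new node to the end
4. Backpropagation: update the visit and win counts of every node on the path back to the root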
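The four steps can be seen end to end on a game far smaller than Go. The sketch below swaps in single-pile Nim as a hypothetical stand-in (take 1 to 3 stones; whoever takes the last stone wins) and uses UCT for selection. It is a plain textbook MCTS, not AlphaGo's variant: there are no neural networks, and rollouts are uniformly random.

```python
import math
import random
from typing import Dict, List, Optional

MAX_TAKE = 3  # toy game: take 1-3 stones; whoever takes the last stone wins


def legal_moves(stones: int) -> List[int]:
    return [k for k in range(1, MAX_TAKE + 1) if k <= stones]


class Node:
    def __init__(self, stones: int, parent: Optional["Node"] = None, move: int = 0):
        self.stones = stones                    # stones left in this position
        self.parent = parent
        self.move = move                        # move that led here (0 at the root)
        self.children: Dict[int, "Node"] = {}
        self.untried = legal_moves(stones)
        self.visits = 0
        self.wins = 0.0                         # wins for the player who made `move`


def uct(child: Node, parent_visits: int, c: float = math.sqrt(2)) -> float:
    return child.wins / child.visits + c * math.sqrt(math.log(parent_visits) / child.visits)


def mcts(stones: int, iterations: int, rng: random.Random) -> int:
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes, picking the best UCT child.
        while not node.untried and node.children:
            parent = node
            node = max(parent.children.values(), key=lambda ch: uct(ch, parent.visits))
        # 2. Expansion: add one untried move as a new child.
        if node.untried:
            move = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.stones - move, parent=node, move=move)
            node.children[move] = child
            node = child
        # 3. Simulation: random playout; track whether the player who moved into `node` wins.
        remaining, mover_took_last = node.stones, True
        while remaining > 0:
            remaining -= rng.choice(legal_moves(remaining))
            mover_took_last = not mover_took_last
        result = 1.0 if mover_took_last else 0.0
        # 4. Backpropagation: update counts up to the root, flipping the winner each ply.
        walker: Optional[Node] = node
        while walker is not None:
            walker.visits += 1
            walker.wins += result
            result = 1.0 - result
            walker = walker.parent
    # Recommend the most-visited root move.
    return max(root.children.values(), key=lambda ch: ch.visits).move


print(mcts(5, 2000, random.Random(0)))
```

From 5 stones the winning move is to take 1 (leaving a multiple of 4), and with a couple of thousand iterations the search should concentrate its visits there.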

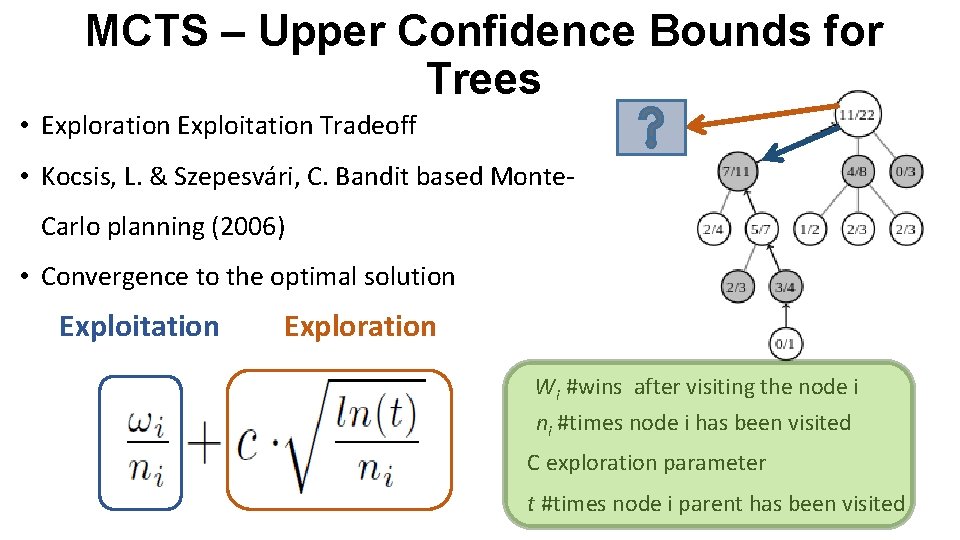

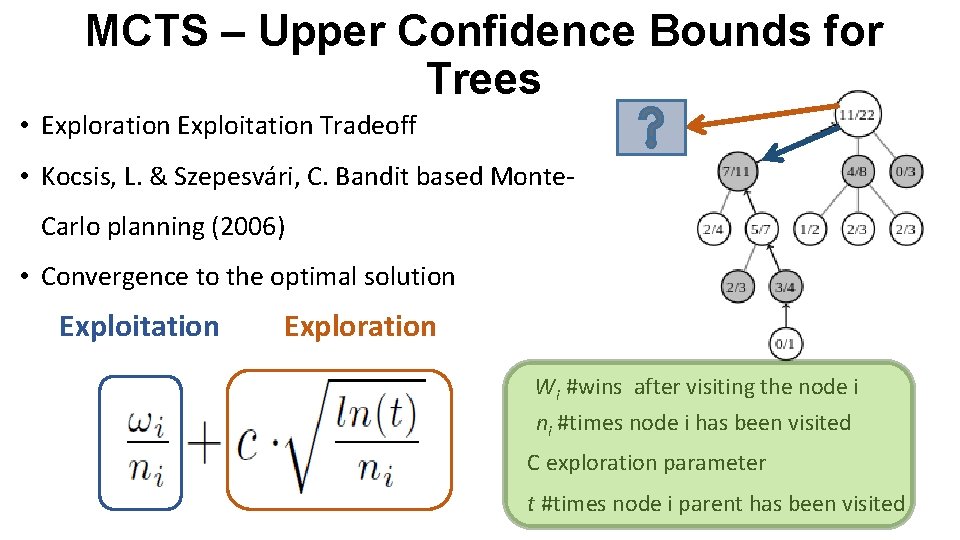

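MCTS: Upper Confidence Bounds for Trees (UCT)
• Balances the exploration/exploitation tradeoff
• Kocsis, L. & Szepesvári, C. Bandit based Monte-Carlo planning (2006)
• Converges to the optimal solution given enough simulations

Each child i is scored as

$$\text{UCT}_i = \underbrace{\frac{w_i}{n_i}}_{\text{exploitation}} + \underbrace{c\sqrt{\frac{\ln t}{n_i}}}_{\text{exploration}}$$

• w_i: number of wins after visiting node i
• n_i: number of times node i has been visited
• c: exploration parameter
• t: number of times the parent of node i has been visited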
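A small worked example makes the tradeoff concrete. Here c = √2 is a common textbook choice (an assumption, not something the slide specifies), and the win and visit counts are made up for illustration. Child B has the lower win rate but has been tried far less often, so its exploration bonus pushes its score above child A's.

```python
import math


def uct(wins: float, visits: int, parent_visits: int, c: float = math.sqrt(2)) -> float:
    """UCT score = exploitation (w_i / n_i) + exploration (c * sqrt(ln t / n_i))."""
    if visits == 0:
        return math.inf  # unvisited children are tried first
    return wins / visits + c * math.sqrt(math.log(parent_visits) / visits)


# Parent visited t = 100 times. Child A: 60 wins in 80 visits; child B: 9 wins in 20 visits.
a = uct(60, 80, 100)
b = uct(9, 20, 100)
print(60 / 80 > 9 / 20)  # True: A has the better win rate...
print(b > a)             # True: ...but B is selected next, thanks to its exploration bonus
```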

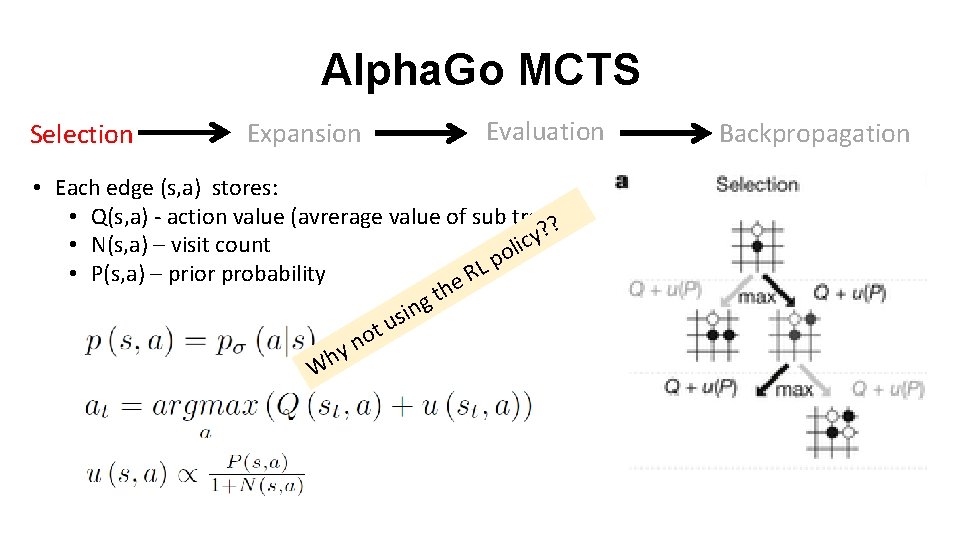

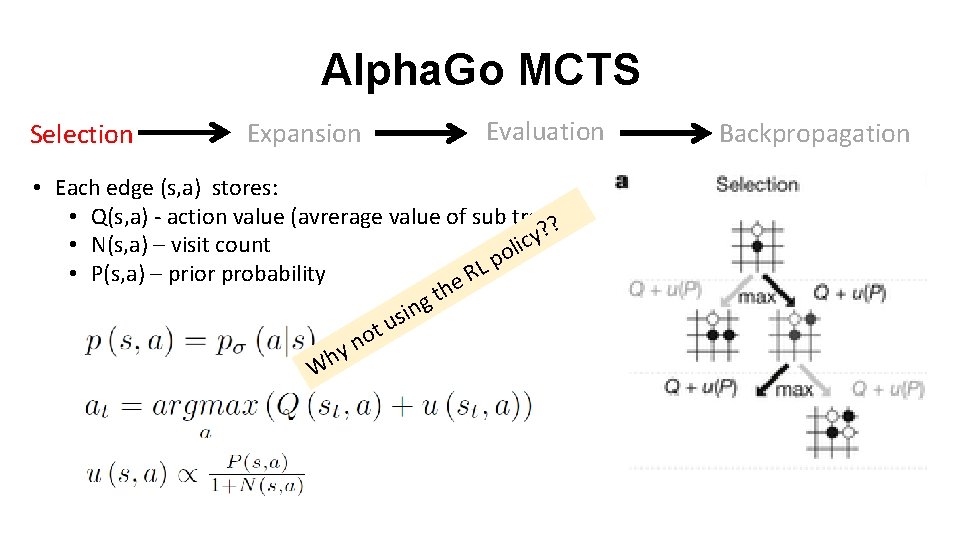

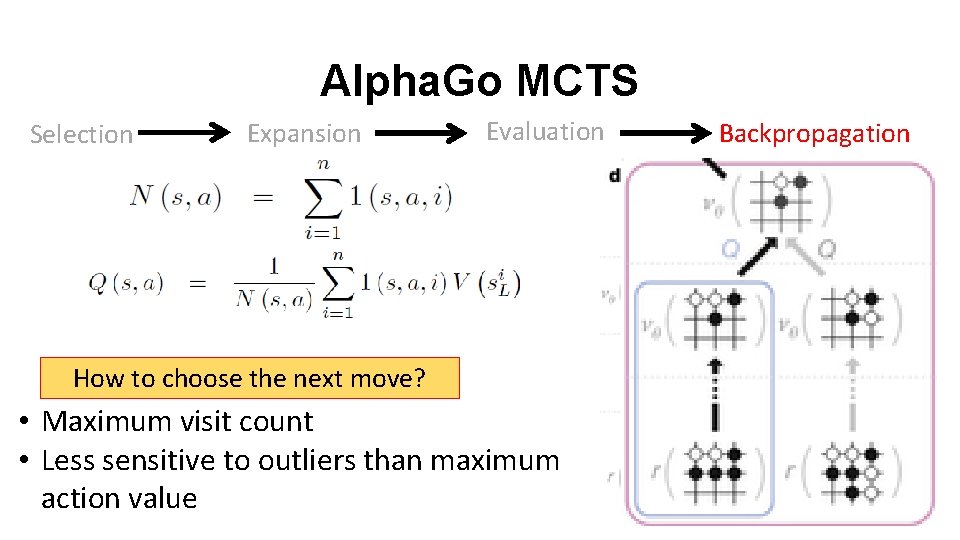

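AlphaGo MCTS: Selection → Expansion → Evaluation → Backpropagation
• Each edge (s, a) of the search tree stores:
  • Q(s, a): action value (the average value of the subtree)
  • N(s, a): visit count
  • P(s, a): prior probability, computed by the SL policy network when the node is expanded
• Why not use the RL policy for the prior? The SL policy performed better in AlphaGo, presumably because human experts select a diverse beam of promising moves, whereas the RL policy optimizes for the single best move.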
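During selection, AlphaGo picks the edge maximizing Q(s, a) + u(s, a), where the bonus u grows with the prior P(s, a) and shrinks as N(s, a) grows. A minimal sketch, assuming the PUCT-style form u = c_puct · P(s, a) · √(Σ_b N(s, b)) / (1 + N(s, a)); the constant c_puct = 5 and the edge statistics are illustrative values.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class EdgeStats:
    prior: float             # P(s, a), from the policy network at expansion time
    visits: int = 0          # N(s, a)
    value_sum: float = 0.0   # total of leaf evaluations backed up through this edge

    @property
    def q(self) -> float:
        """Q(s, a): mean value of the subtree, 0 before the first visit."""
        return self.value_sum / self.visits if self.visits else 0.0


def select_action(edges: Dict[str, EdgeStats], c_puct: float = 5.0) -> str:
    """Return the move maximizing Q(s, a) + u(s, a)."""
    total_visits = sum(e.visits for e in edges.values())

    def score(e: EdgeStats) -> float:
        u = c_puct * e.prior * math.sqrt(total_visits) / (1 + e.visits)
        return e.q + u

    return max(edges, key=lambda a: score(edges[a]))


# Hypothetical statistics: D4 has the top prior but has already been explored heavily.
edges = {
    "D4": EdgeStats(prior=0.6, visits=10, value_sum=5.0),
    "Q16": EdgeStats(prior=0.3, visits=1, value_sum=0.6),
    "C3": EdgeStats(prior=0.1),
}
print(select_action(edges))  # Q16
```

Because the bonus is divided by 1 + N(s, a), the search follows the prior early on and gradually defers to the measured values Q(s, a).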

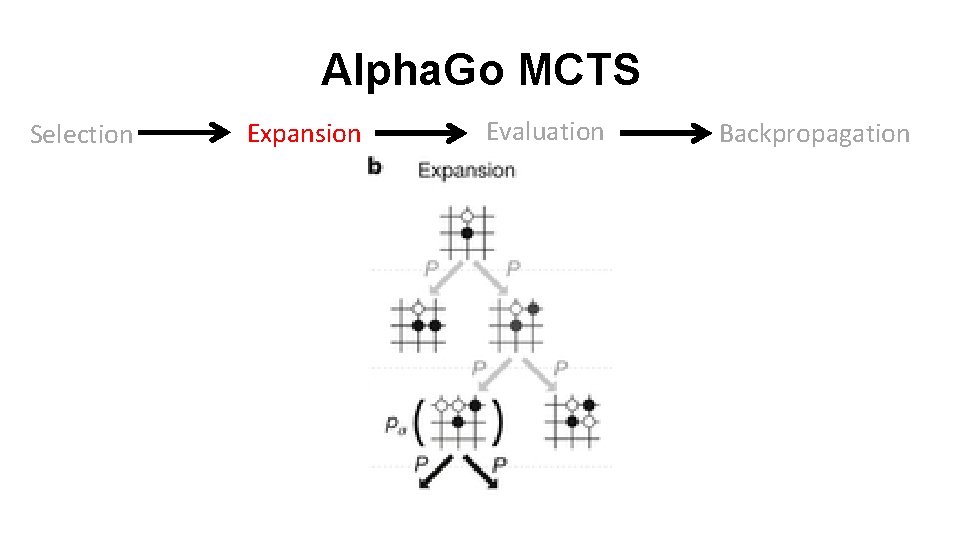

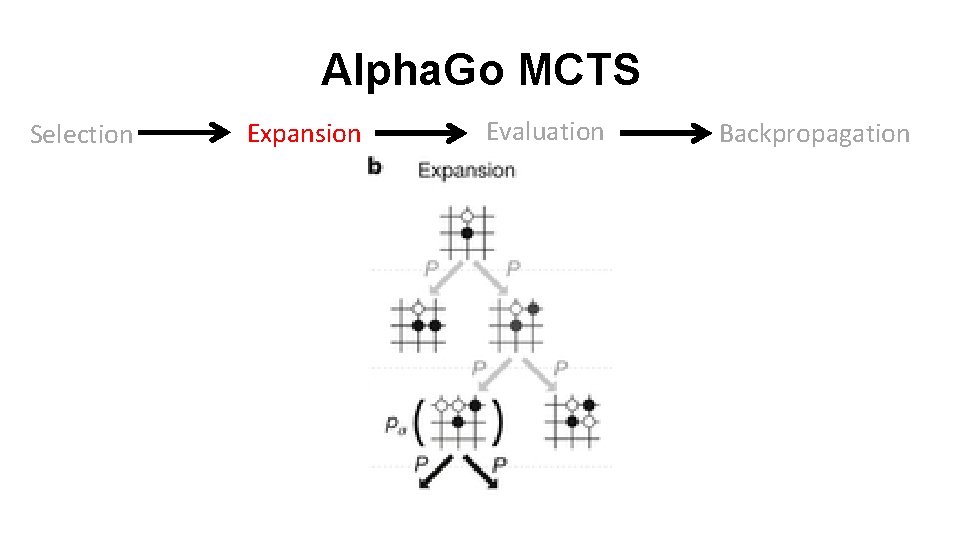

AlphaGo MCTS: Selection → Expansion → Evaluation → Backpropagation

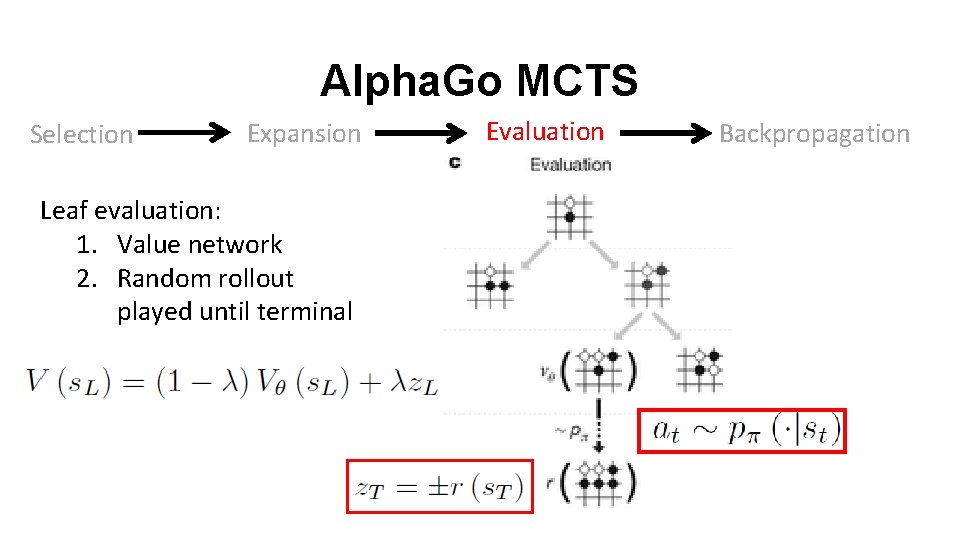

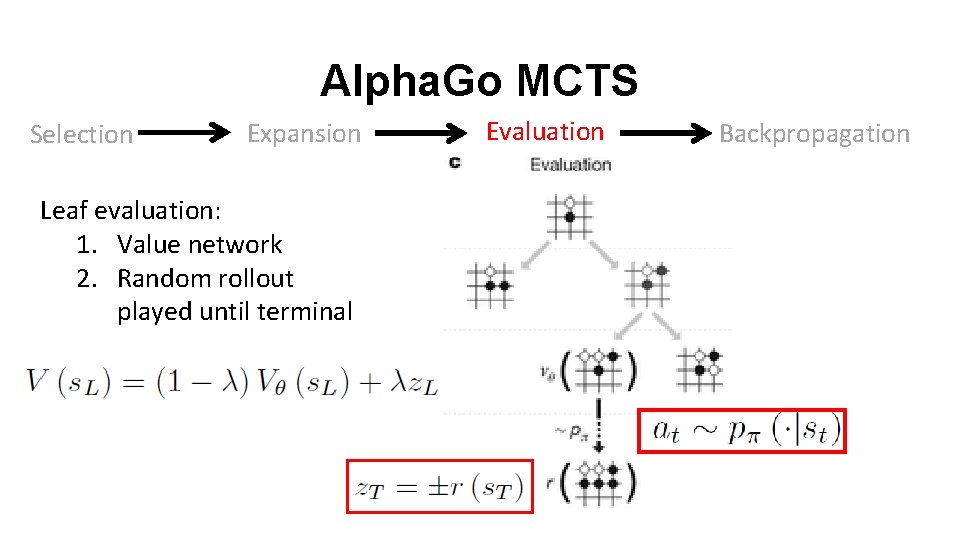

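AlphaGo MCTS: Selection → Expansion → Evaluation → Backpropagation
A leaf position is evaluated in two ways:
1. The value network's estimate for the leaf position
2. A random rollout, sampled with the fast rollout policy, played until a terminal state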
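AlphaGo combines the two leaf estimates with a mixing parameter λ, V(s_L) = (1 − λ) v(s_L) + λ z_L (the paper reports λ = 0.5 working best), and then backs V up along the path. A minimal sketch, assuming both estimates are expressed from the perspective of the player who made the last move on the path, so the sign flips at each ply on the way up:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Edge:
    visits: int = 0          # N(s, a)
    value_sum: float = 0.0   # W(s, a); Q(s, a) = W / N


def leaf_value(value_net_estimate: float, rollout_outcome: float, lam: float = 0.5) -> float:
    """V(s_L) = (1 - lambda) * v(s_L) + lambda * z_L."""
    return (1.0 - lam) * value_net_estimate + lam * rollout_outcome


def backup(path: List[Edge], value: float) -> None:
    """Add the leaf evaluation to every edge on the root-to-leaf path.

    Players alternate, so the value is negated at each step toward the root.
    """
    for edge in reversed(path):
        edge.visits += 1
        edge.value_sum += value
        value = -value


root_edge, leaf_edge = Edge(), Edge()
v = leaf_value(value_net_estimate=0.2, rollout_outcome=1.0)  # 0.6
backup([root_edge, leaf_edge], v)
print(leaf_edge.value_sum, root_edge.value_sum)
```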

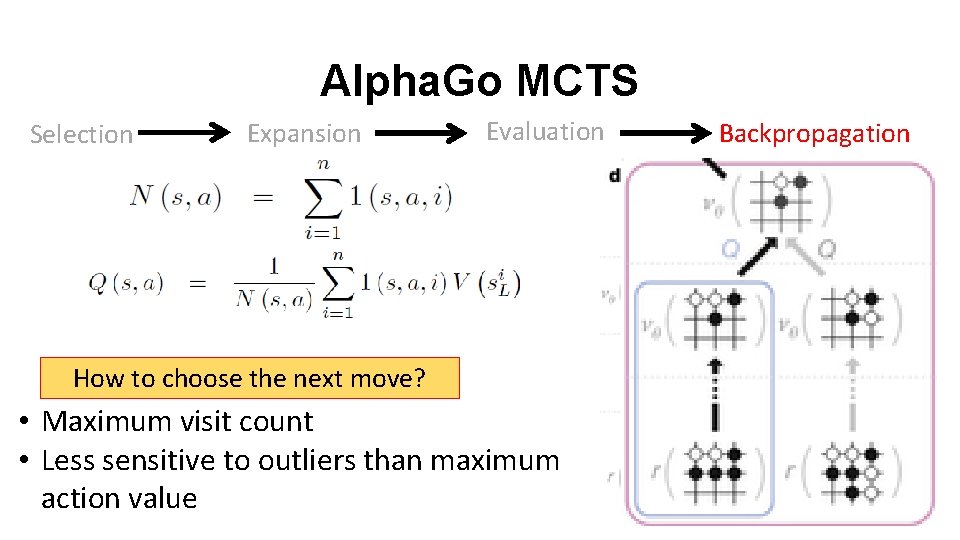

AlphaGo MCTS: Selection → Expansion → Evaluation → Backpropagation
How to choose the next move?
• Pick the root move with the maximum visit count
• This is less sensitive to outliers than picking the maximum action value
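The difference between the two selection rules shows up when a rarely visited move has a lucky, high average value. A minimal sketch with hypothetical root statistics, each move mapped to (N, Q):

```python
from typing import Dict, Tuple

Stats = Dict[str, Tuple[int, float]]  # move -> (visit count N, action value Q)


def choose_by_visits(stats: Stats) -> str:
    """Pick the move with the largest visit count N(s, a)."""
    return max(stats, key=lambda move: stats[move][0])


def choose_by_value(stats: Stats) -> str:
    """Pick the move with the largest action value Q(s, a)."""
    return max(stats, key=lambda move: stats[move][1])


# K10's high Q comes from only 3 visits, so it is an unreliable outlier.
root_stats = {"D4": (1500, 0.56), "Q16": (900, 0.54), "K10": (3, 0.90)}
print(choose_by_visits(root_stats))  # D4
print(choose_by_value(root_stats))   # K10
```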

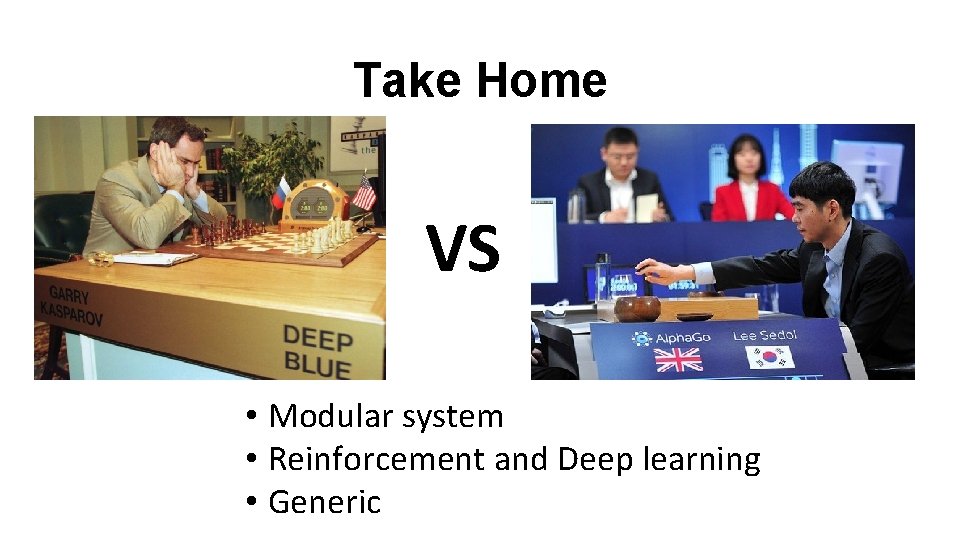

AlphaGo vs. Experts: 4:1 against Lee Sedol, 5:0 against Fan Hui

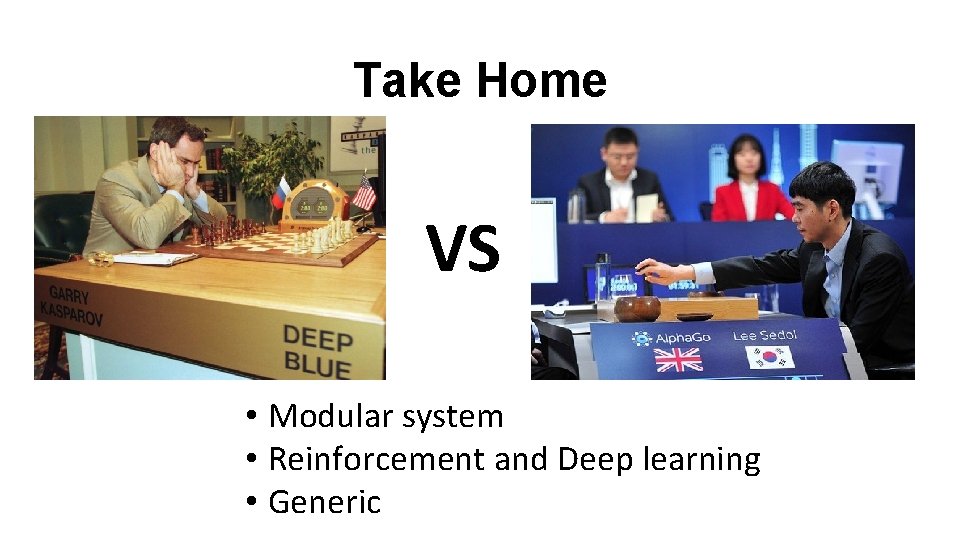

Take Home
• Modular system
• Combines reinforcement learning and deep learning
• Generic approach