Under The Hood [Part I] Web-Based Information Architectures


Under The Hood [Part I] Web-Based Information Architectures. MSEC 20-760, Mini II. Jaime Carbonell

Topics Covered
• The Vector Space Model for IR (VSM)
• Evaluation Metrics for IR
• Query Expansion (the Rocchio Method)
• Inverted Indexing for Efficiency
• A Glimpse into Harder Problems

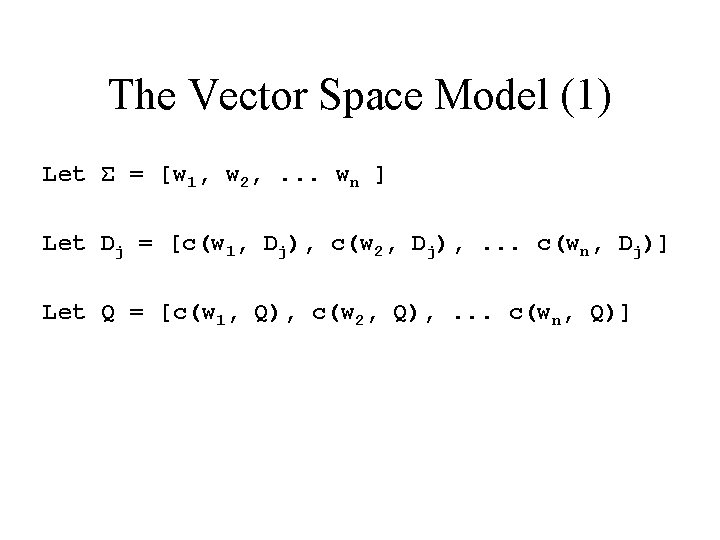

The Vector Space Model (1)

Let $\Sigma = [w_1, w_2, \ldots, w_n]$

Let $D_j = [c(w_1, D_j), c(w_2, D_j), \ldots, c(w_n, D_j)]$

Let $Q = [c(w_1, Q), c(w_2, Q), \ldots, c(w_n, Q)]$

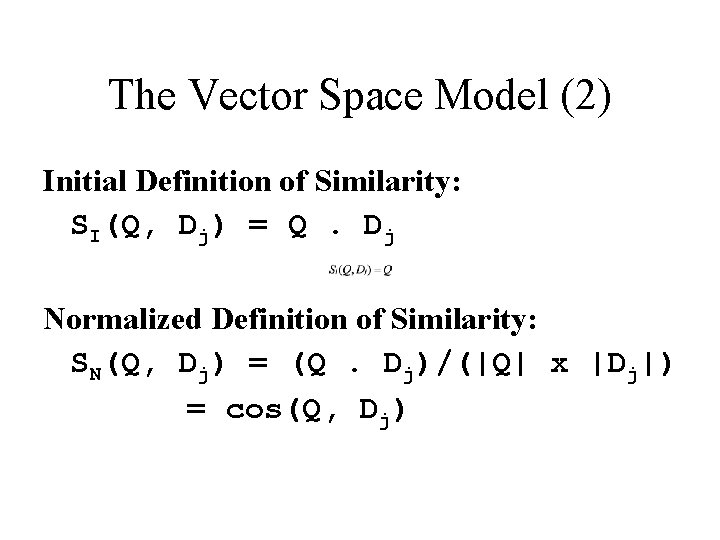

The Vector Space Model (2)

Initial definition of similarity: $S_I(Q, D_j) = Q \cdot D_j$

Normalized definition of similarity: $S_N(Q, D_j) = \dfrac{Q \cdot D_j}{|Q| \times |D_j|} = \cos(Q, D_j)$
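
The two similarity definitions can be compared with a minimal sketch. The function names `dot` and `cosine` and the sample vectors are illustrative assumptions, not part of the slides. It shows why normalization matters: the unnormalized score grows with document length, the cosine does not.

```python
import math

def dot(q, d):
    """Unnormalized similarity S_I: dot product of two equal-length count vectors."""
    return sum(qi * di for qi, di in zip(q, d))

def cosine(q, d):
    """Normalized similarity S_N: dot product divided by the two vector lengths."""
    norm_q = math.sqrt(dot(q, q))
    norm_d = math.sqrt(dot(d, d))
    if norm_q == 0 or norm_d == 0:
        return 0.0  # assumption: an all-zero vector is treated as matching nothing
    return dot(q, d) / (norm_q * norm_d)

q = [1, 1, 0]
short_doc = [1, 1, 0]
long_doc = [10, 10, 0]  # same term proportions, ten times longer

print(dot(q, short_doc), dot(q, long_doc))        # S_I favors the longer document
print(cosine(q, short_doc), cosine(q, long_doc))  # S_N treats both the same
```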

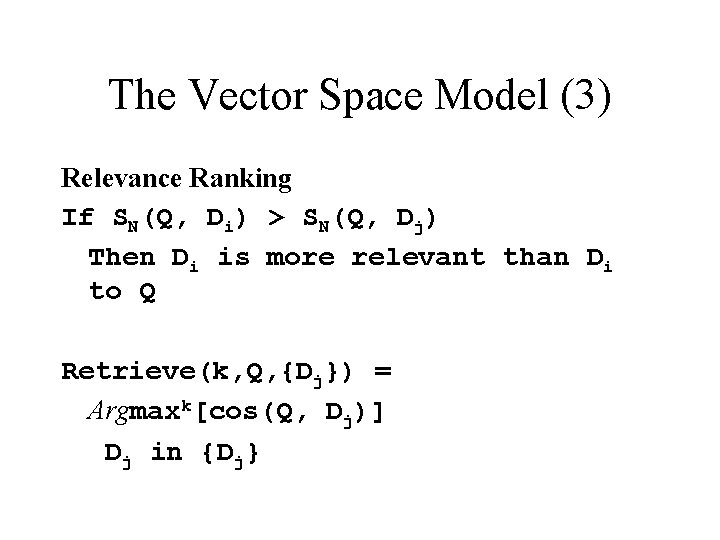

The Vector Space Model (3)

Relevance ranking: if $S_N(Q, D_i) > S_N(Q, D_j)$, then $D_i$ is more relevant than $D_j$ to $Q$.

$\mathrm{Retrieve}(k, Q, \{D_j\}) = \mathrm{Argmax}_k\,[\cos(Q, D_j)]$ over $D_j \in \{D_j\}$
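
A hedged sketch of top-k retrieval by cosine score follows. Documents and queries are represented as sparse term-to-weight dictionaries, which is an assumption made here for brevity; `retrieve` and the sample collection are hypothetical names and data.

```python
import heapq
import math

def cosine(q, d):
    """Cosine similarity between two sparse term -> weight dicts."""
    dot = sum(w * d.get(t, 0) for t, w in q.items())
    norm_q = math.sqrt(sum(w * w for w in q.values()))
    norm_d = math.sqrt(sum(w * w for w in d.values()))
    return dot / (norm_q * norm_d) if norm_q and norm_d else 0.0

def retrieve(k, query, docs):
    """Return the ids of the k documents with the highest cosine to the query."""
    return heapq.nlargest(k, docs, key=lambda doc_id: cosine(query, docs[doc_id]))

docs = {
    "d1": {"heart": 1, "medicine": 1, "disease": 1},
    "d2": {"attack": 2, "explosive": 1},
    "d3": {"heart": 1, "attack": 1, "medicine": 1},
}
query = {"heart": 1, "attack": 1, "medicine": 1}
top2 = retrieve(2, query, docs)
print(top2)
```

Using `heapq.nlargest` avoids sorting the whole collection when k is small.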

Refinements to VSM (1): Word normalization
• Words in morphological root form: countries => country, interesting => interest
• Stemming as a fast approximation: countries, country => countr; moped => mop
• Reduces vocabulary (always good)
• Generalizes matching (usually good)
• More useful for non-English IR (Arabic has > 100 variants per verb)

Refinements to VSM (2): Stop-Word Elimination
• Discard articles, auxiliaries, prepositions, ...: typically the 100-300 most frequent small words
• Reduces document length by 30-40%
• Retrieval accuracy improves slightly (5-10%)
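
Stop-word elimination can be sketched as a simple filter. The ten-word `STOP_WORDS` set below is a tiny illustrative assumption; a real list would hold the 100-300 most frequent small words mentioned above.

```python
# Illustrative stop-word list only; not taken from the slides.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "in", "to", "and", "for"}

def remove_stop_words(text):
    """Lowercase, split on whitespace, and drop any token found in STOP_WORDS."""
    return [w for w in text.lower().split() if w not in STOP_WORDS]

tokens = remove_stop_words("The factors in minimizing the risk of stroke")
print(tokens)
```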

Refinements to VSM (3): Proximity Phrases
• E.g.: "air force" => airforce
• Found by high mutual information:
  $p(w_1 w_2) \gg p(w_1)\,p(w_2)$
  $p(w_1 \,\&\, w_2 \text{ in } k\text{-window}) \gg p(w_1 \text{ in } k\text{-window})\; p(w_2 \text{ in same } k\text{-window})$
• Retrieval accuracy improves slightly (5-10%)
• Too many phrases => inefficiency
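
One way to test the first criterion, $p(w_1 w_2) \gg p(w_1)p(w_2)$, is pointwise mutual information over adjacent word pairs. The sketch below is an assumption about how this could be done, not the method used in the course; `bigram_pmi` and the sample text are hypothetical.

```python
import math
from collections import Counter

def bigram_pmi(tokens):
    """Return log2(p(w1 w2) / (p(w1) p(w2))) for every adjacent word pair."""
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    n_uni = len(tokens)
    n_bi = len(tokens) - 1
    pmi = {}
    for (w1, w2), count in bigrams.items():
        p_pair = count / n_bi
        p_w1 = unigrams[w1] / n_uni
        p_w2 = unigrams[w2] / n_uni
        pmi[(w1, w2)] = math.log2(p_pair / (p_w1 * p_w2))
    return pmi

tokens = "the air force base and the air force academy and the navy base".split()
scores = bigram_pmi(tokens)
print(scores[("air", "force")], scores[("the", "air")])
```

Pairs seen only once can also score highly, so a practical version would likely require a minimum pair count before accepting a phrase.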

Refinements to VSM (4): Words => Terms
• term = word | stemmed word | phrase
• Use exactly the same VSM method on terms (vs words)

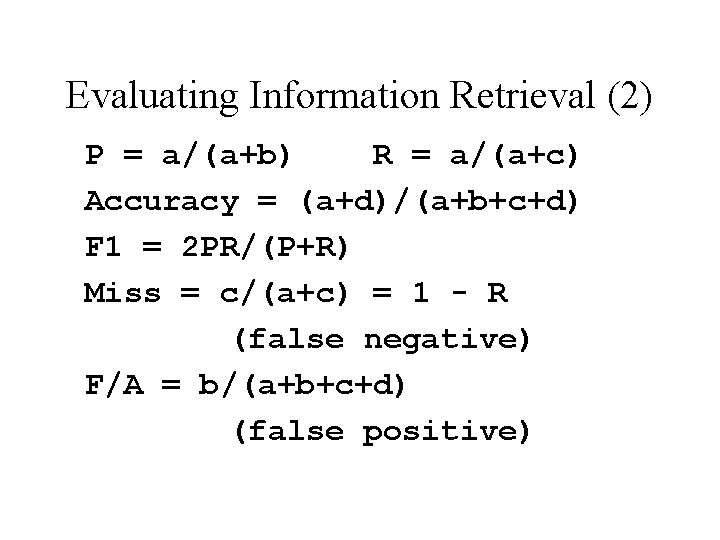

Evaluating Information Retrieval (1)

Contingency table:

| | retrieved | not retrieved |
|---|---|---|
| relevant | a | c |
| not-relevant | b | d |

Evaluating Information Retrieval (2)

$P = a/(a+b)$

$R = a/(a+c)$

$\text{Accuracy} = (a+d)/(a+b+c+d)$

$F_1 = 2PR/(P+R)$

$\text{Miss} = c/(a+c) = 1 - R$ (false negative)

$\text{F/A} = b/(a+b+c+d)$ (false positive)
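
The formulas above translate directly into code. In this minimal sketch, `ir_metrics` and the sample counts are illustrative assumptions. The cell names follow the formulas: a = relevant and retrieved, b = not relevant but retrieved, c = relevant but not retrieved, d = neither.

```python
def ir_metrics(a, b, c, d):
    """Compute the slide's metrics from contingency counts.

    Assumes every denominator is non-zero (at least one retrieved and
    one relevant document).
    """
    total = a + b + c + d
    precision = a / (a + b)
    recall = a / (a + c)
    return {
        "precision": precision,
        "recall": recall,
        "accuracy": (a + d) / total,
        "f1": 2 * precision * recall / (precision + recall),
        "miss": c / (a + c),
        "false_alarm": b / total,  # as defined on the slide
    }

# Hypothetical counts: 1000 documents, 50 relevant, 40 retrieved.
m = ir_metrics(a=30, b=10, c=20, d=940)
print(m)
```

With most of the collection irrelevant, accuracy is dominated by d, which is one reason precision and recall are usually reported instead.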

Evaluating Information Retrieval (3): 11-point precision curves
• IR system generates total ranking
• Plot precision at 10%, 20%, 30%, ... recall
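
A sketch of how such a curve might be computed from a ranked list follows. It assumes the common interpolated definition (precision at recall level r is the best precision achieved at any recall >= r) and the eleven levels 0%, 10%, ..., 100%; the slides do not specify either choice. `eleven_point_precision` and the sample ranking are hypothetical.

```python
def eleven_point_precision(ranking, relevant):
    """ranking: doc ids in ranked order; relevant: non-empty set of relevant ids."""
    points = []  # (recall, precision) measured at each relevant document found
    hits = 0
    for rank, doc_id in enumerate(ranking, start=1):
        if doc_id in relevant:
            hits += 1
            points.append((hits / len(relevant), hits / rank))
    curve = []
    for i in range(11):
        level = i / 10
        # Interpolation: best precision at this recall level or beyond.
        candidates = [p for r, p in points if r >= level]
        curve.append(max(candidates) if candidates else 0.0)
    return curve

ranking = ["d1", "d2", "d3", "d4", "d5"]
relevant = {"d1", "d4"}
curve = eleven_point_precision(ranking, relevant)
print(curve)
```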

Query Expansion (1)

Observations:
• Longer queries often yield better results
• User’s vocabulary may differ from document vocabulary
  Q: how to avoid heart disease
  D: "Factors in minimizing stroke and cardiac arrest: Recommended dietary and exercise regimens"
• Longer queries contain more terms, and so have more chances to match relevant documents, which helps recall.

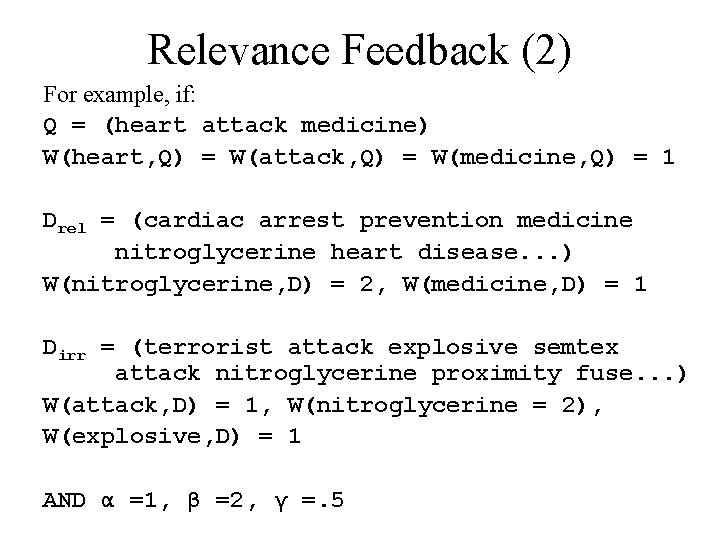

Query Expansion (2): Bridging the Gap
• Human query expansion (user or expert)
• Thesaurus-based expansion: seldom works in practice (unfocused)
• Relevance feedback
  – Widens a thin bridge over the vocabulary gap
  – Adds words from document space to query
• Pseudo-relevance feedback
• Local context analysis


Relevance Feedback (1): Rocchio Formula

$Q' = F[Q, D_{ret}]$

$F$ = weighted vector sum, such as:

$W(t, Q') = \alpha W(t, Q) + \beta W(t, D_{rel}) - \gamma W(t, D_{irr})$

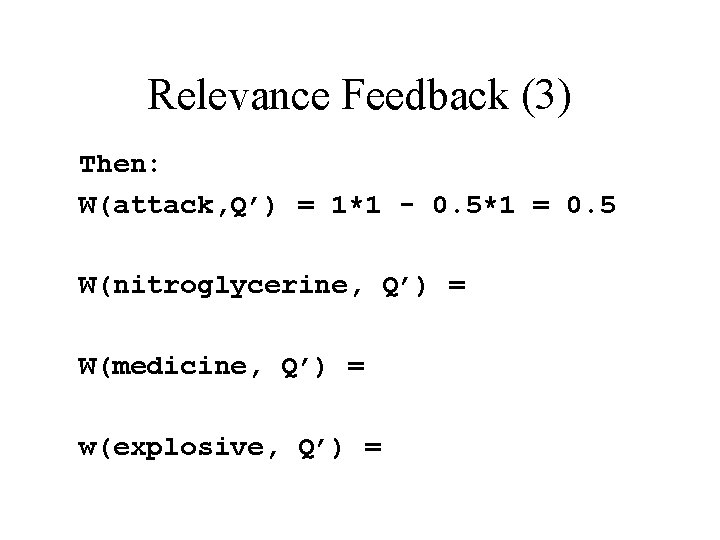

Relevance Feedback (2)

For example, if:

Q = (heart attack medicine)
W(heart, Q) = W(attack, Q) = W(medicine, Q) = 1

Drel = (cardiac arrest prevention medicine nitroglycerine heart disease ...)
W(nitroglycerine, D) = 2, W(medicine, D) = 1

Dirr = (terrorist attack explosive semtex attack nitroglycerine proximity fuse ...)
W(attack, D) = 1, W(nitroglycerine, D) = 2, W(explosive, D) = 1

and α = 1, β = 2, γ = 0.5

Relevance Feedback (3)

Then:

W(attack, Q’) = 1*1 - 0.5*1 = 0.5
W(nitroglycerine, Q’) =
W(medicine, Q’) =
W(explosive, Q’) =
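
The Rocchio update can be checked with a short sketch. It uses only the term weights stated in the example; treating every unlisted weight as 0 is an assumption, consistent with the attack calculation shown. The function name `rocchio` and the dict representation are illustrative.

```python
def rocchio(query, relevant, irrelevant, alpha=1.0, beta=2.0, gamma=0.5):
    """W(t, Q') = alpha*W(t, Q) + beta*W(t, Drel) - gamma*W(t, Dirr) for every term t."""
    terms = set(query) | set(relevant) | set(irrelevant)
    return {
        t: alpha * query.get(t, 0)
           + beta * relevant.get(t, 0)
           - gamma * irrelevant.get(t, 0)
        for t in terms
    }

# Only the weights given in the example; all other weights are assumed to be 0.
q = {"heart": 1, "attack": 1, "medicine": 1}
d_rel = {"nitroglycerine": 2, "medicine": 1}
d_irr = {"attack": 1, "nitroglycerine": 2, "explosive": 1}

new_q = rocchio(q, d_rel, d_irr)
for term in ("attack", "nitroglycerine", "medicine", "explosive"):
    print(term, new_q[term])
```

Under these assumptions the expanded query gives nitroglycerine and medicine a weight of 3, attack 0.5, and explosive -0.5: terms from the relevant document are boosted, and terms seen only in the irrelevant one are pushed down.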

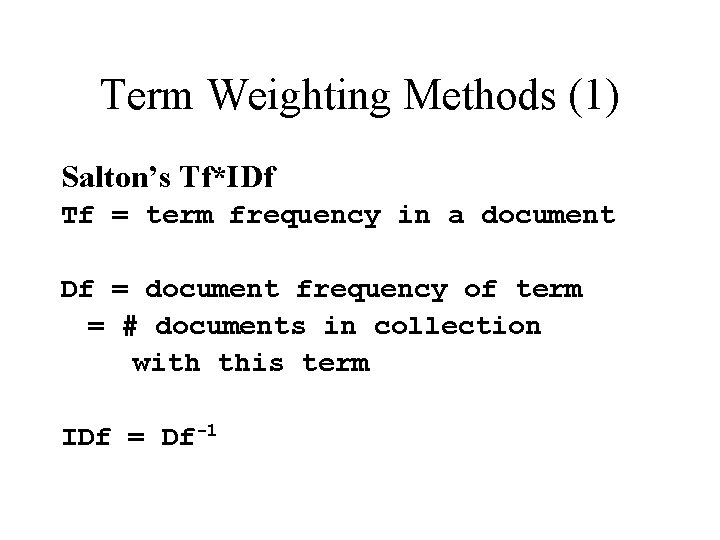

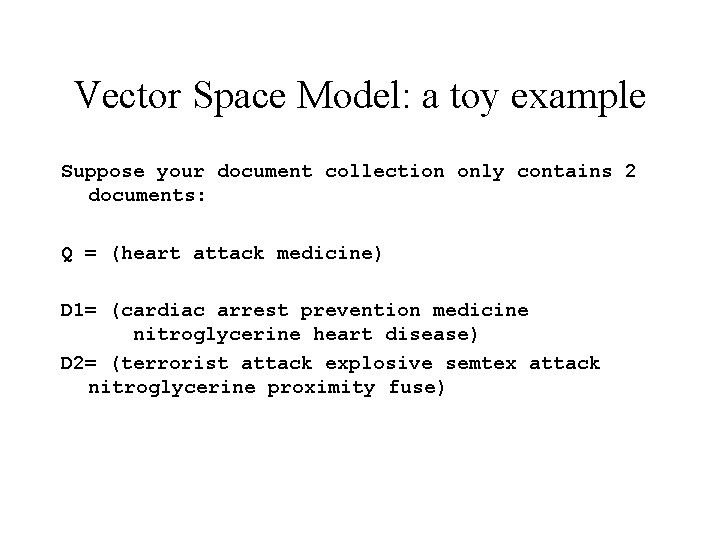

Term Weighting Methods (1): Salton’s Tf*IDf

Tf = term frequency in a document

Df = document frequency of term = # documents in collection with this term

$IDf = Df^{-1}$

Term Weighting Methods (2): Salton’s Tf*IDf

$TfIDf = f_1(Tf) \times f_2(IDf)$

E.g. $f_1(Tf) = Tf \times \mathrm{ave}(|D_j|) / |D|$

E.g. $f_2(IDf) = \log_2(IDf)$

$f_1$ and $f_2$ can differ for Q and D
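
A minimal sketch of this weighting follows, with one loudly stated assumption: IDf is scaled by the collection size N, so that $f_2 = \log_2(N/Df)$ and weights stay non-negative. The slides write IDf as $Df^{-1}$ without the N factor. The function `tf_idf` and the sample documents are hypothetical.

```python
import math
from collections import Counter

def tf_idf(docs):
    """docs: list of non-empty token lists. Returns one term -> weight dict per document."""
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: number of documents containing each term.
    df = Counter(t for d in docs for t in set(d))
    weighted = []
    for d in docs:
        tf = Counter(d)
        weighted.append({
            # f1: Tf normalized by relative document length.
            # f2: log2(N / Df), an assumed scaling of the slide's IDf.
            t: (count * avg_len / len(d)) * math.log2(n_docs / df[t])
            for t, count in tf.items()
        })
    return weighted

docs = [
    ["heart", "attack", "medicine"],
    ["terrorist", "attack"],
    ["heart", "disease", "medicine", "medicine"],
]
weights = tf_idf(docs)
print(weights[1])
```

In the second document, the rare term "terrorist" outweighs "attack", which also occurs in another document.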

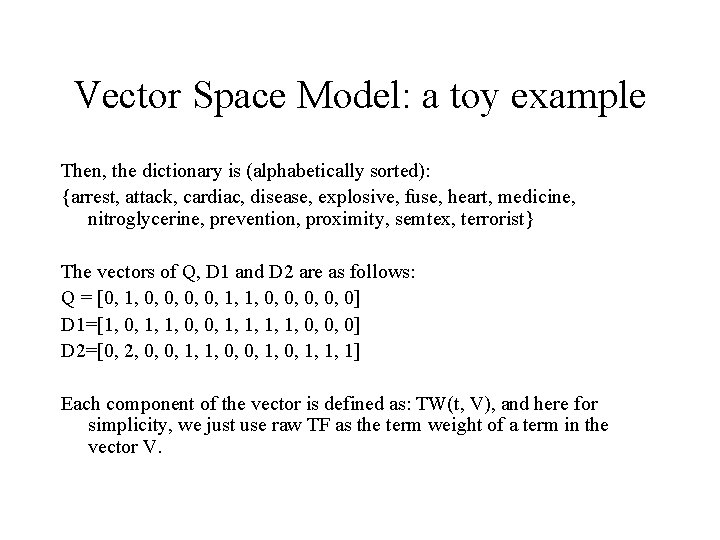

Vector Space Model: a toy example

Suppose your document collection contains only 2 documents, D1 and D2, and the query is Q:

Q = (heart attack medicine)
D1 = (cardiac arrest prevention medicine nitroglycerine heart disease)
D2 = (terrorist attack explosive semtex attack nitroglycerine proximity fuse)

Vector Space Model: a toy example

Then, the dictionary is (alphabetically sorted): {arrest, attack, cardiac, disease, explosive, fuse, heart, medicine, nitroglycerine, prevention, proximity, semtex, terrorist}

The vectors of Q, D1 and D2 are as follows:

Q = [0, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0]
D1 = [1, 0, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0, 0]
D2 = [0, 2, 0, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1]

Each component of a vector V is the term weight TW(t, V); here, for simplicity, we use the raw TF of term t in V.
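
The toy example can be reproduced with a short sketch that builds the alphabetical dictionary from the two documents, maps each text to a raw-TF vector with one component per dictionary term, and compares cosine scores. The helper names `to_vector` and `cosine` are illustrative.

```python
import math
from collections import Counter

q = "heart attack medicine".split()
d1 = "cardiac arrest prevention medicine nitroglycerine heart disease".split()
d2 = "terrorist attack explosive semtex attack nitroglycerine proximity fuse".split()

# Dictionary: all distinct document terms, alphabetically sorted.
dictionary = sorted(set(d1) | set(d2))

def to_vector(tokens):
    """Raw term frequency of each dictionary term in the token list."""
    counts = Counter(tokens)
    return [counts[t] for t in dictionary]

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

vq, v1, v2 = to_vector(q), to_vector(d1), to_vector(d2)
print(dictionary)
print(vq, v1, v2, sep="\n")
print(cosine(vq, v1), cosine(vq, v2))
```

Both documents share two query-term occurrences with Q, but D1 is the shorter vector, so its cosine is higher and it ranks first.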

Efficient Implementations of VSM (1): Exploit sparseness
• Only compute non-zero multiplies in dot products
• Do not even look at zero elements (how?)
• => Use non-stop terms to index documents


Efficient Implementations of VSM (2): Inverted Indexing
• Find all unique [stemmed] terms in document collection
• Remove stopwords from word list
• If collection is large (over 100,000 documents), [optionally] remove singletons (usually spelling errors or obscure names)
• Alphabetize or use hash table to store list
• For each term create data structure like:

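Efficient Implementations of VSM (3)

$[\text{term},\ \text{IDF}_{\text{term}_i},\ \langle \text{doc}_i,\ \text{freq}(\text{term}, \text{doc}_i);\ \text{doc}_j,\ \text{freq}(\text{term}, \text{doc}_j);\ \ldots \rangle]$

or:

$[\text{term},\ \text{IDF}_{\text{term}_i},\ \langle \text{doc}_i,\ \text{freq}(\text{term}, \text{doc}_i),\ [\text{pos}_{1,i}, \text{pos}_{2,i}, \ldots];\ \text{doc}_j,\ \text{freq}(\text{term}, \text{doc}_j),\ [\text{pos}_{1,j}, \text{pos}_{2,j}, \ldots];\ \ldots \rangle]$

$\text{pos}_{1,j}$ indicates the first position of the term in document j, and so on.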
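
A minimal sketch of the positional variant follows, using a hash table (a Python dict) keyed by term. The names `build_index` and `score` are hypothetical, and IDF is assumed to be $\log_2(N/Df)$, which the slides do not specify. The frequency is not stored separately because it equals the length of the position list.

```python
import math
from collections import defaultdict

def build_index(docs, stop_words=frozenset()):
    """docs: doc_id -> token list. Returns term -> (idf, {doc_id: [positions]})."""
    postings = defaultdict(lambda: defaultdict(list))
    for doc_id, tokens in docs.items():
        for pos, term in enumerate(tokens):
            if term not in stop_words:
                postings[term][doc_id].append(pos)
    n_docs = len(docs)
    return {
        term: (math.log2(n_docs / len(by_doc)), dict(by_doc))  # assumed IDF form
        for term, by_doc in sorted(postings.items())
    }

def score(index, query_terms):
    """Accumulate freq * idf, touching only documents that contain a query term."""
    scores = defaultdict(float)
    for term in query_terms:
        if term in index:
            idf, by_doc = index[term]
            for doc_id, positions in by_doc.items():
                scores[doc_id] += len(positions) * idf
    return dict(scores)

docs = {
    "d1": "cardiac arrest prevention medicine nitroglycerine heart disease".split(),
    "d2": "terrorist attack explosive semtex attack nitroglycerine proximity fuse".split(),
}
index = build_index(docs)
print(index["attack"])
print(score(index, ["heart", "attack", "medicine"]))
```

Scoring walks only the posting lists of the query terms, so zero components of the document vectors are never examined.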

Open Research Problems in IR (1): Beyond VSM
• Vectors in different spaces: Generalized VSM, Latent Semantic Indexing, ...
• Probabilistic IR (Language Modeling): $P(D \mid Q) = P(Q \mid D)\,P(D)/P(Q)$

Open Research Problems in IR (2): Beyond Relevance
• Appropriateness of doc to user comprehension level, etc.
• Novelty of information in doc to user (anti-redundancy as an approximation to novelty)

Open Research Problems in IR (3): Beyond one Language
• Translingual IR
• Transmedia IR

Open Research Problems in IR (4): Beyond Content Queries
• "What’s new today?"
• "What sort of things do you know about?"
• "Build me a Yahoo-style index for X"
• "Track the event in this news-story"
