Unconstrained optimization
• Gradient based algorithms
  – Steepest descent
  – Conjugate gradients
  – Newton and quasi-Newton
• Population based algorithms
  – Nelder Mead’s sequential simplex
  – Stochastic algorithms

Unconstrained local minimization
• The necessity for one-dimensional searches
• The most intuitive choice of the search direction s_k is the direction of steepest descent
• This choice, however, is very poor
• Methods are based on the dictum that all functions of interest are locally quadratic
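To illustrate why steepest descent is a poor choice, here is a minimal Python sketch (not from the slides, which use Matlab). It assumes a diagonal quadratic f(x) = 0.5 * sum(a_i * x_i^2), for which the exact one-dimensional search has a closed form. The function name, the test matrices, and the starting point are illustrative assumptions.

```python
def steepest_descent_quadratic(a_diag, x0, tol=1e-8, max_iter=10000):
    """Minimize f(x) = 0.5 * sum(a_i * x_i**2) by steepest descent.

    Each step uses the exact one-dimensional minimizer along -gradient,
    alpha = (g.g) / (g.A.g). Returns the final point and the number of
    steps taken before the gradient norm fell below tol.
    """
    x = list(x0)
    for k in range(max_iter):
        g = [a * xi for a, xi in zip(a_diag, x)]          # gradient A x
        gg = sum(gi * gi for gi in g)
        if gg ** 0.5 < tol:
            return x, k
        g_a_g = sum(a * gi * gi for a, gi in zip(a_diag, g))
        alpha = gg / g_a_g                                 # exact line search
        x = [xi - alpha * gi for xi, gi in zip(x, g)]
    return x, max_iter


# Circular contours: the steepest descent direction points at the minimum.
_, iters_well = steepest_descent_quadratic([1.0, 1.0], [25.0, 1.0])
# Elongated contours (condition number 25): the iterates zigzag.
_, iters_ill = steepest_descent_quadratic([1.0, 25.0], [25.0, 1.0])
print(iters_well, iters_ill)
```

With circular contours one step suffices. With a condition number of only 25 the zigzag path needs a few hundred steps from this starting point, even though every line search is exact.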

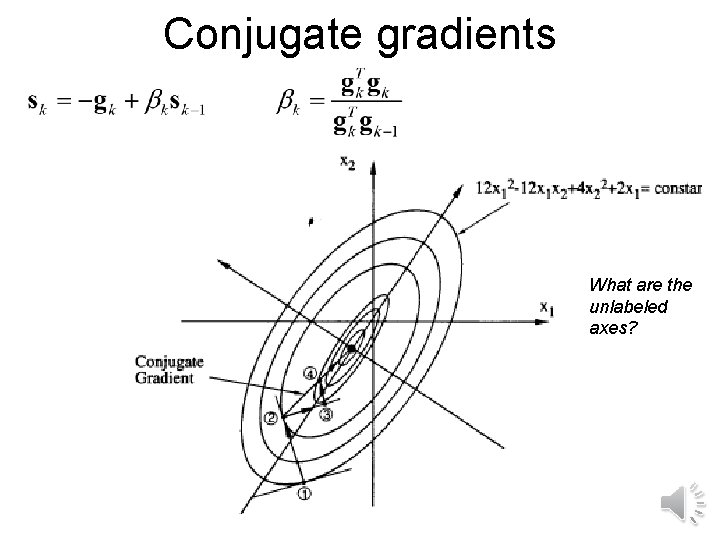

Conjugate gradients
• What are the unlabeled axes?

Newton and quasi-Newton methods
• Newton
• Quasi-Newton methods use successive evaluations of gradients to obtain an approximation to the Hessian or its inverse
• Earliest was DFP, currently best known is BFGS
• Like conjugate gradients, guaranteed to converge in n steps or fewer for a quadratic function
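The n-step property can be checked numerically. The Python sketch below (not from the slides) applies the BFGS inverse-Hessian update to a two-variable quadratic f(x) = 0.5 x'Ax - b'x. It assumes an exact line search and an identity starting approximation; the matrix A, the vector b, and the helper names are illustrative assumptions.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(m, v):
    return [dot(row, v) for row in m]


def bfgs_quadratic(a, b, x0, n_steps):
    """Run n_steps BFGS iterations on f(x) = 0.5 x'Ax - b'x.

    h approximates the inverse Hessian, starting from the identity.
    The step length along d = -h g is the exact minimizer for a quadratic.
    Returns the final point and its gradient.
    """
    n = len(x0)
    x = list(x0)
    h = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    g = [p - q for p, q in zip(matvec(a, x), b)]
    for _ in range(n_steps):
        if dot(g, g) ** 0.5 < 1e-14:          # already converged
            break
        d = [-v for v in matvec(h, g)]
        alpha = -dot(g, d) / dot(d, matvec(a, d))
        s = [alpha * di for di in d]
        x = [xi + si for xi, si in zip(x, s)]
        g_new = [p - q for p, q in zip(matvec(a, x), b)]
        y = [gn - go for gn, go in zip(g_new, g)]
        rho = 1.0 / dot(y, s)
        hy = matvec(h, y)
        y_h_y = dot(y, hy)
        # Expanded form of (I - rho s y') h (I - rho y s') + rho s s'
        h = [[h[i][j] - rho * (s[i] * hy[j] + hy[i] * s[j])
              + (rho * rho * y_h_y + rho) * s[i] * s[j]
              for j in range(n)] for i in range(n)]
        g = g_new
    return x, g


A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
x1, g1 = bfgs_quadratic(A, b, [0.0, 0.0], 1)
x2, g2 = bfgs_quadratic(A, b, [0.0, 0.0], 2)
print(x1, g1)
print(x2, g2)
```

After one step the gradient is still far from zero; after n = 2 steps it vanishes to rounding error. The guarantee relies on the exact line search, which a general-purpose optimizer does not perform on non-quadratic functions.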

Matlab fminunc
X = FMINUNC(FUN, X0, OPTIONS) minimizes with the default optimization parameters replaced by values in the structure OPTIONS, an argument created with the OPTIMSET function. See OPTIMSET for details. Used options are Display, TolX, TolFun, DerivativeCheck, Diagnostics, FunValCheck, GradObj, HessPattern, Hessian, HessMult, HessUpdate, InitialHessType, InitialHessMatrix, MaxFunEvals, MaxIter, DiffMinChange and DiffMaxChange, LargeScale, MaxPCGIter, PrecondBandWidth, TolPCG, TypicalX.

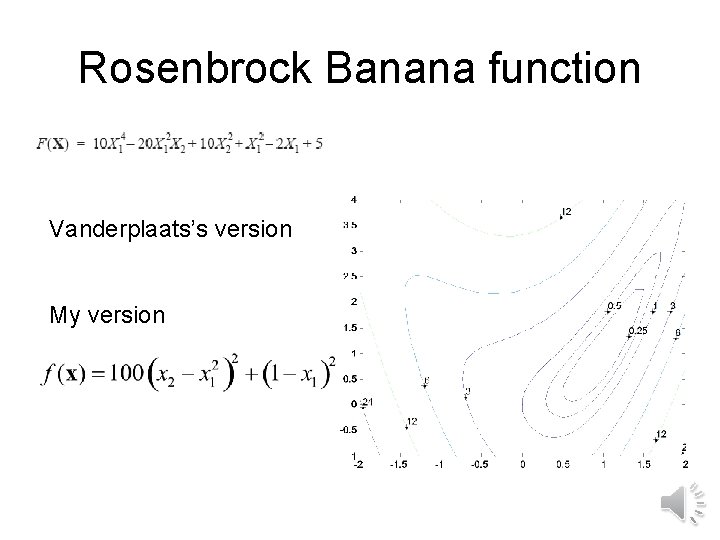

Rosenbrock banana function
• Vanderplaats’s version
• My version


Matlab output
[x, fval, exitflag, output] = fminunc(@banana, [-1.2, 1])
Warning: Gradient must be provided for trust-region algorithm; using line-search algorithm instead.
Local minimum found. Optimization completed because the size of the gradient is less than the default value of the function tolerance.
x = 1.0000
fval = 2.8336e-011
exitflag = 1
output = iterations: 36, funcCount: 138, algorithm: 'medium-scale: Quasi-Newton line search'
How would we reduce the number of iterations?
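One possible direction, suggested by the warning above, is to supply the analytic gradient rather than let the optimizer estimate it by finite differences. This mainly saves function evaluations and makes the trust-region algorithm available; whether the iteration count also drops is not guaranteed. The Python sketch below (not from the slides) derives the gradient of the banana function used in this deck and checks it against central differences. The function names and the step size h are illustrative assumptions.

```python
def banana(x):
    """Banana function as coded in the deck's Matlab function."""
    return 100.0 * (x[1] - x[0] ** 2) ** 2 + (1.0 - x[0]) ** 2


def banana_grad(x):
    """Analytic gradient of banana(x)."""
    r = x[1] - x[0] ** 2
    return [-400.0 * x[0] * r - 2.0 * (1.0 - x[0]), 200.0 * r]


def central_difference_grad(f, x, h=1e-6):
    """Finite-difference gradient, costing two evaluations per variable."""
    g = []
    for i in range(len(x)):
        xp = list(x)
        xm = list(x)
        xp[i] += h
        xm[i] -= h
        g.append((f(xp) - f(xm)) / (2.0 * h))
    return g


x0 = [-1.2, 1.0]
print(banana_grad(x0))
print(central_difference_grad(banana, x0))
```

In Matlab the equivalent step would be to return the gradient as a second output of the objective and switch on the GradObj option listed earlier.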

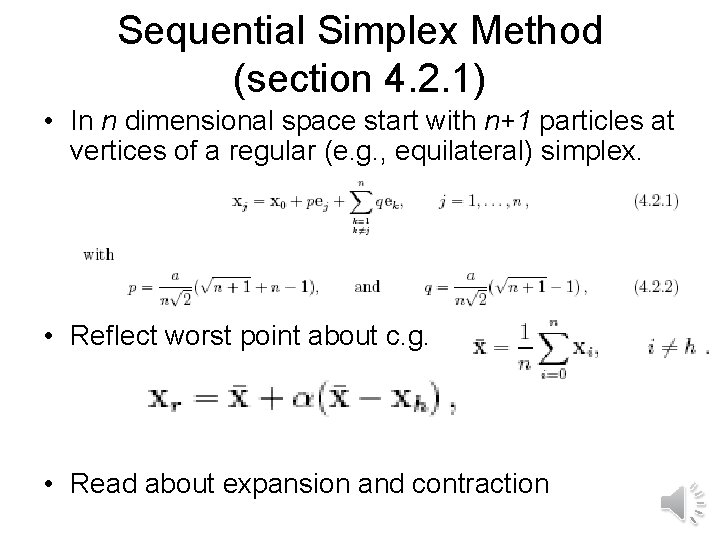

Sequential Simplex Method (section 4.2.1)
• In n-dimensional space, start with n+1 particles at the vertices of a regular (e.g., equilateral) simplex.
• Reflect the worst point about the c.g. (centroid) of the remaining points.
• Read about expansion and contraction.
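The reflection step can be sketched in a few lines of Python (not from the slides). The sketch assumes the usual Nelder-Mead coefficients, 1 for reflection and 2 for expansion, and uses the three starting vertices that appear in the fminsearch run in this deck. The function names are illustrative assumptions.

```python
def banana(x):
    """Banana function as coded in the deck's Matlab function."""
    return 100.0 * (x[1] - x[0] ** 2) ** 2 + (1.0 - x[0]) ** 2


def reflect_and_expand(simplex, f):
    """Return the centroid of all vertices except the worst, plus the
    reflected and expanded trial points for one Nelder-Mead step."""
    pts = sorted(simplex, key=f)          # best first, worst last
    worst = pts[-1]
    others = pts[:-1]
    n = len(worst)
    centroid = [sum(p[i] for p in others) / len(others) for i in range(n)]
    reflected = [c + (c - w) for c, w in zip(centroid, worst)]
    expanded = [c + 2.0 * (c - w) for c, w in zip(centroid, worst)]
    return centroid, reflected, expanded


start = [[-1.2, 1.0], [-1.26, 1.0], [-1.2, 1.05]]
c, r, e = reflect_and_expand(start, banana)
print(c, r, banana(r))
print(e, banana(e))
```

The worst starting vertex is (-1.26, 1). Reflecting it gives a point near (-1.14, 1.05), and because that point beats the current best, the expansion point near (-1.08, 1.075) is tried next.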


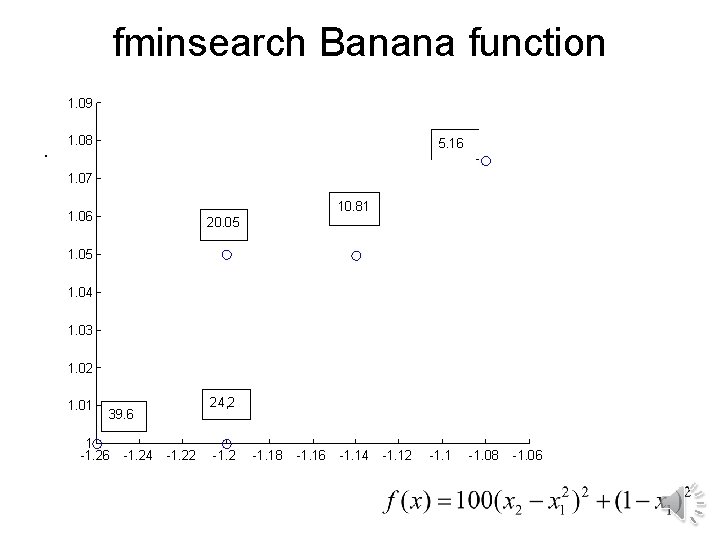

Matlab commands

function [y]=banana(x)
global z1
global z2
global yg
global count
y=100*(x(2)-x(1)^2)^2+(1-x(1))^2;
z1(count)=x(1);
z2(count)=x(2);
yg(count)=y;
count=count+1;

>> global z2
>> global yg
>> global z1
>> global count
>> count=1;
>> options=optimset('MaxFunEvals', 20)
>> [x, fval] = fminsearch(@banana, [-1.2, 1], options)
>> mat=[z1; z2; yg]

mat =
Columns 1 through 8
-1.200  -1.260  -1.200  -1.140  -1.080  -1.080  -1.020  -0.960
 1.000   1.000   1.050   1.050   1.075   1.125   1.1875  1.150
24.20   39.64   20.05   10.81    5.16    4.498   6.244   9.058
Columns 9 through 16
-1.020  -1.020  -1.065  -1.125  -1.046  -1.031  -1.007  -1.013
 1.125   1.175   1.100   1.100   1.119   1.094   1.078   1.113
 4.796   5.892   4.381   7.259   4.245   4.218   4.441   4.813

fminsearch, banana function: plot of the first simplex points, with x(1) from about -1.26 to -1.06 and x(2) from about 1 to 1.09, labeled with their function values (24.2, 39.6, 20.05, 10.81, 5.16).

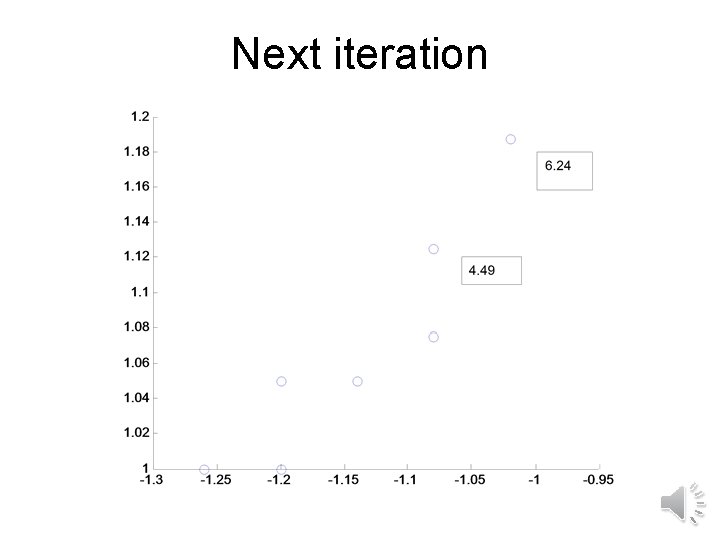

Next iteration


Completed search
[x, fval, exitflag, output] = fminsearch(@banana, [-1.2, 1])
x = 1.0000
fval = 8.1777e-010
exitflag = 1
output = iterations: 85, funcCount: 159, algorithm: 'Nelder-Mead simplex direct search'
Why is the number of iterations so large relative to the number of function evaluations (85 and 159 here, compared with 36 and 138 for fminunc)?
