
Uncertainty & Bayesian Belief Networks

Data-Mining with Bayesian Networks on the Internet
• The Internet can be seen as a massive repository of data
• The data is often updated
• Once meaningful data has been collected from the Internet, a model is needed that can:
  – be learnt from the vast amount of available data
  – enable the user to reason about the data
  – be easily updated given new data

Section 1 - Bayesian Networks: An Introduction
• Brief Summary of Expert Systems
• Causal Reasoning
• Probability Theory
• Bayesian Networks - Definition, Inference
• Current Issues in Bayesian Networks
• Other Approaches to Uncertainty

Expert Systems: 1 Rule Based Systems
• 1960s - Rule Based Systems
• Model human expertise using IF...THEN rules, or production rules
• Combine the rules (the knowledge base) with an inference engine to reason about the world
• Given certain observations, produce conclusions
• Relatively successful but limited

2 Uncertainty
• Rule based systems failed to handle uncertainty
• They only dealt with true or false facts
• This was partly overcome using certainty factors
• However, other problems remained: no differentiation between causal rules and diagnostic rules

3 Normative Expert Systems
• Model the domain rather than the expert
• Classical probability used rather than an ad-hoc calculus
• Expert support rather than expert model
• 1980s - more powerful computers make complex probability calculations feasible
• Bayesian Networks introduced (Pearl 1986), e.g. MUNIN

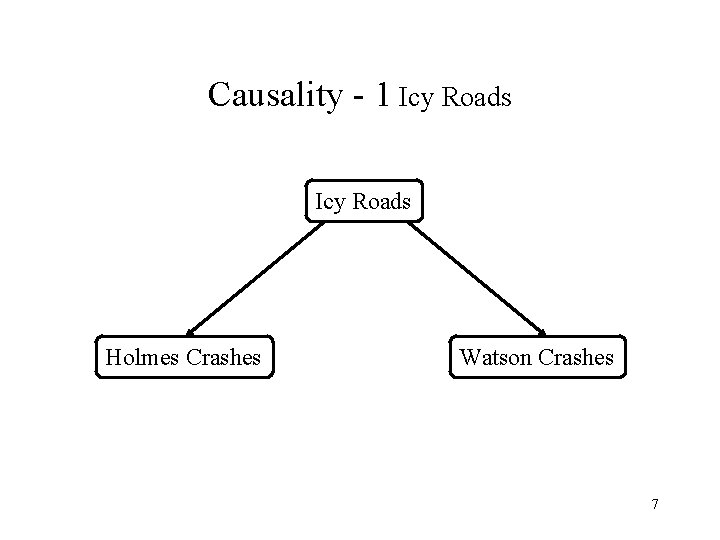

Causality - 1 Icy Roads
Nodes in the figure: Icy Roads, Holmes Crashes, Watson Crashes

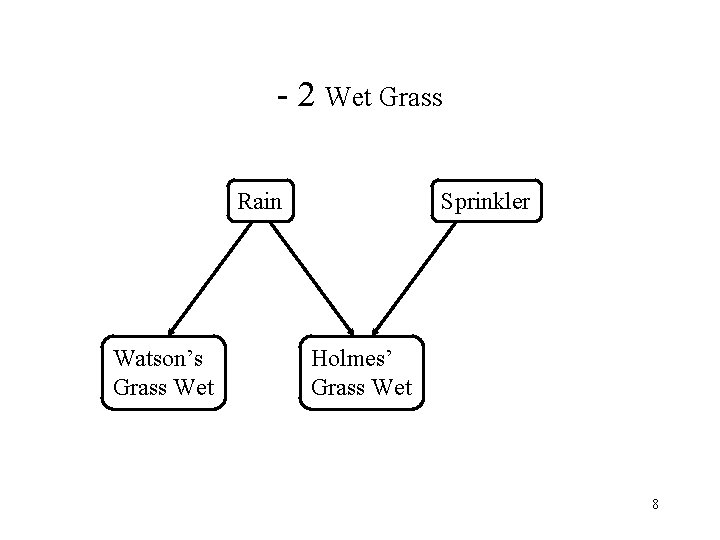

Causality - 2 Wet Grass
Nodes in the figure: Rain, Sprinkler, Watson’s Grass Wet, Holmes’ Grass Wet

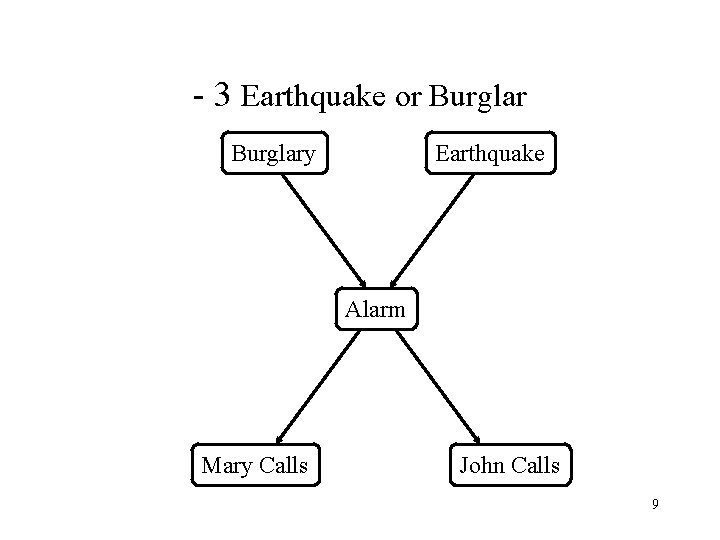

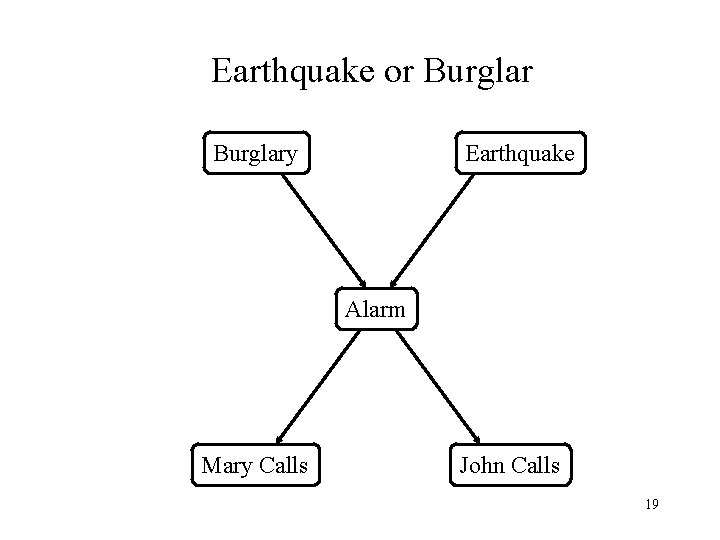

Causality - 3 Earthquake or Burglary
Nodes in the figure: Burglary, Earthquake, Alarm, Mary Calls, John Calls

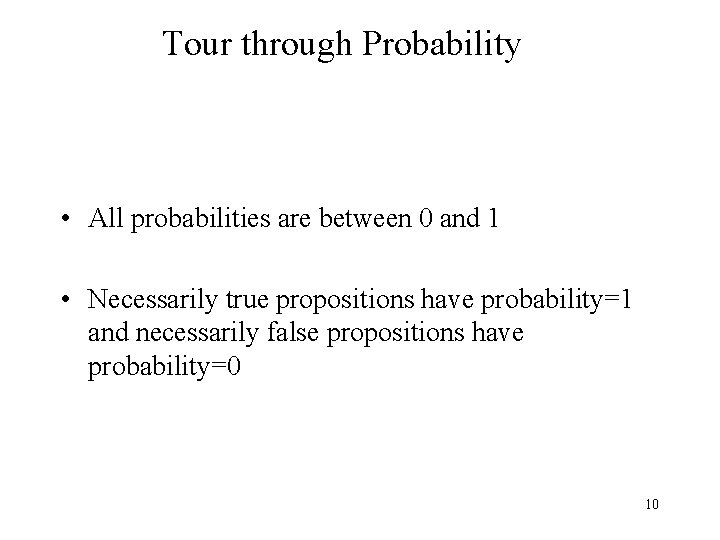

Tour through Probability
• All probabilities are between 0 and 1
• Necessarily true propositions have probability 1, and necessarily false propositions have probability 0

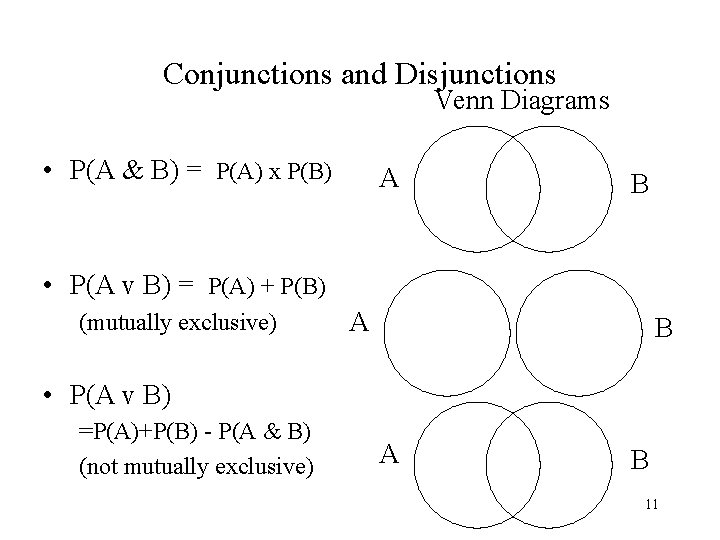

Conjunctions and Disjunctions (Venn Diagrams)
• $P(A \land B) = P(A) \times P(B)$ (A and B independent)
• $P(A \lor B) = P(A) + P(B)$ (mutually exclusive)
• $P(A \lor B) = P(A) + P(B) - P(A \land B)$ (not mutually exclusive)

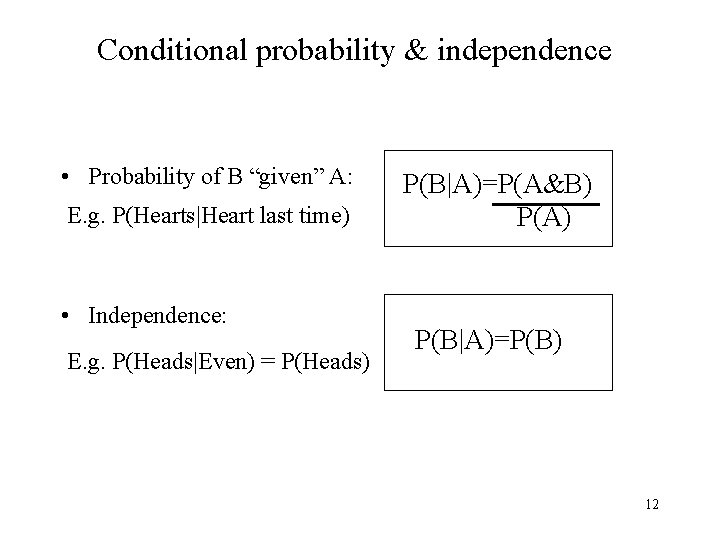

Conditional Probability & Independence
• Probability of B “given” A: $P(B \mid A) = \dfrac{P(A \land B)}{P(A)}$, e.g. P(Hearts | Heart last time)
• Independence: $P(B \mid A) = P(B)$, e.g. P(Heads | Even) = P(Heads)

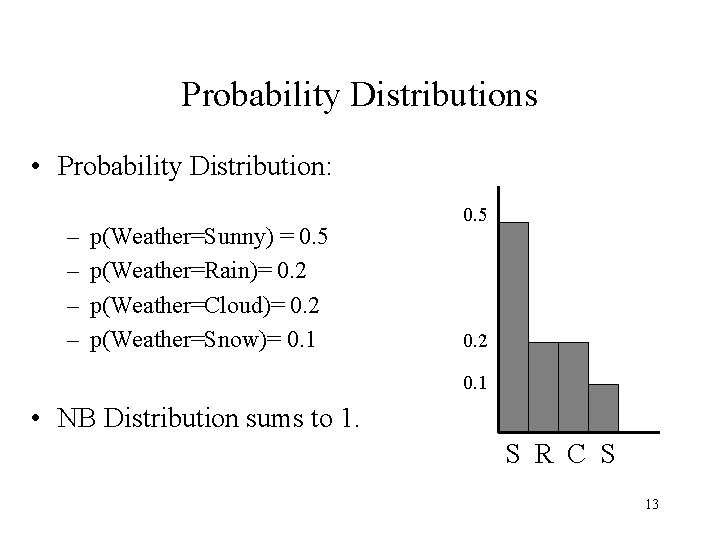

Probability Distributions
• Probability distribution:
  – p(Weather=Sunny) = 0.5
  – p(Weather=Rain) = 0.2
  – p(Weather=Cloud) = 0.2
  – p(Weather=Snow) = 0.1
• NB: the distribution sums to 1.

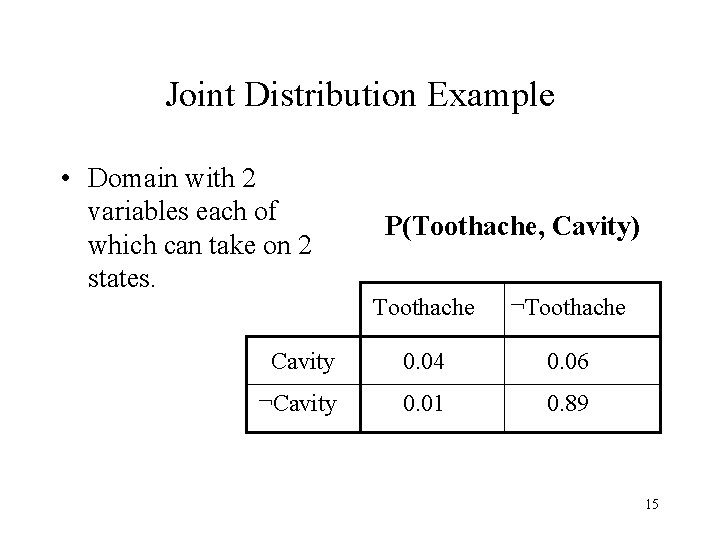

Joint Probability
• Completely specifies all beliefs in a problem domain.
• The joint probability distribution is an n-dimensional table; each cell holds the probability of that combination of states occurring.
• Written as $P(X_1, X_2, X_3, \ldots, X_n)$
• When instantiated, written as $P(x_1, x_2, \ldots, x_n)$

Joint Distribution Example
• Domain with 2 variables, each of which can take on 2 states: P(Toothache, Cavity)

| | Toothache | ¬Toothache |
|---|---|---|
| Cavity | 0.04 | 0.06 |
| ¬Cavity | 0.01 | 0.89 |
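To make the role of the joint table concrete, the following minimal Python sketch reads marginal and conditional probabilities off the Toothache/Cavity numbers from this slide. The dictionary layout and the function names are illustrative choices, not part of the slides.

```python
# Joint distribution P(Toothache, Cavity) from the slide,
# keyed by (toothache, cavity).
joint = {
    (True, True): 0.04,
    (False, True): 0.06,
    (True, False): 0.01,
    (False, False): 0.89,
}

def p_toothache(value):
    """Marginal P(Toothache=value): sum the joint over Cavity."""
    return sum(p for (t, _), p in joint.items() if t == value)

def p_cavity(value):
    """Marginal P(Cavity=value): sum the joint over Toothache."""
    return sum(p for (_, c), p in joint.items() if c == value)

# Conditional probability from the definition P(B|A) = P(A & B) / P(A).
p_cavity_given_toothache = joint[(True, True)] / p_toothache(True)

print(f"P(Toothache)          = {p_toothache(True):.2f}")
print(f"P(Cavity)             = {p_cavity(True):.2f}")
print(f"P(Cavity | Toothache) = {p_cavity_given_toothache:.2f}")
```

Every query about the two variables can be answered from the four cells alone, which is what "completely specifies all beliefs" means in practice. The table grows exponentially with the number of variables, which motivates the factored representation used by Bayesian networks.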

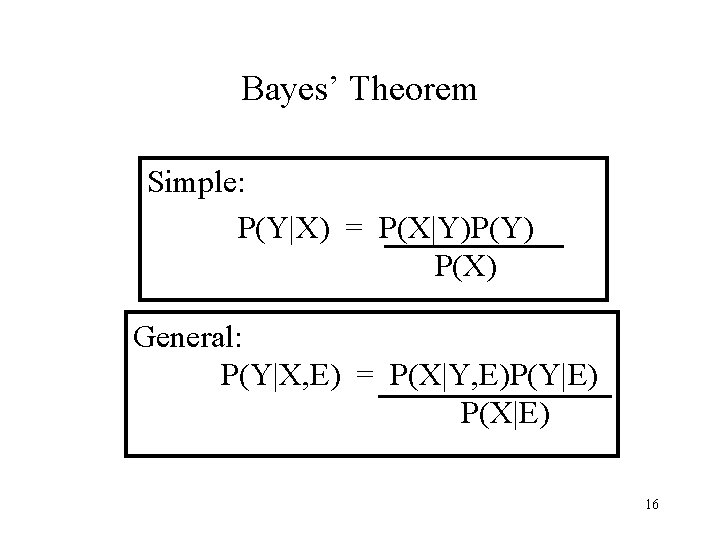

Bayes’ Theorem
Simple: $P(Y \mid X) = \dfrac{P(X \mid Y)\,P(Y)}{P(X)}$
General: $P(Y \mid X, E) = \dfrac{P(X \mid Y, E)\,P(Y \mid E)}{P(X \mid E)}$
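As a worked instance of the simple form, the numbers from the Toothache/Cavity joint distribution example give P(Cavity) = 0.04 + 0.06 = 0.10, P(Toothache) = 0.04 + 0.01 = 0.05 and P(Toothache | Cavity) = 0.04 / 0.10 = 0.4. Choosing Y = Cavity and X = Toothache is an illustrative assumption; the slides do not work this example.

```latex
P(\text{Cavity} \mid \text{Toothache})
  = \frac{P(\text{Toothache} \mid \text{Cavity})\, P(\text{Cavity})}{P(\text{Toothache})}
  = \frac{0.4 \times 0.10}{0.05}
  = 0.8
```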

Bayesian Probability
• No need for repeated trials
• Appears to follow the rules of classical probability
• How well do we assign probabilities?
The Probability Wheel: a tool for assessing probabilities

Bayesian Network - Definition
• Causal structure
• Interconnected nodes
• Directed acyclic links
• Joint distribution formed from the conditional distributions at each node

Earthquake or Burglary
Nodes in the figure: Burglary, Earthquake, Alarm, Mary Calls, John Calls

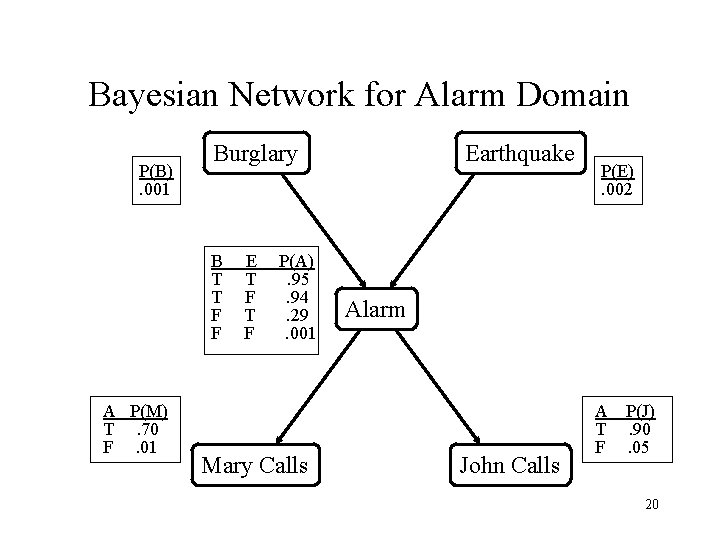

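Bayesian Network for Alarm Domain
Priors: P(B) = .001 (Burglary), P(E) = .002 (Earthquake)

Alarm, given Burglary (B) and Earthquake (E):

| B | E | P(A) |
|---|---|---|
| T | T | .95 |
| T | F | .94 |
| F | T | .29 |
| F | F | .001 |

Mary Calls, given Alarm (A):

| A | P(M) |
|---|---|
| T | .70 |
| F | .01 |

John Calls, given Alarm (A):

| A | P(J) |
|---|---|
| T | .90 |
| F | .05 |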

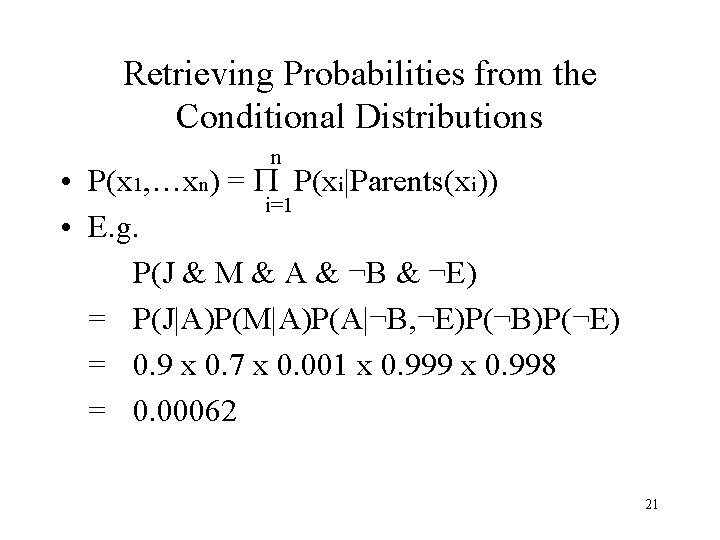

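Retrieving Probabilities from the Conditional Distributions
• $P(x_1, \ldots, x_n) = \prod_{i=1}^{n} P(x_i \mid \mathrm{Parents}(x_i))$
• E.g. $P(J \land M \land A \land \lnot B \land \lnot E) = P(J \mid A)\,P(M \mid A)\,P(A \mid \lnot B, \lnot E)\,P(\lnot B)\,P(\lnot E) = 0.9 \times 0.7 \times 0.001 \times 0.999 \times 0.998 \approx 0.000628$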
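The product rule on this slide can be sketched in a few lines of Python using the conditional probabilities from the alarm-domain slide. The helper names `bern` and `joint` and the dictionary encoding are illustrative assumptions, not part of the slides.

```python
from itertools import product

# Conditional probability tables from the alarm-domain slide.
P_B = 0.001                      # P(Burglary)
P_E = 0.002                      # P(Earthquake)
P_A = {                          # P(Alarm | Burglary, Earthquake)
    (True, True): 0.95,
    (True, False): 0.94,
    (False, True): 0.29,
    (False, False): 0.001,
}
P_J = {True: 0.90, False: 0.05}  # P(JohnCalls | Alarm)
P_M = {True: 0.70, False: 0.01}  # P(MaryCalls | Alarm)

def bern(p_true, value):
    """Probability that a Boolean variable takes `value`, given P(True)."""
    return p_true if value else 1.0 - p_true

def joint(b, e, a, j, m):
    """Product of each node's probability conditioned on its parents."""
    return (bern(P_B, b) * bern(P_E, e) * bern(P_A[(b, e)], a)
            * bern(P_J[a], j) * bern(P_M[a], m))

# The example from the slide: J, M and A true, B and E false.
p_example = joint(b=False, e=False, a=True, j=True, m=True)
print(f"{p_example:.6f}")

# Sanity check: the 32 joint entries sum to 1.
total = sum(joint(*world) for world in product([True, False], repeat=5))
```

Ten numbers (two priors, four alarm entries and two entries each for the callers) are enough to recover all 32 entries of the full joint table.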

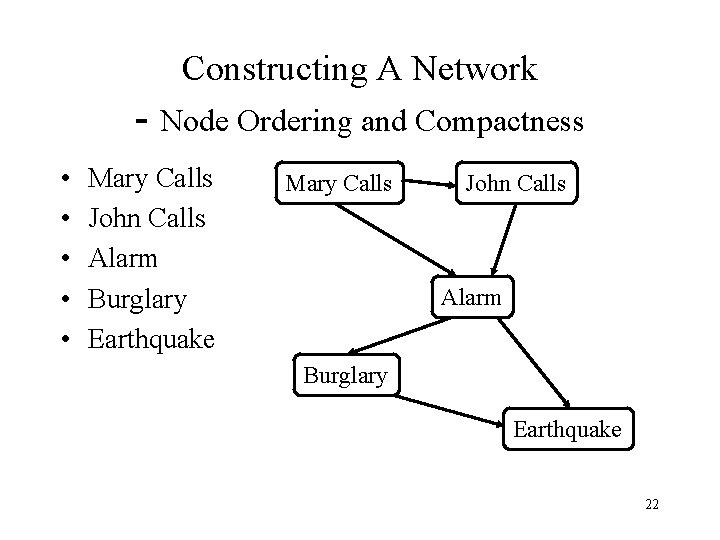

Constructing a Network - Node Ordering and Compactness
Node ordering: Mary Calls, John Calls, Alarm, Burglary, Earthquake

Node Ordering and Compactness contd.
Node ordering: Mary Calls, John Calls, Earthquake, Burglary, Alarm

Node Ordering and Compactness contd.
Node ordering: Mary Calls, John Calls, Earthquake, Burglary, Alarm
Nodes in the figure: Mary Calls, John Calls, Earthquake, Burglary, Alarm

Conditional Independence Revisited - D-Separation
• To do inference in a belief network we have to know whether two sets of variables are conditionally independent given a set of evidence.
• The method for doing this is called Direction-Dependent Separation, or D-Separation.

D-Separation contd.
• If every undirected path from a node in X to a node in Y is d-separated by E, then X and Y are conditionally independent given E.
• X is a set of variables with unknown values
• Y is a set of variables with unknown values
• E is a set of variables with known values

D-Separation contd.
• A set of nodes, E, d-separates two sets of nodes, X and Y, if every undirected path from a node in X to a node in Y is blocked given E.
• A path is blocked given a set of nodes, E, if there is a node Z on the path for which one of the following holds:
  1) Z is in E and Z has one path arrow leading in and one leading out.
  2) Z is in E and Z has both path arrows leading out.
  3) Neither Z nor any descendant of Z is in E, and both path arrows lead in to Z.

Blocking
Figure: the three blocking configurations for a node Z on a path between X and Y, given evidence E

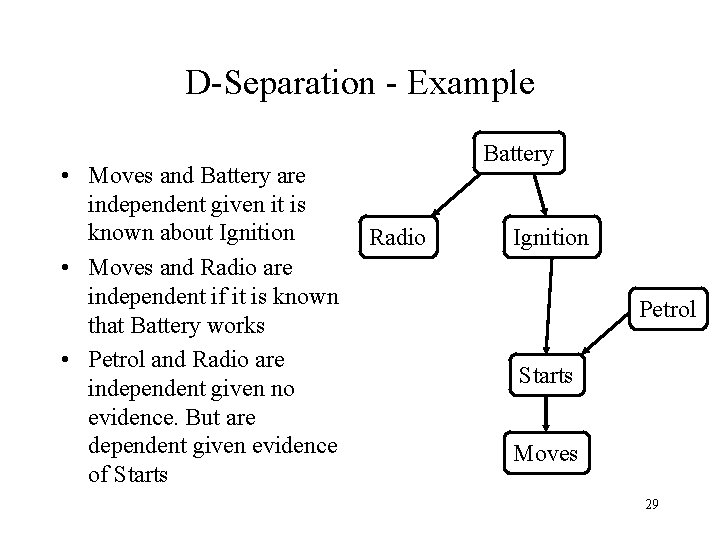

D-Separation - Example
• Moves and Battery are independent given that Ignition is known
• Moves and Radio are independent if it is known that Battery works
• Petrol and Radio are independent given no evidence, but are dependent given evidence of Starts
Nodes in the figure: Battery, Radio, Ignition, Petrol, Starts, Moves
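The three claims on this slide can be checked mechanically with a small path-based d-separation test. The sketch below is a minimal illustration rather than an efficient algorithm: it enumerates every undirected path and applies the three blocking rules. The edge list is an assumption, because the slide text names the nodes but not the arrows; it uses the usual textbook structure Battery → Radio, Battery → Ignition, Ignition → Starts, Petrol → Starts, Starts → Moves.

```python
# Assumed edges (parent, child); the slide text lists only the node names.
EDGES = [
    ("Battery", "Radio"),
    ("Battery", "Ignition"),
    ("Ignition", "Starts"),
    ("Petrol", "Starts"),
    ("Starts", "Moves"),
]

def parents(node):
    return {p for p, c in EDGES if c == node}

def children(node):
    return {c for p, c in EDGES if p == node}

def descendants(node):
    """All nodes reachable from `node` by following arrows forwards."""
    found, stack = set(), [node]
    while stack:
        for child in children(stack.pop()):
            if child not in found:
                found.add(child)
                stack.append(child)
    return found

def undirected_paths(start, goal, path=None):
    """Yield every simple path from start to goal, ignoring arrow direction."""
    path = path or [start]
    if start == goal:
        yield path
        return
    for nxt in parents(start) | children(start):
        if nxt not in path:
            yield from undirected_paths(nxt, goal, path + [nxt])

def is_blocked(path, evidence):
    """Apply the three blocking rules to each interior node Z of the path."""
    for prev, z, nxt in zip(path, path[1:], path[2:]):
        both_arrows_in = prev in parents(z) and nxt in parents(z)
        if both_arrows_in:
            # Rule 3: neither Z nor any descendant of Z is in the evidence.
            if z not in evidence and not (descendants(z) & evidence):
                return True
        elif z in evidence:
            # Rules 1 and 2: chain or common-cause node that is observed.
            return True
    return False

def d_separated(x, y, evidence=frozenset()):
    evidence = set(evidence)
    return all(is_blocked(p, evidence) for p in undirected_paths(x, y))

print(d_separated("Moves", "Battery", {"Ignition"}))
print(d_separated("Moves", "Radio", {"Battery"}))
print(d_separated("Petrol", "Radio"))
print(d_separated("Petrol", "Radio", {"Starts"}))
```

Under the assumed edges, the first three checks report separation and the last does not, matching the slide: observing Starts unblocks the path between Petrol and Radio.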

Inference
• Diagnostic inferences (effects to causes)
• Causal inferences (causes to effects)
• Intercausal inferences, or ‘explaining away’ (between causes of a common effect)
• Mixed inferences (combination of two or more of the above)
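The inference types can be illustrated on the alarm network by brute-force enumeration over the joint distribution. This is a minimal sketch that uses the conditional probabilities from the alarm-domain slide; the `query` function and the variable letters are illustrative assumptions, and enumeration is not the algorithm the slides go on to describe.

```python
from itertools import product

VARS = ("B", "E", "A", "J", "M")
P_B, P_E = 0.001, 0.002
P_A = {(True, True): 0.95, (True, False): 0.94,
       (False, True): 0.29, (False, False): 0.001}
P_J = {True: 0.90, False: 0.05}
P_M = {True: 0.70, False: 0.01}

def bern(p_true, value):
    return p_true if value else 1.0 - p_true

def joint(w):
    """Joint probability of one full assignment (dict of variable -> bool)."""
    return (bern(P_B, w["B"]) * bern(P_E, w["E"])
            * bern(P_A[(w["B"], w["E"])], w["A"])
            * bern(P_J[w["A"]], w["J"]) * bern(P_M[w["A"]], w["M"]))

def query(target, evidence):
    """P(target=True | evidence), summing the joint over consistent worlds."""
    numerator = denominator = 0.0
    for values in product([True, False], repeat=len(VARS)):
        world = dict(zip(VARS, values))
        if any(world[k] != v for k, v in evidence.items()):
            continue
        p = joint(world)
        denominator += p
        if world[target]:
            numerator += p
    return numerator / denominator

diagnostic = query("B", {"J": True, "M": True})       # effects to cause
causal = query("J", {"B": True})                      # cause to effect
before = query("B", {"A": True})                      # intercausal, step 1
after = query("B", {"A": True, "E": True})            # intercausal, step 2

print(f"P(B | J, M) = {diagnostic:.3f}")
print(f"P(J | B)    = {causal:.3f}")
print(f"P(B | A)    = {before:.3f}")
print(f"P(B | A, E) = {after:.4f}")
```

With these tables, the belief in Burglary given Alarm drops sharply once Earthquake is also observed, which is the 'explaining away' effect named in the list above. Enumeration touches all 32 worlds per query and scales exponentially with the number of variables.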

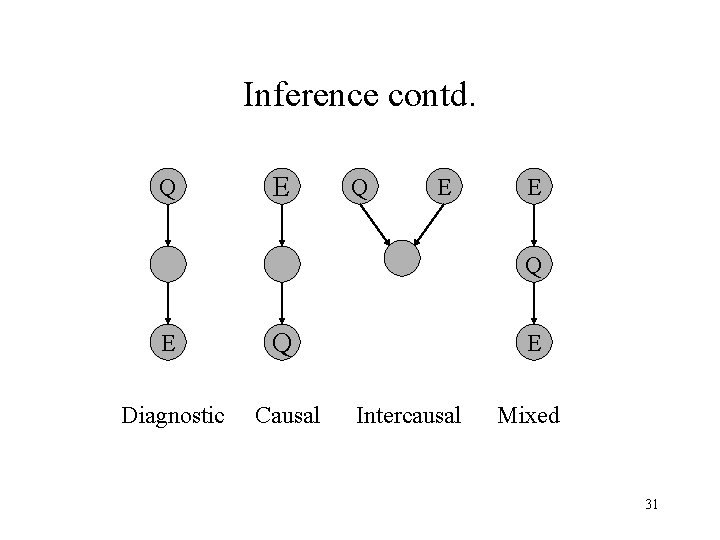

Inference contd.
Figure: positions of the query node (Q) and evidence nodes (E) for diagnostic, causal, intercausal and mixed inference

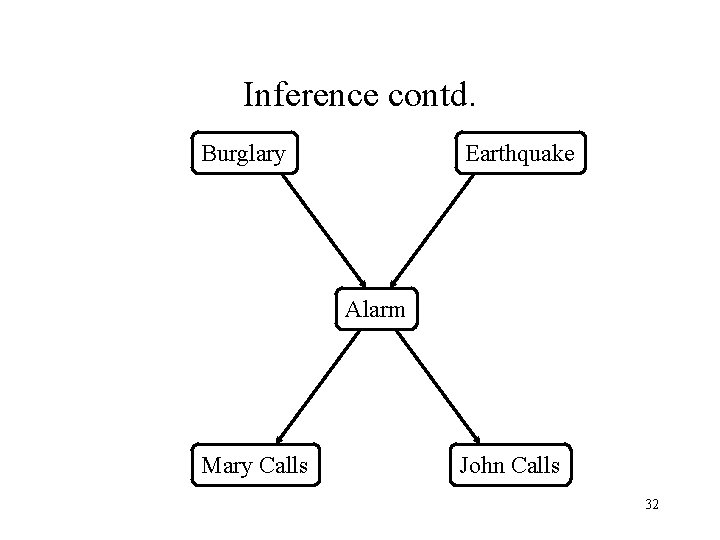

Inference contd.
Nodes in the figure: Burglary, Earthquake, Alarm, Mary Calls, John Calls

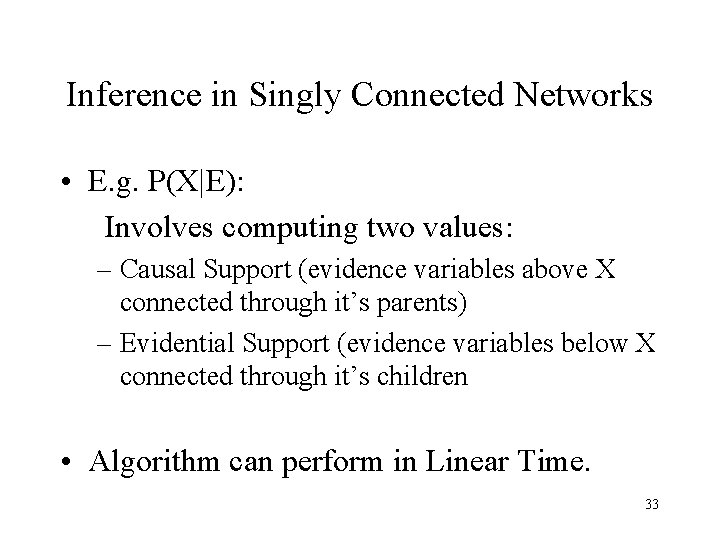

Inference in Singly Connected Networks
• E.g. P(X|E) involves computing two values:
  – Causal support (evidence variables above X, connected through its parents)
  – Evidential support (evidence variables below X, connected through its children)
• The algorithm runs in linear time.

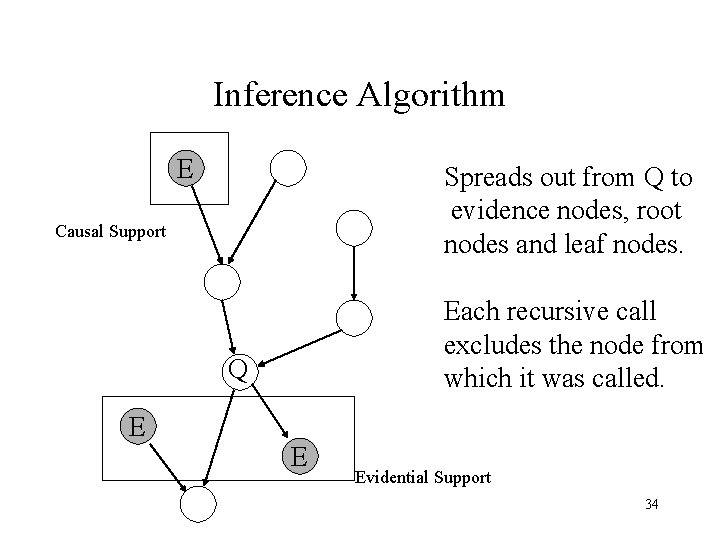

Inference Algorithm
• Spreads out from Q to evidence nodes, root nodes and leaf nodes.
• Each recursive call excludes the node from which it was called.
Figure labels: query node Q, evidence nodes E, Causal Support, Evidential Support

Inference in Multiply Connected Networks
• Exact inference is known to be NP-hard
• Approaches include:
  – Clustering
  – Conditioning
  – Stochastic simulation
• Stochastic simulation is most often used, particularly on large networks.

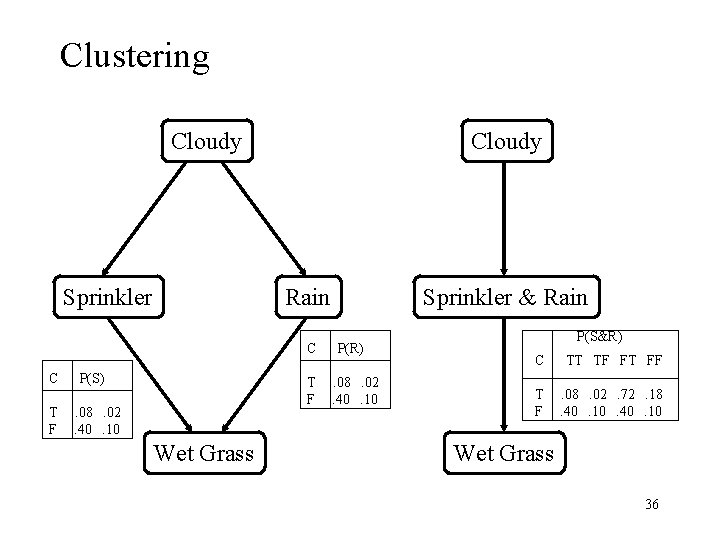

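Clustering
Figure: the network Cloudy, Sprinkler, Rain, Wet Grass, with tables P(S) and P(R) conditioned on Cloudy (C), alongside the clustered network in which Sprinkler and Rain are merged into a single "Sprinkler & Rain" node with table P(S&R) conditioned on C over the states TT, TF, FT, FF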

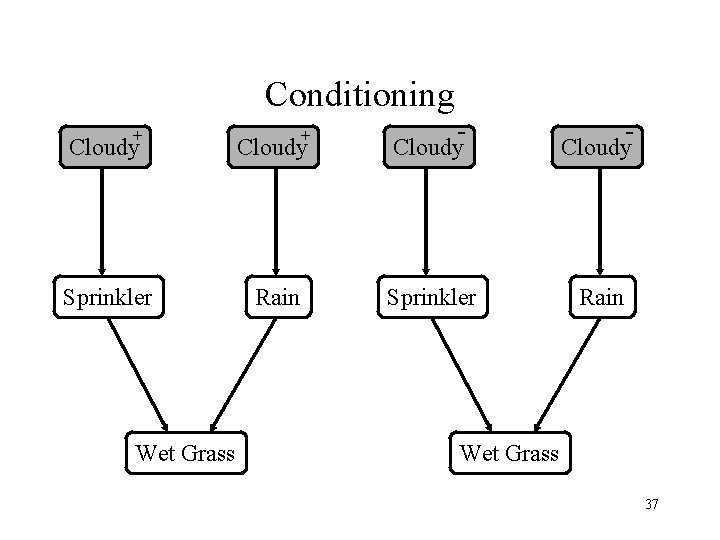

Conditioning
Figure: copies of the network Cloudy, Sprinkler, Rain, Wet Grass, with Cloudy instantiated to + and to -

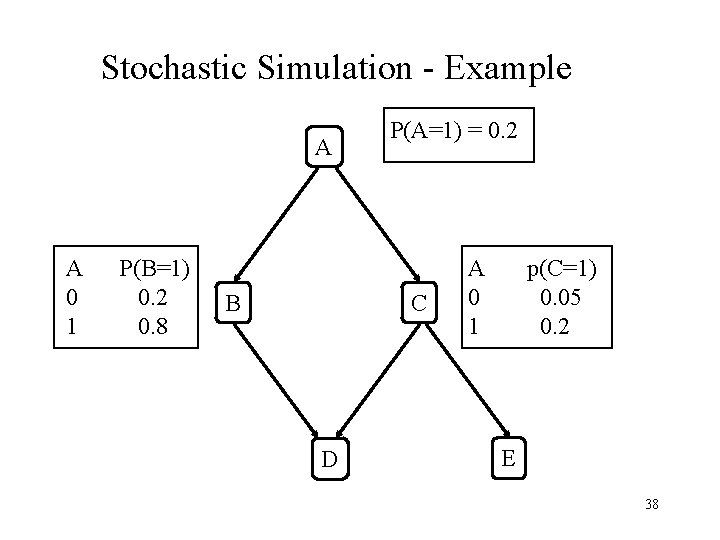

Stochastic Simulation - Example
Nodes in the figure: A, B, C, D, E
P(A=1) = 0.2

| A | P(B=1) |
|---|---|
| 0 | 0.2 |
| 1 | 0.8 |

| A | P(C=1) |
|---|---|
| 0 | 0.05 |
| 1 | 0.2 |

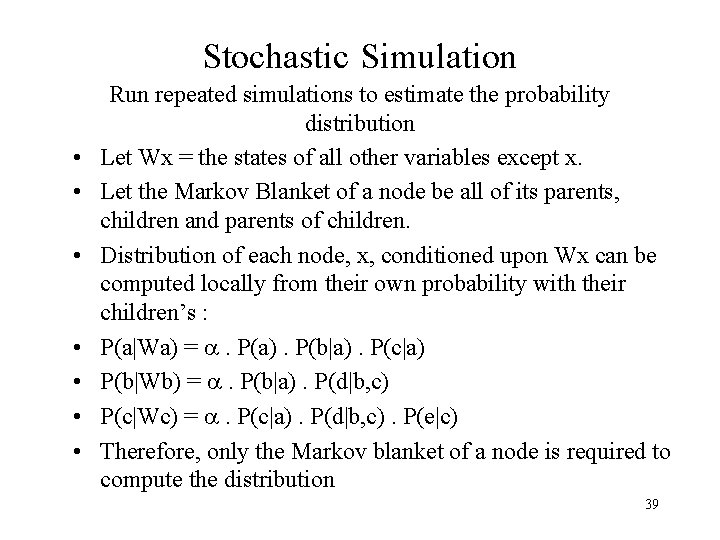

Stochastic Simulation
• Run repeated simulations to estimate the probability distribution
• Let $W_x$ = the states of all variables except x.
• Let the Markov blanket of a node be all of its parents, children and parents of children.
• The distribution of each node, x, conditioned upon $W_x$ can be computed locally from its own conditional probability and those of its children, where $\alpha$ is a normalising constant:
  – $P(a \mid W_a) = \alpha\, P(a)\, P(b \mid a)\, P(c \mid a)$
  – $P(b \mid W_b) = \alpha\, P(b \mid a)\, P(d \mid b, c)$
  – $P(c \mid W_c) = \alpha\, P(c \mid a)\, P(d \mid b, c)\, P(e \mid c)$
• Therefore, only the Markov blanket of a node is required to compute the distribution

The Algorithm
• Set all observed nodes to their values
• Set all other nodes to random values
• STEP 1
  – Select a node randomly from the network
  – According to the states of the node’s Markov blanket, compute $P(x = \text{state} \mid W_x)$ for all states
• STEP 2
  – Use a random number generator that is biased according to the distribution computed in step 1 to select the next value of the node
• Repeat

Algorithm contd.
• The final probability distribution of each unobserved node is calculated from either:
  1) the number of times the node took each particular state, or
  2) the average conditional probability of the node taking each particular state, given the other variables’ states.
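The two steps of the algorithm can be sketched as a small sampler (this scheme is commonly called Gibbs sampling). The sketch below is an illustration under stated assumptions: it runs on the alarm network rather than the A to E example, because the slides give complete tables only for the alarm network; it estimates the answer by counting states (option 1 above); and the function names, seed and step counts are arbitrary choices. For brevity it normalises the full joint with the node set to each state, which gives the same conditional as the Markov-blanket product because all other factors cancel.

```python
import random

P_B, P_E = 0.001, 0.002
P_A = {(True, True): 0.95, (True, False): 0.94,
       (False, True): 0.29, (False, False): 0.001}
P_J = {True: 0.90, False: 0.05}
P_M = {True: 0.70, False: 0.01}

def bern(p_true, value):
    return p_true if value else 1.0 - p_true

def joint(s):
    """Joint probability of a full assignment (dict of variable -> bool)."""
    return (bern(P_B, s["B"]) * bern(P_E, s["E"])
            * bern(P_A[(s["B"], s["E"])], s["A"])
            * bern(P_J[s["A"]], s["J"]) * bern(P_M[s["A"]], s["M"]))

def simulate(query, evidence, n_steps=100_000, burn_in=1_000, seed=0):
    """Estimate P(query=True | evidence) by stochastic simulation."""
    rng = random.Random(seed)
    hidden = [v for v in ("B", "E", "A", "J", "M") if v not in evidence]
    # Observed nodes are fixed; all other nodes start at random values.
    state = dict(evidence)
    for v in hidden:
        state[v] = rng.random() < 0.5
    times_true = 0
    for step in range(burn_in + n_steps):
        node = rng.choice(hidden)
        # STEP 1: distribution of the node given all other variables.
        p_true = joint({**state, node: True})
        p_false = joint({**state, node: False})
        cond_true = p_true / (p_true + p_false)
        # STEP 2: draw the node's next value from that distribution.
        state[node] = rng.random() < cond_true
        if step >= burn_in and state[query]:
            times_true += 1
    return times_true / n_steps

estimate = simulate("B", {"J": True, "M": True})
print(f"Estimated P(B | J, M) = {estimate:.3f}")
```

The estimate fluctuates from run to run and with the seed. Exact enumeration on the same tables gives about 0.284 for this query, so the sampler should land near that value once enough steps are taken.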

Case Study - Pathfinder
• Diagnostic expert system for lymph-node diseases
• 4 versions of Pathfinder:
  1) Rule based
  2) Experimented with certainty factors / Dempster-Shafer theory / Bayesian models
  3) Refined probabilities
  4) Refined dependencies

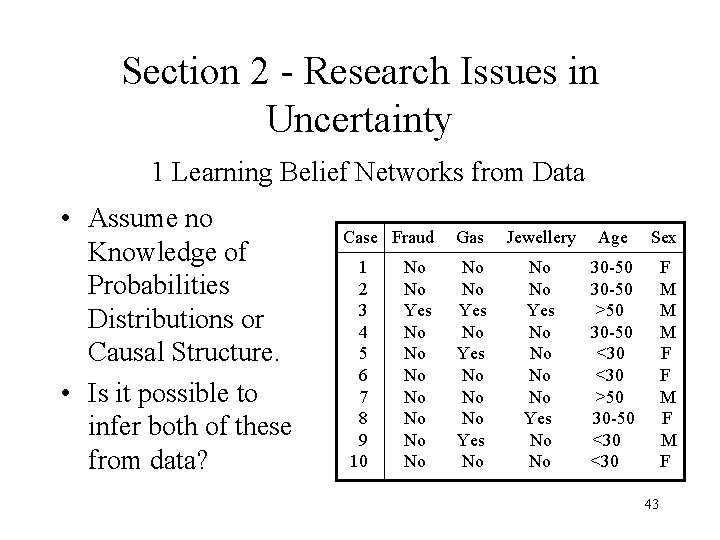

Section 2 - Research Issues in Uncertainty
1 Learning Belief Networks from Data
• Assume no knowledge of probability distributions or causal structure.
• Is it possible to infer both of these from data?
Example data table: 10 cases with columns Case, Fraud, Gas, Jewellery, Age and Sex (entries such as Yes/No, the age bands <30, 30-50 and >50, and F/M)

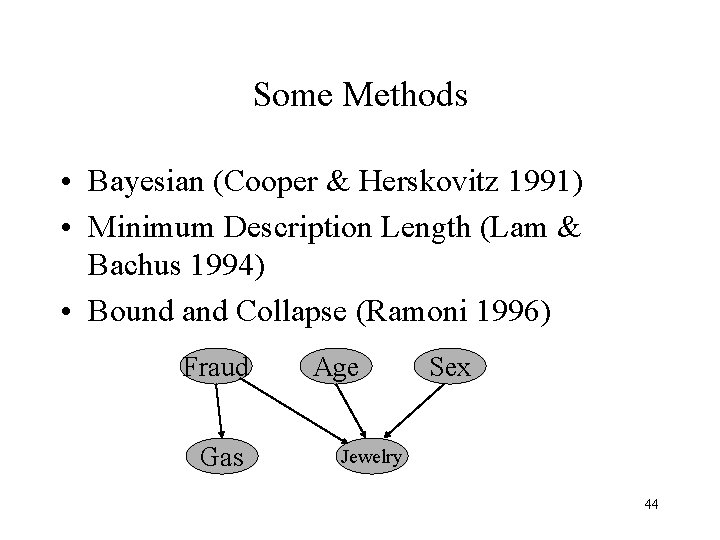

Some Methods
• Bayesian (Cooper & Herskovits 1991)
• Minimum Description Length (Lam & Bacchus 1994)
• Bound and Collapse (Ramoni 1996)
Nodes in the figure: Fraud, Gas, Age, Sex, Jewellery

2 Dynamics - Markov Models
Figure: a chain of states (State t-2, State t-1, State t+1, State t+2) linked by the State Transition Model, with states linked to their percepts (Percept t-1, Percept t+1, Percept t+2) by the Sensor Model

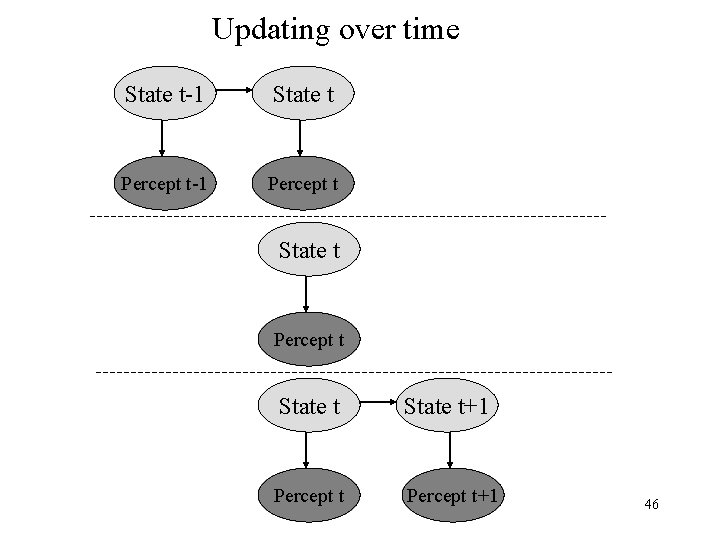

Updating over time
Figure: State t-1, State t and State t+1, each with its percept (Percept t-1, Percept t, Percept t+1)
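One common way to update a belief over time in such a model is to alternate a prediction step (the state transition model) with a correction step (the sensor model). The Python sketch below shows that update on a hypothetical two-state example. The state names, percept names and all probabilities are invented for illustration; none of them come from the slides.

```python
# Hypothetical two-state example; none of these numbers come from the slides.
STATES = ("rain", "dry")

# State transition model: P(State t | State t-1).
TRANSITION = {
    "rain": {"rain": 0.7, "dry": 0.3},
    "dry": {"rain": 0.3, "dry": 0.7},
}

# Sensor model: P(Percept t | State t).
SENSOR = {
    "rain": {"umbrella": 0.9, "no_umbrella": 0.1},
    "dry": {"umbrella": 0.2, "no_umbrella": 0.8},
}

def update(belief, percept):
    """One time step: predict with the transition model, then correct."""
    # Prediction: push the current belief through the transition model.
    predicted = {
        s: sum(belief[prev] * TRANSITION[prev][s] for prev in STATES)
        for s in STATES
    }
    # Correction: weight by the sensor model and renormalise.
    weighted = {s: SENSOR[s][percept] * predicted[s] for s in STATES}
    total = sum(weighted.values())
    return {s: w / total for s, w in weighted.items()}

belief = {"rain": 0.5, "dry": 0.5}   # belief before any percept arrives
for percept in ["umbrella", "umbrella"]:
    belief = update(belief, percept)
    print({s: round(p, 3) for s, p in belief.items()})
```

Each update needs only the previous belief and the newest percept, so the cost per time step stays constant however long the sequence runs.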

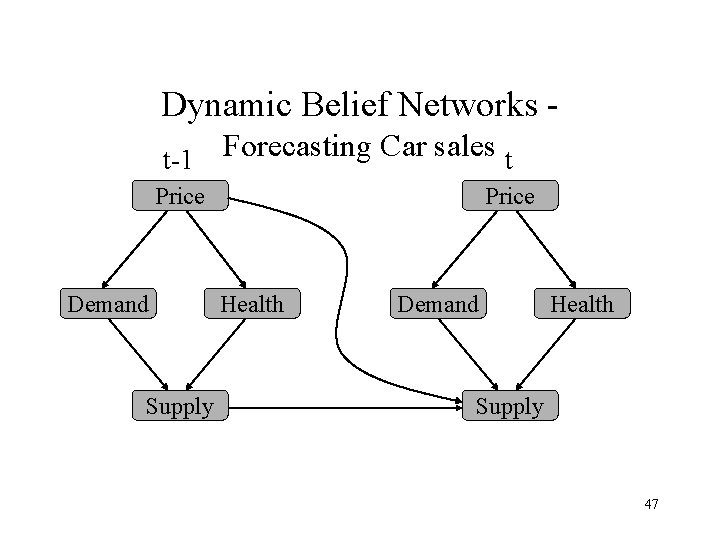

Dynamic Belief Networks
Forecasting car sales
Figure: time slices t-1 and t, each containing the nodes Price, Demand, Supply and Health

3 Other Approaches to Modeling Uncertainty
• Default Reasoning
• Dempster-Shafer Theory
• Fuzzy Logic?
