Ultra-Efficient Scientific Computing: More Science, Less Power

- Slides: 57

Ultra-Efficient Scientific Computing: More Science, Less Power

John Shalf, Leonid Oliker, Michael Wehner, Kathy Yelick

RAMP Retreat: January 16, 2008

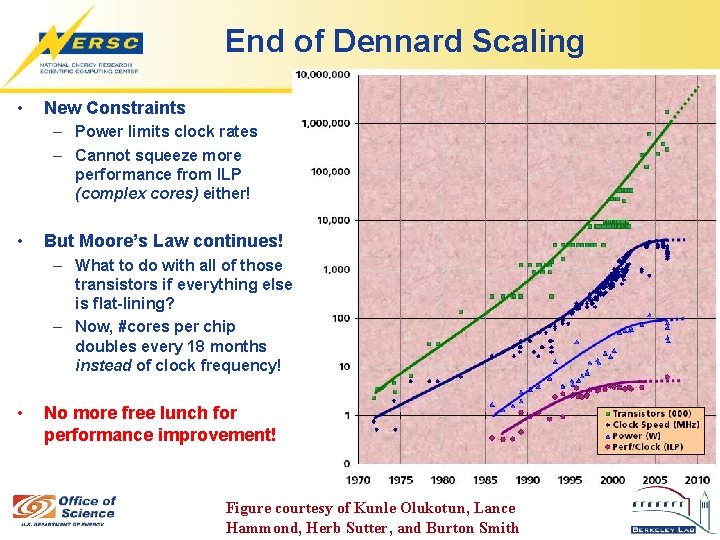

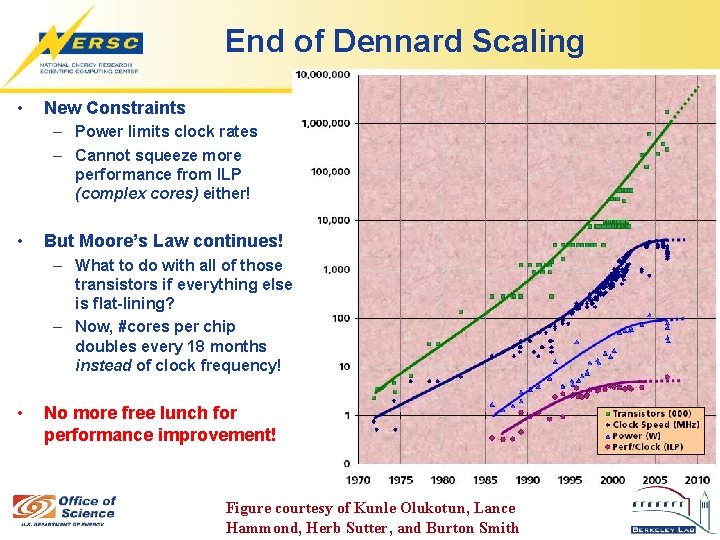

End of Dennard Scaling

- New constraints
  - Power limits clock rates
  - Cannot squeeze more performance from ILP (complex cores) either!
- But Moore’s Law continues!
  - What to do with all of those transistors if everything else is flat-lining?
  - Now, #cores per chip doubles every 18 months instead of clock frequency!
- No more free lunch for performance improvement!

Figure courtesy of Kunle Olukotun, Lance Hammond, Herb Sutter, and Burton Smith

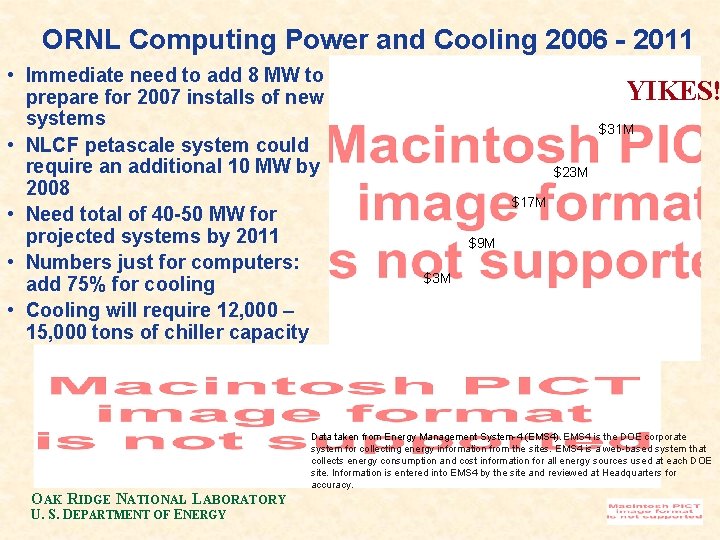

ORNL Computing Power and Cooling 2006-2011

- Immediate need to add 8 MW to prepare for 2007 installs of new systems
- NLCF petascale system could require an additional 10 MW by 2008
- Need total of 40-50 MW for projected systems by 2011
- Numbers just for computers: add 75% for cooling
- Cooling will require 12,000-15,000 tons of chiller capacity

YIKES! Chart cost labels: $31M, $23M, $17M, $9M, $3M. Cost estimates based on $0.05 per kWh.

OAK RIDGE NATIONAL LABORATORY, U.S. DEPARTMENT OF ENERGY

Data taken from Energy Management System-4 (EMS4). EMS4 is the DOE corporate system for collecting energy information from the sites. It is a web-based system that collects energy consumption and cost information for all energy sources used at each DOE site. Information is entered into EMS4 by the site and reviewed at Headquarters for accuracy.

Top 500 Estimated Power Requirements

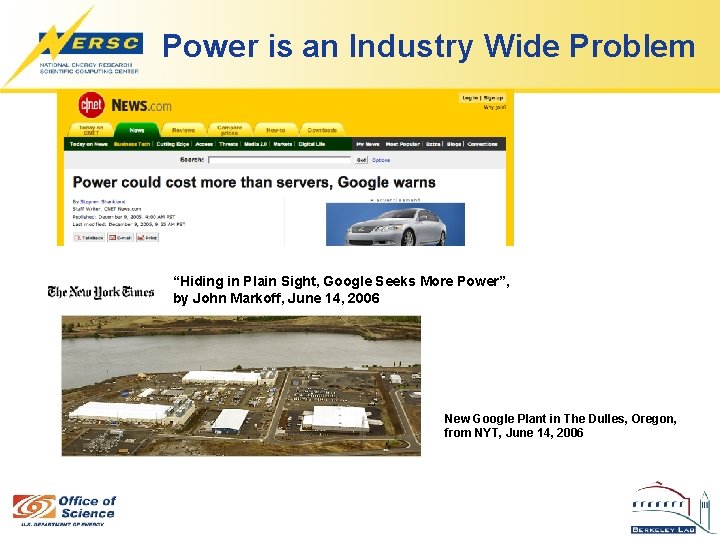

Power is an Industry Wide Problem

“Hiding in Plain Sight, Google Seeks More Power”, by John Markoff, June 14, 2006

New Google Plant in The Dalles, Oregon, from NYT, June 14, 2006

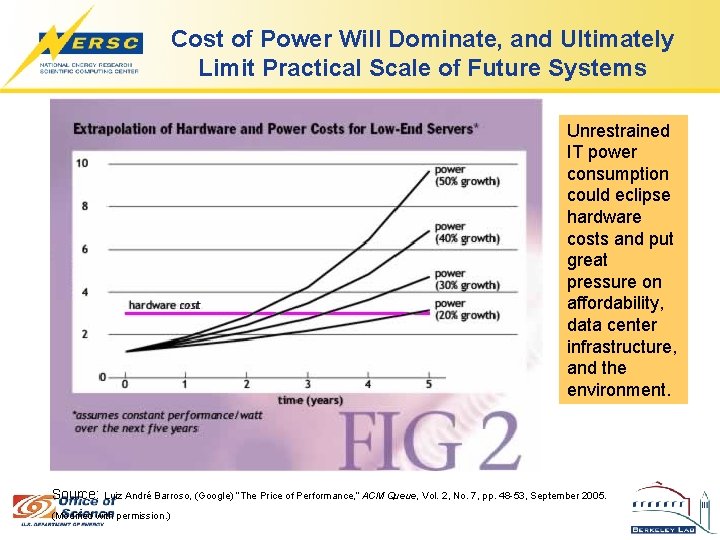

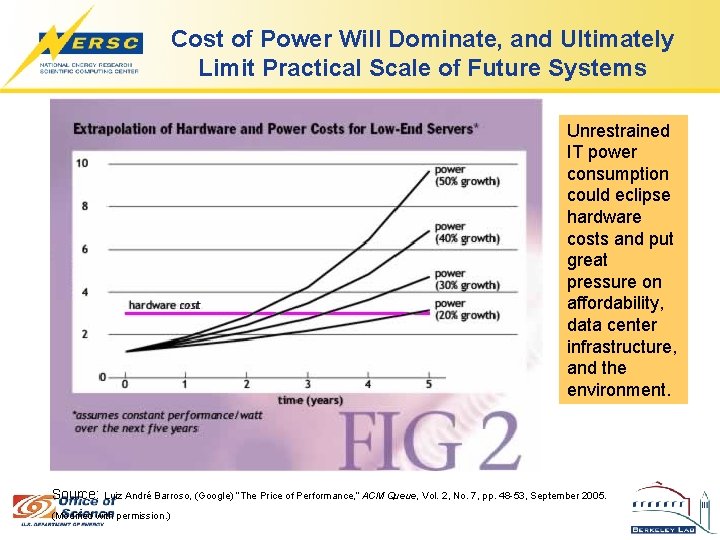

Cost of Power Will Dominate, and Ultimately Limit Practical Scale of Future Systems

Unrestrained IT power consumption could eclipse hardware costs and put great pressure on affordability, data center infrastructure, and the environment.

Source: Luiz André Barroso (Google), “The Price of Performance,” ACM Queue, Vol. 3, No. 7, pp. 48-53, September 2005. (Modified with permission.)

Ultra-Efficient Computing: 100x over Business As Usual

- Cooperative effort we call “science-driven system architecture”
  - Effective future exascale systems must be developed in the context of application requirements
- Radically change HPC system development via application-driven hardware/software co-design
  - Achieve 100x power efficiency and 100x capability of mainstream HPC approach for targeted high impact applications
  - Accelerate development cycle for exascale HPC systems
  - Approach is applicable to numerous scientific areas in the DOE Office of Science
  - Proposed pilot application: ultra-high resolution climate change simulation

New Design Constraint: POWER

- Transistors still getting smaller
  - Moore’s Law is alive and well
- But Dennard scaling is dead!
  - No power efficiency improvements with smaller transistors
  - No clock frequency scaling with smaller transistors
  - All “magical improvement of silicon goodness” has ended
- Traditional methods for extracting more performance are well-mined
  - Cannot expect exotic architectures to save us from the “power wall”
  - Even resources of DARPA can only accelerate existing research prototypes (not “magic” new technology)!

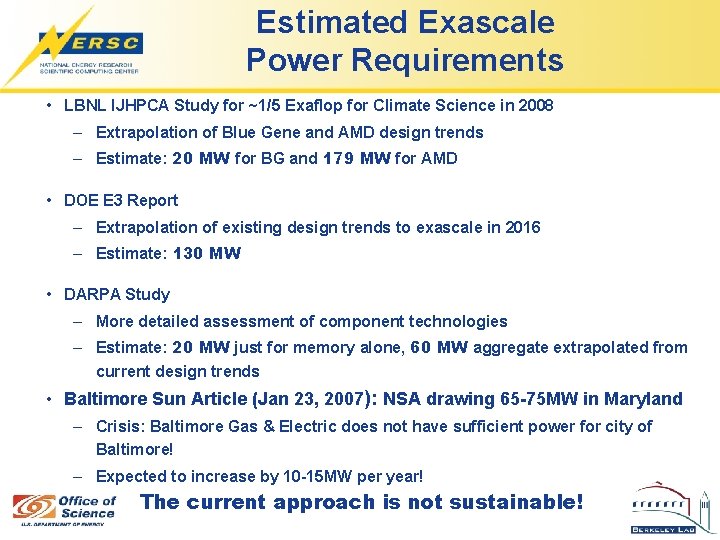

Estimated Exascale Power Requirements

- LBNL IJHPCA study for ~1/5 exaflop for climate science in 2008
  - Extrapolation of Blue Gene and AMD design trends
  - Estimate: 20 MW for BG and 179 MW for AMD
- DOE E3 Report
  - Extrapolation of existing design trends to exascale in 2016
  - Estimate: 130 MW
- DARPA study
  - More detailed assessment of component technologies
  - Estimate: 20 MW just for memory alone, 60 MW aggregate extrapolated from current design trends
- Baltimore Sun article (Jan 23, 2007): NSA drawing 65-75 MW in Maryland
  - Crisis: Baltimore Gas & Electric does not have sufficient power for city of Baltimore!
  - Expected to increase by 10-15 MW per year!

The current approach is not sustainable!

Path to Power Efficiency: Reducing Waste in Computing

- Examine methodology of low-power embedded computing market
  - Optimized for low power, low cost, and high computational efficiency

“Years of research in low-power embedded computing have shown only one design technique to reduce power: reduce waste.” Mark Horowitz, Stanford University & Rambus Inc.

- Sources of waste
  - Wasted transistors (surface area)
  - Wasted computation (useless work/speculation/stalls)
  - Wasted bandwidth (data movement)
  - Designing for serial performance

Our New Design Paradigm: Application-Driven HPC

- Identify high-impact exascale scientific applications
- Tailor system architecture to highly parallel applications
- Co-design algorithms and software together with the hardware
  - Enabled by hardware emulation environments
  - Supported by auto-tuning for code generation

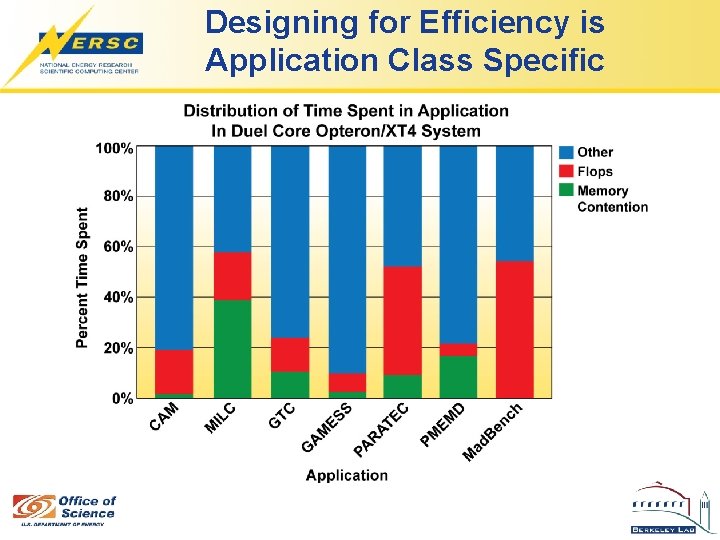

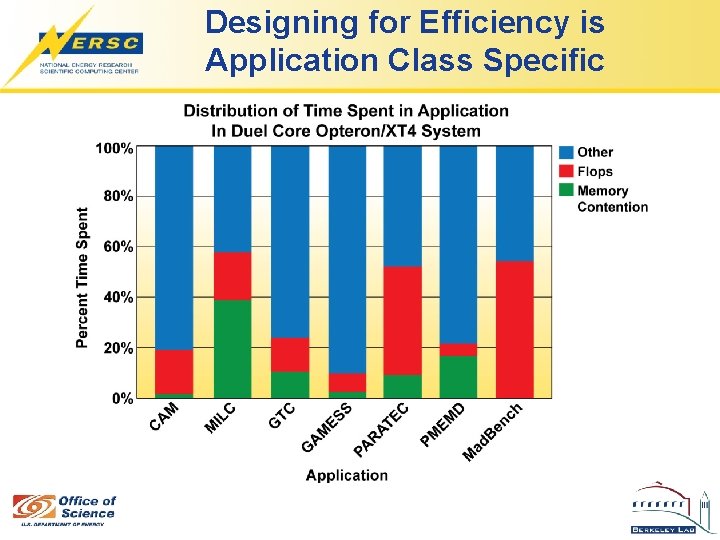

Designing for Efficiency is Application Class Specific

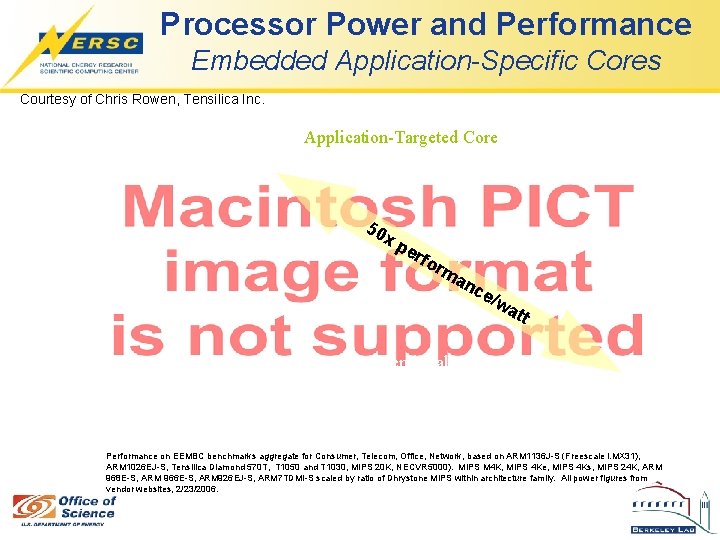

Processor Power and Performance: Embedded Application-Specific Cores

Courtesy of Chris Rowen, Tensilica Inc.

Figure labels: Application-Targeted Core; Conventional Embedded Core; 50x performance/watt.

Performance on EEMBC benchmarks aggregate for Consumer, Telecom, Office, Network, based on ARM1136J-S (Freescale i.MX31), ARM1026EJ-S, Tensilica Diamond 570T, T1050 and T1030, MIPS 20K, NEC VR5000. MIPS M4K, MIPS 4Ke, MIPS 4Ks, MIPS 24K, ARM968E-S, ARM966E-S, ARM926EJ-S, ARM7TDMI-S scaled by ratio of Dhrystone MIPS within architecture family. All power figures from vendor websites, 2/23/2006.

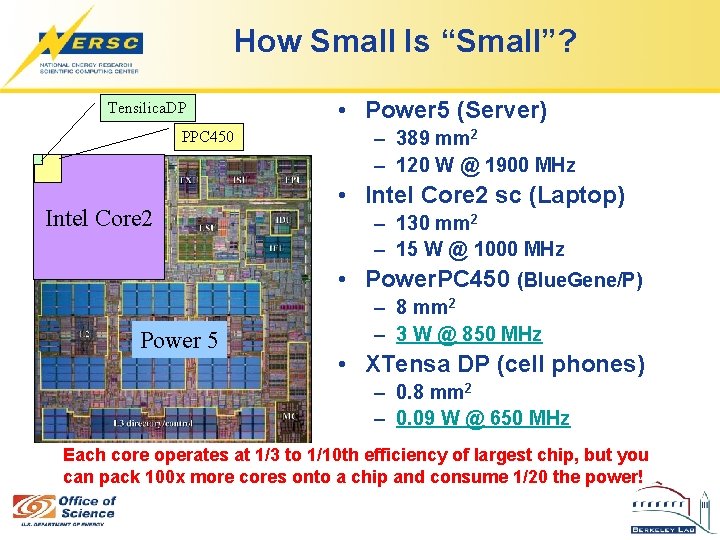

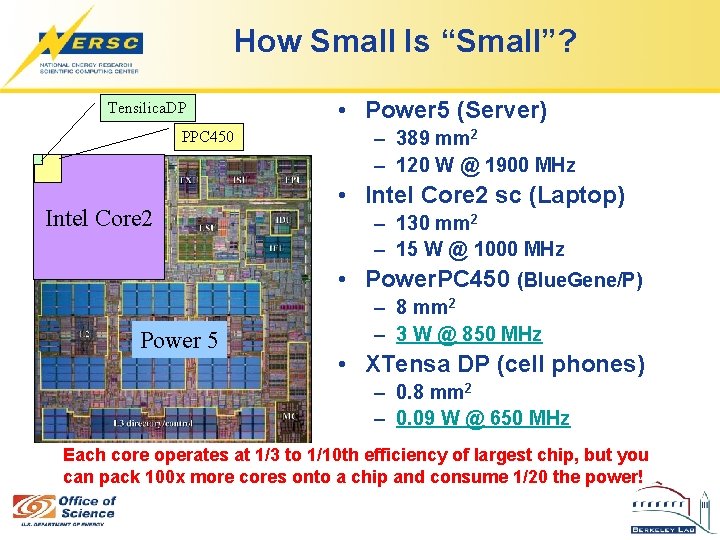

How Small Is “Small”?

- Power5 (server)
  - 389 mm²
  - 120 W @ 1900 MHz
- Intel Core2 sc (laptop)
  - 130 mm²
  - 15 W @ 1000 MHz
- PowerPC 450 (BlueGene/P)
  - 8 mm²
  - 3 W @ 850 MHz
- Xtensa DP (cell phones)
  - 0.8 mm²
  - 0.09 W @ 650 MHz

Figure labels: Tensilica DP, PPC450, Intel Core2, Power5

Each core operates at 1/3 to 1/10th efficiency of largest chip, but you can pack 100x more cores onto a chip and consume 1/20 the power!
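The area and power figures quoted on this slide can be turned into rough ratios against the largest core. The sketch below only divides the slide's numbers; the dictionary layout and the `ratios_vs` helper are illustrative names, and the result says nothing about delivered performance per core.

```python
# Figures as quoted on the slide: (die area in mm^2, power in W, clock in MHz).
CORES = {
    "Power5": (389.0, 120.0, 1900),
    "Intel Core2 sc": (130.0, 15.0, 1000),
    "PowerPC 450": (8.0, 3.0, 850),
    "Xtensa DP": (0.8, 0.09, 650),
}


def ratios_vs(reference: str, name: str) -> tuple[float, float]:
    """Return (area ratio, power ratio) of `reference` over `name`."""
    ref_area, ref_power, _ = CORES[reference]
    area, power, _ = CORES[name]
    return ref_area / area, ref_power / power


for core_name in CORES:
    area_ratio, power_ratio = ratios_vs("Power5", core_name)
    print(f"{core_name:15s} area ratio {area_ratio:7.1f}x, "
          f"power ratio {power_ratio:7.1f}x (Power5 / this core)")
```

By area alone, several hundred Xtensa DP cores fit in the footprint of one Power5 core, which is the headroom behind the slide's "100x more cores" remark.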

Chris Rowen Data

Intel

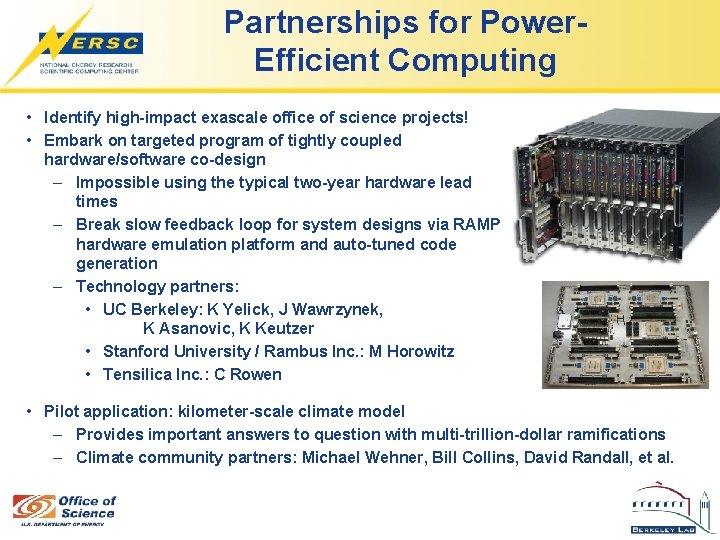

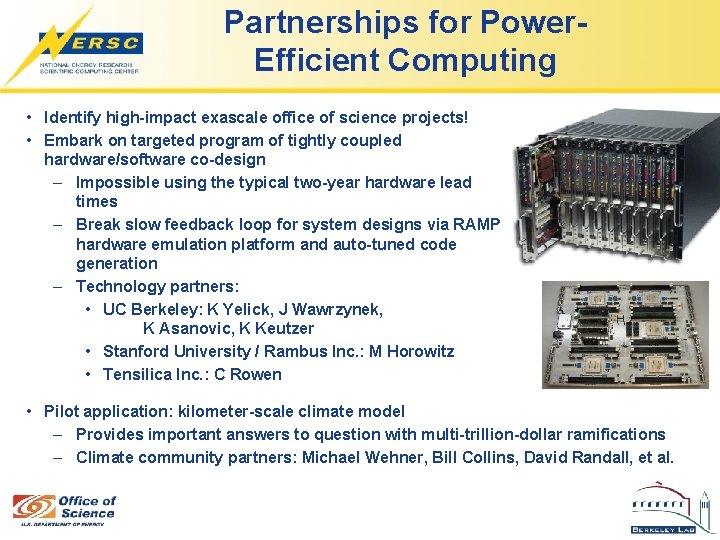

Partnerships for Power-Efficient Computing

- Identify high-impact exascale Office of Science projects!
- Embark on targeted program of tightly coupled hardware/software co-design
  - Impossible using the typical two-year hardware lead times
  - Break slow feedback loop for system designs via RAMP hardware emulation platform and auto-tuned code generation
  - Technology partners:
    - UC Berkeley: K Yelick, J Wawrzynek, K Asanovic, K Keutzer
    - Stanford University / Rambus Inc.: M Horowitz
    - Tensilica Inc.: C Rowen
- Pilot application: kilometer-scale climate model
  - Provides important answers to question with multi-trillion-dollar ramifications
  - Climate community partners: Michael Wehner, Bill Collins, David Randall, et al.

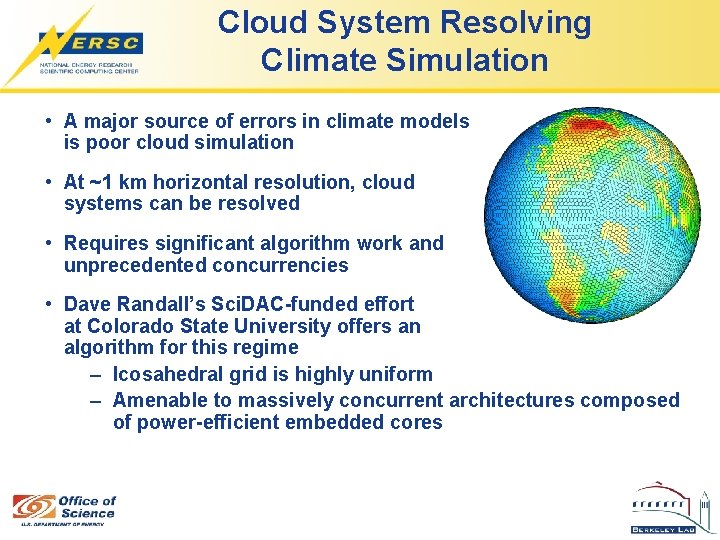

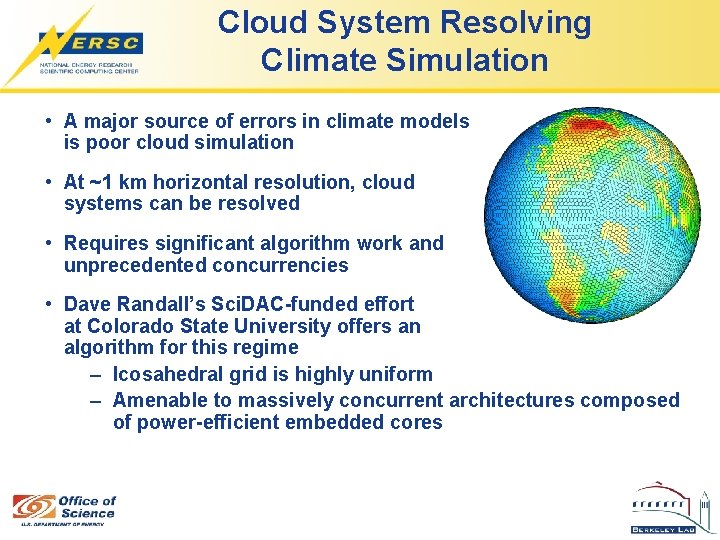

Cloud System Resolving Climate Simulation

- A major source of errors in climate models is poor cloud simulation
- At ~1 km horizontal resolution, cloud systems can be resolved
- Requires significant algorithm work and unprecedented concurrencies
- Dave Randall’s SciDAC-funded effort at Colorado State University offers an algorithm for this regime
  - Icosahedral grid is highly uniform
  - Amenable to massively concurrent architectures composed of power-efficient embedded cores

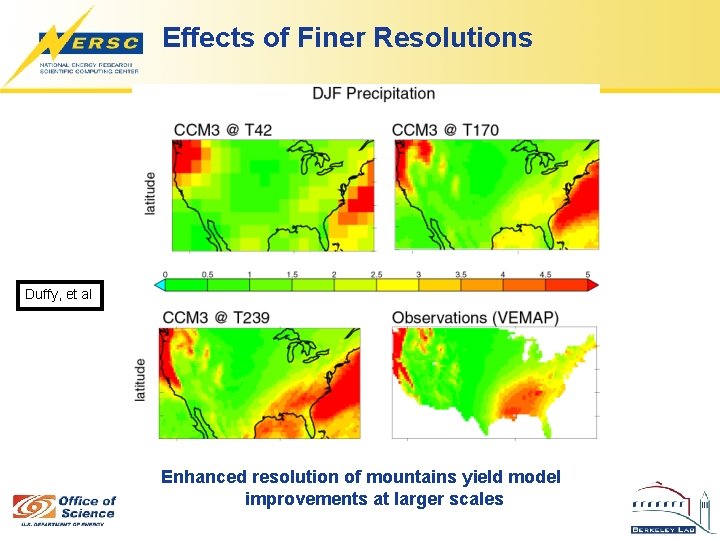

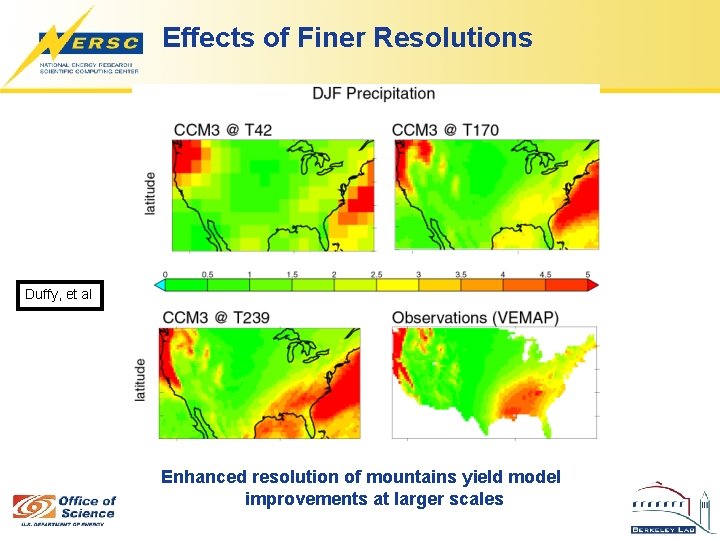

Effects of Finer Resolutions (Duffy, et al.)

Enhanced resolution of mountains yields model improvements at larger scales

Pushing Current Model to High Resolution

20 km resolution produces reasonable tropical cyclones

Kilometer-scale fidelity

- Current cloud parameterizations break down somewhere around 10 km
  - Deep convective processes responsible for moisture transport from near surface to higher altitudes are inadequately represented at current resolutions
  - Assumptions regarding the distribution of cloud types become invalid in the Arakawa-Schubert scheme
  - Uncertainty in short and long term forecasts can be traced to these inaccuracies
- However, at ~2 or 3 km, a radical reformulation of atmospheric general circulation models is possible:
  - Cloud system resolving models replace cumulus convection and large scale precipitation parameterizations
- Will this lead to better global cloud distributions?

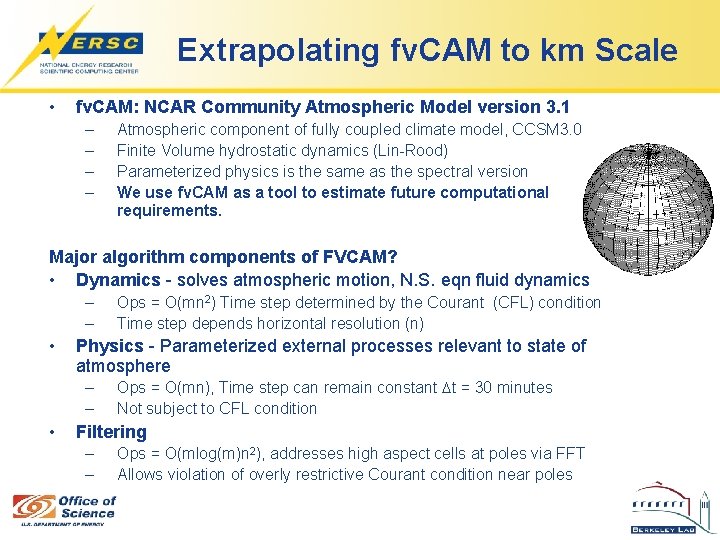

Extrapolating fvCAM to km Scale

- fvCAM: NCAR Community Atmospheric Model version 3.1
  - Atmospheric component of fully coupled climate model, CCSM 3.0
  - Finite Volume hydrostatic dynamics (Lin-Rood)
  - Parameterized physics is the same as the spectral version
- We use fvCAM as a tool to estimate future computational requirements.

Major algorithm components of fvCAM:

- Dynamics: solves atmospheric motion, N.S. eqn fluid dynamics
  - Ops = $O(mn^2)$; time step determined by the Courant (CFL) condition
  - Time step depends on horizontal resolution ($n$)
- Physics: parameterized external processes relevant to state of atmosphere
  - Ops = $O(mn)$; time step can remain constant, $\Delta t$ = 30 minutes
  - Not subject to CFL condition
- Filtering
  - Ops = $O(m \log(m)\, n^2)$; addresses high aspect cells at poles via FFT
  - Allows violation of overly restrictive Courant condition near poles
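A small sketch of how the dynamics and physics operation counts above diverge as the mesh is refined. It treats $m$ and $n$ as the slide's problem-size symbols and sets every constant factor to 1, which is an assumption, so only growth factors between two resolutions are meaningful. Filtering is left out because its constant factor is not given on the slide. The function names are illustrative.

```python
import math


def relative_ops(m: float, n: float) -> dict[str, float]:
    """Leading-order operation counts with all constants set to 1 (assumed)."""
    return {
        "dynamics": m * n**2,  # O(m n^2): CFL-limited time step shrinks with n
        "physics": m * n,      # O(m n): fixed 30-minute time step
    }


def growth(m0: float, n0: float, factor: float) -> dict[str, float]:
    """Cost growth per component when m and n are both scaled by `factor`."""
    coarse = relative_ops(m0, n0)
    fine = relative_ops(m0 * factor, n0 * factor)
    return {name: fine[name] / coarse[name] for name in coarse}


# Hypothetical example: refine both dimensions tenfold.
g = growth(100, 100, 10)
print(f"dynamics grows {g['dynamics']:.0f}x, physics grows {g['physics']:.0f}x")
print(f"dynamics/physics ratio grows {g['dynamics'] / g['physics']:.0f}x")
```

Under these assumptions a refinement by a factor $k$ in both dimensions raises the dynamics cost by $k^3$ but the physics cost only by $k^2$, so the dynamics share grows linearly with $k$.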

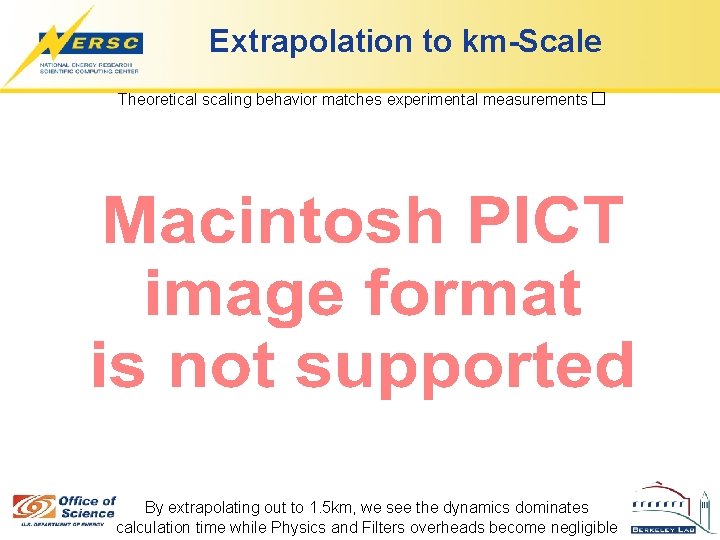

Extrapolation to km-Scale

Theoretical scaling behavior matches experimental measurements.

By extrapolating out to 1.5 km, we see the dynamics dominates calculation time while physics and filter overheads become negligible.

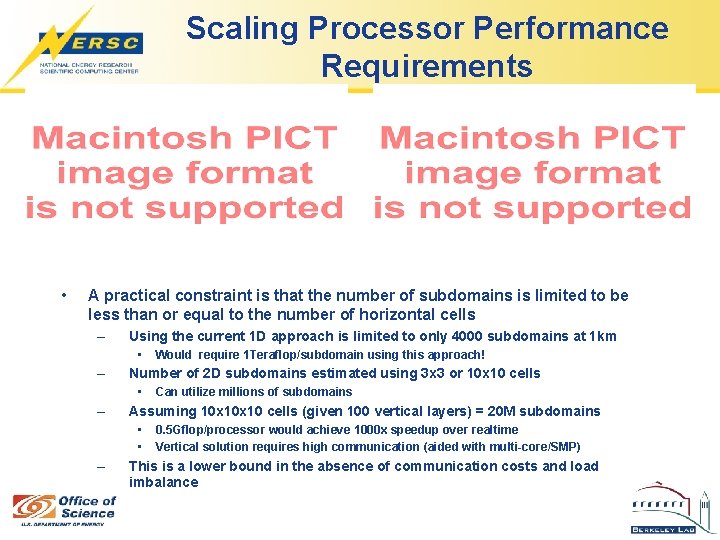

Scaling Processor Performance Requirements

- A practical constraint is that the number of subdomains is limited to be less than or equal to the number of horizontal cells
  - Using the current 1D approach is limited to only 4000 subdomains at 1 km
    - Would require 1 Teraflop/subdomain using this approach!
  - Number of 2D subdomains estimated using 3x3 or 10x10 cells
    - Can utilize millions of subdomains
  - Assuming 10x10 cells (given 100 vertical layers) = 20M subdomains
    - 0.5 Gflop/processor would achieve 1000x speedup over realtime
    - Vertical solution requires high communication (aided with multi-core/SMP)
    - This is a lower bound in the absence of communication costs and load imbalance

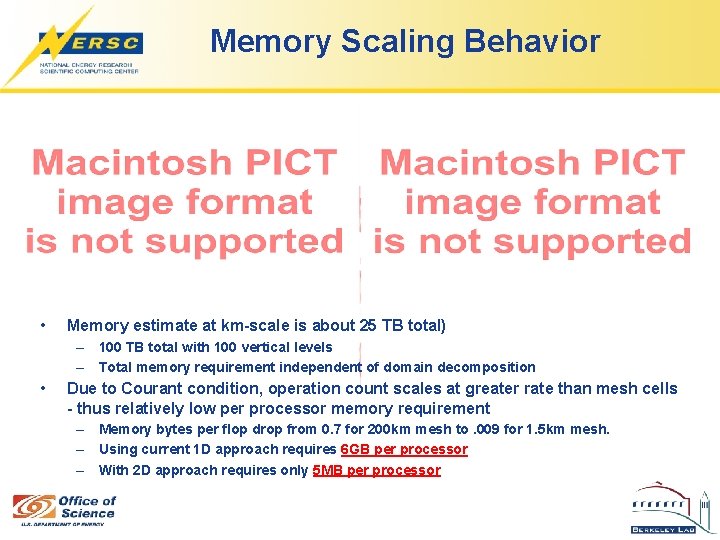

Memory Scaling Behavior

- Memory estimate at km-scale is about 25 TB total
  - 100 TB total with 100 vertical levels
  - Total memory requirement independent of domain decomposition
- Due to Courant condition, operation count scales at greater rate than mesh cells, thus relatively low per-processor memory requirement
  - Memory bytes per flop drop from 0.7 for 200 km mesh to 0.009 for 1.5 km mesh
  - Using current 1D approach requires 6 GB per processor
  - With 2D approach requires only 5 MB per processor
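The per-processor memory figures can be checked with one division each. The pairing used here is an inference, not something the slide states: the 25 TB estimate is divided over the 4000 subdomains of the 1D approach, and the 100 TB estimate over the 20M subdomains of the 2D approach (both subdomain counts come from the processor-scaling slide). Decimal units are also an assumption.

```python
TB = 1e12  # decimal terabyte (assumed; the slide does not say TB vs TiB)
GB = 1e9
MB = 1e6


def memory_per_processor(total_bytes: float, n_subdomains: int) -> float:
    """Evenly divide total memory over subdomains, one processor each."""
    return total_bytes / n_subdomains


one_d = memory_per_processor(25 * TB, 4_000)        # 1D decomposition
two_d = memory_per_processor(100 * TB, 20_000_000)  # 2D decomposition

print(f"1D: {one_d / GB:.2f} GB per processor")
print(f"2D: {two_d / MB:.2f} MB per processor")
```

Under this pairing the results land near the slide's "6 GB" and "5 MB" figures.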

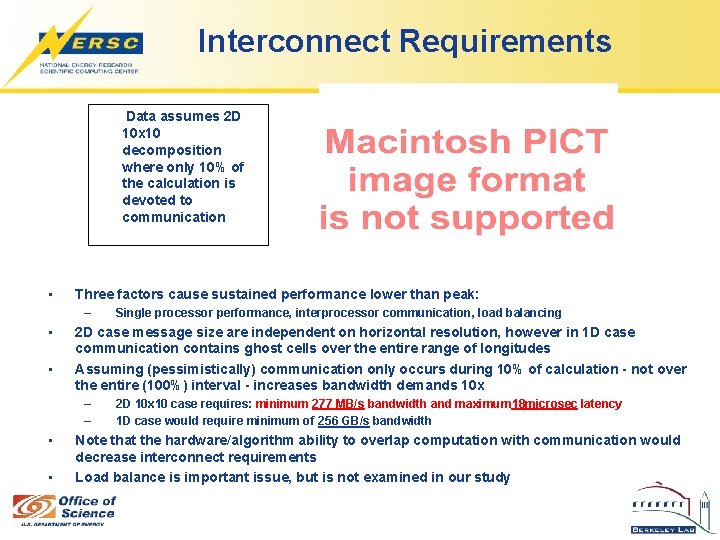

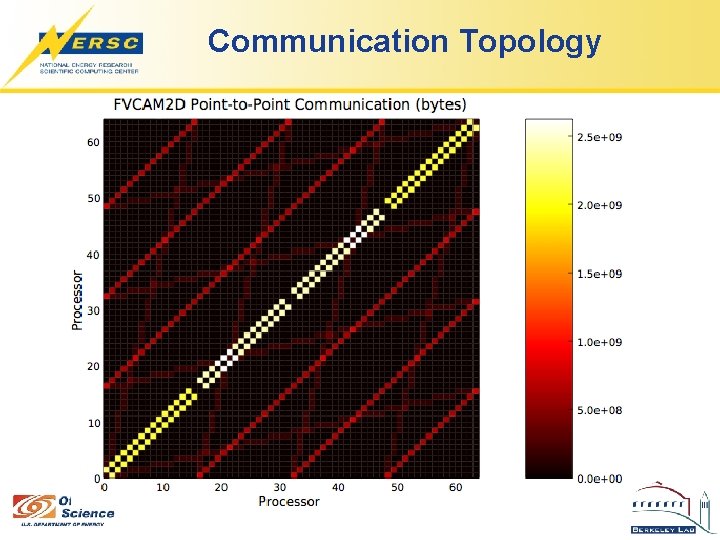

Interconnect Requirements

Data assumes 2D 10x10 decomposition where only 10% of the calculation is devoted to communication

- Three factors cause sustained performance lower than peak:
  - Single processor performance, interprocessor communication, load balancing
- In the 2D case message sizes are independent of horizontal resolution; however, in the 1D case communication contains ghost cells over the entire range of longitudes
- Assuming (pessimistically) that communication only occurs during 10% of the calculation, not over the entire (100%) interval, increases bandwidth demands 10x
  - 2D 10x10 case requires: minimum 277 MB/s bandwidth and maximum 18 microsec latency
  - 1D case would require minimum of 256 GB/s bandwidth
- Note that the hardware/algorithm ability to overlap computation with communication would decrease interconnect requirements
- Load balance is an important issue, but is not examined in our study
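The 10x factor follows from squeezing the same traffic into a tenth of the run time. A minimal sketch of that relationship, with a hypothetical helper name and a hypothetical average rate chosen so the burst figure matches the 277 MB/s quoted above:

```python
def burst_bandwidth(average_mb_s: float, comm_fraction: float) -> float:
    """Bandwidth needed if all traffic moves during `comm_fraction` of the time."""
    if not 0.0 < comm_fraction <= 1.0:
        raise ValueError("comm_fraction must be in (0, 1]")
    return average_mb_s / comm_fraction


# Hypothetical average rate of 27.7 MB/s spread over the whole interval.
average = 27.7
print(f"100% window: {burst_bandwidth(average, 1.0):.1f} MB/s")
print(f" 10% window: {burst_bandwidth(average, 0.1):.1f} MB/s")
```

Overlapping computation with communication widens the window toward 100%, which is why it lowers the interconnect requirement.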

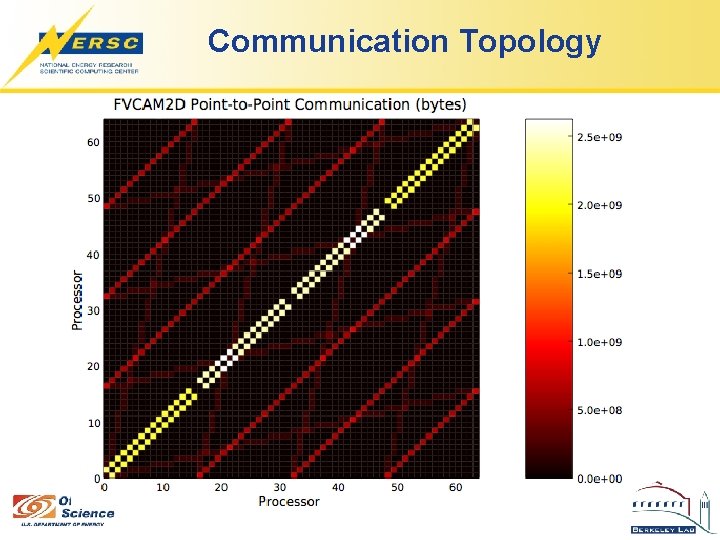

Communication Topology

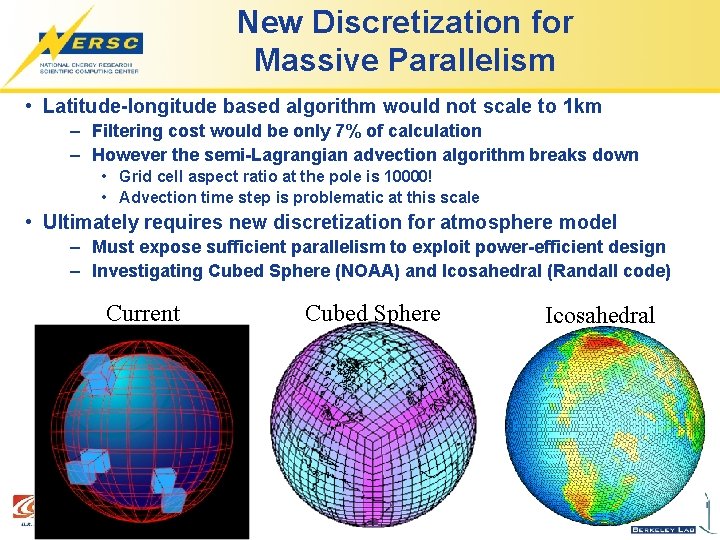

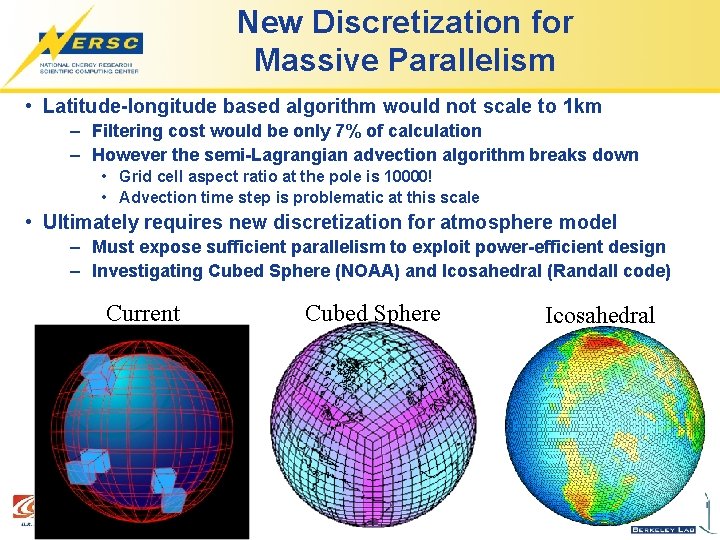

New Discretization for Massive Parallelism

- Latitude-longitude based algorithm would not scale to 1 km
  - Filtering cost would be only 7% of calculation
  - However the semi-Lagrangian advection algorithm breaks down
    - Grid cell aspect ratio at the pole is 10000!
    - Advection time step is problematic at this scale
- Ultimately requires new discretization for atmosphere model
  - Must expose sufficient parallelism to exploit power-efficient design
  - Investigating Cubed Sphere (NOAA) and Icosahedral (Randall code)

Figure labels: Current, Cubed Sphere, Icosahedral

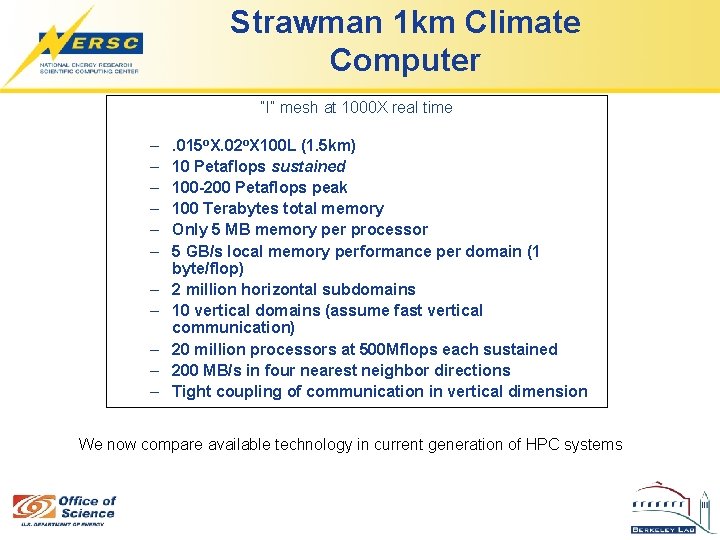

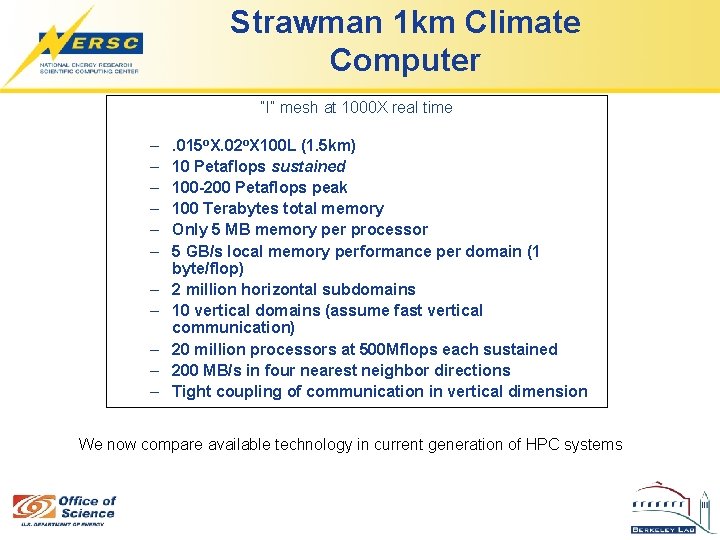

Strawman 1 km Climate Computer

“I” mesh at 1000X real time

- 0.015° × 0.02° × 100L (1.5 km)
- 10 Petaflops sustained
- 100-200 Petaflops peak
- 100 Terabytes total memory
- Only 5 MB memory per processor
- 5 GB/s local memory performance per domain (1 byte/flop)
- 2 million horizontal subdomains
- 10 vertical domains (assume fast vertical communication)
- 20 million processors at 500 Mflops each sustained
- 200 MB/s in four nearest neighbor directions
- Tight coupling of communication in vertical dimension

We now compare available technology in current generation of HPC systems
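The strawman numbers are mutually consistent, which a few multiplications make visible. The variable names below are illustrative. The last calculation rests on one assumption: that "per domain" means one horizontal subdomain served by its 10 vertical processors.

```python
horizontal_subdomains = 2_000_000
vertical_domains = 10
sustained_per_processor = 500e6  # flop/s, as quoted

processors = horizontal_subdomains * vertical_domains
sustained_total = processors * sustained_per_processor  # flop/s

# Sustained fraction of peak implied by the 100-200 Pflop/s peak range.
efficiency_low = sustained_total / 200e15
efficiency_high = sustained_total / 100e15

# Assumption: 1 byte/flop across the 10 processors of one horizontal subdomain.
bytes_per_flop = 1.0
memory_bw_per_domain = vertical_domains * sustained_per_processor * bytes_per_flop

print(f"processors: {processors:,}")
print(f"sustained: {sustained_total / 1e15:.0f} Pflop/s")
print(f"sustained/peak: {efficiency_low:.0%} to {efficiency_high:.0%}")
print(f"memory bandwidth per domain: {memory_bw_per_domain / 1e9:.0f} GB/s")
```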

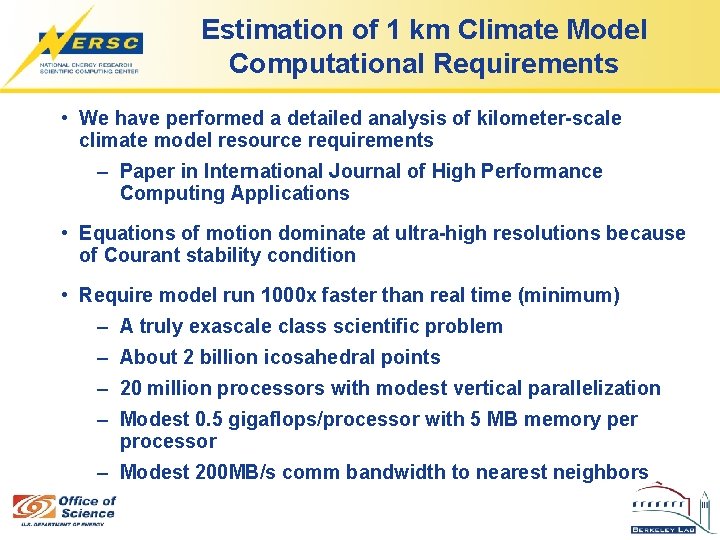

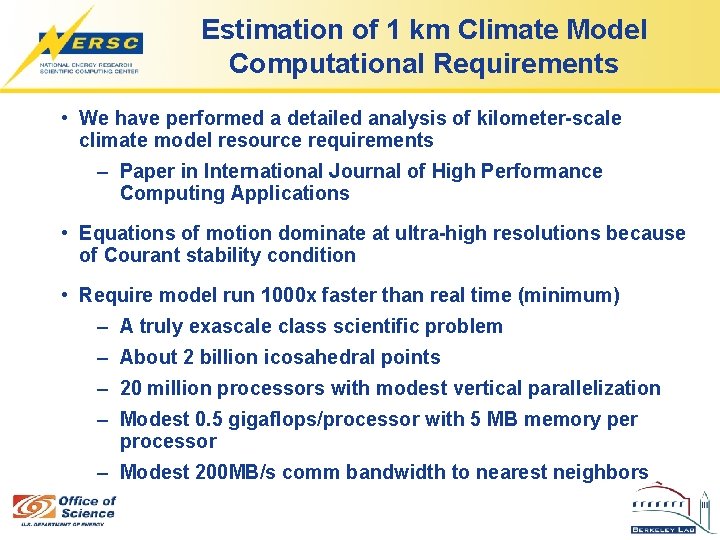

Estimation of 1 km Climate Model Computational Requirements

- We have performed a detailed analysis of kilometer-scale climate model resource requirements
  - Paper in International Journal of High Performance Computing Applications
- Equations of motion dominate at ultra-high resolutions because of Courant stability condition
- Require model run 1000x faster than real time (minimum)
  - A truly exascale class scientific problem
  - About 2 billion icosahedral points
  - 20 million processors with modest vertical parallelization
  - Modest 0.5 gigaflops/processor with 5 MB memory per processor
  - Modest 200 MB/s comm bandwidth to nearest neighbors

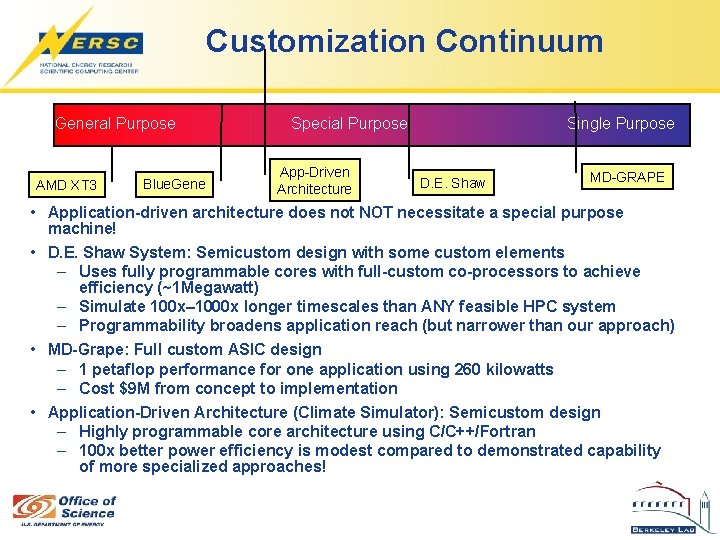

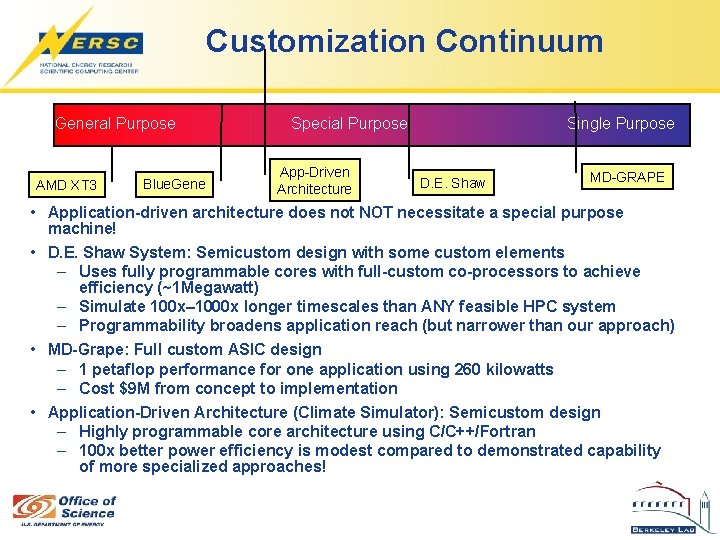

Customization Continuum

Figure labels: a continuum from General Purpose through Special Purpose to Single Purpose, with AMD XT3, BlueGene, App-Driven Architecture, D.E. Shaw, and MD-GRAPE placed along it

- Application-driven architecture does NOT necessitate a special purpose machine!
- D.E. Shaw system: semicustom design with some custom elements
  - Uses fully programmable cores with full-custom co-processors to achieve efficiency (~1 Megawatt)
  - Simulate 100x-1000x longer timescales than ANY feasible HPC system
  - Programmability broadens application reach (but narrower than our approach)
- MD-GRAPE: full custom ASIC design
  - 1 petaflop performance for one application using 260 kilowatts
  - Cost $9M from concept to implementation
- Application-Driven Architecture (Climate Simulator): semicustom design
  - Highly programmable core architecture using C/C++/Fortran
  - 100x better power efficiency is modest compared to demonstrated capability of more specialized approaches!

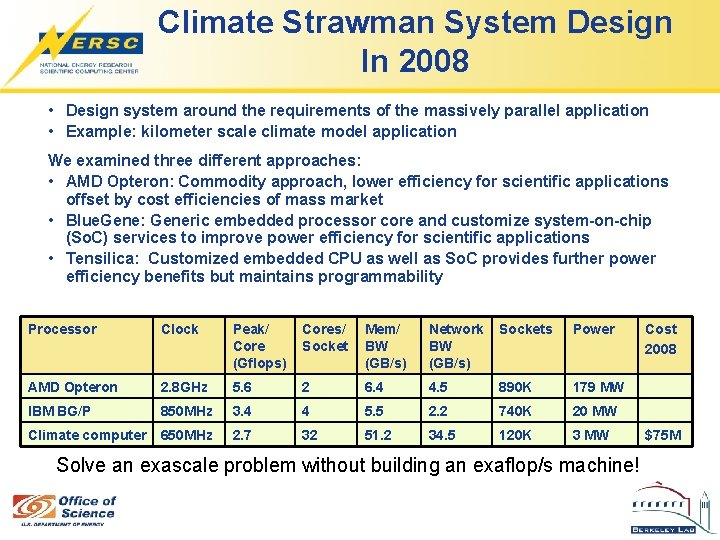

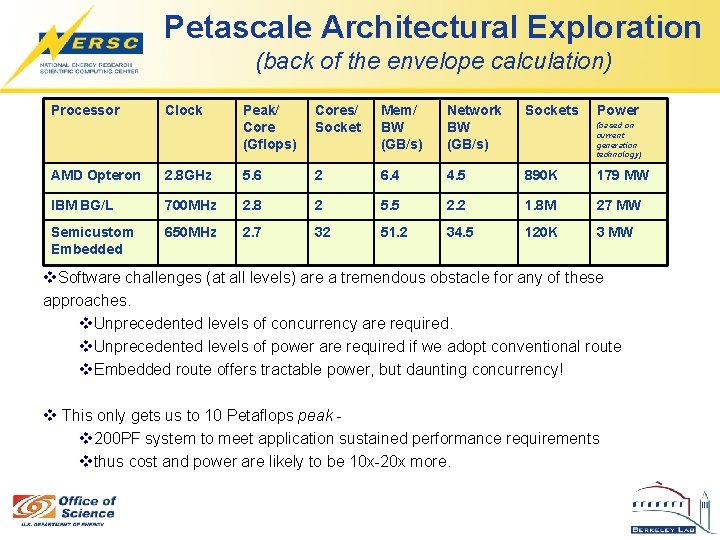

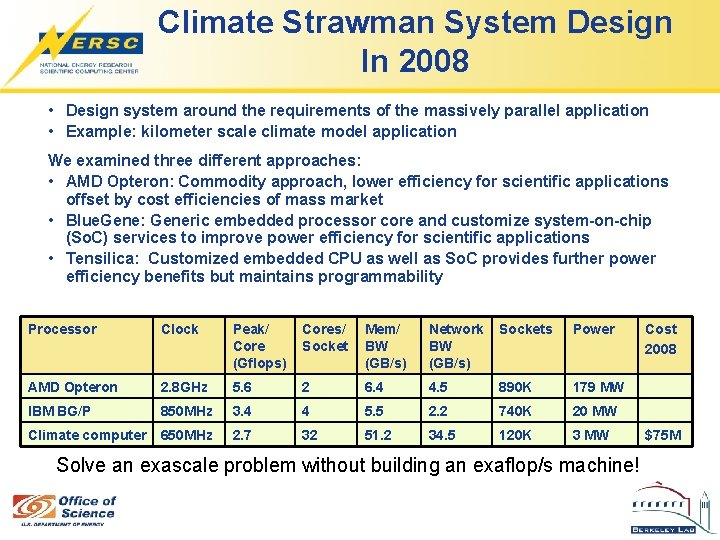

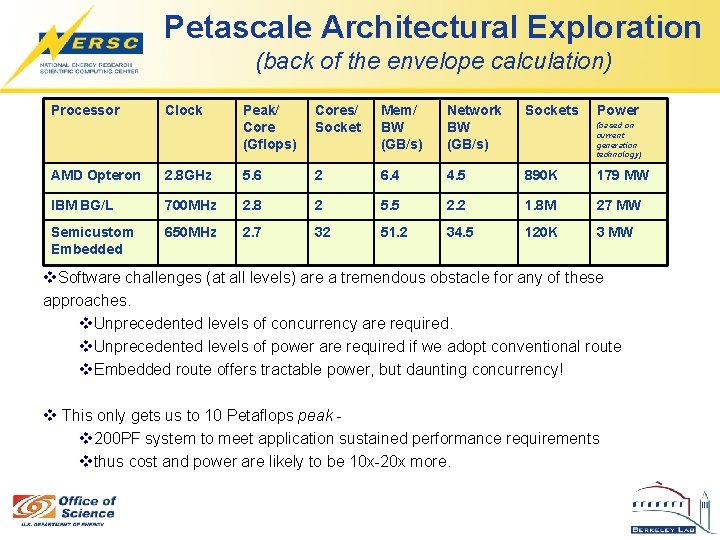

Climate Strawman System Design In 2008

- Design system around the requirements of the massively parallel application
- Example: kilometer scale climate model application

We examined three different approaches:

- AMD Opteron: commodity approach, lower efficiency for scientific applications offset by cost efficiencies of mass market
- BlueGene: generic embedded processor core and customized system-on-chip (SoC) services to improve power efficiency for scientific applications
- Tensilica: customized embedded CPU as well as SoC provides further power efficiency benefits but maintains programmability

| Processor | Clock | Peak/Core (Gflops) | Cores/Socket | Mem BW (GB/s) | Network BW (GB/s) | Sockets | Power |
|---|---|---|---|---|---|---|---|
| AMD Opteron | 2.8 GHz | 5.6 | 2 | 6.4 | 4.5 | 890K | 179 MW |
| IBM BG/P | 850 MHz | 3.4 | 4 | 5.5 | 2.2 | 740K | 20 MW |
| Climate computer | 650 MHz | 2.7 | 32 | 51.2 | 34.5 | 120K | 3 MW |

Cost (2008): $75M

Solve an exascale problem without building an exaflop/s machine!
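The three rows of the comparison can be cross-checked by multiplying peak per core, cores per socket, and socket count. The sketch below does that and also derives watts per socket and peak Gflop/s per watt. These derived columns are not on the slide, and the `summarize` helper is an illustrative name.

```python
SYSTEMS = {
    # name: (peak Gflop/s per core, cores per socket, sockets, power in MW)
    "AMD Opteron": (5.6, 2, 890_000, 179.0),
    "IBM BG/P": (3.4, 4, 740_000, 20.0),
    "Climate computer": (2.7, 32, 120_000, 3.0),
}


def summarize(name: str) -> tuple[float, float, float]:
    """Return (peak Pflop/s, watts per socket, peak Gflop/s per watt)."""
    peak_core, cores, sockets, power_mw = SYSTEMS[name]
    peak_gflops = peak_core * cores * sockets
    watts = power_mw * 1e6
    return peak_gflops / 1e6, watts / sockets, peak_gflops / watts


for system in SYSTEMS:
    pflops, w_per_socket, gflops_per_watt = summarize(system)
    print(f"{system:17s} {pflops:5.2f} Pflop/s peak, "
          f"{w_per_socket:6.1f} W/socket, {gflops_per_watt:5.2f} Gflop/s per W")
```

All three configurations come out at roughly 10 Pflop/s peak, so the power column compares like with like.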

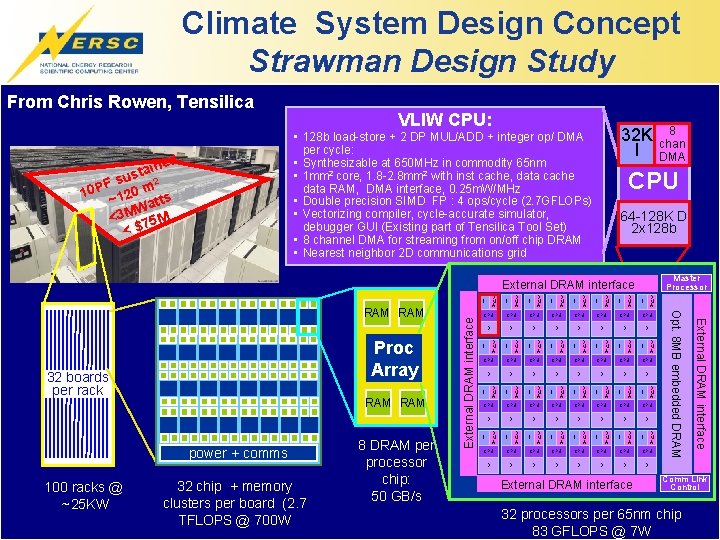

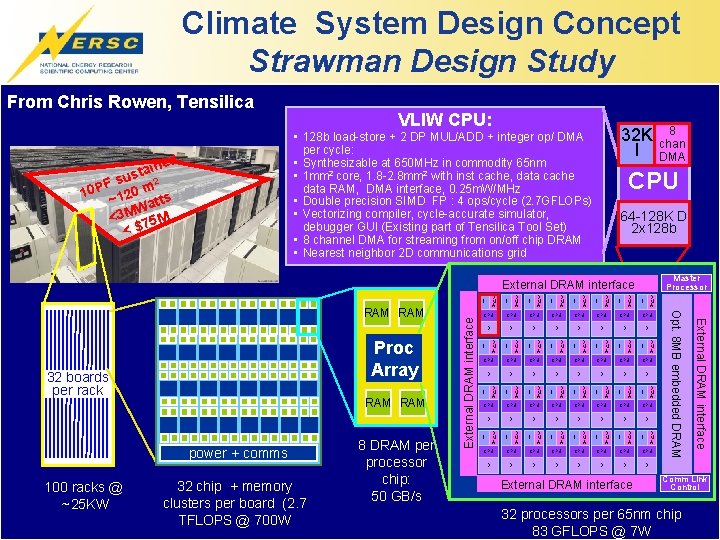

Climate System Design Concept: Strawman Design Study

From Chris Rowen, Tensilica

VLIW CPU:

- 128b load-store + 2 DP MUL/ADD + integer op/DMA per cycle
- Synthesizable at 650 MHz in commodity 65 nm
- 1 mm² core, 1.8-2.8 mm² with inst cache, data cache, data RAM, DMA interface; 0.25 mW/MHz
- Double precision SIMD FP: 4 ops/cycle (2.7 GFLOPs)
- Vectorizing compiler, cycle-accurate simulator, debugger GUI (existing part of Tensilica tool set)
- 8 channel DMA for streaming from on/off chip DRAM
- Nearest neighbor 2D communications grid

Figure labels, chip block diagram: CPU with 32K I, 64-128K D, 2x128b, 8 chan DMA; Proc Array; Master Processor; Comm Link Control; Opt. 8 MB embedded DRAM; RAM; External DRAM interface

Figure labels, system hierarchy:

- 32 processors per 65 nm chip: 83 GFLOPS @ 7 W
- 8 DRAM per processor chip: 50 GB/s
- 32 chip + memory clusters per board: 2.7 TFLOPS @ 700 W
- 32 boards per rack (power + comms)
- 100 racks @ ~25 kW
- 10 PF sustained, ~120 m², <3 MWatts, <$75M
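The hierarchy figures in this design study can be rolled up from the per-core numbers. The sketch below uses only values quoted on the slide. The constant names are illustrative, and the roll-up gives peak rates from clock times ops per cycle, so it slightly undershoots the rounded 2.7 GFLOPs per-core figure.

```python
CLOCK_MHZ = 650
FLOPS_PER_CYCLE = 4
CORES_PER_CHIP = 32
CHIPS_PER_BOARD = 32
BOARDS_PER_RACK = 32
RACKS = 100
CORE_MW_PER_MHZ = 0.25  # core power in milliwatts per MHz
BOARD_WATTS = 700
RACK_KW = 25

core_gflops = CLOCK_MHZ * FLOPS_PER_CYCLE / 1000
chip_gflops = core_gflops * CORES_PER_CHIP
board_tflops = chip_gflops * CHIPS_PER_BOARD / 1000
system_pflops = board_tflops * BOARDS_PER_RACK * RACKS / 1000

core_watts = CORE_MW_PER_MHZ * CLOCK_MHZ / 1000
cores_only_chip_watts = core_watts * CORES_PER_CHIP
system_mw_from_boards = BOARD_WATTS * BOARDS_PER_RACK * RACKS / 1e6
system_mw_from_racks = RACK_KW * RACKS / 1000

print(f"core {core_gflops:.1f} GFLOPS, chip {chip_gflops:.1f} GFLOPS, "
      f"board {board_tflops:.2f} TFLOPS, system {system_pflops:.2f} PFLOPS")
print(f"cores alone draw {cores_only_chip_watts:.1f} W per chip")
print(f"system power: {system_mw_from_boards:.2f} MW (boards), "
      f"{system_mw_from_racks:.2f} MW (racks)")
```

The chip and board results match the labeled 83 GFLOPS and 2.7 TFLOPS, and both power roll-ups stay under the 3 MW label.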

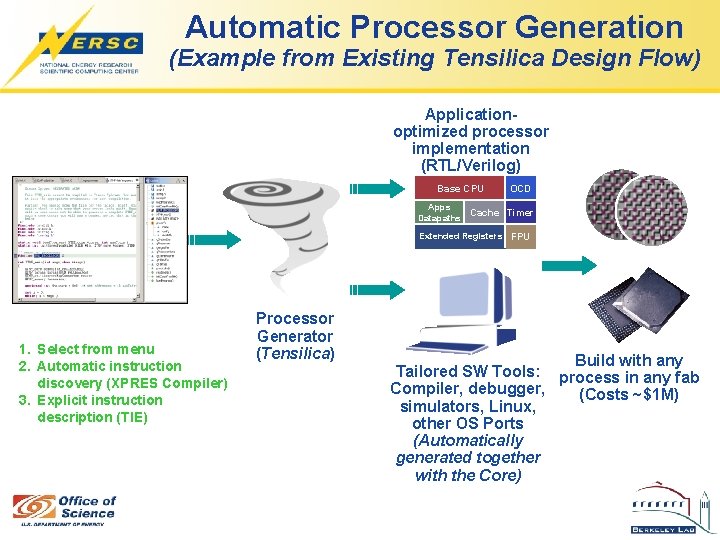

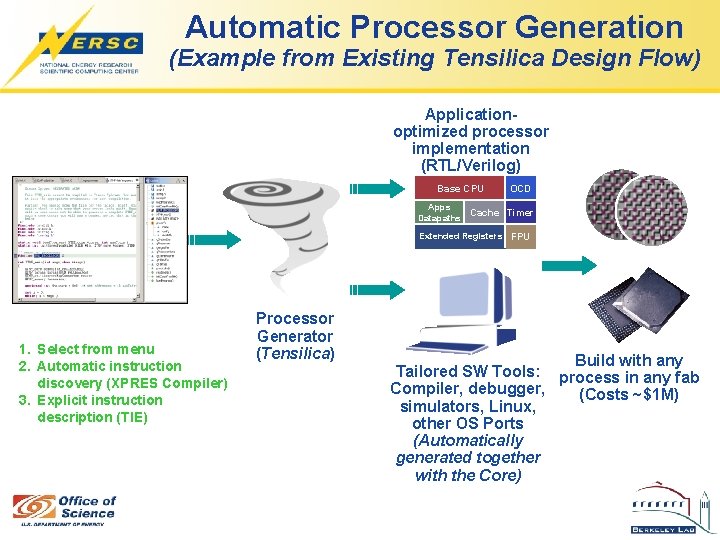

Automatic Processor Generation (Example from Existing Tensilica Design Flow)

Processor configuration:

1. Select from menu
2. Automatic instruction discovery (XPRES Compiler)
3. Explicit instruction description (TIE)

The Processor Generator (Tensilica) produces:

- Application-optimized processor implementation (RTL/Verilog): Base CPU, OCD, Apps Datapaths, Cache, Timer, Extended Registers, FPU
  - Build with any process in any fab (costs ~$1M)
- Tailored SW tools: compiler, debugger, simulators, Linux, other OS ports (automatically generated together with the core)

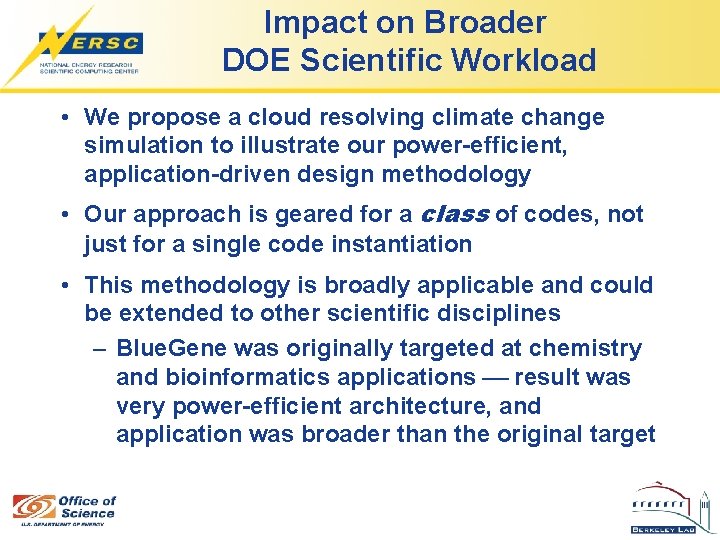

Impact on Broader DOE Scientific Workload

- We propose a cloud resolving climate change simulation to illustrate our power-efficient, application-driven design methodology
- Our approach is geared for a class of codes, not just for a single code instantiation
- This methodology is broadly applicable and could be extended to other scientific disciplines
  - BlueGene was originally targeted at chemistry and bioinformatics applications; the result was a very power-efficient architecture, and its application was broader than the original target

More Info

- NERSC Science Driven System Architecture Group
  - http://www.nersc.gov/projects/SDSA
- Power Efficient Semi-custom Computing
  - http://vis.lbl.gov/~jshalf/SIAM_CSE07
- The “View from Berkeley”
  - http://view.eecs.berkeley.edu
- Memory Bandwidth
  - http://www.nersc.gov/projects/SDSA/reports/uploaded/SOS11_mem_Shalf.pdf

Extra

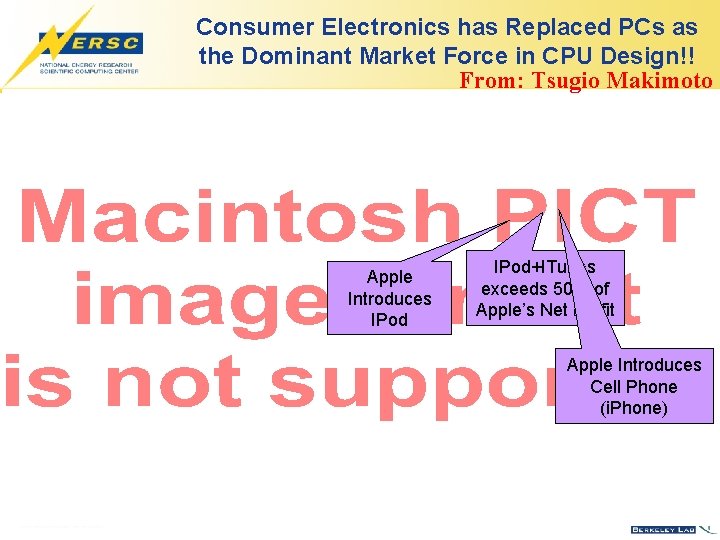

Consumer Electronics Convergence From: Tsugio Makimoto

Consumer Electronics has Replaced PCs as the Dominant Market Force in CPU Design!!

From: Tsugio Makimoto

Timeline labels: Apple introduces iPod; iPod+iTunes exceeds 50% of Apple’s net profit; Apple introduces cell phone (iPhone)

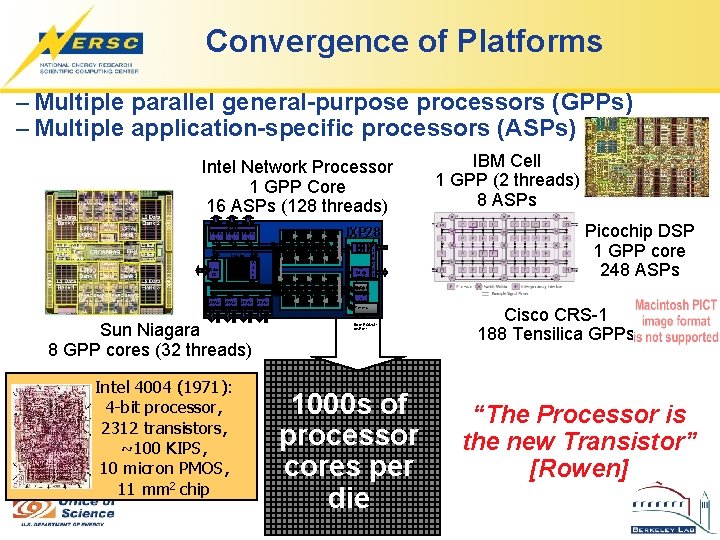

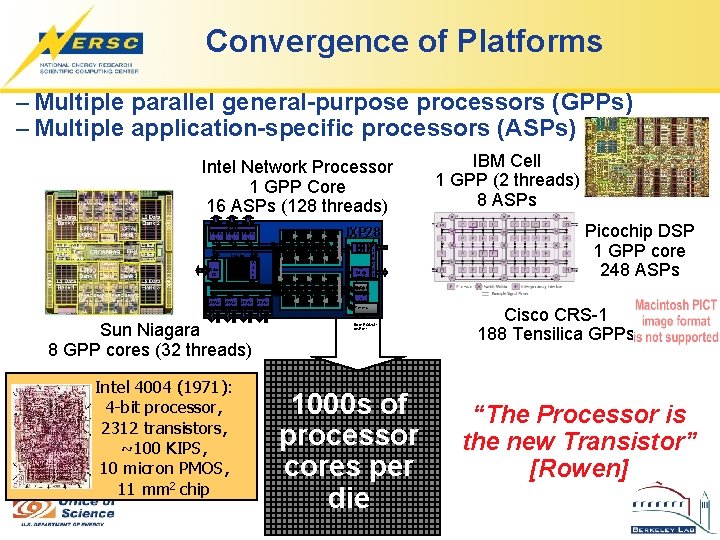

Convergence of Platforms

- Multiple parallel general-purpose processors (GPPs)
- Multiple application-specific processors (ASPs)

Examples shown:

- Intel Network Processor (IXP2800, Intel XScale core): 1 GPP core, 16 ASPs (128 threads)
- Sun Niagara: 8 GPP cores (32 threads)
- IBM Cell: 1 GPP (2 threads), 8 ASPs
- Picochip DSP: 1 GPP core, 248 ASPs
- Cisco CRS-1: 188 Tensilica GPPs
- For comparison, Intel 4004 (1971): 4-bit processor, 2312 transistors, ~100 KIPS, 10 micron PMOS, 11 mm² chip

1000s of processor cores per die

“The Processor is the new Transistor” [Rowen]

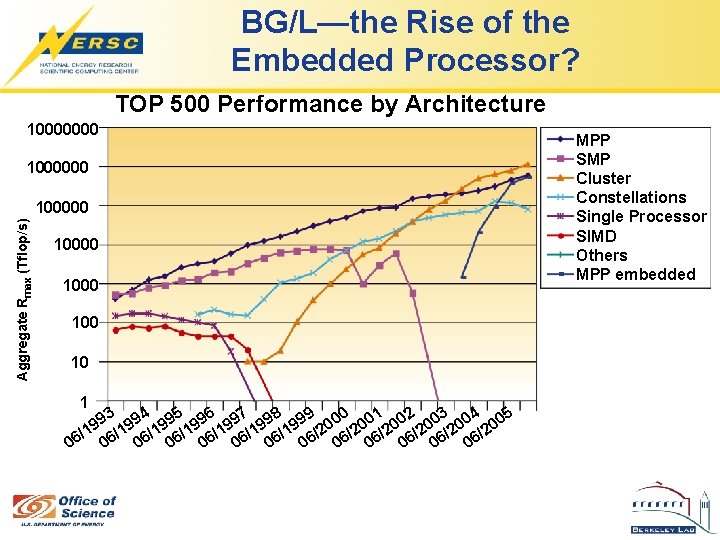

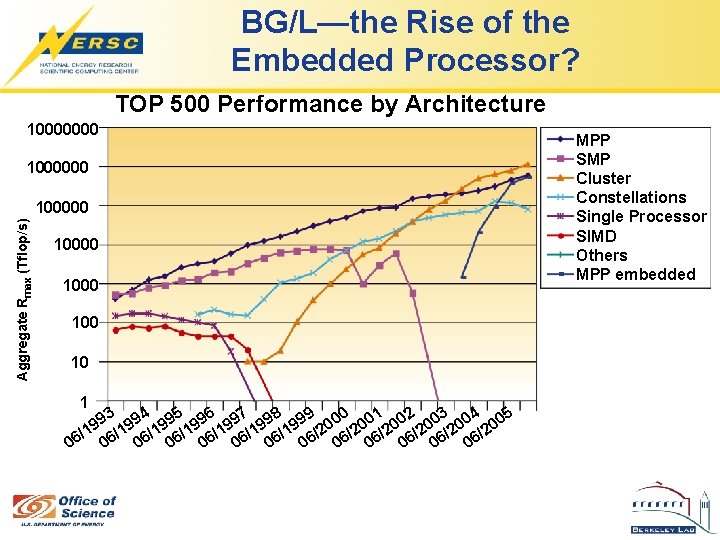

BG/L: the Rise of the Embedded Processor?

[Chart: TOP500 Performance by Architecture. Y-axis: Aggregate Rmax (Tflop/s), log scale. X-axis: TOP500 lists, 1993 to 2005. Series: MPP, SMP, Cluster, Constellations, Single Processor, SIMD, Others, MPP embedded.]

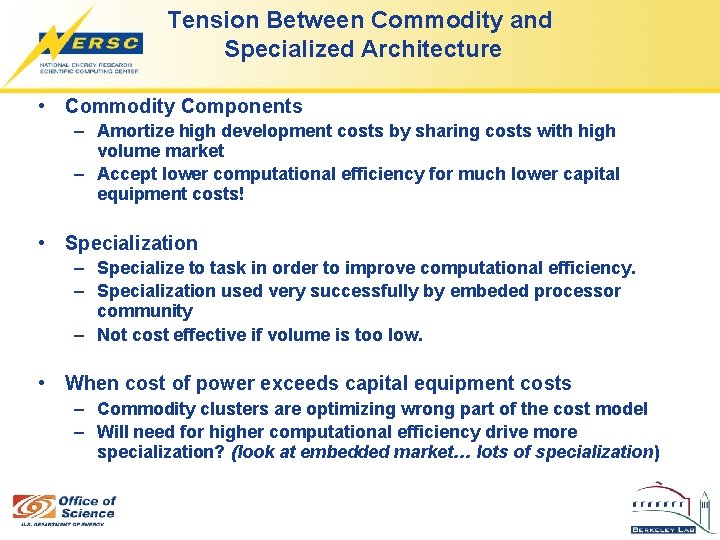

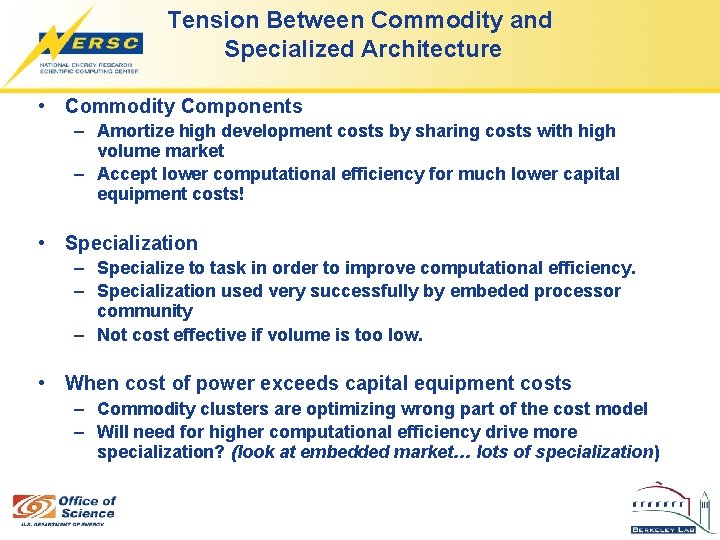

Tension Between Commodity and Specialized Architecture

- Commodity components
  - Amortize high development costs by sharing costs with high volume market
  - Accept lower computational efficiency for much lower capital equipment costs!
- Specialization
  - Specialize to task in order to improve computational efficiency
  - Specialization used very successfully by embedded processor community
  - Not cost effective if volume is too low
- When cost of power exceeds capital equipment costs
  - Commodity clusters are optimizing wrong part of the cost model
  - Will need for higher computational efficiency drive more specialization? (look at embedded market: lots of specialization)

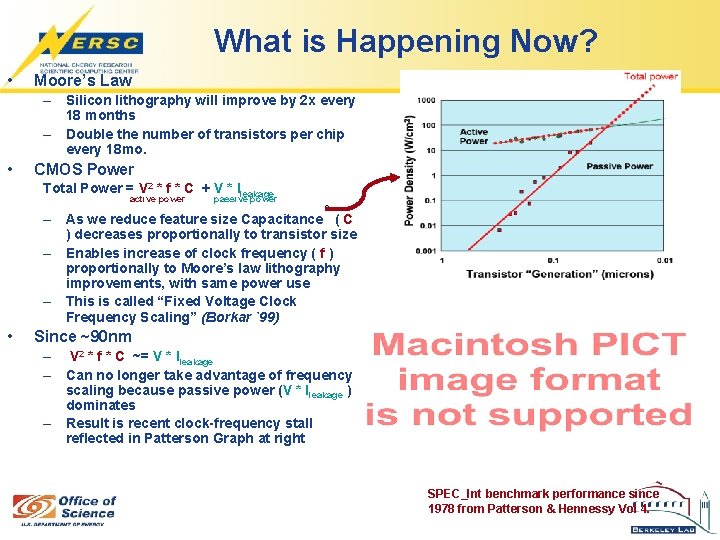

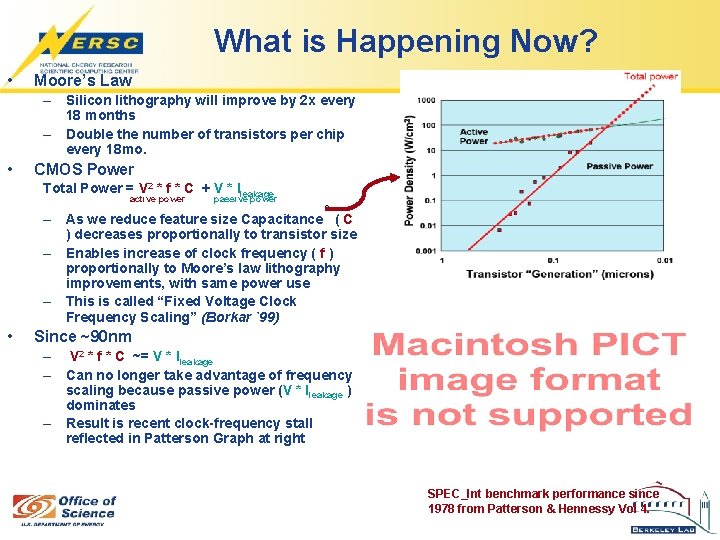

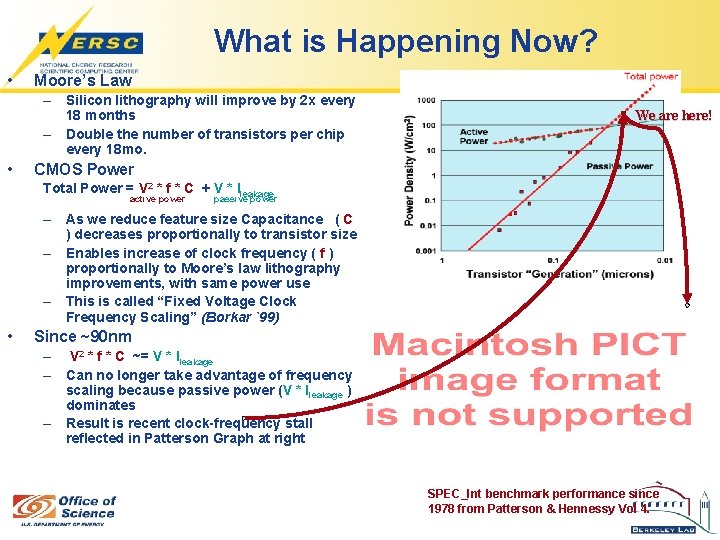

What is Happening Now?

- Moore’s Law
  - Silicon lithography will improve by 2x every 18 months
  - Double the number of transistors per chip every 18 mo.
- CMOS Power
  - Total Power = $V^2 f C$ (active power) + $V I_{\text{leakage}}$ (passive power)
  - As we reduce feature size, capacitance ($C$) decreases proportionally to transistor size
  - Enables increase of clock frequency ($f$) proportionally to Moore’s law lithography improvements, with same power use
  - This is called “Fixed Voltage Clock Frequency Scaling” (Borkar ’99)
- Since ~90 nm
  - $V^2 f C \approx V I_{\text{leakage}}$
  - Can no longer take advantage of frequency scaling because passive power ($V I_{\text{leakage}}$) dominates
  - Result is recent clock-frequency stall reflected in Patterson graph at right

SPEC_Int benchmark performance since 1978 from Patterson & Hennessy Vol 4.
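The power relation on this slide can be evaluated directly. The sketch below implements the two terms and shows the fixed-voltage scaling argument: halving capacitance while doubling frequency leaves active power unchanged. All numeric values are hypothetical, chosen only to make the arithmetic easy to follow, and `cmos_power` is an illustrative name.

```python
def cmos_power(v: float, f: float, c: float, i_leak: float) -> tuple[float, float]:
    """Return (active, passive) power in watts.

    active  = V^2 * f * C      (switching power)
    passive = V * I_leakage    (leakage power)
    """
    return v**2 * f * c, v * i_leak


# Hypothetical operating point: 1 V, 2 GHz, 1 nF switched capacitance, 2 A leakage.
active_before, passive_before = cmos_power(1.0, 2e9, 1e-9, 2.0)

# Fixed-voltage scaling: capacitance halves, frequency doubles, voltage unchanged.
active_after, passive_after = cmos_power(1.0, 4e9, 0.5e-9, 2.0)

print(f"before: active {active_before:.1f} W, passive {passive_before:.1f} W")
print(f"after:  active {active_after:.1f} W, passive {passive_after:.1f} W")
```

In this toy operating point the passive term already equals the active term, which is the regime the slide describes for ~90 nm and below.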

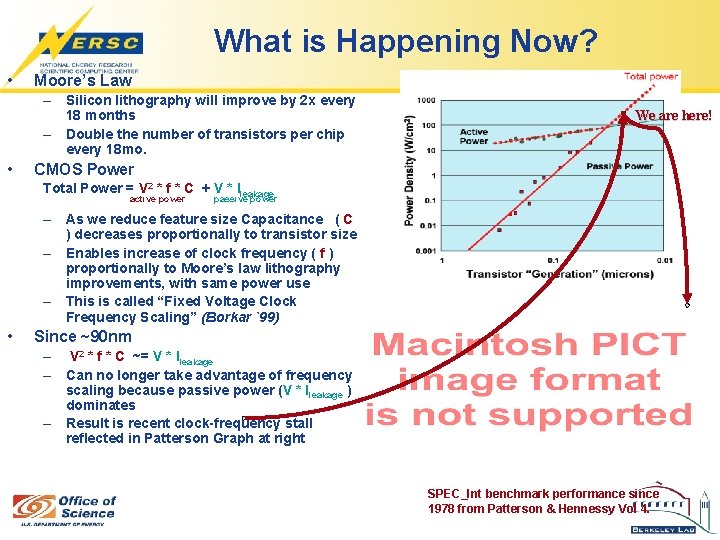


Some Final Comments on Convergence (who is in the driver’s seat of the multicore revolution?)

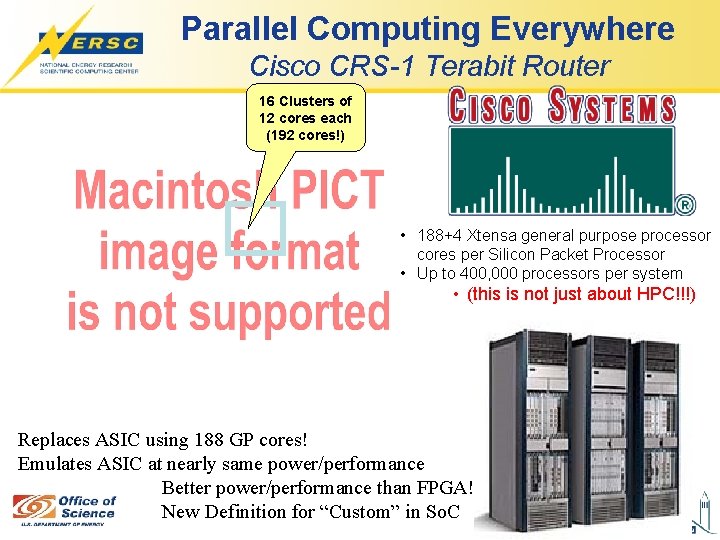

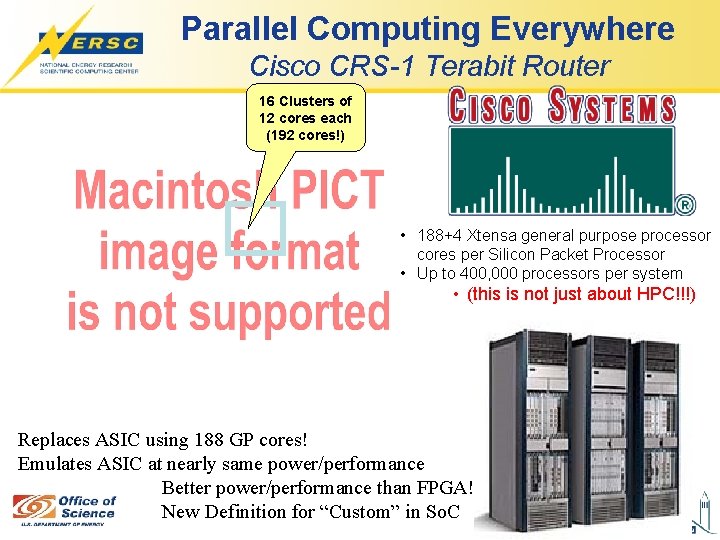

Parallel Computing Everywhere

Cisco CRS-1 Terabit Router

- 188+4 Xtensa general purpose processor cores per Silicon Packet Processor
- Up to 400,000 processors per system
- (this is not just about HPC!!!)

Figure labels: 16 clusters of 12 cores each (192 cores!); 16 PPE

Replaces ASIC using 188 GP cores! Emulates ASIC at nearly same power/performance. Better power/performance than FPGA! New definition for “custom” in SoC.

Conclusions

- Enormous transition is underway that affects all sectors of computing industry
  - Motivated by power limits
  - Proceeding before emergence of the parallel programming model
- Will lead to new era of architectural exploration given uncertainties about programming and execution model (and we MUST explore!)
- Need to get involved now
  - 3-5 years for new hardware designs to emerge
  - 3-5 years lead for new software ideas necessary to support new hardware to emerge
  - 5+ MORE years to general adoption of new software

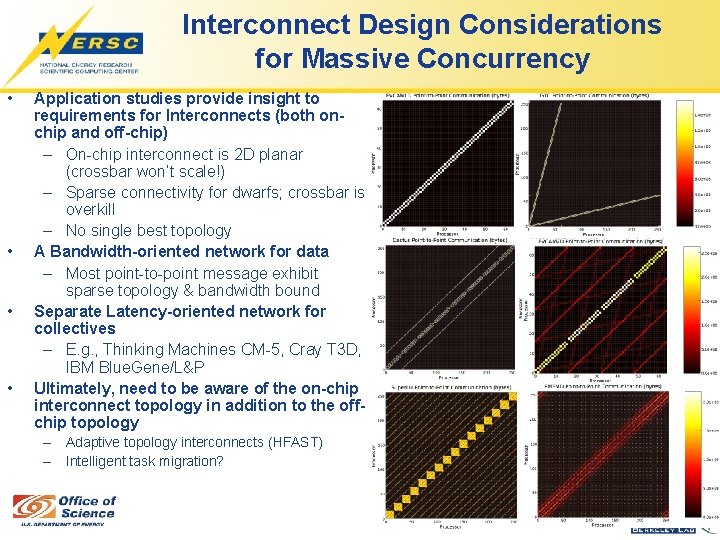

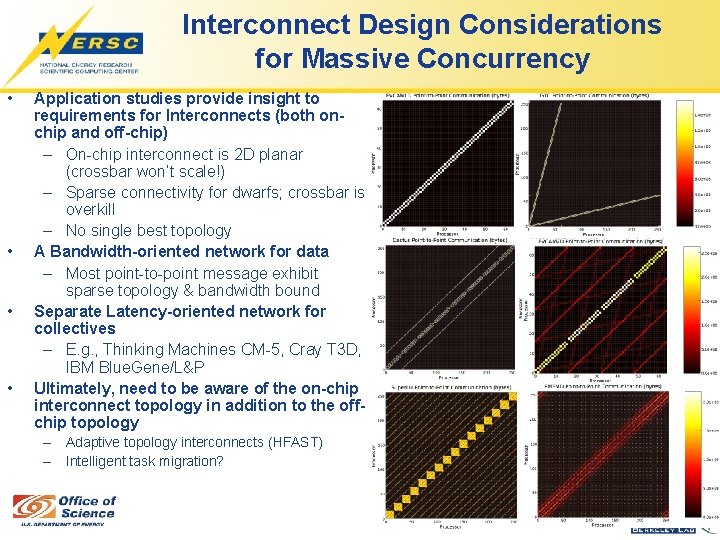

Interconnect Design Considerations for Massive Concurrency

- Application studies provide insight to requirements for interconnects (both on-chip and off-chip)
  - On-chip interconnect is 2D planar (crossbar won’t scale!)
  - Sparse connectivity for dwarfs; crossbar is overkill
  - No single best topology
- A bandwidth-oriented network for data
  - Most point-to-point messages exhibit sparse topology and are bandwidth bound
- Separate latency-oriented network for collectives
  - E.g., Thinking Machines CM-5, Cray T3D, IBM BlueGene/L&P
- Ultimately, need to be aware of the on-chip interconnect topology in addition to the off-chip topology
  - Adaptive topology interconnects (HFAST)
  - Intelligent task migration?

Reliable System Design

- The future is unreliable
  - As silicon lithography pushes towards the atomic scale, the opportunity for spurious hardware errors will increase dramatically
- Reliability of a system is not necessarily proportional to the number of cores in the system
  - Reliability is proportional to # of sockets in system (not #cores/chip)
  - At LLNL, BG/L has longer MTBF than Purple despite having 12x more processor cores
  - Integrating more peripheral devices onto a single chip (e.g., caches, memory controller, interconnect) can further reduce chip count and increase reliability (System-on-Chip/SoC)
- A key limiting factor is software infrastructure
  - Software was designed assuming perfect data integrity (but that is not a multicore issue)
  - Software written with implicit assumption of smaller concurrency (1M cores not part of original design assumptions)
  - Requires fundamental re-thinking of OS and math library design assumptions

Operating Systems for CMP

- Old OS assumptions are bogus for hundreds of cores!
  - Assumes limited number of CPUs that must be shared
    - Old OS: time-multiplexing (context switching and cache pollution!)
    - New OS: spatial partitioning
  - Greedy allocation of finite I/O device interfaces (e.g., 100 cores go after the network interface simultaneously)
    - Old OS: first process to acquire lock gets device (resource/lock contention! nondeterministic delay!)
    - New OS: QoS management for symmetric device access
  - Background task handling via threads and signals
    - Old OS: interrupts and threads (time-multiplexing) (inefficient!)
    - New OS: side-cores dedicated to DMA and async I/O
  - Fault isolation
    - Old OS: CPU failure --> kernel panic (will happen with increasing frequency in future silicon!)
    - New OS: CPU failure --> partition restart (partitioned device drivers)
  - Old OS invoked for any interprocessor communication or scheduling vs. direct HW access

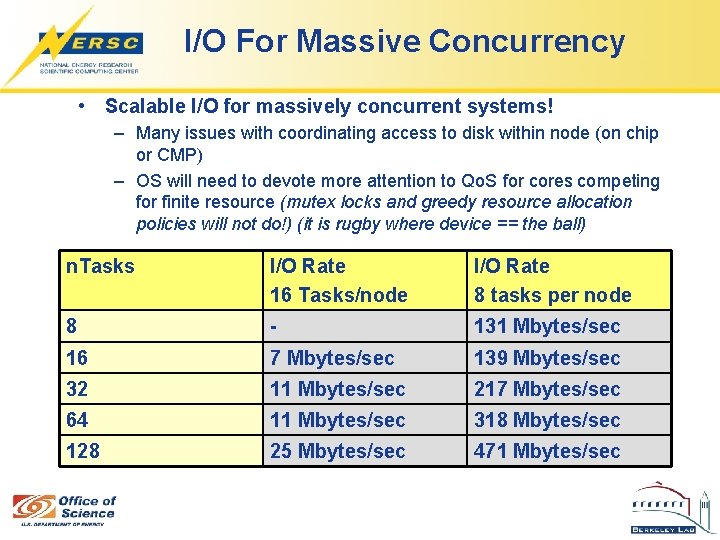

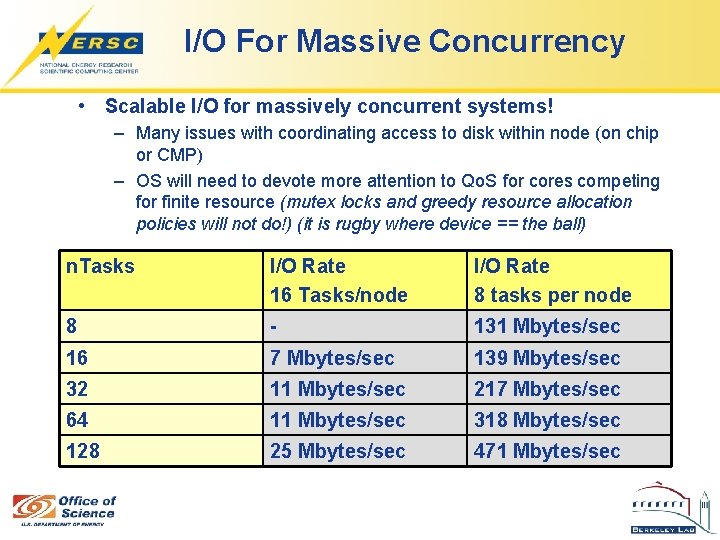

I/O For Massive Concurrency

- Scalable I/O for massively concurrent systems!
  - Many issues with coordinating access to disk within node (on chip or CMP)
  - OS will need to devote more attention to QoS for cores competing for finite resource (mutex locks and greedy resource allocation policies will not do!) (it is rugby where device == the ball)

| nTasks | I/O rate, 16 tasks/node | I/O rate, 8 tasks/node |
|---|---|---|
| 8 | - | 131 Mbytes/sec |
| 16 | 7 Mbytes/sec | 139 Mbytes/sec |
| 32 | 11 Mbytes/sec | 217 Mbytes/sec |
| 64 | 11 Mbytes/sec | 318 Mbytes/sec |
| 128 | 25 Mbytes/sec | 471 Mbytes/sec |

Intel

Chris Rowen Data

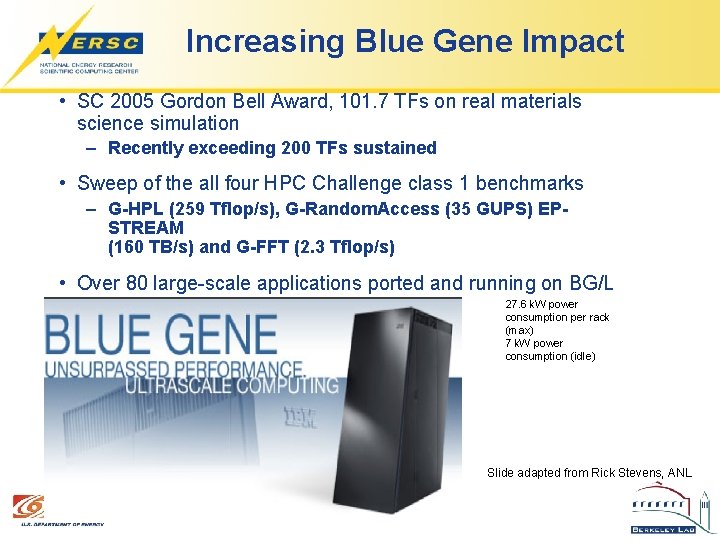

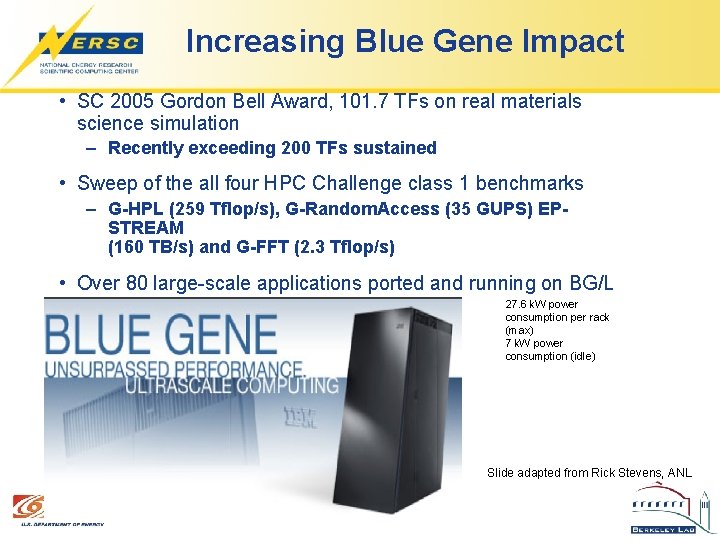

Increasing Blue Gene Impact

- SC 2005 Gordon Bell Award, 101.7 TFs on real materials science simulation
  - Recently exceeding 200 TFs sustained
- Sweep of all four HPC Challenge class 1 benchmarks
  - G-HPL (259 Tflop/s), G-RandomAccess (35 GUPS), EP-STREAM (160 TB/s), and G-FFT (2.3 Tflop/s)
- Over 80 large-scale applications ported and running on BG/L

27.6 kW power consumption per rack (max); 7 kW power consumption (idle)

Slide adapted from Rick Stevens, ANL

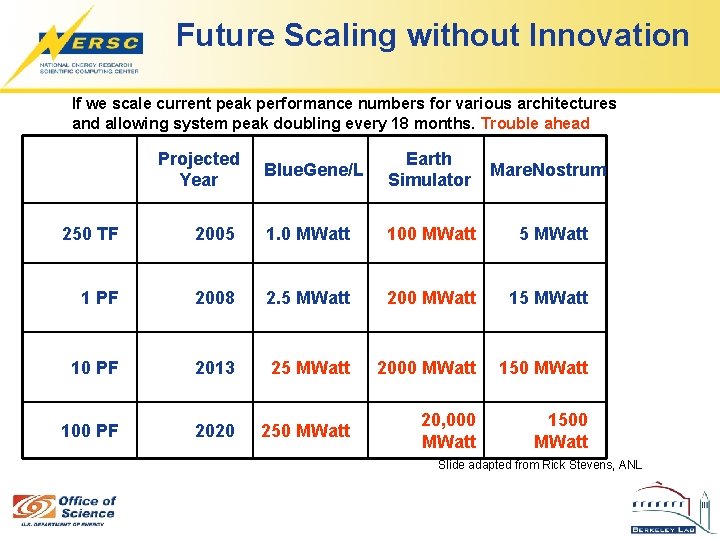

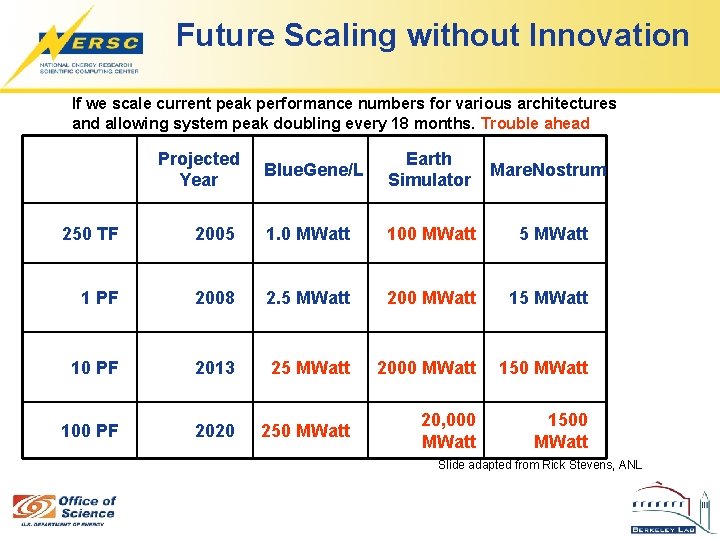

Future Scaling without Innovation

If we scale current peak performance numbers for various architectures, allowing system peak to double every 18 months: trouble ahead.

| Projected peak | Year | BlueGene/L | Earth Simulator | MareNostrum |
|---|---|---|---|---|
| 250 TF | 2005 | 1.0 MWatt | 100 MWatt | 5 MWatt |
| 1 PF | 2008 | 2.5 MWatt | 200 MWatt | 15 MWatt |
| 10 PF | 2013 | 25 MWatt | 2000 MWatt | 150 MWatt |
| 100 PF | 2020 | 250 MWatt | 20,000 MWatt | 1500 MWatt |

Slide adapted from Rick Stevens, ANL

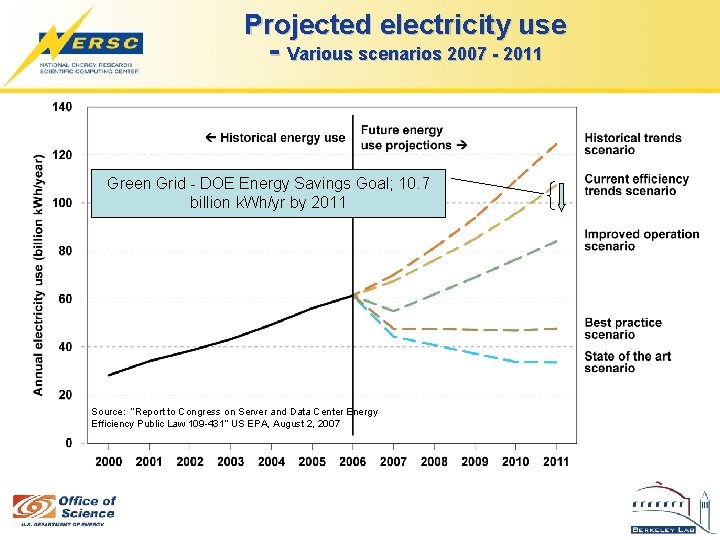

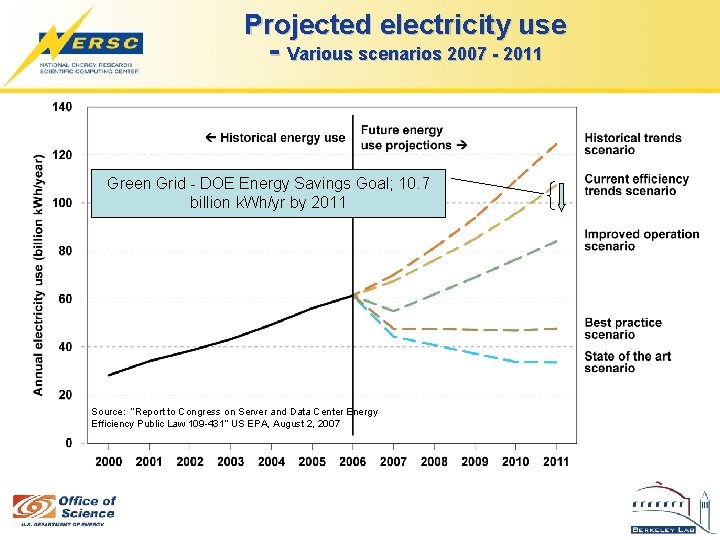

Projected electricity use, various scenarios, 2007-2011

Green Grid - DOE Energy Savings Goal: 10.7 billion kWh/yr by 2011

Source: “Report to Congress on Server and Data Center Energy Efficiency, Public Law 109-431,” US EPA, August 2, 2007

Petascale Architectural Exploration (back of the envelope calculation)

| Processor | Clock | Peak/Core (Gflops) | Cores/Socket | Mem BW (GB/s) | Network BW (GB/s) | Sockets | Power (based on current generation technology) |
|---|---|---|---|---|---|---|---|
| AMD Opteron | 2.8 GHz | 5.6 | 2 | 6.4 | 4.5 | 890K | 179 MW |
| IBM BG/L | 700 MHz | 2.8 | 2 | 5.5 | 2.2 | 1.8M | 27 MW |
| Semicustom Embedded | 650 MHz | 2.7 | 32 | 51.2 | 34.5 | 120K | 3 MW |

- Software challenges (at all levels) are a tremendous obstacle for any of these approaches.
- Unprecedented levels of concurrency are required.
- Unprecedented levels of power are required if we adopt the conventional route.
- Embedded route offers tractable power, but daunting concurrency!
- This only gets us to 10 Petaflops peak.
  - A 200 PF system is needed to meet application sustained performance requirements,
  - thus cost and power are likely to be 10x-20x more.