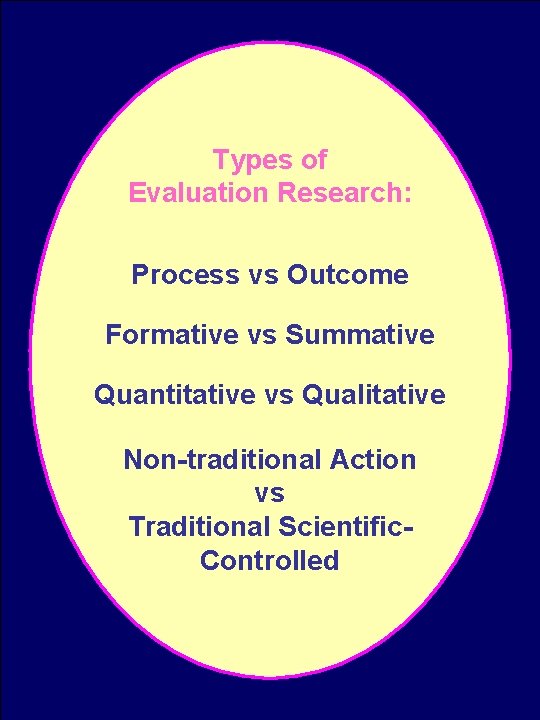

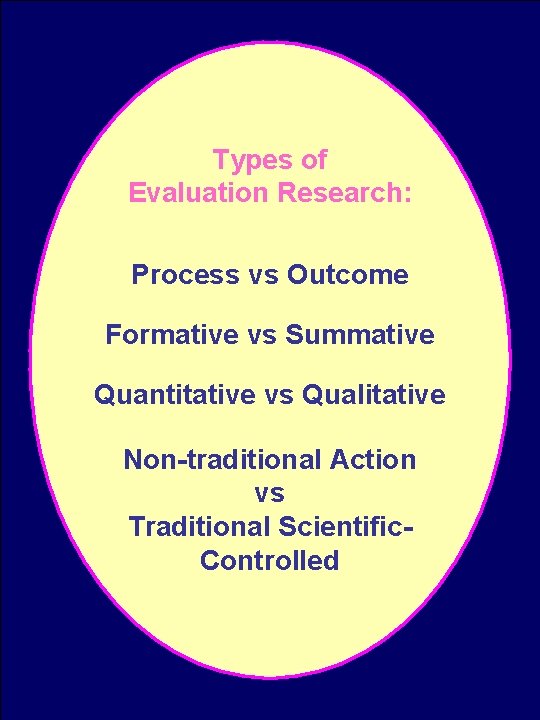

Types of Evaluation Research Process vs Outcome Formative

- Slides: 14

Types of Evaluation Research:

- Process vs Outcome
- Formative vs Summative
- Quantitative vs Qualitative
- Non-traditional Action vs Traditional Scientific (Controlled)

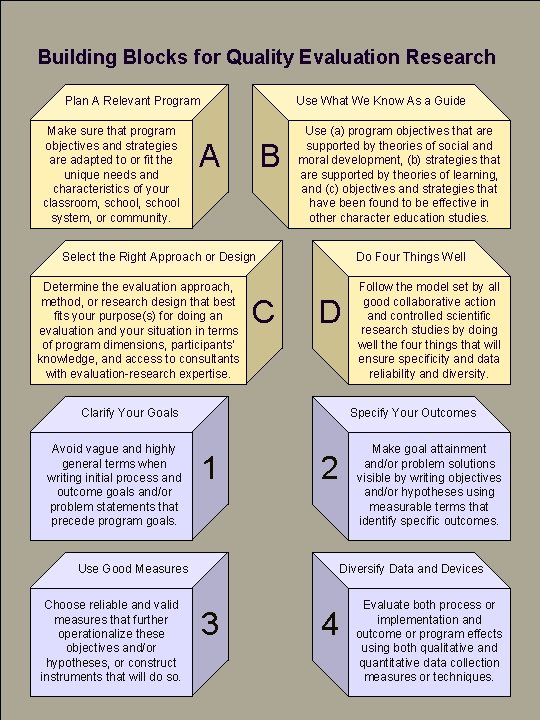

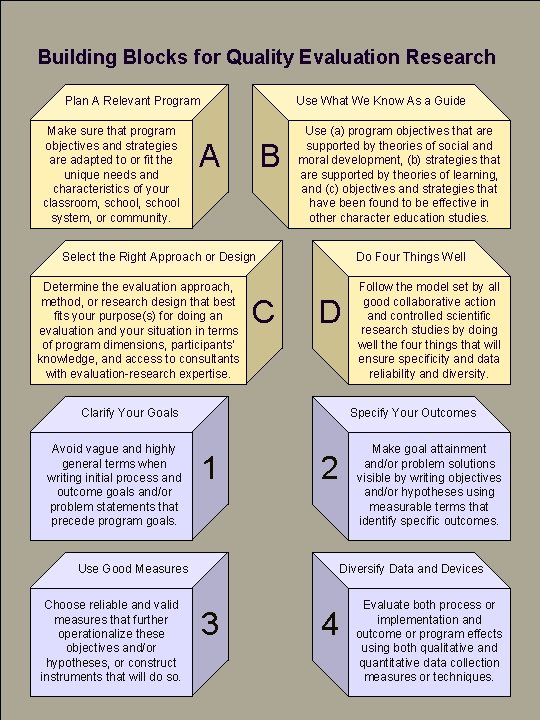

Building Blocks for Quality Evaluation Research

- A. Plan a Relevant Program: Make sure that program objectives and strategies are adapted to, or fit, the unique needs and characteristics of your classroom, school system, or community.
- B. Use What We Know as a Guide: Use (a) program objectives that are supported by theories of social and moral development, (b) strategies that are supported by theories of learning, and (c) objectives and strategies that have been found to be effective in other character education studies.
- C. Select the Right Approach or Design: Determine the evaluation approach, method, or research design that best fits your purpose(s) for doing an evaluation and your situation in terms of program dimensions, participants' knowledge, and access to consultants with evaluation-research expertise.
- D. Do Four Things Well: Follow the model set by all good collaborative action and controlled scientific research studies by doing well the four things that ensure specificity, data reliability, and data diversity.
  1. Clarify Your Goals: Avoid vague and highly general terms when writing initial process and outcome goals and/or the problem statements that precede program goals.
  2. Specify Your Outcomes: Make goal attainment and/or problem solutions visible by writing objectives and/or hypotheses in measurable terms that identify specific outcomes.
  3. Use Good Measures: Choose reliable and valid measures that further operationalize these objectives and/or hypotheses, or construct instruments that will do so.
  4. Diversify Data and Devices: Evaluate both process (implementation) and outcome (program effects) using both qualitative and quantitative data collection measures or techniques.
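The slide asks for "reliable and valid measures" without naming a statistic. One widely used estimate of internal-consistency reliability for a multi-item questionnaire is Cronbach's alpha; the sketch below illustrates it under that assumption and is not a method the slides prescribe. The function name `cronbach_alpha` and the input layout (one row of item scores per respondent) are hypothetical.

```python
from statistics import pvariance
from typing import Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Estimate Cronbach's alpha for a multi-item scale.

    `responses` holds one row per respondent; each row lists that
    respondent's scores on the same k items, in the same order.
    """
    if len(responses) < 2:
        raise ValueError("need at least two respondents")
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("every respondent needs the same number (>= 2) of items")

    # Variance of each item across respondents (the columns of the matrix).
    item_variance_sum = sum(pvariance(item) for item in zip(*responses))
    # Variance of each respondent's total score.
    total_variance = pvariance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")

    # alpha = k/(k-1) * (1 - sum of item variances / variance of totals)
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Three students answering a hypothetical two-item climate survey.
print(round(cronbach_alpha([[2, 3], [4, 4], [3, 5]]), 3))
```

Values closer to 1 indicate that the items tend to rise and fall together. Using population variance for both the items and the totals is a deliberate simplification: the sample-variance correction cancels out of the ratio, so the result is the same.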

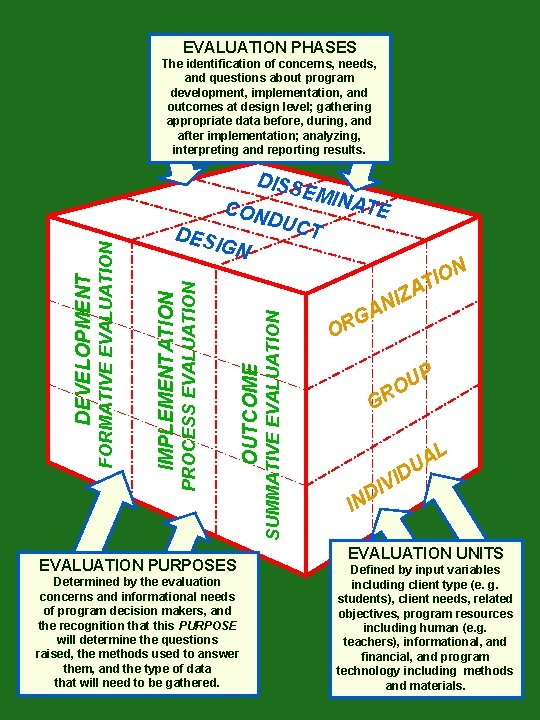

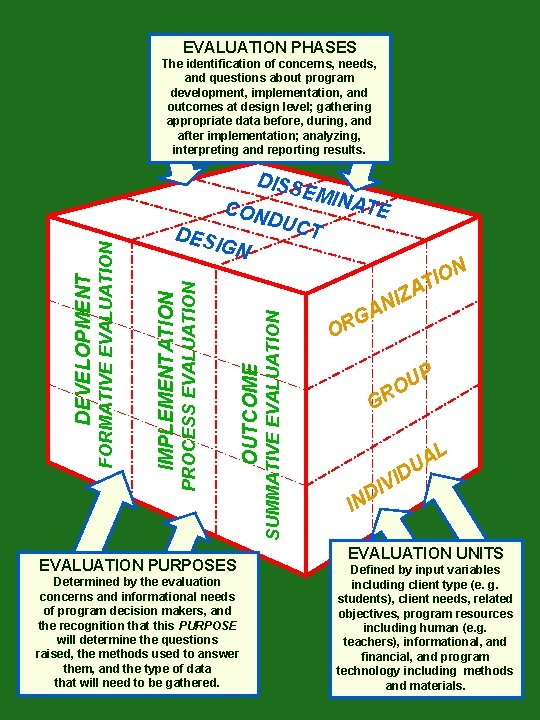

Evaluation Phases, Purposes, and Units

- Evaluation purposes: Determined by the evaluation concerns and informational needs of program decision makers, and by the recognition that this purpose will determine the questions raised, the methods used to answer them, and the type of data that will need to be gathered.
- Evaluation phases: Development (formative evaluation), implementation (process evaluation), and outcome (summative evaluation), with the evaluation itself moving through design, conduct, and dissemination. This means identifying concerns, needs, and questions about program development, implementation, and outcomes at the design level; gathering appropriate data before, during, and after implementation; and analyzing, interpreting, and reporting results.
- Evaluation units: Individual, group, and organization, each defined by input variables including client type (e.g., students), client needs, related objectives, program resources (human, e.g., teachers; informational; and financial), and program technology, including methods and materials.

Why Are Programs Evaluated?

1. To maximize the chances that the program to be planned will be relevant and thus address clients' needs.
2. To find out if program components have been implemented and to what degree.
3. To determine if there is progress in the right direction and if unanticipated side effects and problems have occurred.
4. To find out what program components, methods, and strategies are working.
5. To obtain detailed information that will allow for improvements during the course of the program.
6. To provide information that will make quality control possible.
7. To see if program participants are supporting the program and to what degree.
8. To motivate participants who might otherwise do little or nothing.
9. To see if stated process and outcome goals and objectives were achieved.
10. To determine if a program should be continued, expanded, modified, or ended.
11. To produce findings that could be of value to the planners and operators of other similar programs.
12. To determine which alternative programs and related theories are the most effective.
13. To satisfy grant requirements.
14. To generate additional support for a program from administrators, board members, legislators, and the public.
15. To obtain funds or keep the funds coming.
16. To provide a detailed insider view for sponsors.
17. Because participants are highly professional and thus interested in planning programs that will work, in improving programs, and in adding to the knowledge base of their profession.

Outcome/Summative vs Process/Formative Evaluation (Traditional Scientific or Action Research)

| Outcome/Summative Evaluation | Process/Formative Evaluation |
|---|---|
| Student and/or climate outcomes; more quantitative than qualitative | Means of achieving outcomes; more qualitative than quantitative |
| Improvement through delayed feedback; guides future research | Improvement through ongoing feedback; makes intended effects more likely |
| Probable attribution of program effects to program strategies | Plausible attribution of program effects to program strategies |
| Structured investigation that uses comparison groups, time-series analyses, and hypotheses to rule out unintended causes for effects | Semi-structured investigation that examines program operations using observations, interviews, open survey questions, and checklists |
| Rigid pre-program selection of desired results, reliable and valid measures, and strategies | Routine midcourse adjustments through specification and monitoring of program elements |

Quantitative vs Qualitative Evaluation

| Quantitative Evaluation | Qualitative Evaluation |
|---|---|
| Controlled study; predetermined hypotheses; standardized measures; quasi-experimental design; statistical analysis; generalization of results | In-depth, naturalistic information gathering; no predetermined hypotheses, response categories, or standardized measures; non-statistical and inductive analysis of data |
| Counting and recording events; reliable and valid instruments; means and medians; precoded observation forms; school climate surveys; introspective questionnaires; tests of knowledge and skill | In-depth interviews; open-ended questions; extended observations; detailed note taking; journals, videos, newsletters; all organized into themes and categories |
| Primarily used for outcome/summative evaluation, or assessing program effects; uses comparison groups and pre-post testing; quick feedback about implementation or process | Primarily used for process/formative evaluation; allows for adjustments during implementation of the program; gathered at all points from needs assessment to outcome assessment |
| Limited qualification possible; should be limited, since the primary function is to determine the statistical probability that program elements produced desired outcomes | Limited quantification possible; should be limited, since the primary functions are to provide an in-depth understanding and to explore areas where little is known |
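The quantitative column pairs comparison groups and pre-post testing with means and medians. A minimal sketch of that descriptive step, assuming each participant has one pre-test and one post-test score, computes each group's gain scores and the difference in mean gain. The function names and the scores below are hypothetical, and this is only the descriptive summary: it does not by itself establish the statistical probability that the program caused the difference.

```python
from statistics import mean, median
from typing import Dict, List, Sequence


def gains(pre: Sequence[float], post: Sequence[float]) -> List[float]:
    """Post-test minus pre-test score for each participant (same order in both lists)."""
    if len(pre) != len(post):
        raise ValueError("pre and post lists must be the same length")
    return [after - before for before, after in zip(pre, post)]


def summarize_gains(pre: Sequence[float], post: Sequence[float]) -> Dict[str, float]:
    g = gains(pre, post)
    return {"mean_gain": mean(g), "median_gain": median(g)}


def compare_groups(program_pre, program_post, comparison_pre, comparison_post) -> Dict[str, float]:
    program = summarize_gains(program_pre, program_post)
    comparison = summarize_gains(comparison_pre, comparison_post)
    return {
        "program_mean_gain": program["mean_gain"],
        "program_median_gain": program["median_gain"],
        "comparison_mean_gain": comparison["mean_gain"],
        "comparison_median_gain": comparison["median_gain"],
        # Positive values mean the program group improved more, on average.
        "difference_in_mean_gain": program["mean_gain"] - comparison["mean_gain"],
    }


# Made-up scores for four students in each group.
result = compare_groups(
    program_pre=[10, 12, 11, 9], program_post=[14, 15, 13, 12],
    comparison_pre=[10, 11, 12, 9], comparison_post=[11, 12, 12, 10],
)
print(result["difference_in_mean_gain"])
```

Here the program group gains 3 points on average and the comparison group 0.75, so the difference in mean gain is 2.25. A controlled study would follow this with a significance test before attributing the difference to the program.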

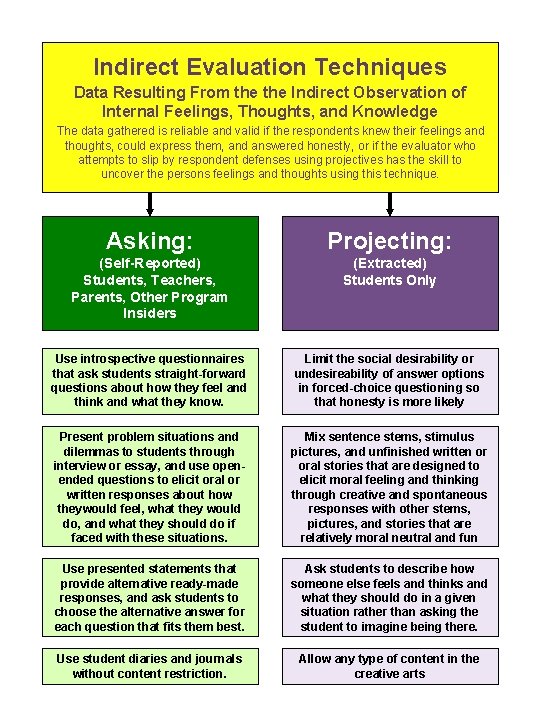

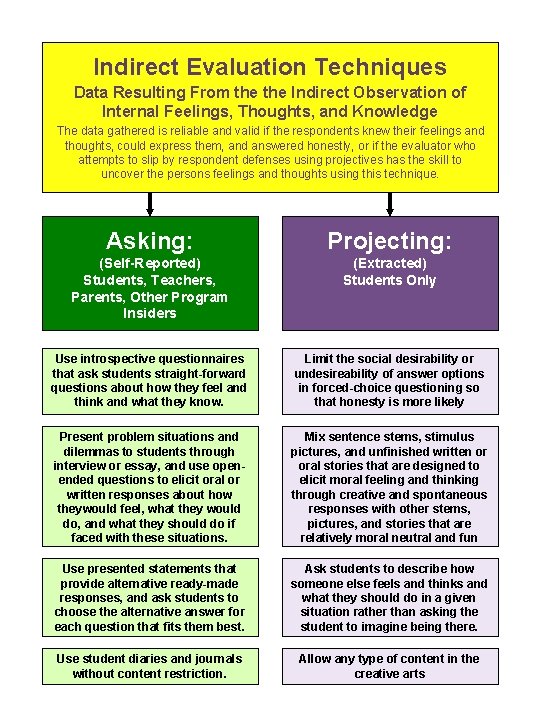

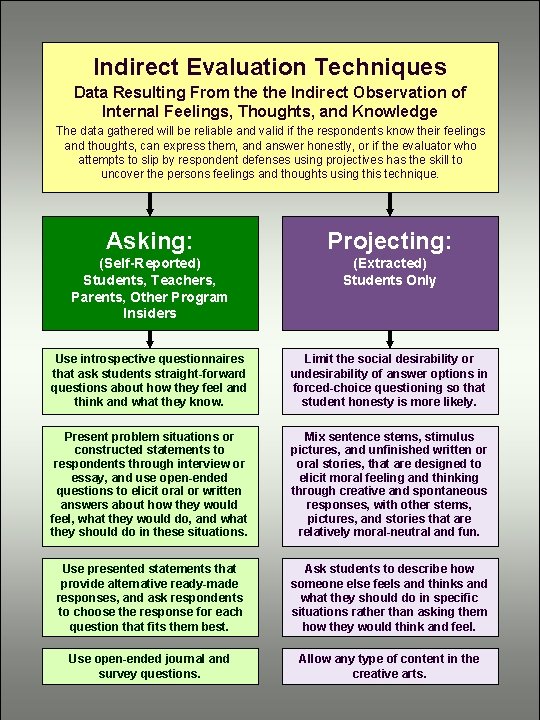

Indirect Evaluation Techniques (Data Resulting From the Indirect Observation of Internal Feelings, Thoughts, and Knowledge)

The data gathered is reliable and valid if the respondents knew their feelings and thoughts, could express them, and answered honestly, or if the evaluator who attempts to slip past respondent defenses using projectives has the skill to uncover the person's feelings and thoughts with this technique.

- Asking (self-reported): students, teachers, parents, and other program insiders
- Projecting (extracted): students only

Techniques:

- Use introspective questionnaires that ask students straightforward questions about how they feel and think and what they know.
- Limit the social desirability or undesirability of answer options in forced-choice questioning so that honesty is more likely.
- Present problem situations and dilemmas to students through interview or essay, and use open-ended questions to elicit oral or written responses about how they would feel, what they would do, and what they should do if faced with these situations.
- Mix sentence stems, stimulus pictures, and unfinished written or oral stories that are designed to elicit moral feeling and thinking through creative and spontaneous responses with other stems, pictures, and stories that are relatively morally neutral and fun.
- Use presented statements that provide alternative ready-made responses, and ask students to choose the alternative answer for each question that fits them best.
- Ask students to describe how someone else feels and thinks and what they should do in a given situation, rather than asking the student to imagine being there.
- Use student diaries and journals without content restriction.
- Allow any type of content in the creative arts.

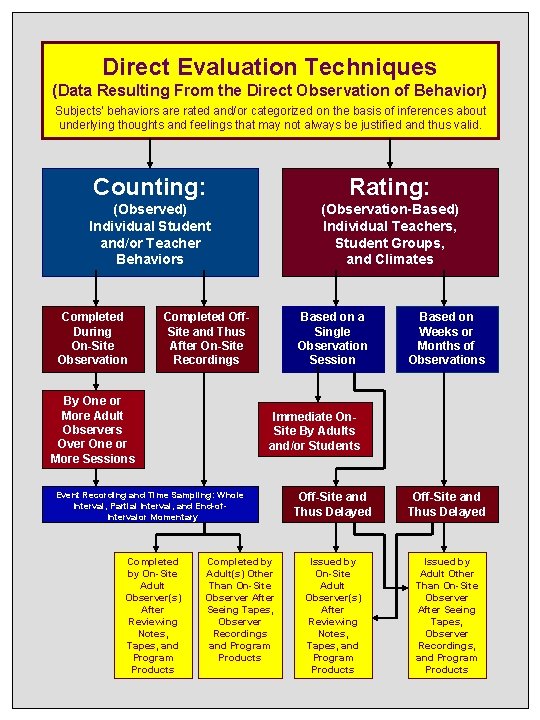

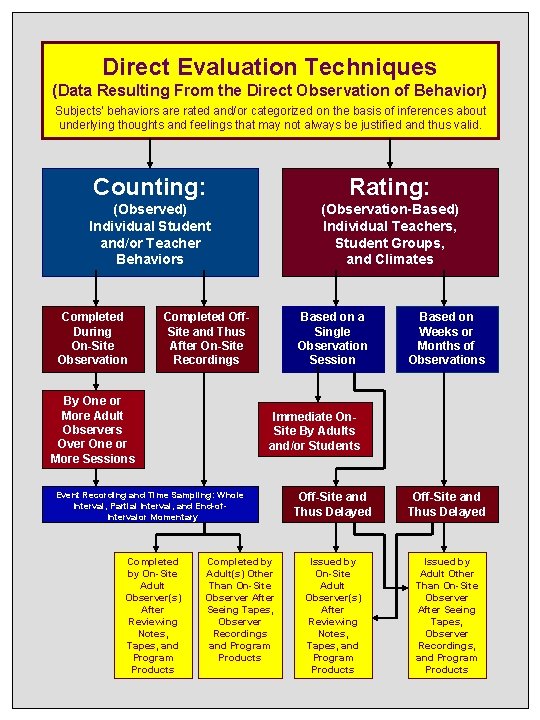

Direct Evaluation Techniques (Data Resulting From the Direct Observation of Behavior)

Subjects' behaviors are rated and/or categorized on the basis of inferences about underlying thoughts and feelings; these inferences may not always be justified, so the data may not always be valid.

- Counting (observed): individual student and/or teacher behaviors
- Rating (observation-based): individual teachers, student groups, and climates

Procedures:

- Completed during on-site observation
- Completed off-site, and thus after on-site recordings
- By one or more adult observers over one or more sessions
- Based on a single observation session
- Immediate, on-site, by adults and/or students
- Event recording and time sampling: whole-interval, partial-interval, and end-of-interval (momentary)
- Completed by on-site adult observer(s) after reviewing notes, tapes, and program products
- Based on weeks or months of observations
- Completed by adult(s) other than the on-site observer after seeing tapes, observer recordings, and program products
- Off-site and thus delayed
- Issued by on-site adult observer(s) after reviewing notes, tapes, and program products
- Issued by an adult other than the on-site observer after seeing tapes, observer recordings, and program products
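The counting procedures above (event recording plus whole-interval, partial-interval, and end-of-interval or momentary time sampling) can be sketched as follows. The representation is an assumption for illustration: each observed episode of the target behavior is a hypothetical `(start, end)` pair in seconds, and a behavior counts as occurring at a moment `t` when `start <= t <= end`. The function names are also hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# One observed episode of the target behavior: (start, end) in seconds.
Episode = Tuple[float, float]


def _intervals(session_length: float, interval_length: float) -> List[Tuple[float, float]]:
    """Split the session into consecutive equal intervals (a partial last interval is dropped)."""
    count = int(session_length // interval_length)
    return [(i * interval_length, (i + 1) * interval_length) for i in range(count)]


def _fills(episodes: Sequence[Episode], start: float, end: float) -> bool:
    """True if the episodes, taken together, cover the whole interval from start to end."""
    cursor = start
    for ep_start, ep_end in sorted(episodes):
        if ep_end <= cursor:
            continue  # episode finished before the uncovered part begins
        if ep_start > cursor:
            return False  # a gap with no behavior inside the interval
        cursor = ep_end
        if cursor >= end:
            return True
    return cursor >= end


def score_session(episodes: Sequence[Episode], session_length: float,
                  interval_length: float) -> Dict[str, float]:
    intervals = _intervals(session_length, interval_length)
    if not intervals:
        raise ValueError("session is shorter than one interval")
    n = len(intervals)
    # Whole interval: behavior lasted the entire interval.
    whole = sum(_fills(episodes, a, b) for a, b in intervals)
    # Partial interval: behavior occurred at any point in the interval.
    partial = sum(any(s < b and e > a for s, e in episodes) for a, b in intervals)
    # Momentary (end of interval): behavior was occurring at the instant the interval ended.
    momentary = sum(any(s <= b <= e for s, e in episodes) for a, b in intervals)
    return {
        "event_count": len(episodes),  # event recording: number of occurrences
        "whole_interval": whole / n,
        "partial_interval": partial / n,
        "momentary": momentary / n,
    }


# A hypothetical 60-second session scored in 10-second intervals.
print(score_session([(5, 25), (42, 44)], session_length=60, interval_length=10))
```

In this example the behavior actually occupies 22 of 60 seconds (about 37%), yet it is scored in 1 of 6 intervals under whole-interval recording, 4 of 6 under partial-interval recording, and 2 of 6 under momentary sampling. This shows why the choice of sampling method affects the resulting estimate.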

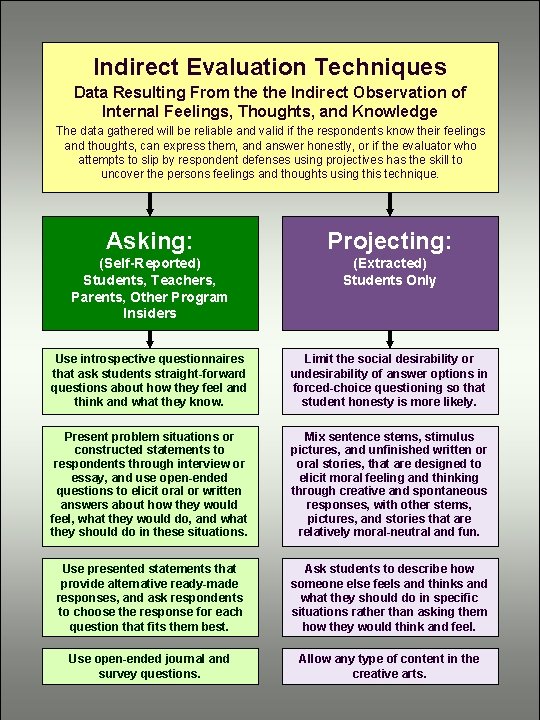

Indirect Evaluation Techniques (Data Resulting From the Indirect Observation of Internal Feelings, Thoughts, and Knowledge)

The data gathered will be reliable and valid if the respondents know their feelings and thoughts, can express them, and answer honestly, or if the evaluator who attempts to slip past respondent defenses using projectives has the skill to uncover the person's feelings and thoughts with this technique.

- Asking (self-reported): students, teachers, parents, and other program insiders
- Projecting (extracted): students only

Techniques:

- Use introspective questionnaires that ask students straightforward questions about how they feel and think and what they know.
- Limit the social desirability or undesirability of answer options in forced-choice questioning so that student honesty is more likely.
- Present problem situations or constructed statements to respondents through interview or essay, and use open-ended questions to elicit oral or written answers about how they would feel, what they would do, and what they should do in these situations.
- Mix sentence stems, stimulus pictures, and unfinished written or oral stories that are designed to elicit moral feeling and thinking through creative and spontaneous responses with other stems, pictures, and stories that are relatively morally neutral and fun.
- Use presented statements that provide alternative ready-made responses, and ask respondents to choose the response for each question that fits them best.
- Ask students to describe how someone else feels and thinks and what they should do in specific situations, rather than asking them how they would think and feel.
- Use open-ended journal and survey questions.
- Allow any type of content in the creative arts.

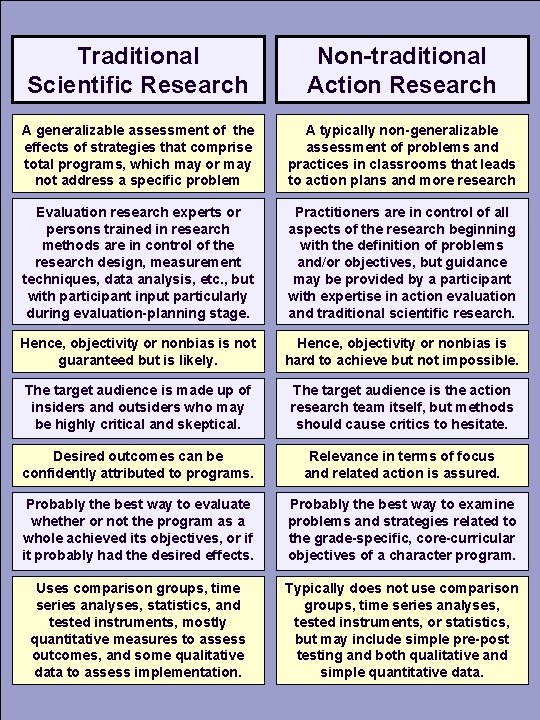

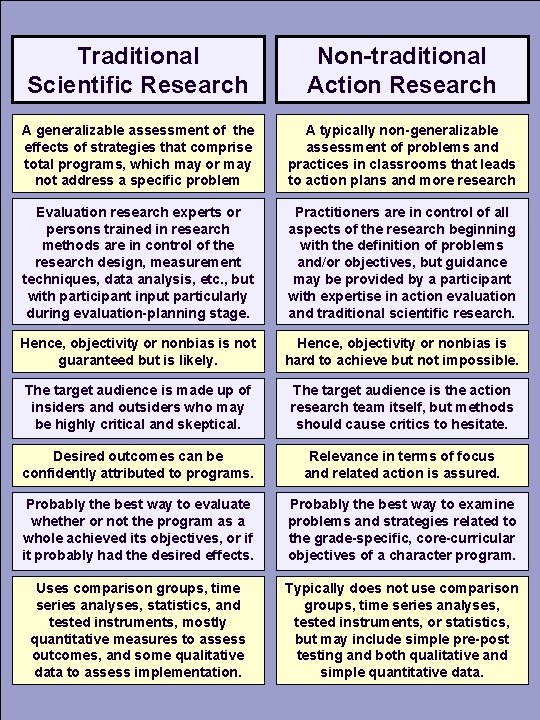

Traditional Scientific Research vs Non-traditional Action Research

| Traditional Scientific Research | Non-traditional Action Research |
|---|---|
| A generalizable assessment of the effects of strategies that comprise total programs, which may or may not address a specific problem | A typically non-generalizable assessment of problems and practices in classrooms that leads to action plans and more research |
| Evaluation research experts or persons trained in research methods are in control of the research design, measurement techniques, data analysis, etc., but with participant input, particularly during the evaluation-planning stage. Hence, objectivity or nonbias is not guaranteed but is likely. | Practitioners are in control of all aspects of the research, beginning with the definition of problems and/or objectives, but guidance may be provided by a participant with expertise in action evaluation and traditional scientific research. Hence, objectivity or nonbias is hard to achieve but not impossible. |
| The target audience is made up of insiders and outsiders who may be highly critical and skeptical. | The target audience is the action research team itself, but methods should cause critics to hesitate. |
| Desired outcomes can be confidently attributed to programs. | Relevance in terms of focus and related action is assured. |
| Probably the best way to evaluate whether or not the program as a whole achieved its objectives, or if it probably had the desired effects. | Probably the best way to examine problems and strategies related to the grade-specific, core-curricular objectives of a character program. |
| Uses comparison groups, time-series analyses, statistics, and tested instruments: mostly quantitative measures to assess outcomes, and some qualitative data to assess implementation. | Typically does not use comparison groups, time-series analyses, tested instruments, or statistics, but may include simple pre-post testing and both qualitative and simple quantitative data. |

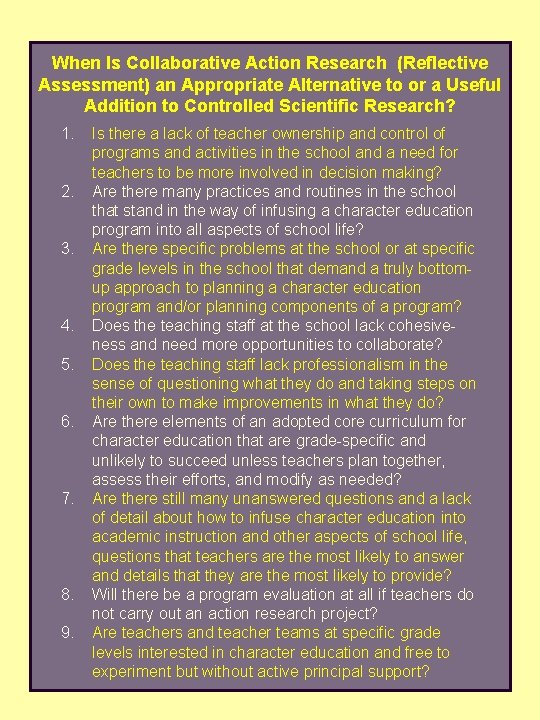

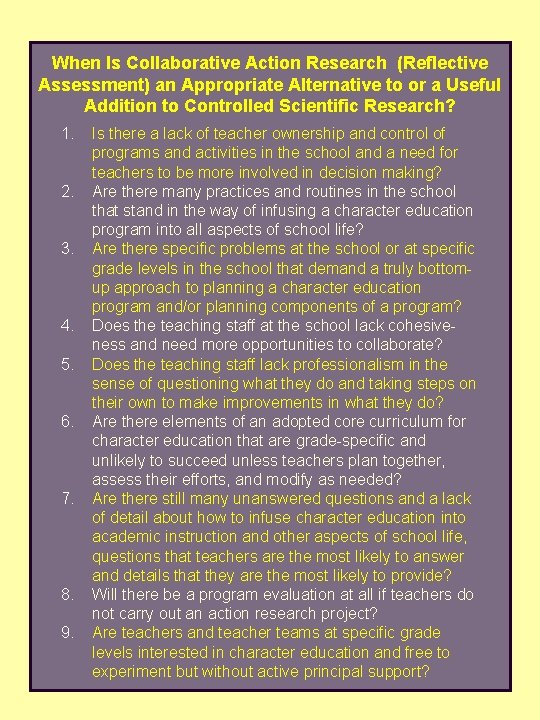

When Is Collaborative Action Research (Reflective Assessment) an Appropriate Alternative to or a Useful Addition to Controlled Scientific Research?

1. Is there a lack of teacher ownership and control of programs and activities in the school, and a need for teachers to be more involved in decision making?
2. Are there many practices and routines in the school that stand in the way of infusing a character education program into all aspects of school life?
3. Are there specific problems at the school or at specific grade levels in the school that demand a truly bottom-up approach to planning a character education program and/or planning components of a program?
4. Does the teaching staff at the school lack cohesiveness and need more opportunities to collaborate?
5. Does the teaching staff lack professionalism in the sense of questioning what they do and taking steps on their own to make improvements in what they do?
6. Are there elements of an adopted core curriculum for character education that are grade-specific and unlikely to succeed unless teachers plan together, assess their efforts, and modify as needed?
7. Are there still many unanswered questions and a lack of detail about how to infuse character education into academic instruction and other aspects of school life, questions that teachers are the most likely to answer and details that they are the most likely to provide?
8. Will there be a program evaluation at all if teachers do not carry out an action research project?
9. Are teachers and teacher teams at specific grade levels interested in character education and free to experiment, but without active principal support?

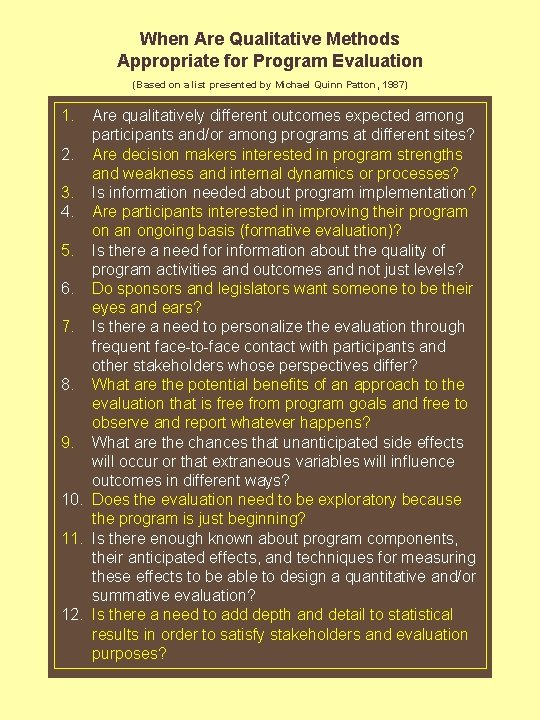

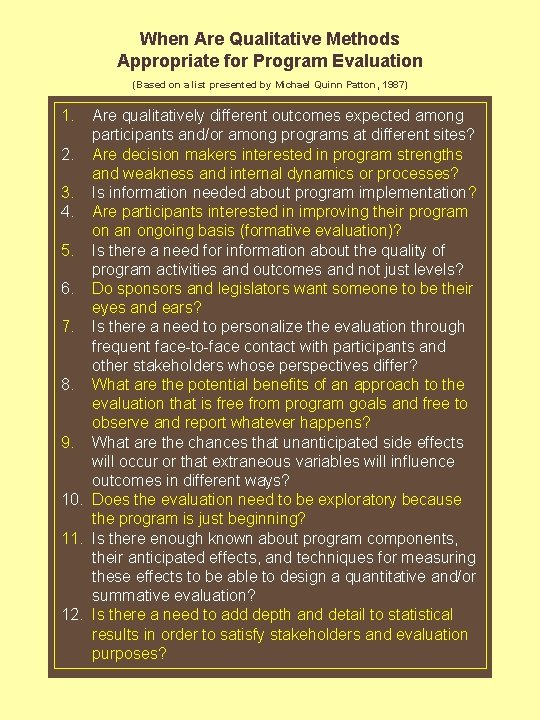

When Are Qualitative Methods Appropriate for Program Evaluation? (Based on a list presented by Michael Quinn Patton, 1987)

1. Are qualitatively different outcomes expected among participants and/or among programs at different sites?
2. Are decision makers interested in program strengths and weaknesses and internal dynamics or processes?
3. Is information needed about program implementation?
4. Are participants interested in improving their program on an ongoing basis (formative evaluation)?
5. Is there a need for information about the quality of program activities and outcomes, and not just levels?
6. Do sponsors and legislators want someone to be their eyes and ears?
7. Is there a need to personalize the evaluation through frequent face-to-face contact with participants and other stakeholders whose perspectives differ?
8. What are the potential benefits of an approach to the evaluation that is free from program goals and free to observe and report whatever happens?
9. What are the chances that unanticipated side effects will occur or that extraneous variables will influence outcomes in different ways?
10. Does the evaluation need to be exploratory because the program is just beginning?
11. Is enough known about program components, their anticipated effects, and techniques for measuring these effects to be able to design a quantitative and/or summative evaluation?
12. Is there a need to add depth and detail to statistical results in order to satisfy stakeholders and evaluation purposes?

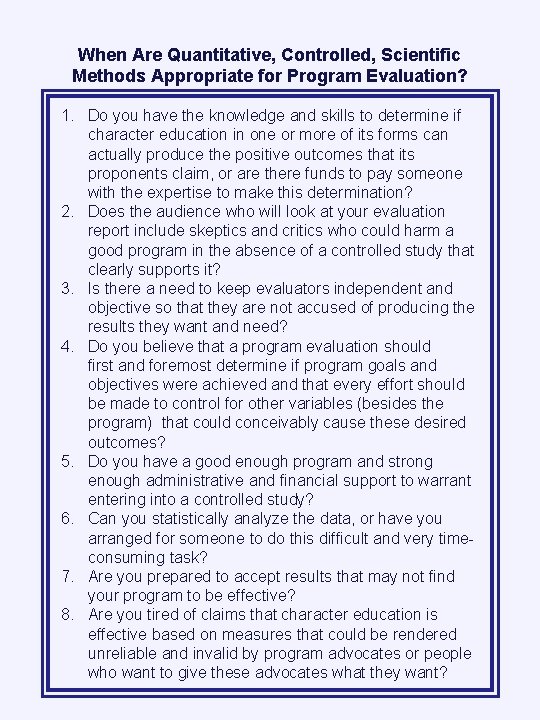

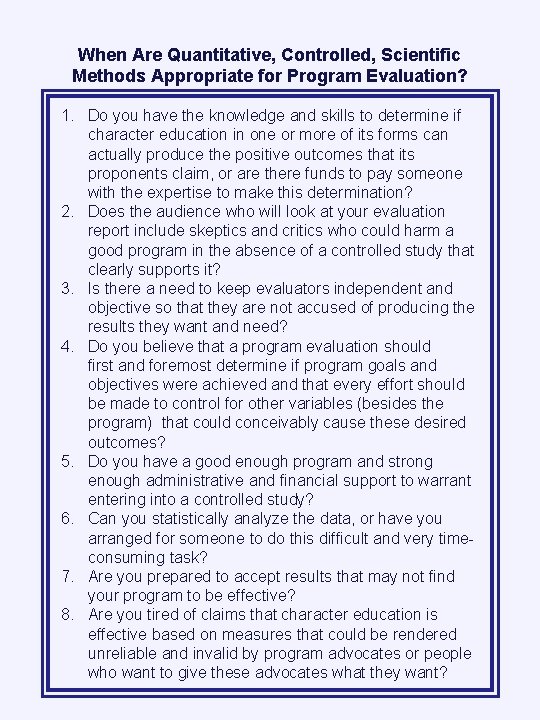

When Are Quantitative, Controlled, Scientific Methods Appropriate for Program Evaluation?

1. Do you have the knowledge and skills to determine if character education in one or more of its forms can actually produce the positive outcomes that its proponents claim, or are there funds to pay someone with the expertise to make this determination?
2. Does the audience who will look at your evaluation report include skeptics and critics who could harm a good program in the absence of a controlled study that clearly supports it?
3. Is there a need to keep evaluators independent and objective so that they are not accused of producing the results they want and need?
4. Do you believe that a program evaluation should first and foremost determine if program goals and objectives were achieved, and that every effort should be made to control for other variables (besides the program) that could conceivably cause these desired outcomes?
5. Do you have a good enough program and strong enough administrative and financial support to warrant entering into a controlled study?
6. Can you statistically analyze the data, or have you arranged for someone to do this difficult and very time-consuming task?
7. Are you prepared to accept results that may not find your program to be effective?
8. Are you tired of claims that character education is effective based on measures that could be rendered unreliable and invalid by program advocates or by people who want to give these advocates what they want?

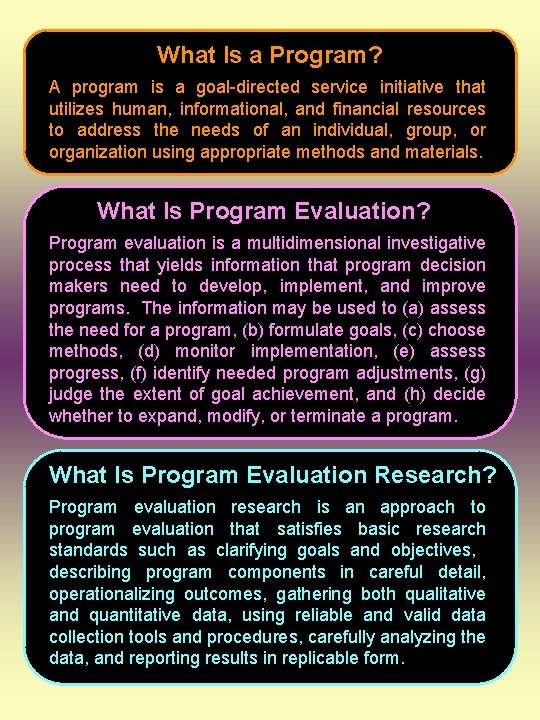

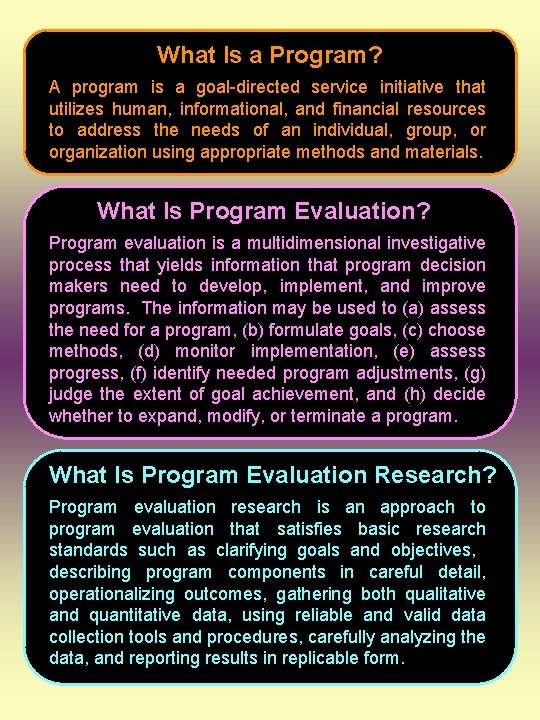

What Is a Program?

A program is a goal-directed service initiative that uses human, informational, and financial resources to address the needs of an individual, group, or organization using appropriate methods and materials.

What Is Program Evaluation?

Program evaluation is a multidimensional investigative process that yields the information program decision makers need to develop, implement, and improve programs. The information may be used to (a) assess the need for a program, (b) formulate goals, (c) choose methods, (d) monitor implementation, (e) assess progress, (f) identify needed program adjustments, (g) judge the extent of goal achievement, and (h) decide whether to expand, modify, or terminate a program.

What Is Program Evaluation Research?

Program evaluation research is an approach to program evaluation that satisfies basic research standards such as clarifying goals and objectives, describing program components in careful detail, operationalizing outcomes, gathering both qualitative and quantitative data, using reliable and valid data collection tools and procedures, carefully analyzing the data, and reporting results in replicable form.