
- Slides: 14

Tutorial 8 Markov Chains

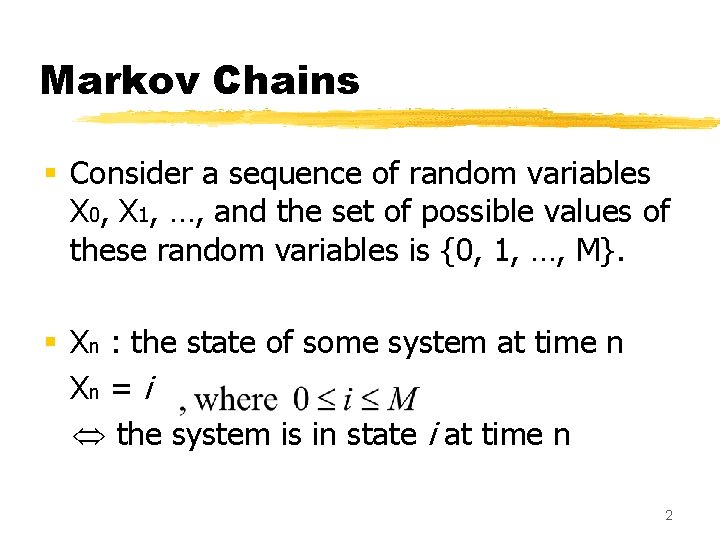

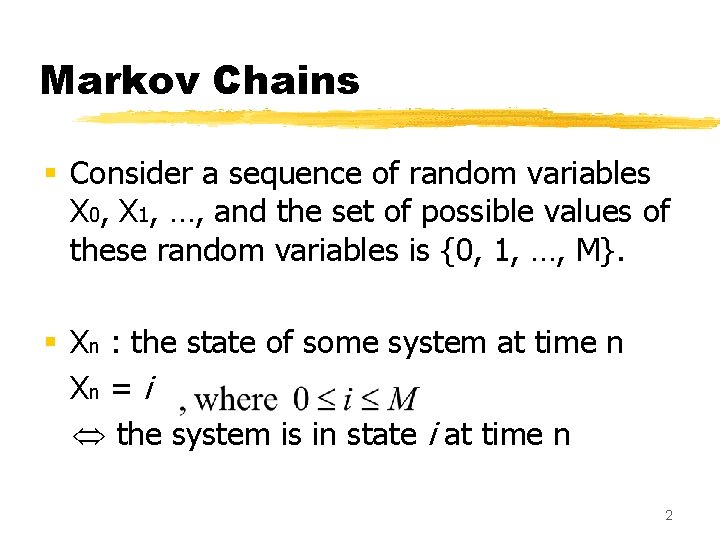

Markov Chains

- Consider a sequence of random variables $X_0, X_1, \dots$ whose set of possible values is $\{0, 1, \dots, M\}$.
- $X_n$: the state of some system at time $n$. $X_n = i$ means the system is in state $i$ at time $n$.

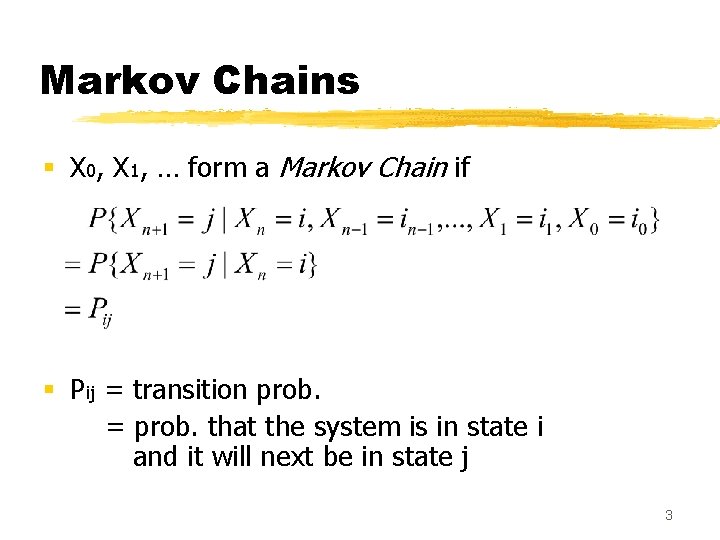

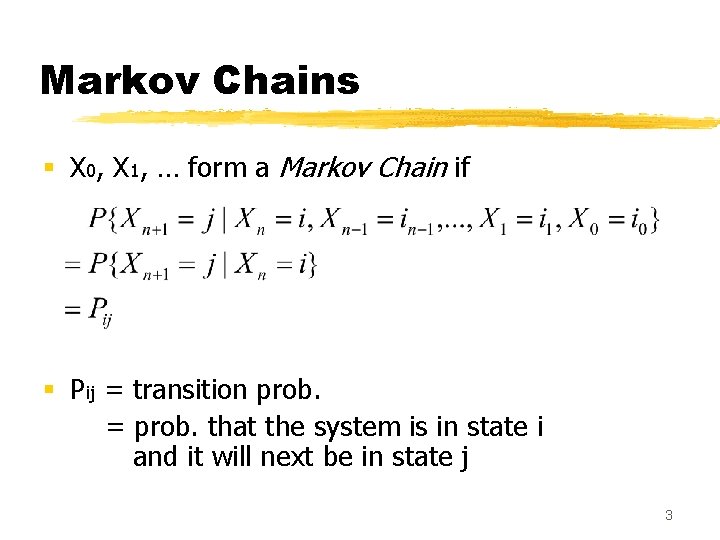

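Markov Chains

- $X_0, X_1, \dots$ form a Markov chain if, for all states and all $n \ge 0$,

  $$P(X_{n+1} = j \mid X_n = i, X_{n-1} = i_{n-1}, \dots, X_0 = i_0) = P_{ij}.$$

- $P_{ij}$ = transition probability = probability that the system, currently in state $i$, will next be in state $j$.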
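The defining property (the next state depends only on the current state) can be illustrated by simulating a chain. The following is a minimal sketch: the function name `simulate` and the transition probabilities in `P` are illustrative assumptions, not values taken from the slides.

```python
import random

def simulate(P, start, n_steps, rng):
    """Simulate n_steps transitions of a chain with transition matrix P.

    Row P[i] lists the probabilities of moving from state i to each state,
    so the next state is drawn using only the current state.
    """
    path = [start]
    for _ in range(n_steps):
        current = path[-1]
        next_state = rng.choices(range(len(P)), weights=P[current])[0]
        path.append(next_state)
    return path

# Hypothetical two-state transition matrix (illustrative values only).
P = [
    [0.5, 0.5],
    [0.2, 0.8],
]

path = simulate(P, start=0, n_steps=10, rng=random.Random(0))
print(path)
```

Passing an explicit `random.Random` instance keeps the run reproducible without touching the global random state.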

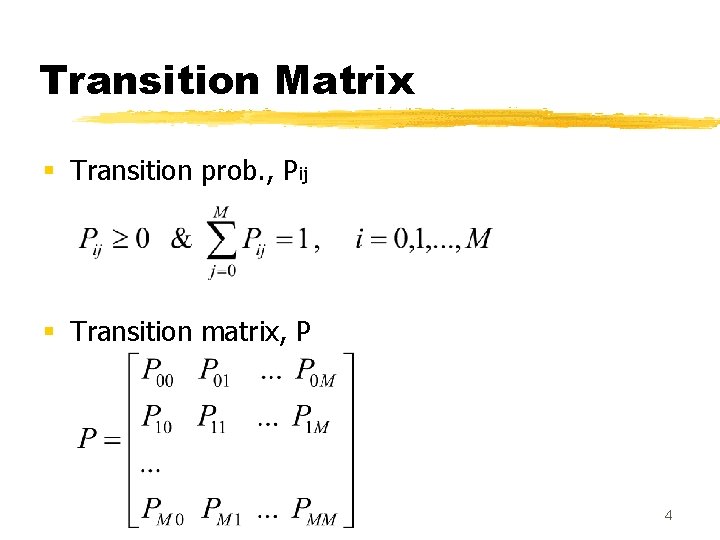

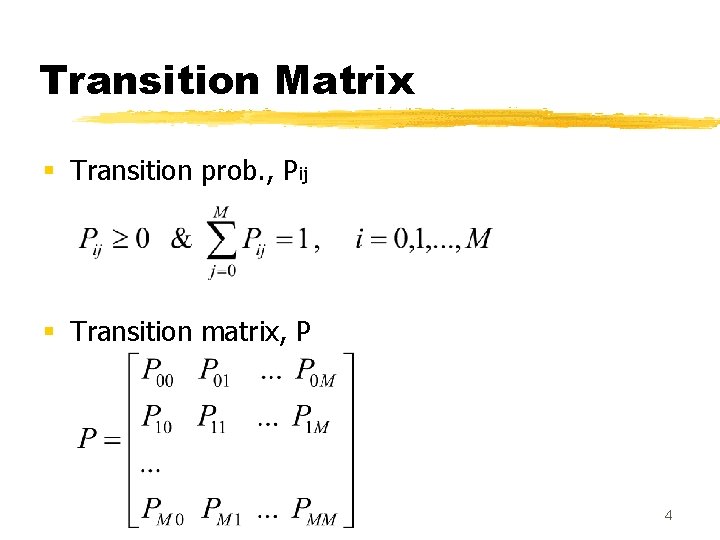

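Transition Matrix

- Transition probabilities $P_{ij}$: $P_{ij} \ge 0$ and $\sum_{j=0}^{M} P_{ij} = 1$ for every state $i$.
- Transition matrix $P$: the $(M+1) \times (M+1)$ matrix whose $(i, j)$ entry is $P_{ij}$.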

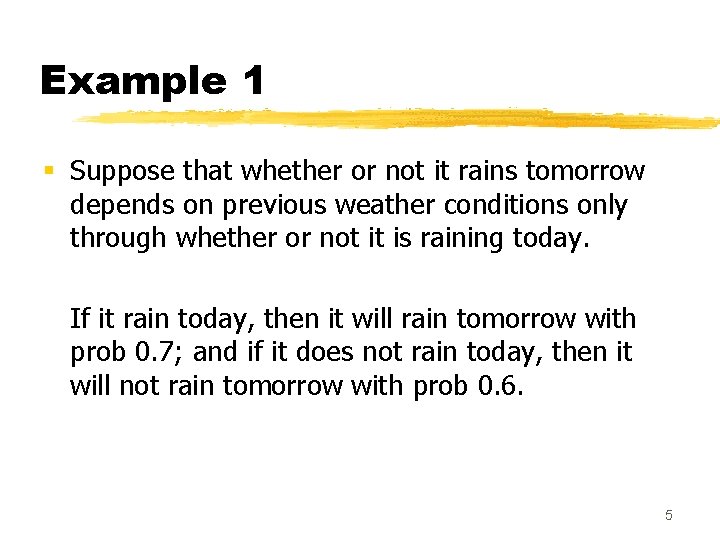

Example 1

- Suppose that whether or not it rains tomorrow depends on previous weather conditions only through whether or not it is raining today. If it rains today, then it will rain tomorrow with probability 0.7; if it does not rain today, then it will not rain tomorrow with probability 0.6.

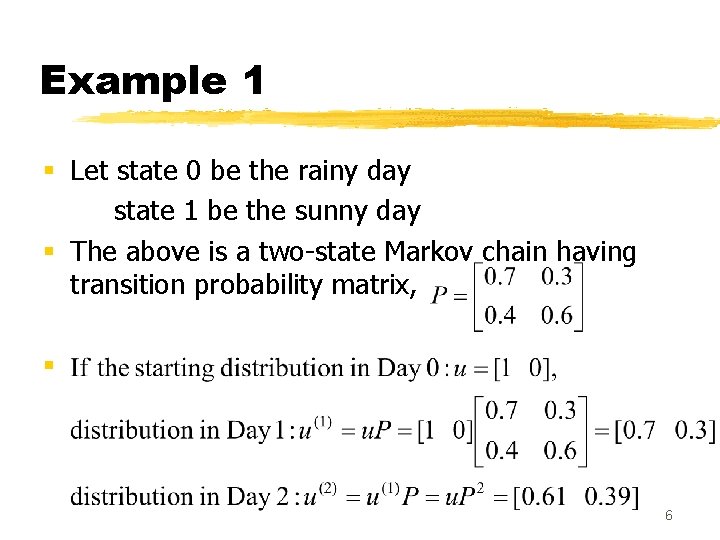

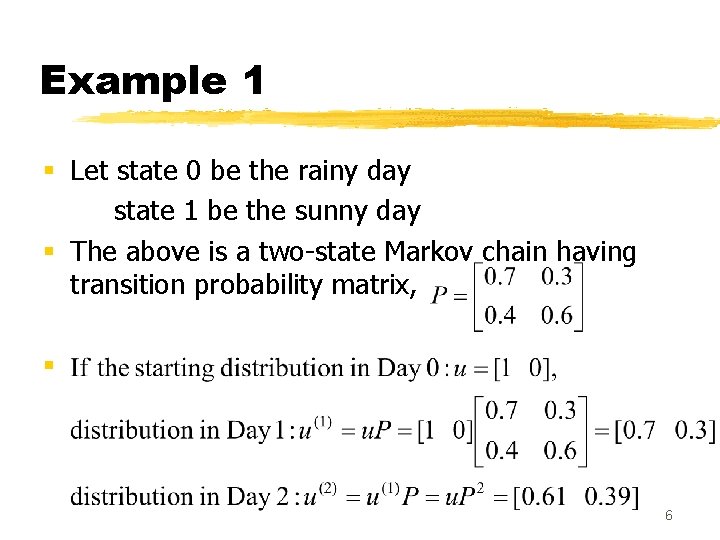

Example 1

- Let state 0 be the rainy day and state 1 be the sunny day.
- The above is a two-state Markov chain having transition probability matrix

  $$P = \begin{pmatrix} 0.7 & 0.3 \\ 0.4 & 0.6 \end{pmatrix}.$$
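As a quick check, the matrix implied by Example 1 (rain follows rain with probability 0.7, no rain follows no rain with probability 0.6) can be written down and validated. This is a minimal sketch; the helper name `is_stochastic` is an assumption introduced here, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction

# States: 0 = rainy day, 1 = sunny day (as in Example 1).
P = [
    [Fraction(7, 10), Fraction(3, 10)],  # rain today -> rain / no rain tomorrow
    [Fraction(4, 10), Fraction(6, 10)],  # no rain today -> rain / no rain tomorrow
]

def is_stochastic(matrix):
    """Return True if every entry is non-negative and every row sums to 1."""
    return all(
        all(p >= 0 for p in row) and sum(row) == 1
        for row in matrix
    )

print(is_stochastic(P))
```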

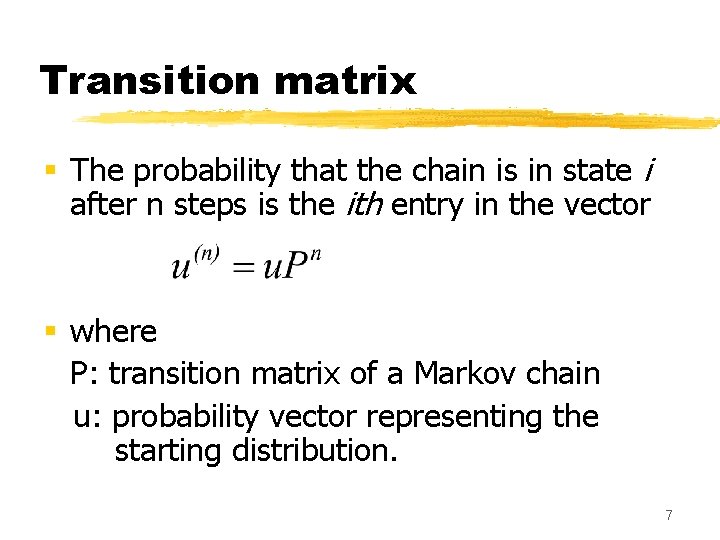

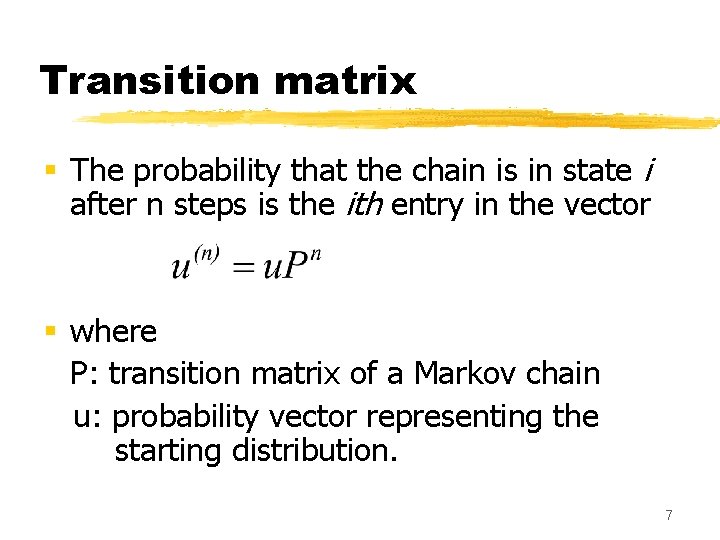

Transition matrix

- The probability that the chain is in state $i$ after $n$ steps is the $i$th entry in the vector

  $$u^{(n)} = u P^n,$$

  where $P$ is the transition matrix of the Markov chain and $u$ is the probability (row) vector representing the starting distribution.
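The $n$-step distribution can be computed by multiplying the starting row vector by the transition matrix $n$ times. The sketch below uses the weather chain of Example 1; the starting vector `u` (rain with certainty on day 0) and the function names are assumptions chosen for illustration.

```python
from fractions import Fraction

def step(u, P):
    """Return the row vector u P (the distribution one step later)."""
    n = len(P)
    return [sum(u[i] * P[i][j] for i in range(n)) for j in range(n)]

def distribution_after(u, P, n_steps):
    """Return u P^n by applying one step n_steps times."""
    for _ in range(n_steps):
        u = step(u, P)
    return u

# Weather chain from Example 1: state 0 = rainy, state 1 = sunny.
P = [
    [Fraction(7, 10), Fraction(3, 10)],
    [Fraction(4, 10), Fraction(6, 10)],
]

u = [Fraction(1), Fraction(0)]  # assumption: it is raining on day 0
u2 = distribution_after(u, P, 2)
print(u2)  # distribution over (rainy, sunny) two days later
```

Repeated vector-matrix products avoid forming $P^n$ explicitly, which is enough when only one starting distribution is of interest.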

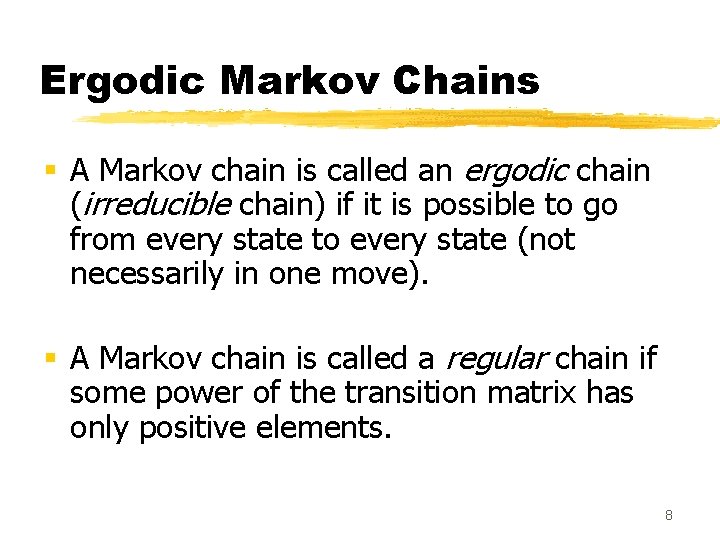

Ergodic Markov Chains

- A Markov chain is called an ergodic chain (irreducible chain) if it is possible to go from every state to every state (not necessarily in one move).
- A Markov chain is called a regular chain if some power of the transition matrix has only positive elements.
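Regularity can be tested directly from the definition by examining successive powers of the transition matrix. The sketch below is one possible check, not a method from the slides: the function names are assumptions, and the cutoff uses Wielandt's bound, under which an $n \times n$ regular matrix already has an all-positive power by exponent $(n-1)^2 + 1$.

```python
def mat_mul(A, B):
    """Multiply two square matrices given as lists of rows."""
    n = len(A)
    return [
        [sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]

def is_regular(P):
    """Return True if some power of P has only positive entries."""
    n = len(P)
    max_power = (n - 1) ** 2 + 1  # Wielandt's bound on the required exponent
    power = P
    for _ in range(max_power):
        if all(x > 0 for row in power for x in row):
            return True
        power = mat_mul(power, P)
    return False

weather = [[0.7, 0.3], [0.4, 0.6]]  # chain from Example 1
flip = [[0, 1], [1, 0]]             # always switches state

print(is_regular(weather))
print(is_regular(flip))
```

The `flip` chain is ergodic, since each state can be reached from the other, but its powers alternate between `flip` and the identity, so it is not regular.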

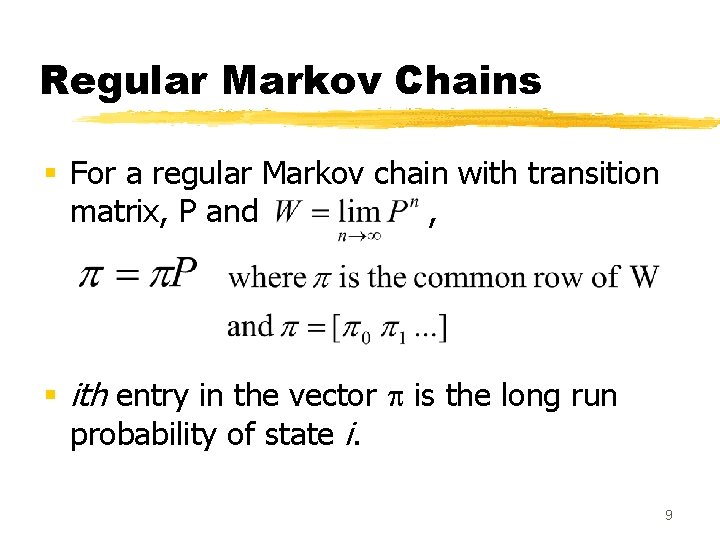

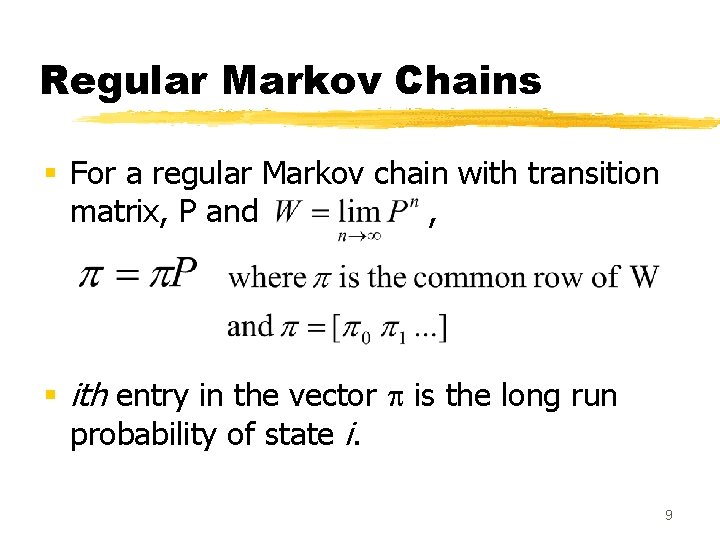

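Regular Markov Chains

- For a regular Markov chain with transition matrix $P$, $P^n \to W$ as $n \to \infty$, where every row of $W$ is the same probability vector $w$, and $w$ satisfies $wP = w$ with $\sum_i w_i = 1$.
- The $i$th entry in the vector $w$ is the long-run probability of state $i$.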

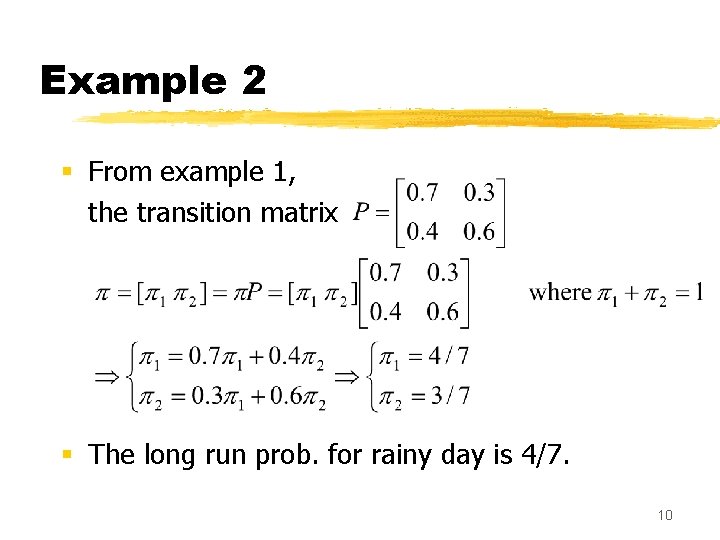

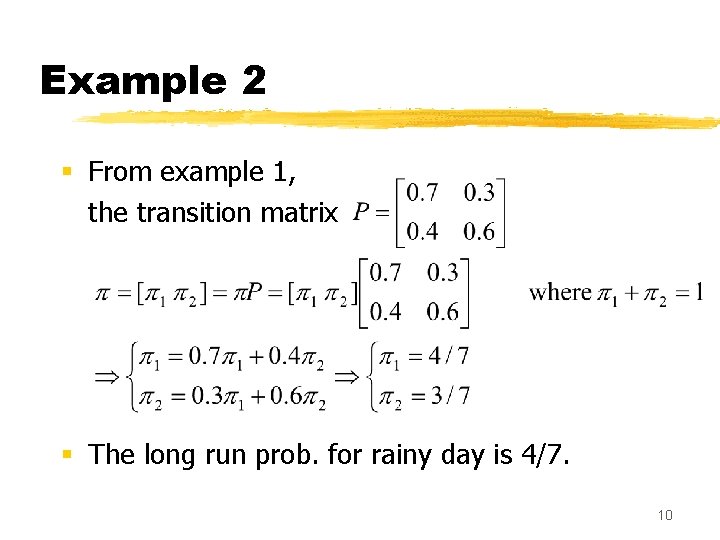

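Example 2

- From Example 1, the transition matrix is

  $$P = \begin{pmatrix} 0.7 & 0.3 \\ 0.4 & 0.6 \end{pmatrix}.$$

- Solving $wP = w$ with $w_0 + w_1 = 1$ gives $0.3\,w_0 = 0.4\,w_1$, so $w = (4/7,\ 3/7)$.
- The long-run probability of a rainy day is 4/7.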
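The value 4/7 can be reproduced with a short calculation. For a two-state chain, balancing the flow between the states gives $w_0 P_{01} = w_1 P_{10}$, which together with $w_0 + w_1 = 1$ determines the long-run probabilities. The function name below is an assumption, and the closed form applies only to two-state chains.

```python
from fractions import Fraction

def stationary_two_state(P):
    """Long-run probabilities (w0, w1) of a two-state chain.

    Uses w0 * P[0][1] == w1 * P[1][0] together with w0 + w1 == 1.
    """
    a = P[0][1]  # probability of leaving state 0
    b = P[1][0]  # probability of leaving state 1
    if a + b == 0:
        raise ValueError("both states are absorbing; no unique answer")
    return [b / (a + b), a / (a + b)]

# Weather chain from Example 1: state 0 = rainy, state 1 = sunny.
P = [
    [Fraction(7, 10), Fraction(3, 10)],
    [Fraction(4, 10), Fraction(6, 10)],
]

w = stationary_two_state(P)
print(w[0])  # long-run probability of a rainy day

# Check the fixed-point property w P = w.
wP = [sum(w[i] * P[i][j] for i in range(2)) for j in range(2)]
print(wP == w)
```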

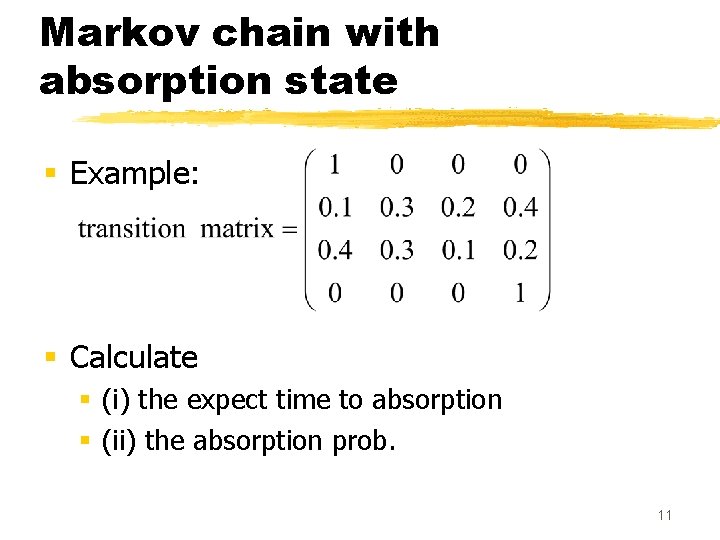

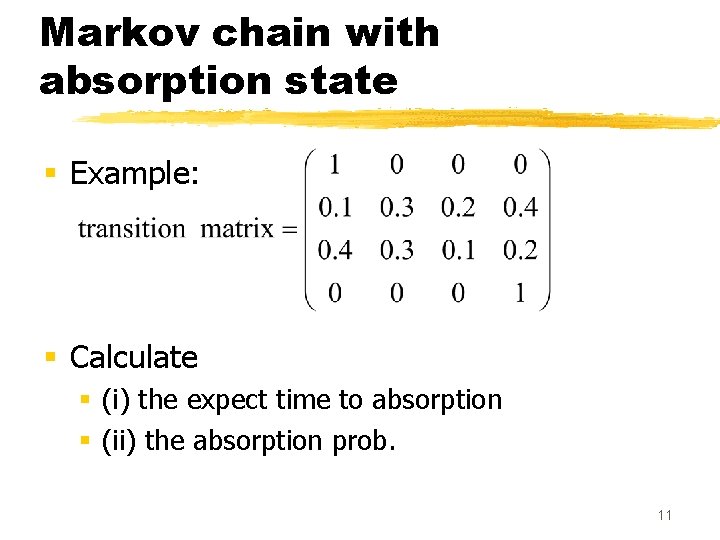

Markov chain with absorption state

- Example:
- Calculate
  - (i) the expected time to absorption
  - (ii) the absorption probabilities

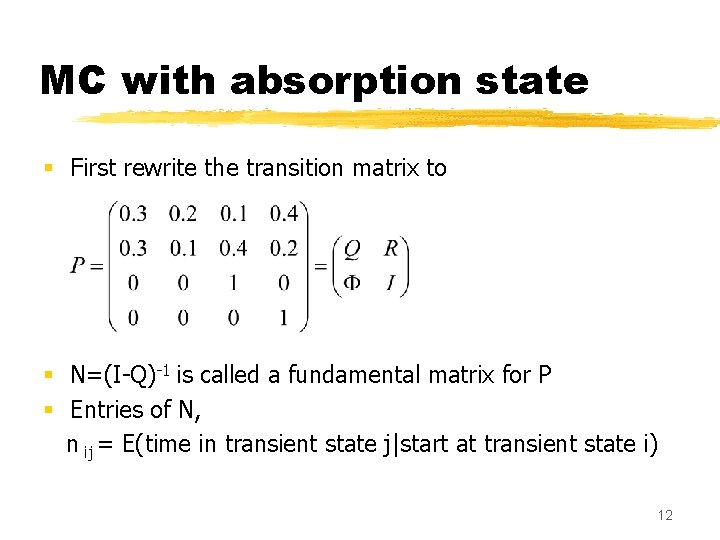

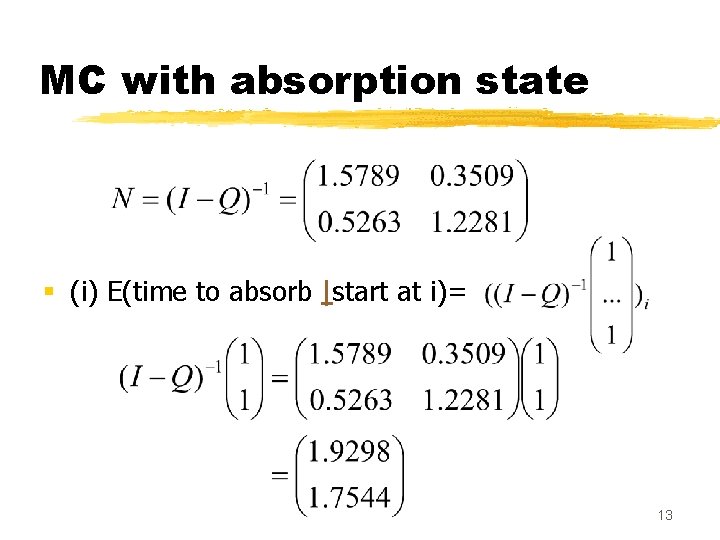

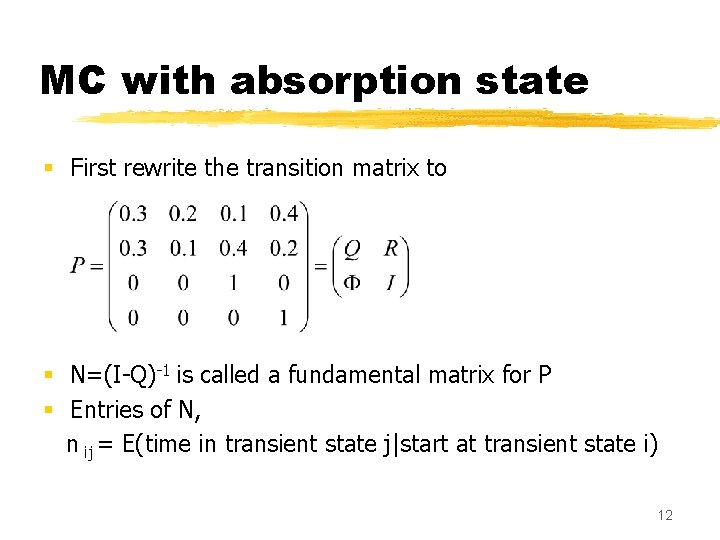

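MC with absorption state

- First rewrite the transition matrix in canonical form, with the transient states listed first:

  $$P = \begin{pmatrix} Q & R \\ 0 & I \end{pmatrix},$$

  where $Q$ holds the transient-to-transient probabilities and $R$ the transient-to-absorbing probabilities.
- $N = (I - Q)^{-1}$ is called a fundamental matrix for $P$.
- Entries of $N$: $n_{ij}$ = E(time in transient state $j$ | start at transient state $i$).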

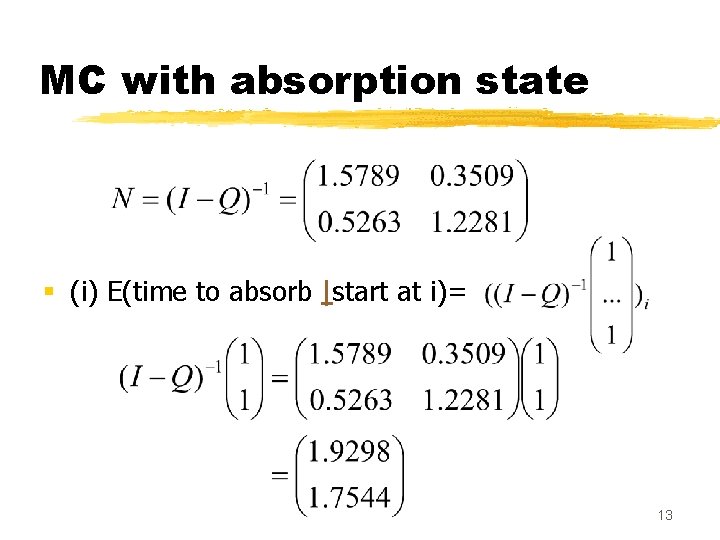

MC with absorption state

- (i) E(time to absorb | start at $i$) $= \sum_j n_{ij}$, the sum of row $i$ of $N$, where $j$ runs over the transient states.

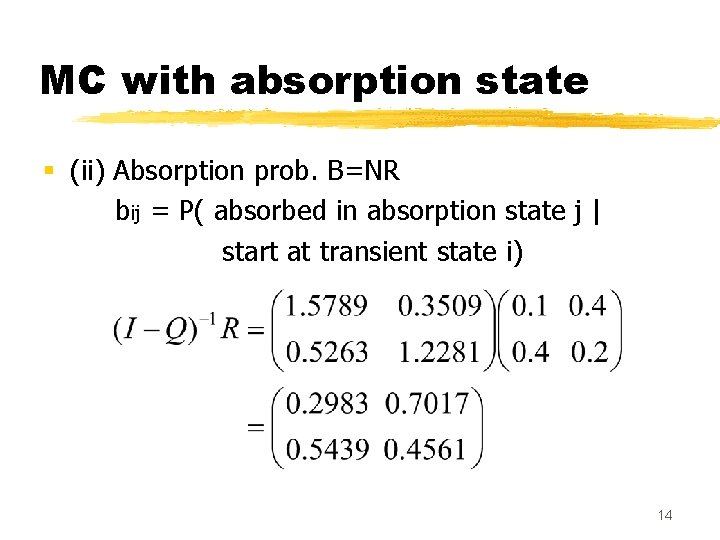

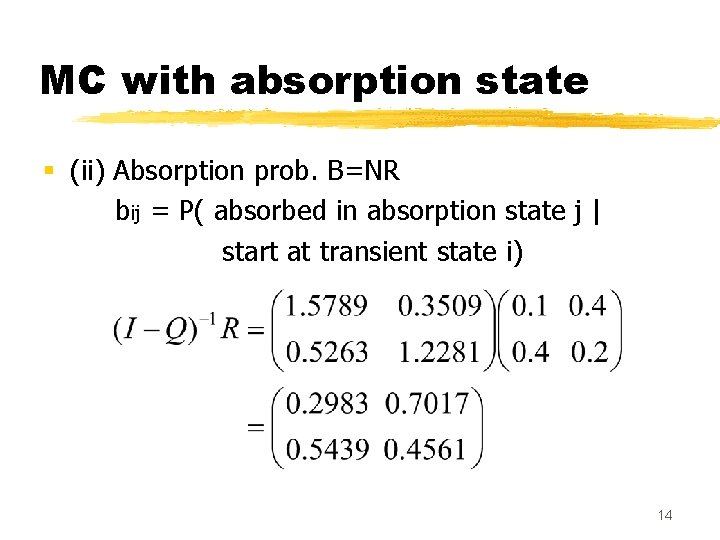

MC with absorption state

- (ii) Absorption probabilities: $B = NR$, where $b_{ij}$ = P(absorbed in absorption state $j$ | start at transient state $i$).
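Both quantities can be computed from the blocks $Q$ (transient to transient) and $R$ (transient to absorbing). The sketch below does not use the example from the slides; it assumes a hypothetical symmetric random walk on states 0 to 4, with 0 and 4 absorbing and a step of one unit left or right with probability 1/2 each. All function names are assumptions, and exact fractions keep the arithmetic free of rounding.

```python
from fractions import Fraction

def identity(n):
    """n x n identity matrix with Fraction entries."""
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]

def mat_mul(A, B):
    """Product of matrix A (m x k) and matrix B (k x p)."""
    return [
        [sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
        for i in range(len(A))
    ]

def inverse(A):
    """Invert a square matrix by Gauss-Jordan elimination."""
    n = len(A)
    aug = [list(row) + id_row for row, id_row in zip(A, identity(n))]
    for col in range(n):
        # Find a row with a non-zero pivot and swap it into place.
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so that the pivot equals 1.
        pv = aug[col][col]
        aug[col] = [x / pv for x in aug[col]]
        # Clear this column in every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

half = Fraction(1, 2)
# Hypothetical chain: transient states 1, 2, 3; absorbing states 0 and 4.
Q = [[0, half, 0], [half, 0, half], [0, half, 0]]  # transient -> transient
R = [[half, 0], [0, 0], [0, half]]                 # transient -> absorbing (0, 4)

I = identity(len(Q))
I_minus_Q = [[I[i][j] - Q[i][j] for j in range(len(Q))] for i in range(len(Q))]

N = inverse(I_minus_Q)       # fundamental matrix N = (I - Q)^(-1)
t = [sum(row) for row in N]  # (i) expected time to absorption per start state
B = mat_mul(N, R)            # (ii) absorption probabilities B = N R

print(t)
print(B)
```

Each row of `B` sums to 1, reflecting that absorption is certain from every transient state of this walk.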