Trustless Grid Computing in ConCert: Progress Report

- Slides: 55

Trustless Grid Computing in ConCert (Progress Report) Robert Harper, Carnegie Mellon University

Acknowledgements • Co-PIs: Karl Crary, Frank Pfenning, Peter Lee. • Support: NSF ITR program. • Students (who do the real work): Chang, Delap, Dreyer, Kliger, Magill, Moody, Murphy, Petersen, Sarkar, Vanderwaart, Watkins. • Thanks to the FGC organizers for the invitation!

Grid Computing • “The network is a computer.” – Exploit idle resources on the network. – Many ad hoc grids. • SETI@HOME • FOLDING@HOME • But what is a general grid model? – Trust model, programming model, participation model?

Application Model • What is the (a?) grid computer? – Parallelism? – Dependencies? – Sharing resources? – Failures? • Centralized vs. distributed. – Bottlenecks (e.g., SETI traffic at UCB). – Reliability, robustness.

Application Model • Most grid apps are massively parallel. – Depth = 1, no dependencies. – Ray tracing, GIMPS, SETI. • Is a grid useful for depth > 1? – Game-tree search. – Theorem proving. • Is parallelism the only benefit? – What about data locality?

Host Model • Active intervention required. – Must download code, apply upgrades. – Must decide which grids to participate in. • Motivation to participate? – At scale, largely altruism, coolness. – Ad hoc grids on an intranet. – Economic models? (Cf. Lillibridge et al.)

Trust Relationships • Hosts trust applications. – Denial-of-service attacks. – Privacy/secrecy attacks. – Accidental misbehavior (e.g., SETI). • Applications trust hosts. – Spoofed answers. – Collusion among participants. • Can we minimize these?

The ConCert Approach • One computer, many keyboards. – Decentralized scheduling. – Emphasis on code mobility. • Policy-based participation. – Declarative statement of participation criteria. – Applications must prove compliance. • Dependency-based scheduling. – Arbitrary depth. – And/or dependencies. – Inspired by CILK/NOW.

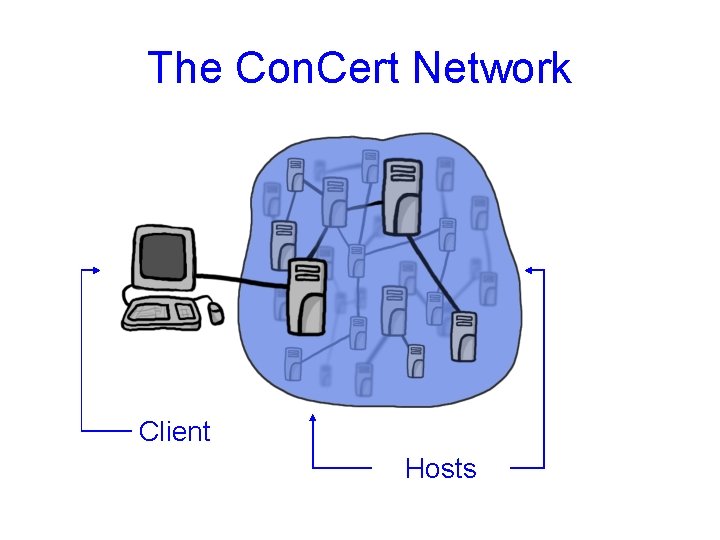

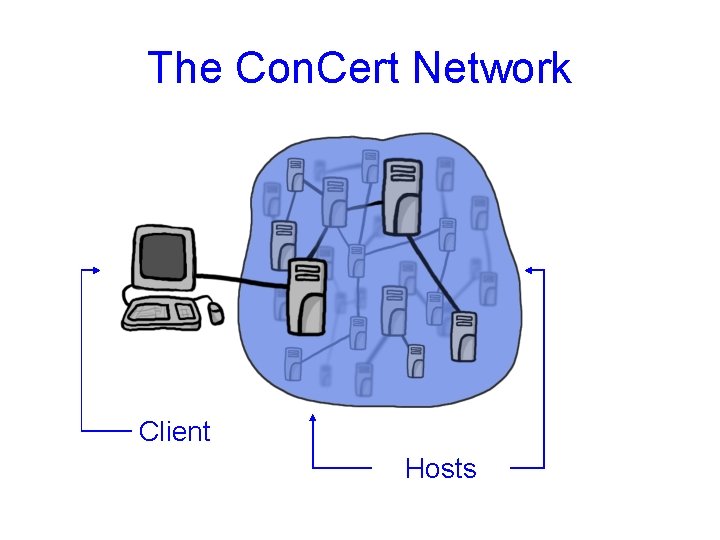

The ConCert Network (figure: clients and hosts)

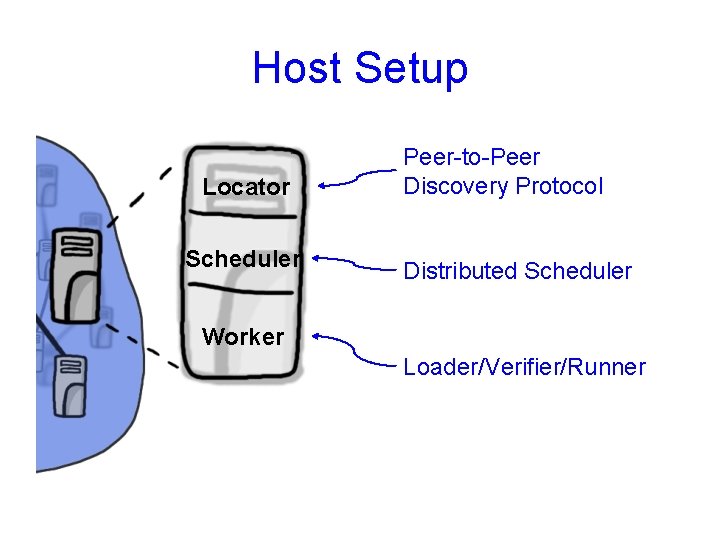

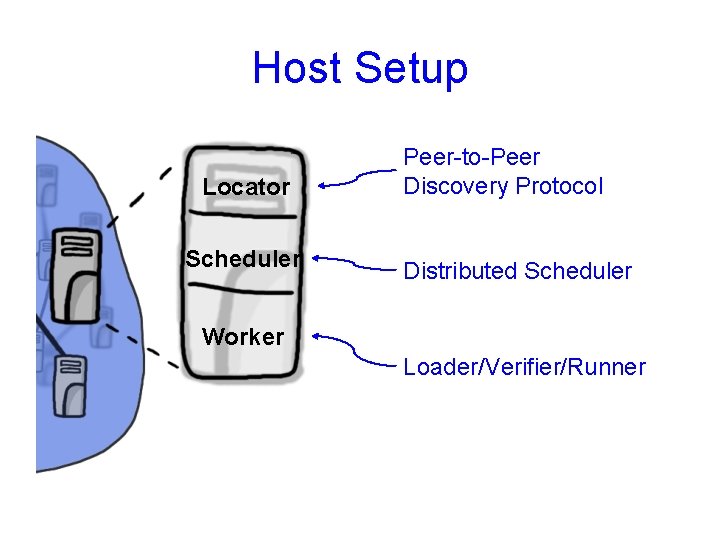

Host Setup (figure): Locator (peer-to-peer discovery protocol) • Scheduler (distributed scheduler) • Worker (loader/verifier/runner)

Scheduler • Maintain ready and waiting queues. – Ready queue: available for “stealing”. – Wait queue: awaiting satisfying assignment. • Work-stealing model. – Who has work to do? – Grab work, compute result, deliver to owner. • Dependencies. – Supports depth > 1 parallelism. – Don’t care and don’t know parallelism.
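A minimal sketch of the ready/wait queues and work stealing described above. It assumes cords are named by string ids and, for brevity, that dependencies are plain conjunctions (the general positive boolean formulas appear with the cord structure); the `Scheduler` class and its methods are hypothetical, not the ConCert implementation.

```python
from collections import deque


class Scheduler:
    """Hypothetical per-host scheduler: a ready queue that peers may steal
    from, and a wait queue of cords whose dependencies are not yet met."""

    def __init__(self, name):
        self.name = name
        self.ready = deque()   # cords available for stealing
        self.waiting = {}      # cord id -> set of unmet dependency ids

    def submit(self, cord_id, deps=()):
        unmet = set(deps)
        if unmet:
            self.waiting[cord_id] = unmet
        else:
            self.ready.append(cord_id)

    def deliver(self, dep_id):
        # A result arrived: promote every waiting cord whose dependencies are now all met.
        for cord_id in list(self.waiting):
            self.waiting[cord_id].discard(dep_id)
            if not self.waiting[cord_id]:
                del self.waiting[cord_id]
                self.ready.append(cord_id)

    def steal(self, neighbors):
        # Prefer local work, then take the oldest ready cord from the first neighbor that has one.
        for sched in [self, *neighbors]:
            if sched.ready:
                return sched.name, sched.ready.popleft()
        return None
```

This mirrors the “Moving Cords Around” walkthrough: an idle peer steals F, and H moves from the wait queue to the ready queue only once both F and G have delivered.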

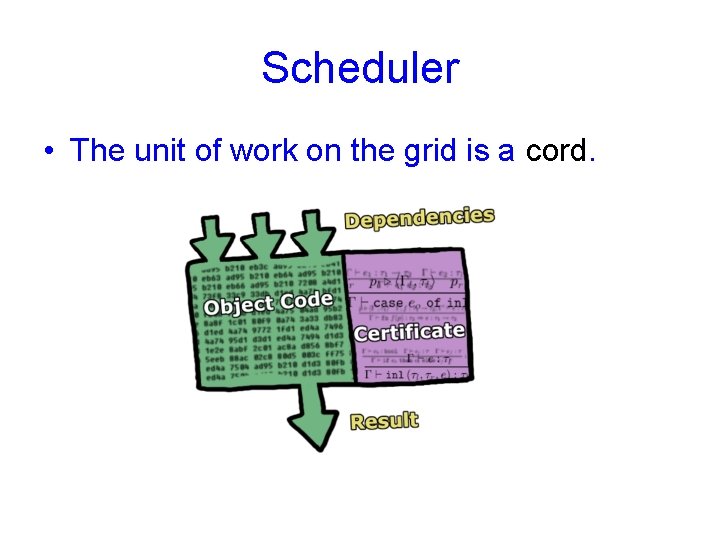

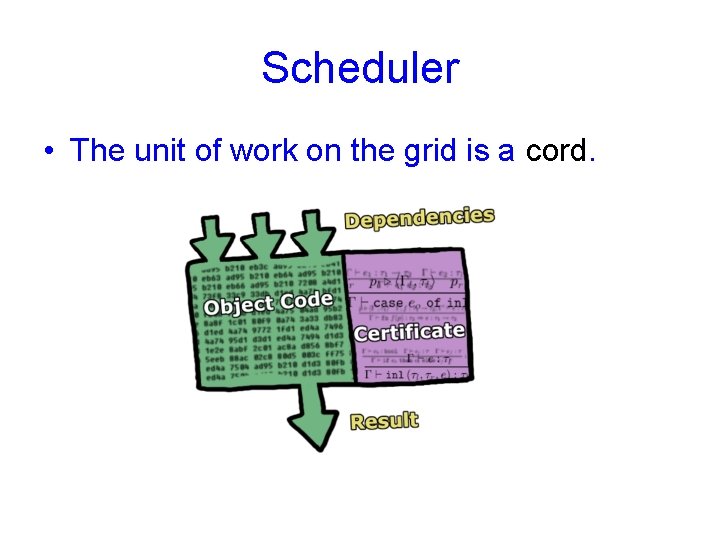

Scheduler • The unit of work on the grid is a cord.

Scheduler • Cord structure: – Code: cached using MD5 fingerprints. – Certificate of compliance: (more later). – Dependencies: positive boolean formula. • Assumptions: – Idempotent: can always be re-run. – Non-blocking: runs to completion (but may create more cords, often as continuations). – Communication only via dependencies. Satisfying assignment passed on activation.
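A sketch of the cord structure above, assuming a hypothetical tuple encoding of positive boolean formulas (atoms, `and`, `or`, no negation). `witness` returns a satisfying assignment built from delivered results, or `None` while the cord must keep waiting; the `Cord` fields and names are illustrative only.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical encoding of positive boolean formulas over cord ids:
#   ("dep", cord_id) | ("and", [f, ...]) | ("or", [f, ...])


def witness(formula, results):
    """Return a dict of delivered results satisfying `formula`, or None if not yet satisfied."""
    tag = formula[0]
    if tag == "dep":
        cid = formula[1]
        return {cid: results[cid]} if cid in results else None
    if tag == "and":
        combined = {}
        for sub in formula[1]:
            w = witness(sub, results)
            if w is None:
                return None        # some conjunct is still outstanding
            combined.update(w)
        return combined
    if tag == "or":
        for sub in formula[1]:
            w = witness(sub, results)
            if w is not None:
                return w           # first satisfied disjunct suffices
        return None
    raise ValueError(f"unknown formula tag: {tag}")


@dataclass
class Cord:
    code: bytes          # object code, shipped once and cached by fingerprint
    certificate: bytes   # certificate of compliance (opaque in this sketch)
    deps: tuple          # positive boolean formula over cord ids
    args: str = ""

    @property
    def fingerprint(self):
        return hashlib.md5(self.code).hexdigest()
```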

Worker • Steal work from (self or) neighbor. • Obtain cord from host. – Typically arguments + dependencies. – Code shipped at most once. • Verify certificate of compliance. • Load and execute as a DLL. – Currently combined with verification. – Should verify at most once (cache result). • Deliver result to owner.
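The worker steps above can be sketched as follows, assuming hypothetical callbacks for fetching code, verifying a certificate, and delivering a result. The two caches capture “code shipped at most once” and “verify at most once”; a `load` function stands in for loading the DLL.

```python
import hashlib


class Worker:
    """Hypothetical worker: fetch code at most once per fingerprint,
    verify each fingerprint at most once, run, and deliver the result."""

    def __init__(self, fetch_code, verify, deliver):
        self.fetch_code = fetch_code  # fingerprint -> bytes (network fetch)
        self.verify = verify          # (code, certificate) -> bool
        self.deliver = deliver        # (owner, cord_id, result) -> None
        self.code_cache = {}          # fingerprint -> code
        self.verified = {}            # fingerprint -> cached verification outcome

    def run(self, owner, cord_id, fingerprint, certificate, args, witness, load):
        if fingerprint not in self.code_cache:
            code = self.fetch_code(fingerprint)
            if hashlib.md5(code).hexdigest() != fingerprint:
                raise ValueError("code does not match its fingerprint")
            self.code_cache[fingerprint] = code
        code = self.code_cache[fingerprint]
        if fingerprint not in self.verified:
            self.verified[fingerprint] = self.verify(code, certificate)
        if not self.verified[fingerprint]:
            raise PermissionError("certificate of compliance rejected")
        entry = load(code)  # stands in for loading the verified DLL
        self.deliver(owner, cord_id, entry(args, witness))
```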

Control • Client. – Submit a job to the grid. – “One per keyboard.” • Monitor. – Web server interface. – Displays cord status. – [Change policy.]

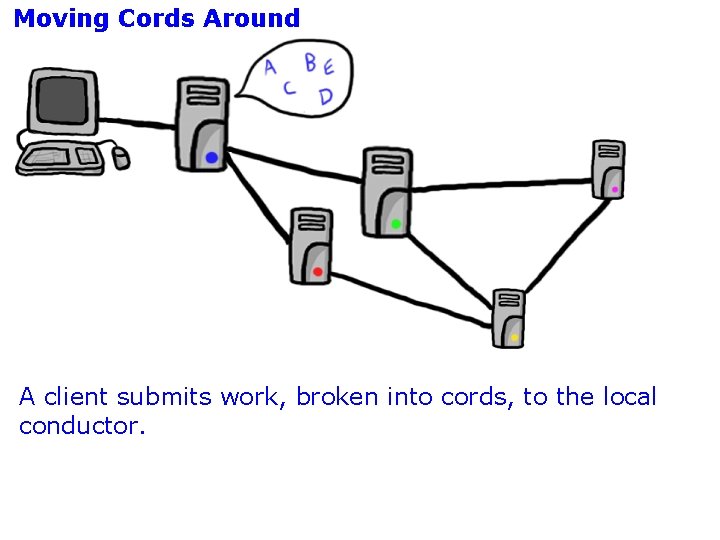

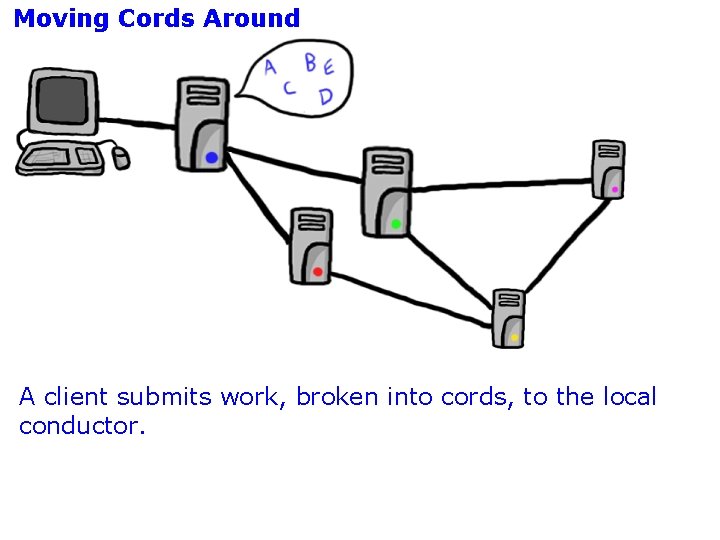

Moving Cords Around A client submits work, broken into cords, to the local conductor.

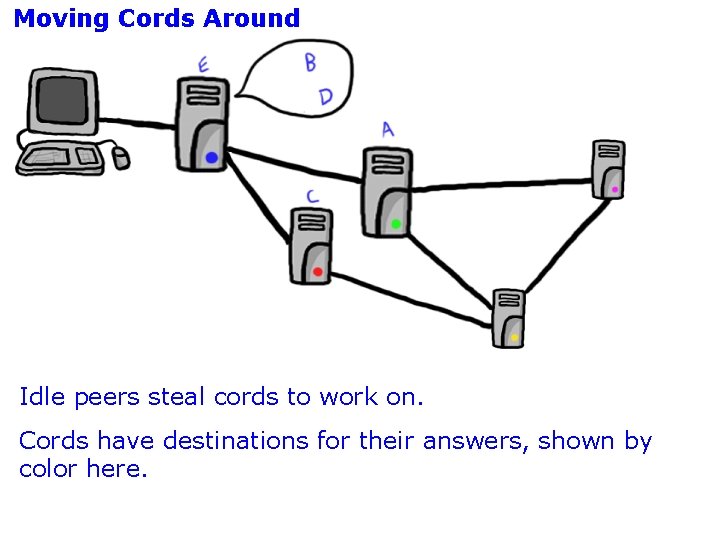

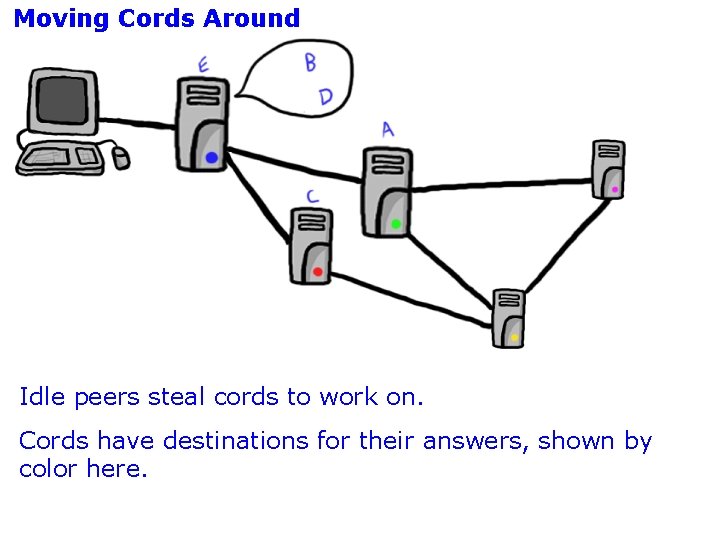

Moving Cords Around Idle peers steal cords to work on. Cords have destinations for their answers, shown by color here.

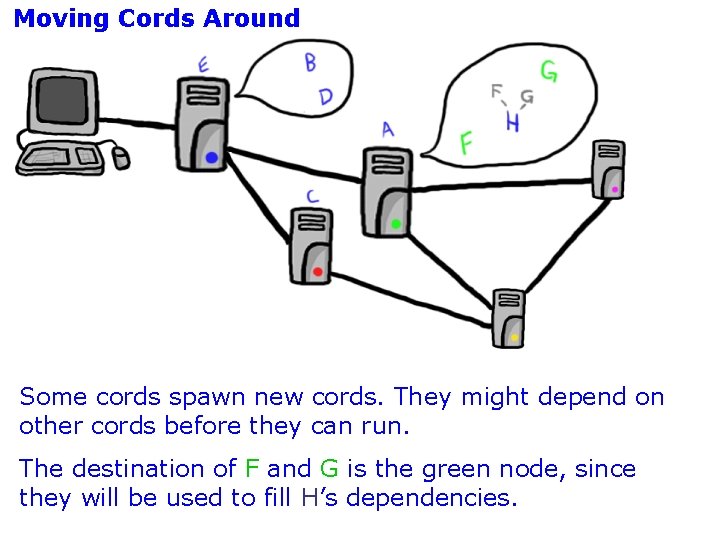

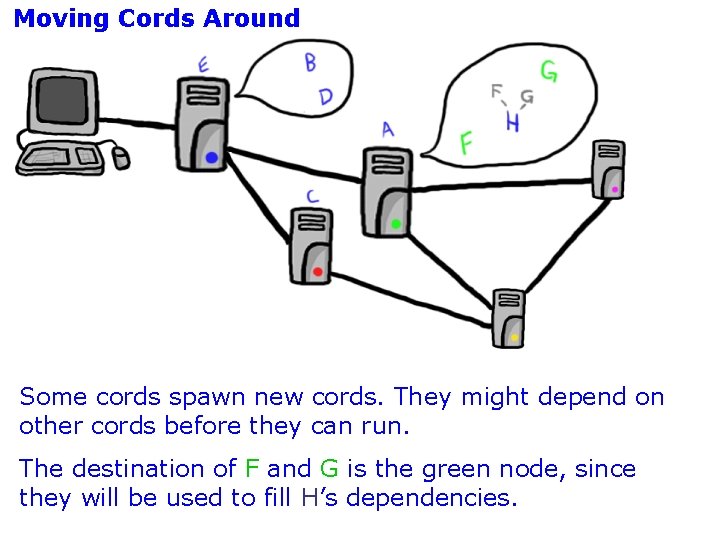

Moving Cords Around Some cords spawn new cords. They might depend on other cords before they can run. The destination of F and G is the green node, since they will be used to fill H’s dependencies.

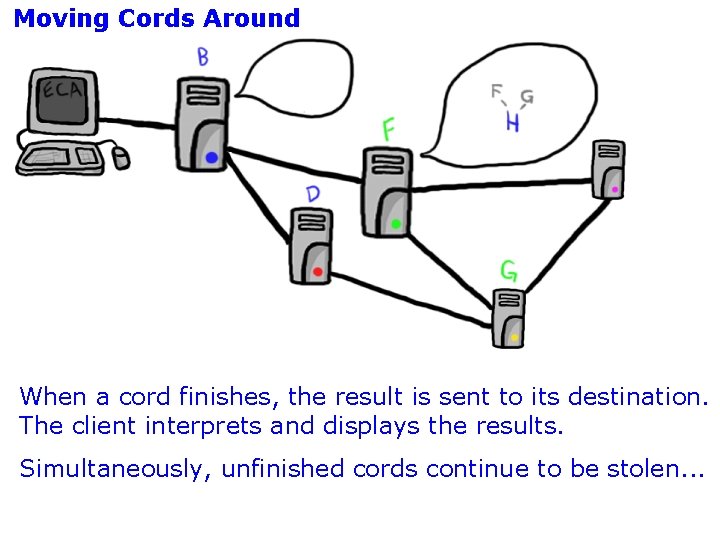

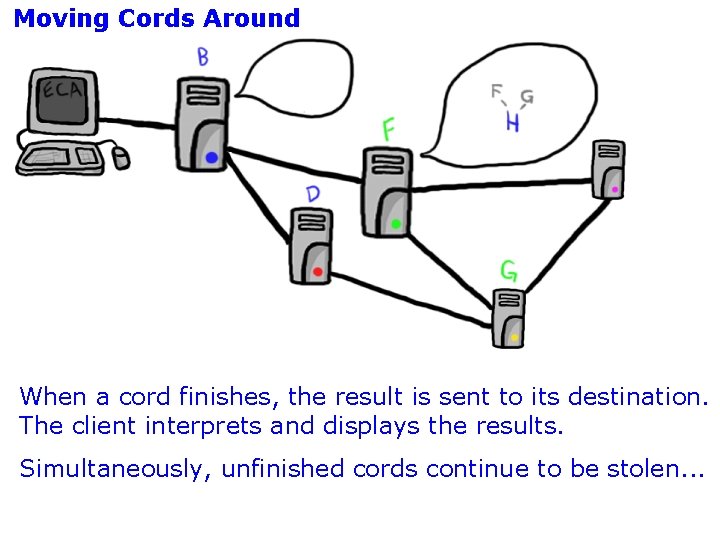

Moving Cords Around When a cord finishes, the result is sent to its destination. The client interprets and displays the results. Simultaneously, unfinished cords continue to be stolen...

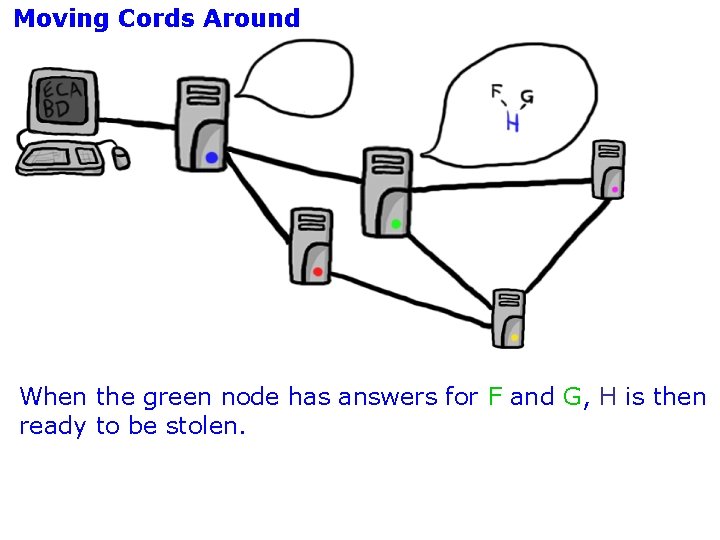

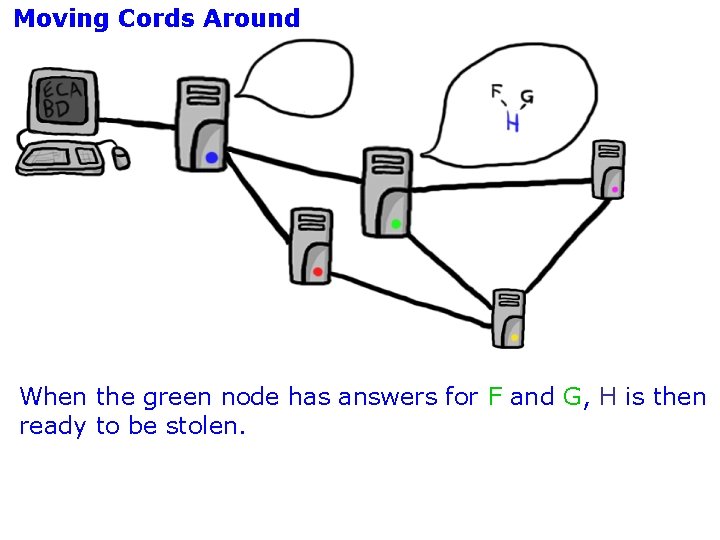

Moving Cords Around When the green node has answers for F and G, H is then ready to be stolen.

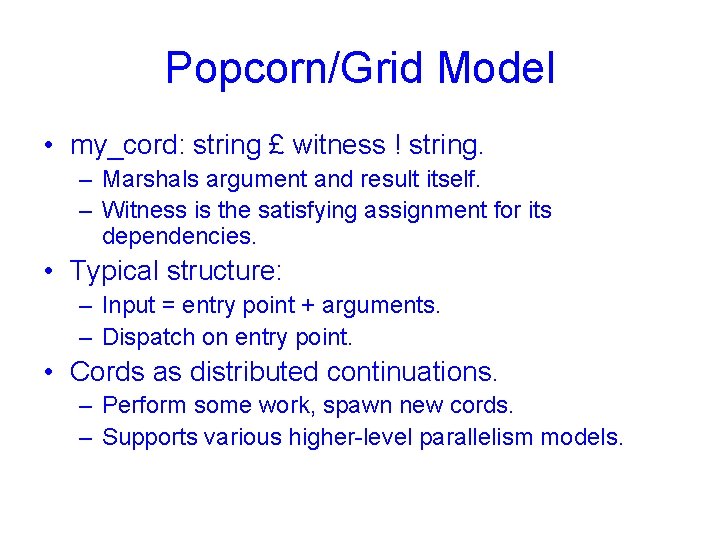

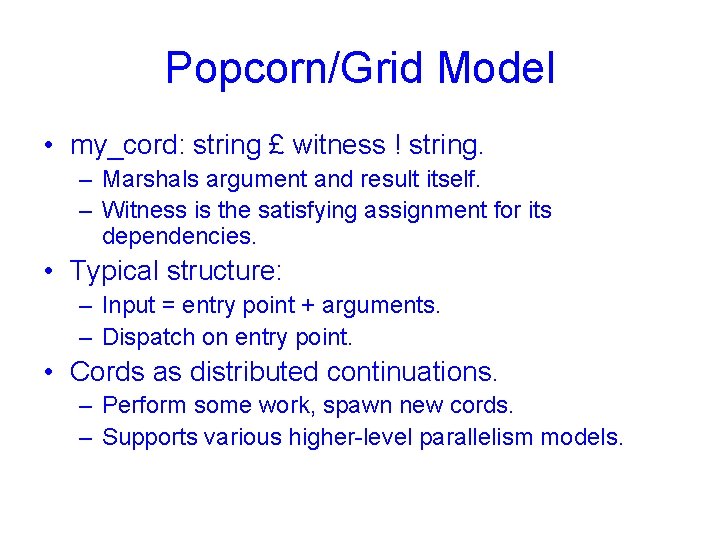

Popcorn/Grid Model • my_cord : string × witness → string. – Marshals argument and result itself. – Witness is the satisfying assignment for its dependencies. • Typical structure: – Input = entry point + arguments. – Dispatch on entry point. • Cords as distributed continuations. – Perform some work, spawn new cords. – Supports various higher-level parallelism models.
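A Python rendering of the string × witness → string shape, assuming JSON as the cord's own marshalling format and two hypothetical entry points (`square`, `sum_deps`). It only illustrates “input = entry point + arguments, dispatch on entry point”; it is not Popcorn/Grid code.

```python
import json


def my_cord(arg: str, witness: dict) -> str:
    """Hypothetical cord: unmarshals its own argument, dispatches on the
    entry point, and marshals its own result back to a string."""
    msg = json.loads(arg)                       # input = entry point + arguments
    entry, params = msg["entry"], msg["args"]
    if entry == "square":
        result = params[0] * params[0]
    elif entry == "sum_deps":
        # Combine the (marshalled) answers delivered by the cords we depended on.
        result = sum(json.loads(v) for v in witness.values())
    else:
        raise ValueError(f"unknown entry point: {entry}")
    return json.dumps(result)
```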

ML/Grid Model • One program for client and its cords. – Compiler separates client from cords. • Compiler handles marshalling. • Run-time checks enforce distinctions (more later). – Cord cannot perform I/O. – Client cannot submit itself as a cord. • Compiles to TAL/Grid.

ML/Grid Model • Primitives: – spawn : (unit → α) → α task – sync : α task → α – relax : α task list → α × α task list • Must be provided as primitives. – Requires access to representations. • Further higher-level libraries. – E.g., parallelism models.
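To make the intended behavior of the three primitives concrete, here is a local, thread-based stand-in using Python's `concurrent.futures`. On the grid these would create and wait on cords; the pool, its size, and the choice of which finished task `relax` returns are assumptions of this sketch.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait

_pool = ThreadPoolExecutor(max_workers=4)  # local stand-in for the grid


def spawn(thunk):
    """spawn : (unit -> 'a) -> 'a task"""
    return _pool.submit(thunk)


def sync(task):
    """sync : 'a task -> 'a   (blocks until the task's result is available)"""
    return task.result()


def relax(tasks):
    """relax : 'a task list -> 'a * 'a task list
    Returns the result of some finished task plus the tasks still outstanding."""
    done, _ = wait(tasks, return_when=FIRST_COMPLETED)
    first = next(t for t in tasks if t in done)
    return first.result(), [t for t in tasks if t is not first]
```

`relax` is what makes or-parallelism convenient: a game-tree search can take whichever subtree answer arrives first and decide whether the rest are still worth waiting for.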

Examples • GML ray-tracer (ICFP 01 Contest). – Depth = 1. – Written in Popcorn/Grid, compiles to TALx86/Grid. • Chess player. – Depth > 1, and-or dependencies. – Written in Popcorn/Grid, compiles to TALx86/Grid. • Theorem prover for MLL. – Depth > 1, and-or dependencies. – Written in SML, runs on simulator. – Being ported to ML/Grid.

Some Problems • Failures. – Fail-stop model is easily supported. – Demonic failures require result certification. • Abandoning cords. – Or-dependencies are satisfied by first cord to deliver answer. – Parent must be prepared to receive result long after it is no longer needed. • Sharing results. – Grid-wide cache of answers?
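The “abandoning cords” problem can be handled on the parent side by a join that accepts the first answer for an or-dependency and silently absorbs late ones. This is a minimal sketch with a hypothetical `OrJoin` class; because cords are idempotent, duplicate deliveries from re-runs are absorbed the same way.

```python
class OrJoin:
    """Hypothetical parent-side join for an or-dependency: the first delivered
    answer wins; later answers (possibly long after) are accepted and ignored."""

    def __init__(self, alternatives):
        self.alternatives = set(alternatives)
        self.answer = None
        self.late = 0

    def deliver(self, cord_id, result):
        if cord_id not in self.alternatives:
            raise KeyError(cord_id)
        if self.answer is None:
            self.answer = (cord_id, result)
            return True   # this delivery satisfied the dependency
        self.late += 1    # abandoned (or re-run) cord finished anyway; drop its result
        return False
```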

Result Certification • Main idea: make host prove validity of answer. – Avoid need for application to trust hosts. • Some applications admit native certification. – For the theorem prover: the proof. – For factoring: the factors. • Are there general result certification methods? – Work-stealing model precludes random allocation / redundancy methods (SETI, Bayanihan). – Centralized methods are not robust or scalable.
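Factoring shows why native certification is attractive: checking a claimed factorization is one multiplication, far cheaper than finding it. A minimal sketch; the function name is hypothetical, and it only checks that the factors are nontrivial and multiply back to n, not that they are prime.

```python
from math import prod


def check_factoring(n, factors):
    """Accept a host's answer only if every factor is nontrivial and the
    factors multiply back to n."""
    return all(1 < f < n for f in factors) and prod(factors) == n
```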

Result Certification • A crazy idea: use the PCP theorem. – Use interactive dialog to spot-check a proof. • Host proves that it ran given code on given data. – Execution trace is a proof that it did. – But traces can be huge! • Engage in a dialog with O(1) rounds to check proof with high probability. – Avoids need to transmit trace itself. – But the representation is enormous!
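The reason spot-checking can use O(1) rounds is a short calculation. Assume, for illustration only, that a cheating host's proof has at least a fraction ε of incorrect positions and the checker tests k positions chosen independently and uniformly at random:

```latex
\Pr[\text{bad proof passes}] \;\le\; (1-\varepsilon)^{k} \;\le\; e^{-\varepsilon k},
\qquad
k = \left\lceil \frac{\ln(1/\delta)}{\varepsilon} \right\rceil
\;\Longrightarrow\;
\Pr[\text{bad proof passes}] \le \delta .
```

The bound is independent of the trace length. The catch is the assumption that errors occupy a constant fraction of the proof: a single bad step in a raw trace would almost always be missed. PCP-style encodings guarantee that spreading by re-encoding the trace, which is where the enormous representation comes from.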

Two Foundational Questions • What is a type system for a GPL? – Enforce mobility constraints. – Clean type system to support development, compilation, certification. • What policies can we support? – How to state policies? – How to prove compliance? – How to support multiple policies?

A Type System for GPL • Main idea: modalities for mobility. – Cf. related ideas by Cardelli, Gordon, et al. – Cf. recent work by Walker. – Here: Curry-Howard applied to modal logic. • Necessity (□A): a computation of A anywhere. – Classifies mobile code of type A. – Enforces marshalling and access restrictions. • Possibility (◇A): a computation of A somewhere. – Classifies remote code of type A. – Ensures that access is limited to remote values.

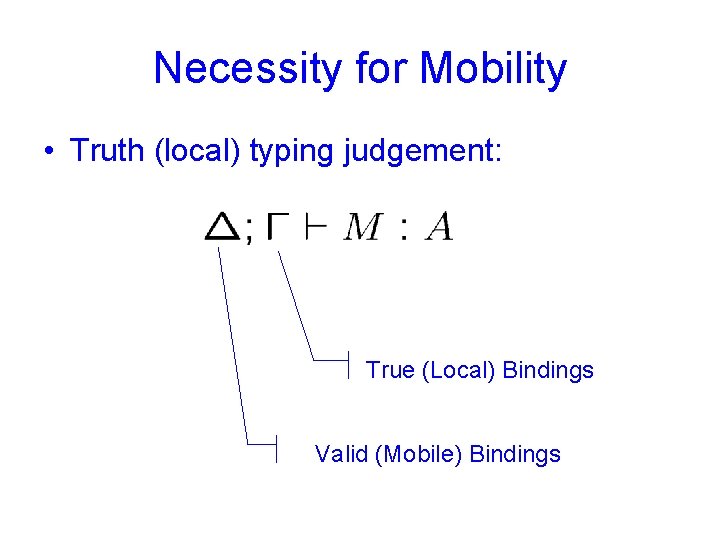

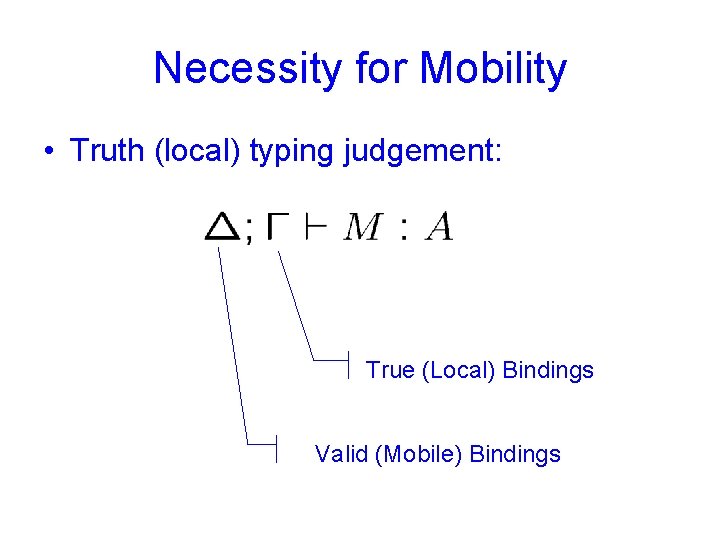

Necessity for Mobility • Truth (local) typing judgement (figure labels: true (local) bindings; valid (mobile) bindings):
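The judgement in the figure can plausibly be written in the judgmental-S4 notation of Pfenning and Davies; the symbols below are an assumption, not a transcription of the slide. Δ holds valid (mobile) hypotheses u::B and Γ holds true (local) hypotheses x:B, and each context has its own hypothesis rule:

```latex
\underbrace{u_1{::}B_1,\ldots,u_n{::}B_n}_{\Delta\ \text{valid (mobile)}}\;;\;
\underbrace{x_1{:}C_1,\ldots,x_m{:}C_m}_{\Gamma\ \text{true (local)}}
\;\vdash\; M : A
\qquad
\frac{x{:}A \in \Gamma}{\Delta;\Gamma \vdash x : A}
\qquad
\frac{u{::}A \in \Delta}{\Delta;\Gamma \vdash u : A}
```

The validity judgement on the next slide is then the special case Δ; · ⊢ M : A, with no local hypotheses, which is exactly what “does not use local resources” means.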

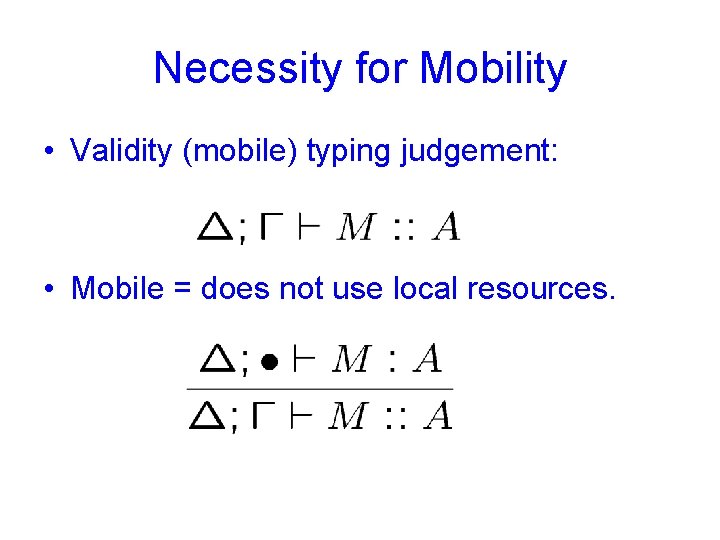

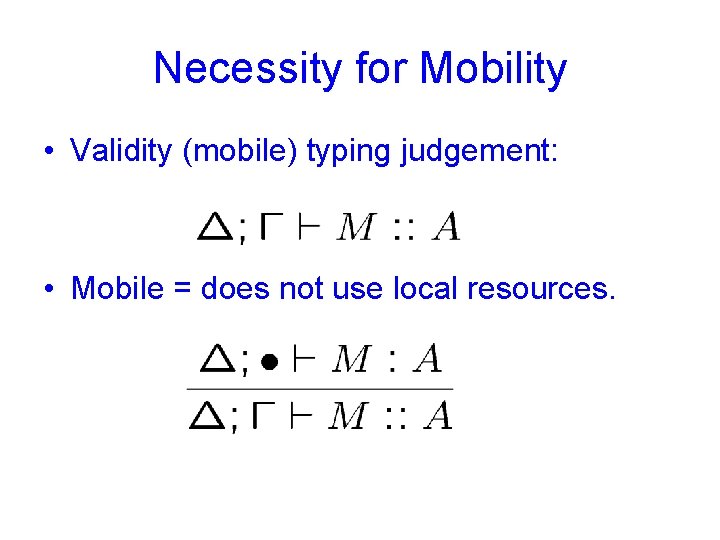

Necessity for Mobility • Validity (mobile) typing judgement: • Mobile = does not use local resources.

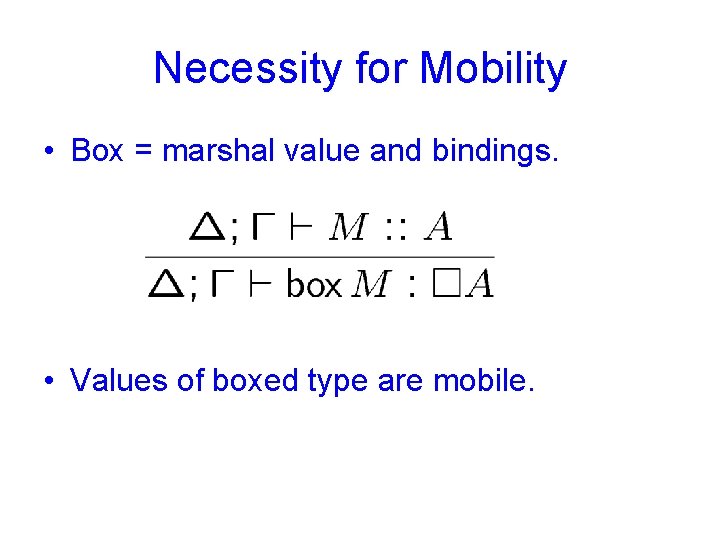

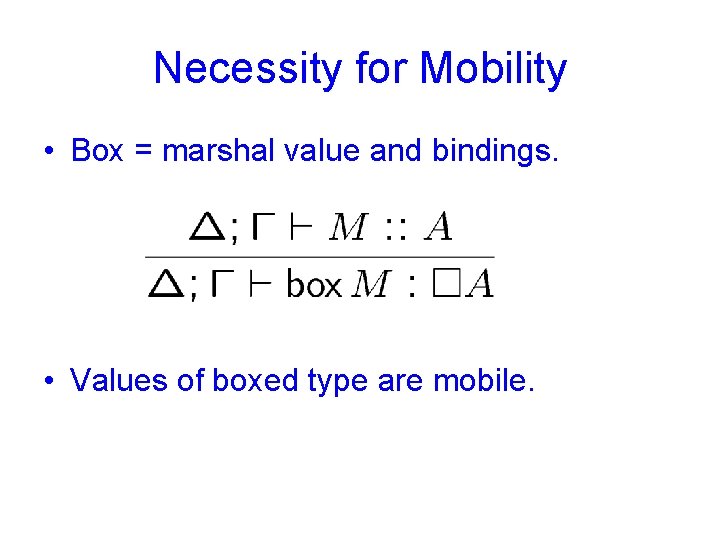

Necessity for Mobility • Box = marshal value and bindings. • Values of boxed type are mobile.
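In the same assumed notation, the box rule types its body with the local context emptied, so only valid (mobile) hypotheses remain available:

```latex
\frac{\Delta;\,\cdot \vdash M : A}{\Delta;\,\Gamma \vdash \mathbf{box}\ M : \Box A}
```

Because M is typed without Γ, the boxed value can be marshalled together with its (valid) bindings and shipped without dragging local resources along.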

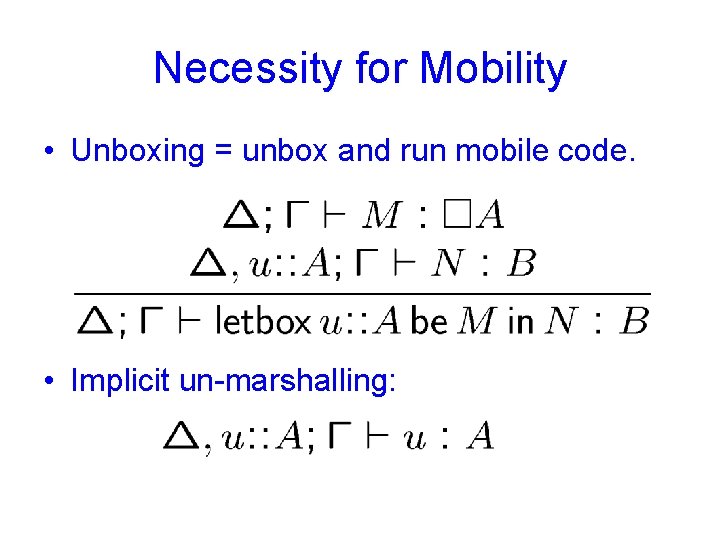

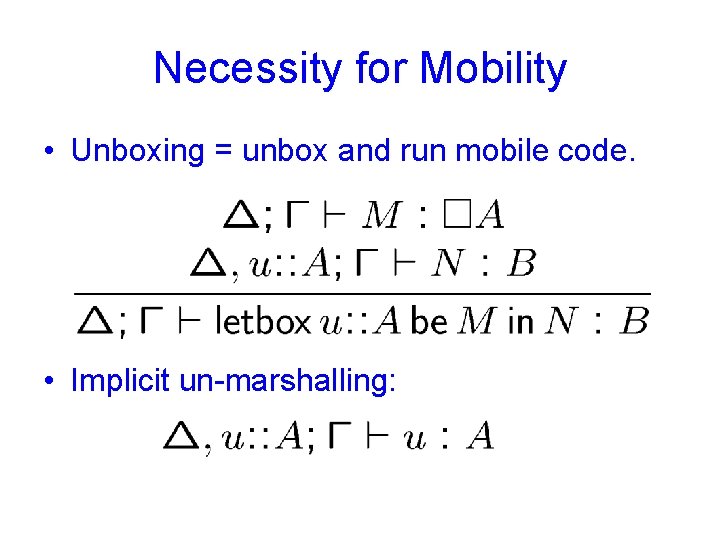

Necessity for Mobility • Unboxing = unbox and run mobile code. • Implicit un-marshalling:
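Unboxing, again in the assumed Pfenning–Davies notation, binds the mobile code to a valid variable u. By the hypothesis rule for Δ, u may then be used anywhere, including inside further box expressions, which is presumably where un-marshalling happens implicitly:

```latex
\frac{\Delta;\Gamma \vdash M : \Box A \qquad \Delta,\, u{::}A;\,\Gamma \vdash N : C}
     {\Delta;\Gamma \vdash \mathbf{let\ box}\ u = M\ \mathbf{in}\ N : C}
```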

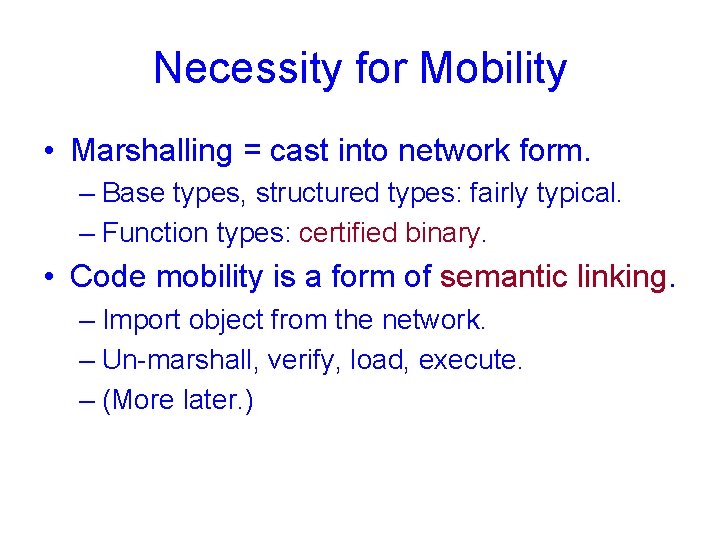

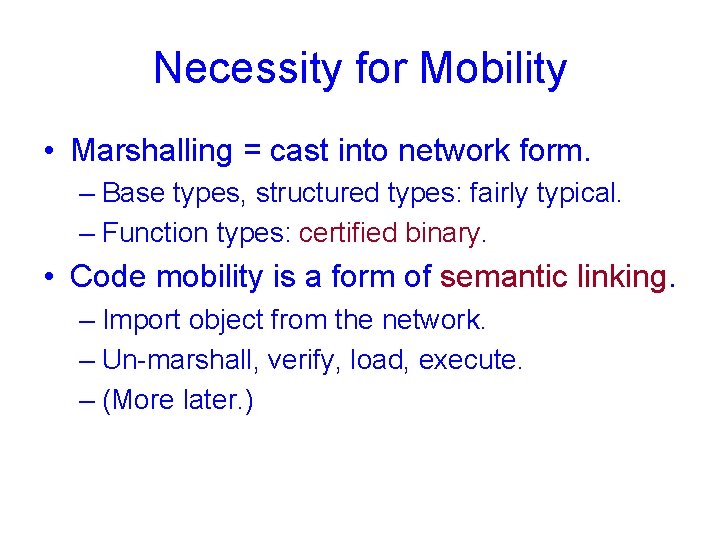

Necessity for Mobility • Marshalling = cast into network form. – Base types, structured types: fairly typical. – Function types: certified binary. • Code mobility is a form of semantic linking. – Import object from the network. – Un-marshal, verify, load, execute. – (More later.)
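A sketch of the marshalling split described above, assuming JSON as the network form for base and structured values and a hypothetical `CertifiedBinary` record (object code plus certificate) for functions. Unmarshalling a function performs the semantic-linking steps: import, un-marshal, verify, load. The `verify` and `load` callbacks are placeholders for the host's checker and loader.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class CertifiedBinary:
    """Hypothetical network form of a function: object code plus a certificate."""
    code: bytes
    certificate: bytes


def marshal(value):
    # Functions travel as certified binaries; everything else as ordinary data.
    if isinstance(value, CertifiedBinary):
        return json.dumps({"fn": value.code.hex(), "cert": value.certificate.hex()})
    return json.dumps({"data": value})


def unmarshal(wire, verify, load):
    # Semantic linking: import from the network, un-marshal, verify, load.
    obj = json.loads(wire)
    if "data" in obj:
        return obj["data"]
    binary = CertifiedBinary(bytes.fromhex(obj["fn"]), bytes.fromhex(obj["cert"]))
    if not verify(binary.code, binary.certificate):
        raise PermissionError("certificate rejected")
    return load(binary.code)
```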

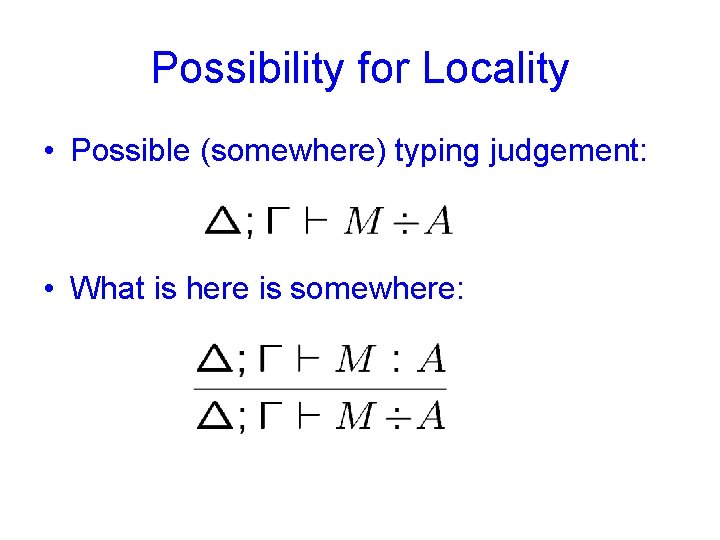

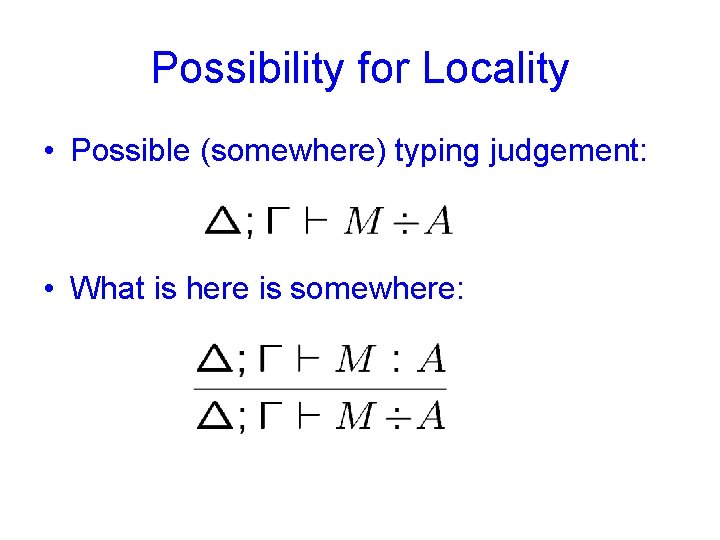

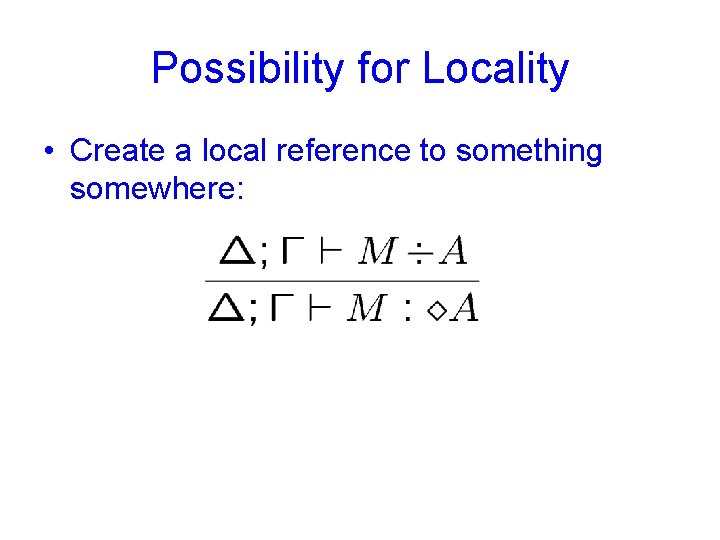

Possibility for Locality • Possible (somewhere) typing judgement: • What is here is somewhere:
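In the assumed Pfenning–Davies notation, possibility is a third judgement, E ÷ A (“E computes an A somewhere”), and “what is here is somewhere” is the rule that any true (local) term is possible:

```latex
\Delta;\Gamma \vdash E \div A
\qquad\qquad
\frac{\Delta;\Gamma \vdash M : A}{\Delta;\Gamma \vdash M \div A}
```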

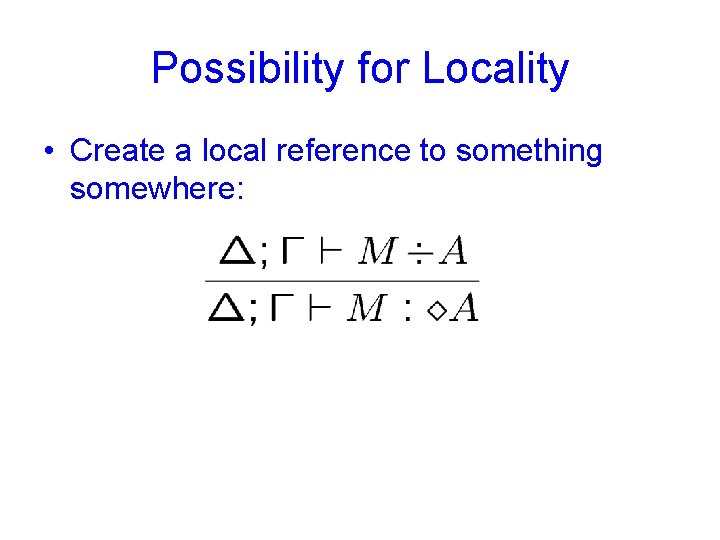

Possibility for Locality • Create a local reference to something somewhere:

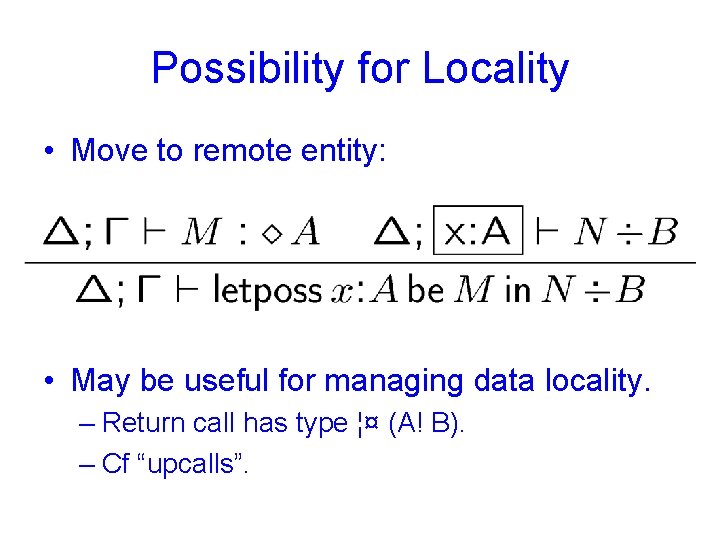

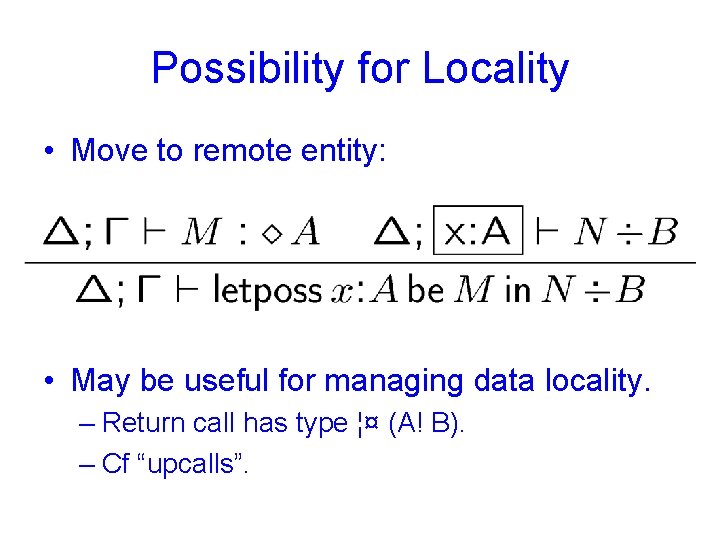

Possibility for Locality • Move to remote entity: • May be useful for managing data locality. – Return call has type ◇□(A → B). – Cf. “upcalls”.
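Creating a local reference to something somewhere and moving to the remote entity correspond, under the same assumed notation, to the introduction and elimination rules for ◇:

```latex
\frac{\Delta;\Gamma \vdash E \div A}{\Delta;\Gamma \vdash \mathbf{dia}\ E : \Diamond A}
\qquad\qquad
\frac{\Delta;\Gamma \vdash M : \Diamond A \qquad \Delta;\, x{:}A \vdash E \div C}
     {\Delta;\Gamma \vdash \mathbf{let\ dia}\ x = M\ \mathbf{in}\ E \div C}
```

In the elimination rule the second premise replaces Γ by x:A alone: the continuation runs at the remote site, where only the remote value and the valid (mobile) hypotheses are accessible.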

Modalities for Mobility • These rules are for S4 modal logic. – Accessibility is reflexive and transitive. • Is this the right notion of accessibility? – Symmetry = S5. “You can go home again.” – Judgmental form requires three contexts. – Explicit-world form uses a record of contexts. • Other varieties of modal logic are also under consideration.
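The difference between S4 and S5 is a property of the accessibility relation between worlds (here, hosts). A small checker makes it concrete; the world names are hypothetical.

```python
from itertools import product


def is_reflexive(worlds, R):
    return all((w, w) in R for w in worlds)


def is_transitive(worlds, R):
    return all((a, c) in R
               for a, b, c in product(worlds, repeat=3)
               if (a, b) in R and (b, c) in R)


def is_symmetric(worlds, R):
    return all((b, a) in R for (a, b) in R)


def frame_class(worlds, R):
    """Name the stronger of S4/S5 whose frame conditions R satisfies, else None."""
    if is_reflexive(worlds, R) and is_transitive(worlds, R):
        return "S5" if is_symmetric(worlds, R) else "S4"
    return None
```

If “home” can reach “remote” but not conversely, the frame is S4; adding the return edge (“you can go home again”) makes it S5.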

Policies and Certification • Current certification methods are uniform. – ∃ sec. policy ∀ problems: safety is assured. • E.g., PCC for Java. • E.g., TAL for Popcorn. – Safety means memory and type safety. • Baseline requirement. • But not adequate for all applications. • Recall: policies should be per-host.

Foundational Certification • Non-uniform setup: ∀ probs ∃ type system. – Shift the type system for object code out of the TCB (untrusted, problem-specific). – Must provide a proof that the type system is safe. • Compare Appel et al. – Their goal: minimize TCB. – Our goal: support multiple safety policies. – Could be consolidated, but it’s a lot of work.

Foundational Safety • Host specifies target architecture. – Fully realistic, e.g., IA-32 + OS + RTS. – No unsafe transitions. • Safety policy: target does not get stuck. – Any type system must come with a proof of progress relative to the target machine. – Experience shows that progress proofs are readily mechanizable.
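“Does not get stuck” has a standard formal reading, sketched here under the assumption of a small-step transition relation ↦ on target-machine states; the exact statements used for TALT may differ. A state is safe if every reachable state is either final or can take a step, and a type system discharges this with progress and preservation:

```latex
\mathrm{Safe}(S) \iff \forall S'.\; S \mapsto^{*} S' \implies \mathrm{final}(S') \lor \exists S''.\; S' \mapsto S''
\\[4pt]
\text{Progress: } \vdash S\ \mathsf{ok} \implies \mathrm{final}(S) \lor \exists S'.\; S \mapsto S'
\qquad
\text{Preservation: } \vdash S\ \mathsf{ok} \land S \mapsto S' \implies \vdash S'\ \mathsf{ok}
```

By induction on the length of S ↦* S', progress and preservation together give ⊢ S ok ⇒ Safe(S).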

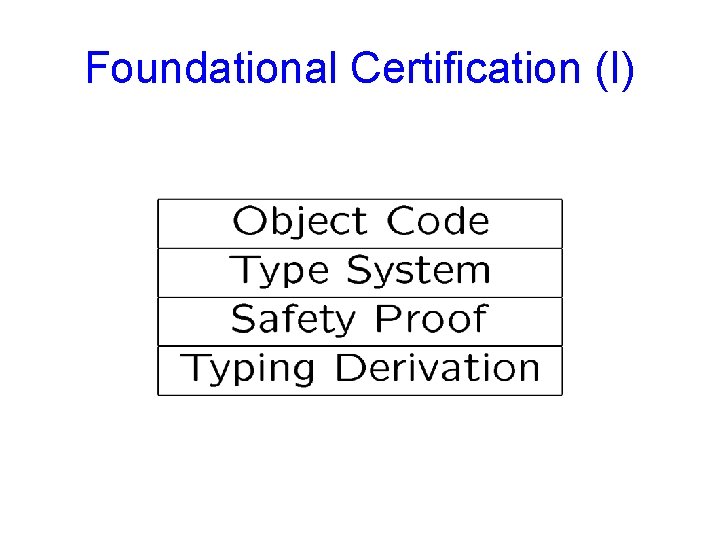

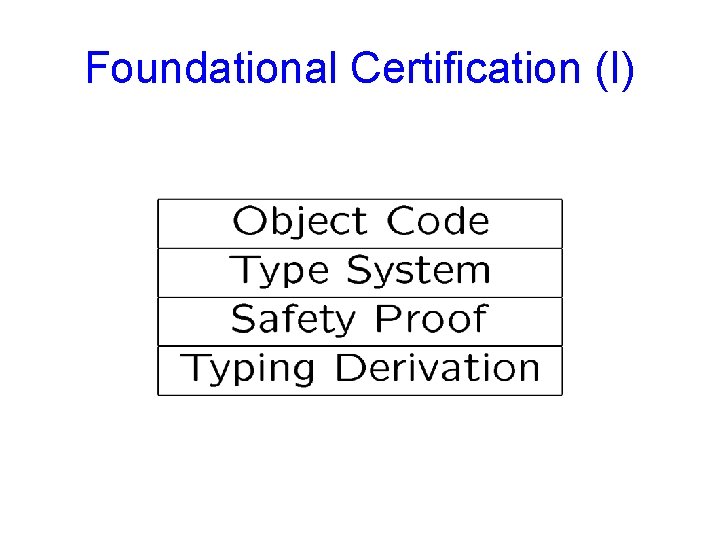

Foundational Certification (I)

Foundational Certification (I) • Object code is essentially a DLL. • Type system is specified in LF. – Using typical LF representations. • Safety proof: well-typed ⇒ safe. – Represented as an LF term. – Obtained with Twelf proof search engine. • Derivation: type annotations for code. – Makes mechanical checking feasible.

Foundational Certification (I) • May cache type system and safety proof. – Reduces certificate size. – Many cords for one type system is typical. • May use oracle strings for derivation. – Relies on details of operational behavior of host-side checker. – Therefore not completely declarative. – But significantly reduces certificate size.

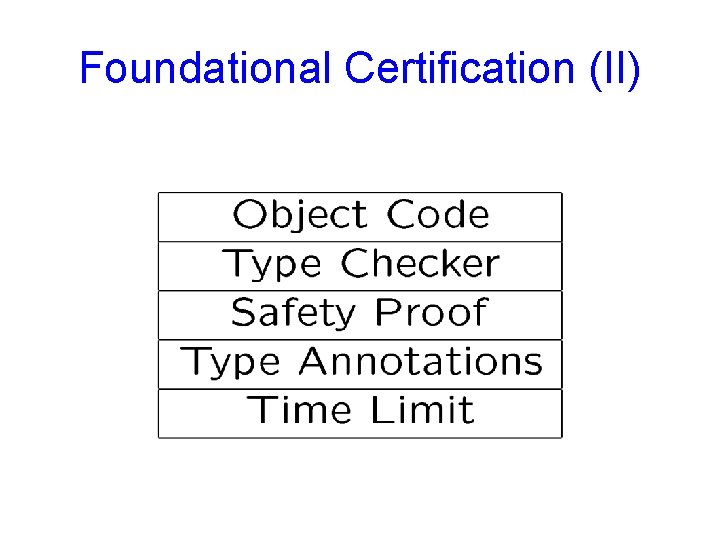

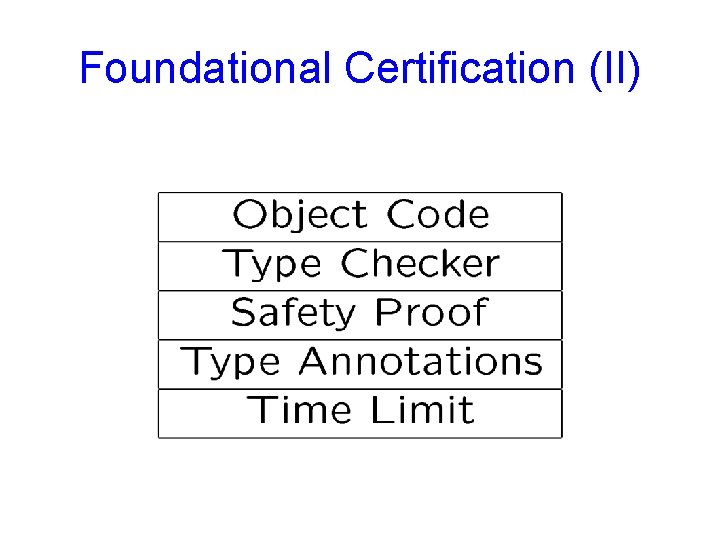

Foundational Certification (II)

Foundational Certification (II) • Object code is a DLL as before. • Type checker is a program. – Currently, a Twelf logic program. – Could be ML code. • Safety proof shows partial correctness of the checker. – Checking succeeds ⇒ safety. • Annotations support mechanical checking. • Time limit precludes looping. – Can refuse if limit is too large.
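Partial correctness only covers runs that terminate, so the host supplies termination itself with a step budget. A minimal sketch, assuming the untrusted checker is written as a Python generator that yields once per unit of work and returns its verdict; `run_checker` and the toy `balanced` checker are hypothetical stand-ins.

```python
def run_checker(check, program, fuel, max_fuel):
    """Run an untrusted checker under a step budget. Returns its verdict,
    or False if the budget is refused or exhausted."""
    if fuel > max_fuel:
        return False                   # host refuses budgets it considers too large
    steps = check(program)
    while True:
        try:
            next(steps)                # one unit of checking work
        except StopIteration as done:
            return bool(done.value)    # checker finished: trust its verdict
        fuel -= 1
        if fuel < 0:
            return False               # out of fuel: treat as rejection


def balanced(text):
    """Toy stand-in for a type checker: accepts balanced parentheses."""
    depth = 0
    for ch in text:
        yield
        depth += 1 if ch == "(" else -1 if ch == ")" else 0
        if depth < 0:
            return False
    return depth == 0
```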

Examples • TALT – Essentially TALx86 with a safety proof. – Proof is mechanically derived and checked. – Structured as a safety proof for an abstract machine plus a simulation lemma for target. • TALT + Resource Bounds – Goal: ensure that object code yields processor at set intervals. – Precludes denial of CPU service.

Resource Bound Certification • Type system enforces upper bound on yield interval. – Specified as a parameter of the type system. • Basic method: – Conservative instruction counting (join points). – Yield processor at start of every basic block. – Prove that block can complete before next yield (else split block).
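The basic method can be sketched as a pass over basic blocks, assuming each block is given as a list of conservative per-instruction cycle counts (worst case at join points already folded in) and ignoring the cost of the yield itself. Every emitted block starts with a yield and is split wherever it would otherwise exceed the bound; the representation is hypothetical.

```python
def insert_yields(blocks, bound):
    """Start every (sub)block with a yield and split blocks so that no
    block's conservative instruction count exceeds `bound`."""
    out = []
    for block in blocks:
        chunk, cost = [], 0
        for c in block:
            if c > bound:
                raise ValueError("single instruction exceeds the yield bound")
            if cost + c > bound:           # would overrun: split the block here
                out.append(["YIELD", *chunk])
                chunk, cost = [], 0
            chunk.append(c)
            cost += c
        out.append(["YIELD", *chunk])
    return out
```

With a bound of 6, a block costing [3, 3, 3] becomes ["YIELD", 3, 3] followed by ["YIELD", 3]; the per-block sums are exactly what the type system would check.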

Resource Bound Certification • Smarter techniques are under development. – Better analysis of code behavior across calls. – Fewer yields overall. • Run-time checks reduce overhead. – Use static analysis to insert minor yields that check true interval. – Minor yields re-calibrate, possibly incurring a major yield (system call).
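The minor/major yield scheme can be sketched with an injectable clock. In practice `clock` might be `time.monotonic` and `major_yield` a real system call; both, and the `YieldTimer` class itself, are assumptions of this sketch.

```python
class YieldTimer:
    """Hypothetical run-time scheme: cheap minor yields, placed by static
    analysis, read a clock and escalate to a major yield (a system call)
    only when the true interval since the last major yield has elapsed."""

    def __init__(self, clock, interval, major_yield):
        self.clock = clock              # () -> current time
        self.interval = interval        # required maximum time between major yields
        self.major_yield = major_yield  # () -> None
        self.last = clock()

    def minor_yield(self):
        now = self.clock()
        if now - self.last >= self.interval:
            self.major_yield()
            self.last = self.clock()    # re-calibrate from after the major yield
```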

A Meta-Grid? • ConCert Conductor represents one model of grid computing. – Compute-intensive, distributed scheduling. – Not much reason to believe this is canonical. • Can we support a variety of models inside of a single meta-grid? – Applications choose grid model. – Hosts are indifferent to programming model.

A Meta-Grid? • The ur-grid: – A TCP port. – Foundational code certification. • A grid framework: – Scheduler, recovery model, host policy. – Runs application cords.

A Meta-Grid? • Key capability: safe dynamic loading and linking. – Current ConCert framework must be certified against host safety policy. – It must be able to load application policies and application code. • Requires a fairly sophisticated theory of safe linking.

Semantic Linking • Marshalling is meta-programming. – Create values of a grid type system. – Cast grid values as local values. • Certification is how we marshal code. – Functions are marshalled as closures plus proof of compliance with host type system. – Ensures that cast will succeed, safely. • The ur-grid is just an unmarshaller. • Grid frameworks are meta-programs.

Summary • Declarative approach to safe grids. – Passive, policy-based participation model. – Logic and proof technology for specifying policies and proving compliance. • Close interplay between systems building and foundational theory. – Type systems for mobile code. – Type systems for various safety policies.

Thanks! • Web site: http://www.cs.cmu.edu/~concert • Demonstration available after talk. • Questions or comments?